Abstract

Dietary Reference Intakes (DRIs) are used in Canada and the United States in planning and assessing diets of apparently healthy individuals and population groups. The approaches used to establish DRIs on the basis of classical nutrient deficiencies and/or toxicities have worked well. However, it has proved to be more challenging to base DRI values on chronic disease endpoints; deviations from the traditional framework were often required, and in some cases, DRI values were not established for intakes that affected chronic disease outcomes despite evidence that supported a relation. The increasing proportions of elderly citizens, the growing prevalence of chronic diseases, and the persistently high prevalence of overweight and obesity, which predispose to chronic disease, highlight the importance of understanding the impact of nutrition on chronic disease prevention and control. A multidisciplinary working group sponsored by the Canadian and US government DRI steering committees met from November 2014 to April 2016 to identify options for addressing key scientific challenges encountered in the use of chronic disease endpoints to establish reference values. The working group focused on 3 key questions: 1) What are the important evidentiary challenges for selecting and using chronic disease endpoints in future DRI reviews, 2) what intake-response models can future DRI committees consider when using chronic disease endpoints, and 3) what are the arguments for and against continuing to include chronic disease endpoints in future DRI reviews? This report outlines the range of options identified by the working group for answering these key questions, as well as the strengths and weaknesses of each option.

Keywords: Dietary Reference Intakes, chronic disease, intake response, evidentiary challenges, evidence assessments

I. EXECUTIVE SUMMARY

Background

Dietary Reference Intakes (DRIs) represent a common set of reference intake values used in Canada and the United States in planning and assessing diets of apparently healthy individuals and population groups. Past expert committees that developed these reference values took into consideration the deficiencies, inadequacies, and toxicities of nutrients and related food substances as well as relevant chronic disease outcomes. The increasing proportions of elderly citizens, the growing prevalence of chronic diseases, and the persistently high prevalence of overweight and obesity, which predispose to chronic disease, in Canada and the United States highlight the importance of understanding the impact of nutrition on chronic disease prevention and control, and on health promotion.

The approaches that expert committees have used to establish the DRIs usually worked well when these groups considered classical nutrient deficiencies and/or toxicities. However, when committees concluded that there was sufficient evidence to base a reference value on a chronic disease endpoint, deviations from the frameworks that were initially developed for DRI use were often required. In some cases, committees were unable to establish reference values for intakes that affected chronic disease outcomes despite evidence that supported relations between intakes and chronic disease outcomes.

Current project

A multidisciplinary working group sponsored by Canadian and US government DRI steering committees met from November 2014 to April 2016 to identify key scientific challenges that past DRI committees encountered in the use of chronic disease endpoints to establish reference values. The working group focused its discussions on 3 key questions:

1) What are the important evidentiary challenges for selecting and using chronic disease endpoints in future DRI reviews?

2) What intake-response models can future DRI committees consider when using chronic disease endpoints?

3) What are the arguments for and against continuing to include chronic disease endpoints in future DRI reviews?

Currently, DRIs apply to apparently healthy populations, but changing demographics (e.g., an aging population) and health status (e.g., increasing rates of obesity) suggest a possible need for broader population coverage. Past DRIs generally focused on intakes achievable by dietary strategies, but the growing ability to modify intakes through fortification and supplementation is increasingly relevant to future DRI development. In addition to these evolving concerns, future DRI committees need to continue to take into account the broad and diverse uses of DRIs when considering options for DRIs, including those based on chronic disease endpoints.

The sponsors asked the working group to identify a (not necessarily exhaustive) range of options for answering each of the key questions and the strengths and weaknesses of each option, while keeping in mind current and future DRI contexts and uses. The sponsors did not ask the group to reach a consensus on which options have the highest priority. Final decisions about the feasibility and options for specific approaches for deriving DRIs on the basis of chronic disease outcomes will be made by a future DRI committee.

Judging the evidence

The DRI process includes 2 key scientific decisions: 1) whether the available evidence supports a causal relation between the food substance of interest and a selected outcome and, 2) if so, which DRIs are appropriate based on the available data. DRI committees make these decisions for both beneficial and adverse effects. In the current project, the outcome of interest is a chronic disease.

Challenges in evaluating the evidence

When a DRI committee assesses whether the intake of a given food substance is causally related to a chronic disease or attempts to determine the nature of an intake-response relation between a food substance and a chronic disease, it considers the characteristics of individual study designs and overarching issues that apply across different types of study designs. One of these overarching issues is the risk of bias, which depends on the design, conduct, and analysis of a study and is useful for assessing whether evidence is likely to support a conclusion about a causal relation. Randomized controlled trials (RCTs), when they are well conducted and have adequate statistical power, can minimize or eliminate many sources of bias, whereas observational studies are more vulnerable to confounding and sample-selection bias. Causality can be directly assessed with RCTs but must be inferred, or its likelihood assessed, from observational studies.

In RCTs, the food-substance intervention is known. Randomization increases the likelihood that measurement error or bias associated with dietary intake assessment will be evenly distributed among the groups. In contrast, assessing relations between food substances and chronic diseases in observational studies is particularly challenging because the assessment of intake is most often based on self-reported dietary intakes, which are subject to systematic bias, particularly for energy intake. Unlike RCTs, in which valid comparisons among randomly assigned groups are possible without the use of dietary-assessment data, the validity and usefulness of observational studies depend on the accuracy and precision of the dietary assessments these studies use. Systematic reviews and meta-analyses, when they are well designed, can provide useful and well-documented summaries of the evidence on a relation between food substances and chronic diseases. However, the use of data from such analyses also requires caution because these analyses have the same biases and confounding problems as the original studies.

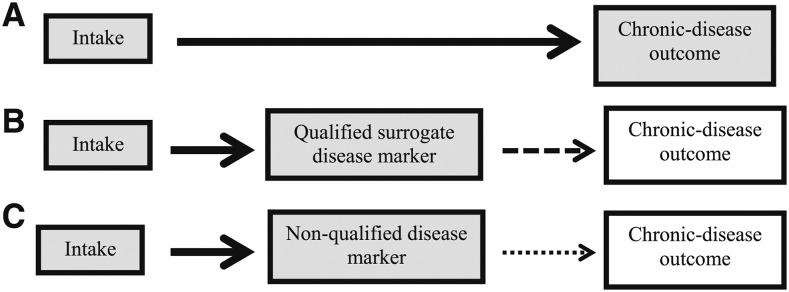

Which outcome measures a DRI committee selects for assessing the causality of a relation between food substances and chronic diseases is also important. It is possible to measure the occurrence of a chronic disease of interest directly or indirectly. Confidence that an observed relation between a food substance and a chronic disease outcome is causal is greatest when a study directly measures the chronic disease event or incidence. An indirect measurement involves a substitute measure (e.g., a qualified surrogate disease marker such as LDL cholesterol or a nonqualified disease marker such as carotid intima-media thickness for coronary heart disease). Some uncertainty is associated with the use of qualified surrogate disease markers, and considerable uncertainty is associated with the use of nonqualified disease markers as outcome measures.

Tools for assessing the evidence

Tools are available to assess 1) individual study quality and 2) the overall strength of the totality of the evidence. Tools to assess individual study quality include the Bradford Hill criteria, quality-assessment instruments, and risk-of-bias tools. Quality-assessment instruments, such as the Scottish Intercollegiate Guidelines Network 50 (SIGN 50) methodology, assess the quality of a study from conception to interpretation. Risk-of-bias tools assess the accuracy of estimates of benefit and risk in RCTs and nonrandomized studies. Other tools evaluate the quality of systematic reviews and meta-analyses [e.g., A Measurement Tool to Assess Systematic Reviews (AMSTAR)] or provide criteria for grading the evidence [e.g., Grading of Recommendations Assessment, Development, and Evaluation (GRADE)]. For DRI applications, reviewers might need to add nutrition-specific measures to generic assessment tools when they evaluate relations between food substances and chronic diseases (e.g., information on baseline or background nutritional status, assay methods used to measure biomarkers).

Options for addressing evidence-related challenges

An early challenge in the DRI decision-making process is the identification of potentially useful measures (indicators) that reflect a health outcome associated with the food substance of interest. One option is to select an endpoint that is assessed as the chronic disease event (i.e., chronic disease defined by accepted diagnostic criteria) or by a qualified surrogate disease marker (e.g., LDL cholesterol for coronary heart disease). An alternative option would expand the types of outcome measures of chronic disease to include nonqualified disease markers. This would increase the number of relations between food substances and chronic disease outcomes for which committees could establish DRIs but is associated with considerable uncertainty as to whether the relation of the food substance and the chronic disease is causal.

Another challenge is to specify the acceptable level of confidence in the data that a DRI committee uses to establish causality. The level of confidence is based on the type of endpoint measured and the overall strength of the evidence. One option is to specify an acceptable level of confidence (e.g., high or moderate) in the validity of the results that must be met before a reference value can be established. Another option is to use the actual level of certainty (e.g., high, moderate, or low) to describe the evidence associated with a given reference value. A final option is to let committees make this decision on a case-by-case basis.

Intake-response relations

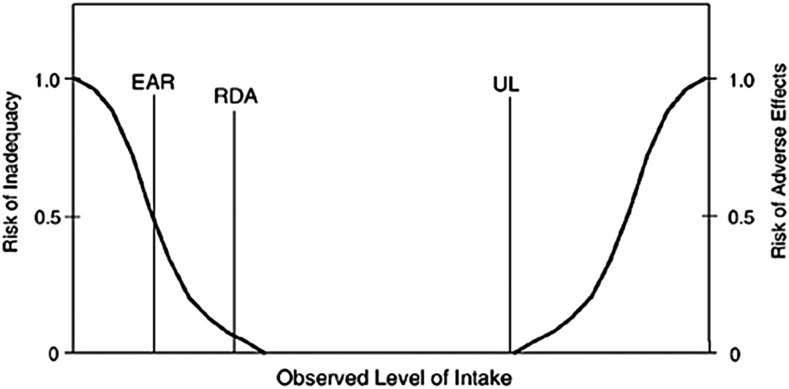

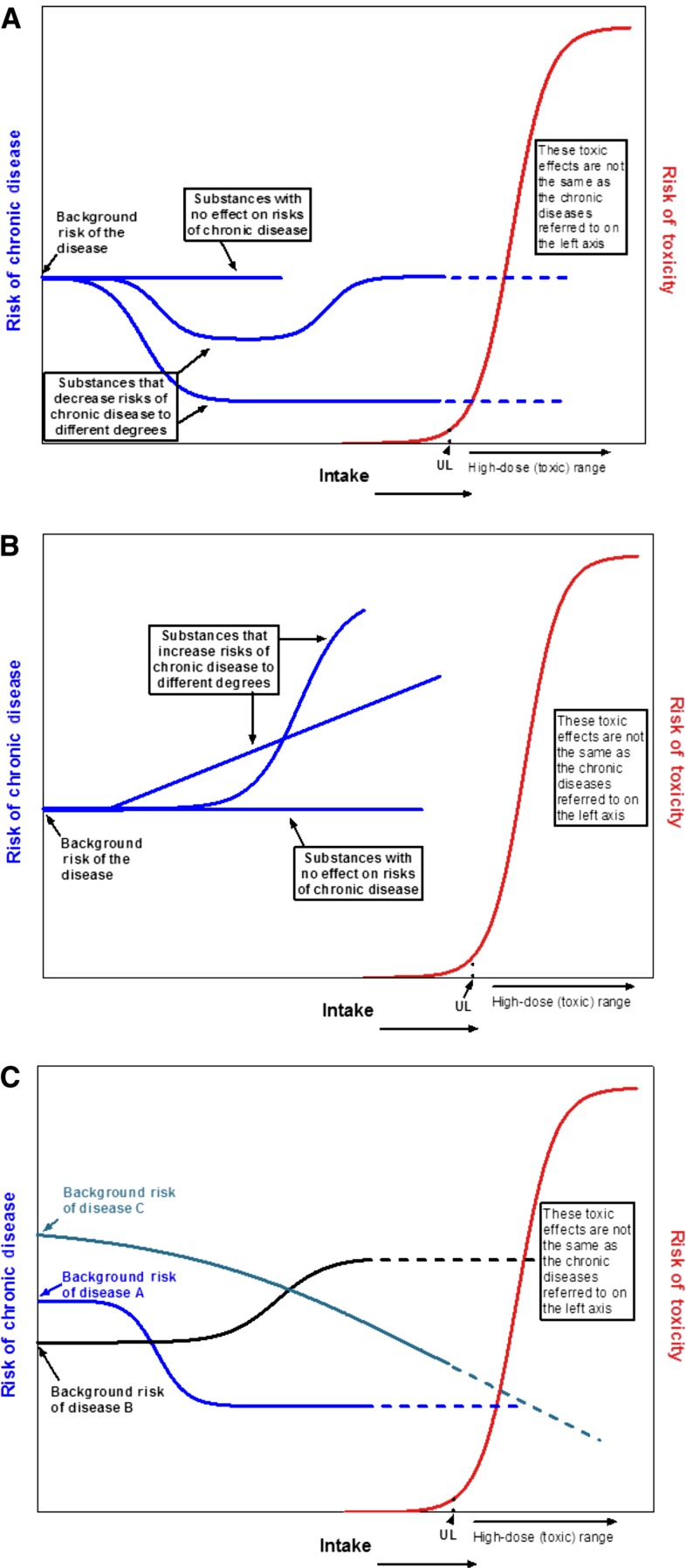

Intake-response relations for classical nutrient requirements and adverse events associated with excessive intakes differ from those associated with chronic diseases. Traditional deficiency relations are based on absolute risk, in which an inadequate intake of the nutrient is both necessary and sufficient to cause a deficiency and an adequate intake is both necessary and sufficient to treat a deficiency. The intake-response relation between a nutrient and a deficiency disease is linear or monotonic within the range of inadequacy. In contrast, food substance–chronic disease relations are often expressed as relative risks, in which the baseline risk of a chronic disease is never zero and changes in intake may alter risk by relatively small amounts. In addition, reductions in relative risk are achievable through >1 intervention, which means that the food substance of interest may not be necessary or sufficient to increase or decrease the relative risk of the disease. The relation between a food substance and a chronic disease indicator can be diverse (e.g., linear, monotonic, or nonmonotonic). A single food substance can have a causal relation with >1 chronic disease, and intake-response curves for these different relations can differ.
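The distinction between relative and absolute risk can be made concrete with a short calculation. The sketch below is illustrative only: the baseline risks and the relative risk are hypothetical values chosen for the example, not estimates from any DRI review, and the function name is an assumption introduced here.

```python
def absolute_risk_reduction(baseline_risk: float, relative_risk: float) -> float:
    """Absolute change in risk implied by applying a relative risk to a baseline risk.

    Both inputs are hypothetical illustration values, not DRI estimates.
    """
    if not 0.0 <= baseline_risk <= 1.0:
        raise ValueError("baseline_risk must be a probability between 0 and 1")
    if relative_risk < 0.0:
        raise ValueError("relative_risk must be non-negative")
    return baseline_risk * (1.0 - relative_risk)


# The same relative risk (0.90) applied to a low and a high baseline risk.
for baseline in (0.02, 0.20):
    arr = absolute_risk_reduction(baseline, relative_risk=0.90)
    print(f"baseline risk {baseline:.2f}: absolute risk reduction {arr:.3f}")
```

Under these assumed numbers, an identical relative risk yields a tenfold difference in absolute risk reduction, which is one reason why reference values based on relative risk need to be interpreted against the baseline risk of the population of interest.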

Options for determining an acceptable level of confidence

Several options are available for determining the acceptable level of confidence in the data that a DRI committee uses to determine intake-response relations once it has data that establish a causal relation. One option is to require a high level of confidence by, for example, using RCTs with a chronic disease or qualified surrogate disease marker as the outcome measure. Another option is to accept a moderate level of confidence in the data, which would allow for inclusion of data on chronic disease outcomes or qualified surrogate markers of disease from observational studies. A third option is to “piece together” different relations in which the outcome marker of interest is a common factor when direct evidence of the outcome marker’s presence on the causal pathway between the food substance and a chronic disease is lacking. Therefore, if data show a quantitative relation between a food-substance intake and the outcome marker of interest and other data show a quantitative relation between the outcome marker of interest and the chronic disease, this evidence could be combined to establish a quantitative reference intake value for the chronic disease risk, if the confidence in the data is at an acceptable level.
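The "piece together" option can be sketched numerically. The example below assumes, purely for illustration, that both relations are log-linear, that the outcome marker lies on the causal pathway, and that it carries the entire effect of the food substance on the disease. The slopes are hypothetical, the function name is introduced here, and no uncertainty is propagated, which a real assessment would require.

```python
import math


def chained_relative_risk(intake_change: float,
                          marker_per_intake: float,
                          log_rr_per_marker: float) -> float:
    """Combine two quantitative relations through a shared outcome marker.

    intake_change      change in intake (arbitrary units)
    marker_per_intake  change in the marker per unit change in intake (assumed linear)
    log_rr_per_marker  change in log relative risk per unit change in the marker
    """
    marker_change = intake_change * marker_per_intake
    return math.exp(marker_change * log_rr_per_marker)


# Hypothetical slopes: each unit of intake lowers the marker by 0.5 units, and
# each unit increase in the marker is associated with a relative risk of 1.2.
rr = chained_relative_risk(intake_change=2.0,
                           marker_per_intake=-0.5,
                           log_rr_per_marker=math.log(1.2))
print(f"implied relative risk for a 2-unit intake increase: {rr:.3f}")
```

The combined estimate is only as reliable as the weaker of the two component relations, which is why the text conditions this option on an acceptable level of confidence in the data.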

Options for types of reference values

If data for an acceptable level of confidence are available, a reference value based on chronic disease risk reduction can be determined. The challenges presented by the use of chronic disease endpoints to set reference values by using the traditional framework suggest the need for different types of reference values than are used for classical nutrient deficiencies and toxicities. For cases in which increasing intakes will reduce the risk of a chronic disease, one option is to estimate a chronic disease risk-reduction intake value [e.g., a chronic disease (CD) value for cardiovascular disease (CVD) risk reduction could be denoted as CDCVD] that is specific to a chronic disease outcome and is based on data reported as relative rather than absolute risk. Within this type of approach, 3 possible adaptations are identified: 1) set a single chronic disease value at a level above which higher intakes are unlikely to achieve additional risk reduction for a specified disease (i.e., point estimate), 2) set multiple reference values in relation to the expected degree of disease risk reduction across a spectrum of intakes to give a “family of targeted reductions,” or 3) set multiple chronic disease–related values (e.g., CDCVD, CDcancer) if the food substance is related to multiple diseases at different intakes. Another option is to express reference intakes as ranges of beneficial intakes.
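A "family of targeted reductions" can be illustrated by inverting an intake-response curve. The sketch below assumes a hypothetical log-linear curve that plateaus at a fixed intake. The slope, the plateau, the targets, and the function name are all invented for the example and do not correspond to any food substance.

```python
import math
from typing import Optional


def intake_for_target(target_rr: float, slope: float,
                      plateau_intake: float) -> Optional[float]:
    """Intake needed to reach a target relative risk on an assumed curve.

    Assumed curve: RR(intake) = exp(-slope * intake) up to plateau_intake,
    with no further risk reduction above the plateau. Returns None when the
    target cannot be reached before the plateau.
    """
    if not 0.0 < target_rr <= 1.0:
        raise ValueError("target_rr must be in (0, 1]")
    if slope <= 0.0:
        raise ValueError("slope must be positive")
    intake = -math.log(target_rr) / slope
    return intake if intake <= plateau_intake else None


# Hypothetical curve: log relative risk falls by 0.01 per unit intake, plateau at 30 units.
family = {rr: intake_for_target(rr, slope=0.01, plateau_intake=30.0)
          for rr in (0.95, 0.90, 0.80, 0.70)}
for rr, intake in family.items():
    label = "not achievable below the plateau" if intake is None else f"{intake:.1f} units"
    print(f"target relative risk {rr:.2f}: {label}")
```

In this sketch the plateau plays the role of the single point estimate described in adaptation 1, whereas the dictionary of targets corresponds to the multiple values described in adaptation 2.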

Options for the derivation of Tolerable Upper Intake Levels (ULs) include the use of traditional adverse events (i.e., toxicities), chronic disease endpoints, or both, depending on the nature and strength of available evidence. One option is to derive ULs on the basis of a threshold approach by using traditional adverse events, if the UL based on chronic disease risk would be higher than a UL associated with a traditional adverse effect. A second option is to use chronic disease endpoints to set a UL in cases in which intakes associated with increased chronic disease risk are at a level below those associated with traditional adverse events. These values could be denoted as a chronic disease UL (ULCD) to distinguish them from a traditional UL. For this second option, approaches analogous to the derivation of CD values (e.g., the development of 1 or multiple values for specified levels of relative risk) or a threshold approach (e.g., identifying the inflection point at which absolute or relative risk increases) could be used. When increased chronic disease risks are observed over a range of intakes and the intake-response curve shows an inflection point that supports a threshold effect, the inflection point could be set as a ULCD. If there is no clear inflection point, then a single ULCD value or a set of ULCD values could be based on intakes that reduce risk at specified levels with the acknowledgment that it may not be possible to eliminate the targeted risk. Basing ULCD values on risk reduction or minimization rather than risk elimination would further differentiate ULCD values from traditional UL values. Such an option would entail the provision of adequate guidance to users with regard to the uses and application of these values. A third option is to develop multiple values on the basis of both traditional adverse events and chronic disease endpoints with guidance provided to users with regard to the strengths and weaknesses of derived values, and examples of their appropriate uses. For all options, the feasibility of avoiding or minimizing the food substance in the diet must be considered when there is no threshold for risk.

Options for resolving overlaps between benefit and harm

Intake distributions for some food substances associated with disease risk reduction might overlap with intake distributions associated with adverse events, including higher chronic disease risk. Several descriptive options are proposed for dealing with this issue. One option is to ensure that no point estimate or range of beneficial intakes for chronic disease risk reduction extends beyond the intake at which the risk of adverse events, including chronic diseases, increases. A second option is to predetermine criteria related to the severity and prevalence of targeted chronic diseases and the degree of change in the risk of specified intakes required to set a reference value. A third option is to simply describe the nature of the evidence and the public health implications of benefits and risks across the full range of intakes in which inferences are reasonably possible together with remaining uncertainties. Users would choose an appropriate balance between benefit and harm for the population of concern.

Options for selecting an indicator or indicators and specifying intake-response relations

Several possible options are identified to address examples of challenges likely to be encountered when intake-response curves are based on chronic disease endpoints. One possible approach is to identify alternatives for addressing different types of outcome markers [e.g., chronic diseases defined by accepted diagnostic criteria (clinical diseases per se) compared with qualified surrogate disease markers and nonqualified disease markers] to derive intake-response relations. In this approach, several possible options are identified. One option is to select a single outcome indicator on the causal pathway, provided that it is sufficiently sensitive to quantify the relation between the food substance and the chronic disease. Another option is to integrate information from multiple indicators for a given chronic disease if they add substantially to the accuracy of the intake-response relation and reference value variation. A third option may be required when a single food substance is related to multiple chronic disease outcomes, each with a distinct intake-response relation. In this case, criteria for selecting appropriate endpoints or surrogate endpoints to establish intake-response relations, methods to integrate multiple endpoints, and methods to account for interindividual variability in the relations of interest need to be developed. Another option is to use a biological mode-of-action framework instead of a statistical approach in establishing quantitative reference intakes.

In applying these possible approaches, several factors that influence or confound quantitative intake-response relations need to be considered. The accuracy of intake-response relations is dependent on the accuracy of the measurements of intakes and outcomes. Systematic bias due to substantial underreporting (e.g., intakes, particularly energy intakes) is of particular concern. When available, the use of qualified and accurately measured biomarkers of nutrient and food-substance intakes may overcome biases in self-reported intakes. Another factor relates to the common problem of data being available on some, but not all, life-stage groups for which DRIs are established. Two options for dealing with this issue are identified. One is to limit the establishment of DRI values based on chronic disease endpoints to populations that are identical or similar to the studied groups. Alternatively, extrapolation could be considered when sufficient evidence is available that specific intakes of a food substance can increase or decrease the risk of a chronic disease.

DRI process

Arguments for or against including chronic disease endpoints in future DRIs

Evidence-based reference intake values and/or recommendations with regard to food substances causally related to the chronic diseases are desirable from public health and clinical perspectives. Yet, despite the growing chronic disease burden and continued use of DRIs, substantial challenges persist related to both the paucity of sufficiently relevant and robust evidence for evaluating putative causal relations between intakes and a chronic disease and the often-poor fit of the current Estimated Average Requirement (EAR)/Recommended Dietary Allowance (RDA) and UL frameworks for deriving DRIs on the basis of chronic disease endpoints. There is a clear desire to include chronic disease endpoints in the DRIs; however, the challenges reviewed in this report underscore the fact that the broader incorporation of chronic disease endpoints requires more sophisticated approaches than those previously used. These must also include approaches to issues concerning processes and starting points.

Options for process components

The current DRI values were set by a process that reviews a group of essential nutrients and related food substances and clearly focuses on intakes required for health maintenance and chronic disease risk reduction. Two possible options for organizing future reviews and derivations of DRIs based on chronic disease endpoints are identified. The first option is to continue incorporating chronic disease endpoint considerations in future DRI reviews but to expand the types of reference values that could be set, while clearly differentiating between values based on classical nutrient adequacy and chronic disease endpoints. A second option is to create 2 separate but complementary, and possibly iterative and/or integrated, processes for the development of reference values on the basis of chronic disease endpoints and/or deficiency diseases. For example, a review could be initiated specifically to set DRIs on the basis of chronic disease endpoints, or an existing independent process could be used.

Options for starting point

The starting point of current DRI processes is individual food substances, and all pertinent outcomes related to varying intakes of given food substances are considered. If chronic disease endpoints are to be considered, one option is to focus on individual food substances or small groups of interrelated nutrients, an approach that is similar to the current DRI process. Conversely, another option is to focus on a specific chronic disease and its relation with multiple food substances.

Forthcoming tools

Examples are discussed of forthcoming tools and novel study designs with potential utility in overcoming anticipated hurdles, such as complexities related to multiple, interactive etiologies and longitudinal characteristics of chronic diseases. These include the identification and use of new dietary intake biomarkers, the potential for the use of Mendelian randomization studies to inform causality, the use of U-shaped dose-risk relation modeling based on severity scoring and categorical regression analysis, consideration of enhanced function endpoints, the use of systems science, and the application of principles subsumed under the umbrella of precision medicine.

Conclusions

The development of the DRIs has proven to be critical for the successful elimination of diseases of deficiency in Canada and the United States. If the DRI framework could be improved to more effectively incorporate chronic disease outcomes, the potential impact on public health would be even greater. The next steps are to assess the feasibility of including chronic disease endpoints in future DRI reviews, to evaluate the relevance and appropriateness of expanding DRIs to populations beyond those currently targeted, and to determine which of the options and/or their adaptations identified in this report may warrant inclusion in a future chronic disease DRI framework.

II. BACKGROUND

DRIs are a common set of reference intake values that the Canadian and US governments, individuals, and organizations use for planning and assessing the diets of apparently healthy individuals and populations (1–3). The Food and Nutrition Board (FNB) periodically convenes ad hoc expert committees to develop DRIs for specified food substances. DRIs are guides for achieving safe and adequate intakes of nutrients and other food substances from foods and dietary supplements. The DRI committees establish DRIs within a public health context for the prevention of nutrient deficiencies, for reduction in risk of other diseases, and for the avoidance of potential adverse effects of excessive intakes. DRIs are available for 22 groups based on age, sex, pregnancy, and lactation in apparently healthy populations. Future DRI committees might need to review whether the population coverage should be expanded to include morbidities of high prevalence.

The definition of “food substances” for this report is provided in Text Box 1. Future DRI committees might find it useful to review and revise this definition.

Text Box 1.

Food substances consist of nutrients that are essential or conditionally essential, energy nutrients, or other naturally occurring bioactive food components.

Previous DRI committees have used the term “apparently healthy populations” as defined in Text Box 2.

Text Box 2.

DRIs are reference intakes for apparently healthy populations. DRI intake levels are not necessarily sufficient for individuals who are malnourished, have diseases that result in malabsorption or dialysis treatments, or have increased or decreased energy needs because of disability or decreased mobility (1).

There is no single uniform definition of “chronic disease” (4), and defining this concept for DRI evaluations, although highly relevant, is outside this project’s scope. Future DRI committees will probably need to define this term. Existing definitions of this term differ with respect to whether a chronic disease requires medical attention, affects function, has multiple risk factors, or can be cured. Several examples of these definitions are shown in Text Box 3.

Text Box 3.

Examples of definitions of chronic diseases

WHO: Noncommunicable diseases, also known as chronic diseases, are not passed from person to person. They are of long duration and generally slow progression. The 4 main types of noncommunicable diseases are CVDs, cancers, chronic respiratory diseases, and diabetes (5).

US Department of Health and Human Services: Chronic illnesses are conditions that last ≥1 y and require ongoing medical attention and/or limit activities of daily living (4).

Institute of Medicine Biomarkers Committee: A chronic disease is a culmination of a series of pathogenic processes in response to internal or external stimuli over time that results in a clinical diagnosis or ailment and health outcomes (e.g., diabetes) (6).

History of nutrient intake reference values

The establishment of quantitative nutrient intake reference values in the United States and Canada began around 1940 with a single type of reference value in each country: 1) the Recommended Nutrient Intakes, or RNIs, for Canadians and 2) the RDAs for the United States (1). These values were the intakes of essential nutrients that the experts who developed them expected would meet the known nutrient needs of practically all healthy persons.

In 1994, an FNB committee recommended that future intake reference values reflect more explicit statistical constructs of distributions of requirements across individuals (7). As a result, DRI committees began deriving reference values from population-specific estimates of average requirements (EARs) and associated population variability (RDAs) (1, 3). This approach allowed DRI users to calculate the prevalence of inadequacy in populations and the probability of inadequacy in individuals (1, 8–10). The FNB committee also recommended adding a reference value that reflects an upper safe level of intake (UL) (7, 11). All DRI reports published after 1996 implemented these recommendations (Table 1). However, with the progressive implementation of the revised DRI process, the committees that produced these reports recognized that the EAR and RDA model and the UL model were inappropriate for some outcomes of interest. Therefore, DRI committees added new reference values, as follows: 1) Adequate Intake (AI), 2) Acceptable Macronutrient Distribution Range (AMDR), and 3) Estimated Energy Requirement, or EER (Table 1).
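The statistical constructs described above can be sketched in a few lines. The example assumes the conventional formulation in which requirements are normally distributed around the EAR, the RDA is set 2 SDs above the EAR, and the prevalence of inadequacy in a group is approximated by the share of usual intakes below the EAR (the cut-point approach). All numeric values and function names are hypothetical and serve only to illustrate the calculations.

```python
from statistics import NormalDist


def rda_from_ear(ear: float, sd: float) -> float:
    """RDA as the EAR plus 2 SDs of the requirement distribution."""
    return ear + 2.0 * sd


def probability_of_inadequacy(usual_intake: float, ear: float, sd: float) -> float:
    """Probability that an individual's requirement exceeds their usual intake.

    Assumes requirements follow a normal distribution with mean EAR and SD sd.
    """
    if sd <= 0.0:
        raise ValueError("sd must be positive")
    return 1.0 - NormalDist(mu=ear, sigma=sd).cdf(usual_intake)


def prevalence_of_inadequacy(usual_intakes: list[float], ear: float) -> float:
    """Cut-point estimate: share of usual intakes that fall below the EAR."""
    return sum(intake < ear for intake in usual_intakes) / len(usual_intakes)


# Hypothetical nutrient: EAR of 100 units/d with a requirement SD of 10 units/d.
ear, sd = 100.0, 10.0
rda = rda_from_ear(ear, sd)
print(f"RDA: {rda:.0f} units/d")
print(f"P(inadequacy) at the EAR: {probability_of_inadequacy(ear, ear, sd):.2f}")
print(f"P(inadequacy) at the RDA: {probability_of_inadequacy(rda, ear, sd):.3f}")
print(f"Group prevalence: {prevalence_of_inadequacy([80.0, 90.0, 110.0, 120.0], ear):.2f}")
```

Under the normality assumption, an intake at the RDA leaves a probability of inadequacy of roughly 2–3%, which corresponds to the "nearly all (97–98%)" wording in the RDA definition.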

TABLE 1.

DRIs and their definitions¹

| DRIs | Definition |
| --- | --- |
| Based on 1994 Food and Nutrition Committee recommendations | |
| EAR | The average daily nutrient intake level that is estimated to meet the requirements of half of the healthy individuals in a particular life stage and sex group. |
| RDA | The average daily dietary nutrient intake level that is sufficient to meet the nutrient requirements of nearly all (97–98%) healthy individuals in a particular life stage and sex group. |
| UL | The highest average daily nutrient intake level that is likely to pose no risk of adverse health effects for almost all individuals in the general population. As intake increases above the UL, the potential risk of adverse effects may increase. |
| Added by DRI committees in 1994–2011 | |
| AI | The recommended average daily intake level based on observed or experimentally determined approximations or estimates of nutrient intake by a group (or groups) of apparently healthy people that are assumed to be adequate; used when an RDA cannot be determined. |
| AMDR | The range of intakes of an energy source that is associated with a reduced risk of chronic disease, yet can provide adequate amounts of essential nutrients; expressed as a percentage of total energy intake. |
| EER | The average dietary energy intake that is predicted to maintain energy balance in a healthy adult of a defined age, sex, weight, height, and level of physical activity consistent with good health. In children and pregnant and lactating women, the EER includes the needs associated with the deposition of tissues or the secretion of milk at rates consistent with good health. |

¹From reference 1. AI, Adequate Intake; AMDR, Acceptable Macronutrient Distribution Range; DRI, Dietary Reference Intake; EAR, Estimated Average Requirement; EER, Estimated Energy Requirement; RDA, Recommended Dietary Allowance; UL, Tolerable Upper Intake Level.

In response to evolving science that suggests beneficial effects of diets and dietary components in reducing the risk of chronic disease (12), the 1994 FNB committee also recommended that DRI committees include reduction in the risk of chronic disease in the formulation of future reference values when sufficient data on efficacy and safety are available (7). All 7 subsequently published DRI reports placed a high priority on an evaluation of potential chronic disease endpoints for all of the nutrients they reviewed (13, 14). However, these panels based only a limited number of DRIs on chronic disease endpoints: dietary fiber and coronary heart disease, fluoride and dental caries, potassium and both hypertension and kidney stones, and sodium and CVD (15).

Uses of DRIs

The uses of reference intake values have expanded considerably beyond the original intent of helping governments plan and evaluate nutrition programs and policies. Uses now include general nutrition education and guidance for the public, dietary management of clinical patients, identification of research gaps and priorities, research design and interpretation, food product development, regulatory applications, and guidance for international and other organizational reference values.

The evolving range of diverse uses and users of reference intake values underscores the need for the transparent documentation of scientific decisions made by DRI committees and for reference intake values that lend themselves to a wide range of applications. DRI reports focus on the scientific and public health aspects of the intakes of nutrients and food substances, but they do not make policy recommendations, with one notable exception. The 1997 amendments to the US Food, Drug, and Cosmetic Act mandated that food manufacturers could use “authoritative statements” from certain scientific bodies, including the National Academies of Sciences, Engineering, and Medicine, as health claims on food labels in the US marketplace without undergoing usual US Food and Drug Administration review and authorization procedures (16). This latter policy is not operative in Canada.

Report overview

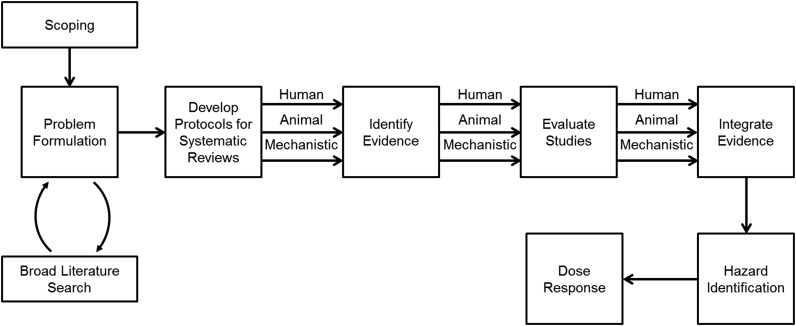

This report, in section III, provides an overview of the current project, whose purpose is to critically evaluate key scientific challenges in the use of chronic disease endpoints to establish reference intake values. Section IV describes the framework that the working group used as background information for this project. Sections V-A, V-B, and V-C describe options that the working group identified to assess evidentiary challenges related to determining whether relations between food substances and targeted chronic diseases are causal. Options for establishing intake-response relations between food substances and chronic disease endpoints are the focus of section VI. Section VII addresses considerations for future DRI committee processes, and section VIII discusses some forthcoming tools that could be applied to the establishment or application of DRI values based on chronic disease endpoints. Section IX offers a few conclusions and next steps.

III. CURRENT PROJECT

This section describes the rationale for this project as well as its objectives and key questions. Motivations for the project were well-established links between diet and health throughout the life course and the expectation that evidence-based changes in the intakes of food substances would enhance well-being and reduce disease risk. The broad application of reference intake values, increasing rates of chronic diseases among US and Canadian populations, growing financial and quality-of-life burdens represented by that dynamic, and shortcomings of the EAR/RDA and UL models provided additional reasons to undertake this effort.

Several US and Canadian government agencies are continuing DRI-related harmonization efforts initiated in the mid-1990s by jointly sponsoring the current project. These agencies convened a working group with a broad and diverse range of scientific and DRI experience (Table 2). The group had numerous discussions via conference calls and at a public workshop (17). The sponsors also solicited public comment on the working group deliberations.

TABLE 2.

Working group members and their institutions

| Working group member | Institution |

| Jamy D Ard, MD | Associate Professor, Wake Forest School of Medicine, Wake Forest University |

| Stephanie Atkinson, PhD, FCAHS | Professor, Department of Pediatrics, McMaster University |

| Dennis M Bier, MD | Professor of Pediatrics, and Director, Children's Nutrition Research Center, Baylor College of Medicine |

| Alicia L Carriquiry, PhD | Distinguished Professor, Department of Statistics, Iowa State University |

| Cutberto Garza, MD, PhD (Chair) | Professor, Boston College, and Visiting Professor, George Washington University Milken Institute School of Public Health and Johns Hopkins University |

| William R Harlan, MD, FACP, FACPM, FAAFP, FAHA | Research Consultant (retired), NIH |

| Dale B Hattis, PhD | Research Professor, The George Perkins Marsh Institute, Clark University |

| Janet C King, PhD | Executive Director, Children's Hospital Oakland Research Institute, and Professor Emeritus, University of California, Berkeley and Davis |

| Daniel Krewski, PhD | Professor and Director, McLaughlin Centre for Population Health Risk Assessment, University of Ottawa |

| Deborah L O'Connor, PhD, RD | Professor, Department of Nutritional Sciences, University of Toronto, and Senior Associate Scientist, The Hospital for Sick Children |

| Ross L Prentice, PhD | Member, Public Health Sciences Division, Fred Hutchinson Cancer Research Center, and Professor of Biostatistics, University of Washington |

| Joseph V Rodricks, PhD, DABT | Principal, Ramboll-Environ International Corporation |

| George A Wells, PhD, MSc | Professor, Department of Epidemiology and Community Medicine, University of Ottawa Heart Institute |

The focus of the current project was on the relation between food-substance intakes and chronic disease endpoints. The working group applied elements of the traditional DRI-related context to its work: a prevention (public health) orientation, intakes that are achievable within a dietary context (and, in a few highly selected cases, through dietary supplements, such as folate supplements during pregnancy), and primary applicability to the apparently healthy population.

Objectives

One objective of this project was to critically evaluate key scientific issues involved in the use of chronic disease endpoints to establish reference intake values. A second objective was to provide options for future decisions about whether and/or how to incorporate chronic disease endpoints into the process for establishing DRI values. The sponsors asked the working group not to try to reach consensus on which options were best, but rather, to identify a range of options and their strengths and weaknesses. None of the options in this report excludes other possibilities, and the order of presentation or amount of space devoted to each option is not intended to convey relative priorities. Subsequent expert groups will make final decisions about future DRI approaches to chronic disease endpoints. The key scientific decisions that are the backbone of DRI development (Table 3) provided context for the working group’s discussions.

TABLE 3.

DRI decisions and considerations¹

| 1. Causality: Is the relation between the food substance and the chronic disease or diseases causal? |

| a. Objective assessment of the relevance and robustness of available studies |

| b. Clear identification of the putative benefit or increased risk ascribed to targeted food substance or substances (e.g., amelioration or exacerbation of absolute or relative risks, level of severity) |

| c. Selection of candidate chronic disease outcomes (e.g., chronic disease event, surrogate disease marker, nonqualified outcome) that reflects targeted causal relations |

| d. Delineation of uncertainties related to determination of causality |

| e. Evaluation of challenges likely to be encountered because of the extrapolation of causality from studied to unstudied groups |

| 2. Intake-response relation: What is an appropriate DRI value (provided that causality has already been determined)? |

| a. Objective assessment of the relevance and robustness of available evidence |

| b. Determination of the type of reference value that is most appropriate given the available data (e.g., mean ± variances, ranges) and user needs (e.g., planning or assessment for individuals or groups) |

| c. Selection of candidate indicators for establishing an intake-response relation (i.e., endpoints for quantification) |

| i. What are the complexities of the intake-response relation (e.g., linear, curvilinear, overlapping of benefit, or increased risk curves)? |

| ii. What are the characteristics of possible indicators (e.g., chronic disease event or biomarker relative to the causal pathway between intake and the chronic disease)? |

| d. Identification of statistical models or other approaches (e.g., statistical, population-derived) to quantify the relation |

| e. Delineation of uncertainties in the available data |

| f. Identification of adjustments that may be necessary (e.g., about bioavailability, bias in exposure, outcome measures) |

| g. Evaluation of challenges likely to be encountered in the extrapolation of a reference intake value from studied to unstudied groups |

¹ Evaluations of the effects of increasing intakes on both benefit (i.e., decreased risk of chronic disease) and safety (i.e., increased risk of chronic disease) are a core part of the DRI review process. Although DRI committees often review benefit and safety separately, the generic issues they must address are likely to be the same for both types of review. This report focuses on the key questions related to causality and the intake-response relation. DRI, Dietary Reference Intake.

The working group identified a range of options (not necessarily exhaustive) for answering each of 3 key questions, along with the strengths and weaknesses of each option, while keeping in mind current and future DRI uses. The key questions are listed in the following sections.

Key question 1: What are the important evidentiary challenges for selecting and using chronic disease endpoints in future DRI reviews?

The types of scientific evidence in the DRI-development process that are necessary to establish the essentiality of nutrients differ from the type of evidence needed to evaluate relations between food substances and chronic diseases (7). A key challenge is the limited availability of RCTs that are designed to establish that a food substance of interest is causally related to a given chronic disease outcome. A much larger body of evidence based on prospective cohort and other observational studies is available that shows associations between food substances and chronic diseases, but common study design limitations in such instances make it challenging to determine causality (18). The availability of studies that measured functional and other intermediate biomarkers (including qualified surrogate disease markers and nonqualified disease markers) of chronic disease risk has strengthened the ability to determine the utility of different study designs and endpoints for accurately predicting the impact of reference intakes on chronic disease outcomes (6).

The availability of recently developed evaluation tools and techniques (e.g., SIGN 50 methodology) (19) and grading tools (e.g., GRADE) (20) has enhanced the ability to assess the quality of individual studies and the overall strength of the totality of the available evidence. Although developers did not design and validate these types of tools for DRI applications (21), DRI committees can adapt them to help address the evidentiary challenges that are discussed more fully in sections V-A, V-B, and V-C.

A re-evaluation of the appropriateness of chronic disease endpoints and development of criteria for their use is timely because of the substantive knowledge base that has emerged in recent decades on relations between food substances and chronic diseases. The working group focused on options for addressing evidentiary challenges that future DRI committees must consider when they evaluate and select chronic disease endpoints.

Key question 2: What intake-response models can future DRI committees consider when using chronic disease endpoints?

The DRI intake-response relation models best equipped to deal with deficiency endpoints often are not appropriate for chronic disease endpoints (13, 22). For the purpose of this report, “intake” refers to intake exposure to a food substance. “Intake-response relation” refers to the impact on physiologic processes of a range of dietary intakes. Related challenges include difficulties in the use of nutrient-status indicators (e.g., serum nutrient concentrations) to estimate optimal intakes on the basis of chronic disease endpoints. In addition, it is often difficult to use the relative risk data commonly available on relations between food substances and chronic diseases to calculate a population average and variance, as is necessary for deriving EARs and RDAs. DRI committees have generally found AIs to be useful for setting reference values on the basis of chronic disease endpoints, but DRI users have found AIs difficult to apply when assessing and planning diets for groups (13).
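To make concrete why the EAR/RDA model needs a population average and variance, the following minimal sketch computes both values from a set of individual requirement estimates. It assumes that requirements are approximately normally distributed and applies the conventional rule that the RDA lies 2 SDs above the EAR. The function name and the sample values are hypothetical and are not drawn from any DRI report.

```python
from statistics import mean, stdev


def ear_and_rda(requirements):
    """Return (EAR, RDA) from individual requirement estimates.

    Assumes requirements are roughly normally distributed, so the mean
    approximates the median requirement (EAR), and RDA = EAR + 2 * SD.
    """
    ear = mean(requirements)
    sd = stdev(requirements)  # sample standard deviation
    return ear, ear + 2 * sd


# Hypothetical requirement estimates (mg/d), for illustration only.
sample = [80, 90, 100, 110, 120]
ear, rda = ear_and_rda(sample)
print(f"EAR = {ear:.1f} mg/d, RDA = {rda:.1f} mg/d")
```

Relative risk data from cohort studies do not supply a distribution of individual requirements of this kind, which is why the calculation cannot be applied to them directly.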

DRI committees have also encountered challenges in basing ULs on chronic disease endpoints. These committees did find convincing evidence that higher intakes of several food substances were associated with increased risks of certain chronic diseases. However, the absence of an apparent threshold effect for the associated intake-response relations resulted in either failure to establish a UL or the establishment of an arbitrary UL on the basis of considerations other than the traditional model for establishing DRIs (23, 24). It is therefore important to identify other approaches and models for deriving quantitative reference values that are related to both benefits and risks of food-substance intakes for chronic disease outcomes.

Key question 3: What are the arguments for and against continuing to include chronic disease endpoints in future DRI reviews?

The 1994 FNB committee emphasized that relations between nutrients and deficiency diseases differ from relations between food substances and chronic diseases, and that these differences need to be considered when deciding whether to address the 2 types of relations together or separately (7). Subsequent evaluations of the DRI process have continued to question whether a single process or separate processes are most appropriate for this purpose (13, 22).

IV. CURRENT PROJECT FRAMEWORK

This section describes the framework that the working group used in its reviews and deliberations. Chronic diseases are the leading cause of death and disability in the United States and Canada, and they account for a major proportion of health care costs (25, 26). Globally, 38 million people die annually from chronic diseases, and almost three-quarters of these deaths occur in low- and middle-income countries (5). With changing demographics (e.g., aging populations) and increasing rates of overweight and obesity, public health concerns and costs related to chronic diseases are expected to increase further in the coming decades.

Published evidence shows that “healthy” dietary choices and lifestyles can help prevent or control several chronic diseases (27). The technological capabilities of assessing individual and population risks of chronic diseases and options for modifying foods and behaviors that affect diets are likely to expand. At the same time, the understanding of the development of chronic diseases through the life course is increasing.

The evaluation of relations between food substances and chronic diseases is complex, and a single conceptual model is unlikely to fit all cases. Chronic diseases are generally considered to be pathologic processes that are noncommunicable, of long duration, of slow progression, and of multifactorial etiologies, which, in turn, may be influenced by genetic backgrounds, age and sex, comorbidities, environments, lifestyles, and an increasing prevalence of obesity (5, 25). They represent a wide range of conditions, including heart disease, cancer, arthritis, diabetes, and macular degeneration. Chronic diseases have varying public health importance, severity, prevalence, and availability of effective treatments and prevention strategies. These diseases begin years before signs and symptoms become evident with the use of current diagnostic technologies. Complex factors interact to influence chronic disease progression, including interactions between food substances. In some cases, one factor (e.g., a particular food substance) may only exert an effect if other factors are also present or absent. Food-substance effects are often small in individuals but can have significant beneficial or detrimental effects on populations. Defining populations at risk of a chronic disease is also challenging because many diseases are associated with, or modified by, other morbidities (e.g., obesity is associated with several comorbidities in the elderly) and demographic characteristics (e.g., proportions of individuals aged ≥65 y and changing pharmaceutical uses).

Because the human diet is a complex mixture of interacting components that cumulatively affect health (28), isolating the effects on chronic disease risk of a single food substance or a small number of them can be challenging. In addition, the risks of chronic disease can be associated with either decreasing or increasing intakes of food substances (e.g., of fiber or saturated fat, respectively). The observed intake-response characteristics generally do not fit the threshold-based EAR/RDA and UL approaches that are based on absolute risk and that DRI committees use to set reference values for nutrient deficiencies and related toxicities (22).

Intake-response curves have varied shapes. Both high and low intakes of some substances may increase the risk of a chronic disease, and high and low intakes of the same food substance sometimes have overlapping effects [e.g., the intake-response curve for the decreasing effect of increasing fluoride intakes on dental caries overlaps with the intake-response curve for the effect of increasing fluoride intakes on fluorosis (29)]. Observational data suggest that a given food substance can be related to multiple chronic disease outcomes, and each relation can have its own distinctive intake-response curve (22, 30). These complexities indicate the need for a multidisciplinary approach to developing nutrient-specific and context-specific frameworks that involves scientists with a wide range of expertise.
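As a purely illustrative sketch of overlapping intake-response curves, the Python fragment below pairs a falling risk curve with a rising one and searches for intakes at which both risks stay at or below a chosen tolerance. The logistic shapes, the midpoints and slopes, the intake units, and the function names are all assumptions made for illustration; they are not fitted to fluoride or to any other food substance.

```python
import math


def benefit_curve_risk(intake, midpoint=2.0, slope=2.0):
    """Hypothetical chronic disease risk that falls as intake rises."""
    return 1 / (1 + math.exp(slope * (intake - midpoint)))


def adverse_curve_risk(intake, midpoint=4.0, slope=2.0):
    """Hypothetical adverse-effect risk that rises as intake rises."""
    return 1 / (1 + math.exp(-slope * (intake - midpoint)))


def acceptable_intakes(tolerance, grid):
    """Grid intakes at which both hypothetical risks are <= tolerance."""
    return [
        x for x in grid
        if benefit_curve_risk(x) <= tolerance
        and adverse_curve_risk(x) <= tolerance
    ]


# Intake grid from 0.0 to 6.0 in steps of 0.1 (arbitrary units).
grid = [i / 10 for i in range(0, 61)]
wide = acceptable_intakes(0.20, grid)
narrow = acceptable_intakes(0.05, grid)
print("tolerance 0.20:", (wide[0], wide[-1]) if wide else "no acceptable intake")
print("tolerance 0.05:", (narrow[0], narrow[-1]) if narrow else "no acceptable intake")
```

With these assumed curves, a tolerance of 0.20 leaves a band of acceptable intakes, whereas a tolerance of 0.05 leaves none, which is the kind of situation in which a single threshold-based reference value cannot be defined.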

It is useful to compare the reference value concepts traditionally used for nutrient requirements and toxicities with the concepts that pertain to chronic disease risk reduction (Table 4). Historically, the food substances for which expert panels established reference values tended to be essential or conditionally essential nutrients or those that supplied energy (31). From its inception, the DRI-development process broadened this concept to include food substances with documented effects on chronic disease risk (e.g., fiber, saturated fats, and trans fats). Today, there is considerable interest in expanding future DRIs to include other bioactive components with documented health effects (32–34). Although essential nutrients have a direct and specific effect on nutrient deficiencies, other food substances alone might be neither necessary nor sufficient to reduce disease risk. Even if research has established a causal relation between a food substance and a chronic disease outcome, the mechanisms of action are often unknown or poorly understood. Research results on chronic disease risks are often expressed as relative risks, whereas experts typically use absolute risks to define requirements for essential nutrients. Although the evidence may be reported as relative risks, DRI decisions may also need to consider the relation between a food substance and a chronic disease within an absolute risk context (35).

TABLE 4.

Traditional and chronic disease endpoints for DRIs¹

| Issue | Eligibility for consideration | Focus | Characteristics | Expression of risk |

| Traditional endpoints | Food substances that are essential or conditionally essential or that are components of energy nutrients (e.g., fats, proteins, and carbohydrates). | Nutrient requirements | Adequate intakes are essential for preventing and treating deficiency diseases. | Average inflection point between adequate and inadequate intakes (EAR) of a group and its associated population variance (RDA). |

| | | Nutrient toxicities | Intakes at some level above adequate intakes may pose the risk of adverse health effects. | Highest intake of a group that is unlikely to pose a risk of adverse effects and above which the risk of adverse effects increases (UL). |

| Chronic disease endpoints | Naturally occurring food substances, including nutrients, for which changes in intake have been demonstrated to have a causal relationship to the risk of one or more chronic diseases. | ↑Intakes of “beneficial” substances | With ↑ intakes, the relative risk ↓ compared with baseline intakes. | Relative risk (ratio of the probability of an event occurring in a group with higher intakes to the probability of an event in a comparison group with lower intakes). |

| | | ↓Intakes of “harmful” substances | With ↓ intakes, the relative risk ↓ compared with baseline intakes. | Relative risk (ratio of the probability of an event occurring in a group with lower intakes to the probability of an event in a comparison group with higher intakes). |

¹ DRI, Dietary Reference Intake; EAR, Estimated Average Requirement; RDA, Recommended Dietary Allowance; UL, Tolerable Upper Intake Level; ↑, increased or increases; ↓, decreased or decreases.
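The relative risk defined in Table 4 and the absolute risk context mentioned above can be contrasted with a short calculation. The following is a minimal sketch with hypothetical event counts; the function names are illustrative and the numbers do not come from any study cited in this report.

```python
def relative_risk(events_a, n_a, events_b, n_b):
    """Risk in group A divided by risk in comparison group B."""
    return (events_a / n_a) / (events_b / n_b)


def absolute_risk_difference(events_a, n_a, events_b, n_b):
    """Risk in group A minus risk in comparison group B."""
    return events_a / n_a - events_b / n_b


# Hypothetical scenario 1: a common outcome.
# Higher-intake group: 30 events per 1000; lower-intake group: 50 per 1000.
rr_common = relative_risk(30, 1000, 50, 1000)
ard_common = absolute_risk_difference(30, 1000, 50, 1000)

# Hypothetical scenario 2: a rare outcome with the same relative risk.
rr_rare = relative_risk(3, 1000, 5, 1000)
ard_rare = absolute_risk_difference(3, 1000, 5, 1000)

print(f"common outcome: RR = {rr_common:.2f}, risk difference = {ard_common:.3f}")
print(f"rare outcome:   RR = {rr_rare:.2f}, risk difference = {ard_rare:.3f}")
```

Both scenarios give the same relative risk, but the absolute reduction in risk differs by a factor of 10, which illustrates why a relative risk alone may not convey the public health importance of a change in intake.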

V-A. JUDGING THE EVIDENCE: EVIDENTIARY CHALLENGES

This section and the next 2 sections discuss ways to assess the strength of the evidence on causal relations between food substances of interest and targeted chronic diseases. This section focuses on study designs and related issues that affect the use of evidence to assess the causality of these relations in DRI evaluations.

The DRI process involves 2 key decisions: 1) whether available evidence supports a causal relation between the food substance of interest and the chronic disease and, 2) if so, what DRIs may be appropriately derived from the available data. DRI committees make these 2 key decisions for both beneficial and adverse effects as guided by 2 key questions and their component characteristics (Table 3). When DRI committees find causal relations between food substances and chronic diseases, they can then derive DRI values that are appropriate given the evidentiary base that supports the intake-response relations. Tolerance of uncertainty is likely to vary for decisions about beneficial compared with adverse effects and for decisions involving causal compared with intake-response relations.

Judging evidence to develop DRIs on the basis of chronic disease endpoints has been an evolutionary process that continues to present major challenges. The 1994 FNB committee noted that consideration of chronic disease endpoints often requires a different type of evidence than the evidence that committees have used for determinations of nutrient requirements on the basis of classical deficiency diseases (7). In the 6 DRI reports published between 1997 and 2005, the totality of the evidence from both observational and intervention studies, appropriately weighted, formed the basis for conclusions with regard to causal relations between food-substance intakes and chronic disease outcomes (23, 24, 29, 36–38). The 2011 DRI Committee on Calcium and Vitamin D stated that RCTs provided stronger evidentiary support than observational and ecologic studies and were therefore necessary for the committee to further consider a health-outcome indicator (14). This committee also considered whether evidence from published RCTs and high-quality observational studies was concordant and whether strong biological plausibility existed. The paucity of studies specifically designed to support the development of DRIs continues to be a challenge.

Overarching challenges

When a DRI committee considers the strength of the evidence for its decisions, it considers overarching challenges that apply across different types of study designs and specific study design characteristics. This section discusses 3 overarching challenges: sources of bias, selection of chronic disease outcome measures, and statistical issues.

Sources of bias

A bias consists of systematic (not random) errors in estimates of benefits or risks due to a study’s design or in the collection, analysis, interpretation, reporting, publication, and/or review of data (39). Bias results in erroneous (as opposed to less precise) estimates of the effects of exposures (e.g., food substances) on outcomes (e.g., risk of chronic disease).

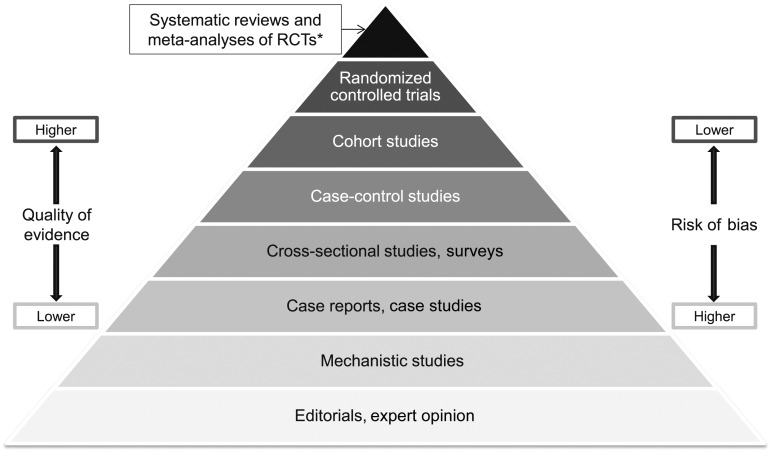

Evaluations of whether evidence likely supports a conclusion about causation often use risk-of-bias concepts. Risk of bias varies by study design (Figure 1) (40–43). At each ascending level in the pyramid in Figure 1, the quality of evidence is likely to improve (i.e., the risk of bias decreases) and the quantity of available studies usually declines. Within each level, however, quality varies by study design and implementation, which can blur the quality differences among levels of the pyramid. Confidence that relations of interest are causal generally increases toward the top of the pyramid.

FIGURE 1.

Hierarchy of evidence pyramid. The pyramidal shape qualitatively integrates the amount of evidence generally available from each type of study design and the strength of evidence expected from indicated designs. In each ascending level, the amount of available evidence generally declines. Study designs in ascending levels of the pyramid generally exhibit increased quality of evidence and reduced risk of bias. Confidence in causal relations increases at the upper levels. *Meta-analyses and systematic reviews of observational studies and mechanistic studies are also possible. RCT, randomized controlled trial.

Table 5 lists sources and types of bias that can affect nutrition studies. Table 6 describes examples of criteria for assessing the risk of bias associated with different study types. It is possible to avoid or minimize some types of biases in the study design, conduct, and analysis stages by using, for example, double-blinding, management of confounding by matching and/or multivariable analyses, or assessment of objective exposure. A major source of bias in studies of relations between food substances and chronic diseases is the use of self-reported intake assessments (e.g., food-frequency questionnaires, 24-h recalls, or food diaries) (44). Zheng et al. (45) provided an example of the dominant influence that uncorrected nonrandom measurement error in energy intake estimates from self-reported diets may have on associations with risks of CVD, cancer, and diabetes in a cohort-study context.
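One simple way to see how confounding distorts an estimate, and how an adjusted analysis can correct it, is to compare a crude relative risk with a stratified one. The sketch below uses a Mantel-Haenszel summary relative risk, a stratification method chosen here for brevity and not necessarily the approach used in any study cited in this report. The counts and the two confounder strata are hypothetical.

```python
def crude_rr(strata):
    """Relative risk after pooling all strata, ignoring the confounder."""
    exp_events = sum(s["exp_events"] for s in strata)
    exp_total = sum(s["exp_total"] for s in strata)
    unexp_events = sum(s["unexp_events"] for s in strata)
    unexp_total = sum(s["unexp_total"] for s in strata)
    return (exp_events / exp_total) / (unexp_events / unexp_total)


def mantel_haenszel_rr(strata):
    """Mantel-Haenszel summary relative risk across confounder strata."""
    numerator = 0.0
    denominator = 0.0
    for s in strata:
        total = s["exp_total"] + s["unexp_total"]
        numerator += s["exp_events"] * s["unexp_total"] / total
        denominator += s["unexp_events"] * s["exp_total"] / total
    return numerator / denominator


# Hypothetical data: the exposure has no effect within either stratum,
# but exposed participants are concentrated in the high-risk stratum.
strata = [
    {"exp_events": 160, "exp_total": 800, "unexp_events": 40, "unexp_total": 200},  # high-risk stratum
    {"exp_events": 10, "exp_total": 200, "unexp_events": 40, "unexp_total": 800},   # low-risk stratum
]
crude = crude_rr(strata)
adjusted = mantel_haenszel_rr(strata)
print(f"crude RR = {crude:.3f}, stratified (Mantel-Haenszel) RR = {adjusted:.3f}")
```

In this constructed example the pooled data suggest roughly a doubling of risk, whereas the stratified estimate shows no association, because the apparent effect arises entirely from the uneven distribution of the confounder between exposure groups.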

TABLE 5.

Types of bias that can affect nutrition studies¹

| Bias due to confounding |

| • Confounding: error in the estimated effect of an exposure on an outcome due to the presence of a common cause of the outcome or to baseline differences between exposure groups in the risk factors for the outcome or because factors predicting the outcome (prognostic factors) are related to the exposure that the person experiences |

| Related terms: |

| • Allocation bias: error in the estimate of an effect caused by the lack of valid random allocation of participants to the intervention and control groups in a clinical trial |

| • Others: selection bias, case-mix bias |

| Bias in selection of participants for the study |

| • Selection bias: systematic error resulting from participant-selection procedures and factors that influence participation, systematic differences between baseline characteristics of the groups compared, or exclusion of some participants from the analysis (i.e., some participants are excluded initially or during follow-up), thereby changing the association between the exposure and the outcome |

| Related terms: |

| • Sampling bias: systematic error due to the methods or procedures for selecting the sample (e.g., participants, scientific papers); includes errors due to sampling of a nonrandom population |

| • Others: inception bias, lead-time bias, immortal time bias |

| Bias in measurement of exposures: misclassification of exposure status or introduction of systematic bias by use of self-reported intake methodologies |

| Related terms: |

| • Dietary exposure assessment bias: error associated with the use of self-reporting tools for assessing dietary intakes |

| • Misclassification bias: systematic error due to inaccurate measurements or classifications of participants’ exposure status; may be differential (related to the risk of the outcome) or nondifferential (unrelated to the risk of the outcome with an estimated effect that is usually biased toward the null) |

| • Recall bias: systematic error due to differences in accuracy of recall, particularly relevant to case-control studies because cases are more likely to recall potentially important events |

| • Others: observer bias, detection bias |

| Bias in measurement of outcomes: erroneous measurement or classification of outcomes |

| Related terms: |

| • Misclassification bias: systematic error due to inaccurate measurements or classifications of participants’ outcome status |

| • Nondifferential measurement error: can be systematic (e.g., measurements that are all too high), which does not cause bias or affect precision, or can be random, which affects precision but does not cause bias |

| • Detection bias (also known as differential measurement error): systematic differences between groups in how outcomes are determined. This bias can occur when outcome assessors are aware of participants’ exposure status and the outcome is subjective; the researchers use different methods to assess outcomes in different groups (e.g., questionnaires for the study group and medical records for the control group); or measurement errors are related to exposure status or a confounder of the exposure-outcome relation. Blinding of outcome assessors can help address this bias but is often not possible. Studies with self-reported outcomes have a higher risk of bias than those with clinically observed outcomes. |

| • Recall bias: see above |

| Bias in selection of reported findings |

| • Reporting bias: systematic differences between reported and unreported results |

| Related terms: |

| • Outcome-reporting bias: reporting on some, but not all, of the available outcome measures (e.g., reporting the most favorable results of multiple measurements or the results of the most favorable subscale of the many that are available) |

| • Analysis-reporting bias: investigators select results from exposure effects that they measured in multiple ways (e.g., multiple analyses with and without adjustment for different sets of potential confounders or use of a continuously scaled measure analyzed at different cutoffs) |

| Bias due to departures from intended exposures |

| • Performance bias: systematic differences between groups in care provided or in exposure to factors beyond the intended exposures |

| • Time-varying bias: change in the exposure over the follow-up period and postexposure prognostic factors that affect the exposure after baseline |

| Bias due to data missing not at random: can be due to attrition (loss to follow-up), missed appointments, incomplete data collection, or exclusion of participants from the analysis |

| Related terms: |

| • Attrition bias: systematic differences between groups in withdrawals from a study |

| • Selection bias: see above |

| Publication bias: result of the tendency for journals to publish articles with positive results, particularly if the articles report new findings, or of the tendency of authors to cite studies that conform to their or their sponsor’s preconceived ideas or preferred outcomes |

| Conflict of interest from sponsor bias: may be incurred when there is financial conflict; sponsor participation in data collection, analysis, and interpretation of findings can compromise the validity of the findings. This may result from the choice of design and hypothesis, selective outcome reporting, inadequacy of reporting, bias in presentation of results, or publication biases. |

Data are from references 39 and 41–43. “Exposure” refers to the variable with the causal effect to be estimated (e.g., a food substance). In the case of a randomized controlled trial, the exposure is an intervention; “outcome” is a true state or endpoint of interest (e.g., a health condition). Lists of related terms are not intended to be exhaustive but to offer pertinent examples.

TABLE 6.

Examples of criteria to assess the risk of bias by study type

| | | Study type | | | |

| Type of bias | Criterion | RCT | Cohort study | Case-control study | Cross-sectional study |

| Bias due to confounding | Were relevant confounding factors prespecified and considered? | NA | ✓ | ✓ | ✓ |

| | Were study groups balanced with respect to the distribution of confounding factors? | NA | ✓ | ✓ | ✓ |

| | Were confounding factors taken into account in the design and/or analyses? | NA | ✓ | ✓ | ✓ |

| | Was the assignment of participants to study groups randomized? | ✓ | NA | NA | NA |

| | Was an adequate method of concealment of allocation to study groups used? | ✓ | NA | NA | NA |

| Bias in selection of participants for the study | Were the same inclusion and exclusion criteria used for all study groups? | ✓ | ✓ | ✓ | ✓ |

| | Was the likelihood that some participants might have the outcome before the exposure or intervention assessed and taken into account in the design and/or analysis? | ✓ | ✓ | ✓ | ✓ |

| | Was the percentage of eligible nonparticipants in each study group below an acceptable value? | ✓ | ✓ | ✓ | ✓ |

| Bias in measurement of exposures and interventions | Was the exposure or intervention status measured in an accurate and sufficiently precise way? | ✓ | ✓ | ✓ | ✓ |

| Bias due to departures from intended exposures and interventions | Were there systematic differences between study groups in the care provided and/or in exposures to factors beyond those intended by study design? | ✓ | ✓ | ✓ | ✓ |

| | Was the exposure or intervention status assessed more than once or in >1 way to help ensure fidelity to the study design? | ✓ | ✓ | ✓ | ✓ |

| Bias due to missing data | Was the percentage of participants dropping out in each study group below an acceptable value? | ✓ | ✓ | ✓ | ✓ |

| | Were missing data appropriately handled (e.g., intention-to-treat analysis, imputation)? | ✓ | ✓ | ✓ | ✓ |

| Bias in measurement of outcomes | Were all relevant outcomes measured in an appropriately accurate and sufficiently precise way (e.g., valid and reliable) and done consistently across all study participants? | ✓ | ✓ | ✓ | ✓ |

| | Was the length of follow-up among study groups in prospective studies the same, or in case-control studies were the times between exposures or interventions and targeted outcomes the same in cases and controls? | ✓ | ✓ | ✓ | ✓ |

| | Was the assessment of outcome made “blind” to exposure or intervention status or, when blinding was not possible, was there recognition that knowledge of exposure or intervention status could have influenced the assessment of the outcome or outcomes? | ✓ | ✓ | ✓ | ✓ |

| Bias in selection of the reported result | Were the prespecified outcomes partially reported or not reported because of the statistical significance or magnitude of the effect of the exposure or intervention? | ✓ | ✓ | ✓ | ✓ |

| | Is there evidence that the results from all participants, not only a subset, were analyzed or that all multiple-adjusted analyses, not only selected ones, were fully reported? | ✓ | ✓ | ✓ | ✓ |

NA, not applicable; RCT, randomized controlled trial; ✓, applicable to the study type.

Selection of chronic disease outcome measures

A second overarching challenge in evaluating the strengths and weaknesses of evidence is the selection of an outcome measure, both for assessing whether a relation between a food substance and a chronic disease is causal and for identifying an indicator for intake-response analysis. It is possible to measure a chronic disease outcome directly (e.g., as an incident event) or indirectly by using a substitute measure (e.g., a qualified surrogate disease marker or a nonqualified disease marker). The type of outcome measured affects the level of confidence in whether the relation between a food substance and chronic disease is causal. The selection of an indicator for deriving intake-response relations also depends on whether the indicator is on the causal pathway between the intake and the disease outcome.

For this report, the outcome of interest is a chronic disease. Ideally, the measured outcome in available studies consists of the incidence (event) of the chronic disease as determined by appropriate diagnostic criteria. Data on this type of outcome from an RCT provide the most direct assessment of a relation between a food substance and a chronic disease outcome and a high degree of confidence that the relation is causal (Figure 2).

FIGURE 2.

Conceptual framework for assessing causality on the basis of level of confidence that the intake–chronic disease relation is causal. Panel A: Direct assessment involving the measurement of both intake and chronic disease outcome (event or incidence); highest confidence that relation is causal. Panel B: Indirect assessment involving the measurement of a qualified surrogate disease marker as a substitute for a direct measurement of the chronic disease per se; provides a reasonable basis, but not absolute certainty, that the relation between the intake and the chronic disease is causal. Panel C: Indirect assessment involving the measurement of a nonqualified disease marker as a substitute for a direct measurement of the chronic disease; because this type of outcome measure lacks sufficient evidence to qualify as a substitute for the chronic disease of interest, there is considerable uncertainty as to whether the relation between the intake and the chronic disease is causal. Shaded boxes indicate variables and outcomes that are measured directly. Nonshaded boxes indicate variables or outcomes that are not measured but whose presence on the causal pathway is inferred. Arrows indicate a unidirectional, causal relation. This type of relation can be directly assessed by randomized controlled trials. If observational studies (e.g., prospective cohort studies) are being assessed, the observed relations are associations, not causal links. Solid bold arrows indicate a relation with high confidence. Dashed arrows indicate relations with some uncertainty. Lighter arrows indicate less certainty than bolder arrows. If any part of the causal pathway between intake and chronic disease outcome has uncertainty, then the entire causal pathway has uncertainty. “Qualified” biomarkers of outcome require strong evidence that their use as substitutes for unmeasured outcomes can accurately and reliably predict the outcome of interest. 
“Qualification” has a contextual basis in that the evidence about its use as a substitute for an unmeasured outcome needs to be relevant to the proposed use of the biomarker (e.g., relation between food-substance intake and a chronic disease). Intakes can be assessed directly or by measurement of qualified biomarkers of intake.

However, studies that use a chronic disease outcome may not be available or even feasible. DRI committees might then consider the use of a qualified surrogate disease marker or a nonqualified disease marker as the outcome measure. Most of these outcomes are biomarkers or are based on biomarkers, as defined in Text Box 4.

Text Box 4.

A biomarker is “a characteristic that is objectively measured and evaluated as an indicator of normal biological processes, pathogenic processes, or pharmacologic responses to [a]n … intervention” (6). (“Objectively” means reliably and accurately measured.)

The types of outcomes that can substitute for direct measures of a chronic disease outcome can range from biomarkers close to the disease (e.g., blood pressure for CVD or LDL cholesterol for coronary heart disease) to those that are more distant from the disease (e.g., indicators of inflammation or immune function for CVD and cancer). One type of substitute disease outcome is the qualified surrogate disease marker, defined in Text Box 5, a short-term outcome measure that has the same association with the intake of a food substance as a long-term primary endpoint.

Text Box 5.

A surrogate disease marker (also known as a surrogate marker, surrogate endpoint, or surrogate disease outcome marker) predicts clinical benefit (or harm, or lack of benefit or harm) based on epidemiologic, therapeutic, pathophysiologic, or other scientific evidence (6). A surrogate disease marker is qualified for its intended purposes.

The use of a surrogate marker enables a more rapid determination of the effectiveness of changes in intake on the risk of the chronic disease. Achieving “surrogate” status requires strong evidence and a compelling context (6, 46). That is, the outcome measure must be qualified for its intended purpose (e.g., to show that changing the intake of a food substance can prevent or alter the risk of the chronic disease). A qualified surrogate marker has prognostic value (i.e., correlates with the chronic disease outcome), is on the causal pathway between the intake and the chronic disease, and substantially captures the effect of the food substance on the chronic disease. DRI committees have used LDL-cholesterol concentrations as a surrogate disease marker for coronary heart disease and blood pressure as a surrogate marker for CVD (15, 23, 24). The use of a surrogate marker instead of the incidence of a chronic disease can provide a reasonable basis, but not absolute certainty, for evaluating whether a relation between a food substance and a chronic disease is causal (Figure 2). The second type of substitute disease outcome is an outcome that has not been qualified as a surrogate disease marker, referred to in this report as a nonqualified disease marker as defined in Text Box 6.

Text Box 6.

A nonqualified disease marker (also known as an intermediate disease outcome marker or intermediate endpoint) is a possible predictor of a chronic disease outcome but lacks sufficient evidence to qualify as an accurate and reliable substitute for that outcome.

An example of a nonqualified outcome for CVD is carotid intima-media thickness (47). A nonqualified outcome marker is associated with considerable uncertainty about whether the relation between a food substance and a chronic disease is causal (Figure 2).
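The weakest-link reasoning in Figure 2 (if any part of the causal pathway is uncertain, the entire pathway is uncertain) can be illustrated with a minimal Python sketch. The three-level ordinal scale, the dictionary keys, and the function name below are assumptions introduced only for illustration; the report itself does not assign numeric confidence scores.

```python
from enum import IntEnum


class Confidence(IntEnum):
    """Illustrative ordinal scale (an assumption, not defined in the report)."""
    CONSIDERABLE_UNCERTAINTY = 1
    REASONABLE = 2
    HIGH = 3


# Paraphrase of Figure 2, panels A-C: confidence that the relation between
# intake and chronic disease is causal, by type of measured outcome.
OUTCOME_CONFIDENCE = {
    "chronic_disease_event": Confidence.HIGH,                      # panel A
    "qualified_surrogate_marker": Confidence.REASONABLE,           # panel B
    "nonqualified_marker": Confidence.CONSIDERABLE_UNCERTAINTY,    # panel C
}


def pathway_confidence(link_confidences):
    """Confidence in a whole pathway is that of its least certain link.

    Expects a non-empty sequence of Confidence values.
    """
    return min(link_confidences)


# Hypothetical pathway: intake -> surrogate marker is measured directly
# (HIGH), whereas marker -> disease is inferred from the qualification
# of the surrogate (REASONABLE).
overall = pathway_confidence([
    Confidence.HIGH,
    OUTCOME_CONFIDENCE["qualified_surrogate_marker"],
])
print(overall.name)
```

Taking the minimum over links is one simple way to encode the rule; it does not capture the distinction between causal links assessed in RCTs and associations observed in cohort studies.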

Statistical issues

For any study design, careful interpretation of findings by experts is necessary to reach appropriate conclusions about the strength of the evidence. The use of inappropriate statistical methods (e.g., multiple statistical comparisons involving several outcomes and/or subpopulations without adjustment) can undermine the validity of conclusions. The primary outcome of an RCT or other study type is the endpoint for which the study is designed and powered and that investigators use to define inclusion and exclusion criteria. For secondary and post hoc endpoints, statistical power might be inadequate, participants might not be appropriately randomized (in the case of RCTs), and participant inclusion and exclusion criteria might not be suitable. Importantly, reports on secondary and post hoc outcomes of RCTs and analyses of subsets of the trial cohort need to account for multiple tests of different trial hypotheses. Caution is therefore necessary in the use of secondary outcomes and post hoc analyses of RCTs or other study types when those outcomes were not part of the original study protocols.
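To illustrate why unadjusted multiple comparisons can mislead, the following Python sketch applies the Holm step-down adjustment to a set of p-values. The choice of the Holm procedure is an assumption made for illustration; the report does not prescribe a particular adjustment method, and the p-values shown are hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm step-down adjusted p-values in the original input order."""
    m = len(p_values)
    # Indices of the p-values, from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is multiplied by m - k + 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical p-values for one primary and three secondary outcomes.
raw = [0.012, 0.030, 0.040, 0.200]
adjusted = holm_adjust(raw)

alpha = 0.05
n_raw = sum(p < alpha for p in raw)
n_adj = sum(p < alpha for p in adjusted)
print(f"below {alpha} before adjustment: {n_raw}, after adjustment: {n_adj}")
```

In this hypothetical example, several outcomes that fall below the conventional threshold before adjustment no longer do so afterward, which is the pattern that warrants caution with secondary and post hoc analyses.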

Study designs

Past DRI committees have described how the known strengths and weaknesses of different study designs influenced their DRI evaluations and decisions (14, 23, 24, 29, 36–38). Concurrently, evolving science provided new insights into how study designs can affect evaluations of relations between food substances and chronic diseases. Below, we integrate the perspectives of past DRI committees and newer science as to the potential usefulness of types of study designs for DRI contexts.

RCTs

RCTs with a chronic disease event or qualified surrogate disease marker as the primary outcome.

RCTs can minimize or eliminate the likelihood of some key types of bias when they use randomization, concealment, and double-blinding protocols and have adequate statistical power (14, 23, 24, 29, 36–38). It is possible to compare disease incidence among randomly assigned groups receiving different interventions (e.g., supplement compared with placebo) by using intention-to-treat analyses, without using any dietary-assessment data, thus avoiding the systematic biases associated with reliance on self-reported intakes to determine exposures in observational studies. Dietary assessments need only provide assurance that a trial has adequate precision (i.e., statistical power), and they can also provide useful background information for evaluating adherence to interventions or for accounting for background intake when supplements are added. RCTs often allow testing of small effects that observational studies cannot reliably detect. RCTs usually are the only type of study that allows direct assessment of causation, although other approaches, such as Mendelian randomization, may offer an alternative in special situations (48–52).
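The intention-to-treat comparison described above can be sketched in a few lines of Python. The record structure, arm labels, and participant counts are hypothetical and serve only to show that incidence is compared by assigned group, with no use of adherence or dietary-assessment data.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    assigned: str   # arm assigned at randomization: "supplement" or "placebo"
    adhered: bool   # recorded, but deliberately ignored in the ITT analysis
    event: bool     # incident chronic disease event during follow-up


def itt_risk(participants, arm):
    """Incidence proportion among everyone randomized to the given arm."""
    group = [p for p in participants if p.assigned == arm]
    return sum(p.event for p in group) / len(group)


def itt_risk_ratio(participants, treated="supplement", control="placebo"):
    """Risk ratio comparing arms as randomized, regardless of adherence."""
    return itt_risk(participants, treated) / itt_risk(participants, control)


# Hypothetical trial: 10 participants per arm.
trial = (
    [Participant("supplement", adhered=True, event=False)] * 6
    + [Participant("supplement", adhered=False, event=False)] * 2
    + [Participant("supplement", adhered=True, event=True)] * 1
    + [Participant("supplement", adhered=False, event=True)] * 1
    + [Participant("placebo", adhered=True, event=False)] * 6
    + [Participant("placebo", adhered=True, event=True)] * 4
)

rr = itt_risk_ratio(trial)
print(f"ITT risk ratio (supplement vs. placebo): {rr:.2f}")
```

Nonadherent participants remain in the arm to which they were randomized, which preserves the balance of confounding factors created by randomization.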

RCTs have the following limitations:

The costs are typically high for outcomes based on chronic disease events.

Persons agreeing to undergo randomization might be a select subset of the population of interest, which limits the generalizability of trial results.

For practical reasons, RCTs usually measure only a single or limited intake range of one food substance or a few food substances.