Significance

Measuring the degree of causal influences among multiple elements of a system is a fundamental problem in physics and biology. We propose a unified framework for quantifying any combination of causal relationships between elements in a hierarchical manner based on information geometry. Our measure of integration, called geometrical integrated information, quantifies the strength of multiple causal influences among elements by projecting the probability distribution of a system onto a constrained manifold. This measure overcomes mathematical problems of existing measures and enables an intuitive understanding of the relationships between integrated information and other measures of causal influence such as transfer entropy. Inspired by the integration of neural activity in consciousness studies, our measure should have general utility in analyzing complex systems.

Keywords: integrated information, mutual information, transfer entropy, information geometry, consciousness

Abstract

Assessment of causal influences is a ubiquitous and important subject across diverse research fields. Drawn from consciousness studies, integrated information is a measure that defines integration as the degree of causal influences among elements. Whereas pairwise causal influences between elements can be quantified with existing methods, quantifying multiple influences among many elements poses two major mathematical difficulties. First, overestimation occurs due to interdependence among influences if each influence is separately quantified in a part-based manner and then simply summed over. Second, it is difficult to isolate causal influences while avoiding noncausal confounding influences. To resolve these difficulties, we propose a theoretical framework based on information geometry for the quantification of multiple causal influences with a holistic approach. We derive a measure of integrated information, which is geometrically interpreted as the divergence between the actual probability distribution of a system and an approximated probability distribution where causal influences among elements are statistically disconnected. This framework provides intuitive geometric interpretations harmonizing various information theoretic measures in a unified manner, including mutual information, transfer entropy, stochastic interaction, and integrated information, each of which is characterized by how causal influences are disconnected. In addition to the mathematical assessment of consciousness, our framework should help to analyze causal relationships in complex systems in a complete and hierarchical manner.

Quantitative assessment of causal influences among elements in a complex system is a fundamental problem in many fields of science, including physics (1), economics (2), gene networks (3), social networks (4), ecosystems (5), and neuroscience (6). There have been many previous attempts to quantify causal influences between elements in stochastic systems. Information theory has played a pivotal role in these endeavors, leading to various measures, including predictive information (7), transfer entropy (8), and stochastic interaction (9). Drawn from consciousness studies involving measurement of integration of neural activity (10, 11), the mathematical concept of integrated information is also useful as a framework for analyzing causal relationships in complex systems with multiple elements.

Recent research suggests that the brain loses the ability to integrate information when consciousness is lost during dreamless sleep (12), general anesthesia (13), or vegetative states (14), suggesting that quantifying integration of information can serve as a neurophysiological marker of consciousness (10, 11, 15). The integrated information theory (IIT) of consciousness (16, 17) proposes a measure of integration called integrated information that quantifies multiple causal influences among elements of a system. Integrated information is theoretically motivated by the holistic property of consciousness experienced as a unified whole that is irreducible into separate parts or experiences. Although integrated information was originally intended to elucidate the neural substrate of consciousness, it can in principle be applied to many research fields.

Despite its broad potential impact, the application of integrated information (16, 18) to experimental data is severely limited (19, 20) because the original measure was derived under restricted conditions, in which the probability distribution of past states of a system is assumed to be uniform and the variables are assumed to be discrete (18). In an effort to broaden the applicability, several measures have been proposed under general conditions (9, 19, 21). However, these proposed measures are limited by mathematical problems. A pairwise causal influence from one element to another can be quantified with existing measures, but quantifying multiple causal influences among many parts poses the problems of overestimation and of confounding by noncausal influences. To overcome these problems, we propose a unified framework for quantifying causal influences based on information geometry (22). The measure we propose, called “geometric integrated information,” overcomes the described difficulties, provides geometric interpretations of existing measures, and elucidates the relationships among the measures in a hierarchical manner. The mathematical solution we derive should have broad utility in elucidating complex systems.

Three Postulates on Strength of Influences

We propose a unified theoretical framework for quantifying the strength of spatiotemporal influences based on three postulates. Let us consider a stochastic dynamical system in which the past and present states of the system are given by $X = \{x_1, x_2, \dots, x_N\}$ and $Y = \{y_1, y_2, \dots, y_N\}$, respectively, where $N$ is the number of elements in the system. Information about $X$ is integrated by influences among elements and transmitted to $Y$. The spatiotemporal influences of the system are fully characterized by the joint probability distribution $p(X, Y)$. We call $p(X, Y)$ a “full model”. In a dynamical system characterized by $p(X, Y)$, there are three different types of influences. Influences between elements at the same time (called equal-time influences) can be quantified by analyzing only the marginal distributions $p(X)$ or $p(Y)$. Influences across different time points (called across-time influences) can be further divided into those among different units (cross-influences) and those within the same unit (self-influences). The across-time influences can be quantified from the conditional probability distribution $p(Y \mid X)$. They are also known as causal influences (2, 8), in the sense of causality that is statistically inferred from conditional probability distributions, although this does not necessarily imply actual physical causality (23). Here, we use the term causality in this sense and focus on quantifying causal influences.

To quantify causal influences (both self- and cross-influences) among elements of $X$ and $Y$, consider approximating the probability distribution $p(X, Y)$ by another probability distribution $q(X, Y)$ in which the influences of interest are statistically disconnected. We call $q(X, Y)$ a “disconnected model.” The strength of influences can be quantified by how well the corresponding disconnected model $q(X, Y)$ approximates the full model $p(X, Y)$. The goodness of the approximation can be evaluated by the difference between the two probability distributions $p(X, Y)$ and $q(X, Y)$. Minimizing the difference between $p$ and $q$ corresponds to finding the best approximation of $p$ by a disconnected model $q$. From this reasoning, we propose the first postulate as follows.

Postulate 1. Strength of influences is quantified by a minimized difference between the full model and a disconnected model.

The second postulate is used to define a disconnected model. Consider partitioning the elements of a system into $m$ parts, $X = \{X_1, X_2, \dots, X_m\}$ and $Y = \{Y_1, Y_2, \dots, Y_m\}$, where $X_i$ and $Y_i$ contain the same elements of the system. To avoid the confounds of noncausal influences, we should minimally disconnect only the influences of interest without affecting the rest. To define such a minimal operation of statistically disconnecting influences from $X_i$ to $Y_j$, we propose the second postulate as follows.

Postulate 2. A disconnected model, where influences from $X_i$ to $Y_j$ are disconnected, satisfies the Markov condition $X_i \to \tilde{X}_i \to Y_j$, where $\tilde{X}_i$ is the complement of $X_i$ in $X$; that is, $\tilde{X}_i = X \setminus X_i$.

The Markov condition means that $X_i$ and $Y_j$ are conditionally independent given $\tilde{X}_i$,

$$q(X_i, Y_j \mid \tilde{X}_i) = q(X_i \mid \tilde{X}_i)\, q(Y_j \mid \tilde{X}_i). \tag{1}$$

Under the Markov condition, there is no direct influence from $X_i$ on $Y_j$ when the states of the other elements, $\tilde{X}_i$, are fixed.

The third postulate defines the measure of a difference between the full model and a disconnected model, which is denoted by $D[p:q]$. There are many possible ways to quantify the difference between two probability distributions (22, 24). We consider several theoretical requirements that the measure of difference should satisfy to have desirable mathematical properties (details in Supporting Information): (i) $D$ should be nonnegative and equal to 0 if and only if $p = q$, (ii) $D$ should be invariant under invertible transformations of random variables, (iii) $D$ should be decomposable, and (iv) $D$ should be flat. We can prove that the only measure that satisfies all of these requirements is the well-known Kullback–Leibler (KL) divergence (22). Thus, we propose the third postulate as follows.

Postulate 3. A difference between the full model and a disconnected model is measured by KL divergence.

Taken together, the strength of causal influences from $X_i$ to $Y_j$ is quantified by the minimized KL divergence,

$$\min_{q(X, Y)} D_{\mathrm{KL}}\left[p(X, Y) \,\|\, q(X, Y)\right], \tag{2}$$

under the constraint of the Markov condition given by Eq. 1.

A Unified Derivation of Existing Measures

In this section, we derive existing measures from the unified framework and provide their interpretations.

Total Causal Influences: Mutual Information.

First, consider quantifying the total strength of causal influences between the past and present states. From the operation of disconnection given by Eq. 1, the influences from all elements of $X$ to $Y$ are disconnected by forcing $X$ and $Y$ to be independent,

$$q(X, Y) = q(X)\, q(Y). \tag{3}$$

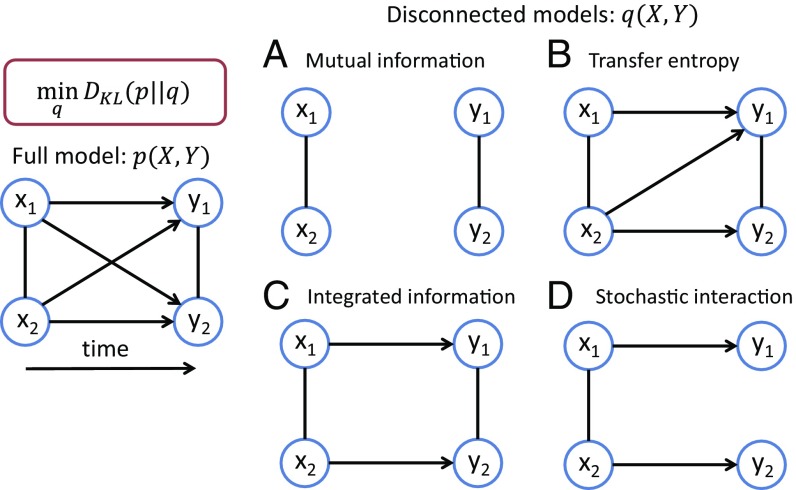

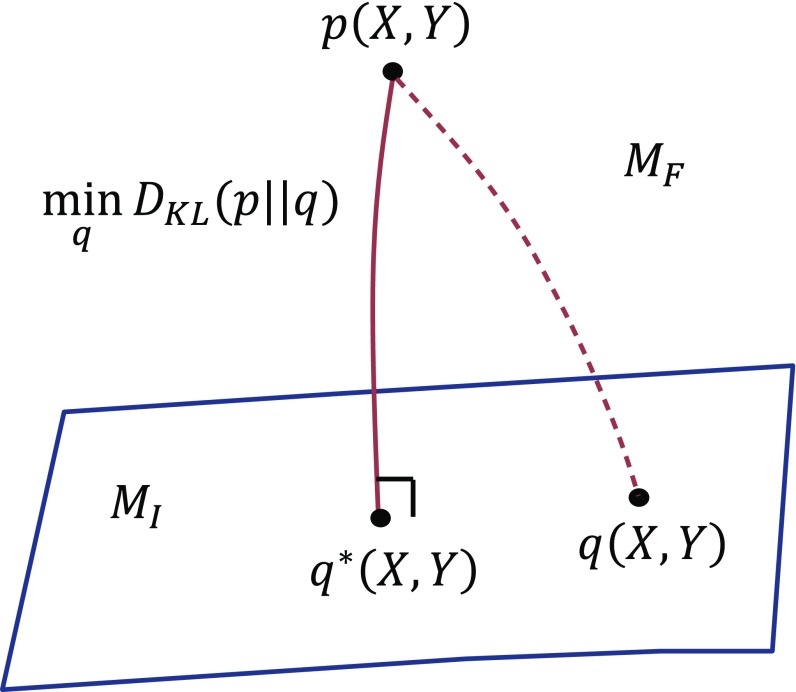

The disconnected model is graphically represented in Fig. 1A. To introduce the perspective of information geometry, consider a manifold of probability distributions $\mathcal{M}$, where each point in the manifold represents a probability distribution $p(X, Y)$ (a full model). Consider also a submanifold $\mathcal{M}_I$ where $X$ and $Y$ are independent, which means that there are no causal influences between $X$ and $Y$. A probability distribution $q(X, Y)$ (a disconnected model) is represented as a point in $\mathcal{M}_I$. In general, the actual probability distribution $p(X, Y)$ is represented as a point outside the submanifold $\mathcal{M}_I$ (Fig. 2). The difference between the two probability distributions is quantified by the KL divergence,

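$$D_{\mathrm{KL}}\left[p(X, Y) \,\|\, q(X, Y)\right] = \sum_{X, Y} p(X, Y) \log \frac{p(X, Y)}{q(X, Y)}. \tag{4}$$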

Fig. 1.

(A–D) Minimizing the Kullback–Leibler (KL) divergence between the full and the disconnected model leads to various information theoretic quantities: (A) mutual information, (B) transfer entropy, (C) integrated information, and (D) stochastic interaction. Constraints imposed on the disconnected model are graphically shown.

Fig. 2.

Information geometric picture of minimizing the KL divergence between the full model $p$, which resides in the manifold $\mathcal{M}$, and the disconnected model $q$, which resides in the submanifold $\mathcal{M}_D$. $q^*$ is the point in $\mathcal{M}_D$ that is closest to $p$.

We consider finding the point $q^*(X, Y)$ within the submanifold $\mathcal{M}_I$ that is closest to $p(X, Y)$, i.e., the point that minimizes the KL divergence between $p$ and $q$ (Fig. 2). This corresponds to finding the best approximation of $p$. The minimizer of the KL divergence is obtained by orthogonally projecting the point $p$ onto the submanifold $\mathcal{M}_I$, according to the projection theorem in information geometry (22) (Supporting Information). In the present case, $p$, the closest point $q^*$, and any point $q$ in $\mathcal{M}_I$ form a right triangle. Thus, the following Pythagorean relation holds: $D_{\mathrm{KL}}[p \,\|\, q] = D_{\mathrm{KL}}[p \,\|\, q^*] + D_{\mathrm{KL}}[q^* \,\|\, q]$. From the Pythagorean relation, we find that the KL divergence is minimized when the marginal distributions of $q$ over $X$ and $Y$ are both equal to those of the actual distribution $p$; i.e., $q(X) = p(X)$ and $q(Y) = p(Y)$. The minimized KL divergence is given by

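$$\min_{q \in \mathcal{M}_I} D_{\mathrm{KL}}\left[p(X, Y) \,\|\, q(X, Y)\right] = H(Y) - H(Y \mid X) \tag{5}$$

$$= I(X; Y), \tag{6}$$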

where $H(Y)$ is the entropy of $Y$, $H(Y \mid X)$ is the conditional entropy of $Y$ given $X$, and $I(X; Y)$ is the mutual information between $X$ and $Y$. From the derivation, we can interpret the mutual information between $X$ and $Y$ as the total causal influences between $X$ and $Y$. The mutual information between the present and past states can also be interpreted as the degree of predictability of the present states given the past states and has been termed predictive information (7).
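The projection argument above can be checked numerically. The following minimal sketch treats $X$ and $Y$ as single discrete variables (composite state indices) and draws an arbitrary joint distribution with NumPy. It then verifies that the KL divergence to the product of marginals equals $H(Y) - H(Y \mid X)$, and that the Pythagorean relation holds for another, arbitrarily chosen point of $\mathcal{M}_I$. The helper names (`kl`, `entropy`) and the random example are illustrative assumptions and are not part of the original framework.

```python
import numpy as np

def kl(p, q):
    """D_KL[p || q] in nats; terms with p = 0 contribute 0 (assumes q > 0 wherever p > 0)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    m = p > 0
    return float(np.sum(p[m] * np.log(p[m] / q[m])))

def entropy(p):
    """Shannon entropy in nats of a (possibly multi-dimensional) probability array."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

rng = np.random.default_rng(0)
p = rng.random((3, 4))
p /= p.sum()                                   # full model p(X, Y): rows = X, columns = Y
q_star = p.sum(axis=1, keepdims=True) * p.sum(axis=0, keepdims=True)   # projection p(X) p(Y)

r = rng.random((3, 1))
r /= r.sum()                                   # an arbitrary q(X)
s = rng.random((1, 4))
s /= s.sum()                                   # an arbitrary q(Y)
q = r * s                                      # another point of M_I

mi = kl(p, q_star)
pythagoras_gap = kl(p, q) - (kl(p, q_star) + kl(q_star, q))   # zero up to rounding
h_y_minus_h_y_given_x = entropy(p.sum(axis=0)) - (entropy(p) - entropy(p.sum(axis=1)))
```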

Partial Causal Influences: Conditional Transfer Entropy.

Next, consider quantifying a partial causal influence from one part to another in the system. From the operation of disconnection in Eq. 1, a partial causal influence from $X_i$ to $Y_j$ is disconnected by imposing Eq. 1 on this pair, which is equivalent to

$$q(Y_j \mid X) = q(Y_j \mid \tilde{X}_i), \tag{7}$$

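where $\tilde{X}_i$ denotes the past states of all of the variables other than $X_i$. Under this constraint, the KL divergence is minimized when $q(X) = p(X)$, $q(Y_j \mid \tilde{X}_i) = p(Y_j \mid \tilde{X}_i)$, and $q(Y \setminus Y_j \mid X, Y_j) = p(Y \setminus Y_j \mid X, Y_j)$ (Supporting Information). The minimized KL divergence is found to be equal to the conditional transfer entropy,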

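$$\min_{q} D_{\mathrm{KL}}\left[p(X, Y) \,\|\, q(X, Y)\right] = H(Y_j \mid \tilde{X}_i) - H(Y_j \mid X) \tag{8}$$

$$= TE_{X_i \to Y_j \mid \tilde{X}_i}, \tag{9}$$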

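where $TE_{X_i \to Y_j \mid \tilde{X}_i} = I(X_i; Y_j \mid \tilde{X}_i)$ is the conditional transfer entropy from $X_i$ to $Y_j$ given $\tilde{X}_i$. Thus, we can interpret the conditional transfer entropy as the strength of the partial causal influence from $X_i$ to $Y_j$.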
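Eqs. 8 and 9 can be sketched for discrete variables. This minimal example assumes the joint distribution of $(X_i, \tilde{X}_i, Y_j)$ is available as a 3-D NumPy array, with the other present-state variables already marginalized out; $\tilde{X}_i$ may be a flattened composite index. The function name and the two toy distributions are hypothetical. When $Y_j$ copies $X_i$, the conditional transfer entropy is $\log 2$. When $Y_j$ copies $\tilde{X}_i$, it is 0.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in nats of a probability array (zeros ignored)."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def conditional_transfer_entropy(p):
    """TE_{X_i -> Y_j | X~_i} = H(Y_j | X~_i) - H(Y_j | X), in nats.

    p[a, b, c] = p(X_i = a, X~_i = b, Y_j = c).
    """
    p = np.asarray(p, dtype=float)
    h_yj_given_rest = entropy(p.sum(axis=0)) - entropy(p.sum(axis=(0, 2)))   # H(X~_i, Y_j) - H(X~_i)
    h_yj_given_x = entropy(p) - entropy(p.sum(axis=2))                      # H(X, Y_j) - H(X)
    return h_yj_given_rest - h_yj_given_x

# Toy systems with independent, uniform binary X_i and X~_i.
copy_x1 = np.zeros((2, 2, 2))   # Y_j = X_i
copy_x2 = np.zeros((2, 2, 2))   # Y_j = X~_i
for a in range(2):
    for b in range(2):
        copy_x1[a, b, a] = 0.25
        copy_x2[a, b, b] = 0.25
```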

A Measure of Integrated Information

Integrated information is defined as a measure that quantifies the strength of all causal influences among parts of the system. In the case of two units, integrated information should quantify both of the causal influences, from $X_1$ to $Y_2$ and from $X_2$ to $Y_1$. It aims to quantify the extent to which the whole system exerts synergistic influences on its future beyond those exerted independently by its parts, and thus the irreducibility of the whole system into independent parts (16). Accordingly, integrated information is theoretically required to be nonnegative and upper bounded by the total causal influences in the whole system, which in our framework is the mutual information between the past and present states, as shown above (20). Based on Postulates 1–3, we uniquely derive a measure of integrated information by imposing the corresponding constraints, and this measure naturally satisfies the theoretical requirement.

Consider again partitioning a system into $m$ parts. By applying the operation in Eq. 1 to all pairs of $X_i$ and $Y_j$ ($i \neq j$), we find that all causal influences among the parts are disconnected by the condition

$$q(Y_i \mid X) = q(Y_i \mid X_i) \quad (i = 1, \dots, m). \tag{10}$$

To quantify integrated information, we consider a manifold $\mathcal{M}_G$ constrained by Eq. 10. Note that within $\mathcal{M}_G$, the present states of a part, $Y_i$, directly depend only on its own past states, $X_i$, and thus the transfer entropies from each part to all of the other parts ($TE_{X_i \to Y_j \mid \tilde{X}_i}$, $i \neq j$) are 0. We now propose a measure of integrated information, called geometric integrated information $\Phi_G$, as the minimized KL divergence between the actual distribution $p(X, Y)$ and the disconnected distribution $q(X, Y)$ within $\mathcal{M}_G$:

$$\Phi_G = \min_{q \in \mathcal{M}_G} D_{\mathrm{KL}}\left[p(X, Y) \,\|\, q(X, Y)\right]. \tag{11}$$

The manifold formed by the constraints for integrated information (Eq. 10) includes the manifold formed by the constraints for mutual information (Eq. 3); i.e., $\mathcal{M}_G \supset \mathcal{M}_I$. Because minimizing the KL divergence over a larger set can never yield a larger value, $\Phi_G$ is always smaller than or equal to the mutual information $I(X; Y)$:

$$0 \le \Phi_G \le I(X; Y). \tag{12}$$

Thus, $\Phi_G$, uniquely derived from Postulates 1–3, naturally satisfies the theoretical requirements for integrated information.

Comparisons with Other Measures

The Sum of Transfer Entropies.

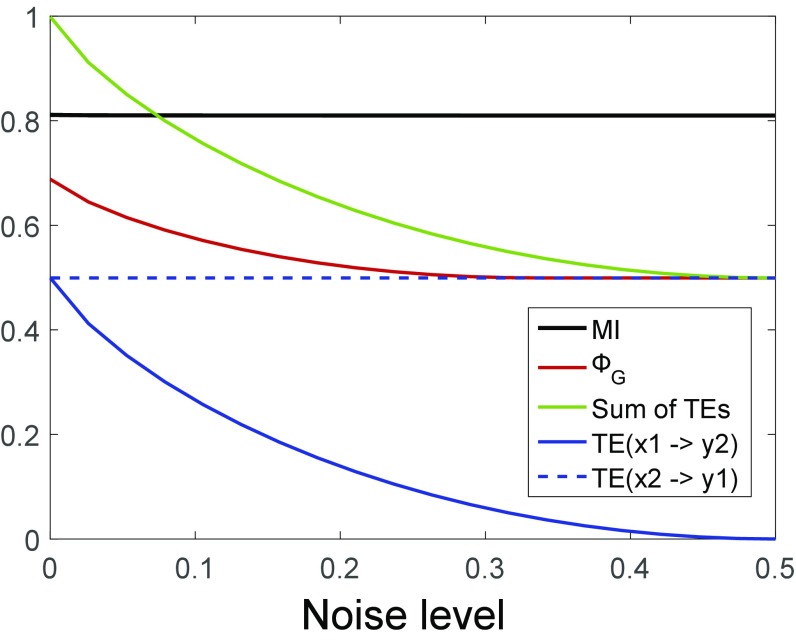

For simplicity, consider a system consisting of two units (Fig. 1). Conceptually, a measure of integrated information should be designed to quantify the strength of the two causal influences, from $X_1$ to $Y_2$ and from $X_2$ to $Y_1$ (Fig. 1C). Because each causal influence is quantified by a transfer entropy, $TE_{X_1 \to Y_2}$ or $TE_{X_2 \to Y_1}$, one may naively think that the sum of transfer entropies, $TE_{X_1 \to Y_2} + TE_{X_2 \to Y_1}$, is a valid measure of integrated information and may equal $\Phi_G$. Contrary to this naive intuition, the sum of transfer entropies is not equal to $\Phi_G$. Moreover, it can exceed the mutual information between $X$ and $Y$, which violates an important theoretical requirement for a measure of integrated information (Eq. 12). When there is strong dependence between $Y_1$ and $Y_2$, simply summing the transfer entropies overestimates the total strength of causal influences. An extreme case of such overestimation occurs when $Y_1$ and $Y_2$ are copies of each other.

As a simple example, consider a system consisting of two binary units, each of which takes one of two states, 0 or 1. Assume that the probability distribution of the past states $X_1$ and $X_2$ is uniform; i.e., $p(X_1, X_2) = 1/4$. The present state of unit 1, $Y_1$, is determined by the AND operation of the past states $X_1$ and $X_2$; that is, $Y_1$ is 1 if both $X_1$ and $X_2$ are 1, and 0 otherwise. On the other hand, $Y_2$ is determined by a “noisy” AND operation, in which the output of the AND gate flips with probability $r$; i.e., $p(Y_2 = 1 \mid X_1, X_2) = 1 - r$ if $X_1 = X_2 = 1$ and $p(Y_2 = 1 \mid X_1, X_2) = r$ otherwise, where $r$ determines the noise level. As the noise level decreases, the dependence between $Y_1$ and $Y_2$ becomes stronger. When there is no noise, i.e., $r = 0$, $Y_1$ and $Y_2$ are identical. We varied the strength of dependence by changing the noise level $r$ and calculated the transfer entropies $TE_{X_1 \to Y_2}$ and $TE_{X_2 \to Y_1}$ and $\Phi_G$ (see Supporting Information for the computation of $\Phi_G$ in the binary case) (Fig. 3). As the noise level decreases, the transfer entropy from $X_1$ to $Y_2$ increases, but the mutual information $I(X; Y)$ stays the same. This is because $Y_2$, the output of the noisy AND gate, adds no information about the input beyond that already provided by $Y_1$, the output of the perfect AND gate. When the noise level is low, and thus the dependence between $Y_1$ and $Y_2$ is strong, the sum of transfer entropies exceeds the mutual information.
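The transfer-entropy and mutual-information side of this example can be checked with a short script. This sketch rests on the assumptions stated in the text: uniform past states, $Y_1 = \mathrm{AND}(X_1, X_2)$, and $Y_2$ equal to the AND output flipped with probability $r$. The function names are illustrative. $\Phi_G$ is not computed here because it requires a numerical minimization over $\mathcal{M}_G$. Entropies are in bits.

```python
import numpy as np

def entropy_bits(p):
    """Shannon entropy in bits of a probability array (zeros ignored)."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

def and_noisy_and(r):
    """p[x1, x2, y1, y2]: uniform past, y1 = AND(x1, x2), y2 = y1 flipped with probability r."""
    p = np.zeros((2, 2, 2, 2))
    for x1 in range(2):
        for x2 in range(2):
            y1 = x1 & x2
            p[x1, x2, y1, y1] += 0.25 * (1 - r)
            p[x1, x2, y1, 1 - y1] += 0.25 * r
    return p

def H(p, keep):
    """Entropy (bits) of the marginal of p over the axes listed in `keep`."""
    drop = tuple(ax for ax in range(p.ndim) if ax not in keep)
    return entropy_bits(p.sum(axis=drop) if drop else p)

X1, X2, Y1, Y2 = 0, 1, 2, 3

def measures(r):
    """Return (TE_{X1->Y2}, TE_{X2->Y1}, I(X;Y)) for noise level r."""
    p = and_noisy_and(r)
    te_1_to_2 = (H(p, (X2, Y2)) - H(p, (X2,))) - (H(p, (X1, X2, Y2)) - H(p, (X1, X2)))
    te_2_to_1 = (H(p, (X1, Y1)) - H(p, (X1,))) - (H(p, (X1, X2, Y1)) - H(p, (X1, X2)))
    mi = H(p, (X1, X2)) + H(p, (Y1, Y2)) - H(p, (X1, X2, Y1, Y2))
    return te_1_to_2, te_2_to_1, mi
```

At $r = 0$ each transfer entropy equals 0.5 bit. Their sum (1 bit) therefore exceeds $I(X; Y) = H(Y_1) \approx 0.811$ bit, and $I(X; Y)$ is the same for every $r$.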

Fig. 3.

Comparison between integrated information and the sum of transfer entropies (TE). A system consists of two binary units whose states are determined by an AND gate and a noisy AND gate. When the noise level of the noisy AND gate is low, and thus the dependence between the units is strong, the sum of transfer entropies (green line) exceeds the mutual information (black line), whereas integrated information (red line) is always less than the mutual information. Each transfer entropy (blue solid and dotted lines) is always less than or equal to $\Phi_G$.

On the other hand, $\Phi_G$ never exceeds the mutual information (Fig. 3). $\Phi_G$ avoids overestimation by evaluating the strength of multiple influences simultaneously. In contrast, the sum of transfer entropies quantifies each causal influence separately, considering only part of the system. For example, when the transfer entropy from $X_1$ to $Y_2$ is quantified, $Y_1$ is not taken into consideration, which leads to the overestimation. To accurately evaluate the total strength of multiple influences, we need to take a holistic approach, as we proposed to do with $\Phi_G$. The flaw of the simple sum of transfer entropies illustrates the limitation of the part-based approach and the advantage of the holistic approach.

A quantity related to the sum of transfer entropies has been proposed as causal density (21). Originally, causal density was proposed as the normalized sum of the conditional Granger causality from one element to another (21). Because transfer entropy is equivalent to Granger causality (up to a factor of 2) for Gaussian variables (25), the normalized sum of the conditional transfer entropies can be considered a generalization of causal density. Although a simple sum of Granger causalities or transfer entropies is easy to evaluate and may be useful for approximately evaluating the total strength of causal influences, we need to be careful about the problem of overestimation.

Stochastic Interaction.

Another measure, called stochastic interaction (9), was proposed as a different measure of integrated information (19). In the derivation of stochastic interaction, Ay (9) considered a manifold $\mathcal{M}_{SI}$ in which the conditional probability distribution of $Y$ given $X$ is decomposed into the product of the conditional probability distributions of each part (Fig. 1D):

$$q(Y \mid X) = \prod_{i=1}^{m} q(Y_i \mid X_i). \tag{13}$$

This constraint implies the constraint for integrated information (Eq. 10). Thus, $\mathcal{M}_{SI} \subset \mathcal{M}_G$. In addition, this constraint also implies conditional independence among the present states of the parts given the past states of the whole system, $X$:

$$q(Y \mid X) = \prod_{i=1}^{m} q(Y_i \mid X). \tag{14}$$

This constraint corresponds to disconnecting equal-time influences among the present states of the parts given the past states of the whole in addition to across-time influences (Fig. 1D). On the other hand, the constraint in Eq. 10 corresponds to disconnecting only across-time influences (Fig. 1C).

The KL divergence is minimized when $q(X) = p(X)$ and $q(Y_i \mid X_i) = p(Y_i \mid X_i)$ (9). The minimized KL divergence is equal to the stochastic interaction $SI(X; Y)$:

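$$\min_{q \in \mathcal{M}_{SI}} D_{\mathrm{KL}}\left[p(X, Y) \,\|\, q(X, Y)\right] = \sum_{i=1}^{m} H(Y_i \mid X_i) - H(Y \mid X) \tag{15}$$

$$= SI(X; Y). \tag{16}$$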

In contrast to the manifold $\mathcal{M}_G$ considered for $\Phi_G$, the manifold $\mathcal{M}_{SI}$ formed by the constraints for stochastic interaction (Eq. 13) does not include the manifold $\mathcal{M}_I$ formed by the constraints for the mutual information between $X$ and $Y$ (Eq. 3). This is because not only causal influences but also equal-time influences are disconnected in $\mathcal{M}_{SI}$ (Fig. 1D). Stochastic interaction can therefore exceed the total strength of causal influences in the whole system, which violates the theoretical requirement for a measure of integrated information (Eq. 12). Notably, stochastic interaction can be nonzero even when there are no causal influences, i.e., when the mutual information $I(X; Y)$ is 0 (20). To summarize, stochastic interaction does not purely quantify causal influences; rather, it quantifies a mixture of causal influences and equal-time influences.
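The last point can be made concrete with a small example. The sketch below uses illustrative names, two binary units, and entropies in nats. It builds a system with no causal influence at all: $Y$ is independent of $X$, but $Y_1$ and $Y_2$ are identical fair coins. Eq. 15 then gives $SI(X; Y) = \log 2$ even though $I(X; Y) = 0$.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in nats of a probability array (zeros ignored)."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def H(p, keep):
    """Entropy (nats) of the marginal of p over the axes listed in `keep`."""
    drop = tuple(ax for ax in range(p.ndim) if ax not in keep)
    return entropy(p.sum(axis=drop) if drop else p)

X1, X2, Y1, Y2 = 0, 1, 2, 3

def stochastic_interaction(p):
    """SI(X;Y) = H(Y1|X1) + H(Y2|X2) - H(Y|X) for p[x1, x2, y1, y2] (Eq. 15, two parts)."""
    return ((H(p, (X1, Y1)) - H(p, (X1,)))
            + (H(p, (X2, Y2)) - H(p, (X2,)))
            - (H(p, (X1, X2, Y1, Y2)) - H(p, (X1, X2))))

def mutual_information(p):
    """I(X;Y) = H(X) + H(Y) - H(X, Y)."""
    return H(p, (X1, X2)) + H(p, (Y1, Y2)) - H(p, (X1, X2, Y1, Y2))

# No causal influence: Y is independent of X, but Y1 = Y2 is a fair coin.
p = np.zeros((2, 2, 2, 2))
for x1 in range(2):
    for x2 in range(2):
        for y in range(2):
            p[x1, x2, y, y] = 1 / 8
```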

Analytical Calculation for Gaussian Variables

Although we cannot derive a simple analytical expression for $\Phi_G$ in general, we can derive one for Gaussian variables. In this section, we analytically compute $\Phi_G$ when the probability distribution of a system is Gaussian. We also show a close relationship between the proposed measure of integrated information and multivariate Granger causality. Consider the following multivariate autoregressive model,

$$Y = AX + E, \tag{17}$$

where $X$ and $Y$ are the past and present states of a system, $A$ is the connectivity matrix, and $E$ is a vector of Gaussian random variables with mean 0 and covariance matrix $\Sigma(E)$, uncorrelated over time. With Gaussian $X$, the multivariate autoregressive model generates a multivariate Gaussian distribution. Regarding Eq. 17 as a full model, we consider the following as a disconnected model:

$$Y = A'X + E'. \tag{18}$$

When each element forms its own part, the constraints for $\Phi_G$ (Eq. 10) correspond to setting the off-diagonal elements of $A'$ to 0:

$$A'_{ij} = 0 \quad (i \neq j). \tag{19}$$

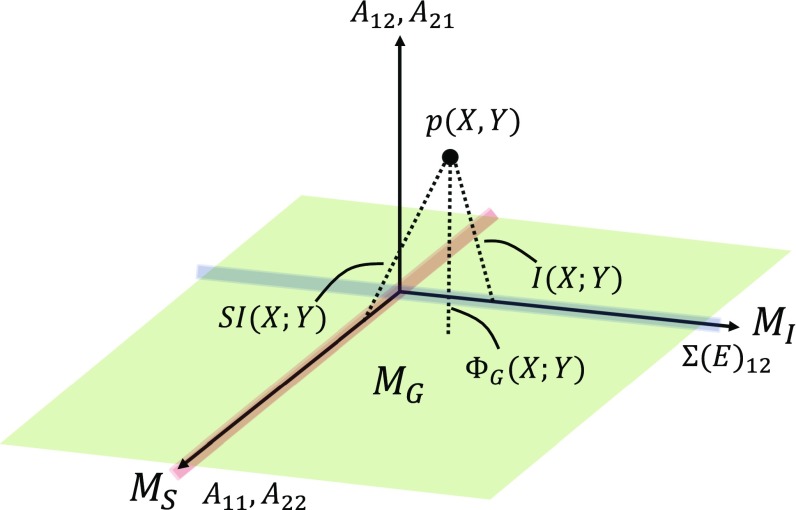

It is instructive to compare this with the constraints for the other information theoretic quantities introduced above: the constraints for mutual information (Fig. 1A), transfer entropy from $X_1$ to $Y_2$ (Fig. 1B), and stochastic interaction (Fig. 1D). They correspond to $A' = 0$; $A'_{21} = 0$; and the off-diagonal elements of both $A'$ and $\Sigma(E)'$ being 0, respectively. Fig. 4 shows the relationship between the manifolds formed by the constraints for mutual information, $\mathcal{M}_I$, stochastic interaction, $\mathcal{M}_{SI}$, and integrated information, $\mathcal{M}_G$. We can see that $\mathcal{M}_I$ and $\mathcal{M}_{SI}$ are both included in $\mathcal{M}_G$. Thus, $\Phi_G$ is smaller than or equal to both $I(X; Y)$ and $SI(X; Y)$. On the other hand, there is no inclusion relation between $\mathcal{M}_I$ and $\mathcal{M}_{SI}$.

Fig. 4.

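Relationships between the manifolds for mutual information (gray line), stochastic interaction (orange line), and integrated information (green plane) in the Gaussian case. $\mathcal{M}_I$ is the line where $A' = 0$, $\mathcal{M}_{SI}$ is the line where the off-diagonal elements of $A'$ and $\Sigma(E)'$ are 0, and $\mathcal{M}_G$ is the plane where the off-diagonal elements of $A'$ are 0.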

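By differentiating the KL divergence between the full model and a disconnected model with respect to $\Sigma(X)'$, $A'$, and $\Sigma(E)'$ (the covariance of $X$, the connectivity matrix, and the noise covariance in the disconnected model), we find that the minimum of the KL divergence satisfies the following equations (details in Supporting Information):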

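$$\Sigma(X)' = \Sigma(X), \tag{20}$$

$$\left(\Sigma(X)\,(A - A')^{\top}\,\Sigma(E)'^{-1}\right)_{ii} = 0, \tag{21}$$

$$\Sigma(E)' = \Sigma(E) + (A - A')\,\Sigma(X)\,(A - A')^{\top}. \tag{22}$$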

By substituting Eqs. 20–22 into the KL divergence, we obtain

$$\Phi_G = \frac{1}{2} \log \frac{|\Sigma(E)'|}{|\Sigma(E)|}. \tag{23}$$

$|\Sigma(E)|$ is called the generalized variance, which is used as a measure of goodness of fit, i.e., the degree of prediction error, in multivariate Granger causality analysis (26, 27). In the Gaussian case, $\Phi_G$ can be interpreted as the log ratio between the prediction error (generalized variance) of the disconnected model, in which the off-diagonal elements of $A'$ are set to 0, and that of the full model. Thus, $\Phi_G$ is consistent with multivariate Granger causality based on the generalized variance. $\Phi_G$ can also be rewritten as the difference between the conditional entropy in the disconnected model and that in the full model,

$$\Phi_G = H_q(Y \mid X) - H_p(Y \mid X). \tag{24}$$

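For comparison, mutual information, transfer entropy, and stochastic interaction are given as $I(X; Y) = \frac{1}{2} \log \frac{|\Sigma(Y)|}{|\Sigma(E)|}$, $TE_{X_1 \to Y_2} = \frac{1}{2} \log \frac{|\Sigma(Y_2 \mid X_2)|}{|\Sigma(Y_2 \mid X)|}$, and $SI(X; Y) = \frac{1}{2} \log \frac{\prod_i |\Sigma(Y_i \mid X_i)|}{|\Sigma(E)|}$, where $\Sigma(Y)$ is the covariance of $Y$, and $\Sigma(Y_i \mid X_i)$ ($i = 1, 2$) and $\Sigma(Y_2 \mid X)$ are the covariances of the conditional probability distributions $p(Y_i \mid X_i)$ and $p(Y_2 \mid X)$.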
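The minimization in Eqs. 20–22 can be sketched numerically. The version below is an illustration under stated assumptions, not the authors' procedure. It takes each element as its own part, so $A'$ is diagonal, and fixes $\Sigma(X)' = \Sigma(X)$ as in Eq. 20. It then alternates between two steps: solving Eq. 21, which is linear in the diagonal of $A'$ once $\Sigma(E)'$ is fixed, and updating $\Sigma(E)'$ by Eq. 22. Neither step can increase the KL divergence. Starting from $A' = 0$, a point of $\mathcal{M}_I$ where the divergence equals $I(X; Y)$, therefore guarantees a result no larger than $I(X; Y)$. The function names and example matrices are hypothetical.

```python
import numpy as np

def gaussian_phi_g(A, cov_x, cov_e, n_iter=1000, tol=1e-13):
    """Sketch of Phi_G (nats) for Y = A X + E, each element taken as its own part.

    Alternating minimization (a solver choice made here, not taken from the text):
    Eq. 21 for diag(A') given Sigma(E)', then Eq. 22 for Sigma(E)' given A'.
    Sigma(X)' = Sigma(X) (Eq. 20) is built in.
    """
    A = np.asarray(A, dtype=float)
    cov_x = np.asarray(cov_x, dtype=float)
    cov_e = np.asarray(cov_e, dtype=float)
    cov_e_q = cov_e + A @ cov_x @ A.T            # start from A' = 0 (the M_I point)
    for _ in range(n_iter):
        w = np.linalg.inv(cov_e_q)
        # Eq. 21, (W (A - diag(d)) Sigma(X))_ii = 0, is linear in d = diag(A'):
        # sum_k W_ik d_k Sigma(X)_ki = (W A Sigma(X))_ii
        d = np.linalg.solve(w * cov_x.T, np.diag(w @ A @ cov_x))
        resid = A - np.diag(d)
        new_cov = cov_e + resid @ cov_x @ resid.T  # Eq. 22
        done = np.max(np.abs(new_cov - cov_e_q)) < tol
        cov_e_q = new_cov
        if done:
            break
    # Eq. 23: half the log ratio of generalized variances
    return 0.5 * (np.linalg.slogdet(cov_e_q)[1] - np.linalg.slogdet(cov_e)[1])

def gaussian_mutual_information(A, cov_x, cov_e):
    """I(X;Y) = 1/2 log |Sigma(Y)| / |Sigma(E)|, with Sigma(Y) = A Sigma(X) A^T + Sigma(E)."""
    A, cov_x, cov_e = (np.asarray(m, dtype=float) for m in (A, cov_x, cov_e))
    cov_y = A @ cov_x @ A.T + cov_e
    return 0.5 * (np.linalg.slogdet(cov_y)[1] - np.linalg.slogdet(cov_e)[1])

A = np.array([[0.5, 0.4], [0.3, 0.2]])           # hypothetical connectivity with cross-influences
phi = gaussian_phi_g(A, np.eye(2), np.eye(2))
mi = gaussian_mutual_information(A, np.eye(2), np.eye(2))
```

For a diagonal $A$ (no cross-influences), the full model already lies in $\mathcal{M}_G$, and the sketch returns 0. The linear system in the loop is always solvable because the element-wise product of two positive-definite matrices is positive definite.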

Hierarchical Structure

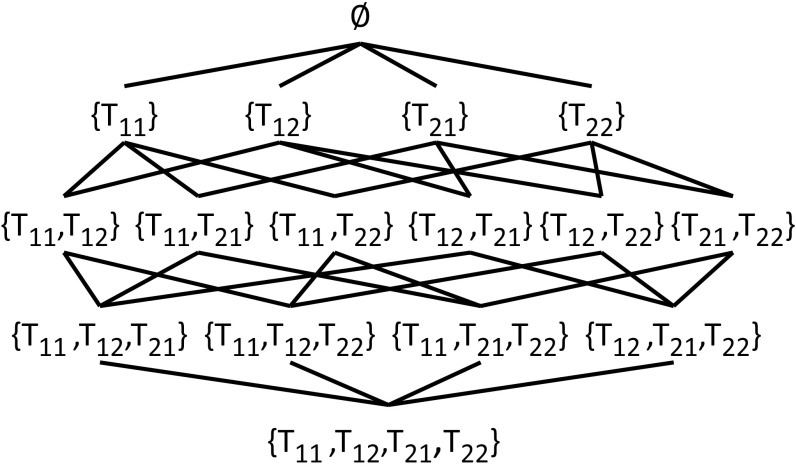

We can construct a hierarchical structure of the disconnected models and use it to systematically quantify all possible combinations of causal influences (28). For example, in a system consisting of two elements, there are four across-time influences, $X_1 \to Y_1$, $X_1 \to Y_2$, $X_2 \to Y_1$, and $X_2 \to Y_2$, which are denoted by $A_{11}$, $A_{21}$, $A_{12}$, and $A_{22}$, respectively, following the convention $Y = AX + E$ of Eq. 17. Although we considered only the cross-influences $A_{12}$ and $A_{21}$ for transfer entropy and integrated information, we can also quantify the self-influences $A_{11}$ and $A_{22}$ by imposing the corresponding constraints, such as $q(Y_1 \mid X) = q(Y_1 \mid X_2)$ and $q(Y_2 \mid X) = q(Y_2 \mid X_1)$, respectively. The set of all possible disconnected models forms a partially ordered set with respect to the KL divergence between the full and the disconnected models (Fig. 5). If one disconnected model is obtained from another by removing or adding a single influence, the two models are connected by a line in Fig. 5. From bottom to top in Fig. 5, information loss increases as more influences are disconnected. Note that there is no ordering relationship between disconnected models at the same level of the hierarchy. At the top of Fig. 5, all four influences are disconnected, and thus information loss is maximized; this corresponds to the mutual information $I(X; Y)$. The hierarchical structure generalizes the related measures mentioned in this article and provides a clear perspective on the relationships among different measures.
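The partial order in Fig. 5 can be enumerated mechanically. This sketch only builds the lattice of disconnected models for two units and does not compute any KL divergences. Each node is the set of disconnected influences, so the empty set is the full model and the set of all four influences is the mutual-information model. The labels follow the $A_{ij}$ convention above and are otherwise hypothetical.

```python
from itertools import combinations

# Hypothetical labels: A_ij is the influence from X_j to Y_i (as in Y = A X + E).
INFLUENCES = ("A11", "A12", "A21", "A22")

def hierarchy(influences=INFLUENCES):
    """Nodes: sets of disconnected influences; edges: pairs differing by exactly one influence."""
    nodes = [frozenset(c) for k in range(len(influences) + 1)
             for c in combinations(influences, k)]
    edges = [(a, b) for a in nodes for b in nodes if a < b and len(b - a) == 1]
    return nodes, edges

nodes, edges = hierarchy()
# Level k = number of disconnected influences (bottom: full model; top: I(X;Y)).
levels = {k: [n for n in nodes if len(n) == k] for k in range(len(INFLUENCES) + 1)}
```

The model for $\Phi_G$ is the level-2 node in which only the cross-influences $A_{12}$ and $A_{21}$ are disconnected.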

Fig. 5.

A hierarchical structure of the disconnected models where across-time influences are broken in a system consisting of two units. All possible combinations of influences retained in the disconnected model are displayed. If two models are related with the addition or removal of one influence, they are connected by a line. The KL divergence between the full and the disconnected model increases from Bottom to Top.

Discussion

In this paper, we proposed a unified framework based on information geometry, which enables us to quantify multiple influences without overestimation and without confounding by noncausal influences. With this framework, we uniquely derived the measure of integrated information, $\Phi_G$. Moreover, our framework enables a complete description of causal relationships within a system by quantifying any combination of causal influences in a hierarchical manner, as shown in Fig. 5. We expect that our framework can be used in diverse research fields, including neuroscience (29, 30), where network connectivity analysis has been an active research topic (31), and in particular consciousness research (32–34), because information integration is considered to be a key prerequisite of conscious information processing in the brain (10, 11).

To apply the measure of integrated information to real data, we need to resolve several practical difficulties. First, the computational cost increases exponentially with the system size, so some way of approximating the data is necessary. As we showed in this paper, the Gaussian approximation enables us to compute integrated information analytically, which makes the computation feasible for large systems (Eqs. 20–23). However, in real-world systems, including brains, nonlinearity can often be significant, and the Gaussian approximation may fit the data poorly. In such cases, transforming time series data into a sequence of discrete symbols can result in a more accurate approximation (34, 35). Our measure of integrated information can be computed for such discrete distributions, as shown in Supporting Information. Second, we need to find an appropriate partition of a system, which is an important problem in IIT (16). The computational cost of finding the optimal partition also increases exponentially. To overcome this difficulty, an effective optimization method is needed, possibly drawing on methods from discrete mathematics.

From a theoretical perspective, an interesting direction for future research is to replace Postulates 2 and 3 with different ones. For Postulate 2, which defines the operation of disconnecting causal influences, we could use the interventional formalism (23, 36), which quantifies causal influences based on the mechanisms of a system rather than on observation of the system. For Postulate 3, which defines the difference between the full model and a disconnected model, we could replace the KL divergence with other measures (24), such as the optimal transport distance, also known as the earth mover’s distance, which is considered important in IIT (17) and has also been shown to be useful in statistical machine learning (37). Our framework based on information geometry can be used generally to derive different measures of causal influences from such different postulates and to analyze the different geometric structures they induce.

Manifold of Probability Distributions

Information geometry deals with a manifold of probability distributions and elucidates its geometry. Each point in the manifold represents a particular probability distribution. For example, consider a discrete probability distribution $p(x)$, where $x$ is a discrete random variable taking $n + 1$ different values, $x \in \{0, 1, \dots, n\}$. Let $p_i$ be the probability that $x$ takes the value $i$,

$$p_i = \Pr\{x = i\}, \quad i = 0, 1, \dots, n. \tag{S1}$$

Because the sum of probabilities has to be 1,

$$\sum_{i=0}^{n} p_i = 1, \tag{S2}$$

the probability distribution $p(x)$ is parameterized by the $n$-dimensional vector of probabilities

$$\mathbf{p} = (p_1, p_2, \dots, p_n). \tag{S3}$$

Thus, the set of all possible probability distributions $p(x)$ forms an $n$-dimensional manifold. The vector of probabilities, $\mathbf{p}$, serves as a coordinate system of the manifold. This manifold is called the probability simplex and is denoted by $S_n$.

Another example is a univariate Gaussian distribution,

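$$p(x; \mu, \sigma) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left\{-\frac{(x - \mu)^2}{2\sigma^2}\right\}, \tag{S4}$$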

where $x$ is a continuous random variable, $\mu$ is the mean, and $\sigma^2$ is the variance. A Gaussian distribution is specified by two parameters, $\mu$ and $\sigma$. Thus, the set of Gaussian distributions forms a two-dimensional manifold parameterized by $\mu$ and $\sigma$. The coordinate system of the manifold is $\xi = (\mu, \sigma)$.

Discrete probability distributions and Gaussian distributions belong to a broad class of probability distributions called the exponential family. The probability distributions in the exponential family are written in the form

$$p(x; \theta) = \exp\left\{\sum_{i=1}^{n} \theta_i k_i(x) + r(x) - \psi(\theta)\right\}, \tag{S5}$$

where $k_i(x)$ and $r(x)$ are functions of the random variable $x$, and $\psi(\theta)$ is the normalization factor. The set of parameters $\theta = (\theta_1, \dots, \theta_n)$ specifies the probability distribution. Thus, the exponential family of probability distributions forms an $n$-dimensional manifold with the coordinate system $\theta$.
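The categorical distribution on $\{0, \dots, n\}$ is a concrete instance of Eq. S5. It is an exponential family with $k_i(x) = \delta_{ix}$ (1 if $x = i$, else 0), $r(x) = 0$, natural coordinates $\theta_i = \log(p_i / p_0)$, and $\psi(\theta) = \log(1 + \sum_i e^{\theta_i}) = -\log p_0$. The sketch below converts between the coordinates $\mathbf{p}$ of $S_n$ and $\theta$; the function names are illustrative.

```python
import numpy as np

def p_to_theta(p):
    """Natural coordinates of a categorical distribution on {0, ..., n}: theta_i = log(p_i / p_0)."""
    p = np.asarray(p, dtype=float)
    return np.log(p[1:] / p[0])

def psi(theta):
    """Normalization factor psi(theta) = log(1 + sum_i exp(theta_i))."""
    return float(np.log1p(np.sum(np.exp(theta))))

def theta_to_p(theta):
    """Inverse map via Eq. S5: log p(x) = theta_x - psi(theta), with theta_0 := 0."""
    theta = np.asarray(theta, dtype=float)
    return np.exp(np.concatenate(([0.0], theta)) - psi(theta))

p = np.array([0.1, 0.2, 0.3, 0.4])   # a point of S_3 (n = 3)
theta = p_to_theta(p)
```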

Requirements for a Measure of Difference

As detailed in the main text, we postulated that the strength of influences is quantified by a minimized difference between the full model $p$ and a disconnected model $q$, which is denoted by $D[p:q]$. There are many possible ways to quantify a difference between probability distributions (22, 24). We consider four theoretical requirements that the measure of a difference should satisfy so that it has desirable mathematical properties. We can prove that the only measure of a difference that satisfies all four requirements is the Kullback–Leibler (KL) divergence.

The first requirement is as follows.

Requirement 1. $D[p:q]$ should be a divergence.

A divergence, $D[P:Q]$, is a quantity that measures the degree of separation between two points $P$ and $Q$, satisfying the following criteria:

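i) $D[P:Q] \ge 0$.

ii) $D[P:Q] = 0$ if and only if $P = Q$.

iii) When $P$ and $Q$ are sufficiently close, denoting their coordinates by $\xi_P$ and $\xi_Q = \xi_P + d\xi$, the Taylor expansion of $D$ is written as

$$D[\xi_P : \xi_P + d\xi] = \frac{1}{2} \sum_{i,j} g_{ij}(\xi_P)\, d\xi_i\, d\xi_j + O(|d\xi|^3), \tag{S6}$$

and the matrix $G = (g_{ij})$ is positive definite.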

The first and second criteria are the minimum requirements for the quantity to be interpreted as a measure of separation between two points. A divergence is a weaker notion than a distance because it need not satisfy the axioms of distance; i.e., a divergence is in general not symmetric, $D[P:Q] \neq D[Q:P]$, and does not satisfy the triangle inequality. When the third criterion is satisfied, the manifold equipped with the positive-definite matrix $G$ is said to be Riemannian. As explained in the previous section, the probability distributions of the full model and of a disconnected model can each be represented as a point in the manifold of probability distributions, $\mathcal{M}$. We denote the two points corresponding to the full model and a disconnected model by $P$ and $Q$, respectively.
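For the KL divergence on the probability simplex, the matrix $G$ in Eq. S6 is the Fisher information matrix. In the coordinates $\xi = (p_1, \dots, p_n)$, its entries are $g_{ij} = \delta_{ij}/p_i + 1/p_0$. The sketch below checks numerically that $D_{\mathrm{KL}}[\xi : \xi + d\xi]$ agrees with $\frac{1}{2} d\xi^{\top} G\, d\xi$ up to a small third-order error and that $G$ is positive definite. The names and values are illustrative.

```python
import numpy as np

def kl(p, q):
    """D_KL[p || q] in nats for strictly positive probability vectors."""
    return float(np.sum(p * np.log(p / q)))

def full(xi):
    """Recover (p_0, p_1, ..., p_n) from the simplex coordinates xi = (p_1, ..., p_n)."""
    return np.concatenate(([1.0 - xi.sum()], xi))

def fisher_metric(xi):
    """G = diag(1 / p_i) + (1 / p_0) * ones((n, n)) in the coordinates xi."""
    p0 = 1.0 - xi.sum()
    return np.diag(1.0 / xi) + np.full((xi.size, xi.size), 1.0 / p0)

xi = np.array([0.2, 0.3, 0.4])                # p_0 = 0.1
dxi = 1e-3 * np.array([1.0, -2.0, 0.5])       # a small displacement
exact = kl(full(xi), full(xi + dxi))
quadratic = 0.5 * dxi @ fisher_metric(xi) @ dxi
```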

The second requirement is as follows.

Requirement 2. $D[P : Q]$ should be invariant under invertible transformations of random variables.

$D[P : Q]$ should be invariant when a random variable $x$ is transformed by an invertible function $y = \varphi(x)$, so that $x$ and $y$ are in one-to-one correspondence. Such a transformation causes no loss of information. Invariance is a necessary condition because the measure of a difference should not depend on a particular choice of variables; it should remain unchanged under such lossless transformations of variables,

$$D[p(x) : q(x)] = D[\bar{p}(y) : \bar{q}(y)], \tag{S7}$$

where $\bar{p}$ and $\bar{q}$ are the distributions of $y$ induced by $p$ and $q$, respectively.

Infinitely many invariant divergences can be constructed. In general, when an invariant divergence $D$ is given, $F(D)$ is also invariant, where $F$ is an arbitrary monotonically increasing function that satisfies $F(0) = 0$ and $F'(0) > 0$. To resolve this arbitrariness, a third requirement is necessary.
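To make Requirement 2 concrete, here is a small sketch (our own example, not from the paper) using two univariate Gaussians and the invertible map $y = a x + b$. The KL divergence has a closed form for Gaussians and is unchanged by the map, whereas the $L_2$ distance between the two density functions, a measure we chose only for contrast, is rescaled by $1/\sqrt{|a|}$ and is therefore not invariant. The parameter values are arbitrary.

```python
import math


def kl_gauss(m1, s1, m2, s2):
    """Closed-form KL[N(m1, s1^2) : N(m2, s2^2)]."""
    return math.log(s2 / s1) + (s1**2 + (m1 - m2) ** 2) / (2 * s2**2) - 0.5


def l2_gauss(m1, s1, m2, s2):
    """L2 distance between the two Gaussian density functions (not invariant)."""
    def overlap(ma, sa, mb, sb):  # integral of N(x; ma, sa^2) * N(x; mb, sb^2) dx
        v = sa**2 + sb**2
        return math.exp(-(ma - mb) ** 2 / (2 * v)) / math.sqrt(2 * math.pi * v)
    sq = overlap(m1, s1, m1, s1) + overlap(m2, s2, m2, s2) - 2 * overlap(m1, s1, m2, s2)
    return math.sqrt(sq)


def affine(m, s, a, b):
    """Mean and standard deviation of y = a*x + b when x ~ N(m, s^2); a != 0."""
    return a * m + b, abs(a) * s


p, q = (0.0, 1.0), (1.0, 2.0)   # (mean, standard deviation), arbitrary
a, b = 3.0, -1.0                 # an invertible affine transformation
print(kl_gauss(*p, *q), kl_gauss(*affine(*p, a, b), *affine(*q, a, b)))  # equal
print(l2_gauss(*p, *q), l2_gauss(*affine(*p, a, b), *affine(*q, a, b)))  # differ
```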

Requirement 3. $D[P : Q]$ should be decomposable.

When a divergence can be written as a sum of component-wise divergences for some function $d$,

$$D[p : q] = \sum_x d\big(p(x), q(x)\big), \tag{S8}$$

it is said to be decomposable. It has been proved that an invariant and decomposable divergence is uniquely written in the form (22)

$$D_f[p : q] = \sum_x p(x)\, f\!\left(\frac{q(x)}{p(x)}\right), \tag{S9}$$

where $f$ is a differentiable convex function satisfying

$$f(1) = 0, \qquad f''(1) = 1. \tag{S10}$$

This type of divergence is called an $f$-divergence.
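The sketch below (illustrative only; the function names and example distributions are ours) evaluates the $f$-divergence form directly. With $f(u) = -\log u$ it reproduces the KL divergence; with $f(u) = 2(\sqrt{u} - 1)^2$ it gives a symmetric, Hellinger-type divergence. Both choices satisfy $f(1) = 0$ and $f''(1) = 1$, which is why invariance and decomposability alone do not single out the KL divergence and a further requirement is needed.

```python
import numpy as np


def f_divergence(p, q, f):
    """D_f[p : q] = sum_x p(x) f(q(x) / p(x)); decomposable by construction."""
    p, q = np.asarray(p, float), np.asarray(q, float)
    return float(np.sum(p * f(q / p)))


f_kl = lambda u: -np.log(u)                      # yields the KL divergence D_KL[p : q]
f_hel = lambda u: 2.0 * (np.sqrt(u) - 1.0) ** 2  # yields 2 * sum (sqrt(p) - sqrt(q))^2

p = np.array([0.5, 0.3, 0.2])
q = np.array([0.2, 0.5, 0.3])
kl_direct = float(np.sum(p * np.log(p / q)))
print(f_divergence(p, q, f_kl), kl_direct)                  # same value
print(f_divergence(p, q, f_hel), f_divergence(q, p, f_hel))  # symmetric in p and q
```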

An arbitrariness still remains in the choice of the function $f$ among the $f$-divergences. It can be resolved by requiring flatness of the manifold of probability distributions.

Requirement 4. $D[P : Q]$ should be flat.

A divergence induces a Riemannian metric and a dual pair of affine connections coupled by the metric. The divergence is said to be "flat" when it induces a flat structure in the underlying manifold, that is, when the curvatures of both dual connections are 0. A dually flat manifold has useful properties because it can be regarded as a generalization of a Euclidean space. Importantly, information geometry shows that the generalized Pythagorean theorem and the related projection theorem hold in a dually flat manifold. These theorems play pivotal roles in this paper, as we show below, as well as in many applications in statistics, machine learning, and information theory, because they make the minimization of a divergence easy to solve. Because we quantify causal influences as a minimized divergence, this property is well suited to our purpose.

With the requirement of dual flatness, the divergence is now uniquely determined and is found to be the well-known KL divergence (22),

$$D_{\mathrm{KL}}[p : q] = \sum_x p(x) \log \frac{p(x)}{q(x)}. \tag{S11}$$

To summarize, the KL divergence is the only divergence that is invariant, decomposable, and flat, satisfying all of the theoretical requirements for a measure of difference with the desired mathematical properties. Thus, as in Postulate 3 in the main text, we propose that the difference between the full model and a disconnected model should be quantified by the KL divergence.

Pythagorean Theorem

As stated in the previous section, the KL divergence induces a dually flat structure in the manifold of probability distributions. A dually flat manifold can be regarded as a generalization of Euclidean space, and in it a generalized Pythagorean theorem holds. Consider three points (probability distributions) $P$, $Q$, and $R$ in a dually flat manifold. When the dual geodesic connecting $P$ and $Q$ is orthogonal to the geodesic connecting $Q$ and $R$, the following Pythagorean relation holds:

$$D_{\mathrm{KL}}[P : R] = D_{\mathrm{KL}}[P : Q] + D_{\mathrm{KL}}[Q : R]. \tag{S12}$$

Here, the term "geodesic" does not mean the shortest path connecting two points; it means a straight line connecting two points in an affine coordinate system. The geodesic connecting $Q$ and $R$ is represented as

$$\theta(t) = (1 - t)\,\theta_Q + t\,\theta_R, \quad 0 \le t \le 1, \tag{S13}$$

where $\theta_Q$ and $\theta_R$ represent the positions of $Q$ and $R$, respectively, in the coordinate system $\theta$. Its tangent vector is given by

$$\dot{\theta}(t) = \theta_R - \theta_Q. \tag{S14}$$

In a dually flat manifold, there is a dual coordinate system $\eta$ that is coupled with $\theta$ via the Legendre transformation. The dual geodesic connecting $P$ and $Q$ is represented as

$$\eta(t) = (1 - t)\,\eta_P + t\,\eta_Q, \quad 0 \le t \le 1, \tag{S15}$$

where $\eta_P$ and $\eta_Q$ represent the positions of $P$ and $Q$, respectively, in the dual coordinate system $\eta$. Its tangent vector is given by

$$\dot{\eta}(t) = \eta_Q - \eta_P. \tag{S16}$$

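The two geodesics are orthogonal when their tangent vectors (Eqs. S14 and S16) are orthogonal. Because $\theta$ and $\eta$ are dual coordinates, this inner product reduces to a plain sum of componentwise products:

$$\sum_i \left(\eta_{Q,i} - \eta_{P,i}\right)\left(\theta_{R,i} - \theta_{Q,i}\right) = 0.$$

It can be shown that this orthogonality condition is equivalent to the Pythagorean relation in Eq. S12 (22).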
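A numerical sketch of this equivalence for categorical distributions, viewed as an exponential family with $\theta_i = \log(p_i / p_0)$ and dual coordinates $\eta_i = p_i$ ($i \ge 1$). For arbitrary points $P$, $Q$, and $R$ (random, hypothetical), the quantity $D_{\mathrm{KL}}[P:R] - D_{\mathrm{KL}}[P:Q] - D_{\mathrm{KL}}[Q:R]$ equals the inner product of the two tangent vectors, so the Pythagorean relation holds exactly when that inner product vanishes.

```python
import numpy as np


def theta(p):
    """Natural coordinates of a categorical distribution: log(p_i / p_0), i >= 1."""
    return np.log(p[1:] / p[0])


def eta(p):
    """Dual (expectation) coordinates: p_i, i >= 1."""
    return p[1:]


def kl(p, q):
    return float(np.sum(p * np.log(p / q)))


rng = np.random.default_rng(0)
P, Q, R = (rng.dirichlet(np.ones(4)) for _ in range(3))   # arbitrary points

defect = kl(P, R) - kl(P, Q) - kl(Q, R)                   # deviation from Eq. S12
inner = float(np.dot(eta(Q) - eta(P), theta(R) - theta(Q)))
print(defect, inner)                                      # identical up to rounding
```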

Projection Theorem and Pythagorean Relations

We consider minimizing the KL divergence between the full model $p$ and a disconnected model $q$ under constraints,

$$q^{*} = \arg\min_{q \in M_D} D_{\mathrm{KL}}[p : q], \tag{S17}$$

where $q^{*}$ is the minimizer of the KL divergence and $M_D$ is the submanifold on which the constraints are satisfied. According to the projection theorem in information geometry, the closest point $q^{*}$ can be found by orthogonally projecting the point $p$ onto the submanifold $M_D$. Orthogonal projection means that the dual geodesic connecting $p$ and $q^{*}$ is orthogonal to every tangent vector of $M_D$ at the intersection $q^{*}$. If $M_D$ is flat, any geodesic connecting two points of $M_D$ lies entirely in $M_D$, so the dual geodesic connecting $p$ and $q^{*}$ is orthogonal to the geodesic connecting $q^{*}$ and any point $q \in M_D$. Thus, $p$, the closest point $q^{*}$, and any point $q \in M_D$ form an orthogonal triangle. As explained in the previous section, the following Pythagorean relation holds for this triangle:

$$D_{\mathrm{KL}}[p : q] = D_{\mathrm{KL}}[p : q^{*}] + D_{\mathrm{KL}}[q^{*} : q]. \tag{S18}$$

Total Causal Influences: Mutual Information

Total causal influences can be quantified by minimizing the KL divergence under the constraint that the past and present states of the system, $X$ and $Y$, are independent. The constraint is given by

$$q(X, Y) = q(X)\, q(Y). \tag{S19}$$

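As explained in the previous section, the Pythagorean relation (Eq. S18) holds because the submanifold determined by this constraint is flat. From the Pythagorean relation, we have

$$D_{\mathrm{KL}}[p(X,Y) : q(X)q(Y)] = D_{\mathrm{KL}}[p(X,Y) : p(X)p(Y)] + D_{\mathrm{KL}}[p(X) : q(X)] + D_{\mathrm{KL}}[p(Y) : q(Y)]. \tag{S20}$$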

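Because the last two terms of Eq. S20 are nonnegative and vanish only when their arguments coincide, $q(X)$ and $q(Y)$ must be equal to the marginal distributions of $p(X,Y)$ over $X$ and $Y$, respectively:

$$q^{*}(X) = p(X), \qquad q^{*}(Y) = p(Y).$$

The minimized KL divergence is given by

$$\min_{q} D_{\mathrm{KL}}[p(X,Y) : q(X)q(Y)] = H(X) + H(Y) - H(X,Y) = I(X;Y),$$

where $H$ is the entropy and $I(X;Y)$ is the mutual information between $X$ and $Y$.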
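The following sketch checks this numerically for a random, hypothetical joint distribution over a three-state $X$ and a four-state $Y$: the divergence to the product of the marginals equals $H(X) + H(Y) - H(X,Y)$, and any other independent model $q(X)q(Y)$ is farther away by exactly the two extra terms of Eq. S20.

```python
import numpy as np


def kl(p, q):
    return float(np.sum(p * np.log(p / q)))


def entropy(d):
    return -float(np.sum(d * np.log(d)))


rng = np.random.default_rng(0)
p = rng.dirichlet(np.ones(12)).reshape(3, 4)   # hypothetical joint p(X, Y)
px, py = p.sum(axis=1), p.sum(axis=0)          # marginals p(X), p(Y)

mutual_info = kl(p, np.outer(px, py))          # D_KL[p(X,Y) : p(X)p(Y)]
print(mutual_info, entropy(px) + entropy(py) - entropy(p))

# Another independent model q(X)q(Y): the excess divergence is the Eq. S20 terms.
qx, qy = rng.dirichlet(np.ones(3)), rng.dirichlet(np.ones(4))
lhs = kl(p, np.outer(qx, qy))
rhs = mutual_info + kl(px, qx) + kl(py, qy)
print(lhs, rhs)
```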

Partial Causal Influences: Transfer Entropy

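Partial causal influences from one element $X_i$ to another element $Y_j$ can be quantified by minimizing the KL divergence under the constraint that $Y_j$ and $X_i$ are conditionally independent given the past states of the system excluding only $X_i$. The constraint is given by

$$q(Y_j \mid X) = q(Y_j \mid \tilde{X}_i), \tag{S21}$$

where $\tilde{X}_i$ denotes the past states of the system except for $X_i$. The constraint disconnects the causal influence from $X_i$ to $Y_j$. Under the constraint, the disconnected model is expressed as

$$q(X, Y) = q(X)\, q(Y_j \mid \tilde{X}_i)\, q(\tilde{Y}_j \mid X, Y_j),$$

where $\tilde{Y}_j$ denotes the present states of the system except for $Y_j$. The KL divergence between $p(X,Y)$ and $q(X,Y)$ can be decomposed into the following three KL divergences,

$$D_{\mathrm{KL}}[p(X,Y) : q(X,Y)] = D_{\mathrm{KL}}[p(X) : q(X)] + D_{\mathrm{KL}}[p(Y_j \mid X) : q(Y_j \mid \tilde{X}_i)] + D_{\mathrm{KL}}[p(\tilde{Y}_j \mid X, Y_j) : q(\tilde{Y}_j \mid X, Y_j)], \tag{S22}$$

where each conditional KL divergence is averaged over $p$; for example, $D_{\mathrm{KL}}[p(Y_j \mid X) : q(Y_j \mid \tilde{X}_i)] = \sum_{X, Y_j} p(X, Y_j) \log \frac{p(Y_j \mid X)}{q(Y_j \mid \tilde{X}_i)}$.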

When we minimize the KL divergence in Eq. S22, we can minimize the three terms separately. Because the constraint does not affect the first and third terms, they are minimized (become 0) when $q(X)$ and $q(\tilde{Y}_j \mid X, Y_j)$ are equal to the corresponding distributions $p(X)$ and $p(\tilde{Y}_j \mid X, Y_j)$, respectively. Thus, the minimization of the KL divergence in Eq. S22 reduces to

$$\min_{q} D_{\mathrm{KL}}[p(X,Y) : q(X,Y)] = \min_{q(Y_j \mid \tilde{X}_i)} D_{\mathrm{KL}}[p(Y_j \mid X) : q(Y_j \mid \tilde{X}_i)]. \tag{S23}$$

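We can find the minimizer of the KL divergence by using the Pythagorean relation because the submanifold determined by the constraint in Eq. S21 is flat. From the Pythagorean relation, we have

$$D_{\mathrm{KL}}[p(Y_j \mid X) : q(Y_j \mid \tilde{X}_i)] = D_{\mathrm{KL}}[p(Y_j \mid X) : p(Y_j \mid \tilde{X}_i)] + D_{\mathrm{KL}}[p(Y_j \mid \tilde{X}_i) : q(Y_j \mid \tilde{X}_i)], \tag{S24}$$

where we used the relation $\sum_{X_i} p(X, Y_j) = p(\tilde{X}_i, Y_j)$. From Eq. S24, we find that

$$q^{*}(Y_j \mid \tilde{X}_i) = p(Y_j \mid \tilde{X}_i). \tag{S25}$$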

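The minimized KL divergence is calculated as

$$\min_{q} D_{\mathrm{KL}}[p(X,Y) : q(X,Y)] = H(Y_j \mid \tilde{X}_i) - H(Y_j \mid X) = \mathrm{TE}_{X_i \to Y_j \mid \tilde{X}_i},$$

where $H(Y_j \mid \tilde{X}_i)$ [or $H(Y_j \mid X)$] is the conditional entropy given $\tilde{X}_i$ [or $X$] and $\mathrm{TE}_{X_i \to Y_j \mid \tilde{X}_i}$ is the conditional transfer entropy from $X_i$ to $Y_j$ given $\tilde{X}_i$.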
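A minimal sketch for binary variables, assuming a random, hypothetical joint distribution $p(X_1, X_2, Y_j)$ with two past elements, so that the source is $X_i = X_1$ and $\tilde{X}_i = X_2$. It computes $H(Y_j \mid X_2) - H(Y_j \mid X)$, confirms that it equals the objective of Eq. S23 evaluated at $q = p(Y_j \mid X_2)$, and checks that another candidate $q(Y_j \mid X_2)$ exceeds it by exactly the second term of Eq. S24.

```python
import numpy as np

rng = np.random.default_rng(0)
p = rng.dirichlet(np.ones(8)).reshape(2, 2, 2)            # hypothetical p[x1, x2, yj]

p_x = p.sum(axis=2)                                       # p(X1, X2)
p_y_given_x = p / p_x[:, :, None]                         # p(Yj | X)
p_x2y = p.sum(axis=0)                                     # p(X2, Yj): marginalize X1
p_y_given_x2 = p_x2y / p_x2y.sum(axis=1, keepdims=True)   # p(Yj | X2)


def avg_cond_kl(q_y_given_x2):
    """Objective of Eq. S23: sum p(x, yj) log[p(yj | x) / q(yj | x2)]."""
    return float(np.sum(p * np.log(p_y_given_x / q_y_given_x2[None, :, :])))


def cond_entropy(joint, cond):
    """-sum joint * log(cond), i.e., a conditional entropy."""
    return -float(np.sum(joint * np.log(cond)))


te = cond_entropy(p_x2y, p_y_given_x2) - cond_entropy(p, p_y_given_x)  # H(Yj|X2) - H(Yj|X)
print(te, avg_cond_kl(p_y_given_x2))                      # equal

q_other = rng.dirichlet(np.ones(2), size=2)               # another q(Yj | X2); rows sum to 1
print(avg_cond_kl(q_other) >= te)
```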

Integrated Information

We propose to quantify integrated information as the minimized KL divergence,

$$\Phi_G = \min_{q} D_{\mathrm{KL}}[p(X,Y) : q(X,Y)], \tag{S26}$$

under the constraints

$$q(Y_i \mid X) = q(Y_i \mid X_i) \quad \text{for all } i, \tag{S27}$$

where $X_i$ and $Y_i$ represent the past and present states of the elements in the $i$th subsystem, respectively. Unlike in the cases of mutual information and transfer entropy, it is in general difficult to solve this constrained minimization analytically: the submanifold determined by the constraints is not flat, and thus the Pythagorean relation in Eq. S18 does not hold. We therefore solve the constrained minimization of the KL divergence numerically, using optimization methods such as Newton's method.

Integrated Information for Discrete Variables

In the following, we show how to compute $\Phi_G$ when the states of units are represented by discrete variables. As the simplest case, we consider a system consisting of two binary units, each of which takes one of two states. Generalization to other cases is straightforward, although the computations become more involved. In the case of two units, the constraints for integrated information are given by

$$q(Y_1 \mid X_1, X_2) = q(Y_1 \mid X_1), \tag{S28}$$

$$q(Y_2 \mid X_1, X_2) = q(Y_2 \mid X_2). \tag{S29}$$

Under the constraints, the disconnected model is expressed as

$$q(X, Y) = q(X)\, q(Y_1 \mid X_1)\, q(Y_2 \mid X, Y_1),$$

where we used the first constraint (Eq. S28). We cannot further simplify $q(Y_2 \mid X, Y_1)$ by using the second constraint (Eq. S29), so we introduce Lagrange multipliers for the second constraint. Note that because we are considering binary variables, the second constraint is equivalent to the constraint

| [S30] |

With the method of Lagrange multipliers, the Lagrangian is given by

| [S31] |

where , , , and are Lagrange multipliers. The constraint in Eq. S30 is imposed on only one of the states of (we set without loss of generality) because the constraint for the other state of , i.e., , is automatically satisfied due to the constraint of .

The disconnected model that minimizes the KL divergence can be found by differentiating the Lagrangian with respect to $q(X)$, $q(Y_1 \mid X_1)$, and $q(Y_2 \mid X, Y_1)$ and setting the partial derivatives to 0,

| [S32] |

| [S33] |

| [S34] |

From the first equation (Eq. S32), we simply find that

$$q(X) = p(X). \tag{S35}$$

However, the other two equations cannot be solved analytically, so we resort to a numerical method to find their solutions. When we computed $\Phi_G$ in the system of two binary units in Fig. 3 of the main text, we used Newton's method.
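The Python sketch below illustrates the numerical minimization for two binary units. It is not the authors' implementation: instead of Newton's method it uses SciPy's derivative-free Nelder–Mead method on an unconstrained reparametrization we chose so that every candidate $q(Y \mid X)$ satisfies Eqs. S28 and S29 by construction. It fixes $q(X) = p(X)$, which is optimal because the constraints involve only $q(Y \mid X)$. The joint distribution is random and hypothetical (not the model of Fig. 3), states are coded 0/1, and all function names are illustrative. Because the feasible set contains the independent model and is contained in the transfer-entropy constraint set, the result should satisfy $\mathrm{TE}_{X_1 \to Y_2 \mid X_2} \le \Phi_G \le I(X;Y)$.

```python
import numpy as np
from scipy.optimize import minimize


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def logit(u):
    return np.log(u / (1.0 - u))


def disconnected_q(params):
    """Hypothetical parametrization of q(Y1, Y2 | X1, X2), indexed [x1, x2, y1, y2].

    params[0:2] set a[x1] = q(Y1=1 | x1) and params[2:4] set b[x2] = q(Y2=1 | x2),
    so Eqs. S28 and S29 hold by construction; params[4:8] place q(Y1=1, Y2=1 | x)
    strictly inside the interval allowed by those two marginals.
    """
    a, b = sigmoid(params[0:2]), sigmoid(params[2:4])
    w = sigmoid(params[4:8]).reshape(2, 2)
    q = np.empty((2, 2, 2, 2))
    for x1 in range(2):
        for x2 in range(2):
            lo, hi = max(0.0, a[x1] + b[x2] - 1.0), min(a[x1], b[x2])
            c = lo + (hi - lo) * w[x1, x2]
            q[x1, x2] = [[1.0 - a[x1] - b[x2] + c, b[x2] - c],
                         [a[x1] - c, c]]
    return q


def phi_g_two_binary(p):
    """Sketch of Phi_G for p[x1, x2, y1, y2]; q(X) is fixed to p(X)."""
    py_x = p / p.sum(axis=(2, 3), keepdims=True)          # p(Y | X)

    def objective(params):
        q = disconnected_q(params)
        if np.any(q <= 0.0):                               # guard against boundary rounding
            return np.inf
        return float(np.sum(p * np.log(py_x / q)))         # sum_x p(x) KL[p(Y|x) : q(Y|x)]

    # Start at q(Y | X) = p(Y): feasible, with divergence equal to I(X; Y).
    py = p.sum(axis=(0, 1))
    a0, b0, c0 = py[1].sum(), py[:, 1].sum(), py[1, 1]
    lo, hi = max(0.0, a0 + b0 - 1.0), min(a0, b0)
    x0 = np.concatenate([np.full(2, logit(a0)), np.full(2, logit(b0)),
                         np.full(4, logit((c0 - lo) / (hi - lo)))])
    res = minimize(objective, x0, method="Nelder-Mead",
                   options={"maxiter": 20000, "maxfev": 20000, "xatol": 1e-10, "fatol": 1e-14})
    return float(res.fun)


def mutual_information(p):
    px = p.sum(axis=(2, 3), keepdims=True)
    py = p.sum(axis=(0, 1), keepdims=True)
    return float(np.sum(p * np.log(p / (px * py))))


def te_x1_to_y2(p):
    """TE_{X1 -> Y2 | X2} = H(Y2 | X2) - H(Y2 | X1, X2)."""
    pxy2 = p.sum(axis=2)                                   # p[x1, x2, y2]
    py2_x = pxy2 / pxy2.sum(axis=2, keepdims=True)
    px2y2 = pxy2.sum(axis=0)                               # p[x2, y2]
    py2_x2 = px2y2 / px2y2.sum(axis=1, keepdims=True)
    return float(np.sum(pxy2 * np.log(py2_x / py2_x2[None, :, :])))


rng = np.random.default_rng(0)
p = rng.dirichlet(np.ones(16)).reshape(2, 2, 2, 2)        # hypothetical p[x1, x2, y1, y2]
phi = phi_g_two_binary(p)
print(te_x1_to_y2(p), phi, mutual_information(p))         # expect TE <= Phi_G <= I
```

Nelder–Mead never returns a point worse than its starting point, so starting from the independent model guarantees the upper bound even if the search stops early; a gradient-based method such as the Newton's method mentioned in the text would typically converge faster.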

Integrated Information for Gaussian Variables

When the probability distributions are Gaussian distributions, $\Phi_G$ can be analytically computed. For simplicity, we consider partitioning a system into individual elements, so that there are $N$ subsystems, each containing only one unit. The constraints are expressed as

$$q(Y_i \mid X) = q(Y_i \mid X_i), \quad i = 1, \ldots, N. \tag{S36}$$

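Consider the following multivariate autoregressive model,

$$Y = AX + E, \tag{S37}$$

where $X$ is the past state of the system, $Y$ is the present state of the system, $A$ is the connectivity matrix, and $E$ is a vector of Gaussian random variables that are uncorrelated over time. The multivariate autoregressive model is a generative model of a multivariate Gaussian distribution. The joint probability distribution of $X$ and $Y$ is expressed as

$$p(X, Y) = \frac{1}{(2\pi)^{N}\, |\Sigma(X)|^{1/2}\, |\Sigma(E)|^{1/2}} \exp\!\left[-\frac{1}{2} X^{\top}\Sigma(X)^{-1}X - \frac{1}{2}(Y - AX)^{\top}\Sigma(E)^{-1}(Y - AX)\right], \tag{S38}$$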

where $\Sigma(X)$ and $\Sigma(E)$ are the covariance matrices of $X$ and $E$, respectively. We combine $X$ and $Y$ into a new vector $W$,

$$W = \begin{pmatrix} X \\ Y \end{pmatrix}. \tag{S39}$$

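Then, the joint probability distribution of $X$ and $Y$ is rewritten as

$$p(W) = \frac{1}{(2\pi)^{N}\, |\Sigma(W)|^{1/2}} \exp\!\left(-\frac{1}{2} W^{\top}\Sigma(W)^{-1}W\right), \tag{S40}$$

where the covariance matrix of $W$ is given by

$$\Sigma(W) = \begin{pmatrix} \Sigma(X) & \Sigma(X)A^{\top} \\ A\Sigma(X) & \Sigma(E) + A\Sigma(X)A^{\top} \end{pmatrix}, \tag{S41}$$

and the inverse of $\Sigma(W)$ is given by

$$\Sigma(W)^{-1} = \begin{pmatrix} \Sigma(X)^{-1} + A^{\top}\Sigma(E)^{-1}A & -A^{\top}\Sigma(E)^{-1} \\ -\Sigma(E)^{-1}A & \Sigma(E)^{-1} \end{pmatrix}. \tag{S42}$$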
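A quick numerical sanity check of Eqs. S41 and S42, written as a sketch with arbitrary, hypothetical matrices: it builds $\Sigma(W)$ from $A$, $\Sigma(X)$, and $\Sigma(E)$, multiplies it by the block-form inverse, and also confirms that $|\Sigma(W)| = |\Sigma(X)|\,|\Sigma(E)|$, which follows from the Schur complement of the $\Sigma(X)$ block.

```python
import numpy as np


def joint_covariance(A, sigma_x, sigma_e):
    """Sigma(W) for W = (X, Y) with Y = A X + E (Eq. S41)."""
    return np.block([[sigma_x, sigma_x @ A.T],
                     [A @ sigma_x, sigma_e + A @ sigma_x @ A.T]])


def joint_precision(A, sigma_x, sigma_e):
    """Block-form inverse of Sigma(W) (Eq. S42), built without inverting Sigma(W)."""
    ix, ie = np.linalg.inv(sigma_x), np.linalg.inv(sigma_e)
    return np.block([[ix + A.T @ ie @ A, -A.T @ ie],
                     [-ie @ A, ie]])


rng = np.random.default_rng(1)
N = 3
A = 0.3 * rng.standard_normal((N, N))                 # hypothetical connectivity matrix
G = rng.standard_normal((N, N)); sigma_x = G @ G.T + N * np.eye(N)
G = rng.standard_normal((N, N)); sigma_e = G @ G.T + np.eye(N)

sigma_w = joint_covariance(A, sigma_x, sigma_e)
print(np.allclose(sigma_w @ joint_precision(A, sigma_x, sigma_e), np.eye(2 * N)))
print(np.isclose(np.linalg.det(sigma_w), np.linalg.det(sigma_x) * np.linalg.det(sigma_e)))
```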

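Similarly, the joint probability distributions in the disconnected model are given by

$$q(X, Y) = \frac{1}{(2\pi)^{N}\, |\Sigma(X)'|^{1/2}\, |\Sigma(E)'|^{1/2}} \exp\!\left[-\frac{1}{2} X^{\top}\Sigma(X)'^{-1}X - \frac{1}{2}(Y - A'X)^{\top}\Sigma(E)'^{-1}(Y - A'X)\right], \tag{S43}$$

$$q(W) = \frac{1}{(2\pi)^{N}\, |\Sigma(W)'|^{1/2}} \exp\!\left(-\frac{1}{2} W^{\top}\Sigma(W)'^{-1}W\right), \tag{S44}$$

where $\Sigma(X)'$ and $\Sigma(E)'$ are the covariance matrices of $X$ and $E$ in the disconnected model, respectively, $A'$ is the connectivity matrix in the disconnected model, and $\Sigma(W)'$ is obtained from Eq. S41 by replacing $\Sigma(X)$, $\Sigma(E)$, and $A$ with their primed counterparts. The constraints in Eq. S36 correspond to setting the off-diagonal elements of $A'$ to 0:

$$A'_{ij} = 0 \quad (i \neq j). \tag{S45}$$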

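The KL divergence between the full model and a disconnected model is given by

$$D_{\mathrm{KL}}[p(W) : q(W)] = \frac{1}{2}\left[\operatorname{tr}\!\left(\Sigma(W)'^{-1}\Sigma(W)\right) - 2N + \log\frac{|\Sigma(W)'|}{|\Sigma(W)|}\right], \tag{S46}$$

where $N$ is the number of variables in $X$ or $Y$ and $2N$ is the total number of variables in $X$ and $Y$. The determinant of $\Sigma(W)$ can be calculated as

$$|\Sigma(W)| = |\Sigma(X)|\,\left|\Sigma(E) + A\Sigma(X)A^{\top} - A\Sigma(X)\Sigma(X)^{-1}\Sigma(X)A^{\top}\right| \tag{S47}$$

$$= |\Sigma(X)|\,|\Sigma(E)|, \tag{S48}$$

and in the same way $|\Sigma(W)'| = |\Sigma(X)'|\,|\Sigma(E)'|$.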

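Thus, the KL divergence is rewritten as

$$D_{\mathrm{KL}}[p(W) : q(W)] = \frac{1}{2}\left[\operatorname{tr}\!\left(\Sigma(W)'^{-1}\Sigma(W)\right) - 2N + \log\frac{|\Sigma(X)'|}{|\Sigma(X)|} + \log\frac{|\Sigma(E)'|}{|\Sigma(E)|}\right]. \tag{S49}$$

The trace of $\Sigma(W)'^{-1}\Sigma(W)$ is calculated as

$$\operatorname{tr}\!\left(\Sigma(W)'^{-1}\Sigma(W)\right) = \operatorname{tr}\!\left(\Sigma(X)'^{-1}\Sigma(X)\right) + \operatorname{tr}\!\left(\Sigma(E)'^{-1}\Sigma(E)\right) + \operatorname{tr}\!\left(\Sigma(E)'^{-1}(A - A')\Sigma(X)(A - A')^{\top}\right). \tag{S50}$$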
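The sketch below checks Eqs. S46, S49, and S50 against each other with random, hypothetical parameters: the KL divergence computed from the full $2N \times 2N$ covariance matrices agrees with the blockwise expression assembled from the $X$ and $E$ blocks. Here `A2`, `sx2`, and `se2` stand in for $A'$ (diagonal, as required by Eq. S45), $\Sigma(X)'$, and $\Sigma(E)'$.

```python
import numpy as np


def logdet(m):
    return np.linalg.slogdet(m)[1]


def joint_cov(A, sx, se):
    """Sigma(W) of Eq. S41 (the primed version when given primed arguments)."""
    return np.block([[sx, sx @ A.T], [A @ sx, se + A @ sx @ A.T]])


def kl_full(cov_p, cov_q):
    """Eq. S46: KL between zero-mean Gaussians with covariances cov_p and cov_q."""
    k = cov_p.shape[0]
    return 0.5 * (np.trace(np.linalg.solve(cov_q, cov_p)) - k + logdet(cov_q) - logdet(cov_p))


def kl_blockwise(A, sx, se, A2, sx2, se2):
    """Eqs. S49 and S50: the same KL from the X- and E-blocks only."""
    n = A.shape[0]
    B = A - A2
    trace = (np.trace(np.linalg.solve(sx2, sx)) + np.trace(np.linalg.solve(se2, se))
             + np.trace(np.linalg.solve(se2, B @ sx @ B.T)))
    return 0.5 * (trace - 2 * n + logdet(sx2) - logdet(sx) + logdet(se2) - logdet(se))


def random_spd(rng, n):
    G = rng.standard_normal((n, n))
    return G @ G.T + n * np.eye(n)


rng = np.random.default_rng(2)
n = 3
A, A2 = 0.4 * rng.standard_normal((n, n)), np.diag(0.4 * rng.standard_normal(n))
sx, se, sx2, se2 = (random_spd(rng, n) for _ in range(4))
print(kl_full(joint_cov(A, sx, se), joint_cov(A2, sx2, se2)),
      kl_blockwise(A, sx, se, A2, sx2, se2))   # equal up to rounding
```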

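The derivatives of the KL divergence with respect to $\Sigma(X)'$, the diagonal elements $A'_{ii}$, and $\Sigma(E)'$ can be calculated as

$$\frac{\partial D_{\mathrm{KL}}}{\partial \Sigma(X)'} = \frac{1}{2}\left[\Sigma(X)'^{-1} - \Sigma(X)'^{-1}\Sigma(X)\Sigma(X)'^{-1}\right], \tag{S51}$$

$$\frac{\partial D_{\mathrm{KL}}}{\partial A'_{ii}} = -\left[\Sigma(E)'^{-1}(A - A')\Sigma(X)\right]_{ii}, \tag{S52}$$

$$\frac{\partial D_{\mathrm{KL}}}{\partial \Sigma(E)'} = \frac{1}{2}\left[\Sigma(E)'^{-1} - \Sigma(E)'^{-1}\left(\Sigma(E) + (A - A')\Sigma(X)(A - A')^{\top}\right)\Sigma(E)'^{-1}\right], \tag{S53}$$

where we used

$$\frac{\partial}{\partial \Sigma}\log|\Sigma| = \Sigma^{-1}, \qquad \frac{\partial}{\partial \Sigma}\operatorname{tr}\!\left(\Sigma^{-1}B\right) = -\Sigma^{-1}B\,\Sigma^{-1}. \tag{S54}$$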

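Thus, the KL divergence is minimized when

$$\Sigma(X)' = \Sigma(X), \tag{S55}$$

$$\left[\Sigma(E)'^{-1}(A - A')\Sigma(X)\right]_{ii} = 0, \quad i = 1, \ldots, N, \tag{S56}$$

$$\Sigma(E)' = \Sigma(E) + (A - A')\Sigma(X)(A - A')^{\top}. \tag{S57}$$

By substituting Eqs. S55 and S57 into Eq. S50, we find that the trace of $\Sigma(W)'^{-1}\Sigma(W)$ is $2N$,

$$\operatorname{tr}\!\left(\Sigma(W)'^{-1}\Sigma(W)\right) = N + N = 2N. \tag{S58}$$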

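Thus, the minimized KL divergence, $\Phi_G$, is simply written as

$$\Phi_G = \frac{1}{2}\log\frac{|\Sigma(E)'|}{|\Sigma(E)|}, \tag{S59}$$

where $\Sigma(E)'$ is given by Eq. S57 with $A'$ satisfying Eq. S56.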
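One possible way to evaluate Eq. S59 in practice is sketched below; the alternating scheme is our own choice and is not specified in the text. For a fixed $A'$, Eq. S57 gives $\Sigma(E)'$ in closed form; for a fixed $\Sigma(E)'$, Eq. S56 is linear in the diagonal of $A'$, namely $(\Sigma(E)'^{-1} \circ \Sigma(X))\,d = \operatorname{diag}(\Sigma(E)'^{-1} A \Sigma(X))$ with $\circ$ the elementwise product. Each half-step cannot increase the divergence, and starting from $A' = 0$ (where the value equals $I(X;Y)$) keeps the estimate between 0 and the mutual information. The matrices $A$, $\Sigma(X)$, and $\Sigma(E)$ are arbitrary illustrative values, and $\Sigma(X)$ is not required here to be the stationary covariance of the process.

```python
import numpy as np


def logdet(m):
    return np.linalg.slogdet(m)[1]


def gaussian_phi_g(A, sigma_x, sigma_e, n_iter=5000, tol=1e-14):
    """Sketch: Phi_G (Eq. S59) for Y = A X + E, each element its own subsystem.

    Alternating minimization (our choice): Sigma(E)' from Eq. S57 for fixed A',
    then the diagonal of A' from the linear system implied by Eq. S56.
    Sigma(X)' = Sigma(X) by Eq. S55, so it never needs updating.
    """
    d = np.zeros(A.shape[0])                                  # diagonal of A'; start at A' = 0
    phi_prev = np.inf
    for _ in range(n_iter):
        B = A - np.diag(d)
        M = np.linalg.inv(sigma_e + B @ sigma_x @ B.T)        # Sigma(E)'^{-1} via Eq. S57
        # [M (A - diag(d)) Sigma(X)]_ii = 0  <=>  (M * Sigma(X)) d = diag(M A Sigma(X))
        d = np.linalg.solve(M * sigma_x, np.diag(M @ A @ sigma_x))
        B = A - np.diag(d)
        sigma_e_prime = sigma_e + B @ sigma_x @ B.T
        phi = 0.5 * (logdet(sigma_e_prime) - logdet(sigma_e))  # Eq. S59
        if phi_prev - phi < tol:
            break
        phi_prev = phi
    return phi, np.diag(d), sigma_e_prime


A = np.array([[0.5, 0.2], [0.1, 0.4]])                        # arbitrary example
sigma_x, sigma_e = np.eye(2), 0.1 * np.eye(2)
phi, A_prime, _ = gaussian_phi_g(A, sigma_x, sigma_e)
mi = 0.5 * (logdet(A @ sigma_x @ A.T + sigma_e) - logdet(sigma_e))   # I(X;Y)
print(phi, mi)                                                # 0 <= Phi_G <= I(X;Y)
```

With a diagonal $A$ there is no influence between elements, and the sketch returns $\Phi_G \approx 0$ after a single update.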

Acknowledgments

We thank Charles Yokoyama, Matthew Davidson, and Dror Cohen for helpful comments on the manuscript. M.O. was supported by a Grant-in-Aid for Young Scientists (B) from the Ministry of Education, Culture, Sports, Science, and Technology of Japan (26870860). N.T. was supported by the Future Fellowship (FT120100619) and the Discovery Project (DP130100194) from the Australian Research Council. M.O. and N.T. were supported by CREST, Japan Science and Technology Agency.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1603583113/-/DCSupplemental.

References

1. Ito S, Sagawa T. Information thermodynamics on causal networks. Phys Rev Lett. 2013;111(18):180603. doi: 10.1103/PhysRevLett.111.180603.

2. Granger CW. Some recent development in a concept of causality. J Econom. 1988;39(1):199–211.

3. Bansal M, Belcastro V, Ambesi-Impiombato A, Di Bernardo D. How to infer gene networks from expression profiles. Mol Syst Biol. 2007;3(1):78. doi: 10.1038/msb4100120.

4. Xiang R, Neville J, Rogati M. Proceedings of the 19th International Conference on World Wide Web. Association for Computing Machinery; New York: 2010. Modeling relationship strength in online social networks; pp. 981–990.

5. Sugihara G, et al. Detecting causality in complex ecosystems. Science. 2012;338(6106):496–500. doi: 10.1126/science.1227079.

6. Park HJ, Friston K. Structural and functional brain networks: From connections to cognition. Science. 2013;342(6158):1238411. doi: 10.1126/science.1238411.

7. Bialek W, Nemenman I, Tishby N. Predictability, complexity, and learning. Neural Comput. 2001;13(11):2409–2463. doi: 10.1162/089976601753195969.

8. Schreiber T. Measuring information transfer. Phys Rev Lett. 2000;85(2):461. doi: 10.1103/PhysRevLett.85.461.

9. Ay N. Information geometry on complexity and stochastic interaction. Entropy. 2015;17(4):2432–2458.

10. Koch C, Massimini M, Boly M, Tononi G. Neural correlates of consciousness: Progress and problems. Nat Rev Neurosci. 2016;17(5):307–321. doi: 10.1038/nrn.2016.22.

11. Tononi G, Boly M, Massimini M, Koch C. Integrated information theory: From consciousness to its physical substrate. Nat Rev Neurosci. 2016;17(7):450–461. doi: 10.1038/nrn.2016.44.

12. Massimini M, et al. Breakdown of cortical effective connectivity during sleep. Science. 2005;309(5744):2228–2232. doi: 10.1126/science.1117256.

13. Alkire MT, Hudetz AG, Tononi G. Consciousness and anesthesia. Science. 2008;322(5903):876–880. doi: 10.1126/science.1149213.

14. Gosseries O, Di H, Laureys S, Boly M. Measuring consciousness in severely damaged brains. Annu Rev Neurosci. 2014;37:457–478. doi: 10.1146/annurev-neuro-062012-170339.

15. Casali AG, et al. A theoretically based index of consciousness independent of sensory processing and behavior. Sci Transl Med. 2013;5(198):198ra105. doi: 10.1126/scitranslmed.3006294.

16. Tononi G. Consciousness as integrated information: A provisional manifesto. Biol Bull. 2008;215(3):216–242. doi: 10.2307/25470707.

17. Oizumi M, Albantakis L, Tononi G. From the phenomenology to the mechanisms of consciousness: Integrated information theory 3.0. PLoS Comput Biol. 2014;10(5):e1003588. doi: 10.1371/journal.pcbi.1003588.

18. Balduzzi D, Tononi G. Integrated information in discrete dynamical systems: Motivation and theoretical framework. PLoS Comput Biol. 2008;4(6):e1000091. doi: 10.1371/journal.pcbi.1000091.

19. Barrett AB, Seth AK. Practical measures of integrated information for time-series data. PLoS Comput Biol. 2011;7(1):e1001052. doi: 10.1371/journal.pcbi.1001052.

20. Oizumi M, Amari S, Yanagawa T, Fujii N, Tsuchiya N. Measuring integrated information from the decoding perspective. PLoS Comput Biol. 2016;12(1):e1004654. doi: 10.1371/journal.pcbi.1004654.

21. Seth AK, Barrett AB, Barnett L. Causal density and integrated information as measures of conscious level. Philos Trans A Math Phys Eng Sci. 2011;369(1952):3748–67. doi: 10.1098/rsta.2011.0079.

22. Amari S. Information Geometry and Its Applications. Springer; Tokyo: 2016.

23. Pearl J. Causality. Cambridge Univ Press; Cambridge, UK: 2009.

24. Tegmark M. 2016. Improved measures of integrated information. arXiv:1601.02626.

25. Barnett L, Barrett AB, Seth AK. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys Rev Lett. 2009;103(23):238701. doi: 10.1103/PhysRevLett.103.238701.

26. Geweke J. Measurement of linear dependence and feedback between multiple time series. J Am Stat Assoc. 1982;77(378):304–313.

27. Barrett AB, Barnett L, Seth AK. Multivariate Granger causality and generalized variance. Phys Rev E. 2010;81(4):041907. doi: 10.1103/PhysRevE.81.041907.

28. Ay N, Olbrich E, Bertschinger N, Jost J. A geometric approach to complexity. Chaos. 2011;21(3):037103. doi: 10.1063/1.3638446.

29. Deco G, Tononi G, Boly M, Kringelbach ML. Rethinking segregation and integration: Contributions of whole-brain modelling. Nat Rev Neurosci. 2015;16(7):430–439. doi: 10.1038/nrn3963.

30. Boly M, et al. Stimulus set meaningfulness and neurophysiological differentiation: A functional magnetic resonance imaging study. PLoS One. 2015;10(5):e0125337. doi: 10.1371/journal.pone.0125337.

31. Bullmore E, Sporns O. Complex brain networks: Graph theoretical analysis of structural and functional systems. Nat Rev Neurosci. 2009;10(3):186–198. doi: 10.1038/nrn2575.

32. Lee U, Mashour GA, Kim S, Noh GJ, Choi BM. Propofol induction reduces the capacity for neural information integration: Implications for the mechanism of consciousness and general anesthesia. Conscious Cogn. 2009;18(1):56–64. doi: 10.1016/j.concog.2008.10.005.

33. Chang JY, et al. Multivariate autoregressive models with exogenous inputs for intracerebral responses to direct electrical stimulation of the human brain. Front Hum Neurosci. 2012;6:317. doi: 10.3389/fnhum.2012.00317.

34. King JR, et al. Information sharing in the brain indexes consciousness in noncommunicative patients. Curr Biol. 2013;23(19):1914–1919. doi: 10.1016/j.cub.2013.07.075.

35. Bandt C, Pompe B. Permutation entropy: A natural complexity measure for time series. Phys Rev Lett. 2002;88(17):174102. doi: 10.1103/PhysRevLett.88.174102.

36. Ay N, Polani D. Information flows in causal networks. Adv Complex Sys. 2008;11(01):17–41.

37. Cuturi M. Sinkhorn distances: Lightspeed computation of optimal transport. Adv Neural Inform Process Syst. 2013:2292–2300.