Abstract

Background

Many studies have found age-related declines in emotion recognition, with older adult (OA) deficits strongest for negative emotions. Some evidence suggests that OA also show worse performance in decoding complex mental states. However, no research has investigated whether those deficits are stronger for negative states.

Methods

We investigated OA (ages 65–93) and younger adult (YA, ages 18–22) performance on the Reading the Mind in the Eyes Test (RME), a well-validated measure of the ability to decode complex mental states from faces.

Results

We replicated findings showing OA deficits in this task. Using a multilevel logistic model, we found that the poorer performance of OA was due to worse performance on items for which a negative state was the correct answer. When analyzing each age group separately, OA scored worse on negative than positive items, whereas YA performance did not vary as a function of item valence. These age differences on the RME could not be explained by differences in lower level visual function.

Conclusion

Our findings show that previously documented OA deficits in perceiving basic negative emotional expressions are also present in reading complex mental states.

Many research studies have shown age-related declines in the ability to accurately label basic emotional expressions in faces (Ruffman, Henry, Livingstone, & Phillips, 2008). These deficits are strongest for negative emotions, which, along with other evidence for attentional biases away from negative emotional information in older adults (OA; see Mather & Carstensen, 2005; Murphy & Isaacowitz, 2008; Reed, Chan, & Mikels, 2014), suggests that OA may have a specific deficit in accurately decoding negative mental states from faces, in addition to basic emotional expressions. The ability to decode more subtle and complex mental states, which we refer to as mentalizing (see Footnote 1), is arguably more significant for social functioning than decoding basic emotions, as it is rare in our everyday lives that we have to decode and label intense prototypical expressions (Isaacowitz & Stanley, 2011). Since it is unclear whether OA show deficits in this ability, we investigated whether OA showed deficits in mentalizing and whether these deficits were more pronounced for negative than positive states.

Decoding Complex Mental States and Aging

Research has found considerable evidence for age-related declines in perceiving basic emotional expressions. According to a meta-analysis of 17 studies, OA were significantly worse at labeling emotional expressions than younger adults (YA; Ruffman, Henry, Livingstone, & Phillips, 2008). Though deficits in labeling emotions were present for all basic emotions except disgust, the effect sizes when age differences occurred were stronger for negative emotions, with larger effect sizes for anger (r = .34), sadness (r = .34), and fear (r = .27) than for happiness (r = .08) or surprise (r = .07). Additional evidence that OA deficits in emotion perception may be most acute for negative expressions is provided by the finding that OA show reduced memory for and attention to negative emotional information in faces (Mather & Carstensen, 2003; see also Reed et al., 2014).

The aging-related deficits in emotional labeling tasks predict some deficits in social functioning, as they mediate age deficits in detecting deception (Stanley & Blanchard-Fields, 2008) and detecting social gaffes (Halberstadt, Ruffman, Murray, Taumoepeau, & Ryan, 2011). However, decoding prototypical emotional expressions is only a small part of mentalizing based on facial appearance alone. Accurately determining other social cues from faces is also important for smooth social functioning. In contrast to the findings for prototypical emotional expressions, it is less clear whether OA show deficits in decoding other socially relevant information from faces. For instance, although OA trait impressions of faces are more positive than those of YA (Castle et al., 2012; Zebrowitz, Franklin, Hillman, & Boc, 2013), OA are just as accurate as YA in judging both positive and negative traits from facial appearance alone. Specifically, OA ratings of aggressiveness, competence, and health are just as predictive of individuals’ actual levels of aggressiveness, cognitive functioning, and health as are YA ratings (Boshyan et al., 2013; Zebrowitz et al., 2014). In addition, OA are just as sensitive as YA to negative emotional information when making trait ratings of faces (Franklin & Zebrowitz, 2013). Since OA are able to use negative information in making accurate trait inferences from faces, it is unclear whether OA will show deficits in decoding complex negative emotional expressions and mental states from faces.

One popular test of the ability to decode complex mental states from faces is the Reading the Mind in the Eyes Test (RME; Baron-Cohen, Wheelwright, Hill, Raste, & Plumb, 2001). The RME uses cropped images showing only the eye region of faces and has participants choose which of four mental state adjectives best describes a face. The RME is a well-validated test of mentalizing ability with convergent validity with other social-cognitive tests of mentalizing (Baron-Cohen et al., 2001). Furthermore, performance on the RME reliably differentiates nonclinical samples from clinical samples in several psychopathological conditions, including autism spectrum disorders (Baron-Cohen et al., 2001), amygdalotomy (Adolphs, Baron-Cohen, & Tranel, 2002), mood disorders (Harkness, Sabbagh, Jacobson, Chowdrey, & Chen, 2005; Lee, Harkness, Sabbagh, & Jacobson, 2005), and borderline personality disorder (Fertuck et al., 2009; Scott, Levy, Adams, & Stevenson, 2011).

Some evidence suggests OA may show deficits in the RME. Pardini and Nichelli (2009) investigated RME performance in young adulthood (20–25 yrs), early (45–55) and late (55–65) middle age, and in elderly individuals (70–75). When comparing each age group against the other age groups, they found a linear pattern of age-related declines, with each age group performing significantly worse than the prior age group. However, this study only looked at performance on the entire test and did not investigate whether the age-related declines differed for positive vs. negative mental states.

In order to examine this question, we tested whether a large sample of OA show deficits in the RME and whether those deficits are particularly acute for negatively-valenced items. To our knowledge, no studies have investigated the role of item valence in age differences on the RME, although some have found effects of valence on performance in other participant groups (Harkness et al., 2005; Scott et al., 2011). We predicted that we would replicate previous findings that OA perform worse than YA on the RME. In addition, we predicted that OA deficits in performance would be most pronounced for negative items.

Method

Participants

We examined RME data from 127 subjects (64 YA, 63 OA) who completed tests of facial perception. YA participants, aged 18–22 (M = 18.8, SD = 1.1), were recruited from a university and received course credit or $15 payment. OA participants, aged 65–93 (M = 74.3, SD = 6.14), were recruited from the local community and were paid $25. OA were screened for dementia using the Mini-Mental State Examination (Folstein et al., 1975), and all scored above 26 out of 30 (M = 28.84, SD = 1.21).

Stimuli

Stimuli came from the Reading the Mind in the Eyes Test Revised Version (Baron-Cohen et al., 2001). These images consist of 36 grayscale photographs of the eye region of faces, cropped to show the eyebrows, eyes, and part of the nose. Photographs were sized to an approximate size of 15 × 6 cm and were placed on a white background. The stimuli were validated by having two researchers generate a series of correct target words and incorrect foil words for each of the 36 images. They tested each of the items, showing each picture with the target word and three foils to a group of eight individuals. Each item was used if five or more judges chose the correct target word and no more than two judges chose any of the incorrect foil words.
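The retention rule described above can be written out as a small check. This is only an illustrative sketch: the function name `keep_item`, its parameters, and the example words are hypothetical, and the thresholds are simply those stated in the text (at least five of eight judges choosing the target, and no more than two choosing any single foil).

```python
from collections import Counter


def keep_item(choices, target, min_target=5, max_per_foil=2):
    """Return True if an item passes the validation rule described in the text.

    choices: list of the words chosen by the judges (one entry per judge).
    target:  the word designated as the correct answer.
    """
    counts = Counter(choices)
    # No single foil may attract more than `max_per_foil` judges.
    foils_ok = all(
        n <= max_per_foil for word, n in counts.items() if word != target
    )
    return counts[target] >= min_target and foils_ok


# Hypothetical responses from eight judges (not data from the original study).
passing = ["playful"] * 5 + ["bored"] * 2 + ["irritated"]
failing = ["playful"] * 5 + ["bored"] * 3

print(keep_item(passing, "playful"))
print(keep_item(failing, "playful"))
```

The second example fails even though five judges chose the target, because one foil was chosen by three judges.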

Surrounding each photograph were four mental state adjectives, one placed at each corner of the screen. Each adjective was paired with a number (1–4), and participants chose which adjective they thought matched the image by pressing the corresponding key on the keyboard.

Ratings of valence were taken from Harkness et al. (2005). In order to assess the valence of each of the items, the authors of that study collected ratings of each of the correct words from raters who did not see the images or the incorrect items. Twelve female participants rated each of the 36 correct adjectives on a 7-point scale (1 = very negative, 4 = neutral, 7 = very positive). The values we used to measure the valence of each item were t-values taken from a one-sample t-test comparing the rating for each trait adjective to the midpoint of the scale. Negative values reflected items rated below 4 and positive values reflected items rated above 4. In these ratings, 21 of the 36 traits were rated below the midpoint, with 12 of those items significantly below the midpoint. Fifteen items were rated above the midpoint, with eight of those significantly above the midpoint.
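To make the valence measure concrete, the following sketch computes a one-sample t-value against the scale midpoint of 4 for a single adjective. The ratings shown are invented for illustration and are not the Harkness et al. (2005) data; the function name `one_sample_t` is likewise hypothetical.

```python
import math
import statistics


def one_sample_t(ratings, midpoint=4.0):
    """One-sample t statistic comparing the mean rating to the scale midpoint."""
    n = len(ratings)
    mean = statistics.mean(ratings)
    sd = statistics.stdev(ratings)  # sample SD (n - 1 in the denominator)
    return (mean - midpoint) / (sd / math.sqrt(n))


# Hypothetical ratings from 12 raters on the 1-7 scale (illustration only).
negative_word = [2, 3, 2, 1, 3, 2, 2, 3, 2, 2, 1, 3]
positive_word = [6, 5, 6, 7, 5, 6, 6, 5, 6, 6, 7, 5]

print(one_sample_t(negative_word))  # negative: rated below the midpoint
print(one_sample_t(positive_word))  # positive: rated above the midpoint
```

Because the t-value scales the distance from the midpoint by the raters' agreement, an adjective rated consistently negative receives a more extreme value than one with the same mean but more variable ratings.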

Measures

In addition to the RME, participants completed a series of control tasks assessing visual and cognitive functioning both to describe OA and YA samples and also to determine whether any of these measures could explain age differences on the RME (see Table 3).

Table 3.

Performance of older adult and younger adult participants on various control measures administered.

| Measure | Older Adults M | Older Adults SD | Younger Adults M | Younger Adults SD | t-value | p-value |
|---|---|---|---|---|---|---|
| Snellen Visual Acuity (denominator) | 32.78 | 13.31 | 19.56 | 5.47 | 7.29 | < .001 |
| Contrast Sensitivity (Mars Perceptrix, Cappaqua, NY) | 1.57 | .14 | 1.74 | .05 | 9.20 | < .001 |
| Benton Facial Recognition Test (Benton, Van Allen, Hamsher, & Levin, 1983) | 45.94 | 4.02 | 47.48 | 3.26 | 2.37 | .019 |
| Processing Speed (Pattern Comparison Test, Salthouse, 1993) | 28.68 | 5.15 | 42.3 | 6.34 | 13.34 | < .001 |
| Shipley Vocabulary Test (Shipley, 1946) | 35.51 | 3.45 | 31.83 | 3.58 | 5.90 | .019 |
| Level of Education* | 4.65 | 1.59 | 2.67 | .47 | | |

*Level of Education was coded for highest level attained: 1 – no high school diploma, 2 – high school diploma, 3 – some college, 4 – Bachelor’s degree, 5 – some graduate work, 6 – Master’s degree, 7 – Doctorate degree.

Design and Procedure

The RME was administered as a control task after one of three studies on face perception (see Boshyan et al., 2013; Franklin & Zebrowitz, 2013; Zebrowitz et al., 2013). Upon completion of that study, participants were seated at a computer terminal and told that they would see a series of images showing the eye region of faces and would be asked to choose which of the four displayed adjectives best matched what the person in the picture was thinking or feeling. Participants were shown the 36 images in random order and responded by pressing keys 1–4 on the keyboard.

Results

Accuracy Analysis

In order to examine age differences on this task and the influence of positivity on accuracy, we used a multilevel modeling approach with a binary logistic model for dichotomous data. In this model, accuracy was the dependent variable, scored 0 or 1 for incorrect or correct responses. Trial level responses were modeled as a first-level variable, with ratings of the valence of the correct response for each face as a trial level variable. Participant age was modeled as a second-level variable, scored -1 for YA and 1 for OA.
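One way to write the fuller of these models is shown below. This is a sketch rather than the exact specification used: the symbols are introduced here for illustration, and the random intercept for participants is an assumption, since the text does not state the random-effects structure.

```latex
% y_{ij} = 1 if participant j answered item i correctly, 0 otherwise
% Age_j  = -1 for YA, 1 for OA; Valence_i = valence t-value of item i
% u_j    = participant-level random intercept (assumed structure)
\[
\operatorname{logit} \Pr(y_{ij} = 1)
  = \beta_0
  + \beta_1 \,\mathrm{Age}_j
  + \beta_2 \,\mathrm{Valence}_i
  + \beta_3 \,\mathrm{Age}_j \times \mathrm{Valence}_i
  + u_j,
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
\]
```

Under this notation, a model with participant age as the only predictor drops the $\beta_2$ and $\beta_3$ terms, and $\beta_3$ carries the age-by-valence moderation.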

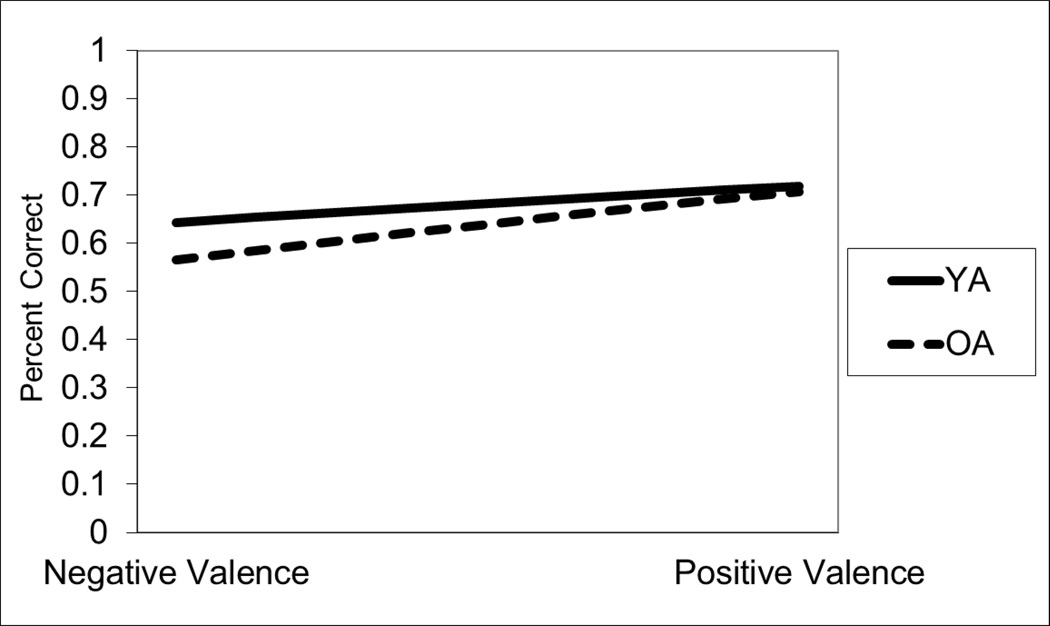

In this analysis, we report two models (see Tables 1 and 2). The first model examines participant age alone as a factor predicting performance. Based on this model, OA performed marginally worse than YA. In the second model, we added valence and the interaction term between subject age and valence. In this case, we found a significant moderation effect, as the interaction term was significant. As shown in Figure 1, OA were just as accurate as YA when the correct answer was a positive mental state, whereas OA were less accurate when it was a negative mental state, and this effect was more pronounced with the most negative items.
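The pattern described above can be illustrated by converting the Model 2 estimates reported in Table 1 from log-odds to predicted accuracy. This is a rough sketch: it uses the fixed effects only (that is, it assumes a participant whose random effect is zero), and the valence values of -5, 0, and 5 are arbitrary illustrative points, not values taken from the item ratings.

```python
import math

# Model 2 estimates as reported in Table 1 (log-odds scale).
INTERCEPT = 0.9637
B_AGE = -0.1601
B_VALENCE = 0.01057
B_INTERACTION = 0.037


def predicted_accuracy(age_code, valence):
    """Predicted probability of a correct response from the fixed effects.

    age_code: -1 for YA, 1 for OA (the coding described in the text).
    valence:  valence t-value of the item (negative = negative state).
    """
    log_odds = (
        INTERCEPT
        + B_AGE * age_code
        + B_VALENCE * valence
        + B_INTERACTION * age_code * valence
    )
    return 1.0 / (1.0 + math.exp(-log_odds))


# Arbitrary illustrative valence values (assumption, not item data).
for valence in (-5.0, 0.0, 5.0):
    ya = predicted_accuracy(-1, valence)
    oa = predicted_accuracy(1, valence)
    print(f"valence={valence:+.1f}  YA={ya:.3f}  OA={oa:.3f}  gap={ya - oa:+.3f}")
```

With these estimates, the predicted gap between YA and OA is largest at the negative end of the valence range and close to zero at the positive end.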

Table 1.

Logistic multilevel model with subject age, stimulus valence, and interaction as predictors of performance on the RME. Model 1 includes subject age only; Model 2 adds valence and the Subject Age × Valence interaction.

| Predictor | Model 1 Estimate | Model 1 SE | Model 1 Z-value | Model 1 p-value | Model 2 Estimate | Model 2 SE | Model 2 Z-value | Model 2 p-value |
|---|---|---|---|---|---|---|---|---|
| Intercept | 0.9561 | 0.1115 | 8.572 | <.001 | 0.9637 | 0.112 | 8.58 | <.001 |
| Subject Age | -0.1943 | 0.1051 | -1.849 | 0.065 | -0.1601 | 0.106 | -1.504 | 0.132 |
| Valence | | | | | 0.01057 | 0.0258 | 0.4 | 0.682 |
| Subject Age × Valence | | | | | 0.037 | 0.018 | 2.013 | 0.044 |

Table 2.

Logistic multilevel models examining valence as a predictor of RME performance for older and younger adult participants separately.

| Predictor | Older Adults Estimate | Older Adults SE | Older Adults Z-value | Older Adults p-value | Younger Adults Estimate | Younger Adults SE | Younger Adults Z-value | Younger Adults p-value |
|---|---|---|---|---|---|---|---|---|
| Intercept | 0.790 | 0.097 | 8.097 | <.001 | 1.017 | 0.147 | 6.897 | <.001 |
| Valence | 0.047 | 0.023 | 2.074 | 0.038 | 0.009 | 0.034 | 0.273 | 0.785 |

Figure 1.

Performance on the RME as a function of participant age and item valence. Chance performance is .25.

We also investigated whether valence significantly affected accuracy for OA and YA separately (see Table 2). For OA, valence was a significant predictor of performance, with greater accuracy for positively than negatively valenced mental states, whereas for YA, valence was not associated with performance.

Finally, we investigated whether the poorer visual acuity, contrast sensitivity, or face recognition shown by OA on the control measures (Table 3) might account for their poorer performance on the RME. Face recognition was the only measure that correlated with RME performance, being significantly correlated for OA, r(61) = .26, p = .039, but not for YA, r(62) = .19, p = .14, a difference between correlations that was not significant, Z = .41, p = .68. None of the other measures were significantly correlated with RME (all ps > .13). Age differences on overall RME performance or the interaction with valence were still significant when face recognition was entered into the multilevel model as a control variable.
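The comparison of the two correlations can be reproduced with Fisher's r-to-z transformation for independent samples. The text does not name the test that was used, so treating it as Fisher's test is an assumption; the sample sizes of 63 and 64 are inferred from the reported degrees of freedom (n − 2), and the function name is hypothetical.

```python
import math


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for the difference between two independent correlations.

    Returns the Z statistic and its two-tailed p-value.
    """
    z1 = math.atanh(r1)  # Fisher transformation of each correlation
    z2 = math.atanh(r2)
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / se
    # Two-tailed p from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


# OA: r(61) = .26, so n = 63; YA: r(62) = .19, so n = 64.
z, p = compare_independent_correlations(0.26, 63, 0.19, 64)
print(f"Z = {z:.2f}, p = {p:.2f}")
```

Under these assumptions the result agrees with the reported values to two decimal places.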

Discussion

In this study, we examined whether a large sample of OA would show deficits in performance on the RME and whether these deficits vary with the valence of the cues. We found that a large sample of healthy, community-dwelling OA show a marginally significant deficit on the RME. In addition, we found that positivity moderated that deficit, such that OA showed less accuracy in identifying negatively than positively valenced mental states, whereas YA performance was unrelated to the positivity of the mental state. Finally, age differences in RME accuracy could not be explained by age differences in low level visual processes or by face recognition abilities.

Our finding that OA show less accuracy than YA when decoding negative complex mental states extends previously documented age differences in decoding basic emotional states (e.g., Ruffman et al., 2008). These OA deficits in accurately labeling negative basic emotions and more complex states provide an interesting contrast with evidence that OA are just as accurate as YA when decoding negative trait information from faces (e.g., Boshyan et al., 2013; Zebrowitz et al., 2014). One possible explanation for this discrepancy may be methodological differences between the two tasks. Specifically, OA deficits on the RME and emotion labeling tasks may reflect difficulty experienced when responding to forced-choice tests with multiple responses. Indeed, two studies that used a reduced version of the RME with only two possible answers presented with each face, rather than the standard four answers used in the present study, failed to find age differences in performance, although they did not compare recognition of negative vs. positive mental states (Castelli et al., 2010; Li et al., 2013). These findings suggest that differences between rating tasks and multiple-choice tests also may contribute to age differences in accuracy in recognizing basic emotions, an interesting question for future research.

Our findings suggest that OA deficits when making perceptual judgments involved in social functioning may be mediated not only by poorer recognition of basic emotions (Halberstadt et al., 2011; Ruffman et al., 2008; Stanley & Blanchard-Fields, 2008), but also by poorer recognition of complex negative mental states. This speculation, which warrants future research, is plausible because decoding complex mental states plays such an important role in understanding other people and fostering smooth social behavior (Franklin, Stevenson, Ambady, & Adams, 2015).

There are some limitations to our findings using the RME. Despite the fact that the RME items are authentic expressions taken from published magazines, and thus have more ecological validity than posed emotional expressions, age differences in responses to these items may not generalize to theory of mind abilities in other contexts. It remains to be seen whether the effect of valence on age differences in understanding the meaning of static images of the eye regions of faces is a good proxy for differences when trying to understand another person in a social interaction where much more information is available. One context in which age differences in theory of mind have been absent is assessing the mental states of characters in written stories. Although theory of mind abilities are largely retained in healthy aging when measured in such verbal tasks (e.g., Happé, Winner, & Brownell, 1998; MacPherson, Phillips, & Della Sala, 2002), the research has not examined moderating effects of story valence. Our results suggest that OA may show deficits in theory of mind tasks when determining what others are feeling or thinking in negative stories but not in positive stories, a question for future research.

Footnotes

1. Although mentalizing is used to refer to understanding what another person is thinking based on many cues in addition to facial cues, we use the term mentalizing to refer to decoding what another is thinking based on facial cues alone.

Contributor Information

Robert G. Franklin, Jr., Anderson University

Leslie A. Zebrowitz, Brandeis University

References

- Adolphs R, Baron-Cohen S, Tranel D. Impaired recognition of social emotions following amygdala damage. Journal of Cognitive Neuroscience. 2002;14:1264–1274. doi: 10.1162/089892902760807258.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The 'Reading the mind in the eyes' Test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry. 2001;42(2):241–251.

- Benton A, Van Allen M, Hamsher K, Levin H. Test of facial recognition manual. Iowa City: Benton Laboratory of Neuropsychology; 1983.

- Boshyan J, Zebrowitz LA, Franklin RG, Jr, McCormick CM, Carré JM. Age similarities in recognizing threat from faces and diagnostic cues. Journals of Gerontology, Series B: Psychological Sciences and Social Sciences. 2013;69:710–718. doi: 10.1093/geronb/gbt054.

- Castelli I, Baglio F, Blasi V, Alberoni M, Falini A, Liverta-Sempio O, Nemni R, et al. Effects of aging on mindreading ability through the eyes: An fMRI study. Neuropsychologia. 2010;48(9):2586–2594. doi: 10.1016/j.neuropsychologia.2010.05.005.

- Castle E, Eisenberger NI, Seeman TE, Moons WG, Boggero IA, Grinblatt MS, Taylor SE. Neural and behavioral bases of age differences in perceptions of trust. Proceedings of the National Academy of Sciences. 2012;109(51):20848–20852. doi: 10.1073/pnas.1218518109.

- Fertuck EA, Jekal A, Song I, Wyman B, Morris MC, Wilson ST, et al. Enhanced “Reading the Mind in the Eyes” in borderline personality disorder compared to healthy controls. Psychological Medicine. 2009;39:1979–1988. doi: 10.1017/S003329170900600X.

- Folstein MF, Folstein SE, McHugh PR. Mini-Mental State: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Franklin RG, Jr, Stevenson MT, Ambady N, Adams RB, Jr. Cross-cultural reading the mind in the eyes and its consequences for international relations. In: Warnick JE, Landis D, editors. Neuroscience in Intercultural Contexts. New York: Springer; 2015.

- Franklin RG, Jr, Zebrowitz LA. Older adults’ trait impressions of faces are sensitive to subtle resemblance to emotions. Journal of Nonverbal Behavior. 2013;37:139–152. doi: 10.1007/s10919-013-0150-4.

- Halberstadt J, Ruffman T, Murray J, Taumoepeau M, Ryan M. Emotion perception explains age-related differences in the perception of social gaffes. Psychology and Aging. 2011;26:133–136. doi: 10.1037/a0021366.

- Happé FG, Winner E, Brownell H. The getting of wisdom: theory of mind in old age. Developmental Psychology. 1998;34(2):358–362. doi: 10.1037//0012-1649.34.2.358.

- Harkness K, Sabbagh M, Jacobson J, Chowdrey N, Chen T. Enhanced accuracy of mental state decoding in dysphoric college students. Cognition & Emotion. 2005;19(7):999–1025.

- Isaacowitz DM, Stanley JT. Bringing an ecological perspective to the study of aging and recognition of emotional facial expressions: Past, current, and future methods. Journal of Nonverbal Behavior. 2011;35:261–278. doi: 10.1007/s10919-011-0113-6.

- Lee L, Harkness KL, Sabbagh MA, Jacobson JA. Mental state decoding abilities in clinical depression. Journal of Affective Disorders. 2005;86:247–258. doi: 10.1016/j.jad.2005.02.007.

- Li X, Wang K, Wang F, Tao Q, Xie Y, Cheng Q. Aging of theory of mind: The influence of educational level and cognitive processing. International Journal of Psychology. 2013;48(4):715–727. doi: 10.1080/00207594.2012.673724.

- MacPherson SE, Phillips LH, Della Sala S. Age, executive function and social decision making: a dorsolateral prefrontal theory of cognitive aging. Psychology and Aging. 2002;17(4):598–609.

- Mather M, Carstensen LL. Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences. 2005;9(10):496–502. doi: 10.1016/j.tics.2005.08.005.

- Murphy NA, Isaacowitz DM. Preferences for emotional information in older and younger adults: a meta-analysis of memory and attention tasks. Psychology and Aging. 2008;23:263–286. doi: 10.1037/0882-7974.23.2.263.

- Pardini M, Nichelli PF. Age-related decline in mentalizing skills across adult life span. Experimental Aging Research. 2009;35(1):98–106. doi: 10.1080/03610730802545259.

- Reed AE, Chan L, Mikels JA. Meta-analysis of the age-related positivity effect: Age differences in preferences for positive over negative information. Psychology and Aging. 2014;29:1–15. doi: 10.1037/a0035194.

- Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience & Biobehavioral Reviews. 2008;32(4):863–881. doi: 10.1016/j.neubiorev.2008.01.001.

- Salthouse TA. Speed and knowledge as determinants of adult age differences in verbal tasks. Journal of Gerontology. 1993;48:29–36. doi: 10.1093/geronj/48.1.p29.

- Scott LN, Levy KN, Adams RB, Jr, Stevenson MT. Mental state decoding abilities in young adults with borderline personality disorder traits. Personality Disorders: Theory, Research, and Treatment. 2011;2:98–112. doi: 10.1037/a0020011.

- Shipley WC. Institute of Living Scale. Los Angeles: Western Psychological Services; 1946.

- Stanley J, Blanchard-Fields F. Challenges older adults face in detecting deceit: The role of emotion recognition. Psychology and Aging. 2008;23(1):24–32. doi: 10.1037/0882-7974.23.1.24.

- Zebrowitz LA, Franklin RG, Jr, Boshyan J, Luevano V, Agrigoroaei S, Milosavljevic B, Lachman M. Older and younger adults’ accuracy in discerning competence and health in older and younger faces. Psychology and Aging. 2014;29:454–468. doi: 10.1037/a0036255.

- Zebrowitz LA, Franklin RG, Jr, Hillman S, Boc H. Comparing older and younger adults’ first impressions from faces. Psychology and Aging. 2013;28:202–212. doi: 10.1037/a0030927.