Abstract

Adults exhibit enhanced attention to negative emotions like fear, which is thought to be an adaptive reaction to emotional information. Previous research, mostly conducted with static faces, suggests that infants exhibit an attentional bias toward fearful faces only at around 7 months of age. In a recent study (Journal of Experimental Child Psychology, 2016, Vol. 147, pp. 100–110), we found that 5-month-olds also exhibit heightened attention to fear when tested with dynamic face videos. This indication of an earlier development of an attention bias to fear raises questions about developmental mechanisms that have been proposed to underlie this function. However, Grossmann and Jessen (Journal of Experimental Child Psychology, 2016, Vol. 153, pp. 149–154) argued that this result may have been due to differences in the amount of movement in the videos rather than a response to emotional information. To examine this possibility, we tested a new sample of 5-month-olds exactly as in the original study (Heck, Hock, White, Jubran, & Bhatt, 2016) but with inverted faces. We found that the fear bias seen in our study was no longer apparent with inverted faces. Therefore, it is likely that infants’ enhanced attention to fear in our study was indeed a response to emotions rather than a reaction to arbitrary low-level stimulus features. This finding indicates enhanced attention to fear at 5 months and underscores the need to find mechanisms that engender the development of emotion knowledge early in life.

Keywords: Emotion processing, Attention, Dynamic facial emotion, Face inversion effect, Social perception

Introduction

In the realm of socioemotional development during infancy, much research has focused on how infants process emotional faces and on determining at what point infants demonstrate knowledge of those emotions (e.g., matching across modalities, social referencing). Recently, we found that 5-month-olds, but not 3.5-month-olds, exhibit greater attention to a dynamic face relative to a peripheral checkerboard when the face was fearful than when it was happy or neutral (Heck et al., 2016). This finding was notable because previous studies using static images indicated that the transition to this attentional increase for fear does not occur until around 7 months of age (e.g., Peltola, Leppänen, Mäki, & Hietanen, 2009; for reviews, see Grossmann, 2010; Leppänen & Nelson, 2012). However, Grossmann and Jessen (2016) challenged our conclusions, suggesting that 5-month-olds’ performance on the task may have been based solely on differences in the amount of movement in the videos used to depict different emotions rather than something specific about the emotions themselves.

The point made by Grossmann and Jessen (2016) is a valid one; both their analyses and our subsequent analysis of the amount of movement in the videos used in Heck and colleagues (2016) indicate that, at least in the case of two of the three models used in that study, the fearful faces exhibited more movement than either the happy or neutral faces. As such, it is possible that this potentially arbitrary difference was driving the attentional bias for fear in the conditions where the fear videos contained the most movement. To examine this possibility, and to account for other low-level differences between emotional stimuli, we implemented an inversion condition in the current study using the exact stimuli from the original study rotated 180 degrees with a new sample of 5-month-olds.

We tested infants with inverted stimuli because inversion is frequently used as a control for low-level differences that may be found in a variety of stimuli. In addition, inversion is thought to disrupt configural processing of faces and bodies (Bertin & Bhatt, 2004; Bhatt, Bertin, Hayden, & Reed, 2005; Maurer, Le Grand, & Mondloch, 2002; Missana & Grossmann, 2015; Zieber, Kangas, Hock, & Bhatt, 2015). For example, adults show general processing deficits for inverted faces and bodies, such as reduced accuracy in same–different judgment tasks, but not when tested with inverted images of houses (Reed et al., 2007) or scrambled bodies (Reed, Stone, Grubb, & McGoldrick, 2006).

Beyond general face and body processing deficits seen with inverted stimuli, there are also specific inversion effects found with emotional faces and bodies. For example, Kestenbaum and Nelson (1990) found that 7-month-olds were not able to categorize happy facial expressions across models when the images were inverted, although they were able to do so when the images were upright. Similarly, Zieber, Kangas, Hock, and Bhatt (2014a, 2014b) found that 6.5-month-olds demonstrated preferences for, and were able to match dynamic and static emotion bodies to, emotional sounds only when the bodies were upright but not inverted, indicating that infants were not responding solely to some low-level property of the stimuli (see also Missana, Atkinson, & Grossmann, 2015; Missana & Grossmann, 2015; Missana, Rajhans, Atkinson, & Grossmann, 2014).

This inversion effect with emotional stimuli is not limited to infants, however; Bannerman, Milders, de Gelder, and Sahraie (2009) found that adults were slower overall to locate fearful and neutral faces and bodies in an inverted condition than in an upright condition. Moreover, faster response time to fear compared with neutral stimuli was seen only when the images were upright. In addition, Pallett and Meng (2015) found that adults’ discrimination of morphed happy and angry emotional faces was impaired when the stimuli were inverted compared with upright images, but there was no difference in the discrimination of upright versus inverted non-face objects in the same tasks. Likewise, Sato, Kochiyama, and Yoshikawa (2011) found reduced amygdala activity when adults viewed inverted neutral and fearful faces compared with upright versions of the same images. All of these studies suggest that any differences found in the upright condition were due to something specific about the emotions being expressed rather than some low-level feature of the stimuli used; therefore, testing infants in an inverted condition using the identical materials and procedure of Heck and colleagues (2016) provides the ability to examine whether differences in the amount of movement or other low-level features can explain the findings.

In our original study (Heck et al., 2016), we were attempting to replicate as closely as possible the methodology used by Peltola, Leppänen, and colleagues with static images, which did not include an inverted condition (Peltola, Hietanen, Forssman, & Leppänen, 2013; Peltola, Leppänen, Palokangas, & Hietanen, 2008; Peltola, Leppänen, Vogel-Farley, Hietanen, & Nelson, 2009). However, given that the stimuli we used were dynamic and may have confounded emotion with amount of motion, it is appropriate to examine infants’ response to motion by testing with inverted stimuli. If infants’ behavior in the inverted condition is similar to performance in the upright condition of Heck and colleagues (2016), it would cast doubt on the claim that 5-month-olds were responding to emotions. If, however, infants’ performance in the inverted condition differs from their performance in the upright condition, it would be evidence that low-level features like movement per se did not drive infants’ performance in the upright condition, making it more likely that infants were responding to emotions.

In addition, in an attempt to further examine whether 5-month-olds’ performance in Heck and colleagues (2016) was driven by overall movement differences across emotion videos, we analyzed infants’ performance on the subset of upright stimuli that did not differ in motion across emotions during the attention overlap test. Note that Heck and colleagues reported that performance on these stimuli was not statistically different from that on stimuli in which there were motion differences. Nevertheless, we reasoned that it would be informative to separately analyze performance on the videos that did not differ in overall degree of movement. We report the results of this analysis below.

Method

Participants

A total of 24 5-month-old infants (14 male; Mage = 151.29 days, SD = 6.20) from predominantly middle-class Caucasian families participated in this experiment. Participants were recruited through birth announcements and from a local hospital. Data from 2 additional infants were excluded due to insufficient looking during the test (<20% of trials with valid data). Data from an additional 4 infants were lost due to equipment failure.

Stimuli

The videos used were from the Amsterdam Dynamic Facial Expression Set (ADFES; Van der Schalk, Hawk, Fischer, & Doosje, 2011) and were identical to those tested by Heck and colleagues (2016) except that they were rotated 180 degrees.

Apparatus and procedure

The equipment and procedures used were identical to those used by Heck and colleagues (2016). In this attention overlap task, a dynamic face was presented in the center of a Tobii TX300 eye-tracking monitor for 3000 ms; subsequently, with the face remaining in the center of the screen, a peripheral stimulus (a checkerboard) appeared on the left or right side of the screen. Both the face and the checkerboard remained on the screen for an additional 3000 ms, totaling 6000 ms for each trial. There were 36 total trials, with each emotion (fear, happy, and neutral) repeated 12 times. Randomization and counterbalancing of the stimuli were identical to Heck and colleagues (2016), with the left–right location of the checkerboard and order of emotion videos being randomized with constraint. Areas of interest (AOIs) on the face and peripheral stimulus were likewise identical to those in the original study.
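The trial structure described above (36 trials, 12 per emotion, a 3000-ms face-alone period followed by a 3000-ms overlap period, and a left or right checkerboard) can be sketched as a small schedule generator. This is a hypothetical illustration, not the script used in either study: the source says only that order and side were "randomized with constraint", so the run-length limit below (no emotion and no checkerboard side more than three times in a row) is an assumed constraint, and the names `make_schedule` and `has_long_run` are illustrative.

```python
import random

EMOTIONS = ("fear", "happy", "neutral")
TRIALS_PER_EMOTION = 12   # 3 emotions x 12 = 36 trials
FACE_ALONE_MS = 3000      # face shown alone before the checkerboard appears
OVERLAP_MS = 3000         # face and checkerboard shown together


def has_long_run(seq, max_run):
    """Return True if any value occurs more than max_run times in a row."""
    run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        if run > max_run:
            return True
    return False


def make_schedule(seed=None, max_run=3):
    """Build a hypothetical 36-trial schedule.

    Each emotion appears 12 times, with the checkerboard on the left for 6 of
    those trials and on the right for 6. The order is reshuffled until neither
    the emotion sequence nor the side sequence has a run longer than max_run
    (an assumed constraint, not the one used in the original study).
    """
    rng = random.Random(seed)
    trials = [(emotion, side)
              for emotion in EMOTIONS
              for side in ("left", "right")
              for _ in range(TRIALS_PER_EMOTION // 2)]
    while True:  # rejection sampling: reshuffle until the constraint holds
        rng.shuffle(trials)
        emotions = [e for e, _ in trials]
        sides = [s for _, s in trials]
        if not (has_long_run(emotions, max_run) or has_long_run(sides, max_run)):
            return [{"emotion": e,
                     "checkerboard_side": s,
                     "checkerboard_onset_ms": FACE_ALONE_MS,
                     "trial_duration_ms": FACE_ALONE_MS + OVERLAP_MS}
                    for e, s in trials]
```

Rejection sampling keeps the emotion and side counts exactly balanced while enforcing the run limit; with only 36 trials it typically needs few reshuffles.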

Results

The primary question addressed in this experiment was whether inversion would affect 5-month-olds’ attention to fearful, happy, and neutral faces in an overlap task. As in Heck and colleagues (2016), our main analysis focused on the final 3000 ms of each trial, which constitutes the overlap period. Moreover, as in the original study, individual trials were excluded if there were no looks to the screen during the trial, if there were anticipatory looks to the peripheral target area prior to or within 180 ms of stimulus onset, or if the infant looked at the peripheral target without first fixating on the face. On average, 2.74 trials (SE = 0.47) per emotion were excluded (each participant experienced 36 trials, 12 per emotion). We conducted a repeated measures analysis of variance (ANOVA) to check whether the number of excluded trials varied by emotion. The effect of emotion was not significant, F(2, 46) = 0.07, p = .94, so there were no systematic differences between emotions in the number of trials excluded.
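The three trial-exclusion rules above can be expressed as a single function over a trial's fixations. This is a minimal sketch under stated assumptions: fixations are taken to be on-screen fixations with hypothetical AOI labels ("face", "peripheral", "other") and start times relative to trial onset, the checkerboard is assumed to appear 3000 ms into the trial, and a peripheral look is treated as anticipatory if it starts before onset + 180 ms. The `Fixation` class and `exclusion_reason` function are illustrative names, not part of any eye-tracking software.

```python
from dataclasses import dataclass

PERIPHERAL_ONSET_MS = 3000     # checkerboard appears 3000 ms into the trial
ANTICIPATION_WINDOW_MS = 180   # peripheral looks starting this soon count as anticipatory


@dataclass
class Fixation:
    aoi: str        # hypothetical AOI label: "face", "peripheral", or "other"
    start_ms: int   # fixation start, relative to trial onset
    end_ms: int     # fixation end, relative to trial onset


def exclusion_reason(fixations):
    """Return the reason a trial should be excluded, or None if it is valid."""
    if not fixations:
        return "no looks to the screen"
    peripheral = [f for f in fixations if f.aoi == "peripheral"]
    if peripheral:
        first = min(peripheral, key=lambda f: f.start_ms)
        # Looks to the target area before, or within 180 ms of, checkerboard onset.
        if first.start_ms < PERIPHERAL_ONSET_MS + ANTICIPATION_WINDOW_MS:
            return "anticipatory look to the peripheral area"
        # The infant must fixate the face before the first peripheral look.
        if not any(f.aoi == "face" and f.start_ms < first.start_ms for f in fixations):
            return "peripheral look without prior face fixation"
    return None
```

Returning a reason string rather than a boolean makes it easy to tabulate exclusions by emotion, which is what the repeated measures check on excluded trials requires.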

The primary dependent measure, calculated in the same way as in Heck and colleagues (2016), was the proportional look duration during the last 3000 ms to the face relative to the checkerboard (i.e., the total fixation duration to the face divided by the total fixation duration to the face + checkerboard and the ratio multiplied by 100) across trials for each emotion.
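The dependent measure can be written out directly. One detail is an assumption: the sentence above can be read as pooling fixation durations across all valid trials of an emotion before taking the ratio (rather than averaging per-trial ratios), and this sketch takes the pooled reading. The function names are hypothetical.

```python
def percent_face(face_ms, checkerboard_ms):
    """Face fixation as a percentage of face + checkerboard fixation."""
    total = face_ms + checkerboard_ms
    if total == 0:
        raise ValueError("no fixation on either AOI")
    return 100.0 * face_ms / total


def percent_face_by_emotion(trials):
    """trials: iterable of (emotion, face_ms, checkerboard_ms) for valid trials,
    with durations measured during the 3000-ms overlap period.

    Durations are summed across trials within each emotion before the ratio is
    taken (an assumed reading of "total fixation duration ... across trials").
    """
    totals = {}
    for emotion, face_ms, board_ms in trials:
        face_sum, board_sum = totals.get(emotion, (0.0, 0.0))
        totals[emotion] = (face_sum + face_ms, board_sum + board_ms)
    return {emotion: percent_face(f, b) for emotion, (f, b) in totals.items()}
```

For example, two fear trials with 2400/300 ms and 2000/600 ms (face/checkerboard) pool to 4400/900 ms, or about 83.0%, whereas a single happy trial with 1500/1200 ms gives about 55.6%.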

Inverted versus upright

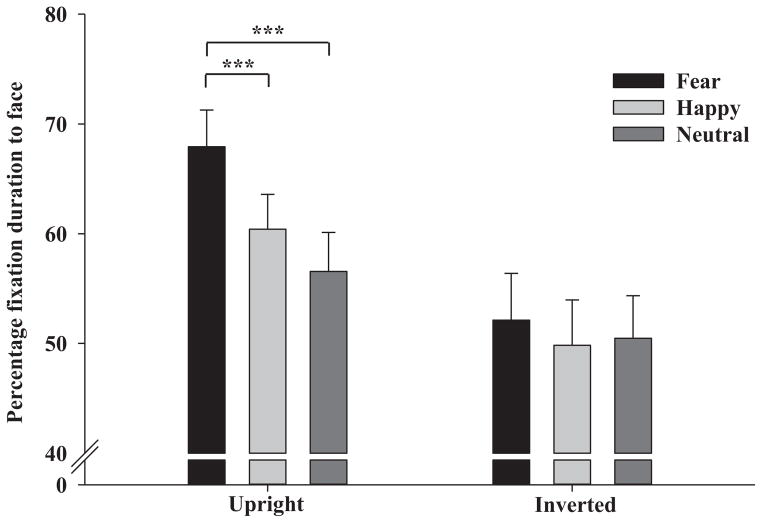

Fig. 1 displays the means and standard errors for the inverted condition in the current study and the upright condition from Heck and colleagues (2016). We first tested for between-group differences between the inverted condition in the current study and the upright condition in the original study. An Emotion (fear, happy, or neutral) × Orientation (upright or inverted) mixed ANOVA revealed significant main effects of emotion, F(2, 92) = 11.60, p < .001, and orientation, F(1, 46) = 4.61, p = .04, qualified by a significant Emotion × Orientation interaction, F(2, 92) = 5.94, p = .004. The main effect of orientation by itself suggests that infants in the upright condition of Heck and colleagues (2016) were responding to some content in the videos beyond the overall degree of movement, because the amount of movement is identical in upright and inverted stimuli.
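As a check on the reported degrees of freedom, the standard partition for a mixed design with one between-subjects factor and one within-subjects factor can be written out. This assumes g = 2 orientation groups of 24 infants each (N = 48; the upright group size is implied by the t(23) statistics) and k = 3 emotions:

$$
df_{\text{Orientation}} = g - 1 = 1, \qquad df_{\text{S/Orientation}} = N - g = 46
$$

$$
df_{\text{Emotion}} = k - 1 = 2, \qquad df_{\text{Emotion} \times \text{Orientation}} = (k-1)(g-1) = 2, \qquad df_{\text{Emotion} \times \text{S/Orientation}} = (k-1)(N-g) = 92
$$

These match F(1, 46) for orientation and F(2, 92) for emotion and the interaction.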

Fig. 1.

Mean percentage fixation duration to the face relative to the peripheral target exhibited by the 5-month-olds in the upright condition of Heck and colleagues (2016) and the 5-month-olds in the inverted condition in the current study. The infants in the original study (Heck et al., 2016) looked significantly longer at the face relative to the checkerboard when the face was fearful compared with both happy and neutral faces. In contrast, the 5-month-olds in the inverted condition did not significantly differentiate their attention as a function of emotion. The error bars indicate 1 standard error. ***p < .001.

Furthermore, note that a significant main effect of emotion was found for 5-month-olds in the upright condition in Heck and colleagues (2016), F(2, 46) = 14.40, p < .001, with infants fixating significantly longer on the face relative to the checkerboard during fear trials compared with both the happy trials, t(23) = 3.80, p = .001, d = 0.47, and neutral trials, t(23) = 4.67, p < .001, d = 0.67 (see Fig. 1). In contrast, a repeated measures ANOVA conducted on data from the inverted condition in the current study failed to find a significant main effect of emotion, F(2, 46) = 0.85, p = .43 (see Fig. 1).
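The within-group tests above (a one-way repeated measures ANOVA over the three emotions, followed by paired t tests) can be sketched in plain Python. This is an illustration of the standard formulas, not the authors' analysis script. The effect size is a further assumption: the source does not state how d was computed, and because d = 0.47 is smaller than t/√n = 3.80/√24 ≈ 0.78, the sketch uses the mean difference divided by the average of the two condition SDs rather than the difference-score SD. The function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def rm_anova_oneway(data):
    """One-way repeated measures ANOVA.

    data: one list per infant, one score per condition (e.g. percentage
    looking for [fear, happy, neutral]). Returns (F, df_effect, df_error).
    """
    n = len(data)        # number of infants
    k = len(data[0])     # number of conditions
    grand = mean(x for row in data for x in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj   # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)
    return F, df_cond, df_error


def paired_t(a, b):
    """Paired-samples t statistic and its degrees of freedom."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


def cohens_d_av(a, b):
    """Mean difference over the average condition SD (assumed variant of d)."""
    return (mean(a) - mean(b)) / ((stdev(a) + stdev(b)) / 2)
```

With 24 infants and 3 emotions, `rm_anova_oneway` yields df = (2, 46), matching the F(2, 46) statistics reported for both the upright and inverted conditions.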

Thus, the significant Emotion × Orientation interaction combined with the significant effects of emotion in the upright condition, but not in the inverted condition, clearly signifies an inversion effect at 5 months of age. This indicates that infants in the original study (Heck et al., 2016) were not responding to low-level features in videos such as differences in the amount of movement.

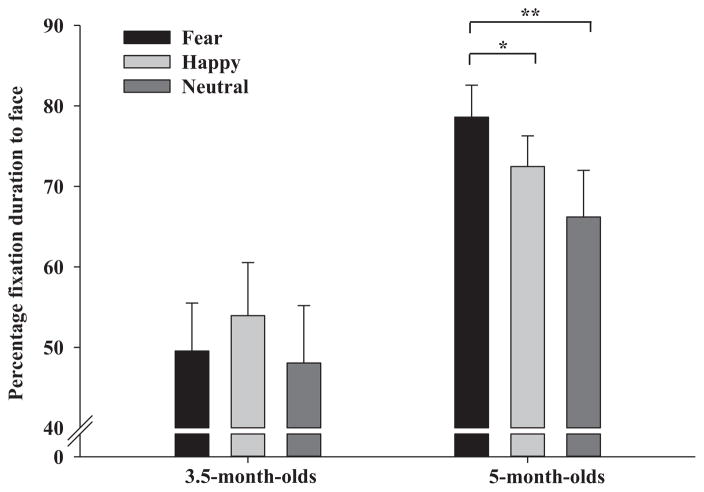

Upright stimulus movement analyses

To further explore our original findings and account for potential alternative explanations, we also conducted our own movement-based analyses of the ADFES videos used in Heck and colleagues (2016), which essentially replicated the pixel luminance differences found by Grossmann and Jessen (2016). As noted by Grossmann and Jessen, videos of one of the three models (F05) did not follow the same pattern as the others: there were no differences in the amount of movement between emotions during the attention overlap test, whereas the amount of movement was higher for fear than for happy and neutral for the other two models (see Fig. 1, right panel, in Grossmann & Jessen, 2016). However, even when analyzing data from only the subset of infants who were tested on this model (n = 8 in each age group, 5 and 3.5 months), there is a significant main effect of emotion, F(2, 28) = 4.14, p = .03, and a significant difference between the two age groups, F(1, 14) = 8.66, p = .01. Although the Emotion × Age interaction did not reach significance, F(2, 28) = 2.79, p = .08, likely due to the small sample size for each group, the same pattern of differences between emotions and age groups seen in the full sample with all three models holds for this subset of infants (see Fig. 2 in the current article). In the case of 5-month-olds, there was significantly more looking to the face relative to the checkerboard in the fear condition compared with both the happy condition, t(7) = 2.34, p = .05, d = 0.56, and the neutral condition, t(7) = 3.35, p = .01, d = 0.88; the difference between the happy and neutral conditions was not significant, t(7) = 1.31, p = .23, d = 0.45. In contrast, 3.5-month-olds tested on Model F05 failed to exhibit significant differences across emotions: fear versus happy, t(7) = −1.20, p = .27, d = 0.25; fear versus neutral, t(7) = 0.34, p = .75, d = 0.08; happy versus neutral, t(7) = 2.26, p = .06, d = 0.30 (see Fig. 2).
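A common way to quantify "amount of movement" from pixel luminance is frame differencing: the mean absolute luminance change between consecutive frames, summed over the period of interest. The exact procedure used by Grossmann and Jessen (2016) is not described here, so the following is a generic sketch under that assumption. Frames are represented as nested lists of grayscale values, and the function names and frame indices are hypothetical.

```python
def frame_difference_profile(frames):
    """Mean absolute luminance change between each pair of consecutive frames.

    frames: list of equally sized 2-D lists of grayscale luminance values.
    Returns one value per consecutive frame pair.
    """
    profile = []
    for prev, cur in zip(frames, frames[1:]):
        diffs = [abs(c - p)
                 for prev_row, cur_row in zip(prev, cur)
                 for p, c in zip(prev_row, cur_row)]
        profile.append(sum(diffs) / len(diffs))
    return profile


def total_movement(frames, start=0, stop=None):
    """Summed frame-difference profile over frames[start:stop], for example
    the frames shown during the 3000-ms attention overlap period."""
    return sum(frame_difference_profile(frames[start:stop]))
```

Comparing `total_movement` across the fear, happy, and neutral videos of each model over the overlap-period frames would show whether a model's videos differ in overall motion, as reported for two of the three models but not for F05.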

Fig. 2.

Mean percentage fixation duration to the upright face relative to the peripheral target by emotion and age group for Model F05 in Heck and colleagues (2016). The F05 videos did not differ in overall degree of movement across emotions during the attention overlap test. The same pattern was found for this subset as in the full sample. The error bars indicate 1 standard error. *p = .05; **p = .01.

Thus, even when only the data from the videos used in Heck and colleagues (2016) that did not differ in the amount of movement were analyzed, there is clear evidence that 5-month-olds attend more to faces than to competing stimuli when the face is fearful than when it is happy or neutral. Moreover, 3.5-month-olds failed to exhibit this pattern of performance. This provides further evidence that 5-month-olds in the original study were responding to something specific about fear rather than to arbitrary low-level features such as the amount of movement.

Discussion

In our original study (Heck et al., 2016), we found that 5-month-olds fixated significantly longer on a face relative to a checkerboard when the face was fearful compared with when it was happy or neutral. The current study examined whether this finding could be due to differences in the amount of movement in the stimulus videos rather than the emotions. To this end, we tested infants on inverted stimuli while repeating the procedures and analyses from the original study. Infants failed to exhibit any differences in attention to fearful stimuli compared with happy or neutral stimuli, in contrast to the findings with the upright videos in the original study. Thus, the current data indicate that 5-month-olds were not responding to the dynamic face videos solely on the basis of the amount of movement because they showed a clear inversion effect. Moreover, analyses of infants’ performance on videos in the original study that did not differ in the total amount of movement across emotions also indicated that 5-month-olds, but not 3.5-month-olds, attended to fear faces more than to happy and neutral faces. These findings provide further evidence of some level of emotion understanding, particularly of fear, at an earlier age than previously thought, prompting the need for further investigations into the developmental mechanisms behind this shift.

Grossmann and Jessen (2016) also suggest that 3.5-month-olds in Heck and colleagues (2016) did not exhibit sensitivity to the differences in the amount of motion in the internal features of the faces due to the immaturity of their visual system, whereas 5-month-olds’ performance may have been facilitated by their ability to process differences in amount of motion. However, this explanation seems unlikely because there is evidence that even 3.5-month-old infants are able to recognize dynamic happy and sad expressions and match corresponding emotional vocalizations when viewing video-recordings of their own mothers but not of an unfamiliar female (Kahana-Kalman & Walker-Andrews, 2001). Moreover, in a live peekaboo paradigm, Montague and Walker-Andrews (2001) found that 4-month-old infants looked longer during anger and fear trials than during sad and happy trials, and the infants’ own affective responses varied by emotion as well. These results underscore the importance of contextual information and also suggest that young infants are indeed capable of detecting differences in the movements of internal facial features in the videos of the sort used in Heck and colleagues (2016), but the meaningfulness of the emotional expressions might not yet be generalized to unfamiliar adults or unfamiliar situations at that age. Because even 3.5-month-olds show a degree of heightened sensitivity to negative emotions under particular circumstances, it is likely that 5-month-olds’ heightened attention to fear in Heck and colleagues’ original study was due to some aspect of the emotion itself, which is highlighted with the use of dynamic stimuli, and not due solely to irrelevant artifacts, such as degree of movement, related to the stimuli.

Although relatively few studies using dynamic faces have been conducted with young infants, there are several examples from the adult literature indicating that dynamic information enhances processing of emotions in faces. Chiller-Glaus, Schwaninger, Hofer, Kleiner, and Knappmeyer (2011) found that adults recognize dynamic emotional faces more easily than static faces in a composite paradigm where the top and bottom halves of the face depicted either the same emotion or different emotions (see also Ambadar, Schooler, & Cohn, 2005; Bould & Morris, 2008; McKelvie, 1995). In addition, the use of dynamic faces improves recognition of subtle emotions compared with static faces (Ambadar et al., 2005; Bould & Morris, 2008). Thus, the use of dynamic emotional stimuli by Heck and colleagues (2016) may have facilitated infants’ performance and led to enhanced attention to fear compared with happy and neutral faces.

Although the results of the current study indicate that our original findings were not due solely to arbitrary differences in the amount of movement in the particular videos used, it is important to note that motion itself is a feature that is inherently different across emotions. For example, the amount, direction, type (e.g., wave-like, parabolic; Chafi, Schiaratura, & Rusinek, 2012), timing (Bould & Morris, 2008), and location of movement (i.e., upper vs. lower face areas) have been found to differ across emotions. Of special relevance to the current study is the difference between fear and happy emotions, with fear identification being driven primarily by changes in the upper region (i.e., the widening of the eyes; Calder, Young, Keane, & Dean, 2000; Fiorentini & Viviani, 2009), whereas happiness is identified primarily through changes in the lower region (i.e., pulling the corners of the mouth into a smile; Calder et al., 2000; Fiorentini & Viviani, 2009).

Also important are the differences in the number of moving parts across emotions. For example, using the descriptions of Facial Action Coding System (FACS) action units (AUs) in Ekman, Friesen, and Hager (2002), Fiorentini, Schmidt, and Viviani (2012) identified a number of characteristics of their actors’ emotion expressions, including the temporal sequence of the onset of different AUs and the total number of AUs activated. Across their actors, the lowest number of AUs activated (3) was found for the happy facial expression, whereas the highest number activated (10) was found for the fearful facial expression. This measure indexed the complexity of the emotion being expressed, which resulted in happiness being grouped as a simple facial expression along with surprise and sadness, whereas fear was grouped with other complex expressions such as anger and disgust.

The videos from the ADFES set used in the current study were recorded by models trained in FACS (see Van der Schalk et al., 2011), and a video was included in the set only if the model activated the AUs specified for that emotion. Similar to Fiorentini and colleagues (2012), the number of AUs required for each emotion in the ADFES varied, with three AUs being necessary for inclusion for happiness and six AUs for fear. In addition to variations in the number and location of activated AUs across emotions, the order of the onset of different AUs resulted in differences in confusability or errors in adults’ identification of emotions in Fiorentini and colleagues (2012). This indicates that the timing of the changes in the face is also important for disentangling complex emotions. Therefore, it may be that the temporal characteristics of the movements as well as the location and direction of movement are more important cues for facial emotion processing than differences in overall amount of movement.

To conclude, we appreciate the point made by Grossmann and Jessen (2016) regarding potential confounding factors in Heck and colleagues (2016), and we acknowledge the challenges associated with controlling for factors such as movement differences between emotions when using dynamic stimuli (Quinn et al., 2011). However, based on the results from the inverted condition reported here and the analyses of data from one model whose portrayal did not differ in movement across emotions, the amount of movement and other low-level features do not appear to be driving the attentional differences exhibited by 5-month-olds in Heck and colleagues (2016). Rather, it appears that 5-month-olds were exhibiting sensitivity to emotional information in the dynamic stimuli used in the original study. Future research will be needed to investigate the nature of features that drive attention to fear during infancy and the mechanisms that engender the development of selective attention to emotions early in life.

Acknowledgments

This research was supported by grants from the National Science Foundation (BCS-1121096) and the National Institute of Child Health and Human Development (HD075829). The authors thank the infants and parents who participated in this study.

References

- Ambadar Z, Schooler JW, Cohn JF. Deciphering the enigmatic face: The importance of facial dynamics in interpreting subtle facial expressions. Psychological Science. 2005;16:403–410. doi: 10.1111/j.0956-7976.2005.01548.x.

- Bannerman RL, Milders M, de Gelder B, Sahraie A. Orienting to threat: Faster localization of fearful facial expressions and body postures revealed by saccadic eye movements. Proceedings of the Royal Society of London B: Biological Sciences. 2009;276:1635–1641. doi: 10.1098/rspb.2008.1744.

- Bertin E, Bhatt RS. The Thatcher illusion and face processing in infancy. Developmental Science. 2004;7:431–436. doi: 10.1111/j.1467-7687.2004.00363.x.

- Bhatt RS, Bertin E, Hayden A, Reed A. Face processing in infancy: Developmental changes in the use of different kinds of relational information. Child Development. 2005;76:169–181. doi: 10.1111/j.1467-8624.2005.00837.x.

- Bould E, Morris N. Role of motion signals in recognizing subtle facial expressions of emotion. British Journal of Psychology. 2008;99:167–189. doi: 10.1348/000712607X206702.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:527–551. doi: 10.1037//0096-1523.26.2.527.

- Chafi A, Schiaratura L, Rusinek S. Three patterns of motion which change the perception of emotional faces. Psychology. 2012;3:82–89.

- Chiller-Glaus SD, Schwaninger A, Hofer F, Kleiner M, Knappmeyer B. Recognition of emotion in moving and static composite faces. Swiss Journal of Psychology. 2011;70:233–240.

- Ekman P, Friesen WV, Hager JC, editors. Facial Action Coding System [E-book]. Salt Lake City, UT: Research Nexus; 2002.

- Fiorentini C, Schmidt S, Viviani P. The identification of unfolding facial expressions. Perception. 2012;41:532–555. doi: 10.1068/p7052.

- Fiorentini C, Viviani P. Perceiving facial expressions. Visual Cognition. 2009;17:373–411.

- Grossmann T. The development of emotion perception in face and voice during infancy. Restorative Neurology and Neuroscience. 2010;28:219–236. doi: 10.3233/RNN-2010-0499.

- Grossmann T, Jessen S. When in infancy does the “fear bias” develop? Journal of Experimental Child Psychology. 2016;153:149–154. doi: 10.1016/j.jecp.2016.06.018.

- Heck A, Hock A, White H, Jubran R, Bhatt RS. The development of attention to dynamic facial emotions. Journal of Experimental Child Psychology. 2016;147:100–110. doi: 10.1016/j.jecp.2016.03.005.

- Kahana-Kalman R, Walker-Andrews AS. The role of person familiarity in young infants’ perception of emotional expressions. Child Development. 2001;72:352–369. doi: 10.1111/1467-8624.00283.

- Kestenbaum R, Nelson CA. The recognition and categorization of upright and inverted emotional expressions by 7-month-old infants. Infant Behavior and Development. 1990;13:497–511.

- Leppänen JM, Nelson CA. Early development of fear processing. Current Directions in Psychological Science. 2012;21:200–204.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6:255–260. doi: 10.1016/s1364-6613(02)01903-4.

- McKelvie SJ. Emotional expression in upside-down faces: Evidence for configurational and componential processing. British Journal of Social Psychology. 1995;34:325–334. doi: 10.1111/j.2044-8309.1995.tb01067.x.

- Missana M, Atkinson AP, Grossmann T. Tuning the developing brain to emotional body expressions. Developmental Science. 2015;18:243–253. doi: 10.1111/desc.12209.

- Missana M, Grossmann T. Infants’ emerging sensitivity to emotional body expressions: Insights from asymmetrical frontal brain activity. Developmental Psychology. 2015;51:151–160. doi: 10.1037/a0038469.

- Missana M, Rajhans P, Atkinson AP, Grossmann T. Discrimination of fearful and happy body postures in 8-month-old infants: An event-related potential study. Frontiers in Human Neuroscience. 2014;8. doi: 10.3389/fnhum.2014.00531.

- Montague DPF, Walker-Andrews AS. Peekaboo: A new look at infants’ perception of emotion expressions. Developmental Psychology. 2001;37:826–838.

- Pallett PM, Meng M. Inversion effects reveal dissociations in facial expression of emotion, gender, and object processing. Frontiers in Psychology. 2015;6. doi: 10.3389/fpsyg.2015.01029.

- Peltola MJ, Hietanen JK, Forssman L, Leppänen JM. The emergence and stability of the attentional bias to fearful faces in infancy. Infancy. 2013;18:905–926.

- Peltola MJ, Leppänen JM, Mäki S, Hietanen JK. Emergence of enhanced attention to fearful faces between 5 and 7 months of age. Social Cognitive and Affective Neuroscience. 2009;4:134–142. doi: 10.1093/scan/nsn046.

- Peltola MJ, Leppänen JM, Palokangas T, Hietanen JK. Fearful faces modulate looking duration and attention disengagement in 7-month-old infants. Developmental Science. 2008;11:60–68. doi: 10.1111/j.1467-7687.2007.00659.x.

- Peltola MJ, Leppänen JM, Vogel-Farley VK, Hietanen JK, Nelson CA. Fearful faces but not fearful eyes alone delay attention disengagement in 7-month-old infants. Emotion. 2009;9:560–565. doi: 10.1037/a0015806.

- Quinn PC, Anzures G, Izard CE, Lee K, Pascalis O, Slater AM, Tanaka JW. Looking across domains to understand infant representation of emotion. Emotion Review. 2011;3:197–206. doi: 10.1177/1754073910387941.

- Reed CL, Beall PM, Stone VE, Kopelioff L, Pulham DJ, Hepburn SL. Brief report: Perception of body posture: What individuals with autism spectrum disorder might be missing. Journal of Autism and Developmental Disorders. 2007;37:1576–1584. doi: 10.1007/s10803-006-0220-0.

- Reed CL, Stone VE, Grubb JD, McGoldrick JE. Turning configural processing upside down: Part and whole body postures. Journal of Experimental Psychology: Human Perception and Performance. 2006;32:73–87. doi: 10.1037/0096-1523.32.1.73.

- Sato W, Kochiyama T, Yoshikawa S. The inversion effect for neutral and emotional expressions on amygdala activity. Brain Research. 2011;1378:84–90. doi: 10.1016/j.brainres.2010.12.082.

- Van der Schalk J, Hawk ST, Fischer AH, Doosje B. Moving faces, looking places: Validation of the Amsterdam Dynamic Facial Expression Set (ADFES). Emotion. 2011;11:907–920. doi: 10.1037/a0023853.

- Zieber N, Kangas A, Hock A, Bhatt RS. The development of intermodal emotion perception from bodies and voices. Journal of Experimental Child Psychology. 2014;126:68–79. doi: 10.1016/j.jecp.2014.03.005.

- Zieber N, Kangas A, Hock A, Bhatt RS. Infants’ perception of emotion from body movements. Child Development. 2014;85:675–684. doi: 10.1111/cdev.12134.

- Zieber N, Kangas A, Hock A, Bhatt RS. Body structure perception in infancy. Infancy. 2015;20:1–17.