Abstract

Media literacy interventions offer promising avenues for the prevention of risky health behaviors among children and adolescents, but the current literature remains largely equivocal about their efficacy. The primary objective of this study was to develop and test theoretically grounded measures of audiences’ degree of engagement with the content of media literacy programs, based on the recognition that engagement (and not participation per se) can better explain and predict individual variations in the effects of these programs. We tested the validity and reliability of this measure with two samples of 10th grade high school students who participated in a pilot test and a subsequent feasibility test of a brief media literacy curriculum. Four message evaluation factors (involvement, perceived novelty, critical thinking, and personal reflection) emerged and demonstrated acceptable reliability.

Keywords: measures, media literacy, health, engagement, critical thinking, reflection

Media literacy interventions offer relatively new and promising avenues for the prevention of risky health behaviors among children and adolescents, but the current literature remains largely equivocal about their efficacy (Banerjee & Kubey, 2013; Bergsma & Carney, 2008; Jeong, Cho, & Hwang, 2012; Martens, 2010). We propose (a) that much of this ambiguity stems from a lack of conceptual clarity in the literature regarding the cognitive process through which media literacy interventions influence their target audience, and (b) that the ability to track this cognitive process by means of valid and reliable measures is necessary for evaluating the effects of media literacy programs on their audience. Accordingly, the primary objective of this study was to develop and test theoretically grounded measures of audiences’ degree of engagement with the content of media literacy programs, based on the recognition that engagement (and not participation per se) can better explain and predict individual variations in the effects of these programs.

Media Literacy as a Health Prevention Tool

Media literacy is defined as the ability to access, analyze, evaluate, and create messages in a wide variety of media modes and formats while recognizing the role and influence of media in society (Aufderheide & Firestone, 1993; Hobbs, 1998). Media literacy-based interventions have more recently been introduced into the field of health education and promotion and are currently recommended by leading health prevention organizations (e.g., the American Academy of Pediatrics, the Centers for Disease Control and Prevention, and the Office of National Drug Control Policy) as a means of educating adolescents about a range of negative media influences on their behavior (Primack, Fine, Yang, Wickett, & Zickmund, 2009). Such interventions typically include discussion of persuasive media strategies and analysis of sample persuasive media messages, sometimes along with a component in which students plan and/or produce counter-messages (see active involvement interventions; Greene, 2013). These activities are intended to help adolescents become more aware of the constructed nature of messages and to make them more adept at identifying the motives, purposes, and points of view embedded in messages (Hobbs, 1998).

Three recent systematic reviews (Banerjee & Kubey, 2013; Bergsma & Carney, 2008; Martens, 2010) and one meta-analytic review (Jeong et al., 2012) sought to assess the efficacy of media literacy interventions based on existing evidence. The meta-analytic review investigated the average effect size and moderators of 51 media literacy interventions on topics such as advertising, alcohol, body image and eating, sex, tobacco, and violence. Overall, the mean effect size of media literacy interventions, weighted by sample size, was .37 (p < .001), with a 95% confidence interval ranging from .27 to .47. This finding indicates that media literacy interventions can have positive effects on knowledge, critical thinking, and evaluations of behaviors, and therefore may be an effective approach for reducing potentially harmful effects of media messages (Jeong et al., 2012). For example, Banerjee and Greene’s (2006, 2007) tobacco prevention media literacy intervention was effective in reducing positive attitudes toward smoking and behavioral intentions to smoke. Similarly, Austin and colleagues’ (2005) media literacy intervention to prevent tobacco use reduced youths’ beliefs that most peers use tobacco, increased their understanding of advertising techniques, and increased their efficacy for participating in advocacy and prevention activities (Austin, Pinkleton, Hust, & Cohen, 2005). Overall, then, there is good evidence that media literacy interventions can enhance young people’s critical thinking abilities and encourage them to make healthier decisions.
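A sample-size-weighted mean effect size gives larger studies proportionally more influence than a simple average. The Python sketch below shows only this weighting step; the effect sizes and sample sizes are hypothetical and are not the studies analyzed by Jeong et al. (2012), and the confidence interval and moderator analyses reported in meta-analyses require additional variance calculations that are not shown here.

```python
def weighted_mean_effect(effects, sizes):
    """Sample-size-weighted mean of per-study effect sizes."""
    if not effects or len(effects) != len(sizes):
        raise ValueError("need one sample size per effect size")
    total_n = sum(sizes)
    # Each study's effect counts in proportion to its sample size.
    return sum(d * n for d, n in zip(effects, sizes)) / total_n


# Hypothetical studies for illustration only (not Jeong et al.'s data).
effects = [0.20, 0.45, 0.60]
sizes = [100, 300, 100]

weighted = weighted_mean_effect(effects, sizes)
unweighted = sum(effects) / len(effects)
print(f"weighted mean = {weighted:.3f}, unweighted mean = {unweighted:.3f}")
```

In this made-up example the largest study (effect .45, n = 300) pulls the weighted mean (.430) slightly above the unweighted mean (.417).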

Unfortunately, not all media literacy programs are efficacious. Some studies report limited effects of media literacy interventions, while others report unintended effects or effects in an undesired direction (Banerjee & Kubey, 2013; Bergsma & Carney, 2008; Martens, 2010). For instance, Ramasubramanian and Oliver (2007) explored the role of media literacy training and counter-stereotypical media exemplars in decreasing prejudicial responses toward Asian Indians, African Americans, and Caucasian Americans. Participants were exposed to either a media literacy video or a control video, followed by an activity in which they analyzed stereotypical or counter-stereotypical news stories. Compared with the control video, the media literacy video increased prejudicial responses, thereby activating prejudice rather than decreasing it (Ramasubramanian & Oliver, 2007). Other scholars have likewise found that improving children’s understanding of television may heighten the salience or appeal of violence and increase children’s willingness to use violence (Byrne, Linz, & Potter, 2009; Nathanson, 2004).

One possible explanation for the differential success of media literacy interventions involves variations in content and/or delivery across programs. The Center for Media Literacy (2007) endorses five core concepts as central to the design of the content of media literacy interventions: (1) all media messages are social constructions (i.e., they are constructed by somebody and can never reflect reality entirely); (2) people who make media messages use creative languages that have rules (i.e., creative components such as words, music, movement, and camera angle are used to develop media messages in formats such as magazine covers and advertisements); (3) different people experience the same media messages differently; (4) producers of media messages have their own values and points of view; and (5) media messages are constructed to achieve a purpose, usually profit and/or power. In a systematic review of health-promoting media literacy interventions, Bergsma and Carney (2008) found that only 10 of the 28 interventions they considered incorporated all five core concepts. In general, effective interventions were slightly more likely than ineffective interventions to have taught all the core concepts (Bergsma & Carney, 2008). However, the differences in efficacy the authors found may also reflect the fact that effectiveness was defined differently across the studies included in the review. Based on a synthesis of the literature on media literacy education across contexts and applications, Martens (2010) notes that while some media literacy programs focus on literacy-related outcomes such as critical and informed consumption of media messages (see also Kubey, 2004), other programs “mostly try to increase children’s and adolescents’ mass media knowledge and skills because this, in turn, will maximize positive media effects and minimize negative ones” (Martens, 2010, p. 7). 
That is, many media literacy interventions are based on the assumption that individuals can protect themselves against negative influences from the media by becoming more media literate (Potter, 2004, 2010), and therefore the efficacy of such interventions ought to be assessed in relation to their ability to activate cognitive defenses against persuasive media content that promotes unhealthy behaviors.

In reflecting on the challenge of evaluating the effects of health communication interventions, Hornik and Yanovitzky (2003) argue that a more robust approach to evaluating the efficacy of interventions is to focus on their underlying theory of change (or logic model). That is, the most credible evidence about the efficacy of an intervention involves demonstrating that the program caused the range of intended effects specified by its theory of change. Indeed, some of the most robust evidence regarding the effect of media literacy programs on adolescents’ health-related cognitions and behavior has been produced by Austin and colleagues using the message interpretation process (MIP) model (e.g., Austin, 2007; Austin & Knaus, 2000; Austin & Meili, 1994; Austin, Pinkleton, & Fujioka, 2000), precisely because their assessments of direct program effects were tested against the predictions of their hypothesized model. In what follows, we discuss the contribution of the MIP model to understanding the process by which media literacy interventions influence their target audience and how the model may be augmented, conceptually and empirically, to allow for more rigorous evaluations of effects.

Effects of Media Literacy Interventions on Behavior: A Conceptual Framework

To date, the field of media literacy has not produced a systematic account of the process by which media literacy interventions may contribute to positive health outcomes among adolescents (Martens, 2010). Representing this process necessarily requires an explication of how audiences interact (or are expected to interact) with the content and activities of media literacy programs. The MIP model (Austin, 2007; Austin & Knaus, 2000; Austin & Meili, 1994; Austin et al., 2000), which evolved from the integration of social cognitive theory (Bandura, 1986), the expectancy theory of behavior (Fishbein & Ajzen, 1975), and dual-process theories of attitude change (Petty & Cacioppo, 1986), attempts to do just that by tracking how adolescents process information they obtain through media literacy interventions to form their interpretations of media messages that promote unhealthy behaviors. According to this model, whether or not adolescents are influenced by media messages depends on the thoughts and feelings the messages invoke in them. In general, adolescents’ evaluation of media messages is informed by logic (comparison of the information provided against a benchmark) but is also partially based on their affective reactions to the message. The logical interpretation of a message begins with an evaluation of the realism or representativeness of its portrayal of the behavior; unless the portrayal is rejected at this stage, it is next evaluated for similarity (i.e., how closely the portrayal reflects normative personal experiences). Assuming that a media portrayal is judged to match the adolescent’s perception of reference group norms (i.e., high similarity), the next step in the process involves identification with the portrayal, which, in turn, leads the adolescent to form positive expectancies (i.e., the expectation that doing something consistent with what is portrayed in the media message will have positive outcomes). 
The affective route of message interpretation that adolescents employ to evaluate the desirability or undesirability of the behavior portrayed in the media message is assisted by heuristics (e.g., liking of the peer model or the social scene portrayed in the ad) and, depending on the intensity of the affective reaction induced by the message, may bypass or bias the effect of logical evaluations on behavioral expectancies (Austin, Pinkleton, & Funabiki, 2007; Nathanson, 2004). Over time, as adolescents are exposed repeatedly to the same portrayal of behavior, they either internalize or reject it based on their interpretation. If they internalize the message, they perceive positive outcomes of the behavior promoted. If they reject it, they perceive negative outcomes of the behavior promoted. Thus, adolescents’ decisions to enact or to avoid the behavior portrayed in media messages can be reliably predicted from their perceived expectancies.

Consistent with this theoretical rationale, research using the MIP model has generally found that media literacy interventions can influence adolescents’ decisions regarding risky health behaviors portrayed in the media (e.g., Austin et al., 2005; Chen, 2009; Kupersmidt, Scull, & Austin, 2010; Pinkleton, Austin, Cohen, Miller, & Fitzgerald, 2007). However, because the model is primarily concerned with the interpretation of media messages, it does not fully represent the process by which participation in media literacy interventions may lead adolescents to reject or resist negative influences from media content. What is currently missing from the conceptualization of this process is an account of which features of a media literacy intervention may motivate adolescents to engage in the cognitive process of message interpretation, as well as an account of when, or under which circumstances, adolescents’ critical evaluation of media portrayals will go on to influence their own decisions and behavior. Our discussion below highlights the crucial role of personal engagement with, and reflection on, the knowledge and skills adolescents acquire through participation in a media literacy program in their ability to resist negative influences from the media.

The Importance of Engagement and Reflectiveness

As we consider the process by which media literacy interventions may influence adolescents’ decisions and behaviors in response to media portrayals, it is important to keep in mind that members of the target audience for these interventions are active participants in this process (Biocca, 1988). Audience selectivity (i.e., selective attention, perception, and retention) and audiences’ degree of involvement with the content of media messages have long been recognized in persuasion theory as important determinants of how people process and evaluate the information to which they are exposed and, consequently, of the likelihood that they will be influenced by this information (Petty & Cacioppo, 1986; Sherif & Hovland, 1961). For example, social judgment theory (Sherif & Hovland, 1961) explains that the likelihood of a message being accepted or rejected by audiences is a function of their ego-involvement with the issue, or the extent to which the information is judged to be personally relevant. Higher levels of ego-involvement make for a much narrower (or more highly selective) range of positions that a person finds acceptable. The elaboration likelihood model (ELM; Petty & Cacioppo, 1986) defines involvement with the message as the amount of cognitive effort a person invests in evaluating messages, under the assumption that a greater investment of cognitive resources (or elaboration) is more conducive to learning from a message. Thus, audience involvement with messages is primarily assumed to be a function of an audience’s motivation to process the information provided. We can therefore predict that if participants in a media literacy program judge the activity as boring or irrelevant, they will likely ignore or disregard the information it offers despite being exposed to it. 
On the other hand, if they find the activity interesting and relevant, they are more likely to process and remember the information provided through their participation in this activity and, therefore, are more likely to use this information to form their attitudes and to guide their behavior.

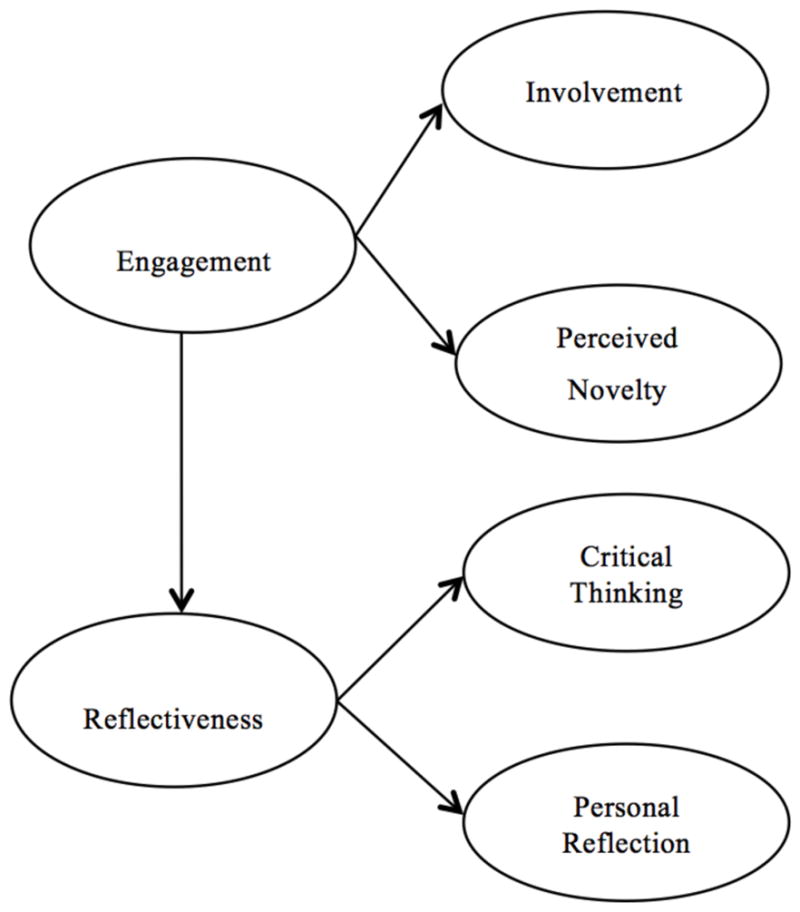

Given this, and because media literacy programs are essentially designed to be active and participatory (Hobbs, 2001, 2006; Masterman, 2001), it seems important to evaluate the degree to which target audiences are personally engaged with the activity in order to better predict its influence on their cognitions and behavior. Conceptually, our definition of engagement (see Figure 1) includes the dimensions of personal involvement with, and perceived novelty of, the activity, as both have been shown to be reliable predictors of information processing (Cacioppo, Petty, Feinstein, & Jarvis, 1996). Our conceptualization of personal involvement as the degree to which participants found the media literacy activity to be personally relevant and interesting without getting distracted is derived directly from its conceptualization in dual-process theories of persuasion (Petty, Cacioppo, & Goldman, 1981). Novelty is typically discussed in relation to the need for cognition component of dual-process models (see Cacioppo et al., 1996). However, in the context of media literacy interventions, it seems more reasonable to expect that perceived novelty will be assessed in relation to how similar to, or different from, the typical educational activities that adolescents routinely experience the activities are. For example, Balch (1998) found that adolescent smokers would likely avoid anti-smoking programs that relied on the lecturing, preaching, nagging, and pushing they expected from such programs. Instead, Balch (1998) suggested that creative advertising opportunities may appeal to students and can involve them in designing anti-smoking or quitting efforts and programs (see active involvement interventions; Greene, 2013). 
Conceptually, our definition of novelty captures the degree of newness or originality of the media literacy activity, under the assumption that greater perceived novelty increases adolescents’ engagement with the activity.

Figure 1.

Conceptual Model of Engagement and Reflectiveness

Reflectiveness

Audiences’ degree of engagement with media literacy programs is a necessary condition for initiating the acquisition of the knowledge and critical thinking skills that adolescents need to interpret media messages and portrayals, but it may be insufficient for influencing adolescents’ health-related decisions and behaviors unless the knowledge acquired is used to self-regulate one’s behavior. That is, whereas the MIP model views the process by which message interpretation influences behavioral expectancies as primarily involving conformity to reference group norms, social cognitive theory (Bandura, 1986), on which this model is based, also acknowledges the importance of self-reflection for individuals’ ability to self-regulate their behavior based on their assessment of information they receive from their environment. According to Bandura (1986), people are self-motivated to change their behavior once they accept that their current behavior or conduct does not meet their personal standards or goals. When they perceive a discrepancy between their current and ideal behavior (which is often accompanied by feelings of discomfort or another affective reaction), they are motivated to reflect on how to align their personal behavior or goals with the standards they set for themselves. Assuming they have a sense of self-efficacy (that is, a belief in their ability to change their behavior), they make specific plans for changing their behavior that they then go on to execute.

Based on the self-regulation rationale of social cognitive theory, we propose that the degree to which participants personally reflect on what they learned from the media literacy intervention will be an important determinant of whether or not this knowledge is processed in a manner that can influence their behavioral expectancies and, subsequently, their behavior. Accordingly, we conceptualize reflectiveness as composed of two dimensions (see Figure 1): critical thinking (the degree to which the media literacy activity stimulated critical evaluations of media messages) and personal reflection (the degree to which the knowledge acquired was used to reevaluate personal conduct).

Study Goals

The primary goal of this study was to develop and test theoretically grounded and reliable measures of audience engagement with, and reflectiveness on, media literacy interventions so that these constructs can be incorporated into evaluations of media literacy interventions as potentially important explanatory variables. We examine the predictive utility of including both constructs in the outcome evaluation of a media literacy intervention elsewhere (Greene et al., 2011). Our focus here is on describing the procedure we followed to develop and test the measurement model presented in Figure 1.

Consistent with our conceptualization of each construct, we sought to represent engagement by measuring two distinct dimensions of this construct: degree of involvement with the intervention and degree of perceived novelty of the intervention. We therefore sought to develop two corresponding subscales of self-reported items that are moderately correlated with one another (such a pattern would be consistent with the argument that each is a distinct dimension of the same latent construct). Similarly, we sought to represent reflectiveness via the combination of two moderately correlated dimensions (or subscales): critical thinking and personal reflection. Finally, because we expect reflectiveness to depend in part on degree of engagement with the intervention, based on the self-regulation logic of social cognitive theory (Bandura, 1986), the model in Figure 1 also includes a hypothesized link (or an expected association) between the two constructs. In the next section, we describe the measurement of each of these constructs and the methodology we used to evaluate the validity, reliability, and overall utility of the instrument based on data obtained from an actual media literacy intervention.

Research Methods

This project uses data collected from two samples of adolescents to evaluate responses to a media literacy curriculum. We describe the common procedure first, followed by the two samples, the measures, and finally the data analyses. This measurement study is part of a larger project testing an intervention in which participants were randomly assigned to different conditions. For the purposes of this paper, however, data were combined across all conditions, after we verified that the measurement structure was invariant across conditions, to ensure adequate control of Type I and Type II errors.

Procedure

Participants in both samples attended a 75-minute media literacy workshop that included discussion of the persuasion techniques and advertising claims employed by advertisers, along with coverage of the production features typically used to sell alcohol and other products. Participants learned to critically evaluate the claims made in alcohol ads and in anti-alcohol ads. At the end of the workshop, participants completed a short questionnaire evaluating the media literacy intervention and offered oral comments (the evaluation took an additional 15 minutes). This paper focuses on the curriculum evaluation measures from this immediate post-implementation questionnaire.

Sample I

The data for Sample I were collected in 2010 as part of a posttest-only pilot study to adapt and refine an existing brief media literacy intervention targeting adolescents. The full sample included 308 students from 32 schools across Pennsylvania (representing rural, smaller town, smaller city, suburban, and urban school districts) who were attending a Leadership Institute. After 14 participants were removed due to missing data on the relevant items, the final sample comprised 294 high school students in 10th grade (44 male and 169 female; 49 students did not report gender) aged 14–16 years (M = 15.62, SD = .57). About 64% reported their race/ethnicity as White, with others indicating Hispanic/Latino (17%), African American (13%), American Indian/Alaskan Native (3%), Asian American/Pacific Islander (3%), or some other race/ethnicity (less than 1%).

Sample II

The data for Sample II came from a longitudinal feasibility test of the revised curriculum implemented in 2011. Participants were 10th grade high school students from 34 schools across Pennsylvania (representing rural, smaller town, smaller city, suburban, and urban school districts) who took part in this study while attending a Leadership Institute. After three participants were removed due to missing data on the relevant items, the final sample comprised 171 students (59 male and 102 female; 10 students did not report gender) aged 14–17 years (M = 15.75, SD = .90). About 70% reported their race/ethnicity as White, while others indicated Hispanic/Latino (10%), Asian American/Pacific Islander (10%), American Indian/Alaskan Native (4%), African American (3%), or some other race/ethnicity (3%).

Measures

The present analyses focus on 14 questionnaire items (expanded to 16 in Study II) that measured participants’ degree of engagement with the media literacy workshop and their subsequent reflection on what they learned. Tables 1 and 2 list the items that were developed to measure engagement (see the subscales labeled “involvement” and “perceived novelty”) and reflectiveness (see the subscales labeled “critical thinking” and “personal reflection”). The items were initially developed during the pilot implementation of the intervention and were refined based on feedback collected from participants (see Greene et al., 2011). Most items were five-point Likert-type items. The scales were modified slightly between Study I and Study II. Specifically, the personal reflection items, which used a four-point response scale in Study I (see Table 1), were modified to use a five-point scale for consistency with the other subscales in the curriculum evaluation measure, and two additional items were developed for perceived novelty and reflectiveness. Because two Study I items did not correlate well with their scales, they were modified for Study II.
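Items marked (R) in Tables 1 and 2 are negatively worded and, by convention, are reverse-scored before subscale composites are formed by averaging item responses. The Python sketch below illustrates these two steps under stated assumptions: responses are stored as one dictionary per student, the item keys (`interesting`, `distracted`, `enjoyed`, `boring`) are hypothetical shorthand for the involvement items, and the student's answers are invented. The reversal formula (scale minimum + scale maximum − response) works for any response range, including the four-point Study I personal reflection scale.

```python
SCALE_MIN, SCALE_MAX = 1, 5  # five-point Likert-type range


def reverse_score(value, lo=SCALE_MIN, hi=SCALE_MAX):
    """Flip a response so that higher values mean the same thing on every item."""
    return lo + hi - value


def composite(responses, items, reversed_items, lo=SCALE_MIN, hi=SCALE_MAX):
    """Average one respondent's answers to a subscale, reverse-scoring (R) items first."""
    scores = [
        reverse_score(responses[item], lo, hi) if item in reversed_items else responses[item]
        for item in items
    ]
    return sum(scores) / len(scores)


# Hypothetical item keys and responses, for illustration only.
involvement_items = ["interesting", "distracted", "enjoyed", "boring"]
reversed_items = {"distracted", "boring"}  # the (R) items
student = {"interesting": 4, "distracted": 2, "enjoyed": 5, "boring": 1}

print(composite(student, involvement_items, reversed_items))
```

Here "distracted" (2) becomes 4 and "boring" (1) becomes 5, so the involvement composite is (4 + 4 + 5 + 5) / 4 = 4.5.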

Table 1.

Study I: Workshop Engagement and Reflectiveness Reliability, Item Means and Primary Factor Loading

| Scale/Item | M | SD | Factor Loading |
|---|---|---|---|
| Involvement (1 = Strongly Disagree to 5 = Strongly Agree) α = .85 | | | |
| -The workshop was interesting to me. | 3.78 | .80 | .79 |
| -I got easily distracted during the workshop. (R) | 3.44 | .97 | .78 |
| -I enjoyed this workshop. | 3.46 | .85 | .79 |
| -The workshop was boring. (R) | 3.61 | .98 | .79 |
| Personal Reflection (1 = Not at all to 4 = A Lot) α = .75 | | | |
| -How much did the workshop make you think about the impact of advertising on you personally? | 3.00 | .80 | .61 |
| -How much did the workshop make you think about the impact of advertising on your peers? | 3.08 | .81 | .67 |
| -How much did the workshop make you think about your alcohol use? | 2.54 | 1.11 | .74 |
| -How much did the workshop make you think about how your peers use alcohol? | 2.98 | .94 | .75 |
| Perceived Novelty (1 = Strongly Disagree to 5 = Strongly Agree) α = .75 | | | |
| -The workshop was just like what we normally do in school. (R) | 3.45 | 1.06 | .78 |
| -I’ve never done anything like what we did in the workshop today. | 2.43 | 1.06 | .72 |
| -The workshop was different from regular school classes. | 3.53 | 1.05 | .85 |
| Critical Thinking (1 = Strongly Disagree to 5 = Strongly Agree) α = .76 | | | |
| -This workshop said something important to me. | 3.46 | .89 | .61 |
| -The messages in the workshop made me think about the ads that I see. | 3.79 | .78 | .83 |
| -The messages in the workshop made me think about the truthfulness of ad claims. | 3.80 | .77 | .86 |

Table 2.

Study II: Workshop Engagement and Reflectiveness Reliability, Item Means and Primary Factor Loading

| Scale/Item | M | SD | Factor Loading |
|---|---|---|---|
| Involvement (1 = Strongly Disagree to 5 = Strongly Agree) α = .87 | | | |
| -The workshop was interesting to me. | 3.50 | .90 | .88 |
| -I got easily distracted during the workshop. (R) | 3.01 | 1.09 | .88 |
| -I enjoyed this workshop. | 3.51 | .88 | .79 |
| -The workshop was boring. (R) | 2.76 | 1.12 | .54 |
| -This workshop material was important to me. | 3.50 | .86 | .63 |
| Personal Reflection (1 = Strongly Disagree to 5 = Strongly Agree) α = .69 | | | |
| -The workshop made me think a lot about the impact of advertising on me. | 3.58 | .80 | .94 |
| -The workshop made me think a lot about the impact of advertising on my peers. | 3.64 | .82 | .59 |
| -The workshop made me think a lot about my alcohol use. | 3.02 | 1.23 | .46 |
| Perceived Novelty (1 = Strongly Disagree to 5 = Strongly Agree) α = .77 | | | |
| -The workshop was just like what we normally do in school. (R) | 2.76 | 1.15 | .63 |
| -I’ve never done anything like what we did in the workshop today. | 2.63 | 1.13 | .63 |
| -The workshop was different from regular school classes. | 3.40 | 1.08 | .82 |
| -The workshop was unique. | 3.37 | .96 | .66 |
| Critical Thinking (1 = Strongly Disagree to 5 = Strongly Agree) α = .83 | | | |
| -The workshop made me think about the ads that I see. | 3.87 | .79 | .87 |
| -The workshop made me think about the truthfulness of ad claims. | 3.83 | .83 | .78 |
| -The workshop made me think about advertising. | 3.80 | .78 | .72 |

Data Analysis: Study I

In the first step of the analyses, we examined the distribution of all items to detect any irregular response patterns. All items exhibited approximately normal distributions (skewness and kurtosis values were smaller than 2 in absolute value). We then examined inter-item correlations within each of the four subscales. Next, we subjected the data to principal component analysis, using eigenvalues, scree plots, and the conceptual framework to determine the number of factors, and then applied Varimax rotation to clarify the factor structure and create subscales. The reliability of each subscale was assessed using Cronbach’s alpha as a measure of the internal consistency of the set of items making up that subscale (α ≥ .70 was considered adequate and α ≥ .80 good). Finally, we examined the correlations among the four subscales to assess the convergent and discriminant validity of our measure.
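The exploratory steps above (principal component analysis of the item correlation matrix followed by Varimax rotation) can be sketched with NumPy. This is a minimal illustration of the general technique, not the authors' analysis code, and it rests on several assumptions: the data are simulated (six hypothetical items driven by two hypothetical latent factors), the number of components is fixed at two rather than chosen from eigenvalues and a scree plot, and `pca_loadings` and `varimax` are illustrative helper names. The rotation uses the widely cited SVD-based iteration for the Varimax criterion.

```python
import numpy as np


def pca_loadings(data, n_components):
    """Eigenvalues and unrotated component loadings from the item correlation matrix."""
    corr = np.corrcoef(data, rowvar=False)      # columns are items, rows are respondents
    eigvals, eigvecs = np.linalg.eigh(corr)     # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]           # re-sort from largest to smallest
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Loadings = eigenvectors scaled by the square roots of their eigenvalues.
    loadings = eigvecs[:, :n_components] * np.sqrt(eigvals[:n_components])
    return eigvals, loadings


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal Varimax rotation using the standard SVD-based iteration."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Matrix whose SVD yields the updated rotation (Varimax criterion, gamma = 1).
        target = rotated ** 3 - rotated @ np.diag(np.sum(rotated ** 2, axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_criterion = np.sum(s)
        if new_criterion < criterion * (1 + tol):  # stop once the criterion stops improving
            break
        criterion = new_criterion
    return loadings @ rotation


# Simulated data (hypothetical, not study data): items 0-2 reflect one latent
# factor and items 3-5 another, plus random noise.
rng = np.random.default_rng(0)
n_respondents = 500
factors = rng.normal(size=(n_respondents, 2))
pattern = np.array([[0.8, 0.0]] * 3 + [[0.0, 0.8]] * 3)
items = factors @ pattern.T + rng.normal(scale=0.6, size=(n_respondents, 6))

eigvals, loadings = pca_loadings(items, n_components=2)
rotated = varimax(loadings)
print("eigenvalues:", np.round(eigvals, 2))
print("Varimax-rotated loadings:\n", np.round(rotated, 2))
```

Because Varimax is an orthogonal rotation, each item's communality (its row sum of squared loadings) is unchanged; the rotation only redistributes variance across components so that each item loads mainly on one of them.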

Data Analysis: Study II

The measures were modified slightly for Study II, including the generation of two additional items (one for perceived novelty and one for reflectiveness). In the first step of the Study II analyses, we examined the distribution of all items to detect any irregular response patterns; all items exhibited approximately normal distributions. We then examined inter-item correlations within each subscale. Because the subscales were explored and identified using data from the first sample, our goal was to confirm the factor structure, with all four subscales treated as dimensions of the latent variable of motivation to process information, using a second-order confirmatory factor analysis (CFA) estimated by maximum likelihood structural equation modeling (AMOS 18.0). The reliability of each subscale was assessed using Cronbach’s alpha (see Note 1). Finally, we examined the correlations among the four subscales to assess the convergent and discriminant validity of our measure. In the next section, we present the results from Study I and Study II.
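For reference, Cronbach’s alpha for a subscale of k items is α = k / (k − 1) × (1 − sum of item variances / variance of total scores). The Python sketch below computes it with the standard library; the five-respondent data set is hypothetical, items are assumed to be reverse-scored already, and the text labels simply apply the .70 (adequate) and .80 (good) cut-offs stated in the Study I analysis plan. The helper names `cronbach_alpha` and `describe_alpha` are illustrative.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha from rows of item scores (one row per respondent)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance(column) for column in zip(*rows)]  # variance of each item
    total_var = variance([sum(row) for row in rows])        # variance of summed scores
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def describe_alpha(alpha):
    """Label alpha with the cut-offs used in the Study I analysis (.70 adequate, .80 good)."""
    if alpha >= 0.80:
        return "good"
    if alpha >= 0.70:
        return "adequate"
    return "below adequate"


# Hypothetical five respondents answering a three-item subscale (already reverse-scored).
rows = [
    [4, 4, 5],
    [3, 3, 3],
    [5, 4, 5],
    [2, 3, 2],
    [4, 5, 4],
]
alpha = cronbach_alpha(rows)
print(f"alpha = {alpha:.2f} ({describe_alpha(alpha)})")
```

Sample variances are used throughout; because the same divisor appears in the numerator and denominator of the ratio, using population variances instead would give the same alpha.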

Results

Study I

Table 1 summarizes the results of the principal component analysis. Based on examination of the scree plot and the best conceptual fit, this procedure identified four factors from the 14 items (see the Varimax-rotated factor loadings of individual items in Table 1). The first factor, labeled Involvement (eigenvalue = 5.26), was composed of 4 items and accounted for 35.6% of the variance, with good internal consistency (α = .85). The second factor, labeled Personal Reflection (eigenvalue = 2.05), was composed of 4 items and accounted for 14.6% of the variance, with acceptable internal consistency (α = .75). The third factor, labeled Perceived Novelty (eigenvalue = 1.16), was composed of 3 items and accounted for 8.4% of the variance, with acceptable reliability (α = .75). The fourth and last factor, labeled Critical Thinking (eigenvalue = 1.04), was also composed of 3 items and accounted for 7.4% of the variance, with acceptable reliability (α = .76). This analysis supports our underlying theoretical rationale that motivation to process the information provided by the intervention is a multidimensional construct.
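In principal component analysis of a correlation matrix, the total variance equals the number of items, so an unrotated component's share of variance is its eigenvalue divided by the number of items (14 in Study I). The Python sketch below applies this relation to the eigenvalues reported above. It assumes the percentages refer to unrotated components; after Varimax rotation, variance is redistributed across factors and no longer maps one-to-one onto the eigenvalues. The resulting percentages can be compared with those reported in the text.

```python
N_ITEMS = 14  # Study I items; with standardized items, total variance = 14

# Eigenvalues as reported for the Study I principal component analysis.
eigenvalues = {
    "Involvement": 5.26,
    "Personal Reflection": 2.05,
    "Perceived Novelty": 1.16,
    "Critical Thinking": 1.04,
}


def variance_share(eigenvalue, n_items=N_ITEMS):
    """Percent of total item variance carried by one unrotated principal component."""
    return 100 * eigenvalue / n_items


for name, value in eigenvalues.items():
    print(f"{name}: {variance_share(value):.1f}%")
print(f"Cumulative: {sum(variance_share(v) for v in eigenvalues.values()):.1f}%")
```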

Once a composite variable had been created for each subscale by averaging responses to the relevant items, the next step of the analysis examined the bivariate correlations among the four factors (or subscales) to establish convergent validity. Convergent validity is established when measures of constructs that theoretically should be related to each other (such as subscales of the same latent variable) are, in fact, correlated with each other, albeit not perfectly (otherwise they would represent the same dimension of the construct). As Table 3 illustrates, the non-trivial and statistically significant bivariate correlations among the subscales are consistent with our expectations regarding the convergent validity of the instrument. Specifically, the correlations within each pair of subscales measuring the same latent construct (involvement and perceived novelty, r = .48, which measure engagement; critical thinking and personal reflection, r = .56, which measure reflectiveness) were generally greater than the correlations between pairs of subscales hypothesized to measure different constructs. The discriminant validity of our instrument is supported by the smaller correlations between subscales of different constructs, most notably between perceived novelty and the two reflectiveness subscales (r = .18 and r = .24). At the same time, the statistically significant moderate correlation between engagement and reflectiveness (r = .39) suggests that the two variables are positively associated, as predicted by the self-regulation rationale of social cognitive theory.
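The two steps described in this paragraph (averaging item responses into a subscale composite, then correlating composites) can be sketched as follows. This is a minimal Python illustration assuming complete data with no missing responses; the helper names `composite` and `pearson_r` and the five-respondent data are hypothetical, and the significance tests reported in the tables are not reproduced here.

```python
from math import sqrt


def composite(responses):
    """Average each respondent's answers across the items of one subscale.

    `responses[i]` holds respondent i's answers to that subscale's items.
    """
    return [sum(row) / len(row) for row in responses]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a variable with zero variance has no correlation")
    return sxy / sqrt(sxx * syy)


# Hypothetical answers from five respondents (rows) to two subscales.
involvement = composite([[4, 5, 4, 5], [2, 3, 2, 2], [3, 3, 4, 3], [5, 5, 4, 5], [1, 2, 2, 1]])
novelty = composite([[4, 4, 5], [3, 2, 2], [3, 4, 3], [4, 5, 5], [2, 1, 2]])
print(round(pearson_r(involvement, novelty), 2))
```

With real data, each list would hold one composite per participant, and the same `pearson_r` call would be repeated for each of the six subscale pairs.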

Table 3.

Bivariate Correlations, Study 1

| | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1. Involvement | --- | | | |
| 2. Perceived Novelty | .48** | --- | | |
| 3. Personal Reflection | .41** | .18** | --- | |
| 4. Critical Thinking | .47** | .24** | .56** | --- |

\* p < .05 (two-tailed); \*\* p < .01 (two-tailed)

Study II

Table 2 summarizes the results of testing the conceptual measurement model in Figure 1 with a second, independent sample using confirmatory factor analysis. Specifically, a second-order CFA was employed to confirm, in this second sample, the four-factor structure extracted from the principal component analysis of Sample I. Although the χ2 test was statistically significant, the fit statistics for the second-order factor model indicated acceptable fit for the four-factor structure [χ2(41) = 86.22, p < .001; χ2/df = 2.10; CFI = .90; RMSEA = .08] as subscales of the same latent variable (motivation to process the information provided in the media literacy workshop). The estimated factor loading of each item is reported in Table 2.
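The χ2/df ratio and the p value above follow directly from χ2 = 86.22 with 41 degrees of freedom. The sketch below recomputes them in Python using only the standard library, via the power series of the regularized incomplete gamma function; this is our choice for self-containment (a library routine such as `scipy.stats.chi2.sf` would normally be used), and the function name `chi2_sf` is hypothetical.

```python
from math import exp, lgamma, log


def chi2_sf(x, df, max_terms=1000):
    """Upper-tail probability P(X > x) for X ~ chi-square(df).

    Computes the regularized lower incomplete gamma P(a, z), with a = df/2
    and z = x/2, from its power series and returns 1 - P(a, z). Adequate
    for moderate df and x; far-tail precision is limited by the subtraction.
    """
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a  # n = 0 term of the series
    total = term
    for n in range(1, max_terms):
        term *= z / (a + n)
        total += term
        if term < total * 1e-16:
            break
    lower = exp(a * log(z) - z - lgamma(a)) * total
    return max(0.0, 1.0 - lower)


chi2, df = 86.22, 41  # values reported for Study II
print(round(chi2 / df, 2))  # 2.1
print(chi2_sf(chi2, df) < 0.001)  # True
```

The tail probability is well below .001, which is why model fit is judged by the χ2/df ratio and the other indices rather than by the χ2 test alone.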

A comparison of the scale reliability estimates (alphas) reported in Table 2 with the comparable estimates in Table 1 suggests that the slightly refined set of survey items used in Sample II improved the reliability of each subscale, with the exception of the critical thinking subscale (alpha = .75 in Sample I but .69 in Sample II). However, inspecting the means and standard deviations of these items across the two samples, we note greater homogeneity in responses to this subscale among Sample I participants than among Sample II participants. Because the wording of these items did not change between the two administrations of the instrument, the diverging reliability estimates are likely due to between-sample variation. Overall, then, the survey items provide reliable measures of the subscales of interest.

Finally, as with the Sample I data, we computed a bivariate correlation matrix of the composite variables for the four subscales to assess their convergent and discriminant validity (see Table 4). As expected theoretically, and consistent with the pattern observed for Sample I (see Table 3), correlations among the four subscales were positive and statistically significant, and correlations between pairs of subscales measuring the same latent construct were generally stronger than correlations between subscales measuring different latent constructs (i.e., engagement and reflectiveness).

Table 4.

Bivariate Correlations, Study 2

| | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1. Involvement | --- | | | |
| 2. Perceived Novelty | .43** | --- | | |
| 3. Personal Reflection | .37** | .16* | --- | |
| 4. Critical Thinking | .45** | .24** | .85** | --- |

\* p < .05 (two-tailed); \*\* p < .01 (two-tailed)

Thus, involvement and perceived novelty were moderately correlated (r = .43), and critical thinking and personal reflection were strongly correlated (r = .85), suggesting convergent validity of our measurement instrument. In contrast, correlations between subscales belonging to different constructs were generally smaller, with the exception of involvement and critical thinking (r = .45). As before, we interpret the significant correlation between involvement and personal reflection (r = .37) as suggesting that reflectiveness depends to a considerable degree on a person’s engagement with the media literacy workshop.
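The convergent and discriminant comparison described in this section can be checked mechanically against the coefficients in Tables 3 and 4. The Python sketch below flags any cross-construct correlation that exceeds the smallest within-construct correlation; this decision rule and the short subscale keys are our illustrative simplification, not a formal significance test of differences between correlations.

```python
# Lower-triangle correlations transcribed from Tables 3 and 4.
# Keys are (row subscale, column subscale); the short names are ours.
STUDY_1 = {
    ("novelty", "involvement"): 0.48,
    ("reflection", "involvement"): 0.41,
    ("reflection", "novelty"): 0.18,
    ("critical", "involvement"): 0.47,
    ("critical", "novelty"): 0.24,
    ("critical", "reflection"): 0.56,
}
STUDY_2 = {
    ("novelty", "involvement"): 0.43,
    ("reflection", "involvement"): 0.37,
    ("reflection", "novelty"): 0.16,
    ("critical", "involvement"): 0.45,
    ("critical", "novelty"): 0.24,
    ("critical", "reflection"): 0.85,
}
# Hypothesized construct for each subscale (the Figure 1 measurement model).
CONSTRUCT = {
    "involvement": "engagement",
    "novelty": "engagement",
    "reflection": "reflectiveness",
    "critical": "reflectiveness",
}


def split_by_construct(corrs):
    """Separate within-construct pairs from cross-construct pairs."""
    within, between = {}, {}
    for (a, b), r in corrs.items():
        target = within if CONSTRUCT[a] == CONSTRUCT[b] else between
        target[(a, b)] = r
    return within, between


def cross_pairs_above_within(corrs):
    """Cross-construct pairs whose r exceeds the smallest within-construct r."""
    within, between = split_by_construct(corrs)
    floor = min(within.values())
    return sorted(pair for pair, r in between.items() if r > floor)


print(cross_pairs_above_within(STUDY_1))  # []
print(cross_pairs_above_within(STUDY_2))  # [('critical', 'involvement')]
```

Applied to the published coefficients, the rule holds for Study 1, whereas in Study 2 the involvement and critical thinking correlation (r = .45) slightly exceeds the involvement and perceived novelty correlation (r = .43).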

Discussion

We propose that a more complete understanding of the cognitive process through which participation in media literacy interventions produces the intended outcomes in participants is necessary to harness the full potential of these programs. Drawing on the self-regulation rationale of social cognitive theory (Bandura, 1986) and dual-process models of information processing (Petty & Cacioppo, 1986), we argue that the degree to which audiences cognitively engage with, and reflect on, the knowledge they obtain through these programs may be important for explaining and predicting the effects these programs have (or fail to have) on adolescents’ decisions and behaviors. However, because theory-grounded and reliable measures of these constructs are not presently available in the context of media literacy programs, the primary goal of this study was to develop and test valid and reliable survey measures of engagement and reflectiveness. The measurement model we developed (see Figure 1) recognizes the multidimensionality of these constructs: engagement encompasses involvement with the activity and its perceived novelty, and reflectiveness encompasses critical thinking about the topic of the activity as well as personal reflection on the insights generated from participation.

Overall, the results of two independent tests of this measurement model in the context of evaluating a media literacy intervention lend considerable support to the model. As expected, the factor analytic procedures supported both the adequacy of the proposed subscales as representations of the constructs of interest (as evidenced by the convergent and discriminant validity of the instrument) and the hypothesis that reflectiveness depends to some degree on engagement (by virtue of engagement preceding personal reflection). Although the instrument was tested with a specific group of adolescents who may not be representative (demographically, developmentally, or in terms of motivation) of the broader and more diverse population of adolescents who may be susceptible to influence from media messages and portrayals, we believe that the instrument we developed can be used reliably to measure the same constructs within this broader group and in the specific context of media literacy interventions. However, the instrument can clearly benefit from further refinement and testing, as well as from the inclusion of factors such as self-efficacy, which social cognitive theory posits as important moderators of the degree to which acquired knowledge and skills translate into decisions and behavior via the process of self-regulation.

We believe that this work has both theoretical and practical implications. Theoretically, it signals the importance of expanding the conceptual framework used to assess the effects of media literacy interventions on their audience. Such a framework would track a process of change in personal predispositions, decisions, and behaviors in response to influence from media messages that involves self-regulation as envisioned by social cognitive theory, and that augments the normative or social identification-based process of influence that the MIP model (Austin, 2007) proposes and that existing empirical tests support (e.g., Austin et al., 2005; Banerjee & Greene, 2006, 2007; Pinkleton et al., 2007). We believe that incorporating both processes into the evaluation of media literacy interventions can produce a more complete understanding of how, and under which circumstances, these interventions can be a viable tool for influencing decisions and behaviors among adolescents and among target audiences more generally. Practically, this work provides researchers with a valid and reliable instrument for measuring audiences’ degree of engagement with media literacy interventions (a prerequisite for expecting intervention effects) and their personal reflection on the experience (which is necessary for self-regulation). The instrument can be easily adapted to the particular context of an intervention and used, in addition to existing measures that track the influence of the program, for formative, process, and outcome evaluation. We hope this work will encourage media literacy scholars to engage further with the question of causal paths and to develop additional measures that will advance rigorous, theoretically driven evaluations of media literacy interventions.

Footnotes

The first step requires calculating the error variances to account for measurement error in the observed variables. We used three goodness-of-fit indices to evaluate model fit. The χ2/df ratio scales the χ2 statistic by its degrees of freedom; it is used because the χ2 test itself is highly sensitive to sample size. The CFI compares the noncentrality parameter estimate of the hypothesized model with the noncentrality parameter estimate of a baseline model. The RMSEA accounts for errors of approximation in the population. We determined that the model fit the data if χ2/df was less than 3, CFI was greater than .90, and RMSEA was less than .08.
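For reference, the three indices named in this note are often written as follows. This is one common formulation, and software packages differ in details (for example, some use N rather than N − 1 in the RMSEA denominator). The symbols are ours: M denotes the hypothesized model, B the baseline (independence) model, and N the sample size.

$$
\frac{\chi^2_M}{df_M}, \qquad
\mathrm{CFI} = 1 - \frac{\max\left(\chi^2_M - df_M,\ 0\right)}{\max\left(\chi^2_B - df_B,\ \chi^2_M - df_M,\ 0\right)}, \qquad
\mathrm{RMSEA} = \sqrt{\frac{\max\left(\chi^2_M - df_M,\ 0\right)}{df_M\,(N - 1)}}
$$

The quantity χ2_M − df_M is the noncentrality estimate referred to above; for the Study II model it equals 86.22 − 41 = 45.22.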

Contributor Information

Kathryn Greene, Rutgers University.

Itzhak Yanovitzky, Rutgers University.

Amanda Carpenter, Rutgers University - New Brunswick/Piscataway.

Smita C. Banerjee, Memorial Sloan Kettering Cancer Center.

Kate Magsamen-Conrad, Bowling Green State University.

Michael L. Hecht, Pennsylvania State University.

Elvira Elek, RTI International.

References

- Aufderheide P, Firestone C. Media literacy: A report of the national leadership conference on media literacy. Queenstown, MD: Aspen Institute; 1993.

- Austin EW. Message interpretation process model. In: Arnett JJ, editor. Encyclopedia of children, adolescents, and the media. Thousand Oaks, CA: Sage; 2007. pp. 536–537.

- Austin EW, Knaus C. Predicting the potential for risky behavior among those “too young” to drink as the result of appealing advertising. Journal of Health Communication. 2000;5:13–27. doi: 10.1080/108107300126722.

- Austin EW, Meili HK. Effects of interpretations of televised alcohol portrayals on children’s alcohol beliefs. Journal of Broadcasting & Electronic Media. 1994;38:417–435.

- Austin EW, Pinkleton B, Fujioka Y. The role of interpretation processes and parental discussion in the media’s effects on adolescents’ use of alcohol. Pediatrics. 2000;105:343–349. doi: 10.1542/peds.105.2.343.

- Austin EW, Pinkleton BE, Funabiki RP. The desirability paradox in the effects of media literacy training. Communication Research. 2007;34:483–506.

- Austin EW, Pinkleton BE, Hust SJT, Cohen M. Evaluation of an American Legacy Foundation/Washington State Department of Health media literacy pilot study. Health Communication. 2005;18:75–95. doi: 10.1207/s15327027hc1801_4.

- Balch GI. Exploring perceptions of smoking cessation among high school smokers: Input and feedback from focus groups. Preventive Medicine. 1998;27:A55–A63. doi: 10.1006/pmed.1998.0382.

- Bandura A. Social foundations of thought and action: A social cognitive theory. Englewood Cliffs, NJ: Prentice-Hall, Inc; 1986.

- Banerjee SC, Greene K. Analysis versus production: Adolescent cognitive and attitudinal responses to anti-smoking interventions. Journal of Communication. 2006;56:773–794.

- Banerjee SC, Greene K. Anti-smoking initiatives: Examining effects of inoculation based media literacy interventions on smoking-related attitude, norm, and behavioral intention. Health Communication. 2007;22:37–48. doi: 10.1080/10410230701310281.

- Banerjee SC, Kubey R. Boom or boomerang: A critical review of evidence documenting media literacy efficacy. In: Scharrer E, editor. Media effects/media psychology. Hoboken, NJ: Wiley-Blackwell Publishers; 2013. pp. 2–24.

- Bergsma LJ, Carney ME. Effectiveness of health-promoting media literacy education: A systematic review. Health Education Research. 2008;23:522–542. doi: 10.1093/her/cym084.

- Biocca FA. Opposing conceptions of the audience: The active and passive hemispheres of mass communication theory. Communication Yearbook. 1988;11:51–80.

- Byrne S, Linz D, Potter WJ. A test of competing cognitive explanations for the boomerang effect in response to the deliberate disruption of media-induced aggression. Media Psychology. 2009;12:227–248. doi: 10.1080/15213260903052265.

- Cacioppo JT, Petty RE, Feinstein JA, Jarvis WBG. Dispositional differences in cognitive motivation: The life and times of individuals varying in need for cognition. Psychological Bulletin. 1996;119:197–253.

- The Center for Media Literacy. Five key questions/Five key concepts. 2007. Retrieved from http://www.medialit.org/sites/default/files/14A_CCKQposter.pdf.

- Chen Y. The role of media literacy in changing adolescents’ responses to alcohol advertising. Paper presented at the International Communication Association (ICA) Conference; Chicago; 2009 May.

- Fishbein M, Ajzen I. Belief, attitude, intention, and behavior: An introduction to theory and research. Boston, MA: Addison-Wesley; 1975.

- Greene K. The Theory of Active Involvement: Processes underlying interventions that engage adolescents in message planning and/or production. Health Communication. 2013;28:644–656. doi: 10.1080/10410236.2012.762824.

- Greene K, Elek E, Magsamen-Conrad K, Banerjee SC, Hecht M, Yanovitzky I. Developing a brief media literacy intervention targeting adolescent alcohol use: The impact of formative research. Paper presented at the DC Health Communication Conference; Fairfax, VA; 2011 Apr.

- Greene K, Hecht ML. Introduction for Symposium on engaging youth in prevention message creation: The theory and practice of active involvement interventions. Health Communication. 2013;28:641–643. doi: 10.1080/10410236.2012.762825.

- Hobbs R. The seven great debates in the media literacy movement. Journal of Communication. 1998;48:16–32.

- Hobbs R. Improving reading comprehension by using media literacy activities. Voices from the Middle. 2001;8:44–50.

- Hobbs R. Non-optimal uses of video in the classroom. Learning, Media and Technology. 2006;31:35–50. doi: 10.1080/17439880500515457.

- Hornik R, Yanovitzky I. Using theory to design evaluations of communication campaigns: The case of the national youth anti-drug media campaign. Communication Theory. 2003;13:204–224. doi: 10.1111/j.1468-2885.2003.tb00289.x.

- Jeong SH, Cho H, Hwang Y. Media literacy interventions: A meta-analytic review. Journal of Communication. 2012;62:454–472. doi: 10.1111/j.1460-2466.2012.01643.x.

- Kubey R. What is media education and why is it important? Television Quarterly. 2004;34:21–27.

- Kupersmidt JB, Scull TM, Austin EW. Media literacy education for elementary school substance use prevention. Pediatrics. 2010;126:525–531. doi: 10.1542/peds.2010-0068.

- Martens H. Evaluating media literacy education: Concepts, theories, and future directions. The Journal of Media Literacy Education. 2010;2:1–22.

- Masterman L. A rationale for media education. In: Kubey R, editor. Media literacy in the information age: Current perspectives. 2. New Brunswick, NJ: Transaction; 2001. pp. 15–68.

- Nathanson AI. Factual and evaluative approaches to modifying children’s responses to violent television. Journal of Communication. 2004;54:321–336. doi: 10.1111/j.1460-2466.2004.tb02631.x.

- Petty RE, Cacioppo JT. Communication and persuasion: Central and peripheral routes to attitude change. New York, NY: Springer-Verlag; 1986.

- Petty RE, Cacioppo JT, Goldman R. Personal involvement as a determinant of argument-based persuasion. Journal of Personality and Social Psychology. 1981;41:845–855.

- Pinkleton BE, Austin EW, Cohen M, Miller A, Fitzgerald E. A statewide evaluation of the effectiveness of media literacy training to prevent tobacco use among adolescents. Health Communication. 2007;21:23–34. doi: 10.1080/10410230701283306.

- Potter WJ. Theory of media literacy: A cognitive approach. Thousand Oaks, CA: Sage; 2004.

- Potter WJ. Media literacy. 5th ed. Thousand Oaks, CA: Sage; 2010.

- Primack BA, Fine D, Yang CK, Wickett D, Zickmund S. Adolescents’ impressions of antismoking media literacy education: Qualitative results from a randomized controlled trial. Health Education Research. 2009;24:608–621. doi: 10.1093/her/cyn062.

- Ramasubramanian S, Oliver MB. Activating and suppressing hostile and benevolent racism: Evidence for comparative media stereotyping. Media Psychology. 2007;9:623–646.

- Sherif M, Hovland CI. Social judgment: Assimilation and contrast effects in communication and attitude change. Westport, CT: Greenwood Press; 1961.