Abstract

Background

People’s ability to appraise claims about treatment effects is crucial for informed decision-making. Our objective was to systematically map this area of research in order to (a) provide an overview of interventions targeting key concepts that people need to understand to assess treatment claims and (b) identify assessment tools used to evaluate people’s understanding of these concepts. The findings of this review provide a starting point for decisions about which key concepts to address when developing new interventions, and which assessment tools should be considered.

Methods

We conducted a systematic mapping review of interventions and assessment tools addressing key concepts important for people to be able to assess treatment claims. A research librarian carried out a systematic literature search of relevant databases. At least two researchers were involved in judging study eligibility and in data collection. We included all quantitative study designs that targeted one or more of the key concepts and that involved patients, healthy members of the public, or health professionals. The studies were divided into four categories: risk communication and decision aids, evidence-based medicine and critical appraisal, understanding of controlled trials, and science education. Findings were summarised descriptively.

Results

We included 415 studies. The interventions and assessment tools we identified addressed only a handful of the key concepts. The most common key concepts in interventions were “Treatments usually have beneficial and harmful effects,” “Treatment comparisons should be fair,” “Compare like with like,” and “Single studies can be misleading.” A variety of assessment tools were identified, but only four included 10 or more key concepts.

Conclusions

There is great potential for developing learning and assessment tools targeting key concepts that people need to understand to assess claims about treatment effects. There is currently no instrument covering assessment of all these key concepts.

Electronic supplementary material

The online version of this article (doi:10.1186/s13643-016-0389-z) contains supplementary material, which is available to authorized users.

Keywords: Health literacy, Evidence-based medicine, Controlled trials, Patient education, Decision making

Background

A large number of studies conducted across different populations and contexts have concluded that people’s ability to assess and apply health information is generally poor [1–10]. This is particularly the case for key concepts related to understanding the effects of treatments, such as understanding the need for fair comparisons of treatments, judging whether a comparison of treatments is fair, and understanding the role of chance and the results and relevance of fair comparisons of treatments [1–10]. Furthermore, many people rely on anecdotes rather than on information based on research, and may overrate the trustworthiness of the information they find [3, 11–14]. As a result, people may be poorly informed and may trust information that is incomplete or even harmful. For example, studies have found that people may not understand the reasons for policy switches between brand-name and generic drugs, or the efficacy of preventive treatments such as screening or vaccination [10, 14–16]. In addition, a poor understanding of research methods such as randomisation may be a barrier to people’s participation in controlled trials addressing treatment uncertainties [7].

Knowing what to trust and being able to assess whether a claim is based on a review of fair comparisons of treatments is the first step in making an informed decision [17, 18]. Studies have found that patients may play an important part in promoting evidence-based practice, which matters for both patient safety and quality of care [19–21]. Studies also suggest that patients who are better informed are more involved, experience less decisional conflict, and choose less invasive treatments [16]. However, healthcare decisions are not made only at the individual level; many patients today have great influence on system-level decisions, for example, through demand for new services and treatments, as participants in setting research priorities, as members of hospital boards, and as communicators of health information to fellow patients [22]. Considering that many patients do not rely on the best available evidence when making these decisions, the consequences of leaving people uninformed may be costly.

Research exploring people’s ability to assess treatment effects is complicated by the fact that the relevant studies come from partly overlapping, and sometimes parallel, research areas and have often focused on a single concept, such as understanding of risk or randomization [7, 23–27]. Moreover, until recently, no consensus on or conceptualisation of the key concepts critical to understanding the effects of treatments had been available [28]. Given that this research is characterized by heterogeneity, and considering the need for interventions and appropriate assessment tools in this area, we set out to conduct a systematic mapping review of interventions and assessment tools used in such studies [29]. The framework for this review, which guided the identification of interventions and assessment tools, was a previously published list, or syllabus, that we created of the key concepts we believe are important for people to understand in order to assess treatment claims [30]. This work was done as part of the Informed Healthcare Choices (IHC) project, which aims to support the use of research evidence by patients and the public, policymakers, journalists and health professionals. The multidisciplinary group responsible for the project includes researchers in six countries: Norway, Uganda, Kenya, Rwanda, the UK and Australia. The project has developed and evaluated educational resources to improve the ability of people in low-income countries to assess claims about treatment effects (Semakula D, Nsangi A, Oxman M, Austvoll-Dahlgren A, Rosenbaum S, Kaseje M, et al.: Can an educational podcast improve the ability of parents of primary school children to assess claims about the benefits and harms of treatments?, submitted), (Nsangi A, Semakula D, Oxman M, Austvoll-Dahlgren A, Rosenbaum S, Kaseje M, et al.: Evaluation of resources to teach children in low income countries to assess claims about treatment effects, submitted).
A short list of the concepts is presented in an additional Word file (see Additional file 1). We defined treatment as “any action intended to improve health.”

Objective

Our objective was to systematically map this area of research by applying the list of key concepts, in order to (a) provide an overview of interventions targeting key concepts that people need to understand to assess treatment claims and (b) identify assessment tools used to evaluate people’s understanding of these concepts.

Methods

There is an increasing variety of types of reviews for different purposes [29, 31]. Systematic reviews should not only inform decisions about healthcare but also serve as the starting point for new research, such as developing interventions or assessment tools [18, 29, 32]. To address the objectives above, we performed a mapping review. According to the typology of reviews by Grant and Booth [29], a mapping review differs from other scoping reviews in that its outcome may call for further work, such as identifying gaps in the research literature or conducting further reviews. There is no agreed standard method for conducting a mapping review, as this depends on the objectives. Generally, however, mapping reviews provide an overview of the literature and identify gaps. Such reviews may also describe and organise the literature according to theoretical perspectives, population, or other characteristics [29].

Review design, search strategy, and inclusion criteria

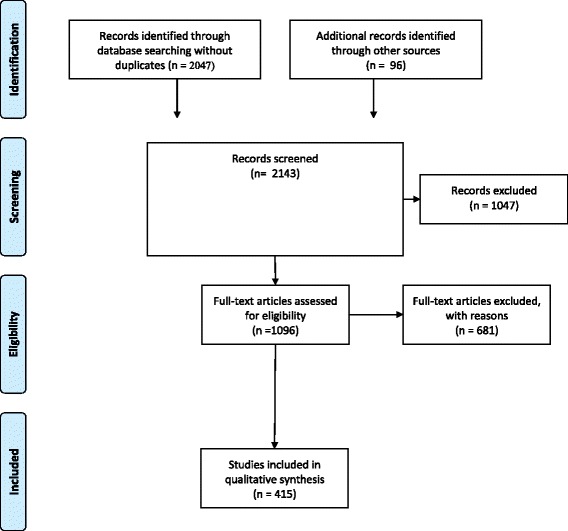

The protocol for this review was registered in PROSPERO [33]. The PRISMA flow diagram can be found in Fig. 1.

Fig. 1.

PRISMA flow diagram

Search strategy and inclusion criteria

We conducted a focused search for interventions and assessment tools targeting one or more of the key concepts. A research librarian developed the search strategy based on the overview of key concepts; the full search strategy is provided in an additional Word file (see Additional file 2). We searched CDSR, DARE, HTA, CENTRAL, and Method studies (Cochrane Library), MEDLINE 1946 to 22.06.13 (Ovid), and ERIC. We applied the observational filter from SIGN and the Cochrane filter based on the HSSS (as applied to MEDLINE). For ERIC, we adapted the Cochrane HSSS and SIGN filters from MEDLINE.

To identify unpublished studies, we also contacted key researchers working in related areas such as health literacy and training of patients and consumers in evidence-based medicine, including members of the Cochrane Consumer group and the Nordic Health Literacy Network. We also checked the reference lists of all relevant systematic reviews. Hand searches were finalised in September 2015. The list of key concepts was revised after this review had been completed (the revisions added concept 1.9 and split concept 5.1 into concepts 1.1 and 5.1; see Additional file 2 for more detail). As a result, the new key concept was not included in our search and data collection, and concepts 1.1 and 5.1 were treated as a single key concept. Because these revisions were made after the review was completed, they did not influence our search strategy or conclusions.

Types of designs

We included all quantitative study designs, including controlled trials and observational studies. We included both studies evaluating an intervention and descriptive studies without any intervention but which assessed understanding of one or more of the key concepts in a population. We also included studies describing the development of assessment tools.

Types of participants

Studies of patients and healthy members of the public were included. We also included studies aimed at health professionals, since the interventions and assessment tools directed at them may also be applicable to a lay public.

Types of interventions

We included all interventions that addressed one or more key concepts.

Types of assessment tools

We included all assessment tools that evaluated people’s understanding of one or more of the key concepts. We conceptualised “understanding” as any measure that assessed people’s knowledge of the key concepts or their ability to apply them.

Exclusion criteria

We excluded theoretical or conceptual papers, editorials, letters, and studies with qualitative designs. We also excluded interventions that did not directly address any of the key concepts, for example, framing interventions or other interventions intended to persuade rather than to educate people about making informed choices. For pragmatic reasons, we did not include publications in languages other than English or the Scandinavian languages.

Data collection and presentation of findings

All references were reviewed independently by two researchers (AA and AN). Studies classified as clearly relevant or unclear were retrieved in full text. At least two researchers (AA, AN, DS) screened all publications retrieved in full text and considered them for inclusion. Any disagreement between the two researchers was discussed with a third researcher.

Data collection was performed by one researcher (AA, AN, or DS) using a data collection form, extracting information on the purpose of the study (intervention study or descriptive study), study design, population (patients, professionals, or others), intervention, outcomes, and assessment tools. All data collection forms were double-checked by another researcher.

We categorised the interventions using pragmatic criteria and the following predefined categories, informed by our knowledge of the research area:

Risk communication and decision aids (risk and DA): studies evaluating the effects of interventions facilitating informed choice (mostly targeting patients), including how best to present estimates of risk and the use of decision aids. Although the content and purpose of such interventions vary, they usually explore different ways of presenting the effects of treatments, help patients clarify their values and preferences, and provide a structured path through the decision-making process.

Understanding of trials: studies evaluating interventions to improve people’s understanding of trial methodology and informed consent. Many of these studies aim to improve recruitment to randomized trials and to identify barriers to consent. This focus partly reflects the finding that poor understanding of trial methods, and of the benefits and harms associated with participation, is one of the most important reasons people decline to take part in trials [7].

Evidence-based medicine and critical appraisal (EBM and CA): studies evaluating interventions that typically aim to enable people (usually health professionals) to formulate clinical questions, search for relevant evidence, appraise, and apply this evidence in practice [34].

Science education: studies evaluating interventions that aim to facilitate reasoning or critical thinking, usually in school settings. Such education may take place as part of the existing curricula or be initiated by external research initiatives.

These research fields share a common goal: to support people in making informed decisions by developing and evaluating interventions that enable them to appraise and apply research evidence [7, 27, 34–36]. The categories were not considered fixed, but were subject to revision if the included studies did not fit well within them.

The assignment of interventions to categories was done as part of the data collection process. In most cases, the interventions were categorised according to the purpose of the intervention as stated by the study authors. These categories were not mutually exclusive, and in some cases, an intervention was eligible for more than one category. In cases where an intervention’s assignment was unclear, this was resolved through discussion and consensus among the reviewers.

We also identified outcomes measured in the intervention studies and grouped them according to the following categories: behaviour, attitudes and beliefs, knowledge and skills, costs and other use of resources, and health outcomes.

All interventions and all assessment tools (in intervention studies or descriptive studies) intended to measure people’s understanding of one or more key concepts were tagged by relevant key concepts as part of the data collection process.

The lead researcher and a research assistant (AA, KO) entered the data and summarised the findings using Excel.
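The tallies in this review were produced in Excel. Purely as an illustration of the tagging and summarising steps, the same two summaries (how many studies tag each key concept, and the range of concepts addressed per study) can be sketched in Python. The study records and identifiers below are hypothetical placeholders, not data from this review; only the concept numbering follows the key concept list.

```python
from collections import Counter

# Hypothetical records (NOT review data): each study maps to the set of
# key concept codes its intervention was tagged with during data collection.
tagged_studies = {
    "study_A": {"5.1"},
    "study_B": {"2.1", "2.2", "4.1", "5.1"},
    "study_C": {"1.2", "1.3", "2.1", "5.1"},
}


def concept_frequencies(studies):
    """Count the number of studies that tag each key concept."""
    counts = Counter()
    for concepts in studies.values():
        # Sets guarantee each concept is counted at most once per study.
        counts.update(concepts)
    return counts


def concepts_per_study_range(studies):
    """Return the (minimum, maximum) number of concepts tagged per study."""
    sizes = [len(concepts) for concepts in studies.values()]
    return min(sizes), max(sizes)


freq = concept_frequencies(tagged_studies)
for concept, n in freq.most_common():
    print(concept, n)
print(concepts_per_study_range(tagged_studies))  # → (1, 4)
```

Using sets per study means the frequency counts are "number of studies", matching how concept counts are reported in the Results, rather than number of mentions.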

Results

Description of the included studies

The search strategy resulted in 2143 references, of which we judged 1096 to be potentially relevant and assessed these in full text (see the PRISMA flow diagram in Fig. 1). Of the studies assessed in full text, we judged 415 to meet the inclusion criteria. The complete list of included studies is shown in an additional Excel file (see Additional file 3). Forty-eight were descriptive studies and 367 were intervention studies targeting one or more of the concepts. Overall, 123 studies targeted health professionals, 20 studies targeted a mixed group of people including policymakers, and 272 targeted patients or consumers.
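The counts above can be checked for internal consistency. The sketch below uses only numbers stated in this paragraph; it assumes that all full-text assessments came from the 2143 retrieved references, so the derived exclusion figures are implied totals that the PRISMA diagram may break down further (for example, by exclusion reason).

```python
# Counts as reported in the text.
identified = 2143  # references retrieved by the search
full_text = 1096   # judged potentially relevant and assessed in full text
included = 415     # met the inclusion criteria

by_type = {"descriptive": 48, "intervention": 367}
by_population = {
    "health professionals": 123,
    "mixed (incl. policymakers)": 20,
    "patients or consumers": 272,
}

# Implied exclusions at each stage (assumption: a single linear flow).
excluded_at_screening = identified - full_text
excluded_at_full_text = full_text - included

# Both breakdowns of the included studies should sum to the total.
assert sum(by_type.values()) == included
assert sum(by_population.values()) == included

print(excluded_at_screening, excluded_at_full_text)  # → 1047 681
```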

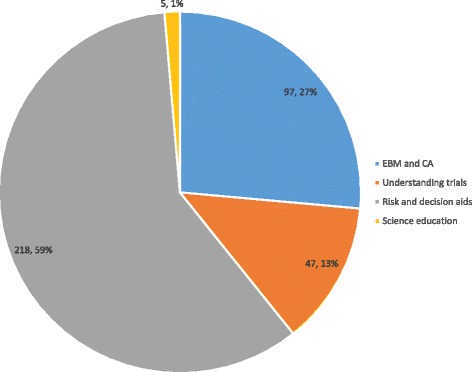

Overview of studies targeting key concepts by intervention categories

Applying the key concept list to the identified research literature, we sorted the studies into four research areas: risk and DA, EBM and CA, understanding of trials, and science education. These categories were predefined, and the studies we identified fit them well; no new categories emerged during data collection (see Fig. 2).

Fig. 2.

Interventions by category addressing one or more key concepts

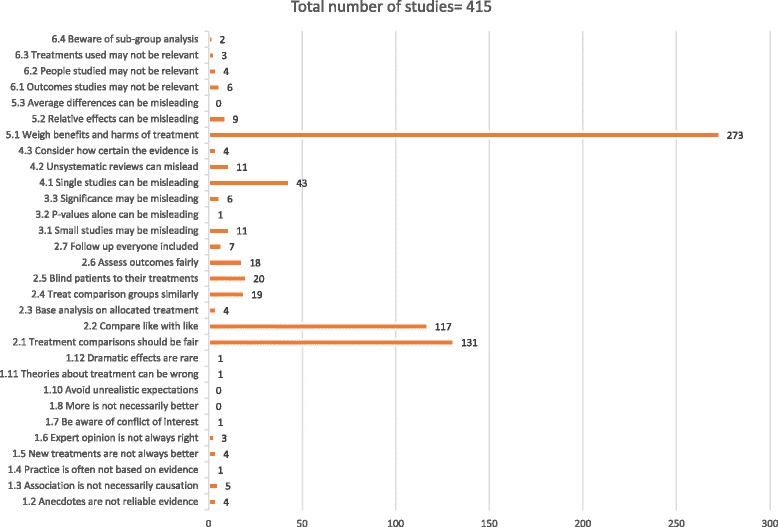

Overview of key concepts in interventions

Overall, the most common key concepts targeted in interventions were concept 5.1 “Treatments usually have beneficial and harmful effects” (273 studies), concept 2.1 “Treatment comparisons should be fair” (131 studies), concept 2.2 “Compare like with like” (117 studies), and concept 4.1 “Single studies can be misleading” (43 studies) (see Fig. 3).

Fig. 3.

Key concepts in interventions

Nearly all key concepts were included in at least one intervention, with the exception of concept 1.8 “More is not necessarily better”, concept 1.10 “Avoid unrealistic expectations”, and concept 5.3 “Average differences can be misleading”.

However, each intervention targeted only a handful of the concepts (ranging from 1 to 14 concepts) (see Additional file 3). Of the four interventions that covered more than 10 key concepts, three were decision support tools intended to help people make informed choices by communicating the results and the certainty of the evidence, and one was an EBM and CA intervention [37–40].

Key concepts in interventions by categories

We included 218 studies of risk and DA interventions. Although this was the largest category, its interventions included only a few of the key concepts and usually focused on a single one: concept 5.1 “Treatments usually have beneficial and harmful effects.”

The intervention category that included the most key concepts was EBM and CA. We included 97 such studies. EBM and CA interventions typically included concepts related to judging whether a comparison of treatments is a fair comparison (concepts 2.1 “Treatment comparisons should be fair,” 2.2 “Compare like with like,” 2.4 “Treat comparison groups similarly,” 2.5 “Blind participants to their treatments,” and 2.6 “Assess outcome measures fairly”), concept 4.1 “Single studies can be misleading,” and concept 5.1 “Weigh benefits and harms of treatment.” The majority of these studies targeted health professionals, but some also targeted patients [41–43].

We included 47 studies exploring people’s understanding of trials. These interventions mainly targeted three concepts associated with specific areas of consent information: concepts 2.1 “Treatment comparisons should be fair,” 2.2 “Compare like with like,” and 5.1 “Weigh benefits and harms of treatment.”

Five studies were categorised as science education studies and were conducted in school settings. All of them included patients or consumers (students), and four were targeted at younger students (grade 7 and above). The purpose of these interventions was to facilitate critical thinking and science literacy. This may be why this category included the most key concepts related to recognising the need for fair comparisons, including concepts 1.2 “Anecdotes are not reliable evidence,” 1.3 “Association is not necessarily causation,” 1.6 “Expert opinion is not always right,” and 1.7 “Be aware of conflicts of interest,” as well as concepts 2.1 “Treatment comparisons should be fair,” 2.2 “Compare like with like,” and 5.1 “Weigh benefits and harms of treatment.”
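As a quick check on the category totals reported in this section, the sketch below sums the four category counts and expresses each as a share of the intervention studies. The counts sum exactly to the 367 intervention studies, which suggests (though the text does not state it) that each intervention study was counted in only one category for these totals, even though the Methods note that categories were not mutually exclusive.

```python
# Intervention studies per category, as reported in this section.
categories = {
    "risk and DA": 218,
    "EBM and CA": 97,
    "understanding of trials": 47,
    "science education": 5,
}
intervention_studies = 367  # from "Description of the included studies"

total = sum(categories.values())
assert total == intervention_studies  # 218 + 97 + 47 + 5 == 367

# Percentage of intervention studies in each category, to one decimal place.
shares = {name: round(100 * n / total, 1) for name, n in categories.items()}
for name, pct in shares.items():
    print(f"{name}: {pct}%")
```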

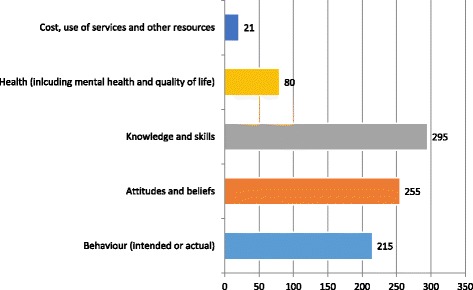

Outcomes evaluated in interventions

The intervention studies measured a range of outcomes and covariates. The most common outcomes measured were knowledge and skills (295 studies) and attitudes and beliefs (255 studies), followed by behavioural outcomes (215 studies), health-related outcomes including mental health and quality of life (80 studies), and costs, use of services and other resources (21 studies) (see Fig. 4).

Fig. 4.

Outcomes and covariates in intervention studies

Overview of key concepts in assessment tools

Overall, the number of key concepts included in assessment tools ranged from 0 to 15. Although some studies included key concepts in their interventions, these concepts were not always included in any of the assessment tools used in the same study. Only four assessment tools included 10 or more key concepts [38, 39, 44, 45]. Two were used for assessing EBM and CA skills and two for assessing understanding of the results and the certainty of the evidence from systematic reviews. An overview of the assessment tools addressing 10 or more key concepts is presented in Table 1. None of these tools targeted patients or consumers.

Table 1.

Assessment tools addressing 10 key concepts or more

| Study id (author and year) | Participants | Instrument and description | Concepts included in measurement | Number of concepts |
|---|---|---|---|---|
| Ramos 2003, Tilson 2010 (modified version relevant to physical therapists), Dinkevich 2006 (modified version, excluding diagnosis and prevention), Hatmi 2010, Nicholson 2007, Mascola 2008 (adaptation including only 7 questions), McCluskey 2005 (did not include advanced statistical questions), Shuval 2010 (adaptation), Ilic 2012 (evaluated only the first two steps: question formulation and search) [44] (see Additional file 3) | Health professionals | Competency in EBM: the Fresno test, developed as part of the study, uses clinical scenarios and open-ended questions related to the five EBM steps. The test requires the candidate to formulate a focused question, identify the most appropriate research design for answering the question, show knowledge of electronic database searching, identify issues important for determining the relevance and validity of a given research article, and discuss the magnitude and importance of research findings. These questions are scored using a standardised grading system. The test also includes a series of calculation and fill-in-the-blank questions. | 2.1, 2.2, 2.4-2.7, 3.1-3.3, 4.1, 4.2, 5.1, 6.1-6.3 | 15 |
| Godwin 2003 [45] | Health professionals | Knowledge about EBM: questionnaire with six open-ended questions about criteria for assessing the quality of randomised trials, systematic reviews and diagnostic tests, each scored 0 to 2 points, and six questions evaluating understanding of results, each scored 0 or 1 point. The instrument was probably designed as part of the study. | 2.1, 2.2, 2.4-2.7, 3.1, 4.1, 4.2, 5.1, 6.1-6.3 | 13 |
| Rosenbaum 2010 [39] | Mixed | Understanding the findings of trials and systematic reviews: 2 questions about self-assessed understanding of randomised trials, rated on a scale, and 7 questions about self-assessed understanding of systematic reviews, including understanding the results, identifying important outcomes, and the helpfulness and accessibility of the intervention. Correct understanding: four questions about the review’s content, including risk, confidence in the evidence and identification of the most important outcomes. The evaluation tools were developed for the study and not further described; they probably used Likert scales. | 2.1-2.7, 3.1, 4.1-4.3 | 11 |
| Vandvik 2012 [38] | Mixed | Understanding of summary of findings (results of systematic reviews): instrument created for the study, including 6 multiple-choice questions about the certainty of the evidence and interpretation of results, scored as correct/incorrect. | 2.1-2.7, 3.1, 4.1-4.3 | 11 |
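The "Number of concepts" column in Table 1 follows from expanding the range notation in the "Concepts included in measurement" column (for example, 2.4-2.7 stands for 2.4, 2.5, 2.6 and 2.7). The sketch below is a hypothetical helper, not part of the review's method, that performs this expansion and checks each reported count. It assumes ranges never cross concept groups (true for every entry in Table 1) and reads the "2,2" typo in the source table as 2.2.

```python
def expand_concepts(spec: str) -> list[str]:
    """Expand a list such as '2.1, 2.4-2.7, 4.1' into individual concept codes.

    Assumes a range stays within one concept group (e.g. 2.4-2.7, not 2.6-3.1).
    """
    codes = []
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            start, end = (p.strip() for p in part.split("-"))
            group, first = start.split(".")
            end_group, last = end.split(".")
            if group != end_group:
                raise ValueError(f"range crosses concept groups: {part}")
            codes.extend(f"{group}.{i}" for i in range(int(first), int(last) + 1))
        else:
            codes.append(part)
    return codes


# Concept lists and reported counts transcribed from Table 1.
table_1 = {
    "Fresno test": ("2.1, 2.2, 2.4-2.7, 3.1-3.3, 4.1, 4.2, 5.1, 6.1-6.3", 15),
    "Godwin 2003": ("2.1, 2.2, 2.4-2.7, 3.1, 4.1, 4.2, 5.1, 6.1-6.3", 13),
    "Rosenbaum 2010": ("2.1-2.7, 3.1, 4.1-4.3", 11),
    "Vandvik 2012": ("2.1-2.7, 3.1, 4.1-4.3", 11),
}

for tool, (spec, reported) in table_1.items():
    codes = expand_concepts(spec)
    assert len(codes) == len(set(codes)), f"{tool}: duplicate concept code"
    assert len(codes) == reported, f"{tool}: {len(codes)} != {reported}"
    print(tool, len(codes))
```

All four reported counts agree with the expanded concept lists.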

Assessment tools used in studies targeting patients or consumers included seven or fewer key concepts. Most of these were used in risk and DA studies and generally touched on only one concept, 5.1 “Weigh benefits and harms of treatment”; the Decisional Conflict Scale is an example [46].

With few exceptions, people’s understanding of the key concepts was evaluated using different measurement instruments and procedures across studies. Many of these were developed for the specific study, and we identified approximately 210 discrete instruments and procedures. The most frequently used assessment tools were the Fresno tool, which measures competency in EBM (used in nine studies), and the Decisional Conflict Scale, which measures the amount of uncertainty a person has regarding a course of action and the factors contributing to that uncertainty (used in 71 studies) [46].

Discussion

Methodological considerations

A limitation of mapping reviews is that they tend to be time constrained [29]. Furthermore, mapping reviews do not usually include any quality assessment of the included studies [29]. On the other hand, mapping reviews serve as an excellent starting point for initiating new research and reviews. The major contribution of this review is that it provides an overview of the body of research addressing the key concepts across different fields of research. We used pragmatic but explicit criteria, guided by a list of the key concepts we believe are important for people to understand in order to assess treatment claims. Our overview shows which key concepts have been targeted by interventions, how understanding and skills have been evaluated, and which concepts have received little attention in research. As noted in the introduction, research on people’s ability to assess treatment effects is challenging, both because no inventory of the key concepts underpinning such understanding and skills was available until recently, and because the research is spread across parallel, only partly overlapping areas. The findings of this review provide a starting point for decisions about which concepts to address when developing new interventions, and which additional assessment tools should be considered.

This review did not attempt to compare the effects of such interventions; that should be done using other review methods. A related, ongoing review addresses the effects of educational interventions to improve people’s understanding of the key concepts [47].

Applying the key concept list to the identified research literature, we sorted the interventions into four categories: risk and DA, EBM and CA, understanding of controlled trials, and science education. Overall, interventions focused on a small number of key concepts and typically targeted the same concepts within each research area. The most common key concepts in interventions were concept 5.1 “Treatments usually have beneficial and harmful effects,” concept 2.1 “Treatment comparisons should be fair,” concept 2.2 “Compare like with like,” and concept 4.1 “Single studies can be misleading.” We identified a wide variety of assessment tools, approximately 210 discrete tools and procedures. Four assessment tools included 10 or more key concepts, but none of these instruments targeted patients or consumers. The most frequently used assessment tools were the Fresno tool and the Decisional Conflict Scale [44, 46].

A challenge in conducting this review was that the descriptions and reporting of interventions and assessment tools were often limited. Where key concepts were not explicitly stated as part of the intervention or assessment tools, we did not make assumptions about whether they could have been included. A typical example is decision aids, in which risk is usually presented and concept 5.2 “Relative effects can be misleading” is likely to be relevant. In such cases, we did not assume that this concept was addressed unless educating people about it was an explicitly reported component of the intervention. Another example is studies evaluating interventions that teach EBM and CA skills: which concepts were included in these interventions and assessment tools was often unclear and rarely reported. As a result, we may have missed relevant interventions or assessment tools that addressed some of the key concepts, or underreported the number of concepts included. Furthermore, the understanding of certain key concepts has changed over the years, for example, the reporting of p values and confidence intervals (concepts 3.2 and 3.3). Where the interpretation of these concepts was not explicit, we did not include them.

Another limitation of our review is that our search was deliberately focused and may not have identified all studies or assessment tools targeting one or more of our key concepts. However, given the large number of studies we included, we believe we have identified the most relevant interventions and assessment tools targeting the key concepts in our list. The list of key concepts is an evolving document that undergoes yearly revisions, in which new key concepts may be added or existing concepts revised. While this review was being conducted, one concept was added and one concept was divided into two. This had no implications for the review methods or results, other than that we did not map or identify instruments addressing the newly added concept.

We included interventions and assessment tools used for both patients and health professionals. Patient education in critical thinking is fairly new, and we believe that when developing such interventions researchers and others may learn from what has been done in interventions developed for health professionals. Evidence also suggests that patients and health professionals have many of the same needs when it comes to training in the key concepts. For example, in studies evaluating specific concepts such as risk, no differences were found between patients and health professionals in understanding of different statistical formats of risk [27]. Furthermore, evidence-based practice and the need for fair tests of treatments has yet to be universally acknowledged [48, 49]. Thus, many professionals may not have had training in these concepts as part of their professional training. Therefore, interventions and assessment tools relevant for professionals may also be relevant to patients and vice versa, although terminology and examples used in such training may differ [50].

We did not assess the quality of the assessment tools we identified using COSMIN or other checklists [51]. This was because the main purpose of this review was to identify an assessment tool we could use to evaluate interventions targeted at the concepts in the list. If we had identified such instruments, the next step in this process would have been to ascertain the quality of these. However, none of the studies we found included more than half of the key concepts.

Nearly half of the excluded studies were “health literacy” studies. Health literacy has been defined in many ways but generally encompasses people’s ability to find, assess and apply reliable health information [26]. The most commonly used instruments in this area concentrate on measuring functional literacy, that is, general reading or numeracy skills, such as the Test of Functional Health Literacy in Adults (TOFHLA) or the Rapid Estimate of Adult Literacy in Medicine (REALM) [52, 53]. Some instruments also include critical appraisal skills; however, none of the health literacy instruments we found addressed the key concepts directly. Instead, they measured more general understanding of health information and medical terminology [54–56].

Many school systems perform national assessments of school children’s science and mathematical literacy to measure educational achievement, using tests such as PISA or the SAT [57, 58]. We identified several such assessment tools; however, none of them met our inclusion criteria. This may be because many of these instruments are not publicly available and appear to change regularly. Furthermore, such instruments generally focus on measuring understanding of basic science and mathematics. Although many of them included content relevant to our concepts, such as the importance of supporting claims with research evidence, preparing a protocol, conducting laboratory experiments and calculating probabilities, they did not address our key concepts directly.

Conclusions

The findings of this review indicate that the key concepts people need to understand to assess claims about treatment effects are of interdisciplinary research interest. However, the interventions we identified, and the assessment tools used to map or evaluate people’s understanding, included only a handful of the key concepts. This suggests that many of the key concepts have not been the focus of research, and that there is a great need to explore how understanding of these key concepts can be improved and how such understanding can be evaluated. The findings of this review should consequently inform future research priorities, such as the choice of key concepts to include in interventions and the choice of appropriate outcomes and assessment tools.

Acknowledgements

We are very grateful to Dr. Andrew D. Oxman and Sir Iain Chalmers for their generosity and support in providing their advice throughout the work on this review. We would also like to thank Kjetil Olsen for his administrative assistance.

Authors’ contributions

AA and AN conceived this systematic review, participated in its design, and drafted the protocol. AA and AN read all the references identified by the search and by the reference lists of relevant systematic reviews and considered them for inclusion. AA, AN, and DS reviewed all publications retrieved in full text and carried out the data collection. AA entered all results into an Excel spreadsheet, supported by a research assistant (KO). AA, AN, and DS drafted and approved the final manuscript.

Competing interests

The project is funded by the Norwegian Knowledge Centre for the Health Services and the Research Council of Norway. The authors declare that they have no competing interests.

Abbreviations

- Key concepts

Key concepts that people need to understand to assess treatment claims

- REALM

Rapid estimate of adult literacy in medicine

- TOFHLA

Test of functional health literacy in adults

Additional files

Short list of Key Concepts. (DOCX 14 kb)

Search strategy. (DOCX 30 kb)

Overview of all included studies. (XLSX 133 kb)

Contributor Information

Astrid Austvoll-Dahlgren, Phone: +47 41294057, Email: astrid.austvoll-dahlgren@fhi.no.

Allen Nsangi, Email: nsallen2000@yahoo.com.

Daniel Semakula, Email: semakuladaniel@gmail.com.

References

- 1. Woloshin S, Schwartz LM, Black WC, Welch HG. Women’s perceptions of breast cancer risk: how you ask matters. Med Decis Making. 1999;19(3):221–9. doi: 10.1177/0272989X9901900301.

- 2. Peterson G, Aslani P, Williams KA. How do consumers search for and appraise information on medicines on the internet? A qualitative study using focus groups. J Med Internet Res. 2003;5(4):e33. doi: 10.2196/jmir.5.4.e33.

- 3. Sillence E, Briggs P, Harris PR, Fishwick L. How do patients evaluate and make use of online health information? Soc Sci Med. 2007;64(9):1853–62. doi: 10.1016/j.socscimed.2007.01.012.

- 4. Lloyd A. The extent of patients’ understanding of the risk of treatments. Qual Health Care. 2001;10(Suppl 1):i14–8. doi: 10.1136/qhc.0100014.

- 5. Weinstein N. What does it mean to understand a risk? Evaluating risk comprehension. J Natl Cancer Inst Monogr. 1999;(25):15–20. doi: 10.1093/oxfordjournals.jncimonographs.a024192.

- 6. Tickner S, Leman P, Woodcock A. Factors underlying suboptimal childhood immunisation. Vaccine. 2006;24(49–50):7030–6. doi: 10.1016/j.vaccine.2006.06.060.

- 7. Robinson E, Kerr C, Stevens A, Lilford R, Braunholtz D, Edwards S, et al. Lay public’s understanding of equipoise and randomisation in randomised controlled trials. Health Technol Assess. 2005;9(8).

- 8. Stead M, Eadie D, Gordon D, Angus K. “Hello, hello – it’s English I speak!”: a qualitative exploration of patients’ understanding of the science of clinical trials. J Med Ethics. 2005;31(11):664–9. doi: 10.1136/jme.2004.011064.

- 9. Glenton C, Nilsen ES, Carlsen B. Lay perceptions of evidence-based information – a qualitative evaluation of a website for back pain sufferers. BMC Health Serv Res. 2006;6:34. doi: 10.1186/1472-6963-6-34.

- 10. Schwartz L, Woloshin S, Black W, Welch H. The role of numeracy in understanding the benefit of screening mammography. Ann Intern Med. 1997;127(11):966–72. doi: 10.7326/0003-4819-127-11-199712010-00003.

- 11. Glenton C, Paulsen E, Oxman A. Portals to Wonderland? Health portals lead to confusing information about the effects of health care. BMC Med Inform Decis Mak. 2005;5:7. doi: 10.1186/1472-6947-5-7.

- 12. Schwartz K, Roe T, Northrup J, Meza J, Seifeldin R, Neale A. Family medicine patients’ use of the Internet for health information: a MetroNet study. J Am Board Fam Med. 2006;19(1):39–45. doi: 10.3122/jabfm.19.1.39.

- 13. Fox S, Jones S. The Social Life of Health Information. Pew Internet and American Life Project [Internet]; 2009. p. 88.

- 14. Mills E, Jadad AR, Ross C, Wilson K. Systematic review of qualitative studies exploring parental beliefs and attitudes toward childhood vaccination identifies common barriers to vaccination. J Clin Epidemiol. 2005;58(11):1081–8. doi: 10.1016/j.jclinepi.2005.09.002.

- 15. Ganther-Urmie J, Nair K, Valuck R, McCollum M, Lewis S, Turpin R. Consumer attitudes and factors related to prescription switching decisions in multitier copayment drug benefit plans. Am J Manag Care. 2004;10(3):201–8.

- 16. Stacey D, Légaré F, Col NF, Bennett CL, Barry MJ, Eden KB, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2014;(1):CD001431. doi: 10.1002/14651858.CD001431.pub4.

- 17. Chalmers I, Glasziou P, Badenoch D, Atkinson P, Austvoll-Dahlgren A, Oxman A. Evidence Live 2016: promoting informed healthcare choices by helping people assess treatment claims. BMJ blogs. 2016 May 26. http://blogs.bmj.com/bmj/2016/05/26/evidence-live-2016-promoting-informed-healthcare-choices-by-helping-people-assess-treatment-claims/

- 18. Evans I, Thornton H, Chalmers I, Glasziou P. Testing Treatments: better research for better healthcare. 2nd ed. London: Pinter & Martin Ltd; 2011.

- 19. Shekelle PG, Pronovost PJ, Wachter RM, McDonald KM, Schoelles K, Dy SM, et al. The top patient safety strategies that can be encouraged for adoption now. Ann Intern Med. 2013;158(5 Pt 2):365–8. doi: 10.7326/0003-4819-158-5-201303051-00001.

- 20. Steinman M. Using patients to promote evidence-based prescribing: comment on “communicating uncertainties about prescription drugs to the public”. Arch Intern Med. 2011;171(16):1468–9. doi: 10.1001/archinternmed.2011.369.

- 21. Pilling SA, Williams MB, Brackett RH, Gourley R, Weg MW, Christensen AJ, et al. Part I, patient perspective: activating patients to engage their providers in the use of evidence-based medicine: a qualitative evaluation of the VA Project to Implement Diuretics (VAPID). Implement Sci. 2010;5:23. doi: 10.1186/1748-5908-5-23.

- 22. Austvoll-Dahlgren A, Johansen M. The patient as participant and knowledge manager. Nor J Epidemiol. 2013;23(2):225–30. doi: 10.5324/nje.v23i2.1649.

- 23. Berkman N, Sheridan S, Donahue K, Halpern D, Crotty K. Low health literacy and health outcomes: an updated systematic review. Ann Intern Med. 2011;155(2):97–107. doi: 10.7326/0003-4819-155-2-201107190-00005.

- 24. Jordan J, Osborne R, Buchbinder R. Critical appraisal of health literacy indices revealed variable underlying constructs, narrow content and psychometric weaknesses. J Clin Epidemiol. 2011;64(4):366–79. doi: 10.1016/j.jclinepi.2010.04.005.

- 25. Car J, Lang B, Colledge A, Ung C, Majeed A. Interventions for enhancing consumers’ online health literacy. Cochrane Database Syst Rev. 2011;(6):CD007092. doi: 10.1002/14651858.CD007092.pub2.

- 26. Sorensen K, Van den Broucke S, Fullam J, Doyle G, Pelikan J, Slonska Z, et al. Health literacy and public health: a systematic review and integration of definitions and models. BMC Public Health. 2012;12:80. doi: 10.1186/1471-2458-12-80.

- 27. Akl EA, Oxman AD, Herrin J, Vist GE, Terrenato I, Sperati F, et al. Using alternative statistical formats for presenting risks and risk reductions. Cochrane Database Syst Rev. 2011;(3):CD006776. doi: 10.1002/14651858.CD006776.pub2.

- 28. Austvoll-Dahlgren A, Oxman AD, Chalmers I, Nsangi A, Glenton C, Lewin S, et al. Key concepts that people need to understand to assess claims about treatment effects. J Evid Based Med. 2015;8(3):112–25. doi: 10.1111/jebm.12160.

- 29. Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Info Libr J. 2009;26(2):91–108. doi: 10.1111/j.1471-1842.2009.00848.x.

- 30. Austvoll-Dahlgren A, Oxman AD, Chalmers I, Nsangi A, Glenton C, Lewin S, Morelli A, Rosenbaum S, Semakula D, Sewankambo N. Key concepts that people need to understand to assess claims about treatment effects. J Evid Based Med. 2015;8(3):112–25. doi: 10.1111/jebm.12160.

- 31. Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32. doi: 10.1080/1364557032000119616.

- 32. Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, Gülmezoglu AM, et al. How to increase value and reduce waste when research priorities are set. Lancet. 2014;383(9912):156–65.

- 33. Austvoll-Dahlgren A, Nsangi A, Semakula D, Oxman A. Measuring peoples’ understanding of the effects of treatments: a review of outcome measures. 2015. PROSPERO CRD42015016449. Available from http://www.crd.york.ac.uk/PROSPERO/display_record.asp?ID=CRD42015016449.

- 34. Horsley T, Hyde C, Santesso N, Parkes J, Milne R, Stewart R. Teaching critical appraisal skills in healthcare settings. Cochrane Database Syst Rev. 2011;(11):CD001270. doi: 10.1002/14651858.CD001270.pub2.

- 35. Kaelin MA, Huebner WW, Nicolich MJ, Kimbrough ML. Field test of an epidemiology curriculum for middle school students. Am J Health Educ. 2007;38(1):16–31. doi: 10.1080/19325037.2007.10598938.

- 36. Leshowitz B, Jenkens K, Heaton S, Bough TL. Fostering critical thinking skills in students with learning disabilities: an instructional program. J Learn Disabil. 1993;26:483–90. doi: 10.1177/002221949302600707.

- 37. Dinkevich E, Markinson A, Ahsan S, Lawrence B. Effect of a brief intervention on evidence-based medicine skills of pediatric residents. BMC Med Educ. 2006;6:1. doi: 10.1186/1472-6920-6-1.

- 38. Vandvik PO, Santesso N, Akl EA, You J, Mulla S, Spencer FA, et al. Formatting modifications in GRADE evidence profiles improved guideline panelists comprehension and accessibility to information. A randomized trial. J Clin Epidemiol. 2012;65(7):748–55. doi: 10.1016/j.jclinepi.2011.11.013.

- 39. Rosenbaum SE, Glenton C, Oxman AD. Summary-of-findings tables in Cochrane reviews improved understanding and rapid retrieval of key information. J Clin Epidemiol. 2010;63(6):620–6. doi: 10.1016/j.jclinepi.2009.12.014.

- 40. Austvoll-Dahlgren A, Bjorndal A, Odgaard-Jensen J, Helseth S. Evaluation of a web portal for improving public access to evidence-based health information and health literacy skills: a pragmatic trial. PLoS One. 2012;7(5):e37715. doi: 10.1371/journal.pone.0037715.

- 41. Milne R, Oliver S. Evidence-based consumer health information: developing teaching in critical appraisal skills. Int J Qual Health Care. 1996;8(5):439–45. doi: 10.1093/intqhc/8.5.439.

- 42. Dickersin K, Braun L, Mead M, Millikan R, Wu AM, Pietenpol J, et al. Development and implementation of a science training course for breast cancer activists: Project LEAD (leadership, education and advocacy development). Health Expect. 2001;4(4):213–20. doi: 10.1046/j.1369-6513.2001.00153.x.

- 43. Steckelberg A, Hülfenhaus C, Kasper J, Mühlhauser I. Ebm@school – a curriculum of critical health literacy for secondary school students: results of a pilot study. Int J Public Health. 2009;54(3):158–65. doi: 10.1007/s00038-008-7033-1.

- 44. Ramos KD, Schafer S, Tracz SM. Validation of the Fresno test of competence in evidence based medicine. BMJ. 2003;326(7384):319–21. doi: 10.1136/bmj.326.7384.319.

- 45. Godwin M, Seguin R. Critical appraisal skills of family physicians in Ontario, Canada. BMC Med Educ. 2003;3:10. doi: 10.1186/1472-6920-3-10.

- 46. O’Connor AM. Validation of a decisional conflict scale. Med Decis Making. 1995;15(1):25–30. doi: 10.1177/0272989X9501500105.

- 47. Cusack L, Hoffmann T, Del Mar C, Chalmers I. Educational interventions to improve people’s understanding of key concepts in assessing the effects of health interventions: a systematic review protocol. PROSPERO 2016: CRD42016033103. Available from http://www.crd.york.ac.uk/PROSPERO/display_record.asp?ID=CRD42016033103.

- 48. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312(7023):71–2. doi: 10.1136/bmj.312.7023.71.

- 49. Chalmers I. The development of fair tests of treatments. Lancet. 2014;383(9930):1713–4. doi: 10.1016/S0140-6736(14)60821-7.

- 50. Austvoll-Dahlgren A, Danielsen S, Opheim E, Bjorndal A, Reinar LM, Flottorp S, et al. Development of a complex intervention to improve health literacy skills. Health Info Libr J. 2013;30(4):278–93. doi: 10.1111/hir.12037.

- 51. Terwee CB, Mokkink LB, Knol DL, Ostelo RWJG, Bouter LM, de Vet HC. Rating the methodological quality in systematic reviews of studies on measurement properties: a scoring system for the COSMIN checklist. Qual Life Res. 2012;21(4):651–7.

- 52. Davis TC, Crouch MA, Long SW, Jackson RH, Bates P, George RB, et al. Rapid assessment of literacy levels of adult primary care patients. Fam Med. 1991;23(6):433–5.

- 53. Parker R, Baker D, Williams M, Nurss J. The test of functional health literacy in adults. J Gen Intern Med. 1995;10(10):537–41.

- 54. Sorensen K, Van den Broucke S, Pelikan J, Fullam J, Doyle G, Slonska Z, et al. Measuring health literacy in populations: illuminating the design and development process of the European Health Literacy Survey Questionnaire (HLS-EU-Q). BMC Public Health. 2013;13(1):948. doi: 10.1186/1471-2458-13-948.

- 55. Osborne R, Batterham R, Elsworth G, Hawkins M, Buchbinder R. The grounded psychometric development and initial validation of the Health Literacy Questionnaire (HLQ). BMC Public Health. 2013;13:658. doi: 10.1186/1471-2458-13-658.

- 56. Ishikawa H, Nomura K, Sato M, Yano E. Developing a measure of communicative and critical health literacy: a pilot study of Japanese office workers. Health Promot Int. 2008;23(3):269–74. doi: 10.1093/heapro/dan017.

- 57. Lemke M, Sen A, Pahlke E, Partelow L, Miller D, Williams T, et al. International Outcomes of Learning in Mathematics Literacy and Problem Solving: PISA 2003 Results From the U.S. Perspective. (NCES 2005–003) Washington, DC: U.S. Department of Education, National Center for Education Statistics; 2004.

- 58. Wilson MR, Bertenthal MW, editors. Systems for state science assessment. Washington, DC: The National Academies Press; 2005.