Abstract

Objective

This study evaluated the effects of insertion depth on spatial speech perception in noise for simulations of cochlear implants (CI) and single-sided deafness (SSD).

Design

Mandarin speech recognition thresholds were adaptively measured in five listening conditions and four spatial configurations. The original signal was delivered to the left ear. The right ear received either no input, one of three CI simulations in which the insertion depth was varied, or the original signal. Speech and noise were presented at either front, left, or right.

Study Sample

Ten Mandarin-speaking normal-hearing (NH) listeners with pure-tone thresholds better than 20 dB HL.

Results

Relative to no input in the right ear, the CI simulations provided significant improvements in head shadow benefit for all insertion depths, as well as better spatial release from masking (SRM) for the deepest simulated insertion. There were no significant improvements in summation or squelch for any of the CI simulations.

Conclusions

The benefits of cochlear implantation were largely limited to head shadow, with some benefit for SRM. The greatest benefits were observed for the deepest simulated CI insertion, suggesting that reducing mismatch between acoustic and electric hearing may increase the benefit of cochlear implantation.

Keywords: cochlear implants, single-sided deafness, spatial release from masking, insertion depth

INTRODUCTION

In daily life, the hearing difficulties encountered by individuals with single-sided deafness (SSD) are often underestimated, as some people believe that normal hearing in only one ear is sufficient to ensure a relatively normal auditory experience (Giardina et al, 2014). Recent research has shown that SSD patients face significant hearing challenges in complex or noisy environments, and in localizing or lateralizing sound sources (Firszt et al, 2012; Kamal et al, 2012; Vermeire et al, 2009). Hearing rehabilitation of SSD patients has recently drawn much attention. Treatment with conventional contralateral routing of signal (CROS) or bone-anchored hearing aids (BAHA) has shown limited success (Baguley et al, 2006; Bishop et al, 2010), as these approaches cannot provide any bilateral auditory input (van Zon et al, 2015; Giardina et al, 2014). Cochlear implants (CIs) are able to provide bilateral auditory input in conjunction with contralateral normal hearing. SSD patients have benefitted from cochlear implantation in terms of localization, tinnitus reduction, and quality of life (e.g., Tavora-Vieira et al, 2013; Tokita et al, 2014; van Zon et al, 2015). However, the benefits of cochlear implantation for speech understanding in noise have been more variable and, in many cases, very limited, depending on which aspect of binaural hearing is tested (e.g., van Zon et al, 2015; Giardina et al, 2014).

In normal-hearing (NH) listeners, there are a number of ways that binaural listening can help speech understanding in noise. Binaural summation (or “redundancy”) describes the advantage of two ears over one ear when segregating co-located target and masker sounds. In NH listeners, binaural summation can slightly improve speech perception in noise due to increased loudness and/or redundant processing by binaural auditory pathways (Dillon, 2001). In bilateral CI users, binaural summation effects typically improve speech reception thresholds (SRTs) in noise by only 1–2 dB (e.g., Knop et al, 2004; Chan et al, 2008). For SSD CI users, binaural summation benefits have been shown to be similarly small (e.g., Mertens et al, 2015; Vermeire & Van de Heyning, 2009). Binaural squelch describes the advantage when adding the ear with the poorer signal-to-noise ratio (SNR) to the ear with the better SNR, when target and masker sounds are spatially separated. In NH listeners, binaural squelch can improve SRTs by approximately 5 dB (e.g., Cox & Bisset, 1984). Binaural squelch has been shown to improve SRTs by 1–3 dB in bilateral CI users (e.g., Knop et al, 2004; Chan et al, 2008) and SSD CI users (e.g., Mertens et al, 2015). With binaural listening, spatial release from masking (SRM) describes the advantage of spatially separated target and masker sounds over co-located target and masker sounds. When the target and masker are spatially separated, the SNR is better in one of the two ears, allowing for better perception of the target. In NH listeners and in bilateral CI users, SRM typically results in a 6–8 dB improvement in SRTs (e.g., Freyman et al, 1999; Murphy et al, 2011). SRM is related to head shadow, which describes the physical attenuation of sound and the difference in spectrum between ears when target and masker sounds are spatially separated. In NH and bilateral CI listeners, head shadow can improve SRTs by 10 dB or more (e.g., Bronkhorst & Plomp, 1989; Schleich et al, 2004). For SSD patients, SRM and head shadow provide some of the strongest binaural benefits for speech in noise, with 5–6 dB of improvement in SRT (Vermeire & Van de Heyning, 2009).

Some CI parameters that may limit the benefit of cochlear implantation for SSD patients include the amplitude mapping, the acoustic frequency-to-electrode mapping of the CI, the limited number of spectral channels, the stimulation rate, the time constants used for signal compression in the CI, etc. The compressive amplitude mapping used in CIs may distort inter-aural level differences (ILDs), an important cue for localization. The frequency-to-electrode mapping may result in mismatch between the NH and CI ears, which may limit binaural fusion. The limited spectral resolution, especially when combined with potential frequency mismatch, may greatly limit speech perception with the CI, which would in turn limit binaural speech perception. The stimulation rate and time constants used for signal compression may distort interaural timing differences (ITDs), another important cue for localization.

Many studies have used acoustic CI simulations to evaluate the benefits of combining acoustic and electric hearing for speech understanding in noise (e.g., Brown & Bacon, 2009; Dorman et al, 2005; Sheffield & Zeng, 2012). In general, these studies simulated only low-frequency hearing in the acoustic ear, finding that the availability of fundamental frequency (F0) and first formant (F1) information improved speech understanding in noise, relative to the simulated CI alone. In these studies, the simulated hearing loss in the acoustic signal would not support speech understanding alone, but rather enhanced performance with the simulated CI alone. For SSD patients, there is much more acoustic information in the acoustic ear which would be expected to dominate perception. CI simulations have been used to evaluate potential limits and benefits of cochlear implantation for SSD patients. Aronoff et al (2015) simulated SSD CI perception in NH subjects listening to an 8-channel vocoder in one ear and unprocessed speech in the other ear. Results showed that spectral and temporal compression in the simulated CI greatly limited binaural fusion, which in turn may limit binaural perception of speech in noise. Bernstein et al (2015) found some benefit for adding vocoded stimuli in one ear (relative to no input) to unprocessed speech in the other, most likely due to release from informational masking.

Unlike English, Mandarin Chinese is a tonal language, in which pitch contours distinguish the meanings of monosyllabic words (Lin, 1988). CIs do not represent pitch cues very well, and as a result, CI performance for Mandarin tones is poorer than for NH listeners (Fu and Zeng, 2005). For SSD patients, perception of Mandarin tones would be expected to be largely driven by the NH ear. As such, it is unclear if the benefits of cochlear implantation for binaural speech perception in noise would be similar for Mandarin- and English-speaking SSD patients. In this study, understanding of Mandarin sentences in noise was measured in NH subjects listening to unprocessed speech in one ear and acoustic CI simulations in the other ear. Speech understanding in noise was measured for a variety of spatial conditions. The simulated CI insertion depth was varied to explore the effects of frequency mismatch on performance. Given the limited spectral resolution of the CI simulation, we hypothesized that the binaural benefit would be limited to head shadow and SRM. Given the effects of spectral mismatch on CI speech performance, we hypothesized that deeper insertion depths may provide better binaural benefit.

METHOD

Ethical statement

In compliance with ethical standards for human subjects, written informed consent was obtained from all participants before proceeding with any of the study procedures. This study was approved by the Institutional Review Board in the Department of Otolaryngology, Southwest Hospital, Third Military Medical University, Chongqing, China.

Subjects

Ten young adult NH subjects who were native speakers of Mandarin Chinese participated in the study. All subjects had pure-tone thresholds better than 20 dB HL for audiometric frequencies at 250, 500, 1000, 2000, 4000, and 8000 Hz. All reported speaking, reading, and writing Chinese with excellent proficiency in terms of daily communication. Exclusion criteria included organic brain diseases and other physical or mental illness that could lead to cognitive impairment.

Stimuli

Word-in-sentence recognition was assessed using the Mandarin Speech Perception (MSP) test, which consists of 5 lists with 20 sentences within each list (Fu et al, 2011). Each sentence contains 7 monosyllabic words produced by a native Mandarin female talker speaking at a normal rate, resulting in a total of 140 monosyllabic words for each list. All test sentences are familiar and commonly used in daily life; sentences were phonetically balanced across lists. The mean duration across all sentences was 1974 ms (±129), the mean speaking rate was 3.55 words per second (±0.08), and the mean fundamental frequency (F0) was 223 Hz (±15). Sentence recognition was measured in the presence of steady noise that was spectrally matched to the average spectrum across all of the MSP sentences.

Spatial conditions

Non-individualized head-related transfer functions (HRTFs) were used to create a virtual auditory space for headphone presentation. Before any subsequent signal processing, stimuli were first processed using the same set of HRTFs as in Brungart et al (2005), simulating sound source locations at 0° (front), 90° (right or CI side), and 270° (left or NH side). Speech recognition in noise was measured for 4 spatial conditions: 1) speech and noise from the front (S0N0), 2) speech from the front and noise from the right (S0Nci), 3) speech from the front and noise from the left (S0Nnh), and 4) speech from the right and noise from the left (SciNnh). Figure 1 illustrates the 4 spatial conditions.

Figure 1.

Illustration of the four spatial listening conditions. The white (speech) and black speakers (noise) represent the virtual locations of signals delivered through headphones. The left ear always received the original signal. The right ear (filled) received either no input, one of the CI simulations, or the original signal. In S0N0, speech and noise were co-located in front of the listener. In S0Nci, speech originated from the front and noise from the CI side (right). In S0Nnh, speech originated from the front and noise from the NH side (left). In SciNnh, speech originated from the CI side (right) and noise from the NH side (left).

SSD and CI simulations

As noted above, stimuli were processed by the HRTFs before any subsequent signal processing. To simulate SSD, the original speech was always delivered to the left ear. To simulate cochlear implantation, acoustic simulations of CI signal processing were always delivered to the right ear. As a control condition, the original speech was delivered to both the left and right ears.

For the CI simulations, stimuli were processed by a 16-channel sine-wave vocoder as in Fu et al (2004); the CI simulations were delivered only to the right ear. First, the signal was processed through a high-pass pre-emphasis filter with a cutoff of 1200 Hz and a slope of −6 dB/octave. The input frequency range (200–7000 Hz) was then divided into 16 frequency bands, using 4th order Butterworth filters distributed according to Greenwood’s (1990) frequency-place formula. The temporal envelope from each band was extracted using half-wave rectification and low-pass filtering with a cutoff frequency of 160 Hz. The extracted envelopes were then used to modulate the amplitude of sinewave carriers. The distribution of the carrier sinewaves assumed a 16 mm electrode array. Three different insertion depths were simulated: 19 mm (CI-19), 22 mm (CI-22), and 25 mm (CI-25) relative to the base. Figure 2 illustrates the analysis bands and the distribution of sinewave carriers for the three insertion depth conditions. Note that the 25 mm insertion had the least apical mismatch and the 19 mm insertion had the most apical mismatch, relative to the acoustic input. The binaural signals were generated by combining the right ear signal (no input, CI-19, CI-22, CI-25, or original) with the left ear signal (original).
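The band and carrier layout described above can be sketched in a few lines. This is only an illustration under stated assumptions: the Greenwood constants (A = 165.4, a = 0.06 per mm, k = 0.88), the 35 mm cochlear length, and the placement of each carrier at the centre of an equal segment of the 16 mm array are assumptions that are not given in the text, and the function names are hypothetical.

```python
import math

# Greenwood (1990) frequency-place constants for the human cochlea.
# ASSUMPTION: the text cites the formula but does not list these values.
A, ALPHA, K = 165.4, 0.06, 0.88
COCHLEA_MM = 35.0  # ASSUMPTION: cochlear length in mm


def place_to_freq(mm_from_apex):
    """Characteristic frequency (Hz) at a place given in mm from the apex."""
    return A * (10 ** (ALPHA * mm_from_apex) - K)


def freq_to_place(freq_hz):
    """Inverse of place_to_freq: place in mm from the apex for a frequency."""
    return math.log10(freq_hz / A + K) / ALPHA


def analysis_band_edges(f_lo=200.0, f_hi=7000.0, n_bands=16):
    """Band edges (Hz) equally spaced in cochlear place between f_lo and f_hi."""
    x_lo, x_hi = freq_to_place(f_lo), freq_to_place(f_hi)
    step = (x_hi - x_lo) / n_bands
    return [place_to_freq(x_lo + i * step) for i in range(n_bands + 1)]


def carrier_freqs(insertion_mm, array_mm=16.0, n_channels=16):
    """Sine-wave carrier frequencies (Hz), ordered from apical to basal.

    ASSUMPTION: each carrier sits at the centre of one of n_channels equal
    segments of the array, whose apical end lies insertion_mm from the base.
    """
    apical_end = COCHLEA_MM - insertion_mm  # position in mm from the apex
    seg = array_mm / n_channels
    return [place_to_freq(apical_end + (i + 0.5) * seg) for i in range(n_channels)]


if __name__ == "__main__":
    edges = analysis_band_edges()
    print("lowest analysis band: %.0f-%.0f Hz" % (edges[0], edges[1]))
    for depth in (19, 22, 25):
        print("CI-%d most apical carrier: %.0f Hz" % (depth, carrier_freqs(depth)[0]))
```

Under these assumptions the analysis bands are identical across conditions, while the carriers shift upward in frequency as the simulated insertion becomes shallower, which is the source of the apical mismatch.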

Figure 2.

Illustration of the three simulated CI insertion depths. The gray bars at the top of the figure show the frequency analysis bands. The different shapes show the carrier frequencies for the sine-wave vocoders.

Procedure

Before formal testing, NH subjects were first trained while listening to the CI simulation (without spatial processing) to familiarize them with the vocoded speech. Training stimuli consisted of 25 sentences from the Mandarin Angel Sound (http://mast.emilyfufoundation.org). After the familiarization trials, speech recognition thresholds (SRTs) were measured using an adaptive procedure (Plomp and Mimpen, 1979) for all listening conditions and all spatial configurations. The SRT was defined as the signal-to-noise ratio (SNR) that produced 50% correct sentence recognition. During testing, a sentence was randomly selected from the list and delivered to the center or right location at 60 dBA, and the noise was delivered to the left, right, or center location at the target SNR. Subjects were instructed to repeat the sentence as accurately as possible. If the subject repeated the entire sentence correctly, the SNR was reduced by 2 dB; if not, the SNR was increased by 2 dB. From trial to trial, the target SNR was calculated according to the long-term RMS of the speech and masker. The SRT was calculated as the average of the last 6 reversals in SNR. Speech and noise stimuli were presented via headphones (Sennheiser PX 80). Because of the large number of conditions (4 spatial × 5 binaural = 20) and the limited number of MSP sentence lists (5), only one list was presented for each condition and each list was repeated four times across all conditions. The test order (and list order) for all conditions was randomized within and across subjects.
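The adaptive rule described above (2 dB down after a fully correct sentence, 2 dB up otherwise, SRT taken as the mean of the last 6 reversals) can be sketched as follows. The starting SNR, the run length of 20 trials (one MSP list), and the definition of a reversal as the SNR of the trial on which the response changed are assumptions, and `measure_srt` and the simulated listener are hypothetical.

```python
def measure_srt(is_correct, start_snr=10.0, step_db=2.0, n_trials=20, n_average=6):
    """One-down/one-up staircase; returns the mean SNR of the last reversals.

    is_correct: callable taking the SNR (dB) of the current trial and returning
        True if the whole sentence was repeated correctly.
    start_snr, n_trials: ASSUMPTIONS, not stated in the text
        (n_trials=20 corresponds to one 20-sentence list).
    """
    snr = start_snr
    previous = None      # response on the previous trial
    reversal_snrs = []   # SNRs at which the response changed
    for _ in range(n_trials):
        correct = is_correct(snr)
        if previous is not None and correct != previous:
            reversal_snrs.append(snr)
        previous = correct
        # Make the task harder after a correct response, easier otherwise.
        snr += -step_db if correct else step_db
    if len(reversal_snrs) < n_average:
        raise ValueError("too few reversals to estimate the SRT")
    last = reversal_snrs[-n_average:]
    return sum(last) / len(last)


if __name__ == "__main__":
    # Idealized listener who is correct whenever the SNR is at least -3 dB.
    print(measure_srt(lambda snr: snr >= -3.0))  # prints -3.0
```

With this idealized listener the track descends from 10 dB and then alternates between −4 and −2 dB, so the last six reversals average to −3 dB. A real listener responds probabilistically, and the track then converges on the 50% point.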

RESULTS

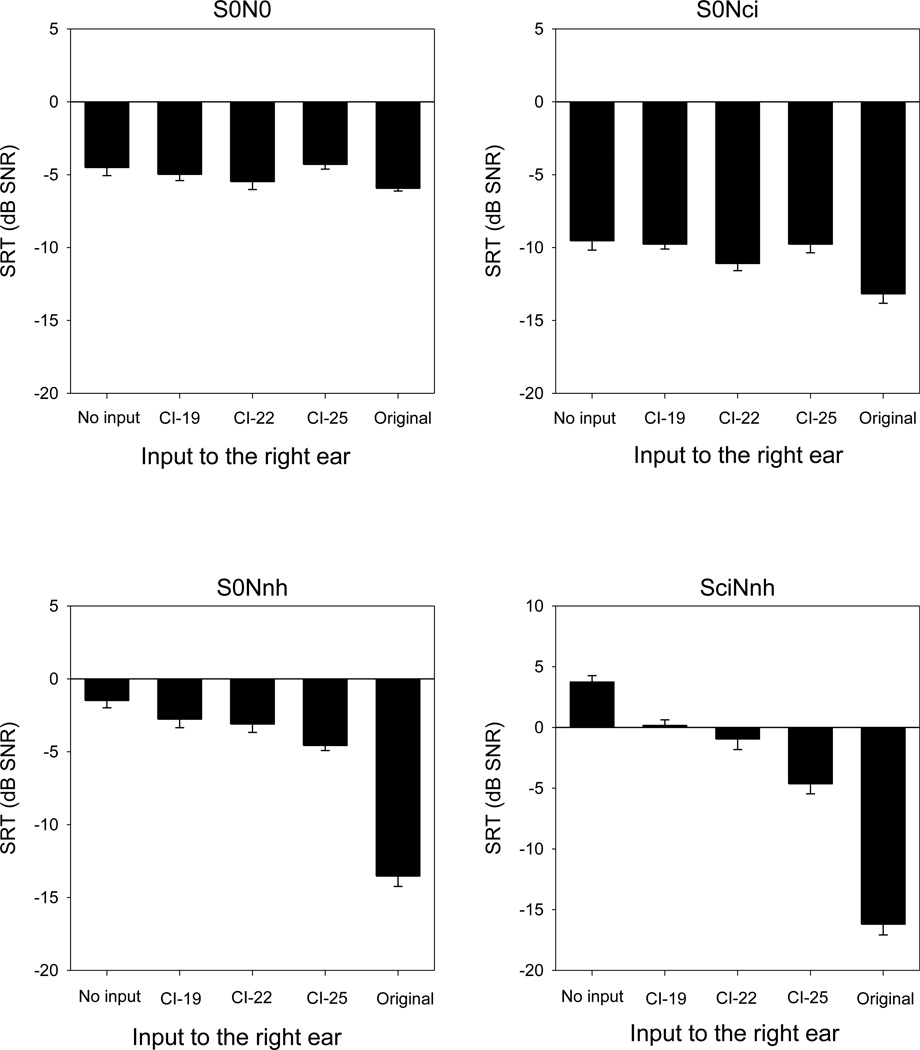

Figure 3 shows mean SRTs in noise as a function of listening condition; each panel shows data for a different spatial condition. When speech and noise were presented from the front (top left panel) or when speech was presented from the front and noise from the right (top right panel), there was little difference in performance across listening conditions. A two-way repeated measures analysis of variance (RM ANOVA) was performed on the data shown in Figure 3, with right ear listening condition (no input, CI-19, CI-22, CI-25, and original) and spatial configuration (S0N0, S0Nci, S0Nnh, and SciNnh) as factors. Results showed significant effects for listening condition [F(4,108) = 172.6, p < 0.001] and spatial configuration [F(3,108) = 176.6, p < 0.001], as well as a significant interaction [F(12,108) = 39.4, p < 0.001]. The RM ANOVA results and post-hoc Bonferroni pair-wise comparisons are shown in Table 1.

Figure 3.

Mean SRTs (across subjects) for the four spatial conditions, as a function of input to the right ear; the input to the left ear was always the original signal. The error bars show the standard error.

Table 1.

Results of a two-way ANOVA on the data shown in Figure 3. Listening condition variables included no input, CI-19 simulation, CI-22 simulation, CI-25 simulation, and original. Spatial variables included S0N0, S0Nnh, S0Nci, and SciNnh.

| Factor | df, res | F-ratio | p-value | Bonferroni post-hoc (p < 0.05) |
|---|---|---|---|---|
| Listening condition | 4, 108 | 172.6 | <0.001 | Original > CI-19, CI-22, CI-25, no input; CI-19, CI-22, CI-25 > no input; CI-25 > CI-19 |
| Spatial | 3, 108 | 176.6 | <0.001 | S0N0, S0Nci, S0Nnh > SciNnh; S0Nci > S0N0, S0Nnh |
| Listening condition × spatial | 12, 108 | 39.4 | <0.001 | No input, CI-19, CI-22: S0N0, S0Nci, S0Nnh > SciNnh; S0Nci > S0N0, S0Nnh; S0N0 > S0Nnh. CI-25: S0Nci > S0N0, S0Nnh, SciNnh. Original: SciNnh > S0N0, S0Nci, S0Nnh; S0Nci, S0Nnh > S0N0. S0Nci: Original > no input, CI-19, CI-25. S0Nnh: Original > no input, CI-19, CI-22, CI-25; CI-25 > no input. SciNnh: Original > no input, CI-19, CI-22, CI-25; CI-19, CI-22, CI-25 > no input; CI-25 > CI-19, CI-22 |

Speech understanding in noise was further characterized in terms of summation, squelch, SRM, and head shadow. Summation was defined as the difference in SRT between binaural and monaural listening (L+R − L) when speech and noise were co-located (S0N0). Mean benefits were 0.5, 1.0, −0.2, and 1.4 dB for the CI-19, CI-22, and CI-25 simulations and the original signal, respectively. Squelch was defined as the difference in SRT between binaural and monaural listening (L+R − L) when noise was presented to the right side (S0Nci). Mean benefits were 0.2, 1.6, 0.2, and 3.6 dB for the CI-19, CI-22, and CI-25 simulations and the original signal, respectively. SRM with binaural hearing was calculated as the difference in SRT between spatially separated and co-located speech and noise (S0Nci – S0N0; S0Nnh – S0N0). For the S0Nci condition, mean benefits were 4.8, 5.6, 5.5, and 7.2 dB for the CI-19, CI-22, and CI-25 simulations and the original signal, respectively. For the S0Nnh condition, mean benefits were −2.2, −2.4, 0.3, and 7.6 dB for the CI-19, CI-22, and CI-25 simulations and the original signal, respectively. Head shadow was defined as the difference between binaural and monaural listening (L+R − L) when speech was presented to the right side and noise to the left side (SciNnh). Mean benefits were 3.6, 4.7, 8.4, and 19.9 dB for the CI-19, CI-22, and CI-25 simulations and the original signal, respectively.

For summation, adding the right ear had little effect, even with the original speech. For squelch, adding the CI simulations to the right ear had little effect; adding the original signal to the right ear improved the mean SRT by more than 3 dB. When the noise was presented to the right side (S0Nci), there was a substantial improvement in the mean SRM, even with the CI simulations. When the noise was presented to the left side (S0Nnh), there was actually a deficit in mean SRM for the CI-19 and CI-22 simulations. When the speech was presented to the right and noise to the left (SciNnh), the binaural benefit improved as the simulated CI insertion depth increased.
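The four measures defined above reduce to simple differences between SRTs. The sketch below assumes a sign convention in which a positive value is a benefit (a lower SRT with the second ear, or with spatial separation). The SRT values in it are made up purely for illustration and are not data from this study; the function name and the `"none"` label for left-ear-only listening are hypothetical.

```python
def binaural_benefits(srt, right_ear, monaural="none"):
    """Benefit measures in dB; positive values mean a lower (better) SRT.

    srt: dict mapping (right_ear_input, spatial_condition) -> SRT in dB SNR.
    right_ear: key of the binaural listening condition to evaluate.
    monaural: key of the left-ear-only condition (hypothetical label).
    """
    return {
        # Binaural vs. monaural, speech and noise co-located in front.
        "summation": srt[(monaural, "S0N0")] - srt[(right_ear, "S0N0")],
        # Binaural vs. monaural, noise on the right (CI) side.
        "squelch": srt[(monaural, "S0Nci")] - srt[(right_ear, "S0Nci")],
        # Binaural vs. monaural, speech on the right, noise on the left.
        "head_shadow": srt[(monaural, "SciNnh")] - srt[(right_ear, "SciNnh")],
        # Separated vs. co-located, within the same listening condition.
        "srm_noise_ci": srt[(right_ear, "S0N0")] - srt[(right_ear, "S0Nci")],
        "srm_noise_nh": srt[(right_ear, "S0N0")] - srt[(right_ear, "S0Nnh")],
    }


if __name__ == "__main__":
    # HYPOTHETICAL SRTs (dB SNR), chosen only to exercise the arithmetic.
    example = {
        ("none", "S0N0"): -4.0, ("none", "S0Nci"): -8.0,
        ("none", "S0Nnh"): -1.0, ("none", "SciNnh"): 6.0,
        ("sim", "S0N0"): -4.5, ("sim", "S0Nci"): -8.5,
        ("sim", "S0Nnh"): -3.0, ("sim", "SciNnh"): 0.0,
    }
    for name, value in binaural_benefits(example, "sim").items():
        print("%s: %+.1f dB" % (name, value))
```

In this made-up example the second ear helps mainly when speech is on its side (head shadow), and SRM is negative when the noise is moved to the side of the unprocessed ear, which mirrors the qualitative pattern described in the text.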

Figure 4 shows the binaural benefit in terms of summation (S0N0), squelch (S0Nci), and head shadow (SciNnh), relative to the “no input” listening condition (stimulation of the left ear only). In general, mean binaural benefits were very small with the CI simulations for summation and squelch. Binaural benefits for head shadow generally increased with simulated insertion depth. A two-way ANOVA was performed on the data shown in Figure 4, with input to the right ear (CI-19, CI-22, CI-25, original) and binaural condition (summation, squelch, head shadow) as factors; the results are shown in Table 2. For squelch and head shadow, the binaural benefit was significantly poorer with the CI simulations than with the original signal (p < 0.05). The binaural benefit for head shadow was significantly better than for summation or squelch (p <0.05 in all cases).

Figure 4.

Binaural benefit (in dB) relative to the left ear alone for different binaural perception categories as a function of input to the right ear. The error bars show the standard error.

Table 2.

Results of two-way RM ANOVA for binaural advantage in summation (S0N0), squelch (S0Nci), and head shadow (SciNnh)

| Factor | df, res | F-ratio | p-value | Bonferroni post-hoc (p < 0.05) |
|---|---|---|---|---|
| Right ear | 3, 54 | 103.9 | < 0.001 | Original > CI-19, CI-22, CI-25; CI-25 > CI-19 |
| Spatial | 3, 54 | 346.7 | < 0.001 | Head shadow > Summation, squelch |
| Right ear × spatial | 9, 54 | 44.1 | < 0.001 | CI-19, CI-22, CI-25, Original: Head shadow > Summation, squelch. Squelch: Original > CI-19, CI-25. Head shadow: Original > CI-19, CI-22, CI-25; CI-25 > CI-19, CI-22 |

DISCUSSION

To our knowledge, this is the first simulation study to evaluate the potential benefits of cochlear implantation for Mandarin-speaking SSD patients. Results suggest that SSD patients may indeed benefit from cochlear implantation for speech understanding in noise because of improved access to spatial cues. Consistent with our hypotheses, binaural benefits were largely limited to head shadow and SRM, and binaural benefits increased with insertion depth.

The present CI simulation results are in agreement with previous SSD CI studies that showed significant head shadow benefits after implantation (Vermeire et al, 2009; Arndt et al, 2010; Tavora-Vieira et al, 2013). Head shadow benefits relate to the physics of sound, as the head acts like an attenuator that can reduce the sound across the head by as much as 6 dB (Vermeire & Van de Heyning, 2009). In this study, head shadow was measured only for the SciNnh spatial condition, where the mean binaural benefit with the CI-25 simulation was 8.4 dB (Fig. 4).

There was no significant binaural benefit for summation with any of the CI simulations, suggesting that subjects attended to the better representation (original sound to the left ear). Previous SSD CI studies have shown a summation benefit of only 1–2 dB (Arndt et al, 2010; Buechner et al, 2010; Dunn et al, 2008; Jacob et al, 2011; Vermeire et al, 2009). However, Tavora-Vieira et al (2013) found that SSD CI users’ binaural summation improved by 3 dB after 3 months of experience with their CI, compared to pre-operative measures. Significant binaural benefits for summation have also been shown for adult bilateral and bimodal (CI + hearing aid) CI users (Ching et al, 2005; Schleich et al, 2004). One possible explanation for the present results and those from most SSD CI studies is the performance difference between the two ears. While cochlear implantation can restore some speech understanding to the implanted ear, CI performance in noise is generally poorer than for NH listeners. Such a difference was evident in the present pattern of results for the S0Nnh and the SciNnh conditions (Fig. 3), where SRTs with the original signal were more than 10 dB better than with the best CI simulation. Such across-ear “performance asymmetry” effects on summation have been observed with bilateral CI users (Yoon et al, 2011), where greater binaural benefits were observed as the performance asymmetry was reduced. As such, improving performance in the implanted ear, whether by better mapping or training, may be key to maximizing the binaural benefit for summation.

In NH listeners, squelch can improve SRTs by 2–5 dB (Akeroyd, 2006). In this study, binaural benefits for squelch with the CI simulations were quite small (Fig. 4), consistent with previous reports for SSD CI patients (e.g., Buechner et al, 2010). However, Eapen et al (2009) found that squelch benefits for SSD CI patients developed slowly and continued to increase beyond the first year after implantation, suggesting that the acute CI simulation data in this study may underestimate squelch benefits for real SSD CI patients.

When noise was delivered to the left (NH) side, there was a negative SRM for the CI-19 and CI-22 simulations and nearly no SRM for the CI-25 simulation. This lack of SRM was most likely due to the relatively poor speech performance with the CI simulations. When noise was delivered to the right (CI) side, there was substantial SRM for all the CI simulations, with the greatest SRM when the original signal was delivered to the right ear. The present data suggest that reducing apical mismatch (CI-25 simulation) may significantly benefit SSD CI patients when noise is presented to the NH side, at least for SRM and head shadow. This is not surprising, as many studies with CI simulations and real CI users have shown that the acoustic frequency-to-electrode place mismatch is more deleterious in the apical than the basal region (e.g., Fu & Shannon, 1999a,b). Even when the apical mismatch was reduced (CI-25 simulation), performance remained 7.3 dB poorer for SRM and 11.6 dB poorer for head shadow, relative to the original signal delivered to the right ear. This suggests that performance on the CI side must be greatly improved to maximize the binaural benefit. Given the likely performance asymmetry between the NH and CI ears in SSD patients, optimal mapping and/or explicit training of the CI side may be necessary to sufficiently improve SSD CI users’ access to spatial cues for segregating speech and noise. However, the reduced spectral resolution of the CI ear relative to the NH ear will most likely limit performance in the CI ear, especially under noisy listening conditions. This is not to say that cochlear implantation fails to benefit SSD patients. Many studies have shown that cochlear implantation can reduce tinnitus severity, improve sound source localization, and improve quality of life (e.g., Tavora-Vieira et al, 2013; Tokita et al, 2014; van Zon et al, 2015). However, the present data suggest that the binaural benefit for speech in noise is largely limited to SRM and head shadow when the speech is presented to the CI side.

Another consideration regarding mismatches between the NH ear and different CI insertion depths is the potential for frequency-dependent interaural level differences (ILDs). Interaural time differences (ITDs) are not well utilized by bilateral and bimodal (combined acoustic and electric hearing) CI users because of mismatches between the place of intracochlear stimulation across ears and timing differences between acoustic and electric hearing, due to the travelling wave in acoustic hearing and stimulation rate effects in electric hearing, as well as time constants associated with signal compression in CIs and hearing aids (e.g., Francart et al 2009, 2011; Francart & McDermott 2013). Consequently, bilateral and bimodal CI users rely more strongly on ILDs (e.g., Aronoff et al 2010). While bilateral place mismatches may reduce ITD sensitivity, they may also contribute to reduced ILD sensitivity. For example, in the CI-19 simulation, there was an upward shift in the stimulation pattern with electric hearing relative to the normal ear (see Fig. 2). As such, there would be little stimulation below the cochlear place associated with 1000 Hz on the CI side, but normal stimulation in this region for the NH side. The greater binaural benefits observed with the CI-25 simulation may have been partly due to better preservation of ILDs for a broader overall frequency range (see Fig. 2).

If reducing apical mismatch can improve binaural perception in real SSD CI patients, better frequency matching between ears may be necessary to maximize the binaural benefit of cochlear implantation. Given the limited electrode array extent and insertion depth for some devices (e.g., Cochlear Corp), it may be preferable to match electrodes to the acoustic frequency, rather than compress a wide range of acoustic input onto the electrode array. Such an approach would necessarily truncate the acoustic input to the CI, but this tradeoff may lead to better fusion, which in turn may lead to better binaural perception (Aronoff et al, 2015).

CONCLUSION

The present CI simulation data suggest that cochlear implantation for SSD may provide significant benefits for speech understanding in noise under certain spatial listening conditions. Reducing acoustic-to-electric frequency mismatch in the apical region of the CI side significantly improved some binaural benefits, notably head shadow.

Acknowledgments

This work was funded in part by NIH grants R01-DC004792 and R01-DC004993.

Acronyms and abbreviations

- CI: cochlear implant

- CI-19: CI simulation with 19 mm insertion

- CI-22: CI simulation with 22 mm insertion

- CI-25: CI simulation with 25 mm insertion

- df: degrees of freedom

- SSD: single-sided deafness

- MSP: Mandarin speech perception

- NH: normal hearing

- res: residual

- RM-ANOVA: repeated-measures analysis of variance

- S0N0: speech and noise from front

- S0Nnh: speech from front, noise from left (NH side)

- S0Nci: speech from front, noise from right (CI side)

- SciNnh: speech from right (CI side), noise from left (NH side)

- SNR: signal-to-noise ratio

- SRM: spatial release from masking

- SRT: speech reception threshold

- SSN: steady-state noise

- HRTF: head-related transfer function

Footnotes

Declaration of Interest: The authors report no conflicts of interest.

References

- Akeroyd MA. The psychoacoustics of binaural hearing. Int J Audiol. 2006;45:S25–S33. doi: 10.1080/14992020600782626. [DOI] [PubMed] [Google Scholar]

- Aronoff JM, Yoon YS, Freed DJ, Vermiglio AJ, Pal I, Soli SD. The use of interaural time and level difference cues by bilateral cochlear implant users. J Acoust Soc Am. 2010;127:EL87–E92. doi: 10.1121/1.3298451. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aronoff JM, Shayman C, Prasad A, Suneel D, Stelmach J. Unilateral spectral and temporal compression reduces binaural fusion for normal hearing listeners with cochlear implant simulations. Hear Res. 2015;320:24–29. doi: 10.1016/j.heares.2014.12.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arndt S, Aschendorff A, Laszig R, Beck R, Schild C, Kroeger S, Ihorst G, Wesarg T. Comparison of pseudobinaural hearing to real binaural hearing rehabilitation after cochlear implantation in patients with unilateral deafness and tinnitus. Otol Neurotol. 2010;32:39–47. doi: 10.1097/MAO.0b013e3181fcf271. [DOI] [PubMed] [Google Scholar]

- Baguley DM, Bird J, Humphriss RL, Prevost AT. The evidence base for the application of contralateral bone anchored hearing aids in acquired unilateral sensorineural hearing loss in adults. Clin Otolaryngol. 2006;31:6–14. doi: 10.1111/j.1749-4486.2006.01137.x. [DOI] [PubMed] [Google Scholar]

- Bernstein JG, Iyer N, Brungart DS. Release from informational masking in a monaural competing-speech task with vocoded copies of the maskers presented contralaterally. J Acoust Soc Am. 2015;137:702–713. doi: 10.1121/1.4906167. [DOI] [PubMed] [Google Scholar]

- Bishop CE, Eby TL. The current status of audiologic rehabilitation for profound unilateral sensorineural hearing loss. Laryngoscope. 2010;120:552–556. doi: 10.1002/lary.20735. [DOI] [PubMed] [Google Scholar]

- Bronkhorst AW. The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions. Acustica. 2000;86:117–128. [Google Scholar]

- Bronkhorst AW, Plomp R. Binaural speech-intelligibility in noise for hearing-impaired listeners. J Acoust Soc Am. 1989;86:1374–1383. doi: 10.1121/1.398697. [DOI] [PubMed] [Google Scholar]

- Brown CA, Bacon SP. Achieving electric-acoustic benefit with a modulated tone. Ear Hear. 2009;30:489–493. doi: 10.1097/AUD.0b013e3181ab2b87. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buechner A, Brendel M, Lesinski-Schiedat A, Wenzel G, Frohne-Buechner C, Jaeger B, Lenarz T. Cochlear implantation in unilateral deaf subjects associated with ipsilateral tinnitus. Otol Neurotol. 2010;31:1381–1385. doi: 10.1097/MAO.0b013e3181e3d353. [DOI] [PubMed] [Google Scholar]

- Chan JC, Freed DJ, Vermiglio AJ, Soli SD. Evaluation of binaural functions in bilateral cochlear implant users. Int J Audiol. 2008;47:296–310. doi: 10.1080/14992020802075407.

- Ching TYC, Van Wanrooy E, Hill M, Dillon H. Binaural redundancy and inter-aural time difference cues for patients wearing a cochlear implant and a hearing aid in opposite ears. Int J Audiol. 2005;44:677–690. doi: 10.1080/14992020500190003.

- Cox RM, Bisset JD. Relationship between two measures of aided binaural advantage. J Speech Hear Disorders. 1984;49:399–408. doi: 10.1044/jshd.4904.399.

- Dillon H. Binaural and bilateral considerations in hearing aid fitting. In: Dooley GJ, et al., editors. Hearing Aids. Sydney: Boomerang Press; 2001. pp. 370–403.

- Dorman MF, Spahr AJ, Loizou PC, Dana CJ, Schmidt JS. Acoustic simulations of combined electric and acoustic hearing (EAS). Ear Hear. 2005;26:371–380. doi: 10.1097/00003446-200508000-00001.

- Dunn CC, Tyler RS, Oakley S, Gantz B, Noble W. Comparison of speech recognition and localization performance in bilateral and unilateral cochlear implant users matched on duration of deafness and age at implantation. Ear Hear. 2008;29:352–359. doi: 10.1097/AUD.0b013e318167b870.

- Eapen RJ, Buss E, Adunka MC, Pillsbury HC, 3rd, Buchman CA. Hearing-in-noise benefits after bilateral simultaneous cochlear implantation continue to improve 4 years after implantation. Otol Neurotol. 2009;30:153–159. doi: 10.1097/mao.0b013e3181925025.

- Firszt JB, Holden LK, Reeder RM, Waltzman SB, Arndt S. Auditory abilities after cochlear implantation in adults with unilateral deafness: a pilot study. Otol Neurotol. 2012;33:1339–1346. doi: 10.1097/MAO.0b013e318268d52d.

- Francart T, McDermott HJ. Psychophysics, fitting, and signal processing for combined hearing aid and cochlear implant stimulation. Ear Hear. 2013;34:685–700. doi: 10.1097/AUD.0b013e31829d14cb.

- Francart T, Brokx J, Wouters J. Sensitivity to interaural time differences with combined cochlear implant and acoustic stimulation. J Assoc Res Otolaryngol. 2009;10:131–141. doi: 10.1007/s10162-008-0145-8.

- Francart T, Lenssen A, Wouters J. Sensitivity of bimodal listeners to interaural time differences with modulated single- and multiple-channel stimuli. Audiol Neurootol. 2011;16:82–92. doi: 10.1159/000313329.

- Freyman RL, Helfer KS, McCall DD, Clifton RK. The role of perceived spatial separation in the unmasking of speech. J Acoust Soc Am. 1999;106:3578–3588. doi: 10.1121/1.428211.

- Fu QJ, Shannon RV. Effects of electrode location and spacing on phoneme recognition with the Nucleus-22 cochlear implant. Ear Hear. 1999a;20:321–331. doi: 10.1097/00003446-199908000-00005.

- Fu QJ, Shannon RV. Effects of electrode configuration and frequency allocation on vowel recognition with the Nucleus-22 cochlear implant. Ear Hear. 1999b;20:332–344. doi: 10.1097/00003446-199908000-00006.

- Fu QJ, Zeng FG. Identification of temporal envelope cues in Chinese tone recognition. Asia Pac J Speech Lang Hear. 2005;5:45–57.

- Giardina CK, Formeister EJ, Adunka OF. Cochlear implants in single-sided deafness. Curr Surg Rep. 2014.

- Greenwood DD. A cochlear frequency-position function for several species - 29 years later. J Acoust Soc Am. 1990;87:2592–2605. doi: 10.1121/1.399052.

- Jacob R, Stelzig Y, Nopp P, Schleich P. Audiological results with cochlear implants for single-sided deafness. HNO. 2011;59:453–460. doi: 10.1007/s00106-011-2321-0.

- Kamal SM, Robinson AD, Diaz RC. Cochlear implantation in single-sided deafness for enhancement of sound localization and speech perception. Curr Opin Otolaryngol Head Neck Surg. 2012;20:393–397. doi: 10.1097/MOO.0b013e328357a613.

- Laszig R, Aschendorff A, Stecker M, Müller-Deile J, Maune S, et al. Benefits of bilateral electrical stimulation with the nucleus cochlear implant in adults: 6-month postoperative results. Otol Neurotol. 2004;25:958–968. doi: 10.1097/00129492-200411000-00016.

- Lin MC. The acoustic characteristics and perceptual cues of tones in Standard Chinese. Chinese Yuwen. 1988;204:182–193.

- Mertens G, Kleine-Punte A, De Bodt M, Van de Heyning P. Binaural auditory outcomes in patients with postlingual profound unilateral hearing loss: 3 years after cochlear implantation. Audiol Neurootol. 2015;20(S1):67–72. doi: 10.1159/000380751.

- Muller J, Schon F, Helms J. Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40/40+ cochlear implant system. Ear Hear. 2002;23:198–206. doi: 10.1097/00003446-200206000-00004.

- Murphy J, Summerfield AQ, O'Donoghue GM, Moore DR. Spatial hearing of normally hearing and cochlear implanted children. Int J Pediatr Otorhinolaryngol. 2011;75:489–494. doi: 10.1016/j.ijporl.2011.01.002.

- Plomp R, Mimpen AM. Improving the reliability of testing the speech reception threshold for sentences. Audiol. 1979;18:43–52. doi: 10.3109/00206097909072618.

- Probst R. Cochlear implantation for unilateral deafness? HNO. 2008;56:886–888. doi: 10.1007/s00106-008-1796-9.

- Nopp P, Schleich P, D’Haese P. Sound localization in bilateral users of MED-EL COMBI 40/40+ cochlear implants. Ear Hear. 2004;25:205–214. doi: 10.1097/01.aud.0000130793.20444.50.

- Schleich P, Nopp P, D’Haese P. Head shadow, squelch, and summation effects in bilateral users of the Med-El Combi 40/40+ cochlear implant. Ear Hear. 2004;25:197–204. doi: 10.1097/01.aud.0000130792.43315.97.

- Sheffield BM, Zeng FG. The relative phonetic contributions of a cochlear implant and residual acoustic hearing to bimodal speech perception. J Acoust Soc Am. 2012;131:518–530. doi: 10.1121/1.3662074.

- Tavora-Vieira D, Marino R, Krishnaswamy J, Kuthbutheen J, Rajan GP. Cochlear implantation for unilateral deafness with and without tinnitus: a case series. Laryngoscope. 2013;123:1251–1255. doi: 10.1002/lary.23764.

- Tokita J, Dunn C, Hansen M. Cochlear implantation and single sided deafness. Curr Opin Otolaryngol Head Neck Surg. 2014;22:353–358. doi: 10.1097/MOO.0000000000000080.

- Vermeire K, Van de Heyning P. Binaural hearing after cochlear implantation in subjects with unilateral sensorineural deafness and tinnitus. Audiol Neurootol. 2009;14:163–171. doi: 10.1159/000171478.

- Yoon YS, Li Y, Kang HY, Fu QJ. The relationship between binaural benefit and difference in unilateral speech recognition performance for bilateral cochlear implant users. Int J Audiol. 2011;50:554–565. doi: 10.3109/14992027.2011.580785.

- van Zon A, Peters JP, Stegeman I, Smit AL, Grolman W. Cochlear implantation for patients with single-sided deafness or asymmetrical hearing loss: A systematic review of the evidence. Otol Neurotol. 2015;36:209–219. doi: 10.1097/MAO.0000000000000681.