Abstract

Because musicians are trained to discern sounds within complex acoustic scenes, such as an orchestra playing, it has been hypothesized that musicianship improves general auditory scene analysis abilities. Here, we compared musicians and non-musicians in a behavioural paradigm using ambiguous stimuli, combining performance, reaction times and confidence measures. We used ‘Shepard tones’, for which listeners may report either an upward or a downward pitch shift for the same ambiguous tone pair. Musicians and non-musicians performed similarly on the pitch-shift direction task. In particular, both groups were at chance for the ambiguous case. However, groups differed in their reaction times and judgements of confidence. Musicians responded to the ambiguous case with long reaction times and low confidence, whereas non-musicians responded with fast reaction times and maximal confidence. In a subsequent experiment, non-musicians displayed reduced confidence for the ambiguous case when pure-tone components of the Shepard complex were made easier to discern. The results suggest an effect of musical training on scene analysis: we speculate that musicians were more likely to discern components within complex auditory scenes, perhaps because of enhanced attentional resolution, and thus discovered the ambiguity. For untrained listeners, stimulus ambiguity was not available to perceptual awareness.

This article is part of the themed issue ‘Auditory and visual scene analysis’.

Keywords: hearing, ambiguity, vagueness, musical training, Shepard tones

1. Introduction

Two observers of the same stimulus may sometimes report drastically different perceptual experiences [1,2]. When such interindividual differences are stable over time, they provide a powerful tool for uncovering the neural bases of perception [3]. Here, we investigated interindividual differences for auditory scene analysis, the fundamental ability to focus on target sounds amidst background sounds. We used an ambiguous stimulus [4], as inconclusive sensory evidence should enhance the contribution of idiosyncratic processes [5]. We further compared listeners with varying degrees of formal musical training, as musicianship has been argued to affect generic auditory abilities [6]. Finally, we combined standard performance measures with introspective judgements of confidence [7]. This method addressed a basic but unresolved question about ambiguous stimuli: are observers aware of the physical ambiguity, or not [8,9]?

Ambiguous stimuli have been used to uncover robust interindividual differences in perception. For vision, the perception of colour [1] or motion direction [10] can be modulated by strong and unexplained idiosyncratic biases that are surprisingly stable over time. For audition, reports of pitch-shift direction between ambiguous sounds have also revealed stable biases [2] that are correlated with the language experience of listeners [11], although this has been debated [12]. Stable interindividual differences also extend to so-called metacognitive abilities, such as the introspective judgement of the accuracy of percepts [7,13]. Such reliable interindividual differences can provide useful methodological tools to reveal underlying associations between different behavioural measures [14], or to relate perceptual measures to clinical symptoms [15]. Variations in behaviour can also be correlated with variations in physical characteristics, to probe the neurophysiological [16,17], neuroanatomical [3] or genetic [18] bases of perceptual processing.

For auditory perception, one long-recognized source of interindividual variability is musical training [19]. Musical training provides established benefits for music-related tasks, such as fine-grained pitch discrimination [6,20]. Whether these benefits generalize to basic auditory processes, however, is still under scrutiny. In particular, a number of studies have investigated whether musicianship improves auditory scene analysis. Musicians were initially shown to have improved intelligibility for speech in noise, which was correlated with enhanced neural encoding of pitch [21]. However, attempts to replicate and generalize these findings have been unsuccessful [22]. An advantage for musicians was subsequently found when non-auditory aspects of the task were emphasized, by using intelligible speech as a masker instead of noise [23,24], but null findings also exist with intelligible speech as the masker [25]. For auditory scene analysis tasks not involving speech, musicians were better at extracting a melody [26] or a repeated tone [27,28] from an interfering background. Musicians were also more likely to discern a mistuned partial within a complex tone [29] or within an inharmonic chord [30].

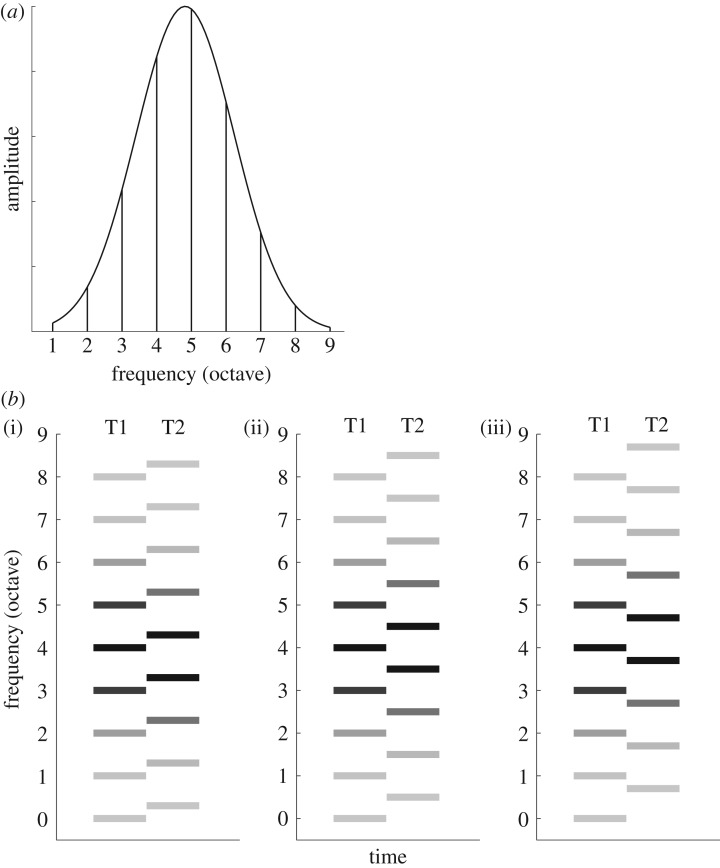

Here, we take the comparison between musicians and non-musicians to a different setting, using ambiguous stimuli. We used Shepard tones [4], which are chords of many simultaneous pure tones, all with an octave relationship to each other (figure 1a). When two Shepard tones are played in rapid succession, listeners report a subjective pitch shift, usually corresponding to the smallest log-frequency distance between successive component tones (figure 1b(i) or (iii)). When two successive Shepard tones are separated by a frequency distance of half an octave, however, an essential ambiguity occurs: there is no shortest log-frequency distance to favour either up or down pitch shifts (figure 1b(ii)). In this case, listeners tend to report either one or the other pitch-shift direction, with equal probability on average across trials and listeners [4]. Interindividual differences have been observed for the direction of the reported shift, with listeners displaying strong biases for one direction of pitch shift for some stimuli, but without any systematic effect of musicianship [2,11].

Figure 1.

Illustration of the ambiguous and non-ambiguous stimuli. (a) Shepard tones were generated by adding octave-related pure tones, weighted by a bell-shaped amplitude envelope. (b) Pairs of Shepard tones, T1 and T2, were used in the experiment. The panels show frequency intervals of 3 st (i), 6 st (ii) and 9 st (iii) between T1 and T2. When reporting the pitch shift between T1 and T2, participants tend to choose the shortest log-frequency distance: ‘up’ for 3 st and ‘down’ for 9 st. For 6 st, there is no shortest log-frequency distance. The stimulus is ambiguous and judgements tend to be equally split between ‘up’ and ‘down’.

We did not investigate interindividual differences in pitch-shift direction bias, but rather, differences in the introspective experience of Shepard tones: are listeners aware of the ambiguity, or not? The experimental evidence for ambiguity so far has been an equal split between ‘up’ and ‘down’ pitch shift reports, for the same stimulus. However, there are three possible reasons for such an outcome. First, listeners may hear neither an upward nor a downward shift, and respond at chance. Second, listeners may hear upward and downward shifts simultaneously, and randomly choose between the two. Third, listeners may clearly hear one direction of shift, and report it unhesitatingly, but this direction may change over trials. The question bears upon current debates on the nature of perception with under-determined information, which is the general case. The first two options, hearing neither or both pitch shifts, would be compatible with what has been termed vagueness: response categories are fuzzy and non-exclusive, and observers are aware of their uncertainty when selecting a response [31,32]. The third option would be more akin to what is assumed for bistable stimuli [9]. We will designate this third option as a ‘polar’ percept: observers are sure of what they perceive and unaware of the alternatives, but they differ in which percept they are attracted to. Based on informal observations, Shepard described ambiguous tone pairs as polar [4]. Deutsch further argued that the stable individual biases observed for such sounds pointed to polar percepts [2]. Interestingly, however, the idiosyncratic biases can be overcome by context effects [33] or cross-modal influence [34], suggesting that both percepts may be available to the listener. Here we assessed the listeners' introspective confidence in their judgement of Shepard tone pairs.

In three behavioural experiments, we investigated the perception of pitch shifts between Shepard tone pairs, comparing ambiguous and non-ambiguous cases. We collected pitch-shift direction choices, but also reaction times and judgements of confidence. We hypothesized that, if listeners were aware of the perceptual ambiguity in physically ambiguous stimuli, this would translate into longer reaction times [8,35] and lower confidence judgements. We further compared musicians and non-musicians, hypothesizing that musicians may be better able to discern individual components within complex Shepard tones [29,30] and thus be more likely to report the ambiguity. We finally assessed whether non-musicians could report the ambiguity when the component tones were made easier to discern, through acoustic manipulation [36]. Results suggested that the Shepard tones were polar for naive non-musician listeners, but that musicianship or acoustic manipulation could reveal the ambiguity.

2. Main experiment: the perception of ambiguous pitch shifts by musicians and non-musicians

(a). Methods

(i). Participants

Sixteen participants with self-reported normal hearing (age in years M = 26, s.d. = 5) were tested. Eight were musicians (four men and four women, age M = 27, s.d. = 7) and eight were non-musicians (four men and four women, age M = 26, s.d. = 2). Musicians had more than 5 years of musical practice in an academic institution. Among them, three were professionals (a clarinet player, a pianist, and a composer and pianist, all with self-reported absolute pitch). Among the non-musicians, four reported having no musical training whatsoever, and the other four had practised an instrument for less than 4 years in a non-academic institution. Such a binary distinction between musicians and non-musicians was arbitrary and based on the sample of participants who volunteered for the experiment; a finer-grained sampling of musical training is presented later with the online experiment. The two groups did not differ with respect to age (two-sample t-test, t14 = 0.39, p = 0.69). All participants were paid.
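As a quick check, the age comparison above can be approximately reproduced from the rounded group summaries. The sketch below assumes a Student (pooled-variance) two-sample t-test, which the reported degrees of freedom (14 = 8 + 8 − 2) suggest but the text does not state; because the inputs are rounded, the p-value will not match the reported value exactly.

```python
from scipy import stats

# Rounded age summaries reported above (years); n = 8 per group.
MUSICIANS = {"mean": 27.0, "std": 7.0, "n": 8}
NON_MUSICIANS = {"mean": 26.0, "std": 2.0, "n": 8}

# Student's two-sample t-test from summary statistics (pooled variance, df = 14).
t_stat, p_value = stats.ttest_ind_from_stats(
    MUSICIANS["mean"], MUSICIANS["std"], MUSICIANS["n"],
    NON_MUSICIANS["mean"], NON_MUSICIANS["std"], NON_MUSICIANS["n"],
    equal_var=True,
)
print(f"t14 = {t_stat:.2f}, p = {p_value:.2f}")
```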

(ii). Stimuli

Shepard tones were generated as in [33]. Briefly, nine pure tones, all with an octave relationship to a base frequency, Fb, were added together to cover the audible range. They were amplitude-weighted by a fixed spectral envelope (Gaussian in log-frequency and linear amplitude, with M = 960 Hz and s.d. = 1 octave; figure 1a). A trial consisted of two successive tones, T1 and T2, each lasting 125 ms, with no silence between tones. The Fb for T1 was randomly drawn for each trial, uniformly between 60 and 120 Hz, to counterbalance possible idiosyncratic biases in pitch-shift direction preference [2,33]. The Fb interval between T1 and T2 was randomly drawn from a uniform distribution between 0 semitones (st) and 11 st in steps of 1 st. The interval of 6 st corresponds to half an octave, the ambiguous case. Each interval was presented 40 times, in a randomly shuffled order. To minimize context effects [33], an intertrial sequence of five tones was played between trials, with a tone duration of 125 ms and 125 ms of silence between tones. The intertrial tones were similar to Shepard tones but with a half-octave relationship between tones. The Fb for intertrial tones was randomly drawn, uniformly between 60 and 120 Hz. The experiment lasted for about 90 min, in a single session split over four blocks.
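The stimulus construction can be sketched in Python with NumPy. This is an illustrative reconstruction, not the code used in [33]: zero starting phases, the absence of onset/offset ramps and level calibration, and the omission of components at or above the Nyquist frequency (the ninth component exceeds 22.05 kHz whenever Fb is above about 86 Hz) are all assumptions. The helper names `shepard_tone`, `make_trial` and `trial_list` are hypothetical.

```python
import numpy as np

FS = 44_100          # sampling rate (Hz)
TONE_DUR = 0.125     # tone duration (s)
N_COMPONENTS = 9     # octave-spaced pure tones per Shepard tone
ENV_CENTER = 960.0   # centre of the Gaussian spectral envelope (Hz)
ENV_SD_OCT = 1.0     # s.d. of the envelope (octaves)


def shepard_tone(fb, dur=TONE_DUR, fs=FS):
    """Sum of pure tones at fb * 2**k (k = 0..8), with linear amplitudes
    following a Gaussian in log2-frequency."""
    t = np.arange(int(round(dur * fs))) / fs
    freqs = fb * 2.0 ** np.arange(N_COMPONENTS)
    freqs = freqs[freqs < fs / 2]  # assumption: drop components at/above Nyquist
    weights = np.exp(-0.5 * ((np.log2(freqs) - np.log2(ENV_CENTER)) / ENV_SD_OCT) ** 2)
    # Assumption: every component starts in sine phase; no ramps, no level calibration.
    return (weights[:, None] * np.sin(2 * np.pi * freqs[:, None] * t)).sum(axis=0)


def make_trial(interval_st, rng, fs=FS):
    """T1 followed immediately by T2 (no silent gap); Fb of T1 roved over 60-120 Hz."""
    fb1 = rng.uniform(60.0, 120.0)
    fb2 = fb1 * 2.0 ** (interval_st / 12.0)
    return np.concatenate([shepard_tone(fb1, fs=fs), shepard_tone(fb2, fs=fs)])


def trial_list(rng, n_reps=40):
    """Intervals 0-11 st, each repeated n_reps times, in shuffled order."""
    intervals = np.repeat(np.arange(12), n_reps)
    rng.shuffle(intervals)
    return intervals


rng = np.random.default_rng(0)
order = trial_list(rng)              # 480 trials
pair = make_trial(order[0], rng)     # waveform of the first T1-T2 pair
```

Because Fb is drawn afresh for every trial, the absolute pitch of T1 carries no information about the correct response.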

(iii). Apparatus and procedure

Participants were tested individually in a double-walled sound-insulated booth (Industrial Acoustics). Stimuli were played diotically through an RME Fireface 800 soundcard, at 16-bit resolution and a 44.1 kHz sampling rate. They were presented through Sennheiser HD 250 Linear II headphones. The presentation level was 65 dB SPL, A-weighted. Participants provided an ‘up’ or ‘down’ response through a custom-made response box, which recorded reaction times with sub-millisecond accuracy. The left/right assignment of responses was counterbalanced across participants. Trial presentation was self-paced: participants initiated a trial by pressing and holding down both response buttons. This started a random silent interval of 50–850 ms, followed by the stimulus pair T1–T2. Participants were instructed to release, as quickly as possible, the response button corresponding to the pitch-shift direction they wished to report. They then used a computer keyboard to rate their confidence in the pitch-shift direction report, on a scale from 1 (very unsure) to 7 (very sure). The intertrial sequence was then played and the next trial was ready to be initiated.

(iv). Screening procedure

We asked participants to report pitch-shift direction, a task for which variability in performance is well documented [37]. A screening test was therefore applied, as in [33], to select participants who could report pitch shifts reliably. Briefly, prospective participants were asked to report ‘up’ or ‘down’ shifts for pure tone or Shepard tone pairs, and a minimum accuracy of 80% for a 1-st interval was required. Broadly consistent with previous reports [33,37], 10 participants out of 26 failed the screening test and were not invited to proceed to the main experiment. All of those who failed the screening test were non-musicians. The relatively large proportion of failures may have been caused by our use of frequency roving in a pitch-shift identification task [38]. Also, we did not provide any training before the brief screening procedure, which could have affected performance on the pitch-direction task for naive participants [39].

(v). Data analysis for perceptual performance

For each participant and interval, we computed the proportion of ‘up’ responses, P(up), for the pitch-shift direction choices. Psychometric curves were fitted to the data for individual participants over the 1–11 st interval range, using cumulative Gaussians and estimating their parameters using the psignifit software [40]. The fitting procedure returned the point of subjective equality corresponding to P(up) = 0.5; a noise parameter corresponding to the standard deviation of the cumulative Gaussian, σ, inversely related to the slope of the psychometric function; and the upper and lower asymptotes. Response times (RTs) were defined relative to the onset of T2, the first opportunity to provide a meaningful response. RTs faster than 100 ms were discarded as anticipations. Because of the long-tailed distribution typical of RTs, the natural logarithms of RTs were used for all analyses.
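A minimal version of the fitting step is sketched below. It swaps psignifit's Bayesian fitting [40] for a plain least-squares fit with `scipy.optimize.curve_fit`, and the parametrization (a decreasing cumulative Gaussian between free upper and lower asymptotes) is an assumption chosen to mirror the parameters listed above. Because the asymptotes need not be symmetric, the point of subjective equality at P(up) = 0.5 is obtained by inverting the fitted function rather than by reading off its location parameter.

```python
import numpy as np
from scipy.optimize import curve_fit
from scipy.stats import norm


def p_up(x, mu, sigma, upper, lower):
    """Decreasing cumulative Gaussian: P(up) falls from `upper` (small
    intervals) to `lower` (large intervals); sigma is inversely related to the slope."""
    return lower + (upper - lower) * norm.sf((x - mu) / sigma)


def fit_psychometric(intervals, p_up_obs):
    """Least-squares fit over the 1-11 st range; returns parameters and the PSE."""
    p0 = [6.0, 1.5, 0.95, 0.05]
    bounds = ([1.0, 0.05, 0.5, 0.0], [11.0, 20.0, 1.0, 0.5])
    (mu, sigma, upper, lower), _ = curve_fit(
        p_up, intervals, p_up_obs, p0=p0, bounds=bounds)
    # Solve p_up(x) = 0.5 for x (requires lower < 0.5 < upper).
    pse = mu + sigma * norm.isf((0.5 - lower) / (upper - lower))
    return {"mu": mu, "sigma": sigma, "upper": upper, "lower": lower, "pse": pse}


def log_rts(rt_ms):
    """Drop anticipations (< 100 ms after T2 onset) and take natural logs."""
    rt_ms = np.asarray(rt_ms, dtype=float)
    return np.log(rt_ms[rt_ms >= 100.0])
```

A binomial likelihood would weight each interval by its trial count; least squares is used here only to keep the sketch short.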

(vi). Data analysis for metacognitive performance

We used an extension of the signal detection theory framework to quantify the use of the confidence scale by each participant [41]. In this framework, a stimulus elicits a value of an internal variable, and this value is used to predict both perceptual decisions and metacognitive judgements of confidence. For perceptual decisions, as is standard with signal detection theory, the internal variable is compared to a fixed criterion value. For confidence judgements, it is the distance between the internal variable and the criterion that is used: values closer to the criterion should correspond to lower confidence. Assuming no loss of information, the metacognitive performance of each participant can then be mathematically derived from the perceptual performance. As shown in [41], this performance can be expressed as ‘meta-d′’, which measures the perceptual information (in d′ units) that is translated into the empirical metacognitive judgements. Under those assumptions, meta-d′ equals d′ for participants with ideal metacognition. A complete formulation of the method is available in [41,42] and comparisons with other techniques are reviewed in [43]. For each participant, we computed d′, meta-d′, and the ratio meta-d′/d′, which quantifies the efficacy of metacognitive judgements [43]. Intervals from 1 to 5 st, which had an expected correct response of ‘up’, were treated as signal trials. Intervals from 7 to 11 st, which had an expected correct response of ‘down’, were treated as noise trials. Intervals of 0 and 6 st, for which there was no expected correct or incorrect response, were discarded from this analysis. For the confidence judgements, we summarized confidence levels using a median-split for each participant and added one-fourth trial to responses for each condition (stimulus × response × confidence) to ensure that there were no empty cells in the analysis.
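The bookkeeping that precedes the meta-d′ fit can be sketched as follows: trials are coded as signal (1–5 st) or noise (7–11 st), confidence is median-split, and a quarter of a trial is added to each of the eight stimulus × response × confidence cells before type-1 d′ is computed. Two details are assumptions because the text does not specify them: the median is taken over the analysed trials only, and ratings equal to the median count as low confidence. The meta-d′ estimation itself is a maximum-likelihood fit described in [41,42] and is not reproduced here; the function names are hypothetical.

```python
import numpy as np
from scipy.stats import norm


def confidence_counts(intervals, responses, confidence):
    """2 x 2 x 2 array indexed [stimulus, response, confidence], with 0.25 added to every cell.

    stimulus: 1 = signal (1-5 st, 'up' expected), 0 = noise (7-11 st, 'down' expected)
    response: 1 = 'up', 0 = 'down'; confidence: 1 = above the median, 0 = otherwise
    """
    intervals = np.asarray(intervals)
    responses = np.asarray(responses)
    confidence = np.asarray(confidence, dtype=float)
    keep = (intervals >= 1) & (intervals <= 11) & (intervals != 6)  # drops 0 and 6 st
    stim = (intervals[keep] <= 5).astype(int)
    resp = responses[keep].astype(int)
    conf = confidence[keep]
    high = (conf > np.median(conf)).astype(int)
    counts = np.full((2, 2, 2), 0.25)
    np.add.at(counts, (stim, resp, high), 1.0)
    return counts


def type1_dprime(counts):
    """d' and criterion c from the padded counts (signal = 'up' expected)."""
    hit_rate = counts[1, 1].sum() / counts[1].sum()   # P('up' | signal)
    fa_rate = counts[0, 1].sum() / counts[0].sum()    # P('up' | noise)
    d_prime = norm.ppf(hit_rate) - norm.ppf(fa_rate)
    criterion = -0.5 * (norm.ppf(hit_rate) + norm.ppf(fa_rate))
    return d_prime, criterion
```

Given a meta-d′ estimate from such a fit, the meta-ratio is simply meta-d′ divided by the `d_prime` value returned here.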

(b). Results

(i). Pitch-shift direction responses

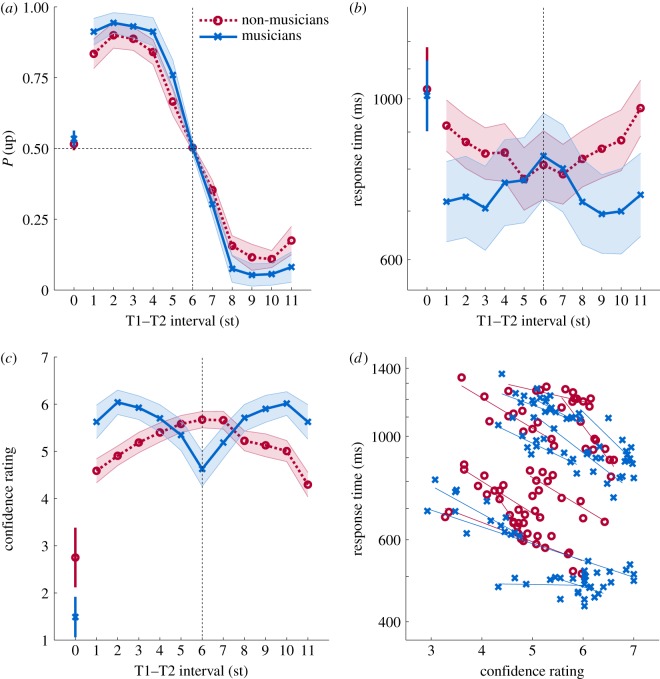

As expected, both musicians and non-musicians reported mostly ‘up’ for small intervals and ‘down’ for large intervals (figure 2a), corresponding to the shortest log-frequency distances between successive components. Performance was around the chance level of P(up) = 0.5 for both the 0-st interval, corresponding to no physical difference between T1 and T2, and the 6-st interval, corresponding to the ambiguous case with no shortest log-frequency distance. Overall accuracy, as measured by σ, was statistically indistinguishable between musicians and non-musicians (two-sample t-test, t14 = 0.45, p = 0.65). The trend for higher performance for musicians at the extremes of the interval range in the raw data (figure 2a) was not confirmed in the fitted psychometric functions (upper asymptote: t14 = 1.46, p = 0.16; lower asymptote: t14 = 1.28, p = 0.22). The point of subjective equality corresponding to P(up) = 0.5 did not differ from 6 st in either group (musicians: M = 6.0, t7 = −0.26, p = 0.80; non-musicians: M = 6.0, t7 = 0.37, p = 0.72), nor did it differ across groups (t14 = 0.45, p = 0.66).

Figure 2.

Results of the main experiment. (a) The proportion of ‘up’ responses, P(up), is displayed for each interval and averaged within groups (non-musicians, circles; musicians, crosses). For all panels, shaded areas indicate ±1 s.e. about the mean. The interval of 6 st corresponds to the ambiguous case. (b) Response times for each interval, averaged within groups. The natural logarithms of RTs were used to compute means and standard errors; y-labels have been converted to milliseconds for display purposes. (c) Confidence ratings on a scale from 1 (very unsure) to 7 (very sure). (d) Correlation between confidence and log-RT. Each point represents an interval condition for an individual participant. Solid lines are fitted linear regressions over all intervals for each participant. (Online version in colour.)

(ii). Response times

Perhaps predictably, the longest RTs for judging the pitch-shift direction were observed for 0 st (figure 2b), corresponding to identical sounds for T1 and T2. For the other intervals, the pattern of responses differed across groups. Musicians were slower for the ambiguous 6-st interval than for the less ambiguous intervals, whereas non-musicians showed a trend to be faster for the ambiguous interval. We tested the statistical reliability of this observation by averaging the log-RTs for non-ambiguous intervals (all intervals in the 1–11 st range except 6 st) and comparing this value with the log-RT at 6 st. The analysis confirmed that musicians were slower for the ambiguous interval compared with non-ambiguous intervals (paired t-test, t7 = −3.72, p = 0.007). For non-musicians, the difference was not significant (t7 = 0.93, p = 0.38). Finally, to test the interaction between response pattern and group, we computed the difference between the averaged non-ambiguous cases and the ambiguous case, what we term the ‘ambiguity effect’. The ambiguity effect was then contrasted across groups. The contrast was significant (t14 = 3.07, p = 0.008), confirming that musicians and non-musicians displayed different ambiguity effects in terms of log RT.
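The ‘ambiguity effect’ analysis can be written compactly. The sketch assumes that each group's data are arranged as an array of per-participant means with one column per interval (0–11 st); the same functions apply unchanged to mean confidence ratings. The paired test compares each participant's non-ambiguous average with their 6-st value, and the group contrast is a two-sample t-test on the per-participant ambiguity effects; the pooled-variance form is an assumption consistent with the reported df of 14. The data in the usage lines are synthetic and purely illustrative.

```python
import numpy as np
from scipy import stats

AMBIGUOUS = 6
NON_AMBIGUOUS = [i for i in range(1, 12) if i != AMBIGUOUS]  # 1-11 st except 6


def ambiguity_effect(group):
    """group: (n_participants, 12) array of per-interval means (column index = interval in st).

    Returns the per-participant ambiguity effect (non-ambiguous mean minus 6-st value)
    and the paired t-test of non-ambiguous vs ambiguous values (df = n - 1).
    """
    group = np.asarray(group, dtype=float)
    non_amb = group[:, NON_AMBIGUOUS].mean(axis=1)
    amb = group[:, AMBIGUOUS]
    return non_amb - amb, stats.ttest_rel(non_amb, amb)


def group_contrast(effects_a, effects_b):
    """Two-sample (pooled-variance) t-test on ambiguity effects, df = n_a + n_b - 2."""
    return stats.ttest_ind(effects_a, effects_b, equal_var=True)


# Synthetic log-RTs: 'musicians' slowed by 0.3 log units at 6 st, 'non-musicians' not.
rng = np.random.default_rng(1)
musicians = np.log(600.0) + rng.normal(0.0, 0.02, (8, 12))
musicians[:, AMBIGUOUS] += 0.3
non_musicians = np.log(600.0) + rng.normal(0.0, 0.02, (8, 12))
eff_m, paired_m = ambiguity_effect(musicians)
eff_n, _ = ambiguity_effect(non_musicians)
contrast = group_contrast(eff_m, eff_n)
```

With this sign convention, slower responses at 6 st give a negative effect, matching the negative paired t value reported for musicians.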

(iii). Confidence ratings

Confidence was lowest for 0 st, and lower for musicians than non-musicians at this interval (figure 2c). For the other intervals, the patterns of responses differed across groups. Musicians, but not non-musicians, displayed a dip in confidence for the ambiguous case. We used the same analysis method as above to quantify this observation. For musicians, confidence was higher for the non-ambiguous intervals than for the ambiguous interval (t7 = 3.38, p = 0.012). For non-musicians, the pattern was reversed, with higher confidence for the ambiguous interval (t7 = −3.34, p = 0.012). Note that this ambiguous interval also contained the largest log-frequency distance between successive components of T1 and T2. The interaction between group and ambiguity, tested with the ambiguity effect, was significant (t14 = −4.43, p = 0.001). Musicians and non-musicians displayed different ambiguity effects in terms of confidence.

(iv). Metacognitive performance

We investigated whether the difference in confidence judgements between non-musicians and musicians could be explained by a different use of the confidence scale. We estimated a meta-d′ for each participant, which measures the perceptual information (in d′ units) needed to explain the empirical metacognitive data, and expressed the results as the meta-ratio of meta-d′/d′ (see §2a and [41]). We observed relatively low values of meta-ratio overall, and no difference between groups (musicians: M = 0.4, s.d. = 0.14; non-musicians: M = 0.3, s.d. = 0.39; t14 = −0.68, p = 0.5). Moreover, there was no correlation between meta-ratio and ambiguity effect (Pearson correlation coefficient r14 = 0.24, p = 0.36). The metacognitive analysis thus suggests that not all of the perceptual evidence available for pitch-shift direction judgements was used for confidence judgements, but that, importantly, the efficacy of each individual participant in using the confidence scale was not related to the ambiguity effect.

(v). Correlation of response times and confidence

We hypothesized that RTs and confidence judgements would be negatively correlated. To test this hypothesis, we calculated the correlation between the log-RTs and confidence values for each participant separately, over the 1–11 st range. We found negative correlations for almost all participants (figure 2d). The relationship between the two variables was strong (Pearson correlation coefficient r averaged across participants, M = −0.7, s.d. = 0.23).
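The per-participant correlation can be sketched as below, assuming (as in figure 2d) that each participant contributes one mean log-RT and one mean confidence value per interval over 1–11 st. The function name and array layout are illustrative.

```python
import numpy as np


def rt_confidence_correlation(log_rt, confidence):
    """log_rt, confidence: (n_participants, n_intervals) arrays of per-interval means.

    Computes Pearson r separately for each participant, then summarizes across
    participants (mean and sample s.d.)."""
    log_rt = np.asarray(log_rt, dtype=float)
    confidence = np.asarray(confidence, dtype=float)
    rs = np.array([np.corrcoef(x, y)[0, 1] for x, y in zip(log_rt, confidence)])
    return rs.mean(), rs.std(ddof=1), rs
```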

3. Online experiment: replication on a larger cohort

(a). Rationale

In the main experiment, we observed different ambiguity effects for non-musicians and musicians. However, the comparison rested on a relatively small sample size (eight in each group). Moreover, the binary definition of musicianship, which was required to analyse a small sample, could not reflect the spread of musical abilities between participants. We performed an online experiment to try to replicate our main findings on a larger cohort. Participants first completed a questionnaire about their musical background. Then, they performed the pitch-shift direction task, followed by the confidence task, for a reduced set of intervals containing non-ambiguous and ambiguous cases. Response times were not collected, both for technical reasons and because they had been strongly correlated with confidence in the main experiment. With 134 online participants providing usable data, we could then relate the effect of ambiguity to years of musical training.

(b). Methods

(i). Participants

Participants were recruited through an email call sent to a self-registration mailing list, provided by the ‘Relais d'information sur les Sciences Cognitives’, Paris, France. Participation was anonymous and participants were not paid. In total, 359 participants started the experiment after reading the instructions (age M = 30, s.d. = 11). Among them, 173 participants completed the Shepard tones experiment, which took about 30 min. We only considered the data of those 173 participants (age M = 30, s.d. = 10).

(ii). Questionnaire

We collected information about years of formal musical training, current practice and type of instrument played. For simplicity, and to be consistent with the main experiment, we only used the duration of formal musical training as a shorthand for musicianship. In the self-selected sample of the online experiment, the mean duration of musical training was M = 8.7 years (s.d. = 8.5). For group contrasts, we used the same criterion as for the main experiment: musicians were participants with 5 years or more of formal musical training. This resulted in a sample comprising 90 musicians and 83 non-musicians.

(iii). Stimuli and procedure

Test pairs of T1–T2 Shepard tones were generated prior to the experiment, as in the main experiment. To keep the experiment at a reasonable length, we only presented intervals of 0, 2, 4, 6, 8 and 10 st. Each interval was presented 12 times and the presentation order was shuffled across participants. Participants were instructed to listen over headphones or loudspeakers, preferably in a quiet environment, but no attempt was made to control the sound presentation conditions. After each trial, they provided a pitch-shift direction response by means of the up or down arrow keys on the keyboard. They were then asked for their confidence ratings on a scale ranging from 1 to 7 (1 = very unsure, 7 = very sure) using number keys on the keyboard. Between trials, an intertrial sequence of three tones was played. Randomly interspersed with experimental trials were four catch trials, containing two successive harmonic complex tones (first six harmonics with flat spectral envelope) at an interval of 12 st. These catch trials were intended to contain large and unambiguous pitch shifts, which attentive participants should have no difficulty in reporting correctly. The online experiment contained a final part, in which we attempted to measure performance for the detection of a mistuned harmonic within a harmonic complex [29,44]. However, because a large proportion of participants failed to complete this last phase, results were too noisy to be meaningfully analysed and will not be reported here.
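A possible construction of one participant's online trial sequence is sketched below. The interleaving rule for the four catch trials, the direction of their 12-st shift, their duration and their F0 are not specified in the text; the random choices and the 125-ms duration used here are assumptions, and the function names are hypothetical.

```python
import numpy as np

FS = 44_100


def harmonic_complex(f0, dur=0.125, fs=FS):
    """Catch-trial tone: first six harmonics of f0, equal amplitudes (flat envelope)."""
    t = np.arange(int(round(dur * fs))) / fs
    harmonics = f0 * np.arange(1, 7)
    return np.sin(2 * np.pi * harmonics[:, None] * t).sum(axis=0)


def online_trial_list(rng, intervals=(0, 2, 4, 6, 8, 10), n_reps=12, n_catch=4):
    """Shuffled test trials with catch trials inserted at random positions.

    Entries are ("test", interval_st) or ("catch", +12 / -12)."""
    tests = [("test", i) for i in intervals for _ in range(n_reps)]
    trials = [tests[k] for k in rng.permutation(len(tests))]
    for _ in range(n_catch):
        shift = int(rng.choice([12, -12]))       # assumption: random catch direction
        pos = int(rng.integers(0, len(trials) + 1))
        trials.insert(pos, ("catch", shift))
    return trials


rng = np.random.default_rng(2)
trials = online_trial_list(rng)   # 72 test trials + 4 catch trials
```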

(c). Results

(i). Data-based screening

Data were expected to be noisy, especially as we did not control the equipment used for playback nor the attentiveness of participants. Therefore, we chose to perform a data-based screening of participants, using their performance on the pitch-shift direction task (and not the confidence judgements). Psychometric functions were fitted to the data for each participant (see §2a). There was a large spread in the parameter representing accuracy, σ (M = 6.2, s.d. = 43). After visual inspection of individual functions, we excluded participants with σ > 5 (larger values indicate shallower slopes of the psychometric function and thus poorer performance). This left 134 participants for subsequent analyses. The new sample comprised 86 musicians and 48 non-musicians. The unequal balance may reflect higher performance or higher motivation of musicians in the online experiment. Performance on catch trials after the data-based screening was high overall, but marginally differed between groups (per cent correct; musicians M = 98, s.d. = 0.06; non-musicians M = 96, s.d. = 0.09; t132 = −2.05, p = 0.04).

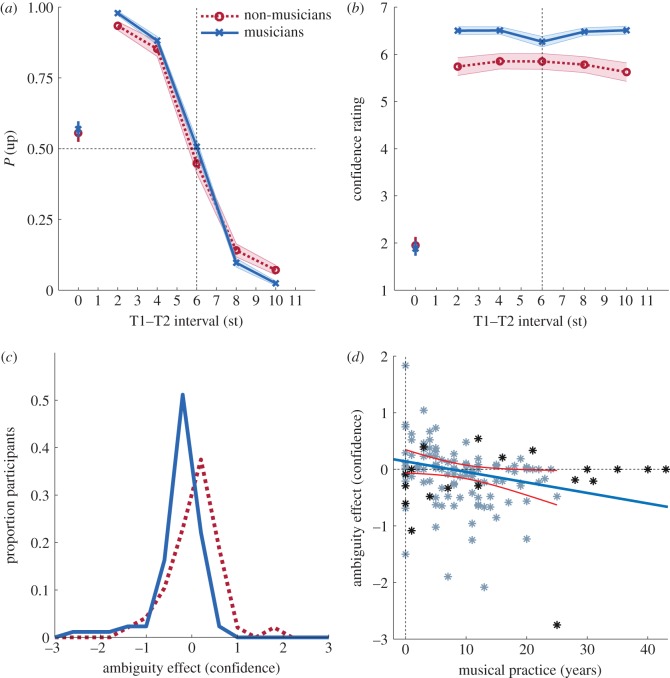

(ii). Pitch-shift direction responses

Participants responded in the expected way for unambiguous cases and responses were close to P(up) = 0.5 for the ambiguous case (figure 3a). No difference was observed across groups when comparing accuracy, as measured by the slopes of the psychometric functions (σ, t132 = 0.83, p = 0.4). Groups differed for extreme interval values, corresponding to small log-frequency distances between T1 and T2 (upper asymptote: t132 = 2.72, p = 0.007; lower asymptote: t132 = 3.60, p < 0.001), consistent with better fine frequency discrimination for musicians [20]. The point of subjective equality was equivalent for the two groups (t132 = −0.69, p = 0.5) and did not differ from 6 st in either group (musicians: M = 5.9, s.d. = 0.81; t85 = −0.84, p = 0.4; non-musicians: M = 5.8, s.d. = 1.0; t47 = −1.24, p = 0.22).

Figure 3.

Results of the online experiment. (a) The proportion of ‘up’ responses, P(up), is displayed for each interval tested in the online experiment. Format as in figure 2a. (b) Confidence ratings. Format as in figure 2c. (c) Histograms of the ambiguity effect, in confidence units, for non-musicians (dotted line) and musicians (solid line). The ambiguity effect was defined as the average confidence for all intervals excluding 0 and 6 st, minus confidence for 6 st, the ambiguous case. Negative values correspond to participants being less confident for the ambiguous case. Bin width is 0.4 confidence units. (d) Correlation between the ambiguity effect and years of formal musical training. Blue stars indicate participants aged 35 years or less. Solid lines indicate the linear regression (straight line) and 95% CI (curved lines) fitted to the data for these younger participants. (Online version in colour.)

(iii). Confidence ratings

As in the main experiment, musicians showed a dip in confidence for the ambiguous case, whereas non-musicians showed a broad peak in confidence for the same stimulus (figure 3b). However, the contrast between intervals was less pronounced than in the main experiment. Moreover, musicians gave higher confidence values than non-musicians overall. The same statistical analysis as for the main experiment was applied: confidence for the non-ambiguous intervals of 2, 4, 8 and 10 st was averaged and compared with confidence for the ambiguous interval of 6 st. Musicians were less confident for the ambiguous interval (t85 = −4.22, p < 0.001), whereas non-musicians showed equivalent confidence for the two types of intervals (t47 = 1.21, p = 0.23). The interaction between group and ambiguity was significant, as estimated by the ambiguity effect (t132 = 3.45, p = 0.001). To illustrate the difference between groups, histograms of the ambiguity effect for individual participants are displayed in figure 3c. Thus, even though the differences across groups were less pronounced on average than in the main experiment, presumably because of the noisy nature of online data, the main findings were replicated.

(iv). Metacognitive performance

The meta-ratio analysis revealed a difference between musicians and non-musicians (musicians: M = 0.69, s.d. = 0.43; non-musicians: M = 0.36, s.d. = 0.62; t132 = −3.58, p < 0.001). However, the meta-ratio was not correlated with the ambiguity effect (r132 = −0.053, p = 0.55). Also, we observed a sizeable proportion of participants with negative meta-ratio values, denoting higher confidence for incorrect perceptual judgements, or with meta-ratio values greater than unity, suggesting that confidence was not entirely based on perceptual information within a signal detection theory framework [41]. To test whether such unexpected response patterns affected the results, we performed an additional metacognitive analysis, retaining only those participants with a meta-ratio between zero and unity. In this subgroup of 52 musicians and 30 non-musicians, there was no difference in meta-ratio (musicians: M = 0.57, s.d. = 0.26; non-musicians: M = 0.50, s.d. = 0.31; t80 = −1.13, p = 0.26). We also confirmed that the ambiguity effect was maintained for this subgroup (t80 = 2.67, p = 0.009). As in the main experiment, there was therefore no link between the use of the confidence scale and the ambiguity effect.

(v). Correlation with musical expertise

The ambiguity effect became more pronounced (more negative) with years of musical training (figure 3d). The rank-order correlation between the two variables was negative and significant (ρ132 = −0.25, p = 0.004). Inevitably, the measure ‘years of musical training’ was partly confounded by ‘age’. Also, one outlier participant with 25 years of musical training displayed an especially strong ambiguity effect, which could in part have driven the correlation. We performed an additional correlation restricting the analysis to participants younger than 35 years, retaining 73 musicians and 41 non-musicians (and thus excluding the outlier, who was over 35 years). Age did not differ across the two subgroups (t112 = −0.97, p = 0.3). Even when matching age and removing the outlier, the correlation between years of musical practice and the ambiguity effect was maintained (ρ112 = −0.37, p < 0.001).
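The correlation analysis, including the age-restricted version, can be sketched with `scipy.stats.spearmanr`. The function and argument names are illustrative, and the age cutoff follows the text's ‘younger than 35 years’ (a strict inequality).

```python
import numpy as np
from scipy import stats


def training_correlation(years_training, ambiguity_effect, age, max_age=None):
    """Spearman rank correlation between years of training and the ambiguity effect.

    If max_age is given, only participants strictly younger than max_age are kept.
    Returns (rho, p, n); the df reported in the text correspond to n - 2."""
    years = np.asarray(years_training, dtype=float)
    effect = np.asarray(ambiguity_effect, dtype=float)
    age = np.asarray(age, dtype=float)
    keep = np.ones(age.shape, dtype=bool) if max_age is None else age < max_age
    rho, p = stats.spearmanr(years[keep], effect[keep])
    return rho, p, int(keep.sum())
```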

4. Control experiment: discerning component tones

(a). Rationale

The main experiment and its online replication suggest that musicians were aware of the ambiguity at 6 st, whereas non-musicians were not. In line with our initial hypothesis, this would be consistent with a perceptual difference between the groups: musicians were able to discern the component tones of the stimuli, which enabled them to discover the ambiguity. Alternatively, the difference may have been in the report itself: non-musicians may also have heard out the component tones, but failed to discover the ambiguity. We assessed this possibility by using an acoustic manipulation that makes component tones easier to discern for non-musicians. We compared conditions in which all component tones started in synchrony, as in the previous two experiments, with conditions in which the onset of each tone was jittered in time. Successive components of complex chords that would normally be fused can be explicitly compared when onset jitter is applied [36]. We hypothesized that, if onset jitter enabled non-musicians to discern component tones, they would behave like the musicians of the main experiment.

(b). Method

(i). Participants

Sixteen naive participants were tested (age M = 24, s.d. = 0.8, 6 men and 10 women). All had less than 5 years of formal musical training and were thus labelled non-musicians by our criterion. They were paid for their participation.

(ii). Stimuli

Three values of temporal jitter were used. For the 0-ms jitter condition, T1–T2 test pairs were generated as in the main experiment. For the 50-ms jitter condition, a time jitter was introduced on the onset of individual component tones: nine different onset-time values, linearly spaced between 0 and 50 ms, were assigned at random on each trial to the components of T1 and T2 independently. For the 100-ms jitter condition, the onset-time values were linearly spaced between 0 and 100 ms. We tested intervals of 0, 2, 4, 6, 8 and 10 st between T1 and T2, as in the online experiment. An intertrial sequence of five tones, of the same type as those in the previous experiments and thus with no jitter, was presented after each trial.
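The jitter manipulation can be illustrated with the minimal sketch below, which draws nine linearly spaced onset times, shuffles them across the components of each tone, and synthesizes T1 and T2 with independent onset assignments. Several parameters are assumptions and not taken from this article: nine components per tone is inferred from the nine onset values; the lowest component frequency, sample rate, component duration and Gaussian spectral-envelope width are hypothetical; and each component simply starts at its jittered onset and lasts a fixed duration, without the onset/offset ramps a real stimulus would need. The function names are likewise hypothetical.

```python
import math
import random

N_COMPONENTS = 9      # inferred from the nine onset-time values in the text
F_LOW_HZ = 27.5       # hypothetical frequency of the lowest component
FS = 44100            # hypothetical sample rate (Hz)
TONE_MS = 125         # hypothetical duration of each component (ms)
ENV_WIDTH_OCT = 1.5   # hypothetical s.d. of the Gaussian spectral envelope (octaves)

def jittered_onsets(max_jitter_ms, rng):
    """Onsets linearly spaced over [0, max_jitter_ms], randomly assigned to components."""
    step = max_jitter_ms / (N_COMPONENTS - 1)
    onsets = [k * step for k in range(N_COMPONENTS)]
    rng.shuffle(onsets)  # onsets[k] becomes the onset of component k
    return onsets

def shepard_tone(pitch_class_st, onsets_ms):
    """Octave-spaced sinusoids under a fixed Gaussian envelope on log frequency."""
    n_tone = int(FS * TONE_MS / 1000)
    starts = [round(FS * onset / 1000) for onset in onsets_ms]
    signal = [0.0] * (max(starts) + n_tone)
    centre = (N_COMPONENTS - 1) / 2  # envelope peak, in octaves above F_LOW_HZ
    for k, start in enumerate(starts):
        octave = k + (pitch_class_st % 12) / 12  # pitch class wraps every 12 st
        freq = F_LOW_HZ * 2 ** octave
        amp = math.exp(-0.5 * ((octave - centre) / ENV_WIDTH_OCT) ** 2)
        for i in range(n_tone):  # no onset/offset ramps in this sketch
            signal[start + i] += amp * math.sin(2 * math.pi * freq * i / FS)
    return signal

def jittered_pair(t1_pitch_class_st, interval_st, max_jitter_ms, rng):
    """T1 and T2 receive independent random onset assignments."""
    t1 = shepard_tone(t1_pitch_class_st, jittered_onsets(max_jitter_ms, rng))
    t2 = shepard_tone(t1_pitch_class_st + interval_st, jittered_onsets(max_jitter_ms, rng))
    return t1, t2
```

With a maximum jitter of 0 ms, every onset is zero and the sketch reduces to a synchronous Shepard tone, which corresponds to the baseline and 0-ms jitter stimuli.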

(iii). Apparatus and procedure

Participants were tested individually in a sound-insulated booth, as in the main experiment. They performed six blocks, with a short rest after each block. The total duration of the experiment, including rests, was approximately 3 h, in a single session. During the first two blocks, only stimuli with 0-ms jitter were presented, with 40 repeats per interval. This was intended as a replication of the main experiment for this group of naive participants. We refer to these initial two blocks as the ‘baseline’ condition. For the following four blocks, all intervals and jitter values (0, 50 and 100 ms) were interleaved at random, with 40 repeats per interval and jitter value. We refer to these conditions by their jitter values. Note that the baseline and 0-ms jitter conditions used the same stimuli, with no temporal jitter. However, they differed in context: baseline trials were presented in blocks containing only sounds without jitter, to replicate the main experiment, whereas 0-ms jitter trials were interleaved with trials from the 50- and 100-ms jitter conditions. In addition, the baseline blocks were performed first. Participants responded using a computer keyboard. Response times were not recorded. All other details of the apparatus were as in the main experiment.
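The block structure can be sketched as a randomized trial list. This is an illustration under an explicit assumption: the 40 repeats are taken to be counted across both baseline blocks, and across all four jitter blocks, rather than per block, which gives 240 baseline trials and 720 interleaved trials. The constants and function names are hypothetical.

```python
import random

INTERVALS_ST = (0, 2, 4, 6, 8, 10)
JITTERS_MS = (0, 50, 100)
REPEATS = 40  # assumed to be counted across all blocks of a phase

def split_into_blocks(trials, n_blocks):
    """Cut a shuffled trial list into n_blocks contiguous blocks of equal size."""
    size = len(trials) // n_blocks
    return [trials[b * size:(b + 1) * size] for b in range(n_blocks)]

def build_session(rng):
    """Return (baseline_blocks, jitter_blocks); each trial is (interval_st, jitter_ms)."""
    baseline = [(iv, 0) for iv in INTERVALS_ST for _ in range(REPEATS)]
    rng.shuffle(baseline)  # baseline blocks contain unjittered stimuli only
    mixed = [(iv, j) for iv in INTERVALS_ST for j in JITTERS_MS for _ in range(REPEATS)]
    rng.shuffle(mixed)  # all intervals and jitter values interleaved at random
    return split_into_blocks(baseline, 2), split_into_blocks(mixed, 4)
```

Under this assumption, trials labelled (interval, 0) occur in both phases with identical stimuli; only the surrounding trials differ, which is the contextual difference between the baseline and 0-ms jitter conditions.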

(c). Results

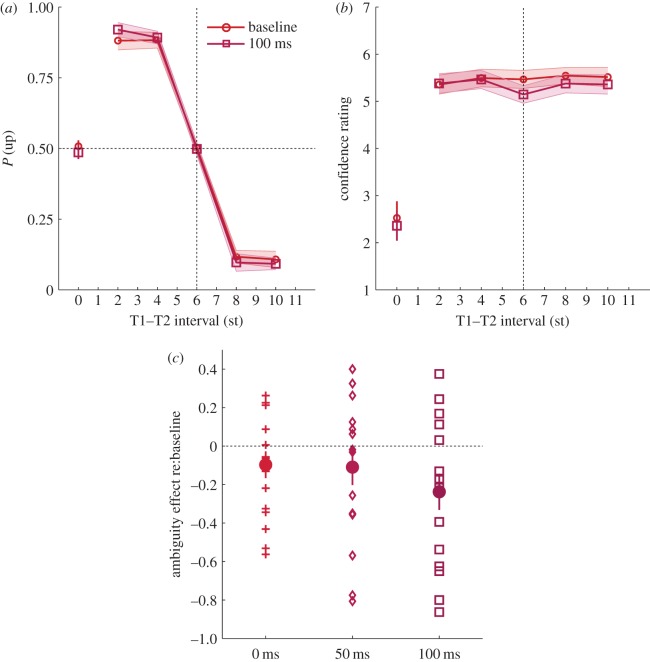

(i). Pitch direction response

Psychometric curves were fitted to P(up) for each participant. For clarity, and because results were visually similar across jitter values, we only display the results for the baseline and the maximal jitter value of 100 ms (figure 4a). Participants' accuracy, as measured by σ of the fitted psychometric functions, did not differ between the baseline and 100-ms jitter conditions (t15 = −1.01, p = 0.33). There was also no difference in the point of subjective equality (t15 = 0.40, p = 0.7), which did not differ from 6 st in either condition (baseline: M = 6.0, s.d. = 0.28; t15 = −0.46, p = 0.65; 100 ms: M = 6.0, s.d. = 0.52; t15 = 0.30, p = 0.76). In summary, temporal jitter had no measurable effect on the pitch-shift direction task.
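As an illustration of how σ and the point of subjective equality (PSE) can be obtained, the sketch below fits a cumulative Gaussian that falls from P(up) = 1 to P(up) = 0 across the PSE, by grid-search maximum likelihood on binomial counts. The functional form, the absence of a lapse-rate parameter, the grid ranges and the exclusion of the 0-st interval (identical tones, for which responses are at chance and do not lie on a monotonic function) are all assumptions of this sketch; the fitting procedure actually used is described with the main experiment (see also [40]). Function names and the data layout are hypothetical.

```python
import math
from statistics import NormalDist

def p_up(interval_st, pse, sigma):
    """Assumed form: P(up) = 1 - Phi((x - PSE) / sigma), decreasing across the PSE."""
    return 1.0 - NormalDist(pse, sigma).cdf(interval_st)

def neg_log_likelihood(pse, sigma, data):
    """Binomial negative log-likelihood; data maps interval -> (n_up, n_trials)."""
    nll = 0.0
    for interval, (n_up, n_trials) in data.items():
        p = min(max(p_up(interval, pse, sigma), 1e-9), 1 - 1e-9)  # avoid log(0)
        nll -= n_up * math.log(p) + (n_trials - n_up) * math.log(1 - p)
    return nll

def fit_psychometric(data):
    """Grid-search maximum-likelihood estimates of (PSE, sigma), in semitones."""
    pse_grid = [4.0 + 0.05 * i for i in range(81)]     # 4 to 8 st
    sigma_grid = [0.2 + 0.05 * i for i in range(97)]   # 0.2 to 5 st
    return min(((m, s) for m in pse_grid for s in sigma_grid),
               key=lambda ms: neg_log_likelihood(ms[0], ms[1], data))
```

For example, counts of ‘up’ responses that are symmetric about 6 st, such as {2: (40, 40), 4: (36, 40), 6: (20, 40), 8: (4, 40), 10: (0, 40)}, yield a PSE of 6 st, the value expected for Shepard tones when the ambiguous case is answered at chance.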

Figure 4.

Results of the control experiment. (a) The proportion of ‘up’ responses, P(up), is displayed for each interval tested in the control experiment. Format as in figure 2a. For clarity, only results for the baseline condition (no jitter) and the 100-ms condition (maximum jitter) are displayed. (b) Confidence ratings. Format as in figure 2b. (c) For each participant, the ambiguity effect observed in each jitter condition was normalized by subtracting the ambiguity effect observed for the baseline. The panel displays individual means and standard errors of the mean. Negative values signal a dip in confidence for the ambiguous case, relative to baseline, when jitter was applied. (Online version in colour.)

(ii). Confidence ratings

Baseline results replicated those of the main and online experiments for non-musicians, but a dip in confidence appeared for these non-musicians at a jitter of 100 ms (figure 4b). As before, we compared confidence for the non-ambiguous cases (2, 4, and 10 st) with confidence for the ambiguous case (6 st). For the baseline condition, we found no ambiguity effect (t15 = −0.10, p = 0.92). To quantify the effect of jitter on a participant-by-participant basis, we normalized the ambiguity effect observed for each jitter condition by subtracting the ambiguity effect observed for the baseline condition. Figure 4c displays the individual data for these normalized ambiguity effects. A negative ambiguity effect (a decrease in confidence for the ambiguous case) would correspond to the behaviour of musicians in the main experiment. Not all participants exhibited such a negative ambiguity effect, but for some of them the effect was as large as for musicians. The ambiguity effect differed from its baseline value only for the 100-ms condition (0 ms: t15 = −1.40, p = 0.18; 50 ms: t15 = −1.18, p = 0.26; 100 ms: t15 = −2.52, p = 0.024). However, pairwise comparisons between the three jitter conditions revealed no significant difference (all p > 0.17).
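The normalization described above can be written out as follows: for each participant and condition, the ambiguity effect is the mean confidence at 6 st minus the mean confidence over the non-ambiguous intervals listed in the text (2, 4 and 10 st), and the baseline effect is then subtracted; a one-sample t-test against zero (df = n − 1, hence t15 for 16 participants) asks whether the normalized effect departs from baseline. This is a sketch, not the authors' code: the nested-dictionary data layout, the condition labels and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

AMBIGUOUS_ST = 6
NON_AMBIGUOUS_ST = (2, 4, 10)  # intervals as listed in the text

def ambiguity_effect(confidence):
    """Confidence at 6 st minus mean confidence over the non-ambiguous intervals.

    `confidence` maps interval (st) -> mean confidence rating in one condition.
    Negative values indicate a confidence dip for the ambiguous case.
    """
    return confidence[AMBIGUOUS_ST] - mean(confidence[iv] for iv in NON_AMBIGUOUS_ST)

def normalized_effects(participants, condition):
    """Per participant: ambiguity effect in `condition` minus that in 'baseline'."""
    return [ambiguity_effect(p[condition]) - ambiguity_effect(p["baseline"])
            for p in participants]

def one_sample_t(values, mu0=0.0):
    """One-sample t statistic against mu0, with n - 1 degrees of freedom."""
    n = len(values)
    return (mean(values) - mu0) / (stdev(values) / sqrt(n)), n - 1
```

Subtracting each participant's own baseline removes stable individual differences in how the confidence scale is used, so that the test targets only the change induced by jitter.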

(iii). Metacognitive performance

There was only one group of participants in this experiment, but we still tested whether the ambiguity effect might have been related to a change in the use of the confidence scale across conditions. This was not the case: changes in the ambiguity effect between the baseline and 100-ms jitter conditions were not correlated with changes in meta-ratio (r14 = 0.24, p = 0.38).

(iv). Interim discussion

Non-musicians exhibited a significant confidence dip for the ambiguous interval when a large temporal jitter was introduced, whereas no dip was observed for the baseline condition. However, there was some variability across participants. Furthermore, results were statistically less clear-cut when the interleaved jitter conditions were compared with each other (note also that we did not correct for multiple comparisons). The lack of difference across jitter conditions is likely due to a trend towards an ambiguity effect for 0 ms (figure 4c), even though this condition used the same stimuli as the baseline, presented in a different context. A possible interpretation of this contextual effect is that non-musicians were able to partially discern the component tones even without jitter, but only when their attention had been drawn to the structure of the stimulus by other trials containing jitter. Finally, we did not test whether the jitter values were sufficient for all participants to discern component tones, which may also account for part of the variability across participants.

5. Discussion

Results from the three experiments can be summarized as follows. In the main experiment, all participants gave ‘up’ and ‘down’ responses in equal measure when judging the pitch direction of an ambiguous stimulus. The same chance-level pattern was, predictably, observed when they compared two physically identical stimuli. However, confidence differed between the two cases: participants were more confident in their judgements for the ambiguous tones than for the identical tones. Non-musicians were even more confident for ambiguous tones, for which they were at chance, than for non-ambiguous tones, for which they could perform the task accurately. As the ambiguous tones also contained the largest interval between tones, results for non-musicians are consistent with confidence mirroring the size of the perceived pitch shift, irrespective of stimulus ambiguity. Musicians gave the same pattern of ‘up’ and ‘down’ judgements as non-musicians but, importantly, their confidence ratings differed markedly: for musicians, confidence was lower for the ambiguous tones than for the non-ambiguous tones. In both groups, response times mirrored the confidence ratings, with higher confidence corresponding to faster responses. The online experiment replicated these findings in a larger cohort (n = 134) and further showed that the ambiguity effect was correlated with years of musical training. The control experiment showed that at least some naive non-musicians, initially unaware of the ambiguity, could exhibit an ambiguity effect when component tones were made easier to discern. This supports the view that the difference between musicians and non-musicians was perceptual and not purely decisional.

What was the nature of the perceptual difference between musicians and non-musicians? Based on prior evidence [29,30] combined with the control experiment, we hypothesize that musicians were better able to discern acoustic components within Shepard tones. Discerning the components would have revealed that two opposite pitch-shift directions were available, leading to low confidence in the forced-choice task. The advantage previously demonstrated for musicians when discerning tones within a chord [30] or a complex tone background [28] did not seem to be based on enhanced peripheral frequency selectivity (although see [45]). In our case, the octave spacing of Shepard tones was also greater than the frequency separation required to discern partials [46], so we can rule out a difference in the accuracy of peripheral representations.

Another possible difference between listeners, perhaps due to more central processes, is the distinction first introduced by Helmholtz between ‘analytic’ and ‘holistic’ listeners [47]. Stimuli whose pitch shifts in one direction if one focuses on individual components, and in the opposite direction if one focuses on the (missing) fundamental frequency, have been used to characterize this difference. Analytic listeners focus on individual components, whereas holistic listeners focus on the missing fundamental. In one study, the analytic/holistic pattern of response was found to be correlated with brain anatomy, but not with musicianship [48]. A later behavioural investigation suggested, in contrast, that musicians were overall more holistic [49]. Recent data indicate that listeners may change their listening style according to the task or stimulus parameters [50]. In our case, musicians would be classified as analytic if they spontaneously heard out component tones within ambiguous stimuli. At least some non-musicians were able to switch from holistic to analytic listening when the component tones were easier to discern, which is consistent with the view that the holistic/analytic distinction is task dependent [50].

We would argue that a more general characteristic is relevant to the interindividual differences we observed: the ability to focus on sub-parts of a perceptual scene. In the visual modality, experts such as drawing artists [51] or video-game enthusiasts [52] have been shown to be more resistant to visual crowding (the inability to distinguish items presented simultaneously in the visual periphery even though each item would be easily recognized on its own). This has been interpreted as enhanced ‘attentional resolution’ for experts. The notion of attentional resolution can be transposed to auditory perception through what has been termed ‘informational masking’ [53,54]. Informational masking is defined as the impairment in detecting a target caused by irrelevant background sounds, in the absence of any overlap between targets and maskers at a peripheral level of representation [53]. Auditory expertise has been shown to affect informational masking: musicians are less susceptible to informational masking than non-musicians for complex maskers such as random tone clouds with a large degree of uncertainty [28], environmental sounds [20] or simultaneous speech [23]. This has been interpreted as better attentional resolution for musicians [28]. Importantly, informational masking can also occur in simpler situations, through a failure to perceptually segregate target and background [53]. The Shepard tones we used certainly challenged perceptual segregation, because component tones had synchronous onsets and were all spaced by octaves [55]. Scene analysis processes related to informational masking may thus have caused non-musicians to hear Shepard tones as perceptual units, while reduced informational masking may have helped musicians to hear out component tones. Moreover, in the control experiment we introduced asynchronous onsets between components, a manipulation that has been shown to reduce informational masking [56]. This led at least some non-musicians to behave qualitatively like musicians, again consistent with an involvement of informational masking in our task.

We now return to the distinction, drawn in §1, between vague and polar percepts. The results suggest that, for non-musicians, perception was polar: the intrinsic ambiguity of the stimuli was unavailable to awareness. This is consistent with recent observations using bistable visual stimuli [8]. In this visual study, the degree of ambiguity of bistable stimuli (defined as the proximity to an equal probability of reporting either percept) was varied, and reaction times were collected for the report of the first percept. No increase in reaction time was observed for more ambiguous stimuli, consistent with an unawareness of ambiguity, as in our results. Why would ambiguity, a potentially useful cue, not be registered by observers? One hypothesis is that ambiguity is not a valid property of perceptual organization at any given moment: either an acoustic feature belongs to one source, or it does not. This has been formulated as a principle of exclusive allocation for auditory scene analysis [55,57]. However, there are exceptions to this principle, for instance when a mistuned harmonic within a harmonic complex is heard out from the complex but still contributes to the overall pitch of the complex [44,46,58]. Another hypothesis, perhaps more specific to our experimental set-up, involves perceptual binding over time. Shepard tones are made up of several frequency components, which have to be paired over time to define a pitch shift. If non-musicians heard Shepard tones as perceptual units, this may have biased the binding process towards a single, unambiguous direction of shift. By contrast, if musicians were able to discern components, they may have experienced contradictory directions of pitch shift, and thus ambiguity.
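Why pairing components over time yields an ambiguity at exactly 6 st can be shown with a simple proximity rule, assumed here for illustration only: each component of T1 is paired with its nearest neighbour in T2. Because components are spaced by octaves, a component of T1 always has a T2 neighbour x st above and another 12 − x st below, for an interval of x st. The sketch below, with hypothetical function names, returns the direction predicted by this rule; at 6 st both pairings span the same distance, so proximity alone cannot select a direction.

```python
def pitch_class_distances(interval_st):
    """Upward and downward distance (st) from a T1 component to its T2 neighbours."""
    up = interval_st % 12
    down = (12 - up) % 12
    return up, down

def nearest_neighbour_direction(interval_st):
    """Direction predicted by pairing each component with its nearest neighbour."""
    up, down = pitch_class_distances(interval_st)
    if up == 0:
        return "none"       # identical pitch classes: no shift to bind
    if up < down:
        return "up"
    if down < up:
        return "down"
    return "ambiguous"      # 6 st: upward and downward pairings are equally close
```

Under this rule, a listener who binds all components consistently in one direction reports a single, confident shift even at 6 st, whereas a listener who registers both candidate pairings has access to the conflict.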

To conclude, we must point out that these interpretations remain speculative, especially as we have no direct evidence that musicians heard out component tones. Further tests could include counterparts to our control experiment, increasing informational masking to prevent musicians from discerning component tones. This could be achieved, for instance, by presenting shorter duration sounds. Context effects could also be used to bias perceptual binding in a consistent manner across components [33], again predicting higher confidence for musicians. Irrespective of the underlying mechanisms, the mere existence of polar percepts demonstrates that a seemingly obvious feature of sensory information, ambiguity, is sometimes unavailable to perceptual awareness. The precise conditions required for polar perception remain to be explored experimentally. Ambiguity in the stimulus often causes multistable perception [9], but it is still unknown whether all multistable stimuli elicit polar perception, or whether all polar percepts are associated with spontaneous perceptual alternations over time. By analogy to categorical perception, it could also be tested whether polar perception is a feature of conscious processing and absent from subliminal processing [59]. Finally, we showed that ambiguity was not experienced in the same way by different observers, so polar perception may be another useful trait to consider when investigating interindividual differences.

6. Conclusion

Ambiguous auditory stimuli [2,4,33] were judged with high confidence and fast reaction times by naive non-musician listeners, showing that those listeners were unaware of the physical ambiguity of the sounds. This confirms an untested assumption in previous reports about those stimuli [2,4,33,60]. By contrast, musicians judged ambiguous stimuli with less confidence and slower reaction times, suggesting that they did perceive the ambiguity. We interpreted this interindividual difference as reflecting enhanced attentional resolution in crowded perceptual scenes for musicians [28–30]. From a methodological perspective, we showed robust effects in confidence judgements for matched performance within participants (identical sounds versus ambiguous sounds), and robust interindividual differences for matched performance and matched stimuli (musicians versus non-musicians). Ambiguous stimuli may thus provide a useful tool for probing the neural bases of interindividual differences in perception.

Supplementary Material

Acknowledgements

We thank Maxime Sirbu for implementing the online experiment. We also thank Brian C. J. Moore and two anonymous reviewers for useful comments and suggestions on a previous version of this manuscript.

Ethics

Ethical approval was provided by the CERES IRB #20142500001072 (Université Paris Descartes, France).

Data accessibility

Data archives are provided as the electronic supplementary material. Each file provides data for one figure panel, identified by the file name. Data are provided for musicians (m in the file name) and non-musicians (nm in the file name).

Authors' contributions

D.P. and P.E. initiated the research. C.P., D.P. and V.d.G. designed the experiments. C.P. collected the data. C.P. and V.d.G. analysed the data. All authors interpreted the data and drafted the manuscript.

Competing interests

We have no competing interests.

Funding

C.P. and D.P. were supported by grants ERC ADAM no. 295603 and EU H2020 COCOHA no. 644732. C.P., P.E. and D.P. were supported by grants ANR-10-LABX-0087 IEC and ANR-10-IDEX-0001-02 PSL. P.E. was partly supported by a fellowship from the Swedish Collegium for Advanced Study.

References

- 1. Brainard DH, Hurlbert AC. 2015. Colour vision: understanding #thedress. Curr. Biol. 25, R551–R554. (doi:10.1016/j.cub.2015.05.020)

- 2. Deutsch D. 1986. A musical paradox. Music Percept. 3, 275–280. (doi:10.2307/40285337)

- 3. Kanai R, Rees G. 2011. The structural basis of inter-individual differences in human behaviour and cognition. Nat. Rev. Neurosci. 12, 231–242. (doi:10.1038/nrn3000)

- 4. Shepard RN. 1964. Circularity in judgments of relative pitch. J. Acoust. Soc. Am. 36, 2346. (doi:10.1121/1.1919362)

- 5. Kleinschmidt A, Sterzer P, Rees G. 2012. Variability of perceptual multistability: from brain state to individual trait. Phil. Trans. R. Soc. B 367, 988–1000. (doi:10.1098/rstb.2011.0367)

- 6. Moreno S, Bidelman GM. 2014. Examining neural plasticity and cognitive benefit through the unique lens of musical training. Hear. Res. 308, 84–97. (doi:10.1016/j.heares.2013.09.012)

- 7. de Gardelle V, Mamassian P. 2015. Weighting mean and variability during confidence judgments. PLoS ONE 10, e0120870. (doi:10.1371/journal.pone.0120870)

- 8. Takei S. 2010. Perceptual ambiguity of bistable visual stimuli causes no or little increase in perceptual latency. J. Vis. 10, 1–15. (doi:10.1167/10.4.23)

- 9. Schwartz JL, Grimault N, Hupé JM, Moore BCJ, Pressnitzer D. 2012. Multistability in perception: binding sensory modalities, an overview. Phil. Trans. R. Soc. B 367, 896–905. (doi:10.1098/rstb.2011.0254)

- 10. Wexler M, Duyck M, Mamassian P. 2015. Persistent states in vision break universality and time invariance. Proc. Natl Acad. Sci. USA 112, 14 990–14 995. (doi:10.1073/pnas.1508847112)

- 11. Deutsch D. 1991. The tritone paradox: an influence of language on music perception. Music Percept. 8, 335–347. (doi:10.2307/40285517)

- 12. Repp BH. 1994. The tritone paradox and the pitch range of the speaking voice: a dubious connection. Music Percept. 12, 227–255. (doi:10.2307/40285653)

- 13. Fleming SM, Weil RS, Nagy Z, Dolan RJ, Rees G. 2010. Relating introspective accuracy to individual differences in brain structure. Science 329, 1541–1543. (doi:10.1126/science.1191883)

- 14. Vogel EK, Awh E. 2008. How to exploit diversity for scientific gain: using individual differences to constrain cognitive theory. Curr. Dir. Psychol. Sci. 17, 171–176. (doi:10.1111/j.1467-8721.2008.00569.x)

- 15. Lin I-F, Shirama A, Kato N, Kashino M. 2017. The singular nature of auditory and visual scene analysis in autism. Phil. Trans. R. Soc. B 372, 20160115. (doi:10.1098/rstb.2016.0115)

- 16. Kondo HM, van Loon AM, Kawahara J-I, Moore BCJ. 2017. Auditory and visual scene analysis: an overview. Phil. Trans. R. Soc. B 372, 20160099. (doi:10.1098/rstb.2016.0099)

- 17. Takeuchi T, Yoshimoto S, Shimada Y, Kochiyama T, Kondo HM. 2017. Individual differences in visual motion perception and neurotransmitter concentrations in the human brain. Phil. Trans. R. Soc. B 372, 20160111. (doi:10.1098/rstb.2016.0111)

- 18. Kashino M, Kondo HM. 2012. Functional brain networks underlying perceptual switching: auditory streaming and verbal transformations. Phil. Trans. R. Soc. B 367, 977–987. (doi:10.1098/rstb.2011.0370)

- 19. Zatorre R. 2005. Music, the food of neuroscience? Nature 434, 312–315. (doi:10.1038/434312a)

- 20. Carey D, Rosen S, Krishnan S, Pearce MT, Shepherd A, Aydelott J, Dick F. 2015. Generality and specificity in the effects of musical expertise on perception and cognition. Cognition 137, 81–105. (doi:10.1016/j.cognition.2014.12.005)

- 21. Parbery-Clark A, Skoe E, Lam C, Kraus N. 2009. Musician enhancement for speech-in-noise. Ear Hear. 30, 653–661. (doi:10.1097/AUD.0b013e3181b412e9)

- 22. Ruggles DR, Freyman RL, Oxenham AJ. 2014. Influence of musical training on understanding voiced and whispered speech in noise. PLoS ONE 9, e86980. (doi:10.1371/journal.pone.0086980)

- 23. Swaminathan J, Mason CR, Streeter TM, Best V, Kidd G Jr, Patel AD. 2015. Musical training, individual differences and the cocktail party problem. Sci. Rep. 5, 11628. (doi:10.1038/srep11628)

- 24. Başkent D, Gaudrain E. 2016. Musician advantage for speech-on-speech perception. J. Acoust. Soc. Am. 139, EL51–EL56. (doi:10.1121/1.4942628)

- 25. Boebinger D, Evans S, Rosen S, Lima CF, Manly T, Scott SK. 2015. Musicians and non-musicians are equally adept at perceiving masked speech. J. Acoust. Soc. Am. 137, 378–387. (doi:10.1121/1.4904537)

- 26. Bey C, McAdams S. 2002. Schema-based processing in auditory scene analysis. Percept. Psychophys. 64, 844–854. (doi:10.3758/BF03194750)

- 27. Beauvois MW, Meddis R. 1997. Time decay of auditory stream biasing. Percept. Psychophys. 59, 81–86. (doi:10.3758/BF03206850)

- 28. Oxenham AJ, Fligor BJ, Mason CR, Kidd G. 2003. Informational masking and musical training. J. Acoust. Soc. Am. 114, 1543–1547. (doi:10.1121/1.1598197)

- 29. Zendel BR, Alain C. 2009. Concurrent sound segregation is enhanced in musicians. J. Cogn. Neurosci. 21, 1488–1498. (doi:10.1162/jocn.2009.21140)

- 30. Fine PA, Moore BCJ. 1993. Frequency analysis and musical ability. Music Percept. 11, 39–53. (doi:10.2307/40285598)

- 31. Raffman D. 2014. Unruly words: a study of vague language. Oxford, UK: Oxford University Press.

- 32. Egré P, de Gardelle V, Ripley D. 2013. Vagueness and order effects in color categorization. J. Logic Lang. Inform. 22, 391–420. (doi:10.1007/s10849-013-9183-7)

- 33. Chambers C, Pressnitzer D. 2014. Perceptual hysteresis in the judgment of auditory pitch shift. Atten. Percept. Psychophys. 76, 1271–1279. (doi:10.3758/s13414-014-0676-5)

- 34. Repp BH, Knoblich G. 2007. Action can affect auditory perception. Psychol. Sci. 18, 6–7. (doi:10.1111/j.1467-9280.2007.01839.x)

- 35. Kalisvaart JP, Klaver I, Goossens J. 2011. Motion discrimination under uncertainty and ambiguity. J. Vis. 11, 20. (doi:10.1167/11.1.20)

- 36. Demany L, Semal C, Pressnitzer D. 2011. Implicit versus explicit frequency comparisons: two mechanisms of auditory change detection. J. Exp. Psychol. Hum. Percept. Perform. 37, 597–605. (doi:10.1037/a0020368)

- 37. Semal C, Demany L. 2006. Individual differences in the sensitivity to pitch direction. J. Acoust. Soc. Am. 120, 3907–3909. (doi:10.1121/1.2357708)

- 38. Mathias SR, Micheyl C, Bailey PJ. 2010. Stimulus uncertainty and insensitivity to pitch-change direction. J. Acoust. Soc. Am. 127, 3026. (doi:10.1121/1.3365252)

- 39. Foxton JM, Brown ACB, Chambers S, Griffiths TD. 2004. Training improves acoustic pattern perception. Curr. Biol. 14, 322–325. (doi:10.1016/j.cub.2004.02.001)

- 40. Wichmann FA, Hill NJ. 2001. The psychometric function: I. Fitting, sampling, and goodness of fit. Percept. Psychophys. 63, 1293–1313. (doi:10.3758/BF03194544)

- 41. Maniscalco B, Lau H. 2012. A signal detection theoretic approach for estimating metacognitive sensitivity from confidence ratings. Conscious. Cogn. 21, 422–430. (doi:10.1016/j.concog.2011.09.021)

- 42. Maniscalco B, Lau H. 2014. Signal detection theory analysis of type 1 and type 2 data: meta-d′, response-specific meta-d′, and the unequal variance SDT model. In The cognitive neuroscience of metacognition, pp. 25–66. Berlin, Germany: Springer.

- 43. Fleming SM, Lau HC. 2014. How to measure metacognition. Front. Hum. Neurosci. 8, 443. (doi:10.3389/fnhum.2014.00443)

- 44. Moore BCJ, Peters RW, Glasberg BR. 1985. Thresholds for the detection of inharmonicity in complex tones. J. Acoust. Soc. Am. 77, 1861. (doi:10.1121/1.391937)

- 45. Bidelman GM, Schug JM, Jennings SG, Bhagat SP. 2014. Psychophysical auditory filter estimates reveal sharper cochlear tuning in musicians. J. Acoust. Soc. Am. 136, EL33–EL39. (doi:10.1121/1.4885484)

- 46. Moore BCJ, Glasberg BR, Peters RW. 1986. Thresholds for hearing mistuned partials as separate tones in harmonic complexes. J. Acoust. Soc. Am. 80, 479–483. (doi:10.1121/1.394043)

- 47. Schneider P, Wengenroth M. 2009. The neural basis of individual holistic and spectral sound perception. Contemp. Music Rev. 28, 315–328. (doi:10.1080/07494460903404402)

- 48. Schneider P, et al. 2005. Structural and functional asymmetry of lateral Heschl's gyrus reflects pitch perception preference. Nat. Neurosci. 8, 1241–1247. (doi:10.1038/nn1530)

- 49. Seither-Preisler A, Johnson L, Krumbholz K, Nobbe A, Patterson R, Seither S, Lütkenhöner B. 2007. Tone sequences with conflicting fundamental pitch and timbre changes are heard differently by musicians and nonmusicians. J. Exp. Psychol. Hum. Percept. Perform. 33, 743–751. (doi:10.1037/0096-1523.33.3.743)

- 50. Ladd DR, Turnbull R, Browne C, Caldwell-Harris C, Ganushchak L, Swoboda K, Woodfield V, Dediu D. 2013. Patterns of individual differences in the perception of missing-fundamental tones. J. Exp. Psychol. Hum. Percept. Perform. 39, 1386–1397. (doi:10.1037/a0031261)

- 51. Perdreau F, Cavanagh P. 2014. Drawing skill is related to the efficiency of encoding object structure. i-Perception 5, 101–119. (doi:10.1068/i0635)

- 52. Green CS, Bavelier D. 2007. Action-video-game experience alters the spatial resolution of vision. Psychol. Sci. 18, 88–94. (doi:10.1111/j.1467-9280.2007.01853.x)

- 53. Kidd G, Mason CR, Richards VM, Gallun FJ, Durlach NI. 2007. Informational masking. Boston, MA: Springer.

- 54. Durlach NI, Mason CR, Kidd G, Arbogast TL, Colburn HS, Shinn-Cunningham BG. 2003. Note on informational masking (L). J. Acoust. Soc. Am. 113, 2984. (doi:10.1121/1.1570435)

- 55. Bregman AS. 1990. Auditory scene analysis. The perceptual organization of sound. Cambridge, MA: MIT Press.

- 56. Neff DL. 1995. Signal properties that reduce masking by simultaneous, random-frequency maskers. J. Acoust. Soc. Am. 98, 1909–1920. (doi:10.1121/1.414458)

- 57. Shinn-Cunningham BG, Lee AKC, Oxenham AJ. 2007. A sound element gets lost in perceptual competition. Proc. Natl Acad. Sci. USA 104, 12 223–12 227. (doi:10.1073/pnas.0704641104)

- 58. Moore BCJ. 1985. Relative dominance of individual partials in determining the pitch of complex tones. J. Acoust. Soc. Am. 77, 1853. (doi:10.1121/1.391936)

- 59. de Gardelle V, Charles L, Kouider S. 2011. Perceptual awareness and categorical representation of faces: evidence from masked priming. Conscious. Cogn. 20, 1272–1281. (doi:10.1016/j.concog.2011.02.001)

- 60. Repp BH, Thompson JM. 2009. Context sensitivity and invariance in perception of octave-ambiguous tones. Psychol. Res. 74, 437–456. (doi:10.1007/s00426-009-0264-9)
