Abstract

We argue that over the last 50 years, incentives for academic scientists have become increasingly perverse in terms of competition for research funding, development of quantitative metrics to measure performance, and a changing business model for higher education itself. Furthermore, decreased discretionary funding at the federal and state level is creating a hypercompetitive environment between government agencies (e.g., EPA, NIH, CDC), for scientists in these agencies, and for academics seeking funding from all sources. The combination of perverse incentives and decreased funding increases pressures that can lead to unethical behavior. If a critical mass of scientists become untrustworthy, a tipping point is possible in which the scientific enterprise itself becomes inherently corrupt and public trust is lost, risking a new dark age with devastating consequences to humanity. Academia and federal agencies should better support science as a public good, and incentivize altruistic and ethical outcomes, while de-emphasizing output.

Keywords: academic research, funding, misconduct, perverse incentives, scientific integrity

Introduction

The incentives and reward structure of academia have undergone a dramatic change in the last half century. Competition has increased for tenure-track positions, and most U.S. PhD graduates are selecting careers in industry, government, or elsewhere partly because the current supply of PhDs far exceeds available academic positions (Cyranoski et al., 2011; Stephan, 2012a; Aitkenhead, 2013; Ladner et al., 2013; Dzeng, 2014; Kolata, 2016). Universities are also increasingly “balance[ing] their budgets on the backs of adjuncts” given that part-time or adjunct professor jobs make up 76% of the academic labor force, while those instructors are paid on average $2,700 per class, without benefits or job security (Curtis and Thornton, 2013; U.S. House Committee on Education and the Workforce, 2014). There are other concerns about the culture of modern academia, as reflected by studies showing that the attractiveness of academic research careers decreases over the course of students' PhD program at Tier-1 institutions relative to other careers (Sauermann and Roach, 2012; Schneider et al., 2014), reflecting the overemphasis on quantitative metrics, competition for limited funding, and difficulties pursuing science as a public good.

In this article, we will (1) describe how perverse incentives and hypercompetition are altering academic behavior of researchers and universities, reducing scientific progress and increasing unethical actions, (2) propose a conceptual model that describes how emphasis on quantity versus quality can adversely affect true scientific progress, (3) consider ramifications of this environment on the next generation of Science, Technology, Engineering and Mathematics (STEM) researchers, public perception, and the future of science itself, and finally, (4) offer recommendations that could help our scientific institutions increase productivity and maintain public trust. We hope to begin a conversation among all stakeholders who acknowledge perverse incentives throughout academia, consider changes to increase scientific progress, and uphold “high ethical standards” in the profession (NAE, 2004).

Perverse Incentives in Research Academia: The New Normal?

When you rely on incentives, you undermine virtues. Then when you discover that you actually need people who want to do the right thing, those people don't exist…—Barry Schwartz, Swarthmore College (Zetter, 2009)

Academics are human and readily respond to incentives. The need to achieve tenure has influenced faculty decisions, priorities, and activities since the concept first became popular (Wolverton, 1998). Recently, however, an emphasis on quantitative performance metrics (Van Noorden, 2010), increased competition for static or reduced federal research funding (e.g., NIH, NSF, and EPA), and a steady shift toward operating public universities on a private business model (Plerou et al., 1999; Brownlee, 2014; Kasperkevic, 2014) are creating an increasingly perverse academic culture. These changes may be creating problems in academia at both individual and institutional levels (Table 1).

Table 1.

Growing Perverse Incentives in Academia

| Incentive | Intended effect | Actual effect |
|---|---|---|
| “Researchers rewarded for increased number of publications.” | “Improve research productivity,” provide a means of evaluating performance. | “Avalanche of” substandard, “incremental papers”; poor methods and increase in false discovery rates leading to a “natural selection of bad science” (Smaldino and Mcelreath, 2016); reduced quality of peer review |
| “Researchers rewarded for increased number of citations.” | Reward quality work that influences others. | Extended reference lists to inflate citations; reviewers request citation of their work through peer review |
| “Researchers rewarded for increased grant funding.” | “Ensure that research programs are funded, promote growth, generate overhead.” | Increased time writing proposals and less time gathering and thinking about data. Overselling positive results and downplay of negative results. |
| Increase PhD student productivity | Higher school ranking and more prestige of program. | Lower standards and create oversupply of PhDs. Postdocs often required for entry-level academic positions, and PhDs hired for work MS students used to do. |
| Reduced teaching load for research-active faculty | Necessary to pursue additional competitive grants. | Increased demand for untenured, adjunct faculty to teach classes. |
| “Teachers rewarded for increased student evaluation scores.” | “Improved accountability; ensure customer satisfaction.” | Reduced course work, grade inflation. |
| “Teachers rewarded for increased student test scores.” | “Improve teacher effectiveness.” | “Teaching to the tests; emphasis on short-term learning.” |
| “Departments rewarded for increasing U.S. News ranking.” | “Stronger departments.” | Extensive efforts to reverse engineer, game, and cheat rankings. |
| “Departments rewarded for increasing numbers of BS, MS, and PhD degrees granted.” | “Promote efficiency; stop students from being trapped in degree programs; impress the state legislature.” | “Class sizes increase; entrance requirements” decrease; reduce graduation requirements. |
| “Departments rewarded for increasing student credit/contact hours (SCH).” | “The university's teaching mission is fulfilled.” | “SCH-maximization games are played”: duplication of classes, competition for service courses. |

Modified from Regehr (pers. comm., 2015) with permission.

Quantitative performance metrics: effect on individual researchers and productivity

The goal of measuring scientific productivity has given rise to quantitative performance metrics, including publication count, citations, combined citation-publication counts (e.g., h-index), journal impact factors (JIF), total research dollars, and total patents. These quantitative metrics now dominate decision-making in faculty hiring, promotion and tenure, awards, and funding (Abbott et al., 2010; Carpenter et al., 2014). Because these measures are subject to manipulation, they are doomed to become misleading and even counterproductive, according to Goodhart's Law, which states that “when a measure becomes a target, it ceases to be a good measure” (Elton, 2004; Fischer et al., 2012; Werner, 2015).
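To make the h-index mentioned above concrete, the following is a minimal sketch using its standard definition (the largest h such that h papers each have at least h citations). The function name and the citation counts are hypothetical illustrations, not data from any study cited here.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank   # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical citation counts, chosen only to illustrate the metric.
few_influential_papers = [50, 40, 30]        # 120 citations in total
many_modest_papers = [6, 6, 6, 6, 6, 6]      # 36 citations in total

print(h_index(few_influential_papers), h_index(many_modest_papers))
```

In this made-up comparison the second record earns the higher h-index despite far fewer total citations, which is one way a count-based metric can reward volume over influence.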

Ultimately, the well-intentioned use of quantitative metrics may create inequities and outcomes worse than the systems they replaced. Specifically, if rewards are disproportionally given to individuals manipulating their metrics, problems of the old subjective paradigms (e.g., old-boys' networks) may be tame by comparison. In a 2010 survey, 71% of respondents stated that they feared colleagues can “game” or “cheat” their way into better evaluations at their institutions (Abbott, 2010), demonstrating that scientists are acutely attuned to the possibility of abuses in the current system.

Quantitative metrics are scholar centric and reward output, which is not necessarily the same as achieving a goal of socially relevant and impactful research outcomes. Scientific output as measured by cited work has doubled every 9 years since about World War II (Bornmann and Mutz, 2015), producing “busier academics, shorter and less comprehensive papers” (Fischer et al., 2012), and a change in climate from “publish or perish” to “funding or famine” (Quake, 2009; Tijdink et al., 2014). Questions have been raised about how sustainable this exponential increase in the knowledge industry is (Price, 1963; Frodeman, 2011) and how much of the growth is illusory and results from manipulation as per Goodhart's Law.
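As a rough arithmetic illustration of the doubling time quoted above, the sketch below converts a 9-year doubling period into an implied annual growth rate and a cumulative growth factor. It assumes smooth exponential growth, which is a simplification; the function names are hypothetical.

```python
DOUBLING_TIME_YEARS = 9  # figure quoted in the text (Bornmann and Mutz, 2015)


def annual_growth_rate(doubling_time_years):
    """Constant yearly growth rate that doubles a quantity in the given time."""
    return 2 ** (1 / doubling_time_years) - 1


def growth_factor(years, doubling_time_years):
    """Total multiplication of output after `years` of steady exponential growth."""
    return 2 ** (years / doubling_time_years)


print(f"Implied annual growth: {annual_growth_rate(DOUBLING_TIME_YEARS):.1%}")
print(f"Growth over 45 years: {growth_factor(45, DOUBLING_TIME_YEARS):.0f}x")
```

Under this assumption, output grows by roughly 8% per year, and five doubling periods (45 years) multiply it 32-fold.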

Recent exposés have revealed schemes by journals to manipulate impact factors, use of p-hacking by researchers to mine for statistically significant and publishable results, rigging of the peer-review process itself, and overcitation (Falagas and Alexiou, 2008; Labbé, 2010; Zhivotovsky and Krutovsky, 2008; Bartneck and Kokkelmans, 2011; Delgado López-Cózar et al., 2012; McDermott, 2013; Van Noorden, 2014; Barry, 2015). A fictional character was recently created to demonstrate a “spamming war in the heart of science,” by generation of 102 fake articles and a stellar h-index of 94 on Google Scholar (Labbé, 2010). Blogs describing how to more discreetly raise h-index without committing outright fraud are also commonplace (e.g., Dem, 2011).

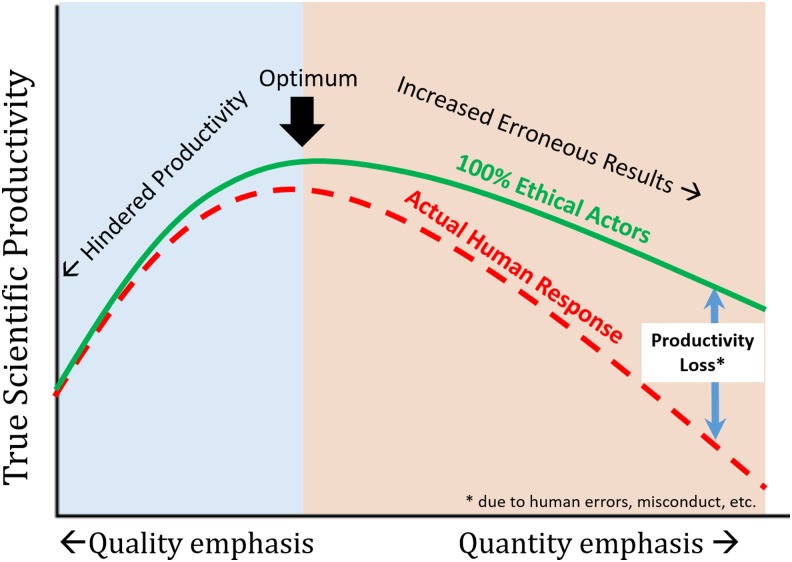

It is instructive to conceptualize the basic problem from a perspective of emphasizing quality-in-research versus quantity-in-research, as well as effects of perverse incentives (Fig. 1). Assuming that the goal of the scientific enterprise is to maximize true scientific progress, a process that overemphasizes quality might require triple or quadruple blinded studies, mandatory replication of results by independent parties, and peer-review of all data and statistics before publication—such a system would minimize mistakes, but would produce very few results due to overcaution (left Fig. 1). At the other extreme, an overemphasis on quantity is also problematic because accepting less scientific rigor in statistics, replication, and quality controls or a less rigorous review process would produce a very high number of articles, but after considering costly setbacks associated with a high error rate, true progress would also be low. A hypothetical optimum productivity lies somewhere in between, and it is possible that our current practices (enforced by peer review) evolved to be near the optimum in an environment with fewer perverse incentives.

FIG. 1.

True scientific productivity vis-à-vis emphasis on research quality/quantity.

However, over the long term under a system of perverse incentives, the true productivity curve changes due to increased manipulation and/or unethical behavior (Fig. 1). In a system overemphasizing quality, there is less incentive to cut corners because checks and balances allow problems to be discovered more easily, but in a system emphasizing quantity, productivity can be dramatically reduced by massive numbers of erroneous articles created by carelessness, subtle falsification (i.e., eliminating bad data), and substandard review if not outright fabrication (i.e., dry labbing).
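The conceptual model in Fig. 1 is qualitative, but its shape can be sketched numerically. The toy functions below are purely illustrative assumptions and are not taken from the article: output is assumed to grow linearly with emphasis on quantity, the fraction of that output lost to errors is assumed to grow quadratically, and a hypothetical `misconduct` parameter adds further losses under perverse incentives.

```python
def true_progress(quantity_emphasis, misconduct=0.0):
    """Toy net progress for a quantity emphasis between 0 (all rigor) and 1 (all volume).

    Assumed functional forms, chosen only to produce an inverted-U curve:
    output rises linearly, error losses rise quadratically, and `misconduct`
    adds losses proportional to the emphasis on quantity.
    """
    q = quantity_emphasis
    output = q
    loss_fraction = q ** 2 + misconduct * q
    return output * (1.0 - loss_fraction)


def best_emphasis(misconduct=0.0, steps=1000):
    """Grid-search the emphasis that maximizes the toy progress function."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda q: true_progress(q, misconduct))


for m in (0.0, 0.5):  # hypothetical misconduct levels
    q_star = best_emphasis(m)
    print(f"misconduct={m}: optimum near q={q_star:.2f}, "
          f"progress={true_progress(q_star, m):.2f}")
```

In this sketch, both extremes yield little or no net progress, and adding misconduct lowers the peak and moves it toward the quality end, which mirrors the qualitative argument above without claiming any particular magnitudes.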

While there is virtually no research exploring the impact of perverse incentives on scientific productivity, most in academia would acknowledge a collective shift in our behavior over the years (Table 1), emphasizing quantity at the expense of quality. This issue may be especially troubling for attracting and retaining altruistically minded students, particularly women and underrepresented minorities (WURM), in STEM research careers. Because modern scientific careers are perceived as focusing on “the individual scientist and individual achievement” rather than altruistic goals (Thoman et al., 2014), and WURM students tend to be attracted toward STEM fields for altruistic motives, including serving society and one's community (Diekman et al., 2010; Thoman et al., 2014), many leave STEM to seek careers and work that is more in keeping with their values (e.g., Diekman et al., 2010; Gibbs and Griffin, 2013; Campbell et al., 2014).

Thus, another danger of overemphasizing output versus outcomes and quantity versus quality is creating a system that is a “perversion of natural selection,” which selectively weeds out ethical and altruistic actors, while selecting for academics who are more comfortable and responsive to perverse incentives from the point of entry. Likewise, if normally ethical actors feel a need to engage in unethical behavior to maintain academic careers (Edwards, 2014), they may become complicit as per Granovetter's well-established Threshold Model of Collective Behavior (1978). At that point, unethical actions have become “embedded in the structures and processes” of a professional culture, and nearly everyone has been “induced to view corruption as permissible” (Ashforth and Anand, 2003).
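The threshold model invoked above can be sketched in a few lines. In this simplified version, each actor has a threshold equal to the number of others who must already be participating before that actor joins; the function name and the example populations are illustrative assumptions, following the kind of stylized example Granovetter used.

```python
def cascade_size(thresholds):
    """Number of actors who end up participating under a simple threshold model.

    An actor participates once the number of current participants is at least
    the actor's own threshold. The loop repeats until no one else joins.
    """
    participating = 0
    while True:
        joined = sum(1 for t in thresholds if t <= participating)
        if joined == participating:
            return participating
        participating = joined


# Hypothetical population of 100 actors with thresholds 0, 1, 2, ..., 99.
evenly_spread = list(range(100))

# Same population, except the actor with threshold 1 now has threshold 2.
one_actor_changed = [0, 2] + list(range(2, 100))

print(cascade_size(evenly_spread), cascade_size(one_actor_changed))
```

In the first population each new participant tips the next actor, so everyone ends up participating; in the second, the chain breaks after the first actor. The sketch illustrates how a small shift in individual thresholds can separate an isolated incident from a behavior that spreads through an entire group.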

It is also telling that a new genre of articles termed “quit lit” by the Chronicle of Higher Education has emerged (Chronicle Vitae, 2013–2014), in which successful, altruistic, and public-minded professors give perfectly rational reasons for leaving a profession they once loved—such individuals are easily replaced with new hires who are more comfortable with the current climate. Reasons for leaving range from a saturated job market, lack of autonomy, concerns associated with the very structure of academe (CHE, 2013), and “a perverse incentive structure that maintains the status quo, rewards mediocrity, and discourages potential high-impact interdisciplinary work” (Dunn, 2013).

Summary

While quantitative metrics provide an objective means of evaluating research productivity relative to subjective measures, now that they have become a target, they cease to be useful and may even be counterproductive. A continued overemphasis on quantitative metrics will pressure all but the most ethical scientists to overemphasize quantity at the expense of quality, create pressures to “cut corners” throughout the system, and select for scientists attracted to perverse incentives.

Scientific societies, research institutions, academic journals and individuals have made similar arguments, and some have signed the San Francisco Declaration on Research Assessment (DORA). The DORA recognizes the need for improving “ways in which output of scientific research are evaluated” and calls for challenging research assessment practices, especially the JIF, which are currently in place. Signatories include the American Society for Cell Biology, American Association for the Advancement of Science, Howard Hughes Medical Institute, and Proceedings of The National Academy of Sciences, among 737 organizations and 12,229 individuals as of June 30, 2016. Indeed, publishers of Nature, Science, and other journals have called for downplaying the JIF metric, and the American Society for Microbiology is announcing plans to “purge the conversation of the impact factor” and remove it from all their journals (Callaway, 2016). The argument is not to get rid of metrics, but to reduce their importance in decision-making by institutions and funding agencies, and perhaps invest resources toward creating more meaningful metrics (ACSB, 2012). DORA would be a step in the right direction of halting the “avalanche” of performance metrics dominating research assessment, which are unreliable and have long been hypothesized to threaten the quality of research (Rice, 1994; Macilwain, 2013).

Performance metrics: effect on institutions

We had to get into the top 100. That was a life-or-death matter for Northeastern.—Richard Freeland, Former President of Northeastern University (Kutner, 2014)

The perverse incentives for academic institutions are growing in scope and impact, as best exemplified by U.S. News & World Report annual rankings that purportedly measure “academic excellence” (Morse, 2015). The rankings have strongly influenced, positively or negatively, public perceptions regarding the quality of education and opportunities that institutions offer (Casper, 1996; Gladwell, 2011; Tierney, 2013). Although U.S. News & World Report rankings have been dismissed by some, they still undeniably wield extraordinary influence on college administrators and university leadership: the perceptions created by the objective quantitative ranking determine “how students, parents, high schools, and colleges pursue and perceive education” in practice (Kutner, 2014; Segal, 2014).

The rankings rely on subjective proprietary formulas and algorithms, the original validity of which has since been undermined by Goodhart's Law, because universities have attempted to game the system by redistributing resources or investing in areas that the ranking metrics emphasize. Northeastern University, for instance, unapologetically rose from #162 in 1996 to #42 in 2015 by explicitly changing its class sizes, acceptance rates, and even peer assessment. Others have cheated by reporting incorrect statistics (Bucknell University, Claremont-McKenna College, Clemson University, George Washington University, and Emory University are examples of those who were caught) to rise in the ranks (Slotnik and Perez-Pena, 2012; Anderson, 2013; Kutner, 2014). More than 90% of 576 college admission officers thought other institutions were submitting false data to U.S. News according to a 2013 Gallup and Inside Higher Ed poll (Jaschik, 2013), which creates further pressures to cheat throughout the system to maintain a ranking perceived to be fair as discussed in preceding sections.

Hypercompetitive funding environments

If the work you propose to do isn't virtually certain of success, then it won't get funded—Roger Kornberg, Nobel laureate (Lee, 2007)

The only people who can survive in this environment are people who are absolutely passionate about what they're doing and have the self-confidence and competitiveness to just go back again and again and just persistently apply for funding—Robert Waterland, Baylor College of Medicine (Harris and Benincasa, 2014)

The federal government's role in financing research and development (R&D), creating new knowledge, or fulfilling public missions like national security, agriculture, infrastructure, and environmental health has become paramount. The cost of high-risk, long-term research, which often has uncertain prospects and/or utility, has been largely borne by the U.S. government in the aftermath of World War II, forming part of an ecosystem with universities and industries contributing to the collective progress of mankind (Bornmann and Mutz, 2015; Hourihan, 2015).

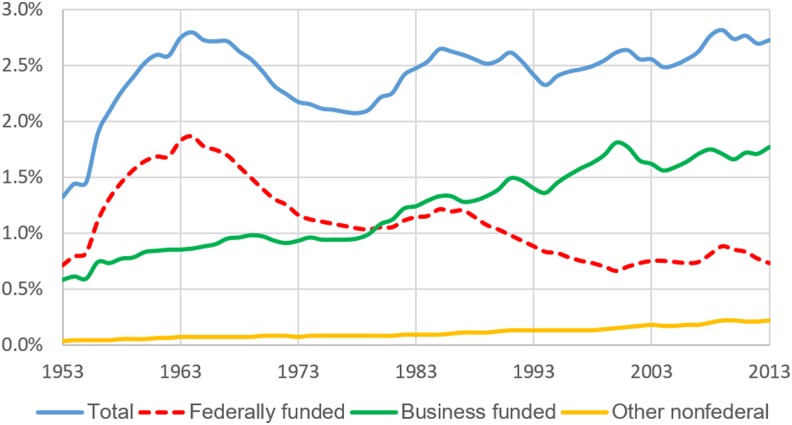

However, in the current competitive global environment where China is projected to outspend the U.S. on R&D by 2020, some worry that the “edifice of American innovation rests on an increasingly rickety foundation” because of a decline in spending on federal R&D in the past decade (Casassus, 2014; OECD, 2014; MIT, 2015; Porter, 2015). U.S. “Research Intensity” (i.e., federal R&D as a share of the country's gross domestic product or GDP) has declined to 0.78% (2014), which is down from about 2% in the 1960s (Fig. 2). With discretionary spending of federal budgets projected to decrease, research intensity is likely to drop even further, despite increased industry funding (Hourihan, 2015).

FIG. 2.

Trends in research intensity (i.e., ratio of U.S. R&D to gross domestic product), roles of federal, business, and other nonfederal funding for R&D: 1953–2013. Data source: National Science Foundation, National Center for Science and Engineering Statistics, National Patterns of R&D Resources (annual series). R&D, research and development.

A core mission of American colleges and universities has been “service to the public,” and this goal will be more difficult to reach as universities morph into profit centers churning out patents and new products (Faust, 2009; Mirowski, 2011; Brownlee, 2014; Hinkes-Jones, 2014; Seligsohn, 2015; American Academy of Arts and Sciences, 2016). Until the late 2000s, research institutions and universities went on a building spree fueled by borrowing, with an expectation that increased research funding would allow them to further boost research productivity—a cycle that went bust after the 2007–2008 financial crash (Stephan, 2012a). Universities are also allowed to offset debt from ill-fated expansion efforts as indirect costs (Stephan, 2012b), which increases overhead and decreases dollars available to spend on research even if funds raised by grants remain constant.

The static or declining federal investment in research has created the “worst research funding [scenario in 50 years]” and further ratcheted up competition for funding (Lee, 2007; Quake, 2009; Harris and Benincasa, 2014; Schneider et al., 2014; Stein, 2015), given that the number of researchers competing for grants is rising. The funding rate for NIH grants fell from 30.5% to 18% between 1997 and 2014, and the average age for a first-time PI on an R01-equivalent grant has increased to 43 years (NIH, 2008, 2015). NSF funding rates have remained stagnant between 23 and 25% in the past decade (NSF, 2016). While these funding rates are still well above the breakeven point of 6%, at which the net cost of proposal writing equals the net value obtained from a grant by the grant winner (Cushman et al., 2015), there is little doubt the grant environment is hypercompetitive, susceptible to reviewer biases, and strongly dependent on prior success as measured by quantitative metrics (Lawrence, 2009; Fang and Casadevall, 2016). Researchers must tailor their thinking to align with solicited funding, and spend about half of their time addressing administrative and compliance requirements, drawing focus away from scientific discovery and translation (NSB, 2014; Schneider et al., 2014; Belluz et al., 2016).
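One simplified way to read the breakeven idea above is as an expected-value calculation: an applicant breaks even when the funding rate multiplied by the net value of a grant equals the cost of writing a proposal. The sketch below uses that reading with hypothetical numbers; it is not a reproduction of the model in Cushman et al. (2015), and the function names and inputs are assumptions.

```python
def breakeven_funding_rate(proposal_cost, grant_net_value):
    """Funding rate at which expected grant value just covers the proposal cost."""
    return proposal_cost / grant_net_value


def expected_net_value(funding_rate, proposal_cost, grant_net_value):
    """Expected gain per proposal: chance of funding times value, minus cost."""
    return funding_rate * grant_net_value - proposal_cost


# Hypothetical units, chosen so the breakeven rate matches the 6% quoted in the text.
PROPOSAL_COST = 6.0
GRANT_NET_VALUE = 100.0

print(f"Breakeven rate: {breakeven_funding_rate(PROPOSAL_COST, GRANT_NET_VALUE):.0%}")
for rate in (0.305, 0.18, 0.06):  # NIH rates from the text, then the breakeven rate
    gain = expected_net_value(rate, PROPOSAL_COST, GRANT_NET_VALUE)
    print(f"funding rate {rate:.1%}: expected net value {gain:.1f}")
```

Under these assumed inputs, the expected gain per proposal shrinks as the funding rate falls and reaches zero at the breakeven rate, so an applicant must write more proposals for the same expected return.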

Systemic Risks to Scientific Integrity

Science is a human endeavor, and despite its obvious historical contributions to advancement of civilization, there is growing evidence that today's research publications too frequently suffer from lack of replicability, rely on biased data-sets, apply low or substandard statistical methods, fail to guard against researcher biases, and overhype their findings (Fanelli, 2009; Aschwanden, 2015; Belluz and Hoffman, 2015; Nuzzo, 2015; Gobry, 2016; Wilson, 2016). A troubling level of unethical activity, outright faking of peer review and retractions, has been revealed, which likely represents just a small portion of the total, given the high cost of exposing, disclosing, or acknowledging scientific misconduct (Marcus and Oransky, 2015; Retraction Watch, 2015a; BBC, 2016; Borman, 2016). Warnings of systemic problems go back to at least 1991, when NSF Director Walter E. Massey noted that the size, complexity, and increased interdisciplinary nature of research in the face of growing competition was making science and engineering “more vulnerable to falsehoods” (The New York Times, 1991).

Misconduct is not limited to academic researchers. Federal agencies are also subject to perverse incentives and hypercompetition, giving rise to a new phenomenon of institutional scientific research misconduct (Lewis, 2014; Edwards, 2016). Recent exemplars uncovered by the first author in the Flint and Washington D.C. drinking water crises include “scientifically indefensible” reports by the U.S. Centers for Disease Control and Prevention (U.S. Centers for Disease Control and Prevention, 2004; U.S. House Committee on Science and Technology, 2010), reports based on nonexistent data published by the U.S. EPA and their consultants in industry journals (Reiber and Dufresne, 2006; Boyd et al., 2012; Edwards, 2012; Retraction Watch, 2015b; U.S. Congress House Committee on Oversight and Government Reform, 2016), and silencing of whistleblowers in EPA (Coleman-Adebayo, 2011; Lewis, 2014; U.S. Congress House Committee on Oversight and Government Reform, 2015). This problem is likely to increase as agencies increasingly compete with each other for reduced discretionary funding. It also raises legitimate and disturbing questions as to whether accepting research funding from federal agencies is inherently ethical or not—modern agencies clearly have conflicts similar to those that are accepted and well understood for industry research sponsors. Given the mistaken presumption of research neutrality by federal funding agencies (Oreskes and Conway, 2010), the dangers of institutional research misconduct to society may outweigh those of industry-sponsored research (Edwards, 2014).

A “trampling of the scientific ethos” witnessed in areas as diverse as climate science and galvanic corrosion undermines the “credibility of everyone in science” (Bedeian et al., 2010; Oreskes and Conway, 2010; Edwards, 2012; Leiserowitz et al., 2012; The Economist, 2013; BBC, 2016). The Economist recently highlighted the prevalence of shoddy and nonreproducible modern scientific research and its high financial cost to society—posing an open question as to whether modern science was trustworthy, while calling upon science to reform itself (The Economist, 2013). And, while there are hopes that some problems could be reduced by practices that include open data, open access, postpublication peer review, metastudies, and efforts to reproduce landmark studies, these can only partly compensate for the high error rates in modern science arising from individual and institutional perverse incentives (Fig. 1).

The high costs of research misconduct

The National Science Foundation defines research misconduct as intentional “fabrication, falsification, or plagiarism in proposing, performing, or reviewing research, or in reporting research results” (Steneck, 2007; Fischer, 2011). Nationally, the percentage of guilty respondents in research misconduct cases investigated by the Department of Health and Human Services (includes NIH) and NSF ranges from 20% to 33% (U.S. Department of Health and Human Services, 2013; Kroll, 2015, pers. comm.). Direct costs of handling each research misconduct case are $525,000, and over $110 million are incurred annually for all such cases at the institutional level in the U.S. (Michalek et al., 2010). A total of 291 articles retracted due to misconduct during 1992–2012 accounted for $58 M in direct funding from the NIH, which is less than 1% of the agency's budget during this period, but each retracted article accounted for about $400,000 in direct costs (Stern et al., 2014).

Obviously, incidence of undetected misconduct is some multiple of the cases judged as such each year, and the true incidence is difficult to predict. A comprehensive meta-analysis of research misconduct surveys between 1987 and 2008 indicated that 1 in 50 scientists admitted to committing misconduct (fabrication, falsification, and/or modifying data) at least once and 14% knew of colleagues who had done so (Fanelli, 2009). These numbers are likely an underestimate considering the sensitivity of the questions asked, low response rates, and the Muhammad Ali effect (a self-serving bias where people perceive themselves as more honest than their peers) (Allison et al., 1989). Indeed, delving deeper, 34% of researchers self-reported that they have engaged in “questionable research practices,” including “dropping data points on a gut feeling” and “changing the design, methodology, and results of a study in response to pressures from a funding source,” whereas 72% of those surveyed knew of colleagues who had done so (Fanelli, 2009). One study included in Fanelli's meta-analysis looked at rates of exposure to misconduct for 2,000 doctoral students and 2,000 faculty from the 99 largest graduate departments of chemistry, civil engineering, microbiology, and sociology, and found between 6 and 8% of both students and faculty had direct knowledge of faculty falsifying data (Swazey et al., 1993).

In life science and biomedical research, the percentage of scientific articles retracted has increased 10-fold since 1975, and 67% were due to misconduct (Fang et al., 2012). Various hypotheses are proposed for this increase, including “lure of the luxury journal,” “pathological publishing,” prevalent misconduct policies, academic culture, career stage, and perverse incentives (Martinson et al., 2009; Harding et al., 2012; Laduke, 2013; Schekman, 2013; Buela-Casal, 2014; Fanelli et al., 2015; Marcus and Oransky, 2015; Sarewitz, 2016). Nature recently declared that “pretending research misconduct does not happen is no longer an option” (Nature, 2015).

Academia and science are expected to be self-policing and self-correcting. However, based on our experiences, we believe there are incentives throughout the system that induce all stakeholders to “pretend misconduct does not happen.” Science has never developed a clear system for reporting, investigating, or dealing with allegations of research misconduct, and those individuals who do attempt to police behavior are likely to be frustrated and suffer severe negative professional repercussions (Macilwain, 1997; Kevles, 2000; Denworth, 2008). Academics largely operate on an unenforceable and unwritten honor system, in relation to what is considered fair in reporting research, grant writing practices, and “selling” research ideas, and there is serious doubt as to whether science as a whole can actually be considered self-correcting (Stroebe et al., 2012). While there are exceptional cases where individuals have provided a reality check on overhyped research press releases in areas deemed potentially transformative (e.g., Eisen, 2010–2015; New Scientist, 2016), limitations of hot research sectors are more often downplayed or ignored. Because every modern scientific mania also creates a quantitative metric windfall for participants and there are few consequences for those responsible after a science bubble finally pops, the only true check on pathological science and a misallocation of resources is the unwritten honor system (Langmuir et al., 1953).

If nothing is done, we will create a corrupt academic culture

The modern academic research enterprise, dubbed a “Ponzi Scheme” by The Economist, created the existing perverse incentive system, which would have been almost inconceivable to academics of 30–50 years ago (The Economist, 2010). We believe that this creation is a threat to the future of science, and unless immediate action is taken, we run the risk of “normalization of corruption” (Ashforth and Anand, 2003), creating a corrupt professional culture akin to that recently revealed in professional cycling or in the Atlanta school cheating scandal.

To review, for the 7 years Lance Armstrong won the Tour de France (1999–2005), 20 out of 21 podium finishers (including Armstrong) were directly tied to doping through admissions, sanctions, public investigations, or failing blood tests. Entire teams cheated together because of a “win-at-all cost culture” that was created and sustained over time because there was no alternative in sight (U.S. ADA, 2012; Rose and Fisher, 2013; Saraceno, 2013). Numerous warning signs were ignored, and a retrospective analysis indicates that more than half of all Tour de France winners since 1980 had either tested positive for doping or confessed to it (Mulvey, 2012). The resultant “culture of doping” put clean athletes under suspicion (CIRC, 2015; Dimeo, 2015) and ultimately brought worldwide disrepute to the sport.

Likewise, the Atlanta Public Schools (APS) scandal provides another example of a perverse incentive system run to its logical conclusion, but in an educational setting. Twelve former APS employees were sent to prison and dozens faced ethics sanctions for falsifying students' results on state-standardized tests. The well-intentioned quantitative test results became high stakes to the APS employees, because the law “trigger[s] serious consequences for students (like grade promotion and graduation); schools (extra resources, reorganization, or closure); districts (potential loss of federal funds), and school employees (bonuses, demotion, poor evaluations, or firing)” (Kamenetz, 2015). The APS employees betrayed their stated mission of creating a “caring culture of trust and collaboration [where] every student will graduate ready for college and career,” and participated in creating the illusion of a “high-performing school district” (APS, 2016). Clearly, perverse incentives can encourage unethical behavior to manipulate quantitative metrics, even in an institution where the sole goal was to educate children.

An uncontrolled perverse incentive system can create a climate in which participants feel they must cheat to compete, whether in academia (at the individual or institutional level) or in professional sports. Professional cycling was ultimately discredited, and its rewards were not properly distributed to ethical participants. In science, the stakes are immeasurably higher: the loss of altruistic actors and of public trust, along with the risk of direct harm to the public and the planet.

What Kind of Profession Are We Creating for the Next Generation of Academics?

So I have just one wish for you—the good luck to be somewhere where you are free to maintain the kind of integrity I have described, and where you do not feel forced by a need to maintain your position in the organization, or financial support, or so on, to lose your integrity. May you have that freedom—Richard Feynman, Nobel laureate (Feynman, 1974)

The culture of academia has undergone dramatic change in the last few decades—quite a bit of it has been for the better. Problems with diversity, work-life balance, funding, efficient teaching, public outreach, and engagement have been recognized and partly addressed.

As stewards of the profession, we should continually consider whether our collective actions will leave our field in a state that is better or worse than when we entered it. While factors such as state and federal funding levels are largely beyond our control, we are not powerless and passive actors. Problems with perverse incentives and hypercompetition could be addressed by the following:

(1) The scope of the problem must be better understood, by systematically mining the experiences and perceptions held by academics in STEM fields, through a comprehensive survey of high-achieving graduate students and researchers.

(2) The National Science Foundation should commission a panel of economists and social scientists with expertise in perverse incentives, to collect and review input from all levels of academia, including retired National Academy members and distinguished STEM scholars. The panel could also develop a list of “best practices” to guide evaluation of candidates for hiring and promotion, from a long-term perspective of promoting science in the public interest and for the public good, and maintain academia as a desirable career path for altruistic ethical actors.

(3) Rather than pretending that the problem of research misconduct does not exist, science and engineering students should receive instruction on these subjects at both the undergraduate and graduate levels. Instruction should include a review of real world pressures, incentives, and stresses that can increase the likelihood of research misconduct.

(4) Beyond conventional goals of achieving quantitative metrics, a PhD program should also be viewed as an exercise in building character, with some emphasis on the ideal of practicing science as service to humanity (Huber, 2014).

(5) Universities need to reduce perverse incentives and uphold research misconduct policies that discourage unethical behavior.

Acknowledgments

The authors wish to thank PhD Candidate William Rhoads from Virginia Tech and three anonymous reviewers from Environmental Engineering Science for their assistance with the article and valuable suggestions.

Author Disclosure Statement

No competing financial interests exist.

References

- Abbott A., Cyranoski D., Jones N., Maher B., Schiermeier Q., and Van Noorden R. (2010). Metrics: Do metrics matter? Nature. 465, 860.

- Aitkenhead D. (2013, Dec. 6). Peter Higgs: I wouldn't be productive enough for today's academic system. The Guardian. Available at: www.theguardian.com/science/2013/dec/06/peter-higgs-boson-academic-system (accessed September 16, 2016)

- Allison S.T., Messick D.M., and Goethals G.R. (1989). On being better but not smarter than others: The Muhammad Ali effect. Soc. Cogn. 7, 275.

- American Academy of Arts and Sciences. (2016). Public Research Universities: Serving the Public Good. Cambridge, MA: American Academy of Arts and Sciences.

- American Society for Cell Biology (ASCB). (2012). San Francisco Declaration on Research Assessment. Available at: www.ascb.org/dora (accessed September 16, 2016)

- Anderson N. (2013, Feb. 6). Five colleges misreported data to U.S. News, raising concerns about rankings, reputation. The Washington Post. Available at: www.washingtonpost.com/local/education/five-colleges-misreported-data-to-us-news-raising-concerns-about-rankings-reputation/2013/02/06/cb437876-6b17-11e2-af53-7b2b2a7510a8_story.html (accessed September 16, 2016)

- Aschwanden C. (2015, Aug. 19). Science isn't broken. FiveThirtyEight. Available at: http://fivethirtyeight.com/features/science-isnt-broken (accessed September 16, 2016)

- Ashforth B.E., and Anand V. (2003). The normalization of corruption in organizations. Res. Organ. Behav. 25, 1.

- Atlanta Public Schools (APS). (2016). About APS | Mission and Vision. Available at: www.atlanta.k12.ga.us/Domain/35 (accessed September 16, 2016)

- Barry F. (2015, Apr. 1). Peer-review cheating ring discredits another 43 papers. In-Pharma Technologist. Available at: www.in-pharmatechnologist.com/Regulatory-Safety/Peer-review-cheating-ring-discredits-another-43-papers (accessed September 16, 2016)

- Bartneck C., and Kokkelmans S. (2011). Detecting h-index manipulation through self-citation analysis. Scientometrics. 87, 85.

- Bedeian A.G., Taylor S.G., and Miller A.N. (2010). Management science on the credibility bubble: Cardinal sins and various misdemeanors. Acad. Manage. Learn. Educ. 9, 715.

- Belluz J., and Hoffman S. (2015, May 13). Science is often flawed. It's time we embraced that. Vox. Available at: www.vox.com/2015/5/13/8591837/how-science-is-broken (accessed September 16, 2016)

- Belluz J., Plumer B., and Resnick B. (2016, Jul. 14). The 7 biggest problems facing science, according to 270 scientists. Vox. Available at: www.vox.com/2016/7/14/12016710/science-challeges-research-funding-peer-review-process (accessed September 16, 2016)

- Borman S. (2016). Nanoparticle synthesis paper retracted after 12 years. Chemical and Engineering News. Available at: http://cen.acs.org/articles/94/i8/Nanoparticle-Synthesis-Paper-Retracted-After-12-Years.html (accessed September 16, 2016)

- Bornmann L., and Mutz R. (2015). Growth rates of modern science: A bibliometric analysis based on the number of publications and cited references. J. Assoc. Inform. Sci. Technol. 66, 2215.

- Boyd G.R., Reiber S.H., McFadden M., and Korshin G. (2012). Effect of changing water quality on galvanic coupling. J. AWWA. 104, E136.

- British Broadcasting Corporation (BBC). (2016). Saving science from the scientists: Episode 2. BBC. Available at: www.bbc.co.uk/programmes/b07378cr (accessed September 16, 2016)

- Brownlee J.K. (2014). Irreconcilable Differences: The Corporatization of Canadian Universities (Doctoral dissertation). Carleton University. Available at: https://curve.carleton.ca/b945d1f1-64d4-40eb-92d2-1a29effe0f76 (accessed September 16, 2016)

- Buela-Casal G. (2014). Pathological publishing: A new psychological disorder with legal consequences? Eur. J. Psychol. Appl. Legal Context. 6, 91.

- Callaway E. (2016). Beat it, impact factor! Publishing elite turns against controversial metric. Nature. Available at: www.nature.com/news/beat-it-impact-factor-publishing-elite-turns-against-controversial-metric-1.20224 (accessed September 16, 2016)

- Campbell A.G., Skvirsky R., Wortis H., Thomas S., Kawachi I., and Hohmann C. (2014). Nest 2014: Views from the trainees-talking about what matters in efforts to diversify the STEM workforce. CBE Life Sci. Educ. 13, 587.

- Carpenter C.R., Cone D.C., and Sarli C.C. (2014). Using publication metrics to highlight academic productivity and research impact. Acad. Emerg. Med. 21, 1160.

- Casassus B. (2014). China predicted to outspend the US on science by 2020. Nature. Available at: www.nature.com/news/china-predicted-to-outspend-the-us-on-science-by-2020-1.16329 (accessed September 16, 2016)

- Casper G. (1996). Criticism of College Rankings. Letter from Stanford President Gerhard Casper to James Fallows, Editor of U.S. News & World Report. Available at: http://web.stanford.edu/dept/pres-provost/president/speeches/961206gcfallow.html (accessed September 16, 2016)

- Chronicle of Higher Education (CHE). (2013). What we talk about when we talk about quitting. Chronicle of Higher Education. Available at: https://chroniclevitae.com/news/215-what-we-talk-about-when-we-talk-about-quitting?cid=vem (accessed September 16, 2016)

- Chronicle Vitae (2013–2014). Quit lit. Chronicle Vitae. Available at: https://chroniclevitae.com/news/tags/Quit%20Lit (accessed September 16, 2016)

- Coleman-Adebayo M. (2011). No Fear: A Whistleblower's Triumph Over Corruption and Retaliation at the EPA. Chicago, IL: Chicago Review Press.

- Curtis J.W., and Thornton S. (2013). Here's the news: The annual report on the economic status of the profession 2012–13. Academe. 99, 4.

- Cushman P., Hoeksema J.T., Kouveliotou C., Lowenthal J., Peterson B., Stassun K.G., and von Hippel T. (2015). Impact of declining proposal success rates on scientific productivity. AAAC Proposal Pressures Study Group Discussion Draft. Available at: www.nsf.gov/attachments/134636/public/proposal_success_rates_aaac_final.pdf (accessed September 16, 2016)

- Cycling Independent Reform Commission (CIRC). (2015). Report to the President of the Union Cycliste Internationale. Available at: www.uci.ch/mm/Document/News/CleanSport/16/87/99/CIRCReport2015_Neutral.pdf (accessed September 16, 2016)

- Cyranoski D., Gilbert N., Ledford H., Nayar A., and Yahia M. (2011). Education: The PhD factory. Nature. 472, 276.

- Delgado López-Cózar N., Robinson-García N., and Torres-Salinas D. (2012). Manipulating Google scholar citations and Google scholar metrics: Simple, easy and tempting. EC3 Working Papers. 6: 29 May, 2012.

- Dem G. (2011). How to Increase Your Papers Citation and H Index. Available at: www.academia.edu/934257/How_to_increase_your_papers_citations_and_h_index_in_5_simple_steps (accessed September 16, 2016)

- Denworth L. (2008). Toxic Truth: A Scientist, a Doctor, and the Battle Over Lead. Boston, MA: Beacon Press.

- Diekman A.B., Brown E.R., Johnston A.M., and Clark E.K. (2010). Seeking congruity between goals and roles: A new look at why women opt out of science, technology, engineering, and mathematics careers. Psychol. Sci. 21, 1051.

- Dimeo P. (2015, Jul. 10). How cycling's dark history continues to haunt the Tour de France. The Conversation. Available at: http://theconversation.com/how-cyclings-dark-history-continues-to-haunt-the-tour-de-france-44312 (accessed September 16, 2016)

- Dunn S. (2013, Dec. 12). Why so many academics quit and tell. Chronicle of Higher Education. Available at: https://chroniclevitae.com/news/216-why-so-many-academics-quit-and-tell (accessed September 16, 2016)

- Dzeng E. (2014). How academia and publishing are destroying scientific innovation: A conversation with Sydney Brenner. Elizabeth Dzeng's Blog. Available at: https://elizabethdzeng.com/2014/02/26/how-academia-and-publishing-are-destroying-scientific-innovation-a-conversation-with-sydney-brenner/ (accessed September 16, 2016)

- Edwards M.A. (2012). Discussion: Effect of changing water quality on galvanic coupling. J. Am. Water Works Assoc. 104, 65.

- Edwards M.A. (2014). Foreword. In Lewis D.L., Ed., Science for Sale. New York: Skyhorse Publishing, pp. ix–xiii.

- Edwards M.A. (2016). Institutional Scientific Misconduct at U.S. Public Health Agencies: How Malevolent Government Betrayed Flint, MI. Testimony to the U.S. Cong. Committee on Oversight and Government Reform on Examining Federal Administration of the Safe Drinking Water Act in Flint, Michigan Hearing, February 3, 2016. 114th Congress. 2nd Session. Available at: https://oversight.house.gov/wp-content/uploads/2016/02/Edwards-VA-Tech-Statement-2-3-Flint-Water.pdf (accessed September 16, 2016)

- Eisen J.A. (2010–2015). Microbiomania. Personal Blog. Available at: http://phylogenomics.blogspot.com/p/blog-page.html (accessed September 16, 2016)

- Elton L. (2004). Goodhart's law and performance indicators in higher education. Eval. Res. Educ. 18, 120.

- Falagas M.E., and Alexiou V.G. (2008). The top-ten in journal impact factor manipulation. Arch Immunol Ther Exp (Warsz). 56, 223.

- Fanelli D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS One. 4, e5738.

- Fanelli D., Costas R., and Larivière V. (2015). Misconduct policies, academic culture and career stage, not gender or pressures to publish, affect scientific integrity. PLoS One. 10, e0127556.

- Fang F.C., and Casadevall A. (2016). Research funding: The case for a modified lottery. mBio. 7, e00422.

- Fang F.C., Grant Steen R., and Casadevall A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proc. Natl. Acad. Sci. U. S. A. 109, 17028.

- Faust D.G. (2009, Sep. 1). The University's Crisis of Purpose. The New York Times. Available at: www.nytimes.com/2009/09/06/books/review/Faust-t.html?_r=0 (accessed September 16, 2016)

- Feynman R.P. (1974). Cargo Cult Science: Caltech's 1974 Commencement Address. Available at: http://calteches.library.caltech.edu/51/2/CargoCult.htm (accessed September 16, 2016)

- Fischer P. (2011). New Research Misconduct Policies. National Science Foundation. Available at: www.nsf.gov/oig/_pdf/presentations/session.pdf (accessed September 16, 2016)

- Fischer J., Ritchie E.G., and Hanspach J. (2012). An academia beyond quantity: A reply to Loyola et al. and Halme et al. Trends Ecol. Evol. 27, 587.

- Frodeman R. (2011). Interdisciplinary research and academic sustainability: Managing knowledge in an age of accountability. Environ. Conserv. 38, 105.

- Gibbs K.D., and Griffin K.A. (2013). What do I want to be with my PhD? The roles of personal values and structural dynamics in shaping the career interests of recent biomedical science PhD graduates. CBE Life Sci. Educ. 12, 711.

- Gladwell M. (2011, Feb. 24). The order of things. The New Yorker. Available at: www.newyorker.com/magazine/2011/02/14/the-order-of-things (accessed September 16, 2016)

- Gobry P.-E. (2016, Apr. 18). Big science is broken. The Week. Available at: http://theweek.com/articles/618141/big-science-broken (accessed September 16, 2016)

- Harding T.S., Carpenter D.D., and Finelli C.J. (2012). An exploratory investigation of the ethical behavior of engineering undergraduates. J. Eng. Educ. 101, 346.

- Harris R., and Benincasa R. (2014, Sep. 9). U.S. science suffering from booms and busts in funding. National Public Radio. Available at: www.npr.org/sections/health-shots/2014/09/09/340716091/u-s-science-suffering-from-booms-and-busts-in-funding (accessed September 16, 2016)

- Hinkes-Jones L. (2014). Bad science. Jacobin. Available at: www.jacobinmag.com/2014/06/bad-science (accessed September 16, 2016)

- Hourihan M. (2015). Federal R&D Budget Trends: A Short Summary. Available at: www.aaas.org/program/rd-budget-and-policy-program (accessed September 16, 2016)

- Huber B.R. (2014, Sep. 22). Scientists seen as competent but not trusted by Americans. Woodrow Wilson Research Briefs. Available at: wws.princeton.edu/news-and-events/news/item/scientists-seen-competent-not-trusted-americans (accessed September 16, 2016)

- Jaschik S. (2013, Sep. 18). Feeling the Heat: The 2013 Survey of College and University Admissions Directors. Inside Higher Ed. Available at: www.insidehighered.com/news/survey/feeling-heat-2013-survey-college-and-university-admissions-directors (accessed September 16, 2016)

- Kamenetz A. (2015, Apr. 1). The Atlanta cheating verdict: Some context. National Public Radio. Available at: www.npr.org/blogs/ed/2015/04/01/396869874/the-atlanta-cheating-verdict-some-context (accessed September 16, 2016)

- Kasperkevic J. (2014, Oct. 7). The harsh truth: US colleges are businesses, and student loans pay the bills. The Guardian. Available at: www.theguardian.com/money/us-money-blog/2014/oct/07/colleges-ceos-cooper-union-ivory-tower-tuition-student-loan-debt (accessed September 16, 2016)

- Kevles D.J. (2000). The Baltimore Case: A Trial of Politics, Science, and Character. New York: W. W. Norton & Company.

- Kolata G. (2016, Jul. 14). So many research scientists, so few openings as professors. The New York Times. Available at: www.nytimes.com/2016/07/14/upshot/so-many-research-scientists-so-few-openings-as-professors.html (accessed September 16, 2016)

- Kutner M. (2014, Sep.). How Northeastern university gamed the college rankings. Boston Magazine. Available at: www.bostonmagazine.com/news/article/2014/08/26/how-northeastern-gamed-the-college-rankings (accessed September 16, 2016)

- Labbé C. (2010). Ike Antkare One of the Great Stars in the Scientific Firmament. Laboratoire d'Informatique de Grenoble Research Report No. RR-LIG-008. Available at: http://rr.liglab.fr/research_report/RR-LIG-008.pdf (accessed September 16, 2016)

- Ladner D.A., Bolyard S.C., Apul D., and Whelton A.J. (2013). Navigating the academic job search for environmental engineers: Guidance for job seekers and mentors. J. Prof. Issues Eng. Educ. Pract. 139, 211.

- Laduke R.D. (2013). Academic dishonesty today, unethical practices tomorrow? J. Prof. Nurs. 29, 402.

- Langmuir I. (1953). Colloquium on Pathological Science. Knolls Research Laboratory, December 18, 1953. Available at: www.cs.princeton.edu/~ken/Langmuir/langmuir.htm (accessed September 16, 2016)

- Lawrence P.A. (2009). Real lives and white lies in the funding of scientific research. PLoS Biol. 7, e1000197.

- Lee C. (2007, May 28). Slump in NIH funding is taking toll on research. The Washington Post. Available at: www.washingtonpost.com/wp-dyn/content/article/2007/05/27/AR2007052700794.html (accessed September 16, 2016)

- Leiserowitz A.A., Maibach E.W., Roser-Renouf C., Smith N., and Dawson E. (2012). Climategate, public opinion and the loss of trust. Am. Behav. Scientist. 57, 818.

- Lewis D.L. (2014). Science for Sale. New York: Skyhorse Publishing.

- Macilwain C. (1997). Whistleblowers face blast of hostility. Nature. 385, 669.

- Macilwain C. (2013). Halt the avalanche of performance metrics. Nature. 500, 255.

- Marcus A., and Oransky I. (2015, May 22). What's behind big science frauds? The New York Times. Available at: www.nytimes.com/2015/05/23/opinion/whats-behind-big-science-frauds.html (accessed September 16, 2016)

- Martinson B.C., Crain A.L., Anderson M.S., and De Vries R. (2009). Institutions' expectations for researchers' self-funding, federal grant holding, and private industry involvement: Manifold drivers of self-interest and researcher behavior. Acad. Med. 84, 1491.

- Massachusetts Institute of Technology (MIT). (2015). The Future Postponed. A Report by the MIT Committee to evaluate the innovation deficit. Available at: https://dc.mit.edu/sites/default/files/Future%20Postponed.pdf (accessed September 16, 2016)

- McDermott J. (2013, Mar. 7). Gaming the system: How to get an astronomical h-index with little scientific impact. Personal Blog. Available at: http://jasonya.com/wp/gaming-the-system-how-to-get-an-astronomical-h-index-with-little-scientific-impact (accessed September 16, 2016)

- Michalek A.M., Hutson A.D., Wicher C.P., and Trump D.L. (2010). The costs and underappreciated consequences of research misconduct: A case study. PLoS Med. 7, e1000318.

- Mirowski P. (2011). Science-Mart. Boston, MA: Harvard University Press.

- Morse R. (2015, Mar. 27). Debunking myths about The U.S. news best colleges rankings. U.S. News & World Report. Available at: www.usnews.com/education/blogs/college-rankings-blog/2015/03/27/debunking-myths-about-the-us-news-best-colleges-rankings (accessed September 16, 2016)

- Mulvey S. (2012, Oct. 12). Lance Armstrong: Tyler Hamilton on ‘how US Postal cheated’. BBC News. Available at: www.bbc.com/news/magazine-19912623 (accessed September 16, 2016)

- National Academy of Engineering (NAE). (2004). The Engineer of 2020: Visions of Engineering in the New Century. Washington, DC: The National Academies Press.

- National Institutes of Health (NIH). (2008). Average Age of Principal Investigators. Available at: https://report.nih.gov/NIH_Investment/PDF_sectionwise/NIH_Extramural_DataBook_PDF/NEDB_SPECIAL_TOPIC-AVERAGE_AGE.pdf (accessed September 16, 2016)

- National Institutes of Health (NIH). (2015). NIH Research Portfolio Online Reporting Tools–Research Project Success Rates of NIH Institute. Available at: https://report.nih.gov/success_rates/Success_ByIC.cfm (accessed September 16, 2016)

- National Science Board (NSB). (2014). Reducing Investigators' Administrative Workload for Federally Funded Research. Report NSB-14-18. Arlington, VA: National Science Foundation.

- National Science Foundation (NSF). (2016). Budget Internet Information System: Providing Statistical and Funding Information. Available at: http://dellweb.bfa.nsf.gov/awdfr3/default.asp (accessed September 16, 2016)

- Nature. (2015, May 19). Editorial: Publish or perish. Nature. 521, 159.

- New Scientist. (2016, May 17). Microbiomania: The Truth Behind the Hype About Our Bodily Bugs. Available at: www.newscientist.com/article/2088613-microbiomania-the-truth-behind-the-hype-about-our-bodily-bugs (accessed September 16, 2016)

- Nuzzo R. (2015, Oct. 7). How scientists fool themselves–and how they can stop. Nature. 526, 182.

- OECD. (2014). OECD Science, Technology and Industry Outlook 2014. Paris: OECD Publishing.

- Oreskes N., and Conway E.M. (2010). Merchants of Doubt. New York: Bloomsbury Press.

- Plerou V., Nunes Amaral L.A., Gopikrishnan P., Meyer M., and Stanley H.G. (1999). Similarities between the growth dynamics of university research and of competitive economic activities. Nature. 400, 433.

- Porter E. (2015, May 19). American Innovation Lies on Weak Foundation. The New York Times. Available at: www.nytimes.com/2015/05/20/business/economy/american-innovation-rests-on-weak-foundation.html (accessed September 16, 2016)

- Price D.J.D.-S. (1963). Little Science, Big Science… and Beyond. New York: Columbia University Press.

- Quake S. (2009). Letting scientists off the leash. The New York Times Blog, February 10

- Reiber S., and Dufresne L. (2006). Effects of External Currents and Dissimilar Metal Contact on Corrosion of Lead from Lead Service Lines. Philadelphia: Final Report to USEPA region III.

- Retraction Watch. (2015a, Nov. 5). Got the Blues? You Can Still See Blue: Popular Paper on Sadness, Color Perception Retracted. Available at: http://retractionwatch.com/2015/11/05/got-the-blues-you-can-still-see-blue-after-all-paper-on-sadness-and-color-perception-retracted (accessed September 16, 2016)

- Retraction Watch. (2015b). Expression of Concern Opens Floodgates of Controversy Over Lead in Water Supply. Available at: http://retractionwatch.com/2015/04/15/expression-of-concern-opens-floodgates-of-controversy-over-lead-in-water-supply (accessed September 16, 2016)

- Rice J.R. (1994). Metrics to Evaluate Academic Departments. Purdue University Computer Science Technical Reports. Paper 1148

- Rose C., and Fisher N. (2013). Following Lance Armstrong: Excellence corrupted. Harvard Business Review, Case Study # 314015-PDF-ENG. Available at: https://hbr.org/product/following-lance-armstrong-excellence-corrupted/an/314015-PDF-ENG (accessed September 16, 2016)

- Saraceno J. (2013, Jan. 18). Lance Armstrong: ‘I was trying to win at all costs.’ USA Today. Available at: www.usatoday.com/story/sports/cycling/2013/01/18/lance-armstrong-oprah-winfrey-television-interview-tour-de-france-drugs/1843329 (accessed September 16, 2016)

- Sarewitz D. (2016). The pressure to publish pushes down quality. Nature. 533, 147.

- Sauermann H., and Roach M. (2012). Science PhD career preferences: Levels, changes, and advisor encouragement. PLoS One. 7, e36307.

- Schekman R. (2013, Dec. 9). How journals like Nature, Cell and Science are damaging science. The Guardian. Available at: www.theguardian.com/commentisfree/2013/dec/09/how-journals-nature-science-cell-damage-science (accessed September 16, 2016)

- Schneider R.L., Ness K.L., Rockwell S., Shaver S., and Brutkiewicz R. (2014). 2012 Faculty Workload Survey Research Report. National Academy of Sciences, Engineering, and Medicine. Available at: http://sites.nationalacademies.org/cs/groups/pgasite/documents/webpage/pga_087667.pdf (accessed September 16, 2016)

- Segal M. (2014, Sep. 15). The big college ranking sham: Why you must ignore U.S. News and World's Report list. Salon. Available at: www.salon.com/2014/09/15/the_big_college_ranking_sham_why_you_must_ignore_u_s_news_and_worlds_report_list (accessed September 16, 2016)

- Seligsohn A. (2015, Apr. 29). An observation about the mission of higher education. Campus Compact. Available at: http://compact.org/resource-posts/an-observation-about-the-mission-of-higher-education (accessed September 16, 2016)

- Slotnik D.E., and Perez-Pena R. (2012, Jan. 30). College Says It Exaggerated SAT Figures for Ratings. The New York Times. Available at: www.nytimes.com/2012/01/31/education/claremont-mckenna-college-says-it-exaggerated-sat-figures.html?smid=pl-share&_r=0 (accessed September 16, 2016)

- Smaldino P.E., and McElreath R. (2016). The natural selection of bad science. arXiv. Available at: https://arxiv.org/abs/1605.09511 (accessed September 16, 2016)

- Stein S. (2015, Mar. 3). Congrats young scientists, you face the worst research funding in 50 years. The Huffington Post. Available at: www.huffingtonpost.com/2015/03/03/francis-colliins-nih-funding_n_6795900.html (accessed September 16, 2016)

- Steneck N.H. (2007). ORI: Introduction to the Responsible Conduct of Research. U.S. Department of Health and Human Services. Washington, DC: Government Printing Office.

- Stephan P. (2012a). How Economics Shapes Science. Boston, MA: Harvard University Press.

- Stephan P. (2012b). Research efficiency: Perverse incentives. Nature. 484, 29.

- Stern A.M., Casadevall A., Steen R.G., and Fang F.C. (2014). Financial costs and personal consequences of research misconduct resulting in retracted publications. eLife. 3, e02956.

- Stroebe W., Postmes T., and Spears R. (2012). Scientific misconduct and the myth of self-correction in science. Perspect. Psychol. Sci. 7, 670.

- Swazey J.P., Anderson M.S., Lewis K.S., and Louis K.S. (1993). Ethical problems in academic research. Am. Scientist. 81, 542.

- The Economist. (2010, Dec. 16). The Disposable Academic: Why doing a PhD is often a waste of time. Available at: www.economist.com/node/17723223 (accessed September 16, 2016)

- The Economist. (2013, Oct. 19). How Science Goes Wrong. Available at: www.economist.com/news/leaders/21588069-scientific-research-has-changed-world-now-it-needs-change-itself-how-science-goes-wrong (accessed September 16, 2016)

- The New York Times. (1991, Jun. 4). Commencements; Science Official Tells M.I.T. of Perils to Ethics. Available at: www.nytimes.com/1991/06/04/us/commencements-science-official-tells-mit-of-peril-to-ethics.html (accessed September 16, 2016)

- Thoman D.B., Brown E.R., Mason A.Z., Harmsen A.G., and Smith J.L. (2014). The role of altruistic values in motivating underrepresented minority students for biomedicine. BioScience. 65, 183.

- Tierney J. (2013, Sep. 10). Your Annual Reminder to Ignore the U.S. News & World Report College Rankings. The Atlantic. Available at: www.theatlantic.com/education/archive/2013/09/your-annual-reminder-to-ignore-the-em-us-news-world-report-em-college-rankings/279103 (accessed September 16, 2016)

- Tijdink J.K., Verbeke R., and Smulders Y.M. (2014). Publication pressure and scientific misconduct in medical scientists. J. Empir. Res. Hum. Res. Ethics. 9, 64.

- U.S. Anti-Doping Agency (U.S. ADA). (2012). Lance Armstrong Receives Lifetime Ban and Disqualification of Competitive Results for Doping Violations Stemming from His Involvement in the United States Postal Service Pro-Cycling Team Doping Conspiracy. Available at: www.usada.org/lance-armstrong-receives-lifetime-ban-and-disqualification-of-competitive-results-for-doping-violations-stemming-from-his-involvement-in-the-united-states-postal-service-pro-cycling-team-doping-conspi (accessed September 16, 2016)

- U.S. Centers for Disease Control and Prevention. (2004). Blood lead levels in residents of homes with elevated lead in tap water–District of Columbia, 2004. CDC Morbid. Mortal. Weekly Rep. 53, 268.

- U.S. Cong. House, Committee on Oversight and Government Reform. (2015). EPA Mismanagement II July 29, 2015. Hearing. 114th Congress. 1st Session. Available at: https://oversight.house.gov/hearing/epa-mismanagement-part-ii (accessed September 16, 2016)

- U.S. Cong. House, Committee on Oversight and Government Reform. (2016). Examining Federal Administration of the Safe Drinking Water Act in Flint, Michigan Hearing, February 3, 2016. Hearing. 114th Congress. 2nd Session. Available at: https://oversight.house.gov/hearing/examining-federal-administration-of-the-safe-drinking-water-act-in-flint-michigan (accessed September 16, 2016)

- U.S. Department of Health and Human Services. (2013). Office of Research Integrity Annual Report 2012

- U.S. House Committee on Education and the Workforce. (2014). The Just In Time Professor. Available at: http://democrats.edworkforce.house.gov/sites/democrats.edworkforce.house.gov/files/documents/1.24.14-AdjunctEforumReport.pdf (accessed September 16, 2016)

- U.S. House Committee on Science and Technology. (2010). A Public Health Tragedy: How Flawed CDC Data and Faulty Assumptions Endangered Children's Health in the Nation's Capital. Report by the Majority Staff on the Subcommittee on Investigations and Oversight. Available at: http://archives.democrats.science.house.gov/publications/caucus_detail.aspx?NewsID=2843 (accessed September 16, 2016)

- Van Noorden R. (2010). Metrics: A profusion of measures. Nature. 465, 864.

- Van Noorden R. (2014). Publishers withdraw more than 120 gibberish papers. Nature. Available at: www.nature.com/news/publishers-withdraw-more-than-120-gibberish-papers-1.14763 (accessed September 16, 2016)

- Werner R. (2015). The focus on bibliometrics makes papers less useful. Nature. 517, 245.

- Wilson W.A. (2016, May). Scientific regress. First Things. Available at: www.firstthings.com/article/2016/05/scientific-regress (accessed September 16, 2016)

- Wolverton M. (1998). Treading the tenure-track tightrope: Finding balance between research excellence and quality teaching. Innovative Higher Educ. 23, 61.

- Zetter K. (2009, Feb. 6). TED: Barry Schwartz and the Importance of Practical Wisdom. WIRED. Available at: www.wired.com/2009/02/ted-barry-schwa (accessed September 16, 2016)

- Zhivotovsky L., and Krutovsky K. (2008). Self-citation can inflate h-index. Scientometrics. 77, 373.