Abstract

Sensory cortices of individuals who are congenitally deprived of a sense can exhibit considerable plasticity and be recruited to process information from the senses that remain intact. Here, we explored whether the auditory cortex of congenitally deaf individuals represents visual field location of a stimulus—a dimension that is represented in early visual areas. We used functional MRI to measure neural activity in auditory and visual cortices of congenitally deaf and hearing humans while they observed stimuli typically used for mapping visual field preferences in visual cortex. We found that the location of a visual stimulus can be successfully decoded from the patterns of neural activity in auditory cortex of congenitally deaf but not hearing individuals. This is particularly true for locations within the horizontal plane and within peripheral vision. These data show that the representations stored within neuroplastically changed auditory cortex can align with dimensions that are typically represented in visual cortex.

Keywords: neuroplasticity, congenital deafness, visual location, sensory reorganization, fMRI, multivariate pattern analysis, decoding

Individuals who are congenitally deprived of a sense often exhibit considerable brain plasticity. Work on congenital blindness has demonstrated remarkable changes both in neural processing and in cognitive performance for nonvisual information. For instance, blind individuals show finer auditory pitch and tactile discrimination than sighted individuals (e.g., Gougoux et al., 2004; Van Boven, Hamilton, Kauffman, Keenan, & Pascual-Leone, 2000). Moreover, putative visual occipital regions are recruited in blind individuals for nonvisual processing, such as braille reading (e.g., Sadato et al., 1996).

Similar results have been obtained in congenitally deaf individuals. Studies with congenitally deaf nonhuman animals have shown extensive compensatory and cross-modal plasticity. For instance, the auditory cortex of congenitally deaf animals is co-opted to process visual (and somatosensory) stimuli (e.g., Hunt, Yamoah, & Krubitzer, 2006; Kral, Schröder, Klinke, & Engel, 2003; Meredith & Lomber, 2011). In fact, some of these visually responsive neurons present response patterns characteristic of neurons within visual cortex, such as direction selectivity (e.g., Meredith & Lomber, 2011). Moreover, Lomber, Meredith, and Kral (2010; see also Meredith et al., 2011) showed that deaf cats are better than hearing cats in visual-localization and motion-detection tasks, and that these compensatory behaviors are dependent on certain structures of the auditory cortex.

Work on congenitally deaf humans has produced somewhat converging, albeit much less conclusive, results. For instance, deaf individuals are better than hearing individuals at detecting visual stimuli presented in the visual periphery (e.g., Neville & Lawson, 1987a; Reynolds, 1993) and discriminating and detecting visual motion (e.g., Bavelier et al., 2000; Bosworth & Dobkins, 2002; Neville & Lawson, 1987a). These individuals also demonstrate heightened tactile sensitivity (Levänen & Hamdorf, 2001). Correspondingly, putative auditory cortex in congenitally deaf individuals can be responsive to nonauditory stimulation—Finney and colleagues showed that the auditory cortex of congenitally deaf, but not of hearing, participants responds to simple visual stimulation (e.g., Finney, Fine, & Dobkins, 2001; see also Karns, Dow, & Neville, 2012; Levänen, Jousmäki, & Hari, 1998; Nishimura et al., 1999; Scott, Karns, Dow, Stevens, & Neville, 2014; but see Hickok et al., 1997).

The fact that nontypical sensory information is processed in deafferented sensory regions (e.g., visual information in putative auditory cortex of deaf individuals) raises questions about the type of information represented in these cortices: What is being represented in the neuroplastically changed auditory cortex of congenitally deaf humans? A property that is central to visual processing in visual cortex is visual-field location (e.g., Sereno et al., 1995). Hence, if auditory cortex of congenitally deaf individuals contains representations of particular visual properties, one likely candidate would be visual field location. Here, we tested congenitally deaf and hearing individuals in a visual task using functional MRI (fMRI) to address whether human auditory cortex represents the location of a visual stimulus.

Method

During fMRI, we presented stimuli typically used to map visual field preferences in early visual cortex (Zhang, Zhaoping, Zhou, & Fang, 2012; see also Sereno et al., 1995). We analyzed patterns of blood-oxygen-level-dependent response in auditory and visual cortex of 10 congenitally deaf and 10 hearing individuals using between-participants multivariate pattern analysis (MVPA; e.g., Haxby et al., 2001, 2011). The focus of this experiment was on understanding whether the auditory cortex of deaf individuals is capable of representing visual information about a stimulus location in the visual field.

Participants

Sixteen hearing individuals (3 males, 13 females) and 15 congenitally deaf individuals (2 males, 13 females) participated; all were naive to the purpose of the experiment. Participants in the hearing group were between the ages of 18 and 22 years (mean age = 20.1 years); participants in the congenitally deaf group were between 17 and 22 years old (mean age = 20.4 years). All participants had normal or corrected-to-normal vision, had no history of neurological disorder, and gave written informed consent in accordance with the guidelines of the institutional review board of Beijing Normal University Imaging Center for Brain Research. All congenitally deaf participants were proficient in Chinese sign language, had hearing loss above 90 dB binaurally (frequencies tested ranged from 125 to 8,000 Hz), and did not use hearing aids. All hearing participants reported no hearing impairment or knowledge of Chinese sign language. The full data sets from 5 deaf and 6 hearing participants were discarded because of excessive head motion (above 3 mm during any of the sessions) or because of low fMRI signal-to-noise ratio (based on the variance of the standardized average values of the whole-brain signal for each time point). This left a final sample of 10 participants in each group.

Stimuli and procedure

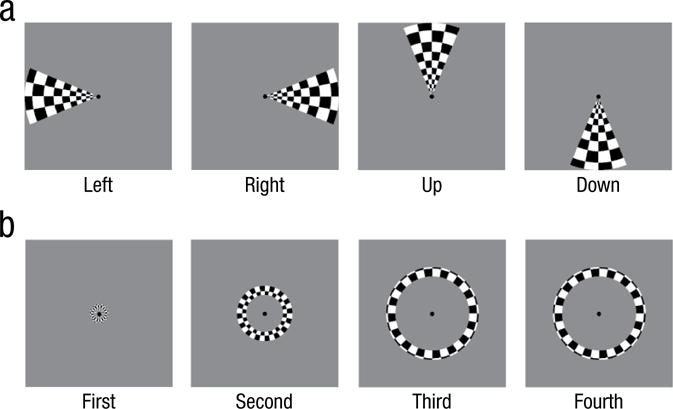

Participants viewed two types of visual stimuli that were used to calculate visual field maps (rotating wedges and expanding annuli; Zhang et al., 2012; see also Sereno et al., 1995). The first type was counterphase flickering (5 Hz) checkerboard wedges of 10.50° located at the left or right (horizontal) and up or down (vertical) planes (see Fig. 1a). The second type of stimuli was counterphase flickering (5 Hz) checkerboard annuli of 9.23°, 6.61°, 3.97°, and 1.30° (see Fig. 1b). Participants were asked only to maintain fixation on a central point throughout the entire functional run. Participants completed four runs: two in which the four wedge stimuli randomly alternated and two in which the four annuli randomly alternated. In each run, stimuli were presented in 12-s blocks with five repetitions of each stimulus per run. The stimuli were presented consecutively without any rest period in between.

Fig. 1.

Stimuli used in the experiments. Wedges (a) appeared alternately in each quadrant of the screen and were used for visual field location classification, and annuli (b) appeared at four different sizes in the center of the screen and were used for eccentricity classification.

Data acquisition and localization of regions of interest (ROIs)

MRI data were collected at the Beijing Normal University MRI center on a 3-T Siemens Tim Trio scanner. Before collecting functional data, we acquired a high-resolution 3-D structural data set with a 3-D magnetization-prepared rapid-acquisition gradient echo sequence in the sagittal plane—repetition time (TR) = 2,530 ms, echo time (TE) = 3.39 ms, flip angle = 7°, matrix size = 256 × 256, voxel size = 1.33 × 1 × 1.33 mm, 144 slices, acquisition time = 8.07 min. An echo-planar image sequence was used to collect functional data (TR = 2,000 ms, TE = 30 ms, flip angle = 90°, matrix size = 64 × 64, voxel size = 3.125 × 3.125 × 4 mm, 33 slices, interslice distance = 4.6 mm, slice orientation = axial).

All MRI data were analyzed using Brain Voyager QX (Goebel, Esposito, & Formisano, 2006). The anatomical volumes were transformed into a brain space that was common for all participants (Talairach & Tournoux, 1988). Preprocessing of the functional data included slice scan-time correction, 3-D motion correction, linear trend removal, and high-pass filtering (0.015 Hz; Smith et al., 1999).

To localize the auditory cortex, we conducted a functional run in which hearing participants were exposed to periods of white noise interspersed with periods of silence. Each period lasted 12 s (white noise or silence), and the sequence of white noise and silence was repeated 10 times. In a group analysis, auditory cortex was defined as the area, in normalized space, that responded more strongly to white noise than to silence (for a similar approach, see Finney et al., 2001; Shiell, Champoux, & Zatorre, 2015).

V1 was defined from the analysis of participants’ neural responses while viewing the wedge stimuli using a simplified version of the standard phase-encoding method focusing on the vertical and horizontal meridians (Sereno et al., 1995; Zhang et al., 2012). As one moves from the middle of V1 to the V1/V2 border, the receptive-field locations change from the horizontal to the vertical meridian. As one crosses the border from V1 and continues into V2, the receptive-field locations move from the vertical meridian back toward the horizontal meridian. This reversal facilitates the definition of V1.

Data analysis

Between-participants MVPA decoding analysis

We performed between-participants MVPA (Haxby et al., 2011) on beta values, having aligned all images anatomically in Talairach space (Talairach & Tournoux, 1988). The classification was performed with a support-vector-machine algorithm implemented with LIBSVM (a library for support vector machines; Chang & Lin, 2014). Annuli runs were analyzed separately from wedge runs. In both cases, we first created a general linear model to estimate the magnitude of the response at each voxel for the four rings (or wedges) in each scan run. Specifically, each general linear model consisted of 20 predictors (four stimuli × five alternations) in which each stimulus-presentation event was modeled as a unit-impulse function convolved with a canonical hemodynamic response function. Beta values for each predictor in the model were extracted at each voxel. In total, we had 40 beta values (20 predictors × two runs) at each voxel for each participant, 10 beta values for each stimulus (annulus or wedge).
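The structure of the per-run model described above can be sketched as follows. This is only an illustration under stated assumptions, not the Brain Voyager QX implementation used in the study: each 12-s block is treated as a boxcar sampled at the TR, the hemodynamic response is approximated by a double-gamma function with illustrative parameters, and the run is assumed to contain exactly 120 volumes (20 blocks × 12 s / 2 s). The names `gamma_pdf`, `double_gamma_hrf`, and `design_matrix` are hypothetical.

```python
import math

TR = 2.0          # s, from the functional acquisition parameters
BLOCK_S = 12.0    # s, block duration stated in the procedure
N_BLOCKS = 20     # four stimuli x five repetitions per run
VOLS_PER_BLOCK = int(BLOCK_S / TR)
N_VOLS = N_BLOCKS * VOLS_PER_BLOCK  # assumption: no extra volumes in a run


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for non-positive times."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (
        math.gamma(shape) * scale ** shape
    )


def double_gamma_hrf(t):
    """Assumed HRF shape: response peaking near 5 s minus a later undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def design_matrix():
    """Return one HRF-convolved predictor per block (20 columns of 120 samples)."""
    hrf = [double_gamma_hrf(i * TR) for i in range(int(32 / TR))]
    columns = []
    for block in range(N_BLOCKS):
        boxcar = [0.0] * N_VOLS
        start = block * VOLS_PER_BLOCK
        for v in range(start, start + VOLS_PER_BLOCK):
            boxcar[v] = 1.0
        # Discrete convolution, truncated to the length of the run.
        column = [
            sum(boxcar[v - lag] * hrf[lag] for lag in range(len(hrf)) if v - lag >= 0)
            for v in range(N_VOLS)
        ]
        columns.append(column)
    return columns


X = design_matrix()
print(len(X), len(X[0]))  # 20 predictors, 120 time points
```

Fitting such a model to each voxel's time course in each of the two runs per stimulus type would give the 40 beta values per voxel mentioned above (20 predictors × two runs).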

We then used multivoxel data from different participants across the same or different experimental groups. This yielded two types of classifiers: within-groups and between-groups classifiers. Within-groups classifiers were trained and tested with data from participants of the same group (e.g., deaf participants), whereas between-groups classifiers were trained on data from one group and tested on data from the other group. We trained the classifiers on data from 8 participants and tested them on data from 2 other participants. Group membership of training and testing data sets was dependent on the type of classifier (for an example, see the Supplemental Material available online).

By using between-participants MVPA and creating these two types of classifiers, we could directly compare the two groups and assess visual representational content in the auditory cortex of deaf and hearing adults. Classification was done for the four conditions of the wedge protocol (left, right, up, down) and over specific contrasts (left vs. right, up vs. down). We also tested classification over annuli stimuli, in which we compared the innermost rings (first and second) with the outermost ring (fourth). In other words, the comparison was center versus periphery classification.

Accuracy was computed as the average classification accuracy over all possible combinations, without repetition, of 2 participants whose data were used for testing the classifiers out of the set of 10 participants ($\binom{10}{2} = 45$; i.e., 45 data folds). Chance level was calculated over another set of MVPA computations performed with surrogate data. Surrogate data were constructed using original beta values of voxels in the same ROI, but their labels were randomly shuffled (Watanabe et al., 2011). Using surrogate data took into consideration the intrinsic variability of our own data set, and therefore, it may have been a more natural way of assessing chance performance. Independent-samples z tests were performed on real and surrogate data, and upper-boundary confidence intervals were used as the threshold for chance level. We used the 99% confidence intervals as our canonical threshold to define above-chance classification. We also calculated the 99.99% and 99.9999% confidence intervals for more stringent statistical comparisons.
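The fold structure and the surrogate-based threshold can be illustrated with a short sketch. The fold enumeration follows directly from the description above (2 of 10 participants held out per fold). The form of the threshold is an assumption: the upper bound of a two-sided normal confidence interval on the mean surrogate accuracy. The function names `leave_two_out_folds`, `shuffle_labels`, and `upper_ci_bound` are hypothetical and are not taken from the original analysis scripts.

```python
import itertools
import random
import statistics

N_PARTICIPANTS = 10  # per group, from the text
N_TEST = 2           # participants held out per fold, from the text


def leave_two_out_folds(n=N_PARTICIPANTS, k=N_TEST):
    """Yield (train_ids, test_ids) for every unordered choice of k test participants."""
    everyone = range(n)
    for test_ids in itertools.combinations(everyone, k):
        train_ids = tuple(p for p in everyone if p not in test_ids)
        yield train_ids, test_ids


def shuffle_labels(labels, rng):
    """Return a permuted copy of the labels; the beta values themselves are untouched."""
    shuffled = list(labels)
    rng.shuffle(shuffled)
    return shuffled


def upper_ci_bound(surrogate_accuracies, confidence=0.99):
    """Assumed threshold: mean + z * SEM of the surrogate accuracies (two-sided CI)."""
    mean = statistics.fmean(surrogate_accuracies)
    sem = statistics.stdev(surrogate_accuracies) / len(surrogate_accuracies) ** 0.5
    z_crit = statistics.NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return mean + z_crit * sem


folds = list(leave_two_out_folds())
print(len(folds))  # 45 folds, each with 8 training and 2 test participants

rng = random.Random(0)
surrogate_labels = shuffle_labels(["left", "right", "up", "down"] * 10, rng)
```

Under this reading, a real-data accuracy counts as above chance when it exceeds `upper_ci_bound` computed from the accuracies obtained with shuffled labels.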

Whole-brain between-participants searchlight MVPA

We also performed whole-brain searchlight analysis (Kriegeskorte, Goebel, & Bandettini, 2006) at the voxel level using in-house scripts under the same linear support-vector-machine algorithms as in the ROI analysis. This analysis was performed exclusively for the within-groups conditions (i.e., training on deaf and testing on deaf, and training on hearing and testing on hearing) using the same cross-validation procedures. The searchlight size was 33 voxels (i.e., voxels within 6 mm from the visited voxel were included). Statistical significance was assessed in a similar fashion as in the ROI analysis—we performed classifications on surrogate data over 180 iterations per voxel.
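The reported searchlight size is consistent with a spherical neighborhood on a 3-mm isotropic grid, as the sketch below shows. The 3-mm grid is an assumption (the resampled functional resolution is not stated above), and `sphere_offsets` is a hypothetical helper rather than part of the in-house scripts.

```python
import itertools


def sphere_offsets(radius_mm, voxel_mm):
    """List integer voxel offsets whose centers lie within radius_mm of the center voxel."""
    reach = int(radius_mm // voxel_mm)
    offsets = []
    for dx, dy, dz in itertools.product(range(-reach, reach + 1), repeat=3):
        squared_distance_mm = (dx * dx + dy * dy + dz * dz) * voxel_mm ** 2
        if squared_distance_mm <= radius_mm ** 2:
            offsets.append((dx, dy, dz))
    return offsets


# Assumption: functional data resampled to 3 x 3 x 3 mm voxels.
offsets = sphere_offsets(radius_mm=6.0, voxel_mm=3.0)
print(len(offsets))  # 33
```

The count breaks down as 1 center voxel, 6 face neighbors, 12 edge neighbors, 8 corner neighbors, and 6 voxels two steps away along an axis.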

Results

Representation of visual location in the auditory cortex of the congenitally deaf

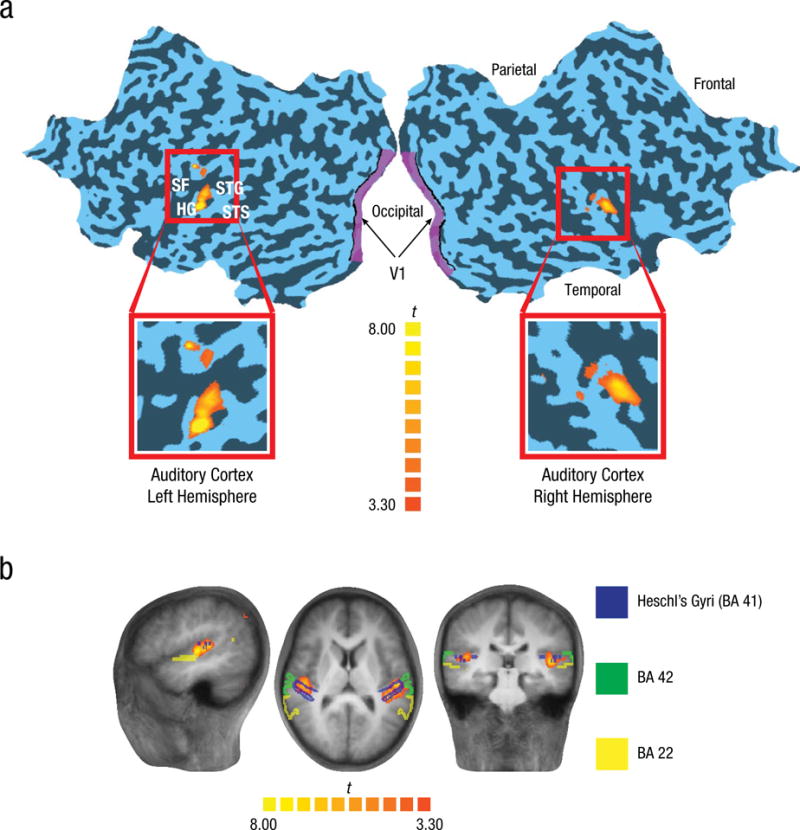

Auditory cortex was defined at the group level as the region in hearing participants that showed heightened responses to white noise compared with silence, t(1249) = 3.3, p < .001, uncorrected; peak Talairach coordinates in the right hemisphere: x = 44, y = −19, z = 9; peak Talairach coordinates in the left hemisphere: x = −49, y = −19, z = 7 (see Fig. 2). The peak Talairach coordinates of our auditory region were within the 50% to 100% probability range of being in primary auditory cortex (Penhune, Zatorre, MacDonald, & Evans, 1996), which includes the regions hA1 and hR (e.g., Da Costa et al., 2011). Moreover, as shown in Figure 2b, our ROI clearly includes Heschl’s gyri (Brodmann’s area 41), which is the principal anatomical landmark for primary auditory cortex, but does not seem to include other auditory ROIs, such as Brodmann’s areas 42 and 22 (e.g., Da Costa et al., 2011).

Fig. 2.

Activations used to define auditory cortex in hearing participants. The two-dimensional projection of the surface of the brain (a) highlights the areas used for classification—the auditory cortex, defined on the basis of heightened responses to white noise compared with silence, and primary visual cortex (V1). The sagittal, axial, and coronal slices (b) show the location of the auditory cortex region of interest (ROI) with respect to known landmarks—Brodmann’s areas (BAs) 41, 42, and 22. The t maps show results from the contrast of white noise versus silence in the auditory cortex ROI. HG = Heschl’s gyrus, SF = Sylvian fissure, STG = superior temporal gyrus, STS = superior temporal sulcus.

However, there was a strong possibility that areas outside the primary auditory cortex (e.g., belt regions of auditory cortex) were also part of our auditory ROI. The variability inherent in using group-level ROIs defined in a different group may have undermined precise localization of primary auditory cortex. Furthermore, it has been shown that A1 of deaf cats suffers volumetric reduction and may be partially taken over by other neighboring areas (Wong, Chabot, Kok, & Lomber, 2014), which suggests that accurate localization of A1 in deaf individuals may be extremely hard. Therefore, we loosely refer to our auditory region as the auditory cortex. This selected bilateral auditory region was used to test predictions in both the hearing and deaf groups.

We then used between-participants MVPA (e.g., Haxby et al., 2001; Haxby et al., 2011) to test whether auditory cortex in congenitally deaf individuals contains reliable information about the location of a visual stimulus (see the section on univariate analysis and Fig. S1 in the Supplemental Material). Between-participants MVPA allows for a direct comparison between groups and thus tests how specific the results are for each group. We constructed visual-field-location decoders to classify neural activity in auditory cortex and V1 using linear support-vector-machine algorithms. The goal of classification was to decode the location within the visual field where the visual stimulus appeared (left, right, up, or down) and its eccentricity (center or periphery). We employed multivoxel pattern classifiers with different combinations of learning and testing sets: Within-groups classifiers were trained on data from 8 participants from a group (i.e., deaf or hearing) and tested over data from the remaining 2 participants from that group; between-groups classifiers were trained on data from 8 participants from one group and tested over data from 2 participants from the other group.

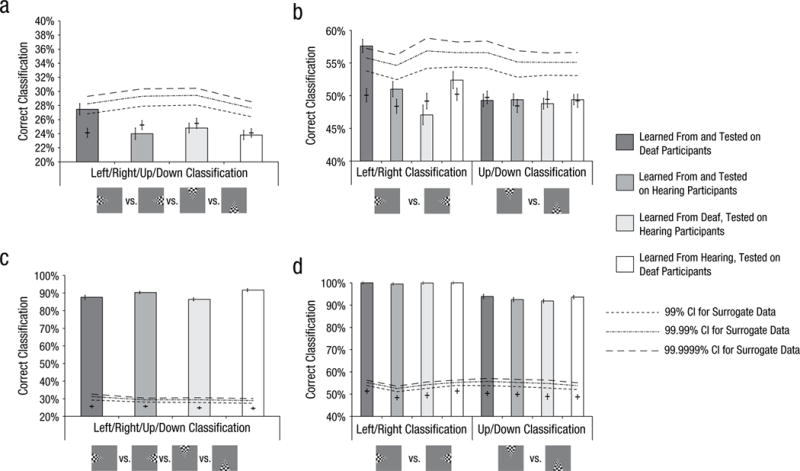

Our results showed that successful classification in auditory cortex depended on the learning and testing sets used for classification. Classifiers that learned from or were tested with input from hearing participants were not able to reliably decode the location of a visual stimulus (see Fig. 3), which indicates that activity in auditory cortex in hearing participants does not contain reliable information about where a stimulus was presented. The within-groups classification in auditory cortex (bilaterally) of deaf individuals was, however, significantly better than chance (27.47%; upper-boundary 99% confidence interval for chance level: 26.82%; see Fig. 3a). This above-chance classification accuracy seems to have been driven more by decoding of the horizontal location (left vs. right) than the vertical location (up vs. down). Classification performance in auditory cortex for right/left classification was 57.61% (upper-boundary 99.9999% confidence interval for chance level: 57.24%) and 49.28% for up/down (upper-boundary 99% confidence interval for chance level: 54.22%; see Fig. 3b). These above-chance performances were obtained only for data from bilateral auditory cortex and not for unilateral analysis for data from left or right auditory cortex.

Fig. 3.

Classification accuracy for real data (bars) and surrogate data (lines) in bilateral auditory cortex (top row) and visual cortex (bottom row). Performance is shown for the contrast between the four quadrants in which the wedge stimuli appeared (a, c) and for the contrasts between left versus right and up versus down wedges (b, d). In each data bar, the error bar showing ±1 SEM for the real data is toward the left, and the error bar showing ±1 SEM for the surrogate data is in the center. The horizontal bar crossing the error bars for surrogate data shows the mean. Some of the error bars for the real data are too small to be easily visible here. CI = confidence interval.

Finally, the within-groups classification between the innermost and outermost locations of the annuli stimuli in deaf individuals was also above chance for data from the right auditory cortex (and not for the left or bilateral auditory cortex; performance with data from right auditory cortex: 54.97%; upper-boundary 99.999% confidence interval for chance level: 54.96%). This means that beyond information on quadrant location (for the wedge stimuli), the patterns of activity within auditory cortex of congenitally deaf individuals contain information about whether a stimulus was presented centrally or in the visual periphery. As expected, classification accuracies for neural patterns arising from V1 were near ceiling regardless of the learning or testing set used (see Figs. 3c and 3d for classification accuracy in V1).

Whole-brain searchlight MVPA of visual field location in the congenitally deaf

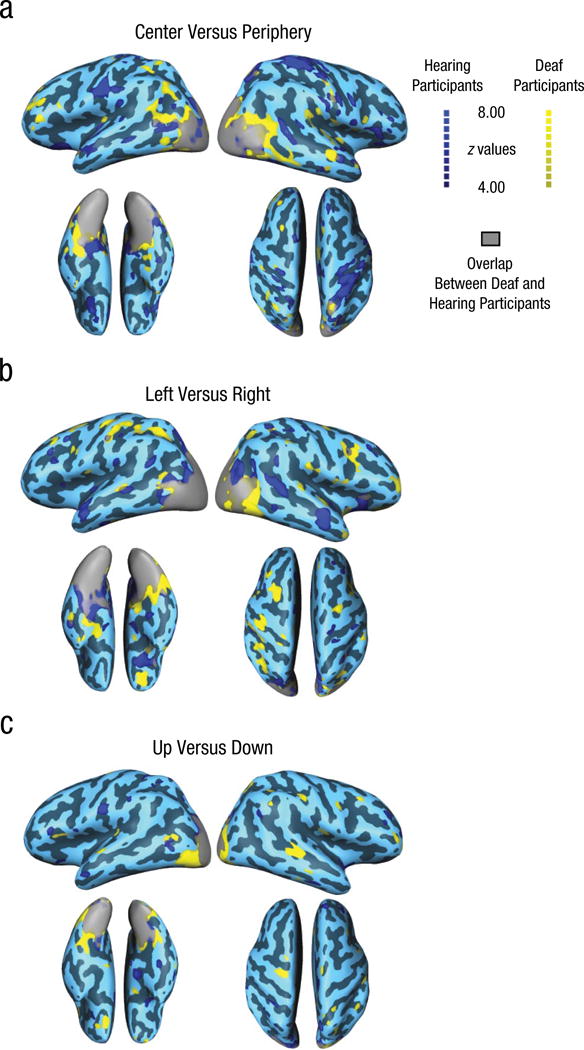

We also performed a whole-brain searchlight analysis to identify other areas that contain information that could be used to decode locations within the horizontal plane and the vertical plane (for wedge stimuli), as well as between locations in the center and periphery of the visual field (for annuli), in both our deaf and hearing groups. Figure 4 shows z maps for the three classification conditions (left vs. right, up vs. down, and center vs. periphery); a z value above 3.9 corresponds to a p value equal to or less than .0001. In this analysis, we tested only within-groups classification (e.g., training and testing on deaf participants).
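The correspondence between the z threshold and the p value can be checked with the standard normal distribution. The text does not say whether the test was one- or two-sided, so the sketch below computes both; the function names are hypothetical.

```python
import math


def two_sided_p(z):
    """Two-sided tail probability of a standard normal deviate."""
    return math.erfc(abs(z) / math.sqrt(2))


def one_sided_p(z):
    """Upper-tail probability of a standard normal deviate."""
    return 0.5 * math.erfc(z / math.sqrt(2))


print(two_sided_p(3.9) <= 1e-4)  # True
print(one_sided_p(3.9) <= 1e-4)  # True
```

Under either convention, z = 3.9 satisfies the stated bound of p ≤ .0001; the two-sided value lies just below that bound.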

Fig. 4.

Results from the whole-brain searchlight analysis in deaf and hearing participants. Decoding performance effect maps (z values) are shown separately for the contrasts of (a) center versus periphery for annuli, (b) left versus right for wedges, and (c) up versus down for wedges. All z values shown (calculated against surrogate data) correspond to p values equal to or less than .0001.

Not surprisingly, classifying locations within the horizontal or vertical plane, or between central and peripheral positions, could be performed within visual occipital cortex of both deaf and hearing individuals. There were, however, other areas beyond occipital cortex where the location of a stimulus in the visual field could be successfully decoded. Peripheral versus central stimuli locations could be decoded using data from superior parietal regions for both deaf and hearing participants, although this effect was more widespread for hearing than deaf participants. This classification was also reliably above chance in right superior and lateral temporal regions (e.g., around the auditory cortex) for deaf but not hearing participants (Fig. 4a). Decoding locations within the horizontal plane was possible within left parietal regions and right superior temporal and temporo-parietal regions (around the auditory cortex) for deaf individuals (Fig. 4b). In hearing participants, decoding could be performed in bilateral anterior temporal regions and in the right superior posterior temporal sulcus (Fig. 4b). Finally, it was possible to decode locations within the vertical plane in a more limited set of areas beyond occipital cortex and mainly for deaf individuals, in particular within bilateral temporal regions (Fig. 4c).

Discussion

In the experiment reported here, we showed that the auditory cortex of congenitally deaf individuals contains reliable information about the location of a stimulus in the visual field. We found that the fMRI patterns in the auditory cortex (bilaterally) of congenitally deaf individuals can be used to decode the position of a stimulus, especially along the horizontal plane. Using patterns of activation in the right auditory cortex of deaf individuals, we were also able to decode whether a stimulus was presented in central or peripheral vision. Moreover, whole-brain decoding results confirmed the importance of temporal regions (mainly in the right hemisphere) for decoding peripheral and horizontal locations in the deaf. The implication of our results is that congenital deafferentation of the auditory cortex leads to a remapping of visual information and that along with this remapping, the content of the representations stored within the neuroplastically changed auditory cortex may follow dimensions that are typically seen in visual cortex. In particular, we showed that one of the most ubiquitous visual properties, stimulus location in the visual field, can be decoded from the representations in the auditory cortex of congenitally deaf individuals.

The differential hemispheric contribution reported here for periphery versus center and left versus right may be suggestive of aspects of neuroplasticity specifically concerned with periphery versus center (potentially associated with attentional processes and which differentially depend on the right hemisphere) and aspects of neuroplasticity that focus on the horizontal dimension and depend on the processing taking place within both hemispheres. Whole-brain searchlight analyses also suggested that multisensory and attentional networks (e.g., around superior parietal cortex) may be important for the representation of center versus periphery locations in both hearing and deaf groups, and for the representation of the horizontal plane in deaf participants. This could be in line with the proposal that some of the neuroplastic effects within congenitally deaf individuals are due to a heightened ability to allocate attention to peripheral visual locations (e.g., Bavelier et al., 2000).

Our data are in line with those from research on visual responses within putative auditory cortex of deaf nonhuman mammals. The auditory cortex of these animals responds to visual and somatosensory information (e.g., Hunt et al., 2006; Kral et al., 2003; Meredith & Lomber, 2011). These cortices inherit properties typical of visual neurons. For instance, they seem to cover the contralateral visual field and code for direction of motion and velocity (Meredith & Lomber, 2011). In fact, Roe, Pallas, Hahm, and Sur (1990) showed that the auditory cortex of newborn ferrets, in which the projections of retinal cells were surgically rerouted onto an auditory thalamic nucleus, shows response patterns to visual stimulation similar to those observed in primary visual cortex. Most important, neurons within the auditory cortex seem to represent the visual field in a systematic way (Roe et al., 1990; but see Meredith & Lomber, 2011). Hence, the data herein regarding the representation of visual space in the human auditory cortex converge with extant animal data. Our findings raise the intriguing possibility that visual responses in the auditory cortex of the congenitally deaf could follow a systematic organization similar to what is observed within visual cortex (i.e., retinotopy). Evidence for such deep reorganization of the cortical surface under congenital sensory deprivation in humans has been reported in blind individuals (Watkins et al., 2013). Watkins and collaborators showed systematic representations of different sound frequencies within visual cortex. Although no such evidence has been reported for the auditory cortex of congenitally deaf humans, as noted previously, there is some evidence within the animal model for such organization (e.g., Roe et al., 1990). Nevertheless, it may be the case that our findings are driven by a rudimentary organization of visual stimulation along the horizontal plane in the auditory cortex of deaf individuals. Thus, an important question to be addressed in subsequent studies is whether visual information in auditory cortex in deaf individuals follows a retinotopic organization.

Our data also suggest that the representations of the horizontal and vertical planes are not equivalently remapped in auditory cortex in deaf individuals. Here, we showed a processing advantage for stimuli within the horizontal plane. Convergent results were obtained in studies with deaf cats and ferrets. Meredith and Lomber (2011) showed that the extended representation of the contralateral visual field observed in the anterior auditory field of deaf cats did not include the superior and inferior extremes of the visual field, whereas Roe et al. (1990) showed that the horizontal meridian is more precisely mapped than the vertical meridian in the auditory cortex of deaf ferrets.

Why should there be an advantage for processing stimuli along the horizontal plane in congenital deafness? In order for the auditory cortex of deaf individuals to represent visual space, a two-dimensional spatial variable has to be coded instead of the typical one-dimensional sound variable (sound frequency). It is well known that certain areas of the auditory cortex (e.g., A1) are organized tonotopically, with an organization that follows an orderly preference for different frequencies (e.g., Da Costa et al., 2011). Within the bands that roughly show the same preference toward a particular frequency (isofrequency bands), other properties of sound are coded. Roe et al. (1990) suggested that because the pattern of projections from the auditory thalamus along the isofrequency axis is not as topographically organized as those along the tonotopic axis, and because, in their data, the vertical plane is represented along the same anterior-to-posterior dimension of cortex as the isofrequency axis, the orderly processing of visual stimuli within the vertical plane would not be fully feasible. An alternative possibility may be related to the fact that processing within the isofrequency bands codes for interaural differences, a cue for sound localization within the horizontal plane. Rajan, Aitkin, and Irvine (1990) showed that there is a relative organization of responses to auditory stimuli by horizontal localization within the isofrequency bands of the auditory cortex of hearing cats. This organization could be the scaffolding for the enhanced processing within the horizontal plane.

Another interesting implication of our data is how the multisensory nature of the neocortex (Ghazanfar & Schroeder, 2006) can affect neuroplasticity. It has been shown that stimuli presented in a particular modality (e.g., vision) that also implies some sensory experience in another modality (e.g., audition) can be meaningfully processed in the sensory cortices of both modalities, which suggests a high degree of multisensorial processing within primary sensory cortices. For instance, Meyer and colleagues (2010) presented three types of items (animals, musical instruments, and human-made objects) visually in the absence of sounds. They were, however, depicted in a sound-implying fashion (e.g., a howling dog). They showed that those categories could be decoded from data from the auditory cortex. Vetter, Smith, and Muckli (2014) showed that information within the visual cortex of blindfolded individuals can be used to decode complex stimuli (birds, traffic, and people) conveyed by auditory input. Our stimuli do not have a multisensory property and therefore may not be amenable to show these multisensory effects in normal participants. Nevertheless, the intrinsic multisensory nature of the sensory cortices may be what the system exploits under sensorial deprivation, thus facilitating the emergence of the neuroplastic changes presented here.

An important question for understanding the results of our experiment is related to the potential role that sign-language proficiency may play in the capacity of the auditory cortex of the congenitally deaf to represent visual content. Our two groups of participants differed not only in their hearing capacity but also in their proficiency in using sign language: deaf individuals were proficient in using sign language, whereas hearing participants were not. Extant data on visual processing under deafness strongly suggest, however, that the majority of effects indicating visual processing in the auditory cortex of congenitally deaf individuals are due to auditory deprivation rather than to the use of sign language (e.g., Fine, Finney, Boynton, & Dobkins, 2005; for similar results, see also Bavelier et al., 2000; Neville & Lawson, 1987b).

Another outstanding issue concerns how visual information reaches the auditory cortex of congenitally deaf individuals. One possible pathway involves visual and auditory subcortical nuclei. Roe et al. (1990) suggested that auditory thalamic nuclei may be involved in delivering visual information to deafferented A1. Work in the barn owl may also suggest that mixing of auditory and visual information at the level of the colliculus could provide a means for how auditory cortex could come to represent crude organizational principles for visual information in the congenitally deaf (e.g., Brainard & Knudsen, 1993). Moreover, Barone, Lacassagne, and Kral (2013) showed that A1 of deaf cats receives a weak projection from visual thalamus. Thus, it could be that there is an unmasking effect of congenital deafferentation in auditory cortex, such that the integration of auditory and visual information occurring in the midbrain is projected into the auditory cortex. Interestingly, it has been shown that the lateral geniculate nucleus (a thalamic relay of visual information to and from the cortex) overrepresents the horizontal plane when compared with the vertical one (e.g., Schneider, Richter, & Kastner, 2004), and this could be the basis for our differential decoding results for vertical and horizontal planes. Another possibility may relate to existing cortico-cortical connections between primary auditory and visual cortices. Bavelier and Neville (2002) suggested that the degeneration of these connections as a result of deafness could be responsible for a special involvement of auditory cortex in compensatory plasticity and for the observed advantage for processing peripheral stimuli in the congenitally deaf.

Finally, our data may have important implications for neuroprosthetics (e.g., cochlear implants). The implementation of those devices depends on exploiting the content of the representations within the deprived sensory system. The neuroplastic reorganization demonstrated here—and in particular the neuroplastically induced representation of visual content—may be ineffective in processing auditory input arriving from newly implanted devices, which could lead to complications in the implementation of those devices (e.g., Sandmann et al., 2012).

Acknowledgments

Funding

J. Almeida was funded by European Commission Seventh Framework Programme 2007–2013 Grant No. PCOFUND-GA-2009-246542 and by Fundação BIAL Grant 112/12. F. Fang was funded by National Natural Science Foundation of China (NSFC) Grants 30925014 and 31230029. B. Z. Mahon was supported by U.S. National Institutes of Health Grants R21NS076176 and R01NS089609. Y. Bi was funded by NSFC Grants 31171073 and 31222024. This research was supported by Fundação BIAL.

Footnotes

Supplemental Material

Additional supporting information can be found at http://pss.sagepub.com/content/by/supplemental-data

Author Contributions

J. Almeida developed the study concept. J. Almeida, D. He, Q. Chen, F. Fang, and Y. Bi developed the study design. Testing, data collection, and data analysis were performed by J. Almeida, D. He, Q. Chen, and F. Zhang under the supervision of J. Almeida, F. Fang, and Y. Bi. J. Almeida interpreted the results and discussed them with all authors. J. Almeida drafted the manuscript, and all authors provided critical revisions. All authors approved the final version of the manuscript for submission.

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

References

- Barone P, Lacassagne L, Kral A. Reorganization of the connectivity of cortical field DZ in congenitally deaf cat. PLoS ONE. 2013;8(4) doi: 10.1371/journal.pone.0060093. Article e60093.

- Bavelier D, Neville H. Cross-modal plasticity: Where and how? Nature Reviews Neuroscience. 2002;3:443–452. doi: 10.1038/nrn848.

- Bavelier D, Tomann A, Hutton C, Mitchell T, Corina D, Liu G, Neville H. Visual attention to the periphery is enhanced in congenitally deaf individuals. The Journal of Neuroscience. 2000;20:1–6. doi: 10.1523/JNEUROSCI.20-17-j0001.2000.

- Bosworth RG, Dobkins KR. Visual field asymmetries for motion processing in deaf and hearing signers. Brain and Cognition. 2002;49:170–181. doi: 10.1006/brcg.2001.1498.

- Brainard MS, Knudsen EI. Experience-dependent plasticity in the inferior colliculus: A site for visual calibration of the neural representation of auditory space in the barn owl. The Journal of Neuroscience. 1993;13:4589–4608. doi: 10.1523/JNEUROSCI.13-11-04589.1993.

- Chang CC, Lin CJ. LIBSVM—A library for support vector machines (Version 3.20) [Computer software] 2014 Retrieved from http://www.csie.ntu.edu.tw/~cjlin/libsvm/

- Da Costa S, van der Zwaag W, Marques JP, Frackowiak RS, Clarke S, Saenz M. Human primary auditory cortex follows the shape of Heschl’s gyrus. The Journal of Neuroscience. 2011;31:14067–14075. doi: 10.1523/JNEUROSCI.2000-11.2011.

- Fine I, Finney EM, Boynton GM, Dobkins KR. Comparing the effects of auditory deprivation and sign language within the auditory and visual cortex. Journal of Cognitive Neuroscience. 2005;17:1621–1637. doi: 10.1162/089892905774597173.

- Finney EM, Fine I, Dobkins KR. Visual stimuli activate auditory cortex in the deaf. Nature Neuroscience. 2001;4:1171–1173. doi: 10.1038/nn763.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends in Cognitive Sciences. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- Goebel R, Esposito F, Formisano E. Analysis of functional image analysis contest (FIAC) data with BrainVoyager QX: From single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Human Brain Mapping. 2006;27:392–401. doi: 10.1002/hbm.20249.

- Gougoux F, Lepore F, Lassonde M, Voss P, Zatorre RJ, Belin P. Neuropsychology: Pitch discrimination in the early blind. Nature. 2004;430:309. doi: 10.1038/430309a.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haxby JV, Guntupalli JS, Connolly AC, Halchenko YO, Conroy BR, Gobbini MI, Ramadge PJ. A common, high-dimensional model of the representational space in human ventral temporal cortex. Neuron. 2011;72:404–416. doi: 10.1016/j.neuron.2011.08.026.

- Hickok G, Poeppel D, Clark K, Buxton RB, Rowley HA, Roberts TP. Sensory mapping in a congenitally deaf subject: MEG and fMRI studies of cross-modal non-plasticity. Human Brain Mapping. 1997;5:437–444. doi: 10.1002/(SICI)1097-0193(1997)5:6<437::AID-HBM4>3.0.CO;2-4.

- Hunt DL, Yamoah EN, Krubitzer L. Multisensory plasticity in congenitally deaf mice: How are cortical areas functionally specified? Neuroscience. 2006;139:1507–1524. doi: 10.1016/j.neuroscience.2006.01.023.

- Karns CM, Dow MW, Neville HJ. Altered cross-modal processing in the primary auditory cortex of congenitally deaf adults: A visual-somatosensory fMRI study with a double-flash illusion. The Journal of Neuroscience. 2012;32:9626–9638. doi: 10.1523/JNEUROSCI.6488-11.2012.

- Kral A, Schröder JH, Klinke R, Engel AK. Absence of cross-modal reorganization in the primary auditory cortex of congenitally deaf cats. Experimental Brain Research. 2003;153:605–613. doi: 10.1007/s00221-003-1609-z.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proceedings of the National Academy of Sciences, USA. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Levänen S, Hamdorf D. Feeling vibrations: Enhanced tactile sensitivity in congenitally deaf humans. Neuroscience Letters. 2001;301:75–77. doi: 10.1016/s0304-3940(01)01597-x.

- Levänen S, Jousmäki V, Hari R. Vibration-induced auditory-cortex activation in a congenitally deaf adult. Current Biology. 1998;8:869–872. doi: 10.1016/s0960-9822(07)00348-x.

- Lomber SG, Meredith MA, Kral A. Crossmodal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nature Neuroscience. 2010;13:1421–1427. doi: 10.1038/nn.2653.

- Meredith MA, Kryklywy J, McMillan AJ, Malhotra S, Lum-Tai R, Lomber SG. Crossmodal reorganization in the early deaf switches sensory, but not behavioral roles of auditory cortex. Proceedings of the National Academy of Sciences, USA. 2011;108:8856–8861. doi: 10.1073/pnas.1018519108.

- Meredith MA, Lomber SG. Somatosensory and visual crossmodal plasticity in the anterior auditory field of early-deaf cats. Hearing Research. 2011;280:38–47. doi: 10.1016/j.heares.2011.02.004.

- Meyer K, Kaplan JT, Essex R, Webber C, Damasio H, Damasio A. Predicting visual stimuli on the basis of activity in auditory cortices. Nature Neuroscience. 2010;13:667–668. doi: 10.1038/nn.2533.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task: An event-related potential and behavioral study. II. Congenitally deaf adults. Brain Research. 1987a;405:268–283. doi: 10.1016/0006-8993(87)90296-4.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task: An event-related potential and behavioral study. III. Separate effects of auditory deprivation and acquisition of a visual language. Brain Research. 1987b;405:284–294. doi: 10.1016/0006-8993(87)90297-6.

- Nishimura H, Hashikawa K, Doi K, Iwaki T, Watanabe Y, Kusuoka H, Kubo T. Sign language ‘heard’ in the auditory cortex. Nature. 1999;397:116. doi: 10.1038/16376.

- Penhune VB, Zatorre RJ, MacDonald JD, Evans AC. Interhemispheric anatomical differences in human primary auditory cortex: Probabilistic mapping and volume measurement from magnetic resonance scans. Cerebral Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661.

- Rajan R, Aitkin LM, Irvine DR. Azimuthal sensitivity of neurons in primary auditory cortex of cats. II. Organization along frequency-band strips. Journal of Neurophysiology. 1990;64:888–902. doi: 10.1152/jn.1990.64.3.888.

- Reynolds HN. Effects of foveal stimulation on peripheral visual processing and laterality in deaf and hearing subjects. American Journal of Psychology. 1993;106:523–540.

- Roe AW, Pallas SL, Hahm JO, Sur M. A map of visual space induced in primary auditory cortex. Science. 1990;250:818–820. doi: 10.1126/science.2237432.

- Sadato N, Pascual-Leone A, Grafman J, Ibañez V, Deiber MP, Dold G, Hallett M. Activation of the primary visual cortex by Braille reading in blind subjects. Nature. 1996;380:526–528. doi: 10.1038/380526a0.

- Sandmann P, Dillier N, Eichele T, Meyer M, Kegel A, Pascual-Marqui RD, Debener S. Visual activation of auditory cortex reflects maladaptive plasticity in cochlear implant users. Brain. 2012;135:555–568. doi: 10.1093/brain/awr329.

- Schneider KA, Richter MC, Kastner S. Retinotopic organization and functional subdivisions of the human lateral geniculate nucleus: A high-resolution functional magnetic resonance imaging study. The Journal of Neuroscience. 2004;24:8975–8985. doi: 10.1523/JNEUROSCI.2413-04.2004.

- Scott GD, Karns CM, Dow MW, Stevens C, Neville HJ. Enhanced peripheral visual processing in congenitally deaf humans is supported by multiple brain regions, including primary auditory cortex. Frontiers in Human Neuroscience. 2014;8 doi: 10.3389/fnhum.2014.00177. Article 177.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Tootell RB. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268:889–893. doi: 10.1126/science.7754376.

- Shiell MM, Champoux F, Zatorre RJ. Reorganization of auditory cortex in early-deaf people: Functional connectivity and relationship to hearing aid use. Journal of Cognitive Neuroscience. 2015;27:150–163. doi: 10.1162/jocn_a_00683.

- Smith AM, Lewis BK, Ruttimann UE, Ye FQ, Sinnwell TM, Yang Y, Frank JA. Investigation of low frequency drift in fMRI signal. NeuroImage. 1999;9:526–533. doi: 10.1006/nimg.1999.0435.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York, NY: Thieme; 1988.

- Van Boven RW, Hamilton RH, Kauffman T, Keenan JP, Pascual-Leone A. Tactile spatial resolution in blind Braille readers. Neurology. 2000;54:2230–2236. doi: 10.1212/wnl.54.12.2230.

- Vetter P, Smith FW, Muckli L. Decoding sound and imagery content in early visual cortex. Current Biology. 2014;24:1256–1262. doi: 10.1016/j.cub.2014.04.020.

- Watanabe M, Cheng K, Murayama Y, Ueno K, Asamizuya T, Tanaka K, Logothetis N. Attention but not awareness modulates the BOLD signal in the human V1 during binocular suppression. Science. 2011;334:829–831. doi: 10.1126/science.1203161.

- Watkins KE, Shakespeare TJ, O’Donoghue MC, Alexander I, Ragge N, Cowey A, Bridge H. Early auditory processing in area V5/MT+ of the congenitally blind brain. The Journal of Neuroscience. 2013;33:18242–18246. doi: 10.1523/JNEUROSCI.2546-13.2013.

- Wong C, Chabot N, Kok MA, Lomber SG. Modified areal cartography in auditory cortex following early- and late-onset deafness. Cerebral Cortex. 2014;24:1778–1792. doi: 10.1093/cercor/bht026.

- Zhang X, Zhaoping L, Zhou T, Fang F. Neural activities in V1 create a bottom-up saliency map. Neuron. 2012;73:183–192. doi: 10.1016/j.neuron.2011.10.035.
