Abstract

Background

Techniques have been developed to compute statistics on distributed datasets without revealing private information except the statistical results. However, duplicate records in a distributed dataset may lead to incorrect statistical results. Therefore, to increase the accuracy of the statistical analysis of a distributed dataset, secure deduplication is an important preprocessing step.

Methods

We designed a secure protocol for the deduplication of horizontally partitioned datasets with deterministic record linkage algorithms. We provided a formal security analysis of the protocol in the presence of semi-honest adversaries. The protocol was implemented and deployed across three microbiology laboratories located in Norway, and we ran experiments on the datasets in which the number of records for each laboratory varied. Experiments were also performed on simulated microbiology datasets and data custodians connected through a local area network.

Results

The security analysis demonstrated that the protocol protects the privacy of individuals and data custodians under a semi-honest adversarial model. More precisely, the protocol remains secure with the collusion of up to N − 2 corrupt data custodians. The total runtime for the protocol scales linearly with the addition of data custodians and records. One million simulated records distributed across 20 data custodians were deduplicated within 45 s. The experimental results showed that the protocol is more efficient and scalable than previous protocols for the same problem.

Conclusions

The proposed deduplication protocol is efficient and scalable for practical uses while protecting the privacy of patients and data custodians.

Electronic supplementary material

The online version of this article (doi:10.1186/s12911-016-0389-x) contains supplementary material, which is available to authorized users.

Keywords: Bloom Filter, Data Reuse, Deduplication, Distributed Statistical Computation, Data Linkage, Duplicate Record, Electronic Health Record, Privacy, Record Linkage, Set Intersection

Background

Electronic health record (EHR) systems have been in existence for many years. The increased adoption of EHR systems has led, and continues to lead, to the collection of large amounts of health data [1]. Large amounts of administrative, survey, and registry data are also being collected. These data could aid in the development of scientific evidence that helps improve the effectiveness, efficiency, and quality of care of healthcare systems [2–4].

Introduction

The focus of this paper is the reuse of health data horizontally partitioned between data custodians, such that each data custodian provides the same attributes for a set of patients. Reusing data from multiple data custodians provides a sufficient number of patients who satisfy the inclusion criteria of a particular study. The number of patients at a single data custodian may provide insufficient statistical power, especially for studies on rare exposures or outcomes. When data are collected across multiple data custodians, the data of a heterogeneous mix of patients can be reused.

There has been substantial interest in the reuse of EHR data for public health surveillance, which also requires data from multiple data custodians covering the geographic area of interest [5–7]. One of the EHR meaningful use criteria in the United States is the ability to release health data for public health surveillance [8].

The horizontally partitioned datasets required for a health study or disease surveillance are often queried by distributing the query to data custodians, who execute the query and store a copy of the data extracts locally [9]. We refer to the data extracts distributed across data custodians as a virtual dataset (VD). Consider the execution of the query “select the records of patients tested for influenza A viruses in January 2016” across three data custodians, D 1, D 2, and D 3. Figure 1 illustrates a VD that consists of the query results for the data custodians.

Fig. 1.

A simplified virtual dataset of influenza A test results distributed across three data custodians

A VD may contain duplicate records from data custodians that cover overlapping areas and areas in close proximity [10–12]. The duplicate records can be exact or approximate. A set of records are exact duplicates if the records are compared using exact comparison functions, and the records have the same value for all attributes used for comparison. In contrast, approximate duplicate records are compared using comparison functions that allow approximate similarities, and the records have different values for one or more attributes.

Privacy-preserving distributed statistical computation

Access to and the use of patient data for research raise significant privacy concerns for the various stakeholders (i.e., patients and data custodians) [7, 13, 14]. A recent approach that addresses privacy concerns is secure multi-party computation (SMC), which deals with the problem of computing a function f on distributed data without revealing any information except the results. SMC protocols have been developed for the statistical computation of distributed data that do not reveal anything except the results [15–21].

Statistical analysis of a virtual dataset that contains duplicate records may lead to incorrect results. Let us consider a query of the number of patients in a VD that satisfy a set of criteria. When there are duplicate records in the VD, a simple summation of the data custodians’ local counts will not return the same result as running the query against the combined datasets of all data custodians stored in a central database.

For example, the distributed statistical computation of the number of women who tested positive for influenza A against the VD shown in Fig. 1 would return an incorrect result. Patient P3 would be counted twice, as she has a positive test result stored in D 1 and D 2. Therefore, to improve the accuracy of the statistical results, deduplication of the VD is a necessary preprocessing step before statistical analysis is conducted.
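The overcounting effect can be illustrated with a small sketch. The patient identifiers and the contents of each data custodian below are hypothetical and only loosely mirror the situation described for Fig. 1; the sketch compares a naive sum of local counts with the count on a pooled, duplicate-free set.

```python
# Hypothetical positive-test patient IDs held by each data custodian
# (illustrative values, not the actual content of Fig. 1).
positives = {
    "D1": {"P1", "P3"},
    "D2": {"P3", "P5"},
    "D3": {"P1", "P7"},
}

# Naive distributed count: sum of the local counts.
naive_count = sum(len(ids) for ids in positives.values())

# Count on a (hypothetical) centralized dataset: each patient counted once.
deduplicated_count = len(set().union(*positives.values()))

print(naive_count, deduplicated_count)
```

In this toy setting, the naive sum counts the two patients who appear at two custodians twice, which is the discrepancy that deduplication is meant to remove.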

Privacy-preserving deduplication

Deduplication is closely related to record linkage, the process of linking records at the same or different data custodians that refer to the same individual. In contrast to record linkage, the final goal of deduplication is to remove duplicate records while maintaining a single occurrence of each record. Privacy-preserving record linkage (PPRL; also known as private set intersection and private record linkage) protocols have been developed to link records across multiple data custodians without revealing any information other than the linkage result [22, 23]. The main challenges of these protocols for practical use include the quality of the linkage, privacy, efficiency, and scalability [22].

The objective of this paper is to develop an efficient and scalable protocol for the deduplication of a VD while protecting the privacy of the patients and the data custodians. The proposed protocol supports various deterministic record linkage algorithms.

Our main contributions can be summarized as follows: We propose a novel efficient and scalable protocol based on Bloom filters for the privacy-preserving deduplication of a horizontally partitioned dataset. We provide proof of the security of the protocol against a semi-honest adversarial model in which the participating entities are assumed to follow the protocol steps, but the entities may try to learn private information from the messages exchanged during the protocol execution. We conducted a theoretical analysis of the protocol’s efficiency and scalability. We implemented a prototype of the protocol and ran experiments among three microbiology laboratories located in Norway. We also ran experiments using simulated microbiology laboratory datasets with up to 20 data custodians and one million records.

The remainder of this section reviews related work, provides a use case for the deduplication problem, and formally states the problem. The Methods section outlines the requirements of the proposed protocol, as well as the threat model and assumptions, Bloom filter, notations, basic set operations, and secure sum protocol used in the protocol. Then, the proposed protocol is described. The Results section presents the security analysis, implementation, and evaluations of the protocol. Finally, the Discussion and Conclusions are presented.

Related work

Several PPRL protocols have been developed based on either deterministic or probabilistic matching of a set of identifiers. Interested readers are referred to [22, 23] for an extensive review of the PPRL protocols. The protocols can be broadly classified as protocols with or without a third party. In this section, we review privacy-preserving protocols for deterministic record linkage. These protocols are secure against the semi-honest adversarial model, which is the adversarial model considered in this paper.

A record contains direct identifiers, indirect identifiers (quasi-identifiers), and other health information. Direct identifiers are attributes that can uniquely identify an individual across data custodians, such as a national identification number (ID). In contrast, quasi-identifiers are attributes that in combination with other attributes can identify an individual, such as name, sex, date of birth, and address. In this paper, the terms identifier and quasi-identifier are used interchangeably.

Weber [12] and Quantin et al. [24] proposed protocols that use keyed hash functions. These protocols require data custodians to send a hash of their records’ identifiers to a third party that performs exact matching and returns the results. The data custodians use a keyed hash function with a common secret key to prevent dictionary attacks by the third party. These protocols are secure as long as the third party does not collude with a data custodian. Quantin et al.’s protocol [24] performs phonetic encoding of the identifiers (i.e., last name, first name, date of birth, and sex) before hashing, in order to reduce the impact of typing errors in the identifiers on the quality of the linkage.
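The general idea of keyed-hash matching through a third party can be sketched as follows. This is a minimal illustration, assuming HMAC-SHA256 as the keyed hash function and made-up identifiers; the cited protocols may use different hash constructions and preprocessing, and the names `keyed_hash` and `shared_key` are hypothetical.

```python
import hashlib
import hmac

def keyed_hash(identifier, key):
    """Return the HMAC-SHA256 digest of a normalized identifier."""
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()

# Hypothetical secret shared by the data custodians but not the third party.
shared_key = b"hypothetical-shared-secret"

# Each custodian hashes its (made-up) identifiers locally.
custodian_a = {keyed_hash(i, shared_key) for i in ["ID-0001", "ID-0002"]}
custodian_b = {keyed_hash(i, shared_key) for i in ["ID-0002", "ID-0003"]}

# The third party sees only digests and performs exact matching on them.
matches = custodian_a & custodian_b
print(len(matches))
```

Without the key, the third party cannot hash candidate identifiers itself, which is what blocks a dictionary attack as long as it does not collude with a data custodian.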

Several protocols were proposed based on commutative encryption schemes1 [25–27]. In these protocols, each data custodian, in turn, encrypts the unique identifiers for all records across the data custodians using its private key, and consequently, each unique identifier is encrypted with the private keys of all the data custodians. Then, the encrypted unique identifiers are compared with each other, as the encrypted values of two unique identifiers match if the two unique identifiers match. The protocols proposed in [25, 26] are two-party computation protocols, whereas Adam et al.’s [27] protocol is a multi-party computation protocol.
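The commutativity property these protocols rely on can be illustrated with a toy exponentiation cipher. This is only a sketch under stated assumptions: the modulus, the key generation, and the helper names (`generate_key`, `to_group_element`, `encrypt`, `double_encrypt`) are illustrative choices, the parameters are far too small for real use, and the schemes in [25–27] may differ.

```python
import hashlib
import math
import secrets

P = 2**127 - 1  # a Mersenne prime; toy-sized modulus for illustration only

def generate_key():
    """Pick a private exponent that is invertible modulo P - 1."""
    while True:
        key = secrets.randbelow(P - 3) + 2  # value in [2, P - 2]
        if math.gcd(key, P - 1) == 1:
            return key

def to_group_element(identifier):
    """Hash an identifier to an integer in [1, P - 1]."""
    digest = hashlib.sha256(identifier.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (P - 1) + 1

def encrypt(value, key):
    """Exponentiation cipher: E_key(value) = value^key mod P."""
    return pow(value, key, P)

def double_encrypt(identifier, first_key, second_key):
    """Encrypt with one custodian's key, then with the other's."""
    return encrypt(encrypt(to_group_element(identifier), first_key), second_key)

key_a, key_b = generate_key(), generate_key()

# The order of encryption does not matter, so both custodians end up with
# the same ciphertext for the same (made-up) identifier.
same = double_encrypt("ID-0001", key_a, key_b) == double_encrypt("ID-0001", key_b, key_a)
print(same)
```

Because every identifier must pass through every custodian's key, the amount of data exchanged grows with both the number of records and the number of custodians.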

The protocols reviewed thus far require the exchange of a long list of hashed or encrypted identifiers, which can limit the scalability of the protocols as the number of data custodians and records increases. In addition, protocols based on commutative encryption require a number of communication rounds that is quadratic in the number of data custodians.

Multi-party private set intersection protocols were designed based on Bloom filters2 [28, 29]. In general, each data custodian encodes the unique identifier values of its records as a Bloom filter (see the description of a Bloom filter in the Methods section). The protocols use different privacy-preserving techniques, as discussed below, to intersect the Bloom filters and then create a Bloom filter that encodes the unique identifiers of the records that have exact matches at all data custodians. Then, the data custodian queries the unique identifiers of its records in the intersection Bloom filter to identify the records that match.

In Lai et al.’s [28] protocol, each data custodian splits its Bloom filter into multiple segments and distributes them to the other participating data custodians while keeping one segment for itself. Then, each data custodian locally intersects its share of the Bloom filter segments and distributes it to the other data custodians. Finally, the data custodians combine the results of the intersection of the Bloom filter segments to create a Bloom filter that is an intersection between all the data custodians’ Bloom filters. The protocol requires a number of communication rounds that is quadratic in the number of data custodians, and the protocol is susceptible to a dictionary attack on the unique identifiers that have all the array positions in the same segment of the Bloom filter.

In Many et al.’s [29] protocol, each data custodian uses secret sharing schemes3 [30] to split each counter position of the data custodian’s Bloom filter and then distributes them to three semi-trusted third parties. The third parties use secure multiplication and comparison protocols to intersect the data custodians’ Bloom filters, which adds overhead to the protocol.

Dong et al. [31] proposed a two-party protocol for private set intersection. The protocol introduced a new variant of a Bloom filter, called a garbled Bloom filter, using a secret sharing scheme. The first data custodian encodes the unique identifiers of the data custodian’s records as a Bloom filter, whereas the second data custodian encodes the unique identifiers of its records as a garbled Bloom filter. Then, the data custodians intersect their Bloom filters using an oblivious transfer technique (OT)4 [32], which adds significant overhead to the overall performance of the protocol.

Karapiperis et al. [33] proposed multi-party protocols for a secure intersection based on the Count-Min sketch.5 Each data custodian locally encodes the unique identifiers of its records based on the Count-Min sketch, denoted as the local synopsis, and then, the data custodians jointly compute the intersections of the local synopses using a secure sum protocol. The authors proposed two protocols that use secure sum protocols based on additive homomorphic encryption [34] and obscure the secret value with a random number [19, 35]. The protocols protect only the data custodians’ privacy, whereas our protocol protects individuals’ and data custodians’ privacy. The additive homomorphic encryption adds computation and communication overhead as the number of records and data custodians increases.

The results of the protocols in [28, 29, 31, 33] may contain false positives. Although a small false positive probability can be chosen, any nonzero false positive probability may be unacceptable for some applications.

Use case

The need for comprehensive and timely infectious disease surveillance is fundamental for public health monitoring that makes early prevention and control of disease outbreaks possible. EHRs have been used as a data source for routine syndromic and laboratory-based public health surveillance [5–7].

The use case considered in this paper is distributed disease surveillance [6]. In particular, we consider the Snow system that is used for experimental evaluations of the protocol proposed in this paper [36]. The Snow system uses microbiology laboratory test results from multiple microbiology laboratories in Norway. The laboratories collect and analyze samples from patients in primary care settings, such as general practitioner offices and nursing homes.

Every day, the Snow system extracts anonymized test results and maintains the datasets within local databases at each laboratory according to a predefined data model. The data extracts contain attributes, such as infectious agent, age, sex, geographic area, and time. The Snow system broadcasts a query across the laboratories and reveals only the number of matching patients at each laboratory. We extend the Snow system with a secure sum protocol to hide the local count of a single laboratory [20].

Consider the statistical query of the count of positive or negative test results for a disease in a particular stratum of individuals (e.g., male or female) within a VD. A simple summation of the laboratories’ local counts gives an overestimated count when the test results are duplicated across the laboratories. A laboratory may transfer test samples to another laboratory when the first laboratory does not have the appropriate laboratory equipment. Then, when the test results are sent to the first laboratory, the same test result appears in both laboratories’ datasets.

In the context of infectious disease surveillance, two or more separate tests for an individual that have a positive result can also be considered duplicates depending on the required aggregate query for the dataset, such as the number of patients who have had a particular disease and the number of disease episodes.

Individuals may be infected with the same disease multiple times within a given time period, which may lead to being tested for the same disease at multiple laboratories. Individuals can also be tested at multiple laboratories for the same infection; this practice is more common in chronic infections. Testing at multiple laboratories may occur when patients switch healthcare providers, receive emergency care, or visit different providers during an episode of infection [37]. In Norway, primary care institutions may send samples collected from a patient to different laboratories, and patients can change general practitioners up to twice a year.

Consider a statistical query of the number of individuals infected with influenza A viruses within the VD shown in Fig. 1. The query requires that patient P1 is counted once, even if the patient has two positive test results at data custodians D 1 and D 3. For this query, the objective of the deduplication is to link the positive test results for each individual in the VD and to maintain the test result at only one of the laboratories.

When the number of disease episodes is counted, the number of positive test results for different disease episodes for an individual across the laboratories is counted separately. However, the positive test results for an individual in the same disease episode should be counted once. For example, Lazarus et al. [38] grouped two healthcare service encounters for a patient for a lower respiratory infection into one episode if the subsequent visit occurred within six weeks of the preceding visit. The researchers assumed that the second visit likely represented a follow-up visit for the same infection. In this context, the objective of deduplication is to link an individual’s positive test results for the same disease episode and keep the test result at only one of the laboratories.
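The episode rule described above can be sketched as a simple grouping of test dates. The six-week window follows the description of Lazarus et al. [38]; the function name `count_episodes` and the example dates are hypothetical, and the sketch assumes all dates belong to one individual and one disease.

```python
from datetime import date, timedelta

# Window reported in the text for Lazarus et al. [38].
EPISODE_WINDOW = timedelta(weeks=6)

def count_episodes(test_dates):
    """Count episodes: a test within six weeks of the preceding test
    is treated as part of the same episode."""
    episodes = 0
    previous = None
    for current in sorted(test_dates):
        if previous is None or current - previous > EPISODE_WINDOW:
            episodes += 1
        previous = current
    return episodes

# Made-up positive test dates for one individual across laboratories.
dates = [date(2016, 1, 5), date(2016, 4, 1), date(2016, 1, 20)]
episode_count = count_episodes(dates)
print(episode_count)
```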

We describe the protocol proposed in this paper in the context of deduplicating a VD to be able to accurately compute the statistical count of the number of individuals infected with the disease in question. However, the protocol can be easily extended to other types of statistical count queries.

Problem statement and definitions

In this section, we define the context for the deduplication problem and the problem statement.

Data custodian (Di)

We assume three or more data custodians (e.g., hospitals, general practitioner offices, or medical laboratories) are willing to share their data for a secondary use in a health study but are concerned about privacy risks. The data custodians form a distributed health research network denoted by $\mathcal{D} = \{D_1, D_2, \ldots, D_N\}$, where D i is a data custodian.

Data schema

The heterogeneity of data models is a challenge in reusing data from multiple data custodians [39]. Therefore, the distributed data must be harmonized through standardization. For example, several distributed health research networks, such as Mini-Sentinel [40] and the Shared Health Research Information Network (SHRINE) [41], create a common data model by transforming the data at each data custodian into a predefined common data model and data representations [9].

In this paper, for simplicity, we assume a common data model exists across the data custodians that enforces uniform attribute naming conventions, definitions, and data storage formats. We also assume the data distributed across the data custodians are horizontally partitioned in that each data custodian D i collects the same attributes for a set of patients.

Virtual dataset (VD)

We assume the data query for a particular study can be broadcast to all data custodians. Then, each data custodian executes the query and stores a copy of the query result locally. The data extracts across the data custodians form a virtual dataset. As with the data schema, we assume that the VD adheres to a common data model.

Record linkage

We consider deterministic record linkage algorithms in which a set of records belongs to the same person if they exactly or partially match on a predefined combination of identifiers. First, we describe the protocol proposed in this paper by assuming the existence of a common unique identifier j for a patient denoted by p j. Second, we extend the protocol for deterministic record linkage using quasi-identifiers, when the available unique identifier is low quality or does not exist.

Problem statement

Assume that each data custodian in a subset of the data custodians has a record r j of patient p j in a virtual dataset. The problem addressed in this paper is to find a privacy-preserving protocol through which the patient’s duplicate records are identified and removed from the virtual dataset while one occurrence of the record is maintained at one of the data custodians.

Methods

Overview

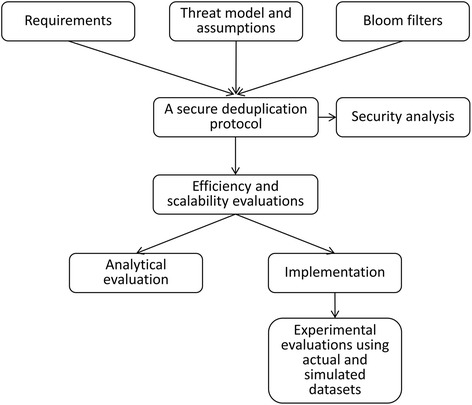

Figure 2 shows an overview of the methods we used to develop and evaluate the secure deduplication protocol proposed in this paper. First, we defined the requirements for the protocol and the threat model and assumptions with which the protocol would be secure. We presented the building blocks used in the protocol, such as a Bloom filter, functions for the basic operations of Bloom filters, and secure sum protocol, and described the proposed protocol.

Fig. 2.

An overview of the methods for developing and evaluating the proposed protocol

We then performed a security analysis of the proposed protocol. We also conducted theoretical and experimental evaluations of the protocol’s efficiency and scalability. We implemented a prototype of the protocol and ran the experiments on the virtual datasets distributed across three Norwegian microbiology laboratories. We also ran experiments on simulated datasets with up to 20 data custodians and one million records.

Requirements for secure deduplication protocol

Data custodians’ privacy concerns about disclosing patient data continue, even in the context of a pandemic [42]. Therefore, a deduplication protocol should protect the privacy of patients who have records in a VD.

However, even when patients’ privacy is protected, data custodians (e.g., clinicians and health institutions) have expressed concerns about their own privacy risks [7]. For example, deduplication may reveal the total number of patients in a data custodian who satisfy certain criteria. Although this information does not directly reveal any information about the patients, data custodians might consider this information sensitive, and in many scenarios, it needs to be hidden.

For example, a general practitioner may fear that the number of laboratory test requests and results she sent to laboratories could be used to evaluate her testing behavior. A microbiology laboratory may fear that other laboratories and investors may use the number of tests the laboratory performs during a time period to gain competitive advantage. Therefore, the protocol should be designed in such a way that the total number of patients remains private.

The protocol should allow only a data custodian to learn which of its records have duplicates in the VD, which does not reveal any new sensitive information to the data custodian. However, the identity of the data custodians that contributed the duplicate records should remain unknown.

For example, in Fig. 1, the influenza A–positive test results for patient P1 are stored at D 1 and D 3. Data custodian D 2 cannot learn any information about P1. D 1 and D 3 learn only that P1 tested positive for influenza A at another anonymous laboratory, which is not sensitive information.

Often, public health studies require a large number of patients’ data from several data custodians. Therefore, the deduplication protocol should be computationally efficient and scale with the number of records and participating data custodians.

Threat model and assumptions

We considered a semi-honest (honest-but-curious) adversarial model in which the data custodians correctly follow the protocol specifications using the data custodians’ correct data. However, the data custodians might use the messages exchanged during the protocol execution to learn information that otherwise should remain private. The adversarial model allows efficient and scalable protocols, whereas the malicious adversarial model provides stronger security at the expense of significant computation and communication costs [43–46].

We also assumed a semi-trusted third party (STTP), denoted as the coordinator, that participates in the protocol without any input. In addition, we assumed that the coordinator follows the protocol specification and does not collude with a data custodian. An efficient and scalable protocol can be constructed using an STTP [7, 47].

We assumed that the communications between two entities that participate in the protocol are secure. Therefore, an adversary cannot read the messages sent between two honest entities, and the integrity of the messages is verified.

Bloom filter

A Bloom filter (BF) is a space-efficient probabilistic data structure that encodes a set of n elements [48]. A BF is an array of size m, and each array position has one bit initially set to 0. The Bloom filter allows insertion and membership queries of an element x.

Bloom filter operations are performed using k independent hash functions H h(.), where 1 ≤ h ≤ k. First, the hash of an element x is computed using each hash function H h(x). Second, the modulo m of each hash value is computed to get k array positions of BF, b h(x) = H h(x) mod m, where b h(x) ∈ [0, m − 1]. Then, x is inserted into the BF by setting all the positions b h(x) of BF to 1. The element x is concluded to be a non-member of the BF if at least one of the positions b h(x) of the BF is 0.

A membership query result can be a false positive due to the hash collisions that occur when all the positions b h(x) of the BF have been set to 1 as a result of the insertion of other elements. After n elements (the expected number of elements) have been inserted into the BF, the false positive probability of a membership query is $P(\text{false positive}) \approx \left(1 - e^{-kn/m}\right)^{k}$ [49]. Figure 3 presents an example of a Bloom filter through inserting and querying elements.

Fig. 3.

Insertion and membership query for a Bloom filter (BF) (m = 16, k = 3). b i(x j) denotes an array position for x j with a hash function denoted as H i(.)
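The insertion and membership query described above can be sketched in a few lines. This is an illustrative implementation, not the authors' code: the k hash functions are simulated by salting SHA-256 with the index h, and the class and function names are hypothetical.

```python
import hashlib
import math

class BloomFilter:
    """Bit array of size m with k hash functions (simulated via salted SHA-256)."""

    def __init__(self, m, k):
        self.m = m
        self.k = k
        self.bits = [0] * m

    def positions(self, x):
        """Return the k array positions b_h(x) = H_h(x) mod m."""
        result = []
        for h in range(1, self.k + 1):
            digest = hashlib.sha256(f"{h}:{x}".encode("utf-8")).digest()
            result.append(int.from_bytes(digest, "big") % self.m)
        return result

    def insert(self, x):
        for b in self.positions(x):
            self.bits[b] = 1

    def query(self, x):
        """False means x is definitely absent; True may be a false positive."""
        return all(self.bits[b] == 1 for b in self.positions(x))

def false_positive_probability(n, m, k):
    """Approximation (1 - e^(-kn/m))^k after n insertions."""
    return (1 - math.exp(-k * n / m)) ** k

bf = BloomFilter(m=16, k=3)
bf.insert("x1")
bf.insert("x2")
print(bf.query("x1"), false_positive_probability(n=2, m=16, k=3))
```

A query for an inserted element always succeeds, whereas a query for an element that was never inserted fails unless all of its positions happen to have been set by other elements.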

A counting Bloom filter (CBF) is an extension of a Bloom filter [49, 50] that supports the deletion of elements, as well as insertion and membership queries. Each array position of a CBF has a counter size c greater than one bit that is large enough to avoid counter overflow.

An element x is inserted into the CBF by incrementing all the counters at the array positions b h(x) of the CBF by 1. Similarly, an element x is deleted from the CBF by decrementing all the counters at positions b h(x) by 1. The element x is concluded to be a non-member of the CBF if at least one of the positions b h(x) is 0. A membership query has the same false positive probability as a Bloom filter.
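A counting Bloom filter differs from the plain variant only in that positions hold counters. The following sketch, with hypothetical names and salted SHA-256 standing in for the k hash functions, shows insertion, deletion, and membership queries; counter overflow is ignored because Python integers are unbounded.

```python
import hashlib

class CountingBloomFilter:
    """Array of m counters with k hash functions (simulated via salted SHA-256)."""

    def __init__(self, m, k):
        self.m = m
        self.k = k
        self.counters = [0] * m

    def positions(self, x):
        """Return the k array positions b_h(x) = H_h(x) mod m."""
        result = []
        for h in range(1, self.k + 1):
            digest = hashlib.sha256(f"{h}:{x}".encode("utf-8")).digest()
            result.append(int.from_bytes(digest, "big") % self.m)
        return result

    def insert(self, x):
        for b in self.positions(x):
            self.counters[b] += 1

    def delete(self, x):
        # Only meaningful for elements that were inserted earlier.
        for b in self.positions(x):
            self.counters[b] -= 1

    def query(self, x):
        """False means x is definitely absent; True may be a false positive."""
        return all(self.counters[b] > 0 for b in self.positions(x))

cbf = CountingBloomFilter(m=64, k=3)
cbf.insert("x1")
cbf.insert("x2")
cbf.delete("x1")
print(cbf.query("x2"), sum(cbf.counters))
```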

Notations

In this section, we describe the notations that are used in the remainder of the paper. I i denotes a set of the unique identifiers (IDs) of the records of a data custodian in a particular deduplication query. The union of all the IDs of the records across all data custodians is denoted as S = I 1 ∪ I 2 ∪ … ∪ I N. We write S ∩ I i and S ∪ I i to denote the intersection and the union between sets S and I i, respectively.

We use CBF s to denote a counting Bloom filter that encodes set S and use CBF iI and BF iI to denote a counting Bloom filter and a Bloom filter that encode set I i, respectively. CBF iS ∩ I and CBF iS ∪ I encode sets S ∩ I i and S ∪ I i, respectively.

We use CBF ir to denote the random counting Bloom filter of data custodian D i and use CBF R to denote the sum of all the random counting Bloom filters, $CBF_R = \sum_{i=1}^{N} CBF_r^i$. However, CBF iR denotes the partial sum of the random counting Bloom filters, $CBF_R^i = \sum_{j=0}^{i} CBF_r^j$, where CBF 0r denotes the initial random counting Bloom filter of the leader data custodian D L.

The union of CBF iI and CBF ir is denoted as CBF ir ∪ I, and the Bloom filter representation of CBF ir ∪ I is denoted as BF ir ∪ I. CBF R ∪ S denotes the union of CBF R and CBF S.

Set operations on Bloom filters

Table 1 describes the main functions for the set operations on Bloom filters that are required for the construction of our protocol. Interested readers are referred to Additional file 1 for detailed descriptions and the algorithms of the functions.

Table 1.

Functions for basic operations of Bloom filters

| Function | Description |
|---|---|
| add(CBF ir, CBF iI) | Returns counting Bloom filter CBF ir ∪ I that represents the summation of CBF ir and CBF iI |
| sub(CBF R ∪ S, CBF R) | Returns counting Bloom filter CBF S that represents the subtraction of CBF R from CBF R ∪ S |
| intersect(CBF S, BF iI) | Returns counting Bloom filter CBF iS ∩ I that represents the intersection between CBF S and BF iI |
| count(CBF iS ∩ I, {b 1(x), b 2(x), …, b k(x)}) | Returns f that is equal to the number of occurrences of x in CBF iS ∩ I |
| toBloomFilter(CBF iI) | Returns Bloom filter BF iI that represents CBF iI |
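One plausible position-wise reading of the functions in Table 1 is sketched below. The exact algorithms are given in Additional file 1, which is not reproduced here, so the bodies are assumptions: filters are plain Python lists of equal length, counter-size wraparound is ignored, and `count` is assumed to return the minimum counter over the element's positions.

```python
def add(cbf_a, cbf_b):
    """Position-wise sum of two counting Bloom filters."""
    return [a + b for a, b in zip(cbf_a, cbf_b)]

def sub(cbf_a, cbf_b):
    """Position-wise subtraction of cbf_b from cbf_a."""
    return [a - b for a, b in zip(cbf_a, cbf_b)]

def to_bloom_filter(cbf):
    """Bloom filter with a 1 wherever the counter is nonzero."""
    return [1 if c > 0 else 0 for c in cbf]

def intersect(cbf_s, bf_i):
    """Keep the counters of cbf_s only at positions set in bf_i."""
    return [c if bit == 1 else 0 for c, bit in zip(cbf_s, bf_i)]

def count(cbf, positions):
    """Assumed occurrence estimate: the smallest counter at the positions of x."""
    return min(cbf[p] for p in positions)

# Toy filters with m = 4 (illustrative values only).
cbf_s = [2, 0, 1, 3]
bf_i = [1, 1, 0, 1]
cbf_s_and_i = intersect(cbf_s, bf_i)
print(cbf_s_and_i, count(cbf_s_and_i, [0, 3]))
```

Under this reading, `sub(add(r, s), r)` recovers `s`, which is the property the protocol needs when random filters are added and later removed.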

Secure sum protocol

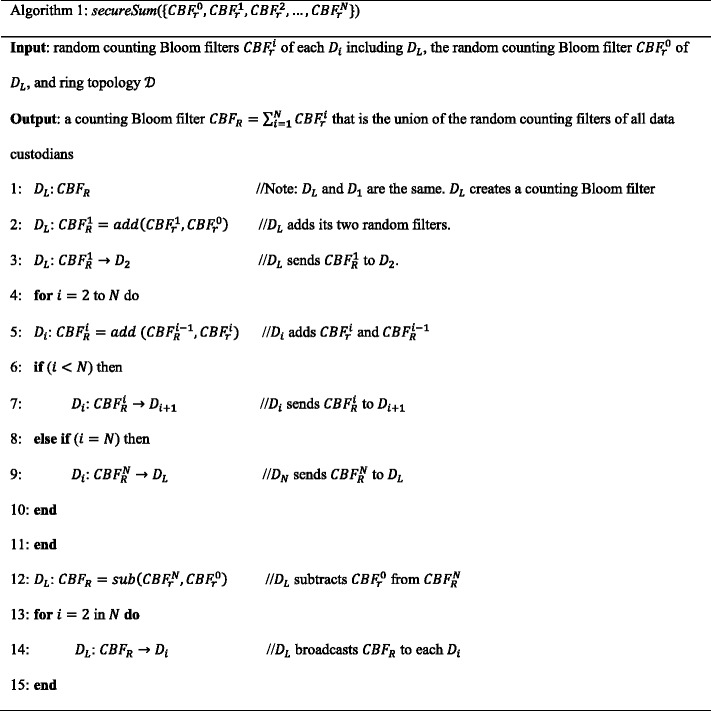

Several secure sum protocols are constructed using different building blocks [7, 17, 51, 52]. Secure sum protocols compute the sum $\sum_{i=1}^{N} v_i$, where v i ∈ [0, m) is the secret value of data custodian D i. The protocols compute the sum without disclosing v i to any entity that participates in the protocol. We extend the secure sum protocol proposed in [19, 35] to compute the union of random counting Bloom filters, $CBF_R = \sum_{i=1}^{N} CBF_r^i$, where CBF ir is the random counting Bloom filter of D i. Assume that D 1 is selected as the leader data custodian, denoted as D L. The protocol steps are shown in Algorithm 1.

In steps 1–3, the leader data custodian D L computes CBF 1R = add(CBF 1r, CBF 0r) and sends the result CBF 1R to data custodian D 2. In steps 4–11, each data custodian D i, in turn, computes CBF iR = add(CBF ir, CBF i − 1R) where 2 ≤ i ≤ N and CBF i − 1R is the value received from the previous data custodian D i − 1. Then, D N sends its result CBF NR to D L. In step 12, D L computes CBF R = sub(CBF NR, CBF 0r) and gets the actual sum $CBF_R = \sum_{i=1}^{N} CBF_r^i$. In steps 13–15, D L broadcasts CBF R to all data custodians.

In this protocol, collusion between data custodians D i − 1 and D i + 1 reveals the secret value of D i. Extensions to the protocol are proposed in [21, 53] to make collusion between data custodians difficult.
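The ring-based masking idea can be simulated in a single process as follows. This is a sketch, not a reproduction of Algorithm 1: network messages are replaced by a loop, the counters are assumed to be reduced modulo a fixed `modulus` (an assumption made here so that the mask hides the partial sums), and all function names are hypothetical.

```python
import secrets

def random_cbf(m, modulus):
    """Random counting Bloom filter with counters drawn from [0, modulus)."""
    return [secrets.randbelow(modulus) for _ in range(m)]

def add(cbf_a, cbf_b, modulus):
    return [(a + b) % modulus for a, b in zip(cbf_a, cbf_b)]

def sub(cbf_a, cbf_b, modulus):
    return [(a - b) % modulus for a, b in zip(cbf_a, cbf_b)]

def ring_secure_sum(secret_cbfs, modulus):
    """Simulate the ring: the leader masks its value, each custodian adds its
    own value to what it receives, and the leader removes the mask at the end."""
    m = len(secret_cbfs[0])
    initial_mask = random_cbf(m, modulus)                   # CBF_r^0, leader only
    running = add(secret_cbfs[0], initial_mask, modulus)    # leader's first message
    for cbf in secret_cbfs[1:]:                             # D_2 ... D_N in turn
        running = add(cbf, running, modulus)
    return sub(running, initial_mask, modulus)              # leader unmasks

# Toy secret filters for three data custodians (illustrative values).
secrets_per_custodian = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
total = ring_secure_sum(secrets_per_custodian, modulus=2**16)
print(total)
```

Each intermediate message looks random to the custodian that receives it, but, as noted above, the two neighbors of a custodian can subtract their messages to recover that custodian's value.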

A secure deduplication protocol

In this section, we describe the secure deduplication protocol proposed in this paper. The protocol includes the setup and computation phases.

Setup phase

In this phase, the coordinator broadcasts a start message that contains the user query criteria and the P(false positive) value to each data custodian D i. Then, the data custodians jointly select the leader data custodian, denoted as D L. For simplicity, let us assume that D 1 is selected as the leader. Then, they form a ring topology, D L → D 2 → D 3 → … → D i → D i + 1 → … → D N, as shown in Fig. 4.

Fig. 4.

Ring topology of the data custodians

The data custodians jointly select the required parameters, such as the expected number of records n, Bloom filter size m, number of hash functions k, counter size c, and P(false positive). The data custodians also agree on a cryptographic hash function H 0(.) and the k hash functions H k with two secret keys k 0 and k 1.
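The text does not state how m and k are derived from n and P(false positive). As an illustration only, the sketch below uses the standard Bloom filter sizing formulas, m = −n ln p / (ln 2)² and k = (m / n) ln 2; whether the prototype uses exactly these formulas is an assumption, and the function name `bloom_parameters` is hypothetical.

```python
import math


def bloom_parameters(n, p):
    """Standard Bloom filter sizing for n expected elements and target
    false positive probability p. Returns (m, k)."""
    m = math.ceil(-n * math.log(p) / (math.log(2) ** 2))  # number of positions
    k = max(1, round((m / n) * math.log(2)))              # number of hash functions
    return m, k


m, k = bloom_parameters(1000, 0.01)
print(m, k)
```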

Computation phase

The computation phase contains two subprotocols: the secure duplicate identifier and the distributed sorted neighborhood. The secure duplicate identifier subprotocol allows each data custodian to learn which of its records have duplicate records in the VD with false positive probability P(false positive). Then, the distributed sorted neighborhood subprotocol is executed on the results of the secure duplicate identifier subprotocol to identify the real duplicate records and remove the duplicate records while maintaining a single occurrence of the records.

A secure duplicate identifier subprotocol

The objective of this subprotocol is to allow each data custodian D i to identify which of its records have a duplicate in the VD with a small false positive probability P(false positive). The protocol consists of the following steps:

1. Each D i performs the following steps:

   - Extract from its local dataset a set of unique IDs, denoted as I i, of the patients who satisfy the user query criteria.

   - Encode I i as the counting Bloom filter CBF iI using the keyed hash functions H k with the secret key k 1.

   - Create the random counting Bloom filter CBF ir (the algorithm used to create the random counting Bloom filter is described in Additional file 1).

2. D L creates the initial random counting Bloom filter CBF 0r.

3. The data custodians jointly run Algorithm 1 to compute the sum CBF R = ⊎i CBF ir.

4. Each D i sums CBF ir and CBF iI and sends the result CBF ir ∪ I to the coordinator.

5. The coordinator computes the sum CBF R ∪ S = ⊎i CBF ir ∪ I.

6. For each D i, the coordinator performs the following steps:

   - Convert CBF ir ∪ I into the Bloom filter BF ir ∪ I.

   - Intersect CBF R ∪ S and BF ir ∪ I and send the result CBF R ∪ S ∩ BF ir ∪ I to D i.

7. Each D i performs the following steps:

   - Create the Bloom filters BF iI and BF ir ∪ I from the counting Bloom filters CBF iI and CBF ir ∪ I, respectively.

   - Intersect CBF R and BF ir ∪ I and create the counting Bloom filter CBF R ∩ BF ir ∪ I.

   - Subtract CBF R ∩ BF ir ∪ I from CBF R ∪ S ∩ BF ir ∪ I. The result is (CBF R ∪ S ∩ BF ir ∪ I) − (CBF R ∩ BF ir ∪ I) = (CBF R ∪ S − CBF R) ∩ BF ir ∪ I. Because CBF R ∪ S − CBF R = CBF S, the result can be expressed as CBF S ∩ BF ir ∪ I.

   - Intersect CBF S ∩ BF ir ∪ I and BF iI and create the counting Bloom filter denoted as CBF S ∩ BF ir ∪ I ∩ BF iI. The expression can be reduced to CBF iS ∩ I = CBF S ∩ BF iI, as BF ir ∪ I ∩ BF iI is equal to BF iI.

   - Query the number of occurrences of the IDs I i in CBF iS ∩ I using the count() function, and create the list L i that contains the IDs that have more than one occurrence.

In steps 1–2, each data custodian D i (where 1 ≤ i ≤ N) encodes the unique IDs of its records as the counting Bloom filter CBF iI and creates the random counting Bloom filter CBF ir. The leader data custodian D L creates the additional random counting Bloom filter CBF 0r. In step 3, the data custodians jointly compute the sum of their random counting Bloom filters, CBF R = ⊎i CBF ir, using Algorithm 1. In step 4, D i computes CBF ir ∪ I = add(CBF ir, CBF iI) and sends the result CBF ir ∪ I to the coordinator. In step 5, the coordinator sums all data custodians’ CBF ir ∪ I, that is, CBF R ∪ S = ⊎i CBF ir ∪ I.

In step 6, the coordinator computes BF ir ∪ I = toBloomFilter(CBF ir ∪ I) and CBF R ∪ S ∩ BF ir ∪ I. The coordinator sends CBF R ∪ S ∩ BF ir ∪ I to D i. In step 7, each data custodian D i creates the counting Bloom filter CBF iS ∩ I = CBF S ∩ BF iI that encodes the intersection between the IDs of D i and all data custodians. Finally, D i queries its IDs in CBF iS ∩ I to create the list L i that contains the IDs for the records that are likely to be duplicates with the false positive probability P(false positive). Although the P(false positive) is very small, for some applications it may not be acceptable, and the true duplicate records should be identified.
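The algebra of the subprotocol can be traced end to end with a small single-process simulation. This is a sketch under stated assumptions rather than the authors' code: the filter size `M`, the number of hash functions `K`, the HMAC-SHA256 construction in `positions`, and the range of the random counters are all hypothetical choices, and CBF R is summed directly here instead of being computed through Algorithm 1.

```python
import hashlib
import hmac
import random

M, K = 256, 3  # hypothetical Bloom filter size and number of hash functions


def positions(item, key):
    """K keyed positions for an ID, derived from HMAC-SHA256 (assumed construction)."""
    out = []
    for i in range(K):
        digest = hmac.new(key, f"{i}:{item}".encode(), hashlib.sha256).digest()
        out.append(int.from_bytes(digest[:8], "big") % M)
    return out


def encode(ids, key):
    """Encode a set of IDs as a counting Bloom filter (CBF_i^I)."""
    cbf = [0] * M
    for item in ids:
        for p in positions(item, key):
            cbf[p] += 1
    return cbf


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def sub(a, b):
    return [x - y for x, y in zip(a, b)]


def to_bloom_filter(cbf):
    return [1 if c > 0 else 0 for c in cbf]


def intersect(cbf, bf):
    """Keep the counters of cbf only at positions set in the Bloom filter bf."""
    return [c if b else 0 for c, b in zip(cbf, bf)]


def count(cbf, item, key):
    return min(cbf[p] for p in positions(item, key))


def likely_duplicates(datasets, key, rng):
    cbf_i = [encode(ids, key) for ids in datasets]                       # steps 1-2
    cbf_r = [[rng.randrange(0, 100) for _ in range(M)] for _ in datasets]
    total_r = [sum(col) for col in zip(*cbf_r)]                          # step 3: CBF_R
    masked = [add(r, c) for r, c in zip(cbf_r, cbf_i)]                   # step 4
    total_rs = [sum(col) for col in zip(*masked)]                        # step 5: CBF_(R∪S)
    lists = []
    for ids, own, mask in zip(datasets, cbf_i, masked):
        bf_mask = to_bloom_filter(mask)
        from_coordinator = intersect(total_rs, bf_mask)                  # step 6
        cbf_s = sub(from_coordinator, intersect(total_r, bf_mask))       # step 7
        cbf_si = intersect(cbf_s, to_bloom_filter(own))
        lists.append(sorted(j for j in ids if count(cbf_si, j, key) > 1))
    return lists
```

For three custodians holding `["a", "b", "c"]`, `["b", "d"]`, and `["e", "c", "b"]`, every returned list contains the truly shared IDs; an ID that is not shared can still appear occasionally, which is the false positive case that the second subprotocol resolves.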

The total number of likely duplicate records across the data custodians is very small compared to the total number of records, as the number of records that have duplicate records is often a small proportion of the total number of records. Therefore, we can run existing deterministic PPRL protocols [12, 22, 24, 27] on the results of the secure duplicate identifier subprotocol with minimal computation and communication complexity. In the next section, we present an improved protocol based on the keyed hash function that reduces the required number of comparisons.

Secure distributed sorted neighborhood subprotocol

In the conventional sorted neighborhood (SN) technique [54, 55], sorting keys are generated for each record using a single attribute or a concatenation of the attributes of the records, and the keys are sorted lexicographically. Then, a sliding window of fixed size w is moved over the sorted records, and only the records in the same window are compared.

After the secure duplicate identifier subprotocol is run, each data custodian D i has the list L i that contains the IDs of the likely duplicate records. Note that the size of L i is much smaller than the total number of records of D i. In a simple approach for finding the actual duplicate records, D i hashes each ID in L i using a keyed hash function and sends the result HL i to the coordinator, who computes the union of the hash lists from all data custodians, HL = ⊎i HL i. Then, the coordinator performs exact matching of every hashed ID in HL against every other hashed ID in HL. However, in practice, most of these comparisons are unlikely to match.

Let us assume that, for a subset of the data custodians, each data custodian in the subset has the record r j with the ID j. As r j is a duplicate record, the value j appears in the list of IDs of the likely duplicate records, L i, of each data custodian in the subset. H 0(j) denotes the hash of j with hash function H 0(.). As hashing j with the same hash function multiple times gives the same hash value, each data custodian in the subset sends to the coordinator the list HL i that contains H 0(j). Therefore, HL contains multiple occurrences of H 0(j), and sorting HL brings hash values for the same ID next to each other.

Based on these observations, we present a distributed sorted neighborhood (DSN) subprotocol that extends the SN technique. The protocol parallelizes the sorting by making each data custodian D i locally sort HL i, and the coordinator merges only the sorted lists. The DSN protocol has the following steps:

1. Each D i performs the following steps:

   - For every ID j in L i, D i performs the following steps:

     - Hash j using the keyed hash function H 0(.) with the secret key k 0.

     - Store the hash of j in the list SL i.

   - Lexicographically sort SL i.

   - Send SL i to the coordinator.

2. The coordinator performs the following steps:

   - Merge the SL i of each D i and create the list SL.

   - Slide a window of size w over the list SL and compare each pair of hash IDs within the window. If at least two hash IDs match, then the records associated with the IDs are duplicates.

   - Send to D i the list DL i of the hash IDs of the records that D i needs to remove from its local dataset.

3. Each D i, for every ID j in DL i, removes its record associated with j.
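The DSN steps can be sketched as follows. The sketch makes several assumptions that are not in the text: HMAC-SHA256 stands in for the keyed hash function H 0(.), the function names are hypothetical, and the rule for which custodian keeps the single remaining copy (the first owner in merge order) is an illustrative choice.

```python
import hashlib
import heapq
import hmac


def hashed_sorted_list(ids, key):
    """Data custodian side: hash every likely duplicate ID and sort the result (SL_i)."""
    return sorted(hmac.new(key, j.encode(), hashlib.sha256).hexdigest() for j in ids)


def coordinator_dedup(sorted_lists, w=2):
    """Coordinator side: merge the sorted lists, slide a window of size w,
    and return, per custodian index, the hash IDs to remove (DL_i)."""
    tagged = [[(h, i) for h in sl] for i, sl in enumerate(sorted_lists)]
    merged = list(heapq.merge(*tagged))  # inputs are already sorted, so this is a merge
    removal = {i: set() for i in range(len(sorted_lists))}
    for pos, (h, owner) in enumerate(merged):
        window = merged[max(0, pos - w + 1):pos]  # earlier entries in the same window
        if any(prev_h == h for prev_h, _ in window):
            removal[owner].add(h)                 # an earlier copy exists: remove this one
    return removal
```

Because equal hash values are adjacent after the merge, a window of size two is enough in this sketch to find every repeated hash while leaving exactly one copy in place.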

Extension of the secure deduplication protocol for deterministic algorithms

Thus far, the proposed protocol has been described for situations in which a common unique identifier exists, which enables efficient and high-quality linkage. This assumption is realistic in countries, such as Norway, Sweden, and Denmark, where a high-quality unique personal identifier is available [56, 57].

However, in many situations, the available unique identifier is low quality or does not exist. We describe how our protocol can be extended to support deterministic record linkage algorithms that define the criteria about which identifiers need to match in order to accept the linkage of a pair of records.

To increase the quality of the linkage, data cleaning often precedes record linkage. We also assume appropriate data cleaning occurs before the protocol is run. Various data-cleaning techniques, such as phonetic encoding algorithms, have been proposed in the literature [58].

It has been shown that a linkage key can be created based on a concatenation of quasi-identifiers, such as name, sex, date of birth, and address. Studies have estimated that up to 87% of the U.S. population [59], 98% of the Canadian population [60], and 99% of the Dutch population [61] are unique, with a combination of quasi-identifiers, such as postal code, sex, and date of birth.

The National Cancer Institute in the United States uses a deterministic record linkage algorithm to link Surveillance, Epidemiology and End Results (SEER) data collected from cancer registries and Medicare claims data. The algorithm creates linkage keys using a set of criteria based on a Social Security number (SSN), first name, last name, date of birth, and sex [62, 63].

Let us consider a deterministic record linkage algorithm that has p linkage keys, where each linkage key is generated using a distinct match criterion defined by combinations of quasi-identifiers. For each match criterion, each data custodian creates a linkage key, and the deduplication protocol is run with the linkage key the same way the protocol is run with a unique identifier. However, in the distributed sorted neighborhood subprotocol, each data custodian sends the hash of the local identifiers of the likely duplicate records with the hash of the linkage keys to the coordinator. Finally, the coordinator identifies the actual duplicate records from the results of the protocol with all the linkage keys.
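As a rough illustration of how a data custodian might derive one linkage key per match criterion, consider the sketch below. The field names, the two criteria, and the `normalize` step are hypothetical; they are not the SEER-Medicare criteria and stand in for whatever data cleaning is applied before the protocol runs.

```python
def normalize(value):
    """Minimal stand-in for data cleaning: trim whitespace and lowercase."""
    return str(value).strip().lower()


def linkage_keys(record, criteria):
    """Return one linkage key per match criterion.

    Each criterion is a tuple of field names whose normalized values are
    concatenated into a single key."""
    return ["|".join(normalize(record[field]) for field in criterion)
            for criterion in criteria]


# Hypothetical match criteria (p = 2) and a hypothetical record.
CRITERIA = [("ssn",), ("last_name", "first_name", "date_of_birth", "sex")]
record = {"ssn": "123", "last_name": " Doe", "first_name": "Jane",
          "date_of_birth": "1980-01-31", "sex": "F"}
print(linkage_keys(record, CRITERIA))
```

Each resulting key would then take the place of the unique identifier in a separate run of the deduplication protocol.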

Let us consider, for simplicity of description, that each data custodian has an equal number of records. The computation time for a data custodian to create linkage keys for its records based on a combination of quasi-identifiers is denoted as t d. The runtime for the protocol using a unique identifier is denoted as t u. Assuming that the data custodians generate linkage keys for their records in parallel, deduplication using a linkage key has a total runtime of t u + t d.

For a deterministic record linkage algorithm that has p linkage keys, the total runtime is p × (t u + t d) + t a, where t a is the sum of the additional time required to send local unique identifiers to the coordinator and the computation time for the coordinator to find the actual duplicate by combining the results of the protocol with each linkage key. However, as a separate instance of the protocol can run with each linkage key in parallel, the runtime reduces to (t u + t d) + t a.

Results

In this section, we describe the security analysis and the implementation of the proposed deduplication protocol. We also describe the theoretical and experimental evaluations of the protocol’s efficiency and scalability.

Security analysis

We prove the security of the proposed protocol in the presence of corrupt data custodians or a corrupt coordinator who tries to learn information as a result of the protocol execution. We assume that a corrupt coordinator does not collude with a corrupt data custodian.

For the security proof of the protocol, we follow the standard security definition that is called privacy by simulation. For an adversary that controls a set of data custodians (or the coordinator), the adversary’s view (the information learned during the protocol execution) is a combination of the corrupt data custodians’ views. The adversary also accesses the corrupt data custodians’ inputs and outputs. Thus, in the simulation paradigm, we must show the existence of an efficient simulator that can generate the adversary’s view in the protocol execution given only the corrupt data custodians’ inputs and outputs.

Theorem 1 (compromised Di)

The protocol is secure against an honest-but-curious adversary ADV that corrupts (or controls) at most N − 2 data custodians.

Proof

We prove the robustness of the protocol by looking at the exchanged messages and reducing the security to the properties of the Bloom filter.

Let ADV control a set of at most N − 2 corrupt data custodians, and let D a denote a data custodian in this set. The inputs to a simulator are the inputs and outputs of the corrupt data custodians. The input and output of each corrupt data custodian D a are the list of the IDs of its records I a and the list of the IDs for likely duplicate records L a, respectively.

The view of each corrupt data custodian consists of the counting Bloom filters CBF aI, CBF aS ∩ I, CBF ar, and CBF R. CBF aI and CBF aS ∩ I can be generated from lists I a and L a, respectively. CBF ar and CBF R are randomly generated. In general, the simulator can generate the adversary’s view in the protocol execution from the corrupt data custodians’ inputs and outputs. Thus, the protocol is secure against an honest-but-curious adversary, in the sense that the protocol computes its result without revealing anything except the outputs. Therefore, the adversary cannot extract any private information about patients who have records at honest data custodians. In addition, the adversary cannot learn the number of records at honest data custodians.

Let us assume that the ID j for a duplicate record r j is in the list L a of a corrupt data custodian D a. The adversary learns the number of duplicates for r j, denoted as d, from CBF aS ∩ I with a false-positive probability equal to P(false positive). The adversary can look into its inputs to learn the actual number of duplicates of r j at the corrupt data custodians, denoted as d A. Therefore, an adversary may infer whether duplicate records for r j exist at honest data custodians with the following probability:

Theorem 2 (compromised coordinator)

An honest-but-curious adversary ADV that corrupts the coordinator cannot infer any information about the presence or absence of patients at data custodians and the number of records contributed by a data custodian.

Proof

We prove the security of the protocol by analyzing the messages received by the coordinator during the execution of the protocol and reduce its security to the properties of the hash functions H k and H 0(.) used in the protocol.

The coordinator’s view is the counting Bloom filter CBF ir ∪ I and the list of the hash IDs of the likely duplicate records SL i of each data custodian D i. The coordinator does not have inputs and outputs. The objective of the security proof is to show that private information about patients and data custodians cannot be learned based on the coordinator’s view during the protocol execution.

The secret keys (k 0, k 1) used by the data custodians are not available to the simulator. Therefore, the simulator cannot learn the IDs of the records inserted in CBF ir ∪ I. In addition, as the hash function H 0(.) is cryptographically secure, the simulator cannot learn the IDs based on SL i.

Each array position of CBF ir ∪ I has a counter value equal to the sum of the corresponding counter values of CBF iI and CBF ir. Thus, the counter values of CBF ir ∪ I are random, as every counter position of CBF ir has a random value. The random noise CBF ir inserted in CBF ir ∪ I prevents the simulator from learning the approximate total number of records of D i encoded by CBF iI.

Therefore, ADV cannot learn the IDs and the number of records held at a data custodian, and consequently, the protocol is secure in the face of a corrupt coordinator.

Implementation

A prototype of the proposed deduplication protocol is implemented in Java. The prototype contains the local and coordinator software components. The local software component is deployed at each data custodian, while the coordinator software component is deployed at the coordinator. The parameters required for an instance of the protocol are configured through the configuration files.

The dataset at each data custodian was stored in a MySQL relational database. We used the JavaScript Object Notation (JSON) format for message communication. Each component used an Extensible Messaging and Presence Protocol (XMPP) [64] client to connect to an XMPP server. Then, a JSON message was sent through the XMPP server between two entities that participate in the protocol. All messages were compressed using the Lz4 [65] lossless compression algorithm to reduce the overall size. After transmission, each message was decompressed before actual use.
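The message handling described above (serialize to JSON, compress, transmit, decompress) can be sketched as follows. The prototype is written in Java and uses LZ4; this Python sketch substitutes the standard library's `zlib` as a stand-in compressor, and the message field names are hypothetical. The XMPP transport is omitted.

```python
import json
import zlib


def pack(sender, receiver, cbf):
    """Serialize a counting Bloom filter message to JSON and compress it.

    zlib is a stand-in here; the prototype described in the text uses LZ4."""
    payload = json.dumps({"from": sender, "to": receiver, "cbf": cbf})
    return zlib.compress(payload.encode("utf-8"))


def unpack(blob):
    """Decompress and parse a message produced by pack()."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


blob = pack("D1", "coordinator", [0, 2, 1, 0])
print(unpack(blob)["cbf"])
```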

Analytical evaluation

The main concerns for the practical use of SMC protocols are efficiency and scalability. Efficiency is the ability to compute with good performance, which is often expressed in terms of communication and computation complexity. Communication complexity is analyzed in terms of the communication rounds and the size of the messages exchanged between the parties involved in the protocol. For N data custodians, each data custodian sends three messages and receives three messages, except the leader data custodian, which sends N + 2 messages. The coordinator sends 2N messages and receives 2N messages. The overall number of messages sent in the protocol is 3(N − 1) + (N + 2) + 2N = 6N − 1, which is linear in the number of data custodians, O(N).
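The message count can be checked with a few lines of Python. The function name is hypothetical; the per-party counts are taken directly from the paragraph above.

```python
def total_messages(n):
    """Total messages sent in one protocol run with n data custodians."""
    non_leaders = 3 * (n - 1)  # each non-leader data custodian sends three messages
    leader = n + 2             # the leader data custodian sends N + 2 messages
    coordinator = 2 * n        # the coordinator sends 2N messages
    return non_leaders + leader + coordinator


for n in (3, 5, 20):
    print(n, total_messages(n))
```

For the three laboratories of the in situ deployment this gives 17 messages, and for 20 data custodians 119.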

The size of a message that contains a counting Bloom filter depends on the Bloom filter size m and counter size c. The size of the message that contains the list of likely duplicate hash IDs SL i is small compared to the size of the message that contains the IDs I i of all records, but it depends on the false positive probability and the proportion of the records of D i that have duplicates in the virtual dataset.

Computation complexity is measured in terms of the time it takes for each entity to complete local computations and the protocol runtime. Scalability is measured in terms of the change in efficiency as the number of records and data custodians increases.

In general, the local computations of the protocol are very efficient, as the protocol does not use a building block that adds overhead to the performance. Bloom filter insertions and queries require only O(1) time per element for a fixed number of hash functions. The other computations are performed only on the list of the hash IDs of the likely duplicate records, which are a small proportion of the IDs of all the records. In addition, the data custodians often compute in parallel. Detailed analysis of the total computation time is provided in Additional file 1.

Experimental evaluation

We ran the experiments using actual and simulated datasets to evaluate the efficiency and scalability of the protocol. The experiments were run 100 times, and the average total runtime for the protocol and the local computation time for each entity were recorded. In this section, we report only the total runtimes. Details regarding the parameters used for the experiments are given in Additional file 1.

In situ experiments

We deployed the prototype at three microbiology laboratories located in Norway on top of the Snow disease surveillance system [36]. Two laboratories are departments at the University Hospital of North Norway (UNN) and Nordland Hospital (NLSH). The third laboratory is Fürst Medical Laboratory, a private laboratory. UNN and NLSH together cover a total population of more than 475 000 inhabitants. Based on the number of laboratory tests conducted within a specific time period, we estimated that the Fürst population coverage is approximately twice the total population covered by UNN and NLSH.

At Fürst, the local software component was deployed on an Intel i5-4590 3.3GHz quad core with 8GB RAM and Ubuntu 14.04. At UNN and NLSH, the local software component was deployed on an Intel Xeon E5-2698 v3 2.3GHz dual core with 4GB RAM and Red Hat Enterprise Linux 7. The coordinator software component was deployed on an Intel Xeon X3220 2.4GHz quad core with 8GB RAM and Ubuntu 14.04. The laboratories and the coordinator were connected through the Norwegian Health Network, a wide area network of healthcare service providers. Details about the network connections and the communication patterns are described in Additional file 1.

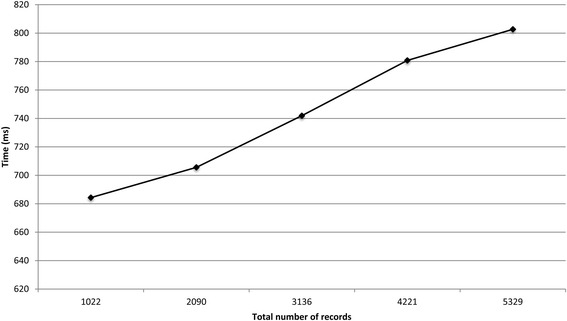

We ran two experiments on the data distributed across the three laboratories. The first experiment involved answering a query about the number of individuals infected with influenza A between January 2015 and April 2016. Each laboratory locally queried the IDs of the individuals who tested positive for influenza A during this time period, and a virtual dataset that contained 5329 records was created.

The second experiment involved answering a query about the number of patients who were tested at multiple laboratories between January 2015 and April 2016. Each laboratory locally queried the unique IDs of the patients who had been tested for any of the diseases included in the Snow system during the time period, and a virtual dataset that contained 85 353 unique IDs was created.

We divided each virtual dataset into segments by varying the time period in which the analyses were performed. Then, we ran the protocol on each segment of the virtual datasets.

Figures 5 and 6 show the runtimes for the protocol on the two virtual datasets as the total number of records increased. The deduplication of 5329 and 85 353 records took around 0.8 and 7.5 s, respectively. The local computation time for the laboratories and the coordinator is presented in Additional file 1. For the deduplication of 85 353 records, the local computation time for the coordinator and the data custodians did not exceed 0.6 s.

Fig. 5.

The total runtime for the protocol on the influenza A datasets

Fig. 6.

The total runtime for the protocol on the datasets that contain the test results for various diseases

The experiment on the VD of the positive test results for influenza A found one patient who was tested at multiple laboratories between January 2015 and April 2016. The experiment on the VD that contained the test results for various diseases found 449 patients who had been tested at multiple laboratories for different infectious diseases. The results showed that the samples collected from each patient were tested at multiple laboratories at different times.

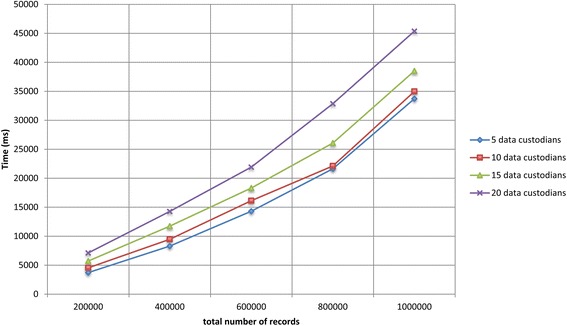

In vitro experiments

We deployed the prototype of the protocol on a cluster computer. Each node had two Intel E8500 dual-core 1.17GHz CPUs, 4GB RAM, and CentOS 6.7. The nodes were connected through fast Ethernet.

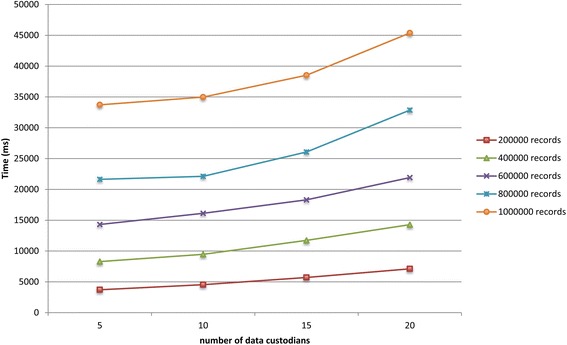

We ran experiments on simulated virtual datasets that consisted of a large number of data custodians and records. The VDs consisted of a varying number of data custodians (i.e., 5, 10, 15, and 20) and total number of records (i.e., 200 000, 400 000, 600 000, 800 000, and 1 000 000). The total number of records of each VD was distributed equally among all the data custodians, and each data custodian contained around 5% duplicate records. Details about the datasets are provided in Additional files 1 and 2.

Figures 7 and 8 show the total runtime for the protocol as the total number of records in the virtual dataset and the number of participating data custodians increased, respectively. The deduplication of one million records distributed across five and 20 data custodians took around 34 and 45 s, respectively. The local computation time for the laboratories and the coordinator is presented in Additional file 1. In general, the local computation time for the coordinator and the data custodians did not exceed 34 and 7 s, respectively.

Fig. 7.

The total runtime for the protocol on the simulated datasets as the total number of records increases

Fig. 8.

The total runtime for the protocol on the simulated datasets as the number of participating data custodians increases

Discussion

We proposed a privacy-preserving protocol for the deduplication of data horizontally partitioned across multiple data custodians. The protocol protects the privacy of patients and data custodians under the semi-honest adversarial model. The protocol remains secure even when up to N − 2 data custodians collude.

The protocol satisfies the security requirements that were formulated in [26] for a secure record linkage protocol. However, we assumed that the coordinator has no means of getting the secret keys used in the protocol, which improves the efficiency and scalability of the protocol. The assumption can be ensured through a data use agreement among the data custodians that prohibits them from sharing the secret keys with the coordinator.

The protocol was deployed and evaluated in situ for the deduplication of test results distributed across three microbiology laboratories. The protocol was also evaluated in vitro on simulated microbiology datasets of up to one million records and 20 data custodians. The deduplication of the one million simulated records distributed across 20 data custodians was completed within 45 s. The experimental results showed that the proposed protocol is more efficient and scalable than previous protocols [29, 31].

The local computation time for the entities and the total runtime for the protocol scale linearly as the number of participating data custodians and the total number of records increase. The protocol scales because Bloom filter operations are not expensive, and the coordinator and data custodians perform most of the computations in parallel. In addition, the number of communication rounds of a data custodian is constant and does not increase with the addition of new data custodians.

The protocol was not experimentally evaluated for situations in which there is no unique identifier. However, we theoretically showed that the protocol remains scalable. The computation complexity of each party linearly increases with the number of steps of the deterministic record linkage algorithm.

There is a need to reuse health data at the regional, national, and global levels to increase the number of records and participating data custodians for a given study [66, 67]. The blocking technique [68] can be applied to increase the scalability of the proposed deduplication protocol to such a large scale. The intuition for the use of the blocking technique is that running a separate instance of the protocol on a subset of records that are likely to match enables parallel computations. A very simple example is running different instances of the protocol on the records of female and male patients.

In practice, data custodians create blocking keys for their records based on one or more attributes, and records that have the same blocking key values are grouped into the same block, which consequently divides the virtual dataset into segments. Then, the data custodians jointly execute a separate instance of the protocol for each segment of the virtual dataset in parallel. The data custodians can execute the protocol instances on different CPU cores or servers to increase the scalability of the protocol.
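A minimal sketch of the grouping step is shown below. The record layout and the choice of sex as the blocking key follow the simple example in the text; the function name and field names are hypothetical, and the per-block protocol instances themselves are not shown.

```python
from collections import defaultdict


def split_into_blocks(records, blocking_key):
    """Group records by their blocking key value; each group is one block."""
    blocks = defaultdict(list)
    for record in records:
        blocks[blocking_key(record)].append(record)
    return dict(blocks)


records = [
    {"id": "p1", "sex": "F"},
    {"id": "p2", "sex": "M"},
    {"id": "p3", "sex": "F"},
]
blocks = split_into_blocks(records, lambda r: r["sex"])
for key, block in sorted(blocks.items()):
    print(key, [r["id"] for r in block])
```

Each data custodian would apply the same blocking key locally, so that block "F" at one custodian is only ever deduplicated against block "F" at the others.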

Conclusions

Deduplication is a necessary preprocessing step for privacy-preserving distributed statistical computation of horizontally partitioned data. However, deduplication should not reveal any private information about individuals and data custodians. We proposed a privacy-preserving protocol for the deduplication of a horizontally partitioned dataset.

Efficiency and scalability are the main challenges for practical uses of SMC protocols. The experimental evaluations of the proposed protocol demonstrated its feasibility for a wide range of practical uses.

As discussed in the Discussion section, we plan to parallelize the execution of the protocol using the blocking technique. Furthermore, we also plan to integrate the protocol with the privacy-preserving distributed statistical computation framework we developed [20].

Acknowledgments

We would like to thank Gro Berntsen for discussions about the use case section. We also would like to thank Gunnar Hartvigsen and Andrius Budrionis for invaluable discussions. We must thank the system developers of the Snow system, in particular Torje Henriksen, for their invaluable support in the integration of the protocol into the Snow system and the execution of the experiments across the microbiology laboratories.

We would like to thank the microbiology laboratories of University Hospital of North Norway, Nordland Hospital, and Fürst Medical Laboratory for permitting the in situ experiments to be performed on their datasets. We are also indebted to Eyvind W. Axelsen and Haagen Berg at the microbiology laboratories for their support in the preparation of the microbiology datasets used for the in situ experiments.

Funding

This work was supported by the Center for Research-based Innovation, Tromsø Telemedicine Laboratory (TTL), through The Research Council of Norway, grant number 174934. The study was also partially supported by the Research Council of Norway, grant number 248150/O70. The funding bodies did not have any role in the design and evaluation of the protocol and in writing the manuscript.

Availability of data and material

The laboratory datasets used for the in situ experiments of this study are available from University Hospital of North Norway, Nordland Hospital, and Fürst Medical Laboratory, but restrictions apply to the availability of these data, and they are not publicly available. However, the algorithm that generated the datasets used for the in vitro experiments is included in the supplementary files of this article. The prototype software of the protocol is available from the corresponding author upon request.

Authors’ contributions

KYY contributed to the conception and design of the manuscript, and was the major contributor to the writing of the manuscript. KYY also implemented the protocol, contributed to the design of the experiments, and executed the experiments. AM contributed to the design of the manuscript and participated in drafting the manuscript. JGB contributed to the conception of the manuscript and the design of the experiments. JGB also extensively reviewed the manuscript for important scientific content. All authors read and approved the submission of the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

The privacy ombudsman of the University Hospital of North Norway approved the in situ experiments, and all participating laboratories also agreed to the experiments. Individuals’ consent was not required.

Abbreviations

- EHR: Electronic Health Record

- JSON: JavaScript Object Notation

- PPRL: Privacy-Preserving Record Linkage

- SMC: Secure Multi-Party Computation

- VD: Virtual Dataset

- XMPP: Extensible Messaging and Presence Protocol

Additional files

Additional file 1: A description of the Bloom filter set operations, the computation complexity analysis of the protocol, the algorithm for generating random Bloom filters, the parameters used in the experiments, the datasets used in the experiments, the network connection for the in situ experiments, and additional experiment results. (DOCX 6563 kb)

Additional file 2: A Bash script that implements the algorithm we used to generate the simulated microbiology datasets. The script is discussed in Additional file 1. (SH 9 kb)

Footnotes

Commutative encryption is a form of encryption in which the order of the consecutive encryption and decryption of a value with different cryptographic keys does not affect the final result and no two values have the same encrypted value [69].

The protocols are different from the protocol proposed in [70, 71] for probabilistic record linkage. In Schnell et al.’s protocol [70], for each record, each identifier is encoded as a separate Bloom filter, whereas in Durham et al.’s protocol [71], to avoid frequency-based cryptanalysis, the set of identifiers of each record is encoded as a Bloom filter.

Secret sharing is a method by which a secret value is split into shares and a predefined number of shares are required to reconstruct the secret value [30].

Oblivious transfer (OT) is a method by which a sender transfers one of several values to a receiver such that the sender is oblivious to which value is selected, while the receiver learns only the selected value [32].

Like the counting Bloom filter (see its description in the Methods section), the Count-Min sketch [72] is a space-efficient probabilistic data structure that encodes a set of elements and allows the frequencies of the inserted elements to be queried with some error.
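As a rough illustration of the Count-Min sketch described in the preceding footnote, the following Python sketch keeps a small table of counters and answers frequency queries with the minimum counter over all rows. The class name, the `width` and `depth` parameters, and the use of salted SHA-256 as the hash family are assumptions made for illustration; they are not taken from [72] or from the protocol's implementation.

```python
import hashlib


class CountMinSketch:
    """Illustrative Count-Min sketch: `depth` rows of `width` counters."""

    def __init__(self, width=64, depth=4):
        self.width = width
        self.depth = depth
        self.table = [[0] * width for _ in range(depth)]

    def _index(self, item, row):
        # Assumption: one hash function per row, derived by salting
        # SHA-256 with the row number.
        digest = hashlib.sha256(f"{row}:{item}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % self.width

    def add(self, item, count=1):
        # Increment one counter in every row.
        for row in range(self.depth):
            self.table[row][self._index(item, row)] += count

    def estimate(self, item):
        # Collisions can only inflate a counter, so the minimum over the
        # rows never underestimates the true frequency.
        return min(
            self.table[row][self._index(item, row)]
            for row in range(self.depth)
        )


sketch = CountMinSketch()
for identifier in ["id-1", "id-2", "id-1", "id-3", "id-1"]:
    sketch.add(identifier)
```

Here `sketch.estimate("id-1")` returns at least 3, the true count. The "some error" mentioned in the footnote is one-sided: an estimate can exceed the true frequency when other elements hash to the same counters, but it is never smaller.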

Contributor Information

Kassaye Yitbarek Yigzaw, Phone: +4796747253, Email: kassaye.y.yigzaw@uit.no.

Antonis Michalas, Email: A.Michalas@westminster.ac.uk.

Johan Gustav Bellika, Email: Johan.Gustav.Bellika@telemed.no.

References

- 1. Ross MK, Wei W, Ohno-Machado L. “Big data” and the electronic health record. IMIA Yearb. 2014;9:97–104. doi: 10.15265/IY-2014-0003.

- 2. Kohane IS, Drazen JM, Campion EW. A glimpse of the next 100 years in medicine. N Engl J Med. 2012;367:2538–2539. doi: 10.1056/NEJMe1213371.

- 3. Geissbuhler A, Safran C, Buchan I, Bellazzi R, Labkoff S, Eilenberg K, et al. Trustworthy reuse of health data: a transnational perspective. Int J Med Inf. 2013;82:1–9. doi: 10.1016/j.ijmedinf.2012.11.003.

- 4. Hripcsak G, Bloomrosen M, Flatley Brennan P, Chute CG, Cimino J, Detmer DE, et al. Health data use, stewardship, and governance: ongoing gaps and challenges: a report from AMIA’s 2012 Health Policy Meeting. J Am Med Inform Assoc. 2013;21:204–211. doi: 10.1136/amiajnl-2013-002117.

- 5. Lober WB, Thomas Karras B, Wagner MM, Marc Overhage J, Davidson AJ, Fraser H, et al. Roundtable on bioterrorism detection: information system–based surveillance. J Am Med Inform Assoc. 2002;9:105–115. doi: 10.1197/jamia.M1052.

- 6. Lazarus R, Yih K, Platt R. Distributed data processing for public health surveillance. BMC Public Health. 2006;6:235. doi: 10.1186/1471-2458-6-235.

- 7. El Emam K, Hu J, Mercer J, Peyton L, Kantarcioglu M, Malin B, et al. A secure protocol for protecting the identity of providers when disclosing data for disease surveillance. J Am Med Inform Assoc. 2011;18:212–217. doi: 10.1136/amiajnl-2011-000100.

- 8. Lenert L, Sundwall DN. Public health surveillance and meaningful use regulations: a crisis of opportunity. Am J Public Health. 2012;102:e1–e7. doi: 10.2105/AJPH.2011.300542.

- 9. Holmes JH, Elliott TE, Brown JS, Raebel MA, Davidson A, Nelson AF, et al. Clinical research data warehouse governance for distributed research networks in the USA: a systematic review of the literature. J Am Med Inform Assoc. 2014;21:730–736. doi: 10.1136/amiajnl-2013-002370.

- 10. Finnell JT, Overhage JM, Grannis S. All health care is not local: an evaluation of the distribution of emergency department care delivered in Indiana. AMIA Annu Symp Proc. 2011;2011:409–416.

- 11. Gichoya J, Gamache RE, Vreeman DJ, Dixon BE, Finnell JT, Grannis S. An evaluation of the rates of repeat notifiable disease reporting and patient crossover using a health information exchange-based automated electronic laboratory reporting system. AMIA Annu Symp Proc. 2012;2012:1229–1236.

- 12. Weber GM. Federated queries of clinical data repositories: the sum of the parts does not equal the whole. J Am Med Inform Assoc. 2013;20:e155–e161. doi: 10.1136/amiajnl-2012-001299.

- 13. Malin BA, El Emam K, O’Keefe CM. Biomedical data privacy: problems, perspectives, and recent advances. J Am Med Inform Assoc. 2013;20:2–6. doi: 10.1136/amiajnl-2012-001509.

- 14. Laurie G, Jones KH, Stevens L, Dobbs C. A review of evidence relating to harm resulting from uses of health and biomedical data [Internet]. The Nuffield Council on Bioethics (NCOB); 2014 Jun p. 210. Available from: http://nuffieldbioethics.org/wp-content/uploads/FINAL-Report-on-Harms-Arising-from-Use-of-Health-and-Biomedical-Data-30-JUNE-2014.pdf

- 15. Du W, Atallah MJ. Privacy-preserving cooperative statistical analysis. In: Williams AD, editor. Comput. Secur. Appl. Conf. 2001 ACSAC 2001 Proc. 17th Annu. IEEE. 2001. pp. 102–110.

- 16. Du W, Han YS, Chen S. Privacy-preserving multivariate statistical analysis: linear regression and classification. In: Berry MW, editor. Proc. Fourth SIAM Int. Conf. Data Min. SIAM. 2004. pp. 222–233.

- 17. Kantarcioglu M. A survey of privacy-preserving methods across horizontally partitioned data. In: Aggarwal CC, Yu PS, editors. Priv.-Preserv. Data Min. New York: Springer; 2008. pp. 313–335.

- 18. Vaidya J. A survey of privacy-preserving methods across vertically partitioned data. In: Aggarwal CC, Yu PS, editors. Priv.-Preserv. Data Min. New York: Springer; 2008. pp. 337–358.

- 19. Clifton C, Kantarcioglu M, Vaidya J, Lin X, Zhu MY. Tools for privacy preserving distributed data mining. ACM SIGKDD Explor Newsl. 2002;4:28–34. doi: 10.1145/772862.772867.

- 20. Hailemichael MA, Yigzaw KY, Bellika JG. Emnet: a tool for privacy-preserving statistical computing on distributed health data. In: Granja C, Budrionis A, editors. Proc. 13th Scand. Conf. Health Inform. Linköping: Linköping University Electronic Press; 2015. pp. 33–40.

- 21. Andersen A, Yigzaw KY, Karlsen R. Privacy preserving health data processing. IEEE 16th Int. Conf. E-Health Netw. Appl. Serv. Heal. IEEE; 2014. p. 225–30.

- 22. Vatsalan D, Christen P, Verykios VS. A taxonomy of privacy-preserving record linkage techniques. Inf Syst. 2013;38:946–969. doi: 10.1016/j.is.2012.11.005.

- 23. Pinkas B, Schneider T, Zohner M. Faster private set intersection based on OT extension. In: Fu K, Jung J, editors. Proc. 23rd USENIX Secur. Symp. San Diego: USENIX Association; 2014. pp. 797–812.

- 24. Quantin C, Bouzelat H, Allaert FAA, Benhamiche AM, Faivre J, Dusserre L. How to ensure data security of an epidemiological follow-up: quality assessment of an anonymous record linkage procedure. Int J Med Inf. 1998;49:117–122. doi: 10.1016/S1386-5056(98)00019-7.

- 25. Agrawal R, Evfimievski A, Srikant R. Information sharing across private databases. Proc. 2003 ACM SIGMOD Int. Conf. Manag. Data. New York, NY, USA: ACM; 2003. p. 86–97.

- 26. El Emam K, Samet S, Hu J, Peyton L, Earle C, Jayaraman GC, et al. A protocol for the secure linking of registries for HPV surveillance. PLoS One. 2012;7:e39915. doi: 10.1371/journal.pone.0039915.

- 27. Adam N, White T, Shafiq B, Vaidya J, He X. Privacy preserving integration of health care data. AMIA Annu. Symp. Proc. 2007. 2007. p. 1–5.

- 28. Lai PK, Yiu S-M, Chow KP, Chong CF, Hui LCK. An efficient bloom filter based solution for multiparty private matching. Secur. Manag. 2006. p. 286–292.

- 29. Many D, Burkhart M, Dimitropoulos X. Fast private set operations with SEPIA. Technical report, ETH Zurich; 2012.

- 30. Beimel A. Secret-sharing schemes: a survey. In: Chee YM, Guo Z, Shao F, Tang Y, Wang H, Xing C, editors. Coding Cryptol. Berlin: Springer; 2011. pp. 11–46.

- 31. Dong C, Chen L, Wen Z. When private set intersection meets big data: an efficient and scalable protocol. Proc. 2013 ACM SIGSAC Conf. Comput. Commun. Secur. New York, NY, USA: ACM; 2013. p. 789–800.

- 32. Kilian J. Founding cryptography on oblivious transfer. Proc. Twent. Annu. ACM Symp. Theory Comput. New York, NY, USA: ACM; 1988. p. 20–31.

- 33. Karapiperis D, Vatsalan D, Verykios VS, Christen P. Large-scale multi-party counting set intersection using a space efficient global synopsis. In: Renz M, Shahabi C, Zhou X, Cheema MA, editors. Database Syst. Adv. Appl. Springer International Publishing; 2015. p. 329–45.

- 34. Paillier P. Public-key cryptosystems based on composite degree residuosity classes. In: Stern J, editor. Adv. Cryptol. — EUROCRYPT’99. Berlin: Springer; 1999. pp. 223–238.

- 35. Karr AF, Lin X, Sanil AP, Reiter JP. Secure regression on distributed databases. J Comput Graph Stat. 2005;14:263–279. doi: 10.1198/106186005X47714.

- 36. Bellika JG, Henriksen TS, Yigzaw KY. The Snow system - a decentralized medical data processing system. In: Llatas CF, García-Gómez JM, editors. Data Min. Clin. Med. Springer; 2014.

- 37. Stewart BA, Fernandes S, Rodriguez-Huertas E, Landzberg M. A preliminary look at duplicate testing associated with lack of electronic health record interoperability for transferred patients. J Am Med Inform Assoc. 2010;17:341–344. doi: 10.1136/jamia.2009.001750.

- 38. Lazarus R, Kleinman KP, Dashevsky I, DeMaria A, Platt R. Using automated medical records for rapid identification of illness syndromes (syndromic surveillance): the example of lower respiratory infection. BMC Public Health. 2001;1:1. doi: 10.1186/1471-2458-1-9.

- 39. Richesson RL, Horvath MM, Rusincovitch SA. Clinical research informatics and electronic health record data. Yearb Med Inform. 2014;9:215–223. doi: 10.15265/IY-2014-0009.

- 40. Curtis LH, Weiner MG, Boudreau DM, Cooper WO, Daniel GW, Nair VP, et al. Design considerations, architecture, and use of the Mini-Sentinel distributed data system. Pharmacoepidemiol Drug Saf. 2012;21:23–31. doi: 10.1002/pds.2336.

- 41. Weber GM, Murphy SN, McMurry AJ, MacFadden D, Nigrin DJ, Churchill S, et al. The Shared Health Research Information Network (SHRINE): a prototype federated query tool for clinical data repositories. J Am Med Inform Assoc. 2009;16:624–630. doi: 10.1197/jamia.M3191.

- 42. El Emam K, Mercer J, Moreau K, Grava-Gubins I, Buckeridge D, Jonker E. Physician privacy concerns when disclosing patient data for public health purposes during a pandemic influenza outbreak. BMC Public Health. 2011;11:454. doi: 10.1186/1471-2458-11-454.

- 43. Lindell Y, Pinkas B. Secure multiparty computation for privacy-preserving data mining. J Priv Confidentiality. 2009;1:5.

- 44. Goldreich O. Secure multi-party computation (working draft). 2002. Available from http://www.wisdom.weizmann.ac.il/~oded/PSX/prot.pdf. Accessed 18 Oct 2016.

- 45. Cramer R, Damgård I. Multiparty computation, an introduction. In: Castellet M, editor. Contemp. Cryptol. Basel: Birkhäuser Basel; 2005. pp. 41–87.

- 46. Goldreich O. Foundations of cryptography: basic applications. 1. New York: Cambridge University Press; 2004.

- 47. Vaidya J, Clifton C. Leveraging the “Multi” in secure multi-party computation. Proc. 2003 ACM Workshop Priv. Electron. Soc. New York, NY, USA: ACM; 2003. p. 53–9.

- 48. Bloom BH. Space/time trade-offs in hash coding with allowable errors. Commun ACM. 1970;13:422–426. doi: 10.1145/362686.362692.

- 49. Tarkoma S, Rothenberg CE, Lagerspetz E. Theory and practice of bloom filters for distributed systems. Commun Surv Tutor IEEE. 2012;14:131–155. doi: 10.1109/SURV.2011.031611.00024.

- 50. Fan L, Cao P, Almeida J, Broder AZ. Summary cache: a scalable wide-area Web cache sharing protocol. IEEE ACM Trans Netw. 2000;8:281–293. doi: 10.1109/90.851975.

- 51. Dimitriou T, Michalas A. Multi-party trust computation in decentralized environments. 2012 5th Int. Conf. New Technol. Mobil. Secur. NTMS. 2012. p. 1–5.

- 52. Dimitriou T, Michalas A. Multi-party trust computation in decentralized environments in the presence of malicious adversaries. Ad Hoc Netw. 2014;15:53–66. doi: 10.1016/j.adhoc.2013.04.013.

- 53. Karr AF, Fulp WJ, Vera F, Young SS, Lin X, Reiter JP. Secure, privacy-preserving analysis of distributed databases. Technometrics. 2007;49:335–345. doi: 10.1198/004017007000000209.

- 54. Hernández MA, Stolfo SJ. Real-world data is dirty: data cleansing and the merge/purge problem. Data Min Knowl Discov. 1998;2:9–37. doi: 10.1023/A:1009761603038.

- 55. Hernández MA, Stolfo SJ. The merge/purge problem for large databases. Proc. 1995 ACM SIGMOD Int. Conf. Manag. Data. New York, NY, USA: ACM; 1995. p. 127–38.

- 56. Lunde AS, Lundeborg S, Lettenstrom GS, Thygesen L, Huebner J. The person-number systems of Sweden, Norway, Denmark, and Israel. Vital Health Stat 2. 1980;84:1–59.

- 57. Ludvigsson JF, Otterblad-Olausson P, Pettersson BU, Ekbom A. The Swedish personal identity number: possibilities and pitfalls in healthcare and medical research. Eur J Epidemiol. 2009;24:659–667. doi: 10.1007/s10654-009-9350-y.

- 58. Randall SM, Ferrante AM, Boyd JH, Semmens JB. The effect of data cleaning on record linkage quality. BMC Med Inform Decis Mak. 2013;13:64. doi: 10.1186/1472-6947-13-64.

- 59. Sweeney L. Simple demographics often identify people uniquely [Internet]. Pittsburgh: Carnegie Mellon University; 2000 p. 1–34. Report No.: 3. Available from: http://dataprivacylab.org/projects/identifiability/paper1.pdf

- 60. El Emam K, Buckeridge D, Tamblyn R, Neisa A, Jonker E, Verma A. The re-identification risk of Canadians from longitudinal demographics. BMC Med Inform Decis Mak. 2011;11:46. doi: 10.1186/1472-6947-11-46.

- 61. Koot M, Noordende G, Laat C. A study on the re-identifiability of Dutch citizens. Workshop Priv. Enhancing Technol. PET. 2010.