Abstract

Objectives: Medicine must keep current with the research literature, and keeping current requires continuously updating the clinical knowledgebase (i.e., references that provide answers to clinical questions). The authors estimated the volume of medical literature potentially relevant to primary care published in a month and the time required for physicians trained in medical epidemiology to evaluate it for updating a clinical knowledgebase.

Methods: We included journals listed in five primary care journal review services (ACP Journal Club, DynaMed, Evidence-Based Practice, Journal Watch, and QuickScan Reviews). Finding little overlap, we added the 2001 “Brandon/Hill Selected List of Print Books and Journals for the Small Medical Library.” We counted articles (including letters, editorials, and other commentaries) published in March 2002, using bibliographic software where possible and hand counting when necessary. For journals not published in March 2002, we reviewed the nearest issue. Five primary care physicians independently evaluated fifty randomly selected articles and timed the process.

Results: The combined list contained 341 currently active journals with 8,265 articles. Adjusting for publication frequency, we estimate 7,287 articles are published monthly in this set of journals. Physicians trained in epidemiology would take an estimated 627.5 hours per month to evaluate these articles.

Conclusions: To provide practicing clinicians with the best current evidence, more comprehensive and systematic literature surveillance efforts are needed.

INTRODUCTION

To provide high-quality care, clinicians must have rapid access to relevant evidence when clinical questions arise. The idea of evidence-based medicine (EBM) has existed for over 100 years [1], but its use has mushroomed over the past decade. A search of MEDLINE on May 1, 2003, using the words “evidence based” returned 53,998 citations, of which 42,123 (78%) were published in the past 10 years.

A leading textbook in EBM describes tracking down the best evidence for answering questions as a key step for individual clinicians in the “full-blown practice of EBM” [2]. Until recently, this effort roughly translated into conducting a MEDLINE search to answer clinical questions. Primary care physicians, however, rarely seek original research in this manner during practice: in an observational study of 1,101 clinical questions, such a search occurred only twice [3]. Even family practice residents trained in EBM select rapid, convenient sources over evidence-based searches during clinical practice [4].

Current models and recommendations for practicing EBM [5] or information mastery [6–8] favor using sources of pre-appraised evidence to facilitate information retrieval in practice. Physicians are encouraged to rely on others for many of the labor-intensive steps (comprehensive searching, evaluating full-text articles, and condensing reports into easily digestible formats), so that they can find the current best evidence in a reasonable amount of time.

Clinical references have the potential to make research evidence available in easily accessible and digestible formats during clinical practice. To fulfill this need, the responsibility for tracking down the best evidence is shifted to clinical reference producers. The effort required for clinical reference producers to systematically monitor the research literature in primary care has not been determined.

BACKGROUND

Systematic reviews are the most valid approach but are impractical in isolation.

Systematic reviews are protocol-driven efforts to comprehensively search for and select the most valid relevant information, critically evaluate and synthesize that information, and generate summative reports. These reviews provide the most valid method for tracking down the best evidence for a given question.

With a global network of volunteers and tremendous effort, the Cochrane Collaboration has amassed 1,837 systematic reviews addressing intervention-related questions and 11,669 abstracts of additional published systematic reviews [9]. But 13,000 systematic reviews hardly address the number of questions that occur in practice. Cochrane personnel have estimated it would take 30 years to summarize all the current controlled trials in the form of Cochrane reviews [10], not accounting for new evidence published during those 30 years.

Many clinical questions (e.g., diagnosis or prognosis questions) are not typically addressed through controlled trials. The medical literature contains systematic reviews for other types of clinical questions, such as the Rational Clinical Examination series in JAMA, which provides systematic reviews of studies evaluating the use of patient history, physical examination, and office-based diagnostic tests for predicting or ruling out common diagnoses. When available (and conducted with the highest methodological rigor), these systematic reviews represent the best evidence for these types of clinical questions, but they are less common than systematic reviews of randomized trials.

Systematic reviews limited to randomized trials may also not provide the best available evidence for intervention-related questions. When randomized trial data are available to assess efficacy, these data represent the best available evidence. Many interventions have some evidence of efficacy (e.g., cohort studies) but have not yet been studied through randomized trials and will thus not be addressed by systematic reviews limited to randomized trials. For example, considering surgical repair for elderly patients with massive rotator cuff tears, no randomized trials determine if surgery improves symptoms, but cohort studies are available that provide the most valid current information for informed clinical decision making [11, 12]. Potential harms of interventions (which may be rare but serious) may also be found through cohort studies and other “lower validity” studies, where randomized trials have not documented relevant outcomes in sufficiently large samples.

Systematic reviews provide the best available evidence for answering the specific questions addressed at the time the systematic reviews are conducted, but a single systematic review can take years to perform. Two important limitations of clinical references derived solely from systematic reviews are that such reviews can be years out of date and that many significant medical topics lack systematic reviews but have research evidence. The large effort involved in conducting systematic reviews makes it impossible for systematic reviews alone to keep pace with medical information needs.

Systematic literature surveillance provides the most valid method for tracking down the best evidence not yet identified by systematic reviews.

Systematic literature surveillance is a method for supplementing systematic reviews. Systematic literature surveillance involves systematically assessing new research reports for relevance and validity and summarizing the best new research evidence. Systematic literature surveillance can be more efficient than systematic reviews for addressing a large number of information needs, because each article can be identified and evaluated once rather than be separately identified and evaluated for each question. The advantages of systematic literature surveillance, by virtue of increased efficiency, include greater currency and ability to cover more topics and more clinical questions than systematic reviews. However, systematic literature surveillance alone is inadequate for identifying the best research evidence, because it does not include older research reports that may provide better evidence, unless, of course, retrospective systematic literature surveillance is undertaken.

Complementing systematic reviews with systematic literature surveillance is necessary to provide current best research evidence for clinical references, because clinical reference content is prepared before the questions are asked. The combination of systematic reviews and systematic literature surveillance provides a balance for addressing the most information needs with the best research evidence.

Systematic literature surveillance has been developed for newsletter and alerting services.

Several services use literature surveillance to inform primary care clinicians. Journal Watch [13] and QuickScan Reviews [14] monitor a defined set of journals for multiple specialties (including primary care specialties) and provide brief summaries and commentaries on articles of interest. ACP Journal Club/Evidence-Based Medicine [15] and Evidence-Based Practice Newsletter/InfoPOEMs [16] use explicitly defined, systematic literature surveillance methods: they monitor a defined set of journals and report on articles selected for validity and relevance, with summaries based on critical appraisal and commentaries with a focus on clinical application.

Little has been published on the size and scope of the literature that should be reviewed to inform primary care clinicians, but the Evidence-Based Practice and ACP Journal Club services have reported on their efforts. The editors of the newsletter Evidence-Based Practice reviewed 85 journals and counted 8,085 original articles over a 6-month period (January through June 1997) [17]. They classified articles as Patient-Oriented Evidence that Matters (POEMs) if the articles addressed a clinical question encountered by a typical family physician at least once every 6 months, measured patient-oriented outcomes (e.g., mortality or symptom reduction), and presented results that would require a change in practice for the typical family physician. The editors found 211 of the articles (2.6%) to be sufficiently relevant for publication as POEMs in their newsletter.

The editors of ACP Journal Club reviewed 58 journals in 1992 with a rigorous focus on the validity of articles with abstracts [18]. The top 20 journals had 6,837 articles with abstracts, of which 339 (5%) met ACP Journal Club criteria and 160 (2.3%) were selected for publication in ACP Journal Club.

Different systematic literature surveillance systems are needed for the clinical knowledgebase.

The Evidence-Based Practice and ACP Journal Club approaches are useful for clinical alerting, in other words, reporting new research findings to clinicians to raise awareness. However, these approaches are inadequate for identifying the best available information to be used when clinicians seek answers to questions. For example, a physician who has a patient with acute parotitis and wants guidance regarding antibiotic selection might not find information in sources that limit their reports to effects on patient-oriented outcomes from studies of the highest possible validity (i.e., randomized trials). However, access to guidance from reviews or guidelines, or to studies of common etiologies and antimicrobial sensitivities, could provide the best available, clinically useful information.

A clinical knowledgebase should provide the best available information, even when the current evidence is limited to less valid studies or expert opinion, and should update that information as more valid studies are published. Systematic literature surveillance systems supporting a clinical knowledgebase must recognize when studies of limited validity or expert opinion represent the current state of knowledge and provide useful information for clinical decision making.

Systematic literature surveillance processes developed for updating clinical reference content need further development.

The authors could not identify any published literature on this subject. Several clinical reference products are promoted as up to date and evidence based, implying that product vendors conduct systematic surveillance of medical journals, but our efforts to review these products and their editorial policies and discuss methods with the product vendors have found no explicit methodology for how such surveillance is conducted.

The only clinical reference product, to our knowledge, that both provides rapid access to evidence-based information in the form of knowledge syntheses and updates syntheses through a systematic literature surveillance process with publicly stated methods is DynaMed [19]. DynaMed is a clinical knowledgebase in development that has already been shown to answer more than half of family physicians' clinical questions [20]. Systematic literature surveillance is used to keep DynaMed current with a process that balances validity, relevance, convenience, and affordability.

Multiple resources are monitored for updating DynaMed, including (as of August 2002) 45 original research journals, 3 systematic review collections, 9 journal review services, 5 drug information sources, and 9 sources for clinical review articles (these numbers have since increased). Article summaries vary in length based on the validity and relevance of individual articles and are incorporated directly into existing clinical topic summaries in the DynaMed database. To enhance the currency of information access, article summaries are made available immediately (the database is updated many times daily) and then secondarily peer reviewed. This process is currently text based and completely dependent on human effort.

The authors take the first steps toward defining a comprehensive systematic literature surveillance system.

While the Evidence-Based Practice and ACP Journal Club selection processes provide estimates of the scope and yield of systematic literature surveillance services for clinical alerting, the scope and yield of an identification-and-filtering process for maintaining a clinical knowledgebase is unknown. As a rough “first guess,” we created a bibliographic database to quantify the research literature pertinent to primary care and estimated the effort needed to conduct systematic literature surveillance for this literature collection.

METHODS

We compiled a list of medical journals that could be considered relevant to primary care by combining the lists of journals reviewed by Journal Watch (general medicine), QuickScan Reviews (in family practice and in internal medicine), ACP Journal Club/Evidence-Based Medicine, Evidence-Based Practice Newsletter/InfoPOEMs, and DynaMed as of August 2002. Journal review services did not exhibit substantial agreement on which journals were included, so we supplemented our list with the 2001 “Brandon/Hill Selected List of Print Books and Journals for the Small Medical Library” [21]. The Brandon/Hill list was not created for the purpose of guiding literature surveillance activities but appeared to complement the lists from journal review services. The American Academy of Family Physicians cited the 2001 Brandon/Hill list as a leading source for customary and generally accepted medical practice [22]. All the journals on the Brandon/Hill list had some relevance to primary care.

We counted the articles published in March 2002 for all of the journals on our list. For journals that published less frequently than monthly, we included the issue closest to March 2002 in our count. Letters to the editor, review articles, editorials, and other unique features of selected journals were included in the count because they could contain useful information, including reports of original research that would not otherwise be identified [23]. We used citations indexed in MEDLINE as a reasonable estimate for the number of articles in each journal issue. For journals not available in MEDLINE, we used Current Contents, PsycINFO, Cumulative Index to Nursing and Allied Health Literature (CINAHL), and EMBASE. For journals not available in these bibliographic databases, we obtained the tables of contents of the journal issues closest to March 2002 (e.g., March–April, spring, first quarter) and counted each article or journal item that related to research articles, review articles, editorials, commentary, or opinions. To provide a more accurate estimate of the number of articles published monthly, we scaled the count for less frequent journals to a single month: we halved the count for bimonthly journals, divided the count for quarterly journals by three, and so forth.
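The frequency adjustment described above can be sketched in a few lines. This is an illustration only, not the procedure actually run for the study: the journal names, counts, and the `ISSUES_PER_YEAR` table are hypothetical, and the sketch assumes that journals publishing at least monthly need no adjustment because their full March output was counted.

```python
from fractions import Fraction

# Issues per year for each publication schedule (illustrative labels, an assumption).
ISSUES_PER_YEAR = {
    "weekly": 52,
    "monthly": 12,
    "bimonthly": 6,
    "quarterly": 4,
}


def monthly_estimate(count, frequency):
    """Scale a counted article total to an estimated per-month figure.

    For journals publishing at least monthly, `count` is assumed to be the
    whole March total and is returned unchanged. For less frequent journals,
    `count` is one issue, which is spread over the months that issue covers.
    """
    issues = ISSUES_PER_YEAR[frequency]
    if issues >= 12:
        return Fraction(count)
    months_per_issue = Fraction(12, issues)  # 2 for bimonthly, 3 for quarterly
    return Fraction(count) / months_per_issue


# Hypothetical journals: title -> (articles counted, publication frequency)
journals = {
    "Journal A": (120, "weekly"),
    "Journal B": (30, "bimonthly"),
    "Journal C": (18, "quarterly"),
}

adjusted = {title: monthly_estimate(n, freq) for title, (n, freq) in journals.items()}
total = sum(adjusted.values())
print(adjusted, total)
```

Exact fractions are used so that rounding happens once, on the final total, rather than journal by journal.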

To estimate the effort required to systematically evaluate this literature base, we randomly sampled fifty citations from this database. Five generalist physicians with training in medical epidemiology (Alper, Kinkade, Hauan, Onion, Sklar) each reviewed these fifty articles using a standard protocol.* This protocol was designed to:

1. allow rapid determination of the potential usefulness of articles based on quickly identified factors affecting clinical relevance and scientific validity

2. triage articles into one of six pathways:

a. items to ignore as not useful for a clinical reference

b. items to catalog for assistance in future searches but not clearly useful for updating specific content (e.g., a review article addressing rarely encountered subgroups of patients)

c. one- or two-line summaries

d. brief summaries but no formal critical appraisals

e. critical appraisals but no alerts

f. critical appraisals and alerts for clinicians

3. summarize clinically relevant information based on the selected pathway

The five physicians were provided with the fifty articles in full-text form using a combination of paper and electronic copies. The amount of time spent on each article and the pathway selected for each article were left to the discretion of each physician.
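One way to record the outcome of each review is a small data structure holding the pathway chosen and the time spent. The sketch below is an assumption about how such records could be represented, not the format used in the study; the names `Pathway`, `Review`, `needs_summary`, and `needs_critical_appraisal` are hypothetical, and the mapping of pathways to "summary needed" is our reading of the six pathways listed above.

```python
from dataclasses import dataclass
from enum import Enum


class Pathway(Enum):
    """The six triage pathways, keyed by the letters used in the protocol list."""
    IGNORE = "a"
    CATALOG = "b"
    ONE_OR_TWO_LINE_SUMMARY = "c"
    BRIEF_SUMMARY = "d"
    CRITICAL_APPRAISAL = "e"
    CRITICAL_APPRAISAL_AND_ALERT = "f"


@dataclass
class Review:
    """One physician's evaluation of one sampled citation (hypothetical record)."""
    citation_id: int
    reviewer: str
    pathway: Pathway
    minutes: float


def needs_summary(pathway):
    """Pathways c through f call for some written summary (assumed reading)."""
    return pathway not in (Pathway.IGNORE, Pathway.CATALOG)


def needs_critical_appraisal(pathway):
    """Pathways e and f call for formal critical appraisal."""
    return pathway in (Pathway.CRITICAL_APPRAISAL, Pathway.CRITICAL_APPRAISAL_AND_ALERT)


# Hypothetical example record
review = Review(citation_id=1, reviewer="reviewer_1",
                pathway=Pathway.BRIEF_SUMMARY, minutes=3.5)
print(needs_summary(review.pathway), needs_critical_appraisal(review.pathway))
```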

Future study will further refine this process for systematic literature surveillance, including consensus methods for defining these pathways and publishing an ontology to govern the domain of systematic literature surveillance. For now, the five experienced physicians used the protocol to guide their reviews of the fifty sample articles, as if they were reviewing these articles for a primary care clinical reference. Software recorded the time spent on each article, and the physicians could adjust the time if they were interrupted during the process. We determined average times for article evaluation collectively and for each physician.
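The sampling and timing steps can be illustrated as follows. This is a minimal sketch under stated assumptions: citations are represented by integer identifiers, the seed is arbitrary, and the timing log is invented for the example rather than taken from the study data.

```python
import random
from statistics import mean

# Draw fifty citations at random, without replacement, from the database.
# A fixed seed (an arbitrary choice here) makes the draw repeatable.
rng = random.Random(2002)
citation_ids = list(range(1, 8266))  # stands in for the 8,265 collected citations
sample = rng.sample(citation_ids, 50)

# Hypothetical timing log: reviewer -> minutes spent on each article reviewed.
timings = {
    "reviewer_1": [1.5, 2.0, 2.5],
    "reviewer_2": [4.0, 6.0, 5.0],
}

# Average time per article for each reviewer, then across reviewers.
per_reviewer = {name: mean(minutes) for name, minutes in timings.items()}
overall = mean(list(per_reviewer.values()))
print(per_reviewer, overall)
```

Because every reviewer evaluates the same set of articles, the mean of the per-reviewer averages equals the mean over all individual evaluations.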

RESULTS

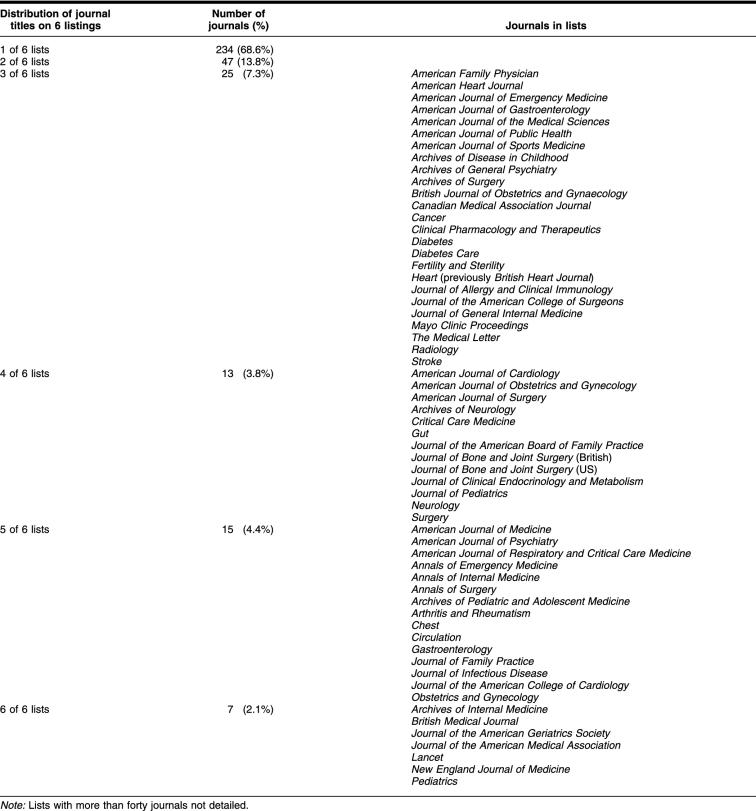

The combined lists identified 343 unique journals. Two journals (Archives of Family Medicine, Hospital Practice) had been discontinued, leaving 341 journals confirmed to be currently active. We found surprisingly little overlap among the six lists that purportedly addressed the core literature for primary care. Only 7 journals (2.1%) were on all six lists, and 15 (4.4%) were on five lists, while 234 (68.6%) were on only one of the six lists (Table 1).

Table 1 Distribution of journal titles on listings from ACP Journal Club/Evidence-Based Medicine, Brandon/Hill, DynaMed, InfoPOEMs/Evidence-Based Practice Newsletter, Journal Watch (general medicine), and QuickScan Reviews (in family practice and in internal medicine)

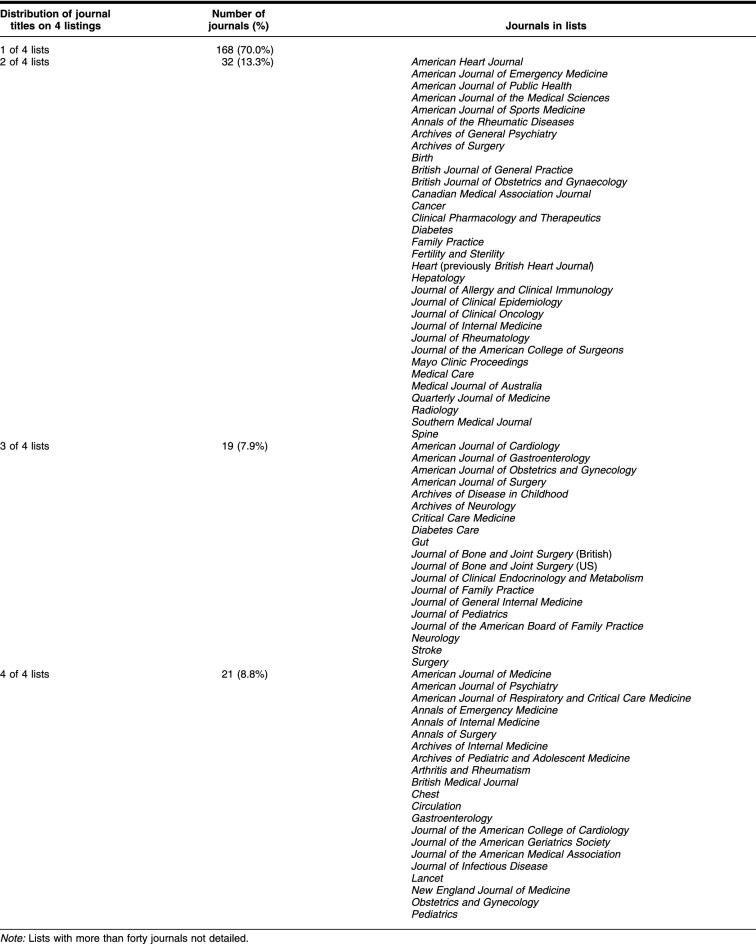

Agreement might not have been a realistic expectation, because the six journal lists were derived for different purposes. We therefore repeated our analysis for the four services that provided regular abstract reporting for clinical alerting. The combined list of journals from these four services identified 240 unique, currently active journals. Again, we found little overlap among the four lists. Only 21 journals (8.8%) were on all four lists, and 168 (70%) were on only one of the four lists (Table 2).

Table 2 Distribution of journal titles on listings from ACP Journal Club/Evidence-Based Medicine, InfoPOEMs/Evidence-Based Practice Newsletter, Journal Watch (general medicine), and QuickScan Reviews (in family practice and in internal medicine)
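The kind of tally summarized in Tables 1 and 2 (how many journals appear on exactly one list, two lists, and so on) could be computed along these lines. The service names, journal titles, and list memberships below are placeholders, not the actual lists, and `overlap_distribution` is a hypothetical helper.

```python
from collections import Counter


def overlap_distribution(lists):
    """Return a Counter mapping k to the number of journals on exactly k lists."""
    appearances = Counter()
    for titles in lists.values():
        appearances.update(set(titles))  # set() so a title counts once per list
    return Counter(appearances.values())


# Placeholder lists: service -> journal titles it monitors
lists = {
    "service_1": {"Journal A", "Journal B", "Journal C"},
    "service_2": {"Journal A", "Journal B", "Journal D"},
    "service_3": {"Journal A", "Journal E"},
}

dist = overlap_distribution(lists)
unique_journals = sum(dist.values())
share_on_one_list = dist[1] / unique_journals
print(dist, unique_journals, share_on_one_list)
```

In practice, journal titles would need to be normalized (abbreviations, title changes) before counting, or the overlap would be understated.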

Using the 6 journal lists, we identified 8,265 articles and collected their citations in a database using Reference Manager 10. Adjustment for journal publication frequency yielded an estimate of 7,287 articles monthly that would need to be considered to comprehensively and systematically update the primary care knowledgebase.

Evaluation of the sample articles found that the time spent on an individual article ranged from 0.18 minutes (for an article quickly deemed irrelevant) [24] to 39.5 minutes [25], with the exception of 1 outlier citation [26] that represented 34 abstracts from a conference and took up to 133 minutes to evaluate. No articles required formal critical appraisal and detailed summarization. The average times per article spent by the 5 physicians were 1.82 minutes, 1.83 minutes, 3.88 minutes, 4.03 minutes, and 14 minutes, with an overall average of 5.16 minutes. Sources of variation among the 5 physicians include levels of experience processing articles for clinical references, reading pace, format in which articles were read (electronic or print), and decisions regarding how much information was clinically relevant. One physician was an outlier who spent considerably more time evaluating articles and summarized more information. Excluding this physician, the average time per article was 2.89 minutes.

Extrapolated to 7,287 articles per month, the overall average implies 627.5 hours of effort per month, or about 29 hours per weekday, or 3.6 full-time equivalents of physician effort. Excluding the outlier physician (i.e., basing estimates only on physicians with higher thresholds for clinical relevance), the estimated effort would be 351 hours per month, or 2 full-time equivalents of physician effort.
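The arithmetic behind these workload figures can be reproduced approximately from the rounded values reported above. The sketch assumes an 8-hour workday and 260 weekdays per year (about 21.7 per month); neither assumption is stated in the text, though both are consistent with the reported 29 hours per weekday and 3.6 full-time equivalents. Using the rounded average of 5.16 minutes gives about 626.7 hours, slightly below the reported 627.5, presumably because the reported figure was computed from unrounded times.

```python
ARTICLES_PER_MONTH = 7287           # frequency-adjusted monthly estimate
OVERALL_MEAN_MINUTES = 5.16         # reported average across all 5 physicians
NON_OUTLIER_MEANS = [1.82, 1.83, 3.88, 4.03]  # reported per-physician averages

WEEKDAYS_PER_MONTH = 260 / 12       # assumption: 260 weekdays per year
HOURS_PER_WORKDAY = 8               # assumption: 8-hour full-time day


def monthly_hours(minutes_per_article):
    """Hours per month needed to evaluate every article at the given pace."""
    return ARTICLES_PER_MONTH * minutes_per_article / 60


def full_time_equivalents(hours_per_month):
    """Convert monthly hours to full-time equivalents under the assumptions above."""
    return hours_per_month / WEEKDAYS_PER_MONTH / HOURS_PER_WORKDAY


mean_without_outlier = sum(NON_OUTLIER_MEANS) / len(NON_OUTLIER_MEANS)

hours_all = monthly_hours(OVERALL_MEAN_MINUTES)
hours_without_outlier = monthly_hours(mean_without_outlier)

print(round(mean_without_outlier, 2))                    # 2.89
print(round(hours_all, 1), round(hours_without_outlier)) # 626.7 351
print(round(full_time_equivalents(hours_all), 1))        # 3.6
```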

DISCUSSION

Defining the “right” set of journals for primary care literature surveillance.

The volume of research literature potentially relevant to primary care is enormous. Our report provides an estimate but is not based on a rigorous selection of the journals that should or should not be included. The four journal review services we evaluated have different styles and audiences but all have the purpose of providing clinicians with news regarding recently published research articles of clinical importance.

During the conduct of this study, we were surprised to discover the limited overlap among these four services. The differences in covered journals might be related to different opinions among editors of journal review services regarding quality and importance of individual journals, availability (editors may have been exposed to or have easier access to different journals), different costs, or other factors. Except for an occasional journal, we do not think any of these journal review services focused on irrelevant sources. Though not the focus of our research, this limited overlap raises the questions of whether any of these services are sufficiently comprehensive to be relied on for alerting functions or whether a larger systematic literature surveillance service is needed for clinical alerting in addition to or in conjunction with informing the clinical knowledgebase (clinical reference updating).

Because none of these services individually provided comprehensive coverage of the clinically relevant journals for primary care, we suspected that the combined list would also exclude clinically relevant journals. Despite our attempts to be more comprehensive in identifying journals relevant to primary care (by adding DynaMed sources and the Brandon/Hill list), we still missed relevant journals. For example, the Scandinavian Journal of Primary Health Care publishes original research but was not found on any of the six lists we used to identify journals.

Twenty years ago, a bibliometric analysis of 35,455 articles cited in MEDLINE and indexed to the 50 most prevalent problems in outpatient family practice found that a “core” set of 332 journals accounted for 76.4% of the citations [27]. A search for the 50 most frequently cited journals from this report found 11 journals that were not in our database yet are still in print today (including 3 continuing under different titles). A bibliometric analysis, however, cannot identify newer journals of high importance for primary care. For example, the Annals of Family Medicine was launched in 2003 to fill a void left by the demise of Archives of Family Medicine. Annals of Family Medicine was not yet indexed in MEDLINE when this paper was submitted.

If journals could be ranked in order of usefulness for informing the primary care knowledgebase, then this ranking might be a mechanism for defining the “right” set of journals for a defined amount of effort. Current impact factors (measures of how often journal articles are cited) are not designed to address clinical relevance, so a different ranking method would need to be developed to determine the “right” set of journals.

Providing the first report in a research agenda.

We consider this report a “quick look” prior to embarking on a research agenda to evaluate methods for systematic literature surveillance for the primary care clinical knowledgebase. We need to refine our estimations of effort with further research. We estimated the effort to evaluate this literature using methods extrapolated from those used for updating DynaMed. The methods used were neither actual methods used at the time nor the current methods outlined in draft research protocols but rather represented a “quick guess” while in transition, so that we could determine a rough effort estimation.

Our current estimates might underestimate the cognitive effort to determine which articles were important and extract the clinically relevant information from those articles. Our random sample did not identify articles that were considered highly relevant and valid, and such articles take substantial additional effort for critical appraisal and detailed summarization. Also, our estimates did not include the time to obtain articles, edit summaries, or have article summaries peer reviewed.

Our current estimates might also overestimate the effort that could be required using a refined process. Additional research might determine areas where nonphysician personnel, Medical Subject Heading (MeSH) terms, or other methods could provide some pre-filtering and increase overall efficiency of the process.

We selected the month of March in this study to avoid “index,” “holiday,” and “summer” issues of journals, but a longitudinal study would be more accurate than a cross-sectional study. We also need to study our process to determine and improve consensus, consistency, and efficiency. At this stage in the development of the systematic literature surveillance pathway, we did not institute methods to further clarify the process and reach consensus guidelines on how to make the judgments in selecting pathways or information to be summarized. These methods will be the focus of future studies.

Balancing effort needed and effort available.

If unlimited effort were available, every journal considered potentially relevant to primary care could be evaluated to inform the primary care knowledgebase, no matter how remote the possibility of finding useful information for this purpose. In a broad sense, considering everything of potential biomedical importance would require perusing about 6,000 articles per day [28]. If the effort available were limited to a defined amount, one could theoretically determine the highest-yield journals that could be processed with the available effort and limit evaluation to only those journals (or supplement the effort with high-yield journal review services).
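As a purely illustrative sketch of that idea, the following ranks journals by useful articles found per hour of review and selects them greedily until a fixed effort budget is used up. Everything here is hypothetical: the journal titles, the yield and effort figures, and the `select_journals` helper. The greedy rule is a simple heuristic we chose for the example, not a method proposed in this report, and it does not guarantee the best possible selection.

```python
def select_journals(journals, budget_hours):
    """Pick journals in order of yield per hour until the budget is exhausted.

    Each journal is a dict with 'title', 'hours' (monthly review effort), and
    'useful' (expected useful articles per month). Journals that do not fit in
    the remaining budget are skipped.
    """
    ranked = sorted(journals, key=lambda j: j["useful"] / j["hours"], reverse=True)
    chosen, used = [], 0.0
    for journal in ranked:
        if used + journal["hours"] <= budget_hours:
            chosen.append(journal["title"])
            used += journal["hours"]
    return chosen, used


# Hypothetical inputs
journals = [
    {"title": "Journal A", "hours": 10, "useful": 5},  # 0.50 useful per hour
    {"title": "Journal B", "hours": 4, "useful": 4},   # 1.00 useful per hour
    {"title": "Journal C", "hours": 8, "useful": 2},   # 0.25 useful per hour
    {"title": "Journal D", "hours": 3, "useful": 1},   # 0.33 useful per hour
]

chosen, used = select_journals(journals, budget_hours=15)
print(chosen, used)
```

Applying such a ranking would first require the measure of journal usefulness for the clinical knowledgebase that, as noted earlier, does not yet exist.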

Ultimately, the scalability of systematic literature surveillance depends on the resources allocated to this effort. As the biomedical community increases the perceived value of this approach, more resources can be allocated to investigate and expand the process. Additional efforts are needed for secondary peer review, editing, pruning (deleting or archiving outdated reports), and testing of the clinical knowledgebase, but these are beyond the scope of this report.

CONCLUSION

Our study provides an estimate for the overall workload of systematically keeping up with the medical literature relevant to primary care, and further study is needed to better select appropriate journals and define the article evaluation process for clinical reference updating. These efforts will help develop more comprehensive and systematic literature surveillance to provide practicing clinicians with clinical references that incorporate current research.

Acknowledgments

Joseph L. Quetsch, David R. Mehr, Mary Barile, Linda Cooperstock, and Richard O. Schamp contributed to revisions. Kinkade's efforts were supported by a Bureau of Health Professions Award (DHHS 5 T32 HP17001–15) from the Health Resources and Services Administration.

CONFLICT OF INTEREST STATEMENT

Brian S. Alper is the owner of Dynamic Medical Information Systems and editor-in-chief of DynaMed (http://www.dynamicmedical.com), which is described briefly and used as a source of journal lists for the research reported in this paper.

Footnotes

* The standard protocol may be found at http://www.dianexus.org/keeping/.

This article has been approved for the Medical Library Association's Independent Reading Program (IRP).

Contributor Information

Brian S. Alper, Email: editor@dynamicmedical.com.

Jason A. Hand, Email: jaahand@iupui.edu.

Susan G. Elliott, Email: elliotts@health.missouri.edu.

Scott Kinkade, Email: skinkade@pol.net.

Michael J. Hauan, Email: mjh@hauan.org.

Daniel K. Onion, Email: daniel.k.onion@dartmouth.edu.

Bernard M. Sklar, Email: bersklar@netcantina.com.

REFERENCES

1. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, and Richardson WS. Evidence based medicine: what it is and what it isn't. BMJ. 1996 Jan 13; 312(7023):71–2.

2. Sackett DL, Straus SE, Richardson WS, Rosenberg W, and Haynes RB. Evidence-based medicine: how to practice and teach EBM. 2nd ed. London, UK: Harcourt Publishers, 2000:1.

3. Ely JW, Osheroff JA, Ebell MH, Bergus GR, Levy BT, Chambliss ML, and Evans ER. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999 Aug 7; 319(7206):358–61.

4. Ramos K, Linscheid R, and Schafer S. Real-time information-seeking behavior of residency physicians. Fam Med. 2003 Apr; 35(4):257–60.

5. Sackett DL, Straus SE, Richardson WS, Rosenberg W, and Haynes RB. Evidence-based medicine: how to practice and teach EBM. 2nd ed. London, UK: Harcourt Publishers, 2000:1.

6. Slawson DC, Shaughnessy AF, and Bennett JH. Becoming a medical information master: feeling good about not knowing everything. J Fam Pract. 1994 May; 38(5):505–13.

7. Shaughnessy AF, Slawson DC, and Bennett JH. Becoming an information master: a guidebook to the medical information jungle. J Fam Pract. 1994 Nov; 39(5):489–99.

8. Slawson DC, Shaughnessy AF. Obtaining useful information from expert based sources. BMJ. 1997 Mar 29; 314(7085):947–9.

9. The Cochrane Library. 2003(4). Oxford, UK: Update Software.

10. Mallett S, Clarke M. How many Cochrane reviews are needed to cover existing evidence on the effects of health care interventions? ACP Journal Club. 2003 Jul–Aug; 139(1):A11.

11. Worland RL, Arredondo J, Angles F, and Lopez-Jimenez F. Repair of massive rotator cuff tears in patients older than 70 years. J Shoulder Elbow Surg. 1999 Jan–Feb; 8(1):26.

12. Grondel RJ, Savoie FH, and Field LD. Rotator cuff repairs in patients 62 years of age or older. J Shoulder Elbow Surg. 2001 Mar–Apr; 10(2):97–9.

13. Journal watch online. [Web document]. Waltham, MA: Massachusetts Medical Society, 2002. [cited 30 Aug 2002]. <http://genmed.jwatch.org>.

14. Programs in medicine. [Web document]. Birmingham, AL: Oakstone Medical Publishing, 2002. [cited 30 Aug 2002]. <http://www.oakstonemedical.com/medical.cfm>.

15. ACP journal club. [Web document]. Philadelphia, PA: American College of Physicians-American Society of Internal Medicine, 2002. [cited 30 Aug 2002]. <http://www.acpjc.org>.

16. InfoPOEMs: the clinical awareness system. [Web document]. Charlottesville, VA: InfoPOEM, 2002. [cited 30 Aug 2002]. <http://www.infopoems.com/index.cfm>.

17. Ebell MH, Barry HC, Slawson DC, Shaughnessy AF. Finding POEMs in the medical literature. J Fam Pract. 1999;48(5):350–5.

18. Haynes RB. Where's the meat in clinical journals? ACP Journal Club. 1993;119:A22.

19. DynaMed policy on information quality. [Web document]. Columbia, MO: Dynamic Medical Information Systems. [cited 30 Aug 2002]. <http://www.dynamicmedical.com/policy>.

20. Alper BS, Stevermer JJ, White DS, and Ewigman BG. Answering family physicians' clinical questions using electronic medical databases. J Fam Pract. 2001 Nov; 50(11):960–5.

21. Hill DR, Stickell HN. Brandon/Hill selected list of print books and journals for the small medical library. Bull Med Libr Assoc. 2001 Apr; 89(2):131–53.

22. Categories, definitions, and criteria for the clinical content of CME. [Web document]. Leawood, KS: American Academy of Family Physicians, 2002. [cited 24 Oct 2002]. <http://www.aafp.org/x1546.xml>.

23. Wentz R. Visibility of research: FUTON bias. Lancet. 2002 Oct 19; 360(9341):1256.

24. Strand PS. Coordination of maternal directives with preschoolers' behavior: influence of maternal coordination training on dyadic activity and child compliance. J Clin Child Adolesc Psychol. 2002 Mar; 31(1):6–15.

25. Ritter AJ. Naltrexone in the treatment of heroin dependence: relationship with depression and risk of overdose. Aust N Z J Psychiatry. 2002 Apr; 36(2):224–8.

26. Canadian Spine Society 2nd Annual Meeting. Vernon, BC, Canada; March 21–23, 2002. Abstracts. Can J Surg. 2002 Apr; 45(Suppl):4–12.

27. Sneiderman CA. Keeping up with the literature of family practice: a bibliometric approach. Fam Pract Res J. 1983;3(1):17–23.

28. Lundberg GD. Perspective from the editor of JAMA, The Journal of the American Medical Association. Bull Med Libr Assoc. 1992 Apr; 80(2):110–4.