Abstract

Background

The rapid review is an approach to synthesizing research evidence when a shorter timeframe is required. The implications of conducting a rapid review, in terms of reduced rigour, increased bias and loss of accuracy, have not yet been elucidated.

Methods

We assessed the potential implications of methodological shortcuts on the outcomes of three completed systematic reviews addressing agri‐food public health topics. For each review, shortcuts were applied individually to assess the impact on the number of relevant studies included and whether omitted studies affected the direction, magnitude or precision of summary estimates from meta‐analyses.

Results

In most instances, the shortcuts resulted in at least one relevant study being omitted from the review. The omission of studies affected 39 of 143 possible meta‐analyses, of which 14 were no longer possible because of insufficient studies (<2). When meta‐analysis was possible, the omission of studies generally resulted in less precise pooled estimates (i.e. wider confidence intervals) that did not differ in direction from the original estimate.

Conclusions

The three case studies demonstrated the risk of missing relevant literature and its impact on summary estimates when methodological shortcuts are applied in rapid reviews. © 2016 The Authors. Research Synthesis Methods Published by John Wiley & Sons Ltd.

Keywords: rapid review, systematic review, knowledge synthesis, meta‐analysis, methodology, shortcut

1. Background

Systematic reviews (SRs) represent the highest level of evidence in intervention research because they use rigorous and transparent methods to identify, appraise and synthesize all relevant research evidence on a specific and clearly defined question (Centre for Reviews and Dissemination, 2008). As a result, they are considered to be more appropriate sources of research evidence to inform decision‐making than the newest or most highly cited individual studies (Lavis et al., 2005). However, decision makers often require rapid access to current evidence on a topic to inform urgent policy and practice decisions (Ganann et al., 2010; Harker and Kleijnen, 2012; National Collaborating Centre for Methods and Tools, 2012). Frequently, the time frame available may be shorter than the typical 6 months to a year required to complete a full SR (Hemingway and Brereton, 2009). To better align with the needs of decision makers and other knowledge users, the rapid review (RR) has emerged as an approach to synthesizing research evidence when a shorter time frame (e.g. less than 6 months) is required (Ganann et al., 2010; Harker and Kleijnen, 2012; Khangura et al., 2012).

Although there is currently no standardized approach for conducting RRs, they generally follow the same methodological process as traditional SRs but with one or more methodological shortcuts (Ganann et al., 2010; Harker and Kleijnen, 2012). Some methodological shortcuts that have been adopted in RRs include addressing a narrower research question (e.g. specific to a particular study population or setting), limiting the number of electronic databases searched, limiting or eliminating the search of the grey literature, defining narrower study eligibility criteria (e.g. study design, language, location, publication dates), screening and reviewing by a single reviewer, eliminating risk‐of‐bias assessment and not carrying out meta‐analyses (MAs) (Ganann et al., 2010; Harker and Kleijnen, 2012; Cameron et al., 2007; Grant and Booth, 2009; Van De Velde et al., 2011). While methodological shortcuts allow reviews to be conducted in less time and with fewer resources than a full SR, they may also increase the likelihood of introducing bias into the review process (Ganann et al., 2010; Harker and Kleijnen, 2012). The full implications of what is lost in terms of rigour, increased bias and accuracy of results when conducting a RR have not yet been elucidated (Ganann et al., 2010).

To date, there has been little research evaluating the impact of applying RR processes or comparing the outcomes of RRs and SRs addressing the same question. A pilot study by Buscemi et al. (2006) found that data extraction by a single reviewer generated more errors than double data extraction with two reviewers. However, the greater error rate did not translate into substantial differences in ‘the direction, magnitude, precision or significance of pooled estimates for most outcomes’ (Buscemi et al., 2006). In the only comparative study to date, Cameron et al. (2007) evaluated differences in methodologies and conclusions among four sets of SRs and RRs on the same topic published in the literature. They found that despite ‘axiomatic differences’ between the evaluated reviews, there were ‘no instances in which the essential conclusions of the different reviews were opposed’. Less formally, Van De Velde et al. (2011) noted in a letter to the editor that a SR (Vlachojannis et al., 2010) published in the journal reached the opposite conclusion to their RR addressing the same topic (De Buck and Van Velde, 2010).

In this paper, the potential implications of four different methodological shortcuts on the outcome(s) of three completed SR‐MAs addressing agri‐food public health topics are assessed. For each review, the four methodological shortcuts were applied individually to assess the impact of the shortcut on the overall number of relevant studies included in the review and to evaluate whether omitted studies affected the direction, magnitude or precision of summary estimates from MA.

2. Methods

2.1. Selection of systematic review meta‐analyses

Three SR‐MAs evaluating the effectiveness of various agri‐food public health interventions were selected as case studies (Greig et al., 2012; Wilhelm et al., 2011; Bucher et al., 2015). The review questions for each SR‐MA are provided in Table 1. These SR‐MAs were purposively selected because we had full access to their protocols, data collection forms and most data files, thereby ensuring that the original review processes could be duplicated.

Table 1.

Review question for the three original systematic review meta‐analyses.

| Author | Review question |
|---|---|
| Bucher et al. (2015) | Does chilling reduce Campylobacter spp. prevalence and/or concentration during the primary processing of broiler chickens? |
| Greig et al. (2012) | Do primary processing interventions reduce contamination of beef carcasses with generic or pathogenic E. coli (measured as prevalence or concentration)? |
| Wilhelm et al. (2011) | What is the effect of hazard analysis critical control point (HACCP) programs on microbial prevalence and concentration on food animal carcasses in abattoirs through primary processing? |

2.2. Methodological shortcuts

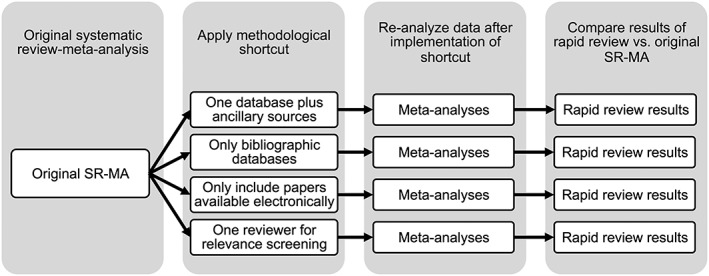

For each SR‐MA, the search and relevance screening steps were recreated while applying one of four methodological shortcuts. Each shortcut was applied separately while keeping all other methodological processes consistent with the original SR‐MA. An overview of the process is provided in Figure 1. An initial list of methodological shortcuts was developed based on a rapid scan of published RRs and methodological papers about rapid reviews:

Published RRs. A search was conducted in Scopus (SciVerse) using the search terms ‘rapid review’ and ‘rapid systematic review’. Scanning the search output identified 43 articles on RRs, of which 38 were available in full text through institutional holdings. Data regarding the methodological processes reported in each RR were extracted and reviewed.

Methodological papers on RRs. The following methodological papers were reviewed: two reviews of published RRs (Ganann et al., 2010; Harker and Kleijnen, 2012), two articles describing the authors' experiences in conducting RRs (Khangura et al., 2012; Thomas et al., 2013), a survey of HTA agencies (Watt et al., 2008a) and two papers describing comparisons between RRs and SRs on the same topic (Van De Velde et al., 2011; Watt et al., 2008b).

Figure 1.

Overview of study process for evaluating the potential implications of methodological shortcuts on the outcome of rapid reviews. This process was repeated for each of three original systematic review meta‐analyses.

Based on feasibility and prior use in the literature, the following four methodological shortcuts were selected for evaluation:

1. Limiting the search of bibliographic databases to the one yielding the highest number of records from the search algorithm, in addition to ancillary sources searched in the original SR‐MA (e.g. grey literature, hand‐searching of key journals, reference lists and consultation with experts).
2. Limiting the search to bibliographic databases (i.e. excluding the grey literature and other non‐bibliographic sources from the search).
3. Limiting inclusion of studies to those in which the full text was available electronically through institutional holdings or publicly online (i.e. excluding studies that must be requested or photocopied from print sources).
4. Relevance screening of titles and abstracts by a single reviewer (rather than by two independent reviewers).

2.3. Shortcut 1 — one bibliographic database plus ancillary sources

For each SR‐MA, the search documentation was examined to determine which of the included bibliographic databases yielded the largest number of records. The records obtained from the single database were imported into a reference management program and merged with records arising from ancillary sources (e.g. grey literature search, hand‐searching, consultation with experts), as reported in the original SR‐MA. Studies included in the original SR‐MA were cross‐checked against these records to identify the relevant studies missed by not including the other bibliographic databases in the search.
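The cross‐check described above amounts to a set difference between the studies included in the original SR‐MA and the records captured by the restricted search. The following is a minimal sketch of that step, not the authors' procedure (which used reference management software); the function name and the record identifiers are hypothetical and serve only as illustration.

```python
def missed_studies(included_ids, database_ids, ancillary_ids=frozenset()):
    """Return the included studies that the restricted search fails to capture."""
    captured = set(database_ids) | set(ancillary_ids)
    return sorted(set(included_ids) - captured)


# Hypothetical record identifiers, for illustration only.
included = {"S01", "S02", "S03", "S04", "S05"}   # studies in the original SR-MA
largest_database = {"S01", "S02", "S09"}         # records from the single database
ancillary = {"S04"}                              # grey literature, hand-searching, experts

missed = missed_studies(included, largest_database, ancillary)
retained = len(included) - len(missed)
print(f"missed: {missed}; retained {retained}/{len(included)}")
```

Calling the same function without the ancillary records shows how many additional studies would be lost if those sources were also dropped.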

2.4. Shortcut 2 — limiting the search to bibliographic databases

For each SR‐MA, only records arising from the bibliographic database searches were imported into a reference management program. Studies included in the original SR‐MA were cross‐checked against these records to determine if, and how many, relevant studies were missed by omitting the grey literature and other non‐bibliographic database sources from the search.

2.5. Shortcut 3 — papers available electronically

A search was conducted to determine whether studies included in each of the original SR‐MAs were readily available in a full‐text electronic format (e.g. PDF, html), either publicly online or through institutional holdings (TriUniversity Group of Libraries, http://www.tug‐libraries.on.ca/). Studies were excluded if the full text was only available in print, through a library request or purchase through the publisher.

2.6. Shortcut 4 — single reviewer for title and abstract relevance screening

The potential implications of this methodological shortcut were assessed by rescreening titles and abstracts for relevance in duplicate with two independent reviewers. Reviewer A was a veterinarian, had a master's degree in epidemiology and had over 5 years of experience in relevance screening for reviews in agri‐food public health. Reviewer B had a master's degree in public health and over 2 years of experience in relevance screening for reviews in agri‐food public health. The reviewers were blinded to the study design and objectives and to the identity of the other reviewer. Prior to screening, the reviewers were provided a short document containing background information, the eligibility criteria and the relevance screening tool for the review. Each reviewer was then assigned a practice pre‐test of 50 records of potentially relevant studies to screen in order to ensure adequate understanding of the review topic and screening criteria. Pre‐test results for each reviewer were analysed using Cohen's kappa (k), which assessed the extent of each reviewer's agreement with the results of the original review beyond that expected by chance alone (Cohen, 1960). During screening, the reviewers did not have access to each other's responses. Studies included in the original SR‐MA were cross‐checked against the list of records included after screening by each reviewer to determine if, and how many, relevant studies were excluded.
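As an illustration of the pre‐test agreement statistic, the sketch below computes Cohen's kappa for one reviewer's include/exclude decisions against the decisions of the original review. The 50 decisions shown are hypothetical and are not the study's pre‐test data; the function name is likewise an assumption made for this example.

```python
def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two equal-length lists of categorical ratings."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    categories = set(ratings_a) | set(ratings_b)
    # Observed proportion of records on which the two raters agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's marginal proportions.
    expected = sum(
        (ratings_a.count(c) / n) * (ratings_b.count(c) / n) for c in categories
    )
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical pre-test of 50 records: the original review included 20.
original_decisions = ["include"] * 20 + ["exclude"] * 30
reviewer_decisions = (
    ["include"] * 18 + ["exclude"] * 2      # reviewer misses 2 relevant records
    + ["include"] * 3 + ["exclude"] * 27    # reviewer wrongly includes 3
)
kappa = cohens_kappa(original_decisions, reviewer_decisions)
print(f"kappa = {kappa:.2f}")
```

In this made‐up example the raw agreement is 90%, but kappa is lower because part of that agreement would be expected by chance.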

2.7. Review management and data analysis

Bibliographic records for each review were managed in ProCite for Windows, Version 5.0.3 (ISI ResearchSoft, Berkeley, CA, USA) or RefWorks 2.0 (RefWorks‐COS, Bethesda, MD). Title and abstract relevance screening was performed in the electronic systematic review management program DistillerSR (Evidence Partners Incorporated, Ottawa, ON, Canada). Data were imported into Microsoft Excel 2010 (Microsoft Corporation, Redmond, WA, USA) for tabulation. Analyses were conducted using the procedures reported in the original SR‐MAs (Bucher et al., 2015; Greig et al., 2012; Wilhelm et al., 2011). All MAs were performed in STATA/IC 13.1 (StataCorp. 2013. Stata Statistical Software: Release 13. College Station, TX: StataCorp LP).

3. Results

3.1. Impact on the number of relevant studies omitted or excluded

The numbers of studies included in the original SRs and those remaining after implementing each methodological shortcut are displayed in Table 2. Single relevance screening by Reviewer B resulted in the highest number of relevant studies omitted from the Bucher et al. (2015) and Wilhelm et al. (2011) reviews, with 3/18 and 11/19 excluded, respectively. Limiting the bibliographic database search to one database resulted in 15/36 relevant studies not being captured in the Greig et al. (2012) search; these studies were thus omitted from the review. The number of separate MAs performed in each review and the number impacted by each shortcut are described in Table 3. The observed effects on the direction, magnitude, precision and heterogeneity of summary estimates are summarized by shortcut type.

Table 2.

Impact of the four methodological shortcuts on the number of relevant studies included.

| Systematic review meta‐analysis | Bucher et al. (2015) | Greig et al. (2012) | Wilhelm et al. (2011) |
|---|---|---|---|
| Number of relevant studies included in original systematic review | 18 | 36 | 19 |
| Relevant studies included after: | | | |
| Shortcut 1 – 1 database plus ancillary sources | 17 (94%) | 21 (58%) | 17 (89%) |
| Shortcut 2 – only bibliographic databases | n/a<sup>a</sup> | 35 (97%) | 14 (74%) |
| Shortcut 3 – papers available electronically | 18 (100%) | 33 (92%) | 18 (95%) |
| Shortcut 4 – screening by Reviewer A | 18 (100%) | 34 (94%) | 17 (89%) |
| Shortcut 4 – screening by Reviewer B | 15 (83%) | 29 (81%) | 8 (42%) |

<sup>a</sup> The Bucher et al. search included only bibliographic databases.

Table 3.

Impact of the four methodological shortcuts on the meta‐analyses for each review.

| Systematic review meta‐analysis | Bucher et al. (2015) | Greig et al. (2012) | Wilhelm et al. (2011) |
|---|---|---|---|
| Number of studies (trials) included in original meta‐analysis | 18 (44) | 22 (107) | 9 (n/a) |
| Number of meta‐analyses performed in original paper | 7 | 18 | 5 |
| Number of meta‐analyses affected by: | | | |
| Shortcut 1 – 1 database plus ancillary sources | 1 (14%) | 13 (72%) | 1 (20%) |
| Shortcut 2 – only bibliographic databases | n/a<sup>a</sup> | 1 (6%) | 4 (80%) |
| Shortcut 3 – papers available electronically | 0 | 3 (17%) | 0 |
| Shortcut 4 – screening by Reviewer A | 0 | 1 (6%) | 3 (60%) |
| Shortcut 4 – screening by Reviewer B | 3 (43%) | 4 (22%) | 5 (100%) |

<sup>a</sup> The Bucher et al. search included only bibliographic databases.
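The overall figures quoted in the abstract (39 of 143 possible MAs affected) can be recovered from the counts in Table 3. The short tally below is a sketch of that arithmetic; it treats Reviewer A and Reviewer B as separate applications of shortcut 4 and skips the combination marked not applicable, which is an interpretation of how the denominator was formed rather than something the text states explicitly.

```python
# Number of MAs performed in each original review (Table 3).
mas_per_review = {"Bucher": 7, "Greig": 18, "Wilhelm": 5}

# MAs affected under each shortcut (Table 3); None marks "not applicable".
affected = {
    "1: one database plus ancillary": {"Bucher": 1, "Greig": 13, "Wilhelm": 1},
    "2: bibliographic databases only": {"Bucher": None, "Greig": 1, "Wilhelm": 4},
    "3: electronic full text only": {"Bucher": 0, "Greig": 3, "Wilhelm": 0},
    "4: single screening, Reviewer A": {"Bucher": 0, "Greig": 1, "Wilhelm": 3},
    "4: single screening, Reviewer B": {"Bucher": 3, "Greig": 4, "Wilhelm": 5},
}

# Each applicable shortcut/review pair contributes all of that review's MAs.
possible = sum(
    mas_per_review[review]
    for counts in affected.values()
    for review, n in counts.items()
    if n is not None
)
total_affected = sum(
    n for counts in affected.values() for n in counts.values() if n is not None
)
print(f"{total_affected} of {possible} possible MAs affected")
```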

3.2. Shortcut 1 — one bibliographic database plus ancillary sources

Overall, limiting the search of bibliographic databases to one database plus the ancillary sources affected the highest number of MAs (n = 15). The affected MAs are listed in Table 4 with their original summary estimate and the summary estimate obtained after applying the shortcut. The search output was limited to Biological Sciences/ProQuest for the Bucher et al. review and Agricola for the Wilhelm et al. review. For the Greig et al. (2012) review, limiting the search output to MEDLINE/PubMed plus the ancillary (non‐bibliographic database) sources resulted in 15/36 relevant studies being omitted from the search, thereby affecting 13/18 MAs. The shortcut was also applied with the output from CAB Abstracts/CABI (n = 715) because the database yielded only 16 fewer hits than MEDLINE/PubMed (n = 731) and was hypothesized to have a higher sensitivity because its subject coverage includes agriculture, veterinary science and food science (CABI, 2014). Applying the shortcut with the output from CAB Abstracts/CABI resulted in more relevant studies being omitted from the search (17/36) than with MEDLINE/PubMed (15/36), and so further analysis with the CAB Abstracts/CABI output was discontinued.

Table 4.

Meta‐analyses affected by limiting the search of bibliographic databases to one database plus ancillary sources (shortcut 1).

| Author | Meta‐analysis | Original: obs/trials/studies | Original: summary estimate (95% CI) | Original: Q | Original: p | Original: I² | After shortcut: obs/trials/studies | After shortcut: summary estimate (95% CI) | After shortcut: Q | After shortcut: p | After shortcut: I² |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Bucher et al., 2015 | 7. Immersion chilling with unspecified disinfectant | 316/4/3 | OR 0.284 (0.026, 3.081) | 12.68 | 0.005 | 76.3% | 244/3/2 | OR 0.297 (0.014, 6.165) | 11.07 | 0.004 | 81.9% |
| Greig et al., 2012 | 2. Final carcass wash (+wash) | 1145/18/2 | OR 0.133 (0.050, 0.349) | 73.24 | <0.001 | 76.8% | 640/8/1 | OR 0.014 (0.005, 0.040) | 7.98 | 0.334 | 12.3% |
| Greig et al., 2012 | 3. Final carcass wash | 838/10/4 | OR 0.563 (0.414, 0.766) | 8.42 | 0.493 | 0.0% | 588/5/2 | OR 0.590 (0.359, 0.968) | 5.34 | 0.254 | 25.1% |
| Greig et al., 2012 | 4. Pasteurization – steam | 3286/17/6 | OR 0.134 (0.080, 0.223) | 25.07 | 0.069 | 36.2% | 2866/12/4 | OR 0.172 (0.100, 0.294) | 16.68 | 0.118 | 34.0% |
| Greig et al., 2012 | 5. Pasteurization – steam + lactic acid | 150/3/2 | OR 0.010 (0.002, 0.039) | 1.62 | 0.444 | 0.0% | All studies excluded | n/a | n/a | n/a | n/a |
| Greig et al., 2012 | 6. Pasteurization – hot water | 450/9/2 | OR 0.089 (0.053, 0.148) | 8.00 | 0.434 | 0.0% | 300/6/1 | OR 0.095 (0.051, 0.180) | 4.84 | 0.435 | 0.0% |
| Greig et al., 2012 | 7. Overall pasteurization rate | 3986/31/10 | OR 0.092 (0.062, 0.135) | 47.63 | 0.022 | 37.0% | 3166/18/5 | OR 0.134 (0.090, 0.199) | 22.04 | 0.183 | 22.9% |
| Greig et al., 2012 | 9. Dry chill | 925/9/4 | OR 0.166 (0.114, 0.242) | 4.59 | 0.800 | 0.0% | 825/7/3 | OR 0.159 (0.107, 0.234) | 3.70 | 0.717 | 0.0% |
| Greig et al., 2012 | 11. Spray chill | 2156/4/2 | OR 5.233 (0.128, 214.186) | 62.14 | <0.001 | 95.2% | 2006/1/1 | OR 0.097<sup>a</sup> (0.057, 0.165) | n/a | n/a | n/a |
| Greig et al., 2012 | 12. Final carcass wash (+wash) | 1145/18/2 | SMD −1.741 (−2.367, −1.116) | 326.3 | <0.001 | 94.8% | 640/8/1 | SMD −3.059 (−3.817, −2.300) | 77.74 | <0.001 | 91.0% |
| Greig et al., 2012 | 13. Final carcass wash (wash) | 566/9/3 | SMD −0.279 (−0.521, −0.037) | 15.25 | 0.009 | 67.2% | 100/2/1 | SMD −0.301 (−0.580, −0.022) | 0.00 | 0.991 | 0.0% |
| Greig et al., 2012 | 14. Final carcass wash (wash+) | 2200/5/1 | SMD −0.206 (−0.339, −0.074) | 20.00 | <0.001 | 80.0% | Study excluded | n/a | n/a | n/a | n/a |
| Greig et al., 2012 | 17. Dry age chill | 1119/3/2 | SMD −0.130 (−0.205, −0.055) | 1.43 | 0.488 | 0.0% | 80/1/1 | SMD −0.100<sup>a</sup> (−0.218, 0.018) | n/a | n/a | n/a |
| Greig et al., 2012 | 18. Spray chill | 3421/6/4 | SMD −0.243 (−0.553, 0.067) | 60.69 | <0.001 | 93.4% | 240/1/1 | SMD −0.200<sup>a</sup> (−0.337, −0.063) | n/a | n/a | n/a |
| Wilhelm et al., 2011 | 1. HACCP on aerobic bacterial counts | 2486/(n/a)/3 | SMD −0.747 (−0.943, −0.551) | 5.16 | 0.076 | 61.3% | 2006/(n/a)/2 | SMD −1.059 (−1.859, −0.259) | 4.23 | 0.040 | 76.3% |

Obs, observations; CI, confidence interval; OR, odds ratio; Q, Cochran's Q statistic; n/a, not applicable; SMD, standardized mean difference.

<sup>a</sup> Effect estimate from a single trial, not a pooled estimate from meta‐analysis.

Of the 13 MAs affected in the Greig et al. (2012) review, five MAs were no longer possible because fewer than two studies remained. One MA by Greig et al. (2012) found that the odds ratio for detecting an Escherichia coli‐positive beef carcass was 5.233 (95% CI: 0.128–214.186) when spray chill was applied. Applying the shortcut resulted in all but one trial being omitted; this trial found reduced odds of carcass contamination after spray chilling (OR 0.097, 95% CI: 0.057–0.165).
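The Q and I² columns reported in Table 4 are linked by a simple formula. The sketch below assumes the commonly used definition I² = max(0, (Q − df)/Q), with df equal to the number of pooled estimates minus one; the source does not state which formula was used, so this is offered as a plausible reading rather than the authors' calculation. Under that assumption it reproduces, for example, the values reported for the final carcass wash (+wash) MA before and after shortcut 1.

```python
def i_squared(q, n_estimates):
    """I-squared (as a percentage) from Cochran's Q and the number of pooled estimates."""
    if n_estimates < 2:
        raise ValueError("I-squared is undefined for fewer than two estimates")
    df = n_estimates - 1
    if q <= 0:
        return 0.0
    # Negative values are truncated to zero, as is conventional.
    return max(0.0, (q - df) / q) * 100


# Greig et al. MA 2 in Table 4: Q = 73.24 over 18 trials, then Q = 7.98 over 8 trials.
print(round(i_squared(73.24, 18), 1))  # 76.8
print(round(i_squared(7.98, 8), 1))    # 12.3
# Greig et al. MA 3: Q = 8.42 over 10 trials is below its degrees of freedom.
print(i_squared(8.42, 10))             # 0.0
```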

3.3. Shortcut 2 — limiting the search to bibliographic databases

This shortcut was not applicable to the Bucher et al. (2015) review because the original search was performed exclusively in bibliographic databases. Use of the shortcut meant that one MA from Greig et al. (2012) was no longer possible, because the original analysis was based on five trials from a single study that was reported in an unpublished research report and thus not indexed in any commercial database. For the Wilhelm et al. (2011) review, four of five MAs were affected (Table 5): one MA was no longer possible because all but one study was omitted, and three MAs yielded an odds ratio numerically close to the original but with a wider confidence interval.

Table 5.

Meta‐analyses affected by limiting the search to only bibliographic databases (shortcut 2).

| Author | Meta‐analysis | Original: obs/trials/studies | Original: summary estimate (95% CI) | Original: Q | Original: p | Original: I² | After shortcut: obs/trials/studies | After shortcut: summary estimate (95% CI) | After shortcut: Q | After shortcut: p | After shortcut: I² |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Greig et al., 2012 | 14. Final carcass wash (wash+) | 2200/5/1 | SMD −0.206 (−0.339, −0.074) | 20.00 | <0.001 | 80.0% | Study excluded | n/a | n/a | n/a | n/a |
| Wilhelm et al., 2011 | 2. HACCP on E. coli prevalence | 6236/(n/a)/3 | OR 0.585 (0.298, 1.149) | 9.77 | 0.008 | 79.5% | 2166/(n/a)/1 | OR 0.443<sup>a</sup> (0.350, 0.561) | n/a | n/a | n/a |
| Wilhelm et al., 2011 | 3. HACCP on Salmonella spp. prevalence – beef | 7843/(n/a)/5 | OR 0.887 (0.530, 1.485) | 3.39 | 0.496 | 0.0% | 3773/(n/a)/3 | OR 0.777 (0.178, 3.396) | 2.79 | 0.248 | 28.3% |
| Wilhelm et al., 2011 | 4. HACCP on Salmonella spp. prevalence – pork | 11540/(n/a)/3 | OR 0.776 (0.535, 1.123) | 10.45 | 0.005 | 80.9% | 7311/(n/a)/2 | OR 0.758 (0.365, 1.573) | 9.93 | 0.002 | 89.9% |
| Wilhelm et al., 2011 | 5. HACCP on Salmonella spp. prevalence – poultry | 18938/(n/a)/3 | OR 0.392 (0.173, 0.886) | 76.34 | <0.001 | 97.4% | 16417/(n/a)/2 | OR 0.393 (0.089, 1.733) | 50.58 | <0.001 | 98.0% |

Obs, observations; CI, confidence interval; OR, odds ratio; Q, Cochran's Q statistic; n/a, not applicable; SMD, standardized mean difference.

<sup>a</sup> Effect estimate from a single study, not a pooled estimate from meta‐analysis.

3.4. Shortcut 3 — papers available electronically

Limiting eligibility criteria to studies directly available to the authors in an electronic format had no impact on the Bucher et al. (2015) review and resulted in one study (that was not included in any MAs) being omitted from the Wilhelm et al. (2011) review. The shortcut affected three MAs from the Greig et al. (2012) review (Table 6): two were no longer possible because fewer than two trials remained, and one MA yielded a standardized mean difference (SMD) that was larger in magnitude but had a wider confidence interval (original SMD −0.243, 95% CI: −0.553 to 0.067; after shortcut SMD −0.414, 95% CI: −1.108 to 0.280).

Table 6.

Meta‐analyses affected by limiting eligibility to studies readily available to the authors in a full‐text electronic format through institutional holdings or publicly online (shortcut 3).

| Author | Meta‐analysis | Original: obs/trials/studies | Original: summary estimate (95% CI) | Original: Q | Original: p | Original: I² | After shortcut: obs/trials/studies | After shortcut: summary estimate (95% CI) | After shortcut: Q | After shortcut: p | After shortcut: I² |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Greig et al., 2012 | 14. Final carcass wash (wash+) | 2200/5/1 | SMD −0.206 (−0.339, −0.074) | 20.00 | <0.001 | 80.0% | Study excluded | n/a | n/a | n/a | n/a |
| Greig et al., 2012 | 17. Dry age chill | 1119/3/2 | SMD −0.130 (−0.205, −0.055) | 1.43 | 0.488 | 0.0% | 80/1/1 | SMD −0.100<sup>a</sup> (−0.218, 0.018) | n/a | n/a | n/a |
| Greig et al., 2012 | 18. Spray chill | 3421/6/4 | SMD −0.243 (−0.553, 0.067) | 60.69 | <0.001 | 93.4% | 340/3/2 | SMD −0.414 (−1.108, 0.280) | 46.07 | <0.001 | 95.7% |

Obs, observations; CI, confidence interval; OR, odds ratio; Q, Cochran's Q statistic; n/a, not applicable; SMD, standardized mean difference.

<sup>a</sup> Effect estimate from a single trial, not a pooled estimate from meta‐analysis.

3.5. Shortcut 4 — single reviewer for title and abstract relevance screening

The impact of using a single reviewer for title and abstract relevance screening differed between Reviewer A (Table 7) and Reviewer B (Table 8). For the Bucher et al. (2015) review, Reviewer A screened in all records corresponding to studies included in the original SR‐MA (Table 2), and thus single screening by this reviewer had no impact on the results of the review (Table 3). Records screened out by Reviewer A impacted one MA from the Greig et al. (2012) review and three MAs from the Wilhelm et al. (2011) review (Table 3), which resulted in odds ratios numerically closer to the null value of 1 (Table 7). Single screening by Reviewer B had a large impact on the results of the Wilhelm et al. review (Table 8). The original review identified four studies evaluating the effectiveness of hazard analysis critical control point programs on the prevalence of Salmonella spp. on poultry carcasses: one reporting a non‐significant odds ratio (OR 1.047, 95% CI: 0.826–1.327) (Cates et al., 2001), and three reporting statistically significant reductions (OR 0.392, 95% CI: 0.173–0.886) (United States Department of Agriculture, Food Safety and Inspection Service, 2000; Ghafir et al., 2005; Rose et al., 2002). The latter three were among the 11 of 19 relevant studies screened out by Reviewer B; their omission would have resulted in a review that found no evidence of hazard analysis critical control point programs being effective in reducing Salmonella spp. contamination in poultry processing plants.

Table 7.

Meta‐analyses affected by relevance screening performed by a single reviewer (shortcut 4 — reviewer A).

| Author | Meta‐analysis | Original: obs/trials/studies | Original: summary estimate (95% CI) | Original: Q | Original: p | Original: I² | After shortcut: obs/trials/studies | After shortcut: summary estimate (95% CI) | After shortcut: Q | After shortcut: p | After shortcut: I² |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Greig et al., 2012 | 3. Final carcass wash | 838/10/4 | OR 0.563 (0.414, 0.766) | 8.42 | 0.493 | 0.0% | 538/9/3 | OR 0.747 (0.497, 1.123) | 4.12 | 0.846 | 0.0% |
| Wilhelm et al., 2011 | 3. HACCP on Salmonella spp. prevalence – beef | 7843/(n/a)/5 | OR 0.887 (0.530, 1.485) | 3.39 | 0.496 | 0.0% | 7532/(n/a)/4 | OR 0.953 (0.565, 1.608) | 0.98 | 0.805 | 0.0% |
| Wilhelm et al., 2011 | 4. HACCP on Salmonella spp. prevalence – pork | 11540/(n/a)/3 | OR 0.776 (0.535, 1.123) | 10.45 | 0.005 | 80.9% | 10789/(n/a)/2 | OR 0.913 (0.658, 1.266) | 3.73 | 0.053 | 73.2% |
| Wilhelm et al., 2011 | 5. HACCP on Salmonella spp. prevalence – poultry | 18938/(n/a)/3 | OR 0.392 (0.173, 0.886) | 76.34 | <0.001 | 97.4% | 18237/(n/a)/2 | OR 0.564 (0.262, 1.212) | 33.63 | <0.001 | 97.0% |

Obs, observations; CI, confidence interval; OR, odds ratio; Q, Cochran's Q statistic; n/a, not applicable; SMD, standardized mean difference.

Table 8.

Meta‐analyses affected by relevance screening performed by a single reviewer (shortcut 4 — reviewer B).

| Author | Meta‐analysis | Original: obs/trials/studies | Original: summary estimate (95% CI) | Original: Q | Original: p | Original: I² | After shortcut: obs/trials/studies | After shortcut: summary estimate (95% CI) | After shortcut: Q | After shortcut: p | After shortcut: I² |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Bucher et al., 2015 | 2. Immersion chilling with chlorine | 300/6/4 | SMD −1.955 (−2.609, −1.302) | 32.14 | <0.001 | 84.4% | 220/5/3 | SMD −1.960 (−2.977, −0.942) | 31.99 | <0.001 | 87.5% |
| Bucher et al., 2015 | 4. Immersion chilling with unspecified disinfectant – BaA trials | 192/5/2 | SMD −2.472 (−3.387, −1.156) | 107.11 | <0.001 | 96.3% | All studies excluded | n/a | n/a | n/a | n/a |
| Bucher et al., 2015 | 7. Immersion chilling with unspecified disinfectant – ChT | 316/4/3 | OR 0.284 (0.026, 3.081) | 12.68 | 0.005 | 76.3% | 244/3/2 | OR 0.297 (0.014, 6.165) | 11.07 | 0.004 | 81.9% |
| Greig et al., 2012 | 3. Final carcass wash | 838/10/4 | OR 0.563 (0.414, 0.766) | 8.42 | 0.493 | 0.0% | 538/9/3 | OR 0.747 (0.497, 1.123) | 4.12 | 0.846 | 0.0% |
| Greig et al., 2012 | 4. Pasteurization – steam | 3286/17/6 | OR 0.134 (0.080, 0.223) | 25.07 | 0.069 | 36.2% | 3006/10/5 | OR 0.094 (0.066, 0.135) | 9.75 | 0.371 | 7.7% |
| Greig et al., 2012 | 7. Overall pasteurization rate | 3986/31/10 | OR 0.092 (0.062, 0.135) | 47.63 | 0.022 | 37.0% | 3706/24/9 | OR 0.076 (0.053, 0.109) | 30.64 | 0.132 | 24.9% |
| Greig et al., 2012 | 9. Dry chill | 925/9/4 | OR 0.166 (0.114, 0.242) | 4.59 | 0.800 | 0.0% | 540/8/3 | OR 0.222 (0.111, 0.442) | 3.62 | 0.823 | 0.0% |
| Wilhelm et al., 2011 | 1. HACCP on aerobic bacterial counts | 2486/(n/a)/3 | SMD −0.747 (−0.943, −0.551) | 5.16 | 0.076 | 61.3% | 520/(n/a)/2 | SMD −1.027 (−1.923, −0.132) | 4.98 | 0.026 | 79.9% |
| Wilhelm et al., 2011 | 2. HACCP on E. coli prevalence | 6236/(n/a)/3 | OR 0.585 (0.298, 1.149) | 9.77 | 0.008 | 79.5% | All studies excluded | n/a | n/a | n/a | n/a |
| Wilhelm et al., 2011 | 3. HACCP on Salmonella spp. prevalence – beef | 7843/(n/a)/5 | OR 0.887 (0.530, 1.485) | 3.39 | 0.496 | 0.0% | All studies excluded | n/a | n/a | n/a | n/a |
| Wilhelm et al., 2011 | 4. HACCP on Salmonella spp. prevalence – pork | 11540/(n/a)/3 | OR 0.776 (0.535, 1.123) | 10.45 | 0.005 | 80.9% | All studies excluded | n/a | n/a | n/a | n/a |
| Wilhelm et al., 2011 | 5. HACCP on Salmonella spp. prevalence – poultry | 18938/(n/a)/3 | OR 0.392 (0.173, 0.886) | 76.34 | <0.001 | 97.4% | All studies excluded | n/a | n/a | n/a | n/a |

Obs, observations; CI, confidence interval; OR, odds ratio; Q, Cochran's Q statistic; n/a, not applicable; SMD, standardized mean difference.

4. Discussion

The implications of four methodological shortcuts on the outcomes of three completed SR‐MAs in the area of agri‐food public health were evaluated in this set of case studies. Because each methodological shortcut was applied individually – with all other processes the same as the original SR‐MA – we have been able to show the relative impact of each shortcut through the observed differences in the outcomes of the SRs and RRs. This is in contrast to previous comparisons of SRs and RRs on the same topic (Cameron et al., 2007; Van De Velde et al., 2011), where multiple dissimilarities in the review approaches (e.g. search algorithm, bibliographic databases searched, eligibility criteria) and the conditions in which they were conducted (e.g. extent of access to full‐text journal articles, expertise of review team) made it difficult to assess the specific reasons for the observed differences in the outcomes of the reviews. Our approach also allowed us to assess the potential variability in the impact of each shortcut by applying them on three separate reviews and to illustrate the relative impact some methodological shortcuts can have on the results of a SR‐MA.

This paper is based on a non‐random sample of three SR‐MAs from the field of agri‐food public health. Agri‐food public health refers to the cross‐cutting and overlapping areas of veterinary public health, food safety and ‘One Health’ (Rajić and Young, 2013; Sargeant et al., 2006). Examples of agri‐food public health issues include antimicrobial use and antimicrobial resistance, emerging infectious diseases and the prevention and management of hazards in the food chain (Rajić and Young, 2013). Systematic reviews in agri‐food public health may differ from those in human medicine because they commonly include observational studies and challenge trials (where study subjects are deliberately exposed to the disease agent of interest under controlled conditions) (Sargeant and O'Connor, 2014; Sargeant et al., 2014). While SRs of randomized controlled trials provide the highest level of evidence about the effectiveness of an intervention, fewer randomized controlled trials are published in this field compared with human medicine (Sargeant and O'Connor, 2014). Consequently, the results may differ when the shortcuts are applied to SR‐MAs published in other fields of study.

With the exception of two shortcuts applied on the Bucher et al. review, each of the shortcuts resulted in at least one relevant study being omitted from the SR‐MAs. The omission of studies had a large impact on the three SR‐MAs evaluated in this paper because several of the original MAs were conducted with only a small number of trials or studies, with the result that over a third (14/39) of the affected MAs were no longer possible because of an insufficient number of studies (i.e. <2 studies). For some of the review outcomes, the shortcuts increased the potential of the review to miss relevant literature, and thus to draw conclusions from an incomplete body of evidence. For example, a MA of four trials from the original Greig et al. review found an uninformative odds ratio for generic E. coli carcass contamination after spray chilling (OR 5.233, 95% CI: 0.128–214.186). However, limiting the search to one bibliographic database resulted in only one trial remaining (Corantin et al., 2005), a study that reported a significant reduction in the odds of contamination after spray chilling (OR 0.097, 95% CI: 0.057–0.165). When MA was possible, the omission of studies generally resulted in less precise pooled estimates (i.e. wider confidence intervals). There was significant heterogeneity (p‐value < 0.1; I² ≥ 25%) in many of the original MAs, and the omission of studies had varying effects on the between‐study heterogeneity when two or more studies remained in the affected MAs.
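The loss of precision described above can be illustrated with a minimal sketch. It assumes simple inverse‐variance (fixed‐effect) pooling of log odds ratios, which is a simplification and not necessarily the model used in the original SR‐MAs. The function name `pool_fixed_effect` and all trial values are hypothetical and chosen only for illustration.

```python
import math


def pool_fixed_effect(log_ors, std_errs, z=1.96):
    """Pool log odds ratios with inverse-variance (fixed-effect) weights.

    Returns a dict with the pooled OR, its 95% CI, and I^2 (percent).
    """
    if len(log_ors) != len(std_errs):
        raise ValueError("need one standard error per study")
    if len(log_ors) < 2:
        # Same rule as in the text: fewer than 2 studies means no MA.
        raise ValueError("meta-analysis needs at least two studies")

    weights = [1.0 / se ** 2 for se in std_errs]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_ors)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)

    # Cochran's Q and the derived I^2 statistic for heterogeneity.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_ors))
    df = len(log_ors) - 1
    i_squared = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    return {
        "or": math.exp(pooled),
        "ci_low": math.exp(pooled - z * pooled_se),
        "ci_high": math.exp(pooled + z * pooled_se),
        "i_squared": i_squared,
    }


# Hypothetical log odds ratios and standard errors for four trials.
log_ors = [-0.9, -0.4, -1.2, -0.6]
std_errs = [0.30, 0.25, 0.45, 0.35]

full = pool_fixed_effect(log_ors, std_errs)
# Suppose a shortcut omits the first two trials.
reduced = pool_fixed_effect(log_ors[2:], std_errs[2:])

for label, result in (("all four trials", full), ("two trials", reduced)):
    print(f"{label}: OR {result['or']:.3f} "
          f"(95% CI {result['ci_low']:.3f} to {result['ci_high']:.3f}), "
          f"I^2 = {result['i_squared']:.0f}%")
```

Because the pooled standard error is the inverse square root of the summed weights, removing any study can only widen the interval on the log scale, whereas I² may rise or fall depending on which studies remain.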

Among the three SR‐MAs examined, the results of the Bucher et al. review were the least affected by the shortcuts applied. There were a number of features of this review that likely reduced the impact of the search‐related shortcuts: the specificity of the review question and the limits imposed on the original search. The Bucher et al. review had the most narrowly defined research question of all three reviews, with just one population group (i.e. broiler chicken carcasses), one intervention (i.e. chilling during primary processing) and one pathogen (i.e. Campylobacter spp.). The specificity of the review question may have made identification of relevant studies more straightforward for reviewers, and thus, explain why this review had the highest ascertainment of relevant studies after single screening by both of our reviewers. The search for the original Bucher et al. review consisted of searching six electronic bibliographic databases and was limited to studies published after 2005. These features of the original search likely account for why limiting the search to only one bibliographic database still identified the majority of included papers (94%).

Limiting eligibility criteria to studies readily available in a full‐text electronic format decreases the time and costs associated with ordering paper‐only archives. The availability of electronic full texts can be related to year of publication, type of publication (e.g. journal article, report, thesis, etc.) and review authors' access to electronic databases and journals. While the advantages of electronic journals have been discussed since as early as 1976 (Senders, 1976), e‐journals only became more widely available in the late 1990s and early 2000s (Oermann and Hays, 2015). The original Bucher et al. review limited the search to studies published after 2005, which may account for why applying the shortcut of limiting eligibility to electronic full texts had no impact on this review. We did not evaluate the potential variability in the impact of review authors' access to electronic holdings; we had access to the fairly comprehensive electronic holdings of the TriUniversity Group of Libraries, an administrative cooperation between the libraries of three Ontario universities (http://www.tug‐libraries.on.ca/).

Relevance screening by Reviewer B for the Wilhelm et al. review demonstrated the potential impact of single reviewer screening on the accuracy and reliability of the screening process. It is unclear why so many relevant studies were screened out by Reviewer B when Reviewer A, in contrast, included 89% of relevant studies, and both reviewers had comparable kappa coefficients in the initial screening pre‐test (0.68 vs. 0.72). The large discrepancy between the screening results of the two reviewers highlights the potential risk of bias introduced with single screening. Relevance screening is a critical stage of the review, in which the relevance of a citation is assessed quickly from the limited information reported in the title and abstract of a record (Doust et al., 2005), and exclusion at this point typically means that the citation is not considered again for inclusion in the review (Edwards et al., 2002). Thus, based on our results, we recommend the use of two reviewers whenever possible. This recommendation is supported by the findings of other studies that reported relevance screening by two reviewers increased the number of relevant records identified compared with screening by a single reviewer (Doust et al., 2005; Edwards et al., 2002). While Edwards et al. noted that a single reviewer is likely to identify the majority of relevant studies, they still recommended that screening be conducted by two reviewers whenever possible because it can increase the number of relevant records identified by as much as one‐third (Edwards et al., 2002).
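To make the two screening measures discussed above concrete, the following sketch computes Cohen's kappa (Cohen, 1960) and the proportion of relevant records retained by a single reviewer. The function names and the include/exclude decisions are hypothetical; they are not data from the three SR‐MAs, and the sketch assumes that each reviewer's decisions are compared against a set of reference decisions.

```python
def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    ratings_a, ratings_b = list(ratings_a), list(ratings_b)
    if not ratings_a or len(ratings_a) != len(ratings_b):
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)

    # Observed agreement: share of records given the same decision.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Agreement expected by chance, from each rater's marginal rates.
    categories = set(ratings_a) | set(ratings_b)
    expected = sum(
        (ratings_a.count(c) / n) * (ratings_b.count(c) / n)
        for c in categories
    )
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1.0 - expected)


def proportion_retained(reference, single_reviewer):
    """Share of reference-relevant records the single reviewer also included."""
    kept = [s for r, s in zip(reference, single_reviewer) if r]
    if not kept:
        raise ValueError("no relevant records in the reference decisions")
    return sum(kept) / len(kept)


# Hypothetical include (True) / exclude (False) decisions on ten citations.
reference = [True, True, True, True, False, False, False, False, False, False]
reviewer = [True, True, True, False, False, False, False, False, True, False]

print(f"kappa = {cohens_kappa(reference, reviewer):.2f}")
print(f"relevant records retained = {proportion_retained(reference, reviewer):.0%}")
```

The two measures answer different questions: kappa summarizes overall agreement across includes and excludes, while the proportion retained reflects only the relevant records that survive screening. A reviewer can therefore show acceptable agreement in a pre‐test and still exclude a large share of relevant studies.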

Our findings support the view of Watt et al. (2008a) that it may not be possible to validate methodological strategies for conducting RRs, given the inherent topic‐specific variability underpinning the research evidence. We found that the impact of the four methodological shortcuts varied between the three SR‐MAs depending on the characteristics of each topic. Thus, the impact of any methodological shortcut likely depends on the review question and its scope; rapid review authors must consider the potential size and composition of the research evidence existing for a topic and the time and resources available to conduct the review to determine the most appropriate methodological approach. Applying methodological shortcuts to SRs can make a review more susceptible to bias and/or error; therefore, it is imperative that RR authors are transparent regarding their methodology (Ganann et al., 2010; Watt et al., 2008b) and use caution when interpreting their findings. In the absence of a standardized approach for conducting RRs, transparent reporting of the methods undertaken and potential biases or limitations will better enable end‐users to make informed judgments about the validity and utility of the review results.

There have been questions regarding the appropriateness and validity of RRs given the methodological processes used to prepare them (Ganann et al., 2010; Khangura et al., 2012; Watt et al., 2008b). In light of their potential to miss relevant information, it has been suggested that RRs be viewed only as interim guidance until a full SR can be conducted (Ganann et al., 2010; Watt et al., 2008b). Watt et al. (2008b) contend that RRs are inappropriate for addressing questions that are complex or require in‐depth investigation because they ‘cannot realistically be adequately evaluated in the time frame of a rapid review’. Their study, comparing the outcomes of four RRs and SRs addressing the same question, found that the RRs reached ‘appropriate conclusions’, but that the scope of each RR was substantially narrower than that of the full SR (Cameron et al., 2007). Consequently, they suggested that RRs may be useful for answering highly refined research questions (Watt et al., 2008a). While this limits the generalizability of the review findings to other populations or settings, it can allow RRs to inform specific policy or practice decisions in a timely manner.

Our set of case studies demonstrated that there are potentially substantive implications of applying methodological shortcuts to the SR process. However, the small sample size of three SR‐MAs limited our ability to determine the range of impacts from each shortcut. Although resource‐intensive, replicating this study with a larger and more representative sample of SRs would provide more robust results and further insight into how the review features (e.g. date limits and complexity of the review question) may impact the potential bias of each methodological shortcut. We recommend that future replication of this type of study record the person‐hours for each stage of the review – which we were unable to obtain – so the number of person‐hours saved by implementing methodological shortcuts can be tabulated. Finally, there are numerous methodological shortcuts reported in the literature to expedite SRs; in this set of case studies, we investigated four that we considered to be important, not previously investigated and with the potential to have a substantive impact on the validity of the review results. Further research evaluating the implications of other methodological shortcuts will better enable RR authors to determine the most appropriate approach to synthesis when time is limited. For example, future research may demonstrate strategies to decrease the resource burden of relevance screening as the use of text‐mining algorithms becomes validated and more widely adopted for screening.

5. Conclusion

The results of these case studies demonstrated the relative effects of four methodological shortcuts on the outcomes of three completed SR‐MAs. In all but two instances, the shortcuts resulted in at least one relevant study being omitted from the SR‐MA, highlighting the risk of missing relevant literature when shortcuts are applied in SRs. Overall, the shortcuts affected 39 of a possible 143 MAs, of which 14 were no longer possible because of insufficient studies. Given that requests for rapid syntheses of research evidence will undoubtedly continue, the results of these case studies further our understanding of the implications of applying shortcuts to the SR process. Future research expanding on this study with a larger and more representative sample of SRs and evaluating the implications of other methodological shortcuts will better enable RR authors to determine the most appropriate approach to synthesis when time is limited. In the absence of a standardized approach for conducting RRs, transparent reporting of the methods undertaken and potential biases or limitations will better enable end‐users to make informed judgments about the validity and utility of the review results.

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

MP, LW and AR conceived of the study. MP, LW, AR, SM, JS and AP participated in the design of the study. MP carried out the study. All authors drafted the manuscript. All authors read and approved the final manuscript.

Supporting information

Table S1. List of potential methodological shortcuts ranked in terms of priority to be addressed.

Table S2. List of methodological shortcuts not applicable or relevant to this study.

Acknowledgements

Funding for this project was provided by the Laboratory for Foodborne Zoonoses, Public Health Agency of Canada.

Pham, M. T., Waddell, L., Rajić, A., Sargeant, J. M., Papadopoulos, A., and McEwen, S. A. (2016) Implications of applying methodological shortcuts to expedite systematic reviews: three case studies using systematic reviews from agri‐food public health. Res. Syn. Meth., 7: 433–446. doi: 10.1002/jrsm.1215.

References

- Bucher O, Waddell L, Greig J, Smith BA 2015. Systematic review‐meta‐analysis of the effect of chilling on Campylobacter spp. during primary processing of broilers. Food Control 56: 211–217. DOI:10.1016/j.foodcont.2015.03.032.

- Buscemi N, Hartling L, Vandermeer B, Tjosvold L, Klassen TP 2006. Single data extraction generated more errors than double data extraction in systematic reviews. Journal of Clinical Epidemiology 59: 697–703.

- CABI 2014. CAB Abstracts. Available: http://www.cabi.org/publishing‐products/online‐information‐resources/cab‐abstracts/ [2014, 12/29].

- Cameron A, Watt A, Lathlean T, Sturm L 2007. Rapid Versus Full Systematic Reviews: An Inventory of Current Methods and Practice in Health Technology Assessment. Adelaide, South Australia: ASERNIP‐S.

- Cates SC, Anderson DW, Karns SA, Brown PA 2001. Traditional versus hazard analysis and critical control point‐based inspection: results from a poultry slaughter project. Journal of Food Protection 64: 826–832.

- Centre for Reviews and Dissemination (CRD) 2008. Systematic Reviews: CRD's Advice for Undertaking Reviews in Health Care. York: Centre for Reviews and Dissemination.

- Cohen J 1960. A coefficient of agreement for nominal scales. Educational and Psychological Measurement 20: 37–46.

- Corantin H, Quessy S, Gaucher M, Lessard L, Leblanc D, Houde A 2005. Effectiveness of steam pasteurization in controlling microbiological hazards of cull cow carcasses in a commercial plant. Canadian Journal of Veterinary Research 69: 200–207.

- De Buck E, Van Velde SD 2010. BET 2: Potato peel dressings for burn wounds. Emergency Medicine Journal 27: 55–56.

- Doust JA, Pietrzak E, Sanders S, Glasziou PP 2005. Identifying studies for systematic reviews of diagnostic tests was difficult due to the poor sensitivity and precision of methodologic filters and the lack of information in the abstract. Journal of Clinical Epidemiology 58: 444–449.

- Edwards P, Clarke M, DiGuiseppi C, Pratap S, Roberts I, Wentz R 2002. Identification of randomized controlled trials in systematic reviews: accuracy and reliability of screening records. Statistics in Medicine 21: 1635–1640. DOI:10.1002/sim.1190.

- Ganann R, Ciliska D, Thomas H 2010. Expediting systematic reviews: methods and implications of rapid reviews. Implementation Science 5: 56. DOI:10.1186/1748-5908-5-56.

- Ghafir Y, China B, Korsak N, Dierick K, Collard JM, Godard C, Zutter L, Daube G 2005. Belgian surveillance plans to assess changes in Salmonella prevalence in meat at different production stages. Journal of Food Protection 68: 2269–2277.

- Grant MJ, Booth A 2009. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Information and Libraries Journal 26: 91–108. DOI:10.1111/j.1471-1842.2009.00848.x.

- Greig JD, Waddell L, Wilhelm B, Wilkins W, Bucher O, Parker S, Rajić A 2012. The efficacy of interventions applied during primary processing on contamination of beef carcasses with Escherichia coli: A systematic review‐meta‐analysis of the published research. Food Control 27: 385–397. DOI:10.1016/j.foodcont.2012.03.019.

- Harker J, Kleijnen J 2012. What is a rapid review? A methodological exploration of rapid reviews in health technology assessments. International Journal of Evidence‐Based Healthcare 10: 397–410.

- Hemingway P, Brereton N 2009. What is a Systematic Review? London: Hayward Medical Communications.

- Khangura S, Konnyu K, Cushman R, Grimshaw J, Moher D 2012. Evidence summaries: the evolution of a rapid review approach. Systematic Reviews 1: 10. DOI:10.1186/2046-4053-1-10.

- Lavis J, Davies HTO, Oxman A, Denis J, Golden‐Biddle K, Ferlie E 2005. Towards systematic reviews that inform health care management and policy‐making. Journal of Health Services Research and Policy 10(SUPPL. 1): 35–48. DOI:10.1258/1355819054308549.

- National Collaborating Centre for Methods and Tools 2012. Rapid Reviews of Research Evidence. McMaster University: Hamilton, ON. Available from: http://www.nccmt.ca/registry/view/eng/116.html [2015, 02/05].

- Oermann MH, Hays JC 2015. Writing for Publication in Nursing. Third edn. Springer Publishing Company: New York, NY.

- Rajić A, Young I 2013. Knowledge Synthesis, Transfer and Exchange in Agri‐Food Public Health: A Handbook for Science‐to‐Policy Professionals. Canada: Guelph.

- Rose BE, Hill WE, Umholtz R, Ransom GM, James WO 2002. Testing for Salmonella in raw meat and poultry products collected at federally inspected establishments in the United States, 1998 through 2000. Journal of Food Protection 65: 937–947.

- Sargeant JM, Kelton DF, O'Connor AM 2014. Randomized controlled trials and challenge trials: design and criterion for validity. Zoonoses and Public Health 61(Suppl 1): 18–27. DOI:10.1111/zph.12126.

- Sargeant JM, O'Connor AM 2014. Introduction to systematic reviews in animal agriculture and veterinary medicine. Zoonoses and Public Health 61(Suppl 1): 3–9. DOI:10.1111/zph.12128.

- Sargeant JM, Torrence ME, Rajić A, O'Connor AM, Williams J 2006. Methodological quality assessment of review articles evaluating interventions to improve microbial food safety. Foodborne Pathogens and Disease 3: 447–456. DOI:10.1089/fpd.2006.3.447.

- Senders J 1976. The scientific journal of the future. The American Sociologist 11(August): 160–164.

- Thomas J, Newman M, Oliver S 2013. Rapid evidence assessments of research to inform social policy: taking stock and moving forward. Evidence and Policy 9: 5–27. DOI:10.1332/174426413X662572.

- United States Department of Agriculture, Food Safety and Inspection Service 2000. Nationwide young chicken microbiological baseline data collection program November 1999–October 2000. Available from: http://www.fsis.usda.gov/wps/wcm/connect/5fc8968b‐cba1‐4a38‐a36d‐9924b8deb21d/Baseline_Data_Young_Chicken.pdf?MOD=AJPERES/ [2015, 03/03].

- Van De Velde S, De Buck E, Dieltjens T, Aertgeerts B 2011. Medicinal use of potato‐derived products: conclusions of a rapid versus full systematic review. Phytotherapy Research 25: 787–788. DOI:10.1002/ptr.3356.

- Vlachojannis JE, Cameron M, Chrubasik S 2010. Medicinal use of potato‐derived products: a systematic review. Phytotherapy Research 24: 159–162.

- Watt A, Cameron A, Sturm L, Lathlean T, Babidge W, Blamey S, Facey K, Hailey D, Norderhaug I, Maddern G 2008a. Rapid reviews versus full systematic reviews: an inventory of current methods and practice in health technology assessment. International Journal of Technology Assessment in Health Care 24: 133–139.

- Watt A, Cameron A, Sturm L, Lathlean T, Babidge W, Blamey S, Facey K, Hailey D, Norderhaug I, Maddern G 2008b. Rapid versus full systematic reviews: validity in clinical practice? ANZ Journal of Surgery 78: 1037–1040. DOI:10.1111/j.1445-2197.2008.04730.x.

- Wilhelm BJ, Rajić A, Greig J, Waddell L, Trottier G, Houde A, Harris J, Borden LN, Price C 2011. A systematic review/meta‐analysis of primary research investigating swine, pork or pork products as a source of zoonotic hepatitis E virus. Epidemiology and Infection 39: 1–18. DOI:10.1017/S0950268811000677.
