Abstract

The Journal Impact Factor (JIF) is a single citation metric that is widely employed for ranking journals and choosing target journals, but it is also misused as a proxy for the quality of individual articles and the academic achievements of authors. This article analyzes Scopus-based publication activity on the JIF and overviews some of its numerous misuses, global initiatives to overcome the ‘obsession’ with impact factors, and emerging strategies to revise the concept of scholarly impact. The growing number of articles on the JIF, most of which are in English, reflects the interest of experts in journal editing and scientometrics in its uses, misuses, and options for overcoming related problems. Experts criticize the practice of displaying only JIF values on journal websites, as these averages do not reflect the skewed distribution of citations to individual articles. Emerging strategies suggest complementing the JIFs with citation plots and alternative metrics that reflect the use of individual articles in terms of downloads and the distribution of related information through social media and networking platforms. It is also proposed to revise the original formula of the JIF calculation and to embrace the concept of the impact and importance of individual articles. The latter largely depends on the ethical soundness of journal instructions, proper editing and structuring of articles, efforts to promote related information through social media, and endorsements by professional societies.

Keywords: Journal Impact Factor, Periodicals as Topic, Editorial Policies, Publishing, Publication Ethics, Science Communication

INTRODUCTION

The Journal Impact Factor (JIF) is the brainchild of Eugene Garfield, the founder of the Institute for Scientific Information, who devised this citation metric in 1955 to help librarians prioritize their purchases of the most important journals. The idea of quantifying ‘impact’ by counting citations led to the creation of prestigious journal rankings, which have been recorded annually in the Science Citation Index since 1961 (1). The JIFs are currently calculated annually by Thomson Reuters and published in the Journal Citation Reports (JCR).

The original formula of the JIF measures the average citation impact of the articles a journal has recently published. The numerator counts the citations received in a given year (a one-year citation window) by items the journal published during the 2 preceding years; the denominator counts the ‘citable’ items (typically research articles and reviews) published during those same 2 years. To receive a JIF, a journal must be accepted for coverage by Thomson Reuters citation databases, such as the Science Citation Index Expanded, and remain indexed for at least three years. Although no criteria have been publicized, influential new journals occasionally receive their first (partial) JIF after a shorter period of indexing in Thomson Reuters databases (2).
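The two-year calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used by Thomson Reuters: the function name `impact_factor`, the dictionary-based inputs, and all numbers are hypothetical, and the sketch assumes the number of citable items per year is already known (in practice, deciding which items count as ‘citable’ is itself a matter of debate).

```python
def impact_factor(citations: dict[int, int], citable_items: dict[int, int], year: int) -> float:
    """Two-year JIF-style ratio for `year` (hypothetical, simplified helper).

    citations[p]     -- citations received during `year` by items published in year p
    citable_items[p] -- number of citable items published in year p
    """
    window = (year - 1, year - 2)  # the two preceding publication years
    numerator = sum(citations.get(p, 0) for p in window)
    denominator = sum(citable_items.get(p, 0) for p in window)
    if denominator == 0:
        raise ValueError("no citable items in the two-year window")
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical journal: citations received in 2015 by its 2014 and 2013 items.
    jif_2015 = impact_factor(
        citations={2014: 300, 2013: 450},
        citable_items={2014: 120, 2013: 130},
        year=2015,
    )
    print(f"JIF 2015 = {jif_2015:.3f}")  # (300 + 450) / (120 + 130) = 3.000
```

Passing dictionaries keyed by publication year keeps the two-year window explicit, so the same helper can be reused for any target year.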

Thomson Reuters' citation databases were initially designed to serve the regional interests of their U.S. users. English-language sources were preferentially accepted for coverage, and the JIFs were published to compare the ‘importance’ of journals within a scientific discipline. Nonetheless, the JIFs have gradually become yardsticks for ranking scholarly journals worldwide, and their use has expanded far beyond the initial regional and disciplinary limits (3).

PUBLICATION ACTIVITY

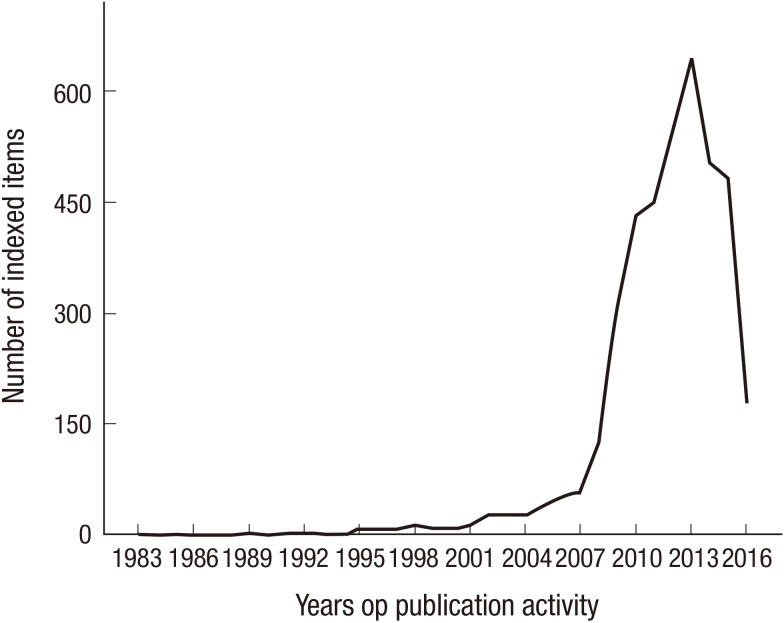

The uses and misuses of the JIFs are a hot topic in their own right. The dynamics and patterns of global interest in the issue can be explored with a snapshot analysis of searches through Scopus, which is the most comprehensive multidisciplinary database. As of November 6, 2016, there were 4,003 indexed items tagged with the term “Journal Impact Factor (JIF)” in their titles, abstracts, or keywords, with a date range of 1983 to 2016. A steady increase in the indexed items starts in 2000 (n = 10) and reaches its peak in 2013 (n = 645) (Fig. 1). The top 5 periodicals that actively publish relevant articles are PLOS One (n = 111), Scientometrics (n = 105), Nature (n = 50), J Informetrics (n = 41), and J Am Soc Inform Sci Technol (n = 26). The top 3 most prolific authors in the field are the following renowned experts in research evaluation and scientometrics: Bornmann L (n = 22), Smith DR (n = 17), and Leydesdorff L (n = 14). Among the most prolific countries, the U.S.A. is the clear leader with 904 published documents. Importantly, the vast majority of the articles cover issues in the medical sciences (n = 2,968, 74%). A large proportion of the items are editorials (n = 1,477, 37%). The vast majority of the documents are in English (n = 3,595), followed by those in Spanish (n = 167), German (n = 110), Portuguese (n = 79), and French (n = 39). Finally, the 2 most-cited articles on the JIF (cited 893 and 391 times) were authored by its creator, Eugene Garfield (1,4).

Fig. 1.

Number of Scopus-indexed items tagged with the term “Journal Impact Factor (JIF)” (as of November 6, 2016).

MISUSES

The JIFs and related journal rankings in the JCR have enormously influenced editorial policies across academic disciplines over the past few decades. The growing importance of journals published in the U.S.A. and Western Europe has marked a shift toward prioritizing English-language articles (5,6), sending a strong message to non-English periodicals: change the language, cover issues of global interest, or perish. A large number of articles from non-Anglophone countries across scientific disciplines, particularly those with a country name in the title, abstract, or keywords, unduly end up in low-impact periodicals and do not appeal to authors, who tend to cite references from high-impact journals (7,8).

Editors and publishers facing harsh competition in the publishing market are forced to change their priorities in line with the citation potential of scholarly articles and ‘hot’ topics (9). Several quantitative analyses have demonstrated that randomized controlled trials (10) and methodological articles (11) are highly cited, and that systematic reviews receive more citations than narrative ones (12). Relying on these analyses, most journal editors have begun rejecting ‘unattractive’ scientific topics and certain types of articles. High-impact journals, particularly those from the U.S., have boosted their JIFs by preferentially accepting authoritative submissions from ‘big names’ in science, systematic reviews and meta-analyses, reports on large cohorts and multicenter trials, and practice guidelines.

Some established publishers have also decided to limit or entirely ban items that receive few citations (e.g., short communications, preliminary scientific reports, case studies) (13). Clinical case reports, which have enormous educational value for medical students and physicians but low citation records, have fallen out of favor and disappeared from most high-impact medical journals. Many young researchers and students have also been ousted from the mainstream high-impact periodicals. All these subjective factors and the ‘obsession’ with impact factors have created a citation-related publication bias, with the discontinuation of journals that lack a JIF as an extreme consequence.

The ‘obsession’ with articles attracting abundant citations may also have triggered the current unprecedented proliferation of systematic reviews (14), most of which are of low quality and even harmful to the accumulation of scientific evidence (15,16,17).

Academic promotion, grant funding, and reward schemes across most developed countries and emerging scientific powers currently rely heavily on where, rather than what, authors publish. Getting an article published in a high-impact journal is fallaciously viewed as a prerequisite for academic promotion and research grant funding. Many researchers list their articles from a certain period of academic activity in their individual profiles along with the JIFs of the journals, although these values change dynamically (18). Likewise, ResearchGate™, the global scholarly networking platform, calculates publication activity scores that take the JIFs into account.

The JIFs of the target journals are still inappropriately employed by research evaluators as proxies for quality. In China, for example, bonuses paid to academics depend on the category of the journal in which they publish, which is determined by the journal's average JIF over the last three years (19). In the leading Chinese universities, distinctive monetary reward schemes push authors to submit to and publish more in Nature, Science, and other high-impact journals (20). An analysis of more than 130,000 research projects funded by the U.S. National Institutes of Health revealed that reviewers gave higher scores to proposals with potentially influential output, in terms of high-JIF publications and more citations, but not necessarily to innovative ideas (21).

The decades-long overemphasis on the JIFs has resulted in a grossly incorrect use of the term “impact factor” by newly emerged bogus agencies. These ‘predatory’ agencies claim to assess the impact of journals and calculate metrics that often mimic those of Thomson Reuters but do not take into account indexing in established databases or citations from indexed journals (22). Predatory journals often display misleading or fake metrics on their websites to influence inexperienced authors' choice of target journals (23).

GLOBAL INITIATIVES AGAINST MISUSES

To a certain degree, the decades-long global competition for getting and increasing JIFs has helped improve the quality of indexed periodicals, which has subsequently attracted professional interest and citations (24). However, the long absence of alternative metrics has led to the monopoly and misuses of the JIFs. Journals that publish one or a few highly cited articles, which boost their JIFs over the two succeeding years, have gained an advantage over competing periodicals (25). Regrettably, some journal editors have also resorted to coercive citation practices that unethically boosted their JIFs and adversely affected the whole field of scientometrics (26,27).
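The arithmetic behind this advantage is easy to reproduce. The sketch below assumes a hypothetical journal with 100 citable items in the two-year window, 99 of them cited twice and one cited 300 times. Because the outlier's citations enter the numerator in each year that the paper falls within the window, a single paper of this kind can lift the JIF in two consecutive years. All numbers are invented for illustration.

```python
from statistics import median


def mean_citations(counts: list[int]) -> float:
    """Arithmetic mean of per-item citation counts, i.e. a JIF-style average."""
    return sum(counts) / len(counts)


typical = [2] * 100              # hypothetical: every item cited twice
with_outlier = [2] * 99 + [300]  # same journal, but one item cited 300 times

print(mean_citations(typical))       # 2.0
print(mean_citations(with_outlier))  # 4.98
print(median(with_outlier))          # 2.0: the typical item is unchanged
```

The average more than doubles, although 99 of the 100 items are cited exactly as before.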

Additionally, a thorough analysis of 49 journals with impressive JIF increases (> 3) in 2013–2014 revealed manipulations involving shrinking publication output and decreasing the number of articles counted in the denominator of the JIF formula (28).
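How shrinking the denominator can inflate the JIF is shown by the toy example below. It assumes, hypothetically, that a journal simply stops publishing its rarely cited items while its well-cited items keep attracting the same citations; the function name `jif_from_items` and all figures are invented for illustration.

```python
def jif_from_items(citations_per_item: list[int]) -> float:
    """JIF-style ratio: total citations divided by the number of citable items."""
    if not citations_per_item:
        raise ValueError("at least one citable item is required")
    return sum(citations_per_item) / len(citations_per_item)


# Hypothetical two-year output: 100 well-cited items (5 citations each)
# and 100 rarely cited items (1 citation each).
full_output = [5] * 100 + [1] * 100

# The same journal after it stops publishing the rarely cited items.
shrunk_output = [c for c in full_output if c > 1]

print(jif_from_items(full_output))    # 600 / 200 = 3.0
print(jif_from_items(shrunk_output))  # 500 / 100 = 5.0
```

Total citations fall from 600 to 500, yet the ratio rises from 3.0 to 5.0, so a smaller output can masquerade as greater impact.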

Curiously, despite the seemingly simple methodology of calculating the JIF, values of metrics presented in the JCR often differ from those calculated by editors and publishers themselves (29).

All these and many other deficiencies of the JIF have prompted several campaigns against its monopoly and misuses. The San Francisco Declaration on Research Assessment (DORA), which was developed by a group of editors and publishers at the Annual Meeting of the American Society for Cell Biology in 2012, encouraged interested parties across all scientific disciplines to improve the evaluation of research output and to avoid relying on the JIFs as proxies for quality (30). The Declaration highlighted the importance of crediting research works on the basis of their scientific merit rather than the JIFs of the journals in which they appear. It also called for discontinuing the use of JIFs in grant funding and academic promotion. Organizations that issue journal metrics were urged to make their data publicly available, allowing unrestricted reuse and calculations by others.

A series of opinion pieces and comments on journal metrics recently published in Nature heralded a powerful new campaign against misuses of the JIFs (31). First, it was announced that several influential journals of the American Society for Microbiology would remove the JIFs from their websites (32). An analysis of the distribution of citations contributing to the JIFs of Nature, Science, and PLOS One emphasized that the average citation values did not reveal the real impact of most articles published in these journals. For example, 78% of Nature articles were cited less often than its latest impact factor of 38.1. Displaying the distribution of citations and drawing readers' attention to highly cited articles were considered more appropriate for assessing a journal's standing than simply publicizing the JIFs (33).
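The gap between an average and the ‘typical’ article can be checked with any list of per-article citation counts. The sketch below uses an invented, right-skewed distribution of 100 articles (not Nature's actual data) and reports the mean, which plays the role of the JIF, together with the share of articles cited less often than that mean.

```python
def share_below_mean(counts: list[int]) -> tuple[float, float]:
    """Return (mean, fraction of items cited strictly less often than the mean)."""
    mean = sum(counts) / len(counts)
    below = sum(1 for c in counts if c < mean)
    return mean, below / len(counts)


# Hypothetical skewed distribution: {citations per article: number of articles}
distribution = {0: 20, 1: 25, 2: 20, 3: 15, 5: 10, 10: 6, 60: 3, 150: 1}
counts = [c for c, n in distribution.items() for _ in range(n)]

mean, share = share_below_mean(counts)
print(f"mean = {mean:.1f}, below mean = {share:.0%}")  # mean = 5.5, below mean = 90%
```

In this invented example, the four articles cited 60 or 150 times contribute 330 of the 550 citations, which pulls the mean above 90% of the articles.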

The editors of Nature strongly advised against replacing the opinions of peer reviewers with citations and related quantitative metrics when evaluating grant applications and publications (34). Paying more attention to what is new and important for public health, rather than relying on surrogate metrics and the prestige of target journals, was considered a more justified approach to the academic promotion of authors (35).

Finally, ten principles of research evaluation (the Leiden Manifesto) were published in Nature to guide research managers in using a combination of quantitative and qualitative tools (36). The Leiden Manifesto called for protecting locally relevant research, which may be published in non-English and low-impact media, particularly in the social sciences and humanities. It pointed to differences in publication and citation practices across disciplines that should not confound crediting and promotion systems; books, national-language literature, and conference papers can be highly influential sources in some fields.

EMERGING ALTERNATIVE FACTORS OF THE IMPACT

The digitization of scholarly publishing has offered numerous ways to increase the discoverability of individual articles and improve knowledge transfer (Box 1). The systematization of searches through digital platforms and databases has made discoverability the main determinant of scholarly influence. Authors and editors alike are currently advised to carefully edit the titles, abstracts, and keywords of their articles to increase discoverability and related impact (37). Importantly, a recent analysis of 500 highly cited articles in the field of knowledge management revealed a positive correlation between the number of keywords and citations (38). The same study pointed to the value of the numbers of references and pages for predicting citations.

Box 1. Factors of the journal impact and importance

Discoverability of journal articles by search engines, achieved by properly structuring titles, abstracts, and keywords

Citations received by journal articles over a certain period of time from Scopus or Web of Science databases

Downloads of journal articles within a certain period of time

Attention to the journal by social media (e.g., Twitter, Facebook), blogs, newspapers, and magazines

Journal endorsements and support by professional societies

Completeness and adherence to ethical standards in the journal instructions

Experts advocate shifting from traditional JIF-based evaluations to schemes combining qualitative and quantitative metrics for scholarly sources (39). Citation counts from prestigious citation databases, such as Web of Science and Scopus, and related arithmetic metrics will remain the mainstays of journal ranking in the years to come (40). Following a recent debate over the distribution of citations contributing to the JIFs, citation metrics are likely to be accompanied by plots depicting the most and least cited items (41).
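A citation plot of this kind can be as simple as a frequency table of per-item citation counts. The following sketch assumes that per-item counts for the JIF window are available (e.g., exported from a citation database). It bins the counts, pools every item at or above a cap into a single top bin, and prints a text bar chart. The function names and the cap of 10 are illustrative choices, not a published standard.

```python
from collections import Counter


def citation_distribution(counts: list[int], cap: int = 10) -> list[tuple[str, int]]:
    """Number of items per citation count; counts >= cap are pooled as 'cap+'."""
    binned = Counter(min(c, cap) for c in counts)
    return [(f"{k}+" if k == cap else str(k), binned.get(k, 0)) for k in range(cap + 1)]


def print_distribution(rows: list[tuple[str, int]]) -> None:
    """Text bar chart with one '#' per item."""
    for label, n in rows:
        print(f"{label:>4} | {'#' * n} {n}")


if __name__ == "__main__":
    # Hypothetical per-item citation counts for one JIF window.
    sample = [0, 0, 0, 1, 1, 2, 2, 2, 3, 4, 6, 9, 14, 27, 88]
    print_distribution(citation_distribution(sample, cap=10))
```

Pooling the long tail into one bin keeps the chart readable. A list of the individual items in that top bin would then identify the most cited articles that drive the average.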

An argument in favor of a combined approach to impact particularly concerns the use of individual articles that are published in journals with low or declining JIFs but are still actively downloaded and distributed among professionals, most of whom read but never publish papers (42,43). The combined approach has already been embraced by Elsevier, which displays the top 25 most downloaded articles along with citation metrics from Web of Science and Scopus on its journal websites. Although downloads and citations are not linearly correlated, downloads reveal the interest of the professional community and may predict citations (44,45).

Some established publishers, such as Nature Publishing Group and Elsevier, have gone further and started providing readers with more inclusive information about the use of individual articles by combining citation metrics and downloads with altmetric scores (46). The altmetric score is a relatively new multidimensional metric, proposed in 2010 to capture the broad online attention paid to research output by social media, blogs, and scholarly networking platforms (47). Essentially, the enhanced online visibility of articles may attract views, downloads, bookmarks, likes, and comments on various networking platforms. Pilot studies of Facebook “likes” and Twitter mentions have pointed to an association between social media attention and traditional impact metrics, such as citations and downloads, in psychology and psychiatry (48,49) and emergency medicine (50). Although no such association has been reported across many other fields of science, wider distribution of journal information through social media holds promise for distinguishing popular and scientifically important research output (51,52,53).

With the rapid growth of numerous online publication outlets, reaching relevant readers and evaluators is becoming a critical factor of impact. Emerging evidence suggests that periodicals affiliated with and endorsed by relevant professional societies gain an advantage and attract more citations (54). Affiliation with a professional society helps a journal maintain a flow of relevant submissions from the membership and the continuous support of the scientific community, both of which are valued by prestigious indexing services. There are even suggestions to preferentially submit articles to journals supported by professional societies, regardless of their JIFs. Such an approach can be strategically important for circumventing substandard open-access periodicals (55,56).

Finally, several studies have examined the relationship between the JIF and the completeness of journal instructions with regard to research and publication ethics (57,58,59). In a landmark comparative analysis of the instructions of 60 medical journals with JIFs above 10 (e.g., Nature, Science, Lancet) and below 10 (e.g., Gut, Archives of Internal Medicine, Pain) for the year 2009, ethical considerations scored significantly better in periodicals with higher JIFs (57). The results pointed to the importance of mentioning research reporting guidelines, such as STrengthening the Reporting of OBservational studies in Epidemiology (STROBE) and Consolidated Standards of Reporting Trials (CONSORT), conflicts of interest, local ethics committee approval, and patient consent for increasing the impact and attractiveness of journals for authors. Similar results were obtained in a subsequent analysis of the instructions of radiological journals (58), but not of medical laboratory journals (59). Despite the differences across journals, it can be concluded that upgrading ethical instructions in line with the examples of flagship multidisciplinary and specialist journals is rewarding in terms of attracting the best possible and most complete research reports (60).

CONCLUSION

The lasting debates over the JIF, its uses, and misuses highlight several points of interest to all stakeholders of science communication. First, authors are currently offered numerous options for choosing the best target journals for their research. The JIFs may influence their choices along with other journal metrics and emerging alternative factors of impact. Authors should realize that not all journals with JIFs meet high ethical standards, and that some periodicals without JIFs but with the support of professional societies can be better platforms for relevant research. Journals accepting locally important articles in English or national languages can still be influential and useful (61). Journal editors have an obligation to their authors to distribute relevant information widely to increase the use of the articles and attract citations. Social media and scholarly networking platforms can be instrumental in this regard. Regularly revising and upgrading journal instructions may also improve the structure and ethical soundness of publications and translate into better discoverability and attractiveness for indexing services (60). Editors who aim to boost their JIFs should not downplay the importance of publishing different types of articles, regardless of their citation chances. Manipulating the number of articles counted in the denominator of the JIF formula can hardly be considered the best service to authors.

Indexers of Thomson Reuters databases should respond to arguments pointing to the need to revise the original formula of the JIF (62,63). Notably, editorials and letters, the so-called noncitable items that have long been excluded from the denominator of the JIF, have changed in their influence over the past decades. These items, particularly in modern biomedicine, contain long lists of references that affect JIF calculations in many ways. It should also be stressed that the lack of transparency in JIF calculations, which is partly due to the lack of open access to the citations tracked by Thomson Reuters databases (64), damages the reputation of the JIF as a reliable and reproducible scientometric tool.

Finally, research evaluators should consider the true impact of scholarly articles, which is determined by their novelty, methodological quality, ethical soundness, and relevance to the global and local scientific communities.

Footnotes

DISCLOSURE: Armen Yuri Gasparyan is an expert of Scopus Content Selection & Advisory Board (since 2015), former council member of the European Association of Science Editors and chief editor of European Science Editing (2011–2014). All other authors have no potential conflicts of interest to disclose.

AUTHOR CONTRIBUTION: Conceptualization: Gasparyan AY, Nurmashev B, Kitas GD. Data curation: Yessirkepov M, Udovik EE, Kitas GD. Visualization: Gasparyan AY, Yessirkepov M. Writing - original draft: Gasparyan AY. Writing - review & editing: Gasparyan AY, Nurmashev B, Yessirkepov M, Udovik EE, Baryshnikov AA, Kitas GD.

References

- 1. Garfield E. The history and meaning of the journal impact factor. JAMA. 2006;295:90–93. doi: 10.1001/jama.295.1.90.

- 2. Gurnhill G. PeerJ receives its first (partial) impact factor [Internet] [accessed 6 November 2016]. Available at https://peerj.com/blog/post/115284878055/our-first-partial-impact-factor/

- 3. Libkind AN, Markusova VA, Mindeli LE. Bibliometric indicators of Russian journals by JCR-science edition, 1995-2010. Acta Naturae. 2013;5:6–12.

- 4. Garfield E. Journal impact factor: a brief review. CMAJ. 1999;161:979–980.

- 5. Chen M, Zhao MH, Kallenberg CG. The impact factor of rheumatology journals: an analysis of 2008 and the recent 10 years. Rheumatol Int. 2011;31:1611–1615. doi: 10.1007/s00296-010-1541-z.

- 6. Bredan A, Benamer HT, Bakoush O. Why are journals from less-developed countries constrained to low impact factors? Libyan J Med. 2014;9:25774. doi: 10.3402/ljm.v9.25774.

- 7. Abramo G, D’Angelo CA, Di Costa F. The effect of a country’s name in the title of a publication on its visibility and citability. Scientometrics. 2016;109:1895–1909.

- 8. Tahamtan I, Safipour Afshar A, Ahamdzadeh K. Factors affecting number of citations: a comprehensive review of the literature. Scientometrics. 2016;107:1195–1225.

- 9. Nielsen MB, Seitz K. Impact factors and prediction of popular topics in a journal. Ultraschall Med. 2016;37:343–345. doi: 10.1055/s-0042-111209.

- 10. Zhao X, Guo L, Lin Y, Wang H, Gu C, Zhao L, Tong X. The top 100 most cited scientific reports focused on diabetes research. Acta Diabetol. 2016;53:13–26. doi: 10.1007/s00592-015-0813-1.

- 11. Van Noorden R, Maher B, Nuzzo R. The top 100 papers. Nature. 2014;514:550–553. doi: 10.1038/514550a.

- 12. Bhandari M, Montori VM, Devereaux PJ, Wilczynski NL, Morgan D, Haynes RB. Hedges Team. Doubling the impact: publication of systematic review articles in orthopaedic journals. J Bone Joint Surg Am. 2004;86-A:1012–1016.

- 13. Howard L, Wilkinson G. Impact factors of psychiatric journals. Br J Psychiatry. 1997;170:109–112. doi: 10.1192/bjp.170.2.109.

- 14. Uthman OA, Okwundu CI, Wiysonge CS, Young T, Clarke A. Citation classics in systematic reviews and meta-analyses: who wrote the top 100 most cited articles? PLoS One. 2013;8:e78517. doi: 10.1371/journal.pone.0078517.

- 15. Page MJ, McKenzie JE, Kirkham J, Dwan K, Kramer S, Green S, Forbes A. Bias due to selective inclusion and reporting of outcomes and analyses in systematic reviews of randomised trials of healthcare interventions. Cochrane Database Syst Rev. 2014:MR000035. doi: 10.1002/14651858.MR000035.pub2.

- 16. Zhang J, Wang J, Han L, Zhang F, Cao J, Ma Y. Epidemiology, quality, and reporting characteristics of systematic reviews and meta-analyses of nursing interventions published in Chinese journals. Nurs Outlook. 2015;63:446–455.e4. doi: 10.1016/j.outlook.2014.11.020.

- 17. Roush GC, Amante B, Singh T, Ayele H, Araoye M, Yang D, Kostis WJ, Elliott WJ, Kostis JB, Berlin JA. Quality of meta-analyses for randomized trials in the field of hypertension: a systematic review. J Hypertens. 2016;34:2305–2317. doi: 10.1097/HJH.0000000000001094.

- 18. Bavdekar SB, Save S. Choosing the right journal for a scientific paper. J Assoc Physicians India. 2015;63:56–58.

- 19. Suo Q. Chinese academic assessment and incentive system. Sci Eng Ethics. 2016;22:297–299. doi: 10.1007/s11948-015-9643-3.

- 20. Shao JF, Shen HY. Research assessment and monetary rewards: the overemphasized impact factor in China. Res Eval. 2012;21:199–203.

- 21. Li D, Agha L. Research funding. Big names or big ideas: do peer-review panels select the best science proposals? Science. 2015;348:434–438. doi: 10.1126/science.aaa0185.

- 22. Sohail S. Of predatory publishers and spurious impact factors. J Coll Physicians Surg Pak. 2014;24:537–538.

- 23. Beall J. Dangerous predatory publishers threaten medical research. J Korean Med Sci. 2016;31:1511–1513. doi: 10.3346/jkms.2016.31.10.1511.

- 24. Abdullgaffar B. Impact factor in cytopathology journals: what does it reflect and how much does it matter? Cytopathology. 2012;23:320–324. doi: 10.1111/j.1365-2303.2011.00950.x.

- 25. Dimitrov JD, Kaveri SV, Bayry J. Metrics: journal’s impact factor skewed by a single paper. Nature. 2010;466:179. doi: 10.1038/466179b.

- 26. Wilhite AW, Fong EA. Scientific publications. Coercive citation in academic publishing. Science. 2012;335:542–543. doi: 10.1126/science.1212540.

- 27. Chorus C, Waltman L. A large-scale analysis of impact factor biased journal self-citations. PLoS One. 2016;11:e0161021. doi: 10.1371/journal.pone.0161021.

- 28. Kiesslich T, Weineck SB, Koelblinger D. Reasons for journal impact factor changes: influence of changing source items. PLoS One. 2016;11:e0154199. doi: 10.1371/journal.pone.0154199.

- 29. Undas A. The 2015 impact factor for Pol Arch Med Wewn: comments from the editor‑in‑chief. Pol Arch Med Wewn. 2016;126:453–456. doi: 10.20452/pamw.3515.

- 30. San Francisco declaration on research assessment: putting science into the assessment of research [Internet] [accessed 6 November 2016]. Available at http://www.ascb.org/files/SFDeclarationFINAL.pdf?c95f4b.

- 31. Wilsdon J. We need a measured approach to metrics. Nature. 2015;523:129. doi: 10.1038/523129a.

- 32. Callaway E. Beat it, impact factor! Publishing elite turns against controversial metric. Nature. 2016;535:210–211. doi: 10.1038/nature.2016.20224.

- 33. Time to remodel the journal impact factor. Nature. 2016;535:466. doi: 10.1038/535466a.

- 34. A numbers game. Nature. 2015;523:127–128. doi: 10.1038/523127b.

- 35. Benedictus R, Miedema F, Ferguson MW. Fewer numbers, better science. Nature. 2016;538:453–455. doi: 10.1038/538453a.

- 36. Hicks D, Wouters P, Waltman L, de Rijcke S, Rafols I. Bibliometrics: the Leiden Manifesto for research metrics. Nature. 2015;520:429–431. doi: 10.1038/520429a.

- 37. Bekhuis T. Keywords, discoverability, and impact. J Med Libr Assoc. 2015;103:119–120. doi: 10.3163/1536-5050.103.3.002.

- 38. Akhavan P, Ebrahim NA, Fetrati MA, Pezeshkan A. Major trends in knowledge management research: a bibliometric study. Scientometrics. 2016;107:1249–1264.

- 39. Scarlat MM, Mavrogenis AF, Pećina M, Niculescu M. Impact and alternative metrics for medical publishing: our experience with International Orthopaedics. Int Orthop. 2015;39:1459–1464. doi: 10.1007/s00264-015-2766-y.

- 40. Bollen J, Van de Sompel H, Hagberg A, Chute R. A principal component analysis of 39 scientific impact measures. PLoS One. 2009;4:e6022. doi: 10.1371/journal.pone.0006022.

- 41. Blanford CF. Impact factors, citation distributions and journal stratification. J Mater Sci. 2016;51:10319–10322.

- 42. Haitjema H. Impact factor or impact? Ground Water. 2015;53:825. doi: 10.1111/gwat.12376.

- 43. Gibson R. Considerations on impact factor and publications in molecular imaging and biology. Mol Imaging Biol. 2015;17:745–747. doi: 10.1007/s11307-015-0893-x.

- 44. Della Sala S, Cubelli R. Downloads as a possible index of impact? Cortex. 2013;49:2601–2602. doi: 10.1016/j.cortex.2013.11.002.

- 45. Gregory AT, Denniss AR. Impact by citations and downloads: what are heart, lung and circulation’s top 25 articles of all time? Heart Lung Circ. 2016;25:743–749. doi: 10.1016/j.hlc.2016.05.108.

- 46. Rhee JS. High-impact articles-citations, downloads, and altmetric score. JAMA Facial Plast Surg. 2015;17:323–324. doi: 10.1001/jamafacial.2015.0869.

- 47. Brigham TJ. An introduction to altmetrics. Med Ref Serv Q. 2014;33:438–447. doi: 10.1080/02763869.2014.957093.

- 48. Ringelhan S, Wollersheim J, Welpe IM. I like, I cite? Do facebook likes predict the impact of scientific work? PLoS One. 2015;10:e0134389. doi: 10.1371/journal.pone.0134389.

- 49. Quintana DS, Doan NT. Twitter article mentions and citations: an exploratory analysis of publications in the American Journal of Psychiatry. Am J Psychiatry. 2016;173:194. doi: 10.1176/appi.ajp.2015.15101341.

- 50. Barbic D, Tubman M, Lam H, Barbic S. An analysis of altmetrics in emergency medicine. Acad Emerg Med. 2016;23:251–268. doi: 10.1111/acem.12898.

- 51. Amir M, Sampson BP, Endly D, Tamai JM, Henley J, Brewer AC, Dunn JH, Dunnick CA, Dellavalle RP. Social networking sites: emerging and essential tools for communication in dermatology. JAMA Dermatol. 2014;150:56–60. doi: 10.1001/jamadermatol.2013.6340.

- 52. Cosco TD. Medical journals, impact and social media: an ecological study of the Twittersphere. CMAJ. 2015;187:1353–1357. doi: 10.1503/cmaj.150976.

- 53. Tonia T, Van Oyen H, Berger A, Schindler C, Künzli N. If I tweet will you cite? The effect of social media exposure of articles on downloads and citations. Int J Public Health. 2016;61:513–520. doi: 10.1007/s00038-016-0831-y.

- 54. Karageorgopoulos DE, Lamnatou V, Sardi TA, Gkegkes ID, Falagas ME. Temporal trends in the impact factor of European versus USA biomedical journals. PLoS One. 2011;6:e16300. doi: 10.1371/journal.pone.0016300.

- 55. Putirka K, Kunz M, Swainson I, Thomson J. Journal impact factors: their relevance and their influence on society-published scientific journals. Am Mineral. 2013;98:1055–1065.

- 56. Romesburg HC. How publishing in open access journals threatens science and what we can do about it. J Wildl Manage. 2016;80:1145–1151.

- 57. Charlier P, Bridoux V, Watier L, Ménétrier M, de la Grandmaison GL, Hervé C. Ethics requirements and impact factor. J Med Ethics. 2012;38:253–255. doi: 10.1136/medethics-2011-100174.

- 58. Charlier P, Huynh-Charlier I, Hervé C. Ethics requirements and impact factor in radiological journals. Acta Radiol. 2016;57:NP3. doi: 10.1177/0284185115626482.

- 59. Horvat M, Mlinaric A, Omazic J, Supak-Smolcic V. An analysis of medical laboratory technology journals’ instructions for authors. Sci Eng Ethics. 2016;22:1095–1106. doi: 10.1007/s11948-015-9689-2.

- 60. Gasparyan AY, Ayvazyan L, Gorin SV, Kitas GD. Upgrading instructions for authors of scholarly journals. Croat Med J. 2014;55:271–280. doi: 10.3325/cmj.2014.55.271.

- 61. Gasparyan AY, Hong ST. Celebrating the achievements and fulfilling the mission of the Korean Association of Medical Journal Editors. J Korean Med Sci. 2016;31:333–335. doi: 10.3346/jkms.2016.31.3.333.

- 62. Sewell JM, Adejoro OO, Fleck JR, Wolfson JA, Konety BR. Factors associated with the journal impact factor (JIF) for urology and nephrology journals. Int Braz J Urol. 2015;41:1058–1066. doi: 10.1590/S1677-5538.IBJU.2014.0497.

- 63. Liu XL, Gai SS, Zhou J. Journal impact factor: do the numerator and denominator need correction? PLoS One. 2016;11:e0151414. doi: 10.1371/journal.pone.0151414.

- 64. Fernandez-Llimos F. Bradford’s law, the long tail principle, and transparency in journal impact factor calculations. Pharm Pract (Granada) 2016;14:842. doi: 10.18549/PharmPract.2014.03.842.