Abstract

The continuing enhancement of the surgical environment in the digital age has led to a number of innovations being highlighted as potential disruptive technologies in the surgical workplace. Augmented reality (AR) and virtual reality (VR) are becoming increasingly available, accessible and, importantly, affordable; hence their application in healthcare to enhance the medical use of data is certain. Whether it relates to anatomy, intraoperative surgery, or post-operative rehabilitation, applications are already being investigated for their role in the surgeon’s armamentarium. Here we provide an introduction to the technology and the potential areas of development in the surgical arena.

Keywords: Augmented reality (AR), virtual reality (VR), surgery

Introduction

Surgeons are regularly on the lookout for technologies that will enhance their operating environment. They are often the early adopters of technologies that allow their field to offer a better surgical and patient experience. Examples of such innovations include fibre-optics, which allowed for the advent of minimal access surgery, and robotic surgery, which led to the development of systems such as the da Vinci robot over a decade ago (1). These tools have historically come at a considerable cost but over the last decade have become cheaper and increasingly available. New computational paradigms are emerging with rapid advancement and miniaturisation of real-time visualisation platforms. Smartphones are now commonplace, with processing power rivalling that of desktop computers. The near ubiquitous use of smartphones by doctors has driven an increasing use of technology in healthcare (2). Medical applications (3) and instant access to web-based resources now guide clinical practice. This has allowed for the development of powerful wearable technologies that can provide high fidelity audio-visual data to the surgeon whilst operating. The emerging role and integration of virtual reality (VR) and augmented reality (AR) in healthcare is ripe for translation into this data-rich field and warrants consideration for future applications to enhance the surgical experience. This viewpoint aims to review the applications, limitations and legal pitfalls of these devices across surgical specialties and imagines what the future surgical landscape may reveal.

Augmented reality

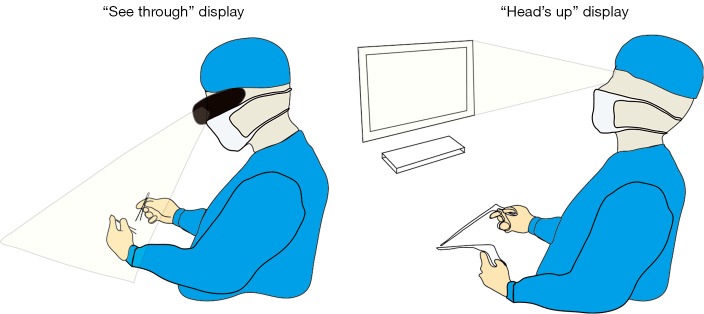

AR is the addition of artificial information to one or more of the senses that allows the user to perform tasks more efficiently. This can be achieved using superimposed images, video or computer generated models. Examples include the AccuVein (AccuVein Inc., NY, USA), a projector-like device that displays a map of the vasculature on the skin surface (Figure 1) (4), and Google Glass (GG), a head mounted display (HMD) with generated objects superimposed onto real-time images (Figure 2). The feedback can also involve auditory augmentation, haptic feedback, smell and taste (5). Augmentation of reality has been used in surgery for many years, especially in neurosurgery, where stereotactic surgery has combined radiographic scan data, stored or acquired in real time, to allow accurate and safer “neuronavigation” (6). Traditionally this has been through a “heads-up” visualisation method (Figure 3) where the visualisation data is on a screen, as commonly seen in smartphone based broadcasting and video games (7). Advances have been made in image registration and video tracking that allow displays to track points in the field and match the orientation and scale of the device to give accurate superimposition (8). This application is of greater benefit in real surgery than VR devices as the technology can be “see through”. The concept of a headset with superimposed display was introduced by Ivan Sutherland to the military in 1965 (9) and consisted of a head worn display and an image generation subsystem. These were cumbersome, heavy and expensive; hence the future implementation of these technologies in healthcare needs to be cost-effective, versatile and comfortable to ensure acceptance (10).

Figure 1.

Use of AccuVein to image veins on a patient.

Figure 2.

Use of Google Glass in theatre.

Figure 3.

Difference between see-through display and heads-up display.
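The superimposition step described above, in which tracked points are used to match the orientation and scale of an overlay to the camera view, can be illustrated with a minimal two-dimensional sketch. This is not the method of the cited work (8): clinical systems register in three dimensions and must cope with noise, occlusion and tissue deformation. The function names `fit_similarity` and `overlay` and the marker coordinates are hypothetical and chosen only for illustration.

```python
import cmath
import math

def fit_similarity(model_pts, camera_pts):
    """Least-squares scale, rotation and shift mapping model_pts onto camera_pts.

    Points are (x, y) tuples. Each point is treated as a complex number and
    the fit has the form w = a * z + b, with complex a (scale and rotation)
    and complex b (shift).
    """
    z = [complex(x, y) for x, y in model_pts]
    w = [complex(x, y) for x, y in camera_pts]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    a = num / den
    b = w_mean - a * z_mean
    return a, b

def overlay(point, a, b):
    """Map one model point into camera coordinates."""
    mapped = a * complex(*point) + b
    return mapped.real, mapped.imag

# Hypothetical fiducial markers: positions in the pre-operative model and
# the positions at which the camera sees the same markers.
model = [(0, 0), (1, 0), (0, 1)]
camera = [(10, 5), (10, 7), (8, 5)]

a, b = fit_similarity(model, camera)
scale = abs(a)                             # magnification of the overlay
angle_deg = math.degrees(cmath.phase(a))   # rotation of the overlay
```

Once `a` and `b` have been estimated from a few tracked markers, any other point in the model (for example a vessel outline) can be passed through `overlay` to find where it should be drawn on the live image.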

A refreshed interest in accessible AR came after the announcement of GG, which gained publicity until January 2015, when production ceased (11). This technology benefited from a lightweight wearable superimposed viewing screen, a high resolution video camera and features similar to those seen on a smartphone, such as wireless and cloud accessibility (12). The open source development platform for the device allowed creative medical and surgical applications, and subsequently a number of other similar devices specific for surgical enhancement have been developed (13). Trials of GG in other surgical specialties have demonstrated good clinician satisfaction and great optimism for its integration into surgical practice (14). It is clear that there are many exciting opportunities and applications of AR to surgical healthcare.

Virtual reality

VR generates an immersive, completely artificial computer-simulated image and environment with real-time interaction. VR has been used for endoscopic training and assessment for more than a decade (15). One of the earliest platforms was Minimally Invasive Surgical Trainer-Virtual Reality (MIST-VR) (Mentice Medical Simulation, Gothenburg, Sweden) for endoscopic training (16). A recent meta-analysis of randomised controlled trials showed a reduction in operative time and error rate, and an improvement in accuracy, when VR training is employed for new trainees with no prior experience or used to supplement standard laparoscopic training (17). VR 3D technology is being incorporated into simulation-based training. This technology would surpass the fidelity of current distance learning packages and 2-dimensional videos by creating a near true experience from the point of view of the operator. An example of this is work funded by the MOVEO Foundation, which uses the Oculus Rift platform to put trainees into the virtual view of the operating surgeon. This provides the viewer with a first-person view of the procedure being performed. The MOVEO Foundation has produced videos of several orthopaedic procedures (18). Oculus Rift is one of the first mass-produced head-mounted VR devices; recently released, it is particularly popular amongst developers and has strong financial backing from Facebook (19).

Many other headsets are being developed, which means user support will increase as the installed base is established (Table 1). Sony’s VR headset is likely to be one of the biggest platforms into the virtual world due to its low price point and existing user base of PlayStation 4 owners. HTC Vive offers a higher quality immersive environment and benefits from virtual space tracking, which allows participants to move around a virtual room provided real living space is available. Most of the VR devices offer some tools to interact with the virtual environment, and those familiar with endoscopic and robotic surgery may find these peripherals intuitive. The fidelity of the equipment may need to be tailored to surgical simulation to provide the most immersive experience. However, what is unique about the current vogue is that the consumer cost is accessible, and the technology is therefore in a good position to succeed. This may eventually form the cornerstone of future assessment, revalidation and continued training. Surgical training tools like ‘Touch Surgery’ that are currently on smartphone and tablet may find themselves on a VR platform and significantly change how we learn and practise surgery (20).

Table 1. Augmented and virtual reality devices.

| Devices | Specifications |
|---|---|
| Augmented reality devices | |
| Microsoft HoloLens | Windows 10, proprietary Microsoft Holographic Processing Unit, Intel 32 bit processor, 2 MP camera, four microphones, 2 GB RAM, 64 GB flash, Bluetooth, Wi-Fi |
| Sony SmartEyeglass | 3 MP camera, Bluetooth 3.0, 802.11 b/g Wi-Fi, 2.5 battery life. Compass, gyroscope, accelerometer, brightness sensor |
| Epson Moverio BT-20 | 0.3 MP camera; Bluetooth 3.0; 802.11 b/g/n Wi-Fi; around 6 hours of battery life; 8 GB internal memory; built-in GPS, compass, gyroscope and accelerometer |
| Google Glass | 5 MP camera with 720p video; Bluetooth; 802.11 b/g Wi-Fi; around 1 day of “typical use” on battery; 12 GB usable memory |
| Vuzix M100 Smart Glasses | 5 MP camera; 1,080p video; Bluetooth; 802.11 b/g/n Wi-Fi; around 6 hours of battery life (display off); 4 GB internal memory; built-in GPS |
| Recon Jet | “HD” camera; Bluetooth 4.0; ANT+; 802.11 b/g/n Wi-Fi; around 4–6 hours battery life; 8 GB internal memory; built-in GPS; accelerometer, gyroscope, magnetometer, pressure sensor and infrared sensor |
| Optinvent Ora-1 | 5 MP camera; 1,080p video recording; Bluetooth 4.0; 802.11 b/g/n Wi-Fi; 4 GB internal memory; ambient light sensor; photochromic lenses |
| GlassUp | Android and iOS-friendly; Bluetooth LE; 1-day battery life; accelerometer, compass, ambient light sensor, precision altimeter |
| Virtual reality devices | |
| Oculus Rift | 2,160×1,200 resolution, 110-degree field of view, 90 Hz refresh rate, positional tracking, built-in mic and audio |
| Sony PlayStation VR | 5.7-inch, 1,920×1,080 display; 120 fps refresh rate; 100-degree field of view; 360-degree tracking |
| HTC Vive | 2,160×1,200 resolution, 90 fps refresh rate, 110-degree field of view, positional tracking, location tracking at up to 15×15 feet |
| Samsung Gear VR | 2,560×1,440 resolution; 96 degree field of view; 60 Hz refresh rate. Supports head-mounted touch controls as well as Bluetooth controllers |
| FOVE VR | 5.8-inch, 1,440p, display; a 100+ degree field of view; 90 fps frame rate; and eye-tracking measured at 120 fps |
| Avegant Glyph | 1,280×800 resolution; 45 degree field of view; 120 Hz refresh rate |

VR, virtual reality.
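As a rough illustration of how the display specifications in Table 1 translate into rendering workload, the sketch below multiplies resolution by refresh rate to estimate the number of pixels each VR headset must draw per second. The resolution and rate figures are copied from Table 1 (FOVE VR is omitted because the table gives no pixel width); the function name `pixels_per_second` is our own, and the calculation ignores all rendering overheads, so it should be read as an order-of-magnitude guide only.

```python
# Display figures copied from Table 1: (width, height, refresh rate in Hz or fps).
HEADSETS = {
    "Oculus Rift": (2160, 1200, 90),
    "Sony PlayStation VR": (1920, 1080, 120),
    "HTC Vive": (2160, 1200, 90),
    "Samsung Gear VR": (2560, 1440, 60),
    "Avegant Glyph": (1280, 800, 120),
}

def pixels_per_second(width, height, rate):
    """Raw pixels drawn per second, ignoring any rendering overhead."""
    return width * height * rate

THROUGHPUT = {name: pixels_per_second(*spec) for name, spec in HEADSETS.items()}

if __name__ == "__main__":
    # List the headsets from highest to lowest raw pixel throughput.
    for name, value in sorted(THROUGHPUT.items(), key=lambda kv: -kv[1]):
        print(f"{name}: {value / 1e6:.1f} million pixels per second")
```

On these figures the headsets in Table 1 fall between roughly 120 and 250 million pixels per second, which gives a sense of the graphics hardware needed to drive them.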

Many companies acknowledge that the healthcare arena is ripe for AR and VR and inevitably there will be an increase in the number of drivers of this technology into clinical practice.

Opportunities in surgical healthcare

Anatomical evaluation

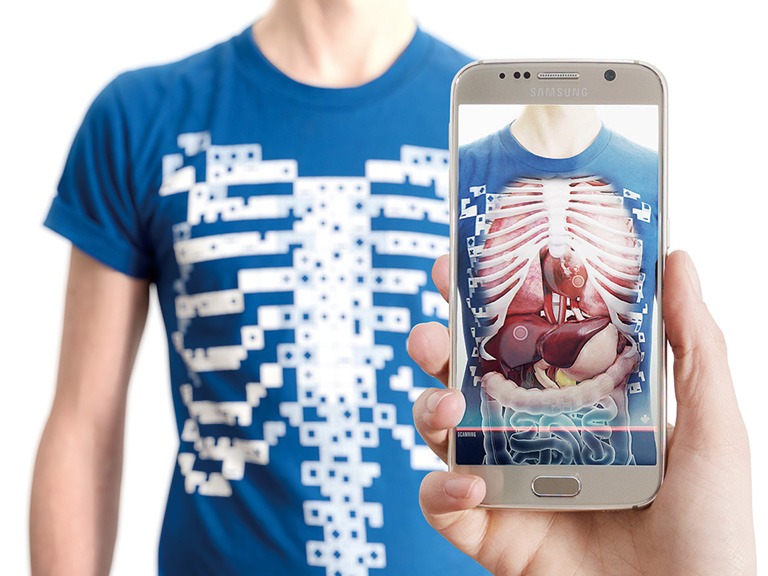

The traditional teaching of anatomy usually involves use of an anatomical atlas, time spent in the dissection room and fixed prosections (21). AR and VR are being used to deliver a better appreciation of structures in virtual or real space to ease the transition from the learning environment to the clinical environment. An example of this is using Microsoft Kinect to produce an interactive ‘digital mirror’ of the learner. This digital mirrored image is then augmented by anatomical datasets to visualise structures such as musculature, superimposed on the user’s own arm (Figure 4) (23). The technology is also being applied to clothing with pre-patterned codes on fabric which, when detected by camera software, superimpose animated anatomical images of the internal organs on the subject (Figure 5). These modalities enhance our appreciation of anatomy where cadaver resources have limited accessibility (24). The use of VR and AR allows interaction with anatomy in whole new ways where the only constraint is the computing power available. Dassault Systèmes are using computational modelling in relation to anatomy and VR, which allows clinical scientists to immerse themselves in the patient’s anatomy to problem solve pathologies (25). Virtual vascular endoscopy can generate endoluminal views of blood vessels that may be useful in pre-operative planning (26), especially for patients with significant atherosclerosis or aberrant anatomy. VR devices would allow for 360-degree visualisation and the ability to view multiple images simultaneously. Similar benefits have been realised in the visualisation of complex hand and lower limb fractures following 3D CT or MRI.

Figure 4.

4D Anatomy Augmented reality app of the heart and human body (22). Available online: http://www.asvide.com/articles/1271

Figure 5.

Curiscope T-shirt with visualisation of animated internal organs.

AR is also being used to evaluate dynamic anatomy in real time through use of digital ultrasound (US) (27). This simple application takes a reference point on the Doppler probe and, on a separate HMD, superimposes the US display on the subject. This technology allows visualisation of structures and blood flow that can aid the performance of invasive procedures. AR can supplement anatomy learning by superimposing radiological (CT or MRI) images on to a body and creating a direct view of spatial anatomy for the learner (28). Additionally, the use of haptic technology alongside this AR application provides the user with tactile feedback for appreciating the consistency of different tissue components (29). Overall, this represents an exciting area for VR and AR development in anatomical education.

Broadcasting and recording surgery

The first live global broadcast of the VR surgical environment has been successfully trialled at the Royal London Hospital and has gained much media attention in globalising medicine (20). The experience provides a full 360-degree view of the operating room from the head of the operating table, giving the viewer a patient’s rather than a surgeon’s perspective. An increasing number of educational meetings incorporate live surgery as part of the programme. As the cost of VR can be kept low with technology like Google Cardboard, we may see this as a more immersive forum for surgical education (20). In practical terms, the ability to interact with real life and digital elements makes AR the forerunner in usability for live surgery compared to fully immersive VR. Both can be used in medical education to display surgical procedures, but the simulated environments still fall short graphically of augmented images. According to the Lancet Commission on Global Surgery, 5 billion people do not have access to safe affordable surgery (30). Live operations using AR have been broadcast to a global community, with feasibility demonstrated for basic procedures in both Paraguay and Brazil (31). Virtual interactive presence and augmented reality (VIPAR) has been developed as a support solution that would allow a remote surgeon to project their hands into the display of another surgeon wearing a headset (32). Proximie is another platform that aims to introduce AR technology to surgeons in the developing world, allowing them to visualise real-time or recorded operations being performed by experts in other parts of the world so that a breadth of surgical experience can be disseminated. Both complex visual and verbal communication enable long-distance intra-operative guidance (33). It is possible to combine this with triggers such as 3D printed props, which can then launch specific instructional videos of surgeries and dissection (Figure 6). In an era of collaboration and sub-specialisation, AR may provide the much-needed contribution to educational advancement in the future.

Figure 6.

3D printed haptic models triggered augmented operative videos (34). Available online: http://www.asvide.com/articles/1272

Such data-rich resources require careful management of potentially confidential information. Applications on these platforms will permit the capture of images and video with digital archiving, and it is important that these are managed in ethical and secure ways. One possibility is to allow instantaneous upload of images or videos onto a secure encrypted health care server, which would improve the ease and quality of out-of-hours documentation and reduce the costs of medical illustration services. For example, in the acute assessment of burn injuries, these devices would enable the assessor to effectively evaluate the total body surface area affected and simultaneously obtain photography for clinical documentation. Video recording capabilities can help in documenting surgical procedures and real-time range of motion, which is beneficial in monitoring hand therapy progress. Currently, the imaging quality of such devices is considerably inferior to that offered by full frame image capture (35), and data storage of images and video in this way would require systems with massive capacity. This would require a considerable investment in health care information technology services.
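To give a sense of scale for the storage requirement mentioned above, the following back-of-the-envelope sketch estimates the archive size for recorded procedures. Every number in it (the 5 Mbit/s video bitrate, the two-hour procedure length and the 20 procedures per day) is an illustrative assumption rather than a figure from any cited study or device specification.

```python
def video_size_gb(bitrate_mbit_s, duration_hours):
    """Size of one recording in gigabytes (1 GB = 1,000 MB)."""
    seconds = duration_hours * 3600
    megabytes = bitrate_mbit_s * seconds / 8  # 8 bits per byte
    return megabytes / 1000

# Assumed values, for illustration only.
BITRATE_MBIT_S = 5        # hypothetical bitrate for compressed 720p video
PROCEDURE_HOURS = 2       # hypothetical average procedure length
PROCEDURES_PER_DAY = 20   # hypothetical workload for one hospital
DAYS_PER_YEAR = 365

per_procedure_gb = video_size_gb(BITRATE_MBIT_S, PROCEDURE_HOURS)
per_year_tb = per_procedure_gb * PROCEDURES_PER_DAY * DAYS_PER_YEAR / 1000

print(f"One procedure: {per_procedure_gb:.1f} GB")
print(f"One year: {per_year_tb:.2f} TB")
```

Under these assumptions a single recording occupies 4.5 GB and one hospital would accumulate roughly 33 TB of video per year before any backups or redundancy, which illustrates why routine recording would demand significant infrastructure.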

An enhanced medical information infrastructure could see development of patient records into AR and mobile applications. MedRef (36) enables AR systems to retrieve medical records by patient facial recognition. Electronic records of blood results, wound swabs, clinic notes, ward documentation, and medications can all be reviewed in conjunction with live vital signs using systems such as drchrono (37). Paperless recording and a to-do list on display would allow busy units to keep track of important tasks. This has the potential to improve clinical effectiveness and patient safety.

Taking this concept further into surgery, holoportation, an application of the Microsoft HoloLens, allows the user to see a projected hologram of a person occupying a different ‘space’ as though they were in the same room. This application has promise in the operating theatre, where an identical surgical space would enable two surgeons in two different environments to walk around the same holographic representation, facilitating interaction with surgeons worldwide. Procedures recorded at small, true or magnified scale can be navigated from any angle or orientation. The potential for recording-enabled HMDs to serve as a medical ‘black box’, similar to that used in the airline industry, would have important medico-legal implications for clinical practice.

Telementoring and education

Live feed and recorded data could be used for a number of applications such as trainee-trainer interactions in the context of work-based assessments. This would allow greater flexibility to learn and interact on one’s own terms. Sharing information and techniques between teaching hospitals and across continents is potentially very powerful (38). This form of telementoring has already demonstrated its effectiveness by using GG to facilitate a real-time consultation with a live feed of the surgical view and relevant radiological imaging (39).

The use of technology to provide off-site out of hours specialist consults is now a reality and would be an obvious transition for AR and VR. Out of hours surgery in the United Kingdom is primarily manned by a single junior member of staff. Telementoring would enable juniors to share a real time view of complex cases with an off-site senior member of the team for immediate advice. Specialities in which visual review is required, such as injury assessment, patient monitoring and trauma management, would benefit from this. Telementoring is a safe option for providing expert diagnosis and opinion, which can reduce the likelihood of mismanagement and unnecessary patient transfer (40). Likewise, multidisciplinary team (MDT) management requires careful coordination of different disciplines at one site and is often fraught with difficulties in accessibility (41). Using AR or VR in the teleconferencing set up has the potential to improve communication when specialists are off-site and to combine specialist care from different centres.

Operative benefits

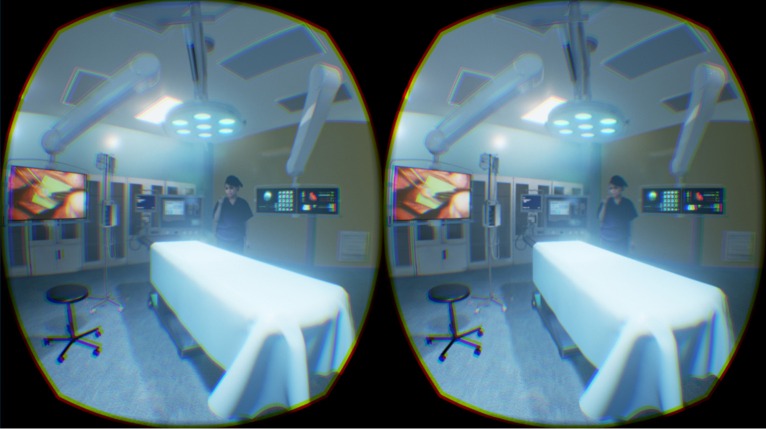

AR and VR have the potential to impact on surgery in a number of novel ways as discussed above, especially in the arena of surgical training in the virtual surgical environment (Figure 7). However, real-time enhancement of the surgical procedure remains a slightly tentative application. It has not yet been validated that surgery can be enhanced with AR, and in some instances it could be distracting. Some features of systems like GG may be useful: with voice activation, the operator could communicate beyond the theatre environment and retrieve images and test results without breaking scrub. Real-time updates regarding the progress of the trauma list would reduce unnecessary fasting of patients in the event of a delay in theatre.

Figure 7.

The virtual operating theatre.

Real-time augmentation of surgery usually involves the blending of acquired 3D imaging with surgical reference points. Novel applications of AR include projecting optimal port placement on the abdomen for laparoscopic surgery (42); identifying the position of sentinel nodes with 3D freehand single photon emission computed tomography (43,44); and combining this with near infrared (NIR) spectroscopy to provide visual guidance in lymph node dissection in cancer surgery (45). Specialised NIR devices have been developed for the detection of tissue vascularity using indocyanine green (ICG) dye (46). The use of ICG in lymphatic surgery is already well developed to help identify vessels and check their patency; hence the move from microscope to HMD is a likely future development (47). AR technology would also be able to seamlessly project diagnostic images intra-operatively for surgical planning to guide surgeons with optimal incisions and approach (39).

Several studies have demonstrated the use of AR to guide surgeons through intricate anatomy during minimally invasive surgery. Su et al. [2009] demonstrated the use of pre-operative imaging with intra-operative 3D overlay to guide robotic laparoscopic limited partial nephrectomy. Minimal access limited partial nephrectomy has been an area of interest for AR guided surgery (48). AR guidance allows for projection of 3D imaging onto the laparoscopic image to mark surgical incisions within the laparoscopic view (49).

AR has been used extensively in neurosurgical procedures. Use of pre-operative imaging to detect suitable vessels for extracranial-intracranial bypass allows for image injection into the operator’s microscope to guide intra-operative dissection. Similar techniques for AR have been utilised for intracranial arteriovenous malformation surgery (50,51).

Patient benefits

Companies are using the technology to provide patients with an augmented or virtual experience of what they can expect from surgery. Crisalix are promoting virtual aesthetics planning whereby, after obtaining the patient’s attributes, the software can virtually demonstrate the likely changes in aesthetics following procedures such as breast enhancement (52). This improves documentation, communication, and education of clinicians, which will have implications for quality of service and patient safety. In addition, a case study demonstrated that using Oculus Rift with distraction software reduced the level of pain experienced by a burns patient during occupational therapy (53). Other VR systems offered similar results for pain control in burns patients undergoing wound debridement (54).

Limitations of augmented reality

It is likely that AR will have an important role in image-based augmentation of the surgical environment. This will require increasingly powerful microcomputers to drive AR; their performance is currently limited but will improve with time. For the device to be a natural extension of the surgeon’s senses, it has to be light, mobile, comfortable and functional for potentially long periods of time. Therein lie the limitations of the technology at present: battery life is limited, devices are large and cables can be cumbersome. Such technology must continue to progress, and eventually, after several generations of development, these tools will become as common as surgical loupes.

As with electronic patient records, confidentiality and data management will be a major hurdle in the integration of recordable HMDs into medical practice. As clinicians, we have a duty of care and we should remain mindful of our ethical and legal obligations when using technology to review, store or transfer patient data. In 2004, the General Medical Council (GMC) highlighted the importance of appropriate security for electronically stored personal information (55). The holding organisation and a ‘Caldicott guardian’ are responsible for enforcing appropriate security of confidential information and protecting all personally identifiable data. In the United Kingdom, our current legal requirements are determined by common law, the Data Protection Act 1998 and the Freedom of Information Act 2000. The common law duty of confidentiality states that patient identifiable data should not be provided to third parties. Data that is regarded as confidential in nature, as witnessed during a doctor-patient relationship, is protected under the notion that the confidant (doctor) can only disclose information with consent from the confider (patient) (56). The NHS is also required to abide by the NHS code of practice for confidentiality. Since March 2013, there have been seven principles relating to the transfer and collection of identifiable information. All health professionals using and storing patient information would need to be aware of these seven principles. We must also consider the legal risk to healthcare trusts that do not make adequate attempts to prevent medical identity theft (57).

Encryption improves security but does not guarantee protection from data hijacking (58,59). It is important that whichever system is developed meets the standards for health care information governance (60). With so many different healthcare systems, it is likely that there will be many different AR and VR systems in use, with varying degrees of compatibility. As such, the healthcare market will capitalise on bringing accessible, price-sensitive software and hardware to market. It is anticipated that the global AR and VR healthcare industry will be worth $641 million by 2018 (61).

Conclusions

HMDs with either VR or AR will have great potential in the field of surgery. Their functionality has the potential for benefit in a range of clinical settings across the MDT and in medical education. First generation devices like GG have given us a glimpse of what AR can provide, and despite its demise our appetite for new head-mounted devices has not diminished. New innovations like Microsoft HoloLens and the emerging mass market of VR headsets indicate that these technologies will become familiar to surgeons, and inevitably we will find a way to integrate them into our day-to-day practice. The challenge of identifying compelling and valuable experiences for these modalities now begins, along with validation of their benefits in all aspects of surgical care. The digital surgical environment is about to change drastically.

Acknowledgements

None.

Footnotes

Conflicts of Interest: A Chan is co-founder of Occipital VR. The other authors have no conflicts of interest to declare.

References

- 1.Horgan S, Vanuno D. Robots in laparoscopic surgery. J Laparoendosc Adv Surg Tech A 2001;11:415-9. 10.1089/10926420152761950 [DOI] [PubMed] [Google Scholar]

- 2.Al-Hadithy N, Ghosh S. Smartphones and the plastic surgeon. J Plast Reconstr Aesthet Surg 2013;66:e155-61. 10.1016/j.bjps.2013.02.014 [DOI] [PubMed] [Google Scholar]

- 3.Amin K. Smartphone applications for the plastic surgery trainee. J Plast Reconstr Aesthet Surg 2011;64:1255-7. 10.1016/j.bjps.2011.03.026 [DOI] [PubMed] [Google Scholar]

- 4.Miyake RK, Zeman HD, Duarte FH, et al. Vein imaging: a new method of near infrared imaging, where a processed image is projected onto the skin for the enhancement of vein treatment. Dermatol Surg 2006;32:1031-8. [DOI] [PubMed] [Google Scholar]

- 5.Reidsma D, Katayose H, Nijholt A. editors. Advances in computer entertainment: 10th international conference, ACE 2013 Boekelo, The Netherlands, November 12-15, 2013, proceedings. Berlin: Springer, 2013. [Google Scholar]

- 6.Alberti O, Dorward NL, Kitchen ND, et al. Neuronavigation--impact on operating time. Stereotact Funct Neurosurg 1997;68:44-8. 10.1159/000099901 [DOI] [PubMed] [Google Scholar]

- 7.Klopfer E, Sheldon J. Augmenting your own reality: student authoring of science-based augmented reality games. New Dir Youth Dev 2010;2010:85-94. [DOI] [PubMed]

- 8.Azuma R, Baillot Y, Behringer R, et al. Recent Advances in Augmented Reality. IEEE Computers Graphics and Applications 2001;21:34-47. 10.1109/38.963459 [DOI] [Google Scholar]

- 9.Sutherland IE. The Ultimate Display. Proceedings of IFIP Congress 1965;2:506-8. [Google Scholar]

- 10.Curtis D, Mizell D, Gruenbaum P, et al. editors. Several Devils in the Details: Making an AR Application Work in the Airplane Factory. Natick: A. K. Peters Ltd., 1999. [Google Scholar]

- 11.Yu J, Ferniany W, Guthrie B, et al. Lessons Learned From Google Glass: Telemedical Spark or Unfulfilled Promise? Surg Innov 2016;23:156-65. 10.1177/1553350615597085 [DOI] [PubMed] [Google Scholar]

- 12.Tech specs. Google Glass Help. Available online: https://support.google.com/glass/answer/3064128?hl=en-GB

- 13.Mitrasinovic S, Camacho E, Trivedi N, et al. Clinical and surgical applications of smart glasses. Technol Health Care 2015;23:381-401. 10.3233/THC-150910 [DOI] [PubMed] [Google Scholar]

- 14.Muensterer OJ, Lacher M, Zoeller C, et al. Google Glass in pediatric surgery: an exploratory study. Int J Surg 2014;12:281-9. 10.1016/j.ijsu.2014.02.003 [DOI] [PubMed] [Google Scholar]

- 15.Coleman J, Nduka CC, Darzi A. Virtual reality and laparoscopic surgery. Br J Surg 1994;81:1709-11. 10.1002/bjs.1800811204 [DOI] [PubMed] [Google Scholar]

- 16.Wilson MS, Middlebrook A, Sutton C, et al. MIST VR: a virtual reality trainer for laparoscopic surgery assesses performance. Ann R Coll Surg Engl 1997;79:403-4. [PMC free article] [PubMed] [Google Scholar]

- 17.Nagendran M, Gurusamy KS, Aggarwal R, et al. Virtual reality training for surgical trainees in laparoscopic surgery. Cochrane Database Syst Rev 2013;(8):CD006575. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Al-Qattan MM, Al-Turaiki TM. Flexor tendon repair in zone 2 using a six-strand 'figure of eight' suture. J Hand Surg Eur Vol 2009;34:322-8. 10.1177/1753193408099818 [DOI] [PubMed] [Google Scholar]

- 19.Angeles JG, Heminger H, Mass DP. Comparative biomechanical performances of 4-strand core suture repairs for zone II flexor tendon lacerations. J Hand Surg Am 2002;27:508-17. 10.1053/jhsu.2002.32619 [DOI] [PubMed] [Google Scholar]

- 20.Medical Realities. Press article library. Available online: http://www.medicalrealities.com/press-page/

- 21.McLachlan JC, Patten D. Anatomy teaching: ghosts of the past, present and future. Med Educ 2006;40:243-53. 10.1111/j.1365-2929.2006.02401.x [DOI] [PubMed] [Google Scholar]

- 22.Wee Sim Khor, Benjamin Baker, Kavit Amin, et al. 4D Anatomy Augmented reality app of the heart and human body. Asvide 2016;3:496. Available online: http://www.asvide.com/articles/1271

- 23.Ma M, Fallavollita P, Seelbach I, et al. Personalized augmented reality for anatomy education. Clin Anat 2016;29:446-53. 10.1002/ca.22675 [DOI] [PubMed] [Google Scholar]

- 24.Estai M, Bunt S. Best teaching practices in anatomy education: A critical review. Ann Anat 2016;208:151-7. 10.1016/j.aanat.2016.02.010 [DOI] [PubMed] [Google Scholar]

- 25.Brouillette D, Thivierge G, Marchand D, et al. Preparative study regarding the implementation of a muscular fatigue model in a virtual task simulator. Work 2012;41 Suppl 1:2216-25. [DOI] [PubMed] [Google Scholar]

- 26.Glockner JF. Navigating the aorta: MR virtual vascular endoscopy. Radiographics 2003;23:e11. 10.1148/rg.e11 [DOI] [PubMed] [Google Scholar]

- 27.Lovo EE, Quintana JC, Puebla MC, et al. A novel, inexpensive method of image coregistration for applications in image-guided surgery using augmented reality. Neurosurgery 2007;60:366-71; discussion 371-2. [DOI] [PubMed] [Google Scholar]

- 28.Benninger B. Google Glass, ultrasound and palpation: the anatomy teacher of the future? Clin Anat 2015;28:152-5. 10.1002/ca.22480 [DOI] [PubMed] [Google Scholar]

- 29. Kamphuis C, Barsom E, Schijven M, et al. Augmented reality in medical education? Perspect Med Educ 2014;3:300-11. doi: 10.1007/s40037-013-0107-7

- 30. Meara JG, Leather AJ, Hagander L, et al. Global Surgery 2030: Evidence and solutions for achieving health, welfare, and economic development. Surgery 2015;158:3-6. doi: 10.1016/j.surg.2015.04.011

- 31. Datta N, MacQueen IT, Schroeder AD, et al. Wearable Technology for Global Surgical Teleproctoring. J Surg Educ 2015;72:1290-5. doi: 10.1016/j.jsurg.2015.07.004

- 32. Shenai MB, Dillavou M, Shum C, et al. Virtual interactive presence and augmented reality (VIPAR) for remote surgical assistance. Neurosurgery 2011;68:200-7; discussion 207.

- 33. Davis MC, Can DD, Pindrik J, et al. Virtual Interactive Presence in Global Surgical Education: International Collaboration Through Augmented Reality. World Neurosurg 2016;86:103-11. doi: 10.1016/j.wneu.2015.08.053

- 34. Khor WS, Baker B, Amin K, et al. 3D printed haptic models triggered augmented operative videos. Asvide 2016;3:497. Available online: http://www.asvide.com/articles/1272

- 35. Albrecht UV, von Jan U, Kuebler J, et al. Google Glass for documentation of medical findings: evaluation in forensic medicine. J Med Internet Res 2014;16:e53. doi: 10.2196/jmir.3225

- 36. Google Glasses app looks up medical records by face. Biometric Technology Today 2013;2013:2.

- 37. Wu YF, Tang JB. Recent developments in flexor tendon repair techniques and factors influencing strength of the tendon repair. J Hand Surg Eur Vol 2014;39:6-19. doi: 10.1177/1753193413492914

- 38. Tang JB, Amadio PC, Boyer MI, et al. Current practice of primary flexor tendon repair: a global view. Hand Clin 2013;29:179-89. doi: 10.1016/j.hcl.2013.02.003

- 39. Armstrong DG, Rankin TM, Giovinco NA, et al. A heads-up display for diabetic limb salvage surgery: a view through the google looking glass. J Diabetes Sci Technol 2014;8:951-6. doi: 10.1177/1932296814535561

- 40. Gardiner S, Hartzell TL. Telemedicine and plastic surgery: a review of its applications, limitations and legal pitfalls. J Plast Reconstr Aesthet Surg 2012;65:e47-53. doi: 10.1016/j.bjps.2011.11.048

- 41. Austin AA, Druschel CM, Tyler MC, et al. Interdisciplinary craniofacial teams compared with individual providers: is orofacial cleft care more comprehensive and do parents perceive better outcomes? Cleft Palate Craniofac J 2010;47:1-8. doi: 10.1597/08-250.1

- 42. Volonté F, Pugin F, Bucher P, et al. Augmented reality and image overlay navigation with OsiriX in laparoscopic and robotic surgery: not only a matter of fashion. J Hepatobiliary Pancreat Sci 2011;18:506-9. doi: 10.1007/s00534-011-0385-6

- 43. Heuveling DA, Karagozoglu KH, van Schie A, et al. Sentinel node biopsy using 3D lymphatic mapping by freehand SPECT in early stage oral cancer: a new technique. Clin Otolaryngol 2012;37:89-90. doi: 10.1111/j.1749-4486.2011.02427.x

- 44. Schnelzer A, Ehlerding A, Blümel C, et al. Showcase of Intraoperative 3D Imaging of the Sentinel Lymph Node in a Breast Cancer Patient using the New Freehand SPECT Technology. Breast Care (Basel) 2012;7:484-6. doi: 10.1159/000345472

- 45. Tagaya N, Yamazaki R, Nakagawa A, et al. Intraoperative identification of sentinel lymph nodes by near-infrared fluorescence imaging in patients with breast cancer. Am J Surg 2008;195:850-3. doi: 10.1016/j.amjsurg.2007.02.032

- 46. Diana M, Dallemagne B, Chung H, et al. Probe-based confocal laser endomicroscopy and fluorescence-based enhanced reality for real-time assessment of intestinal microcirculation in a porcine model of sigmoid ischemia. Surg Endosc 2014;28:3224-33. doi: 10.1007/s00464-014-3595-6

- 47. Raabe A, Beck J, Seifert V. Technique and image quality of intraoperative indocyanine green angiography during aneurysm surgery using surgical microscope integrated near-infrared video technology. Zentralbl Neurochir 2005;66:1-6; discussion 7-8. doi: 10.1055/s-2004-836223

- 48. Hughes-Hallett A, Mayer EK, Marcus HJ, et al. Augmented reality partial nephrectomy: examining the current status and future perspectives. Urology 2014;83:266-73. doi: 10.1016/j.urology.2013.08.049

- 49. Su LM, Vagvolgyi BP, Agarwal R, et al. Augmented reality during robot-assisted laparoscopic partial nephrectomy: toward real-time 3D-CT to stereoscopic video registration. Urology 2009;73:896-900. doi: 10.1016/j.urology.2008.11.040

- 50. Cabrilo I, Bijlenga P, Schaller K. Augmented reality in the surgery of cerebral aneurysms: a technical report. Neurosurgery 2014;10 Suppl 2:252-60; discussion 260-1. doi: 10.1227/NEU.0000000000000328

- 51. Cabrilo I, Schaller K, Bijlenga P. Augmented reality-assisted bypass surgery: embracing minimal invasiveness. World Neurosurg 2015;83:596-602. doi: 10.1016/j.wneu.2014.12.020

- 52. Tzou CH, Artner NM, Pona I, et al. Comparison of three-dimensional surface-imaging systems. J Plast Reconstr Aesthet Surg 2014;67:489-97. doi: 10.1016/j.bjps.2014.01.003

- 53. Hoffman HG, Meyer WJ 3rd, Ramirez M, et al. Feasibility of articulated arm mounted Oculus Rift Virtual Reality goggles for adjunctive pain control during occupational therapy in pediatric burn patients. Cyberpsychol Behav Soc Netw 2014;17:397-401. doi: 10.1089/cyber.2014.0058

- 54. Maani CV, Hoffman HG, Morrow M, et al. Virtual reality pain control during burn wound debridement of combat-related burn injuries using robot-like arm mounted VR goggles. J Trauma 2011;71:S125-30. doi: 10.1097/TA.0b013e31822192e2

- 55. General Medical Council. Available online: http://www.gmc-uk.org/search.asp?client=gmc_frontend&site=gmc_collection&output=xml_no_dtd&proxystylesheet=gmc_frontend&ie=UTF-8&oe=UTF-8&q=phone&partialfields=&btnG=Google+Search&num=10&getfields=description&start=0&-as_sitesearch=http://www.gmc-uk.org/concerns&filter=0&txtKeywords=phone#SearchAnchor

- 56. Haynes CL, Cook GA, Jones MA. Legal and ethical considerations in processing patient-identifiable data without patient consent: lessons learnt from developing a disease register. J Med Ethics 2007;33:302-7. doi: 10.1136/jme.2006.016907

- 57. Mancilla D, Moczygemba J. Exploring medical identity theft. Perspect Health Inf Manag 2009;6:1e.

- 58. The Heartbleed Bug. Available online: http://heartbleed.com/

- 59. Cao Y, Tang JB. Biomechanical evaluation of a four-strand modification of the Tang method of tendon repair. J Hand Surg Br 2005;30:374-8. doi: 10.1016/j.jhsb.2005.04.003

- 60. Donaldson A, Walker P. Information governance--a view from the NHS. Int J Med Inform 2004;73:281-4. doi: 10.1016/j.ijmedinf.2003.11.009

- 61. BusinessWire. Global Augmented Reality & Virtual Reality in Healthcare Industry Worth USD 641 Million by 2018 - Analysis, Technologies & Forecasts 2013-2018 - Key Vendors: Hologic Inc, Artificial Life Inc, Aruba Networks - Research and Markets. Available online: http://www.businesswire.com/news/home/20160316005923/en/Global-Augmented-Reality-Virtual-Reality-Healthcare-Industry