Abstract

The elderly population faces an increasing number of cases of chronic neurological conditions, such as epilepsy and Alzheimer’s disease. Because elderly patients with epilepsy are commonly excluded from randomized controlled clinical trials, few rigorous studies are available to guide clinical practice. When elderly patients are eligible for trials, they rarely participate or often adhere poorly to therapy, which limits both the generalizability and the validity of trial results. In contrast, large observational data sets are increasingly available, but they are susceptible to bias when analyzed with common approaches. Recent developments in causal inference methods make it possible to emulate randomized controlled trials with such data and obtain valid estimates. We provide a practical example of the application of causal inference principles to a large observational data set of patients with epilepsy. This review also provides a framework for comparative-effectiveness research in chronic neurological conditions.

Keywords: epilepsy, epidemiology, neurostatistics, causal inference

Introduction

Epilepsy is an incurable, life-threatening neurological disorder characterized by recurrent, spontaneous seizures that affects approximately 3 million people in the US.1–3 Epilepsy among the elderly has garnered attention in recent years because of its disproportionately high incidence in this population, which compounds the existing disease burden and its negative impact on quality of life (eg, loss of a driver’s license and reduced independence).4–6 Half of all new-onset epileptic seizures are expected to occur in patients aged 60 years and above in 2020.7,8 The risk of seizure recurrence after a first seizure may be as high as 80%.9 Unfortunately, few randomized clinical trials in the elderly are available to guide therapy.

Moreover, many patients with epilepsy have a complex constellation of other conditions that may influence both therapy and outcomes. For example, increased survival among elderly people with other underlying medical conditions may contribute to the observed increase in the rate of epilepsy.9–12 Neurodegenerative diseases are large contributors to the development of epilepsy, with Alzheimer’s disease patients experiencing a six- to tenfold increased risk of epilepsy compared with age-matched healthy individuals.9 Cerebrovascular complications (eg, stroke), cerebral tumors, and severe brain injury are also significant risk factors for epilepsy in the elderly.10–12 To date, no trials have accounted for both epilepsy and comorbid conditions in the elderly.

In this review, we aim to describe the limitations of traditional retrospective and prospective studies involving elderly patients with epilepsy. We then describe the application of a new model for conducting clinical research at the population level.

Scope of the problem

A total of 24 antiepileptic drugs (AEDs) are currently available in the US. While all have demonstrated efficacy to some degree, the amount of available information for each drug varies, particularly with respect to side effects and toxicity.13–17 In general, most clinicians believe that the benefits of preventing seizure recurrence with AEDs outweigh the potential risks of drug therapy.18,19 Therefore, treatment with AEDs is currently recommended for epilepsy in the elderly.20,21 However, the most recent national practice guidelines do not rank AEDs.22–24 As a result, AED-prescribing practices for adult epilepsy patients vary widely, and no consensus exists regarding which drugs should be used as first-line treatment in the elderly.24–27

The elderly are a distinct population because of known age-related metabolic changes, reduced drug clearance, and increased pharmacodynamic sensitivity.4,20,27 This age-group is more vulnerable to treatment side effects, including cognitive impairment, vomiting, hepatic failure, and loss of mobility.28–34 Elderly patients often have comorbid conditions, which increases the potential for drug–drug interactions and reduces efficacy and adherence to monitoring schedules.27,35,36 Clinicians are left with two questions: 1) which drug to prescribe for older adults that will be taken consistently, and 2) whether the drug(s) prescribed will achieve the desired outcomes.

The current literature points to the need for more studies based on patient-centered outcomes for the elderly suffering from epilepsy. By identifying which drugs will lead to better seizure control, higher quality-of-life scores, fewer negative effects on cognition, and less frequent falls, physicians will be better able to create an optimized prescription regimen for these patients.37 However, because the elderly are commonly excluded from clinical trials, these outcomes remain underexplored.

Clinical trials

Clinical trials are regarded as the gold standard for defining optimal approaches to treatment. Several major trial-related challenges limit the likelihood that clinical trials can address the range of questions valuable for guiding therapy (Table 1). Trials can be extremely expensive to conduct, and few funders are willing to sponsor the multitude of trials that might be necessary to develop a robust evidence base. Even fewer funders are willing to support large comparative trials in which different candidate therapies are compared directly (as opposed to comparisons with placebo). In part because of cost, most trials attempt to examine clinically homogeneous groups and generally exclude those with multiple comorbidities, cognitive impairment, limited life expectancy, or functional limitations, criteria that tend to exclude the typical elderly patient with seizures.38

Table 1.

Comparison of the advantages and limitations of study strategies

| Study stage/type | Randomized clinical trial | Prospective cohort | Causal inference framework |
|---|---|---|---|
| Study design/major limitations | Many studies are unethical, impractical, or too expensive | Misclassification bias and underascertainment with administrative data | Misclassification bias and underascertainment with administrative data |
| Recruitment | Expensive enrollment; multiple comorbid conditions; selection bias; limited external validity | Prospective; the follow-up period may make it impractical or expensive | Retrospective; feasible within a shorter period of time; less expensive |
| Randomization | Yes | No | Yes (emulated) |
| Treatment-group retention and statistical analysis | Limited drug tolerability; limited statistical power; estimates limited to intention-to-treat effects | Individual preferences, comorbidities, or practice patterns may determine the treatment group and may introduce confounding that is difficult to measure, restricting validity | The appearance of a drug or formulary creates a new treatment arm, independently of individual preferences, comorbidities, or practice patterns |

Paradoxically, the comorbidities that preclude participation in AED trials contribute to the unique burden of epilepsy observed in elderly patients. Compared with healthy individuals of the same age, patients with Alzheimer’s disease have a six- to tenfold increased risk of developing clinical seizures during the course of their illness.9 The paucity of comparative-effectiveness research involving patients with chronic neurological conditions such as epilepsy and Alzheimer’s disease, together with these patients’ lower medication tolerance, limits the applicability of results from randomized clinical trials to this population.9,38,39

The International Conference on Harmonisation (ICH) guidelines on geriatrics (E7) were adopted in 1994 to improve elderly representation in clinical trials; however, enrollment remains low.38 In clinical drug trials of medications frequently prescribed to the elderly, fewer than 10% of participants are above age 65 years, and only 1% are above age 75 years. This is particularly low given the bimodal distribution of incident epilepsy, in which pediatric and elderly patients account for over 50% of cases.39,40

Even though substantial societal resources are dedicated to randomized controlled trials that include elderly patients with epilepsy, most studies provide inadequate clinical information, because their results are summarized only by the “intention-to-treat effect”. In an intention-to-treat analysis, all participants are analyzed in the arm to which they were randomized, regardless of whether they adhered to the treatment regimen in the study protocol.41

In epilepsy in particular, adherence to therapy tends to be poor in both trials and real-world practice. This variable adherence is a major threat to the ability of randomized clinical trials to yield valid estimates of treatment effects.41 Stated differently, epilepsy trials need to address time-varying confounders that can affect both adherence to the study protocol and outcomes. Adding a properly conducted per protocol analysis to each intention-to-treat analysis can help address incomplete adherence. Unfortunately, no universally accepted method for estimating the per protocol effect currently exists. The naïve “per protocol analysis”, which simply restricts the comparison to adherent participants, is flawed because it implicitly assumes that nonadherence occurs at random. Adjustment for prerandomization variables would lessen the impact of this bias, but this is not common in practice. In fact, most clinical trial protocols devote little, if any, attention to the estimation of the per protocol effect.41 New statistical methods that adjust for both pre- and postrandomization variables can yield less biased per protocol effect estimates than the naïve per protocol analysis.42
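To make the contrast between these estimands concrete, the Python sketch below simulates a hypothetical two-arm trial in which frail participants assigned to the new drug are more likely to stop it. All numbers (30% frailty, adherence of 50% vs 90%, baseline risks) are invented for illustration, the old-drug arm is assumed to be fully adherent, and adherence is modeled as a single baseline decision rather than the time-varying process described above. Weighting adherent participants by the inverse of their estimated probability of adherence within strata of the prognostic factor is one simple instance of the adjusted per protocol methods mentioned above, not a complete method.

```python
import random


def simulate_trial(n, seed=0):
    """Simulate a trial where nonadherence depends on a prognostic factor (frailty).

    Returns tuples (assigned_new_drug, frail, adherent, seizure_recurrence).
    By construction, the true per protocol risk difference is -0.05.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5            # randomized assignment (True = new drug)
        frail = rng.random() < 0.3        # hypothetical prerandomization prognostic factor
        if z:
            # Frail patients assigned to the new drug stop it more often.
            adherent = rng.random() < (0.5 if frail else 0.9)
        else:
            adherent = True               # old-drug arm assumed fully adherent
        received_new = z and adherent
        risk = 0.10 + 0.20 * frail - 0.05 * received_new
        rows.append((z, frail, adherent, rng.random() < risk))
    return rows


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def itt(rows):
    """Intention-to-treat: compare by assignment, ignoring adherence."""
    return mean(y for z, _, _, y in rows if z) - mean(y for z, _, _, y in rows if not z)


def naive_pp(rows):
    """Naive per protocol: drop nonadherent participants, no adjustment."""
    return (mean(y for z, _, ad, y in rows if z and ad)
            - mean(y for z, _, ad, y in rows if not z and ad))


def ipw_pp(rows):
    """Per protocol with inverse probability of adherence weights within (arm, frailty)."""
    p_adhere = {}
    for arm in (True, False):
        for f in (True, False):
            p_adhere[(arm, f)] = mean(ad for z, ff, ad, _ in rows if z == arm and ff == f)

    def weighted_risk(arm):
        num = den = 0.0
        for z, f, ad, y in rows:
            if z == arm and ad:
                w = 1.0 / p_adhere[(z, f)]
                num += w * y
                den += w
        return num / den

    return weighted_risk(True) - weighted_risk(False)


if __name__ == "__main__":
    rows = simulate_trial(200_000)
    print(f"ITT {itt(rows):+.3f} | naive PP {naive_pp(rows):+.3f} | IPW PP {ipw_pp(rows):+.3f}")
```

With a large simulated sample, the intention-to-treat estimate should be near -0.039 (the effect diluted by nonadherence), the naïve per protocol estimate near -0.07 (exaggerated because adherers are less frail), and the weighted estimate near the built-in -0.05.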

Even when elderly patients are eligible, their recruitment rates in trials are often lower than those of younger patients, which raises further concerns about generalizing findings beyond the narrow group of trial subjects. In summary, even if substantial resources are allocated to large randomized clinical trials targeting the elderly, a gap may remain in the literature with respect to treatment effectiveness (ie, how well treatments work in the real world), including adjustment for real-world adherence to treatment.

Observational studies

Randomized experiments often exclude the elderly, and many experiments are simply impractical.9,38,39 The next-best alternative to a randomized experiment is an observational study. Observational studies are promising, because observational data sets of elderly patients with epilepsy have become widely available with the large-scale adoption of electronic health records, linked registry-claims data, and validated claims data sets. However, conventional observational studies are scrutinized for both internal validity (the strength of the study’s conclusions) and external validity (the ability to generalize study results to a broader population). Internal validity is assessed on the basis of whether observed changes can be attributed to the exposure or intervention rather than to systematic error in the study. Common threats to internal validity are the absence of a control group and the inclusion of a noncomparable one.43 External validity refers to the generalizability of study results to other populations and settings. Internal validity is therefore a prerequisite for external validity: a causal relationship must be established before it can be applied more broadly.43 As outlined in Table 1, conventional observational studies (eg, comparative cohort and case-control studies) face major challenges related to causality. For instance, individual preferences, practice patterns, and policy decisions determine the treatment group and may introduce confounding that is difficult to measure, restricting validity.43 The study subjects, sampling method, and variable measurements may also contribute to random variation.43

A number of statistical tools now exist to better assess causality in observational data, yielding less biased effect estimates.42 These methods, termed “causal inference”, have been used in comparative-effectiveness research with large observational data sets and have been particularly helpful in the study of chronic conditions in which enrollment and adherence to treatment are highly variable.44,45 The strength of the causal inference framework lies in its ability to use counterfactual theory to compare outcomes under point treatments and sustained-treatment strategies, to organize and combine analytic approaches across the literature, to provide structured criticism of observational studies, and to avoid common methodological pitfalls. Previous work has applied the causal inference framework to the study of treatments for chronic conditions such as HIV and cancer, though this approach has rarely been used in neurology.44,46 The next section provides a practical example of the application of the causal inference framework to emulate a clinical trial examining the effect of AED choice on the risk of seizure recurrence.

Emulating the target trial using observational data

We wish to estimate, using a claims database, the 1-year risk of emergency room visits for seizures among elderly patients treated with a new-generation vs an old-generation AED (eg, levetiracetam vs phenytoin), as summarized in Tables 2 and 3.

Table 2.

Summary of protocol of target trial to estimate effect of epilepsy therapy (old- vs new-generation antiepileptic drug [AED]) on 1-year risk of seizure recurrence

| Protocol component | Target trial specification |
|---|---|
| Eligibility criteria | Patients older than 65 years with a new diagnosis of epilepsy in 2009–2014 and no AED use in the previous 2 years |
| Treatment strategies: new- vs old-generation AED | Initiate therapy with an old- vs new-generation AEDᵃ and remain on it during the 1-year follow-up period |
| Assignment procedures | Participants will be randomly assigned (emulated here by a natural experiment) to either strategy at baseline and will be aware of the strategy to which they have been assigned |
| Follow-up period | Starts at randomization and ends at diagnosis of seizure recurrence, death, loss to follow-up, or 1 year after baseline, whichever occurs first |
| Outcome | Seizure recurrence diagnosed at office visits or in emergency rooms by a primary care physician, neurologist, or emergency physician within 1 year of baseline |
| Causal contrasts of interest | Intention-to-treat effect; per protocol effect |
| Analysis plan | Intention-to-treat effect estimated by comparing 1-year seizure-recurrence risk among individuals assigned to each treatment strategy. Per protocol effect estimation requires adjustment for pre- and postbaseline prognostic factors associated with adherence to the strategies of interest. All analyses will be adjusted for pre- and postbaseline prognostic factors associated with loss to follow-up. This analysis plan implies that the investigators prespecify and collect data on the adjustment factors |

Note:

ᵃOld = AED marketed before 1992; new = AED marketed after 1992.

Table 3.

Treatment allocation based on a natural experiment

| Patent status | Treatment allocation | Outcome assessment |
|---|---|---|
| Patent protected (before 2009) | Majority of patients receiving phenytoin (2007–2008) | Seizure frequency (2009–2010) |
| Patent expired (after 2009) | Majority of patients receiving levetiracetam (2010–2011) | Seizure frequency (2012–2013) |

Notes: Measurement of prescription patterns before and after patent expiration serves as one such natural experiment, in which changes in the effective antiepileptic-drug choice set are used as an independent instrument of treatment allocation. Levetiracetam’s patent expiration year (2009) defines two groups: 1) patent protected, in which most patients received older drugs (eg, phenytoin), and 2) patent expired, in which most patients received newer drugs (eg, levetiracetam). Outcomes can then be assessed over 2-year panels: 2009–2010 (patent protected) and 2012–2013 (patent expired).

Eligibility criteria

Analysis of retrospective data using the causal inference framework depends on concordance between the study question and the billing-code scheme. For example, if we wish to require that all eligible patients have an electroencephalogram with focal interictal abnormalities suggestive of localization-related epilepsy, and to exclude patients with abnormalities suggestive of generalized epilepsy, we must ensure that the claims data set captures these findings, or else reconsider the eligibility criteria and the question of interest.

In true randomized controlled trials, expected engagement in follow-up can be defined and measured during recruitment, whereas this is often problematic in observational analyses. Analysis of retrospective data using the causal inference framework can address this issue by examining the level of contact between individuals and their health care providers before baseline. It would not be appropriate simply to exclude those with missing outcome data due to lack of follow-up, because doing so would introduce bias from informative censoring.47
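The eligibility criteria in Table 2, together with a look-back check on prior provider contact, can be sketched in Python as a filter over patient-level claims summaries. The `Patient` record, its field names, and the one-visit-in-the-prior-year engagement threshold are hypothetical; real claims data would also require checking continuous enrollment during the look-back windows, which this sketch omits.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Patient:
    """Hypothetical patient-level summary built from claims."""
    patient_id: str
    birth_date: date
    first_epilepsy_dx: date                                  # candidate time zero
    aed_fill_dates: list[date] = field(default_factory=list)
    visit_dates: list[date] = field(default_factory=list)    # any provider contact


ENROLLMENT_START, ENROLLMENT_END = date(2009, 1, 1), date(2014, 12, 31)
WASHOUT = timedelta(days=730)          # no AED use in the previous 2 years
CONTACT_WINDOW = timedelta(days=365)   # hypothetical look-back for provider contact
MIN_PRIOR_VISITS = 1                   # hypothetical engagement threshold


def age_on(birth: date, day: date) -> int:
    """Completed years of age on a given day."""
    return day.year - birth.year - ((day.month, day.day) < (birth.month, birth.day))


def is_eligible(p: Patient) -> bool:
    t0 = p.first_epilepsy_dx
    if not ENROLLMENT_START <= t0 <= ENROLLMENT_END:
        return False
    if age_on(p.birth_date, t0) <= 65:                 # must be older than 65 years
        return False
    if any(t0 - WASHOUT <= d < t0 for d in p.aed_fill_dates):
        return False                                    # prior AED use: not a new user
    prior_visits = sum(t0 - CONTACT_WINDOW <= d < t0 for d in p.visit_dates)
    return prior_visits >= MIN_PRIOR_VISITS            # evidence of engagement with care


cohort = [
    Patient("A", date(1940, 5, 1), date(2011, 3, 10), visit_dates=[date(2010, 9, 1)]),
    Patient("B", date(1940, 5, 1), date(2011, 3, 10),
            aed_fill_dates=[date(2010, 1, 5)], visit_dates=[date(2010, 9, 1)]),
    Patient("C", date(1950, 5, 1), date(2011, 3, 10), visit_dates=[date(2010, 9, 1)]),
]
print([p.patient_id for p in cohort if is_eligible(p)])  # ['A']
```

Patient B is excluded by the 2-year washout and patient C by age; the look-back on provider contact is one way to operationalize expected engagement in follow-up without discarding patients whose outcomes are later missing.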

Treatment strategies

Most randomized controlled clinical trials compare treatment strategies under ideal (ie, controlled) conditions, whereas pragmatic clinical trials compare them under real-world conditions. In pragmatic trials, for instance, the treatment strategies are often controlled (eg, placebo vs active drug), but compliance with monitoring schedules or the study protocol may not be. When using retrospective data, in which neither treatment strategies nor outcome assessment is controlled, we need to analyze the data in a way that emulates random assignment of treatment strategies (termed here emulated trials using the causal inference framework).

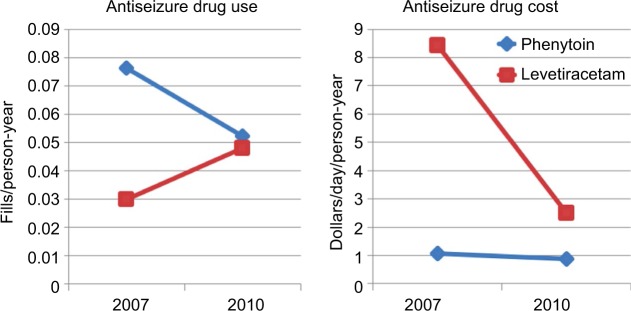

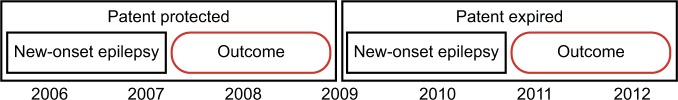

The natural experiment created by changes in the patent protection of a new drug, such as levetiracetam, is an example of how we can emulate random allocation of treatment strategies. This relies on the assumption that patent-status changes lead to changes in unit prices and formulary placement, and that these changes are unrelated to any individual patient’s clinical status. In this example, an observational trial using the causal inference approach uses the changes in prescribing patterns that result from the drug’s greater availability as an independent instrument of treatment allocation, ie, a factor not influenced by other predictor variables (eg, the patient’s clinical status). To illustrate the feasibility of this example, we analyzed antiseizure prescription fills (phenytoin vs levetiracetam) by Medicare beneficiaries aged 65+ years in 2007 and 2010, using data from the Medicare Part D program (Figure 1 and Table 4). We also examined changes in unit prices (levetiracetam lost its patent protection in 2009). The resulting shift in prescribing patterns identifies two groups by levetiracetam’s patent expiration year: 1) patent protected (older), in which most patients received older drugs, and 2) patent expired (newer), in which most patients received newer drugs. Analysis of retrospective data using the causal inference approach then assesses outcomes over 2-year panels: 2008–2009 (patent protected) and 2011–2012 (patent expired), as in Figure 2.48,49
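The instrument logic can be illustrated with the Wald estimator, the standard instrumental-variable estimator for a binary instrument: the difference in outcome risk between periods divided by the difference in the proportion treated. The Python sketch below uses simulated data, not Medicare data, with an invented unmeasured severity variable that affects both drug choice and ER visits. It assumes that calendar period affects seizure-related ER visits only through drug choice (no secular trends) and that the treatment effect is the same for everyone; both are strong assumptions in real claims data.

```python
import random


def simulate_claims(n, seed=0):
    """Hypothetical data: (patent_expired_period, received_levetiracetam, er_visit).

    Unmeasured severity u raises both the chance of receiving levetiracetam and
    the outcome risk. The true effect of levetiracetam is -0.04 by construction.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = int(rng.random() < 0.5)                          # 1 = patent-expired period
        u = int(rng.random() < 0.5)                          # unmeasured severity
        d = int(rng.random() < 0.2 + 0.5 * z + 0.2 * u)      # levetiracetam vs phenytoin
        y = int(rng.random() < 0.10 + 0.15 * u - 0.04 * d)   # seizure-related ER visit
        rows.append((z, d, y))
    return rows


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def wald_estimate(rows):
    """(E[Y | Z=1] - E[Y | Z=0]) / (E[D | Z=1] - E[D | Z=0])."""
    dy = mean(y for z, _, y in rows if z == 1) - mean(y for z, _, y in rows if z == 0)
    dd = mean(d for z, d, _ in rows if z == 1) - mean(d for z, d, _ in rows if z == 0)
    return dy / dd


def naive_estimate(rows):
    """Crude comparison of levetiracetam vs phenytoin users (confounded by u)."""
    return mean(y for _, d, y in rows if d == 1) - mean(y for _, d, y in rows if d == 0)


if __name__ == "__main__":
    rows = simulate_claims(400_000)
    print(f"naive {naive_estimate(rows):+.3f} | Wald IV {wald_estimate(rows):+.3f}")
```

In this simulation the naive comparison is biased toward the null (about -0.01) because sicker patients are more likely to receive levetiracetam, while the Wald estimate should land near the built-in effect of -0.04.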

Figure 1.

Changes in antiepileptic-drug choice (patterns of use and cost).

Notes: From 2007 to 2010, there were substantial changes in the use and the costs of phenytoin and levetiracetam. Phenytoin: 32% decrease in fills/person-year with 32% decrease in mean cost/day. Levetiracetam: 27% increase in prescriptions filled/person-year with 70% decrease in mean cost/day.

Table 4.

Changes in antiepileptic-drug choice (demographics of the sample)

| Characteristic | 2007 calendar year | 2010 calendar year |
|---|---|---|
| Subjects | >4M | >4M |
| Female | 60% | 59% |
| Low-income subsidy | 31% | 31% |
| Dual-eligible beneficiaryᵃ | 19% | 8% |
| Age, mean (SD) | 70 (12) | 70 (13) |
| Risk scoreᵇ | 1.08 | 0.98 |

Notes:

ᵃDual-eligible beneficiary for Medicare and Medicaid.

ᵇRisk score refers to the Part D risk-adjustment score based on diagnoses. Part D refers to the Medicare files that contain information about medication fills.

Abbreviation: M, million.

Figure 2.

Natural experiment created by expiration in patent protection.

Assignment procedures

Randomized controlled clinical trials are frequently based on blinded assignment, whereas analyses of retrospective data using the causal inference framework, termed here “emulated target trials”, often include participants whose treatments are known to the investigators.48,50 The goal is to compare realistic treatment strategies in persons who are aware of the care they receive.

To mimic random assignment of strategies, it is necessary to adjust for baseline confounders so that the groups are comparable. Comparability here means that confounders are symmetrically distributed among the groups, which is the principal advantage of randomization. An asymmetrically distributed confounder leads to confounding bias and thus to erroneous associations between exposure and outcome. For instance, concurrent use of a benzodiazepine may confound the association between an AED (eg, phenytoin vs placebo) and seizure control. In this scenario, benzodiazepine use should be symmetrically distributed between comparable groups.
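Symmetry of a baseline confounder such as benzodiazepine use is commonly checked with the standardized mean difference (SMD). The Python sketch below computes it for a binary covariate; the prevalences are invented, and the often-cited threshold of 0.1 for acceptable imbalance is a rule of thumb rather than a requirement stated in this text.

```python
from math import sqrt


def prevalence(flags):
    """Proportion of patients with the covariate (flags are 0/1)."""
    flags = list(flags)
    return sum(flags) / len(flags)


def smd_binary(p_treated: float, p_control: float) -> float:
    """Standardized mean difference for a binary covariate given two prevalences."""
    pooled_sd = sqrt((p_treated * (1 - p_treated) + p_control * (1 - p_control)) / 2)
    if pooled_sd == 0:
        return 0.0      # covariate constant in both groups: no imbalance to measure
    return (p_treated - p_control) / pooled_sd


# Hypothetical benzodiazepine use: 30% of phenytoin vs 20% of levetiracetam initiators
phenytoin_benzo = [1] * 30 + [0] * 70
levetiracetam_benzo = [1] * 20 + [0] * 80
smd = smd_binary(prevalence(phenytoin_benzo), prevalence(levetiracetam_benzo))
print(round(smd, 3))  # 0.232
```

An SMD of about 0.23 would usually be read as meaningful imbalance, signaling that benzodiazepine use needs to be addressed by one of the adjustment methods before the groups can be treated as comparable.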

Confounding bias is an especially serious challenge when the target trial aims to compare an active treatment with no treatment (ie, not even placebo). Accepted methods for adjusting for baseline confounders so that they are symmetrically distributed include matching, stratification or regression, standardization or inverse probability weighting, g-estimation, doubly robust methods, and propensity-score matching. See Hernán and Robins42 for a detailed description of each of these methods.44,45,50,51 However, this issue does not arise often in epilepsy-related trials, as only a small proportion of patients with epilepsy decline treatment.52 Moreover, indirect approaches such as “natural experiments” can help alert us to potential confounding biases (Figure 2 and Table 3).
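For a point treatment, two of the listed methods, standardization and inverse probability weighting, can be compared on a small stratified table. The counts below are invented, with benzodiazepine use as the single binary confounder `l`, `a = 1` for levetiracetam and `a = 0` for phenytoin, and a seizure-related ER visit as the outcome. With one discrete confounder and nonparametric (saturated) estimates, both methods give the same adjusted risks; in realistic analyses with many covariates, they rely on different models and can diverge.

```python
# Hypothetical counts: counts[(l, a)] = (patients, patients with a seizure-related ER visit)
# l = 1 if concurrent benzodiazepine use; a = 1 if levetiracetam, 0 if phenytoin.
counts = {
    (0, 0): (400, 40),
    (0, 1): (600, 48),
    (1, 0): (300, 60),
    (1, 1): (200, 30),
}


def stratum_size(counts, l):
    """Number of patients with confounder level l (both arms)."""
    return sum(size for (ll, _a), (size, _y) in counts.items() if ll == l)


def crude_risk(counts, a):
    """Unadjusted risk among patients who received treatment a."""
    total = sum(size for (_l, aa), (size, _y) in counts.items() if aa == a)
    events = sum(y for (_l, aa), (_size, y) in counts.items() if aa == a)
    return events / total


def standardized_risk(counts, a):
    """Risk under treatment a, standardized to the whole sample's confounder distribution."""
    total = sum(size for size, _y in counts.values())
    strata = {l for l, _a in counts}
    return sum(
        stratum_size(counts, l) / total * counts[(l, a)][1] / counts[(l, a)][0]
        for l in strata
    )


def ipw_risk(counts, a):
    """Weighted risk under treatment a, with weights 1 / Pr(A = a | L = l)."""
    num = den = 0.0
    for l in {l for l, _a in counts}:
        size, events = counts[(l, a)]
        propensity = size / stratum_size(counts, l)
        num += events / propensity
        den += size / propensity
    return num / den


rd_crude = crude_risk(counts, 1) - crude_risk(counts, 0)
rd_std = standardized_risk(counts, 1) - standardized_risk(counts, 0)
rd_ipw = ipw_risk(counts, 1) - ipw_risk(counts, 0)
print(f"crude {rd_crude:+.3f}, standardized {rd_std:+.3f}, IPW {rd_ipw:+.3f}")
# crude -0.045, standardized -0.030, IPW -0.030
```

In this made-up table, levetiracetam users are less likely to use benzodiazepines, which are associated with higher risk, so the crude comparison overstates the benefit of levetiracetam relative to the adjusted estimates.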

The term “natural experiment” in population-based research refers to an event that is not under the control of researchers but that researchers can use to study the association between the occurrence of the event and outcomes. Typical examples include the introduction of previously unaffordable services free of charge, such as under the Patient Protection and Affordable Care Act in the US, or the introduction of a new drug; such events are independent of patients’ baseline comorbid conditions or severity. Nevertheless, even after measured confounders are balanced, the analysis may still be confounded by unmeasured variables. In our example, differing degrees of generosity of Medicare supplemental insurance (eg, patient cost-sharing, as determined by Medigap insurance) could affect the likelihood that patients receive the proper treatment.53 Patient cost-sharing levels (or overall insurance generosity) would then be an unmeasured confounder, which is a major threat to causal inference approaches.

Furthermore, information sources previously considered impractical for large-scale research can now be used to lessen the impact of unmeasured confounding. For example, manual medical-chart review may be replaced by novel technologies for natural language processing and advanced image processing. Machine-learning tools and other computer-science techniques may help investigators identify combinations of variables that reduce confounding more effectively than traditional methods.54

Negative- and positive-control outcomes provide a useful check for confounding bias when the true effect of the exposure on them is known in advance: null for a negative control, and nonzero and approximately known in magnitude for a positive control. In a negative-control outcome, exposure to the drug is not expected to be associated with the control outcome. For instance, on the basis of prior knowledge and drug-safety trials, AEDs do not affect the risk of developing lung cancer. One can therefore use the rate of hospitalizations for lung cancer after exposure to phenytoin vs levetiracetam as a negative-control outcome. In that case, the working hypothesis is that initiating an antiseizure drug is unrelated to the risk of hospitalization for lung cancer or its risk factors (eg, smoking). If patients receiving phenytoin prove to be more likely to develop lung cancer (ie, a result with no plausible biological explanation), this indicates residual confounding (eg, physicians systematically chose to give phenytoin to sicker patients).54 In a positive-control outcome strategy, the outcomes are known to be affected by the drug, and lack of an association between the exposure and the outcome should raise concern for residual confounding. For instance, patients on phenytoin should have frequent drug-level monitoring (ie, frequent laboratory orders are expected), whereas patients on levetiracetam do not require drug-level monitoring unless there is concern about noncompliance with treatment. If the rate of drug-level monitoring proves to be greater in the levetiracetam arm, there may be residual confounding (eg, physicians systematically chose to give levetiracetam to patients at risk of noncompliance). In every case, control outcomes should be specified a priori. Tan et al provide a framework for selecting control outcomes and a series of examples from previous studies.54
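A negative-control check can be sketched in Python as a risk ratio with a Wald confidence interval on the log scale, flagging possible residual confounding when the interval excludes 1. The counts are hypothetical, both groups must have at least one event, and the rule is deliberately simple: an interval that includes 1 does not prove the absence of confounding, and a real analysis would apply the same adjusted model used for the primary outcome.

```python
from math import exp, log, sqrt


def risk_ratio_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Risk ratio of group a vs group b with a Wald 95% CI on the log scale."""
    rr = (events_a / n_a) / (events_b / n_b)
    se_log_rr = sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    return rr, exp(log(rr) - z * se_log_rr), exp(log(rr) + z * se_log_rr)


def flag_negative_control(events_a, n_a, events_b, n_b):
    """True if the negative-control association is distinguishable from the null."""
    _rr, lower, upper = risk_ratio_ci(events_a, n_a, events_b, n_b)
    return not (lower <= 1.0 <= upper)


# Hypothetical lung cancer hospitalizations: phenytoin (a) vs levetiracetam (b)
print(flag_negative_control(60, 10_000, 30, 10_000))   # True: suggests residual confounding
print(flag_negative_control(32, 10_000, 30, 10_000))   # False: no signal detected
```

A positive-control outcome such as drug-level monitoring would be checked the same way, except that the expected association is nonzero, so the concern is an estimate near 1 or in the wrong direction.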

Outcome

In our example, we examine treatment-specific event rates suggesting seizure recurrence within 1 year. We can create two variables to measure events suggestive of seizure recurrence: 1) epilepsy-related ER visit rates within the 1-year study period, and 2) epilepsy-related outpatient physician-visit rates. Here, epilepsy-related health care may be defined as an event associated with an International Classification of Diseases, Ninth Revision (ICD-9) code 345.x, an AED code, or an epilepsy-specific event code.3,54–57 Independent outcome validation is recommended, as studies without it have been shown to yield misleading effect estimates. In our example, the recorded rate of seizure recurrence among phenytoin users may vary as a function of fluctuations in drug levels, which result in more frequent office visits for safety monitoring than among levetiracetam users. Such a factor could inflate the estimated seizure recurrence in the phenytoin group without a biological basis. Unfortunately, because most treating physicians are aware of the treatment their patients receive, observational data alone cannot be used to create a trial with systematic, blinded outcome ascertainment, unless outcome ascertainment is independent of treatment history.
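The two outcome variables could be derived from claims roughly as follows. The `Claim` record, the `"ER"` and `"OUTPATIENT"` setting labels, and the choice to exclude the index date are assumptions for illustration; this Python sketch uses only ICD-9 345.x diagnosis codes (accepting codes written with or without the decimal point) and omits the AED and epilepsy-specific event codes mentioned in the text.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Claim:
    """Hypothetical claim line."""
    patient_id: str
    service_date: date
    setting: str                  # "ER" or "OUTPATIENT" (assumed labels)
    icd9_codes: tuple[str, ...]


FOLLOW_UP = timedelta(days=365)


def is_epilepsy_code(code: str) -> bool:
    """ICD-9 category 345 (epilepsy), written as '345.90' or '34590'."""
    return code.replace(".", "").startswith("345")


def count_epilepsy_visits(claims, patient_id, time_zero, setting):
    """Epilepsy-related visits in one setting during the 365 days after time zero."""
    return sum(
        1
        for c in claims
        if c.patient_id == patient_id
        and c.setting == setting
        and time_zero < c.service_date <= time_zero + FOLLOW_UP   # index date excluded
        and any(is_epilepsy_code(code) for code in c.icd9_codes)
    )


t0 = date(2011, 3, 10)
claims = [
    Claim("P1", date(2011, 5, 1), "ER", ("345.90",)),        # counted
    Claim("P1", date(2012, 6, 1), "ER", ("345.1",)),         # after 1-year window
    Claim("P1", date(2011, 4, 1), "OUTPATIENT", ("34510",)), # counted (no decimal point)
    Claim("P1", date(2011, 7, 1), "ER", ("780.39",)),        # not category 345
]
print(count_epilepsy_visits(claims, "P1", t0, "ER"),
      count_epilepsy_visits(claims, "P1", t0, "OUTPATIENT"))  # 1 1
```

Because outpatient visit counts can rise simply from drug-level monitoring, the ER-based variable is the less treatment-dependent of the two, which is one reason independent outcome validation matters here.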

Causal contrast(s) of interest

Several measures of causal effect are of particular interest in true randomized trials. Typical examples include the intention-to-treat effect (ie, the comparative effect of being assigned to a given treatment strategy at baseline) and the per protocol effect (ie, the comparative effect of adhering to the treatment strategies specified in the study protocol).58,59 In the analysis of retrospective data using the causal inference approach, it is important to estimate both the intention-to-treat effect and the per protocol effect.
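On the risk-difference scale, the two contrasts can be written in counterfactual notation. This is a sketch in standard notation that the text does not itself define: z is the strategy assigned at baseline (1 = new-generation AED, 0 = old-generation AED; in an emulated trial, the strategy initiated at baseline), ā is the treatment actually received over the 1-year follow-up (ā = 1̄ meaning the new-generation AED throughout), and a superscript denotes the counterfactual indicator Y of seizure recurrence within 1 year under that assignment or treatment history.

```latex
\begin{aligned}
\text{Intention-to-treat effect} &= \Pr\left[Y^{z=1} = 1\right] - \Pr\left[Y^{z=0} = 1\right] \\
\text{Per protocol effect} &= \Pr\left[Y^{\bar{a}=\bar{1}} = 1\right] - \Pr\left[Y^{\bar{a}=\bar{0}} = 1\right]
\end{aligned}
```

The first contrast depends only on baseline assignment, whereas the second depends on sustained adherence, which is why its estimation requires adjustment for postbaseline factors that predict adherence.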

Analysis plan

In a true randomized trial, the intention-to-treat effect is estimated by an intention-to-treat analysis comparing the outcomes of the groups assigned to each treatment strategy. Unfortunately, with existing observational data, a true intention-to-treat analysis is nearly impossible, because there is no random assignment to analyze. The closest analog is a comparison of treatment-strategy initiators, classified by the strategy started at baseline whether or not they continue with it thereafter. This comparison mimics the intention-to-treat analysis of the target trial.

Data on the AED choice set (ie, the drug prescribed) are analogous to an intention-to-treat analysis. Distinguishing patients who were prescribed a drug but did not start treatment (ie, evidence of prescription but no evidence of dispensing) from those who did would be analogous to a per protocol analysis. In our example, we may control for potential confounders by including them as covariates in multivariable-adjusted regression models. These covariates might include patient, physician, insurance, and area traits, as well as additional measures, such as exposure to other drugs (eg, antiseizure-drug polypharmacy) and antiseizure-drug indication.

As an alternative to traditional regression adjustment, we may also control for potential confounders with a propensity score-matching strategy. In this approach, a logistic regression model estimates each patient’s probability of receiving a given antiseizure drug (the propensity score); covariates related to the antiseizure-drug prescription should be included in this model. Each patient who was prescribed phenytoin is then matched to a patient with a similar propensity score who received levetiracetam.45 We must also be aware that drug exposure and covariates are likely to change during the 1-year follow-up period, which could confound estimates of treatment effectiveness. When this occurs, the investigator(s) should turn to the per protocol effect for evidence of confounding.58 In our example, the impact of these time-varying factors can be addressed with inverse-probability-of-treatment weighting. In this approach, we estimate the parameters of a marginal structural model of time to seizure recurrence and fit a Fine–Gray competing-risk model, which uses a modified risk set to account for competing events.45 We then estimate additional outcomes, such as the cost of epilepsy care, and conduct additional deterministic and probabilistic sensitivity analyses. See Hernán and Robins42 for a comprehensive description of each of these terms and methods.44,45,50,51
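A minimal propensity-score matching sketch in Python with NumPy follows. It fits the logistic model by Newton-Raphson rather than with a modeling library, and uses greedy 1:1 nearest-neighbor matching without replacement within a caliper of 0.05 on the probability scale; the text does not specify the matching algorithm or caliper, so both are assumptions. The simulated covariates (age and benzodiazepine use) are hypothetical, and `treated = 1` denotes phenytoin.

```python
import numpy as np


def _design(X):
    return np.column_stack([np.ones(len(X)), X])   # add intercept column


def fit_logistic(X, y, n_iter=25):
    """Logistic regression fitted by Newton-Raphson; returns coefficients."""
    Xd = _design(X)
    beta = np.zeros(Xd.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xd @ beta))
        gradient = Xd.T @ (y - p)
        hessian = Xd.T @ (Xd * (p * (1 - p))[:, None])
        beta = beta + np.linalg.solve(hessian, gradient)
    return beta


def propensity_scores(X, treated):
    """Estimated Pr(treated = 1 | X), eg, Pr(phenytoin | covariates)."""
    beta = fit_logistic(X, treated)
    return 1.0 / (1.0 + np.exp(-_design(X) @ beta))


def match_nearest(ps, treated, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching without replacement within a caliper."""
    available = set(np.flatnonzero(treated == 0).tolist())
    pairs = []
    for i in np.flatnonzero(treated == 1).tolist():
        if not available:
            break
        j = min(available, key=lambda c: abs(ps[c] - ps[i]))
        if abs(ps[j] - ps[i]) <= caliper:
            pairs.append((i, j))        # (phenytoin patient, levetiracetam patient)
            available.remove(j)
    return pairs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 2000
    age = rng.normal(75, 6, n)
    benzo = rng.binomial(1, 0.25, n)
    true_logit = -0.5 + 0.05 * (age - 75) + 0.8 * benzo
    phenytoin = rng.binomial(1, 1 / (1 + np.exp(-true_logit)))
    ps = propensity_scores(np.column_stack([age - age.mean(), benzo]), phenytoin)
    pairs = match_nearest(ps, phenytoin)
    t_idx, c_idx = (list(ix) for ix in zip(*pairs))
    print(len(pairs), benzo[t_idx].mean() - benzo[c_idx].mean())
```

Matching should shrink the difference in benzodiazepine prevalence between phenytoin and levetiracetam users; checking covariate balance (eg, with standardized mean differences) after matching is the usual next step.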

Finally, unmeasured confounders in observational studies may bias effect estimates, which presents a major threat to causal inference approaches. As outlined in the “Assignment procedures” section, differences in access to care (eg, patient cost-sharing) could affect the likelihood that patients receive proper treatment.53 Several approaches can evaluate this threat: 1) comparing 2011–2012 data with earlier periods to assess the potential magnitude and impact of this bias; 2) using additional data, such as national surveys, to estimate the local-area use of specific insurance plans; 3) focusing on the subgroup of beneficiaries whose insurance plans guarantee negligible cost-sharing; and 4) increasing the number of measured traits, eg, by using a high-dimensional propensity score.

In practice, as the number of measured traits increases, the probability that relevant unmeasured factors influence key variables decreases.59 Basic information on unmeasured variables is captured by a collection of measured traits, including patient and physician traits, that are associated with the unmeasured factor. For example, sicker patients tend to have more contact with physicians and appear more likely to receive antiseizure drugs.60 Validation of the observational data against a subset of longitudinal medical records is warranted. Two other considerations should be borne in mind: defining time zero and specifying a grace period.

Defining time zero

Definition of baseline, or time zero of follow-up, is a key aspect of successful target-trial emulation. It is the point at which all eligibility criteria must be met and study outcomes begin to be counted. In a target trial, time zero is the point at which the treatment strategy is assigned. If follow-up started after randomization, selection bias could result, because any outcome occurring between randomization and the start of follow-up would be omitted from the analysis.

With observational data, the ideal time zero is when a patient who meets the eligibility criteria begins a treatment strategy. This can be problematic, because one individual may meet the eligibility criteria at many different times. For instance, in a study comparing phenytoin vs levetiracetam for seizure prophylaxis during and early after craniotomy for brain tumors, follow-up starts in the immediate postoperative period.58,59 Follow-up thus begins when the eligibility criteria are met, but this time varies from individual to individual.
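When a patient can meet the eligibility criteria on several days, the emulation must state which of those days serves as time zero. One common convention, assumed here, is to use the first day on which all criteria are met. The sketch below uses a daily eligibility table with hypothetical criterion names loosely based on the craniotomy example. It returns every eligible day, which makes it easy to see how often a patient re-qualifies, together with the first one.

```python
from typing import Dict, List, Optional

Criteria = Dict[str, bool]


def eligible_days(criteria_by_day: Dict[int, Criteria]) -> List[int]:
    """Sorted days on which every eligibility criterion is satisfied."""
    return sorted(day for day, criteria in criteria_by_day.items()
                  if criteria and all(criteria.values()))


def first_eligible_day(criteria_by_day: Dict[int, Criteria]) -> Optional[int]:
    """Time zero under the 'first eligible time' convention, or None if never eligible."""
    days = eligible_days(criteria_by_day)
    return days[0] if days else None


# Hypothetical patient; days counted from the day of surgery
patient = {
    0: {"postoperative": False, "no_prior_aed": True},
    1: {"postoperative": True, "no_prior_aed": True},
    2: {"postoperative": True, "no_prior_aed": True},
    5: {"postoperative": True, "no_prior_aed": False},
}
print(eligible_days(patient), first_eligible_day(patient))
```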

Specifying a grace period

A pragmatic trial is often designed to accommodate the constraints that decision makers face in practice. For example, once a patient and clinician agree to initiate AED therapy, it may take anywhere from a few minutes (eg, in an emergency room) to several weeks (eg, in an outpatient setting with insurance-driven preauthorization paperwork) for the patient to receive the therapy. Titration schedules also differ: some AEDs (eg, lamotrigine) require titration, during which the patient remains on a subtherapeutic dose until reaching the target dose over 6–8 weeks, whereas others can be safely administered at their target dose within hours to days. One way to accommodate this variation is for the trial protocol to give a patient assigned to an initial AED strategy a 2-month grace period in which to achieve compliance with the protocol, provided that therapy is begun within 1 month of randomization.
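The protocol rule described above can be written as a simple check. The sketch below assumes that each patient's record gives the day therapy was started and the day the target dose was reached, counted from randomization. Both fields are hypothetical. The sketch also interprets "compliance with the protocol" as reaching the target dose, which is an assumption. It encodes the example's two deadlines as 30 days for initiation and 60 days for the grace period.

```python
from typing import Optional

INITIATION_DEADLINE_DAYS = 30  # "begun within a month of randomization" (assumed 30 days)
GRACE_PERIOD_DAYS = 60         # "2-month grace period" (assumed 60 days)


def follows_protocol(start_day: Optional[int],
                     target_dose_day: Optional[int],
                     days_observed: int) -> Optional[bool]:
    """Classify adherence to an initial-AED strategy (hypothetical rule).

    Days are counted from randomization (time zero). Returns True or False
    once adherence can be determined, or None while the patient is still
    inside a window whose deadline has not yet passed.
    """
    if start_day is None:
        # Not started: a deviation only once the initiation deadline has passed.
        return False if days_observed > INITIATION_DEADLINE_DAYS else None
    if start_day > INITIATION_DEADLINE_DAYS:
        return False  # therapy begun too late
    if target_dose_day is not None and target_dose_day <= GRACE_PERIOD_DAYS:
        return True   # target dose reached within the grace period
    # Target dose not (yet) reached: a deviation only after the grace period ends.
    return False if days_observed > GRACE_PERIOD_DAYS else None


print(follows_protocol(10, 55, 90))    # slow titration, still within the grace period
print(follows_protocol(0, 0, 5))       # started at target dose immediately
print(follows_protocol(None, None, 20))
```

Returning None for patients still inside a window keeps undecided cases separate from deviations. This matters because, during the grace period, a patient's data have not yet contradicted the strategy.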

If no grace period were allowed after randomization in a target trial of AED therapy, the trial would be of limited value, because its strategies would not reflect clinical practice. When a target trial is emulated with observational data, the grace period should be defined in the same way, beginning at time zero. Such a grace period makes the strategies more realistic and increases the number of eligible participants drawn from the observational database.

A consequence of a grace period is that, for its duration, an individual’s observational data are consistent with more than one strategy. In our example, introducing a 2-month grace period means that the strategies are redefined as initiation of phenytoin or of levetiracetam within 2 months of eligibility. Therefore, a patient who starts either drug in month 2 after baseline has data consistent with both strategies from baseline until that drug is started.
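The overlap described above can be made explicit. The sketch below assumes that months are numbered from 1 after baseline and that a patient either starts one drug in a given month or never starts. For each month, it lists which of the two example strategies a patient's data remain consistent with. The strategies are "initiate phenytoin within 2 months" and "initiate levetiracetam within 2 months". The drug names come from the example, but the functions and their inputs are hypothetical.

```python
from typing import Optional, Set

GRACE_MONTHS = 2
STRATEGIES = ("phenytoin", "levetiracetam")


def consistent(strategy: str, drug: Optional[str], start_month: Optional[int],
               through_month: int, grace: int = GRACE_MONTHS) -> bool:
    """Are data observed through `through_month` consistent with
    'initiate `strategy` within `grace` months'?"""
    started = drug is not None and start_month is not None and start_month <= through_month
    if started:
        # Consistent only if this strategy's own drug was started within the grace period.
        return drug == strategy and start_month <= grace
    # Nothing started yet: consistent while the grace period has not expired.
    return through_month <= grace


def consistent_strategies(drug: Optional[str], start_month: Optional[int],
                          through_month: int) -> Set[str]:
    return {s for s in STRATEGIES
            if consistent(s, drug, start_month, through_month)}


# A patient who starts phenytoin in month 2
for month in (1, 2, 3):
    print(month, sorted(consistent_strategies("phenytoin", 2, month)))
```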

If a patient dies during the grace period before starting either drug, how does the investigator decide which strategy to assign? There are two possibilities: 1) randomly assign the patient to one of the strategies, or 2) create two copies of the patient (ie, clones), with each clone assigned to a different strategy.34–37 When using clones, however, each clone must be censored as soon as its data stop being consistent with the strategy to which it was assigned. For instance, in a comparison of “initiate therapy within 2 months” vs “never initiate therapy,” if a patient began therapy in month 2, the clone assigned to “never initiate therapy” would be censored at that time. Because this censoring may depend on time-varying factors, those factors must be adjusted for (eg, with inverse-probability weighting) to avoid bias introduced by the censoring.58,59
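The cloning-and-censoring step can be sketched for a comparison of "initiate therapy within a 2-month grace period" vs "never initiate therapy". The sketch assumes monthly data with a start month, an outcome month, and a last month of follow-up per patient. These fields, the class names, and the convention that a clone contributes follow-up only through the last month its data are consistent with its strategy are illustrative assumptions. The inverse-probability-of-censoring weight is shown only as a formula applied to per-month probabilities of remaining uncensored. In practice, those probabilities would come from a censoring model fitted on time-varying covariates, which is not shown here.

```python
from dataclasses import dataclass
from math import prod
from typing import List, Optional

GRACE_MONTHS = 2


@dataclass
class Patient:
    pid: str
    start_month: Optional[int]   # month therapy was started (None = never)
    event_month: Optional[int]   # month of the outcome, eg death (None = no event)
    end_month: int               # last month of available follow-up


@dataclass
class Clone:
    pid: str
    strategy: str
    months_followed: int  # follow-up credited to this clone
    event: bool
    censored: bool        # True if the clone's data deviated from its strategy


def make_clones(p: Patient, grace: int = GRACE_MONTHS) -> List[Clone]:
    """Create one clone per strategy and censor it when its data deviate."""
    end = p.end_month
    # "Never initiate": data deviate in the month therapy is started.
    never_last = end if p.start_month is None else min(end, p.start_month - 1)
    # "Initiate within grace": data deviate once the grace period ends untreated.
    on_time = p.start_month is not None and p.start_month <= grace
    init_last = end if on_time else min(end, grace)
    clones = []
    for strategy, last in (("never_initiate", never_last),
                           ("initiate_within_grace", init_last)):
        event = p.event_month is not None and p.event_month <= last
        censored = not event and last < end
        followed = p.event_month if event else last
        clones.append(Clone(p.pid, strategy, followed, event, censored))
    return clones


def ipc_weight(p_uncensored_by_month: List[float]) -> float:
    """Cumulative inverse-probability-of-censoring weight for one clone."""
    return 1.0 / prod(p_uncensored_by_month)


for clone in make_clones(Patient("x", start_month=2, event_month=None, end_month=12)):
    print(clone)
```

A patient who dies in month 1 without starting therapy yields two clones that both carry the event, because the data are consistent with both strategies at that point.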

Cloning and censoring are not perfect techniques. For example, the intention-to-treat effect cannot be properly emulated, because each individual is assigned to several or all strategies at baseline. A contrast based on baseline assignment, such as an intention-to-treat analysis, would therefore compare groups with identical outcomes. When a grace period is incorporated at baseline, the analysis can instead target the per-protocol effect of the target trial.
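The point about intention-to-treat contrasts can be checked directly with a toy cohort. Suppose every patient is cloned into both arms and the clones are analyzed by baseline assignment without censoring. Both arms then contain exactly the same patients and outcomes, so the risk difference is zero by construction, whatever the data. The function and the event months below are hypothetical.

```python
from typing import Dict, List, Optional


def itt_risk_difference_among_clones(event_months: List[Optional[int]],
                                     horizon: int) -> float:
    """Risk difference by baseline assignment when every patient is cloned
    into both arms and clones are never censored (an ITT-style contrast)."""
    arms: Dict[str, List[bool]] = {"arm_A": [], "arm_B": []}
    for event_month in event_months:
        had_event = event_month is not None and event_month <= horizon
        for arm in arms:  # each patient contributes one identical clone per arm
            arms[arm].append(had_event)
    risk = {arm: sum(events) / len(events) for arm, events in arms.items()}
    return risk["arm_A"] - risk["arm_B"]


print(itt_risk_difference_among_clones([1, None, 5, 3, None], horizon=4))
```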

Recommendations

Neurologists and clinical neuroscience researchers should learn to use causal inference tools with both experimental and observational data. These approaches are particularly critical with respect to the care of elderly patients with epilepsy.

Acknowledgments

The authors acknowledge Miguel Hernán, MD, PhD, MPH, MSc of the Harvard TH Chan School of Public Health, Departments of Epidemiology and Biostatistics for statistical consultation. LMVRM is the recipient of a 2015–2016 Clinical Research Fellowship sponsored by the American Brain Foundation. MBW receives grant funding from NIH (NIH-NINDS 1K23NS090900). JH receives grant funding from NIH (1R01 CA164023-04, 2P01AG032952-06A1, R01 HD075121-04, R01 MH104560-02).

Footnotes

Author contributions

All authors contributed to data analysis and to drafting and revising the paper, and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

- 1. Stovner LJ, Hoff JM, Svalheim S, Gilhus NE. Neurological disorders in the Global Burden of Disease 2010 study. Acta Neurol Scand Suppl. 2014;129(198):1–6. doi: 10.1111/ane.12229.

- 2. Reid AY, St Germaine-Smith C, Liu M, et al. Development and validation of a case definition for epilepsy for use with administrative health data. Epilepsy Res. 2012;102(3):173–179. doi: 10.1016/j.eplepsyres.2012.05.009.

- 3. St Germaine-Smith C, Metcalfe A, Pringsheim T, et al. Recommendations for optimal ICD codes to study neurologic conditions: a systematic review. Neurology. 2012;79(10):1049–1055. doi: 10.1212/WNL.0b013e3182684707.

- 4. Brodie MJ, Elder AT, Kwan P. Epilepsy in later life. Lancet Neurol. 2009;8(11):1019–1030. doi: 10.1016/S1474-4422(09)70240-6.

- 5. Werhahn KJ, Trinka E, Dobesberger J, et al. A randomized, double-blind comparison of antiepileptic drug treatment in the elderly with new-onset focal epilepsy. Epilepsia. 2015;56(3):450–459. doi: 10.1111/epi.12926.

- 6. Baker GA, Jacoby A, Buck D, Brooks J, Potts P, Chadwick DW. The quality of life of older people with epilepsy: findings from a UK community study. Seizure. 2001;10(2):92–99. doi: 10.1053/seiz.2000.0465.

- 7. Faught E, Richman J, Martin R, et al. Incidence and prevalence of epilepsy among older US Medicare beneficiaries. Neurology. 2012;78(7):448–453. doi: 10.1212/WNL.0b013e3182477edc.

- 8. Centers for Disease Control and Prevention. At a Glance 2015 – Targeting Epilepsy: One of the Nation’s Most Common Neurological Conditions. Atlanta: CDC; 2015. [Accessed October 12, 2016]. Available from: http://www.cdc.gov/chronicdisease/resources/publications/aag/pdf/2015/epilepsy-2015-aag-rev.pdf.

- 9. Pandis D, Scarmeas N. Seizures in Alzheimer disease: clinical and epidemiological data. Epilepsy Curr. 2012;12(5):184–187. doi: 10.5698/1535-7511-12.5.184.

- 10. Stegmayr B, Asplund K, Wester OP. Trends in incidence, case-fatality rate, and severity of stroke in northern Sweden, 1985–1991. Stroke. 1994;25(9):1738–1745. doi: 10.1161/01.str.25.9.1738.

- 11. Maschio M. Brain tumor-related epilepsy. Curr Neuropharmacol. 2012;10(2):124–133. doi: 10.2174/157015912800604470.

- 12. Annegers JF, Hauser WA, Coan SP, Rocca WA. A population-based study of seizures after traumatic brain injuries. N Engl J Med. 1998;338(1):20–24. doi: 10.1056/NEJM199801013380104.

- 13. Talati R, Scholle JM, Phung OJ, et al. Effectiveness and Safety of Antiepileptic Medications in Patients with Epilepsy. Rockville, MD: Agency for Healthcare Research and Quality; 2011. Available from: https://www.effectivehealthcare.ahrq.gov/ehc/products/159/868/Epilepsy_FinalReport_20120802.pdf.

- 14. Margolis JM, Chu BC, Wang ZJ, Copher R, Cavazos JE. Effectiveness of antiepileptic drug combination therapy for partial-onset seizures based on mechanisms of action. JAMA Neurol. 2014;71(8):985–993. doi: 10.1001/jamaneurol.2014.808.

- 15. Arif H, Buchsbaum R, Pierro J, et al. Comparative effectiveness of 10 antiepileptic drugs in older adults with epilepsy. Arch Neurol. 2010;67(4):408–415. doi: 10.1001/archneurol.2010.49.

- 16. Gupta PP, Thacker AK, Haider J, Dhawan S, Pandey N, Pandey AK. Assessment of topiramate’s efficacy and safety in epilepsy. J Neurosci Rural Pract. 2014;5(2):144–148. doi: 10.4103/0976-3147.131657.

- 17. Glauser T, Ben-Menachem E, Bourgeois B, et al. ILAE treatment guidelines: evidence-based analysis of antiepileptic drug efficacy and effectiveness as initial monotherapy for epileptic seizures and syndromes. Epilepsia. 2006;47(7):1094–1120. doi: 10.1111/j.1528-1167.2006.00585.x.

- 18. Sheorajpanday RV, De Deyn PP. Epileptic fits and epilepsy in the elderly: general reflections, specific issues and therapeutic implications. Clin Neurol Neurosurg. 2007;109(9):727–743. doi: 10.1016/j.clineuro.2007.07.002.

- 19. Sunmonu TA, Komolafe MA, Ogunrin AO, Ogunniyi A. Cognitive assessment in patients with epilepsy using the Community Screening Interview for Dementia. Epilepsy Behav. 2009;14(3):535–539. doi: 10.1016/j.yebeh.2008.12.026.

- 20. Leppik IE. Epilepsy in the elderly: scope of the problem. Int Rev Neurobiol. 2007;81(6):1–14. doi: 10.1016/S0074-7742(06)81001-9.

- 21. Huber DP, Griener R, Trinka E. Antiepileptic drug use in Austrian nursing home residents. Seizure. 2013;22(1):24–27. doi: 10.1016/j.seizure.2012.09.012.

- 22. Fountain NB, Van Ness PC, Bennett A, et al. Quality improvement in neurology: Epilepsy Update Quality Measurement Set. Neurology. 2015;84(14):1483–1487. doi: 10.1212/WNL.0000000000001448.

- 23. Kuehn BM. New adult first seizure guideline emphasizes an individualized approach. JAMA. 2015;314(2):111–113. doi: 10.1001/jama.2015.5341.

- 24. Krumholz A, Shinnar S, French J, Gronseth GS, Wiebe S. Evidence-based guideline: management of an unprovoked first seizure in adults. Neurology. 2015;85(17):1526–1527. doi: 10.1212/01.wnl.0000473351.32413.7c.

- 25. Bourgeois FT, Olson KL, Poduri A, Mandl KD. Comparison of drug utilization patterns in observational data: antiepileptic drugs in pediatric patients. Pediatr Drugs. 2015;17(5):401–410. doi: 10.1007/s40272-015-0139-z.

- 26. Hope OA, Zeber JE, Kressin NR, et al. New-onset geriatric epilepsy care: race, setting of diagnosis, and choice of antiepileptic drug. Epilepsia. 2009;50(5):1085–1093. doi: 10.1111/j.1528-1167.2008.01892.x.

- 27. Pugh MJ, Vancott AC, Steinman MA, et al. Choice of initial antiepileptic drug for older veterans: possible pharmacokinetic drug interactions with existing medications. J Am Geriatr Soc. 2010;58(3):465–471. doi: 10.1111/j.1532-5415.2010.02732.x.

- 28. Yamada M, Welty TE. Comment: generic substitution of antiepileptic drugs – a systematic review of prospective and retrospective studies [reply]. Ann Pharmacother. 2012;46(2):304. doi: 10.1345/aph.1Q349a.

- 29. Erickson SC, Le L, Ramsey SD, et al. Clinical and pharmacy utilization outcomes with brand to generic antiepileptic switches in patients with epilepsy. Epilepsia. 2011;52(7):1365–1371. doi: 10.1111/j.1528-1167.2011.03130.x.

- 30. Hartung DM, Middleton L, Svoboda L, McGregor JC. Generic substitution of lamotrigine among Medicaid patients with diverse indications: a cohort-crossover study. CNS Drugs. 2012;26(8):707–716. doi: 10.2165/11634260-000000000-00000.

- 31. Brodie MJ, Stephen LJ. Outcomes in elderly patients with newly diagnosed and treated epilepsy. Int Rev Neurobiol. 2007;81:253–263. doi: 10.1016/S0074-7742(06)81016-0.

- 32. Tatum WO. Antiepileptic drugs: adverse effects and drug interactions. Continuum (Minneap Minn). 2010;16(3 Epilepsy):136–158. doi: 10.1212/01.CON.0000368236.41986.24.

- 33. Birnbaum AK, Hardie NA, Conway JM, et al. Phenytoin use in elderly nursing home residents. Am J Geriatr Pharmacother. 2003;1(2):90–95. doi: 10.1016/s1543-5946(03)90005-5.

- 34. Griffith HR, Martin RC, Bambara JK, Marson DC, Faught E. Older adults with epilepsy demonstrate cognitive impairments compared with patients with amnestic mild cognitive impairment. Epilepsy Behav. 2006;8(1):161–168. doi: 10.1016/j.yebeh.2005.09.004.

- 35. Garrard J, Cloyd J, Gross C, et al. Factors associated with antiepileptic drug use among elderly nursing home residents. J Gerontol A Biol Sci Med Sci. 2000;55(7):M384–M392. doi: 10.1093/gerona/55.7.m384.

- 36. Gidal BE, French JA, Grossman P, Le Teuff G. Assessment of potential drug interactions in patients with epilepsy: impact of age and sex. Neurology. 2009;72(5):419–425. doi: 10.1212/01.wnl.0000341789.77291.8d.

- 37. Roberson ED, Hope OA, Martin RC, Schmidt D. Geriatric epilepsy: research and clinical directions for the future. Epilepsy Behav. 2011;22(1):103–111. doi: 10.1016/j.yebeh.2011.04.005.

- 38. Beers E, Moerkerken DC, Leufkens HG, Egberts TC, Jansen PA. Participation of older people in preauthorization trials of recently approved medicines. J Am Geriatr Soc. 2014;62(10):1883–1890. doi: 10.1111/jgs.13067.

- 39. Räty LK A, Söderfeldt BA, Larsson G, Larsson BM. The relationship between illness severity, sociodemographic factors, general self-concept, and illness-specific attitude in Swedish adolescents with epilepsy. Seizure. 2004;13(6):375–382. doi: 10.1016/j.seizure.2003.09.011.

- 40. Hesdorffer DC, Logroscino G, Benn EK, Katri N, Cascino G, Hauser WA. Estimating risk for developing epilepsy: a population-based study in Rochester, Minnesota. Neurology. 2011;76(1):23–27. doi: 10.1212/WNL.0b013e318204a36a.

- 41. Murray EJ, Hernán MA. Adherence adjustment in the Coronary Drug Project: a call for better per-protocol effect estimates in randomized trials. Clin Trials. 2016;13(4):372–378. doi: 10.1177/1740774516634335.

- 42. Hernán MA, Robins JM. Using big data to emulate a target trial when a randomized trial is not available. Am J Epidemiol. 2016;183(8):758–764. doi: 10.1093/aje/kwv254.

- 43. Carlson MD, Morrison RS. Study design, precision, and validity in observational studies. J Palliat Med. 2009;12(1):77–82. doi: 10.1089/jpm.2008.9690.

- 44. Swanson SA, Holme Ø, Løberg M, et al. Bounding the per-protocol effect in randomized trials: an application to colorectal cancer screening. Trials. 2015;16:541. doi: 10.1186/s13063-015-1056-8.

- 45. Hernán MA, Lanoy E, Costagliola D, Robins JM. Comparison of dynamic treatment regimes via inverse probability weighting. Basic Clin Pharmacol Toxicol. 2006;98(3):237–242. doi: 10.1111/j.1742-7843.2006.pto_329.x.

- 46. Cain LE, Saag MS, Petersen M, et al. Using observational data to emulate a randomized trial of dynamic treatment-switching strategies: an application to antiretroviral therapy. Int J Epidemiol. Epub 2015 Dec 31. doi: 10.1093/ije/dyv295.

- 47. Hernán MA, Hernández-Díaz S, Robins JM. A structural approach to selection bias. Epidemiology. 2004;15(5):615–625. doi: 10.1097/01.ede.0000135174.63482.43.

- 48. Thorpe KE, Zwarenstein M, Oxman AD, et al. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. J Clin Epidemiol. 2009;62(5):464–475. doi: 10.1016/j.jclinepi.2008.12.011.

- 49. Schwartz D, Lellouch J. Explanatory and pragmatic attitudes in therapeutical trials. J Clin Epidemiol. 2009;62(5):499–505. doi: 10.1016/j.jclinepi.2009.01.012.

- 50. Hernán MA, Alonso A, Logan R, et al. Observational studies analyzed like randomized experiments. Epidemiology. 2008;19(6):766–779. doi: 10.1097/EDE.0b013e3181875e61.

- 51. Gruber S, Logan RW, Jarrín I, Monge S, Hernán MA. Ensemble learning of inverse probability weights for marginal structural modeling in large observational datasets. Stat Med. 2015;34(1):106–117. doi: 10.1002/sim.6322.

- 52. Schneeweiss S, Patrick AR, Stürmer T, et al. Increasing levels of restriction in pharmacoepidemiologic database studies of elderly and comparison with randomized trial results. Med Care. 2007;45(10 Suppl 2):S131–S142. doi: 10.1097/MLR.0b013e318070c08e.

- 53. Franchi C, Giussani G, Messina P, et al. Validation of healthcare administrative data for the diagnosis of epilepsy. J Epidemiol Community Health. 2013;67(12):1019–1024. doi: 10.1136/jech-2013-202528.

- 54. Fei Wang NL. A Framework for Mining Signatures from Event Sequences and Its Applications in Healthcare Data. IEEE Trans Pattern Anal Mach Intell. 2013;35(2):272. doi: 10.1109/TPAMI.2012.111. Available at: http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=6200289. [Accessed October 28, 2016].

- 55. Tan M, Wilson I, Braganza V, et al. Development and validation of an epidemiologic case definition of epilepsy for use with routinely collected Australian health data. Epilepsy Behav. 2015;51:65–72. doi: 10.1016/j.yebeh.2015.06.031.

- 56. Holden EW, Nguyen HT, Grossman E, et al. Estimating prevalence, incidence, and disease-related mortality for patients with epilepsy in managed care organizations. Epilepsia. 2005;46(2):311–319. doi: 10.1111/j.0013-9580.2005.30604.x.

- 57. Hernán MA, Lanoy E, Costagliola D, Robins JM. Comparison of dynamic treatment regimes via inverse probability weighting. Basic Clin Pharmacol Toxicol. 2006;98(3):237–242. doi: 10.1111/j.1742-7843.2006.pto_329.x.

- 58. Hernán MA, Hernández-Díaz S, Robins JM. A structural approach to selection bias. Epidemiology. 2004;15(5):615–625. doi: 10.1097/01.ede.0000135174.63482.43.

- 59. Bodrogi J, Kaló Z. Principles of pharmacoeconomics and their impact on strategic imperatives of pharmaceutical research and development. Br J Pharmacol. 2010;159(7):1367–1373. doi: 10.1111/j.1476-5381.2009.00550.x.

- 60. Kurth T, Lewis BE, Walker AM. Health care resource utilization in patients with active epilepsy. Epilepsia. 2010;51(5):874–882. doi: 10.1111/j.1528-1167.2009.02404.x.

- 61. Swarztrauber K, Vickrey BG, Mittman BS. Physicians’ preferences for specialty involvement in the care of patients with neurological conditions. Med Care. 2002;40(12):1196–1209. doi: 10.1097/00005650-200212000-00007.