Abstract

Background and aim

Parkinson’s disease (PD) patients show impaired facial expressivity (hypomimia) and difficulties in interpreting the emotional facial expressions produced by others, especially for aversive emotions. We aimed to evaluate the ability to produce facial emotional expressions and to recognize facial emotional expressions produced by others in a group of PD patients and a group of healthy participants, in order to explore the relationship between these two abilities and any differences between the two groups.

Methods

Twenty non-demented, non-depressed PD patients and twenty healthy participants (HC) matched for demographic characteristics were studied. The ability to recognize emotional facial expressions was assessed with the Ekman 60-faces test (Emotion recognition task). Participants were video-recorded while posing facial expressions of 6 primary emotions (happiness, sadness, surprise, disgust, fear and anger). The most expressive pictures for each emotion were derived from the videos. Ten healthy raters were asked to look at the pictures, displayed on a computer screen in pseudo-random order, and to identify the emotional label in a six-alternative forced-choice response format (Emotion expressivity task). Reaction time (RT) and accuracy of responses were recorded. At the end of each trial, the rater was asked to rate his/her confidence in the accuracy of his/her response.

Results

For emotion recognition, PD patients scored lower than HC on the Ekman total score (p<0.001) and on the single-emotion sub-scores for happiness, fear, anger, sadness (p<0.01) and surprise (p = 0.02). In the facial emotion expressivity task, PD and HC differed significantly in the total score (p = 0.05) and in the sub-scores for happiness, sadness and anger (all p<0.001). RT and confidence level also differed significantly between PD and HC for the same emotions. There was a significant positive correlation between facial emotion recognition and expressivity in both groups; the correlation was even stronger when emotions were ranked from the best recognized to the worst (R = 0.75, p = 0.004).

Conclusions

Compared with healthy subjects, PD patients showed difficulties both in recognizing emotional facial expressions produced by others and in posing facial emotional expressions. The linear correlation between recognition and expression in both experimental groups suggests that the two mechanisms share a common system, which may be impaired in patients with PD. These results open new clinical and rehabilitation perspectives.

Introduction

Facial expression of emotion is a key tool for communicating with others. Likewise, our ability to interpret the emotional facial expressions of others is key to understanding what others are communicating to us. Given the complexity of producing or interpreting emotional facial expressions, it is not surprising that distributed brain networks, including cortical and sub-cortical regions, have been proposed to be involved.

One important and unresolved question is: to what extent does the faculty for emotional facial expression overlap with the faculty for interpreting the emotional facial expressions of others? The concept of “mirroring” of observed action by activation of the observer's sensorimotor network is widely discussed in the motor control literature, but is it relevant to the expression and understanding of facial emotion? In an fMRI study of healthy participants, largely overlapping patterns of activation were noted for both observation and imitation of facial emotional expressions, including the premotor face area, the pars opercularis of the inferior frontal gyrus, the superior temporal sulcus, the insula and the amygdala [1]. These activations fit with previous work investigating the neural correlates of emotional expression and of the interpretation of others' emotional facial expressions.

However, there are several outstanding issues with the current evidence for a link between the mechanisms of emotion expression production and recognition. First, the demonstration of overlapping activation patterns in two different tasks does not necessarily imply co-dependence of the behavioural abilities linked to these activations. Second, there is evidence that different emotions (and their recognition) may involve different brain regions. A recent meta-analysis of neuroimaging studies in 1600 individuals suggested that basic emotions are implemented by neural systems that are at least partially separable, although they may not be represented by entirely distinct neural circuits [2]. Happy and fearful faces activate the amygdala bilaterally and sad faces the right amygdala only, whereas disgust seems to preferentially activate the anterior insula [3]; fear seems to preferentially activate the amygdala [4].

Patients with Parkinson’s disease (PD) provide an interesting model to address these issues directly. Indeed, patients with PD are known to have impaired spontaneous and posed facial expressivity [5–12]. With regard to recognition of emotional facial expressions, a recent meta-analysis of behavioural studies only found that, compared with healthy individuals, individuals with PD were more impaired in recognizing negative emotions (anger, disgust, fear, and sadness) than relatively positive emotions (happiness, surprise) [13].

The aim of the present study was to evaluate the ability to produce facial emotional expressions and to recognize facial emotional expressions produced by others in a group of non-demented, non-depressed PD patients and a group of matched healthy participants. We were specifically interested in exploring the relationship between the participants’ ability to express and their ability to recognize facial emotional expressions and any differences between the two groups of participants.

Methods

Subjects

Twenty PD patients were included in the study. Inclusion criteria were: a diagnosis of PD according to UK Brain Bank criteria [14]; treatment and clinical condition stable for at least 4 weeks prior to the study; absence of significant cognitive deficits (score < 24 on the Mini Mental State Examination, MMSE [15]); absence of depression (diagnosed according to both the DSM-IV-TR criteria and a Beck Depression Inventory (BDI) [16] score ≥ 17) or other psychiatric or neurological illnesses. Twenty healthy controls (HC) matched for age and gender were enrolled as the control group; they also underwent cognitive and psychiatric testing to rule out cognitive impairment and depression. Demographic data were collected for all participants; for patients, clinical information such as disease duration and dopaminergic therapy, expressed as Levodopa Equivalent Daily Dose (LEDD) [17], was also recorded. Patients underwent a clinical assessment of motor impairment by means of the Unified Parkinson’s Disease Rating Scale (UPDRS) part III [18]; both the total score and the sub-score of item 19, which evaluates facial expression, were considered. Demographic and clinical data of PD and HC are presented in Table 1. All participants agreed to participate in the study and gave written informed consent (as outlined in the PLOS consent form) to publish these case details. The UCL institutional review board approved the study protocol.

Table 1. Demographic, clinical and emotion recognition (Ekman 60 Faces Test) data of the study populations.

| | PD (n = 20) | HC (n = 20) | p-value |
|---|---|---|---|
| Gender | 12 F | 11 F | 0.7 |
| Age (years) | 69.3±6.6 | 65.9±6.4 | 0.1 |
| Education level* (years) | 15.1±5.1 | 20.5±4.9 | 0.7 |
| MMSE | 28.3±1.4 | 29.7±0.8 | 0.4 |
| BDI | 9.7±2.7 | 8.9±4.9 | 0.5 |
| UPDRS-III | 21.8±8.7 | – | – |
| UPDRS-III item 19 | 1.8±0.7 | – | – |
| Disease duration (years) | 7.3±4.1 | – | – |
| LEDD (mg) | 612.5±370.5 | – | – |
| Ekman-total score | 44.5±7.3 | 52±4.1 | <0.001 |
| Ekman-happiness | 9.6±0.7 | 10 | 0.02 |
| Ekman-sadness | 7±2.7 | 8.7±1.3 | 0.03 |
| Ekman-anger | 7.4±1.6 | 8.9±1.14 | 0.003 |
| Ekman-disgust | 8.2±1.9 | 9±0.9 | 0.12 |
| Ekman-fear | 4±2.7 | 6.5±2.8 | 0.01 |
| Ekman-surprise | 8.2±2.3 | 9.5±0.4 | 0.01 |

Values are expressed as mean±standard deviation. Abbreviations: BDI: Beck Depression Inventory; F: female; HC: healthy controls; LEDD: levodopa equivalent daily dose; MMSE: Mini Mental State Examination; PD: Parkinson’s disease; UPDRS: Unified Parkinson’s Disease Rating Scale.

* total number of years spent at school (from primary school to university).

Procedure

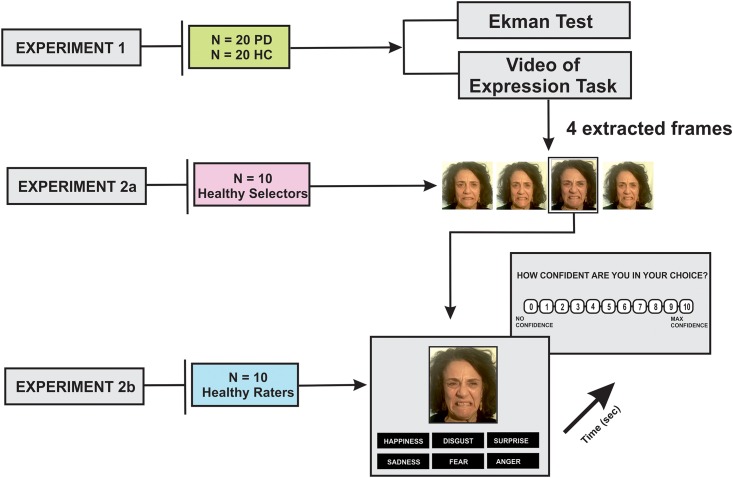

The experimental procedure included three main experiments (experiment #1, experiment #2a, experiment #2b) which are summarized in Fig 1.

Fig 1. Experimental design displaying the main 3 experiments.

In experiment 1, Parkinson’s disease (PD) and healthy control (HC) subjects performed an emotion recognition task and a facial expressivity task (which was videotaped). In experiment 2a, ten healthy subjects selected the most expressive frame extracted from the videos for each emotion and each subject. In experiment 2b, ten healthy raters (different from those employed in experiment 2a) judged PD and HC expressivity. At the end of each trial, the rater was asked to rate his/her level of confidence in the choice by clicking the mouse on a visual analogue scale on the screen, where 10 indicated “max confidence” and 0 “no confidence at all”. Reaction time (RT; in s) and accuracy of responses were recorded.

Experiment #1: Facial recognition task and facial expression video protocol

The experimental procedure was conducted in a softly lit, sound-attenuated room. All patient evaluations were performed after the first dose of dopaminergic medication, once patients had reached their best motor state (“ON” medication).

All subjects (PD and HC) underwent a standardized video recording of facial expression, framing the face, hair, and shoulders, as described by Ekman and Friesen [19]. They were asked to pose facial expressions representing six distinct emotions (happiness, sadness, surprise, anger, disgust, and fear) [20].

In the facial recognition task, PD and HC were assessed by means of the Ekman 60 Faces test [21]. Pictures of 10 actors’ faces expressing the six basic emotions (happiness, sadness, surprise, anger, disgust, fear) were selected from a set of validated pictures, i.e. the Pictures of Facial Affect [21], and randomly presented on a computer screen, one at a time. Participants were asked to identify the emotional label in a six-alternative forced-choice response format (alternatives: happy, sad, surprise, angry, disgust, fear). Response time was unlimited, but participants were encouraged to respond as quickly as possible. The test yields a total score out of a maximum of 60 correct responses for all six emotions, and a score out of 10 for each basic emotion. To ensure that participants understood the task, the emotional labels were confirmed prior to testing by asking the subjects to describe an example scenario for each emotion (“Name a situation when you felt happiness, fear, etc.”).

Experiment #2a: Selection of the most expressive frames

To assess the expression of emotions, we needed still images of each participant (HC and PD) expressing the six basic emotions. We therefore extracted still images from the videos of each PD and HC subject for each of the six emotions tested. For each video segment, a window of 4 s was selected that showed the expression of the intended emotion. The total duration of the scene showing the best facial emotional expression was calculated, and the video segment was divided into four sections of equal length. One still image that best expressed the intended emotion was then selected from each section. This produced 4 images per participant per emotion, which were used in experiment #2a to select, in an unbiased way, the image that best expressed the intended emotion.
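The segmentation step can be illustrated with a short Python sketch. It assumes the selected scene is given by hypothetical start and end times in seconds and a hypothetical frame rate (25 fps is used only as a default). It computes the four equal-length sections and their frame-index ranges; picking the best frame inside each section was, according to the text, a judgement and is not modelled here.

```python
from typing import List, Tuple


def split_window(start_s: float, end_s: float, n_sections: int = 4) -> List[Tuple[float, float]]:
    """Split the scene [start_s, end_s) into n_sections contiguous, equal-length sections."""
    if end_s <= start_s:
        raise ValueError("end_s must be greater than start_s")
    step = (end_s - start_s) / n_sections
    return [(start_s + i * step, start_s + (i + 1) * step) for i in range(n_sections)]


def section_frame_ranges(start_s: float, end_s: float, fps: float = 25.0,
                         n_sections: int = 4) -> List[Tuple[int, int]]:
    """Half-open frame-index ranges [first, last) for each section (fps is an assumed value)."""
    return [(round(a * fps), round(b * fps))
            for a, b in split_window(start_s, end_s, n_sections)]


# Example: a hypothetical 4 s scene starting 10 s into the clip.
sections = split_window(10.0, 14.0)
frames = section_frame_ranges(10.0, 14.0)
```

One candidate frame would then be chosen from each of the four ranges, giving the 4 images per participant per emotion described above.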

To this end, we programmed a computerized task in which the 4 pictures selected for each participant and each emotion were presented on the computer screen (colour images, 6.3 × 4.5 cm). Ten healthy selectors (7 females/3 males, mean age 33 ± 3.0 years) were asked to select the most expressive of the four frames (Fig 1B) by clicking on the chosen frame with the mouse. For each emotion the procedure was repeated 4 times, with each frame appearing in a different position on the screen each time to avoid spatial bias. To determine which frame best expressed the emotion, a weighted average of the expressivity ranks was calculated across repetitions and across the ten selectors: frames ranked 1st were assigned a weight of 2, frames ranked 2nd a weight of 1, frames ranked 3rd a weight of 0.5 and frames ranked 4th a weight of 0. The frame with the highest weighted average was chosen as the most expressive of the four. When this analysis failed to yield a single choice (19/120 cases), we selected the frame most often ranked first across selectors.
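The weighted-rank rule above can be written as a small Python sketch. It assumes that each (selector, repetition) pair yields a full ordering of the four frames from most to least expressive, which the text implies but does not spell out, and that the "ranked first most often" tie-break is applied only among the tied leaders. Frame IDs and function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Optional, Sequence

# Weights for frames ranked 1st, 2nd, 3rd and 4th, as given in the text.
RANK_WEIGHTS = (2.0, 1.0, 0.5, 0.0)


def weighted_scores(rankings: Sequence[Sequence[str]]) -> Dict[str, float]:
    """Mean rank weight per frame over all (selector, repetition) rankings.

    Each ranking lists the four frame IDs from most to least expressive.
    """
    if not rankings:
        raise ValueError("need at least one ranking")
    totals: Dict[str, float] = {}
    for ranking in rankings:
        for position, frame in enumerate(ranking):
            totals[frame] = totals.get(frame, 0.0) + RANK_WEIGHTS[position]
    return {frame: total / len(rankings) for frame, total in totals.items()}


def select_frame(rankings: Sequence[Sequence[str]]) -> Optional[str]:
    """Frame with the highest weighted score; ties go to the frame ranked first most often.

    Returns None if the tie-break also fails (a case the text does not describe).
    """
    scores = weighted_scores(rankings)
    best = max(scores.values())
    leaders = [frame for frame, score in scores.items() if score == best]
    if len(leaders) == 1:
        return leaders[0]
    # Count how often each frame was ranked first (missing keys count as 0).
    first_counts = Counter(ranking[0] for ranking in rankings)
    top = max(first_counts[frame] for frame in leaders)
    winners = [frame for frame in leaders if first_counts[frame] == top]
    return winners[0] if len(winners) == 1 else None
```

Because every weight is a multiple of 0.5 and all averages share the same denominator, exact equality is a safe way to detect ties here.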

Experiment #2b: Facial expressiveness evaluation

Ten healthy raters (7 females/3 males, mean age 29 ± 2.0 years), different from those recruited for experiment #2a, were enrolled among clinical and research fellows at University College London. Candidates were excluded if they showed impaired facial emotion recognition on the Ekman 60 Faces test administered prior to the main experiment (see above for details of the test).

Each rater was seated in front of a high-resolution computer monitor at a viewing distance of approximately 60 cm. The frames previously selected in experiment #2a, showing 20 PD patients and 20 HC posing the 6 facial emotions, were presented on the screen in random order. Each picture was presented six times, for a total of 1440 trials (6 emotions × 6 replications × 40 posing subjects). Raters were asked to identify the emotional label in a six-alternative forced-choice response format (alternatives: happiness, sadness, surprise, anger, disgust, fear) by clicking the mouse on the chosen label displayed at the bottom of the picture. Correct responses were scored 1 and incorrect responses 0, so the score for each emotion ranged from 0 to 6 (facial expressivity score). Reaction time (RT; s) and accuracy of responses were also recorded. At the end of each trial, the rater was asked to rate his/her confidence in the accuracy of the response by clicking the mouse on a 10-cm visual analogue scale displayed on the screen. One end was marked “max confidence” (10) and the other “no confidence at all” (0) (Fig 1C).
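A minimal Python sketch of the per-trial scoring follows. It assumes that the 0 to 6 expressivity score is the count of correct labels over the six presentations of one picture to one rater, and that the visual analogue scale runs from 0 at the left end to 10 at the right end. The text does not state which end carries which label, and the pixel coordinates are hypothetical.

```python
from typing import Sequence

EMOTIONS = ("happiness", "sadness", "surprise", "anger", "disgust", "fear")


def expressivity_score(responses: Sequence[str], intended: str) -> int:
    """Score 1 per correct label and 0 per incorrect one, summed over a picture's presentations.

    With six presentations per picture the result lies between 0 and 6.
    """
    if intended not in EMOTIONS:
        raise ValueError(f"unknown emotion: {intended}")
    return sum(1 if response == intended else 0 for response in responses)


def vas_confidence(click_x: float, left_x: float, right_x: float) -> float:
    """Map a click on a horizontal visual analogue scale to 0 (left end) .. 10 (right end).

    Which end carries which label, and the pixel coordinates, are assumptions.
    Clicks outside the scale are clamped to its ends.
    """
    if right_x <= left_x:
        raise ValueError("right_x must be greater than left_x")
    fraction = (click_x - left_x) / (right_x - left_x)
    return 10.0 * min(max(fraction, 0.0), 1.0)
```

For example, a rater who labels an "anger" picture as anger on four of its six presentations gives that picture a score of 4.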

All experiments were run with MATLAB (version R2014b, MathWorks, MA, USA). To avoid fatigue and make the task more feasible, the trials were divided into 4 blocks of 360 trials each. Raters performed the task on two consecutive days, 2 blocks per day.
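The trial count and block split can be checked with a short Python sketch. It assumes that all 1440 trials (40 posers × 6 emotions × 6 replications) were shuffled once and then cut into four consecutive blocks of 360. The text says only that pictures were shown in random order, so the shuffling scheme, the seed and the function name are assumptions.

```python
import random
from itertools import product
from typing import List, Tuple

EMOTIONS = ("happiness", "sadness", "surprise", "anger", "disgust", "fear")
Trial = Tuple[int, str, int]  # (poser index, emotion, replication index)


def build_blocks(n_posers: int = 40, n_reps: int = 6, n_blocks: int = 4,
                 seed: int = 0) -> List[List[Trial]]:
    """Shuffle every (poser, emotion, replication) trial once and cut the list into equal blocks."""
    trials: List[Trial] = list(product(range(n_posers), EMOTIONS, range(n_reps)))
    if len(trials) % n_blocks:
        raise ValueError("trials cannot be split into equal blocks")
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    rng.shuffle(trials)
    size = len(trials) // n_blocks
    return [trials[i * size:(i + 1) * size] for i in range(n_blocks)]


blocks = build_blocks()
# Two blocks per day over two consecutive days, as in the text.
day_1, day_2 = blocks[:2], blocks[2:]
```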

Statistical analysis

A two-way analysis of variance (ANOVA) was applied to the Ekman total score with a 2x1 design (within-subjects factor: Ekman score; between-subjects factor: group, PD and HC). The Ekman sub-scores were entered into a 2x6 mixed-design ANOVA, with “group” (two levels: PD, HC) as between-subjects factor and “emotion” (6 levels: happiness, sadness, anger, fear, surprise and disgust) as within-subjects factor. An analogous design was used to evaluate differences between PD and HC in the facial expressivity total score and in the sub-scores for each emotion. We performed two separate 2x6 ANOVAs, with “group” (PD, HC) as between-subjects factor and “emotion” as within-subjects factor, for the variables reaction time (RT) and confidence level (CL); only the RT and CL of correct answers were analysed. The linear relation between RT and CL was analysed as a measure of metacognition [22]. Conditional on significant F-values in the ANOVA, post-hoc unpaired t-tests were performed to highlight differences between groups.

Correlational analysis was performed with the Pearson correlation test to examine possible correlations between facial emotion recognition (Ekman total score and single-emotion sub-scores) and facial emotion expressivity (facial expressivity total score and single-emotion expressivity sub-scores). The first correlation analysis aimed to probe the relationship between the ability to express emotions and the ability to recognise them. We therefore correlated each participant’s average score in the facial recognition task with the raters’ average score for that participant in the facial expressivity task, considering all emotions together. In a subsequent analysis, Pearson correlation was computed on the mean value across subjects (PD or HC) for each emotion in the facial expressivity task and for each emotion in the Ekman recognition test. Finally, to evaluate whether the relation between recognition and expression was emotion-dependent, we performed a correlation analysis ranking the emotions on the basis of recognition performance in both PD and HC. Namely, for each subject we ranked the emotions from the best to the worst recognized in the recognition task and applied the same order to the emotions in the expressivity task. We then computed the mean value in each group (PD and HC) for each ranked emotion, for both recognition and expression, and performed the correlation analysis on these means. A p-value ≤ 0.05 was considered significant.
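The final, rank-based analysis can be sketched in Python as follows. Ties in recognition scores (common on a 0 to 10 scale) are handled by an assumption: they are broken by a fixed emotion order, which the text does not specify. The sketch returns the per-rank means and a plain Pearson r; it does not compute p-values, for which a library routine such as `scipy.stats.pearsonr` would normally be used. Function names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Sequence, Tuple

EMOTIONS = ("happiness", "sadness", "surprise", "anger", "disgust", "fear")
Scores = Dict[str, float]  # emotion -> score


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def ranked_profile(subjects: Sequence[Tuple[Scores, Scores]]) -> Tuple[List[float], List[float]]:
    """Mean recognition and expressivity score at each recognition rank (best recognized first).

    Each subject is a (recognition, expressivity) pair of emotion -> score dicts.
    """
    rec_by_rank: List[List[float]] = [[] for _ in EMOTIONS]
    exp_by_rank: List[List[float]] = [[] for _ in EMOTIONS]
    for recognition, expressivity in subjects:
        # Rank emotions by this subject's recognition score; sorted() is stable,
        # so ties keep the EMOTIONS order (an assumption, not from the text).
        order = sorted(EMOTIONS, key=lambda e: recognition[e], reverse=True)
        for rank, emotion in enumerate(order):
            rec_by_rank[rank].append(recognition[emotion])
            exp_by_rank[rank].append(expressivity[emotion])
    return [mean(v) for v in rec_by_rank], [mean(v) for v in exp_by_rank]
```

Under one reading of the text, `ranked_profile` would be run separately for the PD and HC subjects, and the two six-point profiles pooled before calling `pearson_r`.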

Results

Facial emotion recognition in PD and HC

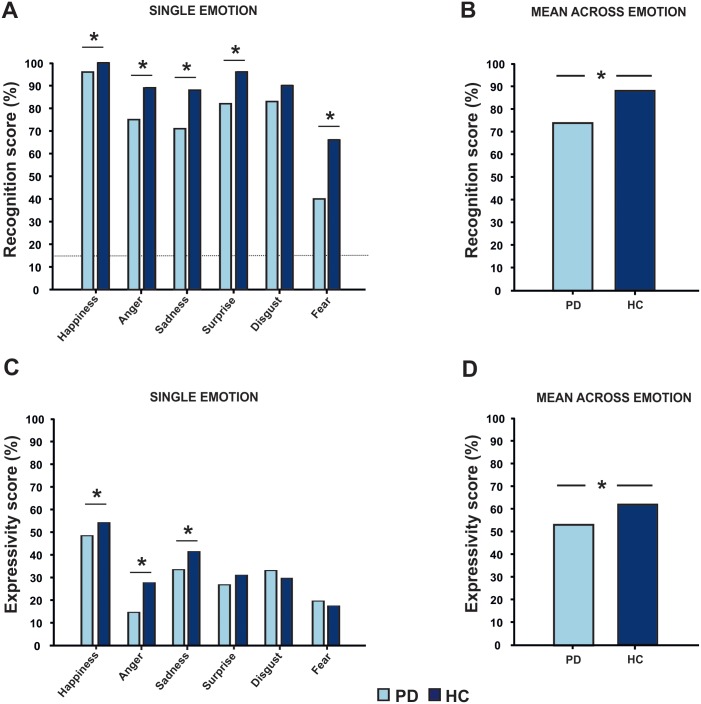

Factorial ANOVA revealed significant main effects of “group” (F(1,228) = 33.84, p<0.001) (Fig 2A) and “emotion” (F(5,228) = 28.15, p<0.001) (Fig 2B), with no significant interaction between these two factors. Post-hoc t-tests revealed statistical differences for happiness, anger, sadness, surprise and fear (p<0.05), which were less well recognized by the PD group. Although PD patients performed significantly worse in the facial emotion recognition task, it is important to underline that they were significantly better than chance for each emotion, as shown by the dashed lines in Fig 2A. Because each picture offered six response alternatives, the probability of giving a correct answer by chance alone was 1/6, i.e. about 16% (100/6 ≈ 16.7%) (Fig 2A and 2B).
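The chance-level arithmetic can be made explicit in Python. With six alternatives, the expected score from guessing is 10/6 ≈ 1.67 of the 10 items per emotion (10 of 60 in total). The one-sided binomial threshold computed below illustrates one standard way to test "better than chance"; it is not necessarily the criterion behind the dashed lines in Fig 2, which the text places at the 1/6 level itself. Function names are hypothetical.

```python
from math import comb
from typing import Optional


def binom_upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))


def chance_threshold(n_items: int, n_choices: int = 6, alpha: float = 0.05) -> Optional[int]:
    """Smallest score whose probability under pure guessing is below alpha (one-sided)."""
    p = 1 / n_choices
    for k in range(n_items + 1):
        if binom_upper_tail(k, n_items, p) < alpha:
            return k
    return None


expected_per_emotion = 10 / 6  # about 1.67 of 10 items correct by guessing
expected_total = 60 / 6        # 10 of 60 items correct by guessing
```

For a single emotion (10 items), `chance_threshold(10)` returns 5: under pure guessing, 4 or more correct answers occur with probability of about 0.07, whereas 5 or more occur with probability of about 0.015.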

Fig 2.

Panels A and B: Facial emotion recognition task (Ekman 60 Faces test) scores for each single emotion (A) and total score for all emotions taken together (B) for HC (dark blue) and PD group (light blue). Higher scores indicate better performance. PD patients performed worse than HC for all emotions except disgust. The dashed line represents the cut-off above which a participant performs better than chance (see text for details). Panels C and D: Facial expressivity task scores for HC and PD. Higher scores indicate better performance. PD patients were judged less expressive than HC for all emotions except disgust, fear and surprise. For both tasks, the percentage of correct answers (%) is plotted to facilitate interpretation of the results. Asterisks represent a statistical difference between experimental groups (p<0.05).

Facial expressivity in PD and HC

The ANOVA revealed significant main effects of both “group” (F(1,228) = 3.83, p = 0.05) and “emotion” (F(5,228) = 21.24, p<0.001) (Fig 2, panels C and D), with no interaction between these two factors. Post-hoc t-tests revealed that the PD group’s expressions of happiness, anger and sadness were less often correctly recognized by the raters than those of HC (p<0.001).

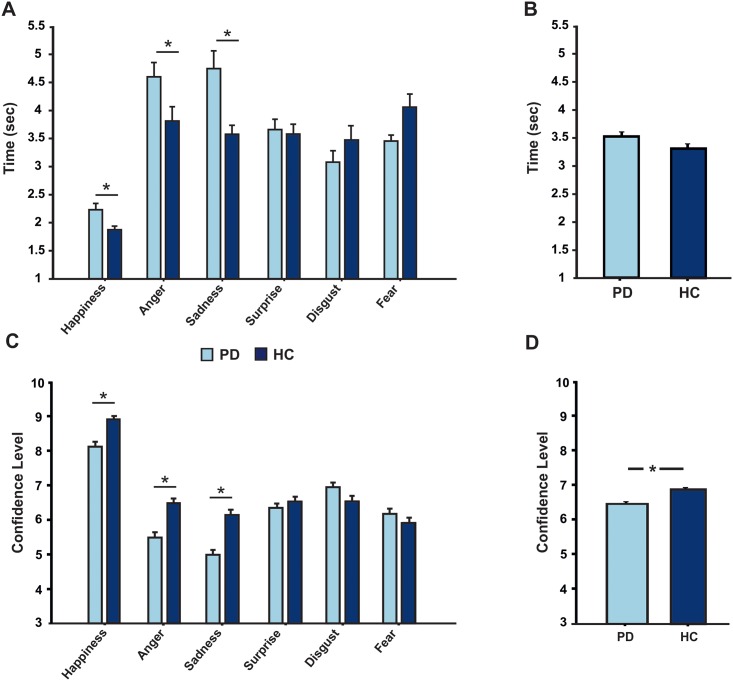

Reaction time and confidence level in the facial expressivity test

For RT, the ANOVA showed a significant effect of “emotion” (F(5,224) = 8.33, p<0.001) but not of “group” (Fig 3A and 3B), and no interaction between these factors. For CL, the ANOVA showed significant effects of both “emotion” (F(5,222) = 21.42, p<0.001) and “group” (F(1,222) = 4.28, p = 0.03) (Fig 3C and 3D).

Fig 3.

A) Reaction time in choosing the pictures of PD (light blue) and HC (dark blue) in the expressivity task. Raters were faster in judging HC than PD pictures expressing happiness, anger and sadness. B) Level of confidence in evaluating which emotional expression was displayed in the pictures of PD (light blue) and HC (dark blue) in the expressivity task. Raters were more confident of their choices when evaluating HC pictures than PD pictures for happiness, anger and sadness.

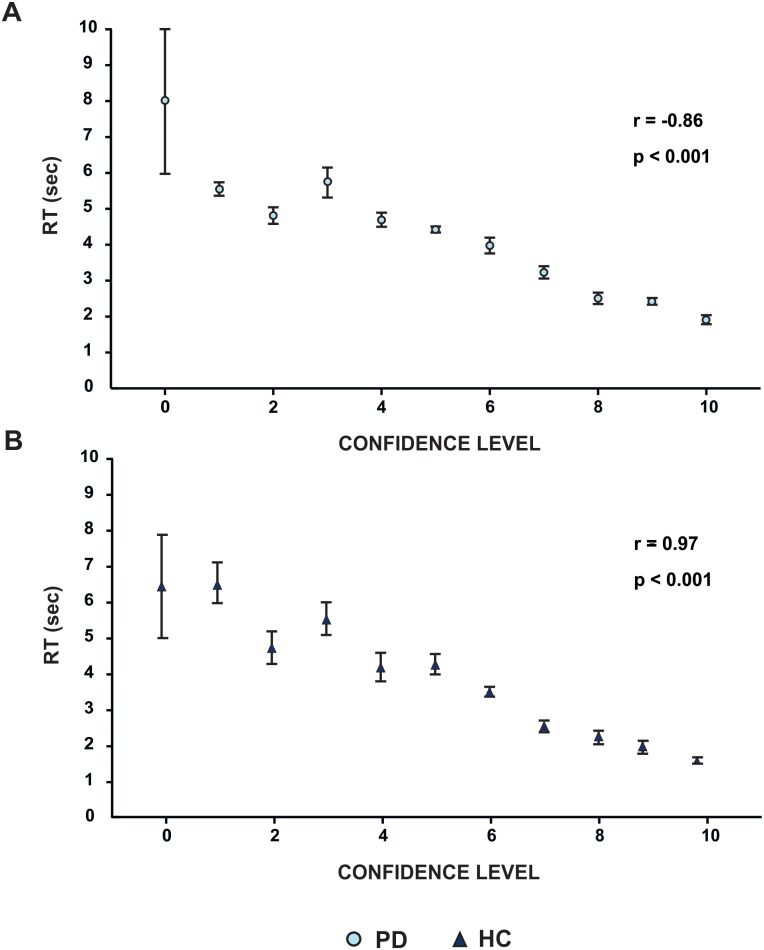

As expected, the raters' level of confidence in their decision was correlated with their RT: the higher their confidence, the shorter the reaction time in choosing the picture. This was true for both PD (r = 0.86, p<0.001) and HC frames (r = -0.97, p<0.001) (Fig 4A and 4B).

Fig 4. Correlation between raters’ Reaction Time (RT) in choosing the pictures and their level of confidence during their own choices (CL) for both the PD (panel A) and HC (panel B) frames during the expressivity task.

A positive correlation between RT (y axis) and CL (x axis) is shown.

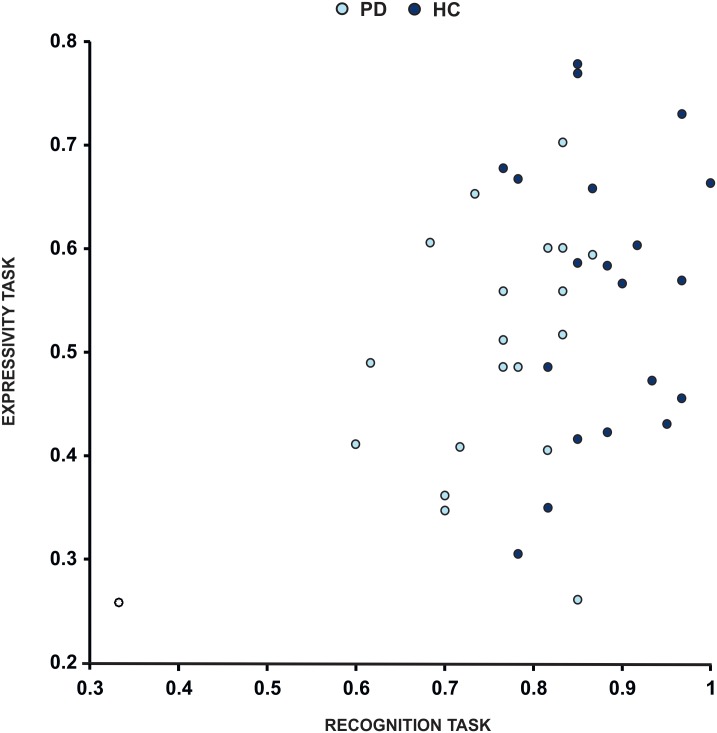

Correlation between facial emotion expressivity and facial emotion recognition

The relationship between the ability to express emotions and the ability to recognise them was significant when all participants from the two groups were considered together (r = 0.39, p = 0.01); however, within each group, only the PD group showed a significant correlation (r = 0.48, p = 0.02) (Fig 5). When single emotions were considered, a significant positive correlation between recognition and expressivity scores emerged only for surprise (r = 0.55, p = 0.01) in the PD group, with a trend toward significance for disgust (r = 0.4, p = 0.06) in the same group.

Fig 5. Correlation between facial recognition task scores and expressivity task scores.

Each dot represents the mean value across emotions per subject in HC group (light blue) and PD group (dark blue). There is a significant positive correlation between facial recognition (x axis) and expressivity (y axis) for all subjects.

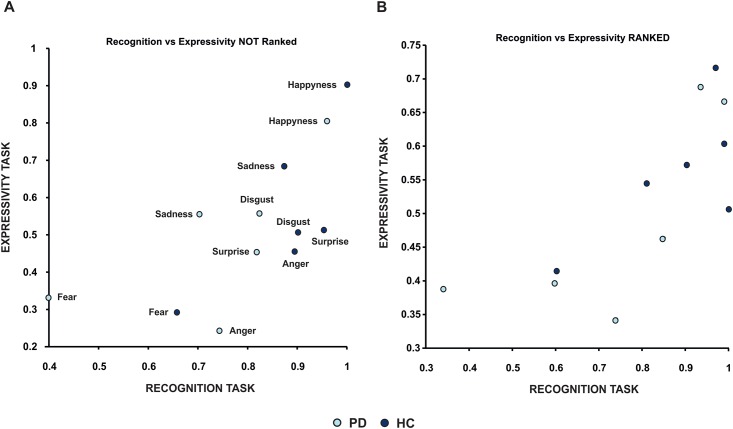

To further investigate the relationship between emotional recognition and expressivity, we calculated the grand average of the facial expressivity score and of the recognition score for each emotion in the PD and HC groups and performed a correlation analysis between these two measures. When PD and HC groups were treated as independent, there was a significant correlation between emotion expression and emotion recognition (r = 0.67, p = 0.01) (Fig 6A). This analysis, although significant, assumes that all participants performed equally for each emotion. If there were truly a link between emotion expression and recognition, one would predict that the emotion an individual is worse at recognising is also the one they are worse at expressing. To test this, we ranked the emotions for each individual in the two groups (PD and HC) from the best recognized to the worst and reordered the emotions for the expressivity task accordingly. We then calculated the mean value for each ranked emotion across subjects. This yielded an even stronger significant correlation (r = 0.75, p = 0.004, Fig 6B), suggesting that the relation between expression and recognition is not emotion-dependent.

Fig 6. Correlations between facial emotion recognition and expression per single emotion.

Each dot represents the mean value across subjects for each emotion, for both HC (light blue) and PD (dark blue). There is a significant positive correlation between recognition (x axis) and expression (y axis) in both groups (panel A; each emotion is labelled next to the corresponding dot). The correlation is even stronger when emotions are ranked from the best recognized to the worst (panel B; see text for details).

Discussion

We demonstrated a relationship between the ability to express facial emotions and the ability to recognize facial emotions expressed by others. Interestingly, this relationship was not emotion-specific: subjects differed in which emotions were most affected, but for a given subject the same emotions tended to be affected in both expression and recognition. That is, in both healthy subjects and PD patients, better performance in a task of posing facial emotional expressions was related to better performance in a well-validated task of facial emotion recognition. Nevertheless, PD patients performed worse than healthy controls in both tasks.

The motivation for this study was to explore the relationship between facial emotional expression and recognition of these expressed emotions in others. While functional imaging studies have suggested overlapping areas of activation during tasks of emotional expression and emotional recognition, this falls short of evidence for co-dependence of these faculties. Here, by evaluating participants on both an execution and a recognition task, we were able to assess within subjects the relationship between performance on the two tasks, and found a strong correlation between them. Thus, we provide strong additional evidence for a shared substrate for these tasks. The relationship held irrespective of the type of emotion, suggesting the involvement of an overall unified system rather than different neural systems for specific emotions.

We evaluated both PD patients and HC and therefore provide additional data regarding emotional expression and recognition in Parkinson’s disease. First, although performance in tasks of emotional expression and recognition was impaired in PD patients compared to controls, in line with previous data [5–12], the correlation between performance on the two tasks in both healthy subjects and PD patients is a novel finding of our study. This adds weight to our suggestion that both tasks share a common substrate that is disrupted in patients with PD. Second, our data in PD patients address some areas of conflicting evidence in the literature. An extensive literature has evaluated emotion recognition in PD patients, with conflicting results likely due to confounding factors (e.g. depression and cognitive deficits) and methodological differences [23,24]. In a recent meta-analysis of behavioural studies only, Gray and Tickle-Degnen reported that individuals with PD had lower recognition scores for negative emotions (anger, disgust, fear, and sadness) than for relatively positive emotions (happiness, surprise) [13]. Here we found a more extensive deficit in recognizing facial expressions, including both positive and negative emotions, when a well-validated task (Ekman 60-faces test) was administered and confounding factors such as depression and cognitive impairment were controlled for.

A previous study has found a correlation between a task of emotional facial imagery and tasks of emotional facial expression and recognition [8]. This study provides some supportive evidence for our contention that tasks of emotional facial expression and recognition are co-dependent, though in this previous study different emotions were not assessed separately and this correlation was not tested in healthy participants, limiting the scope of the conclusions.

Third, our data provide some support for “simulation” or “shared substrates” models of emotion recognition, which assume that the ability to recognize the emotions expressed by other individuals relies on processes that internally simulate the same emotional state in ourselves [25]. These models clearly overlap with the proposed “mirror neuron” system for voluntary movement. Mirror neurons were first identified in the ventral premotor cortex (area F5) of the macaque [26,27] and have subsequently been demonstrated in the human homologue of area F5, the pars opercularis of the inferior frontal gyrus [28]. The defining property of these neurons is that they are activated both by observing an action and by executing that same action [29].

In an fMRI study on healthy participants, largely overlapping regions were activated by both observation and imitation of facial emotional expressions. Areas with common activation in the two tasks included the premotor face area, the dorsal sector of pars opercularis of the inferior frontal gyrus, the superior temporal sulcus, the insula, and the amygdala [1]. It has been suggested that in this network the insula may act as an interface between the frontal component of the mirror neuron system and the limbic system, “translating” the observed or posed facial emotional expression into its internally represented emotional meaning [1].

Our data are in keeping with the idea that a system similar to the mirror system for goal-directed actions supports both the production of facial emotional expressions and the ability to correctly identify the facial emotional expressions of others. In contrast to other studies, our data support the suggestion that this shared system is emotion-independent, and that while specific brain areas may be more involved in specific emotions (e.g. amygdala for fear [4,30] or insula for disgust [3]), there is a broader mechanism for emotional “sense”. The hypothesis of a shared system for execution and recognition of facial emotional expression is supported by previous studies. Work in healthy participants has shown that “blocking” facial movements while performing a facial emotion recognition task reduces accuracy [31]. In addition, fMRI studies of patients with autism, who have impaired recognition of facial emotions [32], have demonstrated a lack of activity in the “mirror area” in the pars opercularis when observing facial emotional expressions [33]. It has also been suggested that patients with autism have difficulty interpreting the expressions of other individuals as well as expressing emotions in a way others can understand [34]. Finally, patients with Huntington's disease (HD) have impaired recognition and expression of facial emotions, and a role for the striatum in mediating both has been suggested, since performance in both tasks correlated with the degree of striatal atrophy in these patients [35].

An important and seemingly contradictory study in patients with Moebius syndrome, a disorder of congenital bilateral facial paralysis, found no emotion recognition impairment in these patients, questioning the role of one's own emotional facial expression in the recognition of emotional expressions in others [36]. However, the congenital nature of this disorder raises the question of whether compensatory mechanisms for emotion recognition have developed. The assessment of (admittedly rare) patients with acquired bilateral facial nerve palsy would certainly be of interest.

We acknowledge some limitations of our study. We did not perform concurrent functional imaging, so we could not relate our behavioural results to patterns of brain activation for comparison with previous studies. We did not evaluate spontaneous facial expression but focussed on voluntarily posed expressions. Finally, although we excluded patients with overt cognitive impairment (by means of the MMSE), an extensive neuropsychological evaluation was not performed, and future studies should rule out associated deficits in specific cognitive domains.

In conclusion, our data demonstrate a strong relationship between the execution and recognition of facial emotional expressions in both healthy subjects and people with PD. Patients with PD performed worse overall on both tasks. Although our data allow us only to speculate on the mechanisms underlying this relationship, they are consistent with an emotional mirror neuron mechanism.

Acknowledgments

LR is grateful to Dr. Santi Furnari for support in designing the study methods.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

The authors received no specific funding for this work.

References

- 1. Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL. Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci U S A. 2003 Apr 29;100(9):5497–502. doi:10.1073/pnas.0935845100

- 2. Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, et al. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci. 2009 Nov;34(6):418–32.

- 3. Phan KL, Wager T, Taylor SF, Liberzon I. Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. Neuroimage. 2002 Jun;16(2):331–48.

- 4. Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, et al. A specific neural substrate for perceiving facial expressions of disgust. Nature. 1997 Oct 2;389(6650):495–8. doi:10.1038/39051

- 5. Buck R, Duffy RJ. Nonverbal communication of affect in brain-damaged patients. Cortex. 1980 Oct;16(3):351–62.

- 6. Smith MC, Smith MK, Ellgring H. Spontaneous and posed facial expression in Parkinson's disease. J Int Neuropsychol Soc. 1996;2:383–91.

- 7. Katsikitis M, Pilowsky I. A controlled quantitative study of facial expression in Parkinson's disease and depression. J Nerv Ment Dis. 1991;179:683–8.

- 8. Jacobs DH, Shuren J, Bowers D, Heilman KM. Emotional facial imagery, perception, and expression in Parkinson's disease. Neurology. 1995 Sep;45(9):1696–702.

- 9. Madeley P, Ellis AW, Mindham RH. Facial expressions and Parkinson's disease. Behav Neurol. 1995;8(2):115–9. doi:10.3233/BEN-1995-8207

- 10. Simons G, Pasqualini MC, Reddy V, Wood J. Emotional and nonemotional facial expressions in people with Parkinson's disease. J Int Neuropsychol Soc. 2004;10:521–35. doi:10.1017/S135561770410413X

- 11. Bologna M, Fabbrini G, Marsili L, Defazio G, Thompson PD, Berardelli A. Facial bradykinesia. J Neurol Neurosurg Psychiatry. 2013 Jun;84(6):681–5. doi:10.1136/jnnp-2012-303993

- 12. Marsili L, Agostino R, Bologna M, Belvisi D, Palma A, Fabbrini G, et al. Bradykinesia of posed smiling and voluntary movement of the lower face in Parkinson's disease. Parkinsonism Relat Disord. 2014 Apr;20(4):370–5. doi:10.1016/j.parkreldis.2014.01.013

- 13. Gray HM, Tickle-Degnen L. A meta-analysis of performance on emotion recognition tasks in Parkinson's disease. Neuropsychology. 2010;24:176–91. doi:10.1037/a0018104

- 14. Hughes AJ, Daniel SE, Kilford L, Lees AJ. Accuracy of clinical diagnosis of idiopathic Parkinson's disease: a clinico-pathological study of 100 cases. J Neurol Neurosurg Psychiatry. 1992;55(3):181–4.

- 15. Folstein MF, Folstein SE, McHugh PR. "Mini-mental state": a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12(3):189–98.

- 16. Beck AT, Ward CH, Mendelson M, Mock J, Erbaugh J. An inventory for measuring depression. Arch Gen Psychiatry. 1961 Jun;4:561–71.

- 17. Tomlinson CL, Stowe R, Patel S, Rick C, Gray R, Clarke CE. Systematic review of levodopa dose equivalency reporting in Parkinson's disease. Mov Disord. 2010 Nov 15;25(15):2649–53. doi:10.1002/mds.23429

- 18. Fahn S, Elton R, Members of the UPDRS Development Committee. The Unified Parkinson's Disease Rating Scale. In: Fahn S, Marsden CD, Calne DB, Goldstein M, editors. Recent Developments in Parkinson's Disease, Vol 2. Florham Park, NJ: Macmillan Health Care Information; 1987. p. 153–63, 293–304.

- 19. Ekman P, Friesen W. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- 20. Ricciardi L, Bologna M, Morgante F, Ricciardi D, Morabito B, Volpe D, et al. Reduced facial expressiveness in Parkinson's disease: A pure motor disorder? J Neurol Sci. 2015 Nov 15;358(1–2):125–30. doi:10.1016/j.jns.2015.08.1516

- 21. Fleming SM, Lau HC. How to measure metacognition. Front Hum Neurosci. 2014 Jul 15;8:443. doi:10.3389/fnhum.2014.00443

- 22. Ekman P, Friesen W, Hager JC. Facial Action Coding System: The Manual on CD ROM. Salt Lake City: A Human Face; 2002.

- 23. Assogna F, Pontieri FE, Caltagirone C, Spalletta G. The recognition of facial emotion expressions in Parkinson's disease. Eur Neuropsychopharmacol. 2008 Nov;18(11):835–48. doi:10.1016/j.euroneuro.2008.07.004

- 24. Péron J, Dondaine T, Le Jeune F, Grandjean D, Vérin M. Emotional processing in Parkinson's disease: a systematic review. Mov Disord. 2012 Feb;27(2):186–99. doi:10.1002/mds.24025

- 25. Heberlein AS, Atkinson AP. Neuroscientific evidence for simulation and shared substrates in emotion recognition: beyond faces. Emotion Review. 2009;1(2):162–77.

- 26. Rizzolatti G, Camarda R, Fogassi L, Gentilucci M, Luppino G, Matelli M. Functional organization of inferior area 6 in the macaque monkey. II. Area F5 and the control of distal movements. Exp Brain Res. 1988;71:491–507.

- 27. Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119(Pt 2):593–609.

- 28. Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Res Cogn Brain Res. 1996;3:131–41.

- 29. Rizzolatti G, Arbib MA. Language within our grasp. Trends Neurosci. 1998;21:188–94.

- 30. Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994 Dec 15;372(6507):669–72. doi:10.1038/372669a0

- 31. Oberman LM, Winkielman P, Ramachandran VS. Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Soc Neurosci. 2007;2(3–4):167–78. doi:10.1080/17470910701391943

- 32. Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol Rev. 2010 Sep;20(3):290–322. doi:10.1007/s11065-010-9138-6

- 33. Dapretto M, Davies MS, Pfeifer JH, Scott AA, Sigman M, Bookheimer SY, et al. Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nat Neurosci. 2006 Jan;9(1):28–30. doi:10.1038/nn1611

- 34. Brewer R, Biotti F, Catmur C, Press C, Happé F, Cook R, et al. Can Neurotypical Individuals Read Autistic Facial Expressions? Atypical Production of Emotional Facial Expressions in Autism Spectrum Disorders. Autism Res. 2015 Jun 6.

- 35. Trinkler I, Cleret de Langavant L, Bachoud-Lévi AC. Joint recognition-expression impairment of facial emotions in Huntington's disease despite intact understanding of feelings. Cortex. 2013 Feb;49(2):549–58. doi:10.1016/j.cortex.2011.12.003

- 36. Rives Bogart K, Matsumoto D. Facial mimicry is not necessary to recognize emotion: Facial expression recognition by people with Moebius syndrome. Soc Neurosci. 2010;5(2):241–51. doi:10.1080/17470910903395692
