Abstract

Objective. To use the nominal group technique to develop a framework to improve existing and develop new objective structured clinical examinations (OSCEs) within a four-year bachelor of pharmacy course.

Design. Using the nominal group technique, a unique method of group interview that combines qualitative and quantitative data collection, focus groups were conducted with faculty members, practicing pharmacists, and undergraduate pharmacy students. Five draft OSCE frameworks were presented, and participants were asked to generate new framework ideas.

Assessment. Two focus groups (n=9 and n=7) generated nine extra frameworks. Two of these frameworks, one from each focus group, ranked highest (mean scores of 4.4 and 4.1 on a 5-point scale) and were similar in nature. The project team used these two frameworks to produce the final framework, which includes an OSCE in every year of the course, earlier implementation of teaching OSCEs, and the use of independent simulated patients who are not examiners.

Conclusions. The new OSCE framework provides a consistent structure from course entry to exit and ensures graduates meet internship requirements.

Keywords: objective structured clinical exam (OSCE), nominal group technique (NGT), framework, pharmacy, students

INTRODUCTION

In Australia, pharmacy students’ competence must be confirmed before they leave university to ensure that they have adequate skills to begin internship training.1 While the Australian Pharmacy Council (the independent accreditation agency for pharmacy schools and programs in Australia and New Zealand) does not mandate how student competence should be assessed, it does recommend that examinations are “robust, fair and scientifically defensible; assessment outcomes are to be consistent and fair; are best practice; and inform and influence intern pharmacist assessment.”2 Recently published national pharmacy learning outcomes also have the potential to guide assessment design, as they clarify expectations for both standards and levels of achievement for students, faculty, employers, and the professional body. Those outcomes include: demonstrate professional behavior; apply evidence in practice; deliver safe and effective collaborative health care; take responsibility for clinically, ethically and scientifically sound decisions; communicate in lay and professional language; plan ongoing personal and professional development; apply pharmaceutical, medication and health knowledge and skills; and formulate and supply medications and therapeutic products.3

These national learning outcomes are in line with global competencies developed for the pharmacy workforce by the International Pharmaceutical Federation, which include health promotion, medicines information and advice, professional communication, patient consultation and diagnosis, monitoring, and supply.4 The national outcomes also concur with competencies for pharmacists in the United Kingdom (delivery of patient care, problem solving, and management and organization),5 Canada (practice pharmaceutical care, provide drug information, educate, manage drug distribution, and apply management principles),6 and the United States (process of care; documentation; collaborative, team-based practice and privileging; professional development and maintenance of competence; professionalism and ethics; research and scholarship; and other responsibilities).7

Written examinations are unsuited to assessing some competencies, such as communication skills and logical expression.8 Verbal and nonverbal communication cannot be assessed in writing, and written examinations do not require students to solve tasks that involve a patient barrier. Oral assessments test students’ immediate responses and minimize the use of prepared, memorized responses.9 However, a single oral examination can test only a limited range of skills.8 In contrast, objective structured clinical examinations (OSCEs) require students to make decisions independently, apply judgement using available information, and communicate outcomes with simulated patients (SPs) or simulated doctors.

Professor Ronald Harden, postgraduate dean and director of the Centre for Medical Education, University of Dundee, and formerly a practicing endocrinologist, created OSCEs in the 1970s to overcome the disadvantages of traditional clinical examinations and to allow more of the student’s knowledge to be tested.9 A recent review of 1065 studies demonstrated OSCE feasibility for use in “different cultural and geographical contexts; to assess a wide range of learning outcomes; in different specialties and disciplines; and for formative and summative purposes.”10 Objective structured clinical examinations have been used in examinations for professional registration,11 for competency maintenance of clinicians already in practice,12 and as part of the recruitment process for acceptance into a health professional program.13 They have also been used successfully in Australia and overseas to assess pharmacy students, interns, and pharmacists.11,14-17 Teaching OSCEs (TOSCEs) are an important complement to OSCEs because they give students practice before the examination. They are generally run as tutorials for the whole class so that students can become familiar with the OSCE process and receive valuable facilitator and peer feedback.18

Between 2011 and 2013, OSCEs were introduced into three separate teaching units in the four-year Bachelor of Pharmacy Honours (BPharm(Hons)) undergraduate course at the Faculty of Pharmacy and Pharmaceutical Sciences, Monash University. The three OSCEs examine the areas of communication, cardiovascular health, and hospital practice. Although faculty members have generally perceived their introduction as a step forward in assessing student competence, the three OSCEs differ in design and delivery, raising the concern that they may negatively affect student learning outcomes. The main concerns have been the lack of simulated patients and inconsistencies in the development and marking of OSCE cases, which have resulted in wide variations in the assessment of students’ performance and in delayed, inconsistent feedback to students. As far as we are aware, there are no OSCE best practice guidelines in the Australian pharmacy context that could be used to guide the improvement of our existing OSCEs and the development of new ones. However, guidelines exist for nursing and midwifery that their authors assert can be applied to other health disciplines.19-22 These guidelines recommend that OSCEs should: focus on aspects of practice that relate directly to the delivery of safe client/patient care and that are most relevant and likely to be commonly encountered; be structured and delivered in a manner that reflects the knowledge and skills needed to practice; be judged via a holistic marking guide, meaning that students are marked on their performance as a whole rather than as a collection of discrete, independent actions; be integrated and scheduled at a time in the student’s learning that allows maximal assimilation of course content; and allow for ongoing practice of integrated clinical assessment and intervention skills and for timely use of feedback to guide students’ development.19-22

This study describes how we used the nominal group technique (NGT) to produce a framework for improving our existing OSCEs and developing new ones in line with these general guidelines19-22 and other literature.11,23-28 The NGT was chosen over brainstorming because it is a structured group process that combines quantitative and qualitative data collection to elicit the judgments of individuals, whose opinions contribute to a consensus group decision. The NGT has been suggested to have advantages over the Delphi technique because it allows participants to meet in person, is less time-consuming and costly to conduct, and produces immediate results with the lowest percentage of error and variability of estimates.29 We sought input from faculty members, practicing pharmacists, and undergraduate students to reach consensus on the criteria needed to generate a framework for all levels of the BPharm(Hons) course, by year and by stream. The course has four main streams: Enabling Sciences, Drug Delivery, Pharmacy Practice, and Integrated Therapeutics. The framework was intended to help faculty members develop OSCEs that remain coherent, are in context, and are effectively coordinated, and to involve the cooperation of the relevant faculty members in achieving the two goals of improving existing OSCEs and developing new ones.

DESIGN

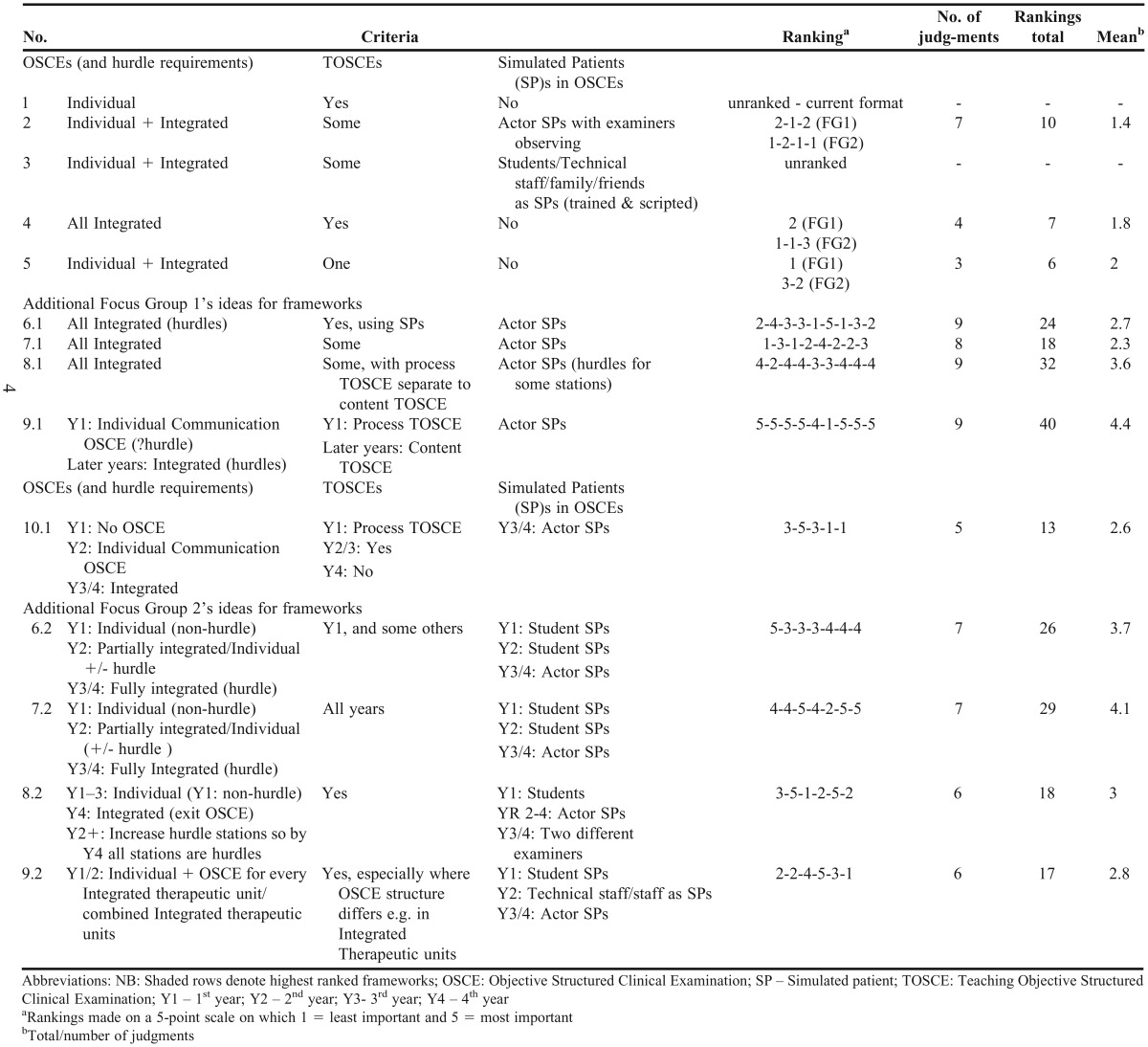

Two focus groups were conducted by two of the study authors at the Faculty of Pharmacy and Pharmaceutical Sciences, Monash University. All unit coordinators and pharmacy teaching staff, practicing pharmacists involved in delivering OSCEs, and undergraduate students who had been examined via the current OSCEs were invited to participate. Participants were recruited via email, face-to-face invitation by the chief investigator, phone, and advertisements in the faculty bulletin. Interested participants were given an explanatory statement containing information about the study and a consent form to sign and return. At both focus group sessions, five draft frameworks (see Table 1) produced by the BPharm(Hons) education team were given to each group for initial discussion. The BPharm(Hons) education team consisted of the course director; the four stream leaders for pharmacy practice, enabling sciences, drug delivery, and integrated therapeutics; the associate dean of education; and the director of learning and teaching. The team’s responsibilities included, but were not limited to, ensuring vertical and horizontal integration of all units in the course; leading educational initiatives; developing and implementing guidelines related to teaching, learning, and assessment; and reviewing educational policy.

Table 1.

Consolidated Focus Group (n=16) Results for Frameworks and Their Criteria

The education team’s draft frameworks were based on the aforementioned general guidelines19-22 and other literature,11,23-28 which state that non-examiner SP actors are needed; that integrated OSCEs encompassing content from all year levels should be used rather than individual OSCEs per unit that test only the content of that particular unit; and that TOSCEs should be implemented to assist student learning and OSCE preparation.

Focus group participants were briefed on the focus group aims and format, after which they were shown the draft OSCE frameworks. They were asked to construct their own frameworks, brainstorm the group’s ideas, and then rank the draft frameworks along with their own using the NGT (see specific steps below). The NGT is a structured brainstorming process similar to a focus group, but it focuses on a single goal (ie, defining criteria for assessment) rather than attempting to elicit a range of themes and ideas.30 The NGT was preferred because it allowed more ideas to be generated in a short time, and individuals could vote privately, uninfluenced by more senior members of staff.

Participants spent approximately 10 minutes individually generating new framework ideas (nominal phase). A round-robin phase followed, in which ideas were recorded on a flip chart and numbered in no particular order for all participants to see. Each new framework was then discussed for clarification and evaluation (structured discussion phase). Where a framework differed from a previous one only in wording, the two were collapsed into one. From the numbered list of newly generated frameworks and the initial draft frameworks, each participant chose a set number of frameworks they considered the most important (independent voting phase) and ranked them. The number chosen depended on the total number available (eg, if there were 10 frameworks in total, participants were asked to choose the five they considered most important and rank them from 5=most appropriate to 1=least appropriate). Next, the round-robin phase was repeated, and each person’s individual judgements were recorded on the flip chart next to the number of the corresponding framework. The frameworks were then ordered by aggregate score to give participants a sense of the group’s priorities. Finally, the results were discussed by the group.
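The voting and aggregation steps above can be expressed as a short tally. The following is a minimal Python sketch under stated assumptions, not the study's actual procedure: it assumes each participant ranks exactly half of the available frameworks (generalizing the single 10-to-5 example in the text), that a framework's aggregate score is the sum of the points it received, and that its mean is taken over the participants who selected it (the text does not specify the denominator). The framework labels and ballots are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def ballot_size(total_frameworks: int) -> int:
    """Number of frameworks each participant must rank.

    Assumption: half of those available, rounded down (10 -> 5), minimum 1.
    """
    return max(1, total_frameworks // 2)


def score_ballot(ranked_choices: list[str]) -> dict[str, int]:
    """Turn one ordered ballot (most appropriate first) into points.

    With k choices, the first receives k points and the last receives 1.
    """
    k = len(ranked_choices)
    return {framework: k - i for i, framework in enumerate(ranked_choices)}


def tally(ballots: list[list[str]], total_frameworks: int) -> list[tuple[str, int, float]]:
    """Return (framework, aggregate score, mean score), highest aggregate first."""
    k = ballot_size(total_frameworks)
    points: dict[str, list[int]] = defaultdict(list)
    for ballot in ballots:
        if len(ballot) != k or len(set(ballot)) != k:
            raise ValueError(f"each ballot must rank exactly {k} distinct frameworks")
        for framework, pts in score_ballot(ballot).items():
            points[framework].append(pts)
    # Mean is over participants who selected the framework (an assumption).
    results = [(fw, sum(p), mean(p)) for fw, p in points.items()]
    # Highest aggregate first; ties broken alphabetically for a stable display.
    results.sort(key=lambda r: (-r[1], r[0]))
    return results


if __name__ == "__main__":
    # Three hypothetical participants choosing 5 of 10 frameworks labelled A-J.
    ballots = [
        ["A", "B", "C", "D", "E"],
        ["B", "A", "E", "C", "F"],
        ["A", "C", "B", "G", "H"],
    ]
    for framework, aggregate, avg in tally(ballots, total_frameworks=10):
        print(f"{framework}: aggregate={aggregate}, mean={avg:.2f}")
```

With these ballots, framework A leads with an aggregate score of 14 (mean 4.67), followed by B (12) and C (9). Because a mean is sensitive to a single very high or very low ranking, showing the aggregate alongside it makes disagreement within the group easier to spot.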

The BPharm(Hons) education team considered the framework with the highest aggregate score to be the model framework, with a view to implementation. The team also examined which of the knowledge and skills that graduates need to possess (derived from the national pharmacy learning outcomes3) could be assessed by OSCEs. The study was approved by Monash University’s Human Research and Ethics Committee.

EVALUATION AND ASSESSMENT

Focus group 1 consisted of pharmacy undergraduate students (n=3), pharmacy faculty members (n=3), and practicing pharmacists (n=3); focus group 2, held the following day, comprised pharmacy undergraduate students (n=2), faculty members (n=4), and a practicing pharmacist (n=1). In addition to the five draft frameworks, nine extra frameworks were produced, of which two (one from each group) were ranked highest by focus group participants on a scale of 1 to 5 (group 1, mean=4.4; group 2, mean=4.1). Consolidated focus group results for the frameworks, their criteria, and associated means are shown in Table 1. The final framework is shown in Table 2. Currently, cases for the faculty’s second-year OSCE in the unit “pharmacists as communicators” are developed without applying the standardized format involving blueprinting, case-writing days, and standard setting,11,31 and students are assessed with only a global rating scale focused on communication, rather than a combination of communication and analytical checklists (the latter assessing clinical and problem-solving skills). In addition, simulated patients have not been used; instead, examiners have acted as both the examiner and the patient/relevant other. This OSCE will be revised in line with the new framework: existing cases will be reviewed and rewritten, analytical checklists will be created, and simulated patients will be recruited to act as patients and doctors.

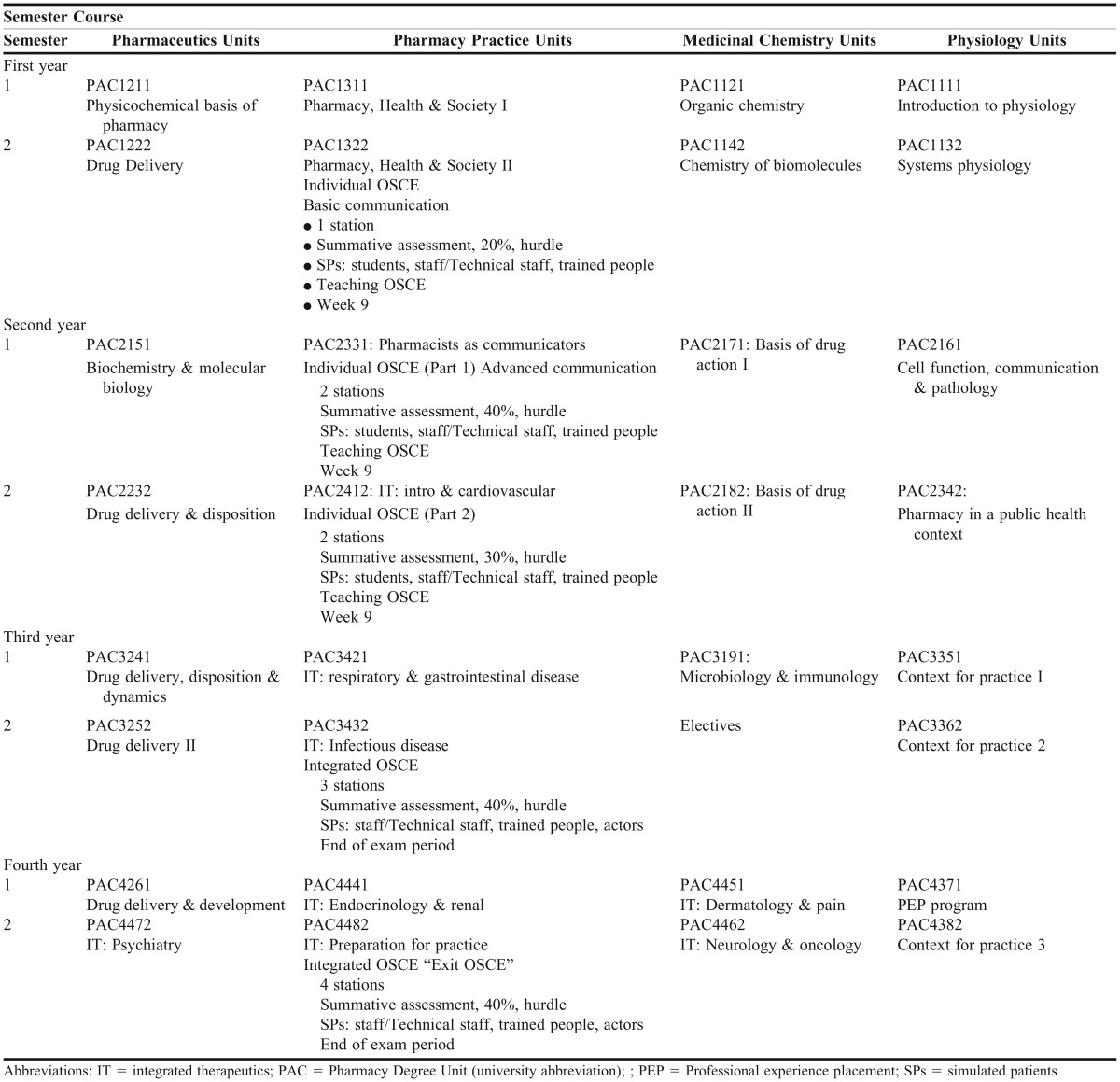

Table 2.

Final OSCE Framework for the Monash B.Pharm (Hons) Course

To implement the final framework (Table 2), the BPharm(Hons) education team developed the following supporting recommendations: (1) there should be one OSCE in every year of the course; (2) all OSCEs are hurdle requirements to pass the unit, and students who fail will have only one attempt at a remediation examination, in line with the practice of other health disciplines; (3) every OSCE must assess communication and interpersonal skills, and students must pass these components to pass the OSCE; (4) TOSCEs covering the OSCE process and content will be conducted only in years 1 and 2, whereas in years 3 and 4, required supporting tutorials will cover content and online videos will be used to refresh students’ recollection of the process; (5) pharmaceutical calculations and extemporaneous dispensing should continue to be examined separately from the OSCE, which should be reserved for oral, face-to-face assessment; (6) following the OSCE, students must be provided with performance feedback consisting of a breakdown of their scores on each rubric criterion as well as their final score; and (7) staged implementation of newly developed OSCEs is ideal, with priority given first to developing the year 4 “exit” OSCE and then to redeveloping the existing ones. For these recommendations to be fully realized, all teaching staff need to be trained in developing, designing, and delivering OSCEs, and an OSCE delivery team will need to be appointed to oversee OSCE development for each year.
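Recommendations (3) and (6) together imply a conjunctive pass rule and a per-criterion feedback report. The Python sketch below illustrates one way to encode them; the criterion names, point values, and cut scores (expressed as fractions of available marks) are hypothetical, and the framework itself does not prescribe them.

```python
from dataclasses import dataclass


@dataclass
class CriterionResult:
    name: str
    score: float
    max_score: float
    is_communication: bool  # True for communication/interpersonal criteria


def osce_outcome(results, overall_cut, communication_cut):
    """Apply a hypothetical conjunctive rule and build a feedback report.

    A student passes only if the overall fraction of marks reaches `overall_cut`
    AND the communication fraction reaches `communication_cut` (recommendation 3).
    Feedback lists every rubric criterion plus the final score (recommendation 6).
    """
    comm = [r for r in results if r.is_communication]
    if not comm:
        raise ValueError("every OSCE must assess communication (recommendation 3)")
    total = sum(r.score for r in results)
    total_max = sum(r.max_score for r in results)
    comm_fraction = sum(r.score for r in comm) / sum(r.max_score for r in comm)
    passed = total / total_max >= overall_cut and comm_fraction >= communication_cut
    feedback = {r.name: f"{r.score:g}/{r.max_score:g}" for r in results}
    feedback["Final score"] = f"{total:g}/{total_max:g}"
    return passed, feedback


if __name__ == "__main__":
    student = [
        CriterionResult("Rapport and empathy", 7, 10, True),
        CriterionResult("Counselling clarity", 6, 10, True),
        CriterionResult("Clinical problem solving", 18, 20, False),
    ]
    passed, feedback = osce_outcome(student, overall_cut=0.6, communication_cut=0.7)
    print("Pass" if passed else "Fail", feedback)
```

Here the hypothetical student scores 31/40 overall (77.5%) but only 13/20 on the communication criteria, so the conjunctive rule returns a fail even though the overall mark clears its own cut score.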

DISCUSSION

This study focused on two key areas of the learning and teaching journey: time and assessment. We took the opportunity to improve the OSCEs in our course by focusing on their sequencing and by underpinning their design and implementation with evidence-based pedagogy. We used a collaborative approach to help develop staff capability in OSCE development and implementation. The NGT proved to be an efficient and easy-to-use instrument in this study; while other interactive processes (eg, brainstorming, the Delphi technique) might have engaged participants in spontaneous dialogue, they would have been less effective in quickly identifying individuals’ key preferences for the frameworks and in achieving consensus across the two focus groups.

Currently, our OSCEs use a 1:1 examiner-to-student ratio, in which the examiner, generally a teaching staff member, is also required to play the role of the simulated patient (SP). This is not best practice, as SPs should ideally be professional actors, unknown to the student, who can respond to student stimuli in a manner similar to that of patients in practice.32 We also hypothesize that lay people are better judges of communication than trained professionals, as their responses are not influenced by professional training.33 Professional actors are more likely to provide consistent performances, and clear instructions should be given to actors to ensure that patient portrayals are standardized.34 Because of resource constraints, our framework proposes the use of non-staff SPs in OSCEs administered in the last two years of the curriculum and of senior students as SPs in OSCEs administered in the first two years. Nonteaching faculty members and volunteers could also be recruited. Because OSCEs are resource intensive, further discussion at faculty level will occur in the near future to formulate appropriate strategies for recruiting SPs.

Our current bank of OSCE cases was developed without using the standardized format.14 In that format, case development is first based on a blueprint.24 Students should be tested only on what they have been taught, and a blueprint ensures that different domains of skills are tested equitably and that the balance of subject areas has been fairly decided.24 Commonly, a case-writing day is scheduled, at which a group of faculty members with expertise in different areas of pharmacy practice develops a case and then passes it to another group for review. A third group then completes the standard setting (marking criteria). Finally, the cases are pilot tested for feasibility. Standard setting is essential because it provides a robust method to justify the pass score and to maintain a valid and reliable OSCE.31 In contrast, our current cases were written and reviewed by individuals rather than by groups of academic staff members, and they have not been piloted with students. Involving practicing pharmacists in case writing is vital to ensure that cases are ecologically valid (consistent with real-life practice) and current. The BPharm(Hons) education team recommended that a more structured and validated approach to OSCE case writing is essential for the framework goals to be realized. Since then, our institution has trained over 40 teaching and sessional staff members in case writing, and these groups have developed 20 new cases.
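Reference 31 describes a borderline approach to standard setting. As a hedged illustration of what that family of methods computes (not the procedure adopted in our course), the Python sketch below implements two widely used variants: the borderline group method, in which the pass mark is the mean checklist score of candidates whose examiner global rating was "borderline", and the borderline regression method, in which checklist scores are regressed on global ratings and the pass mark is the predicted score at the borderline rating. The 5-point global rating scale (with 2 = borderline) and the data are hypothetical.

```python
from statistics import mean


def borderline_group_cut(checklist_scores, global_ratings, borderline=2):
    """Pass mark = mean checklist score of candidates rated 'borderline'."""
    group = [s for s, g in zip(checklist_scores, global_ratings) if g == borderline]
    if not group:
        raise ValueError("no candidates received the borderline rating")
    return mean(group)


def borderline_regression_cut(checklist_scores, global_ratings, borderline=2):
    """Pass mark = least-squares predicted checklist score at the borderline rating."""
    if len(checklist_scores) != len(global_ratings):
        raise ValueError("each candidate needs one checklist score and one rating")
    x_bar = mean(global_ratings)
    y_bar = mean(checklist_scores)
    sxx = sum((x - x_bar) ** 2 for x in global_ratings)
    if sxx == 0:
        raise ValueError("global ratings must vary to fit a regression line")
    sxy = sum((x - x_bar) * (y - y_bar)
              for x, y in zip(global_ratings, checklist_scores))
    slope = sxy / sxx
    return y_bar + slope * (borderline - x_bar)


if __name__ == "__main__":
    # Hypothetical station: global ratings 1 (clear fail) to 5 (excellent).
    ratings = [1, 2, 2, 3, 4, 5]
    scores = [40, 50, 54, 62, 70, 80]
    print(f"Borderline group cut: {borderline_group_cut(scores, ratings):.1f}")
    print(f"Borderline regression cut: {borderline_regression_cut(scores, ratings):.1f}")
```

For these made-up data the two methods give similar pass marks (52.0 and about 51.2). The regression variant uses every candidate's result rather than only those rated borderline, which is why it is often preferred when few candidates fall into the borderline category.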

TOSCEs give students an indication of what to expect in the examination. TOSCEs have been conducted for only two of the three current OSCEs in our course. At other universities, TOSCEs have been extremely well rated by faculty members and students, as students appreciated the feedback, the opportunity to observe a variety of interaction styles, and the practice.18 Faculty members also find giving feedback to students and their peers useful for their own learning.18 Our experience with TOSCEs has been similar; however, our framework stipulates their use only in the earlier years of the curriculum, as focus group participants believed that by year 3 students should be highly familiar and comfortable with the OSCE format. We have subsequently developed a video to refresh students’ recollection of the process.

The placement of OSCEs within the course, and their progression across the years, was carefully considered. In the framework for years 1-3, OSCEs have been strategically placed in week 9 of a 12-week semester, just before the examination period, to minimize disruption. In year 4, the OSCE is conducted at the end of the examination period to emphasize the “exit” nature of the examination. The BPharm(Hons) education team decided to retain the two existing year 2 OSCEs (communication and cardiovascular health) but to reduce their duration from two days to one day. Only one of the three existing OSCEs was a hurdle requirement (a successful grade is essential for passing the unit). From now on, however, all OSCEs will be hurdles, as students already prepare intensively for OSCEs. Students should also understand that OSCEs play a vital role in assessing competency, communication skills, and knowledge for professional practice and should be approached seriously. Finally, the number of OSCE stations increases each year as students acquire competence in various domains while they progress through the curriculum; the validity and reliability of the assessment also increase with the number of stations.14 The framework will be evaluated by examining students’ year-by-year OSCE performance relative to their performance on other oral assessments, unit evaluation scores and qualitative comments, faculty perceptions, and stakeholder opinions.
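The claim that reliability rises with the number of stations can be made concrete with the Spearman-Brown prophecy formula, a standard psychometric result that is not part of the original study. The Python sketch below assumes stations behave as parallel measures (equally reliable and interchangeable), which real OSCE stations only approximate; the single-station reliability of 0.3 is a hypothetical value chosen for illustration.

```python
import math


def spearman_brown(single_station_reliability: float, n_stations: float) -> float:
    """Predicted reliability of an n-station OSCE from one station's reliability.

    Spearman-Brown prophecy formula: n * r / (1 + (n - 1) * r),
    assuming the stations are parallel measures.
    """
    r = single_station_reliability
    if not 0 < r < 1:
        raise ValueError("reliability must lie strictly between 0 and 1")
    if n_stations <= 0:
        raise ValueError("n_stations must be positive")
    return n_stations * r / (1 + (n_stations - 1) * r)


def stations_needed(single_station_reliability: float, target: float) -> int:
    """Smallest whole number of parallel stations predicted to reach `target`."""
    r = single_station_reliability
    if not 0 < r < 1 or not 0 < target < 1:
        raise ValueError("reliabilities must lie strictly between 0 and 1")
    n = target * (1 - r) / (r * (1 - target))
    # Small tolerance so floating-point noise does not round an exact n upward.
    return max(1, math.ceil(n - 1e-9))


if __name__ == "__main__":
    for n in (4, 8, 12):
        print(f"{n} stations: predicted reliability {spearman_brown(0.3, n):.2f}")
    print("Stations needed for 0.8:", stations_needed(0.3, 0.8))
```

Under these assumptions, moving from four to eight stations raises predicted reliability from about 0.63 to about 0.77, with diminishing returns thereafter, which is one way to weigh additional stations against the resource demands of running OSCEs.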

A limitation of our study is the lack of hospital pharmacists among focus group participants (although some faculty members had previously worked in hospitals); however, 80% of our undergraduates go on to practice in community pharmacy, and the basic interpersonal skills of a pharmacist should be similar across the two sectors. It could be argued that more focus groups were necessary; however, participants in both groups put forward similar ideas, and the highest-ranking frameworks produced by the two groups were extremely similar. Rankings also varied widely, which could indicate professional bias; because the NGT aggregates rankings as means rather than medians, such disparity can influence the results, which is a limitation of the technique. Other limitations of the NGT cited in the literature include the need for extended preparation to clearly identify the information desired from a group, its time-consuming nature, its restriction to a single-topic meeting, and the requirement that all group members agree to use the same structured method.29 However, we did not experience any of these limitations. We did not find the preparation and time required to conduct the focus groups to be overly onerous. Furthermore, the focus on a single topic helped us achieve the study aims efficiently, as participants were able to concentrate on the task at hand rather than being distracted by a series of questions. Finally, no objections were expressed by any of the participants, who were briefed about the process and what it involves before the meetings.

To our knowledge, no comparable OSCE framework has been described in the literature, and we therefore believe this work is highly innovative. Our application of nursing and midwifery guidelines19-22 to the development and implementation of OSCEs in our course has contributed to their further validation and critique. Our application of the national pharmacy learning outcomes3 to OSCEs is also new, and it foreshadows what the Australian Pharmacy Council will require all Australian and New Zealand pharmacy programs to demonstrate about their curricula in the near future. Given the similarities between our national learning outcomes and global competencies for pharmacists, as well as competencies from the United Kingdom, the United States, and Canada, where pharmacy is practiced similarly, the framework developed in our study could be applied or adapted by other pharmacy schools that use OSCEs. Alternatively, pharmacy schools elsewhere could apply the same framework-development process to their OSCEs, or use the NGT for other course or curricular developments. Implementation of the framework has already commenced, with the year 4 exit OSCE recently developed. Last, we have shared the framework and other core development elements with pharmacy schools around the world through PharmAcademy (http://pharmacademy.org/), an online platform that allows the global pharmacy education community to share, discover, acquire, and repurpose content.

CONCLUSIONS

The highest-ranking OSCE frameworks created by focus group participants were similar in nature and were reviewed to produce an OSCE framework for our course. The framework provides a consistent OSCE structure from course entry to exit and ensures graduates meet internship requirements.

ACKNOWLEDGMENTS

This work was funded under the Better Teaching, Better Learning Small Grants Scheme by The Office of the Pro Vice-Chancellor (Learning & Teaching), Monash University. We thank the focus group participants for their valuable contributions.

REFERENCES

- 1. Marriott JL, Nation RL, Roller L, et al. Pharmacy education in the context of Australian practice. Am J Pharm Educ. 2008;72(6):Article 131. doi: 10.5688/aj7206131.

- 2. Mitchell ML, Henderson A, Jeffrey C, et al. Application of best practice guidelines for OSCEs – an Australian evaluation of their feasibility and value. Nurse Educ Today. 2015;35(5):700–705. doi: 10.1016/j.nedt.2015.01.007.

- 3. Stupans I, McAllister S, Clifford R, et al. Nationwide collaborative development of learning outcomes and exemplar standards for Australian pharmacy programmes. Int J Pharm Pract. 2014:1–9. doi: 10.1111/ijpp.12163.

- 4. International Pharmaceutical Federation, FIP Education Initiatives. A global competency framework for services provided by Pharmacy Workforce. Version 1. 2012.

- 5. Competency Development and Evaluation Group. General Level Framework: a framework for pharmacist development in general pharmacy practice. GLF Second Edition. October 2007.

- 6. National Association of Pharmacy Regulatory Authorities. Model standards of practice for Canadian pharmacists. 2003.

- 7. American College of Clinical Pharmacy. ACCP guideline: standards of practice for clinical pharmacists. Pharmacotherapy. 2014;34(8):794–797. doi: 10.1002/phar.1438.

- 8. Boursicot KA, Roberts TE, Burdick WP. Structured assessment of clinical competence. In: Swanwick T, editor. Understanding Medical Education: Evidence, Theory and Practice. Oxford, UK: Wiley-Blackwell; 2010.

- 9. Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured examination. Br Med J. 1975;1(5955):447–451. doi: 10.1136/bmj.1.5955.447.

- 10. Patrício MF, Julião M, Fareleira F, Carneiro AV. Is the OSCE a feasible tool to assess competencies in undergraduate medical education? Med Teach. 2013;35(6):503–514. doi: 10.3109/0142159X.2013.774330.

- 11. Austin Z, O’Byrne C, Pugsley J, Munoz LQ. Development and validation processes for an Objective Structured Clinical Examination (OSCE) for entry-to-practice certification in pharmacy: the Canadian experience. Am J Pharm Educ. 2003;67(3):Article 76.

- 12. Austin Z, Marini A, Croteau D, Violato C. Assessment of pharmacists’ patient care competencies: validity evidence from Ontario (Canada)’s quality assurance and peer review process. Pharm Educ. 2004;4(1):23–32.

- 13. Mitchell M, Strube P, Vaux A, West N, Auditore A. Right person, right skills, right job: the contribution of Objective Structured Clinical Examinations in advancing staff nurse experts. J Nurse Admin. 2013;43(10):543–548. doi: 10.1097/NNA.0b013e3182a3e91d.

- 14. Munoz LQ, O’Byrne C, Pugsley J, Austin Z. Reliability, validity, and generalizability of an objective structured clinical examination (OSCE) for assessment of entry-to-practice in pharmacy. Pharm Educ. 2005;5(1):33–43.

- 15. Harrhy K, Coombes J, McGuire T, Fleming G, McRobbie D, Davies J. Piloting an objective structured clinical examination to evaluate the clinical competency of pre-registration pharmacists. J Pharm Pract Res. 2003;33(3):194–198.

- 16. Lapkin S, Levett-Jones T, Gilligan C. A cross-sectional survey examining the extent to which interprofessional education is used to teach nursing, pharmacy and medical students in Australian and New Zealand universities. J Interprof Care. 2012;26(5):390–396. doi: 10.3109/13561820.2012.690009.

- 17. Aslani P, Krass I. Adherence: a review of education, research, practice and policy in Australia. Pharm Pract. 2009;7(1):1–10. doi: 10.4321/s1886-36552009000100001.

- 18. Brazeau C, Boyd L, Crosson J. Changing an existing OSCE to a teaching tool: the making of a teaching OSCE. Acad Med. 2002;77(9):932. doi: 10.1097/00001888-200209000-00036.

- 19. Jeffrey CA, Mitchell ML, Henderson A, et al. The value of best-practice guidelines for OSCEs in a postgraduate program in an Australian remote area setting. Rural Remote Health. 2014;14(3):2469.

- 20. Mitchell M, Jeffrey C. An implementation framework for the OSCE ‘Best Practice Guidelines’ designed to improve nurse preparedness for practice. Griffith University; 2013:1–81.

- 21. Mitchell ML, Henderson A, Jeffrey C, et al. Application of best practice guidelines for OSCEs – an Australian evaluation of their feasibility and value. Nurse Educ Today. 2015;35(5):700–705. doi: 10.1016/j.nedt.2015.01.007.

- 22. Nulty DD, Mitchell ML, Jeffrey CA, Henderson A, Groves M. Best practice guidelines for use of OSCEs: maximising value for student learning. Nurse Educ Today. 2011;31(2):145–151. doi: 10.1016/j.nedt.2010.05.006.

- 23. Association of Standardized Patient Educators. Pharmacy OSCE toolkit. Accessed January 25, 2016.

- 24. Boursicot K, Roberts T. How to set up an OSCE. Clin Teach. 2005;2(1):16–20.

- 25. Newble D. Techniques for measuring clinical competence: objective structured clinical examinations. Med Educ. 2004;38(2):199–203. doi: 10.1111/j.1365-2923.2004.01755.x.

- 26. Reznick RK, Smee S, Baumber JS, et al. Guidelines for estimating the real cost of an objective structured clinical examination. Acad Med. 1993;68(7):513–517. doi: 10.1097/00001888-199307000-00001.

- 27. Pugh D, Smee S. Guidelines for the development of objective structured clinical examination (OSCE) cases. Ottawa: Medical Council of Canada; 2013.

- 28. Zabar S, Kachur E, Kalet A, Hanley K. Objective Structured Clinical Examinations: 10 Steps to Planning and Implementing OSCEs and Other Standardized Patient Exercises. New York, NY: Springer Science+Business Media; 2013.

- 29. Delbecq AL, Van De Ven A, Gustafson DH. Group Techniques for Program Planning: A Guide to Nominal Groups and Delphi Process. Glenview, IL: Scott Foresman Company; 1975.

- 30. Jones J, Hunter D. Consensus methods for medical and health services research. BMJ. 1995;311(7001):376–380. doi: 10.1136/bmj.311.7001.376.

- 31. Rajiah K, Veettil SK, Kumar S. Standard setting in OSCEs: a borderline approach. Clin Teach. 2014;11(7):551–556. doi: 10.1111/tct.12213.

- 32. Schwartzman E, Hsu DI, Law AV, Chung EP. Assessment of patient communication skills during OSCE: examining effectiveness of a training program in minimizing inter-grader variability. Patient Educ Couns. 2011;83(3):472–477. doi: 10.1016/j.pec.2011.04.001.

- 33. Austin Z, Gregory P, Tabak D. Simulated patients vs. standardized patients in objective structured clinical examinations. Am J Pharm Educ. 2006;70(5):Article 119. doi: 10.5688/aj7005119.

- 34. Austin Z, Gregory PA. Professional students’ perceptions of the value, role, and impact of science in clinical education. Clin Pharmacol Ther. 2007;82(5):615–620. doi: 10.1038/sj.clpt.6100274.