Abstract

Objective. To identify and address areas for curricular improvement by evaluating student achievement of expected learning outcomes and competencies on annual milestone examinations.

Design. Students were tested each professional year with a comprehensive milestone examination designed to evaluate student achievement of learning outcomes and professional competencies using a combination of multiple-choice questions, standardized patient assessments (SPAs), and objective structured clinical examination (OSCE) questions.

Assessment. Based on student performance on milestone examinations, curricular changes were instituted, including an increased emphasis on graded comprehensive cases, OSCE skills days, and use of patient simulation in lecture and laboratory courses. After making these changes, significant improvements were observed in second- and third-year pharmacy students’ grades for the therapeutic case and physician interaction/errors and omissions components of the milestone examinations.

Conclusion. Results from milestone examinations can be used to identify specific areas in which curricular improvements are needed to foster student achievement of learning outcomes and professional competencies.

Keywords: milestone examination, curriculum, assessment, progress examination, objective structured clinical examination (OSCE), standardized patient assessments (SPA)

INTRODUCTION

The Accreditation Council for Pharmacy Education (ACPE) Standards 2007 (Standard 15), as well as the upcoming Standards 2016 (Standard 24), call for schools and colleges of pharmacy to carry out comprehensive knowledge- and performance-based assessment(s) of student achievement of learning outcomes and professional competencies.1,2 This includes assessment plans that measure student readiness to enter into pharmacy practice experiences. Additionally, the ACPE Standards call for schools and colleges to analyze student performance on assessments for continuous curricular improvement to aid student achievement of learning outcomes and professional competencies.1,2

One type of assessment for evaluating student achievement of learning outcomes and professional competencies is annual progress examinations.3 There are several reports on the use of progress examinations in pharmacy education, including MileMarker or milestone examinations.4-10 These are cumulative and comprehensive examinations designed to assess student learning, knowledge retention, and in some instances professional competencies. The composition of the examinations varies from exclusively multiple-choice questions (eg, general knowledge and case-based questions) to those with performance-based assessments through objective structured clinical examination (OSCE)/standardized patient assessment (SPA) activities.4-10 Ideally, no information about the content of the examination is provided to students ahead of time, as assessing student performance immediately following focused study is not the desired intent of these examinations. The advantage of this type of examination over course examinations is that it should provide a more accurate evaluation of the knowledge and competencies acquired and retained by students. The stakes associated with progress examinations have been shown to impact student performance, with students performing better on higher-stakes examinations.5,7 Studies examining the effect of incentives on student performance have shown that negative incentives (eg, remediation, failure to progress) are more effective than positive incentives (eg, rewards such as books or bonus points). Additionally, examinations with negative high-stakes incentives (ie, failure to progress in the curriculum) are more effective than those with low-stakes incentives (ie, remediation).5 While there are several reports on the usefulness of progress examinations as a means for assessing student achievement of learning outcomes and clinical competencies in pharmacy education,4-10 there are no reports on how the results from these examinations can be used for curricular improvements.

The ACPE Standards also call for schools and colleges to analyze student performance on assessments and to use the information gained to institute curricular improvements that facilitate student achievement of learning outcomes and competencies.1,2 There have been several reports over the last few years detailing processes for curricular assessment and improvements.11-14 These processes have largely focused on the use of course measurements (ie, examinations, evaluations) for curricular improvements rather than progress examinations. Content changes based solely on review of individual courses have the potential to miss overarching concepts since individual courses are narrower in scope and time. Progress examinations may provide a more accurate picture of the knowledge that has been both learned and retained by students, as well as professional competencies that are acquired, and it would be beneficial to use these measurements to identify areas for curricular improvement. Herein we demonstrate one successful strategy for how annual milestone examination measurements can be used to drive curricular changes that improve student achievement of learning outcomes and professional competencies.

DESIGN

This is a retrospective study that received approval from the Appalachian College of Pharmacy IRB committee (exempt status). The college’s 3-year doctor of pharmacy (PharmD) program tests students at the end of each professional year with comprehensive milestone examinations. Participants in this study were the students enrolled at the college during the 2010-2011 through 2014-2015 academic years (class sizes varied from 62 to 77 students). Although milestone examinations for first professional year (P1) and second professional year (P2) students had been used prior to the 2010-2011 academic year, this was the first year that a third professional year (P3) student milestone examination was used.

Milestone examinations were administered annually near the conclusion of the P1, P2, and P3 years. Each examination was a combination of written and simulation exercises that consisted of the following components: multiple-choice questions on general knowledge, laboratory compounding and calculations, patient counseling, physician interaction/errors and omissions (E&O), and a written therapeutic case.

Faculty representatives from both the pharmaceutical sciences (3-4 members) and pharmacy practice (5-6 members) departments were selected by the dean to serve on the milestone committee, including several members of the curriculum committee. Examination questions, patient care scenarios, and grading rubrics were prepared and peer reviewed by faculty members on the milestone examination committee. The format for the various milestone examination components remains consistent each year; however, specific content varies. The therapeutic topics for the patient counseling, physician interaction/E&O, and written therapeutic case are agreed upon by committee members after a discussion regarding suspected curricular strengths and weaknesses.

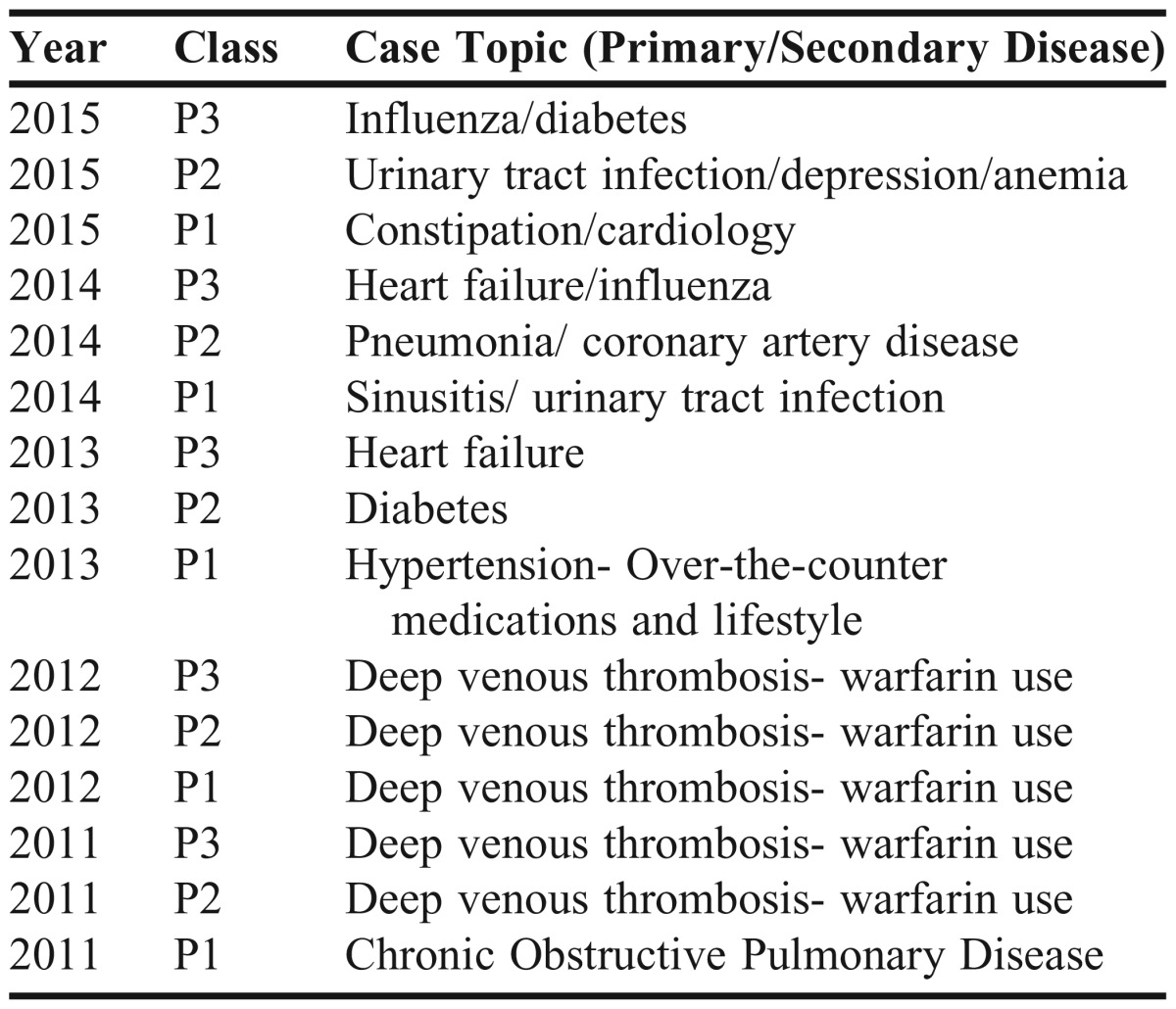

Topics chosen during the course of this study are listed in Appendix 1. The committee chose content that focused on perceived student weaknesses. Once topics were selected, assigned committee members reviewed course content, textbooks, and primary literature to create an examination that was consistent with previously taught material and clinical practice. From conception to finalized product, the milestone examination required multiple committee discussions over at least 3 months. Each component of the examination was then mapped to the college’s learning and ability-based outcomes (ABOs). All examination components were initially administered on a single day; however, more recently, the examinations were administered over 2 days wherein the multiple-choice questions (60-100 questions; 75-100 minutes) and laboratory compounding and calculations (15-25 questions; 60-75 minutes) are administered on day 1 and the OSCE/SPA components (patient counseling, physician interaction/E&O, written therapeutic case), each covering a single topic, are administered on day 2 (1-7 days later). All OSCE/SPA components are graded using standardized grading rubrics. The focus of this study was on the OSCE/SPA components (patient counseling, physician interaction/E&O, therapeutic case) of the examinations.

The examination composition breakdown and time limits over the period 2011-2015 for the OSCE/SPA components were as follows: patient counseling (1 topic, 20 min); physician interaction/prescription E&O (1 phone call to a faculty member playing the role of the physician, 20 min); and written therapeutic case (short answer question format, 70 min). For the patient-counseling component, faculty members and/or outside actors posed as patients in a community pharmacy setting. Students approached the mock patient and through verbal communication were given clues to a clinical scenario. The student was responsible for eliciting the desired information from the patient and then administering proper counseling. Students were expected to provide medication recommendations to the patient and counsel the patient about pharmacologic and nonpharmacological therapies.

During the physician interaction/E&O component, students were provided with patient information available in a community pharmacy setting (date of birth, allergies, previous prescription medications, etc) and at least one prescription (written, faxed, or phoned in) that had to be assessed for completeness and clinical merit. The student was expected to call the physician (played by a faculty member) to discuss any identified errors or clinical concerns and make a recommendation to resolve the issue. In the written therapeutic case, students were presented with patient history, laboratory values, home medications, and other pertinent information. The case then led the student through a series of structured questions tailored to the academic year, which included such tasks as creating a prioritized problem list, creating a treatment plan, discussing drug mechanism of action or side effect profiles, and patient counseling.

Specific changes in the design for each of the OSCE/SPA components over years 1-5 are provided in Table 1, along with the stakes associated with each year’s examination. Students were required to earn a score of 70% or better to achieve competency for each examination component. KaleidaGraph was used to calculate the mean, median, and standard deviation of student scores for each examination component. The p values comparing student scores in years 1 and 5 for each class (P1-P3) were calculated using the analysis of variance (ANOVA) function (alpha=.05) in KaleidaGraph. Post hoc analyses (Tukey honest significant difference [HSD] and Bonferroni) were used to corroborate significant ANOVA findings.
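The descriptive statistics and the year 1 vs year 5 comparison described above can be illustrated with a minimal sketch. It is not the KaleidaGraph workflow used in the study: the function names `summarize` and `one_way_anova_f` and the score lists are hypothetical, the standard deviation is assumed to be the sample standard deviation, and only the F statistic is computed (a p value would additionally require the F distribution).

```python
from statistics import mean, median, stdev

COMPETENCY_CUTOFF = 70.0  # percent; the competency threshold stated in the text


def summarize(scores, cutoff=COMPETENCY_CUTOFF):
    """Return mean, median, sample SD, and percent of students at or above cutoff."""
    n_competent = sum(1 for s in scores if s >= cutoff)
    return {
        "mean": mean(scores),
        "median": median(scores),
        "sd": stdev(scores),  # sample SD is an assumption, not stated in the source
        "competency_pct": 100.0 * n_competent / len(scores),
    }


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA across groups."""
    all_scores = [s for g in groups for s in g]
    grand_mean = mean(all_scores)
    # Between-group sum of squares: group size times squared distance of
    # each group mean from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: squared distance of each score from
    # its own group mean.
    ss_within = sum((s - mean(g)) ** 2 for g in groups for s in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up scores for illustration only; these are not data from the study.
year1 = [60.0, 65.0, 70.0, 75.0]
year5 = [80.0, 85.0, 90.0, 95.0]

year1_summary = summarize(year1)
f_stat, df_between, df_within = one_way_anova_f([year1, year5])
```

With only two groups, as in a year 1 vs year 5 comparison, the F statistic equals the square of the corresponding two-sample t statistic, so the ANOVA and a pooled-variance t test lead to the same p value.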

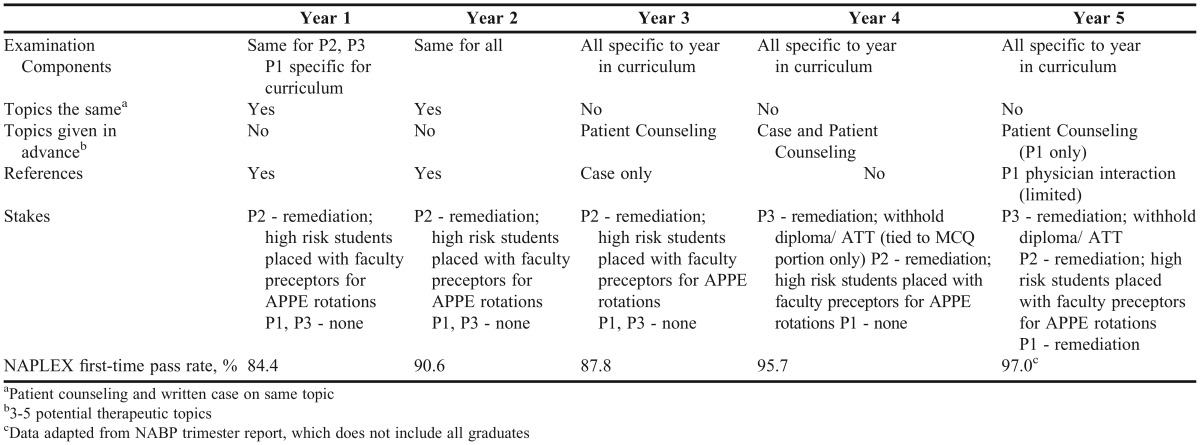

Table 1.

Summary of Changes Made to Milestone Examination Components and Stakes Over a Five-Year Period

A retrospective analysis of all therapeutics and patient assessment courses in the P2 year as well as the communications and nonprescription drug courses in the P1 year was conducted to determine the number of graded therapeutic cases, skills days and activities, and patient simulation cases in the curriculum. A significant increase in the use of patient simulation in didactic courses was expected since the college acquired a patient simulator (SimMan) in 2012, which contributed to the decision to select 2010-2011 as the baseline year (year 1) for this study. While a large number of therapeutic cases were used throughout the curriculum as active-learning assignments, for the purposes of this study only graded cases listed as a component of final course grade calculations or as part of skills days were included since these are of higher rigor and have more reliable tracking. For each skills day, the number of skills-related activities was tabulated and categorized. Examples of skills-related activities on skills days include therapeutic cases, patient simulation, patient assessment, patient counseling (prescription and nonprescription drugs), patient education, prescription errors and omissions, device instruction/demonstration, literature search and evaluation, and drug information requests.

EVALUATION AND ASSESSMENT

At the end of each professional year (P1-P3), a comprehensive milestone examination that included OSCE/SPA questions was administered to students. The focus of this study was the OSCE and SPA components of the examinations, specifically patient counseling, physician interaction/E&O, and therapeutic case components. In year 1 of the study, the students were allowed to use references for all three OSCE/SPA components (Table 1). The components for all classes were the same. Additionally, topics and questions for the P2 and P3 students were the same, as this allowed for measurement of retention of knowledge while on clinical rotations, while topics for the P1 class were modified to align with courses they had completed. There were no stakes associated with the P1 and P3 examinations in year 1 of the study, and only low stakes (remediation) associated with the P2 examination. Additionally, high-risk students (those scoring 1 or more standard deviations below the mean composite [MCQ+laboratory+OSCE/SPA] score) were placed with faculty preceptors for their APPEs in the following academic year.

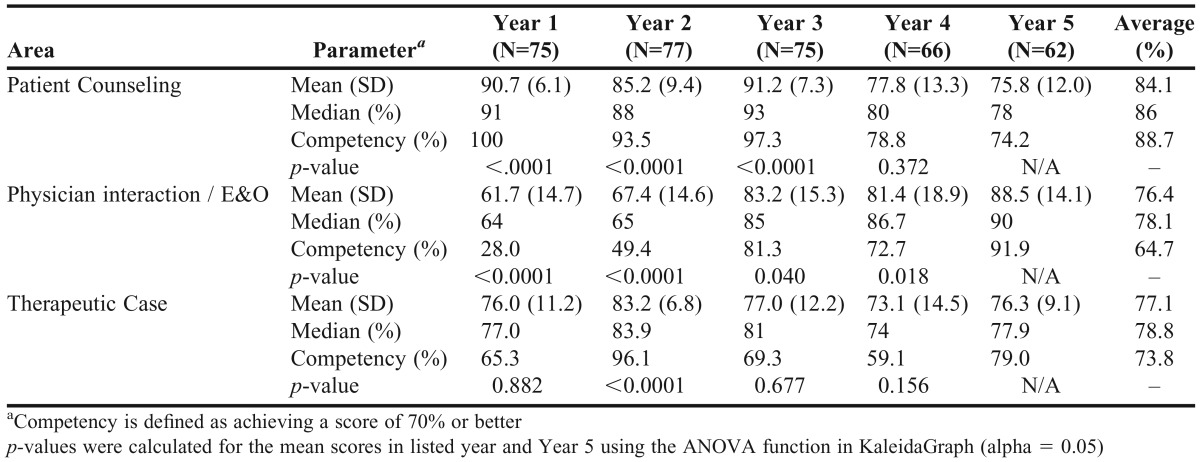

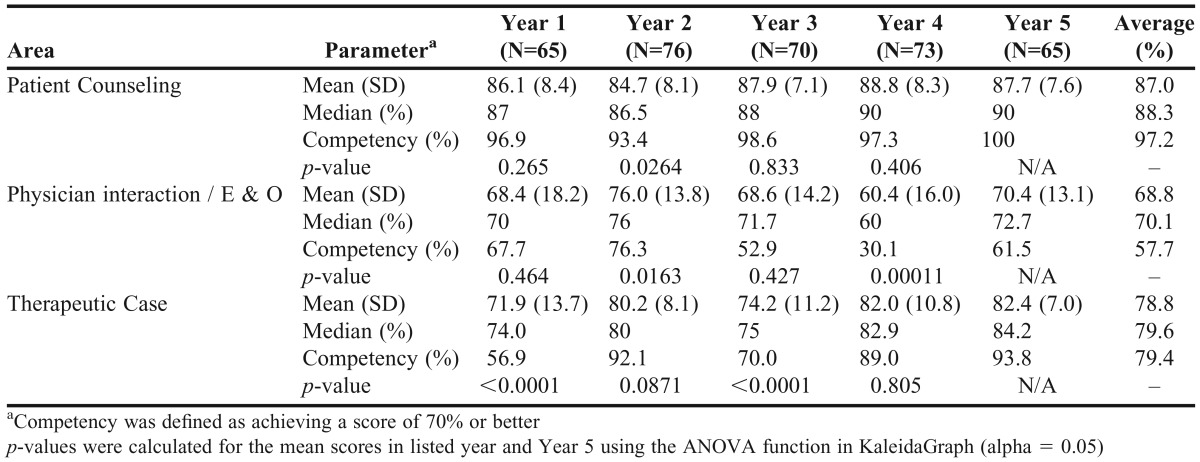

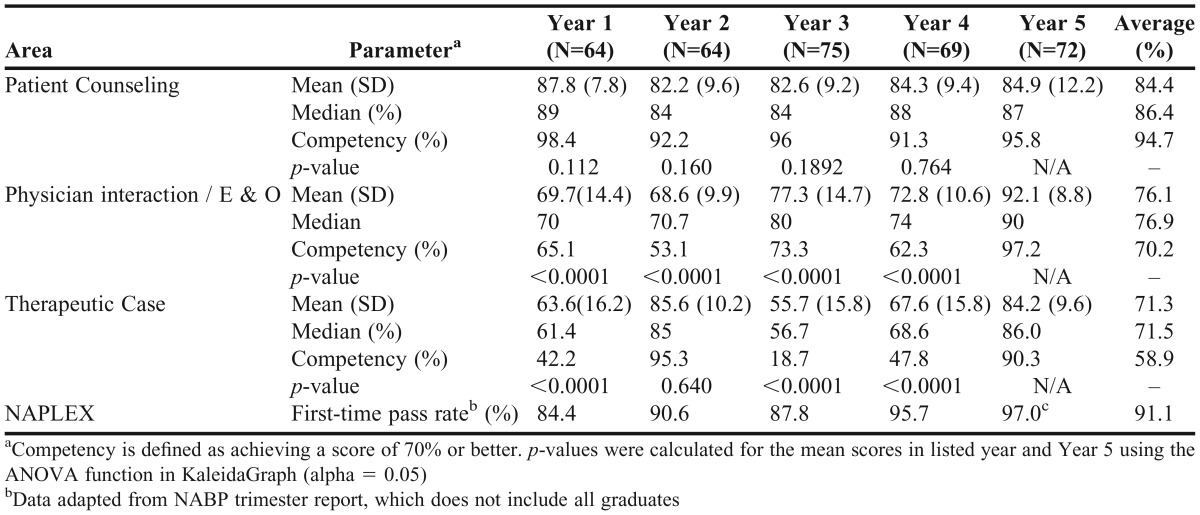

Results from the milestone examinations in years 1-5 of the study are shown in Tables 2-4. In year 1 of the study, students in all three years (P1-P3) performed well on the patient-counseling component (mean 86.1%-90.7%) with 87%-91% of students in each class (P1-P3) meeting competency requirements of 70% or better. Student performance on the therapeutic case (mean 63.3%-76.0%) was lower than patient counseling with only 42.2%-65.3% of students meeting competency requirements. Class performance on the physician interaction/E&O component was the lowest of these components for all three classes (mean 61.7%-69.7%) with only 28.0%-67.7% of students achieving competency on this component.

Table 2.

Summary of Student Performance on P1 Milestone Examinations

Table 3.

Summary of Student Performance on P2 Milestone Examinations

Table 4.

Summary of Student Performance on P3 Milestone Examinations

After the milestone examinations in year 1, the college instituted a number of changes to both the milestone examinations and the curriculum to increase the rigor of the examination and to improve student achievement of learning outcomes and professional competencies. A summary of the changes made to the milestone examination with respect to the patient counseling, physician interaction/E&O, and therapeutic case components during the 5 years of this study is provided in Table 1. Specifically, changes to the examination centered on student use of references during the examination. In year 1, students were allowed to use references on the patient counseling, physician interaction/E&O, and therapeutic case components of the milestone examinations. However, we found this hampered the ability of the examination to assess student achievement of learning outcomes related to knowledge.

Because of this, over the five-year study period, the use of references was gradually removed from all three of these examination components as outlined in Table 1. The increase in the difficulty of the examination that resulted from the removal of references was initially compensated for by providing the students with a list of potential topics ahead of time, so that they could narrow the focus of their examination preparation. As the students became more comfortable and proficient with the new examination process, the practice of providing potential topics ahead of time was gradually phased out (Table 1).

To improve student performance on the OSCE/SPA components of the milestone examinations, a number of curricular changes were instituted. Specifically, there was an increase in the number of graded comprehensive cases, OSCE skills days (includes patient counseling), and emphasis on the use of patient simulation in lecture-based courses. This was accomplished primarily through the existing communications and OTC courses in the P1 year, and the therapeutics and patient assessment courses in the P2 year. As mentioned above, an increase in the use of patient simulation in lecture-based courses was expected since the college acquired a patient simulator in 2012.

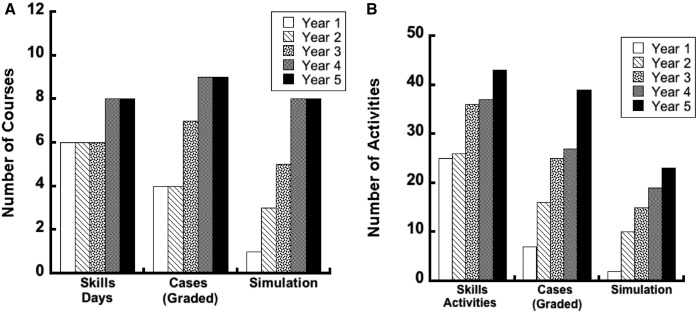

A summary of the curricular changes is shown in Figure 1. Over the five-year period examined, the number of courses with OSCE/SPA activities (Figure 1A), including skills days, therapeutic cases, and patient simulation increased. The number of courses with skills days increased from 6 to 8, graded therapeutic cases increased from 4 to 9, and patient simulation increased from 1 to 9. Additionally, over this time period there were increases in the total numbers of skills activities from 25 to 43, graded therapeutic cases from 7 to 39, and patient simulation activities from 2 to 23 (Figure 1B). Importantly, a physician call was added to the curriculum in the communications course for the first time in the 2012-2013 academic year.

Figure 1.

OSCE/SPA-related activities in didactic courses over years 1-5. (A) Number of courses with OSCE/SPA-related activities. (B) Total number of OSCE/SPA-related activities.

Following the curricular changes, there was a significant increase in performance on the physician interaction/E&O component in the P1 (mean=88.5%, p<.001) and P3 (mean=92.1%, p<.001) classes, with 90%-97% of students achieving competency, while this component remained the most difficult component for the P2 students (mean=70.4%), with only 61.5% of students achieving competency. Importantly, the increase in student performance on this component of the examination in the P1 year (Table 2) took place in year 3, which is when the physician interaction (phone call) was incorporated into the communications course. A significant increase in performance on the P1 physician interaction was observed in the years in which the physician interaction activity was included in the curriculum (years 1 and 2 of the study: mean=64.5%, competency=38.7% vs years 3-5 of the study: mean=84.4%, competency=82.0%; p<.001). Additionally, the students in the first class with the physician interaction incorporated into the curriculum also had the highest score on this component of the P3 milestone (years 1-4: mean=72.1%, competency=63.5% vs year 5: mean=92.1%, competency=97.2%).

Importantly, a significant increase in performance on the P2 milestone was observed following the increases in these activities (years 1-3: mean=75.7%, competency=73%; years 4-5: mean=82.2%, competency=91.4%; p<.001). This level of performance was maintained by students in the P3 year (mean=84.2%, competency=90.3%). The high scores for the written case on the P2 and P3 milestone examinations may be attributed in part to the fact that the written cases used in years 1 and 2 of the study were the same (Appendix 1). If the data for year 2 are excluded, the increase in performance following the curricular changes is more striking for the P2 (years 1-3: mean=73.1%, competency=63.5% vs years 4-5: mean=82.2%, competency=91.4%; p<.001) and P3 (years 1, 3-4: mean=62.3%, competency=36.2% vs year 5: mean=84.2%, competency=90.3%; p<.001) classes. There was not a significant change in scores on the P1 milestone examination, as most of the graded cases and patient simulation activities occur in the P2 year.

Results from the milestone examinations in year 5 of the study are shown in Tables 2-4. In spite of the presumed increase in examination difficulty because of the students’ inability to use resources, students in the P2 and P3 years maintained a high level of performance on the patient counseling component (mean = 84.9%-87.7%) with 95.8%-100% of students in each class meeting competency requirements. Student performance for P1 counseling decreased with the increased examination difficulty (mean=75.8%, competency=74.2%). Student performance on the physician interaction/E&O component significantly improved for students in the P1 (mean=88.5%, p<.001) and P3 (mean = 92.1%, p<.001) classes with 90%-97% of students achieving competency. The physician interaction/E&O component remained the most difficult component for the P2 students (mean 70.4%), with only 61.5% of students achieving competency. Lastly, student performance on the therapeutic case significantly improved for students in the P2 (mean 82.4%, p<.001) and P3 classes (mean 63.6% vs. 84.2%, p<.001) with 90%-94% of students meeting competency requirements. Although there was not a significant improvement in the mean/median for therapeutic case in the P1 year (mean: 76.0% vs. 76.3%, p=0.88), the percentage of students meeting competency increased from 65.3% to 79.0%.

DISCUSSION

Annual progress examinations are one type of assessment used by pharmacy schools to measure student achievement of learning outcomes, knowledge retention, and professional competencies. The Appalachian College of Pharmacy administers multi-component milestone examinations at the end of each professional year (P1-P3) that include three OSCE/SPA components: patient counseling, physician interaction/E&O, and a written therapeutic case. Evaluation of student performance on the patient-counseling component of the milestone examinations in year 1 (Tables 2-4) revealed a very high level of performance (86.1%-90.7%) and competency rate (87%-91%). Point losses on this component of the examinations are largely attributed to knowledge deficits rather than to communication skills. Although these results are quite satisfactory, students were given a listing of potential counseling topics at least a week prior to the examination. Students were encouraged to research and prepare for each topic. Additionally, simple drug information resources (eg, LexiComp, Micromedex) were supplied at the counseling station. Knowledge of potential topics and the ability to use resources during this component complicated our ability to accurately assess whether students were achieving the desired learning outcomes.

For example, did a student score higher on the assessment because they retained information taught in class (achieved the desired learning outcomes), or did they score higher because they were better at creating a study guide and memorizing information (unintended outcome)? Consequently, over a four-year period the rigor of the patient-counseling component of the examination was increased by eliminating the use of resources and maintaining confidentiality of test topics. The increase in examination rigor was compensated for by an increase in patient counseling experiences in didactic courses, thereby enabling students to maintain a high level of performance on this component of the examination in the P2 and P3 classes (years 4 and 5 of the study) in spite of the perceived increase in difficulty associated with loss of planning and resources. There was a decrease in performance on this component in the P1 class (years 4 and 5 of the study) with the removal of references. We expected this finding since the majority of the counseling opportunities take place during the P2 year.

Unlike the prescription in the P1 year, prescriptions on the P2 and P3 milestone examinations in year 5 involved controlled substances; therefore, students were also tested on their knowledge of federal laws for dispensing controlled substances. Students scored an average of 86.9% on the physician rapport part of the P2 milestone examination (ie, communicating appropriately, making a recommendation). However, the average decreased to 41.5% with respect to identifying the E&O on the prescription, with the failure to include a cautionary statement on the prescription bottle label being the number one reason for loss of points. Although students performed well overall on the P3 milestone examination, the majority of missed points resulted from not knowing the legal requirements for dispensing Suboxone. These findings highlight the need for information related to pharmacy law to be better emphasized and indicate that more E&O activities, especially those related to controlled substances, should be incorporated into more coursework and capstone courses. We expect that students’ ability to identify errors and omissions on prescriptions will improve as they are afforded more opportunities to practice this type of activity.

We were concerned over student performance on the written therapeutic case in year 1, especially the P3 milestone examination. We attributed this poor performance in part to unfamiliarity with process and expectations for graded written cases. Therefore, faculty members increased the number of graded therapeutic cases and other OSCE/SPA activities in the curriculum (Figure 1), with the majority of these activities occurring in the P2 year. Following the increase in these activities, a significant improvement in student performance on this component of the milestone examinations was observed (Tables 2-4). The largest increase in OSCE/SPA activities, including graded cases, occurred in years 4 and 5 (Figure 1).

The college’s remediation strategies have evolved over time based on discussions among the milestone examination committee, the dean, and the faculty. Initially, students with composite scores (MCQ+laboratory+OSCE/SPA components) one standard deviation or more below the class mean were required to complete cases available through Access Pharmacy, an online reference from McGraw-Hill Medical (www.accesspharmacy.com). The decision to remediate based on the composite scores, as opposed to individual components, was made to minimize faculty members’ workload. However, over the last two years this strategy has evolved into identifying and improving area(s) of weakness, such that students are now required to remediate any component where they do not achieve a score of 70% or greater. This decision was made because increasing examination stakes (Table 1) has been shown to increase student performance,5,7 and faculty members felt that it was in the best interest of students to ensure that they were prepared for APPEs and the North American Pharmacist Licensure Examination (NAPLEX). Currently, students who do not achieve passing scores on any OSCE/SPA components of the P1 and P2 milestone examinations remediate that component by completing quiz banks (with a specified passing score of 85%) on the related therapeutic topic(s) in Access Pharmacy (Top 200 Drugs Challenge) or the American Pharmacists Association (APhA) (APhA Complete Review for Pharmacy). This remediation takes place after all examinations have been graded and the milestone examination committee has met to review and discuss the results (approximately 7 days after the completion of the examination). While the number of students requiring remediation per class has increased from 0 to 45 with the transition to remediating each component for all years, the use of available quiz banks has kept this process from being labor intensive. For P3 students, we are also able to use the RxPrep (Manhattan Beach, CA) modules and question database. Since the stakes on the P3 milestone examination were increased, the college’s NAPLEX first-time pass rate (Table 1) has increased and is now above the national average.

As expected, there is some degree of student anxiety associated with comprehensive milestone examinations since these examinations are vastly different from typical course examinations. However, the incorporation of more graded cases and opportunities for patient counseling and healthcare provider interaction into the curriculum, particularly through the use of course skills days, has lessened the anxiety so that students now handle these situations with more composure. Over the years, students have appreciated the changes we have made. While the preparation of skills days and graded therapeutic cases increases faculty workload to some degree, faculty members have embraced the changes because of the positive results we have seen during courses, APPEs, and on the NAPLEX.

One limitation of this study is that while the content components of the examination are the same for all classes within a year, the specific therapeutic topics and questions are not the same between different years for any given class level (eg, P3, Appendix 1). Although using identical topics and questions each year would be ideal in theory, it is not practical since the students would know the topics to prepare for ahead of time, which defeats the purpose of using the milestone examination. A second limitation of this study is that the stakes across all years are not the same. This is important because the stakes associated with progress examinations have been shown to impact student performance.5,7 The largest change in the examination stakes occurred with the milestone examination for P3 students, where there was a change from no stakes in years 1-3 of the study to high stakes in years 4-5 (remediation+withhold diploma, authorization to test) (Table 1).

Since making the transition to a high-stakes examination, the college has seen its highest first-time pass rates on the NAPLEX. This finding is consistent with previous studies showing that students perform better with high (negative) stakes. We feel that the college should move towards making all three examinations high-stakes by making successful completion of the milestone examination required for progression into the experiential components of the curriculum. This would involve progression of students from P1 to P2 Core Pharmacy Practice Experiences (CPPEs), which consist of two three-week clinical rotations (one institutional and one community pharmacy), and progression from P2 to P3 APPEs.

CONCLUSIONS

Although typically used as a tool for assessing student achievement of learning outcomes and professional competencies, evaluation of milestone examinations can also be used to highlight curricular areas that need improvement. We report on a process for using the critical evaluation of student performance on comprehensive annual milestone examinations to drive curricular improvements that increase student achievement of desired learning outcomes and professional competencies. The approach used in this study can be adopted by other schools to identify areas for curricular improvements that will facilitate student achievement of learning outcomes and competencies.

ACKNOWLEDGMENTS

The authors thank the faculty at Appalachian College of Pharmacy for their time and contribution to the milestone examinations and curricular activities, as well as Tina Fletcher for her assistance in compiling the statistics for OSCE/SPA-related activities in didactic courses.

Appendix 1. Topics for Written Case

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. Standards 2007. https://www.acpe-accredit.org/standards/. Accessed October 14, 2015.

- 2. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. Standards 2016. https://www.acpe-accredit.org/pharmd-program-accreditation/. Accessed December 5, 2016.

- 3. Plaza CM. Progress examinations in pharmacy education. Am J Pharm Educ. 2007;71(4):Article 66. doi: 10.5688/aj710466.

- 4. Szilagyi JE. Curricular progress assessments: the MileMarker. Am J Pharm Educ. 2008;72(5):Article 101. doi: 10.5688/aj7205101.

- 5. Sansgiry SS, Chanda S, Lemke T, Szilagyi JE. Effect of incentives on student performance on MileMarker examinations. Am J Pharm Educ. 2006;70(5):Article 103. doi: 10.5688/aj7005103.

- 6. Sansgiry SS, Nadkarni A, Lemke T. Perceptions of PharmD students towards a cumulative examination: the MileMarker process. Am J Pharm Educ. 2004;68(4):Article 93.

- 7. McDonough SLK, Kleppinger EL, Donaldson AR, Helms KL. Going “high stakes” with a pharmacy OSCE: Lessons learned in the transition. Curr Pharm Teach Learn. 2015;7(1):4–11.

- 8. Hardinger K, Garavalia L, Graham MR, et al. Enrollment management strategies in the professional pharmacy program: a focus on progression and retention. Curr Pharm Teach Learn. 2015;7(2):199–206.

- 9. Alston GL, Haltom WR. Reliability of a minimal competency score for an annual skills mastery assessment. Am J Pharm Educ. 2013;77(10):Article 211. doi: 10.5688/ajpe7710211.

- 10. Alston GL, Love BL. Development of a reliable, valid annual skills mastery assessment examination. Am J Pharm Educ. 2010;74(5):Article 80.

- 11. Ried LD. A model for curricular quality assessment and improvement. Am J Pharm Educ. 2011;75(10):Article 196. doi: 10.5688/ajpe7510196.

- 12. Peterson SL, Wittstrom KM, Smith MJ. A course assessment process for curricular quality improvement. Am J Pharm Educ. 2011;75(8):Article 157. doi: 10.5688/ajpe758157.

- 13. Letassy NA, Medina MS, Britton ML, Dennis V, Draugalis JR. A progressive, collaborative process to improve a curriculum and define an assessment program. Am J Pharm Educ. 2015;79(4):Article 55. doi: 10.5688/ajpe79455.

- 14. Conway SE, Medina MS, Letassy NA, Britton ML. Assessment of streams of knowledge, skill, and attitude development across the doctor of pharmacy curriculum. Am J Pharm Educ. 2011;75(5):Article 83. doi: 10.5688/ajpe75583.