Abstract

Introduction

Screening and recruitment for clinical trials can be costly and time-consuming. Inpatient trials present additional challenges because enrollment is time-sensitive based on length of stay. We hypothesized that using an automated pre-screening algorithm to identify eligible subjects would increase screening efficiency and enrollment and be cost-effective compared to manual review of a daily admission list.

Methods

Using a before-and-after design, we compared time spent screening, number of patients screened, enrollment rate, and cost-effectiveness of each screening method in an inpatient diabetes trial conducted at Massachusetts General Hospital. Manual chart review (CR) involved reviewing a daily list of admitted patients to identify eligible subjects. The automated pre-screening method (APS) used an algorithm to generate a daily list of patients with glucose levels ≥180 mg/dL, an insulin order, and/or an admission diagnosis of diabetes mellitus. The resulting census was then manually screened to confirm eligibility and eliminate patients who met our exclusion criteria. We determined rates of screening and enrollment and the cost-effectiveness of each method based on study sample size.

Results

Total screening time (pre-screening and screening) decreased from 4 to 2 hours, allowing subjects to be approached earlier in the course of the hospital stay. The average number of patients pre-screened per day increased from 13 ± 4 to 30 ± 16 (P<0.0001). Rate of enrollment increased from 0.17 to 0.32 patients per screening day. Developing the computer algorithm added a fixed cost of $3,000 to the study. Based on our screening and enrollment rates, the algorithm was cost-neutral after enrolling 12 patients. Larger sample sizes further favored screening with an algorithm. By contrast, higher recruitment rates favored individual chart review.

Limitations

Because of the before-and-after design of this study, it is possible that unmeasured factors contributed to increased enrollment.

Conclusion

Using a computer algorithm to identify eligible patients for a clinical trial in the inpatient setting increased the number of patients screened and enrolled, decreased the time required to enroll them, and was less expensive. Upfront investment in developing a computerized algorithm to improve screening may be cost-effective even for relatively small trials, especially when the recruitment rate is expected to be low.

Keywords: Algorithm, screening, recruitment, inpatient trial, health information technology

Introduction

Subject recruitment is one of the major challenges encountered in the conduct of clinical trials. The inability to enroll subjects in a timely manner often leads to increased costs and “lower-quality studies” (1), since successful recruitment has a great impact on the quality of a clinical trial (2). Inadequate sample sizes decrease the statistical power and thus the usefulness of a trial’s results once it is completed (3). Inpatient clinical trials, while seemingly providing an abundance of potential subjects, may paradoxically present additional obstacles. Lengthy recruitment procedures have been identified as one of the primary reasons behind slow and inadequate recruitment (4,5). In inpatient trials, recruitment is especially time-sensitive and limited by the decreasing lengths of stay and increasing intensity of services provided in the hospital. Due to ineffective screening methods or lack of patient availability on care units, eligible subjects may be screened and/or approached closer to the time of discharge from the hospital, which renders them ineligible for an inpatient intervention. Methods that identify eligible inpatients as early in the course of the hospital stay as possible are critical for effective subject recruitment.

In order to increase enrollment for an inpatient randomized clinical trial, we developed a computerized algorithm to facilitate pre-screening. We hypothesized that the automated pre-screening algorithm (APS) would be a more efficient and cost-effective method of identifying and subsequently recruiting eligible inpatients compared to a method based on a manual chart review (CR). To test this hypothesis we measured and compared the yield and cost of the two screening strategies.

Methods

The Inpatient Diabetes Management for Outpatient Glycemic Control Trial (ClinicalTrials.gov registration number NCT00869362) was conducted at Massachusetts General Hospital (MGH), a 900-bed tertiary care hospital in Boston. The trial tested the hypothesis that an inpatient diabetes-specific medication and education intervention would improve glycemic control 6 months after discharge in patients with poorly controlled diabetes admitted to the hospital for reasons other than uncontrolled diabetes. Consenting patients with hemoglobin A1c (HbA1c) > 7.5% admitted to acute general medical or surgical units for reasons other than hyperglycemia were randomly assigned either to an inpatient diabetes management intervention or to usual care. Usual care could include endocrinology consultation if desired. The study was approved by the Partners Healthcare Institutional Review Board (IRB).

Two methods were employed to identify eligible patients: manual chart review (CR) and an automated pre-screening algorithm (APS). IRB approval included permission to pre-screen all MGH diabetes inpatients. CR entailed reviewing a daily admission list of all inpatients in general medical and several surgical services to pre-screen for potential subjects with a known diagnosis of type 2 diabetes mellitus. As potential subjects were identified, the screener determined their trial eligibility. This time-consuming process took the first half of the work day, prompting study investigators to develop automated screening methods.

APS consisted of automated pre-screening performed by a computerized query algorithm, followed by manual screening. The algorithm was designed to identify all current inpatients who matched at least one of the following criteria: 1) a principal or associated diagnosis of “diabetes mellitus,” 2) a blood glucose level over 180 mg/dL, or 3) an order for insulin. We added the criterion of an insulin order to the algorithm to increase sensitivity for eligible patients who lacked a coded principal or associated diagnosis of diabetes mellitus. The query was developed in Structured Query Language, a database language designed for managing data in relational databases. The query was not applied directly to the electronic medical record to avoid impact on the performance of the Electronic Health Record (EHR) databases. Instead, the query gathered data for each inpatient using multiple web services to create a searchable table of inpatients with the key elements driving the algorithm. Once the query was written, its execution was automated to generate an updated daily census identifying all inpatients meeting the specified criteria. This census, in turn, was reviewed by the research coordinator to confirm eligibility and eliminate patients who met the exclusion criteria. The algorithm was not refined after its initial development.
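The pre-screening step described above can be illustrated with a minimal sketch. It assumes a staging table of current inpatients like the "searchable table" the query populated; the table name, column names, and sample rows below are hypothetical, since the article does not describe the actual schema. The glucose comparison follows the wording in this section ("over 180 mg/dL").

```python
import sqlite3

# Hypothetical staging table (the real schema is not described in the article).
# has_dm_dx:       1 if a principal or associated diagnosis of diabetes mellitus is coded
# max_glucose:     highest blood glucose this admission in mg/dL, NULL if none recorded
# has_insulin_ord: 1 if an insulin order exists
SCHEMA = """
CREATE TABLE inpatient_snapshot (
    patient_id      TEXT PRIMARY KEY,
    has_dm_dx       INTEGER NOT NULL,
    max_glucose     REAL,
    has_insulin_ord INTEGER NOT NULL
)
"""

# A patient is listed when at least one of the three criteria is met.
PRESCREEN_QUERY = """
SELECT patient_id
FROM inpatient_snapshot
WHERE has_dm_dx = 1
   OR max_glucose > 180
   OR has_insulin_ord = 1
ORDER BY patient_id
"""


def build_census(rows):
    """Load inpatient rows into an in-memory table and return the pre-screen list."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(SCHEMA)
        conn.executemany(
            "INSERT INTO inpatient_snapshot VALUES (?, ?, ?, ?)", rows
        )
        return [row[0] for row in conn.execute(PRESCREEN_QUERY)]
    finally:
        conn.close()


if __name__ == "__main__":
    example_rows = [
        ("A", 1, 110.0, 0),  # coded diabetes diagnosis
        ("B", 0, 240.0, 0),  # elevated glucose only
        ("C", 0, None, 1),   # insulin order only, no glucose on file
        ("D", 0, 95.0, 0),   # meets no criterion
    ]
    print(build_census(example_rows))
```

The resulting list corresponds to the daily census that the research coordinator then reviewed by hand; exclusion criteria are deliberately left out of the query, as they were in the study.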

CR was used for 63 days and APS was subsequently used for 62 days. We recorded the age, sex and reason(s) for exclusion of all patients screened. Demographic variables, reasons for admission, and comorbidities were obtained from the medical records. Reasons for exclusion were defined as follows: 1) Primary hyperglycemia: an admission diagnosis of diabetic ketoacidosis or hyperosmolar hyperglycemic syndrome; 2) Central nervous system condition: inability to participate due to a psychiatric diagnosis, delirium, or dementia; 3) Life expectancy less than one year; 4) Language barrier with inability to procure interpreter services in a timely fashion; 5) HbA1c < 7.5%; 6) Administrative reasons: short length of stay, patient’s attending or consulting physician refused patient participation, patient’s primary residence out of state; 7) Severe liver or kidney disease; 8) Other comorbidities that could potentially increase the risk of hypoglycemia when insulin would be administered without close follow up; and 9) Unreliable HbA1c value due to a need for or recent blood transfusion, since HbA1c was the main outcome variable of the study.

Statistical Methods

Using a before-and-after design, we compared the time spent screening, number of patients screened, enrollment rate, and cost-effectiveness of each screening method from the perspective of cost of study conduct by sample size. Screening cost was calculated for different sample sizes using the cost per day and enrollment rate of each method. The cost per day was calculated by multiplying the hourly wage of the screener, or research coordinator, by the number of hours spent screening. We used the rate of enrollment to determine the number of screening days necessary to enroll various sample sizes. We estimated the total screening costs by multiplying the cost per day by the number of days required to achieve a given sample size. The following formulae concisely describe the calculations:
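Written out from the description above, with symbols introduced here for illustration ($w$ is the screener's hourly wage, $h_m$ the hours spent screening per day with method $m$, $r_m$ the enrollment rate in subjects per screening day, $N$ the target sample size, and $F_m$ any fixed development cost, which is zero for chart review):

$$
\begin{aligned}
\text{Cost per day}_m &= w \times h_m \\
\text{Screening days}_m(N) &= \frac{N}{r_m} \\
\text{Total screening cost}_m(N) &= F_m + \text{Cost per day}_m \times \text{Screening days}_m(N)
\end{aligned}
$$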

The algorithm added a fixed cost of $3,000 to the study. To determine the cost-effectiveness of the algorithm, we performed sensitivity analyses varying the planned sample size and the algorithm cost from $3,000 to $10,000. We tested the difference between continuous variables using t-tests and between categorical variables using chi-square tests. Analyses were performed with SAS Version 9.2 (Cary, NC).
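The cost calculation can be sketched in a few lines. The hourly wage is not stated in the text; the value of $15 used here is an assumption inferred from the figures in Table 3, and the class and variable names are illustrative. Hours per day, subjects enrolled, and screening days are the values reported in the Results.

```python
from dataclasses import dataclass

HOURLY_WAGE = 15.0  # assumption: inferred from Table 3, not stated in the article


@dataclass(frozen=True)
class ScreeningMethod:
    name: str
    hours_per_day: float   # total screening hours per day
    enrolled: int          # subjects enrolled during the observation period
    screening_days: int    # days the method was in use
    fixed_cost: float = 0.0  # one-time development cost (0 for chart review)

    @property
    def enrollment_rate(self) -> float:
        """Subjects enrolled per screening day."""
        return self.enrolled / self.screening_days

    def total_cost(self, sample_size: int, hourly_wage: float = HOURLY_WAGE) -> float:
        """Fixed cost plus daily screening cost times the days needed to enroll."""
        cost_per_day = hourly_wage * self.hours_per_day
        days_needed = sample_size / self.enrollment_rate
        return self.fixed_cost + cost_per_day * days_needed


CR = ScreeningMethod("CR", hours_per_day=4, enrolled=11, screening_days=63)
APS = ScreeningMethod("APS", hours_per_day=2, enrolled=20, screening_days=62,
                      fixed_cost=3000)

if __name__ == "__main__":
    for n in (10, 12, 25, 50, 75, 100):
        print(f"{n:>4}  CR ${CR.total_cost(n):>9,.2f}  APS ${APS.total_cost(n):>9,.2f}")
```

Under these assumptions the computed costs agree with the entries of Table 3 to within one dollar, which suggests the published values were truncated rather than rounded.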

Results

A total of 2,698 patients were pre-screened, 826 with chart review and 1,872 with the automated pre-screening algorithm. The CR method pre-screened a mean of 13 ± 4 patients per day and enrolled 11 subjects. The APS method pre-screened a mean of 30 ± 16 patients per day and enrolled 20 subjects. Total screening time (pre-screening and screening) decreased from 4 to 2 hours daily. The enrollment rate increased from 0.17 to 0.32 subjects per screening day (P=0.0001; Table 1). The two screening methods yielded slightly different reasons for exclusion, as shown in Table 2 (P=0.0001).

Table 1.

Enrollment rate by screening method

| | CR | APS |
|---|---|---|
| Hours of total screening per day | 4 | 2 |
| Total patients pre-screened | 826 | 1,872 |
| Patients confirmed eligible (%) | 32 (3.9) | 41 (2.2) |
| Days of screening | 63 | 62 |
| Patients enrolled (%) | 11 (1.3) | 20 (1.1) |
| Enrollment rate (subjects per screening day) | 0.17 | 0.32* |
| Mean patients pre-screened per day | 13 ± 4 | 30 ± 16* |

CR = Manual Chart Review; APS = Automated Pre-Screening

*P = 0.0001 for difference between CR and APS.
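As a quick arithmetic check, the derived entries in Table 1 (percentages, enrollment rate, and mean patients pre-screened per day) follow directly from the raw counts. The sketch below recomputes them; the function and key names are illustrative only.

```python
# Raw counts reported for each screening method.
COUNTS = {
    "CR": {"pre_screened": 826, "eligible": 32, "enrolled": 11, "days": 63},
    "APS": {"pre_screened": 1872, "eligible": 41, "enrolled": 20, "days": 62},
}


def derived_metrics(c):
    """Recompute the derived rows of Table 1 from raw counts."""
    return {
        "eligible_pct": 100 * c["eligible"] / c["pre_screened"],
        "enrolled_pct": 100 * c["enrolled"] / c["pre_screened"],
        "enrollment_rate": c["enrolled"] / c["days"],       # subjects per screening day
        "pre_screened_per_day": c["pre_screened"] / c["days"],
    }


if __name__ == "__main__":
    for method, counts in COUNTS.items():
        m = derived_metrics(counts)
        print(
            f"{method}: eligible {m['eligible_pct']:.1f}%, "
            f"enrolled {m['enrolled_pct']:.1f}%, "
            f"rate {m['enrollment_rate']:.2f}/day, "
            f"pre-screened {m['pre_screened_per_day']:.0f}/day"
        )
```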

Table 2.

Reasons for exclusion by method

| Exclusion | CR N (%) | APS N (%) |
|---|---|---|
| Primary hyperglycemia | 8 (1.0) | 11 (0.6) |
| Refused | 21 (2.5) | 21 (1.1) |
| CNS condition | 58 (7.0) | 91 (4.9) |
| Life expectancy less than one year | 47 (5.7) | 123 (6.6) |
| Language barrier: unable to procure interpreter services | 8 (1.0) | 89 (4.8) |
| Hemoglobin A1c at goal | 405 (49.0) | 873 (46.6) |
| Administrative reasons | 107 (12.9) | 169 (9.0) |
| Other comorbidities that increase the risk of hypoglycemia with insulin use | 81 (9.8) | 304 (16.2) |
| Hemoglobin A1c unreliable | 14 (1.7) | 11 (0.6) |
| Severe liver or kidney disease | 66 (8.0) | 160 (8.6) |

CR = Manual Chart Review; APS = Automated Pre-Screening

P = 0.001 for chi-square between methods; percentages do not sum to 100% due to rounding error.
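For readers who want to see how a chi-square comparison of this kind is formed, the sketch below computes a Pearson chi-square statistic from the counts in Table 2 using only the standard library. It is an illustration under assumptions: the article does not state exactly which rows entered the authors' test, so the statistic computed here may not be the one they obtained in SAS.

```python
# Exclusion counts from Table 2 as (CR, APS) pairs, in table order.
EXCLUSION_COUNTS = [
    (8, 11),     # primary hyperglycemia
    (21, 21),    # refused
    (58, 91),    # CNS condition
    (47, 123),   # life expectancy less than one year
    (8, 89),     # language barrier
    (405, 873),  # hemoglobin A1c at goal
    (107, 169),  # administrative reasons
    (81, 304),   # other comorbidities
    (14, 11),    # hemoglobin A1c unreliable
    (66, 160),   # severe liver or kidney disease
]


def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof


if __name__ == "__main__":
    stat, dof = chi_square_statistic(EXCLUSION_COUNTS)
    print(f"chi-square = {stat:.1f} on {dof} degrees of freedom")
```

With ten exclusion categories and two methods the test has 9 degrees of freedom, and the statistic exceeds 27.88, the critical value for P = 0.001 at 9 degrees of freedom, which is in line with the significance level reported.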

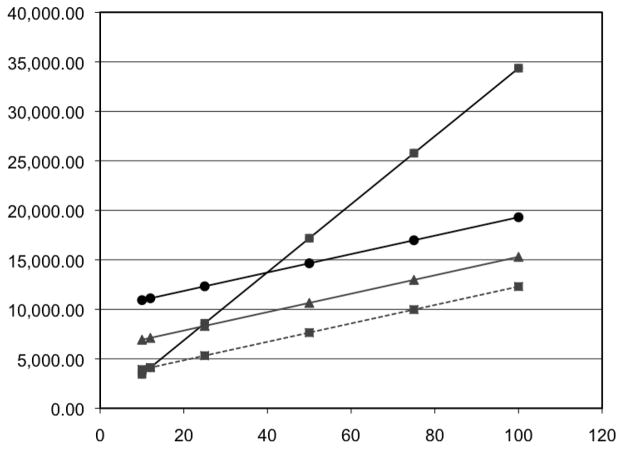

The cost analysis shows that the algorithm was cost-neutral after enrolling 12 subjects with each method ($4,123 with CR and $4,116 with APS). For a target sample size of 50 subjects, the cost of screening would be $17,181 using manual chart review and $7,650 using the algorithm. Additionally, we present the hypothetical cost of running an inpatient study of various sizes using each screening method (Table 3). Varying the baseline costs of the algorithm revealed that the algorithm would be cost-neutral at a sample size of 25 subjects with an algorithm cost of $6,000. Figure 1 shows the information technology investment costs of screening at different sample sizes.

Table 3.

Total cost of screening by sample size

| Sample size (number of subjects) | Cost of manual chart review (dollars) | Cost of algorithm (dollars) |
|---|---|---|
| 10 | 3,436 | 3,930 |
| 12 | 4,123 | 4,116 |
| 25 | 8,590 | 5,325 |
| 50 | 17,181 | 7,650 |
| 75 | 25,772 | 9,975 |
| 100 | 34,363 | 12,300 |

Figure 1.

Total cost of screening by sample size. X axis: number of subjects. Y axis: cost of screening. Squares: manual method. Circles: algorithm with fixed cost of $10,000. Triangles: algorithm with fixed cost of $6,000. Squares with dashed line: algorithm with fixed cost of $3,000.
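The break-even point in the sensitivity analysis is the sample size at which the fixed cost of the algorithm is offset by its lower screening cost per enrolled subject. A minimal sketch follows; the $15 hourly wage is an assumption inferred from Table 3 (it is not stated in the text), and the function names are illustrative.

```python
import math

HOURLY_WAGE = 15.0  # assumption: inferred from Table 3, not stated in the article


def cost_per_enrolled_subject(hours_per_day, enrolled, screening_days,
                              hourly_wage=HOURLY_WAGE):
    """Screening labor cost needed, on average, to enroll one subject."""
    days_per_subject = screening_days / enrolled
    return hourly_wage * hours_per_day * days_per_subject


def break_even_sample_size(fixed_cost, hourly_wage=HOURLY_WAGE):
    """Smallest sample size at which APS is no more expensive than CR."""
    cr = cost_per_enrolled_subject(4, 11, 63, hourly_wage)   # chart review
    aps = cost_per_enrolled_subject(2, 20, 62, hourly_wage)  # algorithm
    return math.ceil(fixed_cost / (cr - aps))


if __name__ == "__main__":
    for fixed_cost in (3000, 6000, 10000):
        n = break_even_sample_size(fixed_cost)
        print(f"fixed cost ${fixed_cost:,}: break-even at about {n} subjects")
```

For the $3,000 fixed cost this returns 12 subjects, the break-even point reported in the text. Results for other fixed costs depend on the inferred wage and on rounding, so they may differ by a subject or so from the published figures.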

Discussion

This analysis of two screening methods for inpatient trial recruitment demonstrates that using an automated algorithm to identify eligible patients is more efficient and cost-effective than manual chart review. We believe our increased enrollment rate after implementing the APS method was related to the higher number of patients pre-screened and screened and to the decreased total screening time, which allowed us to identify and approach patients earlier in the course of the hospital stay. While development of an automated algorithm adds a significant cost to a small study, the increase in efficiency rendered it cost-neutral after enrolling only 12 subjects (Table 3), indicating that seemingly costly information technology development can benefit even small trials.

Use of an algorithm is most beneficial for studies with low enrollment rates because of the long duration of the accrual period. We did not vary enrollment rates in the sensitivity analysis. However, low eligibility and enrollment rates are characteristic of inpatient trials conducted in general settings, which emphasizes the need for fast and effective screening methods. In our trial, approximately 3% of screened subjects were eligible and 1.2% agreed to participate. Using slightly different criteria, Cohen and associates report a 7% eligibility rate and a 3% enrollment rate in an inpatient intervention to improve geriatric management (6). Of 11,796 patients screened, only 409 eligible patients were enrolled. In a randomized inpatient trial for a smoking cessation intervention, 36% of the inpatients identified were eligible and only 17% of the eligible population agreed to participate (7). These percentages show that approximately half of the patients meeting eligibility criteria in inpatient trials refuse to enroll. Refusal of eligible patients to participate in clinical trials constitutes another barrier to successful recruitment. The reasons leading to refusal are currently being investigated (3).

The use of technology has been increasingly incorporated in the conduct of clinical trials in an attempt “to optimize various parts of the clinical trial process” (8). As observed in our study and elsewhere (9), such methods have a significant impact in the pre-screening phase, which in turn affects screening and, finally, enrollment. (To identify other studies that have used automated screening and recruitment methods, we searched PubMed and Google Scholar using the keywords “inpatient trial”, “screening”, “recruitment” and the PubMed MeSH terms “Clinical Trials as Topic”, “Patient Selection” and “Medical Informatics”. We also performed secondary searches within the bibliographies of the articles retrieved from our preliminary search.) In a national multi-center epilepsy trial in New Zealand, researchers developed a website to facilitate subject recruitment and data collection (10). As community-based research becomes more common, electronic data can aid recruitment in primary care settings (11,12) given the appropriate privacy controls (13). McGregor and associates (14) describe the use of an algorithm to identify potential study participants using primary care data to select patients based on the trial’s inclusion and exclusion criteria. These and other studies (8,15–18) mainly tested the feasibility of incorporating automated recruitment methods into practice and showed that it is in fact possible to do so.

However, fewer studies have reported an increase in subject recruitment and/or efficiency of enrollment procedures following the introduction of automated methods. Dugas noted a zero to 40% increase in recruitment and an estimated time saving of 10 minutes per patient enrolled after the implementation of an algorithm that identified potentially eligible subjects, paired with a notification system that alerted study physicians to such patients (4); success (or lack thereof) of the algorithm was related to the quality of information system data pertinent to the inclusion and exclusion criteria. In order to increase the number of physician referrals for a clinical trial, Embi et al. used a similar program, which not only increased the number of physician referrals more than tenfold (5.7/month to 59.5/month) but also doubled the enrollment rate (19). Similarly, Weiner and associates, who also used an automated notification system, reported alerts for 84% of potentially eligible patients compared to 56% prior to the implementation of the system (20).

The cost-effectiveness of low-cost automated methods compared to manual review has been reported elsewhere. Nkoy and colleagues (21) compared the costs of an automated system that identified potentially eligible patients for clinical trials with those of manual identification. They found good agreement between the algorithm and manual identification (kappa of 0.84) at a much lower cost (a one-time fee of approximately $100 vs. $1,200/month for manual identification), with a 2-hour reduction in time to identification, similar to our algorithm. It may be more expensive for other centers to implement automated methods, as it was for us, prompting questions about cost-effectiveness in these settings. While the benefit may be obvious for large, multicenter trials (22,23), as electronic medical records become integrated into more health care systems, it becomes increasingly practical and surprisingly cost-effective for even single-center, small trials to adopt similar methods, as this analysis demonstrates.

Because of the before-and-after design of this study, we cannot rule out unmeasured reasons for improved enrollment, but the dramatic difference in numbers of patients screened and the decreased screening time, allowing us to approach subjects earlier in the course of their hospital stay, were clearly major factors. We did not calculate sensitivity and specificity of the screening methods since the goal of this study was not to gauge which screening method would capture the most accurate number of inpatients with uncontrolled diabetes at MGH, but rather to determine which method would provide the highest enrollment rate while being cost-effective. Other limitations include the fact that the algorithm may miss patients who would be detected by chart review, since one disadvantage of using automated methods with EHR is the inability of an algorithm to capture unstructured text (4). Specifically, subjects with missing data, such as coded diabetes diagnosis or, less commonly, glucose data, would escape detection by the algorithm, as was seen in the Dugas trial (4). However, this disadvantage was outweighed by the ability of the algorithm to capture significantly more patients overall. Finally, while the algorithm helped boost enrollment by identifying more patients, it failed to signal other possible reasons for non-enrollment that might be uncovered by chart review, requiring human involvement in the screening process, or, potentially, an algorithm with the capability of searching free text.

In summary, use of an automated algorithm as a pre-screening method increased screening efficiency and enrollment rate and was more cost-effective than manual chart review for an inpatient trial. Due to the time constraints and the challenges of enrolling eligible patients in inpatient trials, even small trials with low enrollment rates should consider budgeting for development of an automated algorithm to assist with patient screening.

Acknowledgments

We would like to thank Ellen Nicholson BA, Richard Pompei RN, and Tiffany Soper FNP for their work on the trial. We would also like to thank Anika Hedberg BA for her assistance with the graphics.

Funding Acknowledgements

This research study was funded by a Career Development Award from the National Institute of Diabetes and Digestive and Kidney Diseases to DJW (K23 DK 080 228).

Footnotes

Disclosure statement: The authors have no relationships to disclose.

References

1. Canavan C, Grossman S, Kush R, Walker J. Integrating Recruitment into eHealth Patient Records. Applied Clinical Trials Online. 2006. http://appliedclinicaltrialsonline.findpharma.com/appliedclinicaltrials/article/articleDetail.jsp?id=334569. [Accessed October 2011]
2. Sanders KM, Stuart AL, Merriman EN, Read ML, Kotowicz MA, Young D, et al. Trials and tribulations of recruiting 2,000 older women onto a clinical trial investigating falls and fractures: Vital D study. BMC Med Res Methodol. 2009;9:78. doi: 10.1186/1471-2288-9-78.
3. Rengerink KO, Opmeer BC, Logtenberg SL, Hooft L, Bloemenkamp KW, Haak MC, et al. IMproving PArticipation of patients in Clinical Trials - rationale and design of IMPACT. BMC Med Res Methodol. 2010;10:85. doi: 10.1186/1471-2288-10-85.
4. Dugas M, Lange M, Müller-Tidow C, Kirchhof P, Prokosch H-U. Routine data from hospital information systems can support patient recruitment for clinical studies. Clin Trials. 2010;7:183–9. doi: 10.1177/1740774510363013.
5. Dugas M, Amler S, Lange M, Gerss J, Breil B, Köpcke W. Estimation of patient accrual rates in clinical trials based on routine data from hospital information systems. Methods Inf Med. 2009;48:263–6. doi: 10.3414/ME0582.
6. Cohen HJ, Feussner JR, Weinberger M, Carnes M, Hamdy RC, Hsieh F, et al. A controlled trial of inpatient and outpatient geriatric evaluation and management. N Engl J Med. 2002;346:905–12. doi: 10.1056/NEJMsa010285.
7. Rigotti NA, Thorndike AN, Regan S, McKool K, Pasternak RC, Chang Y, et al. Bupropion for smokers hospitalized with acute cardiovascular disease. Am J Med. 2006;119:1080–7. doi: 10.1016/j.amjmed.2006.04.024.
8. Butte AJ, Weinstein DA, Kohane IS. Enrolling patients into clinical trials faster using RealTime Recuiting. Proc AMIA Symp. 2000:111–5.
9. Cuggia M, Besana P, Glasspool D. Comparing semi-automatic systems for recruitment of patients to clinical trials. Int J Med Inform. 2011;80:371–88. doi: 10.1016/j.ijmedinf.2011.02.003.
10. Bergin PS, Ip T, Sheehan R, Frith RW, Sadleir LG, McGrath N, et al. Using the Internet to recruit patients for epilepsy trials: Results of a New Zealand pilot study. Epilepsia. 2010;51:868–73. doi: 10.1111/j.1528-1167.2009.02393.x.
11. Page MJ, French SD, McKenzie JE, O’Connor DA, Green SE. Recruitment difficulties in a primary care cluster randomised trial: investigating factors contributing to general practitioners’ recruitment of patients. BMC Med Res Methodol. 2011;11:35. doi: 10.1186/1471-2288-11-35.
12. Dyas JV, Apekey T, Tilling M, Siriwardena AN. Strategies for improving patient recruitment to focus groups in primary care: a case study reflective paper using an analytical framework. BMC Med Res Methodol. 2009;9:65. doi: 10.1186/1471-2288-9-65.
13. de Lusignan S, van Weel C. The use of routinely collected computer data for research in primary care: opportunities and challenges. Fam Pract. 2006;23:253–63. doi: 10.1093/fampra/cmi106.
14. McGregor J, Brooks C, Chalasani P, Chukwuma J, Hutchings H, Lyons RA, et al. The Health Informatics Trial Enhancement Project (HITE): Using routinely collected primary care data to identify potential participants for a depression trial. Trials. 2010;11:39. doi: 10.1186/1745-6215-11-39.
15. Dugas M, Lange M, Berdel WE, Müller-Tidow C. Workflow to improve patient recruitment for clinical trials within hospital information systems - a case-study. Trials. 2008;9:2. doi: 10.1186/1745-6215-9-2.
16. Heinemann S, Thüring S, Wedeken S, Schäfer T, Scheidt-Nave C, Ketterer M, et al. A clinical trial alert tool to recruit large patient samples and assess selection bias in general practice research. BMC Med Res Methodol. 2011;11:16. doi: 10.1186/1471-2288-11-16.
17. Embi PJ, Jain A, Clark J, Harris CM. Development of an electronic health record-based Clinical Trial Alert system to enhance recruitment at the point of care. AMIA Annu Symp Proc. 2005:231–5.
18. Lee Y, Dinakarpandian D, Katakam N, Owens D. MindTrial: An Intelligent System for Clinical Trials. AMIA Annu Symp Proc. 2010;2010:442–6.
19. Embi PJ, Jain A, Clark J, Bizjack S, Hornung R, Harris CM. Effect of a Clinical Trial Alert System on Physician Participation in Trial Recruitment. Arch Intern Med. 2005;165:2272–7. doi: 10.1001/archinte.165.19.2272.
20. Weiner DL, Butte AJ, Hibberd PL, Fleisher GR. Computerized recruiting for clinical trials in real time. Ann Emerg Med. 2003;41(2):242–6. doi: 10.1067/mem.2003.52.
21. Nkoy FL, Wolfe D, Hales JW, Lattin G, Rackham M, Maloney CG. Enhancing an existing clinical information system to improve study recruitment and census gathering efficiency. AMIA Annu Symp Proc. 2009:476–80.
22. Street A, Strong J, Karp S. Improving patient recruitment to multicentre clinical trials: The case for employing a data manager in a district general hospital-based oncology centre. Clin Oncol. 2001;13:38–43. doi: 10.1053/clon.2001.9212.
23. Campbell MK, Snowdon C, Francis D, Elbourne D, McDonald AM, Knight R, et al. Recruitment to randomised trials: Strategies for trial enrollment and participation study. The STEPS study. Health Technol Assess. 2007;11:iii, ix–105. doi: 10.3310/hta11480.