Abstract

Context:

In the emerging Learning Health System (LHS), the application and generation of medical knowledge are a natural outgrowth of patient care. Achieving this ideal requires a physician workforce adept in information systems, quality improvement methods, and systems-based practice to be able to use existing data to inform future care. These skills are not currently taught in medical school or graduate medical education.

Case Description:

We initiated a first-ever Learning Health Systems Training Program (LHSTP) for resident physicians. The curriculum builds analytical, informatics and systems engineering skills through an active-learning project utilizing health system data that culminates in a final presentation to health system leadership.

Findings:

LHSTP has been in place for two years, with 14 participants from multiple medical disciplines. Challenges included scheduling, mentoring, data standardization, and iterative optimization of the curriculum for real-time instruction. Satisfaction surveys and feedback were solicited mid-year in year 2. Most respondents were satisfied with the program, and several participants wished to continue in the program in various capacities after their official completion.

Major Themes:

We adapted our curriculum to successes and challenges encountered in the first two years. Modifications include a revised approach to teaching statistics, smaller cohorts, and more intensive mentorship. We continue to explore ways for our graduates to remain involved in the LHSTP and to disseminate this program to other institutions.

Conclusion:

The LHSTP is a novel curriculum that trains physicians to lead the transition toward the LHS. Successful methods have included diverse multidisciplinary educators, just-in-time instruction, tailored content, and mentored projects with local health system impact.

Keywords: Quality Improvement, Data Use and Quality, Electronic Health Record, Provider Education, Learning Health System

Introduction

In the emerging Learning Health System (LHS), the application and generation of medical knowledge are a natural outgrowth of patient care. Achieving this ideal requires a physician workforce adept in information systems, quality improvement (QI) methods, and systems-based practice to be able to use existing data to inform future care. These skills are not currently taught in medical school or graduate medical education.

In response to this need, we initiated a first-ever Learning Health Systems Training Program (LHSTP) for resident physicians. The yearlong curriculum builds analytical, informatics, and systems engineering skills through an active-learning project that utilizes health system data and culminates in a final presentation to health system leadership. LHSTP has been in place for two years, with 14 participants from multiple medical disciplines.

This is a retrospective description of the development, implementation, early experience, feasibility, and acceptance of the Duke University LHSTP during its first two-year pilot phase. While we have had early successes, we also wish to highlight the obstacles we encountered and how we refined our approach in response. We hope this experience will be valuable to other educators as they consider similar endeavors.

The Physician’s Role in the Learning Health System

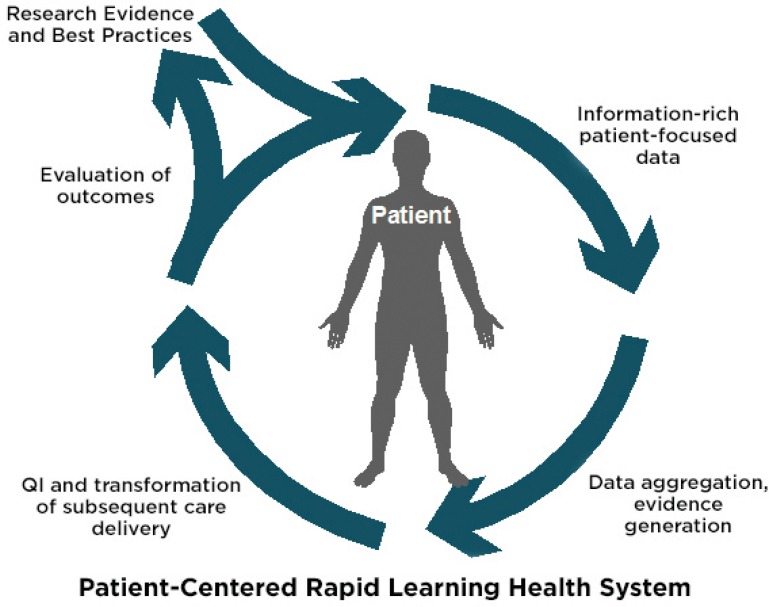

The LHS describes an environment where the application and generation of medical knowledge are a natural outgrowth of patient care.1 Realizing this requires patient-level data gathering, real-time aggregation, and analysis to prompt changes in care delivery, to allow real-time outcomes evaluation, and to produce new best practices (Figure 1).2 In the current paradigm, evidence from clinical research is applied unevenly and with great delay after it is first generated; by contrast, the LHS actively learns through the process of care delivery and can apply this knowledge rapidly to patient care. The LHS will require fundamental changes to health information management infrastructure, linkage of disparate health data sources, data quality improvements, creation of new decision support tools, and new ethical and legal frameworks concerning human subjects protections.3

Figure 1.

The Learning Health System Conceptualized

Reports emerging from early LHS projects demonstrate the promise of this ideal, but have been limited to narrow disease states or health conditions with highly engaged physicians.2,4 Scaling up this vision to encompass all patients and conditions within a health system holds tremendous promise, but also poses significant challenges.

Importantly, the LHS is not merely a technological exercise. It requires a supportive organizational structure and a skilled, highly engaged physician workforce. Just as the evidence-based medicine (EBM) movement required physicians to learn basic principles of research design to interpret and apply evidence, the LHS movement requires physicians to acquire additional skills to interact with emerging LHS information systems and to engage in true systems-based practice. These skills include the following:

Quality Improvement: methods of analysis and implementation;

Informatics: the use of data, information, knowledge, and technology in medicine;

Statistical reasoning: a basic understanding of statistical principles; and

Systems engineering and systems-based practice: an understanding of the systems in which the physician practices and how to change these systems.

Today’s physicians do not generally possess these skills. While programs to address this need are emerging for physician leaders, and quality improvement curricula are increasingly common, we are aware of no graduate medical education (GME) curriculum to teach a comprehensive learning health care skill set and viewpoint.

To best harness the promise of the LHS, a culture change similar to the EBM movement is necessary. Because of the volume of clinical data and the diversity of its applications, a robust LHS needs many physicians from diverse clinical backgrounds to shape the process of data-driven continuous improvement. We recognized a need to equip future physicians with an understanding of these LHS methods and designed a curriculum to introduce these concepts so that our trainees could become leaders and catalysts in promoting the creation and application of the LHS.

Case Description

The Duke University LHSTP was created in 2013 with the help of grants from the Association of American Medical Colleges (AAMC) and Duke University Health System leadership (see Acknowledgments). The program's development was a collaborative effort, with cross-disciplinary engagement from biostatistics, biomedical informatics, quality improvement, and health system administration.

LHSTP leverages existing health-system data infrastructure. Duke Enterprise Data Unified Content Explorer (DEDUCE) is a web-based query tool for the Duke clinical data warehouse that has supported numerous research and quality improvement projects and enables access to patient-level health data spanning three decades.5,6 Duke had also recently adopted Epic, a unified electronic health record (EHR) system, and begun to optimize it, which provided both a motivation to invest in the LHSTP and a use-case opportunity.

Trainees

For the first year, applications were solicited across the internal medicine residency and subspecialty fellowships. We admitted applicants who demonstrated understanding of and commitment to the program's goals and who could commit to attending most didactic sessions. Six competitively selected internal medicine residents and subspecialty fellows formed the initial cohort. In general, they had some prior experience in quality improvement and limited research experience; their data skills and statistical knowledge were generally less developed than their clinical expertise. The second cohort was larger, with eight trainees from diverse specialty backgrounds, including surgery and neonatology.

Curriculum

The yearlong LHSTP curriculum is delivered in two-hour sessions scheduled approximately every two weeks from fall through spring. Trainees are asked to attend in person, but given the scheduling realities of house staff training, we also record sessions for asynchronous web viewing.

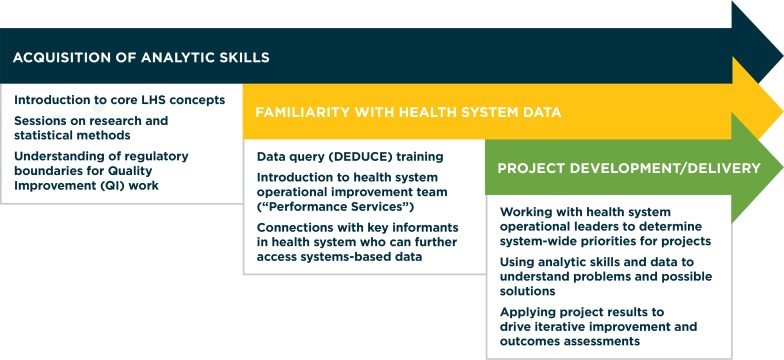

The curricular framework is depicted in Figure 2. The elements were arrived at through consensus among course leaders, with reference to published descriptions of the LHS.1–3 We reasoned that the content would be taught most effectively by first giving an overview of LHS goals, then presenting the concepts underlying these aims (statistical reasoning and quality improvement methodologies), and finally introducing the tools available to apply these concepts (institutional data resources), which trainees can use toward their final projects. We employ content experts to teach quality improvement methods, statistical methods, database design, and research methods, and to share local and national examples of LHS principles. The curriculum in its current form is outlined in Table 1.

Figure 2.

Schematic of Curricular Goals and Organization

Table 1.

Curricular Sessions and Goals

| TOPIC | OBJECTIVES | FACULTY | ASSIGNMENTS |
|---|---|---|---|
| Introduction: What Is Learning Health? | Program introduction; LHS concepts; Project pitches | AZ | |
| Data Systems | Attributes of data; Introduction to data systems at Duke | NW, KP, BC, LR | Project charter assigned |
| Database | What is a database?; Setting up a database; Introduction to DEDUCE | GS, Director of DEDUCE repository | Final project topic chosen |
| Data Management | Introduction to REDCap; CRF creation | Director of Duke Office of Clinical Research | Present Aims section of project charter |
| Basic Statistics | Introduce statistical reasoning | GS | Present full project charter; Minichart-review exercise assigned |
| Minichart Review | Review progress; Feedback on scope, data sources, outcomes | All | Group presentations |
| QI in Health Care – Traditional QI Models | Describe QI methodologies | Medical Director of Duke University Hospital Mortality and Quality Review | |
| QI versus LHS | Review conceptual similarities between QI and aims of LHS; Highlight differences; Statistical methods in learning health | GS | |
| Informatics | Describe clinical informatics | BC | "Work in Progress" update from groups; Group project time |
| Health Systems Engineering Using the EHR | Describe a learning health project executed by faculty | Faculty member with expertise in learning health projects8 | |
| LHSTP Frames of Reference | Historical background to computational abilities of the LHS | Faculty member with expertise in bioinformatics and funded NIH BD2K projects9 | |
| Data Visualization; Longitudinal Data | Conceptual framework for working with, interpreting, and presenting longitudinal data | GS | |
| Project Troubleshooting | Open session for removing roadblocks for projects | All | |
| HSR | Describe traditional HSR and how the LHS can support research | Faculty with HSR expertise10 | |
| NIH Collaboratory | Describe current national efforts to create data standards that will enable multisite LHS functions | Faculty with expertise in informatics11 | |
| Practice Presentations | Teams practice their final presentations; Feedback for improvement | | Practice final presentation |
| Final Presentation | | | |

Notes: DEDUCE = Duke Enterprise Data Unified Content Explorer; REDCap = Research Electronic Data Capture; CRF = case report form; QI = quality improvement; EHR = electronic health record; NIH BD2K = National Institutes of Health Big Data to Knowledge; HSR = health services research; NIH = National Institutes of Health

In the spirit of active learning, the capstone of the curriculum is a group project presentation to the health system leadership. The key curricular challenge is developing the necessary skills to identify problems, to plan projects around them, and to begin work on these projects early in the year. Similarly, we have been challenged to overcome common limitations of clinical information systems and to provide the mentorship and ancillary support (e.g., statistical expertise) to make the most of the projects. As the year progresses, session time becomes more devoted to use-case examples of LHS concepts in action and active collaborative time to enhance their projects.

Statistical content is delivered by a PhD-level statistician with expertise in adult learning and in teaching physicians and other health care practitioners.7 We found that the statistical content was delivered most effectively when it was organized around the construction of an appropriate database. The training focuses on how to frame clinical questions and answer them using descriptive statistics and data visualization tools. Formal hypothesis testing and inferential statistics are de-emphasized. This reflects the program's focus on rapid-cycle quality improvement, which, unlike inferential statistics, is concerned with recognizing important observed trends rather than testing formal hypotheses.
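One common way to look for "important observed trends" without formal hypothesis testing is a run chart: plot a measure over time against its median and flag runs of consecutive points on one side of it. The sketch below is illustrative only; the run chart, the six-point shift rule, and the monthly values are assumptions drawn from general QI practice, not a description of the LHSTP course materials.

```python
from statistics import median


def run_chart_shifts(values, min_run=6):
    """Return (center, shifts) for a run chart.

    center is the median of the series; shifts lists (start, end) index pairs
    for runs of at least min_run consecutive points on the same side of the
    median. Points exactly on the median are skipped: they neither extend nor
    break a run (a common run-chart convention, assumed here).
    """
    center = median(values)
    shifts = []
    run_side, run_start, run_len, last_idx = 0, None, 0, None
    for i, v in enumerate(values):
        if v == center:
            continue  # on-median points are ignored
        side = 1 if v > center else -1
        if side == run_side:
            run_len += 1
        else:
            if run_len >= min_run:  # close out the previous run
                shifts.append((run_start, last_idx))
            run_side, run_start, run_len = side, i, 1
        last_idx = i
    if run_len >= min_run:  # close out the final run
        shifts.append((run_start, last_idx))
    return center, shifts


# Hypothetical monthly counts for some quality measure.
monthly = [5, 6, 5, 4, 6, 5, 9, 10, 11, 9, 10, 12]
center, shifts = run_chart_shifts(monthly)
print(center, shifts)  # 7.5 [(0, 5), (6, 11)]
```

A sustained shift like this would prompt a closer look at what changed in care delivery, which is the descriptive, trend-focused reasoning the paragraph above describes.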

One particularly valuable exercise is to have trainees implement these concepts by constructing a small (five-patient) "minidatabase" around a clinical question of their choice. While the goal of the LHS is to operate at scale, clinical trainees are often unfamiliar with the characteristics of the available structured clinical data. Beginning with a small sample is intended to reinforce several skills: framing an appropriate clinical question, designing a data set that adequately addresses that question, developing a search strategy, obtaining and cleaning data, and reporting results using data visualization software. Once familiar with the relevant data, trainees are able to communicate their needs more effectively to data managers and statisticians for the final project.
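The minidatabase steps (design the fields, pull the data, clean it, report descriptively) can be sketched in a few lines. Everything here is hypothetical: the clinical question (hours spent in observation status), the field names, the raw values, and the 48-hour threshold are assumptions chosen to show typical cleaning problems such as inconsistent labels and a missing value.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Record:
    patient_id: str             # hypothetical de-identified study ID
    admit_source: str           # normalized admission source, e.g. "ed" or "clinic"
    obs_hours: Optional[float]  # hours in observation status; None if missing


# Five raw rows as they might come back from a hypothetical data pull:
# inconsistent capitalization, stray whitespace, and one blank value.
RAW = [
    ("P1", "ED", "20"),
    ("P2", "ed ", "52.5"),
    ("P3", "Clinic", ""),
    ("P4", "ED", "30"),
    ("P5", "clinic", "61"),
]


def clean(raw):
    """Normalize source labels and convert hours to float (None when blank)."""
    out = []
    for pid, source, hours in raw:
        h = float(hours) if hours.strip() else None
        out.append(Record(pid, source.strip().lower(), h))
    return out


def summarize(records, threshold_hours=48.0):
    """Descriptive summary only: counts, missingness, median, and share over a threshold."""
    observed = [r.obs_hours for r in records if r.obs_hours is not None]
    sources = sorted({r.admit_source for r in records})
    return {
        "n": len(records),
        "missing": len(records) - len(observed),
        "median_hours": median(observed),
        "over_threshold": sum(h > threshold_hours for h in observed),
        "by_source": {s: sum(r.admit_source == s for r in records) for s in sources},
    }


print(summarize(clean(RAW)))
```

Working through even five rows like this surfaces the questions (How is "source" coded? Why is a value missing?) that trainees then bring to data managers for the full project.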

Findings

Trainee Experience

To evaluate the need for program changes in the third year, satisfaction with LHSTP was assessed by an anonymous survey emailed midway through the second year. Eleven (79 percent) of 14 trainees responded. The majority (n=7; 64 percent) reported satisfaction with the program; three trainees (27 percent) were neutral. Most (n=9; 82 percent) reported interest in the project topics. Only about half (n=6; 55 percent) felt contact with project mentors was adequate. At that time, fewer than half (n=5; 45 percent) would recommend the LHSTP to peers.
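The survey percentages use the 11 respondents as the denominator, while the response rate uses all 14 trainees. The short check below recomputes each figure from the counts reported above; rounding to the nearest whole percent is an assumption about how the figures were reported.

```python
def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


ENROLLED = 14
RESPONDENTS = 11

# Counts from the survey paragraph; each is a share of respondents, not enrollees.
COUNTS = {
    "satisfied": 7,
    "neutral": 3,
    "interested in project topics": 9,
    "mentor contact adequate": 6,
    "would recommend to peers": 5,
}

response_rate = pct(RESPONDENTS, ENROLLED)
shares = {item: pct(n, RESPONDENTS) for item, n in COUNTS.items()}
print(response_rate, shares)
```

The "would recommend" count of 5 corresponds to 45 percent of respondents.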

Qualitative feedback revealed that trainees had enrolled hoping to acquire quality improvement and statistical skills and to implement, rather than merely plan, their projects. Several came to appreciate the current limitations of health information structures and data quality.

In a debriefing session at the conclusion of the second year, trainees expressed gratitude for the curriculum. Many wished to remain involved in the program in some capacity and, for the subsequent year, favored more mentored "over-the-shoulder" time for project work rather than lectures. Their LHSTP project work had given them a new perspective on the importance of accurate, structured clinical data. Some remarked that it changed the way they used the EHR; they were more likely to enter structured data for problem lists, family history, and other fields.

Final Presentations

The curriculum culminates in a final presentation for health system leadership. The final topics were diverse: (1) a robust analysis of trends in prolonged observational stays, sources of these admissions, and clinical predictors; (2) exploration of an association between maternal magnesium tocolysis for preterm labor and neonatal bowel perforations; (3) the value of individual components in an early recovery after surgery program; (4) appropriate sedation in intensive care units; and (5) appropriateness of deep venous thrombosis prophylaxis in an inpatient medicine unit.

The projects highlighted the key skills of learning health and curricular goals as previously outlined:

Quality Improvement: each project was chosen with input from health system leadership, based on a perceived gap in quality of care delivery.

Informatics: each project was a hands-on lesson in the clinical information systems available at Duke—highlighting strengths, weaknesses, and ways for improvement.

Statistical reasoning: each project used at least descriptive statistics; the choice of statistics, data collected, and visualization techniques was informed by the curriculum.

Systems engineering and systems-based practice: while none of our projects evaluated clinical change, the lessons in data quality created better users of the EHR and opportunities for participants to help improve data quality in their respective clinical areas.

The final presentations varied in quality and comprehensiveness, reflecting in large part the analytical skills of team members but also the data sources available to answer their chosen questions. For example, project 2 had difficulty identifying an appropriate control cohort and identifying maternal-neonatal pairs within the EHR. By contrast, project 1 attempted to derive a multivariate model to predict prolonged observation admissions.

Limitations due to data quality were a recurrent theme of the presentations and were, for example, the main finding of projects 4 and 5. In project 4, assessing the appropriateness of sedation required nursing assessments that were not being collected within the EHR. Project 5 could not resolve discrepancies between a pharmacy utilization database and the Medication Administration Record (MAR), which shows when medications were delivered. Project 1 was also limited by questions of data accuracy and quality and by potential disparities between clinical and billing data. Even project 3, which used an existing quality data set, required significant work to clean and complete the data. Many of these data challenges arose because we did not have access to raw data from the EHR and instead used other reports or databases that were intended for other purposes. These challenges have served as important lessons for both the LHSTP and health system leaders.
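To make the project 5 problem concrete, the sketch below compares dose events from two sources and separates matched doses from those found in only one source. It is a hypothetical illustration: the record layout (patient, drug, date), the sample rows, and the matching key are assumptions, not the actual Duke pharmacy or MAR schemas. Using `collections.Counter` as a multiset lets the comparison handle more than one dose per patient per day.

```python
from collections import Counter


def reconcile(pharmacy_rows, mar_rows):
    """Compare dose events keyed by (patient, drug, date) across two sources.

    Counter & Counter keeps the minimum count per key (doses present in both);
    Counter - Counter keeps only positive differences (doses in one source only).
    """
    pharm = Counter(pharmacy_rows)
    mar = Counter(mar_rows)
    matched = pharm & mar
    return {
        "matched": sum(matched.values()),
        "pharmacy_only": pharm - mar,
        "mar_only": mar - pharm,
    }


# Hypothetical rows for venous thromboembolism prophylaxis doses.
pharmacy = [
    ("P1", "enoxaparin", "2015-03-01"),
    ("P1", "enoxaparin", "2015-03-02"),
    ("P2", "heparin", "2015-03-01"),
    ("P2", "heparin", "2015-03-01"),
]
mar = [
    ("P1", "enoxaparin", "2015-03-01"),
    ("P2", "heparin", "2015-03-01"),
    ("P3", "enoxaparin", "2015-03-02"),
]

result = reconcile(pharmacy, mar)
print(result["matched"], dict(result["pharmacy_only"]), dict(result["mar_only"]))
```

In practice the hard part is not the set arithmetic but deciding whether an unmatched row reflects a missed dose, a documentation gap, or a difference in what each system records.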

Major Themes

Implementing the LHSTP, as a novel curriculum, presented a steep learning curve for the course leaders. Early successes relied on a supportive environment, health system buy-in, diverse expertise, and enthusiastic learners. Challenges have included irregular attendance, provision of faculty mentorship, and limitations inherent in immature data systems.

To address these challenges, we have made several important changes for the upcoming academic year. We have reorganized the curriculum to front-load the skills needed to begin work on the final project and to provide just-in-time instruction. Trainees will select their final topics earlier in the year, allowing more time for a robust product. We plan to use trainees' selected topics for the minidatabase exercise (rather than a common, assigned topic), which will build familiarity with data analysis and will surface crucial barriers to trainees' chosen topics sooner. This should allow refinement of the approach and identification of additional supporting resources. Along these lines, we will make additional statistical support available to groups that lack skills in using statistical software.

We continue to gain operational IT support and to learn about the data structure at our institution. The initial projects taught both the learners and the course directors about the limitations of the readily accessible data at Duke, and subsequent projects have addressed these limitations. For example, the chief aim of a current project is to identify a mechanism for linking neonatal-maternal pairs within the health record. Because the project presented in year 2 had revealed this limitation, we were able to direct a subsequent group with similar interests to this problem early on. Many of our current projects have identified opportunities to access raw EHR data (Epic Clarity tables) through professional data analysts.
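Why linking neonatal-maternal pairs is difficult can be illustrated with a deterministic match. The sketch below is hypothetical and is not the mechanism the current project is developing: it assumes only that each mother has a facility and delivery date and each infant a facility and birth date. A link is accepted only when exactly one mother matches; same-day deliveries at the same facility become ambiguous, which is the kind of gap a dedicated link in the health record would close.

```python
from collections import defaultdict


def link_pairs(mothers, infants):
    """Deterministically link infants to mothers on (facility, date).

    mothers: list of (mother_id, facility, delivery_date)  -- hypothetical layout
    infants: list of (infant_id, facility, birth_date)     -- hypothetical layout
    Returns (links, ambiguous, unmatched).
    """
    index = defaultdict(list)
    for mother_id, facility, delivery_date in mothers:
        index[(facility, delivery_date)].append(mother_id)

    links, ambiguous, unmatched = {}, [], []
    for infant_id, facility, birth_date in infants:
        candidates = index.get((facility, birth_date), [])
        if len(candidates) == 1:
            links[infant_id] = candidates[0]  # unique match; twins share a mother
        elif candidates:
            ambiguous.append(infant_id)       # several same-day deliveries
        else:
            unmatched.append(infant_id)       # no delivery on record
    return links, ambiguous, unmatched


mothers = [("M1", "A", "2015-01-05"), ("M2", "A", "2015-01-06"),
           ("M3", "A", "2015-01-06"), ("M4", "B", "2015-01-05")]
infants = [("I1", "A", "2015-01-05"), ("I2", "A", "2015-01-05"),
           ("I3", "A", "2015-01-06"), ("I4", "B", "2015-01-07")]
print(link_pairs(mothers, infants))
```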

This has required us to be more active in curating viable projects with identified mentors. This year, there have been several large-scale quality improvement efforts at our institution whose clinical champions have asked us for assistance in analyzing the resulting data. This development will allow our current trainees to join a project in the outcome evaluation stage and also work with professional data scientists.

Based on our survey results, we agreed that trainees could benefit from more mentorship, so we initially intended to reduce the number of trainees back to six. However, two highly motivated applicants subsequently joined the program, bringing the total to eight for the third cohort. We have set expectations for in-person meeting attendance and will better coordinate meetings with trainees' schedules. We will also work more proactively to strengthen the ties between trainees and project mentors, which may involve recognition, financial or otherwise, of these mentors' effort. We have asked our current mentors to attend sessions when their group presents its project charter and interim results, to ensure that mentors remain actively involved in the projects and accountable for their progress.

We are also pursuing an official certificate of completion recognized by the Office of Graduate Medical Education, as currently exists at our institution for leadership, education, ethics, and patient safety. We are working to retain skilled and interested graduates of the LHSTP in leadership roles, which we hope will extend to their junior faculty roles.

While our trainees accomplished a remarkable amount in one year, time constraints meant that the projects could not progress to implementation. Not every project idea, once studied, is amenable to an intervention, and our trainees, faculty, and health system administration appreciate that. For such projects, we emphasize what was learned in the study phase of the cycle. While this does not demonstrate the rapid-cycle improvement we hope to see from a fully functional LHS, understanding a problem, the limitations of our current environment, and what it might take to change them is itself an important lesson, even if change is not presently feasible. At the same time, we are advancing promising projects to the execution phase by having members of our third cohort pick up projects where the prior cohort left off, and by supporting graduates in continuing their projects after they complete their training. One such project has evolved into a systemwide improvement effort focused on improving EHR data quality and the accuracy of nursing assessments, which will allow rapid quality reporting and eventually accumulate a body of evidence to support comparative effectiveness. We also hope that the current candidate projects, which evaluate existing interventions, will provide a more comprehensive LHS experience.

We are exploring ways to disseminate our curriculum to other institutions. We have hosted a visiting professor, supported by an AAMC grant. We hope that our departing graduates will also take the curriculum to other institutions. Most importantly, as the LHSTP continues to grow, we plan to assess feasibility of further scaling to include more GME trainees and nonphysician health care workers (e.g., nurses and pharmacists), prospectively measure its impact on trainees, and better define the required infrastructure to make such a curriculum successful.

Conclusion

In summary, the LHSTP is a novel curriculum that trains physicians to participate in a future LHS. Its success can be attributed to diverse multidisciplinary expertise and content tailored to our trainees’ unique needs, organized around hands-on projects with health-system impact. Most importantly, though, we recognize that we are teaching toward an ideal system that does not yet exist.

As our program has matured, we have learned more about the informatics resources and data structures at our institution, including the strengths and limitations of existing data. This has translated into trainee projects centered on improving existing data. It has also prompted us to identify colleagues who can grant us access to the best sources of data. We have become increasingly visible within the institution and, in the process, are establishing stronger partnerships with others engaged in quality improvement work and clinical innovation, where our trainees can see the impact of their work.

While we have focused on the more data-oriented tasks of the LHS—scanning and surveillance, and evaluation—we recognize there are other important skills to be built around design of interventions, implementation, and refinement.2 We hope that as we continue to build relationships around the institution and as prior projects mature, subsequent cohorts and graduates will gain these diverse experiences.

Our ongoing evaluation of this program will be based on the quality of final projects, feedback from health system leadership, and periodic evaluations from current trainees and graduates. We hope this evaluation will identify particularly valuable skills going forward, as well as skills we could teach more effectively. In the spirit of continual improvement, we anticipate that the LHSTP will continue to evolve rapidly, improving our learners' experience and adapting to future conditions.

Acknowledgments

Funding: AAMC Pioneer 2014 and Champion 2015 awards, Duke University Office of the Chancellor for Health Affairs, Duke University School of Medicine, Duke Institute for Healthcare Innovation.

LHSTP Graduates (not listed in author line): Aaron Mitchell, MD; Kevin Shah, MD, MBA; Angela Lowenstern, MD; Leslie Pineda, MD; Anastasiya Chystsiakova, MD; Jeffrey Yang, MD; Mohammed Adam, MD; Melissa Wells, MD; John Yeatts, MD; Kathryn Hudson, MD.

Disclosures: Dr. Cameron is supported by the Duke Training Grant in Nephrology 5T32DK007731. Dr. Abernethy has research funding from DARA Biosciences, GlaxoSmithKline, Celgene, Helsinn Therapeutics, Dendreon, Kanglaite, Bristol Myers Squibb, and Pfizer, as well as federal sources; these funds are all distributed to Duke University Medical Center to support research, including salary support for Dr. Abernethy. Pending industry-funded projects include Galena Biopharma and Insys Therapeutics. Since 2012, she has had consulting agreements with or received honoraria from (>$5,000 annually) Bristol Myers Squibb and ACORN Research. Dr. Abernethy has corporate leadership responsibility in Flatiron Health Inc (health information technology [HIT] company; Chief Medical Officer & Senior Vice President), athenahealth Inc (HIT company; Director), Advoset LLC (education company; Owner), and Orange Leaf Associates LLC (IT development company; Owner).

References

1. Institute of Medicine Roundtable on Evidence-Based Medicine. The National Academies Collection: Reports funded by National Institutes of Health. In: Olsen LA, Aisner D, McGinnis JM, editors. The Learning Healthcare System: Workshop Summary. Washington (DC): National Academies Press (US), National Academy of Sciences; 2007.

2. Greene SM, Reid RJ, Larson EB. Implementing the Learning Health System: From Concept to Action. Annals of Internal Medicine. 2012;157(3):207–10. doi: 10.7326/0003-4819-157-3-201208070-00012.

3. Institute of Medicine. The National Academies Collection: Reports funded by National Institutes of Health. In: Grossmann C, Powers B, McGinnis JM, editors. Digital Infrastructure for the Learning Health System: The Foundation for Continuous Improvement in Health and Health Care: Workshop Series Summary. Washington (DC): National Academies Press (US), National Academy of Sciences; 2011.

4. Forrest CB, Crandall WV, Bailey LC, Zhang P, Joffe MM, Colletti RB, et al. Effectiveness of anti-TNFalpha for Crohn disease: research in a pediatric learning health system. Pediatrics. 2014;134(1):37–44. doi: 10.1542/peds.2013-4103.

5. Horvath MM, Rusincovitch SA, Brinson S, Shang HC, Evans S, Ferranti JM. Modular design, application architecture, and usage of a self-service model for enterprise data delivery: the Duke Enterprise Data Unified Content Explorer (DEDUCE). Journal of Biomedical Informatics. 2014;52:231–42. doi: 10.1016/j.jbi.2014.07.006.

6. Horvath MM, Winfield S, Evans S, Slopek S, Shang H, Ferranti J. The DEDUCE Guided Query tool: providing simplified access to clinical data for research and quality improvement. Journal of Biomedical Informatics. 2011;44(2):266–76. doi: 10.1016/j.jbi.2010.11.008.

7. Samsa GP, LeBlanc TW, Zaas A, Howie L, Abernethy AP. Designing a Course in Statistics for a Learning Health Systems Training Program. The Journal of Effective Teaching. 2014;14(3):55–67.

8. Gellad ZF, Thompson CP, Taheri J. Endoscopy unit efficiency: quality redefined. Clinical Gastroenterology and Hepatology: the official clinical practice journal of the American Gastroenterological Association. 2013;11(9):1046–9.e1. doi: 10.1016/j.cgh.2013.06.005.

9. Bot BM, Burdick D, Kellen M, Huang ES. clearScience: Infrastructure for Communicating Data-Intensive Science. AMIA Joint Summits on Translational Science Proceedings. 2013;2013:27.

10. Drawz PE, Archdeacon P, McDonald CJ, Powe NR, Smith KA, Norton J, et al. CKD as a Model for Improving Chronic Disease Care through Electronic Health Records. Clinical Journal of the American Society of Nephrology: CJASN. 2015;10(8):1488–99. doi: 10.2215/CJN.00940115.

11. Richesson RL, Chute CG. Health information technology data standards get down to business: maturation within domains and the emergence of interoperability. Journal of the American Medical Informatics Association: JAMIA. 2015;22(3):492–4. doi: 10.1093/jamia/ocv039.