Abstract

Background

Clinicians can miss up to half of patients’ symptomatic toxicities in cancer clinical trials and routine practice. Although patient-reported outcome questionnaires have been developed to capture this information, it is unclear whether clinicians will make use of patient-reported outcomes to inform their own toxicity documentation, or to prompt symptom management activities.

Methods

Forty-four patients with lung cancer who participated in a phase 2 treatment trial self-reported 13 symptomatic toxicities derived from the National Cancer Institute’s Common Terminology Criteria for Adverse Events, together with Karnofsky Performance Status, via tablet computers in waiting areas immediately preceding scheduled visits. During visits, clinicians viewed patients’ self-reported toxicity and performance status ratings on a computer interface and could agree with or reassign the grades (“shared” reporting). Agreement of clinicians with patient-reported grades was tabulated and compared using weighted kappa statistics. Clinical actions in response to patient-reported severe (grade 3/4) toxicities were measured (e.g. treatment discontinuation, dose reduction, supportive medications). For comparison, 45 non-trial patients with lung cancer being treated in the same clinic by the same physicians were simultaneously enrolled in a parallel cohort study in which patients also self-reported toxicity grades but reports were not shared with clinicians (“non-shared” reporting).

Results

Toxicities and performance status were reported by patients and reviewed by clinicians at 99.7% (780/782) of study visits in the phase 2 trial, which used “shared” reporting. Clinicians agreed with patients 93% of the time, with kappas of 0.82–0.92. Clinical actions were taken in response to 67% of severe patient-reported toxicities. In the “non-shared” reporting comparison group, clinicians agreed with patients 56% of the time, with kappas of 0.04–0.48 (significantly worse than shared reporting for all symptoms), and clinical actions were taken in response to 44% of severe patient-reported toxicities.

Conclusion

Clinicians will frequently agree with patient-reported symptoms and performance status, and will use this information to guide documentation and symptom management. (ClinicalTrials.gov: NCT00807573).

Keywords: Patient-reported outcome, adverse event, toxicity, drug development, clinical trial

Introduction

Symptomatic toxicities such as nausea or sensory neuropathy and performance status impairments are common during cancer treatment.1,2 Capturing information about these patient experiences is an essential component of clinical research and of treatment management. The current standard approach for capturing this information in clinical trials involves research staff eliciting and documenting patients’ toxicities using items from the National Cancer Institute’s Common Terminology Criteria for Adverse Events (CTCAE),3 and performance status using standardized questions such as Karnofsky Performance Status (KPS).4 However, this approach, which does not involve systematic patient self-reporting, has been found to miss up to half of patients’ symptoms and functional limitations5–9 and to have low levels of inter-rater reliability.10 The ultimate consequence of this low recognition is underestimation of treatment toxicities and potentially inappropriate clinical management for ailing patients.11

Patient self-reporting has been proposed as an approach to improve the accuracy of symptom and performance status monitoring in trials, and has been found to be reliable, feasible, and valued by patients and clinicians.12–20 There are ongoing efforts to develop a patient-reported outcomes extension of the CTCAE called the PRO-CTCAE.21 However, there are several basic unanswered questions related to implementation of patient-reported toxicities and performance status in trials. First, given that patient and clinician reporting is discrepant, how might we reconcile this discrepancy in a given clinical trial when both patients and clinicians are reporting on the same domains? Second, will clinicians agree with the reports of their patients? And third, will clinicians find the patient-reported information to be actionable (e.g. useful as a basis to prompt treatment changes)?

To address these questions, we designed a pilot study in which patients enrolled in a phase 2 clinical trial and self-reported symptomatic toxicities from the CTCAE and KPS using previously developed and tested items.8,18,22 This information was conveyed electronically to clinicians who could either agree or disagree with the patients’ grades as an approach to reconciliation at the point of care.

Methods

Patients and procedures

All patients enrolled in a previously reported23 phase 2 trial at the Memorial Sloan Kettering Cancer Center evaluating paclitaxel plus pemetrexed and bevacizumab in untreated advanced non-small cell lung cancer also participated in this patient-reported outcomes correlative study. All participants signed an informed consent document for this Institutional Review Board-approved study (ClinicalTrials.gov: NCT00807573). Treatment was continued until progressive disease or unacceptable toxicity.

At baseline and at each subsequent medical visit, participants were provided with a wireless tablet computer in the waiting area prior to the clinical encounter, via which they self-reported 13 symptomatic toxicities using previously developed and tested patient versions of the CTCAE and KPS,18 and a previously described patient software interface.22 The 13 symptomatic toxicities included alopecia, anorexia, cough, dyspnea, epiphora, epistaxis, fatigue, hoarseness, mucositis, myalgia, nausea, pain, and sensory neuropathy. CTCAE items representing individual toxicities are graded on a five-point ordinal scale anchored to discrete clinical criteria with higher numbers being worse and grades 3 and 4 generally indicating a need for clinical action.3 KPS is graded using an 11-point ordinal percentage scale separated by 10-point increments (e.g. 10%, 20%, 30% … 100%) with higher numbers being better.4

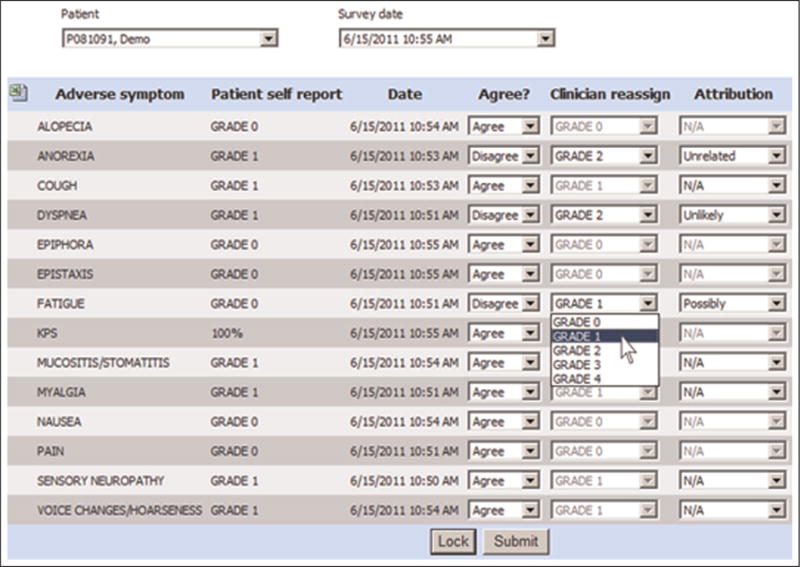

The patient-assigned grades were transmitted to a practitioner software interface that allowed clinicians to view the patient-assigned grades and either agree or reassign any grades in real-time (“shared” reporting) (Figure 1). The practitioner interface was accessed by research nurses via their own tablet computers during visits, following which attending oncologist physician investigators accessed it to view both the patient- and nurse-assigned grades, with an opportunity to further modify the grades before digitally locking them for submission to the institutional clinical research database. The final investigator-locked grades were used as the definitive adverse event documentation for the trial.

Figure 1.

Clinical trial web interface via which clinicians can review and agree or modify patient-reported CTCAE and KPS ratings in real-time.

CTCAE: Common Terminology Criteria for Adverse Events; KPS: Karnofsky Performance Status.
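As an illustration only, the shared reporting workflow described above (patient self-report, research nurse review, investigator review and locking) can be sketched as a small record type. The class, field, and method names below are hypothetical and are not taken from the study software. The sketch also assumes that a reviewer who does not reassign a grade carries forward the grade from the previous step.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SharedGrade:
    """One patient-reported item at one visit (hypothetical record layout)."""
    item: str                                  # e.g. "fatigue" or "KPS"
    patient_grade: int                         # grade entered by the patient
    nurse_grade: Optional[int] = None          # set during nurse review
    investigator_grade: Optional[int] = None   # set during investigator review
    locked: bool = False

    def _require_unlocked(self) -> None:
        if self.locked:
            raise RuntimeError("grade is locked and can no longer be modified")

    def nurse_review(self, reassigned: Optional[int] = None) -> None:
        """Nurse agrees (no argument) or reassigns the patient grade."""
        self._require_unlocked()
        self.nurse_grade = self.patient_grade if reassigned is None else reassigned

    def investigator_review(self, reassigned: Optional[int] = None) -> None:
        """Investigator keeps the nurse grade (assumption) or modifies it."""
        self._require_unlocked()
        if self.nurse_grade is None:
            raise ValueError("nurse review has not been recorded yet")
        self.investigator_grade = (
            self.nurse_grade if reassigned is None else reassigned
        )

    def lock(self) -> int:
        """Lock the record and return the final investigator grade."""
        self._require_unlocked()
        if self.investigator_grade is None:
            raise ValueError("investigator review has not been recorded yet")
        self.locked = True
        return self.investigator_grade


# Example: the patient reports grade 3 fatigue, the nurse reassigns it to
# grade 2, and the investigator keeps the nurse grade and locks the record.
record = SharedGrade(item="fatigue", patient_grade=3)
record.nurse_review(reassigned=2)
record.investigator_review()
final_grade = record.lock()
```

Because the patient grade sits in its own field and is never overwritten by later reviews, the original self-report stays available alongside the investigator-locked grade.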

For comparison, a parallel cohort study was conducted simultaneously with the phase 2 trial. It enrolled individuals with similar eligibility who were being treated in the same clinic by the same physicians but were not enrolled in the clinical trial. In this comparison study, a smaller subset of six CTCAE symptomatic toxicities plus KPS were self-reported by patients at clinic visits via tablet computers, but reports were not shared with research nurses or oncologists (“non-shared” reporting). The measured symptoms included anorexia, cough, dyspnea, fatigue, nausea, and pain. These patients received treatment at the discretion of their physicians. This research component was also Institutional Review Board-approved and patients provided informed consent. Clinical staff similarly documented symptomatic toxicities using CTCAE grades, but without access to patient self-reports.

Outcomes

For both the phase 2 trial (“shared” reporting) group and the comparison study (“non-shared” reporting) group, the proportion of patient-clinician pairs agreeing and disagreeing on each CTCAE symptomatic toxicity and KPS was tabulated. The primary comparison was conducted at the third scheduled clinic visit for each patient; as sensitivity analyses, agreement was also tabulated at the fifth visit and by aggregating all CTCAE data across six visits. Clinicians’ actions taken on days when patients self-reported severe (grade 3 or 4) events were tracked via chart abstraction. Clinical actions were categorized based on prior research24 as: no action; holding chemotherapy for that visit; discontinuing treatment; dose-reducing chemotherapy; referring to a subspecialty service; referring for imaging; prescribing supportive medication; increasing analgesic dose; sending to emergency room; and ordering a blood transfusion. In the shared reporting group, rates of clinician agreement, upgrading, and downgrading at each level of patient grade and for each symptom were calculated. A brief paper questionnaire was administered to clinicians caring for patients in the clinical trial after 6 months of participation, based on feasibility assessments used in prior similar research,18 to assess ease of use and satisfaction with the shared reporting system.

Statistical analysis

For both the phase 2 trial (“shared” reporting) group and the comparison study (“non-shared” reporting) group, clinician agreement with patient reports was evaluated for each symptomatic toxicity and overall. We calculated the proportion of patient-clinician symptom pairs for which an identical grade was assigned by patient and clinician, and the proportion of pairs where the clinicians graded lower (downgraded) and graded higher (upgraded). The level of agreement between patients and clinicians for each symptom and KPS was estimated for the shared and non-shared reporting groups with a weighted kappa statistic.15 A kappa value less than 0.4 is generally considered poor agreement; 0.4–0.75 is considered fair-to-good agreement; and 0.75 or more is considered excellent agreement. In addition, the level of agreement for each symptom was compared between groups using a chi-square statistic. Clinical actions taken in response to severe (grade 3 or 4) toxicities were tabulated descriptively for each group.
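The agreement measures described above can be illustrated with a short sketch. It assumes linear disagreement weights for the weighted kappa, since the weighting scheme is not stated here, and it uses made-up toy grade pairs rather than study data. The function names are illustrative.

```python
from collections import Counter


def agreement_summary(pairs):
    """Proportion of (patient, clinician) pairs that agree, are downgraded
    by the clinician, or are upgraded by the clinician."""
    n = len(pairs)
    agree = sum(1 for p, c in pairs if c == p)
    down = sum(1 for p, c in pairs if c < p)
    up = sum(1 for p, c in pairs if c > p)
    return {"agree": agree / n, "downgraded": down / n, "upgraded": up / n}


def weighted_kappa(pairs, categories):
    """Cohen's weighted kappa with linear disagreement weights (assumption)."""
    k = len(categories)
    index = {cat: i for i, cat in enumerate(categories)}
    n = len(pairs)
    observed = Counter((index[p], index[c]) for p, c in pairs)
    patient_marginal = Counter(index[p] for p, _ in pairs)
    clinician_marginal = Counter(index[c] for _, c in pairs)

    observed_disagreement = 0.0
    expected_disagreement = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)  # 0 on the diagonal, 1 at the extremes
            observed_disagreement += weight * observed[(i, j)] / n
            expected_disagreement += (
                weight * (patient_marginal[i] / n) * (clinician_marginal[j] / n)
            )
    if expected_disagreement == 0:
        return float("nan")  # undefined when all ratings fall in one category
    return 1.0 - observed_disagreement / expected_disagreement


def interpret_kappa(kappa):
    """Thresholds quoted in the text: <0.4 poor, 0.4-0.75 fair-to-good,
    0.75 or more excellent."""
    if kappa < 0.4:
        return "poor"
    if kappa < 0.75:
        return "fair-to-good"
    return "excellent"


# Toy (patient grade, clinician grade) pairs, NOT study data.
toy_pairs = [(0, 0), (1, 1), (2, 1), (3, 2), (1, 1), (0, 1)]
summary = agreement_summary(toy_pairs)
kappa = weighted_kappa(toy_pairs, categories=[0, 1, 2, 3])
label = interpret_kappa(kappa)
```

For these toy pairs, half of the grades agree, two of six are downgraded, and one of six is upgraded. Other weighting schemes (for example quadratic weights) would give different kappa values for the same data.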

Results

Baseline characteristics of patients enrolled in the phase 2 trial (shared reporting group; N = 44) and in the comparison study (non-shared reporting group; N = 45) are shown in Table 1. Participants were similar in sex, age, race, and baseline performance status.

Table 1.

Baseline characteristics of the patient participants.

| Patient attributes | Phase 2 trial group (shared CTCAE/KPS reporting) (N = 44) | Comparison group (non-shared CTCAE/KPS reporting) (N = 45) |
|---|---|---|
| Sex | | |
| Female | 22 (50%) | 21 (47%) |
| Age | | |
| Median (range) | 59 (31–77) | 59 (38–76) |
| >70 | 3 (7%) | 2 (4%) |
| 50–70 | 35 (80%) | 33 (73%) |
| <50 | 7 (16%) | 10 (22%) |
| Race | | |
| Caucasian | 41 (93%) | 38 (84%) |
| African-American | 0 (0%) | 4 (9%) |
| Other | 3 (7%) | 3 (7%) |
| Baseline KPS | | |
| ≥90 | 19 (43%) | 17 (38%) |
| 80 | 20 (45%) | 23 (51%) |
| 70 | 5 (11%) | 5 (11%) |
| ≤60 | 0 (0%) | 0 (0%) |

CTCAE: Common Terminology Criteria for Adverse Events; KPS: Karnofsky Performance Status.

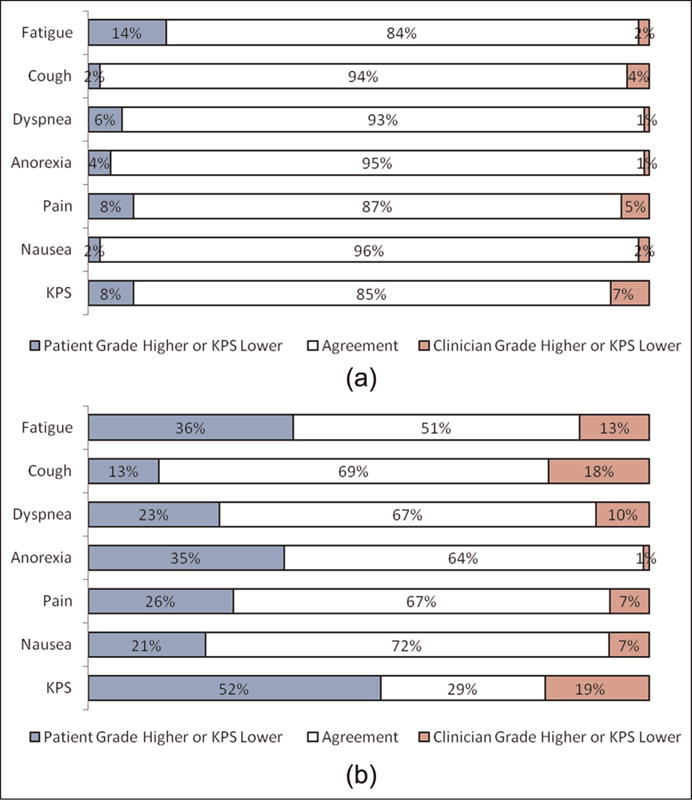

Figure 2 shows the proportion of patient-clinician pairs in each group with agreement and disagreement for the six CTCAE symptoms reported in both groups and for KPS. In the shared reporting group, overall agreement of clinician-reported grades with patient-reported grades was 93%, with agreement for specific symptoms and KPS ranging from 84% to 96%. This magnitude of agreement was observed across all 13 assessed symptoms. In contrast, in the non-shared reporting group, overall agreement was lower at 56%, ranging from 51% to 72% across symptoms. Results were similar in the planned sensitivity analyses. In both groups, when patients and clinicians assigned discrepant grades, the discrepancy was 1 grade point on the CTCAE scale in 82% of cases and 2 or more points in 18%.

Figure 2.

(a) Agreement between patient and clinician CTCAE and KPS ratings when patient-reported adverse events are shared with clinicians in real-time at the point of care (“shared” reporting) and (b) agreement between patient and clinician CTCAE and KPS ratings when patient-reported adverse events are not shared with clinicians (“non-shared” reporting).

CTCAE: Common Terminology Criteria for Adverse Events; KPS: Karnofsky Performance Status.

Note: Levels of agreement for the additional measured symptoms were similar to those for the symptoms shown, including alopecia (89%); epiphora (88%); epistaxis (95%); mucositis (89%); myalgia (79%); sensory neuropathy (98%); and voice changes/hoarseness (91%).

A comparison of levels of agreement between clinician-patient pairs in the shared and non-shared reporting groups is shown in Table 2. For the shared reporting group, weighted kappas ranged from 0.82 to 0.92, indicating excellent agreement, whereas for the non-shared group, kappas ranged from 0.04 to 0.48, indicating generally poor agreement. Weighted kappas were statistically significantly higher in the shared group than in the non-shared group for all symptoms, with all p < 0.01.

Table 2.

Agreement between patients and clinicians in their toxicity and performance status ratings at cycle 3 of treatment, comparing shared reporting (patient reports shared with clinicians at point of care) versus non-shared reporting (patient reports not shared with clinicians).

| | Weighted kappa, shared reporting | Weighted kappa, non-shared reporting | p value |
|---|---|---|---|
| Pain | 0.82 | 0.39 | 0.001 |
| Nausea | 0.91 | 0.48 | <0.001 |
| Fatigue | 0.84 | 0.04 | <0.001 |
| Dyspnea | 0.91 | 0.38 | <0.001 |
| Cough | 0.92 | 0.36 | 0.002 |
| Anorexia | 0.9 | 0.21 | <0.001 |
| KPS | 0.83 | 0.24 | <0.001 |

KPS: Karnofsky Performance Status.

In the shared reporting group, clinicians did not agree with patients in 7% of cases (685 of the 9610 patient-reported toxicities), and rates of agreement varied depending on the initial grade level reported by patients (Table 3). There was greater disagreement at higher grade levels, with more downgrading by clinicians than upgrading. When considering grade 3 toxicities specifically, most of the patient-reported events consisted of fatigue, pain, and dyspnea (accounting for 104/168 (62%) of reported toxicities), and the majority of these were downgraded by clinicians (eTable 1; online only).

Table 3.

Rates of clinician agreement with patient-reported symptomatic toxicities, by grade level of patient reports, when patient-reported toxicities were shared with clinicians in a phase 2 clinical trial (N = 44).

| Patient-reported grade level | Total # reported by patients | Clinician agreed # (%) | Clinician upgraded # (%) | Clinician downgraded # (%) |
|---|---|---|---|---|
| Grade 0 | 6158 | 6000 (97%) | 152 (2%) | Not applicable |
| Grade 1 | 2553 | 2407 (94%) | 94 (3.5%) | 49 (2%) |
| Grade 2 | 731 | 471 (64%) | 4 (<1%) | 253 (35%) |
| Grade 3 | 168 | 47 (28%) | Not applicable | 121 (72%) |
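
As a quick arithmetic check, the agreement percentages in Table 3 and the overall disagreement count quoted in the text can be recomputed from the table's totals and agreement counts. The variable names are illustrative; the counts are copied from Table 3.

```python
# (total reported by patients, clinician agreed) per patient-reported grade,
# copied from Table 3.
table3 = {0: (6158, 6000), 1: (2553, 2407), 2: (731, 471), 3: (168, 47)}

total_reports = sum(total for total, _ in table3.values())
total_agreed = sum(agreed for _, agreed in table3.values())
total_disagreed = total_reports - total_agreed

# Agreement percentage at each grade level, rounded to whole percent.
agreement_by_grade = {
    grade: round(100 * agreed / total)
    for grade, (total, agreed) in table3.items()
}
overall_agreement = round(100 * total_agreed / total_reports)

print(total_reports, total_disagreed, overall_agreement)  # 9610 685 93
print(agreement_by_grade)  # {0: 97, 1: 94, 2: 64, 3: 28}
```

The recomputed values match the 9610 reports, 685 disagreements, and 93% overall agreement given in the text, and the per-grade agreement percentages in Table 3.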

Clinical actions taken concomitantly with patient-reported severe (grade 3 or 4) toxicities are shown in eTable 2 (online only). In the shared reporting group, 126/187 (67%) of patient-reported severe toxicities were associated with clinical actions. In the non-shared group, 22/51 (44%) of patient-reported severe toxicities were associated with clinical actions. In both groups, the most frequent severe toxicities were fatigue, pain, and dyspnea; the most frequent clinical actions were holding treatment and prescribing supportive medications.

In a paper questionnaire administered to the 12 clinicians caring for patients enrolled in the clinical trial (four physicians and eight research nurses), the amount of time to electronically retrieve and reassign patient-reported grades at any visit was reported as 2 min (range, 1–6). All respondents (12/12; 100%) felt the software was easy to use and intuitive and that they would feel comfortable using it in future studies. Most (10/12; 83%) used the system to aid their toxicity assessments during the trial, although fewer (4/11; 36%) felt they used the system regularly to make clinical decisions.

Discussion

In this pilot study, an electronic system for sharing patient-reported symptoms with clinicians in real-time was feasible to implement in a phase 2 clinical trial, with virtually all patients and clinicians completing symptom reports and reviews at the point of care. Moreover, clinicians made use of this information to inform their own symptom assessments, documentation, and symptom management activities. Prior research has reported that clinicians under-detect symptoms.5–9 This study provides evidence that this under-detection may be substantially alleviated by sharing patient reports with clinicians as a standard part of the symptom assessment and documentation workflow.

Agreement between patients and clinicians was 93% when patient reports were shared at appointments, which was improved compared to the non-shared comparison group (and to historical comparisons7,8), where overall agreement was 56%. Although this represents substantial and improved agreement, levels of agreement notably diminished as the severity of patient-reported toxicities rose. While it was rare for a clinician to disagree with a patient-reported grade of 0 (no symptom) or 1 (mild), clinicians more frequently downgraded grade 2 and 3 patient-reported toxicities. Moreover, while it was unusual for a clinician to upgrade a patient’s grade 2 up to a grade 3, it was more common for a clinician to downgrade a 3 to a 2.

The reasons for clinician downgrades of grade 3 toxicities may be related to clinicians’ preferences not to hold or dose-reduce chemotherapy treatments for patients enrolled in the trial, which was required in the protocol for any documented grade 3 toxicity. Despite this potential inclination, clinicians nonetheless agreed with patient-reported grade 3 toxicities about a third of the time. Another potential reason for clinician downgrades is that the patient questionnaire used in this study was an early patient adaptation of the CTCAE22 which was subsequently substantially revised and validated under contract to the National Cancer Institute.21 A particular limitation of the grade 3 designations in the questionnaire version used in this study was inclusion of clinical criteria such as need for an intervention (e.g. intravenous fluids), which a patient may not be in the best position to answer. The new version, the PRO-CTCAE,21 includes no such criteria and was rigorously developed and tested with patient and clinician input. Notably, there is an ongoing multicenter cooperative group trial comparing shared versus non-shared reporting using the PRO-CTCAE. Regardless, this finding suggests that although sharing patient reports can enhance clinician reporting, for analysis purposes, patient self-reports may be considered in their unadulterated forms as a reflection of the unfiltered patient experience.

There are several additional limitations of this study, including its conduct in a single center and use of a non-randomized comparison. Although the comparison groups appeared similar at baseline, their subsequent experiences with treatment likely differed, underlined by the observation that during a similar reporting period, there were 187 severe (≥grade 3) symptomatic toxicities reported in the clinical trial population compared to 51 in the non-clinical trial population. The differences between groups are inherent limitations of a pilot study, which are being addressed in an ongoing follow-up cooperative group study that includes randomization to reporting or not.

In conclusion, this study provides compelling evidence that a shared reporting approach brings clinician perception and documentation into greater alignment with the patient perspective. This approach not only has implications for research conduct, but also for enhancing symptom management and communication. With proliferation of electronic health records and patient portals, it is likely that patients will increasingly be asked to report their own health status.25 This study provides a preview of how that information might be electronically integrated into clinical workflow.

Acknowledgments

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Funding for this research was provided by the Society of Memorial Sloan Kettering Cancer Center.

Footnotes

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

- 1. Reilly CM, Bruner DW, Mitchell SA, et al. A literature synthesis of symptom prevalence and severity in persons receiving active cancer treatment. Support Care Cancer. 2013;21:1525–1550. doi: 10.1007/s00520-012-1688-0.

- 2. Henry DH, Viswanathan HN, Elkin EP, et al. Symptoms and treatment burden associated with cancer treatment: results from a cross-sectional national survey in the U.S. Support Care Cancer. 2008;16:791–801. doi: 10.1007/s00520-007-0380-2.

- 3. National Cancer Institute, National Institutes of Health, U.S. Department of Health and Human Services. Common Terminology Criteria for Adverse Events (CTCAE), version 4.0 (NIH publication # 09-7473). Published 29 May 2009; revised version 4.03, 14 June 2010. http://evs.nci.nih.gov/ftp1/CTCAE/CTCAE_4.03_2010-06-14_QuickReference_5x7.pdf (accessed 1 May 2015).

- 4. Schag CC, Heinrich RL, Ganz PA. Karnofsky performance status revisited: reliability, validity, and guidelines. J Clin Oncol. 1984;2:187–193. doi: 10.1200/JCO.1984.2.3.187.

- 5. Fromme EK, Eilers KM, Mori M, et al. How accurate is clinician reporting of chemotherapy adverse effects? A comparison with patient-reported symptoms from the Quality-of-Life Questionnaire C30. J Clin Oncol. 2004;22:3485–3490. doi: 10.1200/JCO.2004.03.025.

- 6. Laugsand EA, Sprangers MA, Bjordal K, et al. Health care providers underestimate symptom intensities of cancer patients: a multicenter European study. Health Qual Life Outcomes. 2010;8:104. doi: 10.1186/1477-7525-8-104.

- 7. Basch E, Iasonos A, McDonough T, et al. Patient versus clinician symptom reporting using the National Cancer Institute Common Terminology Criteria for Adverse Events: results of a questionnaire-based study. Lancet Oncol. 2006;7:903–909. doi: 10.1016/S1470-2045(06)70910-X.

- 8. Basch E, Jia X, Heller G, et al. Adverse symptom event reporting by patients vs clinicians: relationships with clinical outcomes. J Natl Cancer Inst. 2009;101:1624–1632. doi: 10.1093/jnci/djp386.

- 9. Pakhomov SV, Jacobsen SJ, Chute CG, et al. Agreement between patient-reported symptoms and their documentation in the medical record. Am J Manag Care. 2008;14:530–539.

- 10. Atkinson TM, Li Y, Coffey CW, et al. Reliability of adverse symptom event reporting by clinicians. Qual Life Res. 2012;21:1159–1164. doi: 10.1007/s11136-011-0031-4.

- 11. Fisch MJ, Lee JW, Weiss M, et al. Prospective, observational study of pain and analgesic prescribing in medical oncology outpatients with breast, colorectal, lung, or prostate cancer. J Clin Oncol. 2012;30:1980–1988. doi: 10.1200/JCO.2011.39.2381.

- 12. Cleeland CS, Wang XS, Shi Q, et al. Automated symptom alerts reduce postoperative symptom severity after cancer surgery: a randomized controlled clinical trial. J Clin Oncol. 2011;29:994–1000. doi: 10.1200/JCO.2010.29.8315.

- 13. Gilbert JE, Howell D, King S, et al. Quality improvement in cancer symptom assessment and control: the Provincial Palliative Care Integration Project (PPCIP). J Pain Symptom Manage. 2012;43:663–678. doi: 10.1016/j.jpainsymman.2011.04.028.

- 14. Valderas JM, Kotzeva A, Espallargues M, et al. The impact of measuring patient-reported outcomes in clinical practice: a systematic review of the literature. Qual Life Res. 2008;17:179–193. doi: 10.1007/s11136-007-9295-0.

- 15. Chen J, Ou L, Hollis SJ. A systematic review of the impact of routine collection of patient reported outcome measures on patients, providers and health organisations in an oncologic setting. BMC Health Serv Res. 2013;13:211. doi: 10.1186/1472-6963-13-211.

- 16. Kotronoulas G, Kearney N, Maguire R, et al. What is the value of the routine use of patient-reported outcome measures toward improvement of patient outcomes, processes of care, and health service outcomes in cancer care? A systematic review of controlled trials. J Clin Oncol. 2014;32:1480–1501. doi: 10.1200/JCO.2013.53.5948.

- 17. Detmar SB, Muller MJ, Schornagel JH, et al. Health-related quality-of-life assessments and patient-physician communication: a randomized controlled trial. JAMA. 2002;288:3027–3034. doi: 10.1001/jama.288.23.3027.

- 18. Basch E, Artz D, Dulko D, et al. Patient online self-reporting of toxicity symptoms during chemotherapy. J Clin Oncol. 2005;23:3552–3561. doi: 10.1200/JCO.2005.04.275.

- 19. Velikova G, Booth L, Smith AB, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. J Clin Oncol. 2004;22:714–724. doi: 10.1200/JCO.2004.06.078.

- 20. Berry DL, Blumenstein BA, Halpenny B, et al. Enhancing patient-provider communication with the electronic self-report assessment for cancer: a randomized trial. J Clin Oncol. 2011;29:1029–1035. doi: 10.1200/JCO.2010.30.3909.

- 21. Basch E, Reeve BB, Mitchell SA, et al. Development of the National Cancer Institute’s patient-reported outcomes version of the common terminology criteria for adverse events (PRO-CTCAE). J Natl Cancer Inst. 2014;106(9):dju244. doi: 10.1093/jnci/dju244.

- 22. Basch E, Artz D, Iasonos A, et al. Evaluation of an online platform for cancer patient self-reporting of chemotherapy toxicities. J Am Med Inform Assoc. 2007;14:264–268. doi: 10.1197/jamia.M2177.

- 23. Pietanza MC, Basch EM, Lash A, et al. Harnessing technology to improve clinical trials: study of real-time informatics to collect data, toxicities, image response assessments, and patient-reported outcomes in a phase II clinical trial. J Clin Oncol. 2013;31:2004–2009. doi: 10.1200/JCO.2012.45.8117.

- 24. Santana MJ, Feeny D, Johnson JA, et al. Assessing the use of health-related quality of life measures in the routine clinical care of lung-transplant patients. Qual Life Res. 2010;19:371–379. doi: 10.1007/s11136-010-9599-3.

- 25. Chung AE, Basch E. Incorporating the patient’s voice into electronic health records through patient-reported outcomes as the “review of systems”. J Am Med Inform Assoc. 2015;22:914–916. doi: 10.1093/jamia/ocu007.