Abstract

We prove conditional asymptotic normality of a class of quadratic U-statistics that are dominated by their degenerate second order part and have kernels that change with the number of observations. These statistics arise in the construction of estimators in high-dimensional semi- and non-parametric models, and in the construction of nonparametric confidence sets. This is illustrated by estimation of the integral of a square of a density or regression function, and estimation of the mean response with missing data. We show that estimators are asymptotically normal even in the case that the rate is slower than the square root of the observations.

Keywords: Quadratic functional, Projection estimator, Rate of convergence, U-statistic

1. Introduction

Let (X1, Y1), …, (Xn, Yn) be i.i.d. random vectors, taking values in sets 𝒳 × ℝ, for an arbitrary measurable space (𝒳, 𝒜) and ℝ equipped with the Borel sets. For given symmetric, measurable functions Kn: 𝒳 × 𝒳 → ℝ consider the U-statistics

| $U_n = \dfrac{1}{n(n-1)} \mathop{\sum\sum}_{1 \le r \ne s \le n} K_n(X_r, X_s)\, Y_r Y_s.$ | (1) |

If the kernel (x1, y1, x2, y2) ↦ Kn(x1, x2)y1y2 of the U-statistic were independent of n and had a finite second moment, then either the sequence $\sqrt{n}(U_n - EU_n)$ would be asymptotically normal or the sequence n(Un − EUn) would converge in distribution to Gaussian chaos. The two cases can be described in terms of the Hoeffding decomposition $U_n = EU_n + U_n^{(1)} + U_n^{(2)}$ of Un, where $U_n^{(1)}$ is the best approximation of Un − EUn by a sum of the type $\sum_{r=1}^n h(X_r, Y_r)$ and $U_n^{(2)}$ is the remainder, a degenerate U-statistic (compare (28) in Section 5). For a fixed kernel Kn the linear term $U_n^{(1)}$ dominates as soon as it is nonzero, in which case asymptotic normality pertains; in the other case $U_n^{(1)} = 0$ and the U-statistic possesses a nonnormal limit distribution.
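For concreteness, a statistic of this quadratic type can be computed as in the following minimal sketch. It assumes the usual normalisation 1/(n(n − 1)) over pairs r ≠ s (consistent with the factor n(n − 1) that appears in Section 5) and takes as input a precomputed matrix with entries Kn(Xr, Xs); the function names are hypothetical.

```python
import numpy as np

def quadratic_u_statistic(kernel_matrix, y):
    """(1 / (n(n-1))) * sum over r != s of K[r, s] * y[r] * y[s]."""
    K = np.asarray(kernel_matrix, dtype=float)
    y = np.asarray(y, dtype=float)
    n = y.shape[0]
    full = y @ K @ y                       # double sum including r == s
    diagonal = np.sum(np.diag(K) * y**2)   # the terms with r == s
    return (full - diagonal) / (n * (n - 1))

def quadratic_u_statistic_naive(kernel_matrix, y):
    """Same quantity by an explicit double loop, for checking."""
    n = len(y)
    total = 0.0
    for r in range(n):
        for s in range(n):
            if r != s:
                total += kernel_matrix[r][s] * y[r] * y[s]
    return total / (n * (n - 1))
```

The vectorised version subtracts the diagonal from the full quadratic form, which avoids the explicit double loop.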

If the kernel depends on n, then the separation between the linear and quadratic cases blurs. In this paper we are interested in this situation and specifically in kernels Kn that concentrate as n → ∞ more and more near the diagonal of 𝒳 × 𝒳. In our situation the variance of the U-statistics is dominated by the quadratic term $U_n^{(2)}$ of the Hoeffding decomposition. However, we show that the sequence (Un − EUn)/σ(Un) is typically still asymptotically normal. The intuitive explanation is that the U-statistics behave asymptotically as “sums across the diagonal r = s” and thus behave as sums of independent variables. Our formal proof is based on establishing conditional asymptotic normality given a binning of the variables Xr in a partition of the set 𝒳.

Statistics of the type (1) arise in many problems of estimating a functional on a semiparametric model, with Kn the kernel of a projection operator (see [1]). As illustrations we consider in this paper the problems of estimating $\int g^2(x)\,dx$ or $\int f^2(x)\,dG(x)$, where g is a density and f a regression function, and of estimating the mean treatment effect in missing data models. Rate-optimal estimators in the first of these three problems were considered by [2, 3, 4, 5, 6], among others. In Section 3 we prove asymptotic normality of the estimators in [4, 5], also in the case that the rate of convergence is slower than $\sqrt{n}$, usually considered to be the “nonnormal domain”. For the second and third problems estimators of the form (1) were derived in [1, 7, 8, 9] using the theory of second-order estimating equations. Again we show that these are asymptotically normal, also in the case that the rate is slower than $\sqrt{n}$.

Statistics of the type (1) also arise in the construction of adaptive confidence sets, as in [10], where the asymptotic normality can be used to set precise confidence limits.

Previous work on U-statistics with kernels that depend on n includes [14, 15, 16, 17, 18]. These authors prove unconditional asymptotic normality using the martingale central limit theorem, under somewhat different conditions. Our proof uses a Lyapounov central limit theorem (with moment 2 + ε) combined with a conditioning argument, and an inequality for moments of U-statistics due to E. Giné. Our conditions relate directly to the contraction of the kernel, and can be verified for a variety of kernels. The conditional form of our limit result should be useful to separate different roles for the observations, such as for constructing preliminary estimators and for constructing estimators of functionals. Another line of research (as in [11]) is concerned with U-statistics that are well approximated by their projection on the initial part of the eigenfunction expansion. This has no relation to the present work, as here the kernels explode and the U-statistic is asymptotically determined by the (eigen) directions “added” to the kernel as the number of observations increases. By making special choices of kernel and variables Yi, the statistics (1) can reduce to certain chisquare statistics, studied in [12, 13].

The paper is organized as follows. In Section 2 we state the main result of the paper, the asymptotic normality of U-statistics of the type (1) under general conditions on the kernels Kn. Statistical applications are given in Section 3. In Section 4 the conditions of the main theorem are shown to be satisfied by a variety of popular kernels, including wavelet, spline, convolution, and Fourier kernels. The proof of the main result is given in Section 5, while proofs for Section 4 are given in an appendix.

The notation a ≲ b means a ≤ Cb for a constant C that is fixed in the context. The notations an ~ bn and an ≪ bn mean that an/bn → 1 and an/bn → 0, as n → ∞. The space L2(G) is the set of measurable functions f: 𝒳 → ℝ that are square-integrable relative to the measure G and ‖f‖G is the corresponding norm. The product f × g of two functions is to be understood as the function (x1, x2) ↦ f(x1)g(x2), whereas the product F × G of two measures is the product measure.

2. Main result

In this section we state the main result of the paper, the asymptotic normality of the U-statistics (1), under general conditions on the kernels Kn and distributions of the vectors (Xr, Yr). For q > 0 let

$$\mu_q(x) = E(|Y_1|^q \mid X_1 = x), \qquad \mu(x) = E(Y_1 \mid X_1 = x)$$

be versions of the conditional (absolute) moments of Y1 given X1. For simplicity we assume that μ1 and μ2 are uniformly bounded. The marginal distribution of X1 is denoted by G.

The kernels are assumed to be measurable maps Kn: 𝒳 × 𝒳 → ℝ that are symmetric in their two arguments and satisfy $\int\int K_n^2 \, d(G \times G) < \infty$ for every n. Thus the corresponding kernel operators (with abuse of notation denoted by the same symbol)

| $K_n f(x) = \int f(v)\, K_n(x, v)\, dG(v)$ | (2) |

are continuous, linear operators Kn: L2(G) → L2(G). We assume that their operator norms ‖Kn‖ = sup{‖Knf‖G: ‖f‖G = 1} are uniformly bounded:

| $\sup_n \lVert K_n \rVert < \infty.$ | (3) |

By the Banach-Steinhaus theorem this is certainly the case if Knf → f in L2(G) as n → ∞ for every f ∈ L2(G). The operator norms ‖Kn‖ are typically much smaller than the L2(G×G)-norms of the kernels. The squares of the latter are typically of the same order of magnitude as the square L2(G × G)-norms weighted by μ2 × μ2, which we denote by

| $k_n = \int\int K_n^2 \, (\mu_2 \times \mu_2) \, d(G \times G).$ | (4) |

We consider the situation that these square weighted norms are of strictly larger order than n:

| $k_n / n \to \infty.$ | (5) |

Under condition (5) the variance of the U-statistic (1) is dominated by the variance of the quadratic part of its Hoeffding decomposition. In contrast, if kn is of the same order as n, the linear and quadratic parts contribute variances of equal order. This case can be handled by the methods of this paper, but requires a special discussion on the joint limits of the linear and quadratic terms, which we omit. The remaining case kn ≪ n leads to asymptotically linear U-statistics, and is well understood.

The remaining conditions concern the concentration of the kernels Kn to the diagonal of 𝒳 × 𝒳. We assume that there exists a sequence of finite partitions 𝒳 = ∪m𝒳n,m in measurable sets such that

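| $\dfrac{1}{k_n} \sum_m \int\int_{\mathcal{X}_{n,m} \times \mathcal{X}_{n,m}} K_n^2 \, (\mu_2 \times \mu_2)\, d(G \times G) \to 1,$ | (6) |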

| (7) |

| (8) |

| (9) |

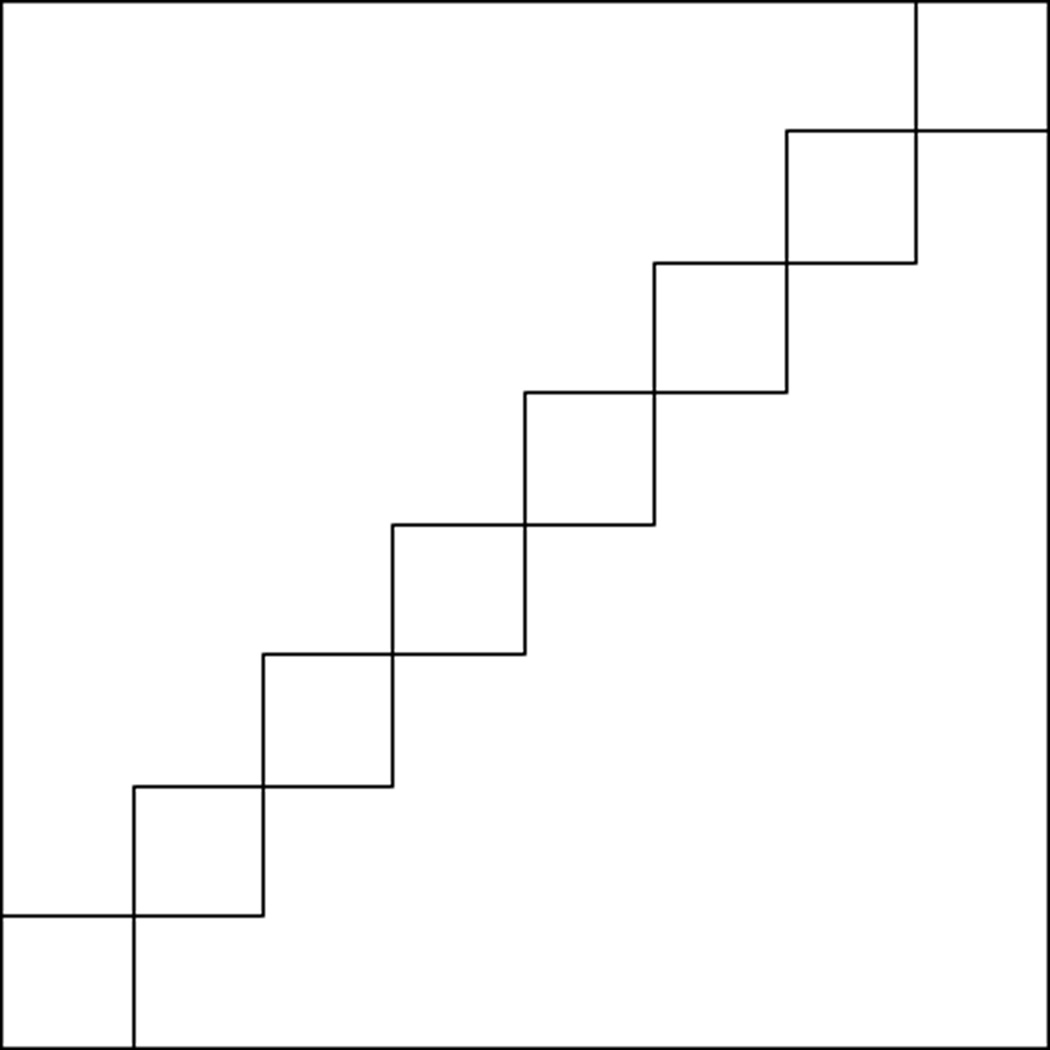

The sum in the first condition (6) is the integral of the square kernel (weighted by the function μ2 × μ2) over the set ∪m(𝒳n,m × 𝒳n,m) (shown in Figure 1). The condition requires this to be asymptotically equivalent to the integral kn of this same function over the whole product space 𝒳 × 𝒳. The other conditions implicitly require that the partitioning sets are not too different and not too numerous.

Figure 1. The diagonal of 𝒳 × 𝒳 covered by the set ∪m(𝒳n,m × 𝒳n,m).

A final condition requires implicitly that the partitioning is fine enough. For some q > 2, the partitions should satisfy

| (10) |

This condition will typically force the number of partitioning sets to infinity at a rate depending on n and kn (see Section 4). In the proof it serves as a Lyapounov condition to enforce normality.

The existence of partitions satisfying the preceding conditions depends mostly on the kernels Kn, and is established for various kernels in Section 4. The following theorem is the main result of the paper. Its proof is deferred to Section 5.

Let In be the vector with as coordinates In,1, …, In,n the indices of the partitioning sets containing X1, …, Xn, i.e. In,r = m if Xr ∈ 𝒳n,m. Recall that the bounded Lipschitz distance generates the weak topology on probability measures.

Theorem 2.1

Assume that the function μ2 is uniformly bounded. If (2) and (5) hold and there exist finite partitions 𝒳 = ∪m𝒳n,m such that (6)–(10) hold, then the bounded Lipschitz distance between the conditional law of (Un − EUn)/σ(Un) given In and the standard normal distribution tends to zero in probability. Furthermore var Un ~ 2kn/n² for kn given in (4).

The conditional convergence in distribution implies the unconditional convergence. It expresses that the randomness in Un is asymptotically determined by the fine positions of the Xi within the partitioning sets, the numbers of observations falling in the sets being fixed by In.
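As a numerical illustration of the variance statement of Theorem 2.1, the following sketch simulates Un in a toy setting that is an assumption of this example and not taken from the paper: X uniform on [0, 1], Y ≡ 1, and the histogram kernel Kn(x1, x2) = k·1{x1 and x2 fall in the same cell} for an equal-width partition into k cells. In this setting kn = k, and a direct calculation gives EUn = 1 and var Un = 2(k − 1)/(n(n − 1)), in agreement with var Un ~ 2kn/n². The values of n, k and the number of replications are hypothetical.

```python
import numpy as np

def histogram_u_statistics(n, k, reps, seed=0):
    """Simulate U_n for K_n = k * 1{same cell}, Y = 1, X uniform on [0, 1]."""
    rng = np.random.default_rng(seed)
    # Cell counts N_1, ..., N_k of n uniform observations, one row per replication.
    counts = rng.multinomial(n, np.full(k, 1.0 / k), size=reps)
    # Number of ordered pairs (r, s), r != s, that share a cell.
    pairs = np.sum(counts * (counts - 1), axis=1)
    return k * pairs / (n * (n - 1))

n, k = 100, 500                      # k/n is large, k/n^2 is small
u = histogram_u_statistics(n, k, reps=4000)
exact_var = 2 * (k - 1) / (n * (n - 1))
print(u.mean(), u.var(), exact_var)
```

Only the cell counts enter the statistic here, so the simulation draws multinomial counts directly instead of individual observations.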

In most of our examples the kernels are pointwise bounded above by a multiple of kn, and (4) arises, because the area where Kn is significantly different from zero is of the order $k_n^{-1}$. Condition (10) can then be simplified to

| (11) |

Lemma 2.1

Assume that the functions μ2 and μq are bounded away from zero and infinity, respectively. If ‖Kn‖∞ ≲ kn, then (10) is implied by (11).

Proof

The sum in (10) is bounded up to a constant by ∫ |Kn|q d(G × G), which is bounded above by a constant times $\lVert K_n \rVert_\infty^{q-2} k_n \lesssim k_n^{q-1}$, by the definition of kn.

3. Statistical applications

In this section we give examples of statistical problems in which statistics of the type (1) arise as estimators.

3.1. Estimating the integral of the square of a density

Let X1, …, Xn be i.i.d. random variables with a density g relative to a given measure ν on a measurable space (𝒳, 𝒜). The problem of estimating the functional $\int g^2\, d\nu$ has been addressed by many authors, including [2], [6] and [19]. The estimators proposed by [4, 5], which are particularly elegant, are based on an expansion of g on an orthonormal basis e1, e2, … of the space L2(𝒳, 𝒜, ν), so that $g = \sum_{i=1}^\infty \theta_i e_i$, for θi = ∫ gei dν the Fourier coefficients of g. Because $E e_i(X_1) e_i(X_2) = \theta_i^2$, the square Fourier coefficient $\theta_i^2$ can be estimated unbiasedly by the U-statistic with kernel (x1, x2) ↦ ei(x1)ei(x2). Hence the truncated sum of squares $\sum_{i=1}^k \theta_i^2$ can be estimated unbiasedly by

$$U_n = \frac{1}{n(n-1)} \mathop{\sum\sum}_{1 \le r \ne s \le n} \sum_{i=1}^k e_i(X_r) e_i(X_s).$$

This statistic is of the type (1) with kernel $K_n(x_1, x_2) = \sum_{i=1}^k e_i(x_1) e_i(x_2)$ and the variables Y1, …, Yn taken equal to unity.
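The projection estimator just described can be sketched as follows. The cosine basis of L2[0, 1] used here is an assumption made purely for illustration (the paper's results are stated for the bases of Section 4), and the function names are hypothetical.

```python
import numpy as np

def cosine_basis(x, k):
    """First k functions of the cosine basis 1, sqrt(2)cos(pi x), sqrt(2)cos(2 pi x), ..."""
    x = np.asarray(x, dtype=float)
    j = np.arange(k)
    basis = np.sqrt(2.0) * np.cos(np.pi * np.outer(x, j))
    basis[:, 0] = 1.0          # the constant function has norm one without the sqrt(2)
    return basis               # shape (n, k)

def estimate_integrated_square(x, k):
    """U-statistic estimate of the sum of the first k squared Fourier coefficients."""
    n = len(x)
    e = cosine_basis(x, k)
    col_sums = e.sum(axis=0)                         # sum over r of e_i(X_r)
    # sum over i of sum over r != s of e_i(X_r) e_i(X_s)
    off_diagonal = np.sum(col_sums**2) - np.sum(e**2)
    return off_diagonal / (n * (n - 1))
```

For k = 1 the estimate equals 1 for any sample, reflecting that the first coefficient of a density on [0, 1] in this basis is always 1.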

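The estimator Un is unbiased for the truncated series $\sum_{i=1}^k \theta_i^2$, but biased for the functional of interest $\int g^2\, d\nu = \sum_{i=1}^\infty \theta_i^2$. The variance of the estimator can be computed to be of the order $k/n^2 \vee 1/n$ (cf. (29) below). If the Fourier coefficients are known to satisfy $\sum_{i=1}^\infty \theta_i^2 i^{2\beta} < \infty$, then the bias can be bounded by $\sum_{i > k} \theta_i^2 \lesssim k^{-2\beta}$, and trading square bias versus the variance leads to the choice $k = n^{1/(2\beta+1/2)}$.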

In the case that β > 1/4, the mean square error of the estimator is of the order 1/n and the sequence $\sqrt{n}(U_n - \int g^2\, d\nu)$ can be shown to be asymptotically linear in the efficient influence function $2(g - \int g^2\, d\nu)$ (see (28) with μ(x) = E(Y1| X1 = x) ≡ 1 and [4], [5]). More interesting from our present perspective is the case that 0 < β < 1/4, when the mean square error is of order $n^{-4\beta/(2\beta+1/2)} \gg 1/n$, and the variance of Un is dominated by its second-order term. By Theorem 2.1 the estimator, centered at its expectation, and with the orthonormal basis (ei) one of the bases discussed in Section 4, is still asymptotically normally distributed.

The estimator depends on the parameter β through the choice of k. If β is not known, then it would typically be estimated from the data. Our present result does not apply to this case, but extensions are conceivable.

3.2. Estimating the integral of the square of a regression function

Let (X1, Y1), …, (Xn, Yn) be i.i.d. random vectors following the regression model Yi = b(Xi) + εi for unobservable errors εi that satisfy E(εi|Xi) = 0. It is desired to estimate $\int b^2\, dG$ for G the marginal distribution of X1, …, Xn.

If the distribution G is known, then an appropriate estimator can take exactly the form (1), for Kn the kernel of an orthonormal projection on a suitable kn-dimensional space in L2(G). Its asymptotics are as in Section 3.1.

Because an orthogonal projection in L2(G) can only be constructed if G is known, the preceding estimator is not available if G is unknown. If the regression function b is regular of order β ≥ 1/4, then the parameter can be estimated at $\sqrt{n}$-rate (see [1]). In this section we consider an estimator that is appropriate if b is regular of order β < 1/4 and the design distribution G permits a Lebesgue density g that is bounded away from zero and sufficiently smooth.

Given initial estimators b̂n and ĝn for the regression function b and design density g, we consider the estimator

| (12) |

Here (x1, x2) ↦ Kk,g(x1, x2) is a projection kernel in the space L2(G). For definiteness we construct this in the form (14), where the basis e1, …, ek may be the Haar basis, or a general wavelet basis, as discussed in Section 4. Alternatively, we could use projections on the Fourier or spline basis, or convolution kernels, but the latter two require twicing (see (16)) to control bias, and the arguments given below must be adapted.

The initial estimators b̂n and ĝn may be fairly arbitrary rate-optimal estimators if constructed from an independent sample of observations (e.g. after splitting the original sample in parts used to construct the initial estimators and the estimator (12)). We assume this in the following theorem, and also assume that the norm of b̂n in $C^\beta[0, 1]$ is bounded in probability, or alternatively, if the projection is on the Haar basis, that this estimator is in the linear span of e1, …, ekn. This is typically not a loss of generality.

Let $\hat{E}$ and $\widehat{\mathrm{var}}$ denote expectation and variance given the additional observations. Set $\mu_q(x) = E(|\varepsilon_1|^q \mid X_1 = x)$ and let ‖·‖3 denote the L3-norm relative to Lebesgue measure.

Corollary 3.1

Let b̂n and ĝn be estimators based on independent observations that converge to b and g in probability relative to the uniform norm and satisfy $\lVert \hat b_n - b \rVert_3 = O_P(n^{-\beta/(2\beta+1)})$ and $\lVert \hat g_n - g \rVert_3 = O_P(n^{-\gamma/(2\gamma+1)})$. Let μq be finite and uniformly bounded for some q > 2. Then for $b \in C^\beta[0, 1]$ and strictly positive $g \in C^\gamma[0, 1]$, with γ ≥ β, and for kn satisfying (5),

Furthermore, the sequence tends in distribution to the standard normal distribution.

For $k_n = n^{1/(2\beta+1/2)}$ the estimator Tn of $\int b^2\, dG$ attains a rate of convergence of the order $n^{-2\beta/(2\beta+1/2)} + n^{-2\beta/(2\beta+1)-\gamma/(2\gamma+1)}$. If $\gamma > \beta/(4\beta^2 + \beta + 1/2)$, then this reduces to $n^{-4\beta/(1+4\beta)}$, which is known to be the minimax rate when g is known and b ranges over a ball in $C^\beta[0, 1]$, for β ≤ 1/4 (see [3] or [20]). For smaller values of γ the estimator can be improved by considering third or higher order U-statistics (see [9]).

3.3. Estimating the mean response with missing data

Suppose that a typical observation is distributed as X = (Y A, A, Z) for Y and A taking values in the two-point set {0, 1} and conditionally independent given Z, with conditional mean functions b(z) = P(Y = 1|Z = z) and $a(z)^{-1} = P(A = 1 \mid Z = z)$, and Z possessing density g relative to some dominating measure ν.

In [7] we introduced a quadratic estimator for the mean response EY = ∫ bg dν, which attains a better rate of convergence than the conventional linear estimators. For initial estimators ân, b̂n and ĝn, and Kk,α̂n,ĝn a projection kernel in L2(g/a), this takes the form

Apart from the (inessential) asymmetry of the kernel, the quadratic part has the form (1). Just as in the preceding section, the estimator can be shown to be asymptotically normal with the help of Theorem 2.1.

4. Kernels

In this section we discuss examples of kernels that satisfy the conditions of our main result. Detailed proofs are given in an appendix.

Most of the examples are kernels of projections K, which are characterised by the identity K f = f, for every f in their range space. For a projection given by a kernel, the latter is equivalent to f (x) = ∫ f (υ)K(x, υ) dG(υ) for (almost) every x, which suggests that the measure υ ↦ K(x, υ) dG(υ) acts on f as a Dirac kernel located at x. Intuitively, if the projection spaces increase to the full space, so that the identity is true for more and more f, then the kernels (x, υ) ↦ K(x, υ) must be increasingly dominated by their values near the diagonal, thus meeting the main condition of Theorem 2.1.

For a given orthonormal basis e1, e2, … of L2(G), the orthogonal projection onto lin (e1, …, ek) is the kernel operator Kk: L2(G) → L2(G) with kernel

| $K_k(x_1, x_2) = \sum_{i=1}^k e_i(x_1) e_i(x_2).$ | (13) |

It can be checked that it has operator norm 1, while the square L2-norm of the kernel is k.
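The two norms can be checked numerically on a grid. The sketch below is an illustration under assumptions not made in the text: G is the uniform distribution on [0, 1], the basis consists of the k normalised indicator functions of an equal-width partition (so that the projection kernel equals k on pairs in a common cell and 0 elsewhere), and integrals are replaced by averages over m grid points with m a multiple of k.

```python
import numpy as np

def histogram_projection_kernel(k, m):
    """Kernel matrix K(x_a, x_b) = k * 1{x_a, x_b in the same cell} on an m-point grid."""
    cell = (np.arange(m) * k) // m            # cell index of each grid point
    return k * (cell[:, None] == cell[None, :]).astype(float)

k, m = 8, 64
K = histogram_projection_kernel(k, m)
# The operator f -> int f(v) K(., v) dG(v) becomes the matrix K / m on the grid.
operator_norm = np.linalg.norm(K / m, 2)      # largest singular value
squared_l2_norm = np.sum(K**2) / m**2         # double integral of K^2 under G x G
print(operator_norm, squared_l2_norm)
```

The operator norm stays at 1 while the square L2-norm of the kernel grows linearly in k, which is the gap between the two norms exploited in Section 2.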

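A given orthonormal basis e1, e2, … relative to a given dominating measure can be turned into an orthonormal basis $e_1/\sqrt{g}, e_2/\sqrt{g}, \ldots$ of L2(G), for g a density of G. The kernel of the orthogonal projection in L2(G) onto lin $(e_1/\sqrt{g}, \ldots, e_k/\sqrt{g})$ is

| $K_{k,g}(x_1, x_2) = \sum_{i=1}^k \dfrac{e_i(x_1)}{\sqrt{g(x_1)}} \dfrac{e_i(x_2)}{\sqrt{g(x_2)}}.$ | (14) |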

If g is bounded away from zero and infinity, the conditions of Theorem 2.1 will hold for this kernel as soon as they hold for the kernel (13) relative to the dominating measure.

The orthogonal projection in L2(G) onto the linear span lin (f1, …, fk) of an arbitrary set of functions fi possesses the kernel

| $K_k(x_1, x_2) = \sum_{i=1}^k \sum_{j=1}^k A_{i,j}\, f_i(x_1) f_j(x_2),$ | (15) |

for A the inverse of the (k × k)-matrix with (i, j)-element 〈fi, fj〉G. In statistical applications this projection has the advantage that it projects onto a space that does not depend on the (unknown) measure G. For the verification of the conditions of Theorem 2.1 it is useful to note that the matrix A is well-behaved if f1, …, fk are orthonormal relative to a measure G0 that is not too different from G: from the identity $\alpha^T A^{-1} \alpha = \int (\sum_i \alpha_i f_i)^2\, dG$, one can verify that the eigenvalues of A are bounded away from zero and infinity if G and G0 are mutually absolutely continuous with a density that is bounded away from zero and infinity.
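The construction of a projection kernel from an arbitrary set of functions through the inverse Gram matrix can be sketched on a grid as follows. The functions 1, x, x² and the density g(x) = 1/2 + x on [0, 1] are hypothetical choices made only for this illustration, and integrals relative to G are approximated by weighted sums.

```python
import numpy as np

def gram_projection_kernel(F, w):
    """Kernel matrix of the L2(G)-orthogonal projection onto the span of the columns of F.

    F[a, i] = f_i(x_a) on a grid, w[a] = G-weight of grid point x_a.
    """
    gram = F.T @ (F * w[:, None])      # (i, j) entry approximates <f_i, f_j>_G
    A = np.linalg.inv(gram)
    return F @ A @ F.T                 # K(x_a, x_b) = sum_ij A_ij f_i(x_a) f_j(x_b)

m = 400
x = (np.arange(m) + 0.5) / m           # midpoints of an equal-width grid
w = (0.5 + x) / m                      # weights for the assumed density g(x) = 1/2 + x
w = w / w.sum()
F = np.column_stack([np.ones(m), x, x**2])
K = gram_projection_kernel(F, w)
P = K * w                              # matrix of f -> int f(v) K(., v) dG(v)
print(np.allclose(P @ F, F), np.allclose(P @ P, P))
```

The two checks confirm that the discretised operator reproduces every function in the span and is idempotent, as a projection should be.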

Orthogonal projections K have the important property of making the inner product $\langle (I - K)f, g \rangle_G$ quadratic in the approximation error. Nonorthogonal projections, such as the convolution kernels or spline kernels discussed below, lack this property, and may result in a large bias of an estimator. Twicing kernels, discussed in [21] as a means to control the bias of plug-in estimators, remedy this problem. The idea is to use the operator K + K* − K K*, where K* is the adjoint of K: L2(G) → L2(G), instead of the original operator K. Because I − K − K* + K K* = (I − K)(I − K*), it follows that

$$\langle (I - K - K^* + K K^*) f, g \rangle_G = \langle (I - K^*) f, (I - K^*) g \rangle_G.$$

If K is an orthogonal projection, then K = K* and the twicing kernel is K + K* − K K* = K, and nothing changes, but in general using a twicing kernel can cut a bias significantly.

If K is a kernel operator with kernel (x1, x2) ↦ K(x1, x2), then the adjoint operator is a kernel operator with kernel (x1, x2) ↦ K(x2, x1), and the twicing operator K + K* − K K* is a kernel operator with kernel (which depends on G)

| $K(x_1, x_2) + K(x_2, x_1) - \int K(x_1, z) K(x_2, z)\, dG(z).$ | (16) |
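The twicing construction can be illustrated with discretised kernels. In the sketch below the kernel of K + K* − K K* is formed as K(x1, x2) + K(x2, x1) − ∫ K(x1, z)K(x2, z) dG(z) on a grid; the uniform weights, the random non-symmetric kernel and the histogram projection kernel are assumptions made only for this example.

```python
import numpy as np

def twicing_kernel(K, w):
    """Kernel matrix of K + K* - K K* for kernel matrix K and G-weights w on a grid."""
    # (K * w) @ K.T has (a, b) entry sum_z K[a, z] w[z] K[b, z].
    return K + K.T - (K * w) @ K.T

m = 50
w = np.full(m, 1.0 / m)                         # uniform G on the grid
rng = np.random.default_rng(1)
K = rng.normal(size=(m, m))                     # arbitrary non-symmetric kernel
T = twicing_kernel(K, w)

I = np.eye(m)
lhs = I - T * w                                 # I - (K + K* - K K*) as an operator
rhs = (I - K * w) @ (I - K.T * w)               # (I - K)(I - K*)

cell = (np.arange(m) * 5) // m                  # 5 equal cells
H = 5.0 * (cell[:, None] == cell[None, :])      # kernel of an orthogonal projection
print(np.allclose(lhs, rhs), np.allclose(twicing_kernel(H, w), H))
```

The first check reproduces the factorisation I − K − K* + K K* = (I − K)(I − K*); the second shows that twicing leaves the kernel of an orthogonal projection unchanged.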

4.1. Wavelets

Consider expansions of functions f ∈ L2(ℝd) on an orthonormal basis of compactly supported, bounded wavelets of the form

| (17) |

where the base functions are orthogonal for different indices (i, j, υ) and are scaled and translated versions of the $2^d$ base functions:

Such a higher-dimensional wavelet basis can be obtained as tensor products of a given father wavelet ϕ0 and mother wavelet ϕ1 in one dimension. See for instance Chapter 8 of [22].

We shall be interested in functions f with support 𝒳 = [0, 1]d. In view of the compact support of the wavelets, for each resolution level i and vector υ only of the order $2^{id}$ base elements are nonzero on 𝒳; denote the corresponding set of indices j by Ji. Truncating the expansion at the level of resolution i = I then gives an orthogonal projection on a subspace of dimension k of the order $2^{Id}$. The corresponding kernel is

| (18) |

Proposition 4.1

For the wavelet kernel (18) with $k = k_n = 2^{Id}$ satisfying kn/n → ∞ and kn/n² → 0 conditions (2), (6), (7), (8), (9) and (10) are satisfied for any measure G on [0, 1]d with a Lebesgue density that is bounded and bounded away from zero and regression functions μ2 and μq (for some q > 2) that are bounded and bounded away from zero.

4.2. Fourier basis

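Any function f ∈ L2[−π, π] can be represented through the Fourier series f = ∑j∈ℤ fjej, for the functions $e_j(x) = e^{ijx}/\sqrt{2\pi}$ and the Fourier coefficients $f_j = \int_{-\pi}^{\pi} f\, \bar{e}_j\, d\lambda$. The truncated series fk = ∑|j|≤k fjej gives the orthogonal projection of f onto the linear span of the functions {ej : |j| ≤ k}, and can be written as Kk f for Kk the kernel operator with kernel (known as the Dirichlet kernel)

| $K_k(x_1, x_2) = \sum_{\lvert j \rvert \le k} e_j(x_1) \overline{e_j(x_2)} = \dfrac{\sin\bigl((k + \tfrac12)(x_1 - x_2)\bigr)}{2\pi \sin\bigl(\tfrac12 (x_1 - x_2)\bigr)}.$ | (19) |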
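The Dirichlet kernel can be evaluated either as a finite sum of complex exponentials or through the standard closed form sin((k + 1/2)d)/(2π sin(d/2)) with d = x1 − x2. The sketch below compares the two, assuming the normalisation ej(x) = exp(ijx)/√(2π); the function names are hypothetical.

```python
import numpy as np

def dirichlet_sum(x1, x2, k):
    """Sum over |j| <= k of e_j(x1) * conj(e_j(x2)) with e_j(x) = exp(i j x) / sqrt(2 pi)."""
    j = np.arange(-k, k + 1)
    return np.sum(np.exp(1j * j * (x1 - x2))).real / (2 * np.pi)

def dirichlet_closed_form(x1, x2, k):
    """Closed form of the same kernel; not defined at x1 == x2 (limit (2k + 1) / (2 pi))."""
    d = x1 - x2
    return np.sin((k + 0.5) * d) / (2 * np.pi * np.sin(d / 2))

print(dirichlet_sum(0.3, -1.1, 5), dirichlet_closed_form(0.3, -1.1, 5))
```

On the diagonal the sum equals (2k + 1)/(2π), which shows how the kernel grows with k near x1 = x2.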

Proposition 4.2

For the Fourier kernel (19) with k = kn satisfying n ≪ kn ≪ n² conditions (2), (6)–(10) are satisfied for any measure G on ℝ with a bounded Lebesgue density and regression functions μ2 and μq (for some q > 2) that are bounded and bounded away from zero.

4.3. Convolution

For a uniformly bounded function ϕ: ℝ → ℝ with ∫ |ϕ| dλ < ∞, and a positive number σ, set

| $K_\sigma(x_1, x_2) = \dfrac{1}{\sigma}\, \phi\Bigl(\dfrac{x_1 - x_2}{\sigma}\Bigr).$ | (20) |

For σ ↓ 0 these kernels tend to the diagonal, with square norm of the order $\sigma^{-1}$.

Proposition 4.3

For the convolution kernel (20) with σ = σn satisfying $n^{-2} \ll \sigma_n \ll n^{-1}$ conditions (2), (6)–(10) are satisfied for any measure G on [0, 1] with a Lebesgue density that is bounded and bounded away from zero and regression functions μ2 and μq (for some q > 2) that are bounded and bounded away from zero.

4.4. Splines

The Schoenberg space Sr(T, d) of order r for a given knot sequence T: t0 = 0 < t1 < t2 < ⋯ < tl < 1 = tl+1 and vector of defects $d = (d_1, \ldots, d_l) \in \{0, \ldots, r - 1\}^l$ is the set of functions f: [0, 1] → ℝ whose restriction to each subinterval (ti, ti+1) is a polynomial of degree r − 1 and which are r − 1 − di times continuously differentiable in a neighbourhood of each ti. (Here “0 times continuously differentiable” means “continuous” and “−1 times continuously differentiable” means no restriction.) The Schoenberg space is a vector space of dimension k = r + ∑i di. Each “augmented knot sequence”

| (21) |

defines a basis N1, …, Nk of B-splines. These are nonnegative splines with ∑j Nj = 1 such that Nj vanishes outside the interval (). Here the “basic knots” () are defined as the knot sequence (tj), but with each ti ∈ (0, 1) repeated di times (see [23], pages 137, 140 and 145). We assume that |ti−1 − ti| ≤ |t−1 − t0| if i < 0 and |ti+1 − ti| ≤ |tl+1 − tl| if i > l.

The quasi-interpolant operator is a projection Kk: L1[0, 1] → Sr(T, d) with the properties

for every 1 ≤ p ≤ ∞ and a constant Cr depending on r only (see [23], pages 144–147). It follows that the projection Kk inherits the good approximation properties of spline functions, relative to any Lp-norm. In particular, it gives good approximation to smooth functions.

The quasi-interpolant operator Kk is a projection onto Sr(T, d) (i.e. $K_k^2 = K_k$ and Kk f = f for f ∈ Sr(T, d)), but not an orthogonal projection. Because the B-splines form a basis for Sr(T, d), the operator can be written in the form Kk f = ∑j cj(f)Nj for certain linear functionals cj : L1[0, 1] → ℝ. It can be shown that, for any 1 ≤ p ≤ ∞,

| (22) |

([23], page 145.) In particular, the functionals cj belong to the dual space of L1[0, 1] and can be written as cj(f) = ∫ fcj dλ for (with abuse of notation) certain functions cj ∈ L∞[0, 1]. This yields the representation of Kk as a kernel operator with kernel

| (23) |

Proposition 4.4

Consider a sequence (indexed by l) of augmented knot sequences (21) with for every 0 ≤ i ≤ l and splines with fixed defects di = d. For the corresponding (symmetrized) spline kernel (23) with l = ln conditions (2), (6), (7), (8), (9) and (10) are satisfied if ln/n → ∞ and ln/n2 → 0 for any measure G on [0, 1] with a Lebesgue density that is bounded and bounded away from zero and regression functions μ2 and μq (for some q > 2) that are bounded and bounded away from zero.

5. Proof of Theorem 2.1

For Mn the cardinality of the partition 𝒳 = ∪m𝒳n,m, let Nn,1, …, Nn,Mn be the numbers of Xr falling in the partitioning sets, i.e.

$$N_{n,m} = \#\{1 \le r \le n : X_r \in \mathcal{X}_{n,m}\}.$$

The vector Nn = (Nn,1, …, Nn,Mn) is multinomially distributed with parameters n and vector of success probabilities pn = (pn, 1, …, pn,Mn) given by

$$p_{n,m} = G(\mathcal{X}_{n,m}).$$

Given the vector In = (In, 1, …, In,n) the vectors (X1, Y1), …, (Xn, Yn) are independent with distributions determined by

| (24) |

| (25) |

We define U-statistics Vn by restricting the kernel Kn to the set ∪m𝒳n,m × 𝒳n,m, as follows:

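| $V_n = \dfrac{1}{n(n-1)} \mathop{\sum\sum}_{1 \le r \ne s \le n} K_n(X_r, X_s)\, Y_r Y_s \sum_{m=1}^{M_n} 1\{(X_r, X_s) \in \mathcal{X}_{n,m} \times \mathcal{X}_{n,m}\}.$ | (26) |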
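Restricting the kernel to the set ∪m 𝒳n,m × 𝒳n,m amounts to keeping only the pairs (r, s) whose observations fall in a common partitioning set. A minimal sketch, assuming the normalisation 1/(n(n − 1)) and taking as input a precomputed kernel matrix together with the bin index of each Xr (both hypothetical inputs of this example):

```python
import numpy as np

def restricted_u_statistic(kernel_matrix, y, bins):
    """U-statistic with the kernel set to zero for pairs in different partitioning sets."""
    K = np.asarray(kernel_matrix, dtype=float)
    y = np.asarray(y, dtype=float)
    bins = np.asarray(bins)
    n = y.shape[0]
    same_cell = bins[:, None] == bins[None, :]   # True if X_r and X_s share a set
    np.fill_diagonal(same_cell, False)           # exclude the terms with r == s
    return np.sum(K * same_cell * np.outer(y, y)) / (n * (n - 1))

K = np.ones((4, 4))
y = [1.0, 2.0, 3.0, 4.0]
print(restricted_u_statistic(K, y, bins=[0, 0, 1, 1]))
```

With all observations in a single bin the function returns the unrestricted statistic, so the difference Un − Vn collects exactly the off-block pairs.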

The proof of Theorem 2.1 consists of three elements. We show that the difference between Un and Vn is asymptotically negligible due to the fact that the kernels shrink to the diagonal, we show that the statistics Vn are conditionally asymptotically normal given the vector of bin indicators In, and we show that the conditional and unconditional means and variances of Vn are asymptotically equivalent. These three elements are expressed in the following four lemmas, which should be understood all implicitly to assume the conditions of Theorem 2.1.

Lemma 5.1

var(Un − Vn)/ var Un → 0.

Lemma 5.2

.

Lemma 5.3

.

Lemma 5.4

.

5.1. Proof of Theorem 2.1

By Lemmas 5.1 and 5.3 the sequence ((Un − EUn) − (Vn − E(Vn| In)))/ sd Vn tends to zero in probability. Because conditional and unconditional convergence in probability to a constant is the same, we see that it suffices to show that (Vn − E(Vn| In))/ sd Vn converges conditionally given In to the normal distribution, in probability. This follows from Lemmas 5.4 and 5.2.

The variance of Un is computed in (29) in Section 5.2. By the Cauchy-Schwarz inequality (cf. (2)),

Because μ2 is bounded by assumption and the norms ‖Kn‖ are bounded in n by assumption (2), the right sides are bounded in n. In view of (5) it follows that the first two terms in the final expression for the variance are of lower order than the third, whence

| (27) |

5.2. Moments of U-statistics

To compute or estimate moments of Un we employ the Hoeffding decomposition (e.g. [24], Sections 11.4 and 12.1) of Un given by

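| $U_n - EU_n = U_n^{(1)} + U_n^{(2)}, \quad U_n^{(1)} = \dfrac{2}{n} \sum_{r=1}^n \bigl[K_n\mu(X_r)\, Y_r - EU_n\bigr], \quad U_n^{(2)} = \dfrac{1}{n(n-1)} \mathop{\sum\sum}_{1 \le r \ne s \le n} \bigl[K_n(X_r, X_s)\, Y_r Y_s - K_n\mu(X_r)\, Y_r - K_n\mu(X_s)\, Y_s + EU_n\bigr].$ | (28) |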

The linear and quadratic parts $U_n^{(1)}$ and $U_n^{(2)}$ are uncorrelated, and so are all the variables in the single and double sums defining $U_n^{(1)}$ and $U_n^{(2)}$. It follows that

| (29) |

See equation (4) for the definition of kn.

There is no similarly simple expression for higher moments of a U-statistic, but the following useful bound is (essentially) established in [25].

Lemma 5.5

(Giné, Latala, Zinn). For any q ≥ 2 there exists a constant Cq such that for any i.i.d. random variables X1, …, Xn and degenerate symmetric kernel K,

Proof

The second inequality is immediate from the fact that the L2-norm is bounded above by the Lq-norm, and 3q/2 − 1 ≥ q, for q ≥ 2. For the first inequality we use (3.3) in [25] (and decoupling as explained in Section 2.5 of that paper) to see that the left side of the lemma is bounded above by a multiple of

Because Lq-norms are increasing in q, the second term on the right is bounded above by $n^{-3q/2+1} E|K(X_1, X_2)|^q$, which is also a bound on the third term, as $n^{2-2q} \le n^{-3q/2+1}$ for q ≥ 2.

We can apply the preceding inequality to the degenerate part of the Hoeffding decomposition (28) of Un and combine it with the Marcinkiewicz-Zygmund inequality to obtain a bound on the moments of Un.

Corollary 5.1

For any q ≥ 2 there exists a constant Cq such that for the U-statistic given by (1) and (28),

Proof

The first inequality follows from the Marcinkiewicz-Zygmund inequality and the fact that $E|Z - EZ|^q \le 2^q E|Z|^q$, for any random variable Z. To obtain the second we apply Lemma 5.5 to the quadratic part $U_n^{(2)}$, which is a degenerate U-statistic with kernel Kn (X1, X2)Y1Y2 − Πn (X1, X2, Y1, Y2), for Πn the sum of the conditional expectations of Kn (X1, X2)Y1Y2 relative to (X1, Y1) and (X2, Y2) minus EUn. Because (conditional) expectation is a contraction for the Lq-norm ($E|E(Z \mid \mathcal{A})|^q \le E|Z|^q$ for any random variable Z and conditioning σ-field 𝒜), we can bound the L2- and Lq-norms of the degenerate kernel, appearing in the bound obtained from Lemma 5.5, by a constant (depending on q) times the L2- or Lq-norm of the kernel Kn (X1, X2)Y1Y2.

5.3. Proof of Lemma 5.1

The statistic Un − Vn is a U-statistic of the same type as Un, except that the kernel Kn is replaced by Kn (1 − 1𝒳n) for 𝒳n = ⋃m (𝒳n,m × 𝒳n,m). The variance of Un − Vn is given by formula (29), but with Kn replaced by the kernel operator with kernel Kn,n = Kn (1 − 1𝒳n). The corresponding kernel operator is Kn,n f = Kn f − ∑m Kn (f1𝒳n,m)1𝒳n,m, and hence

It follows that the operator norms ‖Kn,n‖2 of the operators Kn,n are uniformly bounded in n (cf. equation (3) for the operators Kn). Applying decomposition (29) to the kernel Kn,n we see that var(Un − Vn) = O(n⁻¹) + 2kn,n/n², where kn,n is the square L2(G × G)-norm of the kernel Kn,n weighted by μ2 × μ2, as in (4) but with Kn replaced by Kn,n. By assumption (6) the norm kn,n is negligible relative to the same norm (denoted kn) of the original kernel. Because the variance of Un is asymptotically equivalent to 2kn/n² and kn/n → ∞, this proves the claim.

5.4. Proof of Lemma 5.2

The variable Vn can be written as the sum Vn = ∑m Vn,m, for

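| $V_{n,m} = \dfrac{1}{n(n-1)} \mathop{\sum\sum}_{1 \le r \ne s \le n} K_n(X_r, X_s)\, Y_r Y_s\, 1\{(X_r, X_s) \in \mathcal{X}_{n,m} \times \mathcal{X}_{n,m}\}.$ | (30) |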

Given the vector of bin-indicators In the observations (Xr, Yr) are independently generated from the conditional distributions in which Xr is conditioned to fall in bin 𝒳n,In,r, as given in (24)–(25). Because each variable Vn,m depends only on the observations (Xr, Yr) for which Xr falls in bin 𝒳n,m, the variables Vn,1, …, Vn,Mn are conditionally independent. The conditional asymptotic normality of Vn given In can therefore be established by a central limit theorem for independent variables.

The variable Vn,m is equal to Nn,m (Nn,m − 1)/ (n(n − 1)) times a U-statistic of the type (1), based on Nn,m observations (Xr, Yr) from the conditional distribution where Xr is conditioned to fall in 𝒳n,m. The corresponding kernel operator is given by

| (31) |

We can decompose each Vn,m into its Hoeffding decomposition relative to the conditional distribution given In. We shall show that

| (32) |

To prove Lemma 5.2 it then suffices to show that the sequence converges conditionally given In weakly to the standard normal distribution, in probability. By Lyapounov’s theorem, this follows from, for some q > 2,

| (33) |

By Lemma 5.4 the conditional standard deviation sd(Vn| In) is asymptotically equivalent in probability to the unconditional standard deviation, and by Lemma 5.1 this is equivalent to sd Un, which is equivalent to $\sqrt{2k_n}/n$. Thus in both (32) and (33) the conditional standard deviation in the denominator may be replaced by $\sqrt{2k_n}/n$.

In view of the first assertion of Corollary 5.1,

By Lemma 5.6 (below, note that $(np_{n,m})^2 \lesssim (np_{n,m})^3$ in view of (9)) the expectation of the right side is bounded above by a constant times

In view of (2) the sum over m of this expression is bounded above by a multiple of 1/n, which is o(kn/n2) by assumption (5). Because , this concludes the proof of (32).

In view of the second assertion of Corollary 5.1,

By Lemma 5.6 the expectation of the right side is bounded above by a constant times

With $\alpha_{n,m}(q) = \int\int |K_n|^q\, (\mu_q \times \mu_q)\, 1_{\mathcal{X}_{n,m} \times \mathcal{X}_{n,m}}\, d(G \times G)$ it follows that

The right side tends to zero by assumptions (6), (7) and (10). This concludes the proof of (33).

5.5. Proof of Lemma 5.3

Only pairs (Xr, Xs) that fall in one of the sets 𝒳n,m × 𝒳n,m contribute to the double sum (26) that defines Vn. Given In there are Nn,m (Nn,m − 1) pairs that fall in 𝒳n,m and the distribution of the corresponding vectors (Xr, Yr), (Xs, Ys) is determined as in (24)–(25). From this it follows that

Defining the numbers αn,m = ∫∫ Kn μ × μ 1𝒳n,m × 𝒳n,m dG × G, we infer that

By the Cauchy-Schwarz inequality, the numbers αn,m satisfy

In particular ∑m |αn,m| ≲ 1. In view of (2) the numbers given in (34) (below) are of the order Mn/n2 + 1/n. Lemma 5.7 (below) therefore implies that the right side of the second last display is of the order n−1/2, because (9) implies that Mn ≲ n. By assumption (5) this is smaller than √(kn)/n, which is of the same order as sd Vn.

5.6. Proof of Lemma 5.4

By (29) applied to the variables Vn,m defined in (30),

where the operator Kn,m is given in (31), the distribution Gn,m is defined in (24), and

We can split this into three terms. By Lemma 5.6 the expected value of the first term is bounded by a multiple of

Similarly the expected value of the absolute value of the second term is bounded by a multiple of

These two terms divided by kn/n2 tend to zero, by (5).

By Lemma 5.1 and (27) we have that var Vn ~ 2kn/(n(n − 1)), which in turn is asymptotically equivalent to 2 ∑m αn,m (2)/(n(n − 1)), by (6). It follows that

Here the coefficients αn,m (2)/kn satisfy the conditions imposed on αn,m in Corollary 5.2, in view of (6) and (7). Therefore this corollary shows that the expression on the right is oP (kn/n2).

5.7. Auxiliary lemmas on multinomial variables

Lemma 5.6

Let N be binomially distributed with parameters (n, p). For any r ≥ 2 there exists a constant $C_r$ such that $\mathrm{E}\,N^r 1_{N \ge 2} \le C_r\bigl((np)^r \vee (np)^2\bigr)$.

Proof

For r = ṟ + δ with ṟ an integer and 0 ≤ δ < 1 there exists a constant Cr with Nr1N≥2 ≤ CrNδ N (N − 1) ⋯ (N − ṟ + 1) + CrNδ N (N − 1) for every N. Hence

for N1 and N2 binomially distributed with parameters n − ṟ and p and n − 2 and p, respectively. By Jensen’s inequality , which is bounded above by (np)δ, yielding the upper bound Cr ((np)r + (np)2+δ). If np ≤ 1, then this is bounded above by 2Cr (np)2 and otherwise by 2Cr (np)r.
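As a numerical sanity check of Lemma 5.6 (not part of the proof), the truncated moment can be computed exactly from the binomial probabilities and compared with (np)^r ∨ (np)^2. The following is a minimal sketch using only the Python standard library; the function names and the grid of (n, p) values are illustrative choices that do not appear in the paper. For r = 3 an elementary calculation with the moments of the binomial distribution suggests that the ratio stays below 5, which is the constant used for illustration here.

```python
from math import comb


def truncated_moment(n, p, r):
    """Exact value of E[N^r 1{N >= 2}] for N ~ Binomial(n, p)."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k) * k**r
        for k in range(2, n + 1)
    )


def bound_ratio(n, p, r):
    """Ratio of the truncated moment to max((np)^r, (np)^2)."""
    lam = n * p
    return truncated_moment(n, p, r) / max(lam**r, lam**2)


if __name__ == "__main__":
    # Illustrative grid: covers both np <= 1 and np > 1.
    for n in (10, 50, 200):
        for p in (0.001, 0.01, 0.1, 0.5):
            print(n, p, round(bound_ratio(n, p, 3), 3))
```

The ratio remains bounded over the whole grid, in agreement with the two regimes np ≤ 1 and np > 1 that are distinguished at the end of the proof.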

The next result is a law of large numbers for a quadratic form in multinomial vectors of increasing dimension. The proof is based on a comparison of multinomial variables to Poisson variables along the lines of the proof of a central limit theorem in [12].

Lemma 5.7

For each n let Nn be multinomially distributed with parameters (n, pn,1, …, pn,Mn) with maxm pn,m → 0 as n → ∞ and lim infn→∞ n minm pn,m > 0. For given numbers αn,m let

| (34) |

Then

Proof

Because ∑m αn,m ((n − 1)/n − 1) = ∑m αn,m (−1/n), it suffices to prove the statement of the lemma with n(n − 1) replaced by n2. Using the fact that ∑m Nn,m = n we can rewrite the resulting quadratic form as, with λn,m = npn,m,

for C1 and C2 the Poisson-Charlier polynomials of degrees 1 and 2, given by

Together with x ↦ C0(x) = 1 the functions x ↦ C1(x, λ) and x ↦ C2(x, λ) are the polynomials 1, x, x2 orthonormalized for the Poisson distribution with mean λ by the Gram-Schmidt procedure. For X = (X1, …, XMn) let

Thus up to a factor the statistic Tn (Nn) is the quadratic form of interest.
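The orthonormality of the Poisson-Charlier polynomials can be checked numerically. The sketch below assumes the standard closed forms C1(x, λ) = (x − λ)/√λ and C2(x, λ) = (x² − (2λ + 1)x + λ²)/(√2 λ), which are what Gram-Schmidt applied to 1, x, x² gives up to sign; these formulas are stated here as an assumption, since the display defining C1 and C2 is not reproduced above. The function names and the truncation point of the Poisson series are illustrative.

```python
from math import exp, sqrt


def poisson_pmf(lam, kmax):
    """Poisson(lam) probabilities for 0, ..., kmax, computed recursively."""
    probs = [exp(-lam)]
    for k in range(1, kmax + 1):
        probs.append(probs[-1] * lam / k)
    return probs


def c0(x, lam):
    return 1.0


def c1(x, lam):
    # Assumed form: centred and scaled by the Poisson standard deviation.
    return (x - lam) / sqrt(lam)


def c2(x, lam):
    # Assumed form: x(x - 1) - 2*lam*x + lam^2, scaled to unit variance.
    return (x * x - (2 * lam + 1) * x + lam * lam) / (sqrt(2.0) * lam)


def gram_matrix(lam, kmax=200):
    """Matrix of E[C_i(X) C_j(X)] for X ~ Poisson(lam), series truncated at kmax."""
    probs = poisson_pmf(lam, kmax)
    polys = (c0, c1, c2)
    return [
        [sum(p * f(k, lam) * g(k, lam) for k, p in enumerate(probs)) for g in polys]
        for f in polys
    ]


if __name__ == "__main__":
    for row in gram_matrix(3.0):
        print([round(v, 10) for v in row])
```

Under these assumed forms the Gram matrix is numerically the identity, which is the property used to obtain mean zero for Tn under independent Poisson cell counts.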

If the variables Nn,1, …, Nn,Mn were independent Poisson variables with mean values λn,m, then the mean of Tn (Nn) would be zero and the variance would be given by , and hence in that case Tn (Nn) = OP (sn). We shall now show that the difference between multinomial and Poisson variables is of the order .

To make the link between multinomial and Poisson variables, let ñ be a Poisson variable with mean n and given ñ = k let Ñn = (Ñn,1, …, Ñn,Mn) be multinomially distributed with parameters k and pn = (pn,1, …, pn,Mn). The original multinomial vector Nn is then equal in distribution to Ñn given ñ = n. Furthermore, the vector Ñn is unconditionally Poisson distributed as in the preceding paragraph, whence, for any Mn → ∞,

The left side is bigger than

where the vector Nn (k) is multinomial with parameters k and pn. Because the sequence (ñ − n)/√n tends to a standard normal distribution as n → ∞, the probability P(|ñ − n| ≤ √n) tends to the positive constant Φ(1) − Φ(−1). We conclude that the sequence of minima on the right tends to zero. The probability of interest is the term with k = n in the minimum. Therefore the proof is complete once we show that the minimum and maximum of the terms are comparable.
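The Poissonization step rests on the classical fact that a multinomial vector with a Poisson number of trials has independent Poisson coordinates. A minimal sketch of an exact check of this identity for a single configuration of cell counts follows; the function names, the number of cells and the particular probabilities are illustrative assumptions.

```python
from math import exp, factorial


def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return exp(-lam) * lam**k / factorial(k)


def multinomial_pmf(counts, probs):
    """P(N = counts) for N ~ Multinomial(sum(counts), probs)."""
    coef = factorial(sum(counts))
    value = 1.0
    for c, p in zip(counts, probs):
        coef //= factorial(c)  # exact: partial quotients are integers
        value *= p**c
    return coef * value


def mixed_pmf(counts, n, probs):
    """P(N~ = counts) when n~ ~ Poisson(n) and N~ | n~ = k ~ Multinomial(k, probs)."""
    return poisson_pmf(sum(counts), n) * multinomial_pmf(counts, probs)


def product_poisson_pmf(counts, n, probs):
    """P(N~ = counts) if the cells were independent Poisson(n * p_m)."""
    value = 1.0
    for c, p in zip(counts, probs):
        value *= poisson_pmf(c, n * p)
    return value


if __name__ == "__main__":
    counts, n, probs = (2, 0, 5), 7, (0.2, 0.3, 0.5)
    print(mixed_pmf(counts, n, probs), product_poisson_pmf(counts, n, probs))
```

The two probabilities agree up to rounding, so that conditioning the independent Poisson cells on their total being n recovers the original multinomial vector.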

To compare the terms with different k we couple the multinomial vectors Nn (k) on a single probability space. For given k < k′ we construct these vectors such that for a multinomial vector with parameters k′ − k and pn independent of Nn (k). For any numbers N and N′ we have that . Therefore,

For and the binomial variable Nn,m (k′ − k) has first and second moment bounded by a multiple of and . From this the right side of the display can be seen to be of the order ∑m |αn,m |O(n−1/2)≔ ρn. Similarly, we have and

can be seen to be of the order , which is also of the order ρn.

We infer from this that E|Tn (Nn (k)) − Tn (Nn (n))| = O(ρn), uniformly in , and therefore

uniformly in , for every Mn → ∞, by Markov’s inequality. In the preceding paragraph it was seen that the minimum of the right side over k with tends to zero for any Mn → ∞. Hence so does the left side.

Under the additional condition that

it follows from Corollary 4.1 in [12] that the sequence times the quadratic form in the preceding lemma tends in distribution to the standard normal distribution. Thus in this case the order claimed by the lemma is sharp as soon as n−1/2 ∑m |αn,m| is not bigger than sn.

Corollary 5.2

For each n let Nn be multinomially distributed with parameters (n, pn,1, …, pn,Mn) with lim infn→∞ n minm pn,m > 0. If αn,m are numbers with ∑m |αn,m| = O(1) and maxm |αn,m| → 0 as n → ∞, then

Proof

Since npn,m ≳ 1 by assumption the numbers sn defined in (34) satisfy

The corollary is a consequence of Lemma 5.7.

6. Proofs for Section 3

Proof of Corollary 3.1

We consider the distribution of Tn conditionally given the observations used to construct the initial estimators b̂n and ĝn. By passing to subsequences of n, we may assume that these sequences converge almost surely to b and g relative to the uniform norm. In the proof of distributional convergence the initial estimators b̂n and ĝn may therefore be understood to be deterministic sequences that converge to limits b and g.

The estimator (12) is a sum of a linear and quadratic part. The (conditional) variance of the linear term is of the order 1/n, which is of smaller order than kn/n2. It follows that tends to zero in probability.

To study the quadratic part we apply Theorem 2.1 with the kernel Kn of the theorem taken equal to the present Kkn,ĝn and the Yr of the theorem taken equal to the present Yr − b̂n(Xr). For given functions b1 and g1, set

The function μq(b̂n) converges uniformly to the function μq(b), which is uniformly bounded by assumption, for q = 1, q = 2 and some q > 2. Furthermore , where the function g × g/ĝn × ĝn converges uniformly to one. Therefore, the conditions of Theorem 2.1 (for the case that the observations are non-i.i.d.; cf. the remark following the theorem) are satisfied by Theorem 4.1 or 4.2. Hence the sequence tends to a standard normal distribution, for k̂n = kn(b̂n, ĝn). From the conditions on the initial estimators it follows that k̂n/kn(b, g) → 1. Here kn(b, g) is of the order the dimension kn of the kernel.

Let Tn(b1, g1) be as Tn, but with the initial estimators b̂n and ĝn replaced by b1 and g1. Its expectation is given by

In particular e(b, g) = ∫ b2 dG. Using the fact that Kkn,g is an orthogonal projection in L2(G) we can write

| (35) |

By the definition of Kkn,g the absolute value of the first term on the right can be bounded as

By assumption b is β-Hölder and g is γ-Hölder for some γ ≥ β and bounded away from zero. Then is β-Hölder and hence its uniform distance to lin (e1, …, ek) is of the order (1/k)β. If the norm of b̂n in Cβ[0, 1] is bounded, then we can apply the same argument to the functions , uniformly in n, and conclude that the expression in the display with b̂n instead of b1 is bounded above by OP (1/kn)2β. If the projection is on the Haar basis and b̂n is contained in lin (e1, …, ekn), then the approximation error can be seen to be of the same order, from the fact that the product of two projections on the Haar basis is itself a projection on this basis.

For we can write

If multiplied by a symmetric function in (x1, x2) and integrated with respect to G × G, the arguments x1 and x2 in the second term can be exchanged. The second term on the right in (35) can therefore be written

Here ‖ · ‖G,3 is the L3(G)-norm, we use the fact that L2-projection on a wavelet basis decreases Lp-norms for p = 3/2 up to constants, and the multiplicative constants depend on uniform upper and lower bounds on the functions g1 and g. We evaluate this expression for b1 = b̂n and g1 = ĝn, and see that it is of the order .

Finally we note that Êb,gTn = e(b̂n, ĝn) and combine the preceding bounds.

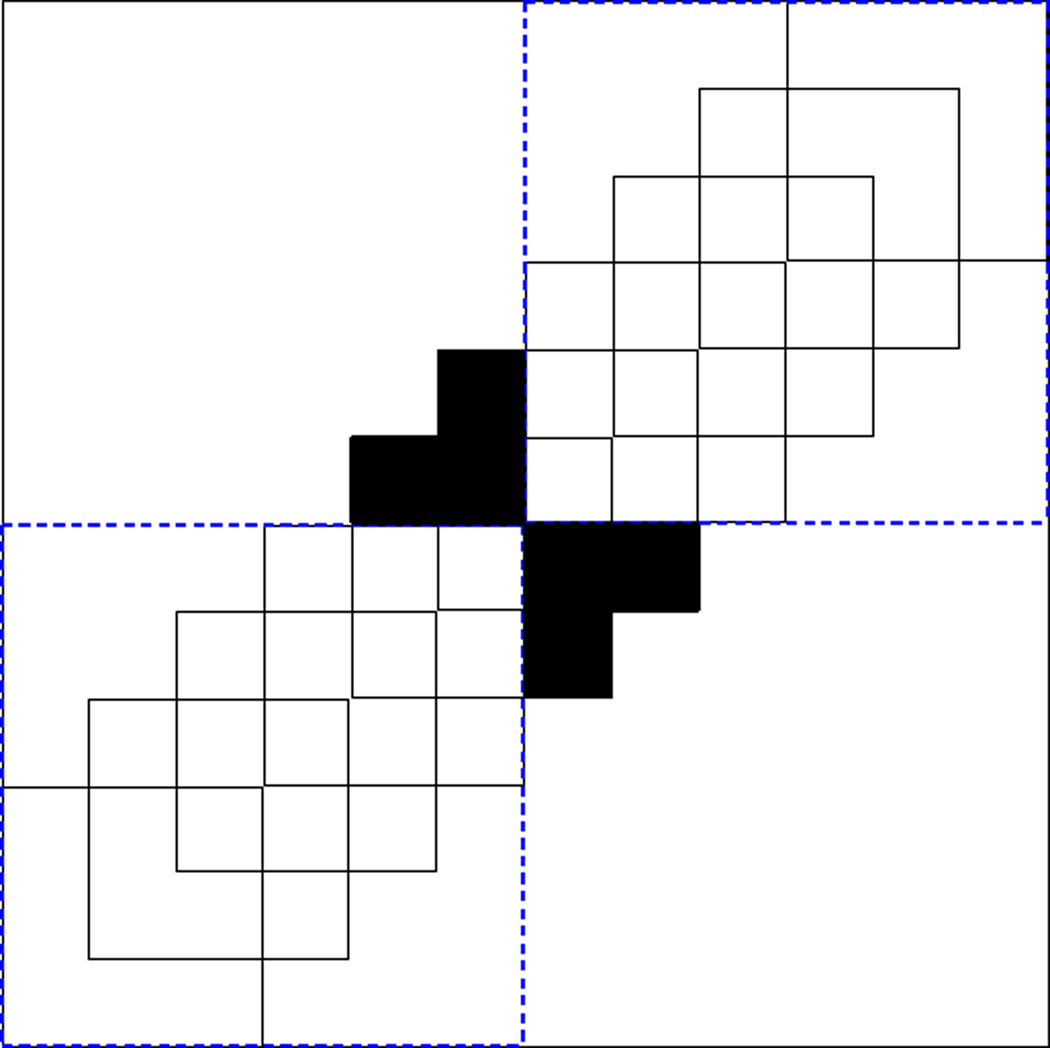

Figure 2. The support cubes of the wavelets and the bigger cubes 𝒳n,m × 𝒳n,m.

Acknowledgments

The research leading to these results has received funding from the European Research Council under ERC Grant Agreement 320637.

7. Appendix: proofs for Section 4

Lemma 7.1

The kernel of an orthogonal projection on a k-dimensional space has operator norm ‖Kk‖2 = 1, and squared L2(G × G)-norm ∫∫ Kk² d(G × G) = k.

Proof

The operator norm is one, because an orthogonal projection decreases norm and acts as the identity on its range. It can be verified that the kernel of a kernel operator is uniquely defined by the operator. Hence the kernel of a projection on a k-dimensional space can be written in the form (13), from which the L2-norm can be computed.
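The two claims of Lemma 7.1 can be illustrated numerically. The sketch below assumes that G is Lebesgue measure on [0, 1], that the projection is onto the span of the first k functions of the cosine basis, and that the kernel has the form Kk(x1, x2) = ∑i ei(x1)ei(x2); all three are illustrative assumptions, as are the function names. The midpoint rule is used because it integrates products of these cosines exactly on the chosen grid.

```python
from math import cos, pi, sqrt


def basis(j, x):
    """Cosine basis on [0, 1], orthonormal for Lebesgue measure."""
    return 1.0 if j == 0 else sqrt(2.0) * cos(j * pi * x)


def kernel(k, x1, x2):
    """Projection kernel onto the span of the first k basis functions."""
    return sum(basis(j, x1) * basis(j, x2) for j in range(k))


def midpoints(n):
    return [(i + 0.5) / n for i in range(n)]


def squared_l2_norm(k, n=64):
    """Midpoint-rule value of the double integral of the squared kernel."""
    grid = midpoints(n)
    return sum(kernel(k, x1, x2) ** 2 for x1 in grid for x2 in grid) / n**2


def apply_kernel(k, f, x1, n=64):
    """Midpoint-rule value of the integral of K_k(x1, x2) f(x2) over x2."""
    return sum(kernel(k, x1, x2) * f(x2) for x2 in midpoints(n)) / n


if __name__ == "__main__":
    print(squared_l2_norm(5))                                    # close to 5
    print(apply_kernel(5, lambda x: basis(3, x), 0.3), basis(3, 0.3))
```

The squared norm equals the dimension, and the operator reproduces a function in its range while annihilating basis functions outside it, which is the sense in which the operator norm is one.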

Proof of Proposition 4.1

We can reexpress the wavelet expansion (17) to start from level I as

The projection kernel Kk sets the coefficients in the second sum equal to zero, and hence can also be expressed as

The double integral of the square of this function over ℝ2d is equal to the number of terms in the double sum (cf. (13) and the remarks following it), which is O(2Id). The support of only a small fraction of functions in the double sum intersects the boundary of 𝒳. Because also the density of G and the function μ2 are bounded above and below, it follows that the weighted double integral kn of relative to G as in (4) is also of the exact order O(2Id).

Each function has uniform norm bounded above by 2Id times the uniform norm of the base wavelet of which it is a shift and dilation. A given point (x1, x2) belongs to the support of fewer than of these functions, for a constant C1 that depends on the shape of the support of the wavelets. Therefore, the uniform norm of the kernel Kk is of the order kn.

By assumption each function is supported within a set of the form 2−I (C + j) for a given cube C that depends on the type of wavelet, for any υ. It follows that the function vanishes outside the cube 2−I (C + j) × 2−I (C + j). There are O(2Id) of these cubes that intersect 𝒳 × 𝒳; these intersect the diagonal of 𝒳 × 𝒳, but may be overlapping. We choose the sets 𝒳n,m to be blocks (cubes) of adjacent cubes 2−I (C + j), giving sets 𝒳n,m. [In the case d = 1, the “cubes” are intervals and they can be ordered linearly; the meaning of “adjacent” is then clear. For d > 1 cubes are “adjacent” in d directions. We stack ln cubes 2−I (C + j) in each direction, giving cubes 𝒳n,m of sides with lengths ln times the length of a cube 2−I (C + j).]

Because the kernels are bounded by a multiple of kn, condition (10) is implied by (11), in view of Lemma 2.1. The latter condition reduces to , the probabilities G(𝒳n,m) being of the order 1/Mn.

The set of cubes 2−I (C + j) that intersect more than one set 𝒳n,m is of the order . To see this, picture the set 𝒳 as a supercube consisting of the M cubes 𝒳n,m, stacked together in an M1/d × ⋯ × M1/d-pattern. For each coordinate i = 1, …, d the stack of cubes 𝒳 can be sliced in M1/d layers each consisting of (M1/d)d−1 cubes 𝒳n,m, which are cubes 2−I (C + j). The union of the boundaries of all slices (i = 1, …, d and slices for each i) contains the union of the boundaries of the sets 𝒳n,m. The boundary between two particular slices is intersected by at most cubes 2−I (C + j), for a constant C2 depending on the amount of overlap between the cubes. Thus in total of the order cubes intersect some boundary.

If Kk(x1, x2) ≠ 0, then there exists j and υ with , which implies that there exists j such that x1, x2 ∈ 2−I (C + j). If the cube 2−I (C + j) is contained in some 𝒳n,m, then (x1, x2) ∈ 𝒳n,m × 𝒳n,m. In the other case 2−I (C + j) intersects the boundary of some 𝒳n,m. It follows that the set of (x1, x2) in the complement of ∪m𝒳n,m × 𝒳n,m where Kk(x1, x2) ≠ 0 is contained in the union U of all cubes 2−I (C + j) that intersect the boundary of some 𝒳n,m. The integral of over this set satisfies

Here we use that G(2−I (C + j)) ≲ 1/kn. This completes the verification of (6).

By the spatial homogeneity of the wavelet basis, the contributions of the sets 𝒳n,m × 𝒳n,m to the integral of are comparable in magnitude. Hence condition (7) is satisfied for any Mn → ∞.

In order to satisfy conditions (8) and (9) we must choose Mn → ∞ with Mn ≲ n. This is compatible with choices such that Mn/kn → 0 and .

Proof of Proposition 4.2

Because Kk is an orthogonal projection on a (2k+1)-dimensional space, Lemma 7.1 gives that the operator norm satisfies ‖Kk‖ = 1 and that the numbers kn as in (4) but with μ2 = 1 are equal to 2k + 1.

By the change of variables x1 − x2 = u, x1 + x2 = υ we find, for any ε ∈ (0, π], and Kk(x1, x2) = Dk(x1 − x2),

By the symmetry of the Dirichlet kernel about π we can rewrite as . Splitting the integral on the right side of the preceding display over the intervals (ε, π] and (π, 2π], and rewriting the second integral, we see that the preceding display is equal to

For ε = 0 this expression is equal to the square L2-norm of the kernel Kk, which shows that . On the interval (ε, π) the kernel Dk is bounded above by . Therefore, the preceding display is bounded above by

We conclude that, for small ε > 0,

This tends to zero as k → ∞ whenever ε = εk ↓ 0 such that .
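The concentration of the Dirichlet kernel near the diagonal can be illustrated numerically. The sketch below assumes the normalization Dk(u) = ∑|j|≤k exp(iju) = 1 + 2 ∑j=1..k cos(ju) and integration against normalized Lebesgue measure on (−π, π], under which the mean of Dk² is 2k + 1; it also uses the elementary bound |Dk(u)| ≤ 1/sin(ε/2) for ε < |u| ≤ π. The normalization, the function names and the grid size are assumptions made for illustration only.

```python
from math import cos, pi, sin


def dirichlet(k, u):
    """D_k(u) = 1 + 2 * sum_{j=1}^k cos(j u), assumed normalization."""
    return 1.0 + 2.0 * sum(cos(j * u) for j in range(1, k + 1))


def squares_on_grid(k, n):
    """Pairs (u, D_k(u)^2) on a midpoint grid of (-pi, pi]; n even avoids u = 0."""
    h = 2.0 * pi / n
    grid = [-pi + (i + 0.5) * h for i in range(n)]
    return [(u, dirichlet(k, u) ** 2) for u in grid]


def mean_square(k, n=2000):
    """Midpoint-rule mean of D_k^2; exact up to rounding when n > 2k."""
    return sum(v for _, v in squares_on_grid(k, n)) / n


def off_diagonal_fraction(k, eps, n=2000):
    """Share of the integral of D_k^2 carried by the region eps < |u| <= pi."""
    values = squares_on_grid(k, n)
    total = sum(v for _, v in values)
    tail = sum(v for u, v in values if abs(u) > eps)
    return tail / total


def sup_bound(k, eps):
    """Crude bound: sup of D_k^2 off the diagonal divided by 2k + 1."""
    return 1.0 / (sin(eps / 2.0) ** 2 * (2 * k + 1))


if __name__ == "__main__":
    for k in (10, 40, 160):
        print(k, mean_square(k), off_diagonal_fraction(k, 0.2), sup_bound(k, 0.2))
```

For fixed ε the off-diagonal share decreases roughly like 1/k, which is the mechanism behind the verification of (6) for the Fourier basis.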

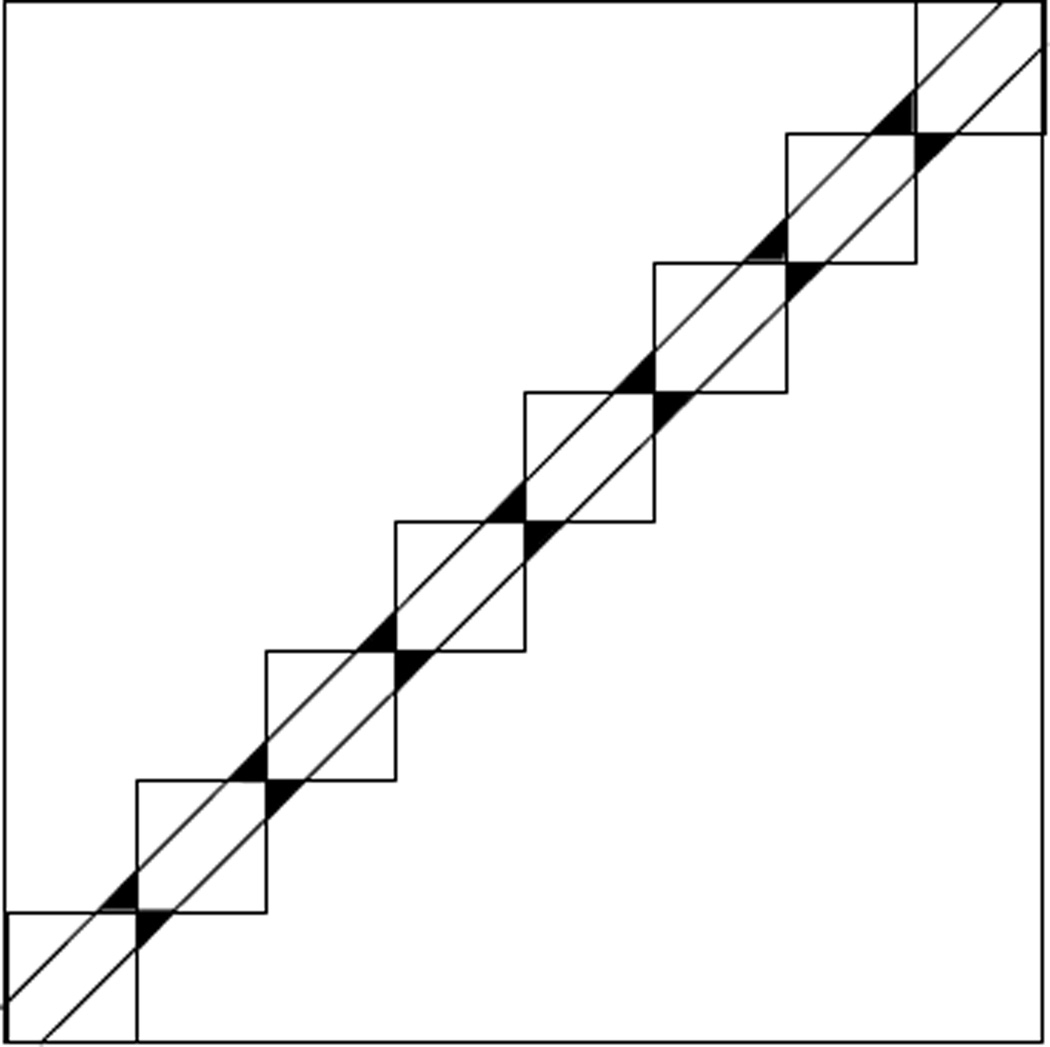

We choose a partition (−π, π] = ∪m𝒳n,m in Mn = 2π/δ intervals of length δ for δ → 0 with δ ≫ ε and ε satisfying the conditions of the preceding paragraph. Then the complement of ∪m𝒳n,m × 𝒳n,m is contained in {(x1, x2): |x1 − x2| > ε} except for a set of 2(Mn − 1) triangles, as indicated in Figure 3. In order to verify (6) it suffices to show that (2k + 1)−1 times the integral of over the union of the triangles is negligible. Each triangle has sides of length of the order ε, whence, for a typical triangle Δ, by the change of variables x1 − x2 = u, x2 = υ, and an interval I of length of the order ε,

Hence (6) is satisfied if 2(Mn − 1)ε → 0, i.e. ε ≪ δ.

Figure 3. The triangles used in the proofs of Propositions 4.2 and 4.3, and the sets 𝒳n,m × 𝒳n,m.

Because is independent of m, (7) is satisfied as soon as the number of sets in the partitions tends to infinity.

Because |Kk| ≤ 2k + 1, condition (10) is implied by (11), which is satisfied if δ ≪ n/k.

The desired choices are compatible, as by assumption k/n2 → 0.

Proof of Proposition 4.3

Without loss of generality we can assume that ∫ |ϕ| dλ = 1. By a change of variables

Here | ∫ g(x − συ)g(x) dx| ≤ ‖g‖∞ and, as σ ↓ 0,

for every fixed υ, by the L1-continuity theorem. We conclude by the dominated convergence theorem that . Because μ2 is bounded away from 0 and ∞, the numbers kn defined in (4) are of the exact order σ−1.

By another change of variables, followed by an application of the Cauchy-Schwarz inequality, for any f ∈ L2(G),

Therefore, the operator norms of the operators Kσ are uniformly bounded in σ > 0.

We choose a partition ℝ = ∪m𝒳n,m consisting of two infinite intervals (−∞, −a] and (a, ∞) and a regular partition of the interval (−a, a] in such a way that every partitioning set satisfies G(𝒳n,m) ≤ δ. We can achieve this with a partition in Mn = O(1/δ) sets.

Because |Kσ| is bounded by a multiple of σ−1, condition (10) is implied by (11), which takes the form δ/(σn) → 0, in view of Lemma 2.1.

For an arbitrary partitioning set 𝒳n,m,

It follows that (7) is satisfied as soon as δ → 0.

Finally, we verify condition (6) in two steps. First, for any ε ↓ 0, by the change of variables x1 − x2 = υ, x2 = x,

This converges to zero as σ → 0 for any ε = εσ > 0 with ε ≫ σ. Second, for ε ≪ δ the complement of the set ∪m𝒳n,m × 𝒳n,m is contained in {(x1, x2): |x1 − x2| > ε} except for a set of 2(Mn − 1) triangles, as indicated in Figure 3. In order to verify (6) it suffices to show that σ times the integral of over the union of the triangles is negligible. Each triangle has sides of length of the order ε, whence, for a typical triangle Δ, with projection I on the x1-axis,

The total contribution of all triangles is 2(Mn − 1) times this expression. Hence (6) is satisfied if 2(Mn − 1)ε → 0, i.e. ε ≪ δ.

The preceding requirements can be summarized as σ ≪ ε ≪ δ ≪ σn, and are compatible.

Proof of Proposition 4.4

Inequality (22) implies that cj(f) = 0 for every f that vanishes outside the interval , whence the representing function gj is supported on this interval. It follows that the function (x1, x2) ↦ Nj(x1)cj(x2) vanishes outside the square , which has area of the order l−2. We form a partition (0, 1] = ∪m𝒳n,m by selecting subsets of the basic knot sequences such that for every i and define . The numbers Mn are chosen integers much smaller than ln, and we may set for p = ⌊ln/Mn⌋.

Because Kk is a projection on Sr(T, d) and the function x1 ↦ Kk(x1, x2) is contained in Sr(T, d) for every x2, it follows that ∫ Kk(x1, x2)Kk(x1, x2) dx1 = Kk(x2, x2) for every x2, and hence

because the identities Ni = KkNi = ∑j cj(Ni)Nj imply that cj(Ni) = δij by the linear independence of the B-splines. Because the density of G and the function μ2 are bounded above and below the L2(G × G)-norm kn as in (4) is of the same order as the dimension kn = r + lnd of the spline space.

Inequality (22) implies that the norm of the linear map cj, which is the infinity norm ‖cj‖∞ of the representing function, is bounded above by a constant times , which is of the order kn. Therefore,

The set in the right side is the union of Mn cubes of areas not bigger than the area of the sets , which is bounded above by a constant times . (See Figure 7.) The preceding display is therefore bounded above by

For Mn/kn → 0 this tends to zero. This completes the verification of (6).

The verification of the other conditions follows the same lines as in the case of the wavelet basis.


References

- 1. Robins J, Li L, Tchetgen E, van der Vaart A. Probability and statistics: essays in honor of David A. Freedman, Vol. 2 of Inst. Math. Stat. Collect., Inst. Math. Statist. Beachwood, OH: 2008. Higher order influence functions and minimax estimation of nonlinear functionals; pp. 335–421. URL http://dx.doi.org/10.1214/193940307000000527.

- 2. Bickel PJ, Ritov Y. Estimating integrated squared density derivatives: sharp best order of convergence estimates. Sankhyā Ser. A. 1988;50(3):381–393.

- 3. Birgé L, Massart P. Estimation of integral functionals of a density. Ann. Statist. 1995;23(1):11–29.

- 4. Laurent B. Efficient estimation of integral functionals of a density. Ann. Statist. 1996;24(2):659–681.

- 5. Laurent B. Estimation of integral functionals of a density and its derivatives. Bernoulli. 1997;3(2):181–211.

- 6. Laurent B, Massart P. Adaptive estimation of a quadratic functional by model selection. Ann. Statist. 2000;28(5):1302–1338.

- 7. Robins J, Li L, Tchetgen E, van der Vaart AW. Quadratic semiparametric von Mises calculus. Metrika. 2009;69(2–3):227–247. doi: 10.1007/s00184-008-0214-3. URL http://dx.doi.org/10.1007/s00184-008-0214-3.

- 8. van der Vaart A. Higher order tangent spaces and influence functions. Statist. Sci. 2014;29(4):679–686.

- 9. Tchetgen E, Li L, Robins J, van der Vaart A. Higher order estimating equations for high-dimensional semiparametric models. doi: 10.1214/16-AOS1515. preprint.

- 10. Robins J, van der Vaart A. Adaptive nonparametric confidence sets. Ann. Statist. 2006;34(1):229–253.

- 11. Mikosch T. A weak invariance principle for weighted U-statistics with varying kernels. J. Multivariate Anal. 1993;47(1):82–102.

- 12. Morris C. Central limit theorems for multinomial sums. Ann. Statist. 1975;3:165–188.

- 13. Ermakov MS. Asymptotic minimaxity of chi-squared tests. Teor. Veroyatnost. i Primenen. 1997;42(4):668–695.

- 14. Weber NC. Central limit theorems for a class of symmetric statistics. Math. Proc. Cambridge Philos. Soc. 1983;94(2):307–313. URL http://dx.doi.org/10.1017/S0305004100061168.

- 15. Bhattacharya RN, Ghosh JK. A class of U-statistics and asymptotic normality of the number of k-clusters. J. Multivariate Anal. 1992;43(2):300–330. URL http://dx.doi.org/10.1016/0047-259X(92)90038-H.

- 16. Jammalamadaka SR, Janson S. Limit theorems for a triangular scheme of U-statistics with applications to inter-point distances. Ann. Probab. 1986;14(4):1347–1358.

- 17. de Jong P. A central limit theorem for generalized quadratic forms. Probab. Theory Related Fields. 1987;75(2):261–277. URL http://dx.doi.org/10.1007/BF00354037.

- 18. de Jong P. A central limit theorem for generalized multilinear forms. J. Multivariate Anal. 1990;34(2):275–289. URL http://dx.doi.org/10.1016/0047-259X(90)90040-O.

- 19. Kerkyacharian G, Picard D. Estimating nonquadratic functionals of a density using Haar wavelets. Ann. Statist. 1996;24(2):485–507.

- 20. Robins J, Tchetgen Tchetgen E, Li L, van der Vaart A. Semiparametric minimax rates. Electron. J. Stat. 2009;3:1305–1321. doi: 10.1214/09-EJS479. URL http://dx.doi.org/10.1214/09-EJS479.

- 21. Newey WK, Hsieh F, Robins JM. Twicing kernels and a small bias property of semiparametric estimators. Econometrica. 2004;72(3):947–962.

- 22. Daubechies I. Ten lectures on wavelets, Vol. 61 of CBMS-NSF Regional Conference Series in Applied Mathematics; Society for Industrial and Applied Mathematics (SIAM); Philadelphia, PA. 1992.

- 23. DeVore RA, Lorentz GG. Constructive approximation, Vol. 303 of Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences] Berlin: Springer-Verlag; 1993.

- 24. van der Vaart AW. Asymptotic statistics, Vol. 3 of Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge: Cambridge University Press; 1998.

- 25. Giné E, Latala R, Zinn J. High dimensional probability, II (Seattle, WA 1999), Vol. 47 of Progr. Probab. Boston, MA: Birkhäuser Boston; 2000. Exponential and moment inequalities for U-statistics; pp. 13–38.