Abstract

Childhood obesity is a complex, worldwide problem. Significant resources are invested in its prevention, and high-quality evaluations of these efforts are important. Conducting trials in school settings is complicated, making process evaluations useful for explaining results. Intervention fidelity has been demonstrated to influence outcomes, but others have suggested that other aspects of implementation, including participant responsiveness, should be examined more systematically. During Food, Health & Choices (FHC), a school-based childhood obesity prevention trial designed to test a curriculum and wellness policy taught by trained FHC instructors to fifth grade students in 20 schools during 2012–2013, we assessed relationships among facilitator behaviors (i.e., fidelity and teacher interest), participant behaviors (i.e., student satisfaction and recall), and program outcomes (i.e., energy balance-related behaviors) using hierarchical linear models, controlling for student, class, and school characteristics. We found positive relationships between student satisfaction and recall and program outcomes, but not fidelity and program outcomes. We also found relationships between teacher interest and fidelity when teachers participated in implementation. Finally, we found a significant interaction between fidelity and satisfaction on behavioral outcomes. These findings suggest that individual students in the same class responded differently to the same intervention. They also suggest the importance of teacher buy-in for successful intervention implementation. Future studies should examine how facilitator and participant behaviors together are related to both outcomes and implementation. Assessing multiple aspects of implementation using models that account for contextual influences on behavioral outcomes is an important step forward for prevention intervention process evaluations.

Keywords: childhood obesity prevention, process evaluation, implementation, fidelity, responsiveness

Background

Childhood obesity (i.e., body mass index [BMI] ≥95th percentile for age and sex) prevalence has increased threefold since 1970 in the U.S., based on data from the nationally representative National Health and Nutrition Examination Survey (NHANES; Ogden & Carroll, 2010). Furthermore, non-white children and adolescents and children from families with lower socioeconomic status experience disproportionately high levels of childhood obesity (Pratt, Arteaga & Loria, 2014). Worldwide, trends are similar (de Onis, Blossner & Borghi, 2010). Childhood obesity has been linked to chronic disease in both childhood (Herouvi, Karanasios, Karayianni & Karavanaki, 2013) and adulthood (Cote, Harris, Panagiotopoulos, Sandor & Devlin, 2013), contributing to the high economic and social burden of obesity. A complex health and environmental problem, obesity has proved extremely challenging to treat, underscoring the importance of obesity prevention efforts. Most interventions developed to prevent childhood obesity target energy balance-related behavior (EBRB), including dietary and physical activity behaviors, such as fruit and vegetable consumption or television viewing (van Stralen, Yildirim, te Velde, Brug, van Mechelen, & Chinapaw, 2011).

Significant resources are invested in childhood obesity prevention interventions; thus, emphasis has been placed on establishing an evidence base for them through randomized controlled trials (RCTs). However, results from RCT evaluations are mixed. When pooled in meta-analyses, these interventions prove highly heterogeneous, and although effects are generally positive, they are typically small (Waters et al., 2011).

In RCTs implemented in schools, variations in sites, stakeholders, and participant characteristics are difficult to control. Efforts to improve prevention interventions through process evaluations, which suggest how variations in implementation influenced results, have focused on fidelity as a major predictor of intervention success (Carroll, Patterson, Wood, Booth, Rick & Balain, 2007; Dusenbury, Brannigan, Falco & Hansen, 2003; Durlak & Dupre, 2008; Pettigrew, Graham, Miller-Day, Hecht, Krieger & Shin, 2015; Proctor et al., 2011). Fidelity is the most commonly assessed implementation factor in prevention interventions (Durlak & Dupre, 2008) and, specifically, in childhood obesity prevention interventions (e.g., Griffin, 2014; Lee, Contento & Koch, 2013; Martens, van Assema, Paulussen, Schaalma & Brug, 2006). Further study of other aspects of implementation (Kam, Greenberg & Walls, 2003) and the interrelationships among them (Proctor et al., 2011) is needed.

Berkel, Mauricio, Schoenfelder, and Sandler (2011) proposed “an integrated model of program implementation,” a theoretical framework categorizing intervention implementation into “facilitator behaviors” and “participant behaviors,” and hypothesizing links to program outcomes. Facilitator behaviors included: fidelity, defined as “adherence to program curriculum;” quality of delivery, defined as the “skill with which the program is implemented;” and adaptation, defined as “additions made to the program during implementation.” In this model, the term “participant behaviors” referred to responsiveness, which they defined as “involvement and interest in the program,” including participation, satisfaction, and home practice. Though responsiveness has been measured less frequently in process evaluations (Durlak & Dupre, 2008), the inclusion of student satisfaction in childhood obesity prevention intervention process evaluations has been on the rise (e.g., Christian, Evans, Ransley, Greenwood, Thomas & Cade 2011; Lee et al, 2013; Martens et al, 2006; Waters et al, 2011; Singh, Chinapaw, Brug & Van Mechelen, 2009). School-based process evaluations have also measured student recall (e.g., Schneider et al, 2009) and use of materials (e.g., Christian et al, 2011; Martens et al, 2006; Schmied, Parada, Horton, Ibarra & Ayala, 2015).

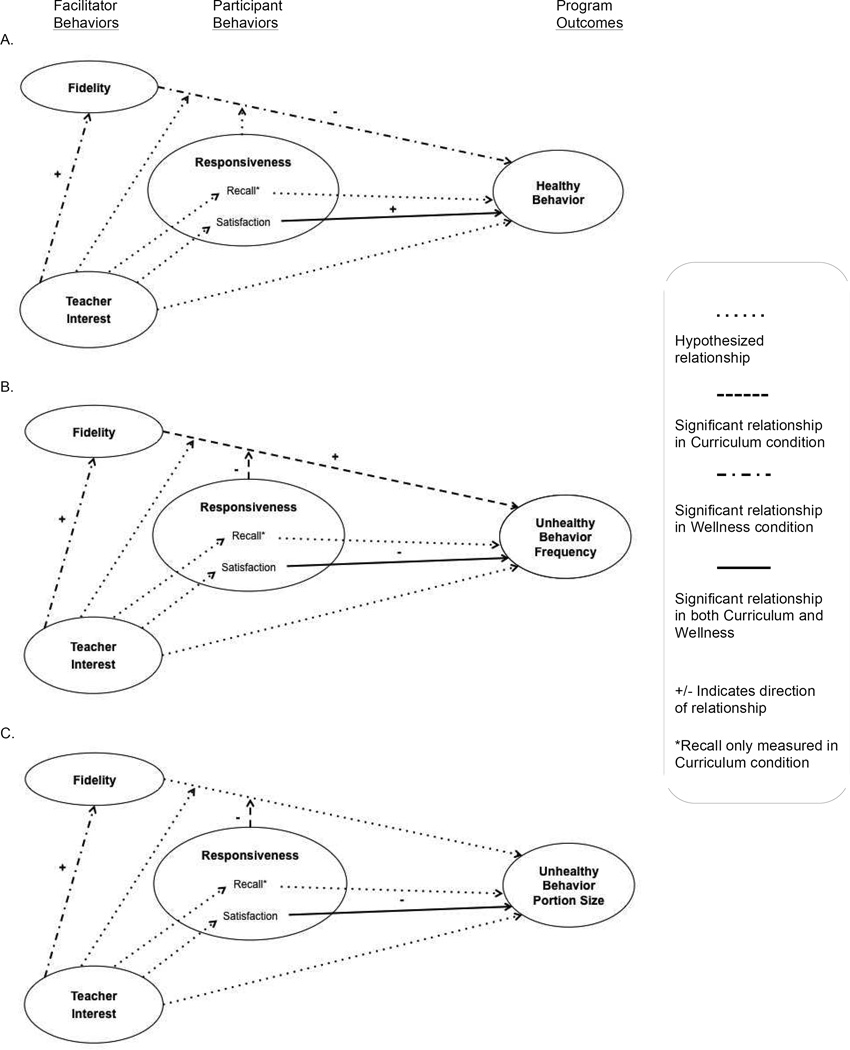

As part of the Food, Health & Choices (FHC) school-based obesity prevention trial process evaluation, we were able to assess many of the theoretical relationships proposed by Berkel et al (2011). We measured facilitator behaviors including the percent of program activities completed in each classroom (i.e., fidelity) and the classroom teachers’ interest in the program based on their attitudes toward FHC and participation in teaching FHC (i.e., quality). Because FHC was designed using a participatory approach and extensive formative evaluation in schools that were very similar to those in which it was implemented, we did not measure adaptation of the intervention. We measured participant behaviors, including satisfaction with and recall of the intervention. We then tested how facilitator behaviors and participant behaviors predicted program outcomes (EBRBs) during FHC based on Berkel et al’s (2011) model. Because facilitator attitudes towards and skills in implementing an intervention have been linked to fidelity (Durlak & Dupre, 2008), we included an additional relationship, between teacher interest and fidelity, in our model. We hypothesized that: 1) Fidelity, teacher interest, student satisfaction, and student recall would predict EBRBs; 2) Teacher interest would predict fidelity and both teacher interest and fidelity would predict student satisfaction and recall; 3) Teacher interest, student satisfaction, and recall would each moderate the relationship between fidelity and EBRBs; and 4) Student satisfaction and recall would mediate the relationship between fidelity and EBRBs. These relationships are depicted in Figure 1. Here, we present the results of statistical models assessing these relationships, accounting for three levels of influence on outcomes and controlling for contextual factors.

Figure 1.

Relationships among Facilitator Behaviors, Participant Behaviors, and Energy Balance-Related Behavior (EBRB) Program Outcomes during Food, Health & Choices (FHC) Childhood Obesity Prevention Intervention

Methods

FHC, a cluster randomized controlled trial, was conducted during 2012–2013 in high-need New York City (NYC) public elementary schools. FHC tested the impact of a theory-based nutrition science inquiry curriculum, classroom-level nutrition and physical activity wellness policy, and combination of the two on 5th grade students’ EBRBs and BMIs. Schools were recruited via mail in six NYC districts. Twenty (50%) interested schools were excluded because of charter status (n=1), classroom structure (n=1), or lack of follow-up (n=18). Participating schools (n=20) were stratified based on percent of students eligible for free and reduced-price lunch and average reading and math scores. Then 5 schools were randomly assigned to each of 4 study conditions: Curriculum, Wellness, Curriculum+Wellness, or delayed control (Contento, Koch & Gray, 2015), see Figure S.1 (available online).

The Intervention

The intervention was developed using the Nutrition Education DESIGN Procedure (Contento, 2015), a participant-centered approach involving the systematic operationalization of theory-based determinants of behavior change in program activities and evaluation plans.

Curriculum

Students in schools randomized to Curriculum received a 23-lesson nutrition science curriculum based on social cognitive and self-determination theories that centered on 6 EBRBs: Choose more fruits and vegetables and physical activity; and choose less sugar-sweetened beverages, fast food, processed packaged snacks, and screen time. Graduate student nutrition educators (FHC instructors) were hired and trained by the research team to teach the curriculum, which was approved by the NYC Department of Education (DOE) to substitute for two science units taught in 5th grade. The first 12 lessons were taught bi-weekly during the fall. During the winter and early spring, while students and teachers prepared for state standardized tests, 5 “booster” lessons were taught about once a month. Finally, after state tests, the final 6 lessons were taught bi-weekly for three weeks.

Wellness

Students in schools randomized to Wellness received a classroom wellness policy, including a snack policy addressing foods brought into the classroom and “Dance Breaks,” choreographed 10-minute bouts of physical activity during class time. FHC instructors facilitated both activities during weekly classroom visits throughout the school year; classroom teachers were responsible for facilitating Wellness on remaining schooldays.

Curriculum+Wellness

Students in schools randomized to Curriculum+Wellness received both the curriculum and wellness policy interventions, as described above.

Control

Data were collected from students in schools randomized to the control condition during the intervention year. During the following school year (2013–14), 5th grade students in these schools received Curriculum+Wellness.

Participants

5th grade students participated in the study. Data from students who were absent, no longer attending a participating school, or whose parent withdrew them from the study were not included. Students who were outliers on body composition (n=5) or age (n=1) were excluded. Table 1 shows the baseline characteristics of the study participants and schools.

Table 1.

Baseline Characteristics of Food, Health & Choices Student Participants and Schools

| Variables | Study Sample, Mean (SD) | Curriculum, Mean (SD) | Wellness, Mean (SD) | Control, Mean (SD) |
|---|---|---|---|---|
| Student Demographics | n=1159 | n=604 | n=569 | n=301 |
| Age in years | 10.6 (0.58) | 10.5 (0.52) | 10.5 (0.54) | 10.9 (0.64) |
| Gender (% boys) | 49.3 | 47.7 | 49.9 | 51.5 |
| BMI-z<sup>a</sup> | 0.69 (1.2) | 0.65 (1.2) | 0.76 (1.2) | 0.75 (1.2) |
| BMI-z, boys | 0.78 (1.2) | 0.75 (1.2) | 0.85 (1.2) | 0.81 (1.2) |
| BMI-z, girls | 0.60 (1.2) | 0.57 (1.2) | 0.67 (1.2) | 0.68 (1.2) |
| School Demographics | n=20 | n=10 | n=10 | n=5 |
| % Eligible for free/reduced price lunch | 86.0 (19.3) | 85.4 (22.2) | 88.6 (13.0) | 84.9 (23.5) |
| % Black | 29.6 (22.0) | 31.5 (24.0) | 31.6 (24.3) | 21.4 (19.0) |
| % Hispanic | 59.0 (23.2) | 54.3 (25.7) | 61.0 (25.1) | 67.1 (19.0) |
| % English language learners | 16.6 (10.6) | 14.0 (8.0) | 16.7 (9.4) | 20.1 (15.6) |

Notes: Students in 5 schools received both the Curriculum and Wellness treatments; these students are included in both groups. SD = standard deviation.

<sup>a</sup> BMIz measures relative BMI (i.e., body mass index in kg/m²) adjusted for age and sex; a BMIz of 0.0 corresponds to the 50th percentile relative to external reference standards<sup>1</sup>.

<sup>1</sup> Must, A., & Anderson, S. E. (2006). Pediatric mini review. Body mass index in children and adolescents: considerations for population-based applications. International Journal of Obesity, 30, 590–594.

Measures

We collected EBRB outcome data at baseline and post-intervention and socio-demographic data for students and schools at baseline. We collected data about facilitator and participant behavior throughout the intervention. Data were collected separately for each arm; thus, students and FHC instructors provided Curriculum data during lessons and Wellness data during Wellness activities.

Facilitator behaviors

We measured facilitator behaviors at the classroom level. These included fidelity (i.e., adherence) and teacher interest (i.e., quality). Although classroom teachers did not teach the curriculum and only facilitated some of the wellness activities, we considered their attitude toward and participation in FHC part of the quality of delivery. FHC instructors facilitated the curriculum and some of the wellness activities and were highly trained to consistently present the activities; they were not independently observed frequently enough to reliably measure quality of delivery.

Fidelity

We measured fidelity by calculating percent completion. FHC instructors completed previously validated feedback forms (Contento, Koch, Lee & Calabrese-Barton, 2010) after each session. FHC instructors received extensive training and weekly follow-up to improve feedback form accuracy. We calculated fidelity for each session by dividing the number of completed activities by the total number of activities in the lesson; a mean completion score was calculated for each class and reported on a 100-point scale. In total, 1,348 feedback forms were collected (76% response rate) from Curriculum (n=611) and Wellness (n=737) sessions.
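
The percent-completion calculation described above can be sketched in a few lines of Python. This is only an illustration: the record layout (class identifier, activities completed, activities planned) and the function names are hypothetical and are not taken from the FHC feedback forms.

```python
from collections import defaultdict
from statistics import mean


def session_fidelity(completed, total):
    """Percent of planned activities completed in one session (0-100)."""
    if total <= 0:
        raise ValueError("total activities must be positive")
    return 100.0 * completed / total


def class_fidelity(forms):
    """Mean session fidelity for each class.

    `forms` holds one (class_id, completed, total) tuple per feedback
    form; this layout is an assumption made for the example.
    """
    by_class = defaultdict(list)
    for class_id, completed, total in forms:
        by_class[class_id].append(session_fidelity(completed, total))
    return {class_id: mean(values) for class_id, values in by_class.items()}


# Made-up forms: class A has two sessions, class B has one.
forms = [("A", 5, 5), ("A", 4, 5), ("B", 3, 6)]
scores = class_fidelity(forms)
```

With these made-up forms, class A averages 100% and 80% across its two sessions and class B has a single session at 50%.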

Teacher interest

We also used the feedback forms completed by FHC instructors after each session to measure two variables related to teachers on 3-point scales: Teacher participation (uninvolved, somewhat involved, very involved) and teacher attitude (negative, neutral, positive). The pairs of teacher variables were highly correlated within each classroom, so teacher participation and attitude were averaged to create a teacher interest score. Teacher interest during observed FHC sessions was used to operationalize quality, defined as clear, comprehensible and enthusiastic program delivery by Berkel et al (2011).
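
As a rough illustration of the teacher interest score, the sketch below averages per-class mean participation and mean attitude. The numeric coding of the 3-point scales as 1 to 3 is an assumption (it is consistent with the stated maximum of 3 but is not given in the text), and the function name is hypothetical.

```python
from statistics import mean

# Assumed numeric coding of the 3-point scales (not stated in the text).
PARTICIPATION = {"uninvolved": 1, "somewhat involved": 2, "very involved": 3}
ATTITUDE = {"negative": 1, "neutral": 2, "positive": 3}


def teacher_interest(sessions):
    """Average of mean participation and mean attitude for one class.

    `sessions` is a list of (participation_label, attitude_label) pairs,
    one per observed session.
    """
    participation = mean(PARTICIPATION[p] for p, _ in sessions)
    attitude = mean(ATTITUDE[a] for _, a in sessions)
    return (participation + attitude) / 2


# Two made-up sessions for a single class.
score = teacher_interest([
    ("very involved", "positive"),
    ("somewhat involved", "neutral"),
])
```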

Participant behaviors

We measured participant behaviors at the individual student level. Satisfaction in both the Wellness and Curriculum conditions and recall in the Curriculum condition represented responsiveness to the intervention.

Student satisfaction

We measured satisfaction using a survey administered to students during the final FHC session. Students who participated in the Curriculum (n=494, 66%) and Wellness conditions (n=474, 68%) answered the question, “Overall, did you like Food, Health & Choices?” on a scale of 1 to 5, from “didn’t like it at all” to “loved it.” Students in Wellness answered additional questions about satisfaction with the dance breaks and snack policy.

Student recall

We also measured recall of the curriculum with lesson artifacts collected about ¾ through the curriculum. Students participating in Curriculum (n=515, 69%) completed prompts with the six FHC behavioral goals. Student responses were coded by recall of the behaviors (no recall=0, recall=1, partial recall=0.5) and percent recall was calculated for each student. To facilitate comparison, percentages were transformed into 5-point ordinal variables.
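
The recall coding can be illustrated with a short sketch. The 0, 0.5, and 1 codes and the six behavioral goals come from the description above; the rule for converting percentages to a 5-point ordinal variable is not specified in the text, so the equal-width bins used here are purely an assumption, as are the label and function names.

```python
# Codes from the text: no recall = 0, partial recall = 0.5, recall = 1.
CODES = {"none": 0.0, "partial": 0.5, "full": 1.0}
N_GOALS = 6  # the six FHC behavioral goals


def percent_recall(coded_goals):
    """Percent recall across the six goals for one student."""
    if len(coded_goals) != N_GOALS:
        raise ValueError("expected one code per behavioral goal")
    return 100.0 * sum(CODES[c] for c in coded_goals) / N_GOALS


def to_ordinal(percent, levels=5):
    """Map a percentage to 1..levels using equal-width bins.

    Assumption: the binning rule used in the study is not reported.
    """
    width = 100.0 / levels
    return min(levels, int(percent // width) + 1)


# One made-up student: three goals recalled, one partially, two not.
pct = percent_recall(["full", "full", "partial", "none", "full", "none"])
level = to_ordinal(pct)
```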

Program Outcome

We collected students’ self-reported EBRB data at baseline and post-intervention using the previously validated Food Health & Choices Questionnaire (FHC-Q; n=938, 70% response rate). The FHC-Q consists of 45 items about EBRB frequency and size/duration and 71 items about theory-based psychosocial mediators. FHC-Q EBRBs include fruit and vegetable, sugar-sweetened beverage, processed packaged snack, fast food, physical activity, and recreational screen time behaviors. Eating behavior questions capture the frequency of food eaten per week and the portion size usually eaten at one time. Physical activity and recreational screen time questions capture the weekly frequency of activities and the per-session duration of the activity. Frequency questions are asked with the stem “In the past week, I ate…(or I did…)” and portion size/duration questions are asked with the stem “How much did you usually eat/drink at one time…” or “How long each time did I…” For each of the six EBRBs measured by the FHC-Q, there are 4–14 items. The psychometric properties and administration of the FHC-Q are discussed elsewhere (Gray et al., 2016). Missing data ranged from 7% to 26% across scales (Gray, Burgermaster, Tipton, Contento, Koch & Di Noia, 2015). Little’s MCAR test (Little, 1988) showed insufficient evidence to suggest that the data were not missing completely at random (χ²=15664.43; p=.065).

Contextual factors

We collected data about students, classes, and schools to include as covariates in our models. As BMI has been related to EBRB reporting (Fisher, Johnson, Lindquist, Birch & Goran, 2000), we included baseline BMI in our models. We assessed BMI with a portable stadiometer (Seca model 213) and a Tanita® body composition analyzer (Model SC-331s) using a standardized protocol and calculated BMI (kg/m²) and BMI z-score (BMIz; Kuczmarski et al., 2000). We also collected gender and age data. Evidence linking classroom norms to fidelity and outcomes in behavior interventions (e.g., Lee et al, 2013; Peets, Poyhonen, Juvonen, & Salmivalli, 2015) suggests that a class’s behavior might influence implementation and outcomes. We used feedback forms completed by FHC instructors after each session to measure two variables that reflected classroom norms: student participation (uninvolved, somewhat involved, very involved) and student behavior (major problems, minor problems, no problems). These variables were averaged to create a class engagement score. We also used public NYCDOE data for school covariates, specifically: percentage of students eligible for free and reduced price lunch (%FRL), percentage of Black students (%Black), percentage of Hispanic students (%Hispanic), and percentage of English language learners (%ELL).

Analysis

We calculated means and standard deviations to describe each variable and conducted an exploratory factor analysis (EFA; available online). We then used factor loadings with a multiply-imputed dataset to calculate pre- and post-test factor scores for each participant (n=1,137; available online) and transformed these scores to percentage scores ranging from 1–100%. For all EBRB scales, a higher score indicated more of the behavior.

Hierarchical linear modeling

We used hierarchical linear modeling (HLM) because treatment was assigned randomly to intact schools (Raudenbush & Bryk, 2002). Model fit has been improved in 3-level HLMs with student-, classroom-, and school-level covariates compared to 2-level HLMs with student- and school-level covariates (Koth, Bradshaw & Leaf, 2008). Therefore, we used 3-level models when student-level variables were available. We previously found that school-level characteristics explained a significant amount of the clustering indicated by significantly high baseline intraclass correlations for BMI and EBRB across the schools (Gray et al., 2015); therefore, they were included in our models. To treat missing data, we performed multiple imputation in SPSS v.22 (IBM) and used averaged results of 10 imputed datasets in analyses conducted in HLM 7 (Raudenbush, Bryk, Cheong, Fai, Congdon, & du Toit, 2011).
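
For readers unfamiliar with combining results across imputed datasets, the sketch below shows one standard approach, Rubin's rules, which averages the point estimates and combines within- and between-imputation variance. The text states only that results were averaged across 10 imputed datasets; whether the software applied exactly these rules is an assumption here, and the function name and numbers are made up.

```python
from math import sqrt
from statistics import mean, variance


def pool_estimates(estimates, std_errors):
    """Pool one coefficient across imputed datasets using Rubin's rules.

    Returns (pooled_estimate, pooled_standard_error).
    """
    m = len(estimates)
    pooled = mean(estimates)                    # average point estimate
    within = mean(se ** 2 for se in std_errors)  # mean sampling variance
    between = variance(estimates)               # variance across imputations
    total = within + (1 + 1 / m) * between
    return pooled, sqrt(total)


# Made-up coefficients and standard errors from three imputed datasets.
pooled_est, pooled_se = pool_estimates([1.0, 2.0, 3.0], [1.0, 1.0, 1.0])
```

The pooled standard error is larger than any single-dataset standard error whenever the estimates differ across imputations, which is how the extra uncertainty from missing data is carried into the final result.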

Assessing the relationship between facilitator behaviors, participant behaviors, and EBRB outcomes

We used 3-level HLMs to test the hypotheses that fidelity, teacher interest, and responsiveness (i.e., student satisfaction and recall) were related to EBRB outcomes. Implementation and outcomes have previously been compared across treatment and control groups in childhood obesity prevention process evaluations (e.g., Gray, Contento & Koch, 2015; Saunders, Ward, Felton, Dowda & Pate, 2006). We assessed fidelity, satisfaction, and recall using a piecewise regression approach (Paulson, 2007). This approach assumes that the regression line is flat for the control group and depicts a gap between control and treatment group trends, allowing comparison across the whole range of fidelity and responsiveness. To facilitate the piecewise approach, we calculated interaction terms between each of fidelity, satisfaction, and recall and a binary treatment variable (0=control, 1=intervention). Ordinal variables were transformed to stabilize variance prior to calculating the interaction variables (Cohen, Cohen, West & Aiken, 2002). Students who received Curriculum and students who received Wellness (in both cases including data from students in schools randomized to Curriculum+Wellness) were compared to students in the control group. Fidelity, satisfaction, and recall were assumed to be 0 for the control group because these students did not participate in FHC during the intervention year. We examined each EBRB scale separately, controlling for group mean-centered baseline age, gender, BMIz, and EBRB at the student level (L1), and grand mean-centered %FRL, %ELL, %Black, and %Hispanic at the school level (L3). Multicollinearity precluded controlling for treatment. An example model is provided [Eqn. 1].

| [Eqn. 1] |
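
The original equation is not reproduced in this version of the text. As a hedged illustration only, one specification consistent with the description above (a class-level fidelity-by-treatment term, student-level covariates, and school-level covariates) is sketched below for student i in class j in school k. The symbols, the choice of fixed covariate slopes, and the random-intercept structure are assumptions and may differ from the authors' model.

$$
\begin{aligned}
\text{Level 1 (student):}\quad & Y_{ijk} = \pi_{0jk} + \pi_{1}\,\text{Age}_{ijk} + \pi_{2}\,\text{Gender}_{ijk} + \pi_{3}\,\text{BMIz}_{ijk} + \pi_{4}\,\text{EBRB}^{\text{pre}}_{ijk} + e_{ijk} \\
\text{Level 2 (class):}\quad & \pi_{0jk} = \beta_{00k} + \beta_{01}\,(\text{Fidelity}\times\text{Treatment})_{jk} + r_{0jk} \\
\text{Level 3 (school):}\quad & \beta_{00k} = \gamma_{000} + \gamma_{001}\,\%\text{FRL}_{k} + \gamma_{002}\,\%\text{ELL}_{k} + \gamma_{003}\,\%\text{Black}_{k} + \gamma_{004}\,\%\text{Hispanic}_{k} + u_{00k}
\end{aligned}
$$

In this sketch, the coefficient of interest is $\beta_{01}$; for student-level predictors such as satisfaction or recall, the corresponding interaction term would enter at Level 1 instead.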

We also used 3-level models to assess the relationships between teacher interest and EBRB. Models controlled for group mean-centered baseline age, gender, BMIz, and EBRB at the student level (L1); group mean-centered class engagement at the classroom level (L2); and grand mean-centered %FRL, %ELL, %Black, and %Hispanic at the school level (L3). We controlled for treatment group at L3 as these models did not use piecewise regression.
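
The predictor construction and centering steps described in this section can be sketched as follows. The helper names are hypothetical, the values are made up, and the variance-stabilizing transformation applied to ordinal variables is omitted because its form is not reported.

```python
from collections import defaultdict
from statistics import mean


def piecewise_term(process_score, treatment):
    """Process score times a 0/1 treatment indicator.

    Control units (treatment=0) always receive 0, matching the
    assumption that fidelity and responsiveness are 0 for control.
    """
    return process_score * treatment


def grand_mean_center(values):
    """Subtract the overall mean (used for school-level covariates)."""
    overall = mean(values)
    return [v - overall for v in values]


def group_mean_center(values, groups):
    """Subtract each unit's own group mean (used for student covariates)."""
    by_group = defaultdict(list)
    for value, group in zip(values, groups):
        by_group[group].append(value)
    group_means = {g: mean(vs) for g, vs in by_group.items()}
    return [v - group_means[g] for v, g in zip(values, groups)]


# Made-up ages for four students in two classes.
centered_age = group_mean_center([10, 11, 10, 12], ["A", "A", "B", "B"])
# Made-up %FRL values for three schools.
centered_frl = grand_mean_center([80, 90, 100])
# Made-up fidelity score for a control and an intervention class.
control_term = piecewise_term(95.2, 0)
treated_term = piecewise_term(95.2, 1)
```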

Assessing the relationship between facilitator behaviors and participant behaviors

2-level HLMs were fitted to examine the relationship between teacher interest and fidelity, both classroom level variables. No individual student level variables were included in these models. The dependent variables, Curriculum fidelity and Wellness fidelity, both classroom level (L1), were examined separately. Models controlled for class engagement at the classroom level (L1) and grand mean-centered %FRL, %Black, %Hispanic and %ELL at the school level (L2). An example model is provided [Eqn. 2].

| [Eqn. 2] |
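
The original equation is not reproduced in this version of the text. As an illustration under stated assumptions, a 2-level specification matching the description (class j nested in school k, with fidelity as the outcome) might take the following form. The symbols and the random-intercept structure are assumptions, not the authors' reported model.

$$
\begin{aligned}
\text{Level 1 (class):}\quad & \text{Fidelity}_{jk} = \beta_{0k} + \beta_{1}\,\text{TeacherInterest}_{jk} + \beta_{2}\,\text{ClassEngagement}_{jk} + r_{jk} \\
\text{Level 2 (school):}\quad & \beta_{0k} = \gamma_{00} + \gamma_{01}\,\%\text{FRL}_{k} + \gamma_{02}\,\%\text{Black}_{k} + \gamma_{03}\,\%\text{Hispanic}_{k} + \gamma_{04}\,\%\text{ELL}_{k} + u_{0k}
\end{aligned}
$$

Here $\beta_{1}$ would capture the relationship between teacher interest and fidelity after adjusting for class engagement and school demographics.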

3-level HLMs assessed relationships between teacher interest and student responsiveness. The dependent variables, Wellness satisfaction, Curriculum satisfaction, and Curriculum recall, all student level (L1), were examined separately. Models controlled for group mean-centered baseline age, gender, and BMIz at the student level (L1); group mean-centered fidelity and class engagement at the classroom level (L2); treatment, and grand mean-centered %FRL, %ELL, %Black, and %Hispanic at the school level (L3). An example model is provided [Eqn. 3].

| [Eqn. 3] |

Moderation models

We tested the influence of the interactions between teacher interest and fidelity and between responsiveness and fidelity on EBRBs.
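
In generic terms, a moderation test of this kind adds a product term to the outcome model. The single-equation form below is a simplified sketch with assumed symbols ($F$ for fidelity, $M$ for the moderator, and $\mathbf{x}$ for the covariates); it omits the multilevel error structure and is not the authors' exact specification.

$$
\begin{aligned}
Y &= b_{0} + b_{1}F + b_{2}M + b_{3}\,(F \times M) + \mathbf{c}^{\top}\mathbf{x} + \varepsilon \\
\frac{\partial Y}{\partial F} &= b_{1} + b_{3}M
\end{aligned}
$$

Under this form, a nonzero $b_{3}$ means the slope relating fidelity to the outcome changes by $b_{3}$ for each one-unit increase in the moderator.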

Results

Descriptive statistics for process variables were similar across conditions, as shown in Table 2; however, fidelity was consistently high in Curriculum and lower and more variable in Wellness. A 3-factor EFA reduced the 45 EBRB items from the FHC-Q to 3 factors (χ²=3704, p<.0001, RMSEA=0.05; available online): Healthy behaviors (HB), unhealthy eating behavior frequency and sedentary behavior (UBF), and unhealthy behavior portion size (UBS). These factor scales are described by treatment at baseline and posttest in Table 2.

Table 2.

Description of Facilitator Behavior, Participant Behavior, and Program Energy Balance-Related Behavior (EBRB) Outcome Means and Standard Deviations by Treatment

| | Curriculum (school n=10, class n=30, student n=760) | | Wellness (school n=10, class n=30, student n=756) | | Control (school n=5, student n=377) | |
|---|---|---|---|---|---|---|
| Facilitator Behaviors | | | | | | |
| Fidelity<sup>a</sup> (class level, max=100) | 95.2±4.0 | | 66.7±32.6<sup>e</sup> | | - | |
| Teacher Interest<sup>a</sup> (class level, max=3) | 2.4±0.3 | | 2.5±0.4 | | - | |
| Participant Behaviors (Responsiveness) | | | | | | |
| Overall Satisfaction<sup>b</sup> (student level, max=5) | 4.0±1.0 | | 4.1±1.0 | | - | |
| Dance Breaks Satisfaction<sup>b</sup> (student level, max=5) | - | | 4.3±0.9 | | - | |
| Snack Policy Satisfaction<sup>b</sup> (student level, max=5) | - | | 3.5±1.1 | | - | |
| Curriculum Recall<sup>b</sup> (student level, max=5) | 3.4±1.2 | | - | | - | |
| Program Outcomes (EBRBs) | Pretest | Posttest | Pretest | Posttest | Pretest | Posttest |
| Healthy Behavior (HB)<sup>c</sup> (student level, max=100) | 55.08±12.15 | 54.52±10.82 | 55.14±12.59 | 53.38±10.85 | 52.45±9.78 | 52.62±10.78 |
| Unhealthy Eating Behavior Frequency and Sedentary Behavior (UBF)<sup>c</sup> (student level, max=100) | 61.10±17.28 | 58.51±16.04 | 60.81±16.56 | 57.64±14.68 | 64.71±15.22 | 59.02±13.30 |
| Unhealthy Eating Behavior Portion Size (UBS)<sup>c</sup> (student level, max=100) | 63.68±18.09 | 59.79±16.16 | 64.1±17.35 | 59.67±15.04 | 67.93±15.53 | 61.29±12.64 |

Notes: Data for students in Curriculum+Wellness were collected as part of each intervention and are included with the corresponding descriptives.

<sup>a</sup> Data are from curriculum feedback forms (n=611) and wellness classroom tracking posters (class n=29). The maximum for curriculum was 23 45-minute lessons; the maximum for wellness was 165–175 daily 10-minute dance breaks, including dance breaks without an FHC instructor present. Teacher interest is a mean score calculated from mean teacher participation and mean teacher attitude for each class.

<sup>b</sup> Data are from curriculum student surveys (n=494) and wellness student surveys (n=474), except curriculum recall data, which are from lesson artifacts collected ¾ of the way through the curriculum (n=515).

<sup>c</sup> All scales are based on self-reported student EBRB data from the FHC-Q administered at baseline and after the Food, Health & Choices intervention; scales were calculated using factor loadings from a Promax-rotated exploratory factor analysis and are reported on a scale of 0–100, with higher scores indicating more of the indicated behavior.

<sup>e</sup> One school’s fidelity data were excluded, making school n=9, class n=26, student n=954.

Main effects

We used 3-level HLMs to test hypotheses that fidelity, teacher interest, satisfaction, and recall were each related to healthier EBRB outcomes (i.e., HB, UBF, UBS). The results of these models are described below and summarized in Table 3. We used 2- and 3-level HLMs to test hypotheses that teacher interest was related to fidelity, and that teacher interest and fidelity were related to satisfaction and recall. The results of these models are described below.

Table 3.

Relationships between Facilitator Behaviors, Participant Behaviors, and Program Energy Balance-Related Behavior (EBRB) Outcomes

| | Program Outcomes (EBRBs)<sup>a</sup> (student level; max=100) | | |
|---|---|---|---|
| | Healthy Behavior (HB) | Unhealthy Eating Behavior Frequency and Sedentary Behavior (UBF) | Unhealthy Eating Behavior Portion Size (UBS) |
| Facilitator Behaviors (class level) | estimate (SE) | estimate (SE) | estimate (SE) |
| Fidelity (max=100) | | | |
| Curriculum<sup>b</sup> | −0.307 (0.181) | 0.683 (0.322)* | 0.236 (0.292) |
| Wellness<sup>c</sup> | −0.063 (0.027)* | 0.051 (0.050) | −0.005 (0.044) |
| Teacher Interest<sup>d</sup> (max=3) | −1.44 (1.478) | 1.66 (2.785) | 2.210 (2.703) |
| Participant Behaviors (Responsiveness) (student level) | | | |
| Curriculum Recall<sup>e</sup> (max=5) | 3.224 (1.825) | −2.740 (2.279) | −2.804 (2.491) |
| Curriculum Satisfaction<sup>f</sup> (max=5) | 5.129 (1.969)** | −8.222 (2.568)*** | −5.276 (2.606)* |
| Wellness Satisfaction<sup>g</sup> (max=5) | 4.119 (2.002)* | −4.437 (2.489) | −7.11 (2.504)** |
| Dance Breaks Satisfaction | 3.191 (2.166) | −0.662 (2.710) | −3.098 (2.820) |
| Snack Policy Satisfaction | 4.208 (1.833)* | −5.267 (2.237)* | −7.873 (2.284)*** |
| Moderators | | | |
| Teacher Interest X Curriculum Fidelity | 0.906 (1.286) | 0.516 (1.027) | 1.634 (0.915) |
| Teacher Interest X Wellness Fidelity | −0.015 (0.183) | −0.178 (0.132) | −0.130 (0.133) |
| Curriculum Recall X Fidelity | 0.511 (0.545) | −0.765 (0.721) | 0.292 (0.780) |
| Curriculum Satisfaction X Fidelity | 0.037 (0.155) | −0.017 (0.232) | −0.032 (0.203) |
| Wellness Satisfaction X Fidelity | 0.014 (0.022) | −0.056 (0.026)* | −0.059 (0.025)* |

Note: Models controlled for %free and reduced price lunch, %Black, %Hispanic, %English language learners, and study condition at school level; class engagement and teacher interest at class level; and baseline age, gender, EBRB, and BMI z-score at student level. Predictors were interaction variables (e.g., recallXtreatment).

<sup>a</sup> Scales calculated using factor loadings from a Promax-rotated exploratory factor analysis of self-reported student EBRB data from the FHC-Q.

<sup>b</sup> From feedback forms; maximum curriculum was 23 45-minute lessons; control=0; school n=15, class n=44, student n=1140.

<sup>c</sup> From tracking posters; maximum wellness was 175 10-minute dance breaks; control=0; school n=14, class n=41, student n=954 (one school excluded).

<sup>d</sup> From feedback forms; score calculated from mean teacher participation and mean teacher attitude for each class; school n=15, class n=45, student n=1053.

<sup>e</sup> Data from lesson artifacts collected ¾ through the curriculum; recall for all students in control=0; school n=15, class n=44, student n=1140.

<sup>f</sup> Data from student surveys at the conclusion of FHC; satisfaction for all students in control=0; school n=15, class n=44, student n=1140.

<sup>g</sup> Data from student surveys at the conclusion of FHC; satisfaction for all students in control=0; school n=15, class n=45, student n=1118.

\*p<.05; \*\*p<.01; \*\*\*p<.001.

Facilitator behavior and EBRB outcomes

Six models examining the relationship between Curriculum fidelity (school n=15, class n=44, student n=1140), Wellness fidelity (school n=14, class n=41, student n=954), and HB, UBF, and UBS compared intervention to control using piecewise regression. Models controlled for school level demographics and baseline age, gender, BMIz, and EBRBs. It proved particularly challenging to collect fidelity data on Wellness from one school with four classes. Though Wellness activities were implemented, teachers resisted fidelity questions and did not complete tracking activities. The FHC instructor reported questionable reliability of the feedback received from the classes in that particular school and the research team decided to exclude those data from the analysis, which reduced school sample size by one for analyses including fidelity. Three models were used to examine the relationship between teacher interest and HB, UBF, and UBS controlling for school level demographics, treatment, class engagement, and baseline student age, gender, BMIz (school n=15, class n=45, student n=1053).

Wellness fidelity was significantly and negatively related to HB; for every 1-point increase in Wellness fidelity on a 100-point scale, HB decreased by 0.06 points on a 100-point scale. Curriculum fidelity was significantly and positively related to UBF; for every 1-point increase in Curriculum fidelity, student UBF increased by 0.68 points on a 100-point scale. Other relationships between fidelity and EBRBs were not significant. Fidelity had negative relationships with HB and an inconsistent, but generally positive, relationship with unhealthy EBRBs; that is, the more of FHC that was presented in a classroom, the less healthy the EBRBs students tended to report. Teacher interest was not significantly associated with any EBRBs.

Participant behavior and EBRB outcomes

The 15 models examining the relationships between Curriculum recall, Curriculum satisfaction (both school n=15, class n=44, student n=1140), or Wellness satisfaction (school n=15, class n=45, student n=1118) and HB, UBF, and UBS compared intervention to control using a piecewise regression approach. All models controlled for school-level demographics and student baseline age, gender, BMIz, and EBRBs.

Curriculum satisfaction was significantly related to HB, UBF, and UBS, compared to control; for every 1-point increase in Curriculum satisfaction on a 5-point scale, HB increased 5 points on a 100-point scale, and UBF and UBS decreased 8 and 5 points, respectively, on a 100-point scale. Wellness satisfaction was significantly related to HB and UBS; for every 1-point increase in Wellness satisfaction on a 5-point scale, HB increased 4 points on a 100-point scale, and UBS decreased 7 points on a 100-point scale. Though Curriculum recall was not significantly associated with any EBRB, it was positively related to HB and negatively related to UBF and UBS.
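
To make the scale of these coefficients concrete, the following minimal sketch converts a difference in Curriculum satisfaction into the model-implied difference in each outcome. It uses only the rounded slopes reported above, assumes the relationship is linear across the whole satisfaction scale with all covariates held constant, and the function and variable names are illustrative rather than part of the FHC analysis.

```python
# Rounded slopes reported in the text: change in each 100-point outcome
# per 1-point increase in Curriculum satisfaction (5-point scale).
CURRICULUM_SATISFACTION_SLOPES = {"HB": 5.0, "UBF": -8.0, "UBS": -5.0}


def expected_difference(slopes, satisfaction_low, satisfaction_high):
    """Return the model-implied outcome difference between two satisfaction ratings.

    Assumes linearity over the rating scale and constant covariates
    (hypothetical helper, for illustration only).
    """
    delta = satisfaction_high - satisfaction_low
    return {outcome: slope * delta for outcome, slope in slopes.items()}


# Compare a student who rated the curriculum 2 with one who rated it 4.
diff = expected_difference(CURRICULUM_SATISFACTION_SLOPES, 2, 4)
print(diff)
```

Under these assumptions, a 2-point difference in satisfaction corresponds to a 10-point higher HB score and 16- and 10-point lower UBF and UBS scores, respectively.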

Additional sub-analyses examining satisfaction with each of the two Wellness components, dance breaks and snack policy, indicated positive relationships with HB and negative relationships with UBF and UBS. Dance breaks satisfaction was not significantly associated with any EBRB, but was positively related to HB and negatively related to UBF and UBS. Snack policy satisfaction was significantly related to HB, UBF, and UBS; for every 1-point increase in snack policy satisfaction on a 5-point scale, HB increased 4 points on a 100-point scale, and UBS and UBF decreased 5 and 8 points, respectively, on a 100-point scale.

Across all measures of student-level responsiveness, there was a positive relationship with HB and a negative relationship with UBF and UBS. Though not all of these relationships were significant, positive student responses to FHC (insofar as students recalled or liked it) tended to co-occur with more positive EBRBs.

Teacher interest and fidelity

Two 2-level HLMs were used to examine relationships between teacher interest and fidelity in classes receiving Curriculum (school n=10, class n=30) and classes receiving Wellness (school n=9, class n=25). Both models controlled for school level demographics, study condition, and class engagement.
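
One way to write a 2-level model of this kind is sketched below for class j in school k. This is an illustrative specification only: it assumes a random intercept for schools, fixed slopes, and study condition entered at the school level, none of which is stated explicitly in the text, and the symbols are chosen here for illustration.

```latex
\begin{aligned}
\text{Level 1 (class):}\quad
  & \text{Fidelity}_{jk} = \beta_{0k}
    + \beta_{1}\,\text{Interest}_{jk}
    + \beta_{2}\,\text{Engagement}_{jk}
    + e_{jk} \\
\text{Level 2 (school):}\quad
  & \beta_{0k} = \gamma_{00}
    + \gamma_{01}\,\text{Demographics}_{k}
    + \gamma_{02}\,\text{Condition}_{k}
    + u_{0k}
\end{aligned}
```

In this notation, the coefficient of interest is \beta_{1}, the expected change in fidelity per 1-point increase in teacher interest after adjusting for the listed covariates.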

In classes that received Curriculum, teacher interest was not significantly related to fidelity (b=−1.69, SE=2.67, t=−0.63, p=.54). In classes that received Wellness, teacher interest was significantly positively related to fidelity (b=47.44, SE=16.93, t=2.80, p=.01); for every 1-point increase in teacher interest on a scale of 1 to 3, fidelity increased by 47 points on a 100-point scale. When classroom teachers in Wellness schools were interested and participated while the FHC instructor was facilitating, their classes received more of the FHC program.
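
The t statistics reported here follow from dividing each coefficient by its standard error. The short sketch below repeats that arithmetic with the rounded values from the text as a consistency check; the dictionary layout and function name are illustrative, and it makes no attempt to reproduce the p-values, which depend on degrees of freedom not given in this section.

```python
# Coefficients, standard errors, and t statistics as reported in the text.
REPORTED = {
    "Curriculum": {"b": -1.69, "se": 2.67, "t": -0.63},
    "Wellness": {"b": 47.44, "se": 16.93, "t": 2.80},
}


def t_statistic(b, se):
    """Wald-type t statistic: the estimate divided by its standard error."""
    return b / se


for condition, row in REPORTED.items():
    t = t_statistic(row["b"], row["se"])
    print(f"{condition}: t = {t:.2f} (reported {row['t']:.2f})")
```

Both recomputed values agree with the reported statistics to two decimal places.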

Teacher interest, fidelity, and student satisfaction and recall

Three 3-level HLMs were used to examine the relationships among teacher interest and Wellness satisfaction (school n=9, class n=25, student n=354), Curriculum satisfaction (school n=10, class n=30, student n=426), and Curriculum recall (school n=10, class n=29, student n=447). All models controlled for school level demographics, study condition, class engagement, fidelity, and student baseline age, gender, and BMIz. Teacher interest was not related to satisfaction in Wellness (b=−0.45, SE=0.33, t=−1.37, p=.19) or Curriculum (b=−0.38, SE=0.29, t=−1.32, p=.20); nor was it related to Curriculum recall (b=0.34, SE=0.36, t=0.95, p=.36). Fidelity was not related to satisfaction in Wellness (b=0.001, SE=0.004, t=0.20, p=.85) or Curriculum (b=−0.02, SE=0.03, t=−0.78, p=.47); nor was it related to Curriculum recall (b=0.02, SE=0.03, t=0.74, p=.47).

Moderation models

We used 3-level HLMs to test hypotheses that teacher interest and student satisfaction and recall moderate the relationship between fidelity and EBRBs (i.e., HB, UBF, UBS). The results of these models are described below and summarized in Table 3. There were no significant interactions between teacher interest and fidelity for any EBRBs. We found a significant interaction effect of satisfaction and fidelity on UBF and UBS in Wellness. The more students liked FHC, the greater the effect of fidelity on reducing unhealthy behaviors.
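
A moderation effect of this kind means that the slope of fidelity depends on the level of satisfaction. The sketch below shows how such a conditional (simple) slope is computed from a model that includes a fidelity-by-satisfaction product term. The coefficient values are invented placeholders chosen only to illustrate the arithmetic; they are not the FHC estimates, which are reported in Table 3.

```python
def conditional_fidelity_slope(b_fidelity, b_interaction, satisfaction):
    """Slope of the outcome on fidelity at a given satisfaction level.

    For outcome = b0 + b_fidelity*F + b_satisfaction*S + b_interaction*F*S,
    the derivative with respect to F is b_fidelity + b_interaction*S.
    """
    return b_fidelity + b_interaction * satisfaction


# HYPOTHETICAL coefficients (not from the paper) for an unhealthy-behavior outcome.
B_FIDELITY = 0.25       # fidelity slope when satisfaction equals 0
B_INTERACTION = -0.125  # change in the fidelity slope per satisfaction point

for satisfaction in (1, 3, 5):
    slope = conditional_fidelity_slope(B_FIDELITY, B_INTERACTION, satisfaction)
    print(f"satisfaction={satisfaction}: fidelity slope = {slope:+.3f}")
```

With a negative interaction coefficient, the fidelity slope becomes more negative as satisfaction rises, which is the pattern the text describes for UBF and UBS in Wellness.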

Mediation models

Assumptions for mediation were not met because there were no significant main effects for the relationship between fidelity and responsiveness.

Discussion

As part of the FHC school-based obesity prevention trial process evaluation, we tested relationships between facilitator behaviors, participant behaviors, and program EBRB outcomes hypothesized by Berkel et al. (2011), using HLM to account for clustering at the class and school levels. We found that fidelity was negatively related to EBRB outcomes (i.e., HB, UBF, and UBS) and teacher interest was not related to EBRB outcomes, but measures of student responsiveness (i.e., satisfaction and recall) were related to improved EBRBs. When assessing relationships between facilitator behaviors and participant behaviors, we found that teacher interest predicted fidelity, but only in Wellness, the condition in which teachers were partially responsible for facilitating the intervention. Neither teacher interest nor fidelity predicted participant behaviors (i.e., student satisfaction and recall). Moderation models indicated a significant interaction between satisfaction and fidelity on unhealthy behaviors in the Wellness condition. These findings, summarized in Figure 1, suggest that individual students in the same class responded differently to the same intervention. They also suggest the importance of teacher buy-in for successful FHC implementation.

Among FHC participants, as individual students’ responsiveness increased, HB also tended to increase, while UBF and UBS tended to decrease. This is consistent with prior research examining responsiveness in childhood obesity prevention (e.g., Gray et al., 2015; Schmied et al., 2015) as well as other school-based prevention interventions (e.g., Sanchez, Steckler, Nitirat, Hallfors, Cho & Brodish, 2007). Although the relationships between EBRBs and both Curriculum recall and dance breaks satisfaction were not significant, given the small classroom- and school-level sample sizes and the pattern of results, these measures may be related to behavioral outcomes as well (Shadish, Cook & Campbell, 2002). Furthermore, the lack of a significant relationship between dance breaks satisfaction and EBRBs could be due to the nearly universally high satisfaction ratings for dance breaks, which limited variability. It is important to note that the direction of these relationships cannot be ascertained from the analyses presented here. Students who liked and remembered the intervention may have improved their behaviors as a result, or students who already engaged in healthier EBRBs may have liked and remembered the intervention better.

The statistically significant relationships between fidelity and EBRBs in the opposite direction from that hypothesized suggest that the classroom experience of FHC was distinct from the individual experience. Although surprising given the frequent relationship between fidelity and outcomes in prevention interventions (Durlak & DuPre, 2008), a negative relationship between fidelity and outcomes is not unheard of, especially when responsiveness and outcomes are found to be related (e.g., Sanchez et al., 2007; Schmied et al., 2015). These relationships are clarified by the significant interaction between satisfaction and fidelity on UBS and UBF in Wellness, which indicates that the more students liked FHC, the stronger the relationship between intervention fidelity and fewer unhealthy EBRBs.

The finding that fidelity and teacher interest were significantly related in Wellness is important because while Curriculum was entirely implemented by FHC instructors, Wellness relied on classroom teachers for some of the implementation. FHC instructors facilitated the dance breaks and snack policy weekly, but fidelity was calculated based on how many dance breaks were completed over all school days. That teachers’ attitude toward and engagement in Wellness, as observed by FHC instructors, were so strongly related to fidelity is consistent with prior research linking teacher interest and delivery (Gray et al., 2015; Martens et al., 2006). This may reflect the barriers (e.g., testing pressures, unwillingness to use the program) explored by Greaney and colleagues (2014) in their examination of teacher implementation of a school-based childhood obesity prevention intervention. It also raises the question of whether teachers or specialists are better equipped to deliver programs (Rohrbach, Dent, Skara, Sun & Sussman, 2007).

Despite the fact that FHC was a large trial, small sample sizes at the school level reduced statistical power. The analyses in this paper should be interpreted as exploratory and future work should aim to test these theories directly. The direction of the relationships tested was inferred from the theoretical framework and may not represent the true relationships. Finally, the use of self-reported measures and the contribution of unmeasured factors, such as home environment, to EBRB may have limited the explanatory power of our results.

Implications

Our findings suggest that responsiveness, specifically satisfaction, is an important aspect of implementation and merits further examination to better understand the relationship between satisfaction and outcomes, the interaction between satisfaction and fidelity, and how to address both in the design and evaluation of prevention interventions. The relationship between teacher interest and fidelity has implications for teacher professional development programs, including requirements for pre-service teachers, and suggests that it may be more effective to have nutrition and physical activity educators implement interventions. Future studies should evaluate the cost-effectiveness of using trained specialists in school-based prevention interventions.

Taken together, these findings have implications for viable validity, or “the extent to which an evaluation provides evidence that an intervention is…practical, affordable, suitable, evaluable, and helpful in the real-world” (Chen, 2010, p. 207). That those who liked FHC also tended to have better outcome behaviors suggests the value of determining what makes some students more responsive (e.g., attendance, personal characteristics, or social support) and adapting interventions accordingly. Finally, by assessing multiple aspects of implementation and using models that account for contextual influences on behavioral outcomes, this process evaluation provides an important step forward in the evaluation of prevention interventions.

Acknowledgments

The authors would like to thank: FHC Instructors Emily Abrams, Lorraine Bandelli, Casey Luber, Jennifer Markowitz, Betsy Ginn, Greta Kollman, Shien Chiou; FHC Research assistants/data collectors; FHC schools, teachers, and students. The authors would also like to thank the reviewers for their insightful comments.

Funding: USDA AFRI NIFA grant #2010-85215-20661.

Dr. Burgermaster’s involvement in the preparation of this manuscript was supported by training grants T15LM007079 and T32HL007343; at the time of this study, she was a doctoral candidate at Teachers College Columbia University.

Footnotes

Compliance with Ethical Standards

Disclosure of potential conflicts of interest: The authors state they have no conflict of interest.

Ethical approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent: We obtained informed consent from all participants included in this study.

Contributor Information

Marissa Burgermaster, Department of Biomedical Informatics, Columbia University Medical Center, 622 West 168th Street, PH-20, New York, NY 10032, Phone: 212-305-4190.

Heewon Lee Gray, Program in Nutrition, Department of Health and Behavior Studies, Teachers College Columbia University, 525 West 120th Street, Box 137, New York, NY 10027.

Elizabeth Tipton, Applied Statistics, Department of Human Development, Teachers College Columbia University, 525 West 120th Street, Box 118, New York, NY 10027.

Isobel Contento, Program in Nutrition, Department of Health and Behavior Studies, Teachers College Columbia University, 525 West 120th Street, Box 137, New York, NY 10027.

Pamela Koch, Program in Nutrition, Department of Health and Behavior Studies, Teachers College Columbia University, 525 West 120th Street, Box 137, New York, NY 10027.

References

- Berkel C, Mauricio AM, Schoenfelder E, Sandler IN. Putting the pieces together: An integrated model of program implementation. Prevention Science. 2011;12(1):23–33. doi: 10.1007/s11121-010-0186-1.

- Christian MS, Evans CE, Ransley JK, Greenwood DC, Thomas JD, Cade JE. Process evaluation of a cluster randomised controlled trial of a school-based fruit and vegetable intervention: Project Tomato. Public Health Nutrition. 2012;15(03):459–465. doi: 10.1017/S1368980011001844.

- Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implementation Science. 2007;2(1):40. doi: 10.1186/1748-5908-2-40.

- Chen HT. The bottom up approach to integrative validity: A new perspective for program evaluation. Evaluation and Program Planning. 2010;33:205–214. doi: 10.1016/j.evalprogplan.2009.10.002.

- Cohen J, Cohen P, West SG, Aiken LS. Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences. 3rd ed. Mahwah, NJ: Lawrence Erlbaum; 2002.

- Contento IR. Nutrition Education: Linking Research, Theory, and Practice. 3rd ed. Burlington, MA: Jones & Bartlett Learning; 2015.

- Contento I, Koch P, Gray HL. Reducing Childhood Obesity: An Innovative Curriculum With Wellness Policy Support. Journal of Nutrition Education and Behavior. 2015;47(4):S98. doi: 10.1016/j.jneb.2018.12.001.

- Contento IR, Koch PA, Lee H, Calabrese-Barton A. Adolescents demonstrate improvement in obesity risk behaviors after completion of Choice, Control & Change, a curriculum addressing personal agency and autonomous motivation. Journal of the American Dietetic Association. 2010;110(12):1830–1839. doi: 10.1016/j.jada.2010.09.015.

- Cote AT, Harris KC, Panagiotopoulos C, Sandor GG, Devlin AM. Childhood obesity and cardiovascular dysfunction. Journal of the American College of Cardiology. 2013;62:1309–19. doi: 10.1016/j.jacc.2013.07.042.

- de Onis M, Blossner M, Borghi E. Global prevalence and trends of overweight and obesity among preschool children. American Journal of Clinical Nutrition. 2010;92:1257–1264. doi: 10.3945/ajcn.2010.29786.

- Durlak J, DuPre E. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41:327–350. doi: 10.1007/s10464-008-9165-0.

- Dusenbury L, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Education Research. 2003;18(2):237–256. doi: 10.1093/her/18.2.237.

- Fisher JO, Johnson RK, Lindquist C, Birch LL, Goran MI. Influence of body composition on the accuracy of reported energy intake in children. Obesity Research. 2000;8:597–603. doi: 10.1038/oby.2000.77.

- Gray HL, Burgermaster M, Tipton E, Contento IR, Koch PA, Di Noia J. Intraclass correlation coefficients for obesity indicators and energy balance-related behaviors among New York City public elementary schools. Health Education & Behavior. 2015;43(2):172–181. doi: 10.1177/1090198115598987.

- Gray HL, Contento IR, Koch PA. Linking implementation process to intervention outcomes in a middle school obesity prevention curriculum, Choice, Control and Change. Health Education Research. 2015;30(2):248–261. doi: 10.1093/her/cyv005.

- Gray HL, Koch PA, Contento IR, Bandelli LN, Ang I, Di Noia J. Validity and reliability of behavior and theory-based psychosocial determinants measures, using audience response system technology in urban upper-elementary schoolchildren. Journal of Nutrition Education and Behavior. 2016;48(7):437–452. doi: 10.1016/j.jneb.2016.03.018.

- Greaney ML, Hardwick CK, Spadano-Gasbaro JL, Mezgebu S, Horan CM, Schlotterbeck S, …Peterson KE. Implementing a multicomponent school-based obesity prevention intervention: A qualitative study. Journal of Nutrition Education and Behavior. 2014;46:576–582. doi: 10.1016/j.jneb.2014.04.293.

- Griffin TL, Pallan MJ, Clarke JL, Lancashire ER, Lyon A, Parry JM, Adab P. Process evaluation design in a cluster randomised controlled childhood obesity prevention trial: the WAVES study. International Journal of Behavioral Nutrition and Physical Activity. 2014;11:112. doi: 10.1186/s12966-014-0112-1.

- Herouvil D, Karanasios D, Karayianni C, Karavanaki K. Review: Cardiovascular disease in childhood: The role of obesity. European Journal of Pediatrics. 2013;172:721–732. doi: 10.1007/s00431-013-1932-8.

- IBM Corp. IBM SPSS Statistics for Macintosh, Version 22.0. Armonk, NY: IBM Corp.; 2013.

- Kam CM, Greenberg MT, Walls CT. Examining the role of implementation quality in school-based prevention using the PATHS curriculum. Prevention Science. 2003;4(1):55–63. doi: 10.1023/a:1021786811186.

- Koth CW, Bradshaw CP, Leaf PJ. A multilevel study of predictors of student perceptions of school climate: The effect of classroom-level factors. Journal of Educational Psychology. 2008;100(1):96–104.

- Kuczmarski RJ, Ogden CL, Grummer-Strawn M, Flegal KM, Guo SS, Wei R, Johnson CL. CDC growth charts: United States. Advance Data. 2000;314:1–27.

- Lee H, Contento IR, Koch P. Using a systematic conceptual model for a process evaluation of a middle school obesity risk-reduction nutrition curriculum intervention: Choice, Control & Change. Journal of Nutrition Education and Behavior. 2013;45:126–136. doi: 10.1016/j.jneb.2012.07.002.

- Little RJA. A Test of Missing Completely at Random for Multivariate Data with Missing Values. Journal of the American Statistical Association. 1988;83(404):1198–1202.

- Martens M, van Assema P, Paulussen T, Schaalma H, Brug J. Krachtvoer: process evaluation of a Dutch programme for lower vocational schools to promote healthful diet. Health Education Research. 2006;21(5):695–704. doi: 10.1093/her/cyl082.

- Ogden CL, Carroll MD. Prevalence of obesity among children and adolescents: United States, trends 1963–1965 through 2007–2008. Atlanta, GA: US Department of Health and Human Services, CDC, National Center for Health Statistics; 2010. Retrieved from: http://www.cdc.gov/nchs/data/hestat/obesity_child_07_08/obesity_child_07_08.pdf.

- Paulson DS. Handbook of Regression and Modeling: Applications for the Clinical and Pharmaceutical Industries. Boca Raton, FL: Chapman and Hall/CRC; 2006.

- Peets K, Pöyhönen V, Juvonen J, Salmivalli C. Classroom norms of bullying alter the degree to which children defend in response to their affective empathy and power. Developmental Psychology. 2015;51(7):913. doi: 10.1037/a0039287.

- Pettigrew J, Graham JW, Miller-Day M, Hecht ML, Krieger JL, Shin YJ. Adherence and delivery: Implementation quality and program outcomes for the seventh-grade keepin’ it REAL program. Prevention Science. 2015;16(1):90–99. doi: 10.1007/s11121-014-0459-1.

- Pratt CA, Arteaga S, Loria C. Forging a future of better cardiovascular health: Addressing childhood obesity. Journal of the American College of Cardiology. 2014;63:369–371. doi: 10.1016/j.jacc.2013.07.088.

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Hensley M. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- Raudenbush SW, Bryk AS. Hierarchical Linear Models: Applications and Data Analysis Methods. 2nd ed. Thousand Oaks, CA: Sage; 2002.

- Raudenbush SW, Bryk AS, Cheong AS, Fai YF, Congdon RT, du Toit M. HLM 7: Hierarchical Linear and Nonlinear Modeling. Lincolnwood, IL: Scientific Software International; 2011.

- Rohrbach LA, Dent CW, Skara S, Sun P, Sussman S. Fidelity of implementation in Project Towards No Drug Abuse (TND): A comparison of classroom teachers and program specialists. Prevention Science. 2007;8(2):125–132. doi: 10.1007/s11121-006-0056-z.

- Sánchez V, Steckler A, Nitirat P, Hallfors D, Cho H, Brodish P. Fidelity of implementation in a treatment effectiveness trial of Reconnecting Youth. Health Education Research. 2007;22:95–107. doi: 10.1093/her/cyl052.

- Saunders RP, Ward D, Felton GM, Dowda M, Pate RR. Examining the link between program implementation and behavior outcomes in the lifestyle education for activity program (LEAP). Evaluation and Program Planning. 2006;29(4):352–364. doi: 10.1016/j.evalprogplan.2006.08.006.

- Schmied E, Parada H, Horton L, Ibarra L, Ayala G. A process evaluation of an efficacious family-based intervention to promote healthy eating: the Entre Familia: Reflejos de Salud study. Health Education and Behavior. 2015;42:583–592. doi: 10.1177/1090198115577375.

- Schneider M, Hall WJ, Hernandez AE, Hindes K, Montez G, Pham T, Zeveloff A. Rationale, design and methods for process evaluation in the HEALTHY study. International Journal of Obesity. 2009;33:S60–S67. doi: 10.1038/ijo.2009.118.

- Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-experimental Designs for Generalized Causal Inference. 2nd ed. Independence, KY: Cengage; 2001.

- Singh AS, Chinapaw MJM, Brug J, Van Mechelen W. Process evaluation of a school-based weight gain prevention program: the Dutch Obesity Intervention in Teenagers (DOiT). Health Education Research. 2009. doi: 10.1093/her/cyp011.

- Steckler A, Ethelbah B, Martin CJ, Stewart D, Pardilla M, Gittelsohn J, Vu M. Pathways process evaluation results: a school-based prevention trial to promote healthful diet and physical activity in American Indian third, fourth, and fifth grade students. Preventive Medicine. 2003;37:S80–S90. doi: 10.1016/j.ypmed.2003.08.002.

- van Stralen MM, de Meij J, Te Velde SJ, Van der Wal MF, Van Mechelen W, Knol DL, Chinapaw MJ. Mediators of the effect of the JUMP-in intervention on physical activity and sedentary behavior in Dutch primary schoolchildren from disadvantaged neighborhoods. International Journal of Behavioral Nutrition and Physical Activity. 2012;9(1):131. doi: 10.1186/1479-5868-9-131.

- Waters E, de Silva-Sanigorski A, Hall BJ, Brown T, Campbell KJ, Gao Y, Summerbell CD. Interventions for preventing obesity in children. Cochrane Database Systematic Reviews. 2011;12:CD001871. doi: 10.1002/14651858.CD001871.pub3.
