Abstract

Heart disease is one of the most common diseases in the world. The objective of this study is to aid the diagnosis of heart disease using a hybrid classification system based on the ReliefF and Rough Set (RFRS) method. The proposed system contains two subsystems: the RFRS feature selection system and a classification system with an ensemble classifier. The first system includes three stages: (i) data discretization, (ii) feature extraction using the ReliefF algorithm, and (iii) feature reduction using the heuristic Rough Set reduction algorithm that we developed. In the second system, an ensemble classifier is proposed based on the C4.5 classifier. The Statlog (Heart) dataset, obtained from the UCI database, was used for experiments. A maximum classification accuracy of 92.59% was achieved according to a jackknife cross-validation scheme. The results demonstrate that the performance of the proposed system is superior to the performances of previously reported classification techniques.

1. Introduction

Cardiovascular disease (CVD) is a primary cause of death. An estimated 17.5 million people died from CVD in 2012, representing 31% of all global deaths (http://www.who.int/mediacentre/factsheets/fs317/en/). In the United States, heart disease kills one person every 34 seconds [1].

Numerous factors are involved in the diagnosis of heart disease, which complicates a physician's task. To help physicians make quick decisions and minimize errors in diagnosis, classification systems enable physicians to rapidly examine medical data in considerable detail [2]. These systems are implemented by developing a model that can classify existing records using sample data. Various classification algorithms have been developed and used as classifiers to assist doctors in diagnosing heart disease patients.

The performances obtained using the Statlog (Heart) dataset [3] from the UCI machine learning database are compared in this context. Lee [4] proposed a novel supervised feature selection method based on the bounded sum of weighted fuzzy membership functions (BSWFM) and Euclidean distances and obtained an accuracy of 87.4%. Tomar and Agarwal [5] used the F-score feature selection method and the Least Square Twin Support Vector Machine (LSTSVM) to diagnose heart diseases, obtaining an average classification accuracy of 85.59%. Buscema et al. [6] used the Training with Input Selection and Testing (TWIST) algorithm to classify patterns, obtaining an accuracy of 84.14%. The Extreme Learning Machine (ELM) has also been used as a classifier, obtaining a reported classification accuracy of 87.5% [7]. The genetic algorithm with the Naïve Bayes classifier has been shown to have a classification accuracy of 85.87% [8]. Srinivas et al. [9] obtained an 83.70% classification accuracy using Naïve Bayes. Polat and Güneş [10] used the RBF kernel F-score feature selection method to detect heart disease. The LS-SVM classifier was used, obtaining a classification accuracy of 83.70%. In [11], the GA-AWAIS method was used for heart disease detection, with a classification accuracy of 87.43%. The Algebraic Sigmoid Method has also been proposed to classify heart disease, with a reported accuracy of 85.24% [12]. Wang et al. [13] used linear kernel SVM classifiers for heart disease detection and obtained an accuracy of 83.37%. In [14], three distance criteria were applied in simple AIS, and the accuracy obtained on the Statlog (Heart) dataset was 83.95%. In [15], a hybrid neural network method was proposed, and the reported accuracy was 86.8%. Yan et al. [16] achieved an 83.75% classification accuracy using ICA and SVM classifiers. Şahan et al. [17] proposed a new artificial immune system named the Attribute Weighted Artificial Immune System (AWAIS) and obtained an accuracy of 82.59% using the k-fold cross-validation method. In [18], the k-NN, k-NN with Manhattan, feature space mapping (FSM), and separability split value (SSV) algorithms were used for heart disease detection, and the highest classification accuracy (85.6%) was obtained by k-NN.

From these works, it can be observed that feature selection methods can effectively increase the performance of single classifier algorithms in diagnosing heart disease [19]. Noisy features and dependency relationships in the heart disease dataset can influence the diagnosis process. Typically, there are numerous records of accompanied syndromes in the original datasets as well as a large number of redundant symptoms. Consequently, it is necessary to reduce the dimensions of the original feature set by a feature selection method that can remove the irrelevant and redundant features.

ReliefF is one of the most popular and successful feature estimation algorithms. It can accurately estimate the quality of features with strong dependencies and is not affected by their relations [20]. There are two advantages to using the ReliefF algorithm: (i) it follows the filter approach and does not employ domain specific knowledge to set feature weights [21, 22], and (ii) it is a feature weighting (FW) engineering technique. ReliefF assigns a weight to each feature that represents the usefulness of that feature for distinguishing pattern classes. First, the weight vector can be used to improve the performance of the lazy algorithms [21]. Furthermore, the weight vector can also be used as a method for ranking features to guide the search for the best subset of features [22–26]. The ReliefF algorithm has proved its usefulness in FS [20, 23], feature ranking [27], and building tree-based models [22], with an association rules-based classifier [28], in improving the efficiencies of the genetic algorithms [29] and with lazy classifiers [21].

ReliefF has excellent performance in both supervised and unsupervised learning. However, it does not help identify redundant features [30–32]. ReliefF algorithm estimates the quality of each feature according to its weight. When most of the given features are relevant to the concept, this algorithm will select most of them even though only some fraction is necessary for concept description [32]. Furthermore, the ReliefF algorithm does not attempt to determine the useful subsets of these weakly relevant features [33].

Redundant features increase dimensionality unnecessarily [34] and adversely affect learning performance when faced with shortage of data. It has also been empirically shown that removing redundant features can result in significant performance improvement [35]. Rough Set (RS) theory is a new mathematical approach to data analysis and data mining that has been applied successfully to many real-life problems in medicine, pharmacology, engineering, banking, financial and market analysis, and others [36]. The RS reduction algorithm can reduce all redundant features of datasets and seek the minimum subset of features to attain a satisfactory classification [37].

There are three advantages to combining ReliefF and RS (RFRS) approach as an integrated feature selection system for heart disease diagnosis.

(i) The RFRS method can remove superfluous and redundant features more effectively. The ReliefF algorithm can select relevant features for disease diagnosis; however, redundant features may still exist in the selected relevant features. In such cases, the RS reduction algorithm can remove remaining redundant features to offset this limitation of the ReliefF algorithm.

(ii) The RFRS method helps to accelerate the RS reduction process and guide the search of the reducts. Finding a minimal reduct of a given information system is an NP-hard problem, as was demonstrated in [38]. The complexity of computing all reducts in an information system is rather high [39]. On one hand, as a data preprocessing tool, the features revealed by the ReliefF method can accelerate the operation process by serving as the input for the RS reduction algorithm. On the other hand, the weight vector obtained by the ReliefF algorithm can act as a heuristic to guide the search for the reducts [25, 26], thus helping to improve the performance of the heuristic algorithm [21].

(iii) The RFRS method can reduce the number and improve the quality of reducts. Usually, more than one reduct exists in the dataset; and larger numbers of features result in larger numbers of reducts [40]. The number of reducts will decrease if superfluous features are removed using the ReliefF algorithm. When unnecessary features are removed, more important features can be extracted, which will also improve the quality of reducts.

It is obvious that the choice of an efficient feature selection method and an excellent classifier is extremely important for the heart disease diagnosis problem [41]. Most of the common classifiers from the machine learning community have been used for heart disease diagnosis. It is now recognized that no single model exists that is superior for all pattern recognition problems, and no single technique is applicable to all problems [42]. One solution to overcome the limitations of a single classifier is to use an ensemble model. An ensemble model is a multiclassifier combination model that results in more precise decisions because the same problem is solved by several different trained classifiers, which reduces the variance of error estimation [43]. In recent years, ensemble learning has been employed to increase classification accuracies beyond the level that can be achieved by individual classifiers [44, 45]. In this paper, we used an ensemble classifier to evaluate the feature selection model.

To improve the efficiency and effectiveness of the classification performance for the diagnosis of heart disease, we propose a hybrid classification system based on the ReliefF and RS (RFRS) approach in handling relevant and redundant features. The system contains two subsystems: the RFRS feature selection subsystem and a classification subsystem. In the RFRS feature selection subsystem, we use a two-stage hybrid modeling procedure by integrating ReliefF with the RS (RFRS) method. First, the proposed method adopts the ReliefF algorithm to obtain feature weights and select more relevant and important features from heart disease datasets. Then, the feature estimation obtained from the first phase is used as the input for the RS reduction algorithm and guide the initialization of the necessary parameters for the genetic algorithm. We use a GA-based search engine to find satisfactory reducts. In the classification subsystem, the resulting reducts serve as the input for the chosen classifiers. Finally, the optimal reduct and performance can be obtained.

To evaluate the performance of the proposed hybrid method, a confusion matrix, sensitivity, specificity, accuracy, and ROC were used. The experimental results show that the proposed method achieves very promising results using the jack knife test.

The main contributions of this paper are summarized as follows.

(i) We propose a feature selection system to integrate the ReliefF approach with the RS method (RFRS) to detect heart disease in an efficient and effective way. The idea is to use the feature estimation from the ReliefF phase as the input and heuristics for the RS reduction phase.

(ii) In the classification system, we propose an ensemble classifier using C4.5 as the base classifier. Ensemble learning can achieve better performance at the cost of computation than single classifiers. The experimental results show that the ensemble classifier in this paper is superior to three common classifiers.

(iii) Compared with three classifiers and previous studies, the proposed diagnostic system achieved excellent classification results. On the Statlog (Heart) dataset from the UCI machine learning database [3], the resulting classification accuracy was 92.59%, which is higher than that achieved by other studies.

The rest of the paper is organized as follows. Section 2 offers brief background information concerning the ReliefF algorithm and RS theory. The details of the diagnosis system implementation are presented in Section 3. Section 4 describes the experimental results and discusses the proposed method. Finally, conclusions and recommendations for future work are summarized in Section 5.

2. Theoretical Background

2.1. Basic Concepts of Rough Set Theory

Rough Set (RS) theory, which was proposed by Pawlak, in the early 1980s, is a new mathematical approach to addressing vagueness and uncertainty [46]. RS theory has been applied in many domains, including classification system analysis, pattern reorganization, and data mining [47]. RS-based classification algorithms are based on equivalence relations and have been used as classifiers in medical diagnosis [37, 46]. In this paper, we primarily focus on the RS reduction algorithm, which can reduce all redundant features of datasets and seek the minimum subset of features necessary to attain a satisfactory classification [37]. A few basic concepts of RS theory are defined [46, 47] as follows.

Definition 1 . —

U is a certain set that is referred to as the universe; R is an equivalence relation in U. The pair A = (U, R) is referred to as an approximation space.

Definition 2 . —

P ⊂ R, ∩P (the intersection of all equivalence relations in P) is an equivalence relation, which is referred to as the R-indiscernibility relation, and it is represented by Ind(R).

Definition 3 . —

Let X be a certain subset of U. The least composed set in R that contains X is referred to as the best upper approximation of X in R and represented by R −(X); the greatest composed set in R contained in X is referred to as the best lower approximation of X in R, and it is represented by R −(X).

(1)

Definition 4 . —

An information system is denoted as

(2) where U is the universe that consists of a finite set of n objects, A = {C ∪ D}, in which C is a set of condition attributes and D is a set of decision attributes, V is the set of domains of attributes, and F is the information function for each a ∈ A, x ∈ U, F(x, a) ∈ V a.

Definition 5 . —

In an information system, C and D are sets of attributes in U. X ∈ U/ind(Q), and posp(Q), which is referred to as a positive region, is defined as

(3)

Definition 6 . —

P and Q are sets of attributes in U, P, Q⊆A, and the dependency r p(Q) is defined as

(4) Card (X) denotes the cardinality of X. 0 ≤ r p(Q) ≤ 1.

Definition 7 . —

P and Q are sets of attributes in U, P, Q⊆A, and the significance of a i is defined as

(5)

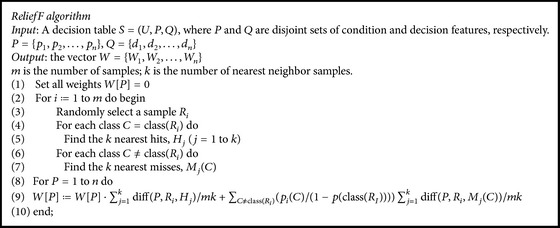

2.2. ReliefF Algorithm

Many feature selection algorithms have been developed; ReliefF is one of the most widely used and effective algorithms [48]. ReliefF is a simple yet efficient procedure for estimating the quality of features in problems with dependencies between features [20]. The pseudocode of ReliefF algorithm is listed in Algorithm 1.

Algorithm 1.

Pseudocode of ReliefF.

3. Proposed System

3.1. Overview

The proposed hybrid classification system consists of two main components: (i) feature selection using the RFRS subsystem and (ii) data classification using the classification system. A flow chart of the proposed system is shown in Figure 1. We describe the preprocessing and classification systems in the following subsections.

Figure 1.

Structure of RFRS-based classification system.

3.2. RFRS Feature Selection Subsystem

We propose a two-phase feature selection method based on the ReliefF algorithm and the RS (RFRS) algorithm. The idea is to use the feature estimation from the ReliefF phase as the input and heuristics for the subsequent RS reduction phase. In the first phase, we adopt the ReliefF algorithm to obtain feature weights and select important features; in the second phase, the feature estimation obtained from the first phase is used to guide the initialization of the parameters required for the genetic algorithm. We use a GA-based search engine to find satisfactory reducts.

The RFRS feature selection subsystem consists of three main modules: (i) data discretization, (ii) feature extraction using the ReliefF algorithm, and (iii) feature reduction using the heuristic RS reduction algorithm we propose.

3.2.1. Data Discretization

RS reduction requires categorical data. Consequently, data discretization is the first step. We used an approximate equal interval binning method to bin the data variables into a small number of categories.

3.2.2. Feature Extraction by the ReliefF Algorithm

Module 2 is used for feature extraction by the ReliefF algorithm. To deal with incomplete data, we change the diff function. Missing feature values are treated probabilistically [20]. We calculate the probability that two given instances have different values for a given feature conditioned over the class value [20]. When one instance has an unknown value, then

| (6) |

When both instances have unknown values, then

| (7) |

Conditional probabilities are approximated by relative frequencies in the training set. The process of feature extraction is shown as follows.

The Process of Feature Extraction Using ReliefF Algorithm

Input. A decision table S = (U, P, Q), P = {a 1, a 2,…, a m}, Q = {d 1, d 2,…, d n} (m ≥ 1, n ≥ 1).

Output. The selected feature subset K = {a 1, a 2,…, a k}(1 ≤ k ≤ m).

Step 1. Obtain the weight matrix of each feature using ReliefF algorithmW = {w 1, w 2,…, w i,…, w m} (1 ≤ i ≤ m).

Step 2. Set a threshold, δ.

Step 3. If w i > δ, then feature a i is selected.

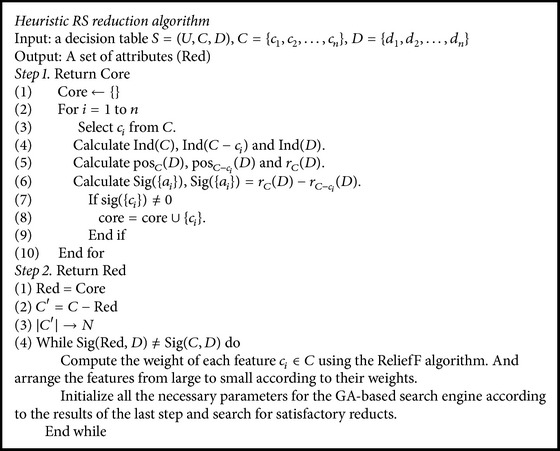

3.2.3. Feature Reduction by the Heuristic RS Reduction Algorithm

The evaluation result obtained by the ReliefF algorithm is the feature rank. A higher ranking means that the feature has stronger distinguishing qualities and a higher weight [30]. Consequently, in the process of reduct searching, the features in the front rank should have a higher probability of being selected.

We proposed the RS reduction algorithm by using the feature estimation as heuristics and a GA-based search engine to search for the satisfactory reducts. The pseudocode of the algorithm is provided in Algorithm 2. The algorithm was implemented in MATLAB R2014a.

Algorithm 2.

Pseudocode of heuristic RS reduction algorithm.

3.3. Classification Subsystem

In the classification subsystem, the dataset is split into training sets and corresponding test sets. The decision tree is a nonparametric learning algorithm that does not need to search for optimal parameters in the training stage and thus is used as a weak learner for ensemble learning [49]. In this paper, the ensemble classifier uses the C4.5 decision tree as the base classifier. We use the boosting technique to construct ensemble classifiers. Jackknife cross-validation is used to increase the amount of data for testing the results. The optimal reduct is the reduct that obtains the best classification accuracy.

4. Experimental Results

4.1. Dataset

The Statlog (Heart) dataset used in our work was obtained from the UCI machine learning database [3]. This dataset contains 270 observations and 2 classes: the presence and absence of heart disease. The samples include 13 condition features, presented in Table 1. We denote the 13 features as C 1 to C 13.

Table 1.

Feature information of Statlog (Heart) dataset.

| Feature | Code | Description | Domain | Data type | Mean | Standard deviation |

|---|---|---|---|---|---|---|

| Age | C 1 | — | 29–77 | Real | 54 | 9 |

| Sex | C 2 | Male, female | 0, 1 | Binary | — | — |

| Chest pain type | C 3 | Angina, asymptomatic, abnormal | 1, 2, 3, 4 | Nominal | — | — |

| Resting blood pressure | C 4 | — | 94–200 | Real | 131.344 | 17.862 |

| Serum cholesterol in mg/dl | C 5 | — | 126–564 | Real | 249.659 | 51.686 |

| Fasting blood sugar > 120 mg/dl | C 6 | — | 0, 1 | Binary | — | — |

| Resting electrocardiographic results | C 7 | Norm, abnormal, hyper | 0, 1, 2 | Nominal | — | — |

| Maximum heart rate achieved | C 8 | — | 71–202 | Real | 149.678 | 23.1666 |

| Exercise-induced angina | C 9 | — | 0, 1 | Binary | — | — |

| Old peak = ST depression induced by exercise relative to rest | C 10 | — | 0–6.2 | Real | 1.05 | 1.145 |

| Slope of the peak exercise ST segment | C 11 | Up, flat, down | 1, 2, 3 | Ordered | — | — |

| Number of major vessels (0–3) colored by fluoroscopy | C 12 | — | 0, 1, 2, 3 | Real | — | — |

| Thal | C 13 | Normal, fixed defect, reversible defect | 3, 6, 7 | Nominal | — | — |

4.2. Performance Evaluation Methods

4.2.1. Confusion Matrix, Sensitivity, Specificity, and Accuracy

A confusion matrix [50] contains information about actual and predicted classifications performed by a classification system. The performance of such systems is commonly evaluated using the data in the matrix. Table 2 shows the confusion matrix for a two-class classifier.

Table 2.

The confusion matrix.

| Predicted patients with heart disease | Predicted healthy persons | |

|---|---|---|

| Actual patients with heart disease | True positive (TP) | False negative (FN) |

| Actual healthy persons | False positive (FP) | True negative (TN) |

In the confusion matrix, TP is the number of true positives, representing the cases with heart disease that are correctly classified into the heart disease class. FN is the number of false negatives, representing cases with heart disease that are classified into the healthy class. TN is the number of true negatives, representing healthy cases that are correctly classified into the healthy class. Finally, FP is the number of false positives, representing the healthy cases that are incorrectly classified into the heart disease class [50].

The performance of the proposed system was evaluated based on sensitivity, specificity, and accuracy tests, which use the true positive (TP), true negative (TN), false negative (FN), and false positive (FP) terms [33]. These criteria are calculated as follows [41]:

| (8) |

4.2.2. Cross-Validation

Three cross-validation methods, namely, subsampling tests, independent dataset tests, and jackknife tests, are often employed to evaluate the predictive capability of a predictor [51]. Among the three methods, the jackknife test is deemed the least arbitrary and the most objective and rigorous [52, 53] because it always yields a unique outcome, as demonstrated by a penetrating analysis in a recent comprehensive review [54, 55]. Therefore, the jackknife test has been widely and increasingly adopted in many areas [56, 57].

Accordingly, the jackknife test was employed to examine the performance of the model proposed in this paper. For jackknife cross-validation, each sequence in the training dataset is, in turn, singled out as an independent test sample and all the parameter rules are calculated based on the remaining samples, without including the one being treated as the test sample.

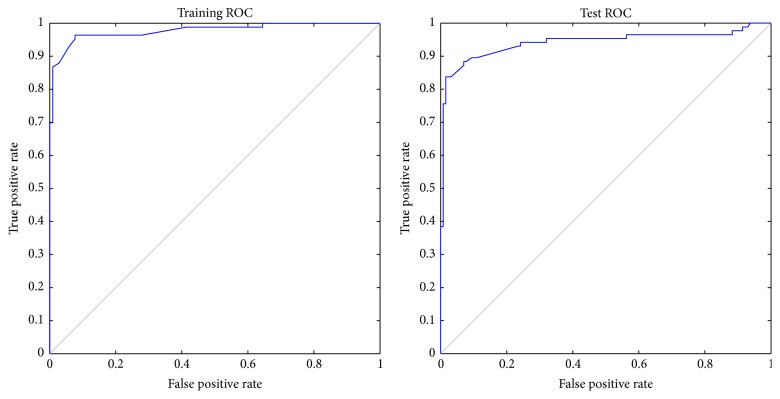

4.2.3. Receiver Operating Characteristics (ROC)

The receiver operating characteristic (ROC) curve is used for analyzing the prediction performance of a predictor [58]. It is usually plotted using the true positive rate versus the false positive rate, as the discrimination threshold of classification algorithm is varied. The area under the ROC curve (AUC) is widely used and relatively accepted in classification studies because it provides a good summary of a classifier's performance [59].

4.3. Results and Discussion

4.3.1. Results and Analysis on the Statlog (Heart) Dataset

First, we used the equal interval binning method to discretize the original data. In the feature extraction module, the number of k-nearest neighbors in the ReliefF algorithm was set to 10, and the threshold, δ, was set to 0.02. Table 3 summarizes the results of the ReliefF algorithm. Based on these results, C 5 and C 6 were removed. In Module 3, we obtained 15 reducts using the heuristic RS reduction algorithm implemented in MATLAB 2014a.

Table 3.

Results of the ReliefF algorithm.

| Feature | C 2 | C 13 | C 7 | C 12 | C 9 | C 3 | C 11 | C 10 | C 8 | C 4 | C 1 | C 6 | C 5 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Weight | 0.172 | 0.147 | 0.126 | 0.122 | 0.106 | 0.098 | 0.057 | 0.046 | 0.042 | 0.032 | 0.028 | 0.014 | 0.011 |

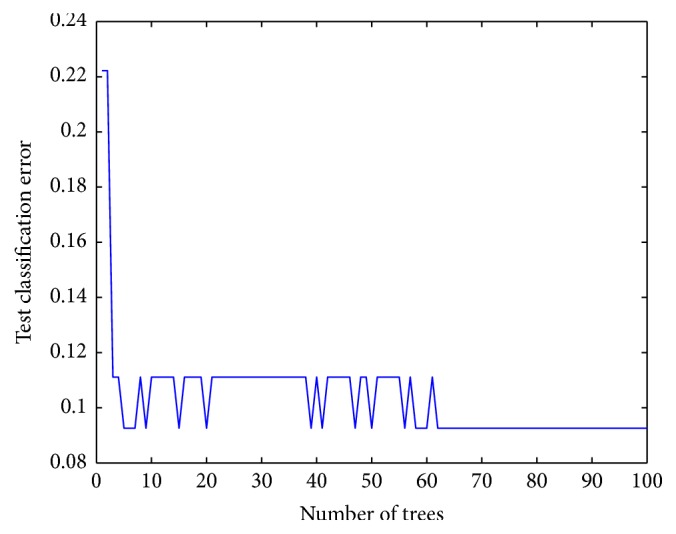

Trials were conducted using 70%–30% training-test partitions, using all the reduced feature sets. Jackknife cross-validation was performed on the dataset. The number of desired base classifiers k was set to 50, 100, and 150. The calculations were run 10 times, and the highest classification performances for each training-test partition are provided in Table 4.

Table 4.

Performance values for different reduced subset.

| Code | Reduct | Number | Test classification accuracy (%) | |||

|---|---|---|---|---|---|---|

| Ensemble classifier | ||||||

| K | Sn | Sp | ACC | |||

| R 1 | C 3, C 4, C 7, C 8, C 10, C 12, C 13 | 7 | 50 | 83.33 | 87.5 | 85.19 |

| 100 | 83.33 | 95.83 | 88.89 | |||

| 150 | 86.67 | 83.33 | 85.19 | |||

| R 2 | C 1, C 3, C 7, C 8, C 11, C 12, C 13 | 7 | 50 | 86.67 | 91.67 | 88.89 |

| 100 | 93.33 | 87.50 | 92.59 | |||

| 150 | 93.33 | 87.04 | 90.74 | |||

| R 3 | C 1, C 2, C 4, C 7, C 8, C 9, C 12 | 7 | 50 | 86.67 | 83.33 | 85.19 |

| 100 | 93.33 | 79.17 | 87.04 | |||

| 150 | 80 | 91.67 | 85.19 | |||

| R 4 | C 1, C 4, C 7, C 8, C 10, C 11, C 12, C 13 | 8 | 50 | 86.67 | 83.33 | 85.19 |

| 100 | 93.33 | 83.33 | 88.89 | |||

| 150 | 86.67 | 87.5 | 87.04 | |||

In Table 4, R 2 obtains the best test set classification accuracy (92.59%) using the ensemble classifiers when k = 100. The training process is shown in Figure 2. The training and test ROC curves are shown in Figure 3.

Figure 2.

Training process of R 7.

Figure 3.

ROC curves for training and test sets.

4.3.2. Comparison with Other Classifiers

In this section, our ensemble classification method is compared with the individual C4.5 decision tree and Naïve Bayes and Bayesian Neural Networks (BNN) methods. The C4.5 decision tree and Naïve Bayes are common classifiers. Bayesian Neural Networks (BNN) is a classifier that uses Bayesian regularization to train feed-forward neural networks [60] and has better performance than pure neural networks. The classification accuracy results of the four classifiers are listed in Table 5. The ensemble classification method has better performance than the individual C4.5 classifier and the other two classifiers.

Table 5.

Classification results using the four classifiers.

| Classifiers | Test classification accuracy of R 2 (%) | ||

|---|---|---|---|

| Sn | Sp | Acc | |

| Ensemble classifier (k = 50) | 86.67 | 91.67 | 88.89 |

| Ensemble classifier (k = 100) | 93.33 | 87.50 | 92.59 |

| Ensemble classifier (k = 150) | 93.33 | 87.04 | 90.74 |

| C4.5 tree | 93.1 | 80 | 87.03 |

| Naïve Bayes | 93.75 | 68.18 | 83.33 |

| Bayesian Neural Networks (BNN) | 93.75 | 72.72 | 85.19 |

4.3.3. Comparison of the Results with Other Studies

We compared our results with the results of other studies. Table 6 shows the classification accuracies of our study and previous methods.

Table 6.

Comparison of our results with those of other studies.

| Author | Method | Classification accuracy (%) |

|---|---|---|

| Our study | RFRS classification system | 92.59 |

| Lee [4] | Graphical characteristics of BSWFM combined with Euclidean distance | 87.4 |

| Tomar and Agarwal [5] | Feature selection-based LSTSVM | 85.59 |

| Buscema et al. [6] | TWIST algorithm | 84.14 |

| Subbulakshmi et al. [7] | ELM | 87.5 |

| Karegowda et al. [8] | GA + Naïve Bayes | 85.87 |

| Srinivas et al. [9] | Naïve Bayes | 83.70 |

| Polat and Güneş [10] | RBF kernel F-score + LS-SVM | 83.70 |

| Özşen and Güneş [11] | GA-AWAIS | 87.43 |

| Helmy and Rasheed [12] | Algebraic Sigmoid | 85.24 |

| Wang et al. [13] | Linear kernel SVM classifiers | 83.37 |

| Özşen and Güneş [14] | Hybrid similarity measure | 83.95 |

| Kahramanli and Allahverdi [15] | Hybrid neural network method | 86.8 |

| Yan et al. [16] | ICA + SVM | 83.75 |

| Şahan et al. [17] | AWAIS | 82.59 |

| Duch et al. [18] | KNN classifier | 85.6 |

BSWFM: bounded sum of weighted fuzzy membership functions; LSTSVM: Least Square Twin Support Vector Machine; TWIST: Training with Input Selection and Testing; ELM: Extreme Learning Machine; GA: genetic algorithm; SVM: support vector machine; ICA: imperialist competitive algorithm; AWAIS: attribute weighted artificial immune system; KNN: k-nearest neighbor.

The results show that our proposed method obtains superior and promising results in classifying heart disease patients. We believe that the proposed RFRS-based classification system can be exceedingly beneficial in assisting physicians in making accurate decisions.

5. Conclusions and Future Work

In this paper, a novel ReliefF and Rough Set- (RFRS-) based classification system is proposed for heart disease diagnosis. The main novelty of this paper lies in the proposed approach: the combination of the ReliefF and RS methods to classify heart disease problems in an efficient and fast manner. The RFRS classification system consists of two subsystems: the RFRS feature selection subsystem and the classification subsystem. The Statlog (Heart) dataset from the UCI machine learning database [3] was selected to test the system. The experimental results show that the reduct R 2 (C 1, C 3, C 7, C 8, C 11, C 12, C 13) achieves the highest classification accuracy (92.59%) using an ensemble classifier with the C4.5 decision tree as the weak learner. The results also show that the RFRS method has superior performance compared to three common classifiers in terms of ACC, sensitivity, and specificity. In addition, the performance of the proposed system is superior to that of existing methods in the literature. Based on empirical analysis, the results indicate that the proposed classification system can be used as a promising alternative tool in medical decision making for heart disease diagnosis.

However, the proposed method also has some weaknesses. The number of the nearest neighbors (k) and the weight threshold (θ) are not stable in the ReliefF algorithm [20]. One solution to this problem is to compute estimates for all possible numbers and take the highest estimate of each feature as the final result [20]. We need to perform more experiments to find the optimal parameter values for the ReliefF algorithm in the future.

Acknowledgments

This study was supported by the National Natural Science Foundation of China (Grant no. 71432007).

Competing Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1.Kochanek K. D., Xu J., Murphy S. L., Miniño A. M., Kung H.-C. Deaths: final data for 2009. National Vital Statistics Reports. 2011;60(3):1–116. [PubMed] [Google Scholar]

- 2.Temurtas H., Yumusak N., Temurtas F. A comparative study on diabetes disease diagnosis using neural networks. Expert Systems with Applications. 2009;36(4):8610–8615. doi: 10.1016/j.eswa.2008.10.032. [DOI] [Google Scholar]

- 3. UCI Repository of Machine Learning Databases, http://archive.ics.uci.edu/ml/datasets/Statlog+%28Heart%29.

- 4.Lee S.-H. Feature selection based on the center of gravity of BSWFMs using NEWFM. Engineering Applications of Artificial Intelligence. 2015;45:482–487. doi: 10.1016/j.engappai.2015.08.003. [DOI] [Google Scholar]

- 5.Tomar D., Agarwal S. Feature selection based least square twin support vector machine for diagnosis of heart disease. International Journal of Bio-Science and Bio-Technology. 2014;6(2):69–82. doi: 10.14257/ijbsbt.2014.6.2.07. [DOI] [Google Scholar]

- 6.Buscema M., Breda M., Lodwick W. Training with Input Selection and Testing (TWIST) algorithm: a significant advance in pattern recognition performance of machine learning. Journal of Intelligent Learning Systems and Applications. 2013;5(1):29–38. doi: 10.4236/jilsa.2013.51004. [DOI] [Google Scholar]

- 7.Subbulakshmi C. V., Deepa S. N., Malathi N. Extreme learning machine for two category data classification. Proceedings of the IEEE International Conference on Advanced Communication Control and Computing Technologies (ICACCCT '12); August 2012; Ramanathapuram, India. pp. 458–461. [DOI] [Google Scholar]

- 8.Karegowda A. G., Manjunath A. S., Jayaram M. A. Feature subset selection problem using wrapper approach in supervised learning. International Journal of Computer Applications. 2010;1(7):13–17. doi: 10.5120/169-295. [DOI] [Google Scholar]

- 9.Srinivas K., Rani B. K., Govrdhan A. Applications of data mining techniques in healthcare and prediction of heart attacks. International Journal on Computer Science and Engineering. 2010;2:250–255. [Google Scholar]

- 10.Polat K., Güneş S. A new feature selection method on classification of medical datasets: kernel F-score feature selection. Expert Systems with Applications. 2009;36(7):10367–10373. doi: 10.1016/j.eswa.2009.01.041. [DOI] [Google Scholar]

- 11.Özşen S., Güneş S. Attribute weighting via genetic algorithms for attribute weighted artificial immune system (AWAIS) and its application to heart disease and liver disorders problems. Expert Systems with Applications. 2009;36(1):386–392. doi: 10.1016/j.eswa.2007.09.063. [DOI] [Google Scholar]

- 12.Helmy T., Rasheed Z. Multi-category bioinformatics dataset classification using extreme learning machine. Proceedings of the IEEE Congress on Evolutionary Computation (CEC '09); May 2009; Trondheim, Norway. pp. 3234–3240. [DOI] [Google Scholar]

- 13.Wang S.-J., Mathew A., Chen Y., Xi L.-F., Ma L., Lee J. Empirical analysis of support vector machine ensemble classifiers. Expert Systems with Applications. 2009;36(3):6466–6476. doi: 10.1016/j.eswa.2008.07.041. [DOI] [Google Scholar]

- 14.Özşen S., Güneş S. Effect of feature-type in selecting distance measure for an artificial immune system as a pattern recognizer. Digital Signal Processing. 2008;18(4):635–645. doi: 10.1016/j.dsp.2007.08.004. [DOI] [Google Scholar]

- 15.Kahramanli H., Allahverdi N. Design of a hybrid system for the diabetes and heart diseases. Expert Systems with Applications. 2008;35(1-2):82–89. doi: 10.1016/j.eswa.2007.06.004. [DOI] [Google Scholar]

- 16.Yan G., Ma G., Lv J., Song B. Combining independent component analysis with support vector machines. Proceedings of the in 1st International Symposium on Systems and Control in Aerospace and Astronautics (ISSCAA '06); January 2006; Harbin, China. pp. 493–496. [Google Scholar]

- 17.Şahan S., Polat K., Kodaz H., Günes S. The medical applications of attribute weighted artificial immune system (AWAIS): diagnosis of heart and diabetes diseases. Artificial Immune Systems. 2005;3627:456–468. [Google Scholar]

- 18.Duch W., Adamczak R., Grabczewski K. A new methodology of extraction, optimization and application of crisp and fuzzy logical rules. IEEE Transactions on Neural Networks. 2001;12(2):277–306. doi: 10.1109/72.914524. [DOI] [PubMed] [Google Scholar]

- 19.McRae M. P., Bozkurt B., Ballantyne C. M., et al. Cardiac ScoreCard: a diagnostic multivariate index assay system for predicting a spectrum of cardiovascular disease. Expert Systems with Applications. 2016;54:136–147. doi: 10.1016/j.eswa.2016.01.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Robnik-Šikonja M., Kononenko I. Theoretical and empirical analysis of ReliefF and RReliefF. Machine Learning. 2003;53(1-2):23–69. doi: 10.1023/a:1025667309714. [DOI] [Google Scholar]

- 21.Wettschereck D., Aha D. W., Mohri T. A review and empirical evaluation of feature weighting methods for a class of lazy learning algorithms. Artificial Intelligence Review. 1997;11(1–5):273–314. doi: 10.1023/a:1006593614256. [DOI] [Google Scholar]

- 22.Kononenko I., Šimec E., Šikonja M. R. Overcoming the myopia of inductive learning algorithms with RELIEFF. Applied Intelligence. 1997;7(1):39–55. doi: 10.1023/a:1008280620621. [DOI] [Google Scholar]

- 23.Kira K., Rendell L. A practical approach to feature selection. Proceedings of the International Conference on Machine Learning; 1992; Aberdeen, Scotland. Morgan Kaufmann; pp. 249–256. [Google Scholar]

- 24.Kononenko I. Machine Learning: ECML-94: European Conference on Machine Learning Catania, Italy, April 6–8, 1994 Proceedings. Vol. 784. Berlin, Germany: Springer; 1994. Estimating attributes: analysis and extension of ReliefF; pp. 171–182. (Lecture Notes in Computer Science). [DOI] [Google Scholar]

- 25.Ruiz R., Riquelme J. C., Aguilar-Ruiz J. S. Proceedings of 8th International Work-Conference on Artificial Neural Networks (IWANN '05), Barcelona, Spain, June 2005. Vol. 3512. Berlin, Germany: Springer; 2005. Heuristic search over a ranking for feature selection; pp. 742–749. (Lectures Notes in Computer Science). [Google Scholar]

- 26.Spolaôr N., Cherman E. A., Monard M. C., Lee H. D. Advances in Artificial Intelligence—SBIA 2012: 21th Brazilian Symposium on Artificial Intelligence, Curitiba, Brazil, October 20–25, 2012. Proceedings. Vol. 7589. Berlin, Germany: Springer; 2012. Filter approach feature selection methods to support multi-label learning based on ReliefF and information gain; pp. 72–81. (Lectures Notes in Computer Science). [DOI] [Google Scholar]

- 27.Ruiz R., Riquelme J. C., Aguilar-Ruiz J. S. Fast feature ranking algorithm. Proceedings of the Knowledge-Based Intelligent Information and Engineering Systems (KES '03); 2003; Berlin, Germany. Springer; pp. 325–331. [Google Scholar]

- 28.Jovanoski V., Lavrac N. Feature subset selection in association rules learning systems. Proceedings of Analysis, Warehousing and Mining the Data; 1999; pp. 74–77. [Google Scholar]

- 29.Liu J. J., Kwok J. T. Y. An extended genetic rule induction algorithm. Proceedings of the Congress on Evolutionary Computation; July 2000; LA Jolla, Calif, USA. pp. 458–463. [Google Scholar]

- 30.Zhang L.-X., Wang J.-X., Zhao Y.-N., Yang Z.-H. A novel hybrid feature selection algorithm: using ReliefF estimation for GA-Wrapper search. Proceedings of the International Conference on Machine Learning and Cybernetics; November 2003; Xi'an, China. IEEE; pp. 380–384. [Google Scholar]

- 31.Zhao Z., Wang L., Liu H. Efficient spectral feature selection with minimum redundancy. Proceedings of the 24th AAAI Conference on Artificial Intelligence; 2010; Atlanta, Ga, USA. AAAI; [Google Scholar]

- 32.Nie F., Huang H., Cai X., Ding C. H. Advances in Neural Information Processing Systems. MIT Press; 2010. Efficient and robust feature selection via joint ℓ 2,1-norms minimization; pp. 1813–1821. [Google Scholar]

- 33.Jiang S.-Y., Wang L.-X. Efficient feature selection based on correlation measure between continuous and discrete features. Information Processing Letters. 2016;116(2):203–215. doi: 10.1016/j.ipl.2015.07.005. [DOI] [Google Scholar]

- 34.Kearns M. J., Vazirani U. V. An Introduction to Computational Learning Theory. Cambridge, Mass, USA: MIT Press; 1994. [Google Scholar]

- 35.Bellman R. Adaptive Control Processes: A Guided Tour, RAND Corporation Research Studies. Princeton, NJ, USA: Princeton University Press; 1961. [Google Scholar]

- 36.Pawlak Z. Rough set theory and its applications to data analysis. Cybernetics and Systems. 1998;29(7):661–688. doi: 10.1080/019697298125470. [DOI] [Google Scholar]

- 37.Hassanien A.-E. Rough set approach for attribute reduction and rule generation: a case of patients with suspected breast cancer. Journal of the American Society for Information Science and Technology. 2004;55(11):954–962. doi: 10.1002/asi.20042. [DOI] [Google Scholar]

- 38.Skowron A., Rauszer C. The discernibility matrices and functions in information systems. In: Słowinski R., editor. Intelligent Decision Support-Handbook of Applications and Advances of the Rough Sets Theory, System Theory, Knowledge Engineering and Problem Solving. Vol. 11. Dordrecht, Netherlands: Kluwer Academic; 1992. pp. 331–362. [Google Scholar]

- 39.Pawlak Z. Rough set approach to knowledge-based decision support. European Journal of Operational Research. 1997;99(1):48–57. doi: 10.1016/S0377-2217(96)00382-7. [DOI] [Google Scholar]

- 40.Wang X., Yang J., Teng X., Xia W., Jensen R. Feature selection based on rough sets and particle swarm optimization. Pattern Recognition Letters. 2007;28(4):459–471. doi: 10.1016/j.patrec.2006.09.003. [DOI] [Google Scholar]

- 41.Ma C., Ouyang J., Chen H.-L., Zhao X.-H. An efficient diagnosis system for Parkinson's disease using kernel-based extreme learning machine with subtractive clustering features weighting approach. Computational and Mathematical Methods in Medicine. 2014;2014:14. doi: 10.1155/2014/985789.985789 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.El-Baz A. H. Hybrid intelligent system-based rough set and ensemble classifier for breast cancer diagnosis. Neural Computing and Applications. 2015;26(2):437–446. doi: 10.1007/s00521-014-1731-9. [DOI] [Google Scholar]

- 43.Eom J.-H., Kim S.-C., Zhang B.-T. AptaCDSS-E: a classifier ensemble-based clinical decision support system for cardiovascular disease level prediction. Expert Systems with Applications. 2008;34(4):2465–2479. doi: 10.1016/j.eswa.2007.04.015. [DOI] [Google Scholar]

- 44.Ma Y. Ensemble Machine Learning: Methods and Applications. New York, NY, USA: Springer; 2012. [DOI] [Google Scholar]

- 45.Etemad S. A., Arya A. Classification and translation of style and affect in human motion using RBF neural networks. Neurocomputing. 2014;129:585–595. doi: 10.1016/j.neucom.2013.09.001. [DOI] [Google Scholar]

- 46.Pawlak Z. Rough sets. International Journal of Computer & Information Sciences. 1982;11(5):341–356. doi: 10.1007/BF01001956. [DOI] [Google Scholar]

- 47.Pawlak Z. Rough sets and intelligent data analysis. Information Sciences. 2002;147(1–4):1–12. doi: 10.1016/s0020-0255(02)00197-4. [DOI] [Google Scholar]

- 48.Huang Y., McCullagh P. J., Black N. D. An optimization of ReliefF for classification in large datasets. Data and Knowledge Engineering. 2009;68(11):1348–1356. doi: 10.1016/j.datak.2009.07.011. [DOI] [Google Scholar]

- 49.Sun J., Jia M.-Y., Li H. AdaBoost ensemble for financial distress prediction: an empirical comparison with data from Chinese listed companies. Expert Systems with Applications. 2011;38(8):9305–9312. doi: 10.1016/j.eswa.2011.01.042. [DOI] [Google Scholar]

- 50.Kohavi R., Provost F. Glossary of terms. Machine Learning. 1998;30(2-3):271–274. [Google Scholar]

- 51.Chen W., Lin H. Prediction of midbody, centrosome and kinetochore proteins based on gene ontology information. Biochemical and Biophysical Research Communications. 2010;401(3):382–384. doi: 10.1016/j.bbrc.2010.09.061. [DOI] [PubMed] [Google Scholar]

- 52.Chou K.-C., Zhang C.-T. Prediction of protein structural classes. Critical Reviews in Biochemistry and Molecular Biology. 1995;30(4):275–349. doi: 10.3109/10409239509083488. [DOI] [PubMed] [Google Scholar]

- 53.Chen W., Lin H. Identification of voltage-gated potassium channel subfamilies from sequence information using support vector machine. Computers in Biology and Medicine. 2012;42(4):504–507. doi: 10.1016/j.compbiomed.2012.01.003. [DOI] [PubMed] [Google Scholar]

- 54.Chou K.-C., Shen H.-B. Recent progress in protein subcellular location prediction. Analytical Biochemistry. 2007;370(1):1–16. doi: 10.1016/j.ab.2007.07.006. [DOI] [PubMed] [Google Scholar]

- 55.Feng P., Lin H., Chen W., Zuo Y. Predicting the types of J-proteins using clustered amino acids. BioMed Research International. 2014;2014:8. doi: 10.1155/2014/935719.935719 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Chou K.-C., Shen H.-B. ProtIdent: a web server for identifying proteases and their types by fusing functional domain and sequential evolution information. Biochemical and Biophysical Research Communications. 2008;376(2):321–325. doi: 10.1016/j.bbrc.2008.08.125. [DOI] [PubMed] [Google Scholar]

- 57.Chou K.-C., Cai Y.-D. Prediction of membrane protein types by incorporating amphipathic effects. Journal of Chemical Information and Modeling. 2005;45(2):407–413. doi: 10.1021/ci049686v. [DOI] [PubMed] [Google Scholar]

- 58.Tom F. ROC graphs: notes and practical considerations for researchers. Machine Learning. 2004;31:1–38. [Google Scholar]

- 59.Huang J., Ling C. X. Using AUC and accuracy in evaluating learning algorithms. IEEE Transactions on Knowledge and Data Engineering. 2005;17(3):299–310. doi: 10.1109/TKDE.2005.50. [DOI] [Google Scholar]

- 60.Foresee F. D., Hagan M. T. Gauss-Newton approximation to Bayesian learning. Proceedings of the International Conference on Neural Networks; 1997; Houston, Tex, USA. pp. 1930–1935. [Google Scholar]