Abstract

Stimuli that are more predictive of subsequent reward also function as better conditioned reinforcers. Moreover, stimuli attributed with incentive salience function as more robust conditioned reinforcers. Some theories have suggested that conditioned reinforcement plays an important role in promoting suboptimal choice behavior, such as gambling. The present experiments examined how different stimuli, those attributed with incentive salience versus those without, can function in tandem with stimulus-reward predictive utility to promote maladaptive decision-making in rats. For one group of rats, the reward-predictive stimuli were lights, which are associated with goal-tracking; for another group, they were levers, which are associated with sign-tracking. All rats were first trained on a choice procedure in which the two alternatives had equivalent expected value but differed in their stimulus-reward predictive utility. Next, the expected values of the alternatives were systematically changed so that the alternative with greater stimulus-reward predictive utility was suboptimal with regard to primary reinforcement. The results indicate that incentive salience, alongside strong stimulus-reward predictive utility, may be necessary to obtain suboptimal choice behavior; thus, maladaptive decision-making can be driven more by the value attributed to reliably reward-predictive stimuli imbued with incentive salience than by the reward itself.

Keywords: incentive salience, suboptimal choice, decision making, sign tracking, goal tracking

1. Introduction

Normative theories, such as optimal foraging theory (Stephen and Krebs, 75) and rational choice theory (Scott, 2000), suggest that an individual should behave optimally, choosing alternatives that maximize reinforcement and minimize effort. Although theoretically useful, these theories do not always describe actual behavior: the decisions an individual makes can deviate from their predictions and yield less overall reinforcement than the available alternatives (Pyke, 1984; Herrnstein, 1990; Shafir and LeBoeuf, 2002). Indeed, such suboptimal behavior, also described as maladaptive decision-making (Zentall and Stagner, 2011), often appears in human pathologies such as gambling (Brand et al. 2005), substance abuse (Bechara and Damasio, 2002), and eating disorders (Brogan et al. 2010), all of which ultimately result in a net loss of resources (Leiseur et al. 1986; Leiseur, 1992; Hoek et al. 2003). Moreover, maladaptive decision-making can be persistent and recurring despite the unfavorable outcomes associated with it (Dickerson, 1993; Blaszczynski and Silove, 1995; Chen and Kandel, 1995; Hyman and Malenka, 2001; Baler and Volkow, 2006).

While maladaptive decision-making is present in a variety of pathologies, it is particularly apparent in gambling and risk-taking behavior (cf. Zentall, 2015). When surveyed, human gamblers attribute their behavior to a multitude of subjective rationalizations that, from the individual's perspective, account for the occurrence and recurrence of the behavior (Neighbors et al. 2002). Interestingly, researchers have theorized that some of these subjective rationalizations stem from the enhanced salience of wins relative to losses (Tversky and Kahneman, 1975) or from a misunderstanding of probabilities (Teigen, 1983; Sanbonmatsu et al. 1997). Given that human gambling behavior is complex and influenced by individual experience, researchers have often used animal models to better isolate the mechanisms mediating gambling-like behavior (e.g., Fantino et al. 1979; Spetch et al. 1990; Madden et al. 2007; Zeeb et al. 2009; Zentall, 2016). One fruitful approach consists of giving animals a choice between two options, each leading to a specific stimulus-reward outcome, with one option suboptimal relative to the other, and examining the choices made. Using a similar approach (McDevitt et al. 2016; Zentall, 2016), researchers have consistently demonstrated that pigeons show a strong preference for a suboptimal option that offers less primary reinforcement over an option that offers greater primary reinforcement (e.g., Dunn and Spetch, 1990; Spetch et al. 1990; Gipson et al. 2009; Stagner and Zentall, 2010; Smith et al. 2016). Furthermore, the effects demonstrated by the suboptimal choice procedure in pigeons have also been replicated in human subjects (Molet et al. 2012), suggesting that comparable mechanisms affect decision-making across species.

The suboptimal choice procedure operates as a concurrent-chain schedule (Autor, 1960; Herrnstein, 1964). First, subjects are presented with two options and must respond to one of them to make a choice; this choice phase is referred to as the initial-link. Each choice then results in an event referred to as the terminal-link. Because the initial-link response requirements are equivalent for both options, preference for one option over the other is assumed to be driven by the terminal-link events and the primary outcomes. More specifically, in the suboptimal choice procedure (e.g., Smith et al. 2016), one alternative leads either to a terminal-link in which a stimulus that perfectly predicts reinforcement is presented, which occurs only occasionally (e.g., 25% of the time), or directly to a non-signaled reward omission. The other alternative leads to a different terminal-link in which a different stimulus is always presented but predicts reinforcement only probabilistically. Thus, in the suboptimal choice procedure, the effects of primary reinforcement (i.e., food) can be dissociated from those of conditioned reinforcement (i.e., the different terminal-link stimuli that predict reward). Studies using concurrent-chain schedules have found that terminal-link stimuli associated with reward outcome function as conditioned reinforcers and can influence the relative allocation of choices (Fantino, 1969; Moore, 1985; Dunn and Spetch, 1990; Williams and Dunn, 1991; Mazur, 1991, 1995, 1997; Williams, 1994; Roper and Zentall, 1999; Stagner et al. 2012; Pisklak et al. 2015; Smith and Zentall, 2016; Smith et al. 2016), even when this preference leads to significantly reduced primary reinforcement. Although not all stimuli associated with reward become conditioned reinforcers (cf. Kelleher and Gollub, 1962), the extent to which a reward-associated terminal-link stimulus functions as a conditioned reinforcer depends on how well it predicts reward (e.g., its probability of reinforcement), and such a stimulus can promote suboptimal choice behavior (Stagner and Zentall, 2010; Vasconcelos et al. 2015; McDevitt et al. 2016; Smith and Zentall, 2016; Smith et al., 2016).

Despite previous studies (e.g., Smith and Zentall, 2016; Smith et al. 2016) demonstrating that a terminal-link stimulus with the greatest predictive probability of reinforcement can produce suboptimal choice in pigeons, similar procedures applied to rats have failed to produce the same effect; instead, rats tend to behave optimally and choose the alternative that provides the greatest amount of reinforcement, regardless of how predictive a terminal-link stimulus might be (Roper and Baldwin, 2004; Trujano and Orduna, 2015; Trujano et al. 2016). While these findings suggest species differences between pigeons and rats on the suboptimal choice procedure, it is also possible that the specific conditions used within the procedures greatly influence choice behavior. Conceptually, the stimulus present in each terminal-link functions as a conditioned stimulus (CS), as it is predictive of the subsequent reinforcer (unconditioned stimulus; US). However, under some conditions a CS can be attributed with incentive value that goes beyond its predictive function (Robinson and Berridge, 2008; Robinson and Flagel, 2009; Meyer et al. 2014; Beckmann and Chow, 2015). Importantly, CSs attributed with incentive value serve as more robust conditioned reinforcers (Robinson and Flagel, 2009; Meyer et al. 2014; Beckmann and Chow, 2015). For example, when the two are pitted against each other, rats have shown a preference for a CS attributed with incentive value over a CS without incentive value, even if this preference results in significantly reduced primary reinforcement (cf. Beckmann and Chow, 2015; Chow et al. 2016). Collectively, these studies suggest that not all CSs function equivalently and that, despite being equally predictive, CSs attributed with incentive value can significantly influence decision-making, leading to maladaptive decisions.

Although pigeons trained on a suboptimal choice procedure show a preference for the terminal-link stimulus with the greatest predictive probability of reinforcement, it should be noted that the stimuli used in those experiments (e.g., Smith and Zentall, 2016; Smith et al. 2016) consisted of lights. For pigeons, light stimuli are known to elicit sign-tracking behavior (approach to and contact with the stimulus; Hearst and Jenkins, 1974) in the form of key pecks (Brown and Jenkins, 1968; Silva et al. 1992), which is often a key feature of stimuli attributed with incentive salience (Meyer et al. 2014; Beckmann and Chow, 2015). For example, Silva et al. (1992) demonstrated that pigeons sign-tracked to a light-CS that predicted food and continued to do so as it was moved farther away from the location of reward delivery; this maladaptive sign-tracking to the light-CS reduced eating time. Thus, for pigeons, it is possible that the use of a light stimulus, which elicits key pecking, is coupled with incentive value attribution (Timberlake, 1994; Mazur, 2007), which in turn could drive maladaptive choice within the suboptimal choice procedure.

Like suboptimal choice procedures with pigeons, studies using rats have also used lights (Roper and Baldwin, 2004; Trujano and Orduna, 2015; Trujano et al. 2016). Notably, when a food US is used, lights are known to elicit goal-tracking behavior (Holland, 1977; Cleland and Davey, 1983; Beckmann and Chow, 2015), described as approach to the location of reward delivery (Boakes, 1977), and are not accompanied by the attribution of incentive value that has been shown to promote suboptimal choice behavior. Importantly, rats tend to sign-track to a lever CS (Davey et al. 1982; Chang, 2014; Holland et al. 2014), and levers associated with sign-tracking behavior function as robust conditioned reinforcers (Robinson and Flagel, 2009). Therefore, in the present study we examined how different terminal-link stimuli, with and without incentive salience (i.e., levers vs. lights), influence decision-making in rats using a suboptimal choice procedure (Smith et al. 2016). If incentive salience does not play a role, both groups should similarly prefer the optimal alternative, regardless of the type of terminal-link stimulus used. However, if stimuli attributed with incentive salience do play a role, levers as terminal-link stimuli should shift decision-making toward suboptimal choice behavior.

2. Methods

2.1. Animals

Twelve adult male Sprague-Dawley rats (Harlan Inc.; Indianapolis, IN, USA), weighing approximately 250–275 g at the beginning of experimentation, were used. Rats were individually housed in a temperature-controlled environment with a 12:12 hr light:dark cycle, with lights on at 0600 h. All rats were first acclimated to the colony environment and handled daily for one week prior to experimentation, had ad libitum access to water in their home cage throughout experimentation, and were maintained at approximately 85% free-feed body weight throughout experimentation. All experimentation was conducted during the light phase. All experimental protocols were conducted according to the 2010 NIH Guide for the Care and Use of Laboratory Animals (8th edition) and were approved by the Institutional Animal Care and Use Committee at the University of Kentucky.

2.2. Apparatus

Experiments were conducted in operant conditioning chambers (ENV-008, MED Associates, St. Albans, VT) enclosed within sound-attenuating compartments (ENV-018M, MED Associates). Each chamber was connected to a personal computer interface (SG-502, MED Associates), and all chambers were operated using MED-PC. Within each operant chamber, a recessed food receptacle (ENV-200R2MA) outfitted with a head-entry detector (ENV-254-CB) was located on the front response panel, two retractable response levers (ENV-122CM) were mounted on either side of the food receptacle, and a white cue light (ENV-221M) was mounted above each response lever. The back response panel was outfitted with two nosepoke response receptacles (ENV-114BM) directly opposite the front response levers, and a house-light (ENV-227M) was located at the top of the back panel between the two nosepoke response receptacles. Sonalert© tone generators (ENV-223 AM and ENV223-HAM) were located on either side of the house-light. Food pellets (45-mg Noyes Precision Pellets; Research Diets, Inc., New Brunswick, NJ) were delivered via a dispenser (ENV-203M-45).

2.3. Establishing Procedures

2.3.1. Magazine Shaping

During the last two days of acclimation to the colony, after animals were handled, 10 to 15 food pellets were dropped into their home cages. Animals were then trained to retrieve food pellets from the food receptacle for two consecutive days; rats were placed in the operant chambers and given 45 minutes to retrieve and consume 25 food pellets, delivered on a 60-s fixed time schedule.

2.3.2. Nosepoke Training

Following magazine shaping, rats were trained to nosepoke on a fixed ratio (FR) 1 schedule of reinforcement, in which a single break of the photobeam within the illuminated recessed nosepoke receptacle resulted in the offset of the nosepoke light and delivery of a food pellet into the magazine. Each session consisted of 30 trials: 15 left- and 15 right-nosepoke presentations. Nosepokes were illuminated individually in a pseudo-random order, with no more than six consecutive presentations on the same side. Trials were separated by a 10-s inter-trial interval (ITI). Rats were trained to nosepoke for food pellets for three consecutive days.
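The side-balancing constraint above (15 left and 15 right presentations, with no more than six consecutive presentations on the same side) can be met by shuffling the trials and rejecting any order that violates the run limit. The Python sketch below is illustrative only: the shuffle-and-reject approach and the function names `max_run_length` and `nosepoke_sequence` are assumptions, not the MED-PC code used in the study.

```python
import random


def max_run_length(sides):
    """Length of the longest run of identical consecutive items (0 if empty)."""
    if not sides:
        return 0
    longest = current = 1
    for prev, cur in zip(sides, sides[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def nosepoke_sequence(n_per_side=15, max_run=6, rng=None):
    """Hypothetical generator: balanced left/right order with a run-length cap.

    Shuffles the 2 * n_per_side trials and rejects any order in which one
    side appears more than max_run times in a row.
    """
    rng = rng or random.Random()
    sides = ["L"] * n_per_side + ["R"] * n_per_side
    while True:
        rng.shuffle(sides)
        if max_run_length(sides) <= max_run:
            return list(sides)


if __name__ == "__main__":
    order = nosepoke_sequence(rng=random.Random(1))
    print("".join(order), max_run_length(order))
```

Rejection sampling keeps every admissible ordering equally likely; with 30 balanced trials most shuffles already satisfy the six-in-a-row limit, so the loop typically ends after a few iterations.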

2.3.3. Orienting Response

After nosepoke training, an orienting response was added to the response chain. The start of each trial was now signaled by the illumination of the house-light. A contingent response, head entry into the magazine, resulted in the offset of the house-light and illumination of either the left or right nosepoke. Completing the FR1 requirement on the illuminated nosepoke resulted in the offset of the nosepoke light and delivery of a food pellet. Each session consisted of 30 trials (15 left- and 15 right-nosepoke presentations in pseudo-random order), separated by a 10-s ITI. Rats were trained on this response chain for three days.

2.4. Experiment 1: Suboptimal Choice

2.4.1. Phase 1: Initial Preference

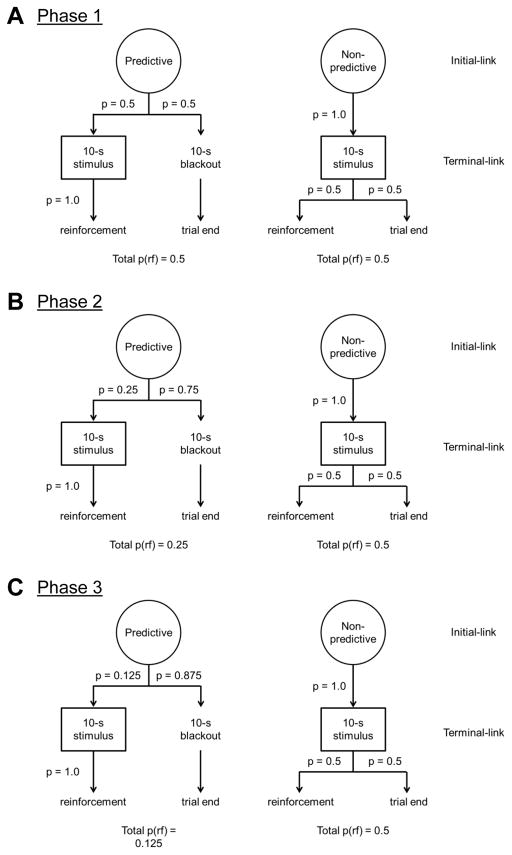

Rats were then trained on a suboptimal choice procedure (Fig. 1) based on the methods described in Smith et al. (2016). Briefly, each session of the suboptimal choice procedure consisted of 72 trials, in which initial-link nosepoke responses resulted in the presentation of one of two spatially distinct terminal-link stimuli (levers or lights), with equivalent expected reinforcement for both alternatives. Each session consisted of 48 forced trials (24 trials per alternative) and 24 choice trials. All trials began with the illumination of the house-light; a contingent orienting response (head entry into the magazine) resulted in the offset of the house-light and the illumination of a nosepoke light (left, right, or both, depending on the trial type). On forced trials, a single nosepoke receptacle was illuminated, and a nosepoke into it resulted in one of two outcome chains. One initial-link option, described as the predictive alternative, had a 50% probability of producing a 100% predictive 10-s terminal-link stimulus (lever or light; balanced for side), followed by delivery of a single food pellet at stimulus offset, and otherwise produced a 10-s blackout terminal-link period that led to no reinforcement. The other initial-link option, described as the non-predictive alternative, always produced a 10-s terminal-link stimulus (lever or light; on the side opposite the predictive option), followed at stimulus offset by a 50% chance of a single food pellet. Thus, the expected values of the two alternatives were equivalent (1:1). Choice trials functioned similarly to forced trials, except that both nosepoke receptacles were illuminated and animals could allocate choices across both alternatives. All trials were separated by a 10-s ITI. See Fig. 1 for schematics of the different phases.

Fig. 1.

Diagram of the choice procedure. Each trial began with the illumination of the house-light; a contingent orienting response resulted in the illumination of the right, left, or both nosepoke receptacles, depending on trial type. A response into one of the illuminated nosepokes resulted either in the presentation of a predictive stimulus followed by food or in a blackout period, whereas a response into the opposite nosepoke resulted in the presentation of a non-predictive stimulus with a chance of food following stimulus offset. Panels (A), (B), and (C) correspond to the three phases of the experiment, in which the probability of obtaining the predictive stimulus was manipulated.

Sign-tracking responses were recorded as lever presses during the 10-s terminal-link stimulus presentation, and goal-tracking responses were recorded as breaks of the photobeam within the food receptacle during the same period. Goal-tracking during the 10-s terminal-link blackout periods was also recorded. Neither sign- nor goal-tracking during either terminal-link had any effect on the occurrence of subsequent reinforcement.
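The Phase 1 trial logic can be sketched as a small simulation. The Python below is illustrative only: the names `Trial`, `build_session`, and `resolve_outcome` are hypothetical, and the random intermixing of forced and choice trials is an assumption, since the order in which trial types were presented is not specified above. Setting `p_predictive_stimulus` to 0.25 or 0.125 gives the contingencies of the later phases.

```python
import random
from dataclasses import dataclass

PREDICTIVE = "predictive"
NON_PREDICTIVE = "non-predictive"


@dataclass(frozen=True)
class Trial:
    kind: str        # "forced" or "choice"
    options: tuple   # alternatives whose nosepokes are illuminated


def build_session(rng, n_forced_per_alt=24, n_choice=24):
    """72-trial session: 24 forced trials per alternative plus 24 choice trials.

    Assumption: trial types are intermixed in a random order.
    """
    trials = (
        [Trial("forced", (PREDICTIVE,))] * n_forced_per_alt
        + [Trial("forced", (NON_PREDICTIVE,))] * n_forced_per_alt
        + [Trial("choice", (PREDICTIVE, NON_PREDICTIVE))] * n_choice
    )
    rng.shuffle(trials)
    return trials


def resolve_outcome(alternative, p_predictive_stimulus, rng, p_food_non_predictive=0.5):
    """Return (terminal-link event, pellets delivered) for one initial-link response."""
    if alternative == PREDICTIVE:
        # The predictive stimulus is 100% predictive: if shown, a pellet always follows.
        if rng.random() < p_predictive_stimulus:
            return "predictive stimulus", 1
        return "blackout", 0  # 10-s blackout, never reinforced
    # The non-predictive stimulus is always shown; food follows with
    # probability p_food_non_predictive (0.5 in every phase).
    return "non-predictive stimulus", int(rng.random() < p_food_non_predictive)


if __name__ == "__main__":
    rng = random.Random(0)
    session = build_session(rng)
    print(len(session), sum(t.kind == "choice" for t in session))
    # Phase 1: the predictive stimulus appears on 50% of predictive-alternative trials.
    print(resolve_outcome(PREDICTIVE, 0.5, rng))
```

Because the predictive stimulus is always followed by a pellet and the blackout never is, the predictive alternative's pellet rate equals `p_predictive_stimulus`, whereas the non-predictive alternative's pellet rate stays at 0.5.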

2.4.2. Phases 2 and 3: Decreasing the frequency of the predictive stimulus

Following training on the first phase of the suboptimal choice procedure, in which the expected value for both alternatives was equivalent, animals were moved on to Phase 2 and, subsequently, Phase 3 to determine whether suboptimal behavior (choice of an alternative with lower expected value) could be demonstrated in rats.

In Phase 2, responses to the predictive alternative during forced and choice trials produced the 100% predictive stimulus, and hence reinforcement, 25% of the time. On the remaining 75% of trials, the predictive alternative produced a 10-s non-reinforced blackout. The non-predictive alternative was unchanged: the non-predictive stimulus was always presented and was followed by reinforcement with a probability of 50%. Thus, the ratio of expected values for the predictive to the non-predictive alternative was 1:2 (0.25 vs. 0.5 pellets per trial).

In Phase 3, responses to the predictive alternative during forced and choice trials produced the 100% predictive stimulus, and hence reinforcement, 12.5% of the time. On the remaining 87.5% of trials, the predictive alternative produced a 10-s non-reinforced blackout. The non-predictive alternative was unchanged: the non-predictive stimulus was always presented and was followed by reinforcement with a probability of 50%. Thus, the ratio of expected values for the predictive to the non-predictive alternative was 1:4 (0.125 vs. 0.5 pellets per trial).
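The expected-value ratios stated for the three phases follow from multiplying the probability of each terminal-link outcome by the probability of food that follows it. The Python sketch below uses exact fractions from the standard `fractions` module so the ratios carry no rounding; the constant and function names are illustrative.

```python
from fractions import Fraction

# Probability that the non-predictive alternative ends in food (constant across phases).
P_FOOD_NON_PREDICTIVE = Fraction(1, 2)

# Probability that the predictive alternative produces the (always reinforced)
# predictive stimulus in each phase.
PHASES = {1: Fraction(1, 2), 2: Fraction(1, 4), 3: Fraction(1, 8)}


def expected_pellets(p_predictive_stimulus):
    """Expected pellets per trial for (predictive, non-predictive) alternatives."""
    ev_predictive = p_predictive_stimulus * 1        # stimulus -> pellet with certainty
    ev_non_predictive = 1 * P_FOOD_NON_PREDICTIVE    # stimulus always shown, pellet half the time
    return ev_predictive, ev_non_predictive


for phase, p in PHASES.items():
    ev_p, ev_n = expected_pellets(p)
    print(f"Phase {phase}: {ev_p} vs {ev_n} pellets/trial -> 1:{ev_n / ev_p}")
# Phase 1: 1/2 vs 1/2 pellets/trial -> 1:1
# Phase 2: 1/4 vs 1/2 pellets/trial -> 1:2
# Phase 3: 1/8 vs 1/2 pellets/trial -> 1:4
```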

2.5. Reversal Phase

Following Phase 3 of Experiment 1, a reversal was conducted to test for discrimination learning of the initial-link contingencies (Wyckoff, 1952; Kelleher, 1956). All trials functioned exactly as in Phase 1, with equivalent expected values across both alternatives (i.e., a 50% probability of obtaining the predictive stimulus and a 50% probability of reinforcement following presentation of the non-predictive stimulus), except that each initial-link response now led to the opposite terminal-link from the one it produced in Phase 1.

2.6. Analysis

Prior to analyzing the data, discrimination proportions (the total response rate during predictive stimulus presentation divided by the sum of that rate and the response rate during the 10-s blackout) were calculated to determine whether rats had learned the contingencies associated with each choice alternative. Data were analyzed using linear mixed effects modeling (Gelman and Hill, 2006). Choice of the predictive alternative was analyzed for each individual phase with stimulus (nominal) as a fixed between-subject factor, session (continuous) as a fixed within-subject factor, and subject as a random factor. For comparisons between phases, choice of the predictive alternative was averaged across the last four sessions of training, with stimulus (nominal) as a fixed between-subject factor, phase (continuous) as a fixed within-subject factor, and subject as a random factor. A derivation of the concatenated matching law (Baum and Rachlin, 1969; Killeen, 1972; Grace, 1994) was applied in the form:

$$\frac{B_p}{B_n} = \left(\frac{R_p}{R_n}\right)^{s_r}\left(\frac{V_p}{V_n}\right)^{s_c}$$

where B represents behavior allocated to the predictive (Bp) and non-predictive (Bn) side, R represents the scheduled rate of primary reinforcement, and V represents the value of the terminal-link stimulus, or its function as a conditioned reinforcer. On the predictive side, Rp was set to the probability of primary reinforcement (0.5, 0.25, or 0.125, depending on the phase), and Vp was set to 1.0, the predictive utility of the terminal-link stimulus. On the non-predictive side, Rn was set to 0.5, the probability of primary reinforcement, and Vn was set to 0.5, the predictive utility of the terminal-link stimulus. The free parameters sr (sensitivity to primary reinforcement) and sc (sensitivity to conditioned reinforcement) capture the extent to which choice of the predictive alternative changes with changes in the relative rate of primary reinforcement and the relative value of the terminal-link stimulus. The model was fit to the data via nonlinear mixed effects modeling (NLME; Pinheiro et al. 2007), in which choice of the predictive alternative was averaged across the last four sessions, with stimulus (nominal) as a fixed between-subject factor, phase (continuous) as a fixed within-subject factor, and subject as a random factor.
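As an illustration of how the two sensitivity parameters enter the model, the Python sketch below assumes the unbiased ratio form Bp/Bn = (Rp/Rn)^sr (Vp/Vn)^sc (no bias term, since only sr and sc are described as free parameters) and converts the predicted ratio to a proportion of predictive choices. In place of the NLME fit used in this study, it estimates sr and sc by ordinary least squares on log choice ratios; this swapped-in method ignores subject-level random effects and is meant only to show the arithmetic. The function names are hypothetical.

```python
import math


def predicted_choice(r_p, s_r, s_c, v_p=1.0, r_n=0.5, v_n=0.5):
    """Predicted proportion of choices for the predictive alternative.

    Assumes Bp/Bn = (Rp/Rn)**s_r * (Vp/Vn)**s_c, converted to Bp / (Bp + Bn).
    """
    ratio = (r_p / r_n) ** s_r * (v_p / v_n) ** s_c
    return ratio / (1.0 + ratio)


def fit_log_linear(r_p_values, choice_props, v_p=1.0, r_n=0.5, v_n=0.5):
    """Estimate (s_r, s_c) by ordinary least squares on log choice ratios.

    log(Bp/Bn) = s_r * log(Rp/Rn) + s_c * log(Vp/Vn). Because Vp/Vn is fixed
    (1.0 / 0.5 = 2) in every phase, s_c is recovered from the intercept.
    Requires proportions strictly between 0 and 1 and at least two distinct Rp.
    """
    xs = [math.log(r / r_n) for r in r_p_values]
    ys = [math.log(p / (1.0 - p)) for p in choice_props]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_r = sxy / sxx
    intercept = mean_y - s_r * mean_x
    s_c = intercept / math.log(v_p / v_n)
    return s_r, s_c


if __name__ == "__main__":
    # Rp per phase: probability of primary reinforcement on the predictive side.
    r_p = [0.5, 0.25, 0.125]
    # Noise-free check with hypothetical parameters: the fit recovers them.
    simulated = [predicted_choice(r, s_r=2.0, s_c=3.0) for r in r_p]
    print(fit_log_linear(r_p, simulated))  # approximately (2.0, 3.0)
```

Under this assumed form, Vp/Vn equals 2 in every phase, so sc is estimated from the constant term of the regression; it would therefore also absorb any unmodeled bias toward the predictive alternative.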

Sign- and goal-tracking rates during forced trials were averaged across the last four sessions for each phase. Due to the absence of sign-tracking to a light stimulus, sign-tracking response rates for the lever group were analyzed alone with phase (continuous) and stimulus predictability (nominal) as fixed within-subject factors, and subject as a random factor. Goal-tracking rates were analyzed with stimulus (nominal) as a fixed between-subject factor, phase (continuous) and stimulus predictability (nominal) as fixed within-subject factors, and subject as a random factor. Additionally, the sign- and goal-tracking rates for the lever stimulus were compared with response type (nominal), stimulus predictability (nominal), and phase (continuous) as fixed within-subject factors, and subject as a random factor. Moreover, goal-tracking rates during the 10-s blackout period were analyzed with stimulus (nominal) as a fixed between-subject factor, phase (continuous) as a fixed within-subject factor, and subject as a random factor. Similarly, response rates during reversal were analyzed as mentioned above, except phase was excluded.

Additionally, one-sample t-tests were conducted on the percent choice of the predictive alternative, averaged across the last four sessions of each phase, against indifference (50%) to determine whether choice differed significantly from chance. Correlations between total response rates (sign- and goal-tracking rates combined; averaged across the last four sessions) during presentation of the predictive stimulus and choice of the predictive alternative (averaged across the last four sessions) were calculated using Pearson's r (α = 0.05). The percentage of the maximum pellets earned during choice trials was averaged across the last four sessions of each phase and analyzed with stimulus (nominal) as a fixed between-subject factor, phase (continuous) as a fixed within-subject factor, and subject as a random factor. Note that the maximum number of pellets available on choice trials was 12 per session across all experiments. All post hoc tests were conducted with Tukey HSD. For all tests, α was set at 0.05.
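Several of the summary measures described in this section reduce to short formulas. The Python sketch below (function names hypothetical) computes the discrimination proportion, the maximum pellets per session, the percentage of that maximum earned, the one-sample t statistic against 50%, and Pearson's r. The maximum is computed here as 24 choice trials times the larger expected value per trial, which gives 12 in every phase; this reading of the stated figure is an assumption. The sketch returns statistics only: p-values, the linear mixed effects models, and Tukey HSD tests would require a statistics package and are not reproduced.

```python
import math
import statistics


def discrimination_proportion(rate_stimulus, rate_blackout):
    """Rate during the predictive stimulus / (that rate + rate during the 10-s blackout)."""
    total = rate_stimulus + rate_blackout
    return rate_stimulus / total if total > 0 else float("nan")


def max_pellets(n_choice_trials, expected_values):
    """Expected pellets from always choosing the richer alternative on every choice trial."""
    return n_choice_trials * max(expected_values)


def percent_of_max(pellets_earned, maximum):
    return 100.0 * pellets_earned / maximum


def one_sample_t(values, mu0=50.0):
    """t statistic and df for testing mean percent choice against indifference (50%)."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.fmean(values) - mu0) / se, n - 1


def pearson_r(xs, ys):
    """Pearson correlation coefficient (no p-value)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # 24 choice trials; expected pellets per trial (predictive, non-predictive) per phase.
    for evs in [(0.5, 0.5), (0.25, 0.5), (0.125, 0.5)]:
        print(max_pellets(24, evs))  # 12.0 in every phase
```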

3. Results

3.1. Experiment 1: Obtaining suboptimal choice behavior via incentive salience

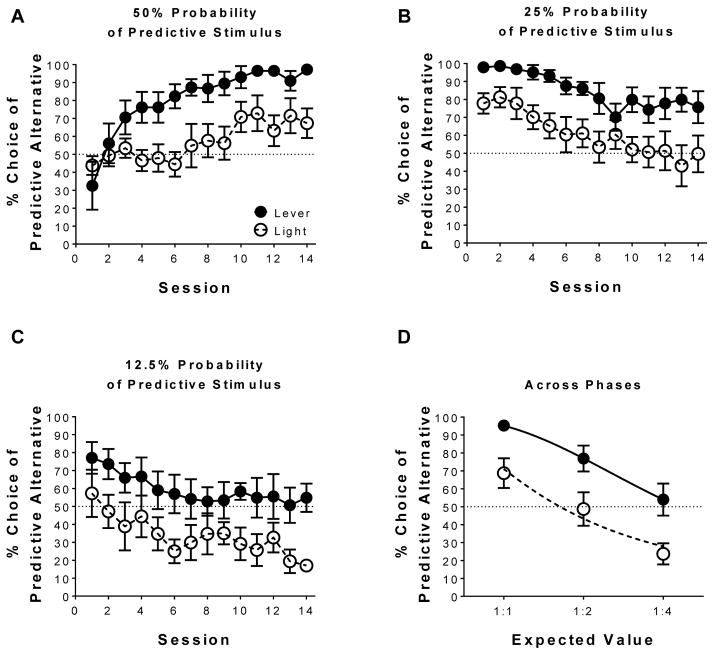

Fig. 2 illustrates the percent choice of the predictive alternative during Phase 1 (A), Phase 2 (B), and Phase 3 (C), and the average over the last four sessions of each phase (D); across phases, the frequency with which the predictive stimulus was presented decreased. Fig. 2A illustrates initial acquisition on the suboptimal choice procedure, in which the predictive and non-predictive alternatives were equivalent in expected value at a 50% probability of reinforcement. Linear mixed effects analysis revealed a main effect of session [F(1,10) = 22.87, p < 0.05], indicating that preference for the predictive alternative increased with training, and a main effect of stimulus [F(1,10) = 9.24, p < 0.05], indicating that a lever stimulus increased preference for the predictive alternative to a greater extent than a light stimulus. Fig. 2B illustrates the effect of reducing the primary reinforcement associated with the predictive alternative to 25%, so that the expected values of the predictive and non-predictive alternatives were in a 1:2 ratio. Linear mixed effects analysis revealed a main effect of session [F(1,10) = 23.28, p < 0.05], indicating that preference for the predictive alternative decreased with training, and a main effect of stimulus [F(1,10) = 7.08, p < 0.05], indicating that a lever stimulus increased preference for the predictive alternative to a greater extent than a light stimulus. Fig. 2C illustrates the effect of reducing the primary reinforcement associated with the predictive alternative to 12.5%, so that the expected values of the predictive and non-predictive alternatives were in a 1:4 ratio. Linear mixed effects analysis revealed a main effect of session [F(1,10) = 17.43, p < 0.05], indicating that preference for the predictive alternative decreased over sessions, and a main effect of stimulus [F(1,10) = 5.08, p < 0.05], indicating that a lever stimulus again increased preference for the predictive alternative to a greater extent than a light stimulus.

Fig. 2.

Predictive stimuli can bias preference when overall reinforcement is equivalent; decreasing the probability of obtaining the predictive stimulus shifts preference toward the non-predictive alternative. Mean (±SEM) percent choice of the predictive alternative (lever n = 6; light n = 6) when the probability of obtaining the predictive stimulus was (A) 50%, (B) 25%, and (C) 12.5%. (D) Mean (±SEM) percent choice of the predictive alternative during the last four sessions of training in each phase as a function of the decreasing ratio of predictive to non-predictive expected values. Lines are best fits of the concatenated matching equation for each stimulus condition.

Fig. 2D illustrates the average choice of the predictive alternative over the last four sessions of training across the three phases. Linear mixed effects analysis revealed a main effect of phase [F(1,10) = 74.35, p < 0.05], indicating that preference for the predictive alternative decreased as the ratio of expected values for the predictive to the non-predictive alternative decreased. Linear mixed effects analysis also revealed a main effect of stimulus [F(1,10) = 9.46, p < 0.05], indicating that preference for the predictive alternative was greater with a lever stimulus than with a light stimulus. Additionally, one-sample t-tests revealed that the percent choice of the predictive alternative averaged across the last four sessions of training was greater than indifference for the lever stimulus during Phase 1 [t(5) = 21.75, p < 0.05] and Phase 2 [t(5) = 3.72, p < 0.05]; in contrast, the percent choice of the predictive alternative for the light stimulus was greater than indifference during Phase 1 [t(5) = 2.27, p < 0.05] but lower than indifference during Phase 3 [t(5) = 4.46, p < 0.05]. Collectively, these results suggest that stimuli with predictive utility can preferentially influence choice; however, a lever stimulus imbued with incentive salience can promote suboptimal choice to a much greater extent than a light stimulus not accompanied by incentive salience.

The lines in Fig. 2D represent best-fit functions from the concatenated matching law, used to determine whether primary or conditioned reinforcement had a greater influence on choice. Nonlinear mixed effects modeling revealed a main effect of stimulus condition on the sensitivity to conditioned reinforcement (sc) [F(1,21) = 29.16, p < 0.05], indicating that sensitivity to conditioned reinforcement was greater in the lever group than in the light group. For the light stimulus condition, the best-fit estimate of sensitivity to primary reinforcement (sr) was 1.96 ± 0.49 and that of sensitivity to conditioned reinforcement (sc) was 1.45 ± 1.01, indicating that primary reinforcement played a marginally greater role in influencing choice. In contrast, for the lever stimulus condition, the best-fit sr estimate was 2.84 ± 0.44 and the best-fit sc estimate was 4.76 ± 0.82, indicating that the conditioned reinforcing value of the terminal-link stimulus determined preference to a greater extent than primary reinforcement; that is, the value attributed to the lever stimulus in the terminal-link drove initial-link preference more than the food pellet did. Thus, using a stimulus attributed with incentive salience (i.e., a lever) as the reward-associated terminal-link stimulus increased the influence of stimulus value on preference, overshadowing the effects of primary reinforcement on choice.
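To make the reported parameter estimates concrete, the Python sketch below plugs them into an assumed unbiased ratio form of the matching equation, Bp/Bn = (Rp/Rn)^sr (Vp/Vn)^sc, with Rp = 0.5, 0.25, and 0.125 across phases, Rn = 0.5, Vp = 1.0, and Vn = 0.5, and prints the implied proportion of predictive choices. It uses only the point estimates and ignores the random effects of the mixed-effects fit, so its output approximates, rather than reproduces, the curves in Fig. 2D. The names are illustrative.

```python
# Fixed-effect estimates reported above, as (s_r, s_c); subject-level random
# effects of the NLME fit are ignored here.
ESTIMATES = {"light": (1.96, 1.45), "lever": (2.84, 4.76)}
R_P_BY_PHASE = [0.5, 0.25, 0.125]  # probability of food on the predictive side


def predicted_choice(r_p, s_r, s_c, v_p=1.0, r_n=0.5, v_n=0.5):
    """Proportion of predictive choices under Bp/Bn = (Rp/Rn)**s_r * (Vp/Vn)**s_c."""
    ratio = (r_p / r_n) ** s_r * (v_p / v_n) ** s_c
    return ratio / (1.0 + ratio)


predictions = {
    group: [predicted_choice(r, s_r, s_c) for r in R_P_BY_PHASE]
    for group, (s_r, s_c) in ESTIMATES.items()
}
for group, props in predictions.items():
    print(group, [round(p, 2) for p in props])
# light [0.73, 0.41, 0.15]
# lever [0.96, 0.79, 0.35]
```

Under these point estimates, predicted preference in the lever condition remains above 0.5 at the 1:2 expected-value ratio, whereas the light condition falls below 0.5, consistent with the larger sc estimate for the lever group.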

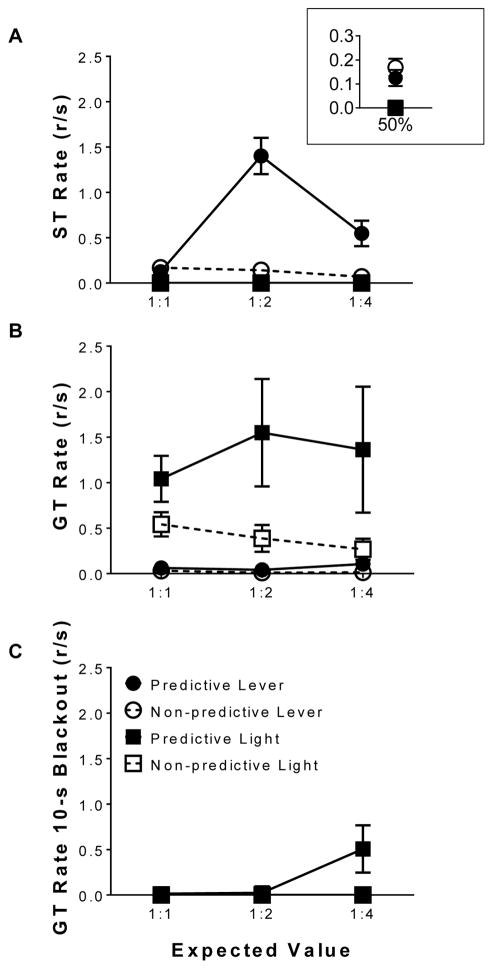

Fig. 3 illustrates the corresponding response rates during the last four sessions of training for each phase. Fig. 3A illustrates sign-tracking rates to the predictive and non-predictive lever stimuli. Linear mixed effects analysis revealed a main effect of stimulus predictability [F(1,5) = 45.62, p < 0.05], indicating that sign-tracking rates were higher for the predictive stimulus than for the non-predictive stimulus. Linear mixed effects analysis also revealed a stimulus predictability x phase interaction [F(1,5) = 20.07, p < 0.05], indicating that the difference in sign-tracking rates between the predictive and non-predictive stimuli depended on phase. Fig. 3B illustrates goal-tracking rates to the predictive and non-predictive stimuli. Linear mixed effects analysis revealed a main effect of stimulus [F(1,10) = 18.80, p < 0.05], indicating that goal-tracking rates were greater for a light stimulus than for a lever stimulus. Linear mixed effects analysis also revealed a main effect of stimulus predictability [F(1,10) = 11.10, p < 0.05], indicating that goal-tracking rates were greater for a predictive stimulus than for a non-predictive stimulus. Finally, linear mixed effects analysis revealed a stimulus x stimulus predictability interaction [F(1,10) = 8.88, p < 0.05], indicating that goal-tracking rates depended on both the type of stimulus presented and its predictive function; post hoc analysis revealed that goal-tracking rates for the predictive light stimulus were greater than those for both the predictive and non-predictive lever stimuli and for the non-predictive light stimulus.

Fig. 3.

Response rates during the terminal-link averaged across the last four sessions of each phase. Mean (±SEM) response rates for (A) sign-tracking, (B) goal-tracking, and (C) responding during the absence of the predictive stimulus as a function of decreasing predictive to non-predictive alternative expected values (lever n = 6; light n = 6).

Additionally, when sign- and goal-tracking rates were compared with one another for the lever stimulus only, linear mixed effects analysis revealed a main effect of response type [F(1,59) = 24.80, p < 0.05], indicating that sign-tracking rates were higher than goal-tracking rates, and a main effect of stimulus predictability [F(1,59) = 17.73, p < 0.05], indicating that response rates were higher for the predictive than for the non-predictive stimulus. Linear mixed effects analysis also revealed a phase x stimulus predictability interaction [F(1,59) = 4.92, p < 0.05], indicating that response rates for the predictive stimulus increased as a function of phase, and a response type x stimulus predictability interaction [F(1,59) = 12.33, p < 0.05], indicating that the difference between sign- and goal-tracking rates depended on stimulus predictability; post hoc tests revealed that sign-tracking to the predictive stimulus was greater than sign-tracking to the non-predictive stimulus and greater than goal-tracking to both the predictive and non-predictive lever stimuli.

Fig. 3C illustrates goal-tracking rates during the 10-s blackout period when the predictive stimulus was not presented. Linear mixed effects analysis revealed no significant differences. Collectively, the above results demonstrate that a predictive stimulus elicits higher rates of conditioned responding than a non-predictive stimulus. Additionally, a lever stimulus primarily elicited sign-tracking behavior, whereas a light stimulus elicited goal-tracking behavior.

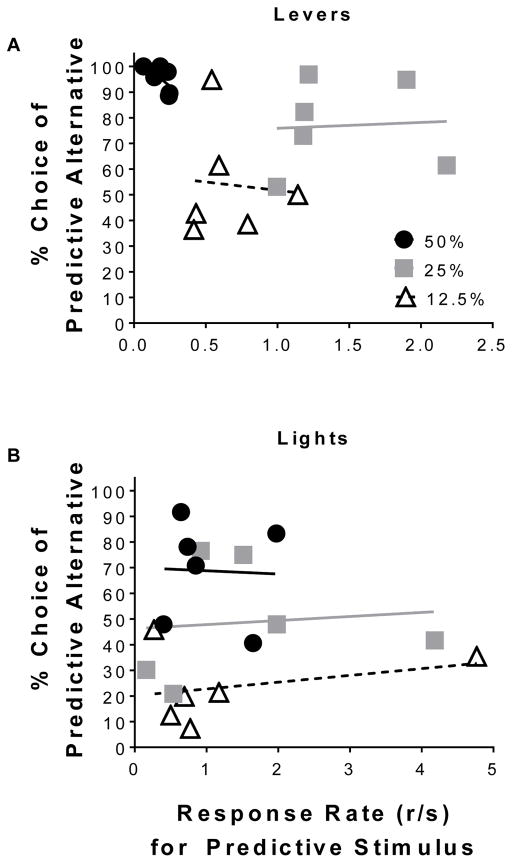

Fig. 4 illustrates the correlation between response rate and percent choice of the predictive lever (A) and light (B) alternatives. There were no significant correlations between response rate and choice of the predictive lever alternative during Phase 1 (r = −0.66, NS), Phase 2 (r = 0.061, NS), or Phase 3 (r = −0.082, NS). Likewise, there were no significant correlations between response rate and choice of the predictive light alternative during Phase 1 (r = −0.038, NS), Phase 2 (r = 0.099, NS), or Phase 3 (r = 0.31, NS). Collectively, the above results suggest that response rates in the presence of predictive stimuli and preference for those stimuli are dissociable.
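One way to check these non-significant correlations is the standard t test for a Pearson coefficient, t = r·√(n − 2)/√(1 − r²) with n − 2 degrees of freedom. The sketch below assumes that each correlation was computed across individual rats (n = 6 per group, so df = 4), which the figure legend suggests but the text does not state; the constant 2.776 is the two-tailed α = .05 critical value of t with 4 df. The function names are illustrative.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Two-tailed critical t for alpha = .05 with df = 4 (assumes n = 6 rats per group).
T_CRIT_DF4 = 2.776

if __name__ == "__main__":
    r_reported = -0.66  # lever group, Phase 1, as reported in the text
    t = t_for_r(r_reported, n=6)
    print(f"t(4) = {t:.2f}; significant: {abs(t) > T_CRIT_DF4}")
```

Under that assumption, even the largest coefficient reported (r = −0.66) gives |t| ≈ 1.76, below the critical value; with six rats, |r| would need to exceed roughly 0.81 to reach significance.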

Fig. 4.

Conditioned responding for the predictive stimulus was not correlated with preference for the predictive stimulus. Relationship between rates of conditioned responding for the predictive stimulus (lever n = 6; light n = 6) and choice of the predictive (A) lever- and (B) light stimulus alternative. Note: panel (B) is on a different scale.

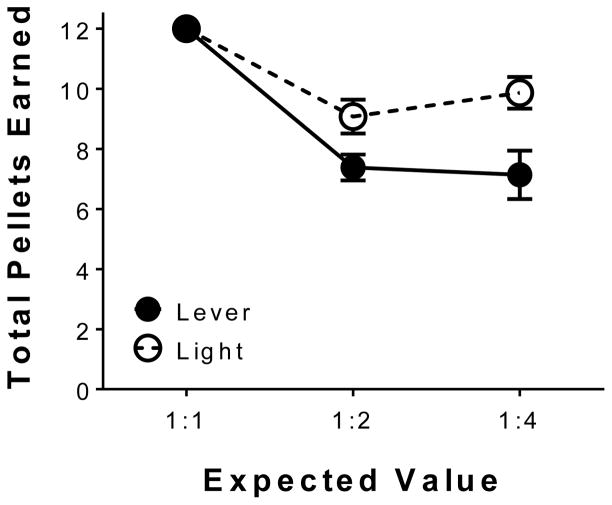

Fig. 5 illustrates the average number of reinforcers earned during choice trials across the last four sessions of training in each phase. Note that the maximum number of pellets that could be obtained during each session in each phase was 12. Linear mixed effects analysis revealed a significant main effect of phase [F(1,10) = 52.45, p < 0.05], indicating that the number of pellets earned decreased as a function of phase, and a main effect of stimulus [F(1,10) = 7.83, p < 0.05], indicating that the number of pellets earned depended on the terminal-link stimulus. Additionally, linear mixed effects analysis revealed a significant phase x stimulus interaction [F(1,10) = 7.98, p < 0.05], indicating that the decrease in pellets earned across phases depended on the type of stimulus presented in the terminal-link. Collectively, the above results indicate that a lever stimulus attributed with incentive value promoted greater suboptimal choice behavior, leading to a significant reduction in the number of reinforcers earned.
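The link between preference and reinforcers earned can be made explicit with a small expected-value calculation. The sketch assumes, purely for illustration, 24 choice trials per session and a fixed 50% reinforcement probability on the non-predictive alternative (values chosen only so that the session maximum is 12 pellets, as stated above), with the predictive alternative's probability scaled by each phase's expected-value ratio. These trial counts and probabilities, and the function name, are hypothetical and are not restated in this section.

```python
def expected_pellets(p_choose_predictive, ev_ratio, n_choice_trials=24, p_nonpredictive=0.5):
    """Expected pellets earned on choice trials in one session (hypothetical model).

    Assumptions (illustrative only): every choice trial ends in a terminal link,
    the non-predictive alternative keeps a fixed reinforcement probability, and
    the predictive alternative's probability is that value times ev_ratio
    (1.0 for 1:1, 0.5 for 1:2, 0.25 for 1:4).
    """
    if not 0.0 <= p_choose_predictive <= 1.0:
        raise ValueError("p_choose_predictive must be a proportion")
    p_predictive = p_nonpredictive * ev_ratio
    per_trial = (p_choose_predictive * p_predictive
                 + (1.0 - p_choose_predictive) * p_nonpredictive)
    return n_choice_trials * per_trial


if __name__ == "__main__":
    for label, ratio in [("1:1", 1.0), ("1:2", 0.5), ("1:4", 0.25)]:
        for pref in (0.5, 0.9):
            print(f"{label}, {pref:.0%} predictive choice: "
                  f"{expected_pellets(pref, ratio):.1f} pellets")
```

Under these assumptions, preference costs nothing at 1:1, whereas at 1:2 and 1:4 every shift toward the predictive alternative lowers the expected number of pellets, consistent with the reduction in reinforcers earned when suboptimal preference persists.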

Fig. 5.

A lever stimulus promotes suboptimal choice behavior to a greater extent than a light stimulus, as reflected in the number of reinforcers earned during choice trials. Mean (±SEM) total pellets earned during the last four sessions of training across phases (lever n = 6; light n = 6).

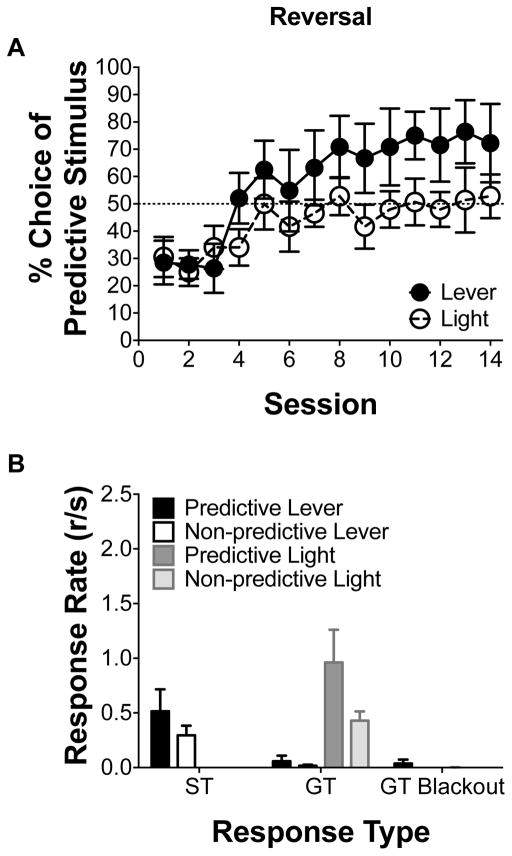

3.2. Reversal on the suboptimal choice procedure

Fig. 6 illustrates choice and response rates following a reversal, in which each initial-link response led to the terminal-link opposite to the one it had produced during the first three phases; additionally, the overall probability of reinforcement across both alternatives was reset to 50%, as in Phase 1 of Experiment 1. Fig. 6A illustrates the percent choice of the predictive alternative. Linear mixed effects analysis revealed a main effect of session [F(1,10) = 14.86, p < 0.05], indicating that preference for the predictive alternative increased with training following reversal. Additionally, a one-sample t-test revealed that, for the lever stimulus, the percent choice of the predictive option averaged across the last four sessions of training was greater than indifference [t(5) = 2.02, p < 0.05]. Collectively, the above results suggest that the animals were sensitive to the contingency reversal and that the preferences observed during the first three phases of Experiment 1 were not due to side biases.
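The comparison against indifference can be sketched as a one-sample t-test of per-rat percent choice against 50%. The values below are hypothetical placeholders, not the study's data, and the sketch computes only the t statistic and its degrees of freedom; the p-value would come from a t distribution with n − 1 df and depends on whether a one- or two-tailed test is used.

```python
import math
import statistics


def one_sample_t(values, mu0=50.0):
    """One-sample t statistic and df for H0: mean == mu0 (50% = indifference)."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Hypothetical per-rat percent choice of the predictive option
    # (mean of the last four reversal sessions); not the study's data.
    choice = [60, 70, 55, 65, 50, 60]
    t, df = one_sample_t(choice)
    print(f"t({df}) = {t:.2f}")
```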

Fig. 6.

Following a reversal, when overall reinforcement is equivalent, the predictive lever stimulus can bias preference. (A) Mean (±SEM) percent choice of the predictive alternative (lever n = 6; light n = 6) during reversal training. (B) Mean response rates for sign-tracking, goal-tracking, and goal-tracking during the absence of the predictive stimulus (blackout) across the last four sessions of reversal training.

Fig. 6B illustrates response rates for sign-tracking, goal-tracking, and goal-tracking during the blackout period for predictive and non-predictive stimuli. Linear mixed effects analysis revealed a main effect of stimulus for goal-tracking [F(1,10) = 18.23, p < 0.05], indicating that a light stimulus elicited more goal-tracking than a lever stimulus. Additionally, when sign- and goal-tracking rates were compared with one another for the lever stimulus, linear mixed effects analysis revealed a main effect of response type [F(1,5) = 6.51, p < 0.05], indicating that sign-tracking rates were higher than goal-tracking rates for the lever.

4. Discussion

The present experiment examined how different terminal-link stimuli (i.e., levers vs. lights), differentially associated with incentive salience attribution, can function in tandem with varying terminal-link stimulus predictive utility (100% vs. 50%) to promote suboptimal choice behavior. The results reported here reveal several factors that can promote suboptimal choice behavior. First, consistent with the current literature (e.g., Smith and Zentall, 2016; Smith et al. 2016), reward-associated stimuli with greater predictive utility (i.e., 100%) can increase preference for a predictive alternative when the expected value is equivalent (1:1) across both alternatives, such that choosing either alternative does not change overall reinforcement. Second, when a reward-associated stimulus is attributed with incentive value, it can further increase choice of that alternative relative to a stimulus that is equally reward-predictive but is not associated with incentive salience. Together, the results suggest that predictive utility and incentive salience can promote suboptimal choice behavior, as a robust preference for the alternative associated with a stimulus that had both greater predictive utility and incentive salience was resistant to substantial decreases in its expected value. Finally, a contingency reversal indicated that the animals were sensitive to the contingencies during the initial phases and that the results prior to reversal training were not due to side biases. Collectively, the findings herein demonstrate that terminal-link stimuli with greater predictive utility, along with a propensity for the attribution of incentive salience, can function together to promote suboptimal choice behavior.

To our knowledge, the present study is the first to obtain suboptimal choice behavior in rats using a suboptimal choice procedure commonly used with pigeons (Pisklak et al. 2015; McDevitt et al. 2016; Zentall, 2016; Smith and Zentall, 2016; Smith et al. 2016) and replicated with humans (Molet et al. 2012). Importantly, like previous studies that used lights as terminal-link stimuli to assess suboptimal choice in rats with a similar procedure (Roper and Baldwin, 2004; Trujano and Orduna, 2015; Trujano et al. 2016), we also observed optimal choice behavior in rats when lights served as reward-associated stimuli. However, when levers were used as the reward-associated stimuli, rats exhibited suboptimal choice behavior. In pigeons, light stimuli have been shown to elicit sign-tracking (Brown and Jenkins, 1968), which can result in maladaptive behavior such as reduced access to primary reinforcement (Silva et al. 1992) and suboptimal choice. Additionally, CSs that elicit sign-tracking in rats have been shown to have greater conditioned reinforcing value than CSs that elicit goal-tracking, and to promote suboptimal behavior (e.g., Beckmann and Chow, 2015; Chow et al. 2016). Hence, when lever stimuli that elicit sign-tracking are used, so that stimulus function is equated with that of the light stimuli used with pigeons, terminal-link stimuli can produce suboptimal choice in both rats and pigeons. Thus, the predictive utility of a reward-associated stimulus alone does not appear to be sufficient to promote suboptimal behavior; rather, those same reward-associated stimuli, when attributed with incentive salience, can function to promote maladaptive decision-making.

Even though stimuli imbued with incentive salience can clearly promote maladaptive decision-making, as seen herein and in similar procedures (Beckmann and Chow, 2015), the present study also reveals that reward-associated stimuli with the greatest predictive utility can preferentially shift choice: although the overall rates of primary reinforcement across both alternatives during the initial phase of this experiment were equivalent, rats in both groups preferred the reward-associated terminal-link stimulus with better predictive utility. Previous experiments with rats that assessed how a predictive versus a non-predictive terminal-link stimulus influences suboptimal choice found that predictive utility had no effect on preference and did not promote suboptimal choice (Roper and Baldwin, 2004; Trujano and Orduna, 2015; Trujano et al. 2016). While rats in both groups of the present experiment clearly preferred a predictive terminal-link stimulus over a non-predictive terminal-link stimulus when primary reinforcement probabilities were equivalent across both options, suboptimal choice behavior was not demonstrated until the expected value of the predictive-stimulus alternative, relative to the non-predictive alternative, was reduced to 1:2 and subsequently to 1:4. When the expected value of the predictive-stimulus option was reduced, rats with lights as terminal-link stimuli made more optimal decisions by choosing the non-predictive alternative associated with a higher rate of primary reinforcement, regardless of the predictive utility of the terminal-link stimuli associated with that option.
Likewise, a matching analysis, used to quantitatively predict the allocation of behavior from the relative value of the available outcomes, revealed that sensitivity to primary reinforcement (sr = 1.96 ± 0.49) and to conditioned reinforcement (sc = 1.45 ± 1.01) in the light stimulus condition were similar, suggesting that conditioned and primary reinforcement played comparable roles in influencing choice. Similarly, rats with levers as terminal-link stimuli shifted their preference away from the predictive alternative toward the non-predictive alternative as the relative expected value decreased, but to a much lesser extent: rats were still choosing the predictive-stimulus alternative, associated with a relatively lower expected value, in both the 1:2 and 1:4 expected value conditions. Moreover, the matching analysis revealed that sensitivity to primary reinforcement (sr = 2.84 ± 0.44) was lower than sensitivity to conditioned reinforcement (sc = 4.76 ± 0.82) in the lever stimulus condition, indicating that the highly predictive lever stimulus served as a stronger conditioned reinforcer and directed choice behavior toward a suboptimal outcome. Altogether, the present results suggest that stimuli attributed with incentive salience, when coupled with high predictive utility, can function to promote maladaptive decision-making.

Previous research (Fantino, 1969; Moore, 1985; Dunn and Spetch, 1990; Williams and Dunn, 1991; Mazur, 1991, 1995, 1997; Williams, 1994; Roper and Zentall, 1999; Stagner et al. 2012; Pisklak et al. 2015; Smith and Zentall, 2016; Smith et al. 2016) has used various approaches to demonstrate the importance of terminal-link stimuli as conditioned reinforcers and their ability to influence choice. In contrast, other studies using procedures that closely resemble the one used herein have found different results, leading to different theories about the mechanisms that drive suboptimal choice behavior. For example, Trujano et al. (2016) used a suboptimal choice procedure closely resembling the one used herein; however, a light stimulus, instead of a blackout, was used to signal losses on the predictive alternative. When a summation test (cf. Rescorla, 1969) was conducted, rates of responding during the test resembled rates of responding to the light stimulus that signaled loss, indicating that the light associated with loss had acquired conditioned inhibitory properties; thus, it was concluded that the light stimulus signaling loss prevents suboptimal choice behavior by promoting optimal behavior (Trujano et al. 2016). Notably, suboptimal choice studies with rats (Roper and Baldwin, 2004; Trujano and Orduna, 2015; Trujano et al. 2016) have used a stimulus that signals loss; such stimuli may acquire conditioned inhibitory properties that prevent suboptimal choice by suppressing choice of the alternative that produces them. However, in the present study, only rats in the lever condition demonstrated suboptimal choice, whereas rats in the light condition demonstrated more optimal behavior, similar to the results of other studies (e.g., Roper and Baldwin, 2004; Trujano and Orduna, 2015; Trujano et al. 2016) in which a stimulus signaling loss was used.
Thus, whatever conditioned inhibition was present in the current experiments should have been equivalent between the two groups; however, only the lever group demonstrated suboptimal choice, suggesting that the attribution of incentive salience to the lever stimulus, rather than any differential effect of conditioned inhibition, promoted suboptimal choice. Nevertheless, future research should explicitly investigate whether conditioned inhibition influences suboptimal preference and whether inhibitory efficacy differs across stimulus types.

While the present findings demonstrate that incentive salience promotes suboptimal choice, a theoretical framework called the “incentive hope model” has been developed to describe the effects of uncertainty and its relation to incentive salience (Anselme, 2015, 2016). The incentive hope model suggests that uncertainty increases the value attributed to a stimulus through counterconditioning and incentive hope working together. Counterconditioning is the process by which any inhibitory effect of non-reinforcement is countered through experience with reinforcement. ‘Incentive hope’ is described as a motivational process of wanting the reward, distinct from expectation, which requires knowledge of outcomes and is non-motivational in nature. Additionally, for an expectation to be motivating, the known outcome must be wanted (Anselme and Robinson, 2016). It is hypothesized that ‘incentive hope’ is greatest when the uncertainty of an outcome is maximal (i.e., 50%) and minimal when outcomes are known (i.e., 0% and 100%), since the outcomes are then guaranteed. In the first phase of the suboptimal choice procedure used here, counterconditioning to the initial-link choice can be assumed to be equivalent for both options, since both the predictive and non-predictive alternatives resulted in 50% reinforcement, with equal numbers of reinforced and non-reinforced trials. However, in the terminal-links, the predictive side can be assumed to operate under known expectations of 0% and 100%, such that ‘hope’ is minimal, whereas the non-predictive side (i.e., a 50% chance of reinforcement) operates at maximal uncertainty and maximal ‘hope’. Thus, if ‘incentive hope’ increases the value attributed to stimuli, preference for the non-predictive alternative would be expected.
The data presented here, however, demonstrated a nearly exclusive preference for the predictive alternative, in which expectations were known and ‘hope’ should have been minimal. Furthermore, although the ‘incentive hope’ model aims to capture the effects of uncertainty on stimulus value, the framework proposed by Anselme (2015, 2016) is built upon performance or rate data; it has been suggested that greater rates of sign-tracking indicate greater incentive salience attribution to a CS, as seen in the increased rates of responding to a probabilistic CS (Anselme et al. 2013; Robinson et al. 2014). However, in these studies, value was never directly tested via a conditioned reinforcement test or choice. Consistent with other studies (Picker and Poling, 1982; Poling et al. 1985), the correlations reported herein suggest that neither sign- nor goal-tracking rates predicted preference, demonstrating a clear dissociation between the rate of conditioned responding elicited by a conditioned stimulus and the value attributed to that stimulus as revealed by preference. Importantly, the response topographies (i.e., sign- or goal-tracking) elicited by the different terminal-link stimuli (levers vs. lights) were more indicative of differential value than were response rates.
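The uncertainty argument of the incentive hope model, as summarized above, can be made concrete with two common measures of outcome uncertainty for a reward/no-reward event with probability p: the Bernoulli variance p(1 − p) and the Shannon entropy in bits. Using these measures is an illustrative assumption, not necessarily the formalization used by Anselme (2015, 2016); the point is only that both peak at p = 0.5 and fall to zero at p = 0 and p = 1, which is why the model predicts maximal ‘hope’ for the non-predictive terminal-link stimuli and minimal ‘hope’ for the predictive ones in Phase 1. The function names are hypothetical.

```python
import math


def outcome_variance(p):
    """Variance of a Bernoulli (reward / no reward) outcome with probability p."""
    return p * (1.0 - p)


def outcome_entropy_bits(p):
    """Shannon entropy (bits) of a reward / no-reward outcome with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


if __name__ == "__main__":
    # Phase 1 terminal-link stimuli: the predictive side signals reward with
    # certainty (p = 1.0) or its absence (p = 0.0); the non-predictive side
    # signals a 50% chance of reward.
    stimuli = [("predictive, reinforced", 1.0),
               ("predictive, non-reinforced", 0.0),
               ("non-predictive", 0.5)]
    for name, p in stimuli:
        print(f"{name}: variance={outcome_variance(p):.2f}, "
              f"entropy={outcome_entropy_bits(p):.2f} bits")
```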

Although sign- and goal-tracking are both elicited responses, these forms of conditioned responding may reflect engagement of distinct and separable appetitive systems (Timberlake et al. 1982; Timberlake, 1994) that might be differentially related to conditioned reinforcement and stimulus value. Additionally, the differential value associated with stimuli that elicit sign- vs. goal-tracking has been hypothesized to be driven by an arbitration process between independent, parallel systems (Clark et al. 2012; Dayan et al. 2006; LeSaint et al. 2014) that drive stimulus-response (S-R) or Pavlovian relationships (Timberlake et al. 1982) versus action-outcome (A-O) or instrumental relationships (Yin and Knowlton, 2006). In line with previous findings demonstrating that responses governed by S-R relationships are insensitive to outcomes (Stiers and Silberberg, 1974; Beckmann and Chow, 2015) and that responses governed by A-O relationships depend on response-reinforcer contingencies (Dayan et al. 2006; Yin and Knowlton, 2006), the results herein suggest that, in the suboptimal choice procedure, sign-tracking to the lever stimulus is mediated by S-R relations, whereas goal-tracking to the light stimulus is mediated by A-O relations. Although sign-tracking appears to be mediated by S-R relations, there is some evidence that, under certain circumstances, it could also be mediated by A-O relations (cf. Robinson and Berridge, 2013). We hypothesize that responding to the lever stimulus used here reflects an S-R relationship because of the nature of the US (i.e., a palatable food pellet); however, neuroscience techniques (e.g., DREADDs or fast-scan cyclic voltammetry) might better elucidate the functional role of the terminal-link stimuli in future studies.
Thus, future studies using stimuli that differentially engage the above processes as reward-associated terminal-link stimuli in a suboptimal choice procedure may provide insight into the relative role of each process in maladaptive decision making, offering potential understanding of the mechanisms underlying the insensitivity to primary outcomes associated with pathological gambling behavior (Cavedini et al. 2002; van Eimeren et al. 2009; van Holst et al. 2012).

The suboptimal choice procedure used herein and in other studies (e.g., Smith and Zentall, 2016; Smith et al. 2016) is one of many paradigms used to study the potential mechanisms that underlie maladaptive decision-making (e.g., gambling and gambling-like behavior). Other procedures, such as the rat gambling task (rGT; cf. Zeeb et al. 2009) and probabilistic discounting (Floresco et al. 2008; St. Onge et al. 2011), have also been used to study maladaptive decision-making and its associated neurobehavioral mechanisms. In particular, models designed specifically to study gambling, such as the rGT, first establish optimal choice behavior and then induce suboptimal choice through environmental, neurochemical, or pharmacological manipulations; these approaches have yielded valuable insight into the neurobiological systems that govern gambling-like behavior (Zeeb et al. 2009; Zeeb and Winstanley, 2011; Baarendse et al. 2013). However, the rGT is multidimensional, in that it simultaneously manipulates reward magnitude, probability of reinforcement, probability of punishment, and delay across four concurrent options, which makes it difficult to isolate the role of each dimension in governing gambling-like behavior. Unlike other rodent models of maladaptive decision-making, the suboptimal choice procedure used herein offers a means to study the specific role of incentive salience attribution in suboptimal choice behavior, which is more akin to human gambling, in which cues associated with gambling are prevalent and highly valued (Sodano and Wulfert, 2010; van Holst et al. 2012).
Moreover, the procedure allows for the study of how incentive salience contributes to maladaptive decision-making more broadly, demonstrating how differential stimulus value can induce preferences that are incompatible with normative theories of choice, such as optimal foraging theory and rational choice theory.

Altogether, the findings herein offer a rat model of suboptimal choice behavior based on a suboptimal choice procedure that has produced consistent results across species, including humans (Molet et al. 2012; Zentall, 2014). More specifically, the findings demonstrate that the attribution of incentive salience to stimuli, in tandem with stimulus predictive utility, may play a critical role in maladaptive decision-making. To the extent that the suboptimal choice procedure captures gambling-like behavior, the mechanisms that drive such behavior could provide insight into maladaptive decision-making in general and may prove informative for other pathologies, such as addiction and obesity. Modeling such suboptimal choice behavior in rodents should allow the neurobehavioral mechanisms that drive this maladaptive behavior to be investigated further.

Highlights.

Incentive salience was necessary to promote suboptimal choice.

Stimuli imbued with incentive salience overshadowed primary reinforcement value.

Rate of sign-tracking was not reflective of stimulus value.

Acknowledgments

We would like to thank Joshua N. Lavy and Andrew Edel for their technical support. This work was supported by the National Institute on Drug Abuse (NIDA), DA033373 and DA016176.

Footnotes

Disclosure

The authors report no conflicts of interest.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Anselme P. Incentive salience attribution under reward uncertainty: A Pavlovian model. Behavioural processes. 2015;111:6–18. doi: 10.1016/j.beproc.2014.10.016. [DOI] [PubMed] [Google Scholar]

- Anselme P. Motivational control of sign-tracking behaviour: A theoretical framework. Neuroscience & Biobehavioral Reviews. 2016;65:1–20. doi: 10.1016/j.neubiorev.2016.03.014. [DOI] [PubMed] [Google Scholar]

- Anselme P, Robinson MJ, Berridge KC. Reward uncertainty enhances incentive salience attribution as sign-tracking. Behavioural brain research. 2013;238:53–61. doi: 10.1016/j.bbr.2012.10.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anselme P, Robinson MJ. “Wanting,”“liking,” and their relation to consciousness. Journal of Experimental Psychology: Animal Learning and Cognition. 2016;42(2):123–140. doi: 10.1037/xan0000090. [DOI] [PubMed] [Google Scholar]

- Autor SM. Unpublished doctoral dissertation. Harvard University; 1960. The strength of conditioned reinforcers as a function of frequency and probability of reinforcement. [Google Scholar]

- Baarendse PJ, Winstanley CA, Vanderschuren LJ. Simultaneous blockade of dopamine and noradrenaline reuptake promotes disadvantageous decision making in a rat gambling task. Psychopharmacology. 2013;225(3):719–731. doi: 10.1007/s00213-012-2857-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baler RD, Volkow ND. Drug addiction: the neurobiology of disrupted self-control. Trends in molecular medicine. 2006;12(12):559–566. doi: 10.1016/j.molmed.2006.10.005. [DOI] [PubMed] [Google Scholar]

- Baum WM, Rachlin HC. Choice as time allocation1. Journal of the experimental analysis of behavior. 1969;12(6):861–874. doi: 10.1901/jeab.1969.12-861. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bechara A, Damasio H. Decision-making and addiction (part I): impaired activation of somatic states in substance dependent individuals when pondering decisions with negative future consequences. Neuropsychologia. 2002;40(10):1675–1689. doi: 10.1016/s0028-3932(02)00015-5. [DOI] [PubMed] [Google Scholar]

- Beckmann JS, Chow JJ. Isolating the incentive salience of reward-associated stimuli: value, choice, and persistence. Learning & Memory. 2015;22(2):116–127. doi: 10.1101/lm.037382.114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blaszczynski A, Silove D. Cognitive and behavioral therapies for pathological gambling. Journal of Gambling Studies. 1995;11(2):195–220. doi: 10.1007/BF02107115. [DOI] [PubMed] [Google Scholar]

- Boakes RA. Performance on learning to associate a stimulus with positive reinforcement. Operant-Pavlovian interactions. 1977:67–97. [Google Scholar]

- Brand M, Kalbe E, Labudda K, Fujiwara E, Kessler J, Markowitsch HJ. Decision-making impairments in patients with pathological gambling. Psychiatry research. 2005;133(1):91–99. doi: 10.1016/j.psychres.2004.10.003. [DOI] [PubMed] [Google Scholar]

- Brogan A, Hevey D, Pignatti R. Anorexia, bulimia, and obesity: shared decision making deficits on the Iowa Gambling Task (IGT) Journal of the International Neuropsychological Society. 2010;16(04):711–715. doi: 10.1017/S1355617710000354. [DOI] [PubMed] [Google Scholar]

- Brown PL, Jenkins HM. Auto-shaping of the pigeon’s key-peck1. Journal of the experimental analysis of behavior. 1968;11(1):1–8. doi: 10.1901/jeab.1968.11-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cavedini P, Riboldi G, Keller R, D’Annucci A, Bellodi L. Frontal lobe dysfunction in pathological gambling patients. Biological psychiatry. 2002;51(4):334–341. doi: 10.1016/s0006-3223(01)01227-6. [DOI] [PubMed] [Google Scholar]

- Chang SE. Effects of orbitofrontal cortex lesions on autoshaped lever pressing and reversal learning. Behavioural brain research. 2014;273:52–56. doi: 10.1016/j.bbr.2014.07.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen K, Kandel DB. The natural history of drug use from adolescence to the mid-thirties in a general population sample. American journal of public health. 1995;85(1):41–47. doi: 10.2105/ajph.85.1.41. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chow JJ, Nickell JR, Darna M, Beckmann JS. Toward isolating the role of dopamine in the acquisition of incentive salience attribution. Neuropharmacology. 2016;109:320–331. doi: 10.1016/j.neuropharm.2016.06.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clark JJ, Hollon NG, Phillips PE. Pavlovian valuation systems in learning and decision making. Current opinion in neurobiology. 2012;22(6):1054–1061. doi: 10.1016/j.conb.2012.06.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clarke D, Tse S, Abbott MW, Townsend S, Kingi P, Manaia W. Reasons for starting and continuing gambling in a mixed ethnic community sample of pathological and non-problem gamblers. International Gambling Studies. 2007;7(3):299–313. [Google Scholar]

- Cleland GG, Davey GC. Autoshaping in the rat: The effects of localizable visual and auditory signals for food. Journal of the experimental analysis of behavior. 1983;40(1):47–56. doi: 10.1901/jeab.1983.40-47. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davey GC, Cleland GG, Oakley DA. Applying Konorski’s model of classical conditioning to signal-centered behavior in the rat: Some functional similarities between hunger CRs and sign-tracking. Animal Learning & Behavior. 1982;10(2):257–262. [Google Scholar]

- Dayan P, Niv Y, Seymour B, Daw ND. The misbehavior of value and the discipline of the will. Neural networks. 2006;19(8):1153–1160. doi: 10.1016/j.neunet.2006.03.002. [DOI] [PubMed] [Google Scholar]

- Dickerson M. Internal and external determinants of persistent gambling: Problems in generalising from one form of gambling to another. Journal of Gambling Studies. 1993;9(3):225–245. [Google Scholar]

- Dunn R, Spetch ML. Choice with uncertain outcomes: Conditioned reinforcement effects. Journal of the experimental analysis of behavior. 1990;53(2):201–218. doi: 10.1901/jeab.1990.53-201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fantino E. Choice and rate of reinforcement1, 2. Journal of the experimental analysis of behavior. 1969;12(5):723–730. doi: 10.1901/jeab.1969.12-723. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fantino E, Dunn R, Meck W. Percentage reinforcement and choice. Journal of the experimental analysis of behavior. 1979;32(3):335–340. doi: 10.1901/jeab.1979.32-335. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Floresco SB, Maric T, Ghods-Sharifi S. Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology. 2008;33(8):1966–1979. doi: 10.1038/sj.npp.1301565.

- Gelman A, Hill J. Data analysis using regression and multilevel/hierarchical models. Cambridge University Press; 2006.

- Gipson CD, Alessandri JJ, Miller HC, Zentall TR. Preference for 50% reinforcement over 75% reinforcement by pigeons. Learning & Behavior. 2009;37(4):289–298. doi: 10.3758/LB.37.4.289.

- Grace RC. A contextual model of concurrent-chains choice. Journal of the Experimental Analysis of Behavior. 1994;61(1):113–129. doi: 10.1901/jeab.1994.61-113.

- Hearst E, Jenkins HM. Sign-tracking: The stimulus-reinforcer relation and directed action. Psychonomic Society; 1974.

- Herrnstein RJ. Secondary reinforcement and rate of primary reinforcement. Journal of the Experimental Analysis of Behavior. 1964;7(1):27–36. doi: 10.1901/jeab.1964.7-27.

- Herrnstein RJ. Rational choice theory: Necessary but not sufficient. American Psychologist. 1990;45(3):356.

- Hoek HW, Van Hoeken D. Review of the prevalence and incidence of eating disorders. International Journal of Eating Disorders. 2003;34(4):383–396. doi: 10.1002/eat.10222.

- Holland PC. Conditioned stimulus as a determinant of the form of the Pavlovian conditioned response. Journal of Experimental Psychology: Animal Behavior Processes. 1977;3(1):77. doi: 10.1037//0097-7403.3.1.77.

- Holland PC, Asem JS, Galvin CP, Keeney CH, Hsu M, Miller A, Zhou V. Blocking in autoshaped lever-pressing procedures with rats. Learning & Behavior. 2014;42(1):1–21. doi: 10.3758/s13420-013-0120-z.

- Hyman SE, Malenka RC. Addiction and the brain: the neurobiology of compulsion and its persistence. Nature Reviews Neuroscience. 2001;2(10):695–703. doi: 10.1038/35094560.

- Kelleher RT. Discrimination learning as a function of reversal and nonreversal shifts. Journal of Experimental Psychology. 1956;51(6):379. doi: 10.1037/h0047413.

- Kelleher RT, Gollub LR. A review of positive conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1962;5(S4):543–597. doi: 10.1901/jeab.1962.5-s543.

- Killeen P. The matching law. Journal of the Experimental Analysis of Behavior. 1972;17(3):489–495. doi: 10.1901/jeab.1972.17-489.

- Lesaint F, Sigaud O, Flagel SB, Robinson TE, Khamassi M. Modelling individual differences in the form of Pavlovian conditioned approach responses: a dual learning systems approach with factored representations. PLoS Computational Biology. 2014;10(2):e1003466. doi: 10.1371/journal.pcbi.1003466.

- Lesieur HR. Compulsive gambling. Society. 1992;29(4):43–50.

- Lesieur HR, Blume SB, Zoppa RM. Alcoholism, drug abuse, and gambling. Alcoholism: Clinical and Experimental Research. 1986;10(1):33–38. doi: 10.1111/j.1530-0277.1986.tb05610.x.

- Madden GJ, Ewan EE, Lagorio CH. Toward an animal model of gambling: Delay discounting and the allure of unpredictable outcomes. Journal of Gambling Studies. 2007;23(1):63–83. doi: 10.1007/s10899-006-9041-5.

- Mazur JE. Choice with probabilistic reinforcement: Effects of delay and conditioned reinforcers. Journal of the Experimental Analysis of Behavior. 1991;55(1):63–77. doi: 10.1901/jeab.1991.55-63.

- Mazur JE. Conditioned reinforcement and choice with delayed and uncertain primary reinforcers. Journal of the Experimental Analysis of Behavior. 1995;63(2):139–150. doi: 10.1901/jeab.1995.63-139.

- Mazur JE. Choice, delay, probability, and conditioned reinforcement. Animal Learning & Behavior. 1997;25(2):131–147.

- Mazur JE. Species differences between rats and pigeons in choices with probabilistic and delayed reinforcers. Behavioural Processes. 2007;75(2):220–224. doi: 10.1016/j.beproc.2007.02.004.

- McDevitt MA, Dunn RM, Spetch ML, Ludvig EA. When good news leads to bad choices. Journal of the Experimental Analysis of Behavior. 2016;105(1):23–40. doi: 10.1002/jeab.192.

- Meyer PJ, Cogan ES, Robinson TE. The form of a conditioned stimulus can influence the degree to which it acquires incentive motivational properties. PLoS One. 2014;9(6):e98163. doi: 10.1371/journal.pone.0098163.

- Molet M, Miller HC, Laude JR, Kirk C, Manning B, Zentall TR. Decision making by humans in a behavioral task: Do humans, like pigeons, show suboptimal choice? Learning & Behavior. 2012;40(4):439–447. doi: 10.3758/s13420-012-0065-7.

- Moore J. Choice and the conditioned reinforcing strength of informative stimuli. The Psychological Record. 1985;35(1):89.

- Neighbors C, Lostutter TW, Cronce JM, Larimer ME. Exploring college student gambling motivation. Journal of Gambling Studies. 2002;18(4):361–370. doi: 10.1023/a:1021065116500.

- St Onge JR, Abhari H, Floresco SB. Dissociable contributions by prefrontal D1 and D2 receptors to risk-based decision making. The Journal of Neuroscience. 2011;31(23):8625–8633. doi: 10.1523/JNEUROSCI.1020-11.2011.

- Picker M, Poling A. Choice as a dependent measure in autoshaping: Sensitivity to frequency and duration of food presentation. Journal of the Experimental Analysis of Behavior. 1982;37(3):393–406. doi: 10.1901/jeab.1982.37-393.

- Pinheiro J, Bates D, DebRoy S, Sarkar D. Linear and nonlinear mixed effects models. R package version. Vol. 3. R Foundation for Statistical Computing; Vienna, Austria: 2007. p. 57.

- Pisklak JM, McDevitt MA, Dunn RM, Spetch ML. When good pigeons make bad decisions: Choice with probabilistic delays and outcomes. Journal of the Experimental Analysis of Behavior. 2015;104(3):241–251. doi: 10.1002/jeab.177.

- Poling A, Thomas J, Hall-Johnson E, Picker M. Self-control revisited: Some factors that affect autoshaped responding. Behavioural Processes. 1985;10(1–2):77–85. doi: 10.1016/0376-6357(85)90119-6.

- Pyke GH. Optimal foraging theory: a critical review. Annual Review of Ecology and Systematics. 1984;15:523–575.

- Rachlin H, Baum WM. Response rate as a function of amount of reinforcement for a signalled concurrent response. Journal of the Experimental Analysis of Behavior. 1969;12(1):11–16. doi: 10.1901/jeab.1969.12-11.

- Rescorla RA. Pavlovian conditioned inhibition. Psychological Bulletin. 1969;72(2):77.

- Robinson TE, Berridge KC. The incentive sensitization theory of addiction: some current issues. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 2008;363(1507):3137–3146. doi: 10.1098/rstb.2008.0093.

- Robinson MJ, Berridge KC. Instant transformation of learned repulsion into motivational “wanting”. Current Biology. 2013;23(4):282–289. doi: 10.1016/j.cub.2013.01.016.

- Robinson MJ, Anselme P, Fischer AM, Berridge KC. Initial uncertainty in Pavlovian reward prediction persistently elevates incentive salience and extends sign-tracking to normally unattractive cues. Behavioural Brain Research. 2014;266:119–130. doi: 10.1016/j.bbr.2014.03.004.

- Robinson TE, Flagel SB. Dissociating the predictive and incentive motivational properties of reward-related cues through the study of individual differences. Biological Psychiatry. 2009;65(10):869–873. doi: 10.1016/j.biopsych.2008.09.006.

- Roper KL, Baldwin ER. The two-alternative observing response procedure in rats: Preference for nondiscriminative stimuli and the effect of delay. Learning and Motivation. 2004;35(4):275–302.

- Roper KL, Zentall TR. Observing behavior in pigeons: The effect of reinforcement probability and response cost using a symmetrical choice procedure. Learning and Motivation. 1999;30(3):201–220.

- Sanbonmatsu DM, Posavac SS, Stasney R. The subjective beliefs underlying probability overestimation. Journal of Experimental Social Psychology. 1997;33(3):276–295.

- Scott J. Rational choice theory. In: Understanding contemporary society: Theories of the present. 2000. p. 129.

- Shafir E, LeBoeuf RA. Rationality. Annual Review of Psychology. 2002;53(1):491–517. doi: 10.1146/annurev.psych.53.100901.135213.

- Silva FJ, Silva K, Pear JJ. Sign- versus goal-tracking: effects of conditioned-stimulus-to-unconditioned-stimulus distance. Journal of the Experimental Analysis of Behavior. 1992;57(1):17–31. doi: 10.1901/jeab.1992.57-17.

- Smith AP, Bailey AR, Chow JJ, Beckmann JS, Zentall TR. Suboptimal choice in pigeons: Stimulus value predicts choice over frequencies. PLoS One. 2016;11(7):e0159336. doi: 10.1371/journal.pone.0159336.

- Smith AP, Zentall TR. Suboptimal choice in pigeons: Choice is primarily based on the value of the conditioned reinforcer rather than overall reinforcement rate. Journal of Experimental Psychology: Animal Learning and Cognition. 2016;42(2):212. doi: 10.1037/xan0000092.

- Sodano R, Wulfert E. Cue reactivity in active pathological, abstinent pathological, and regular gamblers. Journal of Gambling Studies. 2010;26(1):53–65. doi: 10.1007/s10899-009-9146-8.

- Spetch ML, Belke TW, Barnet RC, Dunn R, Pierce WD. Suboptimal choice in a percentage-reinforcement procedure: effects of signal condition and terminal-link length. Journal of the Experimental Analysis of Behavior. 1990;53(2):219–234. doi: 10.1901/jeab.1990.53-219.

- Stagner JP, Zentall TR. Suboptimal choice behavior by pigeons. Psychonomic Bulletin & Review. 2010;17(3):412–416. doi: 10.3758/PBR.17.3.412.

- Stephens DW, Krebs JR. Foraging theory. Princeton University Press; 1986.

- Stiers M, Silberberg A. Lever-contact responses in rats: automaintenance with and without a negative response-reinforcer dependency. Journal of the Experimental Analysis of Behavior. 1974;22(3):497–506. doi: 10.1901/jeab.1974.22-497.

- Teigen KH. Studies in subjective probability IV: Probabilities, confidence, and luck. Scandinavian Journal of Psychology. 1983;24(1):175–191.

- Timberlake W. Behavior systems, associationism, and Pavlovian conditioning. Psychonomic Bulletin & Review. 1994;1(4):405–420. doi: 10.3758/BF03210945.

- Timberlake W, Wahl G, King DA. Stimulus and response contingencies in the misbehavior of rats. Journal of Experimental Psychology: Animal Behavior Processes. 1982;8(1):62.

- Trujano RE, López P, Rojas-Leguizamón M, Orduña V. Optimal behavior by rats in a choice task is associated to a persistent conditioned inhibition effect. Behavioural Processes. 2016;130:65–70. doi: 10.1016/j.beproc.2016.07.005.

- Trujano RE, Orduña V. Rats are optimal in a choice task in which pigeons are not. Behavioural Processes. 2015;119:22–27. doi: 10.1016/j.beproc.2015.07.010.

- Tversky A, Kahneman D. Judgment under uncertainty: Heuristics and biases. In: Utility, probability, and human decision making. Springer; 1975. pp. 141–162.

- Van Holst RJ, Van Holstein M, Van Den Brink W, Veltman DJ, Goudriaan AE. Response inhibition during cue reactivity in problem gamblers: an fMRI study. PLoS One. 2012;7(3):e30909. doi: 10.1371/journal.pone.0030909.

- Vasconcelos M, Monteiro T, Kacelnik A. Irrational choice and the value of information. Scientific Reports. 2015;5:13874. doi: 10.1038/srep13874.

- Williams BA. Conditioned reinforcement: Experimental and theoretical issues. The Behavior Analyst. 1994;17(2):261. doi: 10.1007/BF03392675.

- Williams BA, Dunn R. Preference for conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1991;55(1):37–46. doi: 10.1901/jeab.1991.55-37.

- Wyckoff LB Jr. The role of observing responses in discrimination learning. Part I. Psychological Review. 1952;59(6):431. doi: 10.1037/h0053932.

- Yin HH, Knowlton BJ. The role of the basal ganglia in habit formation. Nature Reviews Neuroscience. 2006;7(6):464–476. doi: 10.1038/nrn1919.

- Zeeb FD, Robbins TW, Winstanley CA. Serotonergic and dopaminergic modulation of gambling behavior as assessed using a novel rat gambling task. Neuropsychopharmacology. 2009;34(10):2329–2343. doi: 10.1038/npp.2009.62.

- Zeeb FD, Winstanley CA. Lesions of the basolateral amygdala and orbitofrontal cortex differentially affect acquisition and performance of a rodent gambling task. The Journal of Neuroscience. 2011;31(6):2197–2204. doi: 10.1523/JNEUROSCI.5597-10.2011.

- Zentall TR. Suboptimal choice by pigeons: An analog of human gambling behavior. Behavioural Processes. 2014;103:156–164. doi: 10.1016/j.beproc.2013.11.004.

- Zentall TR. When animals misbehave: analogs of human biases and suboptimal choice. Behavioural Processes. 2015;112:3–13. doi: 10.1016/j.beproc.2014.08.001.

- Zentall TR. Resolving the paradox of suboptimal choice. Journal of Experimental Psychology: Animal Learning and Cognition. 2016;42(1):1. doi: 10.1037/xan0000085.

- Zentall TR, Stagner J. Maladaptive choice behaviour by pigeons: an animal analogue and possible mechanism for gambling (sub-optimal human decision-making behaviour). Proceedings of the Royal Society of London B: Biological Sciences. 2011;278(1709):1203–1208. doi: 10.1098/rspb.2010.1607.