Abstract

What mechanisms support our ability to estimate durations on the order of minutes? Behavioral studies in humans have shown that changes in contextual features lead to overestimation of past durations. Based on evidence that the medial temporal lobes and prefrontal cortex represent contextual features, we related the degree of fMRI pattern change in these regions with people’s subsequent duration estimates. After listening to a radio story in the scanner, participants were asked how much time had elapsed between pairs of clips from the story. Our ROI analyses found that duration estimates were correlated with the neural pattern distance between two clips at encoding in the right entorhinal cortex. Moreover, whole-brain searchlight analyses revealed a cluster spanning the right anterior temporal lobe. Our findings provide convergent support for the hypothesis that retrospective time judgments are driven by 'drift' in contextual representations supported by these regions.

DOI: http://dx.doi.org/10.7554/eLife.16070.001

Research Organism: Human

eLife digest

How do humans judge how much time has passed during daily life, such as when waiting for the bus? Psychology studies have shown that people remember events to have lasted longer when more changes occurred during that time period. These changes can occur either in the environment (such as changes in location) or in the individual’s internal state (such as changes in goals and emotions).

Brain activity changes from moment to moment. Lositsky et al. hypothesized that when patterns of activity in a person’s brain change a lot across an interval of time, that person will judge that a long time has passed. On the other hand, if brain activity changes less over that interval, individuals will judge that less time has passed.

Some regions of the brain are sensitive to information that unfolds over several minutes; many of these regions are vital for forming memories of episodes from our lives. Using a technique called functional magnetic resonance imaging (fMRI), Lositsky et al. specifically looked at the activity of these regions while volunteers listened to a 25-minute radio drama. Afterwards, the volunteers listened to clips from different events in the story and judged how much time passed between those events.

Even though each pair of audio clips occurred exactly two minutes apart in the original story, people’s time judgments were strongly influenced by how many scene changes happened in the story between the two clips. In a part of the brain called the right anterior temporal lobe – and especially in a region of it called the entorhinal cortex – Lositsky et al. found that brain activity changed more when audio clips were judged to be further apart in time. Activity in this region fluctuated more slowly overall than in the rest of the brain. This could mean that it combines sensory information (about images, sounds, smells and so on) across minutes of time, in order to form a representation of the current situation.

Future research could focus on several unanswered questions. Exactly which environmental and internal changes influence our perception of time? What form does this information take in the entorhinal cortex? Studies show that the entorhinal cortex contains “grid cells” that track our location in space. Could these cells also help judge the passage of time?

Introduction

Imagine that you are at the bus stop when you run into a colleague and the two of you become engrossed in a conversation about memory research. After a few minutes, you realize that the bus still has not arrived. Without looking at your watch, you have some sense of how long you have been waiting. Where does this intuition come from?

Estimation of durations lasting a few seconds has been probed in the neuroimaging, neuropsychology and neuropharmacology literatures (see Wittmann, 2013, for a review). On the other hand, the neural mechanisms underlying time perception on the scale of minutes have remained unexplored. This is particularly true of retrospective judgments, where individuals experience an interval without paying attention to time and must subsequently estimate the interval’s duration. In such cases, individuals must rely on information stored in memory to estimate duration. How is this accomplished?

Memory scholars have long posited that the same contextual cues that help us to retrieve an item from memory can also help us determine its recency. According to extant theories of context and memory (see Manning et al., 2014, for a review), mental context refers to aspects of our mental state that tend to persist over a relatively long time scale; this encompasses our representation of slowly-changing aspects of the external world (e.g., what room we are in) as well as other slowly-changing aspects of our internal mental state (e.g., our current plans). Crucially, these theories posit that slowly-changing contextual features can be episodically associated with more quickly-changing aspects of the world (e.g., stimuli that appear at a particular moment in time; Mensink and Raaijmakers, 1988; Howard and Kahana, 2002).

Bower (1972) first proposed that we could determine how long ago an item occurred by comparing our current context with the context associated with the remembered item. The similarity of these two context representations would reflect their temporal distance, with more similar representations associated with events that happened closer together in time. Thus, a slowly varying mental context could serve as a temporal tag (Polyn and Kahana, 2008). In parallel, researchers in the domain of retrospective time estimation have shown that the degree of context change is a better predictor of duration judgments than alternative explanations, such as the number of items remembered from the interval (Block and Reed, 1978; Block, 1990, 1992). Indeed, changes in task processing (Block and Reed, 1978; Sahakyan and Smith, 2014), environmental context (Block, 1982), and emotions (Pollatos et al., 2014), as well as event boundaries (Poynter, 1983; Zakay et al., 1994; Faber and Gennari, 2015), lead to overestimation of past durations.

In our study, we set out to obtain neural evidence in support of the hypothesis that mental context change drives duration estimates. Specifically, we hypothesized that, in brain regions representing mental context, the degree of neural pattern change between two events (operationalized as change in multi-voxel patterns of fMRI activity) should predict participants’ estimates of how much time passed between those events.

Extensive prior work has implicated the medial temporal lobe (MTL) and lateral prefrontal cortex (PFC) in representing contextual information (Polyn and Kahana, 2008; for reviews of MTL contributions to representing context, see Eichenbaum et al., 2007, and Ritchey and Ranganath, 2012; for related computational modeling work, see Howard and Eichenbaum, 2013). In keeping with our hypothesis, multiple studies have obtained evidence linking neural pattern change in these regions to temporal memory judgments. Manns et al. (2007) recorded from rat hippocampus during an odor memory task; they found that greater change in hippocampal activity patterns between two stimuli predicted better memory for the order in which the stimuli occurred. In the human neuroimaging literature, Jenkins and Ranganath (2010) found that the degree to which activity patterns in rostrolateral prefrontal cortex changed during the encoding of a stimulus predicted better memory for the temporal position of that stimulus in the experiment. Jenkins and Ranganath (2016) also showed that greater pattern distance between two stimuli at encoding in the hippocampus and in medial and anterior prefrontal cortex predicted better order memory. Only one study has directly related neural pattern drift to judgments of elapsed time in humans: Ezzyat and Davachi (2014) found that patterns of fMRI activity in left hippocampus were more similar for pairs of stimuli that were later estimated to have occurred closer together in time, despite the same amount of elapsed time between all pairs (a little less than a minute).

While the Ezzyat and Davachi (2014) study provides support for our hypothesis, it has some limitations. First, in Ezzyat and Davachi (2014), participants estimated the temporal distance of stimuli that were linked to their contexts in an artificial way (by placing pictures of objects or famous faces on unrelated scene backgrounds); it is unclear whether these results will generalize to more naturalistic situations where events are linked through a narrative. Second, since participants performed the temporal memory test after each encoding run, they were not entirely naïve to the manipulation. Knowing that they would have to estimate durations between stimuli could have changed participants’ strategy and enhanced their attention to time (for evidence that estimating time prospectively engages different mechanisms, see Hicks et al., 1976, and Zakay and Block, 2004). In the current study, we sought to address the above issues by eliciting temporal distance judgments for pairs of events that had occurred several minutes apart and that were embedded in the context of a rich naturalistic story; participants listened to the entire story before being informed about the temporal judgment task.

Based on the studies reviewed above, we predicted that neural pattern drift in medial temporal and lateral prefrontal regions might support duration estimation. In our study, we examined these regions of interest (ROIs), as well as a broader set of regions that have been implicated in fMRI studies of time estimation, including the inferior parietal cortex, putamen, insula and frontal operculum (see Box 1 for a review). In addition to the ROI analysis, which examined activity patterns in masks that were anatomically defined, we performed a searchlight analysis, which examined activity patterns within small cubes over the whole brain.

Box 1. fMRI literature on prospective time estimation.

As noted in the main text, only one study (Ezzyat and Davachi, 2014) has used fMRI to study retrospective estimation of time intervals lasting more than a few seconds. The vast majority of fMRI studies of time estimation have used prospective tasks, in which participants are asked to deliberately track the duration of a short stimulus or compare the duration of two stimuli. Such studies have repeatedly shown that activity in the putamen, insula, inferior frontal cortex (frontal operculum), and inferior parietal cortex increases as participants pay more attention to the duration of stimuli, as opposed to another time-varying attribute (Coull, 2004; Coull et al., 2004; Livesey et al., 2007; Wiener et al., 2010; Wittmann et al., 2010). Dirnberger et al. (2012) showed that greater activity in the putamen and insula during encoding of aversive emotional pictures predicted better subsequent memory for those pictures, but only when their duration was overestimated relative to neutral images. This suggests that the putamen and insula might mediate the relationship between enhanced processing for emotional stimuli and subjective time dilation. Given the established role of these regions in time processing (albeit of a different sort), we included these regions in the set of a priori ROIs for our main fMRI analysis.

Participants were scanned while they listened to a 25-minute science fiction radio story. Outside the scanner, they were surprised with a time perception test, in which they had to estimate how much time had passed between pairs of auditory clips from the story. Controlling for objective time, we found that the degree of neural pattern distance between two clips at the time of encoding predicted how much time an individual would later estimate passed between them. The effect was significant in the right entorhinal cortex ROI. Extending the anatomical analysis to all masks in cortex revealed an additional effect in the left caudal anterior cingulate cortex (ACC). Moreover, whole-brain searchlight analyses yielded significant clusters spanning the right anterior temporal lobe. Our results suggest that patterns of neural activity in these regions may carry contextual information that helps us make retrospective time judgments on the order of minutes.

Results

Behavioral results

Participants were sensitive to the duration of story intervals

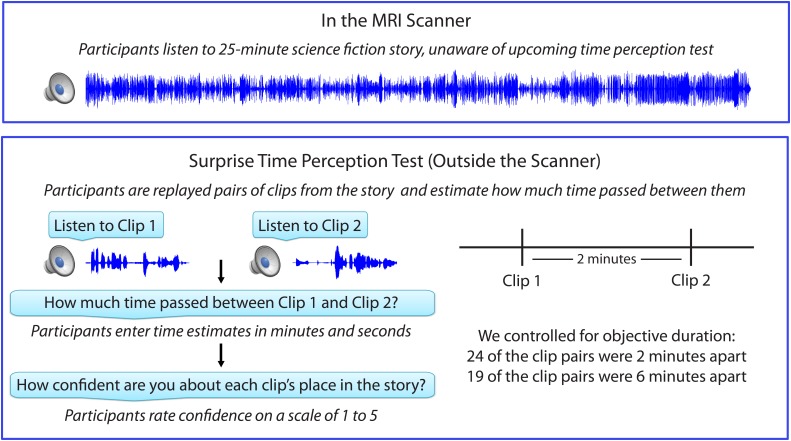

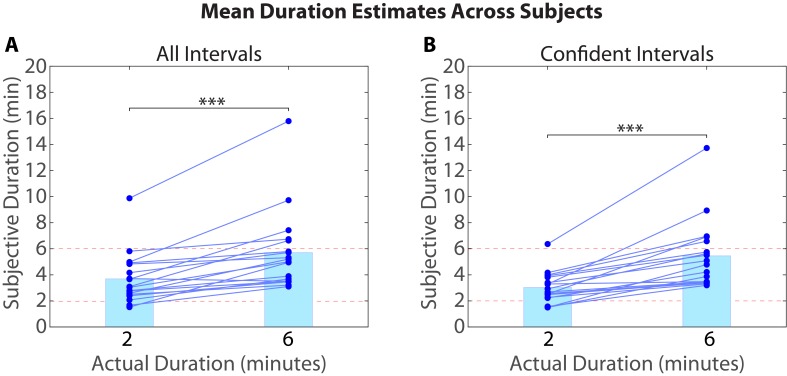

Figure 1 shows the experimental design, which consisted of an fMRI session followed immediately by a behavioral session. After listening to a 25-min radio story in the scanner, participants were asked how much time had passed between 43 pairs of clips from the story. In actuality, 24 of the clip pairs had been presented 2 minutes apart in the story, while 19 of the clip pairs had been presented 6 minutes apart (participants were not informed of this). Participants were able to estimate the duration of experienced minutes-long intervals far above chance, albeit with substantial intra- and inter-individual variability. On average, across participants, the 6-min intervals (M=5.70 min, SD=3.06 min) were judged to be significantly longer than the 2-min intervals (M=3.69 min, SD=1.96 min), t(17) = 5.20, p < 10⁻⁴ (see Figure 2A).
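The group comparison above is a paired t-test on per-participant means: each participant contributes one mean estimate for 2-min intervals and one for 6-min intervals, which gives 17 degrees of freedom for 18 participants. The sketch below illustrates that computation under assumptions: the array layout (one row per participant, one column per clip pair, NaN for a missing estimate) and the function name `compare_interval_lengths` are hypothetical, and the data are synthetic rather than from the study.

```python
import numpy as np
from scipy import stats

def compare_interval_lengths(estimates, actual):
    """Paired t-test of per-participant mean estimates, 6-min vs 2-min intervals.

    estimates: (n_participants, n_pairs) estimates in minutes, NaN if missing
    actual:    (n_pairs,) true spacing of each clip pair in the story (2 or 6)
    """
    estimates = np.asarray(estimates, dtype=float)
    actual = np.asarray(actual)
    # One mean per participant for each true interval duration
    mean_2 = np.nanmean(estimates[:, actual == 2], axis=1)
    mean_6 = np.nanmean(estimates[:, actual == 6], axis=1)
    t, p = stats.ttest_rel(mean_6, mean_2)
    return mean_2, mean_6, t, p

# Synthetic data only: 18 participants, 24 two-minute and 19 six-minute pairs
rng = np.random.default_rng(0)
actual = np.array([2] * 24 + [6] * 19)
estimates = actual + rng.normal(1.0, 2.0, size=(18, actual.size))
mean_2, mean_6, t, p = compare_interval_lengths(estimates, actual)
print(f"t({mean_2.size - 1}) = {t:.2f}, p = {p:.2g}")
```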

Figure 1. Experimental design.

Figure 2. Mean duration estimates for all intervals (A) and high-confidence intervals (B) as a function of their actual duration.

Each blue circle represents the mean duration estimate for an individual participant within a given interval duration (2 or 6 min). The blue bar heights represent the global means for 2- and 6-min intervals across intervals and participants.

DOI: http://dx.doi.org/10.7554/eLife.16070.005

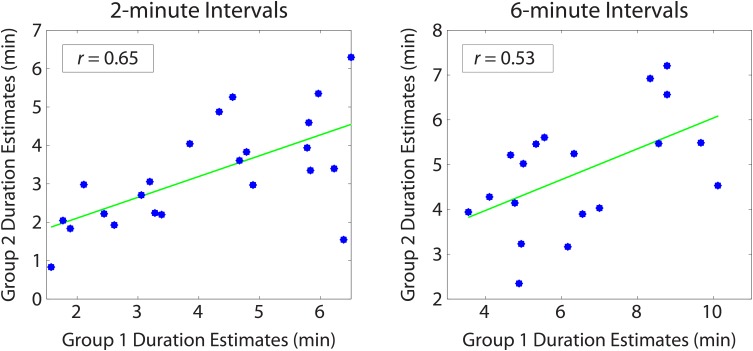

Figure 2—figure supplement 1. Reliability of duration estimates across participants.

As described in the Materials and methods (see Removing low-confidence intervals), participants also provided confidence ratings reflecting their certainty about each clip’s place in the story. Based on this measure, we grouped each participant’s duration estimates into high-confidence and low-confidence intervals. To verify that participants were better at distinguishing 6-min intervals from 2-min intervals when they were confident, we calculated, for every participant, the difference between the mean duration estimate for 6-min intervals and the mean duration estimate for 2-min intervals. The difference score was significantly higher for high-confidence intervals (M=2.43 min, SD=1.82 min) than for all intervals (M=2.01 min, SD=1.64 min), t(17)=2.33, p=0.0324. Thus, participants were significantly more accurate at estimating an interval’s duration when they confidently remembered the temporal position of both clips delimiting that interval in the story (see Figure 2B).
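The difference score can be sketched as follows. This is a minimal illustration, not the authors' code: the helper name `difference_scores`, the boolean `keep` mask standing in for the confidence ratings, and the synthetic data (confident estimates track the true duration, low-confidence ones sit near 4 min) are all assumptions. Comparing the two sets of scores with a paired t-test mirrors the t(17) test reported above.

```python
import numpy as np
from scipy import stats

def difference_scores(estimates, actual, keep=None):
    """Per-participant mean 6-min estimate minus mean 2-min estimate.

    keep: optional boolean mask, same shape as estimates (e.g., True for
          high-confidence intervals); entries outside the mask are ignored.
    """
    est = np.array(estimates, dtype=float)  # copy, so the caller's array is untouched
    if keep is not None:
        est[~np.asarray(keep, dtype=bool)] = np.nan
    actual = np.asarray(actual)
    return (np.nanmean(est[:, actual == 6], axis=1)
            - np.nanmean(est[:, actual == 2], axis=1))

# Synthetic data only: confident estimates follow the true duration,
# low-confidence estimates hover around 4 min regardless of it
rng = np.random.default_rng(1)
actual = np.array([2] * 24 + [6] * 19)
confident = rng.random((18, actual.size)) < 0.7
noise = rng.normal(0.0, 1.0, size=confident.shape)
estimates = np.where(confident, actual + noise, 4.0 + noise)

high = difference_scores(estimates, actual, keep=confident)
all_intervals = difference_scores(estimates, actual)
t, p = stats.ttest_rel(high, all_intervals)
```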

For a given interval duration, some intervals were consistently judged to be longer than other intervals across participants, although the actual amount of elapsed time was held constant. To test the reliability of duration estimates across participants, we randomly split the participants into two groups, averaged the duration estimates within each group, and correlated the two group averages with each other. We repeated this procedure 1000 times to sample a variety of group splits. The average correlation between the two group averages was 0.64 (SD=0.09) for 2-min intervals and 0.54 (SD=0.15) for 6-min intervals (see Figure 2—figure supplement 1). This analysis suggests that features of the story made some intervals appear consistently shorter and other intervals appear consistently longer across participants.
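The split-half procedure just described can be sketched in a few lines. The sketch assumes complete data (no missing estimates) and is meant to be run separately on the 2-min and the 6-min intervals; the function name and the synthetic data (a shared per-interval effect plus individual noise) are illustrative assumptions.

```python
import numpy as np

def split_half_reliability(estimates, n_splits=1000, seed=0):
    """Mean and SD of correlations between two random half-group averages.

    estimates: (n_participants, n_intervals) duration estimates, no missing values.
    """
    rng = np.random.default_rng(seed)
    estimates = np.asarray(estimates, dtype=float)
    n = estimates.shape[0]
    rs = np.empty(n_splits)
    for i in range(n_splits):
        order = rng.permutation(n)  # random split of participants into two halves
        half_a = estimates[order[: n // 2]].mean(axis=0)
        half_b = estimates[order[n // 2:]].mean(axis=0)
        rs[i] = np.corrcoef(half_a, half_b)[0, 1]
    return rs.mean(), rs.std(ddof=1)

# Synthetic data only: a shared per-interval effect plus individual noise
rng = np.random.default_rng(2)
interval_effect = rng.normal(0.0, 1.0, size=24)
estimates = 3.0 + interval_effect + rng.normal(0.0, 1.0, size=(18, 24))
mean_r, sd_r = split_half_reliability(estimates)
```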

Duration estimates are influenced by memory of the story

We found that participants estimated six-minute intervals to be significantly longer than two-minute intervals (Figure 2), and that some intervals in the story tended to be systematically over-estimated by participants (Figure 2—figure supplement 1). However, it is possible that participants could judge the temporal distance between two clips purely based on the similarity between them (e.g., are the same characters speaking? Is the background music the same? Is the topic of conversation similar?).

To ensure that participants were using their memory of the story to judge temporal distance, we ran a control experiment in which 17 participants who had never heard the story were given the exact same memory test. They were asked to try to estimate the amount of time that had elapsed between each pair of clips during the original telling of the story. During debriefing, participants reported making duration estimates based on the perceptual and semantic similarity between the two clips (e.g., which character voices were present, which background music was playing, the topic of conversation).

We found that naïve participants estimated 6-min intervals (M=6.21 min, SD=1.91) to be longer than 2-min intervals (M=5.63 min, SD=1.74; t(16)=2.62, p=0.019), suggesting that the similarity between two clips carried some information about the temporal distance between them. However, naïve participants were significantly less accurate at distinguishing 6-min intervals from 2-min intervals than our original participants who had heard the story. To quantify this, we calculated the difference between the mean duration estimates for 6-min intervals and the mean duration estimates for 2-min intervals for every participant (exactly as above). The difference score was significantly higher for our original participants (M=2.01 min, SD=1.64 min) than for naïve participants (M=0.59 min, SD=0.91 min), t(26.86)=−3.22, p<0.005. Thus, having memory of the story enabled our participants to estimate durations with significantly higher accuracy.
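The fractional degrees of freedom in t(26.86) are characteristic of Welch's unequal-variance t-test. As a check, the sketch below recomputes the test from the summary statistics reported above (difference scores for 17 naïve and 18 original participants); `welch_from_stats` is a hypothetical helper, not part of the original analysis.

```python
import math
from scipy import stats

def welch_from_stats(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's unequal-variance t-test from summary statistics.

    Returns t, the Welch-Satterthwaite degrees of freedom, and a two-sided p.
    """
    va, vb = sd_a ** 2 / n_a, sd_b ** 2 / n_b  # squared standard errors
    t = (mean_a - mean_b) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (n_a - 1) + vb ** 2 / (n_b - 1))
    p = 2 * stats.t.sf(abs(t), df)
    return t, df, p

# Difference scores reported above: naive (n = 17) vs. original (n = 18)
t, df, p = welch_from_stats(0.59, 0.91, 17, 2.01, 1.64, 18)
print(f"t({df:.2f}) = {t:.2f}, p = {p:.3f}")
```

The degrees of freedom come out at about 26.86, matching the reported value. The t statistic comes out near −3.19 rather than −3.22 because the inputs are rounded summary statistics rather than the raw scores.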

We hypothesized that both our original participants and the naïve participants would use consistent strategies to estimate the temporal distance between two clips, but that these strategies would differ across groups. If this is the case, duration estimates should be more correlated across participants within groups than across participants between groups. The correlation in duration estimates across participants within a group (see Materials and methods) was as strong for naïve participants (M=0.43, SD=0.18, 95% CI [0.40, 0.56]) as for our original participants (M=0.43, SD=0.25, 95% CI [0.37, 0.58]), suggesting that both groups used a consistent strategy to estimate the distance between two clips. When we correlated duration estimates from our original group of participants with those of our naïve participants, we found that the between-group correlations (M=0.18, SD=0.22, 95% CI [0.04, 0.28]) were significantly above 0, suggesting that a component of the original duration estimates was influenced by the similarity in content between clips. However, the between-group correlations were significantly lower than the within-group correlations (p<0.0001, as assessed by a permutation test described in the Materials and methods). In other words, there is a reliable component of our original participants’ behavior that cannot be captured by accounting for the perceptual and semantic similarity between clips. In summary, having memory of the story induced a qualitatively different pattern of behavior and produced significantly more accurate duration estimates.
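A minimal sketch of the within- versus between-group comparison follows, under stated assumptions: "within-group correlation" is taken to be the mean Pearson r over distinct participant pairs in a group, the two groups' within-group values are averaged, and the permutation test shuffles group labels across participants. The exact procedure is the one given in the Materials and methods; the function names and synthetic data here are hypothetical.

```python
import numpy as np

def mean_pairwise_r(x, y=None):
    """Mean Pearson r between participants' estimate vectors.

    x, y: (n_participants, n_intervals). With y=None, averages over distinct
    pairs within x; otherwise averages over all (x row, y row) pairs.
    """
    if y is None:
        r = np.corrcoef(x)
        return r[np.triu_indices_from(r, k=1)].mean()
    r = np.corrcoef(np.vstack([x, y]))
    return r[: len(x), len(x):].mean()

def within_minus_between(x, y):
    within = (mean_pairwise_r(x) + mean_pairwise_r(y)) / 2
    return within - mean_pairwise_r(x, y)

def group_permutation_test(x, y, n_perm=10000, seed=0):
    """One-sided test that within-group correlations exceed between-group ones,
    using a null built by shuffling group labels across participants."""
    rng = np.random.default_rng(seed)
    observed = within_minus_between(x, y)
    pooled = np.vstack([x, y])
    null = np.empty(n_perm)
    for i in range(n_perm):
        idx = rng.permutation(len(pooled))
        null[i] = within_minus_between(pooled[idx[: len(x)]], pooled[idx[len(x):]])
    p = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p

# Synthetic data only: each group shares its own pattern across intervals
rng = np.random.default_rng(3)
original = rng.normal(size=24) + rng.normal(0.0, 1.0, size=(18, 24))
naive = rng.normal(size=24) + rng.normal(0.0, 1.0, size=(17, 24))
diff, p = group_permutation_test(original, naive, n_perm=2000)
```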

Correlation between number of event boundaries and duration estimates

To gain additional evidence that duration estimates were related to contextual change, we looked at the correlation between estimated duration and the number of event boundaries in the interval between the clips. The number of intervening event boundaries can be viewed as a proxy for contextual change, insofar as event boundaries often encompass changes in scene, characters and conversation topic (Kurby and Zacks, 2008; Zacks et al., 2009). As reviewed in the Introduction, numerous studies have found a relationship between changes in contextual features during an interval and duration estimates for that interval.

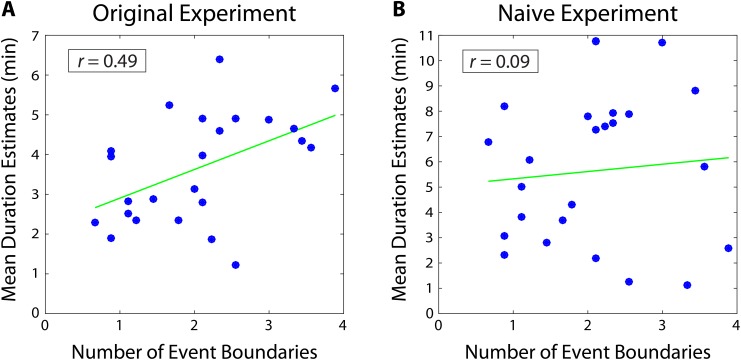

A separate group of participants (n=9) listened to the story and were asked to press a button whenever they felt an event boundary was occurring. These data were then averaged across participants to obtain the mean number of event boundaries inside each two-minute interval. We found that the mean number of boundaries in an interval was significantly correlated with the mean duration estimates from our original experiment (r = 0.49, 95% CI [0.27, 0.57]; Figure 3A). This suggests that our participants’ retrospective duration estimates were influenced by the number of contextual changes that had occurred during an interval.
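The text does not state how the 95% CI on this correlation was obtained. One plausible approach, assumed here, is a percentile bootstrap that resamples participants and treats the per-interval boundary counts from the separate annotation group as fixed. The function name and synthetic data are illustrative only.

```python
import numpy as np

def boundary_correlation(estimates, n_boundaries, n_boot=10000, seed=0):
    """Pearson r between mean duration estimates and mean boundary counts per
    interval, with a percentile bootstrap CI from resampling participants."""
    rng = np.random.default_rng(seed)
    estimates = np.asarray(estimates, dtype=float)  # (n_participants, n_intervals)
    n = estimates.shape[0]
    r = np.corrcoef(estimates.mean(axis=0), n_boundaries)[0, 1]
    boot = np.empty(n_boot)
    for i in range(n_boot):
        resampled = estimates[rng.integers(0, n, size=n)]  # participants with replacement
        boot[i] = np.corrcoef(resampled.mean(axis=0), n_boundaries)[0, 1]
    low, high = np.percentile(boot, [2.5, 97.5])
    return r, (low, high)

# Synthetic data only
rng = np.random.default_rng(4)
n_boundaries = rng.uniform(1.0, 6.0, size=24)  # mean button presses per interval
estimates = 2.5 + 0.3 * n_boundaries + rng.normal(0.0, 1.5, size=(18, 24))
r, (low, high) = boundary_correlation(estimates, n_boundaries, n_boot=2000)
```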

Figure 3. Mean duration estimates for 2-minute intervals as a function of the number of event boundaries in each interval.

The number of event boundaries in an interval predicted retrospective duration estimates in our original experiment (A), but did not significantly predict duration estimates of naïve participants (B) who had never heard the story. This suggests that the number of contextual changes between two clips influenced temporal distance judgments significantly more when the content of the story between the two clips could be recalled.

DOI: http://dx.doi.org/10.7554/eLife.16070.008

However, it is important to note that the number of event boundaries between two clips also influences the perceptual and semantic similarity between them (e.g., clips from the same scene might sound more similar than clips from different scenes). Thus, our participants’ duration estimates could correlate with the number of event boundaries, even if they were basing their estimates purely on the perceptual similarity between clips. To explore this possibility, we tested whether the number of event boundaries would correlate with duration estimates from naïve participants, who could only judge temporal distance based on the similarity between clips, given that they had never heard the story.

Importantly, we found that the number of event boundaries in an interval did not significantly correlate with duration estimates of naïve participants (r=0.09, 95% CI [−0.05, 0.21]; Figure 3B). Of course, we cannot definitively prove the null hypothesis that naïve duration estimates do not correlate with the number of event boundaries. However, the correlation between the number of boundaries and duration estimates was significantly higher for our original participants than for naïve participants (Δr = 0.40, 95% CI [0.15, 0.56]). In other words, duration estimates from participants who remembered the story were significantly more correlated with the number of contextual changes between two clips than duration estimates from participants who were judging temporal distance based merely on the similarity between the two clips. This suggests that the number of event boundaries carries information about temporal context that is not contained within the clips alone, and that our original participants’ estimates were influenced by their memory of this contextual information.
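The group difference Δr can be sketched with the same bootstrap logic, resampling participants independently within each group. This resampling scheme is an assumption rather than the documented procedure, and the helper name and synthetic data are hypothetical.

```python
import numpy as np

def delta_r(group_a, group_b, n_boundaries, n_boot=10000, seed=0):
    """Difference between two groups' boundary-count correlations, with a
    percentile bootstrap CI that resamples participants within each group."""
    rng = np.random.default_rng(seed)
    group_a, group_b = np.asarray(group_a, float), np.asarray(group_b, float)

    def r_of(est):
        # Correlate the group's mean estimate per interval with boundary counts
        return np.corrcoef(est.mean(axis=0), n_boundaries)[0, 1]

    observed = r_of(group_a) - r_of(group_b)
    boot = np.empty(n_boot)
    for i in range(n_boot):
        a = group_a[rng.integers(0, len(group_a), size=len(group_a))]
        b = group_b[rng.integers(0, len(group_b), size=len(group_b))]
        boot[i] = r_of(a) - r_of(b)
    return observed, tuple(np.percentile(boot, [2.5, 97.5]))

# Synthetic data only: group A's estimates track boundaries, group B's do not
rng = np.random.default_rng(5)
n_boundaries = rng.uniform(1.0, 6.0, size=24)
remembered = 2.5 + 0.3 * n_boundaries + rng.normal(0.0, 1.5, size=(18, 24))
naive = 5.5 + rng.normal(0.0, 1.5, size=(17, 24))
dr, (low, high) = delta_r(remembered, naive, n_boundaries, n_boot=2000)
```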

fMRI results

We tested whether BOLD pattern change between two clips correlated with temporal distance estimates, using both ROI and whole-brain searchlight analyses. Each type of analysis was performed both within-participants across intervals and within-intervals across participants.

In the within-participant analysis, we correlated each participant’s duration estimates with that participant’s neural pattern distances (see Within-Participant Correlation between Pattern Change and Duration Estimates and Within-Participant Whole-brain Searchlight). In the within-interval analysis, we correlated individual differences in subjective duration for a given interval with individual differences in neural pattern distance for that interval (see Within-Interval Correlation between Pattern Change and Duration Estimates and Within-Interval Whole-brain Searchlight). The two versions of each analysis were performed in order to rule out the possibility that our effects were driven either by participant or interval random effects. In particular, we were concerned that correlations between neural pattern distance and behavior could reflect sensitivity to perceptual or semantic features of the clips (i.e., clip pairs with similar perceptual/semantic features might be associated with shorter duration estimates and greater neural similarity, relative to clip pairs with more dissimilar features). The within-interval analysis addresses this concern by holding clip identity constant.

Next, we fit a mixed-effects model for each ROI (see Mixed-Effects Model Accounting for Naïve Duration Estimates), in which we estimated whether pattern distance in that ROI could predict duration estimates, even when accounting for participant random effects, item (interval) random effects, as well as naïve duration estimates (which are a proxy for the perceptual and semantic similarity between two clips, see Behavioral results).
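As an illustration only, a model of this kind could take the following form. The random-intercept-only structure and the use of the mean naïve estimate for each interval as the covariate are assumptions; the exact specification is given in the Materials and methods.

$$
\hat{D}_{ij} = \beta_0 + \beta_1\,\delta_{ij} + \beta_2\,N_j + u_i + v_j + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0,\sigma_u^2),\quad v_j \sim \mathcal{N}(0,\sigma_v^2),\quad \varepsilon_{ij} \sim \mathcal{N}(0,\sigma^2)
$$

Here $\hat{D}_{ij}$ is participant $i$'s duration estimate for interval $j$, $\delta_{ij}$ is the neural pattern distance for that interval in the ROI, $N_j$ is the mean naïve estimate for interval $j$, and $u_i$ and $v_j$ are participant and interval random intercepts. The question of interest is whether $\beta_1 > 0$ once $N_j$ and both random effects are in the model.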

Finally, we discuss the brain regions that showed significant effects across all analyses (see Comparing Results from ROI and Searchlight Analyses).

As noted in the Materials and methods, the ROI and searchlight analyses were conducted only on high-confidence two-minute intervals. Six-minute intervals were excluded from the fMRI analysis, since we could not successfully dissociate neural pattern change at this timescale from low-frequency scanner noise (see Methodological challenges with analyzing pattern distance over long time scales in the Materials and methods).

Anatomical ROI analyses

We first tested whether pattern change in regions suggested by the literature to be important for representing temporal context (see ROI Selection) correlated with retrospective duration estimates. Anatomical ROIs were derived from FreeSurfer cortical parcellation (Desikan et al., 2006) and from a probabilistic MTL atlas (Hindy and Turk-Browne, 2015).

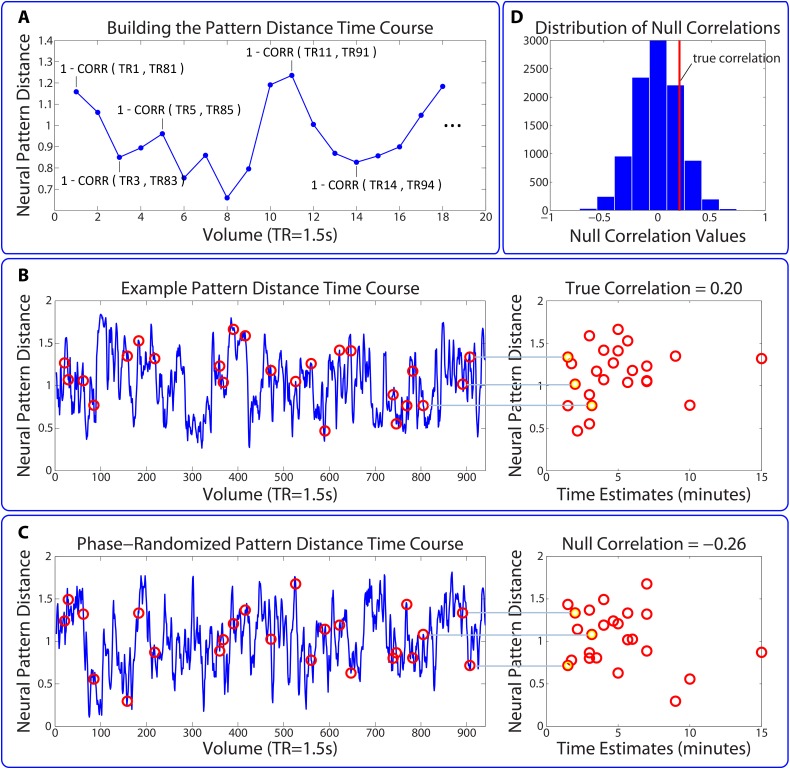

Within-participant correlation between pattern change and duration estimates

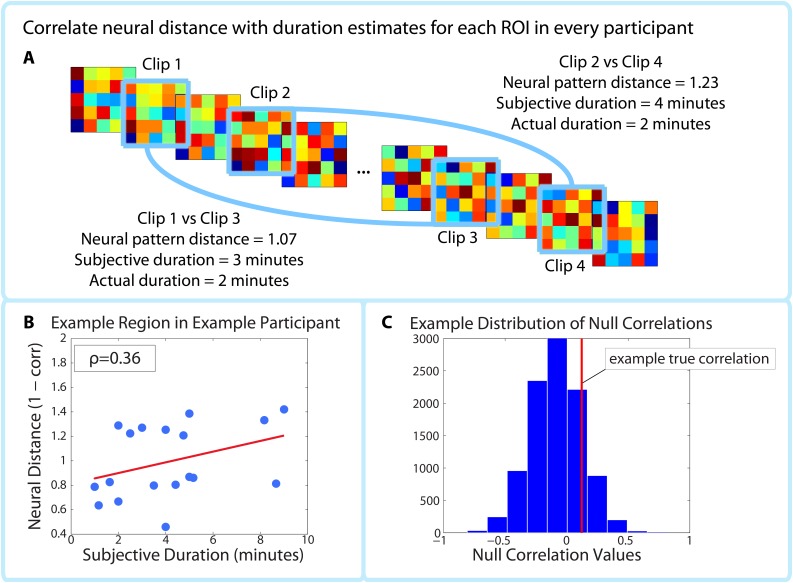

The within-participant analysis procedure is outlined in Figure 4. We calculated the correlation between neural pattern distance and duration estimates within participants (Figure 4A) in each of the 32 ROIs shown in Figure 5. To assess the likelihood of obtaining a correlation of that magnitude by chance, we used a phase randomization procedure (described in Materials and methods) to obtain 10,000 null correlations for each ROI in every participant. This enabled us to calculate a Z-value for every ROI in every participant, which reflects the strength of the actual correlation between pattern distance and duration estimates relative to the distribution of null correlations (Figure 4C). Here we report the regions whose Z-values were consistently positive across participants, corrected for multiple comparisons using False Discovery Rate (Benjamini et al., 2006).
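The sketch below illustrates the within-participant steps for one ROI in one participant under several assumptions. Clip patterns are taken as time-averaged voxel patterns over each clip's TRs. Pattern distance is 1 − Pearson r. The null comes from phase-randomized copies of the ROI time series that share one set of random phases across voxels, which preserves each voxel's amplitude spectrum and the spatial correlations. The function names, clip windows, and data are hypothetical; the authors' exact procedure is described in the Materials and methods.

```python
import numpy as np

def phase_randomize(data, rng):
    """Surrogate time series with the same amplitude spectrum but random phases.

    data: (n_timepoints, n_voxels). One set of random phases is shared by all
    voxels (an assumption), which keeps the spatial correlation structure.
    """
    n_t = data.shape[0]
    spectrum = np.fft.rfft(data, axis=0)
    phases = rng.uniform(0.0, 2 * np.pi, size=spectrum.shape[0])
    phases[0] = 0.0  # leave the mean (DC term) untouched
    if n_t % 2 == 0:
        phases[-1] = 0.0  # the Nyquist term of a real signal must stay real
    return np.fft.irfft(spectrum * np.exp(1j * phases)[:, None], n=n_t, axis=0)

def pattern_distances(data, clip_windows, pairs):
    """1 - Pearson r between time-averaged clip patterns, for each clip pair."""
    patterns = [data[start:stop].mean(axis=0) for start, stop in clip_windows]
    return np.array([1 - np.corrcoef(patterns[a], patterns[b])[0, 1] for a, b in pairs])

def within_participant_z(data, clip_windows, pairs, estimates, n_null=10000, seed=0):
    """Z-score of the observed distance-estimate correlation relative to a
    null distribution built from phase-randomized copies of the ROI data."""
    rng = np.random.default_rng(seed)
    observed = np.corrcoef(pattern_distances(data, clip_windows, pairs), estimates)[0, 1]
    null = np.array([
        np.corrcoef(pattern_distances(phase_randomize(data, rng), clip_windows, pairs),
                    estimates)[0, 1]
        for _ in range(n_null)
    ])
    return (observed - null.mean()) / null.std()

# Synthetic data only: 300 TRs x 40 voxels, 10 clips of 5 TRs, 5 clip pairs
rng = np.random.default_rng(6)
roi = rng.normal(size=(300, 40))
clips = [(s, s + 5) for s in range(0, 300, 30)]
pairs = [(0, 2), (1, 3), (4, 6), (5, 7), (6, 8)]
estimates = np.array([3.0, 4.5, 2.5, 5.0, 3.5])
z = within_participant_z(roi, clips, pairs, estimates, n_null=500)
```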

Figure 4. Correlating pattern distance with duration estimates within participants.

For each ROI in each participant, the pattern distance between each pair of clips at encoding was correlated with the participant’s retrospective duration estimate (A–B). The top panel (A) shows two example intervals. The neural distance (1 − Pearson’s r) between clips 2 and 4 (second interval) is greater than the neural distance between clips 1 and 3 (first interval), as is the subjective duration estimate. (B) shows the correlation between neural distance and duration estimates in a hypothetical region and participant. (C) We used a permutation test to generate 10,000 surrogate pattern distance vectors (see Figure 4—figure supplement 1), which we then used to obtain a distribution of null correlations between neural distances and duration estimates. For each ROI in each participant, we calculated the z-scored correlation value, which reflects the strength of the empirical correlation relative to the distribution of null correlations. For each ROI, we performed a random-effects t-test to assess whether the z-score was reliably positive across participants. P-values from this t-test were then subjected to multiple comparisons correction using False Discovery Rate (FDR).

Figure 4—figure supplement 1. Permutation test assessing the temporal specificity of correlations between pattern change and behavior.

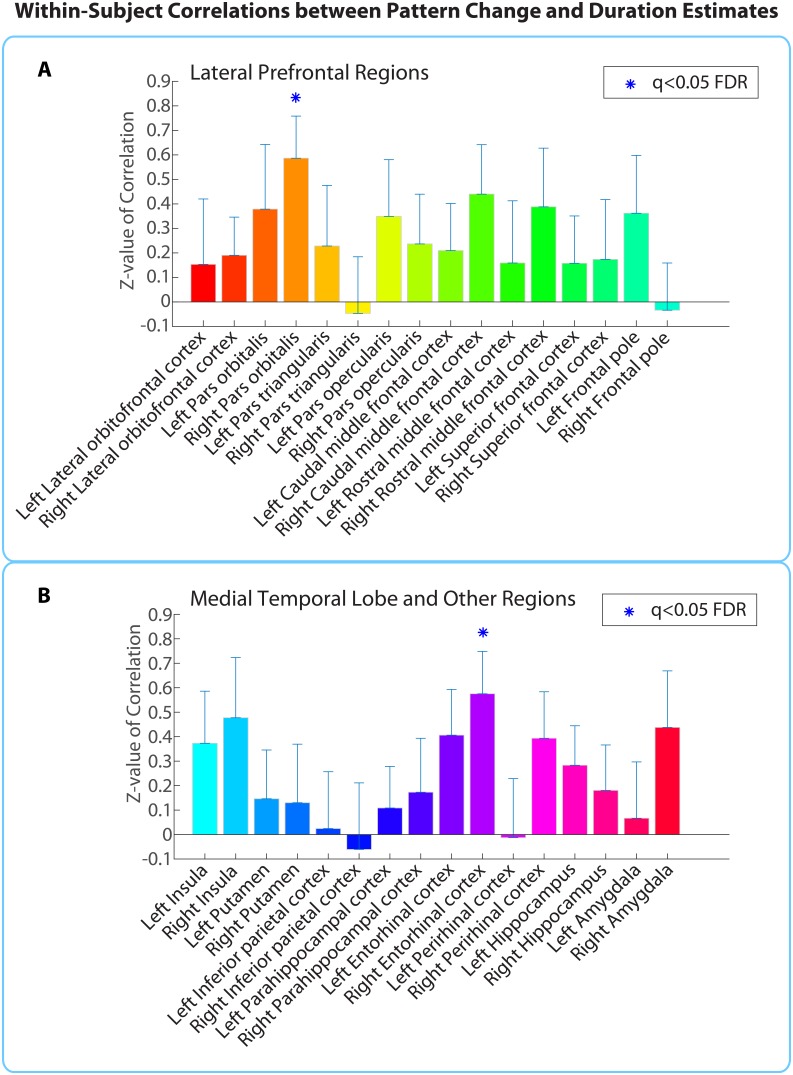

Figure 5. Within-participant ROI analysis: mean Z-values (across all 18 participants) of correlations between pattern distance and duration estimates for the 16 a priori ROIs in each hemisphere.

Z-values were obtained from the phase randomization procedure and reflect the strength of the empirical correlation relative to the distribution of null correlations. Error bars represent standard errors of the mean. The blue dots over the right entorhinal cortex and right pars orbitalis indicate that these ROIs survived FDR correction at q<0.05.

DOI: http://dx.doi.org/10.7554/eLife.16070.013

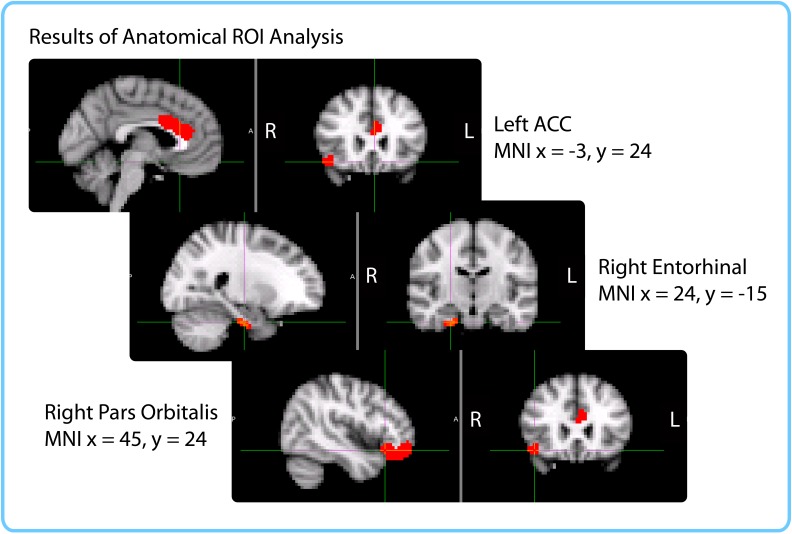

Figure 5—figure supplement 1. Anatomical ROIs that showed a significant correlation between pattern change and duration estimates within participants, after whole-brain FDR correction.

Out of the regions selected a priori, the right entorhinal cortex and right pars orbitalis showed a significant positive correlation between pattern change and duration estimates for high-confidence 2-minute intervals (q<0.05). Figure 5 shows the mean Z-values across participants for all a priori ROIs (16 in each hemisphere), including lateral prefrontal regions (top panel A), medial temporal lobe regions, insula, putamen, and inferior parietal cortex (bottom panel B). While a large number of these regions had Z-values that were positive across participants (e.g., left hippocampus, left entorhinal cortex, right perirhinal cortex, right amygdala, bilateral insula, and right caudal middle frontal cortex, p<0.05 uncorrected), we report only those that survived FDR correction.
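The group-level step (a right-tailed one-sample t-test on each ROI's participant Z-values, followed by FDR correction across ROIs) can be sketched as below. The sketch uses the standard Benjamini-Hochberg step-up adjustment. The procedure cited in the text (Benjamini et al., 2006) may differ in detail; adaptive variants, for example, also estimate the number of true null hypotheses. The function names and synthetic data are hypothetical.

```python
import numpy as np
from scipy import stats

def bh_adjust(p):
    """Benjamini-Hochberg adjusted p-values (q-values)."""
    p = np.asarray(p, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)
    # Step-up: each q is the minimum of the scaled values at or above its rank
    q_sorted = np.minimum.accumulate(scaled[::-1])[::-1]
    q = np.empty(m)
    q[order] = np.minimum(q_sorted, 1.0)
    return q

def group_level_rois(z_values):
    """Right-tailed one-sample t-test of participant Z-values per ROI, then BH.

    z_values: (n_participants, n_rois)
    """
    t, p = stats.ttest_1samp(z_values, 0.0, axis=0, alternative="greater")
    return t, p, bh_adjust(p)

# Synthetic data only: 18 participants x 32 ROIs, one ROI with a true effect
rng = np.random.default_rng(7)
z_values = rng.normal(size=(18, 32))
z_values[:, 5] += 2.0
t, p, q = group_level_rois(z_values)
```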

As part of an exploratory search, we also performed this analysis on the other brain regions derived from FreeSurfer cortical parcellation. This included the 16 ROIs mentioned above, in addition to regions in the occipital lobe, parietal lobe, medial prefrontal cortex, lateral temporal lobe, basal ganglia, thalamus and brainstem (the complete list of regions can be found in Figure 5—source data 1). Out of the 84 regions tested (42 in each hemisphere), the right entorhinal cortex, right pars orbitalis, and left caudal anterior cingulate cortex (ACC) showed significant positive correlations between pattern change and duration estimates (q<0.1). This suggests that the right entorhinal cortex and right pars orbitalis, which were part of our list of a priori ROIs, contained effects that were apparent even after whole-brain correction, and reveals an additional effect in the left caudal ACC that we had not anticipated. Figure 5—figure supplement 1 displays the locations of these three regions in MNI space.

Within-interval correlation between pattern change and duration estimates

Above, in the within-participant analysis, we found that the neural pattern distance between two clips at encoding was correlated with retrospective duration judgments in the right entorhinal cortex, right pars orbitalis and left caudal ACC. However, in the Behavioral results, we found that the perceptual and semantic similarity between two clips could explain some of the variance in subjective duration across intervals, even though it could not explain all the variance. Thus, it is possible that neural pattern change in these regions correlates with the component of duration estimates that is driven by perceptual and semantic content, rather than the component that is driven by abstract, slowly varying contextual features.

To rule out this concern, we performed a within-interval (across participants) version of the ROI analysis. For each ROI, we correlated (1) duration estimates for a given interval across participants with (2) the neural pattern distances for that interval across participants; results were then aggregated across all 2-min intervals. Rather than capturing variance within an individual across intervals of the story, this analysis captures variance across individuals for a given interval of the story. By performing the correlation within a given interval, we hold constant the perceptual and semantic content of the two clips and only leverage individual differences in how long the interval appeared retrospectively.

As described in the Materials and methods, a permutation test was used to assess the statistical significance of each correlation. Duration estimates were scrambled across participants 10,000 times to obtain a distribution of null correlations for every interval in every ROI. This enabled us to calculate a Z-value, which reflects the strength of the actual correlation between pattern distance and duration estimates relative to the distribution of null correlations. Finally, a right-tailed t-test was performed to assess whether the Z-values for a region were reliably above 0 across intervals. The p-values from this t-test were subjected to multiple comparisons correction using FDR.
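The permutation step for a single interval in a single ROI can be sketched in plain Python as below. This is an illustration under assumptions, not the authors' implementation: the Z-value is taken to be the observed correlation minus the mean of the null correlations, divided by their standard deviation, and the function names are hypothetical.

```python
import random
import statistics


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def permutation_z(durations, distances, n_perm=10_000, seed=0):
    """Z-value of the observed duration/distance correlation for one interval,
    relative to a null built by shuffling duration estimates across participants.

    durations[i] and distances[i] belong to participant i.
    """
    rng = random.Random(seed)
    observed = pearson(durations, distances)
    shuffled = list(durations)
    null = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break the participant-wise pairing
        null.append(pearson(shuffled, distances))
    return (observed - statistics.fmean(null)) / statistics.stdev(null)


def one_sample_t(values):
    """t statistic (df = n - 1) for testing whether the mean of values exceeds 0."""
    n = len(values)
    return statistics.fmean(values) / (statistics.stdev(values) / n ** 0.5)
```

The Z-values from all intervals of an ROI would then be passed to `one_sample_t`; a right-tailed p-value follows from the t distribution with n − 1 degrees of freedom, for example via `scipy.stats.ttest_1samp(z_values, 0, alternative="greater")`.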

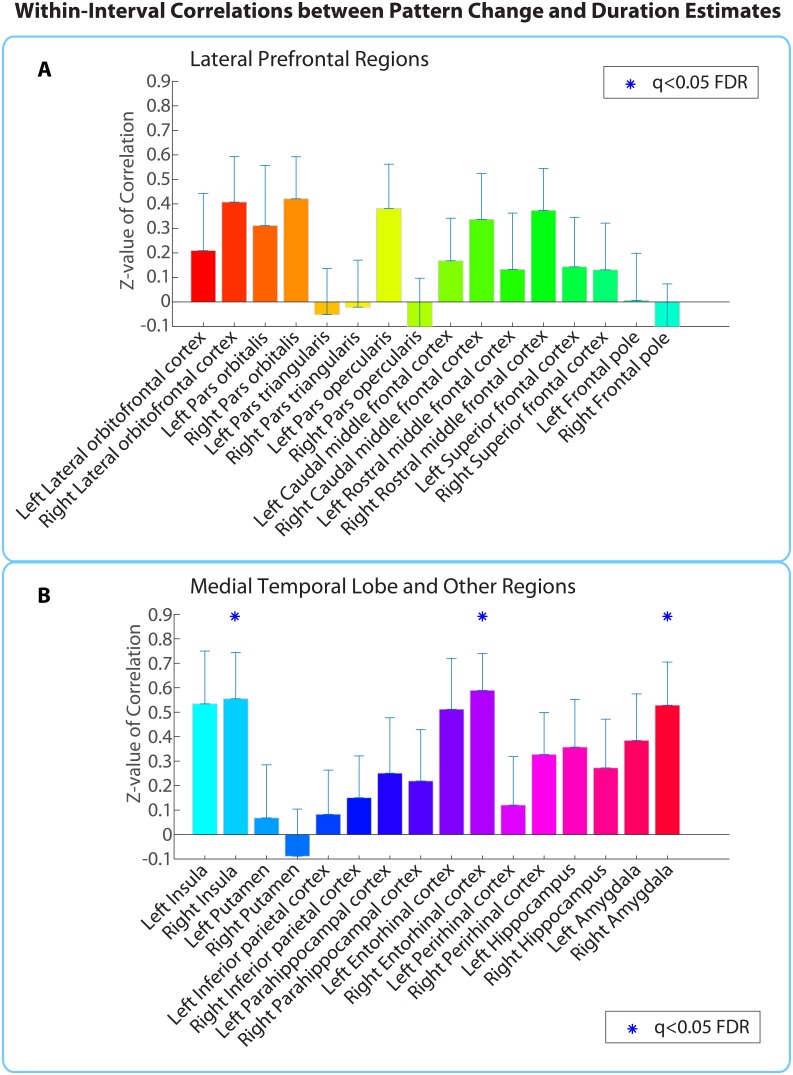

Out of the regions selected a priori, the right entorhinal cortex, right amygdala, and right insula showed a significant positive correlation between pattern change and duration estimates for high-confidence 2-minute intervals (q<0.05). Figure 6 shows the mean Z-values across intervals for all a priori ROIs (16 in each hemisphere).

Figure 6. Within-interval ROI analysis: mean Z-values (across all 2-min intervals) of correlations between pattern distance and duration estimates for the 16 a priori ROIs.

Error bars represent standard errors of the mean. Correlations between pattern change and duration estimates were performed across participants, separately for each interval.

DOI: http://dx.doi.org/10.7554/eLife.16070.016

Extending this analysis to the whole brain (same anatomical masks as in Figure 5—source data 1) revealed only the right entorhinal cortex (q<0.05), suggesting that the effect in this region was strong enough to survive whole-brain correction.

Importantly, the right entorhinal cortex is the only region with significant effects in both the within-interval analysis (Cohen’s d = 0.83) and the within-participant analysis (Cohen’s d = 0.79). If neural pattern distance between two clips in entorhinal cortex were driven solely by changes in clip content, we would have expected the correlation with duration estimates to be larger for the within-participant analysis (where story content differs across intervals) than for the within-interval analysis (where story content is held constant). The fact that the effect sizes are similar shows that perceptual or semantic differences in content between the two clips are not the main factor driving the correlation between duration estimates and neural pattern change in this region.

Mixed-effects model accounting for naïve duration estimates

We analyzed our data using a hierarchical linear regression model (Gelman and Hill, 2006; see Materials and methods for additional detail). This analysis estimates population-level effects of interest while accounting for variability across participants and across clip pairs. In other words, this approach leverages the power of the within-interval analysis to control for the objective content similarity between two clips, while also taking into account variability in the effect across participants. In addition, we included the mean duration estimates from our naïve participants as a covariate in the model (see Behavioral results). Since naïve participants had estimated the temporal distance between each pair of clips without hearing the story, this covariate is a further control for the inherent guessability of the temporal distance between two clips. Both controls strengthen our interpretation that the remaining effect of neural pattern distance on duration estimates is driven by the contextual dissimilarity (rather than perceptual or content dissimilarity) between two clips.

For each anatomical region derived from FreeSurfer and MTL segmentation (42 in each hemisphere), we fit a model where duration estimates were predicted by naïve duration estimates as well as the neural pattern distance in that region (see Materials and methods for the complete formula). We then computed 95% confidence intervals of the fixed-effects parameter estimates using the asymptotic Gaussian approximation (see Materials and methods).
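The asymptotic Gaussian (Wald) interval is the fixed-effect estimate plus or minus the standard normal quantile times its standard error. A minimal sketch with hypothetical helper names; the inputs in any usage are illustrative, not values from this study.

```python
from statistics import NormalDist


def wald_ci(estimate, se, level=0.95):
    """Asymptotic Gaussian (Wald) confidence interval for a fixed effect."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level=0.95
    return estimate - z * se, estimate + z * se


def excludes_zero(ci):
    """True when the interval lies entirely above or entirely below zero."""
    low, high = ci
    return low > 0 or high < 0
```

In a fitted mixed-effects model, `estimate` and `se` would be the fixed-effect coefficient for neural pattern distance and its standard error; `excludes_zero` then corresponds to the "confidence interval did not include zero" criterion used below.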

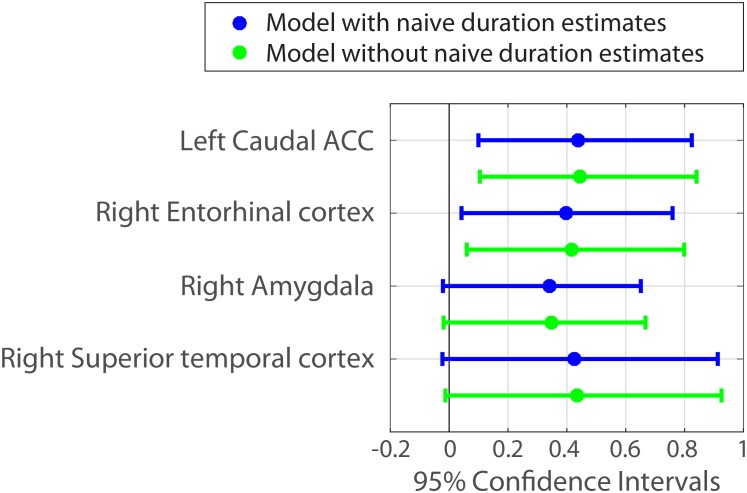

The fixed effect of naïve estimates was positive in all models and its confidence intervals did not include zero in 80% of the models. This reproduced our finding that naïve duration estimates are correlated with the original duration estimates (see Behavioral results), suggesting that interval durations are partially guessable based on the similarity between clips. However, even under this control, the fixed effect of neural pattern distance in left caudal ACC and right entorhinal cortex exhibited confidence intervals that did not include zero (Figure 7). Figure 7—source data 1 contains the parameter estimates and 95% confidence intervals for all 84 anatomical regions.

Figure 7. Parameter estimates and 95% confidence intervals for the fixed effect of neural pattern distance on duration estimates.

We also included the right amygdala and right superior temporal cortex in the figure, because their confidence intervals did not include 0 when a slightly less conservative fitting procedure was used (see Figure 7—source data 1 and Materials and methods).

DOI: http://dx.doi.org/10.7554/eLife.16070.018

Importantly, including the naïve duration estimates as a covariate in the model did not significantly weaken the relationship between neural pattern distance and duration estimates in these regions (though the effects were slightly lower numerically). Figure 7 shows in green the 95% confidence intervals for the same ROIs when naïve duration estimates are excluded from the model.

Whole-brain searchlights

As with the anatomical ROI analyses, both within-participant and within-interval analyses were performed for the whole-brain searchlight analyses, in order to rule out the possibility that our effects were driven by either participant or interval random effects.

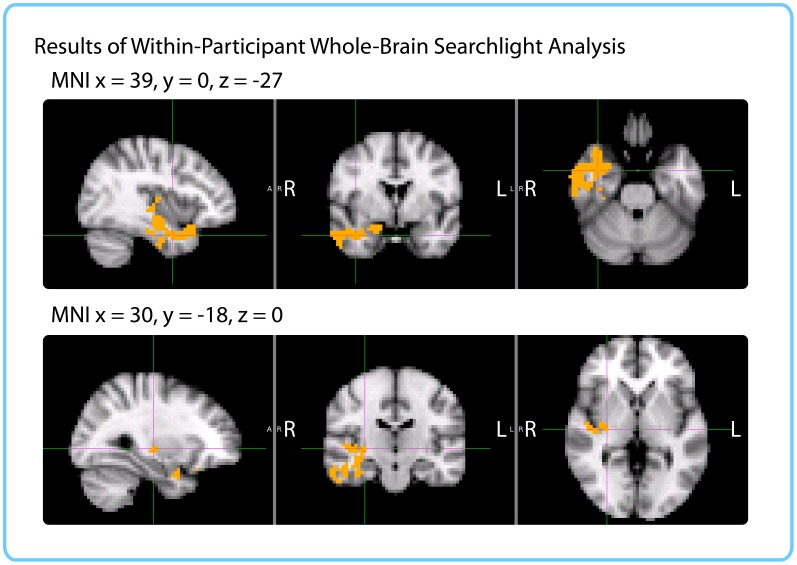

Within-participant whole-brain searchlight

We ran a cubic searchlight of 3×3×3 (27) voxels (972 mm³) through the entire brain and tested for a correlation between pattern change and duration estimates in each searchlight. The same phase-randomization procedure that was used for the within-participant anatomical ROI analysis was also applied here; this procedure generates Z-values that reflect how likely a correlation this strong would be to arise by chance, given the frequency spectrum of the fMRI data. When excluding low-confidence intervals, we found a significant cluster in the right anterior temporal lobe (p=0.034, FWE-corrected; Center of Gravity MNI coordinates (x, y, z) in mm: [45.6, −5.53, −21.7]; cluster size=572 voxels in 3 mm MNI space). Small parts of the cluster also extended to the right posterior insula and right putamen (see Figure 8).
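The searchlight loop can be sketched with NumPy as below. This is a minimal illustration, not the authors' pipeline: it assumes the data are an X×Y×Z×T array with a boolean brain mask, that each clip is represented by a single time index, and that pattern distance is correlation distance (1 − Pearson r). It also omits the phase-randomization step. `searchlight_map`, `pattern_distance` and `duration_stat` are hypothetical names.

```python
import numpy as np


def cube_offsets(radius):
    """All (dx, dy, dz) offsets of a cube with side 2 * radius + 1."""
    r = range(-radius, radius + 1)
    return np.array([(i, j, k) for i in r for j in r for k in r])


def searchlight_map(data, mask, stat_fn, radius=1):
    """Apply stat_fn to the (n_voxels, T) patterns of the cube centred on
    each in-mask voxel; voxels outside the mask are left as NaN.

    data: X x Y x Z x T array; mask: X x Y x Z boolean array.
    Cubes are clipped at the volume edge and restricted to in-mask voxels.
    """
    offsets = cube_offsets(radius)
    shape = np.array(mask.shape)
    out = np.full(mask.shape, np.nan)
    for center in np.argwhere(mask):
        coords = center + offsets
        coords = coords[np.all((coords >= 0) & (coords < shape), axis=1)]
        coords = coords[mask[tuple(coords.T)]]
        out[tuple(center)] = stat_fn(data[tuple(coords.T)])
    return out


def pattern_distance(patterns, t1, t2):
    """Correlation distance (1 - Pearson r) between the patterns at t1 and t2."""
    return 1.0 - np.corrcoef(patterns[:, t1], patterns[:, t2])[0, 1]


def duration_stat(intervals, durations):
    """Build a stat_fn that correlates per-interval pattern distance with
    duration estimates; intervals is a list of (t1, t2) index pairs."""
    def stat(patterns):
        dists = [pattern_distance(patterns, a, b) for a, b in intervals]
        return np.corrcoef(dists, durations)[0, 1]
    return stat
```

For the within-interval searchlight in 3 mm MNI space, the same function would be called with `radius=2`, which gives 5×5×5 = 125-voxel cubes away from the brain edge.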

Figure 8. Results of within-participant whole-brain searchlight.

Voxels in orange represent centers of searchlights that exhibited significant correlations between pattern change and duration estimates within participants across intervals (p<0.05, FWE). The significant cluster had center of gravity MNI coordinates (in mm): x = 45.6, y = −5.53, z = −21.7.

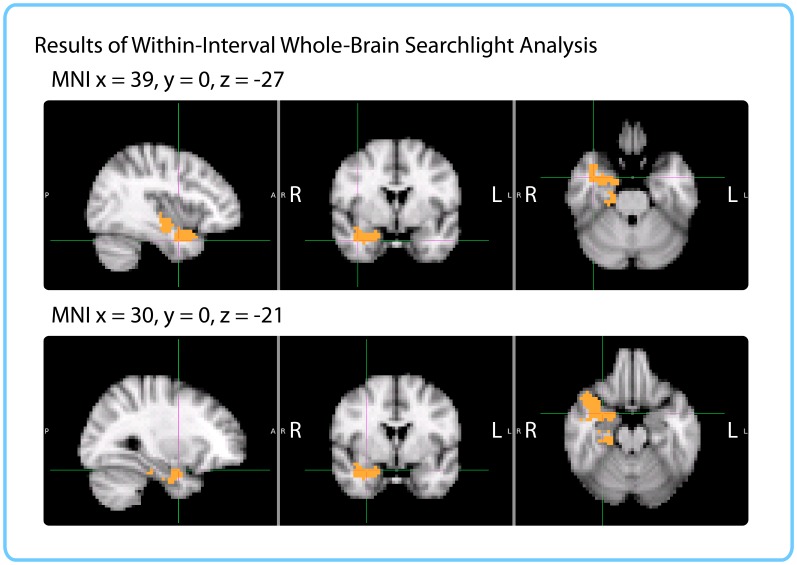

Within-interval Whole-brain searchlight

We also ran a searchlight version of the within-interval analysis. In order to match searchlights across participants, functional data were transformed to 3 mm MNI space. Since this transformation approximately doubles the number of brain voxels, we ran cubic searchlights of radius 2 with 5×5×5 (125) voxels through the entire brain.

As with the ROI analysis, this analysis was performed on high-confidence duration estimates. For each interval, we only included participants who had confidently recollected the temporal position of the two clips delimiting that interval.

To assess the significance of each correlation score, we used the same permutation test as for the ROI analysis. Duration estimates were scrambled across participants 10,000 times to obtain a distribution of null correlations, and Z-values were calculated for each interval. We thus obtained a brain map of Z-values for each of the 24 intervals, and FSL’s randomise function was used to control the family-wise error rate, as above.

Similarly to the within-participant searchlight, we found a significant cluster in the right anterior temporal lobe (p=0.019, FWE-corrected; Center of Gravity MNI coordinates (x, y, z) in mm: [32.1, −10.2, −18.7]; cluster size=535 voxels in 3 mm MNI space). The cluster extended from the right parahippocampal gyrus, hippocampus and amygdala medially to the middle temporal gyrus and temporal pole laterally (see Figure 9).

Figure 9. Results of within-interval whole-brain searchlight.

Voxels in orange represent centers of searchlights that exhibited significant correlations between pattern change and duration estimates across participants (p<0.05, FWE). The significant cluster had center of gravity MNI coordinates (in mm): x = 32.1, y = −10.2, z = −18.7.

Comparing results from ROI and searchlight analyses

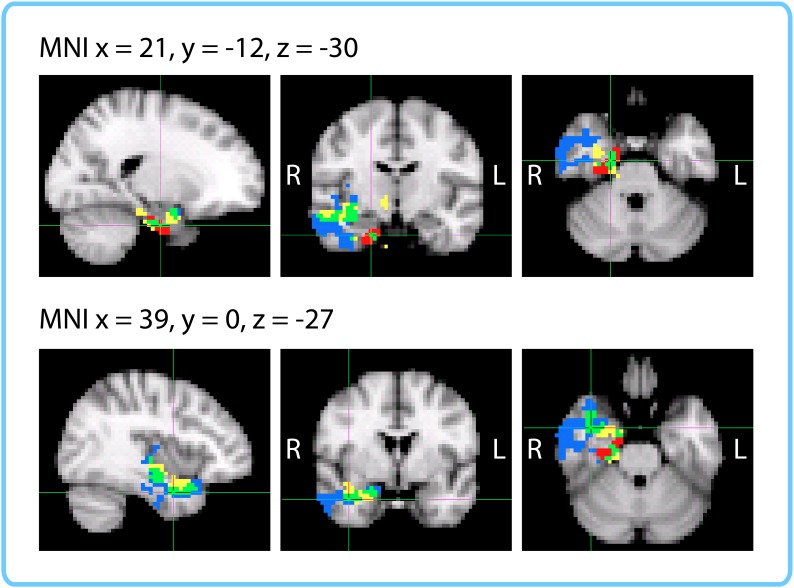

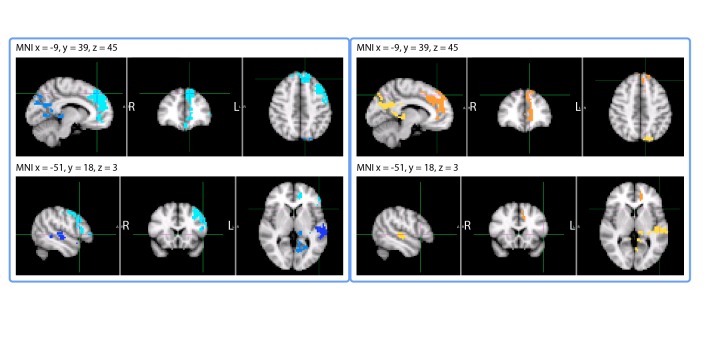

The within-participant ROI analysis revealed significant effects in the right entorhinal cortex, right pars orbitalis and left caudal ACC. The within-interval ROI analysis revealed significant effects in the right entorhinal cortex, right amygdala and right insula. The mixed-effects ROI analysis showed that the right entorhinal cortex and left caudal ACC had confidence intervals above 0, even when naïve duration estimates were accounted for. Both the within-participant and within-interval searchlights revealed significant clusters in the right anterior temporal lobe. Figure 10 enables a comparison of the two searchlight analyses; the right entorhinal cortex ROI that emerged in all three ROI analyses is also overlaid. The within-interval searchlight cluster was located more medially than the within-participant searchlight cluster, though the two overlapped in the right amygdala, right temporal pole, and the cerebral white matter of the right anterior temporal lobe. Moreover, the within-interval searchlight cluster overlapped with the right entorhinal cortex ROI (see green voxels, Figure 10).

Figure 10. Comparison of ROI and Searchlight results.

The within-participant searchlight cluster (p<0.05, FWE) is displayed in blue; the within-interval searchlight cluster (p<0.05, FWE) is displayed in yellow; voxels that overlap between the searchlights are shown in green. The right entorhinal cortex (q<0.05 FDR in both ROI analyses) is displayed in red; voxels that overlap between the within-interval searchlight and the right entorhinal ROI are shown in green.

The difference in the set of regions that passed the significance threshold between the ROI and searchlight analyses is very likely due to the difference in shapes between the searchlight cube and the anatomical masks. Following the anatomy is particularly important for small, elongated regions like entorhinal cortex and caudal ACC, which are unlikely to be perfectly aligned across participants. For the searchlight analyses, the data needed to be transformed to MNI space in order to aggregate the results; consequently, imperfections in alignment can reduce the significance of searchlight results in these regions. On the other hand, anatomical ROI analyses were performed entirely in native space, making them more suitable for idiosyncratically shaped regions.

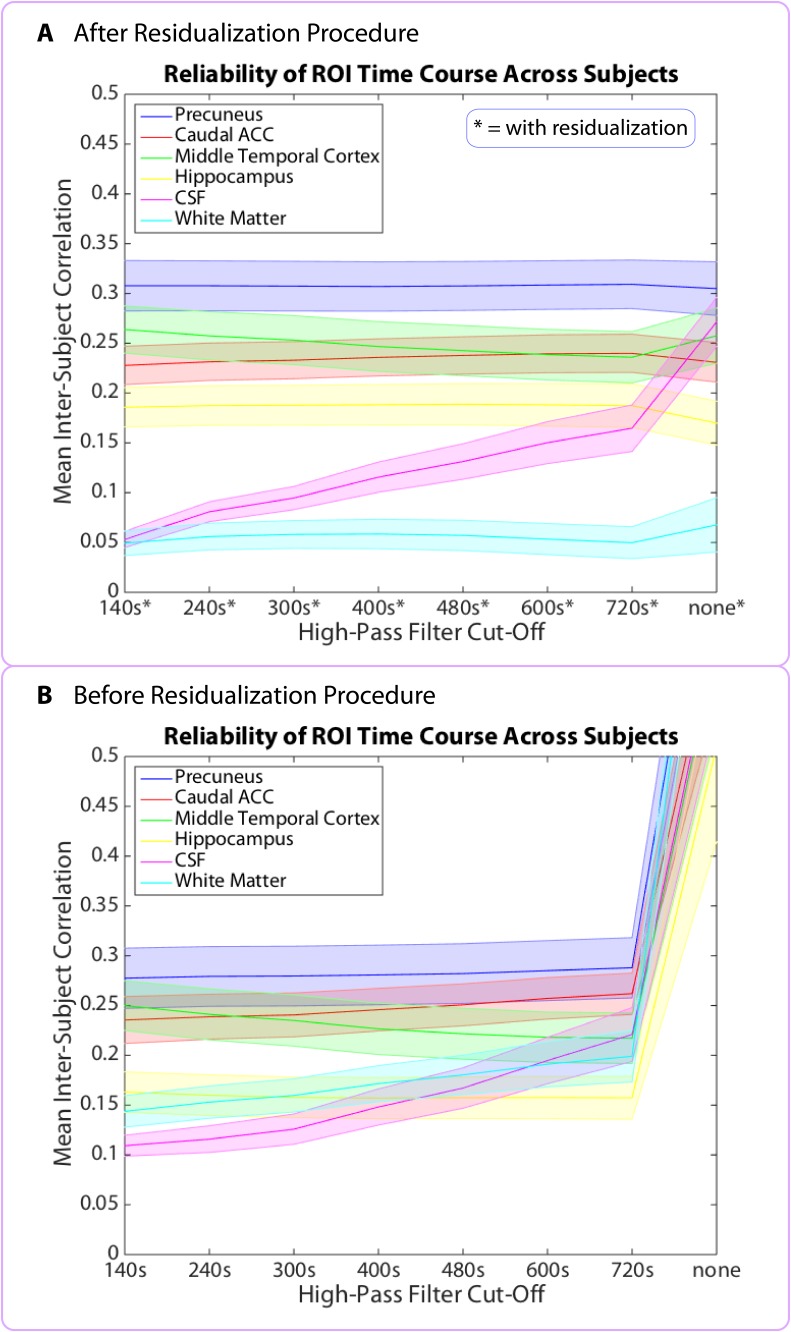

Patterns of activity in entorhinal cortex change slowly over time

To further probe the idea that the regions we found represent slowly changing contextual features, we assessed whether their patterns of activity change slowly over time relative to the rest of the brain. We focused this analysis on the right entorhinal cortex and left caudal ACC, both of which were significant in the mixed-effects ROI analysis.

We quantified the speed of BOLD signal change in three different ways: (1) a multivariate procedure, (2) a multivariate procedure in which we regressed out ROI size, and (3) a univariate procedure. (1) For the multivariate procedure, we obtained the mean auto-correlation function of the pattern in every region, and took the full-width half-maximum (FWHM) of this function as a measure of how slowly the pattern moves away from itself on average (see Materials and methods). (2) Because the anatomical masks derived from FreeSurfer parcellation varied substantially in size, we also performed the multivariate procedure after regressing the vector of ROI sizes (number of voxels) out of the vector of FWHM values for each participant; this ensured that differences in the speed of pattern change were not due to differences in ROI size. (3) Finally, we performed the above analysis for every voxel individually. Rather than calculating the mean auto-correlation function of the pattern in every region, we calculated the auto-correlation function of every voxel’s time course and averaged these functions across all the voxels in a given region. The FWHM was then computed for this mean auto-correlation function.

Using these three procedures, we compared the FWHMs in the right entorhinal cortex and left caudal ACC with FWHMs in three regions known to be involved in auditory and language processing: the right transverse temporal cortex, which encompasses primary auditory cortex (Destrieux et al., 2010; Shapleske et al., 1999); and the right banks of the superior temporal sulcus and the right superior temporal cortex, which are involved in auditory processing and the early cortical stages of speech perception (Binder et al., 2000; Hickok and Poeppel, 2004).

Table 1 shows the FWHMs in the above regions derived using the three procedures, as well as the ranking of the right entorhinal cortex and left caudal ACC mean FWHMs relative to all the other masks in the brain (84 in total).

Table 1.

Speed of pattern change in the right entorhinal cortex and left caudal ACC relative to the rest of the brain. Full-Width Half-Maximum (FWHM) values reflect how slowly patterns of activity (multivariate) or individual voxels (univariate) change over time. The Multivariate (-ROI size) column reflects the slowness of pattern change when controlling for the effect of ROI size.

| Region | Multivariate FWHM (TRs) | Multivariate ranking | Multivariate (−ROI size) FWHM (TRs) | Multivariate (−ROI size) ranking | Univariate FWHM (TRs) | Univariate ranking |
|---|---|---|---|---|---|---|
| Right entorhinal | M=18.9, SD=13.8 | 3rd | M=1.2, SD=1.9 | 4th | M=23, SD=15.6 | 1st |
| Left caudal ACC | M=8.3, SD=1.8 | 66th | M=−0.5, SD=0.5 | 67th | M=9.2, SD=3.8 | 46th |
| Right transverse temporal cortex | M=7.3, SD=1.2 | 80th | M=−0.8, SD=0.5 | 83rd | M=7.9, SD=1.2 | 68th |
| Right banks of superior temporal sulcus | M=9.0, SD=2.1 | 48th | M=−0.3, SD=0.4 | 49th | M=8.8, SD=1.7 | 61st |
| Right superior temporal cortex | M=11.0, SD=3.1 | 28th | M=0.4, SD=0.6 | 18th | M=10.3, SD=2.4 | 34th |

Across all three procedures, a right-tailed Wilcoxon signed-rank test indicated that the FWHMs in the right entorhinal cortex were consistently larger across participants than the FWHMs in the right transverse temporal cortex (p<0.00005, p<0.0005 and p<0.00005), the right banks of the superior temporal sulcus (p<0.001, p<0.001 and p<0.0005) and the right superior temporal cortex (p<0.005, p=0.06 and p<0.0005). Thus, single voxels and multivariate patterns in entorhinal cortex changed consistently more slowly than those in regions involved in auditory and language processing. Moreover, the mean FWHM in the right entorhinal cortex was one of the largest among all 84 regions, ranking 3rd, 4th and 1st in the brain across the three procedures. The other regions with the slowest voxel and pattern change included the temporal pole, medial and lateral orbitofrontal cortex, frontal pole, perirhinal cortex, pars orbitalis and inferior temporal cortex.

On the other hand, the left caudal ACC ranked 66th, 67th and 46th out of 84 regions across the three procedures, suggesting that it did not exhibit slow signal change relative to the rest of the brain. Across the three procedures, the FWHMs in the left caudal ACC were larger than those in the right transverse temporal cortex (p<0.01, p<0.005, and p=0.059), but generally smaller than those in the right banks of the superior temporal sulcus (p=0.97, p=0.96, and p=0.42) and the right superior temporal cortex (p=1.0, p=1.0, and p=0.98). Thus, patterns in the left caudal ACC changed only slightly more slowly than those in primary auditory cortex.

Taken together, all three variants of the analysis showed that the right entorhinal cortex, along with other regions of the anterior and medial temporal lobe, orbitofrontal cortex and frontal pole, had the slowest pattern change in the brain. These results do not seem to be due to differences in the sizes of the anatomical masks and suggest that the right anterior MTL regions found most consistently in our ROI and searchlight analyses process information that changes slowly over time. Our findings are consistent with those of Stephens et al. (2013), who showed that auditory cortex regions processing momentary stimulus features had intrinsically faster dynamics than higher-order regions that integrated information over longer time scales (see also Lerner et al., 2011).

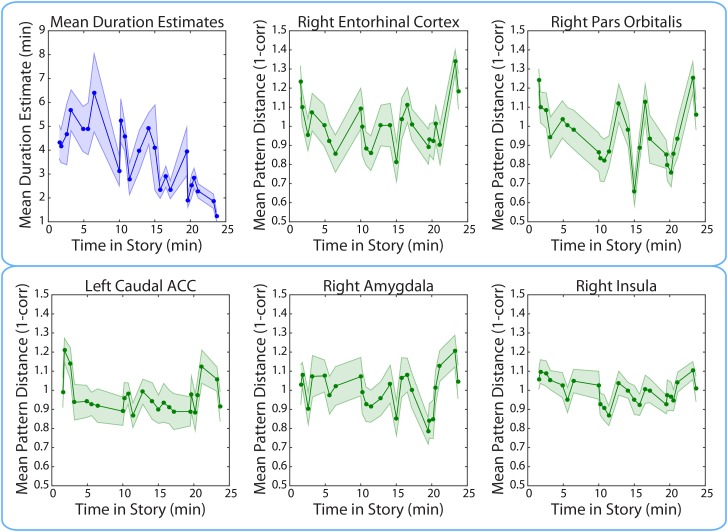

Story position effects cannot explain the correlation between duration estimates and neural pattern change

We found that duration estimates systematically decreased as a function of position in the story, with earlier intervals being estimated as longer than later intervals (Figure 11). The correlation between the estimated duration of an interval and its time in the story was consistently negative across participants (M=−0.40, SD=0.22; t(16)=−7.59, p<0.00001).

Figure 11. Mean duration estimates and pattern distances (across participants) for all 2-minute intervals as a function of the interval’s position in the story.

The middle time point of each 2-min interval (half-way between the two clips delimiting it) was chosen as the x-coordinate.

DOI: http://dx.doi.org/10.7554/eLife.16070.024

This result may be a replication of the positive time-order effect: the finding that people judge earlier durations in a series of durations to be longer than later durations (Block, 1982, 1985; Brown and Stubbs, 1988). The effect has been interpreted to mean that context usually changes more rapidly at the start of a novel episode (Block, 1982, 1986). However, another possibility is that the characteristics of the particular story we picked are driving this result. In our story, there was a strong negative correlation between the mean number of event boundaries per interval and the position of the interval in the story (r=−0.77). Thus, the decrease in mean duration estimates with story position may be due to the relationship between the number of event boundaries and duration estimates (see Behavioral results).

If the decrease in duration estimates over time is due to a decrease in the amount of contextual change over the course of the story, we might expect BOLD pattern dissimilarity to decrease over time in the brain regions yielded by our ROI analyses. However, there was no consistent correlation between pattern change during an interval and its time in the story for the right entorhinal cortex (M=0.03, SD=0.21; t(16)=0.65, p=0.53), the right pars orbitalis (M=−0.10, SD=0.22; t(16)=−1.83, p=0.09), the left caudal ACC (M=−0.05, SD=0.18; t(16)=−1.15, p=0.27), the right amygdala (M=−0.02, SD=0.23; t(16)=−0.28, p=0.78) or the right insula (M=−0.08, SD=0.25; t(16)=−1.34, p=0.20). These results suggest that the relationship between duration estimates and pattern dissimilarity in these regions was not driven by a shared effect of story position. Rather, it seems that pattern dissimilarity in these regions correlated with more fine-grained variations in the estimated durations of nearby intervals (Figure 11).

To investigate why the above regions did not show the expected decrease in pattern dissimilarity over time, we assessed whether any brain region in the FreeSurfer or MTL atlas might show this effect. There was no brain region whose pattern of activity changed more at the beginning than at the end of the story. Given that we were looking for a slow change in neural signal (unfolding over the entire course of the story), we thought that our high-pass filter might be removing this slow change; to address this possibility, we analyzed the unfiltered data. When we did this, we found that neural pattern change in the unfiltered data showed a consistent correlation in the opposite direction: almost all brain patterns changed more at the end of the story than at the beginning, including the CSF and white matter (q<0.05, FDR), suggesting that a signal unrelated to neural processing, such as scanner drift or motion, may cause activity patterns to change more as time passes (see Figure 11—source data 1). Thus, even if the degree of neural pattern change were decreasing over time, we might not be able to detect this effect, as it would have to overcome a global signal in the opposite direction that is not due to neural activity and that is present everywhere, including the CSF.
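The concern that a high-pass filter removes story-length trends can be illustrated with a discrete-cosine high-pass filter of the kind used in SPM. This is an assumption for illustration only: the filter type, the 128 s cutoff and the 2 s TR used in the check are hypothetical values, not parameters stated in this section. Any component slower than the cutoff, including a change that unfolds over the whole story, is regressed out along with the mean.

```python
import numpy as np


def dct_highpass(ts, tr, cutoff_s=128.0):
    """Remove the mean and all cosine components with period >= cutoff_s seconds.

    ts: 1-D time series sampled every tr seconds.
    """
    ts = np.asarray(ts, dtype=float)
    n = len(ts)
    t = np.arange(n)
    # Cosine k has a period of 2 * n * tr / k seconds; keep k while that period >= cutoff.
    n_basis = int(np.floor(2 * n * tr / cutoff_s))
    basis = [np.ones(n)]
    basis += [np.cos(np.pi * k * (2 * t + 1) / (2 * n)) for k in range(1, n_basis + 1)]
    X = np.column_stack(basis)
    beta, *_ = np.linalg.lstsq(X, ts, rcond=None)
    return ts - X @ beta
```

With a hypothetical 25-minute run at a 2 s TR, a linear ramp spanning the whole run is almost entirely removed, while a 20 s oscillation passes through nearly unchanged; this is why analyzing unfiltered data was needed to look for story-length changes.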

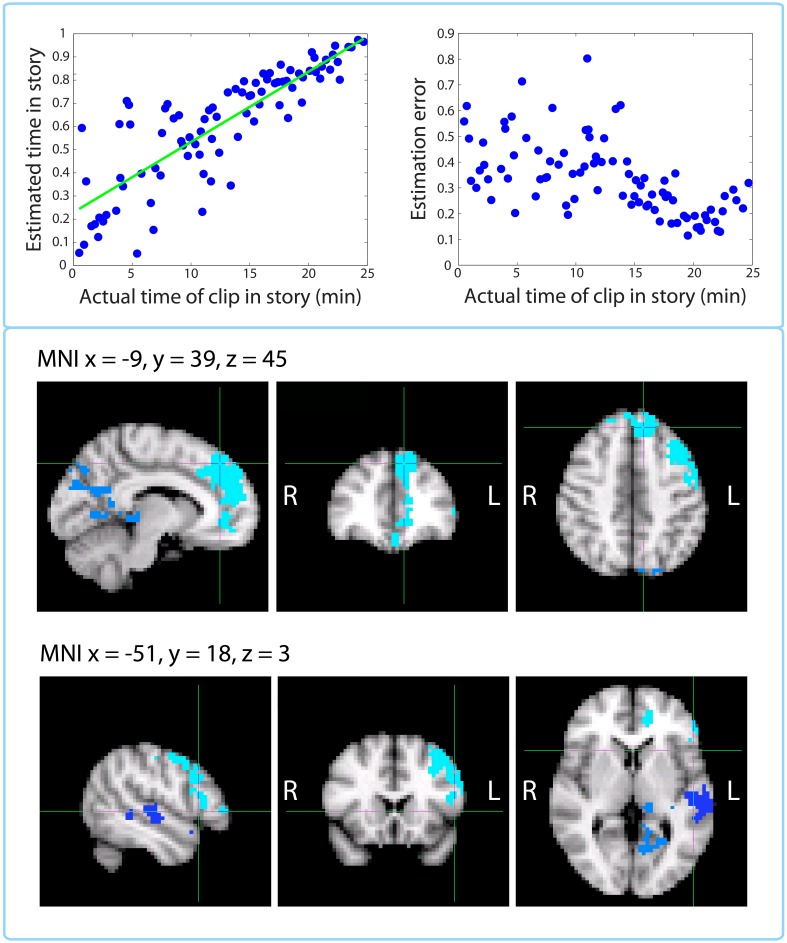

Replication of Jenkins and Ranganath (2010): activity at encoding predicts accuracy of temporal context memory

As described in the Materials and methods (Time perception test section), besides estimating the elapsed duration between pairs of clips from the story, participants were given an additional test, where they indicated each clip’s position on the timeline of the story. The mean correlation (across participants) between the actual and estimated temporal position on the timeline of the story was r=0.885 (SD=0.05), suggesting that participants remembered the temporal position of each clip extremely well (p<10⁻²¹). Figure 12 shows the timeline estimates for a representative participant (top left panel), as well as the absolute residual error associated with each clip (top right panel), group averaged and plotted against time in the story.

Figure 12. Replication of Jenkins and Ranganath (2010): activity at encoding predicts accuracy of temporal context memory.

Top left panel: Timeline estimates for a representative participant. The estimated temporal position of each clip is plotted against its actual position in the story. Top right panel: Group-averaged residual error for each clip plotted against its time in the story. Our behavioral results mirror those in Figure 2 of Jenkins and Ranganath (2010), showing that accuracy increases for clips that occurred later in the story. Bottom panels: Clusters that showed a significant correlation between activity at encoding and subsequent accuracy at placing a clip on the timeline of the story. The prefrontal cluster in light blue was significant (p=0.008, FWE), while the medial parietal cluster (p=0.058, FWE) and the lateral temporal cluster in dark blue (p=0.098, FWE) were trending.

This behavioral dataset enabled us to reproduce an fMRI analysis from Jenkins and Ranganath (2010), where voxel activity at encoding was correlated with subsequent accuracy in remembering when a trial occurred in the experiment. For each participant, we regressed the estimated timeline position against the actual position and used the absolute value of the residual as a measure of error. We found that the accuracy (negative error) of timeline placements was significantly correlated with encoding activity in large clusters of the left dorsolateral prefrontal cortex and medial prefrontal cortex, including dorsomedial PFC and anterior cingulate (p=0.008, FWE-corrected; Center of Gravity MNI coordinates (x, y, z) in mm: [−20, 34.8, 28.4]; cluster size = 1121 voxels in 3 mm MNI space), as well as sub-threshold clusters in the medial parietal cortex, including precuneus and posterior cingulate (p=0.058, FWE-corrected; Center of Gravity MNI coordinates (x, y, z) in mm: [−10.5, −54, 16.1]; cluster size = 419 voxels), and left superior temporal gyrus (p=0.098, FWE-corrected; Center of Gravity MNI coordinates (x, y, z) in mm: [−56.9, −19.1, −3.72]; cluster size = 270 voxels).
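The per-participant error measure described above (absolute residuals from regressing estimated on actual timeline position) can be sketched in plain Python. `timeline_errors` is a hypothetical helper, and ordinary least squares is assumed for the regression.

```python
import statistics


def timeline_errors(actual, estimated):
    """Absolute residuals from an ordinary least-squares fit of estimated on
    actual timeline positions (one participant); larger means less accurate."""
    mx, my = statistics.fmean(actual), statistics.fmean(estimated)
    sxy = sum((x - mx) * (y - my) for x, y in zip(actual, estimated))
    sxx = sum((x - mx) ** 2 for x in actual)
    slope = sxy / sxx
    intercept = my - slope * mx
    return [abs(y - (intercept + slope * x)) for x, y in zip(actual, estimated)]
```

Accuracy is then the negated error for each clip, which would be correlated with each voxel's encoding activity for that clip. Because the regression absorbs any overall bias or compression in a participant's timeline, only clip-specific misplacements count as error.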

Discussion

While human and animal time perception has been a subject of intense empirical investigation (see Wittmann, 2013), most neuroimaging studies have tested its mechanisms on the scale of milliseconds to seconds and neglected paradigms in which long-term memory plays an important role. Such studies have typically employed prospective paradigms, in which participants must deliberately attend to the duration of a stimulus. However, behavioral studies in humans have consistently demonstrated that retrospective paradigms, in which participants are asked to estimate the duration of an elapsed interval from memory, tap into different cognitive mechanisms from prospective ones (Hicks et al., 1976; Zakay and Block, 2004; Block and Zakay, 2008). In retrospective paradigms, changes in spatial, emotional and cognitive context tend to modulate estimates of elapsed time (Block, 1992; Block and Reed, 1978; Sahakyan and Smith, 2014; Pollatos et al., 2014).

In the present study, we used changes in patterns of BOLD activity as a proxy for mental context change. We sought to extend previous neuroimaging work by testing whether neural pattern change predicts duration estimates on the scale of several minutes and in a more naturalistic setting, where spatial location, situational inference, characters, and emotional elements can all drive contextual change.

Participants were scanned while they listened to a 25-minute radio story and were subsequently asked how much time (in minutes and seconds) had elapsed between pairs of clips from the story (all pairs were in fact two minutes apart). Using this approach, we were able to probe retrospective duration memory repeatedly within participants without needing to interrupt the encoding of the story. This allowed us to leverage within-participant variability in neural pattern change and relate it to a participant’s retrospective duration estimates.

Using a within-participant anatomical ROI analysis (encompassing 16 regions selected a priori), we found that neural pattern distance in the right entorhinal cortex and right pars orbitalis at the time of encoding was correlated with subsequent duration estimates. Extending this analysis to all anatomical ROIs across the brain revealed an additional effect in the left caudal anterior cingulate cortex (ACC). These results converged qualitatively with the results of our whole-brain searchlight analysis, which revealed a significant cluster spanning the right anterior temporal lobe.

To test our interpretation that duration estimates were driven by contextual change, we asked a separate group of participants to identify event boundaries in the story. We found that the number of event boundaries between two clips was very highly correlated with participants’ subsequent duration estimates. Importantly, the number of event boundaries was significantly less correlated with duration estimates for a separate group of 'naïve' participants, who had been asked to estimate the elapsed time between clips without first hearing the story. These behavioral experiments provide evidence that retrospective duration estimates were indeed influenced by memory for intervening contextual changes between clips.

In addition, we sought to rule out the possibility that neural pattern distance between two clips reflected only the perceptual or semantic similarity between them, rather than the degree of mental context change. We performed a within-interval analysis, in which pattern distances for the same pair of clips were correlated with duration estimates across participants. The within-interval ROI analysis yielded significant effects in the right entorhinal cortex (of similar size to its within-participant effect), right amygdala and right insula. The within-interval whole-brain searchlight revealed a significant cluster in the right anterior temporal lobe. Thus, pattern distance in the right anterior temporal lobe, particularly the right entorhinal cortex, predicted variability in duration estimates even when the perceptual and semantic distance of the clips was controlled as much as possible, suggesting that pattern change in these regions may capture idiosyncratic differences in mental context that cannot be predicted from the stimulus alone.

Finally, if neural pattern distance between two clips reflected only the similarity in content between them, rather than abstract contextual similarity, we would expect the correlation between pattern distance and duration estimates to be weakened when controlling for naïve duration estimates, which were based solely on the perceptual and semantic similarity between two clips. Fitting a mixed-effects model to each ROI showed that neural pattern distance in the right entorhinal cortex, along with the left caudal ACC, exhibited a significant effect on duration estimates even when all other factors, including random effects of participants and intervals, as well as naïve duration estimates, were controlled for.

In support of the hypothesis that these regions represent slowly varying contextual information, we found that the right entorhinal cortex, as well as adjacent regions of the MTL, temporal pole and orbitofrontal cortex, had some of the slowest neural pattern change in the entire brain. This is in line with findings that brain regions at the top of the processing hierarchy (furthest from the primary perceptual areas) integrate information over longer time scales and are therefore best suited for representing abstract information extracted from multiple streams of sensory observations (Stephens et al., 2013; Lerner et al., 2011).

Our results implicating the right entorhinal cortex in representing context fit well with other results in the literature. Multiple lines of evidence have suggested an important role for the entorhinal cortex in representing relationships between the spatial environment, task and incoming stimuli. Lesions of the lateral entorhinal cortex in rodents have shown that this region is necessary for discriminating between novel and familiar associations of object and place, object and non-spatial context, or place and context, while leaving non-associative forms of memory unaffected (Buckmaster et al., 2004; Wilson et al., 2013a; 2013b). Moreover, electrophysiological recordings in rats performing a spatial memory task showed that neurons in the medial entorhinal cortex exhibited greater context sensitivity and greater modulation by task-relevant mnemonic information than hippocampal neurons, while hippocampal neurons carried more specific spatial information (Lipton et al., 2007). Medial entorhinal neurons also exhibited longer firing periods, which led the authors to propose that they could bind a series of hippocampal representations of distinct events (Lipton and Eichenbaum, 2008). Thus, changes in distributed entorhinal activity patterns on the scale of minutes might represent changes in contextual elements that are later retrieved to make duration judgments (for theoretical discussion of the role of entorhinal cortex in contextual representation, see Howard et al., 2005).

While the right entorhinal cortex was the only medial temporal lobe region that survived FDR correction in both our within-participant and within-interval ROI analyses, our whole-brain searchlights found a significant relationship between pattern change and duration estimates in two extensive clusters that overlapped in the right hippocampus, the right perirhinal cortex, right amygdala and right temporal pole.

Two previous studies, Noulhiane et al. (2007) and Ezzyat and Davachi (2014), have directly implicated the MTL in retrospective time estimation in humans. Ezzyat and Davachi (2014) scanned participants while they were presented with trial-unique faces and objects on a scene background, which changed every four trials. After each run, participants were asked whether pairs of stimuli had occurred close together or far apart in time (all pairs were about 50 s apart). They found that neural pattern distance in the left hippocampus at the time of encoding was greater for pairs of stimuli later rated as 'far apart', though only when the stimuli were separated by a scene change. Noulhiane et al. (2007) used a retrospective behavioral paradigm similar to ours in patients with unilateral MTL lesions. In that study, participants were asked to estimate the temporal distance between object pictures that had been randomly inserted into a silent documentary film. They found that the degree of left entorhinal, left perirhinal and left temporopolar cortex damage correlated with the degree to which patients overestimated minutes-long intervals in retrospect. (For related evidence from the animal literature, see Jacobs et al., 2013, who showed that bilateral inactivation of the hippocampus impaired rats’ ability to discriminate between similarly long durations, such as 8 and 12 minutes, but not between less similar intervals, such as 3 and 12 minutes.)

Our ROI and searchlight results are in line with the above set of findings, and suggest that patients with anterior MTL lesions might be impaired in retrospective time estimation because patterns of activity in entorhinal, perirhinal, and temporopolar cortex encode contextual changes on the scale of minutes. The set of regions we found is more extensive than those in Ezzyat and Davachi (2014) and mostly right-lateralized. This difference in extent may reflect differences between the paradigms. In both the Noulhiane et al. (2007) and Ezzyat and Davachi (2014) studies, the links between objects and their context had to be deliberately constructed. In our study, the clips whose temporal distance participants estimated were excerpts from a story, and were therefore strongly linked with a situational, spatial, and emotional context. Thus, activity patterns in a more extensive cluster may have tracked temporal distance estimates because our auditory story caused changes in a broader set of contextual features.

Extending our anatomical ROI analysis to the entire brain showed that pattern change in the left caudal anterior cingulate cortex (ACC) predicted subsequent duration estimates, and this region remained significant in a mixed-effects model controlling for the effect of naïve duration estimates. However, caudal ACC exhibited more rapid pattern change than the anterior and medial temporal lobe, suggesting that it may represent a qualitatively different, faster-changing signal. Caudal ACC activity has been shown to increase in response to shifts in task contingencies (see Shenhav et al., 2013, for a review) and there is converging evidence that ACC responses are important for adjusting behavior to unexpected changes by increasing attention and learning rate (Bryden et al., 2011; Behrens et al., 2007; McGuire et al., 2014). O’Reilly et al. (2013) have provided evidence that the ACC responds to surprising outcomes only when they necessitate updating beliefs about the current state of the world. Although the present study was not designed to test such accounts, our findings are consistent with a role for ACC in updating predictive models. Events in the story that prompt participants to update their beliefs about the characters’ situation are also likely to cause changes in cognitive context and therefore overestimation of duration. Future studies are needed to test this interpretation, for instance by manipulating belief updating independently of surprise and measuring its effect on retrospective duration estimates.

In addition to the anatomical ROI analysis, we performed a whole-brain searchlight that yielded an extensive cluster covering the right anterior temporal lobe, extending from the medial temporal regions described above to the middle temporal gyrus and temporal pole. Prior work has suggested that the middle temporal gyrus and temporal pole are involved in narrative comprehension (Ferstl et al., 2008; Mar, 2004) and narrative item memory (Hasson et al., 2007; Maguire et al., 1999). Ezzyat and Davachi (2011) found a similarly located cluster (extending from the right perirhinal cortex to the right middle temporal gyrus) to be involved in integrating information within narrative events. In particular, they showed that activity within these regions gradually increases within events and that this increase predicts the degree to which memories become clustered within events. Retrospective time judgments have been shown to increase with the number of events an interval contains (Poynter, 1983; Zakay et al., 1994; Faber and Gennari, 2015), suggesting that brain regions involved in clustering memories by events may carry important information for estimating durations.

Finally, we were able to replicate an analysis by Jenkins and Ranganath (2010), who showed that activity during encoding in the left lateral prefrontal cortex and right anterior hippocampus predicted accuracy in remembering when a trial had occurred in the experiment. Our analysis revealed a cluster in the left dorsolateral prefrontal cortex that is similar to that found in their study. However, we also found significant clusters in the medial prefrontal and medial parietal cortex. These regions may be important for maintaining narrative information over minutes-long timescales (Lerner et al., 2011; Hasson et al., 2015; Chen et al., 2015), which might explain why their activity predicted temporal context memory for clips from an auditory story but did not appear in Jenkins and Ranganath (2010), where participants recalled the timing of trials that were not linked by a narrative. Moreover, our clusters overlap substantially with the 'posterior medial network' (Ritchey and Ranganath, 2012), which has been consistently implicated in episodic memory, episodic simulation and theory of mind.

Conclusion

After probing human participants’ time perception for intervals from an auditory story they had just heard, we found substantial variability in subjective estimates of the passage of time. This variability was significantly correlated with changes in BOLD activity patterns in the right anterior temporal lobe, particularly the right entorhinal cortex, between the start and end of each interval. Control experiments demonstrated that duration estimates were strongly driven by contextual boundaries and that the relationship between neural distance and behavior still held when we controlled for the perceptual and semantic similarity of the clips. Our findings suggest that patterns of activity in these regions might encode contextual information that participants can later retrieve to infer the durations of intervals on the scale of minutes. Additional work is needed to assess how these regions contribute to representing particular contextual features (such as physical environment, abstract task states, and emotional states) and whether changes in each of these features affect retrospective duration estimates differently.

Materials and methods

Participants

Eighteen participants (13 female) took part in the study. All participants were recruited from the Princeton undergraduate and graduate student population and were between 18 and 31 years of age (mean = 22 years). All participants were screened to rule out neurological or psychiatric disorders. Written informed consent was obtained from all participants in accordance with the Princeton Institutional Review Board regulations. Participants were compensated $20/hr for the scanning session and $12/hr for the behavioral session.

Given that no previous studies had related neural pattern change during a naturalistic stimulus to subsequent duration estimates for minutes-long intervals, we could not estimate a priori the variance in the pattern change signal, the variance in duration estimates, or the correlation between them. Therefore, rather than performing a power analysis, we chose a sample size in the same range as previous fMRI studies that had used naturalistic stimuli to study memory (Lerner et al., 2011, n=11 per condition; Chen et al., 2015, n=13, 14 and 24 per condition; Chen et al., 2016, n=22 [5 excluded]), as well as fMRI studies that had related neural pattern distance to mnemonic judgments (Ezzyat and Davachi, 2011, n=19; Jenkins and Ranganath, 2010, n=16 [1 excluded]; Ezzyat and Davachi, 2014, n=21 [3 excluded]; Jenkins and Ranganath, 2016, n=17).

Experimental design and stimuli

The experiment consisted of two parts: an approximately 40-min session in the MRI scanner, during which participants listened to the auditory story, followed immediately by a 1-hr behavioral session, during which participants completed a time perception test on the story they had just heard. Figure 1 illustrates the experimental procedure.

fMRI session

Prior to the fMRI session, participants were instructed to listen carefully to the auditory story while in the scanner, because they might be asked questions about it later. The nature of the follow-up questions was unknown to the participants. While in the scanner, participants listened to a 25-minute-long radio adaptation of the science fiction story 'Tunnel Under the World' (written by Frederik Pohl), originally aired in 1956 on the radio drama series 'X Minus One'.

Time perception test

After leaving the scanner, participants were given a surprise time perception test, presented on a laptop with the Psychophysics toolbox (Brainard, 1997; Pelli, 1997) for MATLAB (The MathWorks Inc., Natick, MA). For each of 43 questions, participants listened to a 10 s clip from the story, followed by another 10 s clip, and were asked to estimate how much time had passed between the first and second clips when they initially heard the story. Participants were specifically asked to estimate how much time had passed in their own lives, rather than how much narrative time had passed in the story. They were also asked to make the judgments as intuitively as possible, without resorting to deductive reasoning about the sequence of events that unfolded between the two excerpts.

Participants had complete control over the pacing of the test. On each question, they initiated the playing of the clips, and were able to replay the clips if they missed them the first time. They could take as long as they wished to enter their duration estimates (in minutes and seconds), using the keyboard. Clip pairs were identical across participants, but the order in which the pairs were presented was randomized.

To control for the objective passage of time, we ensured that 24 of the clip pairs were 2 minutes apart and the remaining 19 pairs were 6 minutes apart. Debriefing showed that participants were unaware of this manipulation, and the high variability of duration estimates for both the 2-min and 6-min intervals was consistent with their being unaware of the fixed interval durations.
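Because every interval was objectively either 2 or 6 min long, variability in the estimates can be summarized separately for each interval length. The sketch below assumes that estimates entered as minutes and seconds have been converted to seconds. For each objective duration, it reports the mean, standard deviation, and coefficient of variation (SD divided by mean). The function names are hypothetical. The coefficient of variation is one illustrative measure of variability and not necessarily the one used in this study.

```python
from statistics import fmean, stdev


def to_seconds(minutes, seconds):
    """Convert an estimate typed as minutes and seconds into seconds."""
    return 60 * minutes + seconds


def summarize_by_true_duration(estimates_s, true_durations_s):
    """Group estimates by objective interval length (s) and summarize each group."""
    groups = {}
    for est, true in zip(estimates_s, true_durations_s):
        groups.setdefault(true, []).append(est)
    summary = {}
    for true, ests in sorted(groups.items()):
        mean = fmean(ests)
        sd = stdev(ests)  # sample SD; needs at least two estimates per group
        summary[true] = {"n": len(ests), "mean": mean, "sd": sd, "cv": sd / mean}
    return summary
```

Expressing variability relative to the mean makes the 2-min and 6-min groups comparable even though the absolute spread of estimates would be expected to grow with interval length.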

After participants had provided duration estimates for all 43 intervals, the 86 clips that had delimited those intervals were replayed individually (unpaired) in a random order, and participants were asked to place each clip on the timeline of the story. For each of the 86 questions, a white line representing the full length of the story appeared on a black background. Participants moved the cursor to any point on that line and pressed the Enter key to register their placement. After each placement, they rated their confidence about that clip’s place in the story on a scale of 1 to 5. Participants were instructed to base the confidence rating on their certainty of when that clip occurred in the story, rather than on the vividness of their memory for that clip.
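A placement on the timeline can be converted into story time by mapping the cursor position linearly onto the story's length (25 min 33 s, the length given to naïve participants). The sketch below assumes that the two ends of the line are known as horizontal pixel coordinates. All names are hypothetical, and the actual test was implemented in MATLAB with the Psychophysics toolbox rather than in Python. The absolute difference between the placed time and a clip's true onset gives one simple measure of placement accuracy.

```python
STORY_LENGTH_S = 25 * 60 + 33  # 1533 s


def position_to_story_time(x, line_start_x, line_end_x, story_length_s=STORY_LENGTH_S):
    """Map a cursor x-coordinate on the timeline to seconds from story onset."""
    frac = (x - line_start_x) / (line_end_x - line_start_x)
    frac = min(max(frac, 0.0), 1.0)  # clamp clicks just beyond either end of the line
    return frac * story_length_s


def placement_error_s(x, true_onset_s, line_start_x, line_end_x):
    """Absolute error (s) between the placed and the true position of a clip."""
    return abs(position_to_story_time(x, line_start_x, line_end_x) - true_onset_s)
```

For example, on a line drawn from x = 100 to x = 1100, a click at x = 600 maps to the midpoint of the story (766.5 s).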

The first of our 18 participants completed a version of the time perception test that differed in only one respect: the specific intervals in the story whose durations were probed were different. In all other respects, including the division of intervals into 2-min and 6-min lengths, the behavioral test was identical to that given to the subsequent 17 participants. Because this participant's intervals differed, any analyses comparing duration estimates across participants were performed on 17 rather than 18 participants. All within-participant analyses were performed on all 18 data sets.

Naïve time perception test

To address the concern that participants were estimating temporal distance between two clips based purely on the content of the clips (rather than their memory of when the clips had occurred in the story), we administered an identical time perception test to a separate group of 17 participants who had never heard the story. Naïve participants were asked to try their best to guess how much time passed between each pair of clips during the original telling of the story, even though they had never heard the story. Participants were told the length of the story (25 min, 33 s) and informed that the distance between two clips could not exceed that duration.

Event boundary test

A separate group of 9 participants were asked to listen to the same story and to press the space bar every time they thought an event had ended and a new event was beginning. This test was purely behavioral and fMRI data were not collected for these participants.

Behavioral data analysis

Significance of correlation between duration estimates and event boundaries

To assess whether the number of event boundaries in an interval predicted duration estimates for that interval, we related our original participants’ duration estimates to event boundary data collected from a separate group of 9 participants. For each 2-min interval from the time perception test, we counted the number of event boundaries that a participant had indicated during that interval and averaged that number across the 9 participants. This resulted in a mean number of event boundaries per interval, which was then correlated with the mean estimated duration of that interval from our original participants.