Abstract

Background: Answering the question of “what works” in healthcare can be complex and requires the careful design and sequential application of systematic methodologies. Over the last decade, the Samueli Institute has, along with multiple partners, developed a streamlined, systematic, phased approach to this process called the Scientific Evaluation and Review of Claims in Health Care (SEaRCH™). The SEaRCH process provides an approach for rigorously, efficiently, and transparently making evidence-based decisions about healthcare claims in research and practice with minimal bias.

Methods: SEaRCH uses three methods combined in a coordinated fashion to help determine what works in healthcare. The first, the Claims Assessment Profile (CAP), seeks to clarify the healthcare claim and question, and its ability to be evaluated in the context of its delivery. The second method, the Rapid Evidence Assessment of the Literature (REAL©), is a streamlined, systematic review process conducted to determine the quantity, quality, and strength of evidence and risk/benefit for the treatment. The third method involves the structured use of expert panels (EPs). There are several types of EPs, depending on the purpose and need. Together, these three methods—CAP, REAL, and EP—can be integrated into a strategic approach to help answer the question “what works in healthcare?” and what it means in a comprehensive way.

Discussion: SEaRCH is a systematic, rigorous approach for evaluating healthcare claims of therapies, practices, programs, or products in an efficient and stepwise fashion. It provides an iterative, protocol-driven process that is customized to the intervention, consumer, and context. Multiple communities, including those involved in health service and policy, can benefit from this organized framework, assuring that evidence-based principles determine which healthcare practices with the greatest promise are used for improving the public's health and wellness.

Keywords: evidence-based medicine, systematic review, expert panel, policy, decision-making, patient-centered care

Introduction

Consumers, practitioners, insurance companies, and governments spend billions of dollars annually on therapies that have limited or no solid medical evidence, and which may interact adversely with existing treatments or produce direct adverse effects of their own and even exacerbate existing medical conditions.1 Ideally, any type of treatment should only be offered to the public when it has known mechanisms of action and clinically relevant safety and efficacy data from definitive Phase III, controlled, randomized clinical trials. While such an ideal is not possible, more streamlined and systematic steps can at least be provided that are organized in a way to allow the most appropriate interventions to be based on best current evidence at the time.

In addition to the extensive use of conventional practices that are not evidence based, the public uses many practices from outside of the conventional healthcare system, usually without supervision or knowledge of their effectiveness. These are often called complementary and alternative medicine (CAM). When the two systems (conventional and CAM) are integrated into the mainstream health system, the term complementary and integrative medicine (CIM) or just integrative medicine (IM) is often used.2 The 2007 National Health Interview Survey of more than 30,000 U.S. adults found that 38% of American adults had used some form of CAM within the past year, spending nearly $36 billion on these practices and products, mostly out of their own pocket.3 In addition, it was recently shown that 44% of military members use CAM therapies.4 Despite widespread use and dramatic claims of benefit for serious disease, relatively little has been definitively established regarding the efficacy, effectiveness, and safety for a majority of IM practices.5

In order to select the most appropriate interventions from any practice, whether conventional, CAM, or IM, the first question is often “what works?” when deciding what to do, pay for, or avoid. As simple as this question is to ask, attempts to answer it for any particular treatment are often complex. This is true whether the question is applied to a product, practice, program, or policy, and for any particular outcome, be it cure, enhanced well-being, satisfaction, or cost. Because of this complexity, answering this question requires a careful design and the sequenced application of a set of methodologies.

This article describes a streamlined, systematic, phased process for determining “what works” for any treatment—be it a program, practice, or product—by breaking down the process into a subset of corollary questions designed to piece together the overall picture of the treatment and its outcomes.6–8 The approach is called the Scientific Evaluation and Review of Claims in Health Care (SEaRCH™), and uses three methods combined in a coordinated fashion to help determine what works in healthcare. The first method is the Claims Assessment Profile (CAP),8 which seeks to clarify the healthcare claim and question. The second method is the Rapid Evidence Assessment of the Literature (REAL©), which uses streamlined systematic review methods to determine the current state of the evidence.6 The third method involves the structured use of expert panels (EPs)7 in order to deliver evidence-informed decisions to the end user in a transparent fashion.

SEaRCH has been developed over a number of years with input from scientists, practitioners, healthcare administrators, and policy makers, and it has been “field tested” on multiple treatments and claims.6,7,9–15 This validation testing has been done on existing practices in areas of behavioral medicine, self-care, nutrition, life-style programs, and CIM. However, the principles of this framework are drawn from general scientific evaluation methods, and are applicable to any healthcare claim, whether about a program, practice, or product already in use. Its value is demonstrated not so much in examining new theories but in analyzing existing practices that have not been fully evaluated or validated. This article describes the evolution of SEaRCH as an organized framework, the sequences of corollary questions and methodologies that make it up, and the approaches developed to answer these questions and synthesize their information for determining “what works” in healthcare. Through collaboration and partnerships, it is hoped that this organized framework can be improved and implemented more widely to deliver evidence-informed knowledge of what works in healthcare.

Evolution and Development of SEaRCH

Typically, the scientific process begins in the laboratory or with a new technology, and then moves forward through Phase I, Phase II, and finally Phase III clinical trials. This process can take many years and cost hundreds of millions of dollars. Thus, many promising practices (even perhaps the best practices) never make it through this process. In recognition of this fact, researchers and funding agencies have taken the pragmatic approach of occasionally moving into Phase II and III trials before perfect information regarding mechanisms of action is available. These trials can still often take five or more years to complete and cost tens of millions of dollars. Many of the most promising CIM practices (perhaps even the best practices) are not commercially profitable, and so will never be supported at such levels or delivered to the public. In addition, a number of such trials have been performed, and the results have been negative.16 It is therefore imperative that the best choices possible are made regarding specific treatment protocols, including dosing, duration, and frequency of treatment. In the past, funding agencies such as the National Institutes of Health and the Department of Defense have relied upon information derived from evidence-based reviews, as well as public health significance and best case series, to develop Phase III clinical trial initiatives.17 The latter, however, are risky and expensive without adequate pilot data and systematic review evidence. Clinical trials of a more modest size help to develop preliminary tools and information on appropriate outcomes measures, feasibility of data collection, patient burden, and effect size. Prospective studies with appropriate control groups collect preliminary data and assist in the development of both mechanistic/preclinical studies and larger-scale randomized clinical trials.

SEaRCH was developed over a number of years with the support of the National Institutes of Health (NIH), the Centers for Disease Control and Prevention (CDC), and the U.S. military. It builds on earlier methods, including Field Investigations (FI) and the Prospective Outcomes Evaluation Monitoring System (POEMS) used by the National Cancer Institute. What was needed was an organized way to move the CIM field forward through rigorous evaluation of actual clinical practice. SEaRCH development has grown through public–private partnerships over the last decade. The original concept was conceived in 1996, through a governmental mandate to the NIH, to document and evaluate alternative therapies and practices. Through collaboration with the CDC, the Office of Alternative Medicine (OAM) at the NIH developed the Field Investigation and Practice Assessment (FIPA) program in 1997 and conducted evaluations of dozens of CAM practices around the world.

Field investigations

The concept of conducting CAM research within the practice setting is not unique. From 1995 to 1999, the NIH OAM sponsored the FIPA program, under which OAM conducted 33 site visits of CAM practices. The major goals of this program were to: (1) contact CAM and/or CIM and conventional practices that offered promising therapies for specific diseases; (2) assess the feasibility of conducting a practice outcomes assessment/monitoring program or a randomized controlled trial; and (3) screen unusual cases to see if sufficient data existed to engage in retrospective and/or prospective outcomes studies. The OAM followed up on initial site visits by contracting with the CDC to conduct formal field study investigations of promising CAM clinical practices. An example of this work is a field investigation on naturopathy in the treatment of menopause symptoms by Cramer et al.18 In addition, OAM, in conjunction with the National Cancer Institute, established the Cancer Advisory Panel for Complementary and Alternative Medicine (CAPCAM) to advise them on conducting a Best Case Series program.19 This program led to the development of large-scale randomized clinical trials to study therapies that offered promise for cancer outcomes, and evolved into using the Best Case Series design to screen other therapies making claims of benefit for cancer patients. While case series have been conducted by investigators in a number of countries on local treatments for serious disease, the research methodology used in these studies is often inadequate for supporting objective conclusions. SEaRCH addresses this inadequacy by pulling from and organizing the best of these methods, and gathering the research expertise necessary at the local and international level to collect sound data on these practices.

A variation of the NIH FIPA program was extended in 2003 under the Congressionally mandated CAM Research for Military Operations and Health Care (MIL-CAM) program run by the Samueli Institute. It was further developed under the name Epidemiological Documentation Service (EDS) through a subcontract to the National Foundation of Alternative Medicine (NFAM). The EDS was later taken over by the Samueli Institute where it was further developed, redesigned, and renamed SEaRCH. The goal of this latter redesign was to enable more rapid and complete throughput and assessment of not only CAM practices but any practice, conventional or unconventional, already in use and claiming a particular benefit. Part of the improvement in SEaRCH was done in collaboration with the RAND Corporation, which houses a Samueli/RAND Program on Policy Research in Integrative Health. This collaboration enhanced the descriptive and qualitative section of SEaRCH.8 In addition, the Institute has begun to incorporate EP methodology, adapted from RAND's “Appropriateness” process to complete the SEaRCH design described here.7,20

Prospective outcomes studies

One method not incorporated into SEaRCH in its current version is the prospective clinical trial. Walach has developed a cogent argument for the value of prospective outcomes studies.21 He cautions against rushing to placebo-controlled, randomized clinical trials without sufficient information on critical issues such as healing rates, diseases that might respond best to the therapy, time to initial improvement, dose, and so on. To address this, an approach was proposed called the prospective outcomes documentation system (PODS) for getting information on practice delivery and effectiveness in real-world settings. Unlike most prospective studies, PODS (and the similar method developed by NCI called POEMS) captures prospective data without interfering with or altering the clinical practice as a whole. An example can be found with prospective outcomes studies in the chiropractic literature. Hayden et al. conducted a prospective cohort study of chiropractic treatment for pediatric patients with low-back pain and concluded they had a favorable response to chiropractic management.22 Nyiendo et al. reported a series of positive findings from their practice-based study of chiropractic and medical patients with low-back pain.23–25

Perhaps the best examples of prospective outcomes evaluations have occurred in the area of CAM treatments for cancer. Richardson et al. collected prospective outcomes data from several oncology clinics that take a CAM approach to cancer therapy.26,27 They reported on both the feasibility and challenges of conducting outcomes research of CAM therapies in cancer clinics. Pfeifer and Jonas used a PODS approach to investigate Immuno-Augmentative Therapy (IAT), a CAM therapy used by thousands of cancer patients that had not been previously evaluated in a systematic fashion for either safety or efficacy.28 This PODS demonstrated no significant improvement in cancer survival following IAT over expected outcomes when all patients were followed up. A previous best case series had reported positive outcomes for IAT.29 Often a PODS or randomized clinical trial is recommended by a research panel after a claim is assessed by SEaRCH. That will be demonstrated later in this article.

SEaRCH Process and Frameworks

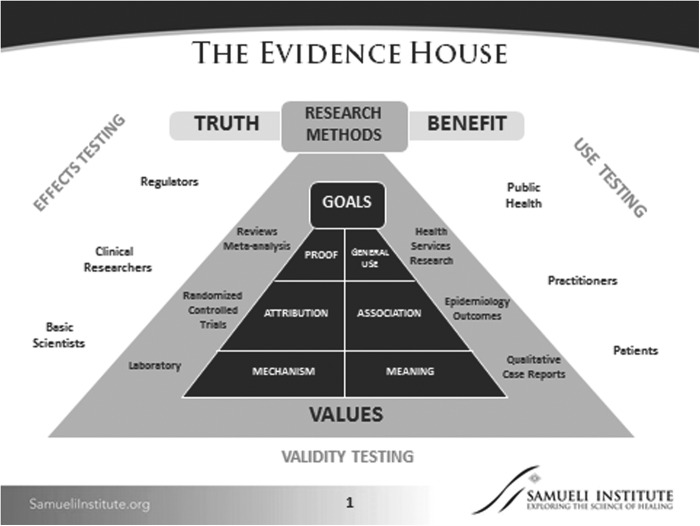

The SEaRCH process is drawn from two primary scientific methods for evaluation of therapeutic claims in medicine and healthcare. The first, called the “Evidence House,”30,31 is a modification of the standard “Evidence Hierarchy” used by multiple groups, including clinical trialists, systematic reviewers, clinical guideline developers, and federal agencies dealing with product regulation (e.g., the Food and Drug Administration), comparative effectiveness research (e.g., Agency for Healthcare Research and Quality), or patient-centered research (e.g., Patient-Centered Outcomes Research Institute; Fig. 1).

FIG. 1. The Evidence House.

In the Evidence House framework, knowledge elements are matched to particular methods and then these methods are matched to the goals of stakeholders and decision makers such as patients, practitioners, researchers, or regulators. These are placed in a semi-hierarchical arrangement, with laboratory and qualitative research as the foundational methods seeking to collect new information, with the goals of moving them toward determining their “reality” and “relevance,” respectively. Evidence is then added vertically through four other, more complex approaches needed to build on these two types of knowledge. Since SEaRCH is focused on existing practices or products, it draws from qualitative, observational, controlled trial and systematic review approaches to construct a “mixed-method” sequence specifically designed for the central question of interest: “what works in healthcare?”

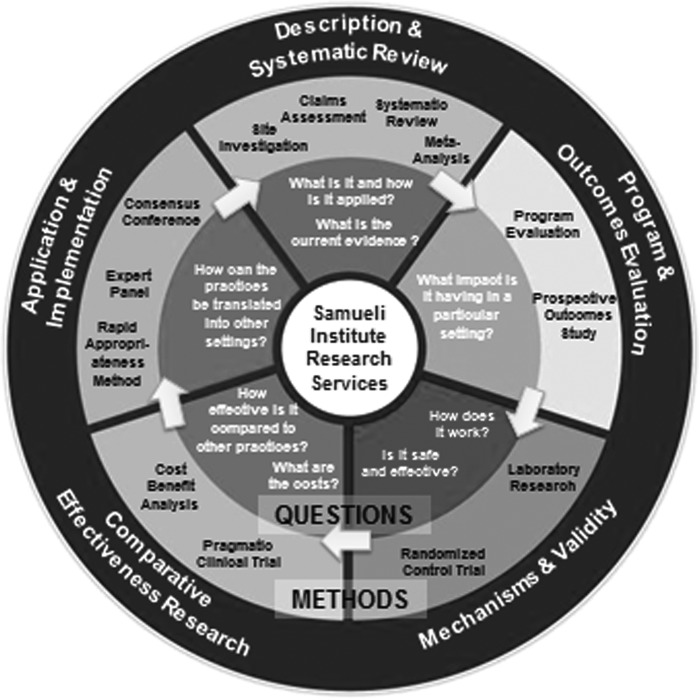

The second framework that was used in the design of SEaRCH is the Methodology Mandala© illustrated in Figure 2. Created primarily to facilitate better management of comparative effectiveness research, the Methodology Mandala uses a circle of questions (inner ring) with a matching set of methods (outer ring), each integrated into a set of coordinated approaches to the questions asked in each knowledge domain. Specifically, the current SEaRCH design draws from and streamlines the evaluation of methods beginning at the top of the Mandala through practice descriptions in order to answer “what is it and how is it being applied?” through a method called the CAP, and then uses systematic review methods to answer “what is the current state of the evidence?” through a streamlined process called the REAL. Then it selects application and implementation methodologies to answer the question, “How can the practice be translated to other settings?” using structured, evidence-informed EPs. Together, these methods, which are all aimed to answer specific questions about what works, are arranged into a simplified, phased methodological process (Table 1). In other words, each method is designed to answer the particular sub-questions needed to complete the knowledge necessary to achieve the “what works in healthcare?” goal. The outcome of this process can be applied to decisions about appropriateness of a practice, policies for payment and implementation, and/or in building a logical research agenda. The use of SEaRCH methodology may point to the use of other methods listed around the Mandala, such as the PODS or randomized trials, and ultimately, the translation of the evidence into public value.

FIG. 2. Research Methodology Mandala©.

Table 1. SEaRCH Framework

| Query | SEaRCH method | Outcome |
|---|---|---|
| What is it? How is it applied? | Claims Assessment Profiles and stakeholder engagement | Detailed description of practice, process, and reported outcomes |
| What is the current evidence? | Systematic reviews | Summary of the evidence supporting practice or product |
| How can the practice be utilized? | Expert panels | Detailed outline for next steps in research or clinical application |

SEaRCH, Scientific Evaluation and Review of Claims in Health Care.

SEaRCH Methods

The current version of SEaRCH consists of three primary methods: the CAP, REAL, and EP process. The CAP methodology seeks to describe the practice and clarify the healthcare claim/question. It does this by accurately describing the practice, precisely defining what it claims to do, and determining readiness, capacity, and resources involved in further research or evaluation.8 The second method is the REAL, which is a streamlined, efficient systematic review process conducted to determine the quantity, quality, and strength of evidence and risk/benefit for the treatment as reflected in current research. The REAL provides the evidence base of a healthcare claim, so that groups can identify the gaps and next steps needed in a field.6 The final method involves the structured management of EPs for making value judgments about the use of current evidence.7 There are several types of EPs, depending on the purpose and need. A Clinical Expert Panel focuses expert opinion on the appropriateness of a given clinical practice or product for clinical use. A Research Expert Panel focuses expert opinion on research directions for a practice or product. A Policy Expert Panel focuses on making evidence-based policy judgments needed to direct implementation of a practice claim. Patients can be incorporated into the panel process for making more patient-centered decisions, which is called a Patient Expert Panel.

Method for Addressing the Sub-Questions

Describing the intervention

Each of the above methods is designed to provide the types of information needed to answer “what works in healthcare?” These are posed as a series of sub-questions that must be addressed in order to have a full evidence basis for the answer. The first sub-question involves defining and describing what the intervention is for any claim. If the intervention is a single chemical agent, this becomes relatively simple, including standardization and quality control of the product and isolation of its effects in randomized, placebo-controlled trials. If the product is a combination of chemicals, such as an herb or supplement, quality control and the issues of synergy among product components greatly multiply the complexity. If the intervention is a practice, then variation in the practice adds further complexity to the description. For example, a surgical procedure may be described in uniform terms but delivered in a variety of ways. A procedure such as acupuncture, where different philosophies and individualization of treatment occur, also adds complexity. If an intervention involves a combination of practices customized to the particular patient involving, for example, a product for delivery, a method of education, and a behavioral change (such as in lifestyle change), this further increases variations in delivery. If the intervention requires compliance of the patient or participant, behavioral changes, or changes in attitude and expectation, the key components of the “it” (the intervention or treatment) may be difficult to measure and so may be missed in an evaluation. Multiply this complexity again if there are multiple interventions in a policy guideline attempting to optimize and coordinate various approaches. These types of interventions (product, practice, program, and policy) layer on each other, and rapidly increase the complexity and variability of delivery, measurement, and analysis.
The first methodological segment in SEaRCH—the CAP—is designed to address this complexity. The CAP provides an approach to determine what the “it” is for answering the question of “what works?” A full description and examples of the CAP method can be found in detail elsewhere.8 The outcome of a CAP is a full description of the intervention and its hypothesized outcome and impact on the claim.

Describing the outcomes

In addition to answering the question of what the “it” of the intervention is, the question of what impact “it” has becomes another sub-question the CAP addresses. This is the question of “it works for what?” or “what is the claimed or hypothesized outcome(s) from the treatment?” An outcome might be a defined change in a biochemical parameter such as cholesterol, or it might be the elimination of pathology such as a tumor, or it might be the alleviation or elimination of an illness, as defined by a complex experience of symptoms or a functional ability. If the outcome falls into a more elusive or subjective category such as wellness or prevention, it becomes more complex and difficult to measure. For example, consider how wellness is defined; “who wants it, in what context, and over what time period?” Depending on the stakeholder, the outcome may have nothing to do with individual health at all. Common outcome questions include “what is the cost?” “how many people are getting the service?” or “how does it compare with some other intervention?” Some of these outcomes may or may not be of particular interest to a patient or their family. The involvement of key stakeholders throughout each segment of SEaRCH, not only the CAP but also the REAL and EP, frames the outcomes to ensure relevance for the end user.6–8

Describing the population

Another corollary question is “what works for whom?” Is the outcome something that will be used by or for a specific group? For example, cancer survival and a longer life might be the main goal of the medical profession for cancer patients. However, in an elderly population or those undergoing a serious intervention with many side effects, this may not be the outcome of most interest to a patient. Patients may be more interested in quality of life, or achieving specific lifeline goals.

Describing the comparisons

Finally, the most complex corollary question of all may be “how does it work when compared to what?” To determine if an intervention is producing a value relative to other interventions requires that they be compared on outcome, adverse effects, feasibility, preference, and cost, both human and economic. That is, what is the overall cost-value of two different interventions? A rational approach to such a comparison can only be conducted when the primary intervention is fully described in the CAP.8 For example, if the intervention is a chemical, it means comparing it to the same treatment without the chemical. If the intervention is a practice or a program, it means comparing it to a patient receiving a different practice or program, or receiving no practice or program. The first of these comparisons is called “efficacy,” and the second of these comparisons is called “comparative effectiveness.” These are very different types of evidence, and working with key stakeholders to decide what is the most meaningful and useful evidence needed is essential in framing research questions and agendas.

Design of SEaRCH

Given the complexity of answering the primary question of “what works?” and the sub-questions of “what is it, for what, for whom, and compared to what?” that are essential for ensuring useful evidence, it is little wonder that so few existing interventions (conventional or complementary) are adequately evaluated. Often, some of the sub-questions are answered, but without the full picture of evidence needed to answer the primary question of “what works?” The CAP helps to define clearly, with the stakeholders involved, the answers to those essential questions. The REAL comes into play for determining “what is the current evidence?” to support the “it,” and for clarifying the “what, for whom, and compared to what?” issues. This clarification follows the Population, Intervention, Control/Comparator, and Outcomes (PICO) framework for systematic review,32 in which the evidence of interest is clearly defined with the key stakeholders upfront and a streamlined process is applied to determine the evidence base for the claim rigorously and objectively. Finally, the EP process allows decision makers in healthcare (policy makers, researchers, clinicians, and patients) to weigh in on complex decisions with good evidence as a basis.
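To make the PICO elements concrete, the following minimal Python sketch shows one way a review question could be recorded and checked for completeness before a review begins. It is an illustration only and not part of the published REAL tooling: the class name `PicoQuestion`, its methods, and the example values (including the comparator) are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PicoQuestion:
    """Hypothetical container for the four PICO elements of a review question."""
    population: str
    intervention: str
    comparator: str
    outcomes: List[str]

    def missing_elements(self) -> List[str]:
        """Return the names of any PICO elements that have been left empty."""
        missing = []
        if not self.population.strip():
            missing.append("population")
        if not self.intervention.strip():
            missing.append("intervention")
        if not self.comparator.strip():
            missing.append("comparator")
        if not self.outcomes:
            missing.append("outcomes")
        return missing

    def as_question(self) -> str:
        """Render the elements as a single plain-language review question."""
        outcomes = " and ".join(self.outcomes)
        return (
            f"In {self.population}, does {self.intervention}, "
            f"compared with {self.comparator}, affect {outcomes}?"
        )


# Illustrative values only; the comparator is an assumption made for this example.
question = PicoQuestion(
    population="adults with chronic pain",
    intervention="massage therapy",
    comparator="no treatment",
    outcomes=["function"],
)
print(question.as_question())
```

Recording the four elements separately makes it easy to see, before any literature search, which part of “what, for whom, and compared to what?” has not yet been agreed with stakeholders.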

Aligning the components of SEaRCH

There is currently no systematic, streamlined, and inexpensive way of going from one sub-question to another in an organized manner in healthcare decisions. SEaRCH is designed to do that. SEaRCH creates a linear, phased set of methods to answer each sub-question. To preserve rigor, each method is applied using current standards of evidence quality.
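The linear, phased ordering can be sketched in a few lines of Python. This is a simplified illustration built from the queries and outcomes in Table 1; the names `Phase`, `SEARCH_PHASES`, and `next_phase` are hypothetical and do not describe the Samueli Institute's actual platform.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Phase:
    name: str     # short label used in this sketch
    query: str    # sub-question the phase answers (from Table 1)
    outcome: str  # what the phase delivers (from Table 1)


# Phases in the order the article describes: CAP, then REAL, then EP.
SEARCH_PHASES = (
    Phase("CAP", "What is it? How is it applied?",
          "Detailed description of practice, process, and reported outcomes"),
    Phase("REAL", "What is the current evidence?",
          "Summary of the evidence supporting practice or product"),
    Phase("EP", "How can the practice be utilized?",
          "Detailed outline for next steps in research or clinical application"),
)


def next_phase(completed: Iterable[str]) -> Optional[Phase]:
    """Return the earliest phase not yet completed, or None when all are done."""
    done = set(completed)
    for phase in SEARCH_PHASES:
        if phase.name not in done:
            return phase
    return None


print(next_phase(["CAP"]).query)
```

Because `next_phase` always returns the earliest unfinished phase, a later phase is never suggested while an earlier one is still open, which mirrors the stepwise design described in the text.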

Streamlining judgment, managing bias, and enhancing rigor

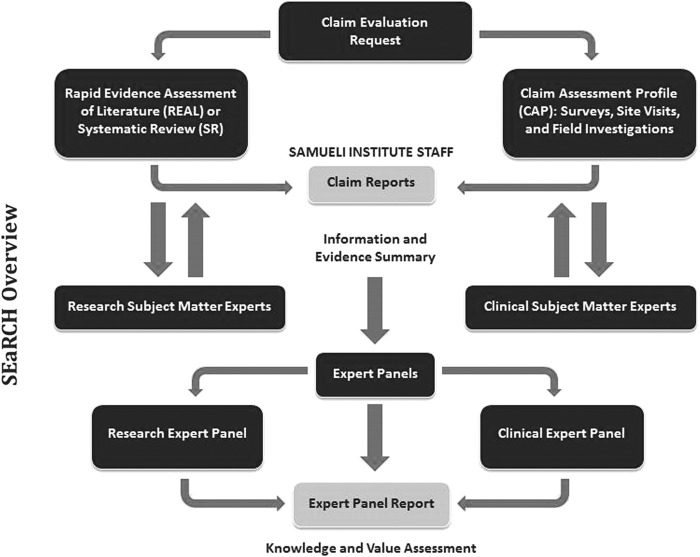

In order to achieve a streamlined process for incorporating judgments about relevance, SEaRCH uses two important systems. First, it uses a structured EP process to elicit judgments. That process can focus on what research approaches are needed (research panel), or what is appropriate for clinical practice based on existing evidence (clinical panel), or what implementation guidelines are needed (policy panel), or to determine patient needs, preferences, and perspectives (patient panel). Whatever its composition, the EP uses a semi-quantitative Delphi method. The method involves extracting judgment from the stakeholders (clinicians, researchers, policy makers, and patients) in a way that allows for independent, blinded opinions from diverse perspectives about the relevance and use of the evidence presented. This reduces the bias that is often introduced when EPs are employed and, at the same time, accesses the best expert judgment grounded in evidence. Second, SEaRCH uses an information technology platform throughout its design that increases efficiency and reliability and ensures complete transparency. Through the use of an online technology platform designed specifically for this process, SEaRCH is able to deliver results faster with improved accuracy and reliability and provides a complete audit trail of all changes made during each step of the process. Samueli has applied this system to integrate across the SEaRCH steps so that all data can be captured remotely in the system and the reports shared across the three steps. The technology alone can reduce cost and time threefold. Figure 3 graphically illustrates the design of SEaRCH and how the sub-questions are answered in each step.

FIG. 3. Scientific Evaluation and Review of Claims in Health Care (SEaRCH™) overview.
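The semi-quantitative Delphi step described above can be illustrated with a short Python sketch that summarizes one round of independent ratings for a single statement. The article does not specify the rating scale or the agreement rules, so the 1 to 9 scale, the median bands, and the one-third disagreement rule below are assumptions modeled loosely on conventions commonly associated with RAND-style appropriateness panels; the function name `summarize_ratings` is hypothetical.

```python
from statistics import median
from typing import Dict, List, Union


def summarize_ratings(ratings: List[int]) -> Dict[str, Union[float, bool, str]]:
    """Summarize independent 1-9 panel ratings for one statement.

    Assumed rules (not taken from the article):
      - median 7-9 -> "appropriate", median 1-3 -> "inappropriate",
        anything in between -> "uncertain"
      - disagreement when at least one third of panelists rate 1-3
        and at least one third rate 7-9; disagreement forces "uncertain"
    """
    if not ratings or any(r < 1 or r > 9 for r in ratings):
        raise ValueError("ratings must be a non-empty list of values from 1 to 9")

    med = median(ratings)
    low = sum(1 for r in ratings if r <= 3)
    high = sum(1 for r in ratings if r >= 7)
    third = len(ratings) / 3
    disagreement = low >= third and high >= third

    if disagreement or 3 < med < 7:
        label = "uncertain"
    elif med >= 7:
        label = "appropriate"
    else:
        label = "inappropriate"
    return {"median": med, "disagreement": disagreement, "label": label}


# Illustrative ratings from a hypothetical nine-member panel.
print(summarize_ratings([7, 8, 8, 9, 7, 6, 8, 9, 7]))
```

Collecting the ratings independently and reducing them to a median plus a disagreement flag is what keeps any single panelist from dominating the result; statements flagged as uncertain would typically be discussed and re-rated in a later round.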

Each of these methods (the CAP, REAL, and EP) has been described in detail in previous publications.6–8 The approach is designed for practices that are already being used and delivered, such as those in primary care, traditional (indigenous) practices, market-derived products and procedures, military medicine, and CAM/CIM. It is especially useful in controversial areas where independent analysis, bias reduction, transparency, and balanced input from diverse stakeholders are needed. In addition, SEaRCH is useful for the evaluation of self-care practices such as weight loss, dietary supplements, and stress and pain management programs that people adopt on their own.9–12,14,15,33 The approach has been presented at national and international conferences, in research methodology forums, and through workshops and online training. The goal is to be able to use this method in any area of healthcare when confronted with the challenging question of “what works?”

Conclusions

This article describes the overall SEaRCH process and its components. These include the CAP, the REAL, and the EP. The CAP process provides a detailed description of the treatment and claim. The REAL process provides a rigorous assessment of the current literature to support making evidence-based decisions. EPs are the final method used for gaining structured insight into what experts believe about the implications of the evidence for a given treatment or approach. Together, these methods can be used to address the question of “what works?” in healthcare in a rigorous yet efficient and stepwise manner. In summary, SEaRCH is a series of methodologies for evaluating claims about the prevention or treatment of disease and/or for improving health. Through its systematic, streamlined, protocol-driven process, it can critically evaluate claims for healthcare. Consumers, medical and healthcare communities, and researchers can benefit from SEaRCH by using it to evaluate healthcare claims to determine those with greatest promise for treating disease and improving health.

Acknowledgments

The authors would like to acknowledge Mr. Avi Walter for his assistance with the overall SEaRCH™ process developed at the Samueli Institute, and Ms. Viviane Enslein for her assistance with manuscript preparation. In addition, the authors would like to acknowledge all partners who were involved in the evolution and development of the SEaRCH framework.

This project was partially supported by award number W81XWH-08-1-0615-P00001 (United States Army Medical Research Acquisition Activity). The views expressed in this article are those of the authors and do not necessarily represent the official policy or position of the U.S. Army Medical Command or the Department of Defense, or those of the National Institutes of Health, Public Health Service, or the Department of Health and Human Services.

Author Disclosure Statement

No competing financial interests exist.

References

- 1. Berwick D. Don Berwick, MD on transitioning to value-based health care. Healthc Financ Manage 2013;67:56–59.

- 2. Coulter I, Khorsan R, Crawford C, et al. Challenges of systematic reviewing integrative health care. Integr Med Insights 2013;8:19–28.

- 3. National Health Statistics Report: Costs of complementary and alternative medicine (CAM) and frequency of visits to CAM practitioners. Online document at: www.cdc.gov/mmwr/preview/mmwrhtml/mm5735a5.htm, accessed September 7, 2016.

- 4. Goertz C, Marriott B, Finch M, et al. Military report more complementary and alternative medicine use than civilians. J Altern Complement Med 2013;19:509–517.

- 5. Coulter ID, Khorsan R, Crawford C, et al. Integrative health care under review: An emerging field. J Manipulative Physiol Ther 2010;33:690–710.

- 6. Crawford C, Boyd C, Jain S, et al. Rapid Evidence Assessment of the Literature (REAL©): Streamlining the systematic review process and creating utility for evidence-based health care. BMC Res Notes 2015;8:631.

- 7. Coulter I, Elfenbaum P, Jain S, et al. SEaRCH expert panel process: Streamlining the link between evidence and practice. BMC Res Notes 2016;9:16.

- 8. Hilton L, Jonas WB. Claim Assessment Profile: A method for capturing health care evidence in the Scientific Evaluation and Review of Claims in Health Care (SEaRCH). J Altern Complement Med 2016. [Epub ahead of print]; DOI: 10.1089/acm.2016.0292

- 9. Buckenmaier C III, Crawford C, Lee C, et al. Are active self-care complementary and integrative therapies effective for management of chronic pain? A Rapid Evidence Assessment of the Literature and recommendations for the field. Pain Med 2014;15:S1–S113.

- 10. Crawford C, Boyd C, Paat CF, et al. The impact of massage therapy on function in pain populations—A systematic review and meta-analysis of randomized controlled trials: Part I, patients experiencing pain in the general population. Pain Med 2016. May 10 [Epub ahead of print].

- 11.Boyd C, Crawford C, Paat CF, et al. The impact of massage therapy on function in pain populations—A systematic review and meta-analysis of randomized controlled trials: Part II, cancer pain populations. Pain Med 2016. May 10 [Epub ahead of print] [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Boyd C, Crawford C, Paat CF, et al. The impact of massage therapy on function in pain populations—A systematic review and meta-analysis of randomized controlled trials: Part III, surgical pain populations. Pain Med 2016. May 10 [Epub ahead of print] [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Crawford C, Teo L, Elfenbaum P, et al. Moving nutritional science evidence into practice: A methodological approach. Nutr Rev, in press [DOI] [PubMed] [Google Scholar]

- 14.Attipoe S, Zeno SA, Lee C, et al. Tyrosine for mitigating stress and enhancing performance in healthy adult humans: A Rapid Evidence Assessment of the Literature. Mil Med 2015;180:754–765 [DOI] [PubMed] [Google Scholar]

- 15.Costello RB, Lentino CV, Boyd CC, et al. The effectiveness of melatonin for promoting healthy sleep: A Rapid Evidence Assessment of the Literature. Nutr J 2014;13:106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Jonas W, Eisenberg D, Hufford D, et al. The evolution of complementary and alternative medicine (CAM) in the USA over the last 20 years. Forsch Komplementmed 2013;20:65–72 [DOI] [PubMed] [Google Scholar]

- 17.National Center for Complementary and Alternative Medicine Third Strategic Plan 2011–2015: Exploring the Science of Complementary and Alternative Medicine. Online doucument at: http://nccam.nih.gov/about/plans/2011, accessed July17, 2013

- 18.Cramer EH, Jones P, Keenan NL, et al. Is naturopathy as effective as conventional therapy for treatment of menopausal symptoms? J Altern Complement Med 2003;9:529–538 [DOI] [PubMed] [Google Scholar]

- 19.Institute of Medicine (U.S.) Committee on the Use of Complementary and Alternative Medicine by the American Public. Complementary and Alternative Medicine in the United States. 1. Introduction. Online document at:://www.ncbi.nlm.nih.gov/books/NBK83804/, accessed October18, 2016

- 20.Shekelle P, Adams A, Chassin M, et al. The Appropriateness of Spinal Manipulation for Low-Back Pain: Project Overview and Literature Review. Santa Monica, CA: RAND, 1991 [Google Scholar]

- 21.Walach H. The efficacy paradox in randomized controlled trials of CAM and elsewhere: Beware of the placebo trap. J Altern Complement Med 2001;7:213–218 [DOI] [PubMed] [Google Scholar]

- 22.Hayden JA, Mior SA, Verhoef MJ. Evaluation of chiropractic management of pediatric patients with low back pain: A prospective cohort study. J Manipulative Physiol Ther 2003;26:1–8 [DOI] [PubMed] [Google Scholar]

- 23.Nyiendo J, Haas M, Goldberg B, et al. A descriptive study of medical and chiropractic patients with chronic low back pain and sciatica: Management by physicians (practice activities) and patients (self-management). J Manipulative Physiol Ther 2001;24:543–551 [DOI] [PubMed] [Google Scholar]

- 24.Haas M, Nyiendo J, Aickin M. One-year trend in pain and disability relief recall in acute and chronic ambulatory low back pain patients. Pain 2002;95:83–91 [DOI] [PubMed] [Google Scholar]

- 25.Stano M, Haas M, Goldberg B, et al. Chiropractic and medical care costs of low back care: Results from a practice-based observational study. Am J Manag Care 2002;8:802–809 [PubMed] [Google Scholar]

- 26.Richardson MA, Russell NC, Sanders T, et al. Assessment of outcomes at alternative medicine cancer clinics: A feasibility study. J Altern Complement Med 2001;7:19–32 [DOI] [PubMed] [Google Scholar]

- 27.Richardson MA, Sanders T, Palmer JL, et al. Complementary/alternative medicine use in a comprehensive cancer center and the implications for oncology. J Clin Oncol 2000;18:2505–2514 [DOI] [PubMed] [Google Scholar]

- 28.Pfeifer BL, Jonas WB. Clinical evaluation of “immunoaugmentative therapy (IAT)”: An unconventional cancer treatment. Integr Cancer Ther 2003;2:112–113 [DOI] [PubMed] [Google Scholar]

- 29.Shekelle P, Coulter I, Hardy ML, et al. Best-case series for the use of immuno-augmentation therapy and naltrexone for the treatment of cancer. Evid Rep Technol Assess (Summ) 2003:2p. [PMC free article] [PubMed] [Google Scholar]

- 30.Jonas W. The Evidence House: How to build an inclusive base for complementary medicine. West J Med 2001;175:79–80 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Jonas W, Guerrera M. Complementary and alternative medicine. In: South-Paul J, Matheny S, Lewis E, eds. Current Diagnosis and Treatment in Family Medicine. 4th ed. New York: McGraw-Hill Education, 2015:519–529 [Google Scholar]

- 32.Richardson WS, Wilson MC, Nishikawa J, et al. The well-built clinical question: A key to evidence-based decisions. ACP J Club 1995;123:A12–13 [PubMed] [Google Scholar]

- 33.Crawford C, Teo L, Yang E, et al. Is hyperbaric oxygen therapy effective for traumatic brain injury? A rapid evidence assessment of the literature and recommendations for the field. J Head Trauma Rehabil 2016. September 6 [Epub ahead of print] [DOI] [PMC free article] [PubMed] [Google Scholar]