Abstract

Introduction

The odds ratio (OR) is one of the most commonly used measures of association in preventive medicine, yet it is unintuitive and easily misinterpreted by journal authors and readers.

Methods

This article describes correct interpretations of ORs, explains how ORs are different from risk ratios (RRs), and notes potential supplements and alternatives to the presentation of ORs that may help readers avoid confusion about the strength of associations.

Results

ORs are often interpreted as though they have the same meaning as RRs (i.e., ratios of probabilities rather than ratios of odds), an interpretation that is incorrect in cross-sectional and longitudinal analyses. Without knowing the base rate of the outcome event in such analyses, it is impossible to evaluate the size of the absolute or relative change in risk associated with an OR, and misinterpreting the OR as an RR leads to the overestimation of the effect size when the outcome event is common rather than rare in the study sample. In case-control analyses, whether an OR can be interpreted as an RR depends on how the controls were selected.

Conclusions

Education, peer reviewer vigilance, and journal reporting standards concerning ORs may improve the clarity and accuracy with which this common measure of association is described and understood in preventive medicine and public health research.

Introduction: A Tale of Three ORs

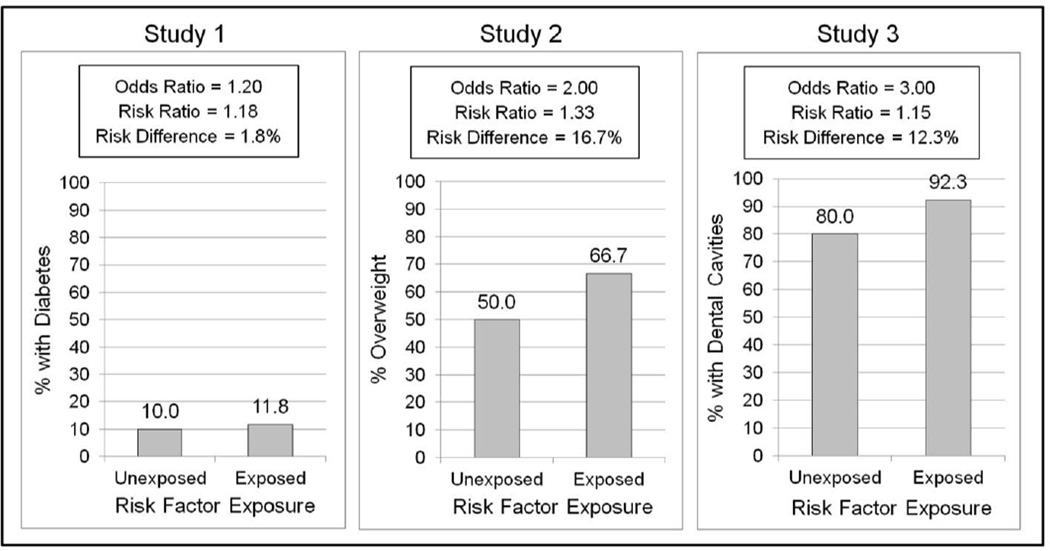

Imagine the results of three hypothetical cross-sectional studies on a risk factor for various health problems. The risk factor was associated with increased odds of being diagnosed with diabetes in Study 1 (OR=1.2), increased odds of being overweight in Study 2 (OR=2.0), and increased odds of having dental cavities in Study 3 (OR=3.0). Which of these outcomes is most strongly associated with the risk factor? Some readers may be tempted to say that the risk factor increases risk for dental cavities the most, believing that an OR of 3.0 means that exposed participants were 3.0 times as likely as unexposed participants to have cavities, and that ORs of 1.2 and 2.0 reflect 20% and 100% increases in risk, respectively. These interpretations of ORs are incorrect.

In fact, the increase in risk associated with each OR depends on the rate of the outcome in the study sample. Figure 1 shows hypothetical results of each study. In Study 1, if only 10% of the participants unexposed to the risk factor had diabetes, then an OR of 1.2 would reflect an increase in risk from 10% to 11.8% (an 18% increase). In Study 2, if 50% of the participants unexposed to the risk factor were overweight, then an OR of 2.0 would reflect an increase in risk from 50% to 66.7% (a 33% increase). Finally, in Study 3, if 80% of the participants unexposed to the risk factor had cavities (e.g., if the study was conducted in a very high-risk population), then an OR of 3.0 would reflect an increase in risk from 80% to 92.3% (a 15% increase). Thus, under these hypothetical outcome rates, Study 2 would show the largest increase in risk in both relative and absolute terms, even though its OR was much smaller than that of Study 3 (2.0 vs 3.0). Moreover, Study 3 would show the smallest relative increase in risk (15%), despite having the largest OR.

Figure 1.

Hypothetical results of three cross-sectional risk factor studies showing that the increase in risk associated with a given OR depends on the rate of the outcome variable in the study sample. Study 3 found the largest OR (3.0) but the smallest risk ratio (15% increase in risk) and the second largest risk difference (increase in risk from 80% to 92.3%).
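The Figure 1 arithmetic can be reproduced with a short sketch: convert the risk among unexposed participants to odds, multiply by the OR, and convert the result back to a probability. The function name `risk_in_exposed` is illustrative, and the inputs are the hypothetical base rates and ORs of Studies 1 to 3.

```python
def risk_in_exposed(risk_unexposed: float, odds_ratio: float) -> float:
    """Risk among exposed participants implied by a baseline risk and an OR."""
    odds_unexposed = risk_unexposed / (1 - risk_unexposed)  # probability -> odds
    odds_exposed = odds_unexposed * odds_ratio               # apply the OR on the odds scale
    return odds_exposed / (1 + odds_exposed)                 # odds -> probability


# Hypothetical base rates and ORs from Studies 1-3 in the text
studies = {"Study 1": (0.10, 1.2), "Study 2": (0.50, 2.0), "Study 3": (0.80, 3.0)}

for name, (p0, odds_ratio) in studies.items():
    p1 = risk_in_exposed(p0, odds_ratio)
    rr = p1 / p0   # relative comparison
    rd = p1 - p0   # absolute comparison
    print(f"{name}: OR={odds_ratio:.1f}  risk {p0:.1%} -> {p1:.1%}  "
          f"RR={rr:.2f} ({rr - 1:.0%} increase)  RD={rd * 100:.1f} points")
```

Run as written, this reproduces the 11.8%, 66.7%, and 92.3% risks described above, and shows that Study 2 has both the largest RR and the largest risk difference.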

Distinguishing Between ORs and Risk Ratios

Because ORs are ratios of odds rather than probabilities, they are unintuitive. A probability is an easy-to-understand concept that refers to the number of times an event is expected to occur divided by the number of chances for it to occur (frequentist interpretation) or the degree of belief that an event will occur (Bayesian interpretation). For instance, in ten fair coin flips, the probability of “heads” is 5 divided by 10, or 0.5. By contrast, odds refer to the number of times an event is expected to occur divided by the number of times it is expected not to occur. In ten fair coin flips, the odds of “heads” are 5 divided by 5, or 1. In daily life, risk is rarely described in terms of odds, as odds have “no clear conceptual meaning (outside of horse racing circles),”1 and many consumers of public health research may be unaware that odds are defined differently from probabilities.
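A minimal sketch of the two definitions, using Python's standard `fractions` module so the values print exactly; the helper names `probability` and `odds` are hypothetical.

```python
from fractions import Fraction


def probability(occurrences: int, opportunities: int) -> Fraction:
    """Frequentist probability: occurrences divided by chances to occur."""
    return Fraction(occurrences, opportunities)


def odds(occurrences: int, opportunities: int) -> Fraction:
    """Odds: occurrences divided by non-occurrences."""
    return Fraction(occurrences, opportunities - occurrences)


# Ten fair coin flips, five of them expected to be heads
print(probability(5, 10))  # 1/2
print(odds(5, 10))         # 1

# 450 outcome events among 1,000 participants
print(probability(450, 1000), odds(450, 1000))  # 9/20 9/11
```

The gap between the two scales widens as events become common: a probability of 0.45 corresponds to odds of about 0.82.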

As shown in Table 1, ORs are defined in terms of odds, whereas risk ratios (RRs) are defined in terms of probabilities. For instance, in a hypothetical study of the cross-sectional association between sitting time and obesity in which 450 of 1,000 sedentary participants were obese, compared with 300 of 1,000 non-sedentary participants, the OR for obesity would be 1.9 (i.e., [450/550] / [300/700]). However, sedentariness would be associated with only a 50% increase in the risk of obesity (i.e., RR=[450/1,000] / [300/1,000]=1.5). The practical meaning of the results based on the OR (i.e., 1.9 times higher odds of obesity among sedentary individuals) may be unclear to readers, and misinterpreting the OR as an RR would overestimate the size of the association.

Table 1.

Terms of Interest

| Term | Definition |
|---|---|
| Risk ratio (RR) | the probability of an outcome event in one group divided by the probability of the event in another group |
| Odds ratio (OR) | the odds of an outcome event in one group divided by the odds of the event in another group |
| Risk difference | the probability of an outcome event in one group minus the probability of the event in another group |
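The three measures in Table 1 can be computed directly from a 2×2 table of counts. The sketch below applies them to the hypothetical sitting-time example (450 of 1,000 sedentary vs 300 of 1,000 non-sedentary participants obese); the function name is illustrative.

```python
def association_measures(cases_exposed, n_exposed, cases_unexposed, n_unexposed):
    """Return the RR, OR, and risk difference for a 2x2 table given as counts."""
    risk_exposed = cases_exposed / n_exposed
    risk_unexposed = cases_unexposed / n_unexposed
    odds_exposed = cases_exposed / (n_exposed - cases_exposed)
    odds_unexposed = cases_unexposed / (n_unexposed - cases_unexposed)
    return {
        "RR": risk_exposed / risk_unexposed,   # ratio of probabilities
        "OR": odds_exposed / odds_unexposed,   # ratio of odds
        "RD": risk_exposed - risk_unexposed,   # difference of probabilities
    }


m = association_measures(450, 1000, 300, 1000)
print(f"RR = {m['RR']:.2f}, OR = {m['OR']:.2f}, RD = {m['RD']:.2f}")
```

The OR (about 1.9) exceeds the RR (1.5) because obesity is common in both groups.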

Confusion about the meaning of odds leads many authors and readers of scientific articles to interpret ORs from cross-sectional and longitudinal studies as though they meant the same thing as RRs.2 For instance, a report may incorrectly conclude that a risk factor “doubled the risk (OR=2.0)” for an adverse outcome, or that people in one group were “over three times as likely (OR=3.2)” or “75% more likely (OR=1.75)” than those in another group to engage in a risk behavior. Such incorrect interpretations of ORs have been flagged in medicine and public health for decades,2–10 yet they continue to proliferate.

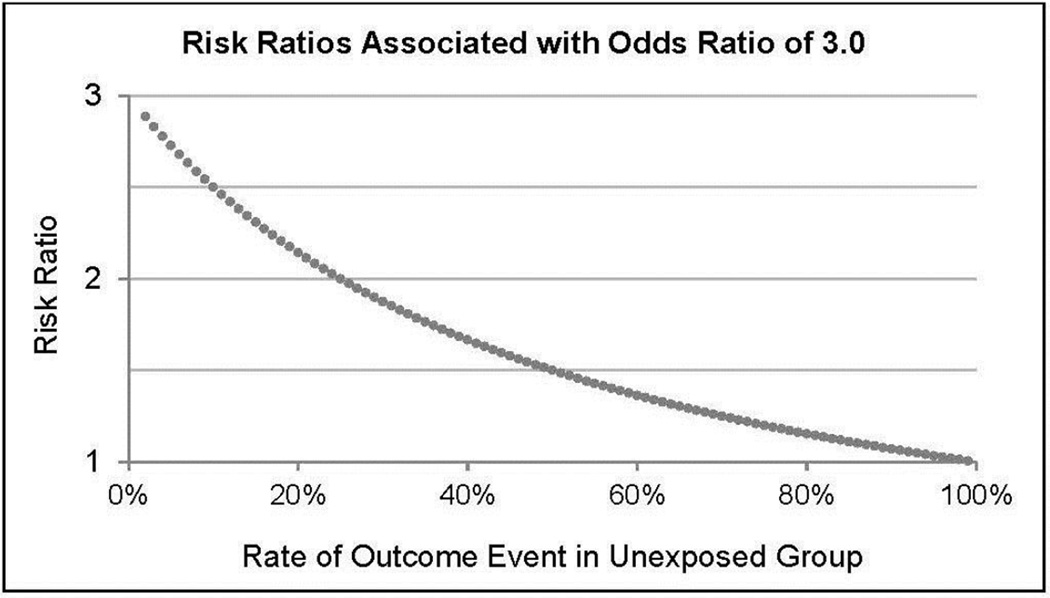

In cross-sectional and longitudinal analyses, misinterpreting an OR as an RR inflates the apparent size of an association. The extent of overestimation depends on the base rate of the outcome variable in the study sample. If the outcome has a very low base rate in the sample (i.e., if it is very uncommon among people unexposed to the risk factor), the OR may give a rough approximation of the RR (e.g., Study 1, Figure 1). This is referred to as the “rare disease assumption” in epidemiology.11 However, as the base rate of the outcome variable increases, the OR and RR diverge: for a given RR, the OR lies further from 1 the more common the outcome is. Thus, when outcomes are common, the OR can greatly exceed the RR. Figure 2 provides an example, showing that an OR of 3.0 does not indicate a tripling of risk and, in fact, corresponds to a shrinking RR as the base rate of the outcome in the sample increases.

Figure 2.

Risk ratios associated with an OR of 3.0, depending on the rate of the outcome event among participants unexposed to the risk factor, in cross-sectional and longitudinal analyses.
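The curve in Figure 2 follows from a short derivation. Writing p_0 for the risk among unexposed participants and p_1 for the risk among exposed participants (notation introduced here for illustration), the OR fixes the odds among the exposed, which can then be converted back to a risk:

```latex
\begin{aligned}
\frac{p_1}{1-p_1} &= \mathrm{OR}\cdot\frac{p_0}{1-p_0}
\quad\Longrightarrow\quad
p_1 = \frac{\mathrm{OR}\cdot p_0}{1 - p_0 + \mathrm{OR}\cdot p_0},\\[4pt]
\mathrm{RR} &= \frac{p_1}{p_0} = \frac{\mathrm{OR}}{1 - p_0 + \mathrm{OR}\cdot p_0}.
\end{aligned}
```

With OR = 3.0 this reduces to RR = 3/(1 + 2p_0): close to 3 when p_0 is near 0, 1.5 when p_0 = 0.5, and about 1.15 when p_0 = 0.8 (as in Study 3).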

In case-control analyses, ORs can be used to estimate RRs without the rare disease assumption, but this depends on how the controls are selected.1,12 The logic rests on a statistical property of ORs: the OR for an outcome given an exposure is mathematically equivalent to the OR for the exposure given the outcome.13 Because of this property, in case-control analyses, the OR for an outcome based on an exposure can be calculated by selecting cases and controls and comparing their odds of exposure. Furthermore, if the controls are selected without regard to their status on the outcome variable (i.e., if controls may or may not have experienced the outcome event), the controls will provide information about the odds of exposure in the overall sample, including both cases and non-cases, and the OR from such case-control analyses will be equivalent to the RR regardless of the base rate of the outcome event in the study sample (i.e., no rare disease assumption needed).12
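A sketch of the control-selection argument, using expected counts rather than random sampling. The source cohort reuses the hypothetical sitting-time counts, and the 10% control sampling fraction is an arbitrary assumption. Controls drawn only from non-cases reproduce the OR, whereas controls drawn from the whole cohort regardless of outcome reproduce the RR.

```python
def exposure_odds_ratio(exposed_cases, unexposed_cases, exposed_controls, unexposed_controls):
    """Odds of exposure among cases divided by odds of exposure among controls."""
    return (exposed_cases / unexposed_cases) / (exposed_controls / unexposed_controls)


# Hypothetical source cohort: (cases, total) by exposure group
EXPOSED = (450, 1000)
UNEXPOSED = (300, 1000)
SAMPLING_FRACTION = 0.1  # assumed share of eligible people selected as controls

# Symmetry: the OR for the outcome given exposure...
outcome_or = (EXPOSED[0] / (EXPOSED[1] - EXPOSED[0])) / (UNEXPOSED[0] / (UNEXPOSED[1] - UNEXPOSED[0]))
# ...equals the OR for exposure given the outcome (cases vs all non-cases)
exposure_or = exposure_odds_ratio(EXPOSED[0], UNEXPOSED[0],
                                  EXPOSED[1] - EXPOSED[0], UNEXPOSED[1] - UNEXPOSED[0])

# Design A: controls sampled only from non-cases -> estimates the OR
or_noncase_controls = exposure_odds_ratio(
    EXPOSED[0], UNEXPOSED[0],
    SAMPLING_FRACTION * (EXPOSED[1] - EXPOSED[0]),
    SAMPLING_FRACTION * (UNEXPOSED[1] - UNEXPOSED[0]),
)

# Design B: controls sampled from everyone, regardless of outcome -> estimates the RR
or_unrestricted_controls = exposure_odds_ratio(
    EXPOSED[0], UNEXPOSED[0],
    SAMPLING_FRACTION * EXPOSED[1],
    SAMPLING_FRACTION * UNEXPOSED[1],
)
risk_ratio = (EXPOSED[0] / EXPOSED[1]) / (UNEXPOSED[0] / UNEXPOSED[1])

print(f"outcome OR = {outcome_or:.3f}, exposure OR = {exposure_or:.3f}")
print(f"non-case controls: {or_noncase_controls:.3f} (matches the OR)")
print(f"unrestricted controls: {or_unrestricted_controls:.3f} (RR = {risk_ratio:.3f})")
```

Because the sampling fraction applies equally to exposed and unexposed people, it cancels out of the exposure OR; only the pool the controls are drawn from matters.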

Understanding the difference between ORs and RRs in cross-sectional and longitudinal analyses is critical because researchers in preventive medicine often study highly common outcomes (e.g., obesity, adverse outcomes in high-risk populations), for which the two measures of association diverge. When the RR is small but the outcome event is common, a large OR may draw unwarranted attention or lead to unwarranted conclusions among those who interpret and apply results. Such misinterpretations may be particularly common among those who disseminate or apply (rather than conduct) research, such as the media, policymakers, or clinicians, if they are not familiar with the statistical calculations underlying ORs and RRs. Moreover, misinterpretations of ORs may also have implications for meta-analytic syntheses of research results, as statistical recommendations for converting ORs to effect sizes for meta-analyses do not take the base rate of the outcome into account.14
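To illustrate the meta-analysis point, the sketch below assumes the conversion described by Chinn,14 d = ln(OR) × √3/π (roughly ln(OR)/1.81). The same OR of 3.0 always maps to the same effect size d, whereas the corresponding RR, from RR = OR/(1 − p0 + OR·p0) with p0 the risk among the unexposed, depends on the base rate. The base rates shown are hypothetical.

```python
import math


def chinn_effect_size(odds_ratio: float) -> float:
    """Standardized mean difference from an OR: ln(OR) * sqrt(3) / pi."""
    return math.log(odds_ratio) * math.sqrt(3) / math.pi


def risk_ratio_from_or(odds_ratio: float, risk_unexposed: float) -> float:
    """RR implied by an OR when the risk among the unexposed is known."""
    return odds_ratio / (1 - risk_unexposed + odds_ratio * risk_unexposed)


for p0 in (0.05, 0.50, 0.80):  # hypothetical base rates
    print(f"base rate {p0:.0%}: d = {chinn_effect_size(3.0):.2f}, "
          f"RR = {risk_ratio_from_or(3.0, p0):.2f}")
```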

Improving Communication About Relative and Absolute Risk

Although unintuitive, the OR remains a very commonly used measure in public health research because of its statistical properties. In cross-sectional and longitudinal analyses, the frequent use of ORs likely reflects the fact that the statistic is easy to compute in logistic regression models using commonly available statistical software.6 In particular, adjusted ORs (AORs) can easily be computed to describe associations after controlling for one or more potentially confounding variables.6 As mentioned above, the OR also has statistical properties that make it an ideal statistic for use in case-control analyses.13

The popularity of ORs, combined with their unintuitive nature, means that researchers need to be better educated to make proper use of ORs. As with other concepts in the increasingly complex field of medical statistics, this requires a foundational understanding of probability.15 Moreover, given the interest of non-researchers (e.g., policymakers and the media) in scientific research,16,17 future papers in which ORs are presented might take steps to help readers understand the sizes of the relative and absolute risks being described.

One potential method of clarifying the sizes of relative and absolute risk is to calculate and present the predicted probability of the outcome at various levels of the predictor variable (e.g., exposed versus unexposed). This can be done using standard output from a logistic regression.18 When a logistic regression model includes covariates, the predicted probabilities can be calculated based on specific values of the covariates (e.g., their average values, modal values, or reference levels), but it should be noted that the resulting predicted probabilities will allow inference only to the stratum specified by the values of the covariates in the calculations.19
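A minimal sketch of stratum-specific predicted probabilities from a logistic model with one binary covariate. The coefficients `B0`, `B_EXPOSED`, and `B_OLDER` are hypothetical values chosen for illustration, not estimates from any study; in practice they would come from a fitted model.

```python
import math


def expit(log_odds: float) -> float:
    """Inverse logit: convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical fitted coefficients (illustrative only, not from any study):
# logit(p) = B0 + B_EXPOSED * exposed + B_OLDER * older
B0, B_EXPOSED, B_OLDER = -1.0, 0.7, 0.5


def predicted_probability(exposed: int, older: int) -> float:
    """Predicted probability of the outcome for one covariate pattern."""
    return expit(B0 + B_EXPOSED * exposed + B_OLDER * older)


def stratum_summary(older: int) -> dict:
    """Compare exposed vs unexposed within one stratum of the covariate."""
    p1 = predicted_probability(1, older)
    p0 = predicted_probability(0, older)
    return {
        "p_exposed": p1,
        "p_unexposed": p0,
        "OR": (p1 / (1 - p1)) / (p0 / (1 - p0)),
        "RR": p1 / p0,
    }


for older in (0, 1):
    s = stratum_summary(older)
    print(f"older={older}: {s['p_unexposed']:.3f} -> {s['p_exposed']:.3f}, "
          f"OR={s['OR']:.2f}, RR={s['RR']:.2f}")
```

In both strata the OR equals exp(0.7), about 2.01, but the RR differs (roughly 1.58 vs 1.46), so each pair of predicted probabilities describes only the stratum it was computed for.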

Predicted probabilities can also be averaged across values of the covariates (incorporating weights that reflect the distribution of the covariates in the population of interest) to yield predicted probabilities for the overall population.19 These predicted probabilities can be used to compare the risk of exposed versus unexposed individuals using an RR.19,20
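Averaging over the covariate distribution can be sketched as follows, assuming the same kind of hypothetical logistic coefficients and an assumed target population that is 60% younger and 40% older. Each person is assigned each exposure level in turn, and the predicted probabilities are weighted by the population shares.

```python
import math


def expit(log_odds: float) -> float:
    """Inverse logit: convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical coefficients and target-population covariate distribution
B0, B_EXPOSED, B_OLDER = -1.0, 0.7, 0.5
POPULATION_SHARE = {0: 0.6, 1: 0.4}  # assumed shares of younger (0) and older (1) people


def standardized_risk(exposed: int) -> float:
    """Predicted risk if everyone had this exposure level, averaged over the population."""
    return sum(share * expit(B0 + B_EXPOSED * exposed + B_OLDER * older)
               for older, share in POPULATION_SHARE.items())


risk_exposed = standardized_risk(1)
risk_unexposed = standardized_risk(0)
print(f"standardized risk: {risk_unexposed:.3f} (unexposed) vs {risk_exposed:.3f} (exposed)")
print(f"RR = {risk_exposed / risk_unexposed:.2f}")
```

The weights determine the population to which the resulting RR applies; a different covariate distribution would generally yield a different RR from the same model.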

Additionally, predicted probabilities from logistic regression can be compared using a risk difference, obtained by subtracting the predicted probability among unexposed individuals from that among exposed individuals. When the risk factor is causal, this difference in risk between exposed and unexposed individuals is termed the attributable risk,21 a statistic that can be helpful in describing the potential benefits of risk mitigation efforts. Presenting the absolute risk among exposed and unexposed individuals is consistent with expert recommendations on communicating research findings to the public, which point out that it is inadequate to describe relative risk (e.g., “twice the risk”) without describing the absolute levels of the risks involved.22 After all, a doubling of risk may reflect an increase from 0.001% to 0.002% or from 10% to 20%, which have very different implications and importance.22
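The two "doubling of risk" scenarios above can be compared on both scales with a few lines; the helper name `compare_risks` is illustrative.

```python
def compare_risks(risk_exposed: float, risk_unexposed: float) -> dict:
    """Relative (RR) and absolute (RD) comparison of two risks given as proportions."""
    return {"RR": risk_exposed / risk_unexposed, "RD": risk_exposed - risk_unexposed}


# The two "doubling of risk" scenarios from the text, as proportions
scenarios = {
    "0.001% -> 0.002%": compare_risks(0.00002, 0.00001),
    "10% -> 20%": compare_risks(0.20, 0.10),
}
for label, m in scenarios.items():
    print(f"{label}: RR = {m['RR']:.1f}, RD = {m['RD'] * 100:.3f} percentage points")
```

Both scenarios share an RR of 2.0, but the risk differences are 0.001 and 10 percentage points.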

Alternatives to logistic regression are also available to calculate and present relative risk information without the need for ORs, as described in a recent paper.7 In that paper, simulations showed that Poisson regression, log-binomial regression, and a “doubling of cases” method (i.e., in which the data are manipulated such that a logistic regression can produce an RR) can yield correct RRs and CIs.7 Each of these methods has unique limitations that should be weighed against the data set, the researchers’ programming expertise, and the goals of the analysis (e.g., log-binomial regression models may fail to converge in some cases).7 Also, although presenting RRs rather than ORs can help clarify the size of the relative risk being reported, it does not clarify the absolute levels of risk involved. Thus, reporting the base rate of the outcome is still useful for interpretation.
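A minimal, dependency-free sketch of two of these alternatives for a single binary exposure, using the hypothetical sitting-time counts. `poisson_risk_ratio` fits log(risk) = b0 + b1 × exposed by Newton-Raphson on grouped data (in practice a statistical package's GLM routine would be used); `doubled_cases_risk_ratio` duplicates each case as an extra non-case, so the odds in each group equal the risk and the cross-product ratio, which a logistic regression with one binary predictor reproduces, equals the RR. Both functions are hypothetical helpers that return point estimates only; valid CIs need additional steps (e.g., robust variance estimates) that this sketch omits.

```python
import math


def poisson_risk_ratio(cases_exp, n_exp, cases_unexp, n_unexp, max_iter=100, tol=1e-12):
    """Fit log(risk) = b0 + b1*exposed to grouped binary data by Newton-Raphson.

    Uses the Poisson log-likelihood (as in 'modified Poisson' regression);
    exp(b1) is the estimated risk ratio.
    """
    groups = [(1.0, cases_exp, n_exp), (0.0, cases_unexp, n_unexp)]
    b0 = b1 = 0.0
    for _ in range(max_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, cases, n in groups:
            expected = n * math.exp(b0 + b1 * x)  # expected number of cases
            resid = cases - expected
            g0 += resid                # score for b0
            g1 += x * resid            # score for b1
            h00 += expected            # information matrix entries
            h01 += x * expected
            h11 += x * x * expected
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det  # solve the 2x2 Newton system
        step1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + step0, b1 + step1
        if max(abs(step0), abs(step1)) < tol:
            break
    return math.exp(b1)


def doubled_cases_risk_ratio(cases_exp, n_exp, cases_unexp, n_unexp):
    """'Doubling of cases': each case is also added to the data as a non-case.

    Non-cases then number n in each group, so the odds equal the risk and the
    cross-product ratio (the logistic-regression OR for one binary predictor)
    equals the RR.
    """
    noncases_exp = (n_exp - cases_exp) + cases_exp
    noncases_unexp = (n_unexp - cases_unexp) + cases_unexp
    return (cases_exp / noncases_exp) / (cases_unexp / noncases_unexp)


print(f"Poisson RR: {poisson_risk_ratio(450, 1000, 300, 1000):.3f}")
print(f"Doubling-of-cases RR: {doubled_cases_risk_ratio(450, 1000, 300, 1000):.3f}")
```

Both approaches recover the RR of 1.5 rather than the OR of about 1.9 for these counts.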

Of note, clearly and accurately presenting measures of association also involves other considerations such as avoiding misleading readers about causality. As noted in a seminal discussion of the population attributable fraction, policymakers and others have an inherent interest in “how much of the disease burden in a population could be eliminated…”23 Thus, statistically intuitive measures of relative and absolute risk are most useful “when the factor of interest is clearly causally related to the end point and when there is consensus that the exposure is amenable to intervention.”23 When these conditions are not met, authors should note this and explain the limitations concerning what can be learned from the particular measure of association. When part or all of an association may be caused by another variable, even a statistically accurate presentation of an OR or RR may generate unwarranted attention concerning a risk factor among researchers, the public, or those interested in mitigating risk.

Ensuring clear and accurate effect size presentation is critical to scientific advancement in preventive medicine, as reflected in policy statements from professional organizations24 and discussions of “the importance of meaning” in public health research.25,26 Making progress toward this goal may require a multipronged approach. One strategy is raising awareness among researchers and authors about the meaning of ORs, an effort to which the present article aims to contribute. Vigilance is also required on the part of journal reviewers during the peer review process to ensure accurate interpretation of ORs and to suggest alternatives or complements to the presentation of ORs that clearly describe relative and absolute risk. Finally, a parsimonious way of reaching the research community, including all authors and, by extension, an entire journal readership, may be to include standards for the presentation of relative risk information directly in the journal’s instructions for authors. Instructions may include a warning against misinterpreting ORs as RRs and potential supplements and alternatives to the presentation of ORs to clearly describe levels of relative and absolute risk.

Acknowledgments

The authors gratefully acknowledge three anonymous reviewers for comments on a previous draft of this manuscript.

Although the first author is a U.S. Food and Drug Administration/Center for Tobacco Products (FDA/CTP) employee, this work was not done as part of his official duties. This publication reflects the views of the authors and should not be construed to reflect the FDA/CTP’s views or policies.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

No financial disclosures were reported by the authors of this paper.

References

1. Rodrigues L, Kirkwood BR. Case-control designs in the study of common diseases: updates on the demise of the rare disease assumption and the choice of sampling scheme for controls. Int J Epidemiol. 1990;19(1):205–213. doi: 10.1093/ije/19.1.205.

2. Katz KA. The (relative) risks of using odds ratios. Arch Dermatol. 2006;142(6):761–764. doi: 10.1001/archderm.142.6.761.

3. Knol MJ, Duijnhoven RG, Grobbee DE, Moons KG, Groenwold RH. Potential misinterpretation of treatment effects due to use of odds ratios and logistic regression in randomized controlled trials. PLoS One. 2011;6(6):e21248. doi: 10.1371/journal.pone.0021248.

4. Osborne JW. Bringing balance and technical accuracy to reporting odds ratios and the results of logistic regression analyses. Pract Assess Res Eval. 2006;11(7):1–6.

5. Sackett DL, Deeks JJ, Altman DG. Down with odds ratios! Evid Based Med. 1996;1(6):164–166.

6. Schmidt CO, Kohlmann T. When to use the odds ratio or the relative risk? Int J Public Health. 2008;53(3):165–167. doi: 10.1007/s00038-008-7068-3.

7. Knol MJ, Le Cessie S, Algra A, Vandenbroucke JP, Groenwold RH. Overestimation of risk ratios by odds ratios in trials and cohort studies: alternatives to logistic regression. CMAJ. 2012;184(8):895–899. doi: 10.1503/cmaj.101715.

8. O’Connor AM. Interpretation of odds and risk ratios. J Vet Intern Med. 2013;27(3):600–603. doi: 10.1111/jvim.12057.

9. Tajeu G, Sen B, Allison DB, Menachemi N. Misuse of odds ratios in obesity literature: an empirical analysis of published studies. Obesity. 2012;20(8):1726–1731. doi: 10.1038/oby.2012.71.

10. Holcomb WL Jr, Chaiworapongsa T, Luke DA, Burgdorf KD. An odd measure of risk: use and misuse of the odds ratio. Obstet Gynecol. 2001;98(4):685–688. doi: 10.1016/s0029-7844(01)01488-0.

11. Greenland S, Thomas DC. On the need for the rare disease assumption in case-control studies. Am J Epidemiol. 1982;116(3):547–553. doi: 10.1093/oxfordjournals.aje.a113439.

12. Pearce N. What does the odds ratio estimate in a case-control study? Int J Epidemiol. 1993;22(6):1189–1192. doi: 10.1093/ije/22.6.1189.

13. Bland JM, Altman DG. The odds ratio. BMJ. 2000;320:1468. doi: 10.1136/bmj.320.7247.1468.

14. Chinn S. A simple method for converting an odds ratio to effect size for use in meta-analysis. Stat Med. 2000;19(22):3127–3131. doi: 10.1002/1097-0258(20001130)19:22<3127::aid-sim784>3.0.co;2-m.

15. Brimacombe MB. Biostatistical and medical statistics graduate education. BMC Med Educ. 2014;14:18. doi: 10.1186/1472-6920-14-18.

16. Choi BCK. Twelve essentials of science-based policy. Prev Chronic Dis. 2005;2(4):A16.

17. Moynihan R, Bero L, Ross-Degnan D, et al. Coverage by the news media of the benefits and risks of medications. N Engl J Med. 2000;342(22):1645–1650. doi: 10.1056/NEJM200006013422206.

18. Bewick V, Cheek L, Ball J. Statistics review 14: logistic regression. Crit Care. 2005;9(1):112–118. doi: 10.1186/cc3045.

19. Muller CJ, MacLehose RF. Estimating predicted probabilities from logistic regression: different methods correspond to different target populations. Int J Epidemiol. 2014;43(3):962–970. doi: 10.1093/ije/dyu029.

20. Wilcosky TC, Chambless LE. A comparison of direct adjustment and regression adjustment of epidemiologic measures. J Chronic Dis. 1985;38(10):849–856. doi: 10.1016/0021-9681(85)90109-2.

21. Cole P, MacMahon B. Attributable risk percent in case-control studies. Br J Prev Soc Med. 1971;25(4):242–244. doi: 10.1136/jech.25.4.242.

22. Woloshin S, Schwartz LM. Questions to guide reporting. Dartmouth Institute for Health Policy & Clinical Practice; 2016. www.tdi.dartmouth.edu/images/uploads/TDICautiontipsheet.pdf. Accessed January 17, 2016.

23. Rockhill B, Newman B, Weinberg C. Use and misuse of population attributable fractions. Am J Public Health. 1998;88(1):15–19. doi: 10.2105/ajph.88.1.15.

24. Wilkinson L. Statistical methods in psychology journals: guidelines and explanations. Am Psychol. 1999;54(8):594–604. doi: 10.1037/0003-066X.54.8.594.

25. Chan LS. Minimal clinically important difference (MCID)--adding meaning to statistical inference. Am J Public Health. 2013;103(11):e24–e25. doi: 10.2105/AJPH.2013.301580.

26. Vaughan RD. The importance of meaning. Am J Public Health. 2007;97(4):592–593. doi: 10.2105/AJPH.2006.105379.