Abstract

Numerous professional bodies have questioned whether traditional input-based continuing professional development (CPD) schemes are effective at measuring genuine learning and at improving practice performance and patient health. The most commonly used long-established CPD activities, such as conferences, lectures and symposia, have been found to have a limited effect on practitioner competence and performance, and no significant effect on patient health outcomes. Additionally, the impact of many CPD activities is thought to be reduced when they are undertaken in isolation, outside a defined structure of directed learning. In contrast, CPD activities that are interactive, encourage reflection on practice, provide opportunities to practise skills, involve multiple exposures, help practitioners to identify gaps between current performance and a standard to be achieved, and are focused on outcomes are the most effective at improving practice and patient health outcomes.

CONTINUING professional development (CPD) is a career-long process that requires practitioners to enhance their knowledge, acquire new skills and build on existing ones (Bamrah and Bhugra 2009). The main objective of CPD in the medical profession is to promote up-to-date, high-quality patient care by ensuring that clinicians have access to the learning opportunities they need to maintain and improve their ability to practise (Grant and Stanton 2001).

Traditionally, CPD has been delivered primarily in the form of lectures, conferences and workshops. These activities support supplementary learning within an input-based system and typically require clinicians to record the time spent on a CPD activity, or the number of points or credits accrued through attendance at CPD events. Traditional, or input-based, schemes have historically been regarded as simple and cost-effective, and provide an easily quantifiable method of measuring individual CPD activity (Friedman and Woodhead 2007). However, professional bodies have begun to question whether simply recording the time spent on CPD indicates genuine learning, or will lead to any change in practice (IAESB 2008).

More recently, outcomes-based CPD schemes have been introduced, or considered, by a number of professional bodies and policymakers (Moore and others 2009). Outcomes-based CPD seeks to measure outputs with evidence; that is, the impact of CPD on personal and professional development, and on outcomes for patients. The objective of this approach is therefore to provide a measure of genuine learning and professional improvement. However, producing a definitive measure of learning and of impact on practice is complicated, and requires more time and resources than an input-based scheme (Friedman and Woodhead 2007, IAESB 2008).

In this review, the effectiveness of traditional CPD activities in promoting learning and improving clinical competence in the medical professions is appraised by examining the relevant key literature. Proposals for potentially increasing the impact of these and other activities by organising them within defined outcomes-based structures are then described, with particular focus on the types of framework that may be effective at linking desired outputs to appropriate assessment. The aim of this review is to help the veterinary profession develop an informed view of the benefits of introducing an outcomes-based approach to CPD.

Analysis of the literature

A literature search was conducted using two keyword search strategies. The first used the keywords ‘CPD or continuing professional development AND impact or impacts AND outcome or outcomes’. The second used the keywords ‘CME or continuing medical education AND impact or impacts AND outcome or outcomes’. The keywords ‘CME or continuing medical education’ were included because, within the medical profession, these terms are often used interchangeably with ‘CPD’ and ‘continuing professional development’.
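The keyword strategies leave the grouping of OR and AND implicit. The minimal Python sketch below shows one plausible reading, assuming each set of synonyms was OR-ed together and the three concept groups were then AND-ed. It does not reproduce the exact syntax submitted to CAB Abstracts, CAB Direct, PubMed or VetMed, each of which has its own query conventions, and `or_group` and `build_query` are hypothetical helpers.

```python
def or_group(terms):
    """Join synonymous terms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*concepts):
    """Combine concept groups with AND, so that every concept must be matched."""
    return " AND ".join(or_group(group) for group in concepts)


# Synonym groups shared by both strategies (assumed grouping)
IMPACT = ["impact", "impacts"]
OUTCOME = ["outcome", "outcomes"]

strategy_1 = build_query(["CPD", "continuing professional development"], IMPACT, OUTCOME)
strategy_2 = build_query(["CME", "continuing medical education"], IMPACT, OUTCOME)

print(strategy_1)
print(strategy_2)
```

Making the parentheses explicit matters because, without them, some search engines apply AND before OR, which would retrieve a very different set of papers.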

A search of four databases (CAB Abstracts, CAB Direct, PubMed and VetMed) found 205 papers with search strategy 1, and 617 papers with search strategy 2. Sixty-two papers that were directly relevant to the review remit were identified for the purposes of this review. The remaining papers were discarded as they did not provide relevant information regarding the effectiveness of CPD activities or schemes.

Traditional (input-based) CPD: associated activities and their impact on clinical practice and healthcare outcomes

In recent decades, medical professionals in developed countries have spent significant amounts of time taking part in traditional CPD activities. During the 1990s, physicians reported spending, on average, 50 hours a year participating in traditional CPD activities (Difford 1992, Goulet and others 1998). These activities are often similar in format to those provided in higher education. They most commonly consist of didactic events, such as workshops, seminar series and attendance at symposia and conferences, and, on the whole, do not form part of a formal structure that seeks to use them to address specific gaps in professional performance. Activities generally comprise lectures and presentations which are often supplemented by printed materials. This format of CPD is underpinned by a belief that an increase in knowledge will improve the way in which medical professionals practise and lead to improved patient outcomes (Davis and others 1999).

Systematic reviews that investigated the impact of traditional CPD activities consistently found that the most commonly used techniques, such as conferences, lectures and symposia, had very little impact on professional practice and healthcare outcomes (Davis and others 1995, 1999, Bloom 2005, Forsetlund and others 2009). None of the reviews reported a high effect of didactic activities on clinician performance (Table 1), and the same reviews unanimously reported that didactic activities had no statistically significant effect on patient outcomes (Table 2). However, the majority of reviews indicate that activities that encourage active participation, use multiple exposures to content, encourage reflection on current practice, provide opportunities to practise skills, and help clinicians identify gaps between current performance and an identified standard often result in highly significant changes in practice and, in some instances, in patient outcomes (Mann and others 1997, O'Brien and others 2001, Bloom 2005, Marinopoulos and others 2007, Drexel and others 2010). Examples of such activities include interactive workshops, academic outreach and audit/feedback; given their effectiveness, it has been suggested that they should attract more credits in an input-based system (Bloom 2005).

Table 1:

Effects of tested CPD activities on clinician performance

| CPD activity | High | Moderate | Low | None |
|---|---|---|---|---|
| Didactic programmes | 0 | 3 | 7 | 10 |
| Interactive | 5 | 6 | 2 | 0 |
| Audit/feedback | 6 | 11 | 4 | 2 |
| Academic outreach | 6 | 8 | 1 | 0 |
| Opinion leaders | 0 | 3 | 4 | 2 |
| Reminders | 9 | 9 | 5 | 0 |
| Clinical practice guidelines | 0 | 3 | 2 | 0 |
| Information only | 0 | 2 | 3 | 8 |

Values represent number of systematic review studies reporting high, moderate, low or no effects of a CPD activity on clinician care processes (adapted from Bloom [2005])

Table 2:

Effects of tested CPD activities on patient health outcomes

| CPD activity | High | Moderate | Low | None |
|---|---|---|---|---|
| Didactic programmes | 0 | 0 | 0 | 14 |
| Interactive | 0 | 3 | 1 | 3 |
| Audit/feedback | 0 | 5 | 3 | 2 |
| Academic outreach | 1 | 4 | 1 | 0 |
| Opinion leaders | 1 | 0 | 0 | 0 |
| Reminders | 2 | 4 | 2 | 2 |
| Clinical practice guidelines | 0 | 1 | 0 | 0 |
| Information only | 0 | 0 | 1 | 2 |

Values represent number of systematic review studies reporting high, moderate, low or no effects of a CPD activity on patient health outcomes (adapted from Bloom [2005])
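To make the pattern in Tables 1 and 2 easier to compare across activities, the short Python sketch below computes, for each activity, the share of systematic reviews that reported a moderate or high effect. The counts are transcribed from the two tables; the ‘moderate or high’ threshold is an illustrative choice, not Bloom's (2005). Because the values are numbers of reviews rather than effect sizes, and some denominators are very small (a single review for opinion leaders and for clinical practice guidelines on patient health), these proportions are a descriptive summary only, not a meta-analysis.

```python
# Counts transcribed from Tables 1 and 2 (adapted from Bloom 2005):
# number of systematic reviews reporting a (high, moderate, low, no) effect.
PERFORMANCE = {
    "Didactic programmes": (0, 3, 7, 10),
    "Interactive": (5, 6, 2, 0),
    "Audit/feedback": (6, 11, 4, 2),
    "Academic outreach": (6, 8, 1, 0),
    "Opinion leaders": (0, 3, 4, 2),
    "Reminders": (9, 9, 5, 0),
    "Clinical practice guidelines": (0, 3, 2, 0),
    "Information only": (0, 2, 3, 8),
}
PATIENT_HEALTH = {
    "Didactic programmes": (0, 0, 0, 14),
    "Interactive": (0, 3, 1, 3),
    "Audit/feedback": (0, 5, 3, 2),
    "Academic outreach": (1, 4, 1, 0),
    "Opinion leaders": (1, 0, 0, 0),
    "Reminders": (2, 4, 2, 2),
    "Clinical practice guidelines": (0, 1, 0, 0),
    "Information only": (0, 0, 1, 2),
}


def share_moderate_or_high(counts):
    """Fraction of reviews reporting a moderate or high effect (None if no reviews)."""
    high, moderate, low, none = counts
    total = high + moderate + low + none
    return (high + moderate) / total if total else None


for activity, perf_counts in PERFORMANCE.items():
    patient_counts = PATIENT_HEALTH[activity]
    perf = share_moderate_or_high(perf_counts)
    patient = share_moderate_or_high(patient_counts)
    # n is the number of reviews behind each proportion
    print(f"{activity:<30} performance {perf:4.0%} (n={sum(perf_counts):2})  "
          f"patient health {patient:4.0%} (n={sum(patient_counts):2})")
```

Printing the number of reviews next to each proportion keeps the small denominators visible, which guards against over-reading, for example, the single opinion-leader review on patient health.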

The effectiveness of interactive activities on clinician performance and patient outcomes is further enhanced when they are combined with didactic events (Davis and others 1995, 1999, Lougheed and others 2007, Forsetlund and others 2009). Indeed, a considerable amount of evidence suggests that even low-efficacy CPD activities can yield a measurable benefit when combined with interactive techniques (Haynes and others 1984, Johnston and others 1994, Wensing and Grol 1994, Drexel and others 2010).

Outcomes-based CPD models

Outcomes-based CPD schemes place greater responsibility on participants to set out their CPD requirements and demonstrate how their CPD activities have improved their professional performance and patient health. This CPD model more explicitly recognises that different professionals will have different development needs (Department of Health 2003) and requires individual practitioners to take greater ownership of their professional development by following four broad stages of an outcomes-based CPD cycle (FGDP 2011). These stages are:

▪ Reflecting on their practice to identify their own developmental needs;

▪ Undertaking appropriate CPD activities to meet the developmental need(s) they have identified;

▪ Applying what they learnt to their practice; and

▪ Measuring the impact of CPD on their practice and patient health, and identifying any further developmental needs.

The recent shift away from quantitative input-based CPD models towards more structured, qualitative outcomes-based approaches has been challenging for the professional bodies that have introduced such schemes, because outcomes are difficult to measure definitively (Jones and Jenkins 2006). However, outcomes-based CPD frameworks have now been formulated, published and, in some instances, put into practice or incorporated into existing schemes (Department of Health 2003, AOMRC 2015), in an attempt to focus both well-established and novel CPD activities on achieving desired outcomes. This approach is reinforced by research showing that, while many CPD activities contribute little in isolation to improved clinician performance or patient health outcomes, CPD activities that are planned according to certain principles within a defined structure can have a significant impact in these areas (Marinopoulos and others 2007).

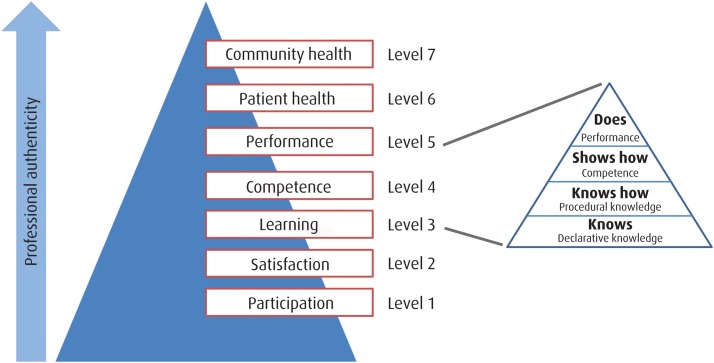

One prominent framework, devised by Moore and others (2009), synthesises frameworks from Dixon (1978), Lloyd and Abrahamson (1979), Miller (1990), Kirkpatrick (1998) and Moore (2003) into an overarching conceptual framework intended to foster meaningful approaches to professional clinical competence and performance. The result is a framework for the assessment of continuous learning consisting of seven levels of outcomes (Table 3). This pyramidal framework notably incorporates Miller's pyramid (Miller 1990), whose four progressive levels of competence differentiate the ways of knowing: ‘knows’, ‘knows how’, ‘shows how’ and ‘does’ (Fig 1). First, a clinician must know what to do; that is, acquire and interpret facts (referred to in Moore and others' [2009] framework as learning: declarative knowledge, level 3A). Second, a clinician knows how to do something; that is, can describe a procedure (learning: procedural knowledge, level 3B). At the next level, a clinician shows how to do something by demonstrating it (competence, level 4). Finally, a clinician does; that is, uses the competence in practice with patients (performance, level 5). Other outcomes frameworks have also incorporated these principles, but less explicitly (Department of Health 2003, AOMRC 2015).

Table 3:

Outcomes framework (devised by Moore and others [2009])

| CPD framework | Description | Data source |
|---|---|---|
| Level 1 – participation | Number of physicians and health care professionals who participated in the CPD activity | Attendance records |
| Level 2 – satisfaction | The degree to which the setting and delivery of the CPD activity met the participants' expectations | Questionnaires completed by attendees following the CPD activity |
| Level 3A – learning: declarative knowledge | The degree to which participants can articulate what the CPD activity intended to convey | Objective: pre- and post-test knowledge. Subjective: self-report of knowledge gain |
| Level 3B – learning: procedural knowledge | The degree to which participants can state how to do what the CPD activity intended them to do | Objective: pre- and post-test knowledge. Subjective: self-report of knowledge gain |
| Level 4 – competence | The degree to which participants demonstrate/show in an educational setting how to do what the CPD activity intended them to be able to do | Objective: observation in an educational setting. Subjective: self-report of competence; intention to change |
| Level 5 – performance | The degree to which participants do what the CPD activity intended them to be able to do in practice | Objective: observation of performance in patient care setting; patient charts; administrative databases. Subjective: self-report of performance |
| Level 6 – patient health | The degree to which the health status of patients improves in response to changes in the practice behaviour of CPD participants | Objective: health status measures recorded in patient charts or administrative databases. Subjective: patient self-report of health status |
| Level 7 – community | The degree to which the health status of a community of patients changes in response to changes in the practice behaviour of CPD participants | Objective: epidemiological data reports. Subjective: community self-report |

FIG 1:

Model for assessing outcomes of CPD activities (adapted from Miller [1990] and Moore and others [2009])
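One way to make the structure of Table 3 and Fig 1 concrete is to encode the seven levels as an ordered type, with Miller's four ways of knowing mapped onto levels 3A to 5. The Python sketch below does this; the `highest_level` helper, which reports the highest level for which an evaluation has gathered evidence, is a hypothetical illustration and is not part of Moore and others' (2009) framework.

```python
from enum import Enum


class MooreLevel(Enum):
    """Outcome levels from Moore and others (2009), defined from lowest to highest."""
    PARTICIPATION = "1"
    SATISFACTION = "2"
    DECLARATIVE_KNOWLEDGE = "3A"
    PROCEDURAL_KNOWLEDGE = "3B"
    COMPETENCE = "4"
    PERFORMANCE = "5"
    PATIENT_HEALTH = "6"
    COMMUNITY = "7"


# Miller's (1990) four ways of knowing, mapped onto Moore levels 3A to 5
MILLER_TO_MOORE = {
    "knows": MooreLevel.DECLARATIVE_KNOWLEDGE,
    "knows how": MooreLevel.PROCEDURAL_KNOWLEDGE,
    "shows how": MooreLevel.COMPETENCE,
    "does": MooreLevel.PERFORMANCE,
}

# Iterating over an Enum yields members in definition order, i.e. lowest to highest
ORDER = list(MooreLevel)


def highest_level(evidenced):
    """Return the highest level for which any evidence exists, or None (hypothetical helper)."""
    if not evidenced:
        return None
    return max(evidenced, key=ORDER.index)


# Example: an evaluation with attendance records and pre/post knowledge tests
print(highest_level({MooreLevel.PARTICIPATION, MooreLevel.DECLARATIVE_KNOWLEDGE}))
```

Ordering by position rather than by the level labels avoids having to compare strings such as "3A" and "3B" with "4".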

Within such a model, it is proposed that several forms of assessment should be used at different stages of a CPD activity, as part of a wider continuous assessment strategy integrated with a strategy for measuring outcomes (Balmer 2013). First, a needs assessment, undertaken before the CPD activity begins and using a gap-analysis approach, is needed to determine what participants know and what they should know. As in other proposed outcomes-based CPD approaches, participants are also required to reflect on their practice to identify their own developmental needs (Department of Health 2003, FGDP 2011, AOMRC 2015). Second, formative assessment should take place during a CPD activity to check that it is on track to achieve the desired results. Proponents of outcomes-based CPD frameworks suggest that formative assessment, incorporating practice and 360-degree feedback sessions, should be a central part of an outcomes approach, so that CPD participants have a supporting framework in which to develop the skills needed to achieve their objectives (Moore and others 2009). Finally, summative assessment can be used at the end of a CPD activity to determine whether it has achieved its objectives. Summative assessment techniques used in CPD programmes to date include self-report questionnaires, knowledge tests and commitment-to-change approaches (with follow-up) (Moore and others 2004, Wakefield 2004).
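Two of these assessment steps reduce to simple calculations, sketched below in Python under stated assumptions: the needs assessment is treated as a set difference between the competencies a participant should have and those already demonstrated, and the summative knowledge test is summarised as the mean paired change between pre- and post-test scores. The function names and the competency labels in the example are hypothetical. A mean gain without a comparison group speaks only to declarative knowledge (level 3A) and does not by itself attribute the change to the CPD activity.

```python
def needs_gap(required, current):
    """Gap analysis: competencies a participant should have but does not yet demonstrate."""
    return sorted(set(required) - set(current))


def mean_knowledge_gain(pre_scores, post_scores):
    """Mean paired change in test score (post minus pre) for participants tested twice."""
    if not pre_scores or len(pre_scores) != len(post_scores):
        raise ValueError("need non-empty, paired pre- and post-test scores")
    return sum(post - pre for pre, post in zip(pre_scores, post_scores)) / len(pre_scores)


# Hypothetical example: needs assessment before the activity...
required = {"fluid therapy", "pain scoring", "antimicrobial stewardship"}
current = {"pain scoring"}
print(needs_gap(required, current))

# ...and a summative knowledge test for three participants afterwards
print(mean_knowledge_gain([50, 60, 70], [65, 70, 75]))
```

Pairing each participant's pre- and post-test scores, rather than comparing group averages from different people, keeps the measured change tied to the individuals who attended.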

While summative assessment techniques are well established and have been shown to be effective at measuring knowledge gains from CPD activities (level 3A of Moore and others' [2009] pyramid) (Confos and others 2003, Leong and others 2010, Domino and others 2011), there is little evidence supporting a definitive strategy for assessing competence, performance and patient health within any proposed outcomes-based framework. This is largely because clinical performance and patient health status are difficult to link to a specific CPD activity.

The Allied Health Professions CPD Outcomes Model (Department of Health 2003) put forward three broad types of evidence for demonstrating competence:

▪ Analogous – evidence rooted in everyday clinical practice;

▪ Analytical – evidence requiring participants to stand back from, and evaluate, their practice; and

▪ Reputational – evidence drawing on verification from participants' colleagues.

Reliable forms of analogous evidence can include observation during practice and feedback during a CPD activity, objective structured clinical examinations, mini-clinical evaluation exercises, oral examinations based on patient cases, fictitious case scenarios and clinician questionnaires (van der Vleuten and Schuwirth 2005). Analytical evidence may include self-audit and the preparation of self-reflective statements; however, self-analytical approaches have been criticised for lacking transparency and placing too much trust in the individual (Bradshaw 1998, Moore and others 2004). Reputational evidence will often involve feedback from colleagues, and reflection on that feedback.

Measurement of performance is necessary in an outcomes model, because what clinicians do in controlled assessment situations correlates poorly with their actual performance in practice (Rethans and others 2002). Performance measurements focus on clinical activities such as screening, evaluation, detection, diagnosis, prevention, development of management plans, prescribing and follow-up. The question in this instance is whether clinical performance improved because of the incorporation of what was learned in a CPD activity; for example, an increase in the appropriate ordering of tests. Measurement could involve chart audit of data sources such as patient health records and administrative databases. Administrative data sources, which typically include information on demographics, diagnoses and procedure codes, have been shown to be effective at determining the impact of CPD on clinical performance (Price and others 2005). A randomised controlled trial has also demonstrated that self-reported commitment to change after a CPD activity, in addition to reinforcing learning, can be an effective way of detecting improvements in clinical performance (Domino and others 2011). Self-report questionnaires to clinicians and patients can supplement these methods but may have credibility issues (Moore and others 2009).
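The chart-audit example of appropriate test ordering can be sketched as a before-and-after comparison. The Python below assumes a hypothetical audit record with three fields (visit date, whether a test was ordered, and whether it was indicated) and treats ordering as appropriate when it matches the indication; real patient records and administrative databases will be structured differently. A before-and-after difference alone cannot separate the effect of CPD from background trends, which is the linking difficulty described above, so a comparison group would normally be needed.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AuditedRecord:
    """One audited patient record (hypothetical fields for illustration)."""
    visit_date: date
    test_ordered: bool
    test_indicated: bool


def appropriate_rate(records):
    """Share of records where a test was ordered if and only if it was indicated."""
    if not records:
        return None
    matches = sum(r.test_ordered == r.test_indicated for r in records)
    return matches / len(records)


def before_after(records, cpd_date):
    """Appropriate-ordering rate for visits before and on/after the CPD activity."""
    before = [r for r in records if r.visit_date < cpd_date]
    after = [r for r in records if r.visit_date >= cpd_date]
    return appropriate_rate(before), appropriate_rate(after)


# Hypothetical audit of four records around a CPD activity on 1 April 2016
records = [
    AuditedRecord(date(2016, 1, 10), test_ordered=True, test_indicated=False),
    AuditedRecord(date(2016, 2, 1), test_ordered=True, test_indicated=True),
    AuditedRecord(date(2016, 5, 3), test_ordered=False, test_indicated=False),
    AuditedRecord(date(2016, 6, 9), test_ordered=True, test_indicated=True),
]
print(before_after(records, date(2016, 4, 1)))
```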

For determining whether the health status of a clinician's patients has improved after the clinician's participation in a CPD activity, patient health records and administrative data have proved successful in supporting research and quality-improvement initiatives (Norcini 2005). Clinician and patient questionnaires are again proposed as useful supplementary measurement tools (Garratt and others 2002).

While some medical professional bodies, such as the Royal College of Surgeons, have not formally implemented an outcomes-based CPD framework akin to that of Moore and others (2009), they do attempt to emphasise the importance of outcomes above the accrual of credits or points, by interlinking their CPD scheme with Good Medical Practice and Good Surgical Practice principles and guidelines (Table 4). Surgeons devise their own CPD activities to address their needs in the context of their current and future career roles, and document the learning achieved, particularly in terms of reinforcing good practice. Documentation can include personal development plans, logbooks, records of CPD activities undertaken and other appropriate CPD evidence, which are collected into a portfolio for independent scrutiny. Scheme planners also stress the value of reflective practice (Schostak and others 2010).

Table 4:

Royal College of Surgeons CPD scheme and classification

| New CPD classification | General Medical Council's principles of professional practice | Examples of related activities | Examples of CPD activities |
|---|---|---|---|
| Clinical | Good clinical care; Maintaining good surgical practice | Clinical skills updating; Patient management and referral; Technical aspects of treatment; Clinical practice within and across teams; Working within guidelines; Record keeping, audit and use of IT | Instructional meetings and lectures; Simulators and workshops; Generic/speciality courses; Clinical audit and research; Multidisciplinary meetings; Journal clubs; Visiting centres of excellence |
| Professional | Relationships with patients; Working with colleagues; Teaching, training and supervising; Probity in professional practice; Health | Communication and interpersonal skills; Teaching and mentoring; Work as a surgical tutor; Regional adviser or postgraduate tutor; Examining; Appraising peers; Ethics and research; Editing and reviewing; Work and representative duties with colleges and specialist associations; Work with government and national agencies; Independent practice; Medico-legal work; University commitment | Multiprofessional meetings; Formal training to teach and educate; Formal training as an examiner; Training in interpersonal skills, committee work etc; IT training; Writing research papers and preparing grant applications |
| Managerial | Lead positions and responsible positions within the service delivering surgical care | Work as clinical lead or medical/surgical director; Clinical governance/effectiveness lead; Cancer lead within a trust; Director of medical education; Membership of speciality training committees etc | Management training; Attendance at specialist conferences and meetings; College and specialist association administrative meetings; Professional visits and exchanges; Chairing meetings/inquiries etc |

Implementation and effectiveness of outcomes-based CPD approaches

The implementation of outcomes-based CPD frameworks is still in its infancy, and there is little published literature describing attempts to determine whether substantial impacts on clinical performance and patient health have occurred in fields administered by professional bodies that have adopted outcomes frameworks.

While the veterinary profession in the UK has yet to implement an overarching outcomes-based CPD framework, the Royal College of Veterinary Surgeons (RCVS) modified the design of its professional Certificate in Advanced Veterinary Practice (CertAVP) to include a compulsory professional key skills (PKS) module, which incorporated an outcomes-focused evaluation approach. In one version of this qualification, the module required participants to submit a series of reflective essays, on which they received formative feedback to encourage the development of iterative skills, literature-sourcing skills and sound judgements based on qualitative evidence. Writing reflective statements gives participants the opportunity to identify personal areas for improvement within a framework that defines broad areas of practice (May and Kinnison 2015).

The content from learning summaries of 12 PKS module participants was evaluated using a methodology similar to Kirkpatrick's (1998) four-level evaluation model (1 Reaction, 2 Learning, 3 Behaviour and 4 Results). Matrices were developed to direct independent coding of content against learning (overarching attitudinal, behavioural and outcomes-related codes). Comparisons of these matrices revealed that participation in the PKS module changed practitioner behaviour which, in turn, positively impacted practice team behaviours and, ultimately, patients and owners (May and Kinnison 2015).

The authors of this study therefore concluded that an individual outcomes-focused approach to CPD within the PKS module, using reflective accounts of participant experiences, can result in changes beyond knowledge gains alone (Sargeant and others 2011). The study also reinforced previous research demonstrating the value of Kirkpatrick's (1998) model for evaluating the impact of learning experiences (Olson and Tooman 2012, May and Kinnison 2015).

Discussion

There is a substantial and increasing volume of evidence that suggests that the most commonly used traditional CPD activities are ineffective at improving practitioner performance and patient health outcomes, when undertaken in isolation within input-based systems. Therefore, the continuation of CPD schemes which do not link activities to outcomes is increasingly untenable given the modern expectations of clients and their political representatives.

A number of the systematic reviews cited in this paper stress that research into the mechanisms by which CPD improves practitioner performance and patient health outcomes needs greater theoretical and methodological sophistication. Nevertheless, the existing reviews provide an evidential consensus that outcomes-focused CPD activities are more likely to be effective at improving practitioner performance and patient health. Although traditional CPD activities can increase knowledge, they are likely to begin to have a positive impact on practitioner performance and patient outcomes only when delivered more interactively, within outcomes-based frameworks that provide supported CPD, recognise individual needs and encourage self-directed learning. Within the veterinary profession, this approach is now beginning to be explored successfully with the new CertAVP, which promotes self-directed learning in practice and encourages participants to take responsibility for their own development.

While there is currently little published literature on the success of overarching outcomes-based CPD schemes, the initial implementation of existing outcomes-focused models in specific areas of medical practice has demonstrated that they can successfully transfer new knowledge into practice. These gains could be increased further if future studies took fuller account of the wider political, social and organisational factors that influence practitioner performance and patient health outcomes (Cervero and Gaines 2014). CPD planners could also benefit from incorporating methodologies from other knowledge transfer initiatives. The field of knowledge translation, which focuses on interventions that enable practitioners to apply best evidence in practice, has developed mostly in parallel with CPD. CPD is an effective vehicle for knowledge transfer, and some CPD and knowledge translation planners are beginning to consider the benefits of bringing the two communities together to identify commonalities and shared gaps, so that opportunities for synergy can be recognised (Légaré and others 2011, Sargeant and others 2011). In human healthcare, it is estimated that 30 to 40 per cent of patients do not receive care informed by the best evidence, and that 20 to 50 per cent receive inappropriate care (Mann and Sargeant 2013). Partnerships between knowledge transfer and CPD are therefore put forward as a way of developing better strategies to enhance the application of evidence in practice and, ultimately, to improve health outcomes for patients.

References

- AOMRC (2015) www.aomrc.org.uk. Accessed September 2, 2016

- BALMER J. T. (2013). The transformation of continuing medical education (CME) in the United States. Advances in Medical Education and Practice 4, 171–182 [DOI] [PMC free article] [PubMed] [Google Scholar]

- BAMRAH J. S., BHUGRA D. (2009) CPD and recertification: improving patient outcomes through focused learning. Advances in Psychiatric Treatment 15, 2–6 [Google Scholar]

- BLOOM B. S. (2005) Effects of continuing medical education in improving physician clinical care and patient health: a review of systematic reviews. International Journal of Technology Assessment in Health Care 21, 380–385 [DOI] [PubMed] [Google Scholar]

- BRADSHAW A. (1998) Defining ‘competency’ in nursing (Part II): an analytical overview. Journal of Clinical Nursing 7, 103–111 [PubMed] [Google Scholar]

- CERVERO R. M., GAINES J. K. (2014) Effectiveness of continuing medical education: updated synthesis of systematic reviews. www.accme.org/sites/default/files/2014_Effectiveness_of_Continuing_Medical_Education_Cervero_and_Gaines_0.pdf. Accessed September 15, 2016

- CONFOS N., FRITH J., MITCHELL P. (2003) Training GPs to screen for diabetic retinopathy. The impact of short term intensive education. Australian Family Physician 32, 381–382 [PubMed] [Google Scholar]

- DAVIS D., O'BRIEN M. A. T., FREEMANTLE N., WOLF F. M., MAZMANIAN P., TAYLOR-VAISEY A. (1999) Impact of formal continuing medical education: do conferences, rounds, and other traditional continuing education activities change physician behaviour or health care outcomes? Journal of the American Medical Association 282, 867–874 [DOI] [PubMed] [Google Scholar]

- DAVIS D. A., THOMSON M. A., OXMAN A. D., HAYNES R. B. (1995) Changing physician performance. A systematic review of the effect of continuing medical education strategies. Journal of the American Medical Association 274, 700–705 [DOI] [PubMed] [Google Scholar]

- DEPARTMENT OF HEALTH (2003) Allied health professions project: demonstrating competence through continuing professional development – final report. http://collections.europarchive.org/tna/20080102105757/dh.gov.uk/en/Consultations/Closedconsultations/DH_4071458. Accessed September 15, 2016

- DIFFORD F. (1992) General practitioners' attendance at courses accredited for the postgraduate education allowance. British Journal of General Practice 42, 290–293 [PMC free article] [PubMed] [Google Scholar]

- DIXON J. (1978) Evaluation criteria in studies of continuing health education in the health professions: a critical review and a suggested strategy. Evaluation and the Health Professions 1, 47–65 [DOI] [PubMed] [Google Scholar]

- DOMINO F. J., CHOPRA S., SELIGMAN M., SULLIVAN K., QUIRK M. E. (2011) The impact on medical practice of commitments to change following CME lectures: a randomised controlled trial. Medical Teacher 33, e495–500 [DOI] [PubMed] [Google Scholar]

- DREXEL C., MERLO K., BASILE J. N., WATKINS B., WHITFIELD B., KATZ J. M., PINE B., SULLIVAN T. (2010) Highly interactive multi-session programs impact physician behaviour on hypertension management: outcomes of a new CME model. Journal of Clinical Hypertension 13, 97–105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- FGDP (2011) Comments to inform the General Dental Council's review of continuing professional development. www.fgdp.org.uk/_assets/pdf/consultation%20responses/gdc%20cpd%20review_fgdp(uk)%20views_final.pdf. Accessed September 15, 2016

- FORSETLUND L., BJØRNDAL A., RASHIDIAN A., JAMTVEDT G., O'BRIEN M. A., WOLF F. &. OTHERS (2009) Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews 2: CD003030 [DOI] [PMC free article] [PubMed] [Google Scholar]

- FRIEDMAN A., WOODHEAD S. (2007) Approaches to CPD measurement research project. Accounting Education: An International Journal 16, 431–432 [Google Scholar]

- GARRATT A. M., SCHMIDT L., FITZPATRICK R. (2002) Patient-assessed health outcome measures for diabetes: a structured review. Diabetic Medicine 19, 1–11 [DOI] [PubMed] [Google Scholar]

- GOULET F., GAGNON R. J., DESROSLERS G., JACQUES A., SINDON A. (1998) Participation in CME activities. Canadian Family Physician 44, 541–548 [PMC free article] [PubMed] [Google Scholar]

- GRANT J., STANTON F. (2001) The effectiveness of continuing professional development. Postgraduate Medical Journal 77, 551–552 [Google Scholar]

- HAYNES R. B., DAVIS D. A., MCKIBBON A., TUGWELL P. (1984) A critical appraisal of the efficacy of continuing medical education. Journal of the American Medical Association 251, 61–64 [PubMed] [Google Scholar]

- IAESB (2008) Approaches to continuing professional development (CPD) measurement: International Accounting Education Standards Board Information Paper. www.ifac.org/sites/default/files/publications/files/approaches-to-continuing-pr.pdf. Accessed September 2, 2016

- JOHNSTON M. E., LANGTON K. B., HAYNES R. B., MATHIEU A. (1994) Effects of computer-based clinical decision support systems on clinician performance and patient outcome: a critical appraisal of research. Annals of Internal Medicine 120, 135–142

- JONES R., JENKINS F. (2006) Developing the Allied Health Professional. Radcliffe Publishing

- KIRKPATRICK D. (1998) Evaluating Training Programs: The Four Levels. 2nd edn. Jossey-Bass

- LÉGARÉ F., BORDUAS F., MACLEOD T., SKETRIS I., CAMPBELL B., JACQUES A. (2011) Partnerships for knowledge translation and exchange in the context of continuing professional development. Journal of Continuing Education in the Health Professions 31, 181–187

- LEONG L., NINNIS J., SLATKIN N., RHINER M., SCHROEDER L., PRITT B. & OTHERS (2010) Evaluating the impact of pain management (PM) education on physician practice patterns – a continuing medical education (CME) outcomes study. Journal of Cancer Education 25, 224–228

- LLOYD J. S., ABRAHAMSON S. (1979) Effectiveness of continuing medical education: a review of the evidence. Evaluation and the Health Professions 2, 251–280

- LOUGHEED M. D., MOOSA D., FINLAYSON S., HOPMAN W. M., QUINN M., SZPIRO K., REISMAN J. (2007) Impact of a provincial asthma guidelines continuing medical education project: the Ontario Asthma Plan of Action's Provider Education in Asthma Care Project. Canadian Respiratory Journal 14, 111–117

- MANN K. V., LINDSAY E. A., PUTNAM R. W., DAVIS D. A. (1997) Increasing physician involvement in cholesterol-lowering practices: the role of knowledge, attitudes and perceptions. Advances in Health Sciences Education: Theory and Practice 2, 237–253

- MANN K. V., SARGEANT J. M. (2013) Continuing professional development. In Oxford Textbook of Medical Education. Ed Walsh K. Oxford University Press; pp 350–361

- MARINOPOULOS S. S., DORMAN T., RATANAWONGSA N., WILSON L. M., ASHAR B. H., MAGAZINER S. L. & OTHERS (2007) Effectiveness of Continuing Medical Education. http://archive.ahrq.gov/downloads/pub/evidence/pdf/cme/cme.pdf. Accessed September 15, 2016

- MAY S. A., KINNISON T. (2015) Continuing professional development: learning that leads to change in individual and collective clinical practice. Veterinary Record doi:10.1136/vr.103109

- MILLER G. E. (1990) The assessment of clinical skills/competence/performance. Academic Medicine 65 (9 Suppl), S63–S67

- MOORE D. E. (2003) A framework for outcomes evaluation in the continuing professional development of physicians. In The Continuing Professional Development of Physicians: From Research to Practice. Eds Davis D., Barnes B. E., Fox R. American Medical Association Press; pp 249–274

- MOORE D. A., GILBERT R. O., THATCHER W., SANTOS J. E., OVERTON M. W. (2004) Levels of continuing medical education program evaluation: assessing a course on dairy reproductive management. Journal of Veterinary Medical Education 42, 146–155

- MOORE D. E., GREEN J. S., GALLIS H. A. (2009) Achieving desired results and improved outcomes: Integrating planning and assessment throughout learning activities. Journal of Continuing Education in the Health Professions 29, 1–15

- NORCINI J. J. (2005) Current perspectives in assessment: the assessment of performance at work. Medical Education 39, 880–889

- O'BRIEN M. A., FREEMANTLE N., OXMAN A. D., WOLF F., DAVIS D. A., HERRIN J. (2001) Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews 1, CD003030

- OLSON C. A., TOOMAN T. R. (2012) Didactic CME and practice change: don't throw that baby out quite yet. Advances in Health Sciences Education 17, 441–451

- PRICE D. W., XU S., MCCLURE D. (2005) Effect of CME on primary care and OB/GYN treatment of breast masses. Journal of Continuing Education in the Health Professions 25, 240–247

- RETHANS J. J., NORCINI J. J., BARÓN-MALDONADO M., BLACKMORE D., JOLLY B. C., LADUCA T. & OTHERS (2002) The relationship between competence and performance: implications for assessing practice performance. Medical Education 36, 901–909

- SARGEANT J., BORDUAS F., SALES A., KLEIN D., LYNN B., STENERSON H. (2011) CPD and KT: models used and opportunities for synergy. Journal of Continuing Education in the Health Professions 31, 167–173

- SCHOSTAK J., DAVIS M., HANSON J., SCHOSTAK J., BROWN T., DRISCOLL P. & OTHERS (2010) The effectiveness of continuing professional development. www.gmc-uk.org/Effectiveness_of_CPD_Final_Report.pdf_34306281.pdf. Accessed September 15, 2016

- VAN DER VLEUTEN C. P. M., SCHUWIRTH L. W. T. (2005) Assessing professional competence: from methods to programmes. Medical Education 39, 309–317

- WAKEFIELD J. (2004) Commitment to change: exploring its role in changing physician behaviour through continuing education. Journal of Continuing Education in the Health Professions 24, 197–204

- WENSING M., GROL R. (1994) Single and combined strategies for implementing changes in primary care: a literature review. International Journal for Quality in Health Care 6, 115–132