Abstract

Facial color varies depending on emotional state, and emotions are often described in relation to facial color. In this study, we investigated whether the recognition of facial expressions was affected by facial color and vice versa. In the facial expression task, two expression morph continua were employed: fear-anger and sadness-happiness. The morphed faces were presented in three different facial colors (bluish, natural, and reddish). Participants identified each face as one of the two endpoint expressions (e.g., fear or anger) regardless of its facial color. The results showed that the perception of facial expression was influenced by facial color. In the fear-anger morphs, intermediate morphs of reddish-colored and bluish-colored faces had a greater tendency to be identified as angry and fearful faces, respectively. In the facial color task, two bluish-to-reddish facial color continua were presented in three different facial expressions (fear-neutral-anger and sadness-neutral-happiness). Participants judged whether the facial color was reddish or bluish regardless of its expression. Faces with a sad expression tended to be identified as more bluish, whereas the other expressions did not affect facial color judgment. These results suggest that an interactive but disproportionate relationship exists between facial color and expression in face perception.

Facial color is suggestive of emotional states, as expressed in the phrases “red with anger” and “white with fear”. Physiological responses such as heart rate, blood pressure, and skin temperature depend on affective states, as reviewed by Kreibig1. Several physiological studies have demonstrated that human facial color also varies with emotional state. The face often flushes during anger2,3,4 or pleasure5, and sometimes goes pale during fear or fear mixed with anger4,6. Therefore, facial color may play a role in interpreting an individual’s emotions from his or her face. In a study by Drummond6, participants answered questionnaire items associating facial color with emotion. The respondents associated flushing with anger and pallor with fear. They also reported a propensity for facial flushing, which was linked with blushing, or a propensity for pallor across a range of threatening and distressing situations. These results suggest that people associate facial color with emotion and vice versa. Facial color is also attracting attention in computer vision, a field that develops techniques for understanding the visual world through imaging devices such as video cameras. For example, Ramirez et al.7 found that facial skin color is a reliable feature for detecting emotional valence with high accuracy.

Facial color provides useful clues for estimating an individual’s mental or physical condition. Several studies suggest that the evolution of trichromacy is related to the detection of social signals or of predators8,9,10, although there are other theories of the evolution of color vision, such as the fruit theory11 and the young leaf theory12. Changizi et al.9 proposed that color vision in primates was selected for discriminating skin color modulations, presumably for the purpose of discriminating emotional states, socio-sexual signals, and threat displays. Tan and Stephen13 suggested that humans are highly sensitive to facial color, as the participants in their study could discriminate color differences in face photographs but not in skin-colored patches. Several behavioral studies have shown that facial skin color is related to the perception of age, sex, health condition, and attractiveness of the face14,15,16,17,18,19. Facial skin color distribution, independent of facial shape-related features, has a significant influence on the perception of female facial age, attractiveness, and health17. Stephen et al.19 instructed participants to manipulate the skin color in photographs of faces to enhance the appearance of health; participants increased skin redness, suggesting that facial color may play a role in the perception of health in human faces. Similarly, increased skin redness enhances the perceived dominance, aggression, and attractiveness of male faces viewed by female participants, which suggests that facial redness is perceived as conveying similar information about male qualities20. Thus, facial color conveys important information that facilitates social communication.

Few studies have examined the relationship between facial color and expression at the level of face perception21,22. Song et al.23 reported that emotional expressions can bias the judgment of facial brightness. However, there is insufficient evidence that facial color (chromaticity) contributes to the perception of emotion (e.g., a flushing face looks angrier). Therefore, in the present study, we investigated whether facial color affects the perception of facial expression. Moreover, we examined the opposite effect, that is, whether facial expression affects facial color perception, and considered the relationship between facial color and expression at the level of face perception.

Materials and Methods

Participants

Twenty healthy participants (10 females) took part in experiment 1 (mean age = 23.30 years, S.D. = 3.40), and twenty healthy participants (10 females) took part in experiment 2 (mean age = 23.90 years, S.D. = 3.81). Seventeen of the 20 participants in experiment 2 had also taken part in experiment 1, approximately 2 months earlier. All participants were right-handed according to the Edinburgh handedness inventory24 and had normal or corrected-to-normal visual acuity. According to self-report, none of the participants had color blindness. All participants provided written informed consent. The experimental procedures were approved by the Committee for Human Research at the Toyohashi University of Technology, and the experiment was conducted in strict accordance with the committee’s approved guidelines.

Stimuli

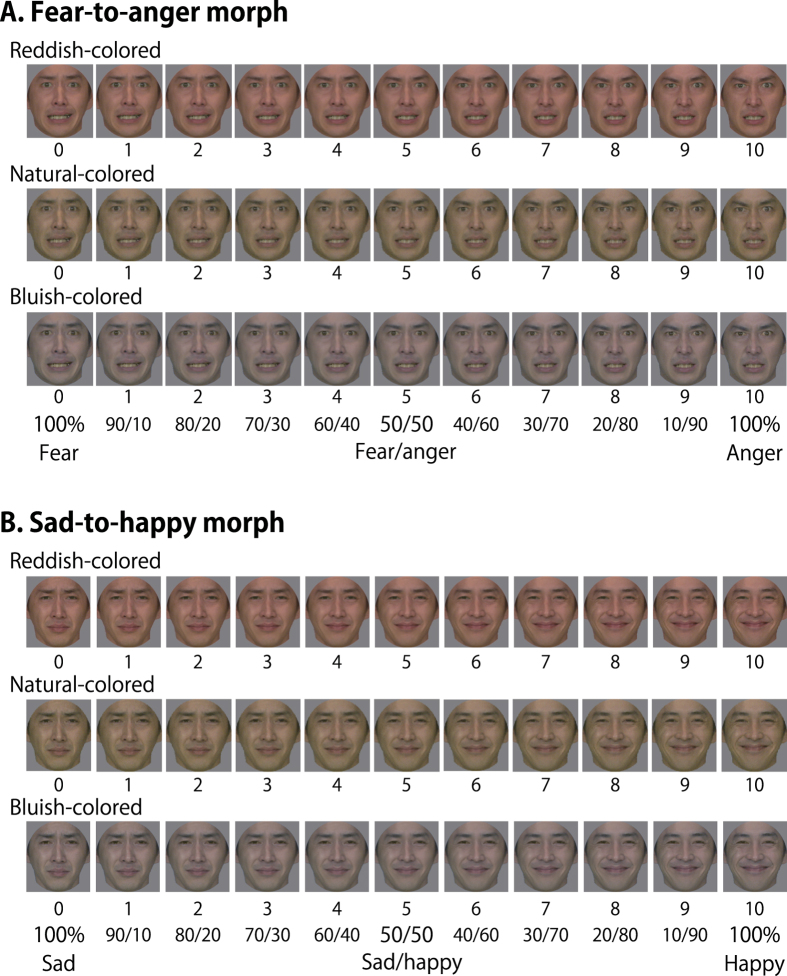

For experiment 1, color images of emotional faces (one female and one male model, each posing fearful, angry, sad, and happy expressions) were taken from the ATR Facial Expression Image Database (DB99) (ATR-Promotions, Kyoto, Japan, http://www.atr-p.com/face-db.html). The database contains 10 types of facial expressions from 4 female and 6 male Asian models. It also includes the results of a psychological evaluation experiment (not published) that tested the validity of the database. External features (i.e., neck, ears, and hairline) were removed from the face images using Photoshop CS2 (Adobe Systems Inc., San Jose, CA, USA). We created differently colored versions of each face image by manipulating the CIELab a* (red-green) or b* (yellow-blue) values of the skin area. The CIELab color space is modeled on the human visual system and designed to be perceptually uniform; therefore, a given change in any component value produces approximately the same perceptible change in color anywhere in the three-dimensional space25. There were three facial color conditions: reddish-colored (+12 units of a*), bluish-colored (−12 units of b*), and natural-colored (not manipulated). Expression continua were created by morphing between two different expressions of the same identity within the same facial color condition in 10 equal steps (11 morph increments, 0 to 10), using SmartMorph software (MeeSoft, http://meesoft.logicnet.dk/). Two pairs of expressions (fear-to-anger and sadness-to-happiness) were selected for morphing because each pair can reasonably be associated with different facial colors: fear and sadness could be linked to a bluish face, and anger and happiness to a reddish face (e.g. ref. 26). In total, 132 images were used in this experiment (2 expression morph continua × 3 facial colors × 2 models (one female) × 11 morph increments). All face images were 219 × 243 pixels (11.0° × 12.2° of visual angle). Images were normalized for mean luminance and root mean square (RMS) contrast, and presented at the center of a neutral gray background. Figure 1 shows examples of the morph continua.
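The a*/b* manipulation can be illustrated for a single pixel. The sketch below is a minimal illustration under stated assumptions: pixel values are sRGB in the range 0 to 1 with a D65 white point, and the standard sRGB/XYZ matrices are used. The study does not state which color conversion or software performed the shift, the skin mask is omitted, and the luminance/RMS-contrast normalization is not shown. The function names and the skin pixel value are hypothetical.

```python
# Minimal sketch (assumptions: sRGB primaries, D65 white, channel values 0-1).
# Not the authors' pipeline: it only shows shifting a* or b* by fixed CIELab units.

_M = ((0.4124564, 0.3575761, 0.1804375),      # linear sRGB -> XYZ (D65)
      (0.2126729, 0.7151522, 0.0721750),
      (0.0193339, 0.1191920, 0.9503041))
_M_INV = ((3.2404542, -1.5371385, -0.4985314),  # XYZ -> linear sRGB
          (-0.9692660, 1.8760108, 0.0415560),
          (0.0556434, -0.2040259, 1.0572252))
_WHITE = (0.95047, 1.0, 1.08883)  # D65 reference white (X, Y, Z)
_DELTA = 6 / 29


def _mat_vec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def _to_linear(c):
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _to_gamma(c):
    c = 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055
    return min(1.0, max(0.0, c))  # clip to the displayable range


def _f(t):
    return t ** (1 / 3) if t > _DELTA ** 3 else t / (3 * _DELTA ** 2) + 4 / 29


def _f_inv(t):
    return t ** 3 if t > _DELTA else 3 * _DELTA ** 2 * (t - 4 / 29)


def srgb_to_lab(rgb):
    x, y, z = _mat_vec(_M, tuple(_to_linear(c) for c in rgb))
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), _WHITE))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def lab_to_srgb(lab):
    l_star, a_star, b_star = lab
    fy = (l_star + 16) / 116
    fx, fz = fy + a_star / 500, fy - b_star / 200
    xyz = tuple(w * _f_inv(f) for f, w in zip((fx, fy, fz), _WHITE))
    return tuple(_to_gamma(c) for c in _mat_vec(_M_INV, xyz))


def shift_color(rgb, delta_a=0.0, delta_b=0.0):
    """Shift a pixel's a* (red-green) and/or b* (yellow-blue), keeping L* fixed."""
    l_star, a_star, b_star = srgb_to_lab(rgb)
    return lab_to_srgb((l_star, a_star + delta_a, b_star + delta_b))


skin = (0.85, 0.65, 0.55)                  # hypothetical skin pixel
reddish = shift_color(skin, delta_a=12.0)  # +12 units of a* (reddish condition)
bluish = shift_color(skin, delta_b=-12.0)  # -12 units of b* (bluish condition)
```

Because L* is held fixed, the +12 a* shift raises the red channel and lowers the green channel without changing CIELab lightness. Colors pushed outside the sRGB gamut are clipped, which would break that equality for strongly saturated pixels.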

Figure 1. Examples of the morph continua for the three facial color conditions used in experiment 1.

(A) Fear-to-anger and (B) sadness-to-happiness. Color images of an emotional face were taken from the ATR Facial Expression Image Database (DB99) (ATR-Promotions, Kyoto, Japan, http://www.atr-p.com/face-db.html).

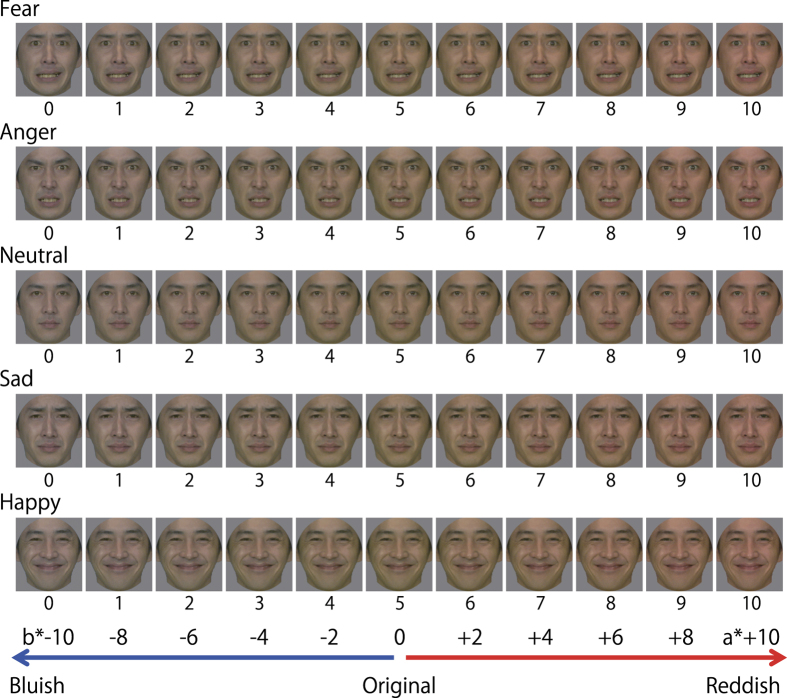

For experiment 2, the colored emotional face stimuli were the same as those used in experiment 1, except that a neutral expression was also included. We created an 11-step (0 to 10) facial color continuum for each of the five expressions (fearful, angry, sad, happy, and neutral) by manipulating the CIELab a* and b* values of the skin area. Step 5 was the original face image color (no color manipulation). From step 5 toward step 0, b* was reduced in increments of 2 units, so that step 0, the most bluish-colored face, had b* reduced by 10 units. From step 5 toward step 10, a* was increased in increments of 2 units, so that step 10, the most reddish-colored face, had a* increased by 10 units. In total, 110 images were used in this experiment (5 expressions × 2 models (one female) × 11 facial color steps). All face images were the same size as in experiment 1. Figure 2 shows examples of the facial color continua.
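The step-to-offset mapping of the experiment 2 continuum can be written out explicitly. This sketch encodes only what the text states (b* lowered on the bluish side, a* raised on the reddish side, 2 units per step, step 5 unmodified) and enumerates the stimulus set to confirm the total of 110 images; the names `color_step_offsets`, `EXPRESSIONS`, and `MODELS` are hypothetical.

```python
from itertools import product

EXPRESSIONS = ("fearful", "angry", "sad", "happy", "neutral")
MODELS = ("female", "male")
STEPS = range(11)  # 0 = most bluish, 5 = original color, 10 = most reddish


def color_step_offsets(step):
    """Return the (delta a*, delta b*) applied at a given continuum step."""
    if step not in STEPS:
        raise ValueError(f"step must be 0-10, got {step}")
    if step < 5:
        return 0.0, -2.0 * (5 - step)  # bluish side: lower b* by 2 units per step
    return 2.0 * (step - 5), 0.0       # reddish side: raise a* by 2 units per step


stimuli = [(expr, model, step, *color_step_offsets(step))
           for expr, model, step in product(EXPRESSIONS, MODELS, STEPS)]
print(len(stimuli))  # 110
print(color_step_offsets(0), color_step_offsets(5), color_step_offsets(10))
# (0.0, -10.0) (0.0, 0.0) (10.0, 0.0)
```

Each offset pair could then be applied to the skin area with a per-pixel CIELab shift such as an a*/b* adjustment at fixed L*.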

Figure 2. Examples of the facial color continua for the five expressions used in experiment 2.

Color images of an emotional face were taken from the ATR Facial Expression Image Database (DB99) (ATR-Promotions, Kyoto, Japan, http://www.atr-p.com/face-db.html).

Procedure

Experiment 1 was performed in four blocks: (1) a fear-to-anger block with a male face; (2) a fear-to-anger block with a female face; (3) a sadness-to-happiness block with a male face; and (4) a sadness-to-happiness block with a female face. Each block consisted of three morph continua that differed in facial color, giving 33 images (3 facial colors × 11 morph increments). Each trial began with a fixation period of 250 ms, followed by a blank interval of 250 ms, after which an expression-morphed face was presented for 300 ms. Participants were asked to identify the expression of the face, regardless of its facial color, as quickly and accurately as possible by pressing one of two buttons. The two response alternatives were the endpoints of the morph continuum, i.e., fear or anger in blocks 1 and 2, and sadness or happiness in blocks 3 and 4. After the face, a white square was presented for 1,700 ms. Each face was presented 8 times in random order, resulting in a total of 264 trials (3 facial colors × 11 morph increments × 8 repetitions) per block. The four blocks were run in random order for each participant.
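The block and trial structure of experiment 1 can be sketched as a trial-list generator. The timing constants, the 33 condition/level combinations, the 8 repetitions, and the randomized block order come from the text; shuffling the full 264-trial list uniformly is an assumption about what "random order" meant, and all names here are hypothetical.

```python
import random
from itertools import product

# Trial timing reported for experiment 1 (ms)
FIXATION_MS, BLANK_MS, FACE_MS, WHITE_SQUARE_MS = 250, 250, 300, 1700
FACIAL_COLORS = ("reddish", "bluish", "natural")
MORPH_LEVELS = range(11)  # 0 = first endpoint (e.g., fear), 10 = second (e.g., anger)
BLOCKS = (("fear-anger", "male"), ("fear-anger", "female"),
          ("sadness-happiness", "male"), ("sadness-happiness", "female"))


def make_block_trials(conditions=FACIAL_COLORS, levels=MORPH_LEVELS,
                      repetitions=8, rng=None):
    """Return a shuffled list of (condition, level) trials for one block."""
    if rng is None:
        rng = random.Random()
    trials = list(product(conditions, levels)) * repetitions
    rng.shuffle(trials)  # assumption: full random permutation within the block
    return trials


def make_session(rng=None):
    """Shuffle the block order, then build a trial list for each block."""
    if rng is None:
        rng = random.Random()
    order = list(BLOCKS)
    rng.shuffle(order)
    return [(block, make_block_trials(rng=rng)) for block in order]


session = make_session(random.Random(0))
print(len(session), len(session[0][1]))  # 4 264
```

For experiment 2, the same generator could be called with the three expression conditions as `conditions` and the 11 facial color steps as `levels`, which again yields 264 trials per block.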

In experiment 2, we defined three facial expression conditions based on the association between facial color and expression: reddish-associated (anger, happiness), bluish-associated (fear, sadness), and neutral. We used two expression sets, each containing one expression from each condition: fear-neutral-anger and sadness-neutral-happiness. The experiment was performed in four blocks: (1) a fear-neutral-anger block with a male face; (2) a fear-neutral-anger block with a female face; (3) a sadness-neutral-happiness block with a male face; and (4) a sadness-neutral-happiness block with a female face. Each block consisted of three facial color continua that differed in facial expression. Each face was presented 8 times in random order, resulting in a total of 264 trials (3 facial expressions × 11 facial color steps × 8 repetitions) per block. The procedure was identical to that of experiment 1, except for the participants’ task: participants were asked to judge whether the facial color was ‘reddish’ or ‘bluish’, regardless of its expression, as quickly and accurately as possible by pressing one of two buttons.

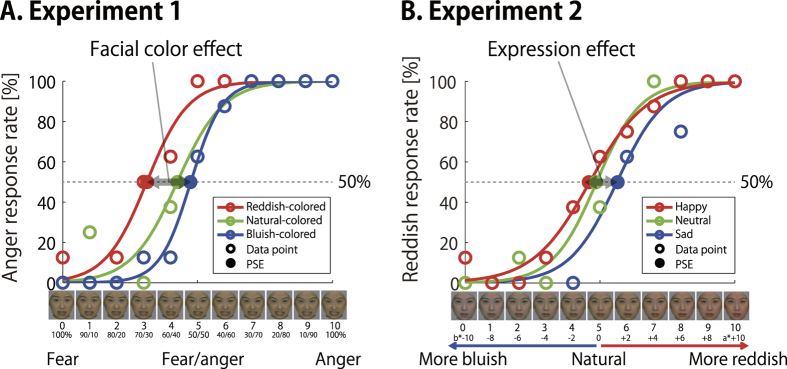

Data analysis

The expression identification rate (experiment 1), the facial color identification rate (experiment 2), and mean response times were computed for each face stimulus. Each participant’s expression identification rates and facial color identification rates were fitted with a psychometric function using a generalized linear model with a binomial distribution in Matlab software (MathWorks, Natick, MA, USA) (Fig. 3). To compare the effect of facial color on expression identification and the effect of expression on facial color identification, the point of subjective equality (PSE) was computed and analyzed with a repeated-measures analysis of variance (ANOVA) for each morph condition (fear-to-anger and sadness-to-happiness) or each expression set (fear-neutral-anger and sadness-neutral-happiness). In experiment 1, the PSE was the level of the expression continuum at which both expressions were equally likely to be chosen, that is, the 50% point of the psychometric function (Fig. 3A). In experiment 2, the PSE was the level of the facial color continuum at which ‘reddish’ and ‘bluish’ responses were equally likely (Fig. 3B). Facial color (reddish-colored, bluish-colored, and natural-colored) was the within-subject factor in experiment 1, and facial expression (a reddish-associated expression (anger or happiness), a bluish-associated expression (fear or sadness), and a neutral expression) was the within-subject factor in experiment 2. Post-hoc comparisons were performed with the Bonferroni method. These statistical analyses were performed using SPSS software (IBM, Armonk, NY, USA).

Figure 3. Psychometric function and PSE of a representative participant.

We computed the PSE for each facial color/expression condition to measure the shift in the psychometric function along the x-axis caused by facial color/expression. (A) An example for the fear-to-anger female face continua used in experiment 1. The x-axis shows the morph continuum and the y-axis shows the percentage of trials on which the participant (Subject H.N.) judged the facial expression as angry. (B) An example for the fear-neutral-anger female face continua used in experiment 2. The x-axis shows the facial color continuum and the y-axis shows the percentage of trials on which the participant (Subject H.T.) judged the facial color as reddish. Color images of an emotional face were taken from the ATR Facial Expression Image Database (DB99) (ATR-Promotions, Kyoto, Japan, http://www.atr-p.com/face-db.html).

We analyzed response times (RTs) using a linear mixed-effects (lme) model with participants as random effects. In experiment 1, the fixed effects were facial color (three levels) and morph level toward anger (or happiness) (0 to 10). In experiment 2, the fixed effects were facial expression (three levels) and facial color level (0 to 10). Test statistics and degrees of freedom for the mixed models were estimated using the Kenward-Roger approximation with the package “lmerTest”27. Effect sizes were calculated as marginal and conditional coefficients of determination (R2m and R2c) using the package “MuMIn”28.
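The marginal and conditional R² values can be understood through the Nakagawa and Schielzeth definitions, which MuMIn’s `r.squaredGLMM` reports for mixed models. For a Gaussian model, R2m is the share of total variance explained by the fixed effects, and R2c is the share explained by fixed plus random effects. The sketch below only evaluates these ratios from already-estimated variance components; it does not fit the model, and the variance values in the example are hypothetical rather than taken from this study.

```python
from statistics import variance


def nakagawa_r2(fixed_predictions, random_effect_variances, residual_variance):
    """Marginal and conditional R^2 for a Gaussian linear mixed model.

    fixed_predictions: fitted values from the fixed effects only (X @ beta).
    random_effect_variances: variance components of the random effects.
    residual_variance: residual (observation-level) variance.
    """
    var_fixed = variance(fixed_predictions)      # sample variance, like R's var()
    var_random = sum(random_effect_variances)
    total = var_fixed + var_random + residual_variance
    r2_marginal = var_fixed / total                    # fixed effects only
    r2_conditional = (var_fixed + var_random) / total  # fixed + random effects
    return r2_marginal, r2_conditional


# Hypothetical variance components (not values from this study)
r2m, r2c = nakagawa_r2([-1.0, 0.0, 1.0], [10.0], 7.0)
print(round(r2m, 3), round(r2c, 3))  # 0.056 0.611
```

A small R2m paired with a much larger R2c, as in the RT models reported below, indicates that most of the explained RT variance comes from between-participant differences rather than from the fixed effects.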

Results

Point of subjective equality and reaction times in experiment 1

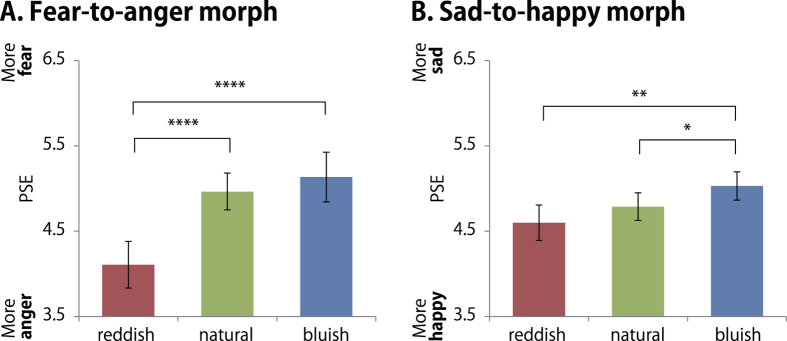

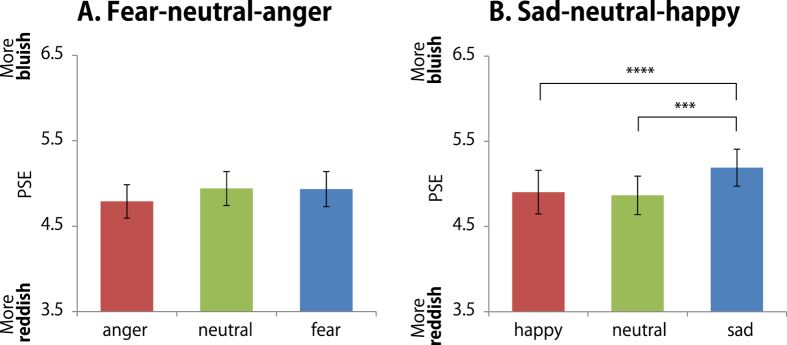

The average PSEs for the fear-to-anger and sadness-to-happiness continua are shown in Fig. 4. For the fear-to-anger continua, a higher PSE indicates that the participants judged the continuum as more fearful (i.e., a face had to be closer to the anger endpoint before it was judged angry), while a lower PSE indicates that they judged it as angrier (Fig. 4A). For the sadness-to-happiness continua, a higher PSE indicates that the participants judged the continuum as sadder, while a lower PSE indicates that they judged it as happier (Fig. 4B). Data from two participants (one male) were removed from the group analysis because they showed a remarkably different pattern of expression identification, and thus their PSEs could not be calculated. As a result, the group analysis was performed on data from 18 participants.

Figure 4. The PSEs for the facial expression discrimination task in experiment 1.

(A) Fear-to-anger continua. (B) Sadness-to-happiness continua. The error bars show the standard error of the mean of the PSEs across participants. Asterisks indicate a significant difference as a result of multiple comparison test for a facial color main effect; *p < 0.05, **p < 0.01, ***p < 0.005, ****p < 0.001.

For the fear-to-anger continua, we found a significant main effect of facial color on PSE [F(2, 34) = 18.954; p < 0.001; partial η2 = 0.527]: the PSE for reddish-colored faces was lower than that for bluish- and natural-colored faces [both p < 0.001], with no difference between bluish- and natural-colored faces [p = 1] (Fig. 4A). These results indicate that the participants judged the reddish-colored face continuum as angrier than the bluish- and natural-colored continua.
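For a one-way repeated-measures ANOVA, the reported degrees of freedom and effect sizes can be cross-checked with simple arithmetic. The error degrees of freedom are (k − 1)(n − 1) for k conditions and n participants, and because F = (SS_effect/df_effect)/(SS_error/df_error), partial η² = SS_effect/(SS_effect + SS_error) can be recovered as F·df_effect/(F·df_effect + df_error). The sketch assumes uncorrected degrees of freedom, as reported here; the function names are hypothetical.

```python
def rm_anova_error_df(n_subjects, n_levels):
    """Error df for a one-way repeated-measures ANOVA (no sphericity correction)."""
    return (n_levels - 1) * (n_subjects - 1)


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# Experiment 1: 18 participants, 3 facial colors -> F(2, 34)
df_err_exp1 = rm_anova_error_df(18, 3)
print(df_err_exp1)                                            # 34
print(round(partial_eta_squared(18.954, 2, df_err_exp1), 3))  # 0.527 (fear-to-anger)
print(round(partial_eta_squared(8.39, 2, df_err_exp1), 3))    # 0.33 (sadness-to-happiness)
# Experiment 2: 19 participants, 3 expressions -> F(2, 36)
print(round(partial_eta_squared(9.090, 2, rm_anova_error_df(19, 3)), 3))  # 0.336
```

The recovered values agree with the partial η² reported for both experiments, which confirms that the F ratios, degrees of freedom, and effect sizes are mutually consistent.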

In the sadness-to-happiness continua, we found a significant main effect of facial color on PSE [F(2, 34) = 8.39; p < 0.005; partial η2 = 0.330]: the PSE for bluish-colored faces was higher than that for reddish- and natural-colored faces [p < 0.01 and p < 0.05, respectively], with no difference between reddish- and natural-colored faces [p > 0.3] (Fig. 4B). These results indicate that the participants judged the bluish-colored face continuum as sadder than the reddish- and natural-colored continua.

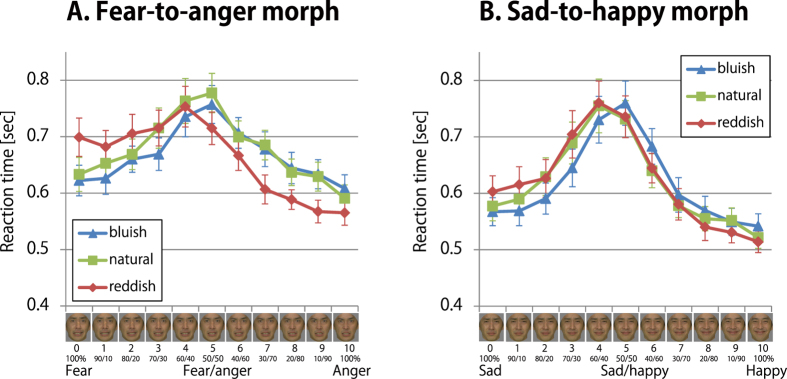

Regarding reaction times in the fear-to-anger continua [R2m = 0.055 and R2c = 0.642], there was a significant main effect of expression morph increment on RT [F(1, 537) = 48.77; p < 0.001] (Fig. 5A). We also found a significant main effect of facial color on RT [F(2, 395.18) = 12.09; p < 0.001]: RTs for reddish-colored faces were shorter than those for natural-colored faces [p < 0.05]. In addition, there was a significant expression morph increment × facial color interaction [F(2, 537) = 19.3; p < 0.001]. We found a simple main effect of morph increment for the reddish-colored faces [F(1, 179) = 88.42; p < 0.001, R2m = 0.144, R2c = 0.678] and the natural-colored faces [F(1, 179) = 5.659; p < 0.05, R2m = 0.012, R2c = 0.583]. In short, the interaction indicates that morph increment affected RTs for the reddish- and natural-colored faces, but not for the bluish-colored faces (see Fig. 5A).

Figure 5. The RTs for the facial expression discrimination task in experiment 1.

(A) Fear-to-anger continua. (B) Sadness-to-happiness continua. The error bars show the standard error of the mean of the RTs across participants. Color images of an emotional face were taken from the ATR Facial Expression Image Database (DB99) (ATR-Promotions, Kyoto, Japan, http://www.atr-p.com/face-db.html).

In the sadness-to-happiness continua [R2m = 0.040 and R2c = 0.537], there was also a significant main effect of expression morph increment on RT [F(1, 537) = 42.36; p < 0.001] (Fig. 5B). There was a significant main effect of facial color on RT [F(2, 395.18) = 3.694; p < 0.05], but no significant difference was found between the conditions (p > 0.6). Importantly, we found a significant expression morph increment × facial color interaction [F(2, 571) = 4.008; p < 0.001]. We found a simple main effect of morph increment for the reddish-colored faces [F(1, 179) = 29.48; p < 0.001, R2m = 0.072, R2c = 0.518] and the natural-colored faces [F(1, 179) = 14.92; p < 0.001, R2m = 0.0378, R2c = 0.501].

Point of subjective equality and reaction times in experiment 2

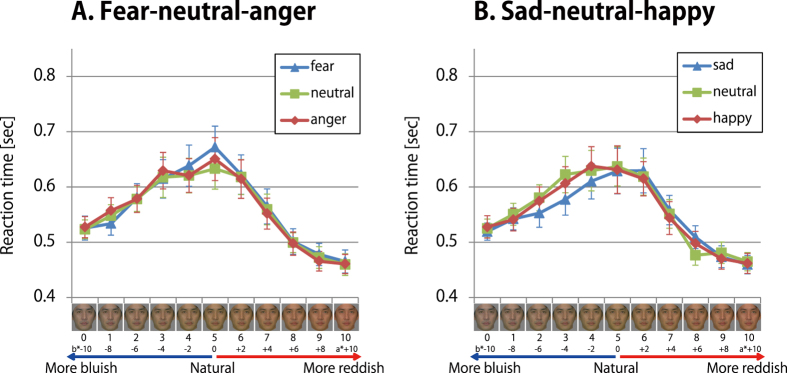

The average PSEs for the fear-neutral-anger and sadness-neutral-happiness sets are shown in Fig. 6. For both expression sets, a higher PSE indicates that the participants judged the facial color continuum as more bluish, while a lower PSE indicates that they judged it as more reddish (Fig. 6). Data from one participant (female) were removed from the group analysis because she showed a remarkably different pattern of facial color identification from the others, and thus her PSE could not be calculated. As a result, the group analysis was performed on data from 19 participants.

Figure 6. The PSEs for the facial color discrimination task in experiment 2.

(A) Fear-neutral-anger set. (B) Sadness-neutral-happiness set. The error bars show the standard error of the mean of the PSEs across participants. Asterisks indicate a significant difference as a result of a multiple comparison test for a main effect for facial expression; *p < 0.05, **p < 0.01, ***p < 0.005, ****p < 0.001.

In the fear-neutral-anger set, there was no significant facial expression main effect on PSE [F(2, 36) = 1.690; p > 0.1; partial η2 = 0.086] (Fig. 6A).

In the sadness-neutral-happiness set, we found a significant facial expression main effect on the PSE [F(2, 36) = 9.090; p < 0.005; partial η2 = 0.336], as the PSE for sad expression faces was higher than that for happy and neutral expression faces [both, p < 0.005], with no difference between happy and neutral expression faces [p = 1] (Fig. 6B).

Regarding reaction times, we found a significant main effect of facial color level on RT for both the fear-neutral-anger set [F(1, 567.0) = 75.46; p < 0.001, R2m = 0.0449, R2c = 0.634] (Fig. 7A) and the sadness-neutral-happiness set [F(1, 567.0) = 68.10; p < 0.001, R2m = 0.0416, R2c = 0.630] (Fig. 7B). However, there was no main effect of facial expression and no interaction for either the fear-neutral-anger set [p = 0.841 and p = 0.720, respectively] or the sadness-neutral-happiness set [p = 0.313 and p = 0.449, respectively].

Figure 7. The RTs for the facial color discrimination task in experiment 2.

(A) Fear-neutral-anger set. (B) Sadness-neutral-happiness set. The error bars show the standard error of the mean of the RTs across participants. Color images of an emotional face were taken from the ATR Facial Expression Image Database (DB99) (ATR-Promotions, Kyoto, Japan, http://www.atr-p.com/face-db.html).

Discussion

The present study investigated the relationship between facial color and expression in face perception. We demonstrated that facial color influences the perception of facial expression, whereas facial expression only slightly affects the perception of facial color. The present results reveal a link between facial color and emotion perception and provide evidence that facial color (chromaticity) contributes to emotion perception. Moreover, our finding that facial color influences facial expression recognition, and to a lesser degree vice versa, partially supports the hypothesis that the evolution of trichromacy is related to the detection of social signals.

In experiment 1, facial color shifted the expression category boundary (PSE) and modulated the reaction times of expression judgment. These results show that reddish-colored faces enhance anger perception and bluish-colored faces enhance sadness perception. In particular, there was a robust facilitative effect of reddish coloring on anger perception, indicating that a reddish-colored face appears angrier. Facial flushing during anger has been reported in physiological studies2,4,29. Although other emotional states such as pleasure5 also induce facial color changes, our results suggest that the perceptual effect of facial color is especially robust for anger perception. In sadness-happiness discrimination, bluish (pale) faces enhanced sadness perception. Alkawaz et al.30 investigated the correlation between blood oxygenation effects on facial skin color and emotional expressions, and showed that sadness produced pale faces, whereas happiness produced reddish faces. In addition, this difference was smaller than that between anger and fear. Their results are consistent with our findings.

In experiment 2, the facial color boundary was significantly shifted only for the sad expression, suggesting that the sad face appeared paler (more bluish). The fear-neutral-anger expression set had no effect on color perception. This disproportionate expression effect may have been due to differences in the emotional information conveyed by the facial expressions. As presented above, distinguishing between fear and anger was more difficult than distinguishing between sadness and happiness; hence, any unconscious expression effect during facial color discrimination might have been weaker in the fear-neutral-anger expression set. Even in the sadness-neutral-happiness set, the expression effect was too small to influence RTs for facial color judgments, in contrast to the effect of facial color on expression RTs observed in experiment 1. These results show that facial expression has a limited effect on facial color perception. Previous electroencephalogram (EEG) studies have shown that facial color is processed at around 170 ms after face onset (N170 stage)31,32, whereas facial expression is generally processed at a later stage of face processing33,34,35,36. This difference in time course may contribute to the asymmetry: facial color information, available early, could influence subsequent expression processing, whereas expression information may arrive too late to substantially alter facial color judgments.

A potential limitation of the present study is that we used only Japanese faces as visual stimuli. Emotion recognition is more accurate when the facial stimuli are of the same race as the perceiver37, and the facial color effect observed in this study may likewise be subject to such cross-race effects.

The present study conducted two psychophysical experiments to investigate the effects of facial color and expression on face perception. We demonstrated that facial color influences the perception of facial expression, whereas facial expression only slightly affects the perception of facial color. Our results suggest an interactive but disproportionate relationship between facial color and expression in face perception, and provide psychophysical evidence for an effect of facial color on facial expression perception. Because all participants in the present study were Japanese, future studies are needed to investigate the interaction between facial color and facial expression in other ethnic groups.

Additional Information

How to cite this article: Nakajima, K. et al. Interaction between facial expression and color. Sci. Rep. 7, 41019; doi: 10.1038/srep41019 (2017).

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Acknowledgments

This work was supported by Grants-in-Aid for Scientific Research from the Japan Society for the Promotion of Science (grant number 26240043) and by the Strategic Information and Communications R&D Promotion Programme (SCOPE) of the Ministry of Internal Affairs and Communications, Japan.

Footnotes

Author Contributions T.M. and S.N. conceived the experiment(s), K.N. conducted the experiment(s), and K.N. and T.M. analyzed the results. All authors reviewed the manuscript.

References

- Kreibig S. D. Autonomic nervous system activity in emotion: A review. Biological Psychology 84, 394–421 (2010).

- Darwin C. The expression of the emotions in man and animals, Vol. 526. (University of Chicago Press, 1965).

- Drummond P. D. & Quah S. H. The effect of expressing anger on cardiovascular reactivity and facial blood flow in Chinese and Caucasians. Psychophysiology 38, 190–196 (2001).

- Montoya P., Campos J. J. & Schandry R. See red? Turn pale? Unveiling emotions through cardiovascular and hemodynamic changes. The Spanish journal of psychology 8, 79–85 (2005).

- Drummond P. D. The effect of anger and pleasure on facial blood flow. Australian Journal of Psychology 46, 95–99 (1994).

- Drummond P. D. Correlates of facial flushing and pallor in anger-provoking situations. Personality and Individual Differences 23, 575–582 (1997).

- Ramirez G. A., Fuentes O., Crites S. L., Jimenez M. & Ordonez J. In Computer Vision and Pattern Recognition Workshops (CVPRW), 2014 IEEE Conference on 474–479 (2014).

- Surridge A. K., Osorio D. & Mundy N. I. Evolution and selection of trichromatic vision in primates. Trends Ecol. Evol. 18, 198–205 (2003).

- Changizi M. A., Zhang Q. & Shimojo S. Bare skin, blood and the evolution of primate colour vision. Biol. Lett. 2, 217–221 (2006).

- Vorobyev M. Ecology and evolution of primate colour vision. Clin Exp Optom 87, 230–238 (2004).

- Allen G. The Colour-sense: Its Origin and Development: An Essay in Comparative Psychology. (Houghton, 1879).

- Lucas P. W., Darvell B. W., Lee P. K., Yuen T. D. & Choong M. F. Colour cues for leaf food selection by long-tailed macaques (Macaca fascicularis) with a new suggestion for the evolution of trichromatic colour vision. Folia Primatol (Basel) 69, 139–152 (1998).

- Tan K. W. & Stephen I. D. Colour detection thresholds in faces and colour patches. Perception 42, 733–741 (2013).

- Bruce V. & Langton S. The use of pigmentation and shading information in recognising the sex and identities of faces. Perception 23, 803–822 (1994).

- Tarr M. J., Kersten D., Cheng Y. & Rossion B. It’s Pat! Sexing faces using only red and green. Journal of Vision 1, 337 (2001).

- Jones B. C., Little A. C., Burt D. M. & Perrett D. I. When facial attractiveness is only skin deep. Perception 33, 569–576 (2004).

- Fink B., Grammer K. & Matts P. Visible skin color distribution plays a role in the perception of age, attractiveness, and health in female faces. Evolution and Human Behavior 27, 433–442 (2006).

- Matts P. J., Fink B., Grammer K. & Burquest M. Color homogeneity and visual perception of age, health, and attractiveness of female facial skin. J Am Acad Dermatol 57, 977–984 (2007).

- Stephen I. D., Smith M. J. L., Stirrat M. R. & Perrett D. I. Facial Skin Coloration Affects Perceived Health of Human Faces. Int J Primatol 30, 845–857 (2009).

- Stephen I. D., Oldham F. H., Perrett D. I. & Barton R. A. Redness enhances perceived aggression, dominance and attractiveness in men’s faces. Evolutionary psychology: an international journal of evolutionary approaches to psychology and behavior 10, 562–572 (2012).

- Yamada T. & Watanabe T. In Robot and Human Interactive Communication, 2004. ROMAN 2004. 13th IEEE International Workshop on 341–346 (IEEE, 2004).

- Yasuda M., Webster S. & Webster M. Color and facial expressions. Journal of Vision 7, 946 (2007).

- Song H., Vonasch A. J., Meier B. P. & Bargh J. A. Brighten up: Smiles facilitate perceptual judgment of facial lightness. Journal of Experimental Social Psychology 48, 450–452 (2012).

- Oldfield R. C. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113 (1971).

- Martinkauppi B. Face Colour Under Varying Illumination: Analysis and Applications. (University of Oulu Finland, 2002).

- Jung Y., Weber C., Keil J. & Franke T. In Intelligent Virtual Agents, Vol. 5773. (eds Ruttkay Z., Kipp M., Nijholt A. & Vilhjálmsson H.) 504–505 (Springer Berlin Heidelberg, 2009).

- Kuznetsova A., Brockhoff P. B. & Christensen R. H. B. Package ‘lmerTest’. R package version, 2.0–29 (2015).

- Barton K. & Barton M. K. Package ‘MuMIn’. Version 1, 18 (2013).

- Drummond P. D. & Quah S. H. The effect of expressing anger on cardiovascular reactivity and facial blood flow in Chinese and Caucasians. Psychophysiology 38, 190–196 (2001).

- Alkawaz M. H., Mohamad D., Saba T., Basori A. H. & Rehman A. The Correlation Between Blood Oxygenation Effects and Human Emotion Towards Facial Skin Colour of Virtual Human. 3d Res 6 (2015).

- Minami T., Goto K., Kitazaki M. & Nakauchi S. Effects of color information on face processing using event-related potentials and gamma oscillations. Neuroscience 176, 265–273 (2011).

- Nakajima K., Minami T. & Nakauchi S. The face-selective N170 component is modulated by facial color. Neuropsychologia 50, 2499–2505 (2012).

- Rellecke J., Sommer W. & Schacht A. Emotion Effects on the N170: A Question of Reference? Brain topography 26, 62–71 (2013).

- Rellecke J., Sommer W. & Schacht A. Does processing of emotional facial expressions depend on intention? Time-resolved evidence from event-related brain potentials. Biological psychology 90, 23–32 (2012).

- Eimer M. & Holmes A. An ERP study on the time course of emotional face processing. Neuroreport 13, 427–431 (2002).

- Nakajima K., Minami T. & Nakauchi S. Effects of facial color on the subliminal processing of fearful faces. Neuroscience 310, 472–485 (2015).

- Elfenbein H. A. & Ambady N. On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychol Bull 128, 203–235 (2002).