Short abstract

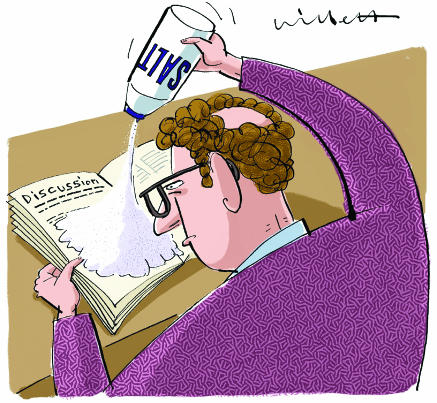

Plenty of advice is available to help readers identify studies with weak methods, but would you be able to identify misleading claims in a report of a well conducted study?

Science is often not objective.1 Emotional investment in particular ideas and personal interest in academic success may lead investigators to overemphasise the importance of their findings and the quality of their work. Even more serious conflicts arise when for-profit organisations, including pharmaceutical companies, provide funds for research and consulting, conduct data management and analyses, and write reports on behalf of the investigators.

Although guides to help recognise methodological weaknesses that may introduce bias are now widely available,2,3 these criteria do not protect readers against misleading interpretations of methodologically sound studies. In this article, we present a guide that provides clinicians with tools to defend against biased inferences from research studies (box).

Guide to avoid being misled by biased presentation and interpretation of data

Read only the Methods and Results sections; bypass the Discussion section

Read the abstract reported in evidence based secondary publications

Beware faulty comparators

Beware composite end points

Beware small treatment effects

Beware subgroup analyses

Read methods and results only

The discussion section of research reports often offers inferences that differ from those a dispassionate reader would draw from the methods and results.4 The table gives details of two systematic reviews summarising a similar set of randomised trials assessing the effect of albumin for fluid resuscitation. The trials included in both reviews were small and methodologically weak, and their results were heterogeneous. Both reviews provide point estimates suggesting that albumin may increase mortality, with confidence intervals that include the possibility of a considerable increase in mortality. Nevertheless, one set of authors took a strong position that albumin is dangerous, the other that it is not. Their positions were consistent with the interests of the funders of their reviews.5

Table 1.

Comparison of two systematic reviews of albumin for fluid resuscitation

| | Wilkes, 2001w1 | Alderson, 2002w2 |
|---|---|---|
| Funding body | Plasma Proteins Therapeutic Association | NHS |
| Trials included | 42 short term trials reporting mortality | 31 short term trials reporting mortality |
| Overall difference in mortality between albumin and crystalloid (relative risk, 95% CI) | 1.11 (0.95 to 1.28) | 1.52 (1.17 to 1.99) |
| Difference in burns patients (relative risk, 95% CI) | 1.76 (0.97 to 3.17) | 2.40 (1.11 to 5.19) |
| Interests of funding body | Promotes access to and reimbursement for the use of albumin | Pays for use of albumin |
| Authors' conclusions | Results “should serve to allay concerns regarding the safety of albumin” | Recommend banning use of albumin outside rigorously conducted randomised controlled trials |

This is not an idiosyncratic example. Systematic examinations of the relation between funding and conclusions have found that the odds of recommending an experimental drug as treatment of choice increase fivefold with funding from for-profit organisations compared with not-for-profit funding (odds ratio 5.3, 95% confidence interval 2.0 to 14.4).6

Figure 1.

If editors insisted that discussion sections of original articles included a systematic review of the relevant literature, this first pointer would no longer be relevant. However, few original trial reports include systematic reviews,7 and this may not change in the foreseeable future.

To follow this advice, readers must be able to make sense of the methods and results. Fortunately, clinicians can access many educational materials to acquire skills in interpreting studies' designs and their findings.2,3

Read the abstract reported in pre-appraised resources

Secondary journals, such as the ACP Journal Club, Evidence-Based Medicine, and Evidence-Based Mental Health, publish structured abstracts produced by a team of clinicians and methodologists. These abstracts often include critical information about the research methods (allocation concealment, blinding, completeness of follow up) omitted from the original papers.8 The conclusions of this “secondary” abstract are the product of critical appraisal by people without competing financial or personal interests.

The objectivity and methodological sophistication of those preparing the independent structured abstracts will often provide additional value for clinicians. For example, substantial discrepancies occur between the full report of the PROGRESS trial and the abstract in ACP Journal Club.w3 w4 The title of the original publication describes the study as testing “A perindopril-based blood pressure lowering regimen” and reports that the perindopril regimen resulted in a 28% relative reduction in the risk of recurrent stroke (95% confidence interval 17% to 38%).w3 The ACP Journal Club abstract and its commentary identified the publication as describing two parallel but separate randomised placebo controlled trials including about 6100 patients with a history of stroke or transient ischaemic attack:

In one trial, patients were randomised to receive perindopril or placebo, and active treatment had no appreciable effect on stroke (relative risk reduction = 5%, 95% confidence interval -19% to 23%).

In the second trial, patients were allocated to receive perindopril plus indapamide or double placebo. Combined treatment resulted in a 43% relative risk reduction (30% to 54%) in recurrent stroke.

The ACP Journal Club commentary notes that the authors disagree with the interpretation of the publication as reporting two separate trials (which explains why it is difficult for even the knowledgeable reader to get a clear picture of the design from the original publication).

Beware faulty comparators

Several systematic reviews have shown that industry funded studies typically yield larger treatment effects than not-for-profit funded studies.9,10 One likely explanation is choice of comparators.11 Researchers with an interest in a positive result may choose a placebo comparator rather than an alternative drug with proved effectiveness. For instance, in a study of 136 trials of new treatments for multiple myeloma, 60% of studies funded by for-profit organisations, but only 21% of trials funded by not-for-profit organisations, compared their new interventions against placebo or no treatment.12 Box A on bmj.com gives other examples.

When reading reports of randomised trials, clinicians should ask themselves: “Should the comparator have been another active agent rather than placebo? If investigators chose an active comparator, were the dose, formulation, and administration regimen optimal?”

Beware composite end points

Investigators often use composite end points to enhance the statistical efficiency of clinical trials. Problems in the interpretation of these trials arise when composite end points include component outcomes to which patients attribute very different importance. For example, the primary outcome in a trial of irbesartan v placebo for diabetic nephropathy was a composite end point including death, end stage renal disease, and doubling of serum creatinine concentration.13 Problems may also arise when the most important end point occurs infrequently or when the apparent effect on component end points differs. For instance, the reported effect on “important cardiovascular end points” may reflect mostly the effect of treatment on angina rather than on mortality and non-fatal myocardial infarction (see bmj.com for another example).

When the more important outcomes occur infrequently, clinicians should focus on individual outcomes rather than on composite end points. Under these circumstances, inferences about the effect of treatment on the more important end points (which, because they occur infrequently, will have very wide confidence intervals) will be weak. In focusing on the more reliable estimates of effects on the less important outcomes, readers will see that, for instance, a putative reduction in death, myocardial infarction, and revascularisation is really just an effect on the frequency of revascularisation.14
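The following minimal sketch illustrates why the component outcomes deserve separate attention. The event counts and arm size are hypothetical, invented purely for illustration, and are not taken from any trial cited here. The sketch also assumes, for simplicity, that each patient contributes at most one event, so that the composite count is the sum of the component counts.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """Event counts for one component outcome (hypothetical numbers)."""
    name: str
    events_treatment: int
    events_control: int


def relative_risk(events_treatment, n_treatment, events_control, n_control):
    """Risk in the treatment arm divided by risk in the control arm."""
    return (events_treatment / n_treatment) / (events_control / n_control)


def summarise(components, n_treatment, n_control):
    """Relative risk for each component and for the composite.

    Simplifying assumption: no patient has more than one event, so the
    composite count is the sum of the component counts.
    """
    result = {
        c.name: relative_risk(c.events_treatment, n_treatment,
                              c.events_control, n_control)
        for c in components
    }
    total_treatment = sum(c.events_treatment for c in components)
    total_control = sum(c.events_control for c in components)
    result["composite"] = relative_risk(total_treatment, n_treatment,
                                        total_control, n_control)
    return result


N_PER_ARM = 1000  # hypothetical arm size
COMPONENTS = [    # hypothetical event counts
    Component("death", 20, 21),
    Component("myocardial infarction", 30, 31),
    Component("revascularisation", 100, 160),
]

rr = summarise(COMPONENTS, N_PER_ARM, N_PER_ARM)
for name, value in rr.items():
    print(f"{name}: relative risk {value:.2f}")
```

With these invented counts the composite relative risk is about 0.71, yet death and myocardial infarction are almost unchanged; nearly all of the apparent benefit comes from revascularisation.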

Beware small treatment effects

Increasingly, investigators are conducting very large trials to detect small treatment effects. Results suggest small treatment effects when either the point estimate is close to no effect (a relative or absolute risk reduction close to 0; a relative risk or odds ratio close to 1) or the confidence interval includes values close to no effect. In one large trial, investigators randomly allocated just over 6000 participants to receive angiotensin converting enzyme inhibitors or diuretics for hypertension and concluded “initiation of antihypertensive treatment involving ACE inhibitors in older subjects... seems to lead to better outcomes than treatment with diuretic agents.”15 In absolute terms, the difference between the regimens was very small: there were 4.2 events per 100 patient years in the angiotensin converting enzyme inhibitor group and 4.6 events per 100 patient years in the diuretic group. The relative risk reduction corresponding to this absolute difference (11%) had an associated 95% confidence interval of -1% to 21%.

Here, we have two reasons to doubt the importance of the apparent difference between the two types of antihypertensive drug. Firstly, the point estimate suggests a very small absolute difference (0.4 events per 100 patient years) and, secondly, the confidence interval suggests it may have been even smaller—indeed, there may have been no true difference at all.

When the absolute risk of adverse events in untreated patients is low, the presentation may focus on relative risk reduction and de-emphasise or ignore absolute risk reduction. Other techniques for making treatment effects seem large include misleading graphical representations,16 and using different time frames to present harms and benefits (see box C on bmj.com).
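To make the contrast between relative and absolute risk reduction concrete, here is a small sketch. The baseline risks and the 25% relative risk reduction are hypothetical values chosen only for illustration; the function name `risk_summary` is likewise our own.

```python
def risk_summary(control_risk, relative_risk_reduction):
    """Return (treated_risk, absolute_risk_reduction, number_needed_to_treat).

    control_risk: risk of the event without treatment (a proportion, 0 to 1)
    relative_risk_reduction: proportional reduction in risk with treatment
    """
    treated_risk = control_risk * (1 - relative_risk_reduction)
    absolute_risk_reduction = control_risk - treated_risk
    number_needed_to_treat = 1 / absolute_risk_reduction
    return treated_risk, absolute_risk_reduction, number_needed_to_treat


RRR = 0.25  # hypothetical: the same relative effect in every scenario

# Hypothetical baseline risks: high, low, and very low risk patients
for baseline in (0.20, 0.02, 0.002):
    treated, arr, nnt = risk_summary(baseline, RRR)
    print(f"baseline {baseline:.1%}: treated risk {treated:.2%}, "
          f"absolute reduction {arr:.2%}, NNT about {round(nnt)}")
```

The same 25% relative risk reduction corresponds to a number needed to treat of about 20, 200, and 2000 as the baseline risk falls from 20% to 2% to 0.2%. A report that quotes only the relative figure hides this difference.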

Beware subgroup analyses

Clinicians often wonder if a subgroup of patients will achieve larger benefits when exposed to a particular treatment. Because the play of chance can lead to apparent but spurious differences in effects between subgroups, clinicians should be cautious about reports of subgroup analyses.

Summary points

Many interests may influence researchers towards favourable interpretation and presentation of their findings

Guides helping clinicians to identify weak studies that are open to bias are available

Clinicians also need to be able to detect when authors of methodologically strong studies make misleading claims

Six pointers to help clinicians avoid being misled are presented

In the trial of angiotensin converting enzyme inhibitor versus diuretic based antihypertensive treatment above, the complete conclusion reads “initiation of antihypertensive treatment involving ACE inhibitors in older subjects, particularly men, appears to lead to better outcomes than treatment with diuretic agents.”15 The possibility that the effect differed by sex was not one of a small number of prior hypotheses, and the size of the difference in effect was small (relative risk reductions of 17% (95% confidence interval 3% to 29%) in men and 0% (-20% to 17%) in women).

The difference between the angiotensin converting enzyme inhibitors and diuretics was significant in men but not in women. Investigators might use this fact to argue for differences in the effect between men and women. The real issue, however, is whether chance is a sufficient explanation for the difference in effect between women and men, and it is in this case. The P value associated with the null hypothesis that the underlying relative risk is identical in men and women is 0.15. Thus, if there were no true difference in effect between men and women, we would see apparent differences of this size (17%) or greater 15% of the time. Overall, the inference that the effect differs between men and women satisfies only one of seven criteria for a valid subgroup analysis.17
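Readers who want to check such a claim themselves can approximate the test of interaction from the published subgroup estimates. The sketch below uses a standard z test on the difference between two log relative risks, with standard errors recovered from the 95% confidence intervals. This is not necessarily the method the trial investigators used, and the relative risks are our own conversions of the relative risk reductions quoted above (for example, a 17% reduction becomes a relative risk of 0.83).

```python
import math


def se_from_ci(lower, upper, z=1.96):
    """Standard error of a log relative risk, recovered from its 95% CI."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


def interaction_test(rr_a, ci_a, rr_b, ci_b):
    """z statistic and two sided P value for the difference between two
    independent subgroup relative risks, compared on the log scale."""
    difference = math.log(rr_a) - math.log(rr_b)
    se_difference = math.sqrt(se_from_ci(*ci_a) ** 2 + se_from_ci(*ci_b) ** 2)
    z = difference / se_difference
    p = math.erfc(abs(z) / math.sqrt(2))  # two sided normal tail area
    return z, p


# Relative risk = 1 - relative risk reduction (our conversion of the
# published figures; rounding makes the result approximate).
men_rr, men_ci = 0.83, (0.71, 0.97)      # RRR 17% (3% to 29%)
women_rr, women_ci = 1.00, (0.83, 1.20)  # RRR 0% (-20% to 17%)

z, p = interaction_test(men_rr, men_ci, women_rr, women_ci)
print(f"z = {z:.2f}, P for interaction = {p:.2f}")
```

With these rounded inputs the P value comes out at roughly 0.13, in the same range as the reported 0.15; the small discrepancy presumably reflects rounding of the published intervals. Either way, the comparison that matters is between the two subgroup estimates, not whether each subgroup separately crosses the threshold for significance.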

Clinicians may sometimes encounter parallel examples when investigators discount effects of beneficial treatments because of apparent lack of effects in subgroups. Here, clinicians must ask themselves whether there is strong evidence that patients in a subgroup should be denied the beneficial treatment. The answer will usually be “no.” In general, we advise clinicians to be sceptical concerning claims of differential treatment effects in subgroups of patients.

Conclusion

We have presented six pointers to help clinicians protect themselves and their patients from potentially misleading presentations and interpretations of research findings. These strategies are unlikely to be foolproof. Decreasing the dependence of the research endeavour on for-profit funding, implementing a requirement for mandatory registration of clinical trials, and instituting more structured approaches to reviewing and reporting research18,19 may reduce biased reporting. At the same time, it is likely that potentially misleading reporting will always be with us, and the guide we have presented will help clinicians to stay armed.

Supplementary Material

Illustrative examples and references w1-w15 are on bmj.com

Contributors and sources: The authors are clinical epidemiologists who are involved in critical review of medical and surgical studies as part of their educational and editorial activities. In that context, they have collected some of the examples presented here. Other examples were collected by searching titles of records in PubMed using the textwords “misleading,” “biased,” and “spin,” by using the Related Articles feature for every pertinent hit, and by noting relevant references from retrieved papers. VMM and GHG wrote the first draft of the article. All authors contributed to the ideas represented in the article, made critical contributions and revisions to the first draft, and approved the final version. GHG is guarantor.

Funding: VMM is a Mayo Foundation scholar. PJD is supported by a Canadian Institutes of Health Research senior research fellowship award. MB was supported by a Detweiler fellowship from the Royal College of Physicians and Surgeons of Canada and is currently supported by a Canada Research Chair.

Competing interests: VMM, RJ, HJS, PJD, and GHG are associate editors of the ACP Journal Club and Evidence-Based Medicine. JLB and RJ edit an evidence based medicine journal in Poland. MB is editor of the evidence based orthopaedic trauma section in the Journal of Orthopaedic Trauma.

References

- 1. Horton R. The rhetoric of research. BMJ 1995;310:985-7.

- 2. Greenhalgh T. How to read a paper. London: BMJ Books, 2001.

- 3. Guyatt G, Rennie D, eds. Users' guides to the medical literature. A manual for evidence-based clinical practice. Chicago, IL: AMA Press, 2002.

- 4. Bero LA, Rennie D. Influences on the quality of published drug studies. Int J Technol Assess Health Care 1996;12:209-37.

- 5. Cook D, Guyatt G. Colloid use for fluid resuscitation: evidence and spin. Ann Intern Med 2001;135:205-8.

- 6. Als-Nielsen B, Chen W, Gluud C, Kjaergard LL. Association of funding and conclusions in randomized drug trials: a reflection of treatment effect or adverse events? JAMA 2003;290:921-8.

- 7. Clarke M, Alderson P, Chalmers I. Discussion sections in reports of controlled trials published in general medical journals. JAMA 2002;287:2799-801.

- 8. Devereaux PJ, Manns BJ, Ghali WA, Quan H, Guyatt GH. Reviewing the reviewers: the quality of reporting in three secondary journals. CMAJ 2001;164:1573-6.

- 9. Bekelman JE, Li Y, Gross CP. Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA 2003;289:454-65.

- 10. Lexchin J, Bero LA, Djulbegovic B, Clark O. Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ 2003;326:1167-70.

- 11. Mann H, Djulbegovic B. Biases due to differences in the treatments selected for comparison (comparator bias). James Lind Library. www.jameslindlibrary.org/essays/bias/comparator-_bias.html (accessed 2 Oct 2004).

- 12. Djulbegovic B, Lacevic M, Cantor A, Fields KK, Bennett CL, Adams JR, et al. The uncertainty principle and industry-sponsored research. Lancet 2000;356:635-8.

- 13. Lewis EJ, Hunsicker LG, Clarke WR, Berl T, Pohl MA, Lewis JB, et al. Renoprotective effect of the angiotensin-receptor antagonist irbesartan in patients with nephropathy due to type 2 diabetes. N Engl J Med 2001;345:851-60.

- 14. Waksman R, Ajani AE, White RL, Chan RC, Satler LF, Kent KM, et al. Intravascular gamma radiation for in-stent restenosis in saphenous-vein bypass grafts. N Engl J Med 2002;346:1194-9.

- 15. Wing LM, Reid CM, Ryan P, Beilin LJ, Brown MA, Jennings GL, et al. A comparison of outcomes with angiotensin-converting-enzyme inhibitors and diuretics for hypertension in the elderly. N Engl J Med 2003;348:583-92.

- 16. Tufte E. The visual display of quantitative information. Cheshire, CT: Graphics Press, 1983.

- 17. Oxman A, Guyatt G, Green L, Craig J, Walter S, Cook D. When to believe a subgroup analysis. In: Guyatt G, Rennie D, eds. Users' guides to the medical literature. A manual for evidence-based clinical practice. Chicago, IL: AMA Press, 2002:553-65.

- 18. Docherty M, Smith R. The case for structuring the discussion of scientific papers. BMJ 1999;318:1224-5.

- 19. Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet 2001;357:1191-4.
