Abstract

Gaussian vector autoregressive (VAR) processes have been extensively studied in the literature. However, Gaussian assumptions are stringent for heavy-tailed time series, which frequently arise in finance and economics. In this paper, we develop a unified framework for modeling and estimating heavy-tailed VAR processes. In particular, we generalize the Gaussian VAR model to an elliptical VAR model that naturally accommodates heavy-tailed time series. Under this model, we develop a quantile-based robust estimator for the transition matrix of the VAR process. We show that the proposed estimator achieves parametric rates of convergence in high dimensions. This is the first work to analyze heavy-tailed high dimensional VAR processes. As an application of the proposed framework, we investigate Granger causality in the elliptical VAR process, and show that the robust transition matrix estimator induces sign-consistent estimators of Granger causality. The empirical performance of the proposed methodology is demonstrated on both synthetic and real data. We show that the proposed estimator is robust to heavy tails, and exhibits superior performance in stock price prediction.

1. Introduction

Vector autoregressive models are widely used in analyzing multivariate time series. Examples include financial time series (Tsay, 2005), macroeconomic time series (Sims, 1980), gene expression series (Fujita et al., 2007; Opgen-Rhein & Strimmer, 2007), and functional magnetic resonance images (Qiu et al., 2015).

Let X1, …, XT ∈ ℝd be a stationary multivariate time series. We consider VAR models¹ such that

$$X_t = A X_{t-1} + E_t, \quad t = 2, \ldots, T,$$

where A is the transition matrix, and E2, …, ET are latent innovations. The transition matrix characterizes the dependence structure of the VAR process, and plays a fundamental role in forecasting. Moreover, the sparsity pattern of the transition matrix is often closely related to Granger causality. In this paper, we focus on estimating the transition matrix in high dimensional VAR processes.

VAR models have been extensively studied under the Gaussian assumption. The Gaussian VAR model assumes that the latent innovations are i.i.d. Gaussian random vectors, and are independent of past observations (Lütkepohl, 2007). Under this model, there is a vast literature on estimating the transition matrix in high dimensional settings. These estimators can be categorized into regularized estimators and Dantzig-selector-type estimators. The former can be formulated as

| (1.1) |

where Y ≔ (X1, …, XT − 1) ∈ ℝd×(T−1), X ≔ (X2, …, XT) ∈ ℝd×(T−1), l(·) is a loss function, and Pρ(·) is a penalty function with penalty parameter ρ. Common choices of the loss function include the least squares loss and the negative log-likelihood (Hamilton, 1994). For the penalty function, various ℓ1 penalties (Wang et al., 2007; Hsu et al., 2008; Shojaie & Michailidis, 2010) and the ridge penalty (Hamilton, 1994) are widely used. Theoretical properties of ℓ1 penalized estimators are studied in Nardi & Rinaldo (2011), Song & Bickel (2011), and Basu & Michailidis (2013).

In parallel to the penalized minimum loss estimators, Han & Liu (2013) proposed a Dantzig-selector-type estimator, which is formulated as the solution to a linear programming problem. In contrast to the ℓ1 regularized estimators, consistency of the Dantzig-selector-type estimator does not rely on restricted eigenvalue conditions. These conditions do not explicitly account for the effect of serial dependence. Moreover, the Dantzig-selector-type estimator weakens the sparsity assumptions required by the ℓ1 regularized estimators.

Although extensively studied in the literature, Gaussian VAR models are restrictive in their implications of light tails. Heavy-tailed time series frequently arise in finance, macroeconomics, signal detection, and statistical physics, to name just a few (Feldman & Taqqu, 1998). For analyzing these data, more flexible models and robust estimators are desired.

In this paper, we develop a unified framework for modeling and estimating heavy-tailed VAR processes. In particular, we propose an elliptical VAR model that allows for heavy-tailed processes. The elliptical VAR model covers the Gaussian VAR model as a special case. Under this model, we show that the transition matrix is closely related to quantile-based scatter matrices. The relation serves as a quantile-based counterpart of the Yule-Walker equation² (Lütkepohl, 2007). Motivated by this relation, we propose a quantile-based robust estimator of the transition matrix. The estimator falls into the category of Dantzig-selector-type estimators, and shares the favorable properties of the estimator in Han & Liu (2013). We investigate the asymptotic behavior of the estimator in high dimensions, and show that, although set in a more general model, it achieves the same rates of convergence as the Gaussian-based estimators. The effect of serial dependence is also explicitly characterized in the rates of convergence.

As an application of the framework developed in this paper, we investigate Granger causality estimation under the elliptical VAR process. We show that just as in Gaussian VAR models, Granger causality relations are also captured by the sparsity patterns of the transition matrix. The robust transition matrix estimator developed in this paper induces sign-consistent estimators of these relations.

2. Background

In this section, we introduce the notation employed in this paper, and provide a review of elliptical distributions and robust scales. Elliptical distributions provide a basis for our model, while robust scales motivate our methodology.

2.1. Notation

Let v = (υ1, …, υd)⊤ be a d-dimensional real vector, and M = [Mjk] ∈ ℝd1 × d2 be a d1 × d2 matrix with Mjk as the (j, k) entry. Denote by vI the subvector of v whose entries are indexed by a set I ⊂ {1, …, d}. Similarly, denote by MU,V the submatrix of M whose entries are indexed by U ⊂ {1, …, d1} and V ⊂ {1, …, d2}. Let MU,* = MU,{1, …, d2}. For 0 < q < ∞, we define the vector ℓq norm of v as $\|v\|_q := (\sum_{j=1}^d |\upsilon_j|^q)^{1/q}$, and the vector ℓ∞ norm of v as $\|v\|_\infty := \max_{1 \le j \le d} |\upsilon_j|$. Let the matrix ℓmax norm of M be ‖M‖max ≔ maxjk |Mjk|, the matrix ℓ∞ norm be $\|M\|_\infty := \max_j \sum_{k=1}^{d_2} |M_{jk}|$, and the Frobenius norm be $\|M\|_F := (\sum_{j,k} M_{jk}^2)^{1/2}$. Let X = (X1, …, Xd)⊤ and Y = (Y1, …, Yd)⊤ be two random vectors. We write $X \overset{d}{=} Y$ if X and Y are identically distributed. We use 0, 1, … to denote vectors with 0, 1, … at every entry.

2.2. Elliptical Distribution

Definition 2.1 (Fang et al. (1990))

A random vector X ∈ ℝd follows an elliptical distribution with location μ ∈ ℝd and scatter S ∈ ℝd×d if and only if there exist a nonnegative random variable ξ ∈ ℝ, a rank k matrix R ∈ ℝd×k with S = RR⊤, and a random vector U ∈ ℝk independent of ξ and uniformly distributed on the unit sphere 𝕊k−1 in ℝk, such that

$$X \overset{d}{=} \mu + \xi R U. \tag{2.1}$$

In this case, we denote X ~ ECd(μ, S, ξ). S is called the scatter matrix, and ξ is called the generating variate.

Remark 2.2

(2.1) is often referred to as the stochastic representation of the elliptical random vector X. Of note, by Theorem 2.3 in Fang et al. (1990) and the proof of Theorem 1 in Cambanis et al. (1981), Definition 2.1 remains equivalent if we replace “$\overset{d}{=}$” with simply “=”.

Proposition 2.3 (Theorems 2.15 and 2.16 in Fang et al. (1990))

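Suppose X ~ ECd(μ, S, ξ) and rank(S) = k. Let 𝐁 ∈ ℝp×d be a matrix and ν ∈ ℝp be a vector. Denote l = rank(𝐁S𝐁⊤). Then, we have

$$\nu + \mathbf{B}X \sim EC_p\big(\nu + \mathbf{B}\mu,\ \mathbf{B}S\mathbf{B}^\top,\ \xi\sqrt{B}\big),$$

where the scalar B ~ Beta(l/2, (k−l)/2) follows a Beta distribution if k > l, and B = 1 if k = l.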

2.3. Robust Scales

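Let X ∈ ℝ be a random variable with a sequence of observations X1, …, XT. Denote by F the distribution function of X. For a constant q ∈ [0, 1], we define the q-quantiles of X and $\{X_t\}_{t=1}^T$ to be

$$Q(X; q) := \inf\{x : \mathbb{P}(X \le x) \ge q\}, \qquad \hat{Q}(\{X_t\}_{t=1}^T; q) := X_{(k)}, \ \text{where } k = \min\{t : t/T \ge q\}.$$

Here X(1) ≤ ⋯ ≤ X(T) are the order statistics of the sample $\{X_t\}_{t=1}^T$. We say Q(X; q) is unique if there exists a unique x such that ℙ(X ≤ x) = q. We say $\hat{Q}(\{X_t\}_{t=1}^T; q)$ is unique if there exists a unique $z \in \{X_1, \ldots, X_T\}$ such that z = X(k). Following Rousseeuw & Croux (1993), we define the population and sample quantile-based scales as

$$\sigma^Q(X) := Q(|X - \tilde{X}|; 1/4), \qquad \hat{\sigma}^Q(\{X_t\}_{t=1}^T) := \hat{Q}(\{|X_s - X_t|\}_{1 \le s < t \le T}; 1/4), \tag{2.2}$$

where X̃ is an independent copy of X. $\hat{\sigma}^Q$ can be computed using O(T log T) time and O(T) storage (Rousseeuw & Croux, 1993).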
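The sample scale can be illustrated with a short sketch. It assumes that the sample q-quantile is the k-th order statistic with k the smallest index satisfying k/T ≥ q, and that the scale is the 1/4-quantile of the pairwise absolute differences with no normalizing constant. The function names `sample_quantile` and `qn_scale` are hypothetical, and the implementation is the naive O(T²) one rather than the O(T log T) algorithm of Rousseeuw & Croux (1993).

```python
from itertools import combinations
from math import ceil


def sample_quantile(values, q):
    """Return the k-th order statistic, k = smallest 1-based index with k/n >= q."""
    ordered = sorted(values)
    k = max(1, ceil(q * len(ordered)))  # q = 0 maps to the minimum
    return ordered[k - 1]


def qn_scale(xs):
    """Naive O(T^2) quantile-based scale: 1/4-quantile of all |x_s - x_t|, s < t."""
    diffs = [abs(a - b) for a, b in combinations(xs, 2)]
    return sample_quantile(diffs, 0.25)


# Illustrative data only: one gross outlier barely moves the scale estimate.
clean = [1.0, 2.0, 4.0, 7.0]
contaminated = clean + [100.0]
print(qn_scale(clean), qn_scale(contaminated))
```

Because only the lower quartile of the pairwise differences is used, a single extreme observation shifts the estimate far less than it would shift a sample standard deviation, which is the property the methodology relies on.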

3. Model

In this paper, we model the time series of interest by an elliptical VAR process.

Definition 3.1

A sequence of observations X1, …, XT ∈ ℝd is an elliptical VAR process if and only if the following conditions are satisfied:

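- X1, …, XT follow a lag-one VAR process

$$X_t = A X_{t-1} + E_t, \quad t = 2, \ldots, T, \tag{3.1}$$

where A ∈ ℝd×d is the transition matrix, and E2, …, ET ∈ ℝd are latent innovations.

- $\{(X_t^\top, E_{t+1}^\top)^\top\}_{t=1}^{T-1}$ are stationary and absolutely continuous elliptical random vectors:

$$(X_t^\top, E_{t+1}^\top)^\top \sim EC_{2d}\big(0,\ \mathrm{diag}(\Sigma, \Psi),\ \xi\big), \tag{3.2}$$

where Σ and Ψ are positive definite matrices, and ξ > 0 with probability 1.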

Remark 3.2

The elliptical VAR process in Definition 3.1 can be generated by an iterative algorithm following Rémillard et al. (2012). In detail, by the property of elliptical distributions, the density function of can be written by for some function g, and the density function of Xt and the conditional density function of Et+1 given Xt can be written by

where g1 and g2 are defined by

The elliptical VAR process X1, …, XT can be generated by the following algorithm:

- Generate X1 from h1(x).

- For t = 2, …, T,

- generate Et from h2(e | Xt−1);

- set Xt = AXt−1 + Et.

Remark 3.3

By definition, it follows that an elliptical VAR process is a stationary process. A special case of the elliptical VAR process is the Gaussian VAR process. An elliptical VAR process is Gaussian VAR if (3.2) is replaced by

The elliptical VAR process generalizes the Gaussian VAR process in two respects. First, the elliptical model generalizes the Gaussian model by allowing heavy tails. This makes robust methodologies necessary for estimating the process. Second, the elliptical VAR model does not require the observations to be independent of future latent innovations.

Next, we show that there exists an elliptical random vector such that the two conditions in Definition 3.1 are satisfied. To this end, let and define

| (3.3) |

where

By Proposition 2.3, L is an elliptical random vector. The next Lemma gives sufficient and necessary conditions for L to satisfy the two conditions in Definition 3.1.

Lemma 3.4

- L ~ EC(2T−1)d(0, Ω, ζ) satisfies Condition 1. Partition the scatter Ω according to the dimensions of and :

We have(3.4) (3.5) - L satisfies Condition 2 if and only if the following equations hold:

(3.6) (3.7) (3.8)

for t = 1, …, T − 1, u = 1, …, T − t, j = 1, …, T, and k = 2, …, T − 1. Here ΩXE = [(ΩXE)IjIk] is a partition of ΩXE into d × d matrices, where Il ≔ {(l − 1)d + 1, …, ld} for l = 1, …, T.(3.9)

Lemma 3.4 is a consequence of Proposition 2.3. The detailed proof is given in the supplementary material. Lemma 3.4 shows that there exists an elliptical random vector that satisfies the two conditions in Definition 3.1. On the other hand, the algorithm in Remark 3.2 generates a unique sequence of random vectors X1, …, XT, E2, …, ET. Therefore, we immediately have the following proposition.

Proposition 3.5

Let X1, …, XT be an elliptical VAR process with latent innovations E2, …, ET. Then is an absolutely continuous elliptical random vector.

Denote Σ1 ≔ Σt,t+1. We call Σ a scatter matrix of the elliptical VAR process, and Σ1 a lag-one scatter matrix. For any c > 0, since L ~ EC(2T−1)d(0, Ω, ζ) implies $L \sim EC_{(2T-1)d}(0, c\Omega, \zeta/\sqrt{c})$, cΣ and cΣ1 are also a scatter matrix and a lag-one scatter matrix of the elliptical VAR process.

Next, we show that the scatter matrix and lag-one scatter matrix are closely related to the robust scales defined in Section 2.3. In particular, we show that the robust scale σQ motivates an alternative definition of the scatter matrix and lag-one scatter matrix.

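Let X1, …, XT be an elliptical VAR process with Xt = (Xt1, …, Xtd)⊤. We define

$$R^Q := [R^Q_{jk}] \quad \text{and} \quad R^Q_1 := [(R^Q_1)_{j'k'}], \tag{3.10}$$

where the entries are given by

$$R^Q_{jj} := \sigma^Q(X_{1j})^2, \quad \text{for } j = 1, \ldots, d,$$

$$R^Q_{jk} := \tfrac{1}{4}\left[\sigma^Q(X_{1j} + X_{1k})^2 - \sigma^Q(X_{1j} - X_{1k})^2\right], \quad \text{for } j \ne k,$$

$$(R^Q_1)_{j'k'} := \tfrac{1}{4}\left[\sigma^Q(X_{1j'} + X_{2k'})^2 - \sigma^Q(X_{1j'} - X_{2k'})^2\right], \quad \text{for } j', k' = 1, \ldots, d.$$

The next theorem shows that RQ and $R^Q_1$ are a scatter matrix and a lag-one scatter matrix of the elliptical VAR process.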

Theorem 3.6

For the elliptical VAR process in Definition 3.1, we have

$$R^Q = m^Q \Sigma \quad \text{and} \quad R^Q_1 = m^Q \Sigma_1, \tag{3.11}$$

where mQ is a constant.

The proof of Theorem 3.6 exploits the summation stability of elliptical distributions and Proposition 2.3. Due to space limitations, the detailed proof is given in the supplementary material. Combining Lemma 3.4 and Theorem 3.6, we obtain the following theorem.

Theorem 3.7

For the elliptical VAR process in Definition 3.1, let RQ and $R^Q_1$ be defined as in (3.10). Then, we have

$$R^Q_1 = R^Q A^\top. \tag{3.12}$$

(3.12) serves as a quantile-based counterpart of the Yule-Walker equation Cov(X1, X2) = Var(X1)A⊤. Theorem 3.7 motivates the robust estimator of A introduced in the next section.

4. Method

In this section, we propose a robust estimator for the transition matrix A. We first introduce robust estimators of RQ and $R^Q_1$. Based on these estimators, the transition matrix A can be estimated by solving an optimization problem.

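Let X1, …, XT be an elliptical VAR process. We define

$$\hat{R}^Q := [\hat{R}^Q_{jk}] \quad \text{and} \quad \hat{R}^Q_1 := [(\hat{R}^Q_1)_{jk}],$$

where the entries are given by

$$\hat{R}^Q_{jj} := \hat{\sigma}^Q(\{X_{tj}\}_{t=1}^T)^2, \quad \text{for } j = 1, \ldots, d,$$

$$\hat{R}^Q_{jk} := \tfrac{1}{4}\left[\hat{\sigma}^Q(\{X_{tj} + X_{tk}\}_{t=1}^T)^2 - \hat{\sigma}^Q(\{X_{tj} - X_{tk}\}_{t=1}^T)^2\right], \quad \text{for } j \ne k \in \{1, \ldots, d\},$$

$$(\hat{R}^Q_1)_{jk} := \tfrac{1}{4}\left[\hat{\sigma}^Q(\{X_{tj} + X_{t+1,k}\}_{t=1}^{T-1})^2 - \hat{\sigma}^Q(\{X_{tj} - X_{t+1,k}\}_{t=1}^{T-1})^2\right], \quad \text{for } j, k = 1, \ldots, d.$$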
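A minimal sketch of how the two sample scatter matrices could be assembled is given below. It assumes that the diagonal entries are squared sample scales and that the off-diagonal and lag-one entries follow the polarization identity ¼[σ̂Q(u + v)² − σ̂Q(u − v)²], and it uses a naive O(T²) scale. The names `qn_scale`, `polarized` and `robust_scatter` are hypothetical.

```python
from itertools import combinations
from math import ceil


def qn_scale(xs):
    """Naive quantile-based scale: 1/4-quantile of pairwise absolute differences."""
    diffs = sorted(abs(a - b) for a, b in combinations(xs, 2))
    k = max(1, ceil(0.25 * len(diffs)))
    return diffs[k - 1]


def polarized(u, v):
    """Polarization identity: (scale(u + v)^2 - scale(u - v)^2) / 4."""
    plus = qn_scale([a + b for a, b in zip(u, v)])
    minus = qn_scale([a - b for a, b in zip(u, v)])
    return 0.25 * (plus ** 2 - minus ** 2)


def robust_scatter(X):
    """X is a list of T observations (T >= 3), each a list of d floats.

    Returns (R, R1): the contemporaneous and lag-one scatter estimates.
    """
    d = len(X[0])
    cols = [[row[j] for row in X] for j in range(d)]
    R = [[qn_scale(cols[j]) ** 2 if j == k else polarized(cols[j], cols[k])
          for k in range(d)] for j in range(d)]
    # Lag-one entries pair X_{t,j} (t = 1..T-1) with X_{t+1,k} (t = 1..T-1).
    R1 = [[polarized(cols[j][:-1], cols[k][1:]) for k in range(d)]
          for j in range(d)]
    return R, R1


# Illustrative data only: two identical coordinates.
R, R1 = robust_scatter([[1.0, 1.0], [2.0, 2.0], [4.0, 4.0], [7.0, 7.0]])
print(R, R1)
```

With two identical coordinates the difference series is identically zero, so the off-diagonal entry of R coincides with the diagonal one, mirroring what a covariance matrix would give.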

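Motivated by Theorem 3.7, we propose to estimate A by

$$\hat{A} := \operatorname*{argmin}_{M \in \mathbb{R}^{d \times d}} \sum_{j,k} |M_{jk}| \quad \text{s.t.} \quad \|\hat{R}^Q M^\top - \hat{R}^Q_1\|_{\max} \le \lambda. \tag{4.1}$$

The optimization problem (4.1) can be further decomposed into d subproblems (Han & Liu, 2013). Specifically, the j-th row of A can be estimated by

$$\hat{A}_{j*} := \operatorname*{argmin}_{v \in \mathbb{R}^d} \|v\|_1 \quad \text{s.t.} \quad \|\hat{R}^Q v - (\hat{R}^Q_1)_{*j}\|_\infty \le \lambda. \tag{4.2}$$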

Thus, the d rows of A can be estimated in parallel. (4.2) is essentially a linear programming problem, and can be solved efficiently using the simplex algorithm.
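The linear-programming form can be made explicit with the standard split of a vector into positive and negative parts. The following is a sketch under the assumption that each row subproblem minimizes ‖v‖1 subject to ‖Rv − r‖∞ ≤ λ, where R stands for the estimated scatter matrix and r for the j-th column of the estimated lag-one scatter matrix. The symbols v⁺, v⁻, R, and r are introduced here for illustration only.

```latex
\begin{aligned}
\min_{v^+,\, v^- \in \mathbb{R}^d} \quad & \mathbf{1}^\top (v^+ + v^-) \\
\text{s.t.} \quad & R\,(v^+ - v^-) - r \le \lambda \mathbf{1}, \\
& -R\,(v^+ - v^-) + r \le \lambda \mathbf{1}, \\
& v^+ \ge 0, \quad v^- \ge 0.
\end{aligned}
```

The solution is recovered as v = v⁺ − v⁻. At an optimum, at most one of the k-th entries of v⁺ and v⁻ is non-zero, since subtracting their common part keeps the constraints satisfied and lowers the objective. The objective therefore equals ‖v‖1. The program has 2d variables and 2d inequality constraints per row, which is why the d rows can be handled independently.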

Remark 4.1

Since σ̂Q can be computed using O(T log T) time (Rousseeuw & Croux, 1993), the computational complexity of R̂Q and $\hat{R}^Q_1$ is O(d²T log T). Since T ≪ d in practice, R̂Q and $\hat{R}^Q_1$ can be computed almost as efficiently as their moment-based counterparts

| (4.3) |

which have O(d²T) complexity and are used in Han & Liu (2013).

5. Theoretical Properties

In this section, we present a theoretical analysis of the proposed transition matrix estimator. Due to space limitations, the proofs of the results in this section are given in the supplementary material.

The consistency of the estimator depends on the degree of dependence over the process X1, …, XT. We first introduce the ϕ-mixing coefficient for quantifying the degree of dependence.

Definition 5.1

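Let {Xt}t∈ℤ be a stationary process. Define $\mathcal{F}_{-\infty}^{0}$ and $\mathcal{F}_{n}^{\infty}$ to be the σ-fields generated by {Xt}t≤0 and {Xt}t≥n, respectively. The ϕ-mixing coefficient is defined by

$$\phi(n) := \sup_{B \in \mathcal{F}_{-\infty}^{0},\ A \in \mathcal{F}_{n}^{\infty},\ \mathbb{P}(B) > 0} \big|\mathbb{P}(A \mid B) - \mathbb{P}(A)\big|.$$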

Let {Xt}t∈ℤ be an infinite elliptical VAR process in the sense that any contiguous subsequence of {Xt}t∈ℤ is an elliptical VAR process. For brevity, we also call {Xt}t∈ℤ an elliptical VAR process. Let ϕj(n), , and be the ϕ-mixing coefficients of {Xtj}t∈ℤ, {Xtj + Xtk}t∈ℤ, {Xtj − Xtk}t∈ℤ, {Xtj′ + Xt+1, k′}t∈ℤ, and {Xtj′ − Xt+1,k′}t∈ℤ, respectively. Here j, k, j′, k′ ∈ {1, …, d} but j ≠ k. Define

and . Φ and Θ characterize the degree of dependence over the multivariate process {Xt}t∈ℤ.

Next, we introduce an identifiability condition on the distribution function of X1.

Condition 1

Let X̃1 = (X̃11, …, X̃1d)⊤ and X̃2 = (X̃21, …, X̃2d)⊤ be independent copies of X1 and X2. Let Fj, , and be the distribution functions of |X1j − X̃1j|, |X1j + X1k − X̃1j − X̃1k|, |X1j − X1k − X̃1j + X̃1k|, |X1j′ + X2k′ − X̃1j′ − X̃2k′|, and |X1j′ − X2k′ − X̃1j′ + X̃2k′|. We assume that there exist constants κ > 0 and η > 0 such that

for any .

The next lemma presents the rates of convergence for R̂Q and $\hat{R}^Q_1$.

Lemma 5.2

Let {Xt}t∈ℤ be an elliptical VAR process satisfying Condition 1. Let X1, …, XT be a sequence of observations from {Xt}t∈ℤ. Suppose that log d/T → 0 as T → ∞. Then, for T large enough, with probability no smaller than 1 − 8/d², we have

| (5.1) |

| (5.2) |

where the rates of convergence are defined by

| (5.3) |

| (5.4) |

Here .

Based on Lemma 5.2, we can further derive the rates of convergence for Â under the matrix ℓmax norm and ℓ1 norm. We start with some additional notation. For α ∈ [0, 1), s > 0, and MT > 0 that may scale with T, we define the matrix class

ℳ(0, s, MT) is the set of sparse matrices with at most s non-zero entries in each row and bounded ℓ1 norm. ℳ(α, s, MT) is also investigated in Cai et al. (2011) and Han & Liu (2013).

Theorem 5.3

Let {Xt}t∈ℤ be an elliptical VAR process satisfying Condition 1, and X1, …, XT be a sequence of observations. Suppose that log d/T → 0 as T → ∞, the transition matrix A ∈ ℳ(α, s, MT), and RQ is non-singular. Define

If we choose the tuning parameter

in (4.1), then, for T large enough, with probability no smaller than 1 − 8/d², we have

| (5.5) |

| (5.6) |

Remark 5.4

If we assume that η ≥ C1 and , ‖(RQ)−1‖1 ≤ C2 for some absolute constants C1, C2 > 0, the rates of convergence in Theorem 5.3 reduce to

Here characterizes the degree of serial dependence in the process {Xt}t∈ℤ. If we further assume polynomially decaying ϕ-mixing coefficients

| (5.7) |

we have and the rates of convergence are further reduced to and , which are the parametric rates obtained in Han & Liu (2013) and Basu & Michailidis (2013). Condition (5.7) has been commonly assumed in the time series literature (Pan & Yao, 2008).

6. Granger Causality

In this section, we demonstrate an application of the framework developed in this paper. In particular, we discuss the characterization and estimation of Granger causality under the elliptical VAR model. We start with the definition of Granger causality.

Definition 6.1 (Granger (1980))

Let {Xt}t∈ℤ be a stationary process, where Xt = (Xt1, …, Xtd)⊤. For j ≠ k ∈ {1, …, d}, {Xtk}t∈ℤ Granger causes {Xtj}t∈ℤ if and only if there exists a measurable set A such that

for all t ∈ ℤ, where Xs,\k is the subvector obtained by removing Xsk from Xs.

For a Gaussian VAR process {Xt}t∈ℤ, we have that {Xtk}t∈ℤ Granger causes {Xtj}t∈ℤ if and only if the (j, k) entry of the transition matrix is non-zero (Lütkepohl, 2007). In the next theorem, we show that a similar property holds for the elliptical VAR process.

Theorem 6.2

Let {Xt}t∈ℤ be an elliptical VAR process with transition matrix A. Suppose Xt has finite second-order moments, and Var(Xtk | {Xs,\k}s≤t) ≠ 0 for any k ∈ {1, …, d}. Then, for j ≠ k ∈ {1, …, d}, we have

If Ajk ≠ 0, then {Xtk}t∈ℤ Granger causes {Xtj}t∈ℤ.

If we further assume that Et+1 is independent of {Xs}s≤t for any t ∈ ℤ, we have that {Xtk}t∈ℤ Granger causes {Xtj}t∈ℤ if and only if Ajk ≠ 0.

The proof of Theorem 6.2 exploits the autoregressive structure of the process X1, …, XT, and the properties on conditional distributions of elliptical random vectors. We refer to the supplementary material for the detailed proof.

Remark 6.3

The assumption that Var(Xtk | {Xs,\k}s≤t) ≠ 0 requires that Xtk is not perfectly predictable from the past or from the other variables observed at time t. Otherwise, we can simply remove {Xtk}t∈ℤ from the process {Xt}t∈ℤ, since predicting {Xtk}t∈ℤ is trivial.

Assuming that Et+1 is independent of {Xs}s≤t for any t ∈ ℤ, the Granger causality relations among the processes {{Xtj}t∈ℤ : j = 1, …, d} are characterized by the non-zero entries of A. To estimate the Granger causality relations, we define Ã = [Ãjk], where

$$\tilde{A}_{jk} := \hat{A}_{jk}\, I(|\hat{A}_{jk}| \ge \gamma),$$

for some threshold parameter γ. To evaluate the consistency between Ã and A regarding the sparsity pattern, we define the function sign(x) ≔ I(x > 0) − I(x < 0). For a matrix M, define sign(M) ≔ [sign(Mjk)].
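The thresholding step and the sign comparison can be sketched as follows. The code assumes a hard-thresholding rule that keeps an entry when its absolute value is at least γ (whether the inequality is strict is an assumption here). The matrices and the value of γ are made up purely for illustration, and the helper names are hypothetical.

```python
def sign(x):
    """sign(x) = I(x > 0) - I(x < 0), returning -1, 0, or 1."""
    return (x > 0) - (x < 0)


def hard_threshold(A_hat, gamma):
    """Zero out every entry whose magnitude falls below the threshold gamma."""
    return [[a if abs(a) >= gamma else 0.0 for a in row] for row in A_hat]


def sign_pattern(M):
    """Entrywise sign of a matrix stored as a list of rows."""
    return [[sign(m) for m in row] for row in M]


# Made-up example: a sparse "true" matrix and a noisy estimate of it.
A_true = [[0.6, 0.0], [0.0, -0.3]]
A_hat = [[0.52, 0.03], [-0.02, -0.41]]

A_tilde = hard_threshold(A_hat, gamma=0.1)
print(sign_pattern(A_tilde) == sign_pattern(A_true))  # prints True
```

Without thresholding, the small spurious entries 0.03 and −0.02 would be read as Granger causal links. The threshold has to sit above the estimation error and below the smallest true non-zero entry for the sign patterns to match.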

The next theorem gives the rate of γ such that à recovers the sparsity pattern of A with high probability.

Theorem 6.4

Assume that the conditions in Theorem 5.3 hold, and A ∈ ℳ(0, s, MT). If we set

then, with probability no smaller than 1 − 8/d², we have sign(Ã) = sign(A), provided that

| (6.1) |

Theorem 6.4 is a direct consequence of Theorem 5.3. We refer to the supplementary material for a detailed proof.

7. Experiments

In this section, we demonstrate the empirical performance of the proposed transition matrix estimator using both synthetic and real data. In addition to the proposed robust Dantzig-selector-type estimator (R-Dantzig), we consider the following two competitors for comparison:

Lasso: an ℓ1 regularized estimator defined in (1.1) with the least squares loss and Pρ(M) = ρ ∑jk |Mjk|.

Dantzig: the estimator proposed in Han & Liu (2013), which solves (4.1) with R̂Q and $\hat{R}^Q_1$ replaced by Ŝ and Ŝ1 defined in (4.3).

Lasso is solved using the R package glmnet. Dantzig and R-Dantzig are solved by the simplex algorithm.

7.1. Synthetic Data

In this section, we demonstrate the effectiveness of R-Dantzig on synthetic data. To generate the time series, we start with an initial observation X1 and innovations E2, …, ET. Specifically, we consider three distributions for $(X_1^\top, E_2^\top, \ldots, E_T^\top)^\top$:

Setting 1: a multivariate Gaussian distribution: N(0, Φ);

Setting 2: a multivariate t distribution with 3 degrees of freedom and covariance matrix Φ;

Setting 3: an elliptical distribution with log-normal generating variate, log N(0, 2), and covariance matrix Φ.

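Here the covariance matrix Φ is block diagonal: Φ = diag(Σ, Ψ, …, Ψ) ∈ ℝTd×Td. We set d = 50 and T = 25. Using $(X_1^\top, E_2^\top, \ldots, E_T^\top)^\top$, we can generate $(X_1^\top, \ldots, X_T^\top)^\top$ by

$$(X_1^\top, \ldots, X_T^\top)^\top = G\,(X_1^\top, E_2^\top, \ldots, E_T^\top)^\top,$$

where G is given by

$$G := \begin{pmatrix} I_d & 0 & \cdots & 0 \\ A & I_d & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ A^{T-1} & A^{T-2} & \cdots & I_d \end{pmatrix} \in \mathbb{R}^{Td \times Td}.$$

By Proposition 2.3, $(X_1^\top, \ldots, X_T^\top)^\top$ follows a multivariate Gaussian distribution in Setting 1, a multivariate t distribution in Setting 2, and an elliptical distribution in Setting 3 with the same log-normal generating variate.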

We generate the parameters A and Σ following Han & Liu (2013). Specifically, we generate the transition matrix A using the huge R package, with patterns band, cluster, hub, and random. We refer to Han & Liu (2013) for a graphical illustration of the patterns. Then we rescale A so that ‖A‖2 = 0.8. Given A, we generate Σ such that ‖Σ‖2 = 2‖A‖2. Using (3.7), we set Ψ = Σ − AΣA⊤.
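The data-generating scheme for the multivariate t setting can be sketched in a few lines. The sketch treats Φ = diag(Σ, Ψ, …, Ψ) as the scatter matrix of a multivariate t vector, sets Ψ = Σ − AΣA⊤, and replaces multiplication by G with the equivalent recursion Xt = AXt−1 + Et. The 2 × 2 matrices and all function names are hypothetical choices for illustration and are not the paper's simulation settings.

```python
import random
from math import sqrt


def cholesky(S):
    """Lower-triangular L with L L^T = S, for symmetric positive definite S."""
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = sqrt(S[i][i] - s)
            else:
                L[i][j] = (S[i][j] - s) / L[j][j]
    return L


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def innovation_scatter(A, Sigma):
    """Psi = Sigma - A Sigma A^T, the innovation scatter implied by stationarity."""
    d = len(A)
    AS = [[sum(A[i][k] * Sigma[k][j] for k in range(d)) for j in range(d)]
          for i in range(d)]
    ASA = [[sum(AS[i][k] * A[j][k] for k in range(d)) for j in range(d)]
           for i in range(d)]
    return [[Sigma[i][j] - ASA[i][j] for j in range(d)] for i in range(d)]


def simulate_t_var(A, Sigma, T, df=3, seed=0):
    """Draw X_1, ..., X_T with (X_1, E_2, ..., E_T) jointly multivariate t."""
    rng = random.Random(seed)
    d = len(A)
    L_sigma = cholesky(Sigma)
    L_psi = cholesky(innovation_scatter(A, Sigma))
    # A single chi-square mixing variable shared by all blocks makes the whole
    # stacked vector multivariate t rather than a collection of independent t's.
    scale = sqrt(df / rng.gammavariate(df / 2.0, 2.0))

    def draw(L):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        return [scale * y for y in matvec(L, z)]

    X = [draw(L_sigma)]
    for _ in range(T - 1):
        E = draw(L_psi)
        X.append([m + e for m, e in zip(matvec(A, X[-1]), E)])
    return X


A = [[0.5, 0.0], [0.2, 0.3]]          # hypothetical transition matrix
Sigma = [[1.0, 0.0], [0.0, 1.0]]      # hypothetical scatter of X_1
X = simulate_t_var(A, Sigma, T=25)
print(len(X), len(X[0]))
```

Because the mixing variable is shared, Xt and Et+1 are uncorrelated but not independent, which is the situation the elliptical VAR model is designed to allow.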

Table 1 presents the errors in estimating the transition matrix and their standard deviations. The tuning parameters λ and ρ are chosen by cross validation. The results are based on 1,000 replicated simulations. We note two observations: (i) Under the Gaussian model (Setting 1), R-Dantzig performs comparably to Dantzig, and outperforms Lasso. (ii) In Settings 2–3, R-Dantzig produces significantly smaller estimation errors than Lasso and Dantzig. Thus, we conclude that R-Dantzig is robust to heavy tails.

Table 1.

Averaged errors and standard deviations in estimating the transition matrix under the matrix Frobenius norm (ℓF), ℓmax norm, and ℓ∞ norm. The results are based on 1,000 replications.

| Setting | Pattern | Lasso ℓF | Lasso ℓmax | Lasso ℓ∞ | Dantzig ℓF | Dantzig ℓmax | Dantzig ℓ∞ | R-Dantzig ℓF | R-Dantzig ℓmax | R-Dantzig ℓ∞ |
|---|---|---|---|---|---|---|---|---|---|---|
| Setting 1 | band | 4.26(0.74) | 0.60(0.25) | 2.15(0.38) | 3.24(1.07) | 0.49(0.22) | 1.10(0.07) | 3.65(0.01) | 0.50(0.03) | 1.06(0.05) |
| | cluster | 3.04(0.65) | 0.52(0.19) | 1.82(0.35) | 2.25(0.39) | 0.41(0.11) | 1.00(0.58) | 2.47(0.01) | 0.44(0.01) | 1.13(0.04) |
| | hub | 2.77(0.61) | 0.66(0.05) | 2.53(0.22) | 1.87(0.01) | 0.64(0.02) | 1.87(0.02) | 1.90(0.01) | 0.65(0.01) | 1.90(0.06) |
| | random | 2.71(0.01) | 0.47(0.01) | 1.08(0.02) | 2.58(0.36) | 0.48(0.19) | 1.21(0.83) | 2.74(0.01) | 0.47(0.01) | 1.19(0.08) |
| Setting 2 | band | 9.53(0.58) | 1.11(0.22) | 10.45(1.29) | 3.72(0.19) | 0.52(0.10) | 1.18(0.66) | 3.62(0.01) | 0.47(0.02) | 0.84(0.08) |
| | cluster | 8.52(0.38) | 1.00(0.13) | 9.24(1.16) | 2.58(0.22) | 0.46(0.03) | 1.24(0.27) | 2.57(0.27) | 0.44(0.01) | 1.09(0.50) |
| | hub | 8.20(0.28) | 0.97(0.09) | 8.53(1.02) | 3.87(0.01) | 0.78(0.03) | 3.30(0.02) | 1.88(0.01) | 0.64(0.01) | 1.90(0.06) |
| | random | 8.65(0.19) | 0.98(0.10) | 9.55(1.42) | 2.79(0.07) | 0.56(0.01) | 1.35(0.15) | 2.72(0.02) | 0.48(0.01) | 1.12(0.10) |
| Setting 3 | band | 9.43(0.25) | 1.07(0.16) | 10.83(1.16) | 3.79(0.18) | 0.52(0.02) | 1.16(0.01) | 3.69(0.11) | 0.49(0.04) | 1.14(0.45) |
| | cluster | 8.59(0.34) | 0.94(0.10) | 9.70(0.98) | 2.66(0.10) | 0.44(0.02) | 1.51(0.22) | 2.55(0.11) | 0.43(0.01) | 1.32(0.26) |
| | hub | 8.16(0.35) | 0.95(0.10) | 8.79(0.88) | 2.51(0.11) | 0.66(0.03) | 2.34(0.15) | 2.01(0.23) | 0.64(0.01) | 2.07(0.30) |
| | random | 8.81(0.43) | 1.04(0.12) | 9.31(1.25) | 2.71(0.13) | 0.47(0.01) | 1.28(0.16) | 2.55(0.10) | 0.46(0.01) | 1.04(0.29) |

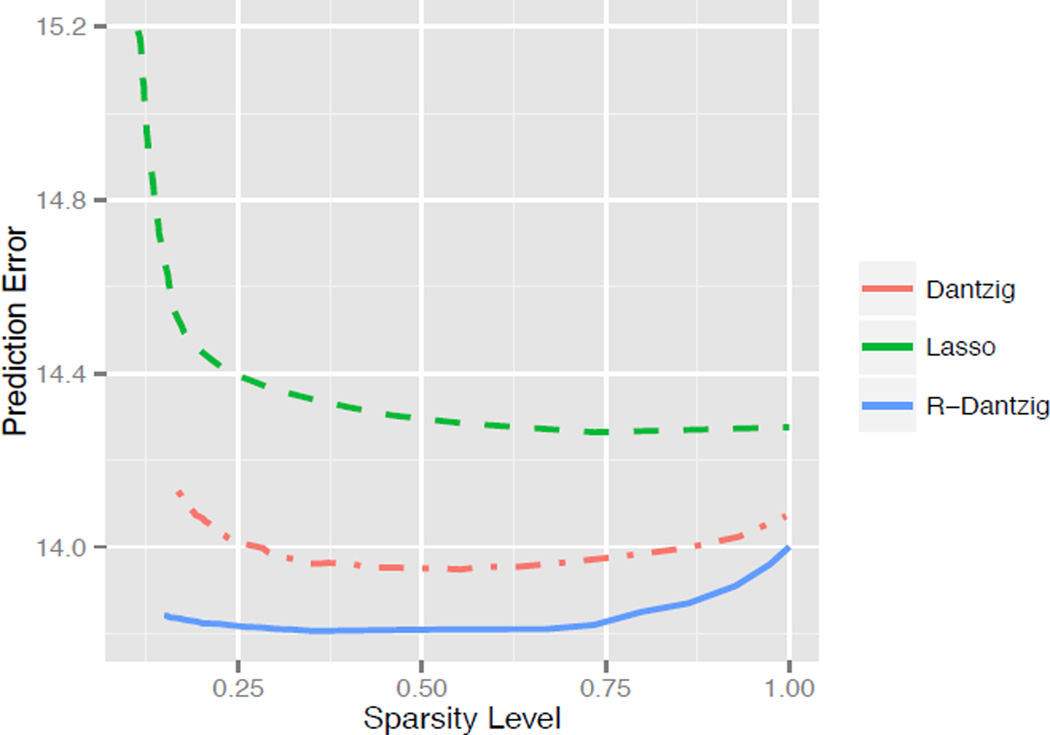

Figure 1 plots the prediction errors εs against sparsity s for the three transition matrix estimators. We observe that R-Dantzig achieves smaller prediction errors compared to Lasso and Dantzig.

Figure 1.

Prediction errors in stock prices plotted against the sparsity of the estimated transition matrix.

7.2. Real Data

In this section, we exploit the VAR model in stock price prediction. We collect adjusted daily closing prices3 of 435 stocks in the S&P 500 index from January 1, 2003 to December 31, 2007. This gives us T = 1, 258 closing prices of the 435 stocks. Let Xt be a vector of the 435 closing prices on day t, for t = 1, …, T. We model by a VAR process, and estimate the transition matrix using Lasso, Dantzig, and R-Dantzig. Let Âs be an estimate of the transition matrix with sparsity s4. We define the prediction error associated with Âs to be

8. Conclusion

In this paper, we developed a unified framework for modeling and estimating heavy-tailed VAR processes in high dimensions. Our contributions are three-fold. (i) At the model level, we generalized the Gaussian VAR model to an elliptical VAR model to accommodate heavy-tailed time series. The model naturally couples with quantile-based scatter matrices and Granger causality. (ii) Methodologically, we proposed a quantile-based estimator of the transition matrix, which induces an estimator of Granger causality. Experimental results demonstrate that the proposed estimator is robust to heavy tails. (iii) Theoretically, we showed that the proposed estimator achieves parametric rates of convergence in matrix ℓmax norm and ℓ∞ norm. The theory explicitly captures the effect of serial dependence, and implies sign-consistency of the induced Granger causality estimator. To our knowledge, this is the first work on modeling and estimating heavy-tailed VAR processes in high dimensions. The methodology and theory proposed in this paper are broadly applicable to the analysis of non-Gaussian time series. The techniques developed in the proofs are of independent interest for understanding robust estimators under high dimensional dependent data.

Footnotes

Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 2015.

¹ For simplicity, we only consider order one VAR models in this paper. Extensions to higher orders can be obtained using the same technique as in Chapter 2.1 of Lütkepohl (2007).

² The Yule-Walker equation connects the transition matrix with the covariance matrix and the lag-one autocovariance matrix of the process.

³ The adjusted closing prices account for all corporate actions such as stock splits, dividends, and rights offerings.

⁴ s ∈ [0, 1] is defined to be the fraction of non-zero entries in Âs, and can be controlled by the tuning parameters λ and ρ.

Contributor Information

Huitong Qiu, Email: HQIU7@JHU.EDU, Johns Hopkins University, 615 N. Wolfe St., Baltimore, MD 21210 USA.

Sheng Xu, Email: SHXU@JHU.EDU, Johns Hopkins University, 615 N. Wolfe St., Baltimore, MD 21210 USA.

Fang Han, Email: FHAN@JHU.EDU, Johns Hopkins University, 615 N. Wolfe St., Baltimore, MD 21210 USA.

Han Liu, Email: HANLIU@PRINCETON.EDU, Princeton University, 98 Charlton Street, Princeton, NJ 08544 USA.

Brian Caffo, Email: BCAFFO@JHU.EDU, Johns Hopkins University, 615 N. Wolfe St., Baltimore, MD 21210 USA.

References

- Basu Sumanta, Michailidis George. Estimation in high-dimensional vector autoregressive models. arXiv preprint arXiv:1311.4175. 2013

- Cai Tony, Liu Weidong, Luo Xi. A constrained ℓ1 minimization approach to sparse precision matrix estimation. Journal of the American Statistical Association. 2011;106(494):594–607.

- Cambanis Stamatis, Huang Steel, Simons Gordon. On the theory of elliptically contoured distributions. Journal of Multivariate Analysis. 1981;11(3):368–385.

- Fang Kai-Tai, Kotz Samuel, Ng Kai Wang. Symmetric Multivariate and Related Distributions. Chapman and Hall; 1990.

- Feldman Raya, Taqqu Murad. A Practical Guide to Heavy Tails: Statistical Techniques and Applications. Springer; 1998.

- Fujita André, Sato João R, Garay-Malpartida Humberto M, Yamaguchi Rui, Miyano Satoru, Sogayar Mari C, Ferreira Carlos E. Modeling gene expression regulatory networks with the sparse vector autoregressive model. BMC Systems Biology. 2007;1(1):1–39. doi: 10.1186/1752-0509-1-39.

- Granger Clive WJ. Testing for causality: a personal viewpoint. Journal of Economic Dynamics and Control. 1980;2:329–352.

- Hamilton James Douglas. Time series analysis. Vol. 2. Princeton University Press; 1994.

- Han Fang, Liu Han. Transition matrix estimation in high dimensional time series; Proceedings of the 30th International Conference on Machine Learning; 2013. pp. 172–180.

- Hsu Nan-Jung, Hung Hung-Lin, Chang Ya-Mei. Subset selection for vector autoregressive processes using lasso. Computational Statistics & Data Analysis. 2008;52(7):3645–3657.

- Lütkepohl Helmut. New Introduction to Multiple Time Series Analysis. Springer; 2007.

- Nardi Yuval, Rinaldo Alessandro. Autoregressive process modeling via the lasso procedure. Journal of Multivariate Analysis. 2011;102(3):528–549.

- Opgen-Rhein Rainer, Strimmer Korbinian. Learning causal networks from systems biology time course data: an effective model selection procedure for the vector autoregressive process. BMC Bioinformatics. 2007;8(Suppl 2):S3. doi: 10.1186/1471-2105-8-S2-S3.

- Pan Jiazhu, Yao Qiwei. Modeling multiple time series via common factors. Biometrika. 2008;95(2):365–379.

- Qiu Huitong, Han Fang, Liu Han, Caffo Brian. Joint estimation of multiple graphical models from high dimensional time series. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2015 doi: 10.1111/rssb.12123. (in press)

- Rémillard Bruno, Papageorgiou Nicolas, Soustra Frédéric. Copula-based semiparametric models for multivariate time series. Journal of Multivariate Analysis. 2012;110:30–42.

- Rousseeuw Peter J, Croux Christophe. Alternatives to the median absolute deviation. Journal of the American Statistical Association. 1993;88(424):1273–1283.

- Shojaie Ali, Michailidis George. Discovering graphical granger causality using the truncating lasso penalty. Bioinformatics. 2010;26(18):i517–i523. doi: 10.1093/bioinformatics/btq377.

- Sims Christopher A. Macroeconomics and reality. Econometrica. 1980:1–48.

- Song Song, Bickel Peter J. Large vector autoregressions. arXiv preprint arXiv:1106.3915. 2011

- Tsay Ruey S. Analysis of financial time series. Vol. 543. John Wiley & Sons; 2005.

- Wang Hansheng, Li Guodong, Tsai Chih-Ling. Regression coefficient and autoregressive order shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2007;69(1):63–78.