Abstract

Objective

To examine which elements of capacity (the organizational resources that support program implementation), alone or in combination, result in successful completion of program objectives in a public health initiative serving 50 communities.

Design

We used crisp-set Qualitative Comparative Analysis (QCA) to analyze case study and quantitative data collected during the evaluation of the Communities Putting Prevention to Work (CPPW) program.

Setting

CPPW awardee program staff and partners implemented evidence-based public health improvements in counties, cities, and organizations (eg, worksites, schools).

Participants

Data came from case studies of 22 CPPW awardee programs that implemented evidence-based, community- and organizational-level public health improvements.

Intervention

Program staff implemented a range of evidence-based public health improvements related to tobacco control and obesity prevention.

Main Outcome Measure

The outcome measure was completion of approximately 60% of work plan objectives.

Results

Analysis of the capacity conditions revealed 2 combinations for completing most work plan objectives: (1) having experience implementing public health improvements in combination with having a history of collaboration with partners; and (2) not having experience implementing public health improvements in combination with having leadership support.

Conclusion

Awardees have varying levels of capacity. The combinations identified in this analysis provide important insights into how awardees with different combinations of elements of capacity achieved most of their work plan objectives. Even when awardees lack some elements of capacity, they can build it through strategies such as hiring staff and engaging new partners with expertise. In some instances, lacking 1 or more elements of capacity did not prevent an awardee from successfully completing objectives.

Implications for Policy & Practice

These findings can help funders and practitioners recognize and assemble different aspects of capacity to achieve more successful programs; awardees can draw on extant organizational strengths to compensate when other aspects of capacity are absent.

Keywords: capacity, case study, public health, QCA

From 2010 to 2013, the Centers for Disease Control and Prevention (CDC) funded Communities Putting Prevention to Work (CPPW) initiatives in 50 county-, city-, and tribal-level public health departments to accelerate and expand high-impact, evidence-based, population-wide environmental improvement strategies to sustain reductions in risk factors for chronic disease.1,2 The goal of this funding was to support sustainable, high-impact interventions—policy, system, and environmental improvements—that reach moderate to large portions of the population and change health behaviors that directly impact chronic disease outcomes. CPPW awardees sought to increase physical activity, provide and improve access to nutritious foods, decrease obesity prevalence, reduce tobacco use, and protect people from the harms associated with exposure to secondhand smoke.

CPPW was designed to address the needs of the diverse demographics of the United States in 4 distinct types of population areas: large cities, urban areas, tribal communities, and small cities and rural areas. Funding recipients were expected to have the infrastructure to rapidly deploy programs and interventions to their target population. They were expected to use evidence-based strategies to design and implement a comprehensive community action plan to improve health outcomes with sustainable effects within their state or locality.

Awardees sought to implement their CPPW strategies through partnerships with local, community, and state organizations. These included governmental agencies, private organizations and foundations, and other groups. Awardees intended to advance sustainable outcomes through education, coalition building, and partnerships. In accordance with US law, no federal funds provided by CDC were permitted to be used by awardees for lobbying or to influence, directly or indirectly, specific pieces of pending or proposed legislation at the federal, state, or local levels.

The term “capacity” has been defined as an organization’s “potential to perform—its ability to successfully apply its skills and resources to accomplish its goals and satisfy its stakeholders’ expectations.”3 The literature reflects the multidimensional nature of capacity; accordingly, this evaluation includes elements of capacity that have a strong evidence base in the literature and that align with our understanding of the goals of the CPPW initiative.

Awardees began their work with varying degrees of human, physical, material, financial, and intellectual resources and experience. Taken together, these elements represent an organization’s capacity.3–15 In practice, organizations have varying levels of each element of capacity: some programs may have a large staff and leadership support but little experience and few partners in the topic area of interest, whereas others may have limited financial resources but an extensive range of partnerships. Because elements of capacity do not occur in isolation, examining combinations of elements may provide clearer guidance on which factors play a role in successful program implementation.

Previous research has emphasized the importance of organizational capacity for successful implementation of public health strategies16,17 but has not examined how multiple elements of capacity can work together to produce successful program outcomes. Public health practitioners and funders would likely benefit from knowing which elements of capacity in combination can support successful program implementation with multiple interventions. This knowledge can allow funders and practitioners to identify different configurations of elements of capacity that can be successful as they plan community-based public health initiatives.4,5 In this article, we present results from a study of CPPW awardees that address how elements of organizational capacity work together to lead to successful implementation of objectives in initiatives to reduce risk factors for chronic disease.

Methods

For the implementation evaluation, we used case study methods that best captured the environment in which program staff implemented their initiatives and the activities they undertook to achieve their objectives. Available resources and time for data collection limited our sample to 22 of the 50 community awardees, and we selected this diverse subset using the criteria listed below. Some of these criteria served as indirect proxies for experience, whereas others helped ensure inclusion of a geographically and programmatically diverse array of awardees. We sought a sample that included representation across all categories of each criterion (eg, awardees from each awardee-type category). To achieve this purposive sample for the multicase study, we included awardees that varied on the following criteria:

CPPW funding focus (ie, tobacco, obesity, or dual tobacco and obesity funding);

Involvement in previous CDC-funded community health initiatives (eg, Steps to a Healthier US; Racial and Ethnic Approaches to Community Health [REACH]);

Awardee type (ie, small city/rural, large city, urban area, or tribe);

Geographic region (using US Census regions);

Explicit focus on populations affected by health inequities (eg, African American, American Indian, Hispanic); and

Content of their community action plan.

Guided by the CPPW Case Study Evaluation Conceptual Model (see Supplemental Digital Content Figure, available at: http://links.lww.com/JPHMP/A227), a conceptual framework for community-level improvements, we collected data through semistructured qualitative interviews in 2 phases covering early- and late-stage implementation. The data collection methods for this study are described in greater detail elsewhere.18

Analytic approach

To determine which combinations of elements of capacity were most prevalent among the awardees that successfully implemented objectives, we used Qualitative Comparative Analysis (QCA). QCA examines which conditions (explanatory factors), alone or in combination, are necessary and/or sufficient for producing an outcome: in this case, high levels (>60%) of successful implementation of objectives.16,17 We selected a 60% threshold for our definition of success because of the complex nature of the work and the short measurement period (2 years). QCA uses formal logic (Boolean algebra) to assess which specific combinations of conditions (at high or low levels) are necessary and/or sufficient for an outcome to occur (ie, achieving ∼60% of objectives). A condition is necessary if, “whenever the outcome is present, the condition is also present.”17(p329) Conversely, a condition is sufficient if, whenever the condition is present, the outcome is also present.17
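In crisp sets, these two definitions are subset relations: necessity means the outcome set is a subset of the condition set, and sufficiency means the condition set is a subset of the outcome set. The Python sketch below is a minimal illustration, not the R QCA package's implementation; it assumes each condition and the outcome are stored as lists of 0/1 codes with one entry per case, and the six cases and the names `experience`, `collaboration`, and `outcome` are hypothetical, not study data.

```python
from typing import Sequence


def is_necessary(condition: Sequence[int], outcome: Sequence[int]) -> bool:
    """Crisp-set necessity: every case with the outcome also has the condition."""
    return all(x == 1 for x, y in zip(condition, outcome) if y == 1)


def is_sufficient(condition: Sequence[int], outcome: Sequence[int]) -> bool:
    """Crisp-set sufficiency: every case with the condition also has the outcome.

    Note: returns True vacuously if no case has the condition.
    """
    return all(y == 1 for x, y in zip(condition, outcome) if x == 1)


def conjunction(*conditions: Sequence[int]) -> list[int]:
    """Logical AND across conditions, case by case (a combination of conditions)."""
    return [int(all(values)) for values in zip(*conditions)]


# Hypothetical crisp-set codes for six cases (1 = present, 0 = absent).
experience = [1, 1, 1, 0, 0, 0]
collaboration = [1, 1, 0, 1, 0, 0]
outcome = [1, 1, 0, 1, 0, 0]

exp_and_collab = conjunction(experience, collaboration)  # [1, 1, 0, 0, 0, 0]

print(is_sufficient(experience, outcome))      # False: case 3 has experience but not the outcome
print(is_necessary(experience, outcome))       # False: case 4 has the outcome without experience
print(is_sufficient(exp_and_collab, outcome))  # True
print(is_necessary(exp_and_collab, outcome))   # False
```

These checks test only perfect subset relations; QCA software additionally reports graded consistency scores, which is why a combination can be treated as sufficient even when a few cases contradict it.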

Although QCA complements other analytic methods, it offers several advantages for evaluation.18 Programs such as CPPW involve multiple components that may work together, and different program models can lead to success; QCA accommodates the complexity associated with evaluating such programs. It assesses the effects of conditions in combination rather than independently (ie, conjunctural causation) and allows for multiple pathways to the same outcome (ie, equifinality). Unlike statistical methods, QCA can be used to analyze small-N samples (provided the study poses a configurational research question); it differs from conventional qualitative methods in that it formalizes conditions through a calibration process and draws on formal mathematical analyses to make systematic comparisons.19

Data and sample

Data were drawn from 2 rounds of site visits (early and late implementation) to selected awardees during November 2010–June 2011 and November 2011–June 2012. A trained case study team interviewed up to 20 program staff members, leaders, key partners, and stakeholder representatives from each awardee, for a total of 828 in-depth interviews across the 22 community awardees. In most cases, the lead organization for an awardee was a city or county health department, although community-based organizations served as leads for a few awardees. Interview data were coded in NVivo and summarized in a program description form. Before data collection, all study protocols were approved by the RTI International Institutional Review Board and the Office of Management and Budget.

Measures

Using the CPPW Case Study Evaluation Conceptual Model (see Supplemental Digital Content Figure, available at: http://links.lww.com/JPHMP/A227), the project team selected and defined conditions on the basis of the literature and of whether data could feasibly be collected during the site visits. For the purposes of this study, we included the following elements of capacity as conditions in our model: public health improvement and topical experience (hereafter, “experience”); leadership support; history of collaboration with partners (hereafter, “history of collaboration”); and staff turnover (whether the principal investigator, program director, or key staff left during the funding period). The experience condition captured whether key staff had either topical or technical experience. Topical experience reflected whether key staff had previously worked on tobacco prevention and control or obesity prevention efforts; technical experience reflected whether key staff had implemented public health initiatives that might employ strategies similar to those of CPPW. The history of collaboration condition captured whether the awardee had extant partnerships. Leadership support captured leadership resources, and the staff turnover condition served as a measure of staff resources. The outcome for this analysis was successful completion of work plan objectives. Work plan objectives included adopting, designing, planning, or implementing an evidence-based public health strategy and promoting or raising awareness about tobacco prevention and control and obesity prevention issues (see Table 1 for sample objectives).

TABLE 1.

Examples of CPPW Awardee Objectives^a

| Example objective |
|---|
| By March 2012, 5 higher education institutions, including at least 1 community college, will adopt smoke-free campus policies. |
| By March 2012, increase access to healthy foods among high-need populations through increase in electronic benefit transfer machine usage among 55 Farmer Markets in high-need areas. |
| By March 2012, implement components of the School Wellness Policy through the work of School Wellness Councils by decreasing competitive foods of minimal nutritional value and increasing opportunities for physical activity in 200 public schools. |

Abbreviation: CPPW, Communities Putting Prevention to Work.

^aCPPW awardees worked to achieve numerous intervention objectives that they developed as part of their Community Action Plans. Objectives were specific to each awardee. All objectives were “SMART,” meaning they were specific, measurable, achievable, realistic, and time-limited, as the examples demonstrate.

The objectives included in this analysis were only those specific to policy, systems, and environmental improvements. No major infrastructure objectives (eg, coalition development) or process objectives (eg, number of meetings held) were included; thus, the objectives are commensurate across awardees. We selected a 60% completion rate of objectives at the end of the funding period as the threshold for our outcome because CPPW leadership agreed that this level was appropriate, given the time awardees had to complete the work after start-up and the types of policy, systems, and environmental improvements they were trying to achieve. A 100% completion rate would have been unreasonable, given the combination of timing and the type of interventions being implemented. Supplemental Digital Content Table 1 (available at: http://links.lww.com/JPHMP/A228) lists conditions and their definitions. We calibrated each condition dichotomously (1 = present, 0 = absent); in QCA, such dichotomous sets are known as “crisp sets.”
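Crisp-set calibration reduces each raw measure to 1 (in the set) or 0 (out of the set). The Python sketch below assumes the outcome is coded 1 when an awardee completed at least 60% of its policy, systems, and environmental objectives; because the text describes the cutoff both as “>60%” and as “∼60%,” the `>=` comparison is an assumption. The experience rule follows the Measures description (topical or technical experience). The function names, awardee names, and counts are hypothetical.

```python
from fractions import Fraction

# Assumed cutoff: an awardee is "in" the outcome set at >= 60% completion.
SUCCESS_THRESHOLD = Fraction(60, 100)


def calibrate_outcome(completed: int, total: int) -> int:
    """Crisp-set outcome: 1 if the share of completed objectives meets the threshold."""
    if total <= 0:
        raise ValueError("an awardee must have at least one objective")
    # Exact rational arithmetic avoids floating-point edge cases at exactly 60%.
    return int(Fraction(completed, total) >= SUCCESS_THRESHOLD)


def calibrate_experience(topical: bool, technical: bool) -> int:
    """Crisp-set experience condition: present if key staff had either kind of experience."""
    return int(topical or technical)


# Hypothetical awardees: (objectives completed, objectives in the work plan).
objectives = {"Awardee A": (6, 10), "Awardee B": (5, 10), "Awardee C": (9, 12)}
outcome = {name: calibrate_outcome(done, total) for name, (done, total) in objectives.items()}
print(outcome)  # {'Awardee A': 1, 'Awardee B': 0, 'Awardee C': 1}
print(calibrate_experience(topical=False, technical=True))  # 1
```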

Analysis

At the completion of the site visits, the project team abstracted data from each awardee profile form and constructed an analytic table called a “truth table” to aid in evaluating the combinations of conditions (see Supplemental Digital Content Table 2, available at: http://links.lww.com/JPHMP/A229). To run the analyses, we used the R packages QCA20 and SetMethods.21 The project team assessed each individual condition for necessity and sufficiency, examined the necessary and sufficient combinations of conditions (hereafter, “combinations”) that resulted in successful completion of objectives, and calculated measures of consistency and coverage. Consistency indicates the proportion of cases with a given combination that also exhibit the outcome; high consistency shows that a combination produces the outcome all or most of the time (ie, that it is sufficient for the outcome). Total coverage indicates the proportion of cases exhibiting the outcome that are accounted for by any of the combinations; high coverage indicates that the combinations are common enough to be useful in the field.
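A truth table lists every logically possible configuration of the conditions (16 rows for 4 crisp conditions), the number of cases in each row, and each row's consistency with the outcome. The Python sketch below is a simplified illustration under stated assumptions, not the output format of the R QCA package: the short condition labels, the 4 hypothetical cases, and the use of a 0.80 cutoff (one of the thresholds this article reports) to code each row's output are illustrative. Rows without cases are marked “?” as logical remainders.

```python
from collections import Counter
from itertools import product

# Hypothetical short labels for the 4 conditions in this study's model.
CONDITIONS = ("EXP", "LEAD", "COLLAB", "TURNOVER")


def truth_table(cases, threshold=0.80):
    """Build a crisp-set truth table.

    cases: iterable of (dict mapping condition -> 0/1, outcome 0/1).
    Returns one row per configuration: (configuration, n cases, consistency, output),
    where output is 1 or 0 for rows with cases and "?" for logical remainders.
    """
    n_cases = Counter()
    n_outcome = Counter()
    for conditions, outcome in cases:
        config = tuple(conditions[c] for c in CONDITIONS)
        n_cases[config] += 1
        n_outcome[config] += outcome

    rows = []
    for config in product((0, 1), repeat=len(CONDITIONS)):
        n = n_cases[config]
        if n == 0:
            rows.append((config, 0, None, "?"))  # no empirical case: logical remainder
        else:
            consistency = n_outcome[config] / n
            rows.append((config, n, consistency, int(consistency >= threshold)))
    return rows


# Hypothetical cases (not study data).
cases = [
    ({"EXP": 1, "LEAD": 1, "COLLAB": 1, "TURNOVER": 0}, 1),
    ({"EXP": 1, "LEAD": 1, "COLLAB": 1, "TURNOVER": 0}, 1),
    ({"EXP": 1, "LEAD": 1, "COLLAB": 1, "TURNOVER": 0}, 0),
    ({"EXP": 0, "LEAD": 1, "COLLAB": 0, "TURNOVER": 1}, 1),
]

for config, n, consistency, output in truth_table(cases):
    if n:
        print(dict(zip(CONDITIONS, config)), n, round(consistency, 3), output)
```

In this toy example, the row in which 2 of 3 cases show the outcome is coded 0 at the 0.80 threshold; its coding depends on the chosen threshold, which is one reason solutions are examined at more than one consistency cutoff.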

In alignment with good QCA analytic practices, we assessed the conservative, intermediate, and parsimonious solutions, as well as these solutions for the negation of the outcome.22 Each solution sets different expectations for handling truth table rows without empirical cases, known as logical remainders (eg, rows 3 and 4 in Supplemental Digital Content Table 2, available at: http://links.lww.com/JPHMP/A229). The conservative solution makes no assumptions about remainder rows and is a subset of the intermediate and parsimonious solutions. The intermediate solution uses only those remainders that agree with theoretical expectations about whether a condition will contribute to successfully completing objectives. The parsimonious solution allows the software to use any remainder rows that yield the fewest solution combinations.
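The difference between the conservative and parsimonious solutions comes from whether remainder rows may be used when positive rows are simplified. The Python sketch below illustrates this with a small Quine–McCluskey-style minimization: rows that differ in exactly one condition are merged, and the fewest resulting prime implicants that cover all positive rows are chosen. It is a simplified stand-in under stated assumptions (3 hypothetical condition labels and hypothetical rows, not the study's truth table); it is not the algorithm used by the R QCA package, and it does not implement the intermediate solution, which would admit only remainders consistent with directional expectations.

```python
from itertools import combinations

# Hypothetical condition labels; an implicant is a tuple of 1 (present),
# 0 (absent), or None (condition eliminated by minimization).
NAMES = ("EXP", "COLLAB", "LEAD")


def merge(a, b):
    """Merge two implicants that differ in exactly one specified condition."""
    diff = [i for i, (x, y) in enumerate(zip(a, b)) if x != y]
    if len(diff) == 1 and a[diff[0]] is not None and b[diff[0]] is not None:
        merged = list(a)
        merged[diff[0]] = None
        return tuple(merged)
    return None


def prime_implicants(rows):
    """Repeatedly merge implicants; those that cannot be merged further are prime."""
    current, primes = set(rows), set()
    while current:
        merged, used = set(), set()
        for a, b in combinations(current, 2):
            m = merge(a, b)
            if m is not None:
                merged.add(m)
                used.update((a, b))
        primes |= current - used
        current = merged
    return primes


def covers(implicant, row):
    return all(v is None or v == r for v, r in zip(implicant, row))


def minimize(positive, remainders=()):
    """Fewest prime implicants covering every positive row.

    Remainders act as "don't care" rows: they may be used for merging
    but do not have to be covered.
    """
    primes = sorted(prime_implicants(set(positive) | set(remainders)),
                    key=lambda p: tuple(-1 if v is None else v for v in p))
    for k in range(1, len(primes) + 1):
        for subset in combinations(primes, k):
            if all(any(covers(p, row) for p in subset) for row in positive):
                return list(subset)
    return []


def label(implicant):
    """Uppercase = condition present, lowercase = absent (the article's notation)."""
    return "*".join(n.upper() if v == 1 else n.lower()
                    for n, v in zip(NAMES, implicant) if v is not None)


# Hypothetical positive rows, ordered (EXP, COLLAB, LEAD).
positive = [(1, 1, 1), (1, 1, 0), (0, 0, 1), (0, 1, 1)]
print(" + ".join(label(p) for p in minimize(positive)))  # exp*LEAD + EXP*COLLAB
# Conservative vs parsimonious: one positive row, with and without a remainder.
print(label(minimize([(1, 1, 1)])[0]))                          # EXP*COLLAB*LEAD
print(label(minimize([(1, 1, 1)], remainders=[(1, 1, 0)])[0]))  # EXP*COLLAB
```

The last two lines show why the conservative solution is a subset of the parsimonious one: admitting the remainder row drops LEAD from the term, so the simpler expression covers at least the same cases.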

We expected experience, leadership support, and history of collaboration to contribute to the outcome but did not expect staff turnover to do so. The intermediate and parsimonious solutions were equivalent; we present those solutions in this article (the conservative solution is available on request). We examined our solutions at consistency thresholds of 0.75 and 0.80, both of which are considered sound.17

We also tested the model separately by program focus and by rurality; the results did not differ. We attempted to assess the results by other factors, such as geography, but the number of cases in each subgroup was reduced so substantially that we could not generate a model.

Results

None of the conditions (ie, experience, leadership support, history of collaboration, and staff turnover) were perfectly necessary or sufficient for successfully completing objectives; no necessary combinations were present.

However, analysis of the sufficient combinations of these conditions revealed 2 highly consistent combinations that led to successful completion of objectives:

Having experience in combination with having a history of collaboration.

Not having experience in combination with having leadership support.

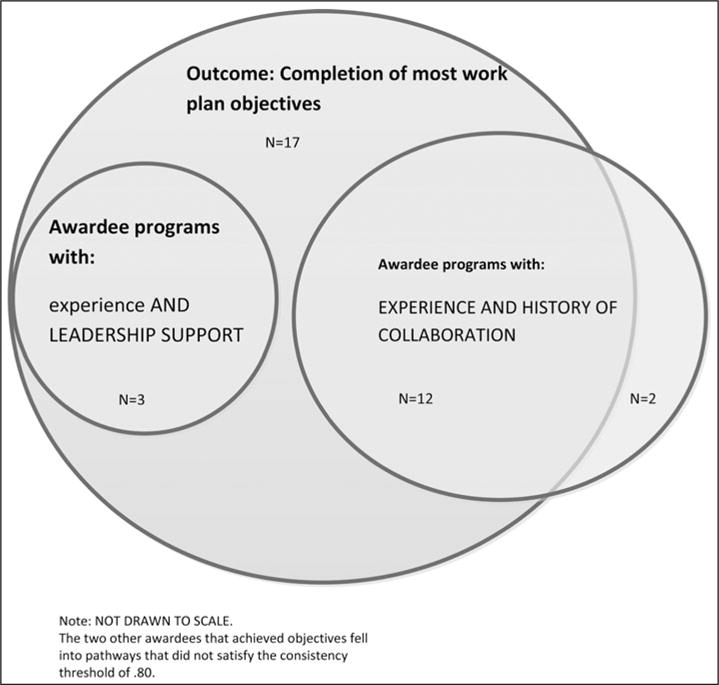

The Figure displays these results; Table 2 displays the consistency and coverage values for these combinations. The first combination in Table 2 is the dominant one: its raw and unique coverage of 0.706 corresponds to 12 of the 17 awardees that successfully completed objectives, compared with raw and unique coverage of 0.176 (3 of the 17 awardees) for the second combination. Furthermore, 12 of the 14 awardee programs in the first combination successfully completed objectives (consistency, 0.857). All awardees in the second combination successfully completed objectives (consistency, 1.00), but this combination encompasses fewer awardees than the first, making it less empirically relevant.

FIGURE. Combinations for Completing Most Work Plan Objectives^a.

^aFigure not drawn to scale. Conditions presented in all capital letters (eg, LEADERSHIP SUPPORT) indicate the presence of the condition; conditions presented in lowercase letters (eg, experience) indicate the absence of the condition. A total of 17 awardees achieved the program objectives, but 2 of these fell into combinations that did not produce the outcome at least 80% of the time and are therefore not shown.

TABLE 2.

Coverage and Consistency Values for Capacity and High Achievement of Objectives^a

| Combination | Raw Coverage | Unique Coverage | Consistency |
|---|---|---|---|
| EXPERIENCE and HISTORY OF COLLABORATION | 0.706 | 0.706 | 0.857 |
| experience and LEADERSHIP SUPPORT | 0.176 | 0.176 | 1.00 |
| Solution total | 0.882 (total coverage) | | 0.882 (total consistency) |

^aConditions presented in all capital letters (eg, LEADERSHIP SUPPORT) indicate the presence of the condition; conditions presented in lowercase letters (eg, experience) indicate the absence of the condition. Coverage indicates the empirical relevance of a combination (similar to R² in regression). Raw coverage is the proportion of cases exhibiting the outcome that are covered by a combination, unique coverage is the proportion of such cases covered by only that combination, and total coverage is the proportion of such cases covered by any of the combinations. Consistency quantifies the extent to which cases that share a combination exhibit the outcome (cf, a correlation coefficient in regression) and thus assesses the strength of the relationship.

These 2 combinations accounted for 15 of the 17 awardees that successfully completed 60% of work plan objectives (ie, total coverage of 0.882). The remaining 11.8% (2 of 17) of awardee programs that completed work plan objectives fell into combinations that were not successful most of the time. Of the 17 awardees that fell into these 2 combinations, 15 achieved the outcome; taken together, the combinations produced the outcome 88.2% of the time (ie, total consistency).
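The Table 2 values can be reproduced from the case counts reported in this section. For a combination X and outcome Y, consistency is |X ∩ Y| / |X| and coverage is |X ∩ Y| / |Y|. The Python sketch below assumes only those counts: 22 awardees, 17 with the outcome; 14 awardees in the first combination (12 successful); 3 in the second (all successful); and, by subtraction, 2 successful and 3 unsuccessful awardees in neither combination. The labels `C1` and `C2` are hypothetical shorthand, and awardees' individual condition values are not represented. Because the 2 combinations are mutually exclusive (one requires experience, the other its absence), unique coverage equals raw coverage.

```python
from fractions import Fraction

# Case records reconstructed from reported counts only:
# (set of combinations the case belongs to, outcome 0/1).
C1 = "EXPERIENCE*HISTORY_OF_COLLABORATION"
C2 = "experience*LEADERSHIP_SUPPORT"
cases = (
    [(frozenset({C1}), 1)] * 12
    + [(frozenset({C1}), 0)] * 2
    + [(frozenset({C2}), 1)] * 3
    + [(frozenset(), 1)] * 2  # successful, but in neither combination
    + [(frozenset(), 0)] * 3  # unsuccessful, in neither combination
)
n_outcome = sum(y for _, y in cases)


def consistency(combos_of_interest):
    """Share of cases in any of the given combinations that show the outcome."""
    in_set = [y for combos, y in cases if combos & combos_of_interest]
    return Fraction(sum(in_set), len(in_set))


def coverage(combos_of_interest):
    """Share of outcome cases that fall in any of the given combinations."""
    return Fraction(sum(y for combos, y in cases if combos & combos_of_interest), n_outcome)


def unique_coverage(combo):
    """Share of outcome cases covered by this combination and no other."""
    return Fraction(sum(y for combos, y in cases if combos == {combo}), n_outcome)


for combo in (C1, C2):
    print(combo,
          f"raw={float(coverage({combo})):.3f}",
          f"unique={float(unique_coverage(combo)):.3f}",
          f"consistency={float(consistency({combo})):.3f}")
both = {C1, C2}
print(f"total coverage={float(coverage(both)):.3f}",
      f"total consistency={float(consistency(both)):.3f}")
```

The same counts give the 11.8% figure: 2 of the 17 successful awardees (2/17 ≈ 0.118) fall outside both combinations.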

Discussion

In this article, we explored how different combinations of elements of organizational capacity can support successful completion of objectives. The results provide public health agencies and funders with knowledge about elements of capacity that, when in place simultaneously, are likely to lead to successful completion of objectives in public health initiatives such as CPPW.

Because the “qualitative” in QCA entails examining the complex processes underlying the combinations the analysis produces,22 we also reviewed the underlying data to understand why the 2 combinations apparently worked for the awardees. In the first, and most common, combination, 12 successful awardees had experience and a history of collaboration. Awardees with topical knowledge and experience implementing public health initiatives reported that they could begin work more quickly because they knew the key stakeholders to include in the efforts and could build on previous efforts that were foundational to the CPPW initiative. For example, one awardee had experience implementing “walking school buses” and drew on that experience to develop and implement broader Safe Routes to School interventions. A history of collaboration enabled awardee programs to draw on partners for resources and knowledge to develop CPPW objectives quickly, recruit individuals to join a Leadership Team, and identify community strengths and challenges for implementing objectives. This suggests that before planning and implementing a public health initiative, public health practitioners may wish to invest time in building partnerships. As one respondent explained, “It takes about 2 years to build [such] a collaborative.” Having experience and extant collaborations may have given awardees more time to complete their implementation objectives.

The second combination represents 3 awardees that, although they lacked experience, completed their objectives with the help of strong leadership. In 2 of these awardees, program leadership immediately began the processes to put contracts in place with key partners and hired new staff to get the program running as soon as funding became available. The third awardee had low levels of capacity on all the dimensions we assessed, except leadership support. In this awardee, the leader recognized that the health department lacked adequate capacity to implement CPPW on its own and involved a new, well-resourced partner to assume primary responsibility for implementation. Across these awardees, program leaders quickly identified and addressed deficits in capacity.

These results highlight the multidimensional yet mutually reinforcing nature of organizational capacity and its role in successfully completing work plan objectives. Although each CPPW awardee was unique and brought forth different resources to its effort, elements such as experience, history of collaboration, leadership support, and turnover can and did have an impact on an organization’s ability to plan and implement evidence-based public health strategies. As these findings suggest, successful awardees that lack certain elements of capacity can quickly identify ways to enhance capacity by integrating partners with experience or by hiring essential personnel quickly. Additional work is needed to better understand and measure these constructs.

Limitations

This study has several limitations. First, the results are not statistically generalizable; however, they do shed light on common combinations to success across multiple awardees. This is useful information for practitioners interested in implementing similar initiatives and demonstrates that programs can have varied models and resources and still achieve program objectives.

Second, the outcome for these analyses does not capture health impacts. Because this study was designed as an implementation evaluation, we were limited in the outcome data available. For this reason, we defined our outcome in terms of work plan objectives achieved because these data were available from a complementary CPPW evaluation effort. Whether achieving objectives translated into population health impacts was beyond the scope of this study. Data from longitudinal analyses can better address whether such initiatives generate desired long-term impacts.

Third, in calibrating the conditions and outcomes, few guidelines or external standards exist about what constitutes the right amount of the constructs in the QCA model. For example, to determine “success,” our decision rule depended on what level of objective completion the funding organization would identify as satisfactory; in other programs or contexts, this level of success may or may not be acceptable. However, the evaluation team based the calibrations on empirically observable practices or documented experiences of awardees, such as whether the awardee had described any previous program experience that they could draw upon for implementing CPPW. In addition, in assigning values to each condition, the team triangulated data sources (eg, comparing the awardee application with interview data); triangulation validated the team’s assessments of each condition.

Fourth, as seen in the truth table (see Supplemental Digital Content Table 2, available at: http://links.lww.com/JPHMP/A229), some combinations lacked empirical cases; this is known as limited diversity and is common in small and intermediate N analyses, in part, due to clustering. In this context, we were unlikely to have a case with absolutely no elements of capacity because submitting an application for funding by itself may imply the presence of knowledge and resources. Thus, social reality predetermines whether some cases are likely to exist.22 To ensure that our results were robust despite the limited diversity, we assessed the conservative, intermediate, and parsimonious solutions and the simplifying assumptions to achieve those solutions. The solutions were logically consistent.

Finally, organizational capacity is a complex construct. Because we had a small number of cases, we could only reasonably include up to 4 conditions without generating substantial limited diversity. We selected these 4 aspects of capacity because they aligned with evidence on capacity within the scientific literature, they were relevant to our understanding of the CPPW initiative, and the data could be collected in a feasible manner across awardees. Different or additional aspects of organizational capacity may exist that may be more relevant in other settings.

Despite these limitations, the findings presented in this article provide information useful to public health practitioners and funders. The results can enhance their understanding of the elements of capacity that in combination promote the achievement of work plan objectives. Assessing whether key elements of capacity are in place or can be efficiently developed prior to implementation can help funders and practitioners identify and develop more successful programs.

Supplementary Material

Implications for Policy & Practice

- In public health practice, awardees often have varying types of capacity: some have strong leaders but few partnerships; others have long-established partners but less experience. Less often, awardees have all the elements of capacity in place.
- This study demonstrated that awardees can achieve their program objectives with different elements of capacity present or absent.
- Public health practitioners can use the findings to leverage existing elements of capacity and to identify other elements they may be able to strengthen to build their organizational capacity.
- Practitioners can then highlight those elements when applying for funding.
- The findings also provide some insight into key criteria funders may wish to consider when developing or reviewing new awards.
- Funders can benefit from knowing that multiple combinations of capacity can contribute to success and can use the successful pathways outlined in the truth table (see Supplemental Digital Content Table 2, available at: http://links.lww.com/JPHMP/A229) to enhance their review process.
- Potential awardees need not have complete capacity but should have several strengths to offset weaker aspects of their capacity.

Acknowledgments

The Centers for Disease Control and Prevention (CDC) provided funding for this project. The authors gratefully acknowledge all of the Communities Putting Prevention to Work awardees who participated in this evaluation. They also thank Devon McGowan and Karen Strazza, for assistance with NVivo coding, and Kyle Longest, for consultation on the Qualitative Comparative Analysis.

Footnotes

The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of CDC.

In accordance with US law, no federal funds provided by CDC were permitted to be used by community grantees for lobbying or to influence, directly or indirectly, specific pieces of pending or proposed legislation at the federal, state, or local levels.

The authors declare no conflicts of interest.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s Web site (http://www.JPHMP.com).

References

1. Bunnell R, O’Neil D, Soler R, et al. Fifty Communities Putting Prevention to Work: accelerating chronic disease prevention through policy, systems and environmental change. J Community Health. 2012;37(5):1081–1090. doi:10.1007/s10900-012-9542-3.

2. Soler R, Orenstein D, Honeycutt A, et al. Community-based interventions to decrease obesity and tobacco exposure and reduce health care costs: outcome estimates from Communities Putting Prevention to Work for 2010–2020. Prev Chronic Dis. 2016;13:150272. doi:10.5888/pcd13.150272.

3. Horton D, Alexaki A, Bennett-Lartey S, et al. Evaluating capacity development: experiences from research and development organizations around the world. Chapter 2. http://www.idrc.ca/en/ev-31556-201-1-DO_TOPIC.html. Published 2003. Accessed November 4, 2010.

4. Goodman RM. A construct for building the capacity of community-based initiatives in racial and ethnic communities: a qualitative cross-case analysis. J Public Health Manag Pract. 2008;14(suppl):S18–S25. doi:10.1097/01.PHH.0000338383.74812.18.

5. Montemurro GR, Raine KD, Nykiforuk CIJ, Mayan M. Exploring the process of capacity-building among community-based health promotion workers in Alberta, Canada. Health Promot Int. Published online ahead of print February 27, 2013. doi:10.1093/heapro/dat008.

6. Foster-Fishman PG, Berkowitz SL, Lounsbury DW, Jacobson S, Allen NA. Building collaborative capacity in community coalitions: a review and integrative framework. Am J Community Psychol. 2001;29(2):241–261. doi:10.1023/A:1010378613583.

7. Freudenberg N. Community capacity for environmental health promotion: determinants and implications for practice. Health Educ Behav. 2004;31(4):472–490. doi:10.1177/1090198104265599.

8. Giamartino G, Wandersman A. Organizational climate correlates of viable urban block organizations. Am J Community Psychol. 1983;11(5):529–541.

9. Gottlieb NH, Brink SG, Gingiss PL. Correlates of coalition effectiveness: the Smoke Free Class of 2000 Program. Health Educ Res. 1993;8(3):375–384. doi:10.1093/her/8.3.375.

10. Hays CE, Hays SP, DeVille JO, Mulhall PF. Capacity for effectiveness: the relationship between coalition structure and community impact. Eval Program Plann. 2000;23(3):373–379.

11. Kegler MC, Steckler A, McLeroy K, Malek SH. Factors that contribute to effective community health promotion coalitions: a study of 10 project ASSIST coalitions in North Carolina. Health Educ Behav. 1998;25(3):338–353. doi:10.1177/109019819802500308.

12. Minkler M, Vasquez VB, Tajik M, Petersen D. Promoting environmental justice through community-based participatory research: the role of community and partnership capacity. Health Educ Behav. 2008;35(1):119–137. doi:10.1177/1090198106287692.

13. Raynor J, York P, Sim S. What Makes an Effective Advocacy Organization? A Framework for Determining Advocacy Capacity. Los Angeles, CA: The California Endowment; 2009.

14. Reisman J, Gienapp A, Stachowiak S. A Guide to Measuring Advocacy and Policy. Baltimore, MD: Annie E Casey Foundation; 2007.

15. Rogers T, Howard-Pitney B, Feighery EC, et al. Characteristics and participant perceptions of tobacco control coalitions in California. Health Educ Res. 1993;8(3):345–357.

16. Longest KC, Thoits PA. Gender, the stress process, and health: a configurational approach. Soc Ment Health. 2012;2(3):187–206.

17. Ragin C. Fuzzy-Set Social Science. Chicago, IL: The University of Chicago Press; 2000.

18. Kane H, Lewis MA, Williams PA, Kahwati LC. Using qualitative comparative analysis to understand and quantify translation and implementation. Transl Behav Med. 2014;4(2):201–208. doi:10.1007/s13142-014-0251-6.

19. Rihoux B, Ragin CC. Configurational Comparative Methods: Qualitative Comparative Analysis (QCA) and Related Techniques. Thousand Oaks, CA: Sage; 2009.

20. Dusa A, Thiem A. Qualitative Comparative Analysis. R package; 2014;2015. http://cran.r-project.org/package=QCA. Accessed April 4, 2016.

21. Quaranta M. SetMethods: A Package Companion to Set-Theoretic Methods for the Social Sciences. R package; 2013. http://cran.r-project.org/package=SetMethods. Accessed April 4, 2016.

22. Schneider CQ, Wagemann C. Set-Theoretic Methods for the Social Sciences: A Guide to Qualitative Comparative Analysis. Cambridge, England: Cambridge University Press; 2012.
