Significance

What principles govern large-scale reorganization of the brain? In the blind, several visual regions preserve their task specificity but switch to tactile or auditory input. It remains open whether this type of reorganization is unique to the visual cortex or is a general mechanism in the brain. Here we asked deaf and hearing adults to discriminate between temporally complex sequences of stimuli during an fMRI experiment. We show that the same auditory regions were activated when deaf subjects performed the visual version of the task and when hearing subjects performed the auditory version of the task. Thus, switching sensory input while preserving the task specificity of recruited areas might be a general principle that guides cortical reorganization in the brain.

Keywords: cross-modal plasticity, perception, auditory cortex, sensory deprivation, fMRI

Abstract

The principles that guide large-scale cortical reorganization remain unclear. In the blind, several visual regions preserve their task specificity; ventral visual areas, for example, become engaged in auditory and tactile object-recognition tasks. It remains open whether task-specific reorganization is unique to the visual cortex or, alternatively, whether this kind of plasticity is a general principle applying to other cortical areas. Auditory areas can become recruited for visual and tactile input in the deaf. Although nonhuman data suggest that this reorganization might be task specific, human evidence has been lacking. Here we enrolled 15 deaf and 15 hearing adults into a functional MRI experiment during which they discriminated between temporally complex sequences of stimuli (rhythms). Both deaf and hearing subjects performed the task visually, in the central visual field. In addition, hearing subjects performed the same task in the auditory modality. We found that the visual task robustly activated the auditory cortex in deaf subjects, peaking in the posterior–lateral part of high-level auditory areas. This activation pattern was strikingly similar to the pattern found in hearing subjects performing the auditory version of the task. Although performing the visual task in deaf subjects induced an increase in functional connectivity between the auditory cortex and the dorsal visual cortex, no such effect was found in hearing subjects. We conclude that in deaf humans the high-level auditory cortex switches its input modality from sound to vision but preserves its task-specific activation pattern independent of input modality. Task-specific reorganization thus might be a general principle that guides cortical plasticity in the brain.

It is well established that the brain is capable of large-scale reorganization following sensory deprivation, injury, or intensive training (1–8). What remain unclear are the organizational principles that guide this process. In the blind, high-level visual regions preserve their task specificity despite being recruited for different sensory input (9, 10). For example, the blind person’s ventral visual stream responds to tactile and auditory object recognition (11, 12), tactile and auditory reading (13, 14), and auditory perception of body shapes (15). Similarly, the blind person’s dorsal visual stream is activated by tactile and auditory space perception (16) as well as by auditory motion perception (17). This division of labor in blind persons corresponds to the typical task specificity of visual areas in sighted persons. It remains open, however, whether task-specific reorganization is unique to the visual cortex or, alternatively, whether this kind of plasticity is a general principle applying to other cortical areas as well.

Several areas in the auditory cortex are known to be recruited for visual and tactile input in the deaf (18–22). However, the only clear case of task-specific reorganization of the auditory cortex has been demonstrated in deaf cats. In particular, Lomber et al. (20) showed that distinct auditory regions support peripheral visual localization and visual motion detection in deaf cats, and that the same auditory regions support auditory localization and motion detection in hearing cats. Following this line of work and using cooling-loop cortex deactivation, Meredith et al. (21) demonstrated that a specific auditory region, the auditory field of the anterior ectosylvian sulcus, is critical for visual orientation in deaf cats and for auditory orientation in hearing cats. In deaf cats, recruitment of the auditory cortex for visual orientation was spatially specific, because deactivation of the primary auditory cortex did not produce deficits in this visual function.

In deaf humans, the auditory cortex is known to be recruited for peripheral visual perception and simple tactile processing (23–29). However, the task specificity of this reorganization remains to be demonstrated, because no perceptual study directly compared cross-modal activations in deaf humans with the typical organization of the auditory cortex for auditory processing in hearing persons. Finding an instance of task-specific recruitment in the human auditory cortex would provide evidence that task-specific reorganization is a general principle in the brain. Here we enrolled deaf and hearing subjects into an fMRI experiment during which they were asked to discriminate between two rhythms, i.e., two temporally complex sequences of stimuli (Fig. 1A). Both deaf and hearing subjects performed the task visually, in the central visual field (Fig. 1B). Hearing subjects also performed the same task in the auditory modality (Fig. 1B).

Fig. 1.

Experimental design and behavioral results. (A) The experimental task and the control task performed in the fMRI. Subjects were presented with pairs of sequences composed of flashes/beeps of short (50-ms) and long (200-ms) duration separated by 50- to 150-ms blank intervals. The sequences presented in each pair either were identical or the second sequence was a permutation of the first. The subjects were asked to judge whether two sequences in the pair were the same or different. The difficulty of the experimental task (i.e., the number of flashes/beeps presented in each sequence and the pace of presentation) was adjusted individually, before the fMRI experiment, using an adaptive staircase procedure. In the control task, the same flashes/beeps were presented at a constant pace (50-ms stimuli separated by 150-ms blank intervals), and subjects were asked to watch/listen to them passively. (B) Outline of the study. Deaf and hearing subjects participated in the study. Both groups performed the tasks visually, in the central visual field. Hearing subjects also performed the tasks in the auditory modality. Before the fMRI experiment, an adaptive staircase procedure was applied. (C) Behavioral results. (Left) Output of the adaptive staircase procedure (average length of sequences to be presented in the experimental task in the fMRI) for both subject groups and sensory modalities. (Right) Performance in the fMRI (the accuracy of the same/different decision in the experimental task). Thresholds: ***P < 0.001. Error bars represent SEM.
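The stimulus pairs described in the caption can be illustrated with a minimal sketch. The element durations and the gap range are taken from the caption; everything else (the roughly equal split of short and long elements, gaps drawn uniformly at random, and the function names `make_sequence` and `make_pair`) is an assumption made for illustration and is not the stimulus code used in the study.

```python
import random

SHORT_MS, LONG_MS = 50, 200      # element durations given in the caption
GAP_RANGE_MS = (50, 150)         # blank-interval range given in the caption


def make_sequence(n_elements, rng):
    """Return one rhythm as a list of (duration_ms, following_gap_ms) tuples."""
    if n_elements < 2:
        raise ValueError("a rhythm needs at least two elements")
    # Assumption: about half of the elements are short and half are long.
    n_short = n_elements // 2
    durations = [SHORT_MS] * n_short + [LONG_MS] * (n_elements - n_short)
    rng.shuffle(durations)
    # Assumption: each gap is drawn uniformly from the stated range.
    return [(d, rng.randint(*GAP_RANGE_MS)) for d in durations]


def make_pair(n_elements, same, rng):
    """Return (first, second); second is a copy or a permutation of first."""
    first = make_sequence(n_elements, rng)
    if same:
        return first, list(first)
    second = list(first)
    # Reshuffle until the order of short/long elements actually differs.
    while [d for d, _ in second] == [d for d, _ in first]:
        rng.shuffle(second)
    return first, second
```

In this sketch, `n_elements` plays the role of the sequence length that the staircase procedure adjusts per subject; a "different" trial keeps the same elements and only changes their order.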

In hearing individuals, rhythm processing is performed mostly in the auditory domain (30–32). If task-specific reorganization applies to the human auditory cortex, visual rhythms should recruit the auditory cortex in deaf persons. Moreover, the auditory areas activated by visual rhythm processing in the deaf should also be particularly engaged in auditory rhythm processing in hearing persons. Confirming these predictions would constitute a clear demonstration of task-specific reorganization of the human auditory cortex.

Finally, dynamic visual stimuli are known to be processed in the dorsal visual stream (33). Thus, our last prediction was that visual rhythm processing in the deaf should induce increased functional connectivity between the dorsal visual stream and the auditory cortex. Such a result would further confirm that the auditory cortex in the deaf is indeed involved in this visual function.

Results

Behavioral Results.

Fifteen congenitally deaf adults and 15 hearing adults participated in the visual part of the experiment. Eleven of the 15 originally recruited hearing subjects participated in the auditory part of the experiment; the four remaining subjects refused to undergo an fMRI scan for a second time. All subjects were right-handed, and the groups were matched for sex, age, and education level (all P > 0.25). Deaf subjects were interviewed before the experiment to obtain detailed characteristics of their deafness, language experience, and their use of hearing aids (Methods, Subjects and Table S1).

Table S1.

Characteristics of deaf participants

| Subject | Age, y | Sex | Cause of deafness | Hearing loss, left ear/right ear/mean | Hearing aid use | How well the subject understands speech with hearing aid | Native language, oral/sign | Languages primarily used at the moment of the experiment |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MN | 34 | M | Maternal disease/drug side effects | 120/120/120 dB | Uses currently | Moderately | Oral | Sign and oral |
| DK | 20 | F | Hereditary deafness | 113/115/114 dB | Used in the past | Poorly | Oral | Sign and oral |
| KN | 33 | F | Maternal disease/drug side effects | 102/120/111 dB | Uses currently | Moderately | Oral | Sign and oral |
| MGA | 37 | F | Maternal disease/drug side effects | 110/110/110 dB | Used in the past | Poorly | Oral | Sign |
| MW | 29 | F | Maternal disease/drug side effects | 100/120/110 dB | Uses currently | Well | Oral | Sign and oral |
| DO | 31 | M | Hereditary deafness | 103/103/103 dB | Uses currently | Moderately | Oral | Sign and oral |
| AP | 42 | F | Hereditary deafness | 103/101/102 dB | Never used | | Sign | Sign |
| MM | 27 | M | Hereditary deafness | 94/107/101 dB | Used in the past | Poorly | Sign | Sign |
| JK | 19 | M | Hereditary deafness | 90/110/100 dB | Uses currently | Well | Sign | Sign and oral |
| AS | 19 | F | Hereditary deafness | 94/103/99 dB | Uses currently | Very well | Sign | Sign and oral |
| ML | 23 | M | Hereditary deafness | 95/100/98 dB | Uses currently | Moderately | Sign | Sign and oral |
| MG | 36 | F | Hereditary deafness | 95/95/95 dB | Uses currently | Poorly | Sign | Sign |
| OS | 30 | F | Hereditary deafness | 90/90/90 dB | Used in the past | Poorly | Sign | Sign and oral |
| KM | 29 | F | Hereditary deafness | 78/92/85 dB | Uses currently | Poorly | Sign | Sign and oral |
| KS | 26 | F | Hereditary deafness | 70/60/65 dB | Uses currently | Well | Sign | Sign |

Information was reported by participants on the day of the experiment. Hearing loss was determined using a standard pure-tone audiometry.

Subjects were presented with pairs of rhythmic sequences composed of a similar number of short (50-ms) and long (200-ms) flashes/beeps, separated by 50- to 150-ms blank intervals (Methods, fMRI Experiment and Fig. 1A). To equalize subject performance levels, we used an adaptive staircase procedure before the fMRI scan in which the length of visual and auditory rhythms presented in the fMRI part (i.e., the number of visual flashes or auditory beeps presented in each sequence in the experimental task) (Fig. 1A) was adjusted (Methods, Adaptive Staircase Procedure and Fig. 1 B and C). The average length of visual rhythms established by the adaptive procedure was 7.07 flashes presented in each sequence for deaf subjects (SD = 1.23) and 7.2 flashes presented in each sequence for hearing subjects (SD = 1.42). The accuracy of performance during the fMRI experiment, with individual lengths of sequences in the experimental task applied, was 64.4% (SD = 12.1%) and 62.2% (SD = 15.3%) for these two groups, respectively (both are above chance level, P < 0.01). Neither of these two measures differed significantly between the deaf and the hearing groups (all P > 0.25) (Fig. 1C).

The length of the auditory rhythm sequences established by the adaptive procedure for hearing subjects (mean = 12.09, SD = 2.95) was significantly higher than the length of the visual rhythm sequences established either for the same subjects [t (10) = 6.62, P < 0.001] or for deaf subjects [t (24) = 5.33, P < 0.001]. During the fMRI experiment, these longer auditory rhythm sequences led to similar performance for auditory rhythms (mean = 69.3%, SD = 12.5%) and visual rhythms presented to hearing subjects [t (10) = 0.60, P > 0.25]. The performance of the hearing subjects for auditory rhythms also was similar to the performance of the deaf subjects for visual rhythms [t (24) = 0.99, P > 0.25]. Thus, as expected (30–32), auditory rhythms were easier for hearing subjects than visual rhythms, resulting in a significantly higher average length of sequences obtained in the staircase procedure. During the fMRI experiment, however, the accuracy of performance did not differ significantly between subject groups or sensory modalities (Fig. 1C), showing that the staircase procedure was effective in controlling subjects’ performance in the fMRI and that between-group and between-modality differences in neural activity cannot be explained by different levels of behavioral performance.

fMRI Results.

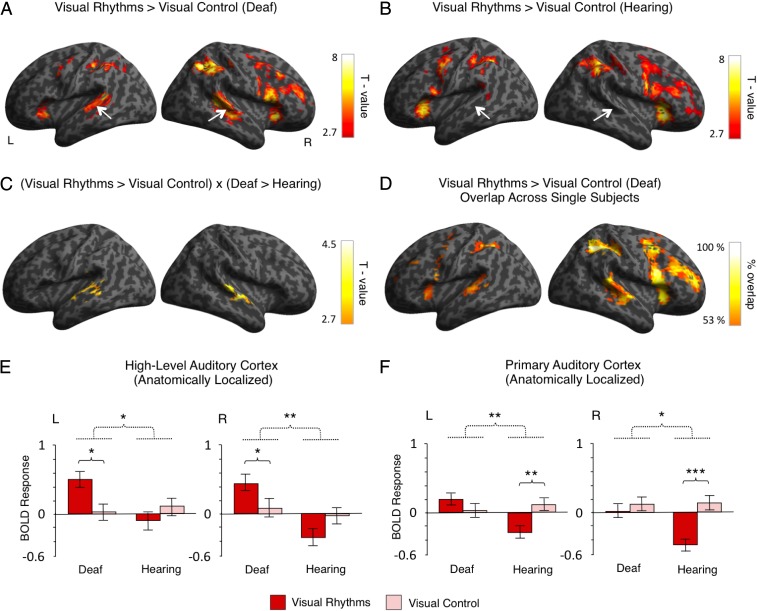

We started by comparing the activations induced by visual rhythms (Fig. 1A) relative to visual control (simple visual flashes with a constant interval) (Fig. 1A) in both subject groups. In the deaf subjects, this contrast revealed a bilateral activation in the superior and the middle temporal gyri, including the auditory cortex (right hemisphere: peak MNI = 51, −40, 8, t = 6.41; left hemisphere: peak MNI = −57, −49, 8, t = 5.64) (Fig. 2A). The activation in the auditory cortex was constrained mostly to posterior–lateral, high-level auditory areas [the posterior–lateral part of area Te 3 (34)]. It overlapped only marginally with the primary auditory cortex [areas Te 1.0, Te 1.1, and Te 1.2 (35); see Methods, fMRI Data Analysis for a description of how these areas were localized]. Additional activations were also found in frontal and parietal regions (Fig. 2A and Table S2). In the hearing subjects, we found similar frontal and parietal activations but no effects in the auditory cortex (Fig. 2B and Table S2).

Fig. 2.

Visual rhythms presented in the central visual field activated the auditory cortex in deaf subjects. (A and B) Activations induced by visual rhythms relative to regular visual stimulation in deaf subjects (A) and hearing subjects (B). The auditory cortex is indicated by white arrows. (C) Interaction between the task and the subject’s group. The only significant effect of this analysis was found in the auditory cortex, bilaterally. (D) Overlap in single-subject activations for visual rhythms relative to regular visual stimulation across all deaf subjects. (E and F) The results of independent ROI analyses. ROIs were defined in the high-level auditory cortex (E) and the primary auditory cortex (F) based on an anatomical atlas. The analysis confirmed that visual rhythms enhanced activity in the high-level auditory cortex of deaf subjects, whereas no effect was found in the hearing subjects. Significant interaction between the task and the group also was found in the primary auditory cortex. However, this effect was driven mainly by significant deactivation of this region for visual rhythms in hearing subjects. Thresholds: (A–C) P < 0.005 voxelwise and P < 0.05 clusterwise. (D) Each single-subject activation map was assigned a threshold of P < 0.05 voxelwise and P < 0.05 clusterwise. Only overlaps that are equal to or greater than 53% of all deaf subjects are presented. (E and F) *P < 0.05; **P < 0.01; ***P < 0.001. Dashed lines denote interactions. Error bars represent SEM.

Table S2.

Activations for visual rhythms relative to the visual control task in the deaf and the hearing subjects and the interaction between the task and the group

| Contrast | Region | Brodmann's area | Hemisphere | t Statistic | Cluster size | MNI x | MNI y | MNI z |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Visual rhythms vs. visual control in the deaf | | | | | | | | |
| | Insula | 13 | Right | 8.29 | 874 | 33 | 26 | −2 |
| | Middle frontal gyrus | 9 | Right | 6.48 | | 51 | 23 | 37 |
| | Inferior frontal gyrus | 44 | Right | 6.31 | | 51 | 14 | 8 |
| | Supramarginal gyrus | 40 | Right | 7.61 | 449 | 48 | −40 | 44 |
| | Inferior parietal lobule | 39 | Right | 7.46 | | 36 | −49 | 41 |
| | Posterior–medial frontal gyrus | 8 | Right | 7.52 | 557 | 3 | 17 | 48 |
| | Middle cingulate cortex | 8 | Right | 7.16 | | 9 | 29 | 34 |
| | Superior medial gyrus | NA | Right | 7.08 | | 3 | 29 | 41 |
| | Inferior parietal lobule | 40 | Left | 6.55 | 281 | −42 | −37 | 44 |
| | | 39 | Left | 4.76 | | −36 | −55 | 44 |
| | | NA | Left | 4.55 | | −36 | −49 | 37 |
| | Insula | 13 | Left | 6.45 | 138 | −33 | 23 | −2 |
| | Middle temporal gyrus | 22 | Right | 6.41 | 341 | 51 | −40 | 8 |
| | Superior temporal gyrus | 22 | Right | 6.34 | | 60 | −25 | −2 |
| | | 22 | Right | 6.09 | | 63 | −16 | −2 |
| | Middle temporal gyrus | 21 | Left | 5.64 | 209 | −57 | −49 | 8 |
| | | 21 | Left | 5.48 | | −54 | −40 | 5 |
| | | 22 | Left | 4.68 | | −60 | −22 | 1 |
| | Precentral gyrus | 6 | Left | 4.44 | 67 | −45 | −1 | 52 |
| | | 6 | Left | 3.86 | | −36 | −7 | 62 |
| | | 6 | Left | 3.82 | | −42 | 2 | 37 |
| Visual rhythms vs. visual control in the hearing | | | | | | | | |
| | Insula | 13 | Left | 9.15 | 543 | −33 | 23 | 1 |
| | Inferior frontal gyrus | 44 | Left | 6.61 | | −57 | 5 | 19 |
| | Precentral gyrus | 6 | Left | 6.60 | | −45 | −1 | 44 |
| | Posterior–medial frontal gyrus | 8 | Right | 9.10 | 538 | 6 | 14 | 48 |
| | | 6 | Left | 7.26 | | −6 | 8 | 48 |
| | | 6 | Left | 6.35 | | −3 | 2 | 62 |
| | Supramarginal gyrus | 40 | Right | 8.68 | 469 | 45 | −40 | 44 |
| | Angular gyrus | 39 | Right | 5.21 | | 30 | −58 | 44 |
| | Angular gyrus | 39 | Right | 4.79 | | 39 | −58 | 52 |
| | Insula | 47 | Right | 8.58 | 1024 | 33 | 29 | −2 |
| | | 13 | Right | 8.25 | | 42 | 20 | −2 |
| | Inferior frontal gyrus | 44 | Right | 7.74 | | 45 | 20 | 8 |
| | Inferior parietal lobule | 7 | Left | 6.77 | 299 | −30 | −46 | 44 |
| | | 40 | Left | 6.56 | | −42 | −37 | 44 |
| | Supramarginal gyrus | NA | Left | 4.32 | | −48 | −40 | 30 |
| | Putamen | 49 | Right | 4.38 | 68 | 18 | 11 | −6 |
| | Caudate nucleus | 48 | Right | 3.86 | | 9 | 11 | 5 |
| | Pallidum | 49 | Right | 3.51 | | 21 | 8 | 1 |
| Interaction: (visual rhythms vs. visual control) × (the deaf vs. the hearing) | | | | | | | | |
| | Superior temporal gyrus | 22 | Right | 5.41 | 129 | 63 | −16 | −2 |
| | | 41 | Right | 3.95 | | 60 | −25 | 5 |
| | | 22 | Right | 3.76 | | 54 | −4 | −2 |
| | Middle temporal gyrus | 21 | Left | 4.08 | 110 | −63 | −13 | −6 |
| | | 22 | Left | 3.82 | | −60 | −22 | 1 |
| | | 21 | Left | 3.67 | | −57 | −40 | 1 |

NA, not applicable.

To test directly for activations specific to deaf subjects only, we performed a whole-brain interaction analysis between the task and the subjects’ group (visual rhythms vs. visual control × deaf subjects vs. hearing subjects). In line with previous comparisons, we observed a significant, bilateral effect in the auditory cortex (right hemisphere: peak MNI = 63, −16, −2, t = 5.41; left hemisphere: peak MNI = −63, −13, −6, t = 4.08) (Fig. 2C and Table S2). This result confirms that visual rhythms induced significant auditory cortex activations only in deaf subjects.

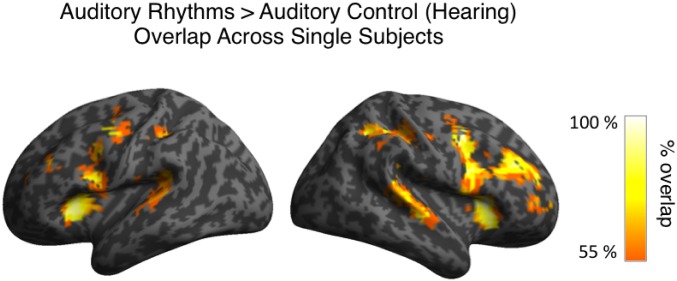

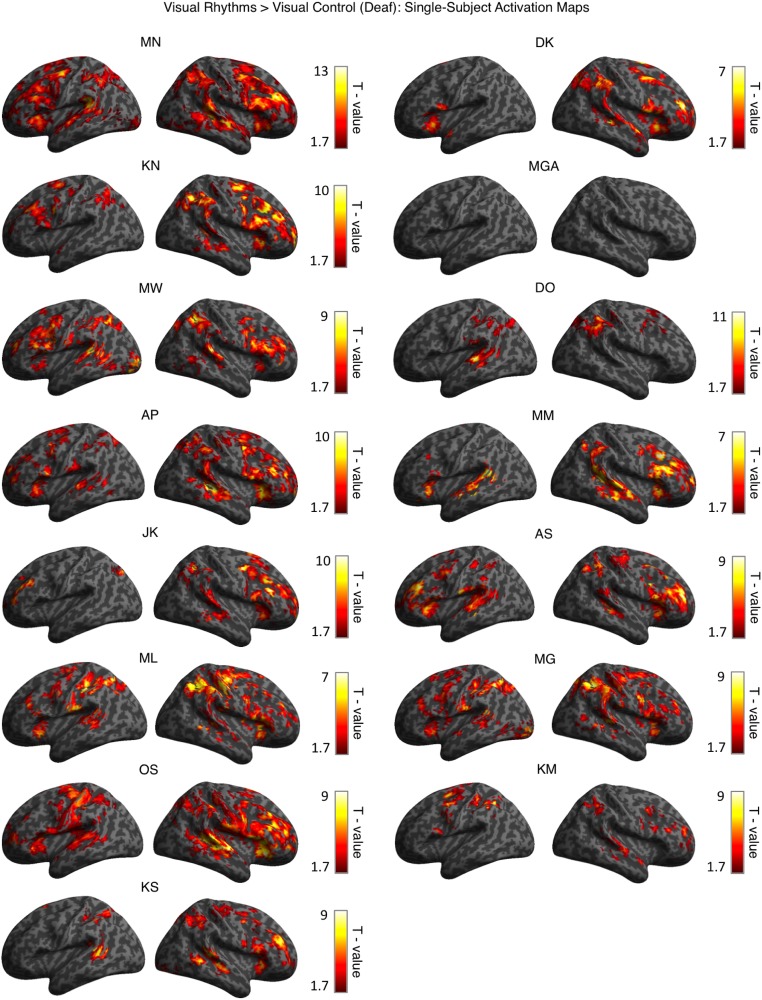

Because the outcomes of deprivation-driven plasticity might differ in subjects with various causes of deafness and levels of auditory experience, we tested for within-group consistency in activations for visual rhythms in the deaf. To this end, we overlapped single-subject activation maps for visual rhythms vs. visual control contrast for all deaf subjects (Methods, fMRI Data Analysis and Fig. 2D). In the right high-level auditory cortex, these activations overlapped in 80% of all deaf subjects. In the left high-level auditory cortex, the consistency of activations for visual rhythms was lower (found in 60% of all deaf subjects), in line with reports showing that cross-modal responses in the deaf are generally right-lateralized (25, 26, 36). A similar but slightly more symmetric overlap was found for auditory activations in hearing subjects (right high-level auditory cortex, 82% of all hearing subjects; left high-level auditory cortex, 73%) (Fig. S1). These results show that cross-modal activations for visual rhythms in the deaf are as robust as the typical activations for auditory rhythms in the hearing. Single-subject activation maps for all deaf subjects are shown in Fig. S2.
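The overlap measure described above amounts to counting, for every voxel, the share of subjects whose thresholded single-subject map is active there. The following is a minimal sketch under that reading; the array layout (subjects × voxels) and the function names are illustrative assumptions, and the toy data are invented rather than taken from the study.

```python
import numpy as np


def overlap_fraction(binary_maps):
    """Fraction of subjects with suprathreshold activation at each voxel.

    binary_maps: array-like of shape (n_subjects, n_voxels) holding 0/1 flags
    obtained by thresholding each single-subject activation map.
    """
    maps = np.asarray(binary_maps, dtype=bool)
    return maps.mean(axis=0)


def display_mask(binary_maps, min_fraction):
    """Voxels whose overlap reaches the display threshold (e.g., 8/15)."""
    return overlap_fraction(binary_maps) >= min_fraction


# Toy data (invented): 15 subjects, 3 voxels active in 12, 9, and 7 subjects.
toy_maps = np.zeros((15, 3), dtype=int)
toy_maps[:12, 0] = 1
toy_maps[:9, 1] = 1
toy_maps[:7, 2] = 1
toy_fractions = overlap_fraction(toy_maps)
toy_mask = display_mask(toy_maps, 8 / 15)
```

With 15 deaf subjects, an overlap of 80% corresponds to 12 subjects and 60% to 9 subjects; a display threshold of about 53% corresponds to 8 of 15 subjects.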

Fig. S1.

Overlap in single-subject activations for auditory rhythms relative to auditory control across all hearing subjects. Each single-subject activation map was assigned a threshold of P < 0.05 voxelwise and P < 0.05 clusterwise. Only overlaps equal to or greater than 55% of all hearing subjects are presented.

Fig. S2.

Single-subject activation maps for visual rhythms vs. visual control contrast for all deaf subjects. Each map was assigned a threshold of P < 0.05 voxelwise and P < 0.05 clusterwise. The order of subjects corresponds to Table S1.

In deaf cats, cross-modal activations are spatially specific: They are found in high-level auditory areas and usually do not extend to the primary auditory cortex (20, 21, 37). To test directly if this specificity is shown in our data, we applied anatomically guided region-of-interest (ROI) analysis of the high-level auditory cortex (area Te 3) and the primary auditory cortex (the combined areas Te 1.0, Te 1.1, and Te 1.2) (Methods, fMRI Data Analysis) (34, 35, 38). This analysis confirmed that visual rhythms induced significant activity in the high-level auditory cortex in deaf subjects (visual rhythms vs. visual control in deaf subjects, right hemisphere: P = 0.019; left hemisphere: P = 0.019) (Fig. 2E) and that this effect was specific to the deaf group [visual rhythms vs. visual control × deaf subjects vs. hearing subjects, right hemisphere: F(1, 27) = 9.66, P = 0.004; left hemisphere: F(1, 27) = 5.45, P = 0.027] (Fig. 2E).

In the primary auditory cortex, we also found a significant interaction effect between task and subject group [visual rhythms vs. visual control × deaf subjects vs. hearing subjects, right hemisphere: F(1, 27) = 5.15, P = 0.032; left hemisphere: F(1, 27) = 10.178, P = 0.004] (Fig. 2F). However, this interaction was driven mostly by a significant deactivation of this region for visual rhythms in the hearing (visual rhythms vs. visual control in hearing subjects, right hemisphere: P < 0.001; left hemisphere: P = 0.001) (Fig. 2F). In nondeprived subjects, such deactivations are commonly found in primary sensory cortices of one modality while another sensory modality is stimulated (e.g., ref. 39). We did not observe a significant increase in the activation of the primary auditory cortex for visual rhythms in deaf subjects (visual rhythms vs. visual control in deaf subjects, right hemisphere: P > 0.25; left hemisphere: P = 0.212) (Fig. 2F). The ROI analysis thus confirmed that visual rhythms presented in the central visual field activated the high-level auditory cortex in deaf subjects but not in hearing subjects. In the deaf subjects, this cross-modal activation did not extend to the primary auditory cortex.

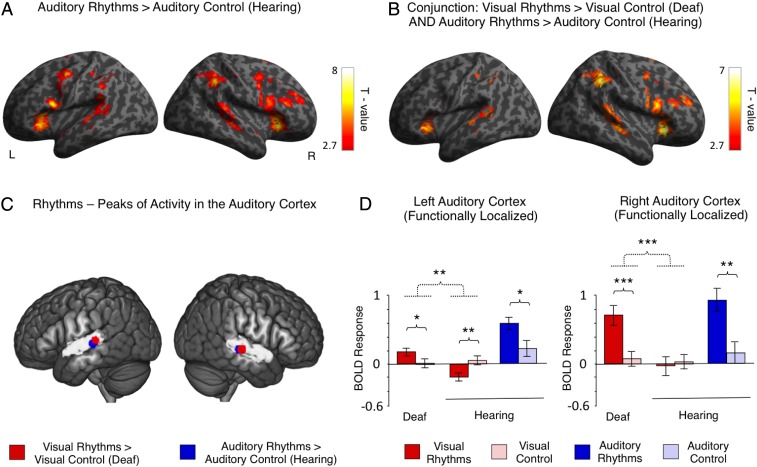

We then asked whether the same auditory areas, independently of sensory modality, are recruited for rhythms in the deaf and the hearing. To this end, we compared activations induced by visual rhythms in the deaf with activations induced by auditory rhythms in the hearing (Methods, fMRI Data Analysis and Fig. 3). Relative to the auditory control, auditory rhythms in hearing subjects activated the superior and middle temporal gyri, including the auditory cortex (right hemisphere: peak MNI = 66, −34, 12, t = 4.78; left hemisphere: peak MNI = −57, −43, 23, t = 4.94) (Fig. 3A), as well as frontal and parietal regions (Fig. 3A and Table S3). The activation in the auditory cortex was constrained mostly to posterior–lateral, high-level auditory areas. Thus, the activation pattern observed in hearing subjects for auditory rhythms was similar to the activations observed in deaf subjects for visual rhythms (Fig. 2A). Next, we used a conjunction analysis (logical AND) (40) to test statistically for regions activated by both visual rhythms in deaf subjects and auditory rhythms in hearing subjects (visual rhythms vs. visual control in deaf subjects AND auditory rhythms vs. auditory control in hearing subjects). The analysis confirmed that activation patterns induced by visual rhythms in the deaf and auditory rhythms in the hearing subjects largely overlapped, despite differences in sensory modality (Fig. 3B and Table S3). In line with previous analyses, statistically significant overlap also was found in the auditory cortex (right hemisphere: peak MNI = 63, −25, −2, t = 4.27; left hemisphere: peak MNI = −63, −28, 1, t = 3.94), confirming that visual rhythms and auditory rhythms recruited the same auditory areas.
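One common way to implement a logical-AND conjunction across two contrasts is a minimum-statistic test: a voxel counts as jointly active only if the smaller of its two t values exceeds the threshold. The sketch below illustrates that idea only. Whether it matches the exact procedure and error model used in this study is an assumption, and the t values, the threshold, and the function name are invented for the example.

```python
import numpy as np


def conjunction_min_stat(t_map_a, t_map_b, t_threshold):
    """Voxelwise logical AND of two contrasts via the minimum statistic.

    Returns the map of minimum t values and a boolean mask of voxels where
    both contrasts exceed t_threshold.
    """
    t_a = np.asarray(t_map_a, dtype=float)
    t_b = np.asarray(t_map_b, dtype=float)
    if t_a.shape != t_b.shape:
        raise ValueError("both maps must be in the same space and shape")
    t_min = np.minimum(t_a, t_b)
    return t_min, t_min > t_threshold


# Invented example: three voxels, hypothetical voxelwise threshold of t > 3.0.
t_visual_deaf = np.array([6.0, 5.0, 1.0])
t_auditory_hearing = np.array([4.5, 1.2, 4.0])
t_conj, conj_mask = conjunction_min_stat(t_visual_deaf, t_auditory_hearing, 3.0)
```

Only the first voxel survives here, because it is the only one that exceeds the threshold in both contrasts; a voxel that is strongly active in just one group is excluded.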

Fig. 3.

The auditory cortex processes rhythm independently of sensory modality. (A) Activations induced by auditory rhythms relative to regular auditory stimulation in hearing subjects. (B) Brain regions that were activated both by visual rhythms relative to regular visual stimulation in deaf subjects and auditory rhythms relative to regular auditory stimulation in hearing subjects (conjunction analysis). (C) Peaks of activation for visual and auditory rhythms in the auditory cortex. Peaks for visual rhythms relative to regular visual stimulation in deaf subjects are illustrated in red. Peaks for auditory rhythms relative to regular auditory stimulation in hearing subjects are depicted in blue. The high-level auditory cortex is illustrated in gray, based on an anatomical atlas. The peaks are visualized as 6-mm spheres. Note the consistency of localization of peaks, even though deaf and hearing subjects performed the task in different sensory modalities. (D) The results of an ROI analysis in which activations in the auditory cortex induced by visual rhythms and auditory rhythms were used as independent localizers for each other. ROIs for comparisons between visual tasks were defined based on activation in the auditory cortex induced by auditory rhythms relative to regular auditory stimulation in hearing subjects. ROIs for comparison between auditory tasks were defined based on visual rhythms vs. regular visual stimulation contrast in deaf subjects. Dotted lines denote interactions. Error bars represent SEM. Thresholds: (A and B) P < 0.005 voxelwise and P < 0.05 clusterwise. (D) *P < 0.05; **P < 0.01; ***P < 0.001.

Table S3.

Activations for auditory rhythms relative to the auditory control task in the hearing subjects and conjunction of activations for auditory rhythms relative to the auditory control task in the hearing subjects and visual rhythms relative to visual control task in the deaf subjects

| Contrast | Region | Brodmann's area | Hemisphere | t Statistic | Cluster size | MNI x | MNI y | MNI z |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Auditory rhythms vs. auditory control in the hearing subjects | | | | | | | | |
| | Posterior–medial frontal gyrus | 6 | Right | 8.82 | 529 | 6 | 5 | 62 |
| | | 6 | Left | 7.79 | | −3 | −1 | 62 |
| | | 8 | Right | 7.43 | | 6 | 14 | 48 |
| | Insula | 13 | Left | 7.87 | 570 | −33 | 23 | 1 |
| | Inferior frontal gyrus | 44 | Left | 6.67 | | −51 | 8 | 19 |
| | Precentral gyrus | 6 | Left | 5.84 | | −48 | −4 | 48 |
| | Cerebellum | NA | Right | 6.96 | 62 | 30 | −58 | −28 |
| | | NA | Right | 2.75 | | 18 | −52 | −24 |
| | Insula | 13 | Right | 6.96 | 810 | 33 | 26 | −2 |
| | Insula | 13 | Right | 6.43 | | 42 | 20 | −2 |
| | Precentral gyrus | 6 | Right | 5.93 | | 51 | 5 | 44 |
| | Supramarginal gyrus | 40 | Right | 6.43 | 258 | 45 | −43 | 41 |
| | Angular gyrus | 39 | Right | 3.96 | | 39 | −58 | 52 |
| | Postcentral gyrus | 1 | Right | 3.14 | | 51 | −28 | 52 |
| | Superior temporal gyrus | 39 | Left | 4.94 | 296 | −57 | −43 | 23 |
| | | 22 | Left | 4.42 | | −60 | −25 | −2 |
| | Middle temporal gyrus | 21 | Left | 4.39 | | −51 | −46 | 8 |
| | Superior temporal gyrus | 22 | Right | 4.78 | 199 | 66 | −34 | 12 |
| | | 22 | Right | 4.76 | | 54 | −22 | −2 |
| | | 22 | Right | 4.67 | | 54 | −34 | 12 |
| Conjunction: (auditory rhythms vs. auditory control in the hearing subjects) AND (visual rhythms vs. visual control in the deaf subjects) | | | | | | | | |
| | Insula | 13 | Right | 6.96 | 421 | 33 | 26 | −2 |
| | Inferior frontal gyrus | 45 | Right | 5.02 | | 48 | 20 | 1 |
| | | 44 | Right | 4.91 | | 51 | 11 | 19 |
| | Posterior–medial frontal gyrus | 8 | Right | 6.87 | 425 | 3 | 20 | 48 |
| | | 6 | Left | 6.55 | | −6 | 2 | 55 |
| | | 8 | Left | 5.85 | | −6 | 17 | 44 |
| | Insula | 13 | Left | 6.73 | 126 | −33 | 23 | −2 |
| | Supramarginal gyrus | 40 | Right | 6.33 | 243 | 45 | −40 | 44 |
| | Angular gyrus | 39 | Right | 3.96 | | 39 | −58 | 52 |
| | Middle frontal gyrus | 9 | Right | 5.36 | 112 | 51 | 32 | 30 |
| | | 10 | Right | 4.32 | | 36 | 44 | 19 |
| | | 9 | Right | 3.98 | | 39 | 38 | 26 |
| | Superior temporal gyrus | 22 | Right | 4.66 | 171 | 66 | −37 | 12 |
| | | 22 | Right | 4.56 | | 48 | −25 | −2 |
| | | 22 | Right | 4.52 | | 60 | −25 | −2 |
| | Middle temporal gyrus | 21 | Left | 4.39 | 88 | −51 | −46 | 8 |
| | | 22 | Left | 4.16 | | −60 | −22 | −2 |
| | | 21 | Left | 3.01 | | −63 | −37 | 8 |
| | Inferior parietal lobule | 40 | Left | 4.27 | 81 | −42 | −46 | 41 |
| | | 7 | Left | 3.82 | | −30 | −49 | 41 |
| | | NA | Left | 3.38 | | −33 | −43 | 34 |

NA, not applicable.

To follow the task-specific hypothesis further, we asked whether activation for visual rhythms and auditory rhythms peaked in the same auditory region. To this aim, in both subject groups we plotted peaks of activation for rhythms within the auditory cortex (deaf subjects: visual rhythms vs. visual control contrast; hearing subjects: auditory rhythms vs. auditory control contrast). In both hemispheres, we found a close overlap in the localization of these peaks in deaf and hearing subjects (∼1-voxel distance from peak to peak), despite the different sensory modality in which the task was performed (Fig. 3C). Activations for both visual rhythms in the deaf and auditory rhythms in the hearing subjects peaked in the posterior and lateral part of the high-level auditory cortex.

To confirm the spatial consistency of activations induced by visual rhythms in the deaf subjects and auditory rhythms in the hearing subjects, we performed a functionally guided ROI analysis in the auditory cortex (Methods, fMRI Data Analysis) (Fig. 3D). In this analysis we used activation for visual rhythms in deaf subjects and activation for auditory rhythms in hearing subjects as independent localizers for each other. Our prediction was that the auditory areas most recruited for rhythm perception in one sensory modality would also be significantly activated by the same task performed in the other modality. In line with this prediction, in ROIs based on auditory rhythms in hearing subjects, we found a bilateral increase in activation for visual rhythms, relative to visual control, in deaf subjects (right hemisphere: P < 0.001; left hemisphere: P = 0.01) (Fig. 3D). The task × group interaction analysis confirmed that this effect was specific only to deaf subjects [visual rhythms vs. visual control × deaf subjects vs. hearing subjects, right hemisphere: F(1, 27) = 17.32, P < 0.001; left hemisphere: F(1, 27) = 13.34, P = 0.001] (Fig. 3D). Similarly, in ROIs defined based on visual rhythms in deaf subjects, we found increased activation for auditory rhythms, relative to auditory control, in hearing subjects (right hemisphere: P = 0.004; left hemisphere: P = 0.049) (Fig. 3D). In summary, this ROI analysis confirmed that in the auditory cortex the activation patterns for visual rhythms in deaf subjects and auditory rhythms in hearing subjects matched each other closely.

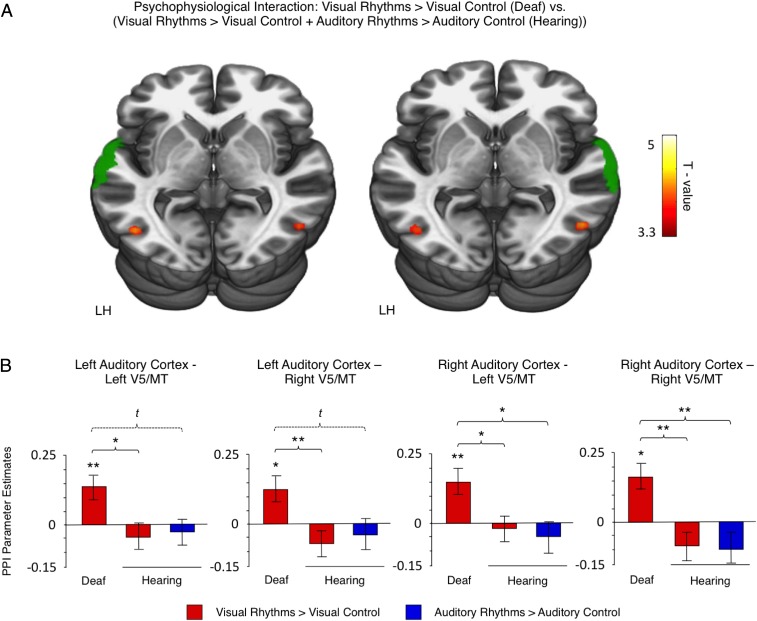

Dynamic visual stimuli are known to be processed in the dorsal visual stream (33). If the auditory cortex in deaf subjects indeed supports visual rhythm processing, one could expect that the connectivity between the dorsal visual stream and the auditory cortex would increase. Thus, in the last analysis, we investigated whether communication between the dorsal visual cortex and the high-level auditory cortex increases when the deaf subjects perform visual rhythm discrimination. To this aim, we measured task-related changes in functional connectivity with a psychophysiological interaction (PPI) analysis (Methods, fMRI Data Analysis). In line with our prediction, when deaf subjects performed visual rhythms, we observed a strengthened functional coupling between the high-level auditory cortex and the dorsal visual stream, namely the V5/MT cortex (Table S4). To determine whether this effect was specific to the deaf subjects, we compared task-related changes in functional connectivity between deaf and hearing subjects [(visual rhythms vs. visual control in deaf subjects) vs. (visual rhythms vs. visual control in hearing subjects + auditory rhythms vs. auditory control in hearing subjects)] (Fig. 4A). In this comparison, we also observed a significant, bilateral effect in the V5/MT cortex (Fig. 4A and Table S4), confirming that functional coupling between the V5/MT cortex and the high-level auditory cortex increased only when deaf subjects performed visual rhythms. This finding was tested further in the ROI analysis based on anatomical masks of V5/MT cortex (Methods, fMRI Data Analysis and Fig. 4B). The ROI analysis confirmed that functional connectivity between the high-level auditory cortex and the V5/MT cortex increased when deaf subjects performed visual rhythms (one-sample t tests, all P < 0.05) (Fig. 4B) and that this increase was specific to deaf subjects (F-tests for the main effects of the group, all P < 0.05; Bonferroni-corrected t tests, all P < 0.05; in addition, two trends are reported, at Bonferroni-corrected significance level of P < 0.1) (Fig. 4B). This result confirms that, indeed, in deaf subjects the auditory cortex takes over visual rhythm processing.

Table S4.

PPI with the high-level auditory cortex as a seed region

| Contrast | Region | Brodmann's area | Hemisphere | t statistic | Cluster size | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|---|---|
| Seed region: the right high-level auditory cortex | | | | | | | | |
| Visual rhythms vs. visual control in the deaf subjects | | | | | | | | |
| | Cerebellum | NA | Left | 5.59 | 26 | −27 | −85 | −17 |
| | Inferior occipital gyrus | 18 | Left | 4.18 | 14 | −21 | −97 | −6 |
| | Calcarine gyrus | 18 | Left | 3.60 | | −12 | −103 | 1 |
| | Inferior occipital gyrus | 19 | Left | 4.05 | 18 | −42 | −73 | 1 |
| | Inferior temporal gyrus | 37 | Right | 3.91 | 7 | 51 | −64 | 1 |
| | Middle occipital gyrus | 18 | Left | 3.64 | 5 | −24 | −97 | 8 |
| Visual rhythms vs. visual control in the hearing subjects | | | | | | | | |
| | Lingual gyrus | 18 | Right | 4.61 | 17 | 9 | −58 | 8 |
| Auditory rhythms vs. auditory control in the hearing subjects | | | | | | | | |
| | Cuneus | 18 | Right | 5.77 | 114 | 12 | −94 | 19 |
| | Calcarine gyrus | 18 | Right | 5.32 | | 15 | −94 | 8 |
| | Middle occipital gyrus | 18 | Right | 4.60 | | 24 | −94 | 12 |
| | Calcarine gyrus | 18 | Left | 4.83 | 50 | −15 | −94 | 5 |
| | Lingual gyrus | 18 | Left | 3.89 | 7 | −9 | −88 | −10 |
| | Calcarine gyrus | 18 | Left | 3.82 | 6 | −3 | −91 | 16 |
| | Cerebellum | NA | Left | 3.73 | 6 | −15 | −79 | −17 |
| (Visual rhythms vs. visual control in the deaf subjects) vs. (visual rhythms vs. visual control in the hearing subjects + auditory rhythms vs. auditory control in the hearing subjects) | | | | | | | | |
| | Inferior temporal gyrus | 37 | Right | 4.79 | 14 | 51 | −64 | 1 |
| | Middle occipital gyrus | 19 | Left | 4.36 | 17 | −42 | −70 | 5 |
| Seed region: the left high-level auditory cortex | | | | | | | | |
| Visual rhythms vs. visual control in the deaf subjects | | | | | | | | |
| | Cerebellum | NA | Left | 6.16 | 32 | −24 | −85 | −17 |
| | Fusiform gyrus | 19 | Left | 3.85 | | −36 | −79 | −13 |
| | Inferior occipital gyrus | 19 | Left | 4.65 | 21 | −45 | −73 | 1 |
| | Inferior temporal gyrus | 37 | Right | 3.96 | 5 | 51 | −67 | 1 |
| Visual rhythms vs. visual control in the hearing subjects | | | | | | | | |
| | Lingual gyrus | 18 | Right | 4.26 | 10 | 12 | −55 | 5 |
| Auditory rhythms vs. auditory control in the hearing subjects | | | | | | | | |
| | Calcarine gyrus | 18 | Left | 4.86 | 34 | −12 | −94 | 5 |
| | Middle occipital gyrus | 18 | Left | 4.22 | | −21 | −94 | 8 |
| | Calcarine gyrus | 18 | Right | 4.08 | 15 | 12 | −94 | 12 |
| (Visual rhythms vs. visual control in the deaf subjects) vs. (visual rhythms vs. visual control in the hearing subjects + auditory rhythms vs. auditory control in the hearing subjects) | | | | | | | | |
| | Inferior temporal gyrus | 19 | Left | 4.59 | 17 | −48 | −70 | −2 |
| | Inferior temporal gyrus | 37 | Right | 4.35 | 9 | 51 | −64 | 1 |

NA, not applicable.

Fig. 4.

Functional connectivity between the auditory cortex and the V5/MT cortex was strengthened when deaf subjects performed visual rhythm discrimination. (A) Visual regions showing increased functional coupling with the high-level auditory cortex during visual rhythm processing relative to visual control in deaf subjects vs. no effect in hearing subjects (PPI analysis, between-group comparison). The analyses for the left and the right auditory cortex were performed separately. The seed regions (depicted in green) were defined based on an anatomical atlas. LH, left hemisphere. (B) The results of an ROI analysis, based on V5/MT anatomical masks. Thresholds: (A) P < 0.001 voxelwise and P < 0.05 clusterwise. The analysis was masked with the visual cortex anatomical mask. (B) t, trend level, P < 0.1; *P < 0.05; **P < 0.01; ***P < 0.001. Error bars represent SEM.

Discussion

In our study, we found that in deaf subjects the auditory cortex was activated when subjects discriminated between temporally complex visual stimuli (visual rhythms) presented in the central visual field. The same auditory areas were activated when hearing subjects performed the task in the auditory modality (auditory rhythms). In both sensory modalities, activation for rhythms peaked in the posterior and lateral part of high-level auditory cortex. In deaf subjects, the task induced a strengthened functional coupling between the auditory cortex and area V5/MT, known for processing dynamic visual stimuli. No such effect was detected in hearing subjects.

Neural plasticity was traditionally thought to be constrained by the brain’s sensory boundaries, so that the visual cortex processes visual stimuli and responds to visual training, the tactile cortex processes tactile stimuli and responds to tactile training, and so on. In contrast to this view, a growing number of studies show instances of large-scale reorganization that overcomes these sensory divisions. This phenomenon is particularly prominent in sensory deprivation. The visual cortex becomes recruited for tactile and auditory perception in the blind (1, 2, 6, 8, 13, 16, 17, 41–43), and the auditory cortex becomes recruited for tactile and visual perception in the deaf (20–23, 26–29, 44). Such large-scale reorganization is also possible after intensive training in nondeprived subjects (5, 12, 45–49). In particular, the ventral visual cortex was shown to be critical for learning tactile braille reading in sighted adults (5), suggesting that such plasticity could be the mechanism underlying complex learning without sensory deprivation.

Although the notion of large-scale reorganization of the brain is well established, the organizational principles governing this process remain unclear. It is known that several areas in the visual cortex preserve their task specificity despite being recruited for different sensory input (9, 10). In blind persons, for example, the ventral visual stream responds to tactile and auditory object recognition (11, 12), tactile and auditory reading (13, 14), and auditory perception of body shapes (15), whereas the dorsal visual stream is activated by auditory motion perception (17) and auditory/tactile spatial processing (16). Cases of task-specific, sensory-independent reorganization of the visual cortex were also shown in nondeprived subjects (5, 12, 47, 49). It is still open, however, whether task-specific plasticity is unique to the visual cortex or applies to other cortical areas as well.

In our study, exactly the same auditory areas were recruited for visual rhythms in the deaf subjects and for auditory rhythms in the hearing subjects. This finding directly confirms the prediction of the task-specific reorganization hypothesis that cross-modal activations in the auditory cortex of deaf humans should match the task-related organization of this region for auditory processing in the hearing. Our study therefore goes beyond the visual cortex and shows that task-specific, sensory-independent reorganization also can occur in the auditory cortex of deaf humans.

So far, the only clear case of task-specific reorganization beyond the visual cortex was demonstrated in the auditory cortex of deaf cats. Meredith et al. (21), in particular, showed that the same auditory area is critical for visual orienting in deaf cats and for auditory orienting in hearing cats. Our study constitutes a clear report of task-specific reorganization in a third system, the human auditory system. Some notable previous studies have hinted at the possibility of task-specific reorganization in the human auditory cortex. Cardin et al. (50), for example, scanned two groups of deaf subjects with different language experience (proficient users of sign language and nonsigners) while they watched sign language. The authors showed that auditory deprivation and language experience affect distinct parts of the auditory cortex, suggesting a degree of task specialization in the auditory cortex of deaf humans. Furthermore, Karns et al. (27) demonstrated a significant overlap in the activations for peripheral visual stimuli and peripheral tactile stimuli in the deaf auditory cortex. However, none of these studies has demonstrated that cross-modal activations in the auditory cortex of deaf humans match the typical division of labor for auditory processing in hearing persons. Studies on sign language have shown that sign language in the deaf and spoken language in the hearing induce similar patterns of activation in the auditory cortex (51–53). In these studies, however, it is hard to dissociate the cross-modal, perceptual component of activations induced by sign language from top-down, semantic influences. In our study, we used the same, nonsemantic task in both groups of subjects and rigorously controlled for between-group and between-modality differences in behavioral performance. By these means, we were able to gather clear-cut evidence for task-specific reorganization in the human auditory cortex. Our findings show that preserving the functional role of recruited areas while switching their sensory input is a general principle, valid in many species and cortical regions.

In line with the task-specific reorganization hypothesis, our results show that specific auditory regions in deaf persons are particularly engaged in visual rhythm processing. First, visual rhythms did not activate the primary auditory cortex (Fig. 2F). Second, activation for this task in deaf subjects was constrained mostly to the posterior and lateral part of high-level auditory cortex (Fig. 2A), and the same auditory areas were activated by auditory rhythm processing in hearing subjects (Fig. 3 A–C). Several studies show that the posterior part of the high-level auditory cortex is involved in processing temporally complex sounds (54, 55), including music (56). This region is considered part of the postero-dorsal auditory stream involved in spatial and temporal processing of sounds (57). Our results are congruent with these reports. In addition, our data show that, like the high-level visual areas, the posterior part of the high-level auditory cortex can process complex, temporal information independently of sensory modality.

It is well established that the auditory cortex becomes engaged in peripheral visual processing in the deaf (20, 24), and a number of experiments have demonstrated activations of this region for peripheral visual localization or peripheral motion detection tasks (25–27, 29). These peripheral visual functions are enhanced in deaf people (58–62) and deaf cats (20). In contrast, examples of the deaf auditory cortex involvement in central visual perception are scarce and are constrained to high-level processing, namely sign language comprehension (50–53, 63). In the case of simple, nonsemantic tasks, only one study has shown that motion detection in the central visual field can induce activations in the auditory cortex of deaf subjects (36). At the same time, several experiments found no behavioral enhancements for central visual stimuli either in deaf people (58, 59) or in deaf cats (20). In our experiment, visual rhythms recruited the auditory cortex in deaf subjects even though the task was presented in the foveal visual field (Fig. 2). Although rhythmic, temporally complex patterns can be coded in various sensory modalities (e.g., see refs. 32 and 64), such stimuli are processed most efficiently in the auditory domain (30–32) (also Results, Behavioral Results and Fig. 1C). These results show that even central visual perception can be supported by the deaf person’s auditory cortex as long as the visual function performed matches the typical, functional repertoire of this region in hearing persons.

The connectivity that supports large-scale reorganization of the brain remains to be described. It was proposed that such a change might be supported either by the formation of new neural connections or by the “unmasking” of cross-modal input in the existing pathways (2). In line with the latter hypothesis, recent studies, using injections of retrograde tracers, show that the connectivity of the auditory cortex in deaf cats is essentially the same as in hearing animals (65–67). This finding suggests that in cats the large-scale reorganization of the brain uses pathways that are formed during typical development. Several studies show that the same might hold true in humans. Instances of cross-modal reorganization of the human visual cortex were reported in nondeprived, sighted subjects after only several days or hours of training (46, 47, 49, 68). Because such a period is perhaps too short for new pathways to be established, the existing connectivity between sensory cortices must have been used in these cases. One particular pathway that was altered in our deaf subjects, relative to hearing subjects, is the pathway linking high-level auditory cortex with the V5/MT area in the dorsal visual stream (Fig. 4). The latter region is known to support motion processing, irrespective of the sensory modality of input (17, 69, 70). Cross-modal activations of the V5/MT area by both moving and static auditory stimuli were previously observed in blind persons (17, 71–73). Increased activation of this region relative to hearing subjects was also observed in deaf subjects during their perception of peripheral visual motion (74), a function that is known to be supported by the auditory cortex in the deaf (25, 26, 36). Our results concur with these studies and suggest that the V5/MT area might support increased, general-purpose communication between the visual and auditory cortices in blindness or deafness.

Finally, our results contribute to a recent debate about the extent to which sensory cortices preserve their large-scale organization in congenitally deprived subjects. In the congenitally blind, the large-scale division of the visual cortex into the dorsal stream and the ventral stream was shown to be comparable to that in nondeprived subjects (75). A preserved topographic organization was found even in the primary visual cortex of blind people (76). Results from the auditory cortex are less conclusive. In deaf cats, the localization of several auditory areas is shifted, suggesting significant topographic plasticity in deafness (77). On the other hand, a recent resting-state human functional connectivity study suggests that the large-scale topography of auditory cortex in deaf humans is virtually the same as in those who hear (78). Our study complements the latter result and shows preserved functional specialization in the auditory cortex of congenitally deaf humans. These findings suggest that the large-scale organization of human sensory cortices is robust and develops even without sensory experience in a given modality. Of course, one cannot exclude the possibility that some shifts occur in the organization of sensory cortices in sensory-deprived humans. Indeed, one can speculate that prolonged sensory deprivation could lead to the expansion of sensory areas subserving supramodal tasks (i.e., tasks that can be performed in many sensory modalities, for example object recognition) and to the shrinkage of areas involved in tasks that are largely specific to one sense (for example, color or pitch perception).

In conclusion, we demonstrate that the auditory cortex preserves its task specificity in deaf humans despite switching to a different sensory modality. Task-specific, sensory-independent reorganization (10) is well documented in the visual cortex, and our study directly confirms that similar rules might guide plasticity in the human auditory cortex. Our results suggest that switching the sensory but not the functional role of recruited areas might be a general principle that guides large-scale reorganization of the brain.

Methods

Subjects.

Fifteen congenitally deaf adults (10 females, mean age ± SD = 27.6 ± 4.51 y, average length of education ± SD = 13.5 ± 2.1 y) and 15 hearing adults (10 females, mean age ± SD = 26.2 ± 6.09 y, average length of education ± SD = 13.7 ± 2.0 y) participated in the visual part of the experiment. Eleven of the 15 originally recruited hearing subjects participated in the auditory part of the experiment (eight females, mean age ± SD = 26.5 ± 5.4 y, average length of education ± SD = 14 ± 2.04 y); the four remaining subjects declined to undergo an fMRI scan for a second time. The deaf and the hearing subjects were matched for sex, age, and years of education (all P > 0.25). All subjects were right-handed, had normal or corrected-to-normal vision, and had no neurological deficits. Hearing subjects had no known hearing deficits and reported normal comprehension of speech in everyday situations, which was confirmed in interactions with experimenters even in the context of noise from the MRI system. In deaf subjects, the mean hearing loss, as determined by a standard pure-tone audiometry procedure, was 98 dB for the left ear (range, 70–120 dB) and 103 dB for the right ear (range, 60–120 dB). The causes of deafness were either genetic (hereditary deafness) or pregnancy-related (i.e., maternal disease or drug side effects). All deaf subjects reported being deaf from birth. The majority of them had used hearing aids in the past or used them at the time of testing. However, the subjects’ speech comprehension with a hearing aid varied from poor to very good (Table S1). All deaf subjects were proficient in Polish Sign Language (PJM) at the time of the experiment. Detailed characteristics of each deaf subject can be found in Table S1.

The research described in this article was approved by the Committee for Research Ethics of the Institute of Psychology of the Jagiellonian University. Informed consent was obtained from each participant in accord with best-practice guidelines for MRI research.

fMRI Experiment.

The experimental task (rhythm discrimination) (Fig. 1A) was adapted from Saenz and Koch (32). Deaf subjects performed the task in the visual modality, and hearing subjects performed the task in both visual and auditory modalities (Fig. 1B). In the visual version of the task (visual rhythms), subjects were presented with small, bright, flashing circles shown on a dark gray background (diameter: 3°; mean display luminance: 68 cd/m²). The flashes were presented foveally on a 32-inch LCD monitor (60-Hz refresh rate). During fMRI, subjects viewed the monitor via a mirror. In the auditory version of the task (auditory rhythms), tonal beeps (360 Hz, ∼60 dB) were delivered binaurally to subjects via MRI-compatible headphones.

Subjects were presented with pairs of sequences that were composed of a similar number of flashes/beeps of short (50-ms) and long (200-ms) duration, separated by 50- to 150-ms intervals (see the adaptive staircase procedure described below). The sequences presented in each pair either were identical (e.g., short-long-short-long-short-long) or the second sequence was a permutation of the first (e.g., short-long-short-long-short-long vs. short-short-long-long-long-short). The second sequence was presented 2 s after the offset of the first sequence. The subjects were asked to judge whether the two sequences in a pair were the same or different and to indicate their choice by pressing one of two response buttons (the left button for identical sequences, and the right button for different sequences). The responses were given after each pair, within 2 s of the end of presentation, and this time window was indicated by a question mark displayed in the center of the screen. In the control condition (Fig. 1A), the same flashes/beeps were presented at a constant pace (50 ms separated by 150-ms blank intervals), and subjects were asked to watch/listen to them passively. Stimulus presentation and data collection were controlled using Presentation software (Neurobehavioral Systems).
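As an illustration only, the construction of a same/different pair can be sketched in Python. This is not the Presentation script used in the study. The function names, the even split between short and long items, and the shuffling method are assumptions made for the example; the text states only that the numbers of short and long items were similar and that a "different" second sequence was a permutation of the first.

```python
import random

SHORT, LONG = "short", "long"  # 50-ms and 200-ms items in the text


def make_sequence(length, rng):
    """Build a randomly ordered sequence with a near-equal number of
    short and long items (the exact split is an assumption)."""
    if length < 2:
        raise ValueError("need at least one short and one long item")
    items = [SHORT] * (length // 2) + [LONG] * (length - length // 2)
    rng.shuffle(items)
    return items


def make_pair(length, same, rng):
    """Return (first, second). The second sequence is either a copy of
    the first or a reordering of it that differs in at least one position."""
    first = make_sequence(length, rng)
    second = list(first)
    if not same:
        # Reshuffle until the order actually differs from the first sequence.
        while second == first:
            rng.shuffle(second)
    return first, second


rng = random.Random(0)  # fixed seed so the example is repeatable
first, second = make_pair(6, same=False, rng=rng)
```

A "different" pair built this way always contains the same items as the first sequence, so the two sequences can be told apart only by their temporal order.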

Subjects were presented with seven experimental blocks and three control blocks. Each experimental block was composed of three pairs of sequences. The total duration of each block was 28 s. Blocks were separated by 8-, 10-, or 12-s rest periods. For hearing subjects, the visual and auditory parts of the experiment were performed in separate runs. In both sensory modalities, subjects were instructed to keep their eyes open and to stare at the center of the screen.
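The block structure above fixes the number of discrimination trials per run. A small Python sketch of the arithmetic follows. The trial count follows directly from the stated numbers; the run-duration bounds rest on the assumption, not stated in the text, that rest periods occur only between consecutive blocks.

```python
# Values taken from the text.
N_EXPERIMENTAL_BLOCKS = 7
N_CONTROL_BLOCKS = 3
PAIRS_PER_EXPERIMENTAL_BLOCK = 3
BLOCK_DURATION_S = 28
REST_DURATIONS_S = (8, 10, 12)


def trials_per_run():
    """Number of same/different judgments in one run."""
    return N_EXPERIMENTAL_BLOCKS * PAIRS_PER_EXPERIMENTAL_BLOCK


def run_duration_bounds_s():
    """Shortest and longest possible run, in seconds.

    Assumption: one rest period between each pair of consecutive blocks,
    with no rest before the first block or after the last one.
    """
    n_blocks = N_EXPERIMENTAL_BLOCKS + N_CONTROL_BLOCKS
    n_rests = n_blocks - 1
    task_time = n_blocks * BLOCK_DURATION_S
    return (task_time + n_rests * min(REST_DURATIONS_S),
            task_time + n_rests * max(REST_DURATIONS_S))
```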

Adaptive Staircase Procedure.

To ensure that different levels of behavioral performance did not induce between-group and between-modality differences in neural activity, an adaptive staircase procedure was applied before the fMRI experiment (Fig. 1B). In this procedure, the difficulty of the upcoming fMRI task was matched to an individual subject’s performance.

Deaf subjects performed the staircase procedure for the visual modality, and hearing subjects performed it for both the visual and the auditory modalities. The procedure was performed in front of a computer screen (60-Hz refresh rate). Visual stimuli were presented foveally. Their luminance and size were identical to those used in the fMRI experiment. Auditory stimuli were presented with the same parameters as in the fMRI experiment, using headphones. Responses were recorded using a computer mouse (the left button for identical sequences and the right button for different sequences).

Subjects began an experimental task (i.e., visual/auditory rhythm discrimination) with sequences of six flashes/beeps. The length of the sequences and the pace of presentation were then adjusted to the individual subject’s performance. After a correct answer, the length of a sequence increased by one item; after an incorrect answer the length of a sequence decreased by two items. The lower limit of sequence length was six flashes/beeps, and the upper limit was 17. The pace of presentation was manipulated accordingly by increasing or decreasing the duration of blank intervals between flashes/beeps (six to eight flashes/beeps in the sequence: 150 ms; 9–11 flashes/beeps in the sequence: 100 ms; 12 flashes/beeps in the sequence: 80 ms; 13–17 flashes/beeps in the sequence: 50 ms). This staircase procedure ended after six reversals between correct and incorrect answers. The average length of sequences at these six reversal points was used to set individual length and pace of sequences presented in the fMRI experiment.
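The staircase rule described above can be sketched in Python as follows. This is a minimal illustration, not the code used in the study. The step sizes, limits, interval table, and six-reversal stopping rule come from the text. Two details are assumptions: a reversal is counted on the trial where the answer switches between correct and incorrect, and the "length at a reversal point" is the length presented on that trial.

```python
LOWER_LIMIT, UPPER_LIMIT = 6, 17  # sequence-length limits from the text


def blank_interval_ms(length):
    """Blank interval between items for a given sequence length."""
    if 6 <= length <= 8:
        return 150
    if 9 <= length <= 11:
        return 100
    if length == 12:
        return 80
    if 13 <= length <= 17:
        return 50
    raise ValueError("sequence length outside the 6-17 range")


def run_staircase(answers, start=6, n_reversals=6):
    """Apply the 1-up/2-down rule to a series of answers (True = correct).

    Returns (mean length at the reversal points, list of those lengths).
    """
    length = start
    previous = None
    reversal_lengths = []
    for correct in answers:
        # Assumption: a reversal is a switch between correct and incorrect.
        if previous is not None and correct != previous:
            reversal_lengths.append(length)
            if len(reversal_lengths) == n_reversals:
                break
        previous = correct
        step = 1 if correct else -2
        length = max(LOWER_LIMIT, min(UPPER_LIMIT, length + step))
    if len(reversal_lengths) < n_reversals:
        raise ValueError("not enough reversals in the supplied answers")
    return sum(reversal_lengths) / n_reversals, reversal_lengths


# Hypothetical answer series, for illustration only.
answers = [True, True, True, False, True, False,
           True, True, False, True, False]
mean_length, lengths = run_staircase(answers)
```

Because an error costs two items and a correct answer gains only one, the rule drifts toward shorter sequences unless correct answers clearly outnumber errors. How the averaged length was rounded before the fMRI session is not stated in the text, so the sketch returns the unrounded mean.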

Sequences presented in both the adaptive staircase procedure and the fMRI experiment were randomly generated, separately for each subject and sensory modality. It is highly unlikely that exactly the same pairs of sequences were presented twice to any one subject. Thus, no priming effects were expected in our data. The hearing subjects performed all experimental procedures twice (i.e., in the visual modality and the auditory modality), and some between-modality learning effects cannot be fully excluded. However, the amount of training received by the subjects in each sensory modality was relatively small (i.e., 20–30 trials in the training and the staircase procedure; 21 trials in the fMRI experiment). It is unlikely that such an amount of practice would account for very pronounced between-modality differences in behavioral performance or significantly altered brain activity in the hearing subjects.

Outline of the Experimental Session.

At the beginning of the experimental session, each deaf subject was interviewed, and detailed characteristics of his/her deafness, language experience, and the use of hearing aids were obtained (Table S1). The subjects were familiarized with the tasks and completed a short training session. Then, the adaptive staircase procedure was performed. Deaf subjects performed the staircase procedure visually, and the hearing subjects performed it in the visual modality and then in the auditory modality. After the end of the staircase procedure, subjects were familiarized with the fMRI environment, and a short training session was performed inside the fMRI scanner. Finally, the actual fMRI experiment was performed, with individual length of the sequences applied, as determined by the staircase procedure. In the hearing subjects, the visual version of the experiment was followed by the auditory version of the experiment, performed after a short break.

fMRI Data Acquisition.

Data were acquired on a Siemens MAGNETOM Tim Trio 3T scanner using a 32-channel head coil. We used a gradient-echo planar imaging (EPI) sequence sensitive to blood oxygen level-dependent (BOLD) contrast [33 contiguous axial slices; phase encoding direction, posterior–anterior; slice thickness = 3.6 mm; repetition time (TR) = 2,190 ms; flip angle = 90°; echo time (TE) = 30 ms; base resolution = 64; phase resolution = 200; matrix = 64 × 64; no integrated parallel imaging techniques (iPAT)]. For anatomical reference and spatial normalization, T1-weighted images were acquired using a magnetization-prepared rapid acquisition gradient echo (MPRAGE) sequence (176 slices; field of view = 256; TR = 2,530 ms; TE = 3.32 ms; voxel size = 1 × 1 × 1 mm).

Behavioral Data Analysis.

Behavioral data analysis was performed using SPSS 22 (SPSS Inc.). Two dependent variables were analyzed. The first variable was the output of the adaptive staircase procedure, i.e., the number of visual flashes/auditory beeps presented in each sequence in each experimental trial in the fMRI experiment (Fig. 1C, Left; shorter sequences are easier, and longer sequences are more difficult). The second variable was the subject’s performance in the fMRI experiment, i.e., the number of correct same/different decisions (Fig. 1C, Right). In both cases, three comparisons were made: (i) visual rhythms in deaf subjects vs. visual rhythms in hearing subjects; (ii) visual rhythms in the deaf subjects vs. auditory rhythms in the hearing subjects; and (iii) visual rhythms in the hearing subjects vs. auditory rhythms in the hearing subjects. The first two comparisons were made using two-sample t tests. The third comparison was made using a paired t test. Bonferroni correction was applied to account for multiple comparisons.
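For readers less familiar with the correction, Bonferroni adjustment over the three planned comparisons can be sketched in Python as below. The analysis itself was run in SPSS. The function name and the example P values are hypothetical and are not results from this study.

```python
def bonferroni(p_values):
    """Multiply each P value by the number of comparisons, capped at 1.

    An adjusted P value below the nominal alpha (e.g., 0.05) is equivalent
    to testing the raw P value against alpha divided by the number of tests.
    """
    n_tests = len(p_values)
    return [min(1.0, p * n_tests) for p in p_values]


# Hypothetical raw P values for comparisons (i), (ii), and (iii).
raw = [0.01, 0.04, 0.50]
adjusted = bonferroni(raw)
```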

Our task was designed for accuracy-level measurements and is not suitable for reaction time analysis. In particular, our adaptive staircase procedure was designed to obtain task performance in the 60–70% range. At this performance level, potential differences in reaction time, even if detectable, would be very problematic to interpret.

fMRI Data Analysis.

The fMRI data were analyzed using SPM 8 software (www.fil.ion.ucl.ac.uk/spm/software/spm8/). Data preprocessing included (i) slice acquisition time correction; (ii) realignment of all EPI images to the first image; (iii) coregistration of the anatomical image to the mean EPI image; (iv) segmentation of the coregistered anatomical image (using the new segment SPM 8 option); (v) normalization of all images to MNI space; and (vi) spatial smoothing (5 mm FWHM). The signal time course for each subject was modeled within a general linear model (79) derived by convolving a canonical hemodynamic response function with the time series of the experimental stimulus categories (experimental task, control task, response period) and six estimated movement parameters as regressors. For hearing subjects, separate models were created for the visual and auditory versions of the task. Individual contrast images then were computed for each experimental stimulus category vs. the rest period. These images were subsequently entered into an ANOVA model for random-effect group analysis. Two group models were created, one to compare activations induced by the visual version of the task in deaf and hearing subjects and the second to compare activations induced by the visual version of the task in deaf subjects and the auditory version of the task in hearing subjects. In all contrasts, we applied a voxelwise threshold of P < 0.005, corrected for multiple comparisons across the whole brain using Monte Carlo simulation, as implemented in the REST toolbox (80). A probabilistic cytoarchitectonic atlas of the human brain, as implemented in the SPM Anatomy toolbox (38), was used to support the precise localization of the observed effects. The atlas contains masks of area Te 3, which corresponds to human high-level auditory cortex (34), and areas Te 1.0, Te 1.1, and Te 1.2, which correspond to the primary-like, core auditory areas (35, 81, 82).

Two maps representing the consistency of activations across single subjects were created: One represents the overlap in activations for visual rhythms vs. visual control in deaf subjects (Fig. 2D), and the second represents the overlap in activations for auditory rhythms vs. auditory control in hearing subjects (Fig. S1). In both cases, single-subject activation maps were assigned a statistical threshold of P = 0.05, corrected for multiple comparisons across the whole brain using Monte Carlo simulation. These maps then were binarized (i.e., each voxel that survived the threshold was assigned a value of 1, and all other voxels were assigned a value of 0) and added to each other. Finally, the resulting images were divided by the number of subjects in the respective groups (i.e., 15 in the case of visual rhythms in the deaf subjects and 11 in the case of auditory rhythms in the hearing). Thus, for each voxel in the brain, the final maps represent the proportion of subjects who exhibited activation.
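The construction of these overlap maps can be sketched in Python. This is an illustration under simplifying assumptions: each subject's thresholded map is represented as a flat list with one entry per voxel (truthy if the voxel survived the threshold), and the function name is hypothetical. The thresholding itself is not reproduced here.

```python
def overlap_map(subject_maps):
    """Return, for each voxel, the proportion of subjects showing activation.

    subject_maps: one list per subject, one entry per voxel, truthy if the
    voxel survived that subject's statistical threshold.
    """
    n_subjects = len(subject_maps)
    n_voxels = len(subject_maps[0])
    counts = [0] * n_voxels
    for subject_map in subject_maps:
        if len(subject_map) != n_voxels:
            raise ValueError("all maps must cover the same voxels")
        for voxel, survived in enumerate(subject_map):
            counts[voxel] += 1 if survived else 0  # binarize, then sum
    return [count / n_subjects for count in counts]


# Toy example: four subjects, three voxels.
toy_maps = [[1, 0, 1], [1, 0, 0], [1, 1, 0], [1, 0, 0]]
proportions = overlap_map(toy_maps)
```

Dividing by the group size makes the two maps comparable even though the groups differ in size (15 deaf subjects vs. 11 hearing subjects in the auditory task).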

Two ROI analyses were performed. In the first analysis, probabilistic cytoarchitectonic maps of the auditory cortex were used, as implemented in the Anatomy SPM toolbox (38). Two ROIs were tested in each hemisphere: the primary auditory cortex [the combined Te 1.0, Te 1.1, and Te 1.2 masks (35)] and the high-level auditory cortex [the Te 3 mask (34)]. We did not use an external auditory localizer for ROI definition in the hearing group (83) because such a method is not feasible in the case of deaf subjects. Application of different methods of ROI definition in the deaf and the hearing groups would lead to a bias in between-group comparisons. In the second, functionally guided ROI analysis, visual and auditory versions of the task were used as localizers independent of each other. First, the 50 most significant voxels were extracted from auditory rhythms vs. auditory control contrast in hearing subjects, masked with the auditory cortex mask (the primary auditory cortex and the high-level auditory cortex masks combined; see above). These voxels then were used as an ROI for comparisons between visual stimulus categories in deaf and hearing subjects. Subsequently, the same procedure was applied to extract the 50 most significant voxels from the visual rhythms vs. visual control contrast in deaf subjects. These voxels then were used as an ROI for comparisons between auditory stimulus categories in hearing subjects. In both ROI analyses, all statistical tests were corrected for multiple comparisons using Bonferroni correction.
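The voxel-selection step of the functionally guided ROI analysis can be sketched in Python. This is a simplified illustration, not the SPM procedure used in the study. It assumes that "most significant" means the highest statistic value in the localizer contrast, and it treats the statistic map and the anatomical mask as flat per-voxel lists. The function names are hypothetical.

```python
def top_voxels(stat_values, mask, n=50):
    """Indices of the n voxels with the highest localizer statistic,
    restricted to voxels inside the anatomical mask."""
    inside = [i for i, in_mask in enumerate(mask) if in_mask]
    inside.sort(key=lambda i: stat_values[i], reverse=True)
    return sorted(inside[:n])


def roi_mean(values, roi):
    """Mean of an independent measure (e.g., a contrast estimate from the
    other group or modality) over the ROI voxels."""
    return sum(values[i] for i in roi) / len(roi)


# Toy example with five voxels; voxel 3 lies outside the mask.
localizer_stats = [0.5, 3.0, 2.0, 4.0, 1.0]
auditory_mask = [True, True, True, False, True]
roi = top_voxels(localizer_stats, auditory_mask, n=2)
```

The ROI is defined from one data set (for example, auditory rhythms in hearing subjects) and evaluated on another (visual rhythms in deaf subjects), so the selection cannot inflate the tested effect.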

Finally, the PPI analysis was used to investigate task-specific changes in functional connectivity between the auditory cortex and the visual cortex (84, 85). In all subjects, we investigated changes in functional connectivity of the auditory cortex when they performed visual rhythms, relative to visual control. Additionally, changes in functional connectivity of the auditory cortex during auditory rhythms, relative to auditory control, were tested in hearing subjects. Because visual rhythms were expected to induce different activation patterns in deaf and hearing subjects, defining individual seeds might have resulted in including different functional regions in the PPI analysis in deaf and hearing subjects, leading to a biased between-group comparison. Therefore, the same anatomically defined mask of the high-level auditory cortex (34) was used as a seed region in all subjects and in both sensory modalities. A general linear model was created for each subject that included the time series of the following regressors: (i) a vector coding whether the subject performed the experimental or control task (the psychological regressor), convolved with the canonical hemodynamic response function; (ii) the signal time course from the seed region (the physiological regressor); (iii) the interaction term of the two former regressors (the psychophysiological interaction regressor); and (iv) six estimated movement parameters. Thus, the psychophysiological interaction regressor in this model explained only the variance above that explained by the main effect of the task or by simple physiological correlation (85). One-sample t tests were used to compute individual contrast images for the psychophysiological interaction regressor, and these images were subsequently entered into a random-effect group analysis.
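The structure of the subject-level PPI model can be illustrated with a simplified sketch. It assumes that the psychological regressor has already been convolved and that the interaction term is the element-wise product of the mean-centered psychological and physiological regressors; SPM-style implementations typically form the interaction from a deconvolved seed signal, which this sketch does not attempt. The function name `build_ppi_design` and the toy values are hypothetical.

```python
from typing import List, Sequence


def mean_center(x: Sequence[float]) -> List[float]:
    """Subtract the mean so that the product term is not dominated by offsets."""
    mean = sum(x) / len(x)
    return [value - mean for value in x]


def build_ppi_design(psych: Sequence[float],
                     seed: Sequence[float],
                     motion: Sequence[Sequence[float]]) -> List[List[float]]:
    """Return one design-matrix row per scan.

    Columns: psychological, physiological, interaction (PPI),
    the movement parameters, and a constant term.
    """
    n_scans = len(psych)
    if len(seed) != n_scans or len(motion) != n_scans:
        raise ValueError("all regressors must cover the same number of scans")

    # Simplified interaction term (an assumption of this sketch): product of
    # the mean-centered psychological and physiological regressors.
    ppi = [p * s for p, s in zip(mean_center(psych), mean_center(seed))]

    return [
        [psych[t], seed[t], ppi[t], *motion[t], 1.0]
        for t in range(n_scans)
    ]


# Toy data (hypothetical): 4 scans, task coded +1 / -1, six motion parameters.
toy_psych = [1.0, 1.0, -1.0, -1.0]
toy_seed = [2.0, 4.0, 6.0, 8.0]
toy_motion = [[0.0] * 6 for _ in range(4)]

design = build_ppi_design(toy_psych, toy_seed, toy_motion)
ppi_column = [row[2] for row in design]
```

Because the psychological and physiological regressors enter the model alongside their product, the coefficient of the interaction column reflects only variance that neither main effect accounts for.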

The aim of the PPI analysis was to search for increases in functional connectivity between the dorsal visual stream and the auditory cortex during visual rhythm processing in deaf subjects. Thus, the group analysis was masked with the broad visual cortex mask (V1, V2, V3, V4, and V5/MT bilateral masks combined, as implemented in the Anatomy SPM toolbox) (86–89) and thresholded at P < 0.001 voxelwise, corrected for multiple comparisons across this mask using Monte Carlo simulation. Several studies show that the deaf have a superior ability to detect visual motion, raising the possibility that in deafness the V5/MT cortex is preferentially connected with the auditory cortex (20, 59, 60). Thus, the V5/MT anatomical mask (89) was used as an ROI in which we compared PPI parameter estimates for each subject group and each sensory modality. All statistical tests were corrected for multiple comparisons using Bonferroni correction.

Acknowledgments

We thank Paweł Boguszewski, Karolina Dukała, Richard Frackowiak, Bernard Kinow, Maciej Szul, and Marek Wypych for help. This work was supported by National Science Centre Poland Grant 2015/19/B/HS6/01256, European Union Seventh Framework Programme Marie Curie Career Reintegration Grant CIG 618347 “METABRAILLE,” and funds from the Polish Ministry of Science and Higher Education for co-financing of international projects, in the years 2013–2017 (to M.S.). Ł.B., K.J., and A.M. were supported by National Science Centre Poland Grants 2014/15/N/HS6/04184 and 2012/05/E/HS6/03538 (to Ł.B.) and 2014/14/M/HS6/00918 (to K.J. and A.M.). P.M. and P.R. were supported within the National Programme for the Development of Humanities of the Polish Ministry of Science and Higher Education Grant 0111/NPRH3/H12/82/2014. The study was conducted with the aid of Center of Preclinical Research and Technology research infrastructure purchased with funds from the European Regional Development Fund as part of the Innovative Economy Operational Programme, 2007–2013.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission. J.P.R. is a Guest Editor invited by the Editorial Board.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1609000114/-/DCSupplemental.

References

- 1. Hyvärinen J, Carlson S, Hyvärinen L. Early visual deprivation alters modality of neuronal responses in area 19 of monkey cortex. Neurosci Lett. 1981;26(3):239–243. doi: 10.1016/0304-3940(81)90139-7.

- 2. Rauschecker JP. Compensatory plasticity and sensory substitution in the cerebral cortex. Trends Neurosci. 1995;18(1):36–43. doi: 10.1016/0166-2236(95)93948-w.

- 3. Sur M, Garraghty PE, Roe AW. Experimentally induced visual projections into auditory thalamus and cortex. Science. 1988;242(4884):1437–1441. doi: 10.1126/science.2462279.

- 4. Merabet LB, Pascual-Leone A. Neural reorganization following sensory loss: The opportunity of change. Nat Rev Neurosci. 2010;11(1):44–52. doi: 10.1038/nrn2758.

- 5. Siuda-Krzywicka K, et al. Massive cortical reorganization in sighted Braille readers. eLife. 2016;5:e10762. doi: 10.7554/eLife.10762.

- 6. Rauschecker JP, Korte M. Auditory compensation for early blindness in cat cerebral cortex. J Neurosci. 1993;13(10):4538–4548. doi: 10.1523/JNEUROSCI.13-10-04538.1993.

- 7. Bavelier D, Neville HJ. Cross-modal plasticity: Where and how? Nat Rev Neurosci. 2002;3(6):443–452. doi: 10.1038/nrn848.

- 8. Kahn DM, Krubitzer L. Massive cross-modal cortical plasticity and the emergence of a new cortical area in developmentally blind mammals. Proc Natl Acad Sci USA. 2002;99(17):11429–11434. doi: 10.1073/pnas.162342799.

- 9. Pascual-Leone A, Hamilton R. The metamodal organization of the brain. Prog Brain Res. 2001;134:427–445. doi: 10.1016/s0079-6123(01)34028-1.

- 10. Heimler B, Striem-Amit E, Amedi A. Origins of task-specific sensory-independent organization in the visual and auditory brain: Neuroscience evidence, open questions and clinical implications. Curr Opin Neurobiol. 2015;35:169–177. doi: 10.1016/j.conb.2015.09.001.

- 11. Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 2001;4(3):324–330. doi: 10.1038/85201.

- 12. Amedi A, et al. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nat Neurosci. 2007;10(6):687–689. doi: 10.1038/nn1912.

- 13. Reich L, Szwed M, Cohen L, Amedi A. A ventral visual stream reading center independent of visual experience. Curr Biol. 2011;21(5):363–368. doi: 10.1016/j.cub.2011.01.040.

- 14. Striem-Amit E, Cohen L, Dehaene S, Amedi A. Reading with sounds: Sensory substitution selectively activates the visual word form area in the blind. Neuron. 2012;76(3):640–652. doi: 10.1016/j.neuron.2012.08.026.

- 15. Striem-Amit E, Amedi A. Visual cortex extrastriate body-selective area activation in congenitally blind people “seeing” by using sounds. Curr Biol. 2014;24(6):687–692. doi: 10.1016/j.cub.2014.02.010.

- 16. Renier LA, et al. Preserved functional specialization for spatial processing in the middle occipital gyrus of the early blind. Neuron. 2010;68(1):138–148. doi: 10.1016/j.neuron.2010.09.021.

- 17. Poirier C, et al. Auditory motion perception activates visual motion areas in early blind subjects. Neuroimage. 2006;31(1):279–285. doi: 10.1016/j.neuroimage.2005.11.036.

- 18. Heimler B, Weisz N, Collignon O. Revisiting the adaptive and maladaptive effects of crossmodal plasticity. Neuroscience. 2014;283:44–63. doi: 10.1016/j.neuroscience.2014.08.003.

- 19. Pavani F, Röder B. Crossmodal Plasticity as a Consequence of Sensory Loss: Insights from Blindness and Deafness. In: Stein BE, editor. The New Handbook of Multisensory Processing. The MIT Press; Boston: 2012. pp. 737–759.

- 20. Lomber SG, Meredith MA, Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat Neurosci. 2010;13(11):1421–1427. doi: 10.1038/nn.2653.

- 21. Meredith MA, et al. Crossmodal reorganization in the early deaf switches sensory, but not behavioral roles of auditory cortex. Proc Natl Acad Sci USA. 2011;108(21):8856–8861. doi: 10.1073/pnas.1018519108.

- 22. Meredith MA, Lomber SG. Somatosensory and visual crossmodal plasticity in the anterior auditory field of early-deaf cats. Hear Res. 2011;280(1-2):38–47. doi: 10.1016/j.heares.2011.02.004.

- 23. Auer ET, Jr, Bernstein LE, Sungkarat W, Singh M. Vibrotactile activation of the auditory cortices in deaf versus hearing adults. Neuroreport. 2007;18(7):645–648. doi: 10.1097/WNR.0b013e3280d943b9.

- 24. Bavelier D, Dye MWG, Hauser PC. Do deaf individuals see better? Trends Cogn Sci. 2006;10(11):512–518. doi: 10.1016/j.tics.2006.09.006.

- 25. Fine I, Finney EM, Boynton GM, Dobkins KR. Comparing the effects of auditory deprivation and sign language within the auditory and visual cortex. J Cogn Neurosci. 2005;17(2001):1621–1637. doi: 10.1162/089892905774597173.

- 26. Finney EM, Fine I, Dobkins KR. Visual stimuli activate auditory cortex in the deaf. Nat Neurosci. 2001;4(12):1171–1173. doi: 10.1038/nn763.

- 27. Karns CM, Dow MW, Neville HJ. Altered cross-modal processing in the primary auditory cortex of congenitally deaf adults: A visual-somatosensory fMRI study with a double-flash illusion. J Neurosci. 2012;32(28):9626–9638. doi: 10.1523/JNEUROSCI.6488-11.2012.

- 28. Levänen S, Jousmäki V, Hari R. Vibration-induced auditory-cortex activation in a congenitally deaf adult. Curr Biol. 1998;8(15):869–872. doi: 10.1016/s0960-9822(07)00348-x.

- 29. Scott GD, Karns CM, Dow MW, Stevens C, Neville HJ. Enhanced peripheral visual processing in congenitally deaf humans is supported by multiple brain regions, including primary auditory cortex. Front Hum Neurosci. 2014;8:177. doi: 10.3389/fnhum.2014.00177.

- 30. Glenberg AM, Mann S, Altman L, Forman T, Procise S. Modality effects in the coding and reproduction of rhythms. Mem Cognit. 1989;17(4):373–383. doi: 10.3758/bf03202611.

- 31. Guttman SE, Gilroy LA, Blake R. Hearing what the eyes see: Auditory encoding of visual temporal sequences. Psychol Sci. 2005;16(3):228–235. doi: 10.1111/j.0956-7976.2005.00808.x.

- 32. Saenz M, Koch C. The sound of change: Visually-induced auditory synesthesia. Curr Biol. 2008;18(15):R650–R651. doi: 10.1016/j.cub.2008.06.014.

- 33. Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- 34. Morosan P, Schleicher A, Amunts K, Zilles K. Multimodal architectonic mapping of human superior temporal gyrus. Anat Embryol (Berl) 2005;210(5-6):401–406. doi: 10.1007/s00429-005-0029-1.

- 35. Morosan P, et al. Human primary auditory cortex: Cytoarchitectonic subdivisions and mapping into a spatial reference system. Neuroimage. 2001;13(4):684–701. doi: 10.1006/nimg.2000.0715.

- 36. Shiell MM, Champoux F, Zatorre RJ. Reorganization of auditory cortex in early-deaf people: Functional connectivity and relationship to hearing aid use. J Cogn Neurosci. 2015;27(1):150–163. doi: 10.1162/jocn_a_00683.

- 37. Kral A, Schröder J-H, Klinke R, Engel AK. Absence of cross-modal reorganization in the primary auditory cortex of congenitally deaf cats. Exp Brain Res. 2003;153(4):605–613. doi: 10.1007/s00221-003-1609-z.

- 38. Eickhoff SB, et al. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25(4):1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- 39. Laurienti PJ, et al. Deactivation of sensory-specific cortex by cross-modal stimuli. J Cogn Neurosci. 2002;14(3):420–429. doi: 10.1162/089892902317361930.

- 40. Nichols T, Brett M, Andersson J, Wager T, Poline J-B. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25(3):653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- 41. Sadato N, et al. Activation of the primary visual cortex by Braille reading in blind subjects. Nature. 1996;380(6574):526–528. doi: 10.1038/380526a0.

- 42. Büchel C, Price C, Friston K. A multimodal language region in the ventral visual pathway. Nature. 1998;394(6690):274–277. doi: 10.1038/28389.