Abstract

Darwin regarded emotions as predispositions to act adaptively, thereby suggesting that characteristic body movements are associated with each emotional state. To date, investigations of emotional cognition have concentrated predominantly on processes associated with viewing facial expressions. However, expressive body movements may be just as important for understanding the neurobiology of emotional behavior. Here, we used functional MRI to clarify how the brain recognizes happiness or fear expressed by a whole body. Our results indicate that observing fearful body expressions produces increased activity in brain areas closely associated with emotional processes and that this emotion-related activity occurs together with activation of areas linked with the representation of action and movement. The mechanism of fear contagion suggested by these findings may automatically prepare the brain for action.

Keywords: emotion communication, body movement, action, social cognition, amygdala

When danger lurks, fear spreads through a crowd as body postures alter in rapid cascade from one individual to the next. Animal ethologists have described situations in which emotions are communicated through rapidly changing behavior, as can be observed in the flight of a flock of birds when a source of danger appears on the horizon (1). The notion of emotional contagion is sometimes used to refer to similar automatic posture adjustments in humans (2). From Darwin's evolutionary perspective, communication of emotion by body movements occupies a privileged position, as emotions embody action schemes that have evolved in the service of survival (3). However, little is known at present about the mechanisms in the human brain that may sustain bodily communication of emotion in the service of adaptive action. The present study begins to explore this issue.

To date, most investigations of the perception of emotion have concentrated on brain activity generated by the recognition of still images of facial expressions, and virtually all that is known about the perception of emotion in humans is based on such data. Major insights concern the role of the amygdala in concert with that of the fusiform cortex, prefrontal cortex, orbitofrontal cortex (OFC), medial frontal cortex, superior temporal sulcus, and somatosensory cortex (4, 5). Interestingly, however, some of these same areas also seem to play a role in processing biological movement. For example, viewing biological movement patterns that are experienced as pleasant activates subcortical structures, including the amygdala (6), and visual perception of biological motion activates two areas in the occipital and fusiform cortex (7). Recent findings in non-human primates have drawn attention to the brain's ability to represent actions through canonical neurons (similarly active when viewing an object and when grasping it) and mirror neurons (similarly active when observing an action and when performing it) (8, 9). However, it is not yet known whether these brain areas play a role when humans view body movements expressing emotion.

Studies on neutral body postures and movements have already revealed some intriguing similarities between the visual perception of faces and of bodies. For example, faces and bodies both have configural properties, as indexed by the inversion effect (10), and the global structure of the whole body is also an important factor in the perception of biological motion (11). Evidence from single-cell recordings suggests a degree of specialization for either face or body images (9). Neurons reacting selectively to body posture have been found in recordings from monkey superior temporal sulcus. Similarly, a functional MRI study exploring the contrast between objects and neutral body postures revealed activity in lateral occipitotemporal cortex (12). On the other hand, there appear to be similarities between emotional body expressions and faces. A striking finding (13) is that observing bodily expressions activates two well-known face areas (inferior occipital gyrus and middle fusiform gyrus) that are predominantly associated with processing faces but are also linked with biological movement (6). These activations in face-related areas may result from mental imagery (14) or, alternatively and more probably, from context-driven, high-level perceptual mechanisms that fill in the face information missing from the input. However, this is unlikely to be the only explanation for similarities between facial and bodily expressions of fear. In a direct comparison of facial and bodily expressions, we observed that the N170 waveform is obtained for faces and bodies alike but not for objects (15). The time window of the N170 suggests that faces and bodies share similarities in visual encoding. A further indication is that fusiform gyrus activity is specifically related to the presentation of fearful bodily expressions. This finding suggests a mechanism whereby the amygdala modulates activation in visual areas, including fusiform face cortex, as previously found for facial expressions (16, 17).

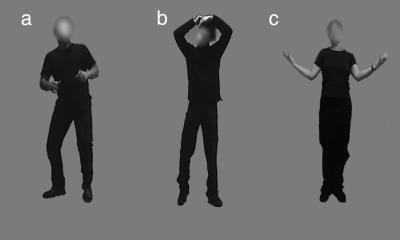

The present study investigated the perception of fearful and happy bodily expressions by using functional MRI. We used a two-condition paradigm in which images of bodily expressions of fear or happiness alternated with images of meaningful but emotionally neutral body movements. These whole-body actions provide an appropriate control condition because, like emotional body movements, they contain biological movement, have semantic properties (unlike abstract movement patterns), and are familiar. Selecting meaningful neutral body movements allowed us to create conditions that were comparable with respect to implicit movement perception, a process we expected to take place when participants viewed still images of body actions. To focus specifically on whole-body expressions, the faces were blanked out in all images. To avoid task interference, we used passive viewing, and participants were not given instructions that might have prompted imitation or mental imagery of the actions shown. Our main hypothesis was that viewing bodily expressions of emotion (either fear or happiness) would specifically activate areas known to be involved in processing emotional signals, as well as areas dedicated to action representation and motor areas.

Methods

Participants. Functional magnetic resonance images of brain activity were collected from seven participants (four males) in a 3-T high-speed echoplanar imaging device (Siemens, Erlangen, Germany) by using a quadrature head coil. Informed written consent was obtained from each participant before the scanning session, and the Massachusetts General Hospital Human Studies Committee approved all procedures under Protocol no. 2002P-000228. Image volumes consisted of 45 contiguous 3-mm-thick slices covering the entire brain (repetition time, 3,000 ms; 3.125 × 3.125 mm in-plane resolution; 128 images per slice; echo time, 30 ms; flip angle, 90°; 20 × 20 cm field of view; 64 × 64 matrix).
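As a consistency check on the acquisition parameters, the short Python sketch below derives the in-plane voxel size, slab coverage, and run duration from the values stated above. It assumes that "128 images per slice" means 128 time points per run; the function name `acquisition_summary` is hypothetical and not part of any analysis package used in the study.

```python
# A minimal sketch: derive secondary acquisition quantities from the
# parameters reported in the Methods. Reading "128 images per slice" as
# 128 time points per run is an assumption.

def acquisition_summary(fov_mm=200.0, matrix=64, n_slices=45,
                        slice_mm=3.0, tr_s=3.0, n_volumes=128):
    """Return (in-plane voxel size in mm, slab coverage in mm, run length in s)."""
    in_plane_mm = fov_mm / matrix      # field of view divided by matrix size
    coverage_mm = n_slices * slice_mm  # contiguous slices, no gap
    run_s = n_volumes * tr_s           # one volume per repetition time
    return in_plane_mm, coverage_mm, run_s


in_plane, coverage, run_length = acquisition_summary()
print(in_plane, coverage, run_length)  # 3.125 135.0 384.0
```

The 3.125-mm value reproduces the reported in-plane resolution (200 mm / 64), and under the stated assumption a run lasts 384 s.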

Materials. Video recordings of 16 semiprofessional actors (eight women, 22–35 years of age) were used to construct the stimuli. Using their whole body, actors performed meaningful but emotionally neutral actions (pouring water into a glass, combing one's hair, putting on trousers, or opening a door) or expressive gestures (happy, fearful, sad, or angry). In each case, they were briefed with a set of standardized instructions before the recordings. For the neutral body actions, the instructions specified the action to be performed. For the emotional body actions, the instructions specified a familiar scenario (for example, opening a door and finding an armed robber in front of you). Still images were obtained from the videos by manually selecting the most informative frames and converting them to grayscale pictures. Four-choice identification tests were administered to a group of participants (n = 18, nine women, 22–35 years of age): one with 64 pictures of the expressive gestures (16 actors × 4 emotions) and one with 64 pictures of emotionally neutral gestures (16 actors × 4 types of gestures). Average identification accuracy was 75% for emotional and 94% for neutral gestures. On the basis of these results, the eight best-recognized images each of happy and of fearful gestures were retained for subsequent testing. For the neutral gestures, the eight best-recognized pictures were likewise retained. Mean four-choice identification accuracy for the retained pictures was 85% for the emotional and 95% for the neutral gestures.
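The selection rule described above (retain the best-recognized images in each category, then report their mean accuracy) can be sketched as follows. The data structure, the tie-breaking rule, and the toy scores are illustrative assumptions, not the study's materials or code; `retain_best` and `mean_accuracy` are hypothetical helper names.

```python
from collections import defaultdict


def retain_best(accuracy, k=8):
    """Keep the k best-recognized images in each category.

    `accuracy` maps (category, image_id) to the proportion of raters who
    chose the intended label in the four-choice identification test.
    """
    by_category = defaultdict(list)
    for (category, image_id), acc in accuracy.items():
        by_category[category].append((acc, image_id))
    retained = {}
    for category, scored in by_category.items():
        # Highest accuracy first; ties broken by image ID for reproducibility.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        retained[category] = [image_id for _, image_id in scored[:k]]
    return retained


def mean_accuracy(accuracy, retained):
    """Mean identification accuracy over all retained images."""
    values = [accuracy[(c, i)] for c, ids in retained.items() for i in ids]
    return sum(values) / len(values)


# Hypothetical toy scores (not the study's data), with k=2 for brevity.
toy = {("fear", "a1"): 0.9, ("fear", "a2"): 0.5, ("fear", "a3"): 0.7,
       ("happy", "b1"): 0.6, ("happy", "b2"): 0.8}
best = retain_best(toy, k=2)
print(best)  # {'fear': ['a1', 'a3'], 'happy': ['b2', 'b1']}
print(mean_accuracy(toy, best))
```

Because only the top-ranked images survive, the mean accuracy of the retained set is necessarily at least as high as that of the full set, which is consistent with the rise from 75% to 85% reported for the emotional gestures.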

A second pilot experiment was run with a different group of subjects (n = 10, six women, 24–33 years of age) to obtain ratings of the intensity of the movement impression evoked by each image. The 24 images retained for the main experiment were presented one by one in random order, and subjects were instructed to rate the movement information on a five-point scale (from 1 for the weakest impression to 5 for the strongest). The mean ratings for the three categories were nearly identical (neutral, 3.5; fearful, 3.5; happy, 3.3). The possibility that differences in activation level were artifacts of differences in evoked movement can therefore be discounted.

In the scanner, the 24 selected images (8 fearful, 8 happy, and 8 neutral) were used in two separate runs with an AB block design (fearful vs. neutral; happy vs. neutral). Each block lasted 24 s, during which pictures were presented in random order for 300 ms each, each picture followed by a 1,700-ms blank interval in which only a fixation cross was shown.
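The timing parameters above fix the quantities on which the Fourier analysis depends. This sketch assumes 16 alternating 24-s blocks per run (8 AB cycles); that number is inferred rather than stated in the Methods, from 128 volumes at a 3-s repetition time giving 384 s, or 16 blocks of 24 s. `block_schedule` is a hypothetical helper.

```python
def block_schedule(block_s=24.0, stim_ms=300, blank_ms=1700,
                   n_blocks=16, tr_s=3.0):
    """Timing of an AB block design; n_blocks=16 is an assumption."""
    trial_s = (stim_ms + blank_ms) / 1000.0   # picture plus fixation interval
    trials_per_block = round(block_s / trial_s)
    cycle_s = 2 * block_s                     # one A block followed by one B block
    run_s = n_blocks * block_s
    return {
        "trials_per_block": trials_per_block,
        "fundamental_hz": 1.0 / cycle_s,      # task frequency used in the analysis
        "cycles_per_run": run_s / cycle_s,    # DFT bin index of the fundamental
        "volumes_per_run": run_s / tr_s,
    }


schedule = block_schedule()
print(schedule["trials_per_block"], schedule["cycles_per_run"],
      schedule["volumes_per_run"])  # 12 8.0 128.0
```

Each 2-s trial gives 12 presentations per block, and the task fundamental is 1/48 Hz, which under the assumed run length falls exactly in bin 8 of a 128-point spectrum.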

Data Analysis. The techniques used in our analysis are similar to those described in ref. 18. Each functional run was first motion-corrected with AFNI software (19) and spatially smoothed with a three-dimensional Gaussian filter with a full width at half maximum of 6 mm. The mean offset and linear drift were estimated and removed from each voxel. The spectrum of the remaining signal was computed at each voxel by using the fast Fourier transform. The task-related component was estimated as the spectral component at the task fundamental frequency. The noise was estimated by summing the remaining spectral components after removing the task harmonics and the components immediately adjacent to the fundamental. The phase at the fundamental was used to determine whether the blood oxygenation level-dependent (BOLD) signal increased in response to the first stimulus (positive phase) or the second stimulus (negative phase).
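The per-voxel spectral analysis described above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: it uses a direct discrete Fourier transform instead of an FFT (the values are the same), expresses the result as the ratio of task power to mean noise power (the text states only that noise was estimated by summing the remaining components), and adopts an assumed phase sign convention. The test signal is synthetic, and all function names are hypothetical.

```python
import cmath
import math
import random


def detrend(signal):
    """Remove the mean offset and linear drift by least-squares fit."""
    n = len(signal)
    t_mean = (n - 1) / 2.0
    y_mean = sum(signal) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(signal))
    slope = sxy / sxx
    return [y - y_mean - slope * (t - t_mean) for t, y in enumerate(signal)]


def dft(x):
    """Direct O(n^2) discrete Fourier transform; an FFT gives the same values."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def fourier_task_analysis(signal, cycles):
    """Return (task-to-noise power ratio, phase) for one voxel's time series.

    `cycles` is the number of task cycles per run, i.e. the DFT bin of the
    task fundamental frequency.
    """
    spectrum = dft(detrend(signal))
    half = len(signal) // 2
    task = spectrum[cycles]
    # Excluded from the noise estimate: DC, the fundamental and its harmonics,
    # and the bins immediately adjacent to the fundamental.
    excluded = {0, cycles - 1, cycles + 1}
    excluded.update(range(cycles, half + 1, cycles))
    noise_bins = [k for k in range(1, half + 1) if k not in excluded]
    noise_power = sum(abs(spectrum[k]) ** 2 for k in noise_bins) / len(noise_bins)
    # Assumed sign convention: negating the DFT angle makes a response that
    # rises during the first block of each cycle come out positive.
    phase = -cmath.phase(task)
    return abs(task) ** 2 / noise_power, phase


# Synthetic run: 128 volumes, 8 cycles of 8 "A" volumes then 8 "B" volumes.
rng = random.Random(0)
boxcar = [1.0 if t % 16 < 8 else 0.0 for t in range(128)]
voxel_a = [v + rng.gauss(0.0, 0.3) for v in boxcar]   # responds to A
voxel_b = [1.0 - v for v in voxel_a]                  # responds to B
ratio_a, phase_a = fourier_task_analysis(voxel_a, cycles=8)
ratio_b, phase_b = fourier_task_analysis(voxel_b, cycles=8)
print(phase_a > 0, phase_b < 0)  # True True
```

Inverting the response flips the phase by half a cycle while leaving the power ratio unchanged, which is what allows a single spectral measure to signal both the strength of the task response and which condition drove it.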

Each participant's functional data were registered to a high-resolution T1-weighted anatomical volume, which was in turn registered to the MNI305 Talairach brain (21). The real and imaginary components of the Fourier transform of each participant's signal were resampled from locations in the cortex onto the surface of a template sphere to bring them into a standard space. The techniques for mapping between an individual volume and this spherical space are detailed by Fischl et al. (20). This procedure allowed the results of the individual per-voxel analysis to be resampled into both volume-based Talairach space and surface-based spherical space to perform group random-effects analyses. Group-average significance maps for the cortical surface and for the volume were computed by using General Linear Model analyses to perform random-effects averages of the real and imaginary components of the signal across subjects on a per-vertex and per-voxel basis. Cross-subject variance was computed as the variance pooled across the real and imaginary components. Significance of the average activation was determined by using an F statistic and mapped from the standard sphere to a target individual's cortical surface (20). Maps were visualized on this individual's surface geometry, overlaying a group curvature pattern averaged in spherically morphed space (20, 22).
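One way to form the random-effects statistic on the real and imaginary Fourier components is sketched below. It assumes that the two components are independent Gaussians with a shared variance across subjects; under that assumption each squared mean, scaled by N over the pooled variance, is chi-square with 1 degree of freedom, so the statistic follows F(2, 2(N - 1)). The exact General Linear Model formulation used in the study may differ, and the function names are hypothetical.

```python
def complex_random_effects_f(real, imag):
    """Random-effects F test that the mean complex response is nonzero.

    `real` and `imag` hold one value per subject at a single vertex or voxel.
    Assumes real and imaginary parts are independent Gaussians with a common
    variance, so the pooled variance has 2 * (N - 1) degrees of freedom.
    Returns (F, df_numerator, df_denominator).
    """
    n = len(real)
    mean_re = sum(real) / n
    mean_im = sum(imag) / n
    ss = (sum((r - mean_re) ** 2 for r in real)
          + sum((i - mean_im) ** 2 for i in imag))
    pooled_var = ss / (2 * (n - 1))
    # n * mean**2 / variance is chi-square(1) for each component under the null.
    f_stat = (n * (mean_re ** 2 + mean_im ** 2) / 2) / pooled_var
    return f_stat, 2, 2 * (n - 1)


def f_survival_df1_2(f_stat, df_den):
    """Upper-tail P value of F(2, df_den), which has a closed form."""
    return (1 + 2 * f_stat / df_den) ** (-df_den / 2)


f_stat, df1, df2 = complex_random_effects_f([1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
print(f_stat, df1, df2)                  # 12.0 2 4
print(f_survival_df1_2(f_stat, df2))     # about 0.0204 (= 1/49)
```

The three-subject input is a toy example; with the seven participants of this study, the denominator would have 12 degrees of freedom.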

On the cortical surfaces, clusters of contiguous vertices with a significance of P < 0.05 and covering an area of at least 185 mm² were identified. Talairach coordinates of the center of each cluster and the corresponding structure, identified by visual inspection of the target individual's anatomy, are given in Table 1. To correct these clusters for multiple comparisons, 5,000 Monte Carlo simulations of the averaging and clustering procedure were run, using as input synthesized white Gaussian noise volumes that were smoothed and resampled into spherical space. Clusters were found in 187 of these simulations, yielding a cluster-level significance of P = 0.0374. We similarly identified clusters in the Talairach-space average, using P < 0.05 and a volume of >135 mm³ as constraints.
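The logic of the Monte Carlo correction can be sketched on a one-dimensional lattice rather than on the spherical surface used in the study. The smoothing width, the z threshold of 1.645 (one-sided P < 0.05), the lattice size, and the minimum cluster length in the example are illustrative assumptions, as are the function names; the only figures taken from the text are the 187 simulations with clusters out of 5,000, whose ratio gives the reported P = 0.0374.

```python
import math
import random


def gaussian_kernel(fwhm, radius):
    """Gaussian weights scaled so smoothed white noise keeps unit variance."""
    sigma = fwhm / (2 * math.sqrt(2 * math.log(2)))
    weights = [math.exp(-0.5 * (d / sigma) ** 2) for d in range(-radius, radius + 1)]
    norm = math.sqrt(sum(w * w for w in weights))
    return [w / norm for w in weights]


def smoothed_noise(n_points, kernel, rng):
    """White Gaussian noise convolved with `kernel` (valid part only)."""
    pad = len(kernel) - 1
    raw = [rng.gauss(0.0, 1.0) for _ in range(n_points + pad)]
    return [sum(w * raw[i + j] for j, w in enumerate(kernel)) for i in range(n_points)]


def max_cluster_size(values, threshold):
    """Length of the longest run of contiguous supra-threshold points."""
    best = run = 0
    for v in values:
        run = run + 1 if v > threshold else 0
        best = max(best, run)
    return best


def cluster_p_value(n_sims, n_points, min_size, fwhm, threshold=1.645, seed=0):
    """Fraction of null simulations containing a cluster of >= min_size points."""
    rng = random.Random(seed)
    kernel = gaussian_kernel(fwhm, radius=int(3 * fwhm))
    hits = sum(
        1 for _ in range(n_sims)
        if max_cluster_size(smoothed_noise(n_points, kernel, rng), threshold) >= min_size
    )
    return hits / n_sims


# The reported correction follows the same arithmetic: 187 hits in 5,000 runs.
print(187 / 5000)  # 0.0374
print(cluster_p_value(n_sims=200, n_points=200, min_size=10, fwhm=4.0))
```

The cluster-level P value is simply the proportion of pure-noise simulations in which the thresholding and size criteria still produce at least one cluster, so larger minimum sizes or smoother noise trade off against each other in the same way on any lattice.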

Table 1. Areas of activation observed in the comparison of fearful bodily expressions with neutral ones.

| Areas of activation | Side | x | y | z |
|---|---|---|---|---|
| Detection and orientation | | | | |
| Superior colliculus | L | -5 | -31 | -2 |
| | R | 4 | -31 | -3 |
| Pulvinar | L | -7 | -25 | 5 |
| | R | 6 | -25 | 7 |
| Visual areas | | | | |
| Striate cortex | L | -8 | -82 | 5 |
| | L | -20 | -100 | 8 |
| | R | 14 | -100 | 10 |
| Fusiform gyrus | L | -32 | -51 | -14 |
| | R | 35 | -60 | -12 |
| Inferior occipital gyrus | L | -9 | -68 | 40 |
| Precuneus | R | 43 | -78 | -7 |
| Emotional evaluation | | | | |
| OFC | R | 38 | 30 | -12 |
| Posterior cingulate cortex | L | -8 | -49 | 27 |
| Anterior insula | L | -31 | 10 | 10 |
| | R | 31 | 16 | -4 |
| Retrosplenial cortex | R | 13 | -45 | 0 |
| Nucleus accumbens | L | -5 | 10 | -6 |
| | R | 4 | 10 | -3 |
| Amygdala | R | 24 | 0 | -16 |
| Action representation/premotor areas | | | | |
| IFG, BA 44 | L | -43 | 14 | 10 |
| | R | 44 | 17 | 23 |
| IFG, BA 45 | L | -51 | 25 | 1 |
| | R | 43 | 28 | -2 |
| IFG, BA 47 | L | -42 | 22 | -9 |
| Precentral gyrus, BA 6 | L | -44 | 12 | 36 |
| | R | 50 | 3 | 0 |
| | R | 45 | 2 | 38 |
| SMA | L | -5 | -1 | 59 |
| | R | 8 | 10 | 45 |
| | R | 11 | -7 | 66 |
| Inferior parietal lobule | L | -39 | -55 | 50 |
| Intraparietal sulcus, posterior part | R | 27 | -83 | 21 |
| Motor areas | | | | |
| Precentral gyrus, BA 4 | L | -33 | -6 | 54 |
| | L | -44 | -3 | 44 |
| | R | 54 | -3 | 35 |
| Caudate nucleus | R | 15 | 6 | 15 |
| | L | -10 | 3 | 13 |
| Putamen | R | 24 | 1 | 9 |
| | L | -25 | -1 | 5 |
| Other areas | | | | |
| Parahippocampal gyrus | L | -13 | -41 | -3 |
| | R | 24 | -31 | -8 |

Subheadings represent presumptive functional groupings. All clusters reported here are significant at P < 0.05, corrected, and have a minimal size of 128 mm² (see Methods). x, y, z, Talairach coordinates (mm) of cluster centers; L, left; R, right; BA, Brodmann's area.

Results

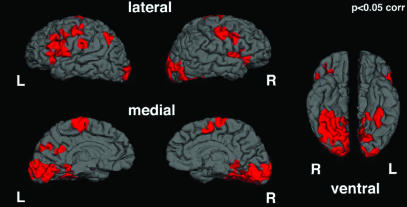

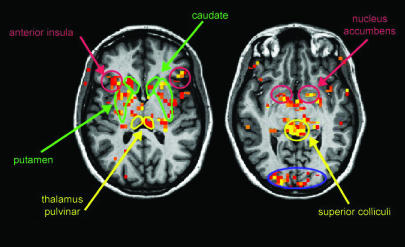

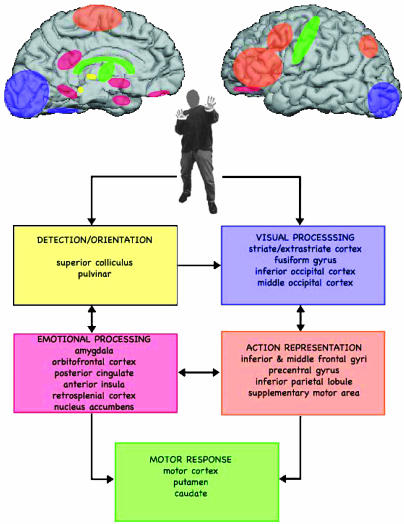

Observed activities for the fear vs. neutral comparison were grouped into functionally related clusters: areas related to emotional stimulus detection and orientation, areas related to visual processing, areas associated with emotional evaluation, areas associated with action representation, and motor response areas (Figs. 1, 2, 3, 4 and Table 1).

Fig. 1.

Areas of activation corresponding to viewing bodily expressions of fear vs. neutral body actions, represented on the cortical surface. Data were obtained by random-effects group averaging across subjects and are corrected for multiple comparisons (see Table 1). L, left; R, right.

Fig. 2.

Activity in subcortical structures. Random-effects group average of fearful vs. neutral body images. Data are rendered on one template brain in common Talairach space (radiological convention) and are shown at a threshold of P < 0.01, uncorrected. Areas are color-coded according to the Fig. 3 legend.

Fig. 3.

Schematic representation of areas activated in the processing of bodily expressions of fear. Areas of activation selective for viewing fearful expressive bodies are represented in presumptive functional groupings. Five functionally grouped sets of areas are activated: areas involved in stimulus detection and orientation (yellow), visual processing (purple), emotional processing (red), action representation (orange), and motor response (green). Arrows indicate interactions between these groups of areas.

Fig. 4.

Examples of stimuli used in this experiment. (a) Fearful. (b) Neutral. (c) Happy.

Discussion

Our major finding is that viewing fearful whole-body expressions produces higher activity than viewing meaningful but emotionally neutral body actions in areas specifically known to process emotional information (amygdala, orbitofrontal cortex, posterior cingulate, anterior insula, retrosplenial cortex, and nucleus accumbens). In contrast, a similar comparison of happy with neutral bodily expressions yielded increased activity only in visual areas. Besides activity in emotion-related areas, we also observed significant fear-related activation in areas dedicated to action representation and in motor areas. The integrated activity of these groups of areas may constitute a mechanism for fear contagion and for preparing action in response to seeing fear, which presumably operates in a direct, automatic, and noninferential fashion, similar to what has been argued for the automatic recognition of fear in facial expressions (4, 23).

Observing fearful body expressions modulates cortical and subcortical visual areas. Previous studies (24) have shown that the emotional component of a stimulus is reflected in higher activation in visual cortices, as we find here for both the fear vs. neutral and the happy vs. neutral contrasts. The activity related to stimulus detection/orientation and visual processes in the superior colliculus and pulvinar is compatible with models that envisage a rapid, automatic route for fear detection (5, 25, 26). A major function of this route is to sustain rapid orientation toward, and detection of, potentially dangerous signals on the basis of coarse visual analysis, such as can be performed by the superior colliculus. This subcortical motor activity may be part of a broader subcortical pathway for processing fear signals, involving projections from the retina to the superior colliculus and on to the pulvinar, as previously argued for faces (26–28). This pathway allows processing within a limited range of spatial frequencies that is nevertheless sufficient for facial expressions, as illustrated by the residual visual abilities of patients with striate cortex lesions (28). The same nonstriate, subcortically based route can also sustain recognition of bodily expressions of emotion in patients with striate cortex damage (B.d.G., L. Weiskrantz, and N.H., unpublished data).

Our results indicate that viewing bodily expressions of emotion influences activity in visual cortical areas that have previously shown modulation of activity as a function of the emotional valence of stimuli (striate and extrastriate cortex, fusiform gyrus, inferior occipital gyrus, and middle occipital cortex) (16, 17, 29). In the happy vs. neutral comparison, by contrast, the only areas significantly more activated in the happy condition were located in the left and right visual cortices (see Table 2). Until now, emotional modulation of visual processes had been observed only with facial expressions as stimuli.

Table 2. Areas of activation observed in the comparison of happy bodily expressions with neutral ones.

| Area of activation | Side | x | y | z |
|---|---|---|---|---|
| Visual areas | | | | |
| Striate cortex | Left | -10 | -95 | 20 |
| | Left | -13 | -84 | -2 |
| | Right | 13 | -98 | 18 |
| | Right | 11 | -86 | 3 |

All clusters reported here are significant at P < 0.05, corrected, and have a minimal size of 128 mm² (see Methods). x, y, z, Talairach coordinates (mm) of cluster centers.

The present finding of amygdala activation for fear expressed by the whole body contrasts with neuropsychological reports suggesting that amygdala damage impairs emotion recognition only for faces and not for scenes in which the facial expressions were erased, reports that have led to the notion that the amygdala might be specialized for facial expressions of fear (30). The present results clearly indicate otherwise. Because our design maximized the similarity between conditions for all stimulus aspects except those related to emotion, we did not predict condition-specific activity in the lateral occipitotemporal visual areas associated with viewing human bodies (12). Our results raise the possibility that the similarity in neural activity for the perception of bodily and facial expressions is due to synergies between the mechanisms underlying the recognition of facial and bodily expressions and to common structures involved, on the one hand, in action representation and, on the other, in the rapid detection of salient information such as a fearful bodily expression.

Of special interest, given the use of body images, is the activity in OFC, which influences responses to stimuli at multiple levels of processing (31). Signals arising in OFC control regulatory processes in emotions and feelings, acting not only on the body but also on the brain's representation of the body (32). OFC acts in concert with the amygdala and the somatosensory/insular cortices, both of which were activated here. The observed activity in the anterior insula is consistent with the role of this structure in connecting the prefrontal cortex and the limbic system and with the role of the insula in interoception (33). Activity in the posterior cingulate cortex is consistent with earlier findings of a role for this structure in studies of emotional salience (34). Interestingly, besides their role in emotional processes, the posterior cingulate and retrosplenial cortex are associated with spatial coding (35). Also of interest is the finding that the nucleus accumbens figures among the emotion-specific activations, indicating that this structure plays a role not only in reward (36) but also, more generally, in processing affective stimuli, including negative ones.

An important area of research in the last decade concerns the way in which the brain recognizes actions. In studies of nonhuman primates, both canonical and mirror neurons have been observed (8, 9, 37). Findings from cell recordings in monkeys, as well as from neuroimaging studies in humans (9, 38–40), provide increasing insight into a network of structures dedicated to action representation. The superior temporal sulcus, the parietal cortex, and the premotor cortex are activated during the perception of simple finger movements (41), pantomimes (42–44), and object-directed actions (9, 43–45). Areas reported to be active during imitation and imagined action or motor imagery are the dorsolateral prefrontal cortex, precentral gyrus, supplementary motor area (SMA), inferior parietal lobe, cingulate, subcortical nuclei, and cerebellum (44). Our results now indicate that viewing images of bodily expressions of fear activates central structures in the network of areas previously associated with the observation of action (44): the premotor cortex, SMA, inferior frontal gyrus (IFG), middle frontal gyrus, and parietal cortex. In the present case, the activations are not explained by the presence of object-directed movements or even of movement per se. Passive viewing of still images of bodily expressions activates areas in the occipitoparietal pathway reaching the SMA, cingulate gyrus, and middle frontal gyrus, a pathway that has been detected during the observation of actions with the intent to imitate them later and during voluntary action (42, 44); this suggests that passive viewing can initiate motor preparation. Activity in the caudate nucleus has also been observed for motion stimuli (46). Interestingly, the present activations may follow from a process whereby the brain fills in the missing dynamic information (47).
Yet the important point here is that this process is specific to fear, as indicated by the fact that the fear images produce increased activation in well-known emotional areas. It is also unlikely that the activity in these emotion-related areas was produced by more implicit motion cues in the fear images than in the neutral body images: as the second pilot experiment showed, the images do not differ in this respect. So far, activation of emotion-related brain areas by movement per se has not been reported in the literature. In the happy-neutral blocks, the only emotion-related activity relative to the neutral condition was found in visual areas. This finding suggests that seeing happy bodily expressions evokes considerably less condition-specific activity in areas related to action representation and in motor areas. Presumably, action representation and motor imagery are also present in the happy-neutral condition but are not specific to perceiving either happy or neutral bodily expressions.

Of particular interest is the activity in SMA. Our central prediction was that viewing bodily expressions would activate areas related to processing emotion and would generate activity in motor areas through cingulate and prefrontal areas, with a crucial role played by SMA, given its connections to M1. Activity in the pre-SMA face area is consistent with previous reports (43, 48) of its role in preparation to act rather than in action observation. Here, pre-SMA activity may reflect preparation to act upon perceiving fear in others. SMA itself may also play a role in movement control in response to emotion-inducing stimuli. The present results are consistent with findings on reciprocal interactions between the amygdala and subcortical and cortical structures involving the striatum and OFC (49). The present finding of a role for body representations in emotional states recalls earlier observations of spontaneous and automatic imitation of facial expressions (50). As has been argued in the latter case, such imitative reflexes are nonintentional and cannot be seen with the naked eye, but they stand out clearly in electromyographic measurements. At the conceptual level, such emotional resonance or contagion effects may correspond to minor functional changes in the threshold of bodily states in the service of automatic action preparation. Processes responsible for contextual integration with real-world knowledge presumably regulate and suppress these emotional body reflexes when they are not adaptive.

Whole-body expressions of emotion have not previously been used to study emotional processes in the brain. Our findings are consistent with the notion that the amygdala is a critical substrate in the neural system necessary for triggering somatic states from primary inducers (51), among which the sight of a fearful body expression figures. The observed coupling of strong emotion-related activity with structures involved in canonical action representation (predominantly the parietomotor circuit) and in the mirror neuron circuit (predominantly the intraparietal sulcus, dorsal premotor cortex, superior temporal sulcus, and right parietal operculum) suggests that these two circuits play a role in social communication (52). Further research is needed to clarify these issues. Finally, the present results indicate a role for the putamen and caudate nucleus in viewing bodily expressions of fear. Interestingly, the caudate nucleus and putamen are predominantly known for their involvement in motor tasks but have also been associated with motivational-emotional task components. The caudate nucleus and putamen are damaged in Parkinson's disease (53) and Huntington's disease (54), both of which are characterized by motor disorders as well as by emotion deficits.

Our study shows that the presentation of stimuli expressing fear produces, besides activation of centers associated with emotion, activation of circuits mediating action. This finding clearly suggests an intrinsic link between emotion and action. Yet it does not imply that neutral stimuli or stimuli expressing happiness fail to activate these circuits; we know that they do (55). In fact, we capitalized on the finding that still images of neutral actions elicit action representation and are thus appropriate controls for investigating emotional body expressions, which, as we predicted, also elicit action representation.

A central issue for future research concerns the relation between emotion and movement. At present, we do not know whether the dynamics and kinematics of fearful body movements differ quantitatively and qualitatively from those of neutral actions and from movements expressing emotions other than fear. For example, it is not yet known whether actions and bodily expressions of emotion are all built from the same basic set of motor primitives. If they are, the movement properties of emotional body expressions are best seen as modulations of a basic set of all-purpose motor primitives, or a general motor syntax. Alternatively, emotional body expressions may represent a domain of sui generis motor competence.

Acknowledgments

We thank M. Balsters for assistance with preparation of the materials. This work was supported by a grant from Tilburg University and National Institutes of Health Grant R01 NS44824-01 (to N.H.).

Abbreviations: OFC, orbitofrontal cortex; SMA, supplementary motor area; IFG, inferior frontal gyrus.

References

- 1.Tinbergen, N. (1963) Z. Tierpsychol. 20, 410–433. [Google Scholar]

- 2.Levenson, R. W. (2003) Ann. N.Y. Acad. Sci. 1000, 348–366. [DOI] [PubMed] [Google Scholar]

- 3.Darwin, C. (1965) The Expression of the Emotions in Man and Animals (Univ. of Chicago, Chicago).

- 4.Dolan, R. J. (2002) Science 8, 1191–1194. [DOI] [PubMed] [Google Scholar]

- 5.Adolphs, R. (2002) Behav. Cogn. Neurosci. Rev. 1, 21–61. [DOI] [PubMed] [Google Scholar]

- 6.Bonda, E., Petrides, M., Ostry, D. & Evans, A. (1996) J. Neurosci. 16, 3737–3744. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Grossman, E. D. & Blake, R. (2002) Neuron 35, 1167–1175. [DOI] [PubMed] [Google Scholar]

- 8.di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V. & Rizzolatti, G. (1992) Exp. Brain Res. 91, 176–180. [DOI] [PubMed] [Google Scholar]

- 9.Rizzolatti, G., Fadiga, L., Gallese, V. & Fogassi, L. (1996) Brain Res. Cogn. Brain Res. 3, 131–141. [DOI] [PubMed] [Google Scholar]

- 10.Reed, C. L., Stone, V. E., Bozova, S. & Tanaka, J. (2003) Psychol. Sci. 14, 302–308. [DOI] [PubMed] [Google Scholar]

- 11.Grezes, J., Fonlupt, P., Bertenthal, B., Delon-Martin, C., Segebarth, C. & Decety, J. (2001) NeuroImage 13, 775–785. [DOI] [PubMed] [Google Scholar]

- 12.Downing, P. E., Jiang, Y., Shuman, M. & Kanwisher, N. (2001) Science 293, 2470–2473. [DOI] [PubMed] [Google Scholar]

- 13.Hadjikhani, N. & de Gelder, B. (2003) Curr. Biol. 13, 2201–2205. [DOI] [PubMed] [Google Scholar]

- 14.O'Craven, K. M. & Kanwisher, N. (2000) J. Cognit. Neurosci. 12, 1013–1023. [DOI] [PubMed] [Google Scholar]

- 15.Stekelenburg, R. & de Gelder, B. (2004) NeuroReport 15, 777–780. [DOI] [PubMed] [Google Scholar]

- 16.Morris, J. S., Friston, K. J., Buchel, C., Frith, C. D., Young, A. W., Calder, A. J. & Dolan, R. J. (1998) Brain 121, 47–57. [DOI] [PubMed] [Google Scholar]

- 17.Rotshtein, P., Malach, R., Hadar, U., Graif, M. & Hendler, T. (2001) Neuron 32, 747–757. [DOI] [PubMed] [Google Scholar]

- 18.Hadjikhani, N., Joseph, R. M., Snyder, J. Chabris, C. F. Clark, J., Steele, S., McGrath, L., Aharon, I., Feczko, E., Harris, G. & Tager-Flusberg, H. (2004) NeuroImage 22, 1141–1150. [DOI] [PubMed] [Google Scholar]

- 19.Cox, R. W. (1996) Comput. Biomed. Res. 29, 162–173. [DOI] [PubMed] [Google Scholar]

- 20.Fischl, B., Sereno, M. I., Tootell, R. B. & Dale, A. M. (1999) Hum. Brain Mapp. 8, 272–284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Talairach, J. & Tournoux, P. (1988) Co-Planar Stereotaxic Atlas of the Human Brain (Thieme, Stuttgart).

- 22. Fischl, B., Sereno, M. I. & Dale, A. M. (1999) NeuroImage 9, 195–207.

- 23. Adolphs, R. (2002) Curr. Opin. Neurobiol. 12, 169–177.

- 24. Pessoa, L. & Ungerleider, L. G. (2004) Prog. Brain Res. 144, 171–182.

- 25. LeDoux, J. E. (1992) Curr. Opin. Neurobiol. 2, 191–197.

- 26. de Gelder, B., Vroomen, J., Pourtois, G. & Weiskrantz, L. (1999) NeuroReport 10, 3759–3763.

- 27. Morris, J. S., Ohman, A. & Dolan, R. J. (1999) Proc. Natl. Acad. Sci. USA 96, 1680–1685.

- 28. Morris, J. S., de Gelder, B., Weiskrantz, L. & Dolan, R. J. (2001) Brain 124, 1241–1252.

- 29. de Gelder, B., Frissen, I., Barton, J. & Hadjikhani, N. (2003) Proc. Natl. Acad. Sci. USA 100, 13105–13110.

- 30. Adolphs, R. & Tranel, D. (2003) Neuropsychologia 41, 1281–1289.

- 31. Miller, E. K. & Cohen, J. D. (2001) Annu. Rev. Neurosci. 24, 167–202.

- 32. Damasio, A. R. (1999) The Feeling of What Happens (Harcourt Brace, New York).

- 33. Singer, T., Seymour, B., O'Doherty, J., Kaube, H., Dolan, R. J. & Frith, C. D. (2004) Science 303, 1157–1162.

- 34. Maddock, R., Garrett, A. & Buonocore, M. (2003) Hum. Brain Mapp. 18, 30–41.

- 35. Small, D. M., Gitelman, D. R., Gregory, M. D., Nobre, A. C., Parrish, T. B. & Mesulam, M. M. (2003) NeuroImage 18, 633–641.

- 36. Schultz, W. (2004) Curr. Opin. Neurobiol. 14, 139–147.

- 37. Murata, A., Gallese, V., Luppino, G., Kaseda, M. & Sakata, H. (2000) J. Neurophysiol. 83, 2580–2601.

- 38. Jeannerod, M. (2001) NeuroImage 14, S103–S109.

- 39. Gallese, V., Fadiga, L., Fogassi, L. & Rizzolatti, G. (1996) Brain 119, 593–609.

- 40. Decety, J. & Grezes, J. (1999) Trends Cogn. Sci. 3, 172–178.

- 41. Iacoboni, M., Koski, L. M., Brass, M., Bekkering, H., Woods, R. P., Dubeau, M. C., Mazziotta, J. C. & Rizzolatti, G. (2001) Proc. Natl. Acad. Sci. USA 98, 13995–13999.

- 42. Decety, J., Grezes, J., Costes, N., Perani, D., Jeannerod, M., Procyk, E., Grassi, F. & Fazio, F. (1997) Brain 120, 1763–1777.

- 43. Buccino, G., Binkofski, F., Fink, G. R., Fadiga, L., Fogassi, L., Gallese, V., Seitz, R. J., Zilles, K., Rizzolatti, G. & Freund, H. J. (2001) Eur. J. Neurosci. 13, 400–404.

- 44. Grezes, J., Armony, J. L., Rowe, J. & Passingham, R. E. (2003) NeuroImage 18, 928–937.

- 45. Decety, J., Chaminade, T., Grezes, J. & Meltzoff, A. N. (2002) NeuroImage 15, 265–272.

- 46. LaBar, K. S., Crupain, M. J., Voyvodic, J. T. & McCarthy, G. (2003) Cereb. Cortex 13, 1023–1033.

- 47. Kourtzi, Z. & Kanwisher, N. (2000) J. Cognit. Neurosci. 12, 48–55.

- 48. Carr, L., Iacoboni, M., Dubeau, M. C., Mazziotta, J. C. & Lenzi, G. L. (2003) Proc. Natl. Acad. Sci. USA 100, 5497–5502.

- 49. Rolls, E. T. (1994) Rev. Neurol. (Paris) 150, 648–660.

- 50. Dimberg, U. (1982) Psychophysiology 19, 643–647.

- 51. Bechara, A., Damasio, H. & Damasio, A. R. (2003) Ann. N.Y. Acad. Sci. 985, 356–369.

- 52. Gallese, V., Keysers, C. & Rizzolatti, G. (2004) Trends Cogn. Sci., in press.

- 53. Jacobs, D. H., Shuren, J., Bowers, D. & Heilman, K. M. (1995) Neurology 45, 1696–1702.

- 54. Kuhl, D. E., Phelps, M. E., Markham, C. H., Metter, E. J., Riege, W. H. & Winter, J. (1982) Ann. Neurol. 12, 425–434.

- 55. Johnson-Frey, S. H., Maloof, F. R., Newman-Norlund, R., Farrere, C., Inati, S. & Grafton, S. T. (2003) Neuron 39, 1053–1058.