Abstract

Objective. To define the competencies for individuals designated as assessment leads in colleges and schools of pharmacy.

Methods. Twenty-three assessment experts in pharmacy participated in a modified Delphi process to describe competencies for an assessment lead, defined as the individual responsible for curricular assessment and assessment related to doctor of pharmacy program accreditation. Round 1 asked open-ended questions about knowledge, skills, and attitudes. Round 2 grouped responses for comment and rating for consensus, which was prospectively set at 80%.

Results. Twelve competencies were defined and grouped into 3 areas: Context for Assessment, Managing the Process of Assessment, and Leadership of Assessment Activities. In order to verify the panel’s work, assessment competencies from other disciplines were reviewed and compared.

Conclusions. The competencies describe roles for assessment professionals as experts, managers, and leaders of assessment processes. They can be used by assessment professionals in self-assessing areas for professional development and by administrators in selecting, developing, and supporting designated leads.

Keywords: assessment, competencies, leadership, faculty development

INTRODUCTION

The role of assessment in pharmacy professional education is growing. This growth is driven not only by accreditation requirements, but also by the desire to provide competitive educational programs that produce competent practitioners. Assessment can help evaluate the best teaching strategies to reach today’s learners and provides a data-driven approach to determining optimal use of scarce resources. When used to its fullest capacity, and when coupled with a strong culture and good knowledge of organizational change, assessment can transform curricula.1

Recognition of the importance of assessment has produced an increase in educators interested in and dedicated to the assessment of student learning and programmatic outcomes. Professionals tasked with assessment roles are seeking guidance and opportunities for networking and learning, as evidenced by the growth of the American Association of Colleges of Pharmacy (AACP) Special Interest Group (SIG) for Assessment, established in 2010. Membership in this SIG has increased from 219 pharmacy educators when it originally formed to 767 members in 2015, an approximately 3.5-fold increase in only five years.2 In addition, the academy is responding with scholarly assistance to help assessment professionals establish grounding in the art and science of assessment. In particular, three recent publications (a formative assessment toolkit,3 a paper on integrating the new Center for the Advancement of Pharmacy Education [CAPE] outcomes into pharmacy curricula,4 and a paper on assessing the CAPE outcomes5) have all provided guidance for accomplishing assessment work in pharmacy colleges and schools.

While fostering broad engagement and collaboration in assessment is encouraged,6 and providing tools and guidance is a necessity, the roles and responsibilities of the assessment person are critical considerations. Despite growing experience with assessment, colleges/schools face significant challenges, including advancing assessment of the affective domain, integrating assessment with continuing quality improvement practices, and building a culture of assessment. In addition, the increased emphasis on assessment in the Accreditation Council for Pharmacy Education’s Standards 20167 must also be considered. The current expectations for and complexity of assessment necessitate the identification of an assessment lead in a doctor of pharmacy (PharmD) program. Yet, as the technical and leadership needs for assessment have continued to mature, it may be challenging to determine the expertise needed for new assessment leads and the professional development priorities for existing assessment professionals.

The aim of this work was to draw together a group of experienced pharmacy assessment professionals to identify and obtain consensus on assessment-related competencies for individuals designated as the assessment lead in a college or school. These competencies will be useful to assessment professionals in self-assessing areas for professional development and to administrators in selecting, developing and supporting designated leads.

METHODS

A two-round modified Delphi process was used to obtain opinions from assessment experts on the competencies needed in an assessment lead in a college or school of pharmacy. The Delphi process uses multiple rounds of structured, anonymous data collection to draw together the collective wisdom of a group of experts.8 In the initial round, expert panelists generally respond to open-response questions.9 This input is then collated and presented back to the group for review and refinement.10,11 Statements defined from early rounds can be rated by the panelists to determine consensus in later rounds.9,12

Several characteristics of the Delphi process encourage quality participation and input. In contrast to discussion, the Delphi process allows each panelist an equal opportunity to present ideas.13 With its anonymity, it encourages expression that is free from group pressure.14 With the feeding back of results through successive rounds, it also allows for opinions to be heard in a nonadversarial manner.8 Views can be retracted, altered, or added after seeing others’ responses and giving the issue further consideration.14 In addition, the Delphi process allows for obtaining opinion without physically bringing experts together.8

The Delphi process has been used to define competencies in medical education15,16 and pharmacy education.17,18 In addition, it has been used to define competencies for student affairs professionals19 and teachers.20,21

The Delphi process relies on the identification and use of experts within the discipline.9 However, the optimal number of Delphi panelists is not agreed upon in the literature,12,14,22 and studies show a wide variation in panel size.9 In determining panel size, the availability of time and money has been acknowledged as important and influential in decision-making.9 Hasson, Keeney, and McKenna discuss the ramifications of panel size and caution that larger sizes result in greater generation of data, which influences the amount of analysis and the potential for analysis difficulties.8 Ten to 15 subjects has been suggested as sufficient when the subjects are homogeneous.10 Recent Delphi processes in pharmacy education have sought minimum panel sizes of 20.23,24 Considering the pool of available experts and its breadth in terms of experiences, a panel size of 20-30 was deemed appropriate.

To identify experts who could serve as participants, an invitation email with direction to an online form was circulated to members of the AACP Assessment SIG. Respondents were asked to indicate their assessment-related experiences, and panelists were selected from the respondent group. Criteria for selection included college or university assessment committee membership; a publication or award in assessment; and having provided university-level assessment consultation, chaired or led a self-study of a college or school’s PharmD program, served on an ACPE site visit team for a college or school’s self-study (providing assessment expertise), or served as an officer for an assessment-related special interest group within a professional organization. Points were awarded based on the total number of activities reported. Those with the most points in these categories were selected to participate. An email was delivered inviting their participation in the Delphi process and providing information on the study.
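The point-based selection described above can be illustrated with a short Python sketch. It is only an illustration under stated assumptions, not the procedure the investigators ran: the names `Respondent` and `select_panel`, the activity labels, the weighting of one point per reported activity, and the handling of ties (respondents with equal points keep their original order) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Respondent:
    """A survey respondent and the assessment-related activities they reported."""
    name: str
    activities: List[str] = field(default_factory=list)

    @property
    def points(self) -> int:
        # Assumption: each reported activity is worth one point.
        return len(self.activities)


def select_panel(respondents: List[Respondent], panel_size: int) -> List[Respondent]:
    """Return the `panel_size` respondents with the most points.

    sorted() is stable, so respondents with equal points keep their
    original order; the study does not describe how ties were handled.
    """
    ranked = sorted(respondents, key=lambda r: r.points, reverse=True)
    return ranked[:panel_size]


# Hypothetical respondents, for illustration only.
pool = [
    Respondent("A", ["committee membership"]),
    Respondent("B", ["committee membership", "publication", "self-study chair"]),
    Respondent("C", ["publication", "site visit team"]),
]
invited = select_panel(pool, panel_size=2)
print([r.name for r in invited])
```

In practice, a cut-off based on points would likely need an explicit tie-breaking rule, which is why the sketch documents the one it uses.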

In round 1, open-ended questions were used to gather input on the desired competencies for curricular assessment and assessment related to PharmD program accreditation. Panelists were asked to comment on the necessary knowledge and skills, as well as the necessary attitudes, values, beliefs, and behaviors. Recognizing that an individual leading assessment may hold various titles within a college or school of pharmacy (eg, assistant or associate dean, director, coordinator, or assessment committee chair), panelists were asked to focus on the competencies needed for the point person or lead, who may or may not execute each assessment initiative on their own.

Panelist comments were reviewed by two members of the research team and performance-based competency statements were drafted. A report was generated with the competency statements and the direct quotes from panelists supporting each statement. Because of the structure of the questions, the panelists’ comments tended toward more knowledge-based language; however, the performance-based competencies were fully vetted by the participants in the subsequent rounds.

The round 1 report was returned to participants. Panelists were asked to indicate their level of agreement with each competency statement using a 5-point Likert rating system (ie, strongly disagree, disagree, neither agree nor disagree, agree, strongly agree). A consensus level was set prospectively, as there is no agreed-upon consensus level in the literature.9,22 Keeney et al argue that the importance of the topic can guide consensus level, suggesting that 100% consensus may be desirable for life or death issues, while 51% may be appropriate for preferences.22 For this study, the consensus level was set at 80% of panelists agreeing or strongly agreeing with a competency statement. In addition to rating the competency statements, panelists were specifically asked to comment on areas where they disagreed with a competency statement.
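The consensus rule described above can be sketched in Python. This is a minimal illustration and not the study's analysis code: the function names, the reading of the threshold as "at least 80%," and the choice of denominator (all panelists who rated the statement) are assumptions, and the ratings shown are invented.

```python
LIKERT = (
    "strongly disagree",
    "disagree",
    "neither agree nor disagree",
    "agree",
    "strongly agree",
)
AGREEMENT = {"agree", "strongly agree"}
CONSENSUS_THRESHOLD = 0.80  # set prospectively in the study


def agreement_share(ratings):
    """Fraction of ratings that are 'agree' or 'strongly agree'."""
    if not ratings:
        raise ValueError("no ratings supplied")
    unknown = set(ratings) - set(LIKERT)
    if unknown:
        raise ValueError(f"unrecognized ratings: {sorted(unknown)}")
    agreeing = sum(1 for r in ratings if r in AGREEMENT)
    return agreeing / len(ratings)


def reaches_consensus(ratings, threshold=CONSENSUS_THRESHOLD):
    """True when the share of agreeing panelists meets the threshold.

    Assumption: the threshold is inclusive (>=).
    """
    return agreement_share(ratings) >= threshold


# Invented ratings from a 21-member panel, for illustration only.
ratings = ["strongly agree"] * 10 + ["agree"] * 7 + ["disagree"] * 4
print(round(agreement_share(ratings), 3), reaches_consensus(ratings))
```

With a panel of 21, at least 17 panelists must agree or strongly agree for a statement to meet an inclusive 80% threshold, since 16 of 21 is about 76%.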

The competencies from round 2 were sent to participants with the instructions: “If desired, please feel free to comment on the competency or to suggest additions or modifications to the descriptors/additional detail.” Responses provided additional context and background on the panelists’ perspectives and were used by the investigators to expand competency descriptors. In preparing the data for presentation, the descriptors were modified for readability from the direct quotes from panelists in order to give some direction in understanding the competency. However, they were not vetted or voted upon by the panelists and should not be interpreted as subcompetencies.

For all rounds, panelist responses were collected via the Web-based survey software program Qualtrics (Qualtrics Labs Inc., Provo, UT). This study was determined exempt by the University of Minnesota’s institutional review board.

As a means of verifying the panel’s work, assessment competencies from other disciplines were sought. Two sets of assessment-specific competencies were identified and reviewed: the Essential Competencies for Program Evaluators (ECPE)25 and the Assessment Skills and Knowledge for Student Affairs Practitioners and Scholars (ASK).26 These standards were compared to the competencies defined by the Delphi panel.

RESULTS

The initial request sent to the AACP Assessment SIG identified 115 potential experts based on their self-reported assessment-related activities and expertise. Thirty-one of these individuals were invited to participate in the study, 23 agreed to become study panelists, and 21 panelists completed round 2. Eighteen panelists participated in the optional comment period.

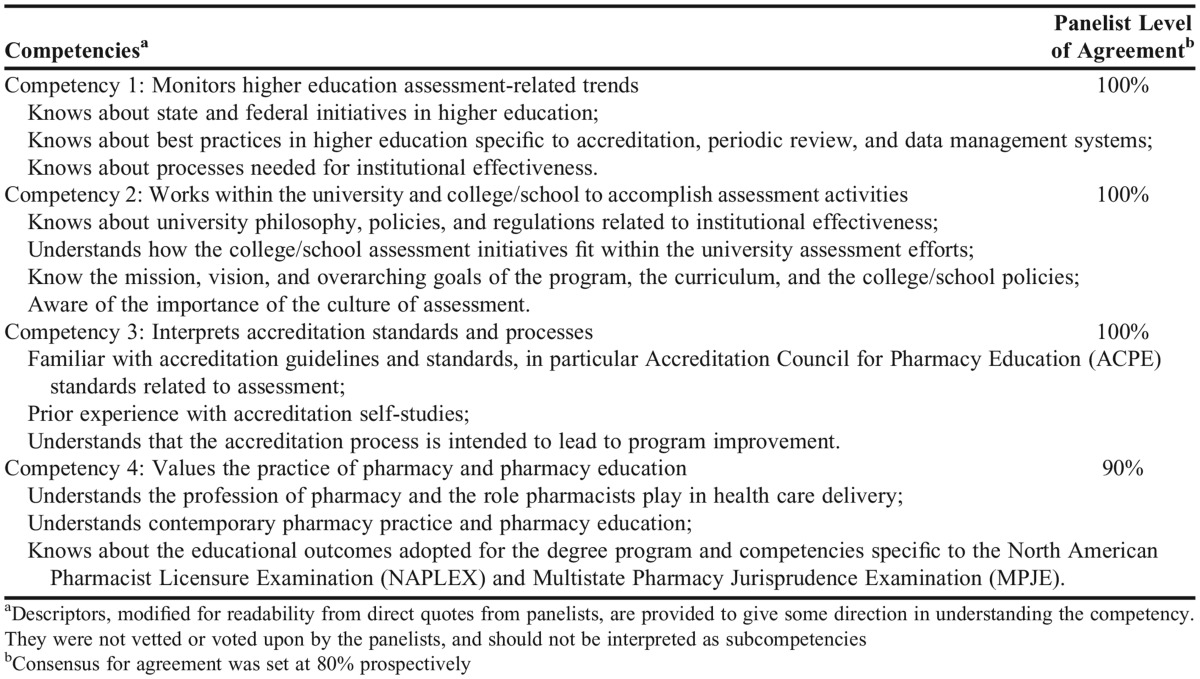

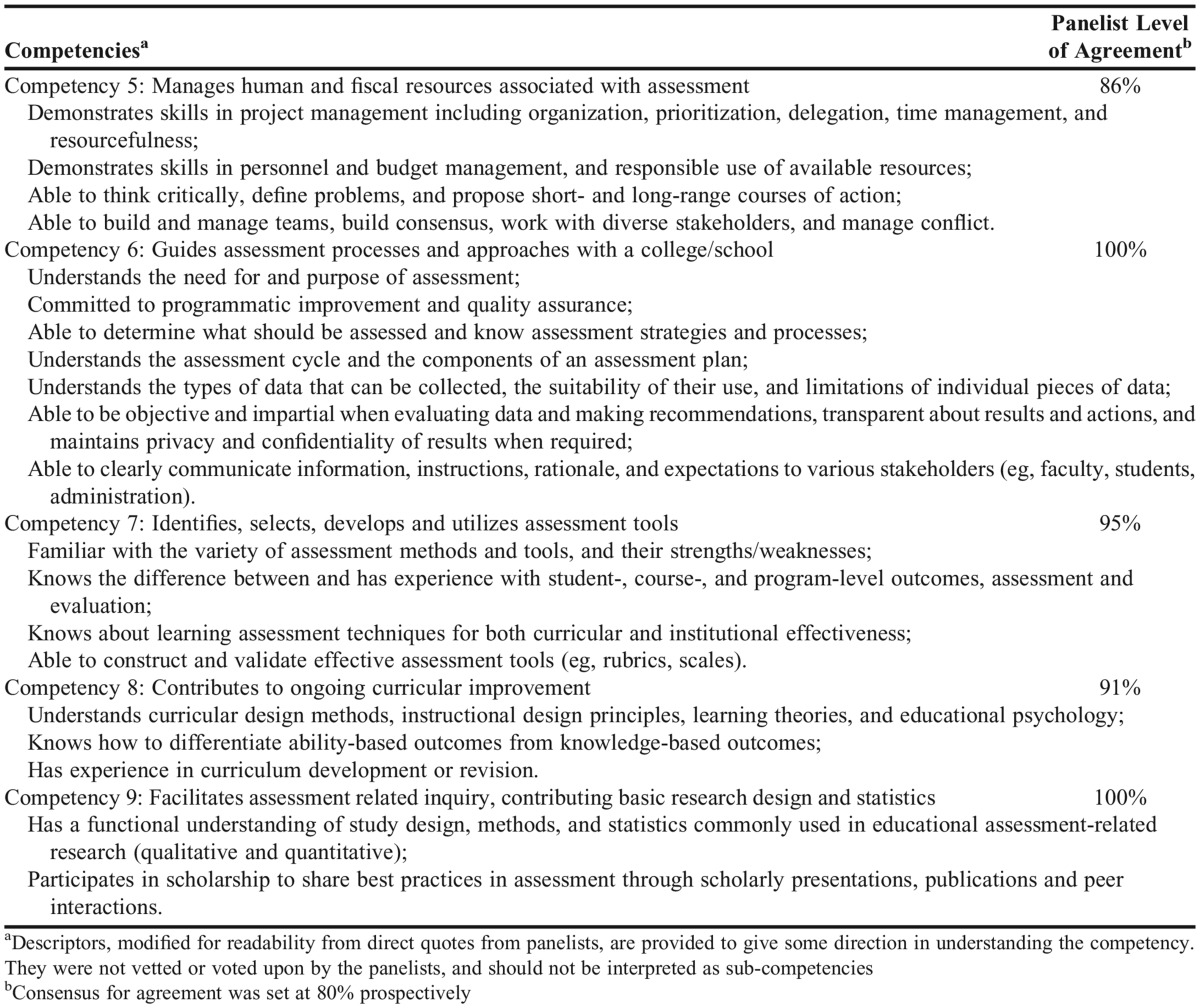

Twelve competency statements were defined. To facilitate communication and discussion, competencies were grouped by the authors into 3 areas: Context for Assessment (Table 1), Managing the Process of Assessment (Table 2), and Leadership of Assessment Activities (Table 3).

Table 1. Defined Assessment Competencies for Area 1: Context of Assessment

Table 2. Defined Assessment Competencies for Area 2: Managing the Process of Assessment

Table 3. Defined Assessment Competencies for Area 3: Leadership of Assessment Activities

Competencies from related fields acknowledge the importance of the Context for Assessment (area 1). The ASK standards highlight benchmarking and the politics of assessment,26 while the ECPE standards require situational analysis to become familiar with a program, its stakeholders, and its position within the larger organization.25 The competencies from the pharmacy Delphi panelists expand on these topics and categorize context-related competencies into the broader areas of higher education; the university, school, or college; accreditation; and the field of pharmacy.

Competencies from related fields also focus heavily on Managing the Process of Assessment (area 2). The competencies for program evaluators (ECPE) include categories for systematic inquiry, such as “design, data collection, analysis, interpretation, and reporting” and project management or “the nuts and bolts of conducting an evaluation, such as budgeting, coordinating resources, and supervising procedures.”25 Similarly, the competencies for student affairs practitioners (ASK) contain standards for assessment design, articulating learning and development outcomes, selection of data collection and management methods, assessment instruments, surveys used for assessment purposes, interviews and focus groups used for assessment purposes, assessment methods, program review and evaluation, and effective reporting and use of results.26 Pharmacy Delphi panelists emphasized elements of managing the process of assessment, such as managing the resources for assessment, guiding the process, and selecting the tools. Additionally, the pharmacy panelists specifically called out the role of assessment professionals in contributing to curricular improvement and participating in scholarly activities.

The competencies from pharmacy Delphi panelists differ from competencies in related fields in two ways: emphasis on leadership and definition of professional principles. The pharmacy Delphi panelists placed greater emphasis on Leadership of Assessment Activities (area 3). Competencies from related fields included some leadership-related elements, such as interpersonal competence (ie, “people skills, communication, negotiation, conflict, collaboration, and cross-cultural skills”)25 and educating others about assessment methods and outcomes.26

Competencies from related fields include a standard for assessment ethics26 and a category for professional practice.25 These professional principles competencies address institutional review boards, the Family Educational Rights and Privacy Act (FERPA), confidentiality, integrity, and honesty.25,26 The absence of a professional principles competency is a potential oversight in the work of the pharmacy Delphi panelists.

In addition, the global competencies developed in this study lack the detail found in other related competencies work. For example, competency 7: “identifies, selects, develops and utilizes assessment tools,” translates into three separate ASK competencies (ie, selection of data collection and management methods, assessment instruments, and surveys used for assessment purposes). Furthermore, each ASK competency has up to 7 ability statements.26

DISCUSSION

In this study, 21 pharmacy assessment experts completed a two-round Delphi process resulting in the definition of 12 competency statements for an assessment lead. The competencies focus on the knowledge, skills, and attitudes needed to lead assessment initiatives for a college or school, including curricular assessment and assessment related to PharmD program accreditation. The competencies were examined in comparison to other competencies that have been defined for assessment professionals in other areas of higher education.

In Context for Assessment (area 1), “monitors higher education assessment-related trends” (competency 1), “works within the university and college/school to accomplish assessment activities” (competency 2), and “interprets accreditation-related standards and process” (competency 3) are competencies that would be anticipated as needed for an assessment lead. These competencies likely appear in job descriptions and guide development efforts. However, “values the practice of pharmacy and pharmacy education” (competency 4) may require more intentional examination and action. In open commenting, Delphi participants shared that many assessment leads may not have a pharmacy background. As a result, they may not come to their positions with an appreciation of the current practice challenges graduates are being educated to address or the unique needs of pharmacy (or health professions) education. As nonpharmacy individuals enter colleges and schools as assessment leads, explicit consideration should be given to the pharmacy-related knowledge and experiences that will help them accomplish their work. Similarly, pharmacy-trained individuals who transition into assessment roles may need to gain assessment-specific knowledge and experience to accomplish their work.

Managing the Process of Assessment (area 2) addresses the management competencies necessary to run assessment activities efficiently and effectively, including planning, budgeting, organizing, and staffing. In particular, competency 5 addresses the management of resources (human and fiscal) that are associated with assessment. While some assessment leads have direct responsibilities for hiring of personnel and management of budgets, others have only indirect authority. Being a good steward of resources is critical for a lead whether or not they possess formal authority. For example, the assessment lead may serve an important role in recruiting faculty members to assessment committees or recommending the purchase of tools (eg, software, simulators).

Leadership of Assessment Activities (area 3) is a recognition of the assessment lead’s role in “the process of influencing an organized group toward accomplishing their goals.”27 Hoey and Bailey investigated the changing roles in institutional assessment to determine if the scope of skills necessary is broadening from a focus on technical expertise to include aspects of leadership and strategic planning.28 Leadership has been described as setting direction, motivating action, and aligning people.29 Strong leaders have been shown to use five practices: Model the Way, Inspire a Shared Vision, Challenge the Process, Enable Others to Act, and Encourage the Heart.30 In other words, strong leaders have the ability to: create standards of excellence, set an example for others to follow, look for innovative ways to improve the organization, envision the future of what the organization can become, experiment and take risks, foster collaboration, build spirited teams, recognize contributions, and celebrate accomplishments.30 These practices and behaviors, which can be developed, are important to the work of the assessment lead.

The three areas of competency suggest roles for assessment professionals as experts, managers, and leaders. Hiring or internally recruiting an assessment lead who has all of the expertise needed may not be possible. To that end, it is important to encourage assessment professionals to determine their needs relative to these competencies and to identify areas for knowledge or skill expansion. Similar to pharmacists engaging in continuing professional development,31,32 assessment leads are required to take responsibility for their own professional growth, and colleges and schools must support them in this process.

While having a strong assessment lead is important, colleges and schools must also deliberately work to identify collaborations, develop teams, and build a culture of assessment in order to advance assessment efforts. The role of the assessment lead is not to “do assessment” for the organization, but to recruit faculty members and help to ensure that team members are all heading in the same direction. It is essential for the lead to involve and encourage others, while also providing expertise in assessment theories, principles, methods, analytical techniques, and reporting strategies.

Collaborations are available in many locations. Working within the university to accomplish assessment activities (competency 2) will enhance connections to local experts. The importance of broad participation in assessment across an institution has been chronicled by three papers examining contemporary issues in assessment in American higher education: Schuh examined the role of student affairs,33 Volkwein described the role of institutional research,34 and Gilchrist and Oakleaf detailed the role of librarians.35 These papers emphasize that successful assessment programs involve collaboration across the institution and are not simply the role and responsibility of one individual. In leading the assessment efforts (competency 11), the lead needs to engage faculty members, staff, students and stakeholders in the work of assessment, and to motivate meaningful contributions. Recognizing the need for and importance of these skills, Duncan-Hewitt and colleagues36 listed credibility and collegiality as criteria for success in establishing an office of teaching, learning, and assessment. To aid in engaging others, Hutchings provides recommendations for strengthening the connection between faculty members and the enterprise of assessment.37

Assessment is an asset in transforming curricula.1 Each assessment initiative can benefit from a team of individuals that helps to define the focus, debate options, analyze data, and make recommendations. In serving as a resource to others on assessment-related theories and principles (competency 12), the lead must facilitate assessment at a variety of levels (eg, student learning, programmatic). For these efforts to succeed, a culture of assessment is needed. One of the keys to a culture of assessment is leaders who are actively committed to supporting the efforts of assessment.38 In addition, this culture can be accomplished by recognizing and supporting faculty members who are champions of assessment, allocating resources to support assessment efforts, supporting faculty innovation and inquiry, and providing release time for the work to be conducted. Additional methods for creating a culture of assessment have recently been summarized.6

This study focused on responses from assessment experts in pharmacy. Responses may have been influenced by the panelists’ current job descriptions or by the panelists’ concerns about feasibility given resource constraints. Research that includes perspectives from pharmacy faculty members, administrators, and/or assessment experts from other health professions could yield new insights related to these competency areas. Given that assessment requires the active engagement of many, these competencies could be built upon to describe the desired competencies of assessment committee chairs, assessment committee members, and others tasked with assessment-related responsibilities.

When compared against assessment-related competencies from outside the profession, several opportunities for enhancement were noted. The competencies derived from this research were not as detailed as those from other groups. Further detail may be needed to better support assessment professionals in pharmacy. In addition, defining levels of competency (ie, basic, intermediate, advanced) may make the competencies more useful in the professional development of assessment leads.

Several areas within the competencies may require further discussion, debate, and refinement. For instance, competencies related to the professional practice of assessment (eg, ethics, confidentiality) might be further detailed. In addition, competencies related to influence could be elaborated, such as competencies needed for the scholarship of assessment, advancing assessment practices within the academy, or supporting broad-scale curricular change. Information on pharmacy assessment professionals’ abilities relative to these competencies could be useful in framing programming and guiding the development of support tools.

CONCLUSIONS

With multi-faceted roles as experts, managers, and leaders, assessment leads must continually work to advance their skills. The competencies defined in this research could be used by assessment leads in self-assessing areas for professional development. While not intended as a job description, an awareness of these competencies can help guide administrators as they identify individuals to serve as assessment leads. Future research is needed to elaborate on the competencies and determine assessment leads’ abilities relative to the competencies.

REFERENCES

- 1. Farris KB, Demb A, Janke KK, Kelley K, Scott SA. Assessment to transform competency-based curricula. Am J Pharm Educ. 2009;73(8):Article 158. doi: 10.5688/aj7308158.

- 2. American Association of Colleges of Pharmacy. Faculty and professional staff roster. http://www.aacp.org/about/membership/Documents/AACPRoster.htm. Accessed August 12, 2015.

- 3. Divall MV, Alston GL, Bird E, et al. A faculty toolkit for formative assessment in pharmacy education. Am J Pharm Educ. 2014;78(9):Article 160. doi: 10.5688/ajpe789160.

- 4. Schwartz AH, Daugherty KK, O’Neil CK, et al. A curriculum committee toolkit for addressing the 2013 CAPE outcomes. http://www.aacp.org/resources/education/cape/Pages/default.aspx. Accessed August 14, 2015.

- 5. Fulford MJ, Souza JM Associates. Are you CAPE-A.B.L.E? Center for the Advancement of Pharmacy Education: an assessment blueprint for learning experiences. 2014. http://www.aacp.org/resources/education/cape/Pages/default.aspx. Accessed August 14, 2015.

- 6. Janke KK, Kelley KA, Kuba SE, et al. Re-envisioning assessment for the Academy and the Accreditation Council for Pharmacy Education’s standards revision process. Am J Pharm Educ. 2013;77(7):Article 141. doi: 10.5688/ajpe777141.

- 7. Accreditation Council for Pharmacy Education. Accreditation standards and key elements for the professional program in pharmacy leading to the doctor of pharmacy degree. 2015. https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf. Accessed February 5, 2015.

- 8. Hasson F, Keeney S, McKenna H. Research guidelines for the Delphi survey technique. J Adv Nurs. 2000;32(4):1008–1015.

- 9. Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–382. doi: 10.1046/j.1365-2648.2003.02537.x.

- 10. Delbecq A, Van de Ven AH, Gustafson DH. The Delphi technique. In: Group Techniques for Program Planning: A Guide to Nominal Group and Delphi Processes. Glenview, IL: Scott, Foresman and Company; 1975:83-107.

- 11. Fink A, Kosecoff J, Chassin M, Brook RH. Consensus Methods: Characteristics and Guidelines for Use. A Rand Note. Santa Monica, CA: RAND; 1991. http://www.rand.org/pubs/notes/2007/N3367.pdf. Accessed August 15, 2015.

- 12. Hsu C, Sandford B. The Delphi technique: making sense of consensus. Pract Assess Res Eval. 2007;12(10):1–8.

- 13. Keeney S, Hasson F, McKenna HP. A critical review of the Delphi technique as a research methodology for nursing. Int J Nurs Stud. 2001;38(2):195–200. doi: 10.1016/s0020-7489(00)00044-4.

- 14. Williams PL, Webb C. The Delphi technique: a methodological discussion. J Adv Nurs. 1994;19(1):180–186. doi: 10.1111/j.1365-2648.1994.tb01066.x.

- 15. Kiessling C, Dieterich A, Fabry G, et al. Communication and social competencies in medical education in German-speaking countries: the Basel consensus statement. Results of a Delphi survey. Patient Educ Couns. 2010;81(2):259–266. doi: 10.1016/j.pec.2010.01.017.

- 16. Penciner R, Langhan T, Lee R, McEwen J, Woods RA, Bandiera G. Using a Delphi process to establish consensus on emergency medicine clerkship competencies. Med Teach. 2011;33(6):e333–e339. doi: 10.3109/0142159X.2011.575903.

- 17. Janke KK, Traynor AP, Boyle CJ. Competencies for student leadership development in doctor of pharmacy curricula to assist curriculum committees and leadership instructors. Am J Pharm Educ. 2013;77(10):Article 222. doi: 10.5688/ajpe7710222.

- 18. Byrne A, Boon H, Austin Z, Jurgens T, Raman-Wilms L. Core competencies in natural health products for Canadian pharmacy students. Am J Pharm Educ. 2010;74(3):Article 45. doi: 10.5688/aj740345.

- 19. Burkard A, Cole D, Ott M, Stoflet T. Entry-level competencies of new student affairs professionals: a Delphi study. NASPA J. 2004;42(3):283–309.

- 20. Smith KS, Simpson RD. Validating teaching competencies for faculty members in higher education: a national study using the Delphi method. Innov High Educ. 1995;19(3):223–234.

- 21. Tigelaar DEH, Dolmans DH, Wolfhagen IHAP, Van der Vleuten CPM. The development and validation of a framework for teaching competencies in higher education. High Educ. 2004;48(2):253–268.

- 22. Keeney S, Hasson F, McKenna H. Consulting the oracle: ten lessons from using the Delphi technique in nursing research. J Adv Nurs. 2006;53(2):205–212. doi: 10.1111/j.1365-2648.2006.03716.x.

- 23. Aronson BD, Janke KK, Traynor AP. Investigating student pharmacist perceptions of professional engagement using a modified Delphi process. Am J Pharm Educ. 2012;76(7):Article 125. doi: 10.5688/ajpe767125.

- 24. Traynor AP, Boyle CJ, Janke KK. Guiding principles for student leadership development in the doctor of pharmacy program to assist administrators and faculty members in implementing or refining curricula. Am J Pharm Educ. 2013;77(10):Article 221. doi: 10.5688/ajpe7710221.

- 25. Stevahn L, King JA, Ghere G, Minnema J. Establishing essential competencies for program evaluators. Am J Eval. 2005;26(1):43–59.

- 26. ASK standards: assessment skills and knowledge for student affairs practitioners and scholars. http://www.myacpa.org/ask-standards-booklet. Accessed August 31, 2015.

- 27. Rauch C, Behling O. Functionalism: basis for alternate approach to the study of leadership. In: Hunt J, Hosking D, Schriesheim C, Steward R, eds. Leaders and Managers. Elmsford, NY: Pergamon Press; 1984:45-62.

- 28. Hoey JJ, Bailey M. Developing strategic leadership for institutional effectiveness and assessment. 2013. http://www.aalhe.org/resource-room/conference-resources/2013-conference/hoey-bailey_developing-strategic/. Accessed August 14, 2015.

- 29. Kotter JP. What leaders really do. Harv Bus Rev. 2001;(December):85-96.

- 30. Kouzes JM, Posner BZ. The Leadership Challenge. 4th ed. San Francisco, CA: Jossey-Bass; 2007.

- 31. Rouse MJ. Continuing professional development in pharmacy. Am J Health Syst Pharm. 2004;61(19):2069–2076. doi: 10.1093/ajhp/61.19.2069.

- 32. Rouse MJ. Continuing professional development in pharmacy. J Am Pharm Assoc. 2004;44(4):517–520. doi: 10.1331/1544345041475634.

- 33. Schuh JH, Gansemer-Topf AM. The role of student affairs in student learning assessment (NILOA Occasional Paper No.7). 2010. http://learningoutcomesassessment.org/documents/StudentAffairsRole.pdf. Accessed August 15, 2015.

- 34. Volkwein JF. Gaining ground: the role of institutional research in assessing student outcomes and demonstrating institutional effectiveness (NILOA Occasional Paper No.11). 2011. http://learningoutcomesassessment.org/documents/Volkwein%20Occ%20Paper%2011.pdf. Accessed August 15, 2015.

- 35. Gilchrist D, Oakleaf M. An essential partner: the librarian’s role in student learning assessment (NILOA Occasional Paper No.14). 2012. http://learningoutcomesassessment.org/occasionalpaperfourteen.htm. Accessed August 15, 2015.

- 36. Duncan-Hewitt W, Jungnickel P, Evans RL. Development of an office of teaching, learning and assessment in a pharmacy school. Am J Pharm Educ. 2007;71(2):Article 35. doi: 10.5688/aj710235.

- 37. Hutchings P. Opening doors to faculty involvement in assessment (Occasional Paper 4). 2010. http://learningoutcomesassessment.org/documents/OccasionalPaper4.pdf. Accessed August 15, 2015.

- 38. Suskie L. Assessing Student Learning: A Common Sense Guide. 2nd ed. San Francisco, CA: Jossey-Bass; 2009.