Abstract

Objectives. To assess the relative contributions and quality of practice-based evidence (PBE) and research-based evidence (RBE) in The Guide to Community Preventive Services (The Community Guide).

Methods. We developed operational definitions for PBE and RBE in which the main distinguishing feature was whether allocation of participants to intervention and comparison conditions was under the control of researchers (RBE) or not (PBE). We conceptualized a continuum between RBE and PBE. We then categorized 3656 studies in 202 reviews completed since The Community Guide began in 1996.

Results. Fifty-four percent of studies were PBE and 46% RBE. Community-based and policy reviews had more PBE. Health care system and programmatic reviews had more RBE. The majority of both PBE and RBE studies were of high quality according to Community Guide scoring methods.

Conclusions. The inclusion of substantial PBE in Community Guide reviews suggests that evidence of adequate rigor to inform practice is being produced. This should increase stakeholders’ confidence that The Community Guide provides recommendations with real-world relevance. Limitations in some PBE studies suggest a need for strengthening practice-relevant designs and external validity reporting standards.

If we are to achieve important public health goals of preventing and reducing morbidity and mortality, then we must find and use effective population-based public health programs, services, and policies (collectively identified hereafter as interventions) for a range of diverse populations. We must also deploy interventions that can be implemented in the different settings in which people live, work, and play.1–3 Yet, securing adequate information about the effectiveness of interventions across all of these settings and populations can be challenging.

Many people consider randomized controlled trials (RCTs) to be the gold standard for assessing the effectiveness of interventions. In RCTs, the researcher has a substantial degree of control over the situation and randomizes participants to receive or not to receive the intervention; participants are typically volunteers who receive the same intervention in much the same way; the intervention is usually delivered by people with similar levels of training and experience; and there are typically sufficient resources to ensure that the intervention is delivered as intended.4 Such controlled studies put a high priority on guarding against selection bias and confounding to enhance internal validity, that is, does the intervention work under the conditions specified in the study?4

Attention to internal validity is essential because, without it, we can have no confidence that the intervention works at all. However, such controlled situations may be different from real-world situations faced by public health professionals who may wonder if they can achieve comparable outcomes in their populations, settings, and contexts, with fewer resources.5–8 A number of scientists have therefore called for greater emphasis to be placed on external validity—that is, does the intervention work across various populations, settings, resource constraints, and other conditions commonly encountered in practice?9–12 Such considerations have led to the coining of phrases such as “If we want more evidence-based practice, then we need more practice-based evidence.”10

This phrase has been repeated many times by intervention researchers, public health practitioners, policymakers, and others. Accordingly, The Guide to Community Preventive Services (The Community Guide; http://www.thecommunityguide.org)13 receives regular requests to include practice-based evidence (PBE) in our systematic reviews of the effectiveness of informational and educational, behavioral and social, environmental and policy, and health system interventions. Because Community Guide systematic reviews form the basis of evidence-based recommendations made by the Community Preventive Services Task Force (CPSTF), and because many funders and policymakers are now requiring public health practitioners to use evidence-based interventions,14–18 many stakeholders want the CPSTF’s recommendations to be informed by PBE.

Requests to include PBE in Community Guide reviews demonstrate 3 widely held, but false, impressions: (1) The Community Guide only includes evidence from RCTs and other such highly controlled studies, (2) PBE may not have adequate rigor to be included in Community Guide reviews, and (3) The Community Guide’s “gold standard” reviews may not address a wide range of real-world public health needs. Contrary to these impressions, since the inception of The Community Guide in 1996, all of its reviews have assessed the external as well as internal validity of included studies.19 Furthermore, inclusion criteria for all Community Guide reviews permit PBE by allowing a range of different study designs and including evidence gleaned through program evaluation and surveillance.

There is still a widespread lack of clarity about what constitutes PBE. Several recent publications have aimed to define or circumscribe PBE, particularly for public health. One defined PBE as data from field-based practices that demonstrate achievement of intended effects or benefits.20 A recent review delineated PBE as requiring an in-depth understanding of the practice setting—including the challenges faced by the deliverers and recipients of the intervention—and satisfying 1 or more of the following principles:

- using participatory approaches to engage stakeholders in the study,
- evaluating ongoing programs and policies (via natural experiments),
- using study designs that place greater emphasis on external validity,
- using system science methods to understand complexity and context, or
- using practice-based networks to conduct studies.5,21

In addition, a number of publications aim to increase the rigor and trustworthiness of PBE by recommending specific methods or measures.8,21–28

Despite the openness of The Community Guide to PBE, the extent to which its systematic reviews actually contain PBE, and whether the amount of PBE varies by topic or type of review,13 have never been assessed. In addition, the comparative rigor, or quality, of PBE and of research-based evidence (RBE; i.e., evidence from studies that are researcher-driven and focused primarily on internal validity) included in Community Guide reviews has never been assessed.

The goals of this research project were, therefore, to (1) develop a scheme that could be used to categorize a broad range of study types as PBE or RBE, (2) determine the relative contributions of PBE and RBE in Community Guide systematic reviews, and (3) determine whether PBE and RBE differ in characteristics such as study design, intervention type, setting, study location, and quality of execution.

METHODS

The systematic reviews included in this project were Community Guide reviews that were conducted, completed, and posted on The Community Guide Web site (http://www.thecommunityguide.org) between December 1997 and January 2014. We abstracted information from all studies that contributed to CPSTF recommendations as well as those that were excluded from CPSTF consideration because of “limited” quality of execution (discussed toward the end of Methods and in Table 1).19 Relevant studies were located through Community Guide archives; published journal articles on Community Guide reviews; the book, The Guide to Community Preventive Services: What Works to Promote Health?30; and personal communication with experts involved in the reviews. Where reviews have been updated over time, only the most recent review was used. A small number of studies were included in more than 1 review; because each review was considered to address a distinct evidence base, such studies were included in this analysis each time they appeared.

TABLE 1—

Details on Characteristics of Studies: The Community Guide, 1997–2014

| Study Characteristics | Details or Examples |
| --- | --- |
| Study design | |
| RCT | RCT study design |
| Non-RCT | Prospective cohort, time-series, before–after, nonrandomized trial |
| Intervention strategy type | |
| Policy | Legislative or policy changes (e.g., universal motorcycle helmet law, smoke-free policies) |
| Program | For example, quitline interventions, mass-reach health communications interventions, community-wide education programs |
| Country | |
| United States | Studies that were conducted in the United States |
| Non-United States | Studies not conducted in the United States, usually in other high-income countries, according to Community Guide methods |
| Setting | |
| Community | Home, community, nursing home, school |
| Health care | Hospital, private practice, clinic, pharmacy |
| Worksite | Conducted among employees in a workplace |
| Suitability of study designᵃ | |
| Greatest | Studies that collected data on exposed and comparison populations prospectively (e.g., RCT, prospective cohort, other designs such as time-series and pre–post study with concurrent comparison group) |
| Moderate | Studies that collected data retrospectively or lacked a comparison group, but conducted multiple pre- and postmeasurements on their study population(s) (e.g., case–control, interrupted time series) |
| Least | Studies with least suitable designs were cross-sectional studies, before–after studies, and those that involved only a single pre- or postmeasurement in the intervention population. |
| Quality of executionᵇ | |
| Good or fair | Studies with < 5 limitations |
| Limited | Studies with ≥ 5 limitations |

Note. RCT = randomized controlled trial.

ᵃSuitability of study design: The Community Guide classifies studies on the basis of the suitability of their study design.29

ᵇQuality of execution was determined by the systematic review teams who originally conducted the individual reviews, using Community Guide methods on quality scoring.19 The quality scoring methods rate studies based on threats to internal and external validity in 6 areas (descriptions, sampling, measurement, analysis, interpretation of results, and other). Studies with < 5 limitations were considered to be of “good” or “fair” quality, and included in the analysis for the original review. Studies with ≥ 5 limitations were considered “limited,” and were excluded from all analyses. Studies with ≥ 5 limitations therefore did not contribute to Community Preventive Services Task Force recommendations and other findings (recommended against, insufficient evidence).

Operational Definitions for Practice- and Research-Based Evidence

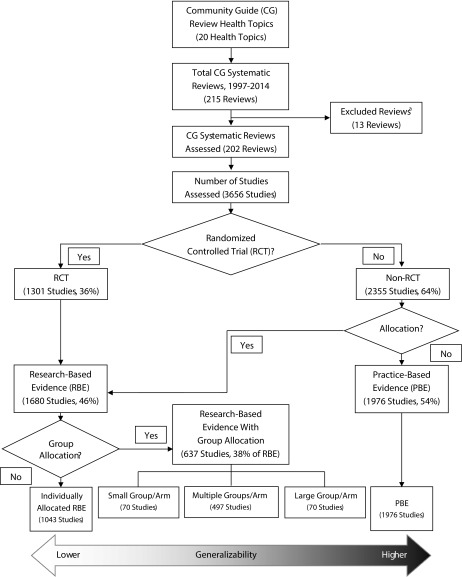

Because operational definitions did not exist elsewhere, our first task was to develop definitions that would create meaningful distinctions between PBE and RBE, based on information that was readily available for the individual studies included in Community Guide reviews. Figure 1 illustrates the decision process used for categorizing studies. The main feature we used to distinguish RBE from PBE was whether researchers allocated participants to intervention and comparison conditions. We categorized studies as RBE if the researchers allocated (randomly or nonrandomly) the intervention to individuals or to specific groups of individuals. Conversely, we categorized observational studies as PBE because they typically involve assessing an intervention implemented in practice to improve health or other outcomes, and do not involve allocating individuals or groups to the intervention.

FIGURE 1—

Decision Process for Categorizing Studies as Practice-Based Evidence and Research-Based Evidence Along With the Distribution of Community Guide Studies, by Type of Evidence: 1997–2014

Note. The Community Guide has conducted systematic reviews on 20 health topic areas, consisting of 215 systematic reviews. Each review consists of varying numbers of studies, which were categorized based on allocation of the intervention.

ᵃThirteen reviews were excluded because of lack of information from older reviews (8 reviews) or 0 included studies in the review (5 reviews).

Although these simple operational definitions of PBE and RBE provide an objective means to categorize a large number of studies, they fail to fully reflect the broader conceptualization of PBE that defines it as evidence that is collected with particular attention to its relevance under the constraints of real-world practice.5,20,21 We therefore aimed to also capture some of the variability in external validity, particularly among studies classified as RBE. We considered identifying RCT variants—such as practical clinical trials31,32 and randomized encouragement trials6—developed to strengthen RCTs’ external validity, but information on such variants was not regularly available. Instead, we categorized RBE according to whether allocation to intervention and control conditions was done individually or by group. Our rationale was that results from RBE studies with allocation at the individual level might typically be less likely to translate well to real-world practice because of the high level of control usually exerted over those individuals. Conversely, allocating interventions to groups can increase generalizability because groups are often studied in their natural setting, and the heterogeneity of individuals within groups is likely to be more representative of the natural variation between individuals in the target population.5,6

We also further distinguished between the size and number of groups allocated: (1) 1 group per intervention arm, (2) multiple groups per intervention arm, or (3) 1 or more large geopolitical settings per intervention arm. This was because control over how and to whom the intervention is delivered is generally less rigid when allocation is to larger groups—such as entire cities or communities—than when the intervention is allocated to smaller groups such as classrooms.

As shown in Figure 1, taking into account both study intent and external validity, we postulated that the available evidence on public health interventions exists on a continuum of generalizability to real-world settings. As a general rule, the individual RCT and real-world evaluations fall at the opposite ends of this continuum, and RBE with group allocation (RBE-GA) spans the middle, with larger and less restrictive group allocations tending to produce more generalizable results.

Assessing and Summarizing the Body of Evidence

Details from each study were independently abstracted by two reviewers. Four reviewers in total participated in abstraction, in different combinations of two (Figure 1). We automatically labeled the RCTs as RBE because they randomly allocate participants to intervention and control groups. We assessed all remaining studies for allocation and labeled them accordingly (i.e., allocation = RBE; no allocation = PBE). We labeled RBE studies that allocated participants by group as RBE-GA and categorized them by size and number of groups allocated.
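The labeling rules in this paragraph can be sketched in code. The following Python snippet is a minimal illustration only, not the tool the reviewers used; the class names, field names, and enum values (`Study`, `Allocation`, `GroupScale`, `categorize`) are hypothetical and were chosen here to mirror the wording of the decision process.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Allocation(Enum):
    """How researchers allocated the intervention (hypothetical field values)."""
    NONE = "none"              # no researcher allocation (observational study)
    INDIVIDUAL = "individual"  # individuals allocated to study arms
    GROUP = "group"            # groups allocated (e.g., schools, worksites)


class GroupScale(Enum):
    """Size and number of groups allocated, as distinguished in the text."""
    ONE_GROUP_PER_ARM = 1
    MULTIPLE_GROUPS_PER_ARM = 2
    LARGE_GEOPOLITICAL_UNITS = 3


@dataclass
class Study:
    is_rct: bool
    allocation: Allocation
    group_scale: Optional[GroupScale] = None  # only meaningful for group allocation


def categorize(study: Study) -> str:
    """Return 'PBE', 'RBE', or 'RBE-GA' for one study."""
    # Non-RCTs without researcher allocation are practice-based evidence.
    if not study.is_rct and study.allocation is Allocation.NONE:
        return "PBE"
    # Everything else is RBE: RCTs automatically, non-RCTs by virtue of allocation.
    # RBE studies that allocated by group receive the RBE-GA label.
    if study.allocation is Allocation.GROUP:
        return "RBE-GA"
    return "RBE"
```

For example, under these assumptions `categorize(Study(False, Allocation.NONE))` gives `"PBE"`, and a cluster-randomized trial such as `Study(True, Allocation.GROUP, GroupScale.MULTIPLE_GROUPS_PER_ARM)` gives `"RBE-GA"`.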

To assess whether the comparative rigor or quality between RBE and PBE differed, we collected other study details from the summary evidence tables for the original reviews, including study location (United States vs non–United States), setting, suitability of study design, and quality of execution rating.18,29 The quality of execution rating assesses limitations related to internal and external validity in the categories of population and intervention description, sampling, measurement, data analysis, interpretation of results, and other biases or concerns. We classified studies as good or fair quality if they had fewer than 5 limitations, and as limited quality if they had 5 or more (Table 1).19

Any disagreements between reviewers were discussed and resolved by consensus and, when required, were resolved by consulting a third reviewer. Initially, the most common disagreements related to the appropriate categorization of RBE studies with group allocation. Disagreements diminished as experience with decision rules increased.

We generated the frequencies for the study characteristics by using Epi Info 7 (Centers for Disease Control and Prevention, Atlanta, GA). We determined the proportion of PBE to RBE studies for each Community Guide health topic, and for each individual Community Guide systematic review within each topic. We conducted cross-tabulations on all study characteristics to allow examination of trends by the type of evidence and to find any significant patterns.
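The analysis itself was run in Epi Info 7. As a rough illustration of what a cross-tabulation with row percentages involves, the Python sketch below tabulates a handful of made-up study records; the records, field names, and function names are hypothetical and are not drawn from the study data.

```python
from collections import Counter, defaultdict


def cross_tabulate(records, row_field, col_field):
    """Count records for every (row value, column value) combination."""
    table = defaultdict(Counter)
    for record in records:
        table[record[row_field]][record[col_field]] += 1
    return table


def row_percentages(table):
    """Convert each row of counts into whole-number percentages of the row total."""
    result = {}
    for row, counts in table.items():
        row_total = sum(counts.values())
        result[row] = {col: round(100 * n / row_total) for col, n in counts.items()}
    return result


# Hypothetical records for illustration only (not data from the reviews).
studies = [
    {"intervention": "policy", "evidence": "PBE"},
    {"intervention": "policy", "evidence": "PBE"},
    {"intervention": "program", "evidence": "RBE"},
    {"intervention": "program", "evidence": "PBE"},
]

table = cross_tabulate(studies, "intervention", "evidence")
percentages = row_percentages(table)
```

With these toy records, both policy studies fall under PBE, and the program studies split evenly between RBE and PBE.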

RESULTS

As shown in Figure 1, The Community Guide includes systematic reviews of intervention strategies on 20 health topics to date. Within these health topics, there has been a total of 215 systematic reviews. This project included evidence from 202 systematic reviews, containing 3656 studies, published between 1960 and 2012. We excluded the remaining 13 reviews either because of the inability to obtain detailed information on the review (8 reviews had inadequate information on included studies) or because of the lack of evidence within the review (5 reviews had zero studies included in the analysis, leading to an insufficient evidence recommendation). Included studies used a wide variety of study designs, assessed policy and programmatic interventions implemented at scales ranging from small to national, and involved a combination of novel interventions, adaptations of interventions that had been implemented elsewhere, and replications.

There was substantial variation in the number of studies within each health topic (median = 144 studies; range = 15–550), the number of reviews within each health topic (median = 9 reviews; range = 1–37), and the number of studies per review (median = 12; range = 1–155). Of the 3656 studies, 36% of the studies were RCTs, and the remaining 64% were categorized as non-RCT (Figure 1). Of the non-RCTs, 379 studies met the operational definition for RBE, and the remaining 1976 were classified as PBE. The overall proportion of PBE studies and RBE studies, therefore, was 54% (n = 1976) and 46% (n = 1680), respectively (Figure 1). Among the RBE studies, we categorized 38% (n = 637) as RBE-GA.
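The summary statistics in this paragraph can be reproduced from the per-topic counts reported in Table 2 and the RCT counts reported in Table 3. The Python sketch below is a reader's check under that assumption, not part of the original analysis; the variable and function names are illustrative.

```python
from statistics import median

# (health topic, number of reviews, number of studies), transcribed from Table 2
TABLE_2 = [
    ("Adolescent health", 1, 15), ("Health communication", 1, 22),
    ("Birth defects", 2, 25), ("Asthma", 2, 31), ("Nutrition", 1, 63),
    ("Obesity", 12, 73), ("Diabetes", 8, 109), ("HIV/AIDS", 11, 119),
    ("Mental health", 5, 121), ("Worksite", 6, 141),
    ("Physical activity", 14, 147), ("Violence", 23, 147),
    ("Cardiovascular disease", 3, 155), ("Health equity", 11, 185),
    ("Alcohol", 10, 218), ("Oral health", 5, 263),
    ("Motor vehicle injury", 22, 375), ("Cancer", 37, 397),
    ("Vaccination", 20, 500), ("Tobacco use", 21, 550),
]

reviews_per_topic = [reviews for _, reviews, _ in TABLE_2]
studies_per_topic = [studies for _, _, studies in TABLE_2]


def percent(part, whole):
    """Whole-number percentage, rounded as in the text."""
    return round(100 * part / whole)


total_studies = sum(studies_per_topic)        # 3656
median_studies = median(studies_per_topic)    # 144.0
median_reviews = median(reviews_per_topic)    # 9.0

# Study counts by type of evidence, taken from Table 3
rct, non_rct_rbe, pbe, rbe_ga = 1301, 379, 1976, 637
rbe = rct + non_rct_rbe                       # 1680

print(percent(rct, total_studies))   # 36 (RCTs among all studies)
print(percent(pbe, total_studies))   # 54 (PBE among all studies)
print(percent(rbe, total_studies))   # 46 (RBE among all studies)
print(percent(rbe_ga, rbe))          # 38 (RBE-GA among RBE studies)
```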

The categorization of type of evidence within each health topic reviewed in The Community Guide is displayed in Table 2. These topics appear in ascending order by the number of studies included. Tobacco use, motor vehicle injury, vaccinations, oral health, alcohol, health equity, violence, worksite, diabetes, asthma, birth defects, and health communications had a larger proportion of PBE. Cancer, cardiovascular disease, physical activity, mental health, HIV/AIDS, obesity, nutrition, and adolescent health had a larger proportion of RBE. This discrepancy results from a higher proportion of interventions within the former set of topics that are most feasibly implemented within entire geopolitical units (e.g., states), and for which researcher allocation is difficult or impossible.

TABLE 2—

Distribution of Evidence by Community Guide Topic Area: 1997–2014

| Health Topic | No. of Reviews | No. of Studies | PBE, No. (%) | RBE-GA, No. (%) | RBE, No. (%) |
| --- | --- | --- | --- | --- | --- |
| Adolescent health | 1 | 15 | 2 (13) | 6 (40) | 7 (47) |
| Health communication | 1 | 22 | 14 (64) | 8 (36) | 0 (0) |
| Birth defects | 2 | 25 | 22 (88) | 2 (8) | 1 (4) |
| Asthma | 2 | 31 | 16 (52) | 2 (6) | 13 (42) |
| Nutrition | 1 | 63 | 15 (24) | 45 (71) | 3 (5) |
| Obesity | 12 | 73 | 17 (23) | 16 (22) | 40 (55) |
| Diabetes | 8 | 109 | 69 (63) | 17 (16) | 23 (21) |
| HIV/AIDS | 11 | 119 | 18 (15) | 52 (44) | 49 (41) |
| Mental health | 5 | 121 | 40 (33) | 23 (19) | 58 (48) |
| Worksite | 6 | 141 | 90 (64) | 32 (23) | 19 (13) |
| Physical activity | 14 | 147 | 71 (48) | 41 (28) | 35 (24) |
| Violence | 23 | 147 | 76 (52) | 29 (20) | 42 (29) |
| Cardiovascular disease | 3 | 155 | 25 (16) | 57 (37) | 73 (47) |
| Health equity | 11 | 185 | 112 (61) | 10 (5) | 63 (34) |
| Alcohol | 10 | 218 | 173 (79) | 11 (5) | 34 (16) |
| Oral health | 5 | 263 | 204 (78) | 17 (6) | 42 (16) |
| Motor vehicle injury | 22 | 375 | 326 (87) | 38 (10) | 11 (3) |
| Cancer | 37 | 397 | 85 (21) | 111 (28) | 201 (51) |
| Vaccination | 20 | 500 | 264 (53) | 54 (11) | 182 (36) |
| Tobacco use | 21 | 550 | 337 (61) | 66 (12) | 147 (27) |
| Total | 215 | 3656 | 1976 (54) | 637 (17) | 1043 (29) |

Note. PBE = practice-based evidence; RBE = research-based evidence; RBE-GA = research-based evidence with group allocation. Health topics are listed in ascending order based on the number of studies included in the systematic reviews.

Among the non-RCTs, we categorized 16% as RBE by virtue of allocation (Figure 1 and Table 3). Almost all (96%) studies that evaluated policy interventions were PBE although there was a more balanced distribution between RBE (58%) and PBE (42%) among the studies evaluating program-based interventions (Table 3). Overall, there was an even division between RBE and PBE for US studies (49% vs 51%), with a somewhat higher proportion of non-US studies being PBE (61% PBE vs 39% RBE). Among studies conducted in health care settings, a larger proportion were RBE (64%), whereas studies conducted in worksite (54%) and community (66%) settings included a larger proportion of PBE. The majority (71%) of the studies with greatest suitability of study design were RBE, and the majority of the evidence with moderate (96%) or least (97%) suitability of study design was PBE.

TABLE 3—

Characteristics of All Studies Categorized by Type of Evidence: The Community Guide, 1997–2014

| Characteristic | RBE, No. (%)ᵃ | PBE, No. (%)ᵃ | Total | RBE-GA, No. (%)ᵇ |
| --- | --- | --- | --- | --- |
| RCT | | | | |
| Yes | 1301 (100) | 0 (0) | 1301 | 414 (32) |
| No | 379 (16) | 1976 (84) | 2355 | 223 (59) |
| Intervention type | | | | |
| Program | 1588 (58) | 1166 (42) | 2754 | 589 (37) |
| Policy | 27 (4) | 692 (96) | 719 | 9 (33) |
| Program + policy | 65 (36) | 118 (64) | 183 | 39 (60) |
| United States | | | | |
| Yes | 1283 (49) | 1361 (51) | 2644 | 497 (39) |
| No | 397 (39) | 615 (61) | 1012 | 140 (35) |
| Setting | | | | |
| Health care | 645 (64) | 365 (36) | 1010 | 185 (29) |
| Worksite | 112 (46) | 129 (54) | 241 | 58 (52) |
| Community | 725 (34) | 1403 (66) | 2128 | 347 (48) |
| Health care + community | 198 (71) | 79 (29) | 277 | 47 (24) |
| Suitability of study designᶜ | | | | |
| Greatest | 1632 (71) | 655 (29) | 2287 | 612 (38) |
| Moderate | 16 (4) | 424 (96) | 440 | 11 (69) |
| Least | 32 (3) | 897 (97) | 929 | 14 (44) |
| Quality of executionᵈ | | | | |
| Good or fair | 1487 (48) | 1629 (52) | 3116 | 564 (38) |
| Limited | 143 (31) | 314 (69) | 457 | 61 (43) |
| Unknown | 50 (60) | 33 (40) | 83 | 12 (24) |
| Total | 1680 (46) | 1976 (54) | 3656 | 637 (38) |

Note. PBE = practice-based evidence; RBE = research-based evidence; RBE-GA = research-based evidence with group allocation; RCT = randomized controlled trial.

ᵃ% refers to row percentage (e.g., proportion of RBE within RCTs is equal to 1301/1301 × 100% = 100%).

ᵇWithin the RBE-GA results, % refers to the percentage of RBE studies that can be categorized as RBE-GA (e.g., proportion of RBE-GA within RCTs is equal to 414/1301 × 100% = 32%).

ᶜSuitability of study design: The Community Guide classifies studies on the basis of the suitability of their study design.29

ᵈQuality of execution was determined by the systematic review teams who originally conducted the individual reviews, using Community Guide methods on quality scoring.19 The quality scoring methods rate studies based on threats to internal and external validity in 6 areas (descriptions, sampling, measurement, analysis, interpretation of results, and other). Studies with < 5 limitations were considered to be of “good” or “fair” quality, and included in the analysis for the original review. Studies with ≥ 5 limitations were considered “limited,” and were excluded from all analyses. Studies with ≥ 5 limitations therefore did not contribute to Community Preventive Services Task Force recommendations and other findings (recommended against, insufficient evidence).

Although PBE studies constitute just over half (52%) of studies with good or fair quality of execution, they represent a higher proportion of studies rated as limited quality (69%), which are eliminated from consideration in Community Guide reviews and their related CPSTF recommendations or other findings. Of all PBE studies, 15.9% were rated as limited quality, compared with 8.5% of all RBE studies. Taking into account only those studies that contributed to CPSTF recommendations (i.e., studies with good or fair quality of execution), the proportions of PBE and RBE studies were 52% (n = 1629) and 48% (n = 1487), respectively. Of these RBE studies, we categorized 38% (n = 564) as RBE-GA.
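This paragraph mixes two kinds of percentages: the share of PBE within a quality level (a row percentage in Table 3) and the share of a quality level within all PBE or RBE studies. The Python sketch below recomputes both from the quality-of-execution counts in Table 3 to make the distinction concrete; the dictionary layout and function names are illustrative assumptions.

```python
# Quality-of-execution counts by type of evidence, transcribed from Table 3
QUALITY = {
    "good_or_fair": {"RBE": 1487, "PBE": 1629},
    "limited": {"RBE": 143, "PBE": 314},
    "unknown": {"RBE": 50, "PBE": 33},
}


def share_within_quality(level, evidence):
    """Percentage of studies at one quality level that are of a given evidence type."""
    row = QUALITY[level]
    return round(100 * row[evidence] / sum(row.values()), 1)


def share_within_evidence(evidence, level):
    """Percentage of all studies of one evidence type rated at a given quality level."""
    evidence_total = sum(row[evidence] for row in QUALITY.values())
    return round(100 * QUALITY[level][evidence] / evidence_total, 1)


print(share_within_quality("limited", "PBE"))   # 68.7: PBE among limited-quality studies
print(share_within_evidence("PBE", "limited"))  # 15.9: limited quality among PBE studies
print(share_within_evidence("RBE", "limited"))  # 8.5: limited quality among RBE studies
```

The first figure corresponds to the 69% reported in the text after rounding; the second and third correspond to the 15.9% and 8.5% comparison.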

The RBE-GA studies constitute 38% of the total RBE studies and 17% of the overall body of evidence (Figure 1 and Table 3). These studies tended to be RCTs, program-based interventions, conducted in the United States, within community settings, and with good to fair quality of execution (Table 3). Among the RBE-GA studies, 11% had 1 “smaller” group per study arm, 78% of the studies had more than 1 group per arm, and the remaining 11% had 1 or more large geopolitical units per arm (Figure 1). Types of groups included primary care practices, schools, classrooms, worksites, drinking establishments, offices, stores, and households.

DISCUSSION

To understand the effectiveness of a public health intervention, it is important to consider all types of available evidence.33 In this study, we documented the ubiquity and prominence of PBE in Community Guide systematic reviews of population-based public health interventions. The majority of PBE identified through the review process was of adequate quality—according to Community Guide quality-of-execution standards—to be included in evidence syntheses. This study therefore demonstrates that PBE can be effectively incorporated into systematic reviews alongside RBE.

Within Community Guide reviews, we categorized about half of the studies (54%) as PBE and roughly another sixth (17%) as RBE with group allocation, both types of evidence that tend to have higher external validity and practical utility. The large proportion of RBE studies that used group allocation (38%) mitigates some of the concerns regarding the external validity of the RBE studies included in Community Guide reviews. These RBE-GA studies often demonstrated an effort to maximize external validity.

The large body of evidence—3656 studies—that was included in this analysis assessed the effectiveness of public health interventions ranging from community-based education programs, to policy implementation and enforcement activities, to health care systems interventions. The variety of interventions, along with the range of the topics, makes this body of evidence representative of the breadth of published evidence on public health interventions.

We found that PBE was more common for topics that contained a larger proportion of policy-based interventions and interventions conducted in community settings, whereas RBE was more prevalent for topics with a larger proportion of programmatic interventions and interventions in health care settings. Because of the challenges of conducting highly controlled RCTs within the community, many researchers and evaluators use nonrandomized observational designs to assess intervention effectiveness.6–8 Furthermore, policy interventions are generally implemented by policymakers with little ability for researcher participation in allocation decisions. In these situations, practice-based study designs and methods, such as interrupted time series designs, may be the only, or most feasible and effective options for determining the impact of the intervention.6,8 Such designs also have the advantage of generating evidence in a timely and cost-effective manner by taking advantage of interventions that would be implemented regardless of whether they were studied.

Limitations

One limitation of our analysis was the inclusion of the same study in multiple reviews. Some studies may therefore have been included in our assessment more than once. However, each study contributes independently to each review and thus should not bias the overall findings.

Some Community Guide systematic reviews were conducted more than 10 years ago, and included studies from more than 50 years ago. For some of the older reviews, the full list of studies included and the full-text articles were not available and hence are not included in this project. These studies account for approximately 5% of the entire body of evidence. In light of the large body of evidence included in this study, this should not have a substantial effect on the overall results.

To adequately assess the relative proportions of PBE and RBE across the full range of public health topics and intervention types included in The Community Guide, it was necessary to develop an operational definition that could be applied to all studies. We did not have access to detailed evidence tables for some early Community Guide reviews, and it was not feasible to go back, relocate, and reabstract all data of interest from all of the original 3656 studies. Instead, we needed to use variables that were readily accessible in the summary evidence tables originally produced for the systematic reviews from which the individual studies were drawn. This strategy allowed an assessment of PBE consistent with a number of the principles articulated in recent definitions of PBE: securing data from field-based practices, evaluating ongoing programs and policies, using study designs more focused on external validity, and otherwise giving greater consideration to external validity.5,20,21 The last principle could only be cursorily addressed, however, by using each study’s quality-of-execution rating (the total number of internal and external validity limitations). To further tease out the degree to which specific elements of external validity are adequately addressed in individual studies, future research could focus on a smaller body of evidence from a sample of Community Guide reviews, abstracting data from the original studies to provide a more detailed analysis of how study intent and individual versus group allocation relate to external validity criteria.

Conclusions

This study demonstrates that PBE is prevalent in public health literature, and both PBE and RBE have been well represented in The Community Guide’s systematic reviews. Although the actual distribution differs by health topic and variables such as the setting and type of intervention, there is a substantial presence across all Community Guide reviews of evidence with external validity and practical utility. Both PBE and RBE provide information of adequate quality to make informed public health decisions, and PBE is an integral part of evidence in systematic reviews on effectiveness of public health interventions.

Despite the overall ubiquity of PBE in Community Guide reviews, we eliminated more PBE than RBE because of limited quality of execution. In addition, many PBE and RBE studies included in Community Guide reviews only received a fair quality-of-execution rating, often missing information on external validity. For RBE, we therefore call for more reporting on the domains of PRECIS-2—a tool that helps researchers think about how their design decisions affect applicability.34 For PBE, we call for work to strengthen practice-relevant designs, and for the development of a framework or taxonomy aimed at increasing the amount and quality of PBE available to fortify the evidence base for public health practice. This framework should build on existing work34–38 to address applicability, implementation, fidelity and adaptation, and continuous quality improvement.

Public Health Implications

The inclusion of substantial portions of PBE, in addition to RBE, in Community Guide reviews (1) suggests that PBE of adequate rigor to inform practice is being produced and (2) should increase the confidence of practitioners, policymakers, and others that The Community Guide provides recommendations with relevance for real-world populations, settings, and contexts. Because more PBE than RBE is of limited quality, and because Community Guide reviews include numerous PBE and RBE studies of fair rather than good quality, additional work is warranted on refining practice-relevant study designs and methods and strengthening reporting standards. In addition, because of the importance of program evaluation as a form of PBE, public health practitioners are encouraged to undertake evaluations and partner with evaluators or researchers to publish their findings in peer-reviewed journals. Such evaluation studies provide important information on effectiveness and applicability to different settings and situations. Finally, all researchers, evaluators, and others are encouraged to follow reporting standards for external validity to clarify applicability to other populations, settings, and situations and to enable meaningful inclusion of PBE in building the evidence base for public health practice.

ACKNOWLEDGMENTS

The work of K. K. Proia and N. Vaidya was supported with funds from the Oak Ridge Institute for Science and Education.

We would like to thank Lawrence W. Green for early discussions about the concepts of practice-based evidence and external validity, as well as about the placement of research-based evidence designs with group allocation.

HUMAN PARTICIPANT PROTECTION

Human participant protection was not required because no human participants were involved in the research reported in this article.

REFERENCES

- 1. Ball K, Timperio AF, Crawford DA. Understanding environmental influences on nutrition and physical activity behaviors: where should we look and what should we count? Int J Behav Nutr Phys Act. 2006;3:33. doi: 10.1186/1479-5868-3-33.

- 2. Barr VJ, Robinson S, Marin-Link B et al. The expanded chronic care model: an integration of concepts and strategies from population health promotion and the chronic care model. Hosp Q. 2003;7(1):73–82. doi: 10.12927/hcq.2003.16763.

- 3. Bunnell R, O’Neil D, Soler R et al. Fifty communities putting prevention to work: accelerating chronic disease prevention through policy, systems and environmental change. J Community Health. 2012;37(5):1081–1090. doi: 10.1007/s10900-012-9542-3.

- 4. Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-experimental Designs for Generalized Causal Inference. Boston, MA: Houghton Mifflin Company; 2002.

- 5. Brownson RC, Diez Roux AV, Swartz K. Commentary: generating rigorous evidence for public health: the need for new thinking to improve research and practice. Annu Rev Public Health. 2014;35:1–7. doi: 10.1146/annurev-publhealth-112613-011646.

- 6. Mercer SL, DeVinney BJ, Fine LJ, Green LW, Dougherty D. Study designs for effectiveness and translation research: identifying trade-offs. Am J Prev Med. 2007;33(2):139–154. doi: 10.1016/j.amepre.2007.04.005.

- 7. Sanson-Fisher RW, Bonevski B, Green LW, D’Este C. Limitations of the randomized controlled trial in evaluating population-based health interventions. Am J Prev Med. 2007;33(2):155–161. doi: 10.1016/j.amepre.2007.04.007.

- 8. Sanson-Fisher RW, D’Este CA, Carey ML, Noble N, Paul CL. Evaluation of systems-oriented public health interventions: alternative research designs. Annu Rev Public Health. 2014;35:9–27. doi: 10.1146/annurev-publhealth-032013-182445.

- 9. Barkham M, Mellor-Clark J. Rigour and relevance: practice-based evidence in the psychological therapies. In: Rowland N, Goss S, editors. Evidence-Based Counselling and Psychological Therapies: Research and Applications. London, England: Routledge; 2000.

- 10. Green LW. From research to “best practices” in other settings and populations. Am J Health Behav. 2001;25(3):165–178. doi: 10.5993/ajhb.25.3.2.

- 11. Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health. 2006;96(3):406–409. doi: 10.2105/AJPH.2005.066035.

- 12. Margison FR, Barkham M, Evans C et al. Measurement and psychotherapy: evidence-based practice and practice-based evidence. Br J Psychiatry. 2000;177(2):123–130. doi: 10.1192/bjp.177.2.123.

- 13. The Community Guide. The Guide to Community Preventive Services: What Works to Promote Health? 2016. Available at: http://www.thecommunityguide.org. Accessed March 12, 2016.

- 14. Centers for Disease Control and Prevention. Partnerships to Improve Community Health (PICH). 2015. Available at: http://www.cdc.gov/nccdphp/dch/programs/partnershipstoimprovecommunityhealth/index.html. Accessed March 12, 2016.

- 15. Centers for Disease Control and Prevention. National Public Health Improvement Initiative. 2015. Available at: http://www.cdc.gov/stltpublichealth/nphii/about.html. Accessed August 11, 2015.

- 16. Centers for Medicare and Medicaid Services. Medicaid Incentives for the Prevention of Chronic Diseases Model. 2015. Available at: http://innovation.cms.gov/initiatives/mipcd. Accessed August 11, 2015.

- 17. Health Resources and Services Administration. Maternal, infant, and early childhood home visiting. Available at: http://mchb.hrsa.gov/programs/homevisiting. Accessed August 11, 2015.

- 18. US Department of Health and Human Services. Introducing Healthy People 2020. 2014. Available at: https://www.healthypeople.gov/2020/About-Healthy-People. Accessed March 12, 2016.

- 19. Zaza S, Wright-De Aguero LK, Briss PA et al. Data collection instrument and procedure for systematic reviews in the guide to community preventive services. Am J Prev Med. 2000;18(1 suppl):44–74. doi: 10.1016/s0749-3797(99)00122-1.

- 20. Wilson KM, Brady TJ, Lesesne C; on behalf of the NCCDPHP Work Group on Translation. An organizing framework for translation in public health: the Knowledge to Action Framework. Prev Chronic Dis. 2011;8(2):A46.

- 21. Ammerman A, Woods Smith T, Calancie L. Practice-based evidence in public health: improving reach, relevance, and results. Annu Rev Public Health. 2014;35:47–63. doi: 10.1146/annurev-publhealth-032013-182458.

- 22. Barkham M, Hardy GE, Mellor-Clark J. Developing and Delivering Practice-Based Evidence: A Guide for the Psychological Therapies. West Sussex, UK: Wiley-Blackwell; 2010.

- 23. Barkham M, Margison F, Leach C et al. Service profiling and outcomes benchmarking using the CORE-OM: toward practice-based evidence in the psychological therapies. J Consult Clin Psychol. 2001;69(2):184–196. doi: 10.1037//0022-006x.69.2.184.

- 24. Horn SD, Gassaway J. Practice-based evidence study design for comparative effectiveness research. Med Care. 2007;45(10, suppl 2):S50–S57. doi: 10.1097/MLR.0b013e318070c07b.

- 25. Lambert MJ, Whipple JL, Smart DW, Vermeersch DA, Nielsen SL, Hawkins EJ. The effects of providing therapists with feedback on patient progress during psychotherapy: are outcomes enhanced? Psychother Res. 2001;11(1):49–68. doi: 10.1080/713663852.

- 26. Lucock M, Leach C, Iveson S, Lynch K, Horsefield C, Hall P. A systematic approach to practice-based evidence in a psychological therapies service. Clin Psychol Psychother. 2003;10(6):389–399.

- 27. McDonald PW, Viehbeck S. From evidence-based practice making to practice-based evidence making: creating communities of (research) and practice. Health Promot Pract. 2007;8(2):140–144. doi: 10.1177/1524839906298494.

- 28. Urban JB, Trochim W. The role of evaluation in research—practice integration working toward the “golden spike.” Am J Eval. 2009;30(4):538–553.

- 29. Briss PA, Zaza S, Pappaioanou M et al. Developing an evidence-based Guide to Community Preventive Services–methods. Am J Prev Med. 2000;18(1 suppl):35–43. doi: 10.1016/s0749-3797(99)00119-1.

- 30. Zaza S, Briss PA, Harris KW, editors. The Guide to Community Preventive Services: What Works to Promote Health? New York, NY: Oxford University Press; 2005. Task Force on Community Preventive Services.

- 31. Glasgow RE, Magid DJ, Beck A, Ritzwoller D, Estabrooks PA. Practical clinical trials for translating research to practice: design and measurement recommendations. Med Care. 2005;43(6):551–557. doi: 10.1097/01.mlr.0000163645.41407.09.

- 32. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290(12):1624–1632. doi: 10.1001/jama.290.12.1624.

- 33. Nutbeam D. The challenge to provide “evidence” in health promotion. Health Promot Int. 1999;14(2):99–101.

- 34. Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M. The PRECIS-2 tool: designing trials that are fit for purpose. BMJ. 2015;350:h2147. doi: 10.1136/bmj.h2147.

- 35. Glasgow RE, Klesges LM, Dzewaltowski DA, Estabrooks PA, Vogt TM. Evaluating the impact of health promotion programs: using the RE-AIM framework to form summary measures for decision making involving complex issues. Health Educ Res. 2006;21(5):688–694. doi: 10.1093/her/cyl081.

- 36. Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof. 2006;29(1):126–153. doi: 10.1177/0163278705284445.

- 37. Spencer LM, Schooley MW, Anderson LA et al. Seeking best practices: a conceptual framework for planning and improving evidence-based practices. Prev Chronic Dis. 2013;10:E207. doi: 10.5888/pcd10.130186.

- 38. Steckler A, McLeroy KR. The importance of external validity. Am J Public Health. 2008;98(1):9–10. doi: 10.2105/AJPH.2007.126847.