Abstract

We investigate emerging mobile crowd sensing (MCS) systems, in which new cloud-based platforms sequentially allocate homogeneous sensing jobs to dynamically-arriving users with uncertain service qualities. Because users are self-interested, it is crucial yet challenging to design an efficient and truthful incentive mechanism that encourages them to participate. To address this challenge, we propose a novel truthful online auction mechanism that efficiently learns to make irreversible online decisions on winner selection for new MCS systems without requiring previous knowledge of users. Moreover, we theoretically prove that our incentive mechanism possesses truthfulness, individual rationality and computational efficiency. Extensive simulation results on both real and synthetic traces demonstrate that our incentive mechanism reduces the payment of the platform and increases both the utility of the platform and social welfare.

Keywords: mobile crowd sensing system, online incentive, truthful mechanism, single-parameter mechanism

1. Introduction

With abundant portable sensors (e.g., camera, compass, microphone, gyroscope, etc.) embedded in mobile devices (e.g., smart phones, wearable devices, tablets, etc.), people can collect sensing data as they roam the city. Owing to their low deployment cost and high sensing coverage, numerous mobile crowd sensing (MCS) systems have sprung up to handle large-scale mobile sensing tasks, such as measuring wireless signal strengths [1], traffic information mapping [2], air quality monitoring [3], parking [4], and so on.

In a typical MCS system, a cloud-based platform first divides sensing tasks into a set of unit sensing jobs. For example, when sensing data are collected across the city over a long period, collecting data for one location in one time slot can be a unit job. Then, the platform publishes these jobs and, round by round, makes irreversible online decisions on user recruitment for every job before the deadline. Mobile users join and leave the system dynamically at will. The selected users submit data to the platform after undertaking their assigned sensing jobs.

Motivating users to participate is the key to the success of MCS systems. Since people are selfish in general, few mobile users voluntarily participate in sensing, especially considering the fees for uploading data through cellular networks and the limited resources of smart phones, such as memory and energy. Consequently, MCS systems would fail without desirable sensing data from enough participants. An effective way to solve this problem is to design incentive mechanisms that reward users as compensation for their participation.

Besides the incentive mechanism, the quality of sensing service is crucial to the MCS system. As we know, inefficient sensing produces low quality sensing data, which harms the preciseness of MCS systems directly. To support the desired service, the platform shall select winners with high qualities of sensing service. Recently, some research works [5,6,7] have proposed auction-based incentive mechanisms with the consideration of the service quality. However, in these works, users’ sensing qualities are known to the system as prior information while determining winners.

In practice, however, users’ service qualities are uncertain and unknown to the system during winner selection. Since users move around and wireless signals are not stable, users’ service qualities vary over time. Moreover, as users’ service qualities can only be calculated after the sensing data are submitted, the qualities are unknown to the platform while it selects winners. That is to say, service qualities are ex post information. Recently, some research works [8,9,10] have paid attention to ex post service quality with the consideration of dynamic participation. However, their systems rely on users’ historical movement regularities to estimate users’ service qualities. Without this previous knowledge of users, these works do not fit a new MCS system. Besides, these works ignore users’ dishonest behavior in overstating bidding prices, which results in more payment and less utility for the platform.

To solve the aforementioned problems, we propose a novel truthful incentive based on an online auction mechanism (TOAM) that considers ex post service quality and dynamically-arriving users for a new MCS system without requiring previous knowledge of the users. In TOAM, the platform determines a winner in every allocation round according to the arriving users’ bidding prices and learned expected service qualities. As users are selfish but rational in general, they may overstate their costs in their bids for a higher payoff, which results in poor outcomes [11]. Hence, truthfulness and individual rationality are essential in auction-based incentives: users bid their true costs, and the payoff of winners is not negative. For the uncertain and unknown service quality, we assume that users’ expected service qualities are fixed and that a user’s service quality for one job is drawn stochastically from an unknown distribution. Therefore, the platform learns users’ expected service qualities and makes sequential decisions on winner selections with an exploration-exploitation trade-off, i.e., a balance between staying with the currently best choice, which yields the highest immediate profit, and exploring a new choice that might yield higher profits in the future.

Calculating payments in truthful incentive mechanisms can be computationally infeasible owing to the online restriction [12]. To achieve computational efficiency, we adopt in TOAM a framework for designing a truthful-in-expectation mechanism with single-parameter users [12]. Here, the user’s expected service quality is the single parameter. With randomly sampled bidding prices for winner selections and payment decisions, the key is to design a novel ex post monotone allocation rule for sensing jobs.

The major technical contributions in our paper are as follows.

To the best of our knowledge, this is the first truthful incentive based on auction theory with consideration of ex post service qualities and dynamically-arriving users in a new MCS system without requiring previous knowledge of users. In TOAM, the platform learns users’ expected service qualities and makes sequential online decisions on winner selections with an exploration-exploitation trade-off.

To achieve truthfulness with the consideration of computational efficiency in our situation, we adopt a framework proposed in [12] and design a novel ex post monotone allocation rule to select proper winners.

We prove theoretically that TOAM possesses truthfulness, individual rationality and computational efficiency. Besides, extensive simulation results on both real and synthetic traces verify the efficiency of TOAM, which decreases the payments and improves both the utility of the platform and social welfare.

The rest of the paper is organized as follows. We introduce our system model, review some technical preliminaries and formulate our problem in Section 2. Our incentive TOAM is detailed in Section 3. Three attractive properties of TOAM are proven in Section 4. We evaluate TOAM and present the results in Section 5. Related work is reviewed in Section 6, and the conclusion is drawn in Section 7.

2. System Model, Technical Preliminaries and Problem Formulation

2.1. System Model

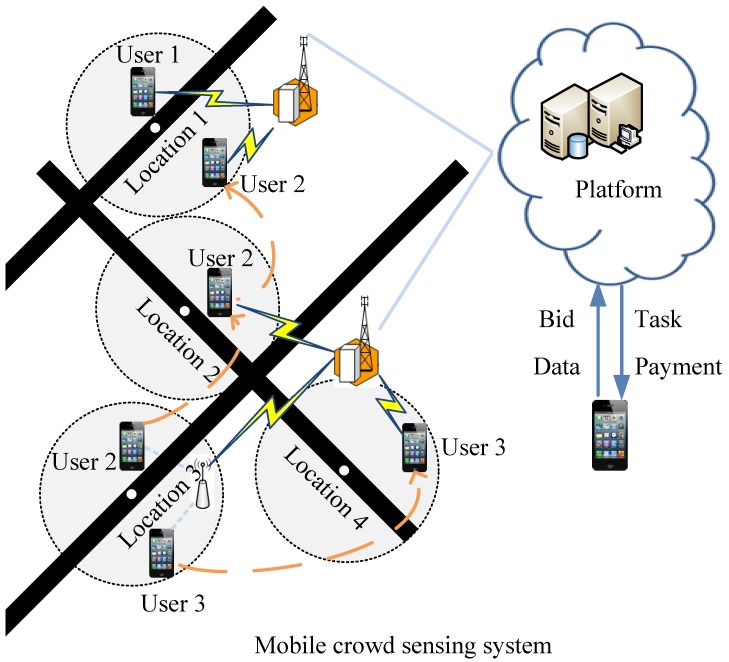

We introduce our system model with the instance of an MCS system shown in Figure 1. The system consists of two types of entities, namely the platform residing in the cloud and a set of mobile phone users, denoted as N. For the platform, the IaaS model of the cloud service presented in [13] can be used so as to run the platform in a trusted state. The mobile phone users can download the corresponding crowd sensing applications to participate in collecting sensory data.

Figure 1. An example of the mobile crowd sensing system.

To conduct some homogeneous sensing tasks, the platform first publishes a set of unit sensing jobs in order to collect sensory data from a set of locations L before deadline T. Then, at any time slot t, the platform selects a winner from the set of users arriving at each location to execute a sensing job. To simplify, we assume users can complete one sensing job at their current locations within one slot. Since users roam in the city and participate in sensing activities intermittently, the users available for a job at a given location vary with time. Therefore, the platform should make sequential online decisions to allocate jobs. At the end of the time slot, the system pays the winner a rational amount after receiving the sensing data.

To select appropriate users, both users’ costs and service qualities should be considered. The cost $c_i \in [c_{\min}, c_{\max}]$ of user i for one sensing job is private information, where $c_{\min}$ and $c_{\max}$ are the known minimum and maximum costs. To calculate the cost, the application on a mobile phone can measure the cost of resources (e.g., computing resources and energy) for undertaking one unit sensing job; $c_{\min}$ and $c_{\max}$ are the minimum and maximum resource costs over various mobile phones, measured by the system before the MCS system is released. Because mobile phones are equipped with different sensing devices and users behave differently, users differ in service quality. We assume that every user’s sensing ability is static, so the expected service quality $q_i \in [q_{\min}, q_{\max}]$ of user i is fixed and also privately held by user i, where $q_{\min}$ and $q_{\max}$ are the minimum and maximum values, respectively. Users’ service qualities vary with time, so we assume that the service quality $q_i^t$ of user i at time slot t is a stochastic parameter following a fixed distribution on $[q_{\min}, q_{\max}]$ with expectation $q_i$. There are many methods to measure users’ service qualities, such as directly calculating the deviation of users’ sensory data from the ground truths [14] or inferring quality from users’ data without ground truths by utilizing the algorithms proposed in [15,16,17]. However, as our auction-based incentive is not restricted to any particular method of quality calculation, the details of these methods are beyond the scope of this paper. Instead, we use numerical values to represent qualities directly, where a higher value means better service quality.

A general assumption [18] is given in this paper about auction mechanisms, i.e., users are symmetric, independent and risk-neutral. That is to say, users have the same common knowledge, except their private information, and determine their bidding prices independently, so as to achieve their maximum utilities without worrying about risks.

2.2. Technical Preliminaries

We review some crucial solution concepts used in this paper.

Definition 1.

(A user’s utility at time slot t): At time slot t, the utility of user i equals the payment received from the platform minus the user’s cost if user i is online and selected as a winner to execute one sensing job; otherwise, the utility is zero.

Definition 2.

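(Platform’s utility): The utility of the platform is defined as Equation (1), where α is a coefficient that transforms service quality to monetary reward, $q_i^t$ and $p_i^t$ denote the service quality and the payment of user i at time slot t, and $x_i^t = 1$ (or 0) indicates that user i is chosen (or not) at time t.

$$U = \sum_{t=1}^{T} \sum_{i \in N} x_i^t \left( \alpha q_i^t - p_i^t \right) \qquad (1)$$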

Definition 3.

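(Social welfare): Social welfare of the mobile crowd sensing system, i.e., the sum of the platform’s utility and all users’ utilities, is defined as Equation (2), where $c_i$ is the cost of user i, $q_i^t$ is the service quality of user i at time slot t, and $x_i^t = 1$ (or 0) indicates that user i is chosen (or not) at time t:

$$W = \sum_{t=1}^{T} \sum_{i \in N} x_i^t \left( \alpha q_i^t - c_i \right) \qquad (2)$$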
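As a rough illustration of Definitions 1 to 3, the sketch below computes the utilities from a list of winning rounds. It assumes that the platform gains the monetized quality α·q minus the payment in each round and that social welfare adds the winners' utilities (payment minus cost); the record type `Round` and all function names are hypothetical and only serve this example.

```python
from dataclasses import dataclass


@dataclass
class Round:
    """One allocation round with a winner (hypothetical record type)."""
    quality: float  # observed service quality of the winner
    payment: float  # payment from the platform to the winner
    cost: float     # winner's true (private) cost


def user_utility(r: Round) -> float:
    # Utility of the winner: payment minus cost (zero for non-winners).
    return r.payment - r.cost


def platform_utility(rounds, alpha: float) -> float:
    # Assumed form: monetized quality minus payment, summed over rounds.
    return sum(alpha * r.quality - r.payment for r in rounds)


def social_welfare(rounds, alpha: float) -> float:
    # Platform utility plus winners' utilities; payments cancel out.
    return platform_utility(rounds, alpha) + sum(user_utility(r) for r in rounds)
```

Because payments are transfers between the platform and the users, they cancel in the welfare sum, which is why overpaying truthful users lowers the platform's utility without lowering social welfare.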

Definition 4.

(Individual rationality): A mechanism satisfies the individual rationality constraints if the utility of user i is not negative at every time slot t before deadline T.

Definition 5.

(Ex-post monotonicity of the allocation rule [12]): An allocation rule is ex post monotone if increasing one user’s bidding price does not raise his or her probability of winning while keeping others’ bidding prices the same.

Definition 6.

(Truthful-in-expectation mechanism [12,19]): A mechanism is truthful-in-expectation if a risk-neutral user maximizes expected utility by adopting a truthful strategy in bidding, whatever the bids of others, where the expectation is taken with random coin flips of the mechanism.

Definition 7.

(Computational efficiency [20]): An incentive mechanism is computationally efficient if it produces the outcome in polynomial time.

2.3. Problem Formulation

As users’ service qualities are uncertain and unknown, the goal of the platform is to select winners with the largest expected service qualities and smallest costs to execute sensing jobs. Mathematically, this can be represented as follows:

| (3) |

There are two objectives in the above Equation (3): to maximize the sum of the expected service qualities of winners and to minimize the sum of the costs of winners. Since multi-objective programs are hard to solve directly, we take a common approach in optimization and convert the problem into a single-objective program. Then, the platform aims to select cost-effective users with the highest expected price-quality ratios as follows.

| (4) |

We utilize the technique of designing a single-parameter mechanism to solve the above problem. Though two parameters—cost and expected service quality—should be considered in allocations, users’ costs can be revealed in their bidding prices if truthfulness is guaranteed. Thus, the expected service quality is the single-parameter in the above problem. However, in order to guarantee the deterministic truthfulness, it is costly or impossible to calculate winners’ payments by adopting the Vickrey–Clarke–Groves (VCG) auction due to online constraints in MCS systems. Therefore, to satisfy computational efficiency, our goal turns into the design of a truthful-in-expectation mechanism with the consideration of dynamically-arriving users and their unknown service qualities.

For the single-parameter mechanism, Babaioff et al. [12] propose a generic framework in which an ex post monotone allocation rule can be transformed into a truthful-in-expectation mechanism by applying random perturbation to users’ bidding prices. To achieve our goal, we aim to design a novel ex post monotone allocation rule that can be transformed into a truthful-in-expectation mechanism by utilizing the transformation proposed in [12].

Given a monotone allocation rule and a perturbation probability μ, we describe the transformation procedure proposed in [12], adapted to our situation, so as to realize a truthful-in-expectation mechanism.

User i submits his or her bidding price when he or she participates in the system for the first time.

The platform computes user i’s new bidding price for allocation as follows. With probability 1 − μ, the new bidding price equals the submitted bidding price; otherwise, the new bidding price is picked uniformly at random.

For every time slot t, the platform assigns sensing jobs to users for every sensing location according to the allocation rule , where consists of new bidding prices for all users in .

The platform calculates users’ payments as follows. For every selected user i, if ; otherwise . For any other unselected user k, .
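The bid-perturbation step of this procedure can be sketched as follows. This is only an illustration under stated assumptions: the resampled price is drawn uniformly from the interval between the submitted bid and an assumed maximum cost `c_max`, which is one plausible reading of "picked uniformly at random" in a setting where users bid costs. The function `perturb_bid` and its parameters are hypothetical names, and the payment rule of [12] is not reproduced here.

```python
import random


def perturb_bid(bid: float, mu: float, c_max: float, rng: random.Random):
    """Return (new_bid, was_perturbed) for one user.

    With probability 1 - mu the submitted bid is kept unchanged.
    With probability mu the bid is resampled; the interval
    [bid, c_max] is an assumption made for this sketch.
    """
    if rng.random() >= mu:
        return bid, False
    return rng.uniform(bid, c_max), True
```

Under this assumption a perturbed bid is never lower than the submitted one, so perturbation can only make a user less competitive in the allocation round.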

Table 1 lists frequently-used notations.

Table 1. Frequently-used notations.

| Notation | Description |
|---|---|
| N, i | Set of users and a user. |
| n | Number of users. |
| T, t | Deadline and a time slot. |
| L, | Set of locations and the j-th location. |
| | Set of arriving users at a location at time slot t. |
| , | User i’s cost and bidding price. |
| | User i’s new bidding price for allocation. |
| , | Maximum and minimum cost. |
| , , | User i’s service quality at time slot t, expected service quality and learned expected service quality. |
| , | User i’s payment and utility at time slot t. |
| | Utility of the platform. |
| | Active set of users at location at time slot t. |
| | User i’s number of being randomly selected. |
| , | User i’s total observed service quality and observed service quality at time slot t. |
| | Others’ bidding prices, except user i. |
| | User i’s alternate bidding price that is higher than . |
| B | Bid vector of all users with and . |
| | Alternate bid vector of all users with and . |
| , | User i’s learned expected service quality at time slot t with the bid vector B and . |
| , | User i’s probability of being selected at time slot t with the bid vector B and . |

3. Incentive Mechanism Design

3.1. System Overview

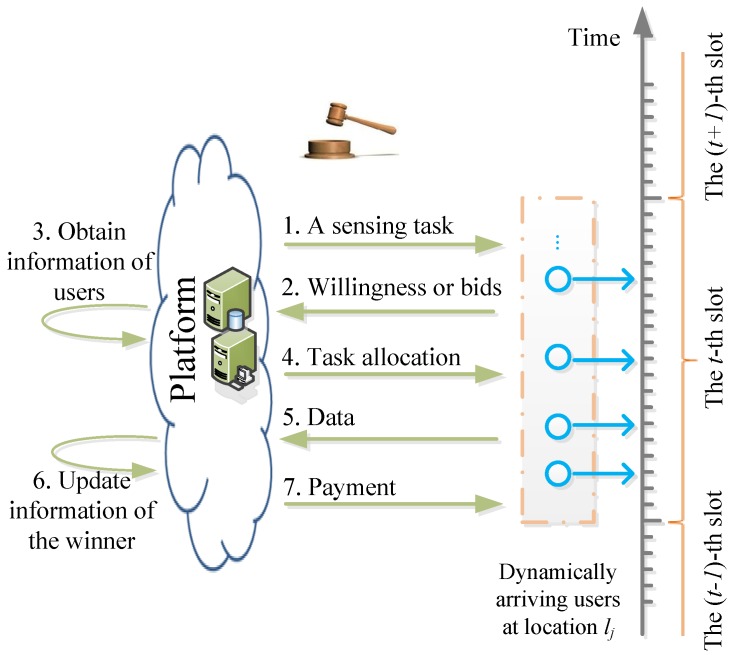

For simplicity, we assume that there is one sensing task that needs to collect data from all sensing locations L during T time slots. The platform divides the task into a set of spatio-temporal sensing jobs. For example, T sequential sensing jobs need to be allocated for each location. Since users move around and participate in sensing intermittently, the platform must select winners irrevocably in sequential auction rounds. Taking one auction round for a job at a sensing location during time slot t as an example, TOAM includes seven steps, as shown in Figure 2. We describe the procedure of our mechanism briefly as follows:

(1) The platform announces the sensing job when users arrive at the location.

(2) If users are new in the system, they create and submit their optimal bidding prices for this job to the platform independently. Otherwise, users are only required to indicate their willingness to participate.

(3) The platform obtains users’ information for this allocation. If users are new, the platform gets and records their bidding prices from their bids. Otherwise, the system obtains users’ information from storage, including users’ bidding prices, estimated expected service qualities, and so on.

(4) Based on users’ new bidding prices, randomly perturbed by the platform, and users’ expected service qualities, as learned by the platform, the platform selects a winner to perform this job.

(5) At the end of time slot t, the winner submits sensory data to the platform.

(6) The platform updates the information of the winner if necessary according to the allocation rule.

(7) The platform pays the winner a rational price.

Figure 2. Our incentive framework for a mobile crowd sensing system with dynamic users and uncertain service quality.

3.2. Allocation Algorithm

As described before, the key of TOAM is to design an ex post monotone sensing task allocation algorithm. In reality, the users who compete for one job vary over time. In order to realize ex post monotonicity, the allocation algorithm should be designed carefully to control the influence of a liar who overstates his or her bidding price. The main idea of our algorithm can be summarized in four points.

For one job, construct an active set of users according to a bidding bound (reserve price) for a later random selection. In order to decrease the winning probability of the liar, we select a bidding bound from the arriving bidding prices with some probabilities. If users’ bidding prices are higher than the bidding bound, remove these users from the set of arriving users. The rest of arriving users construct the active set. To avoid removing too many users at the beginning of the system, the probability of selecting a low bidding bound increases with the passage of time.

As the number of updates grows, the estimate of a user’s expected service quality is non-decreasing. This reduces the influence that the uncertainty of being selected has on the learned expected service qualities.

Update winners’ information (including the expected service qualities) if they are selected randomly. Due to the liar’s overstated bidding price, some others may win instead of the liar. We call these winners direct-winners. These direct-winners’ expected service qualities may be improved in this way. Thus, the liar’s winning probability can be reduced to a certain extent in the future.

Do not update winners’ information if they are selected according to the price-quality ratios. If winners are selected according to price-quality ratios, the updated information of direct-winners may affect the results. However, as winners’ information remains the same in this way, the direct-winners will not disturb others more. Therefore, the influence of the liar is controlled.

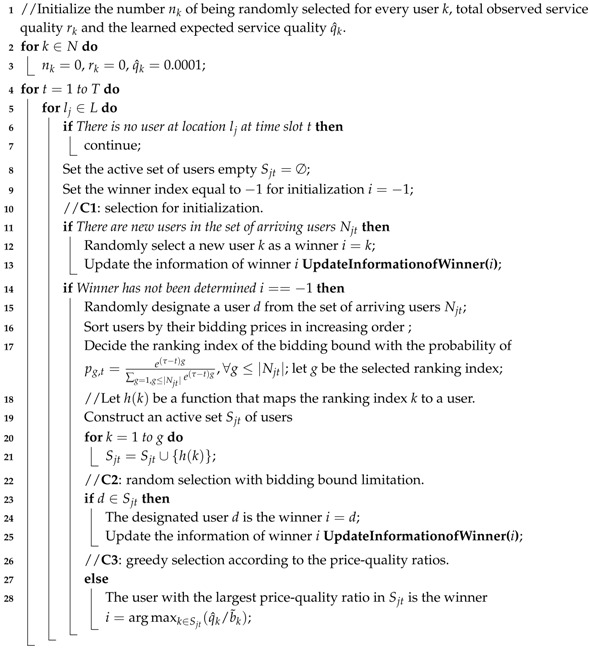

The pseudocode of the allocation algorithm in TOAM is shown in Algorithm 1. We take an allocation round at one location during time slot t as an example.

If there is no user for this allocation round, the platform does nothing. Otherwise, in Algorithm 1, a winner is selected from the set of available users in one of three cases: random selection for initialization (Case 1), random selection with the bidding bound limitation (Case 2) and greedy selection according to the price-quality ratios (Case 3). The random selections also keep users from leaving the MCS system because they have not won a sensing job for a long time.

Case 1: The platform randomly selects a new user as the winner from the set of arriving users. We call users who have joined the system but have not yet undertaken any sensing job new users. The platform updates the winner’s corresponding information with the winner’s observed actual service quality at the end of time slot t by calling Algorithm 2 (UpdateInformationofWinner).

Case 2: The platform selects the winner randomly with the bidding bound limitation. There are three steps. Firstly, the platform randomly designates a user from the set of arriving users. Secondly, it constructs an active set of users with the bidding bound limitation. Thirdly, if the designated user is in the active set, he or she is the winner. Then, the platform updates the winner’s corresponding information at the end of time slot t by calling Algorithm 2 (UpdateInformationofWinner).

Case 3: If the randomly-designated user is not in the active set, the platform optimistically picks the user with the highest estimated price-quality ratio among the users in the active set.
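The three selection cases can be sketched as below. This is a minimal illustration, not the paper's Algorithm 1: the bidding bound is assumed to be one arriving bid picked uniformly at random (the source makes the bound probability time-dependent), the price-quality ratio is assumed to be estimated quality divided by bid, bids are assumed to be positive, and the `User` fields and the `allocate` function are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass
class User:
    uid: int
    bid: float                 # (perturbed) bidding price, assumed > 0
    est_quality: float = 0.0   # learned expected service quality
    updates: int = 0           # times the user's information was updated


def allocate(arrivals, rng: random.Random):
    """Return (winner, update_flag) for one round, or (None, False)."""
    if not arrivals:
        return None, False
    new_users = [u for u in arrivals if u.updates == 0]
    if new_users:
        # Random selection for initialization; the winner is updated.
        return rng.choice(new_users), True
    designated = rng.choice(arrivals)
    # Assumed bound rule: one arriving bid serves as the reserve price.
    bound = rng.choice([u.bid for u in arrivals])
    active = [u for u in arrivals if u.bid <= bound]
    if designated in active:
        # Random selection within the bidding bound; the winner is updated.
        return designated, True
    # Greedy selection by estimated quality per unit price; no update.
    return max(active, key=lambda u: u.est_quality / u.bid), False
```

Since the bound is taken from the arriving bids, the active set always contains at least the user who submitted that bid, so the greedy step never runs on an empty set. The returned flag tells the caller whether to update the winner's information after the round.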

| Algorithm 1: Allocation algorithm. |

|

Algorithm 2: UpdateInformationofWinner.

Input: user i.

Update the number of times user i has been randomly selected.

Add the observed service quality to the total observed service quality of user i.

Update the learned expected service quality of user i.
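The bookkeeping of Algorithm 2 can be sketched as follows. The estimator used here is a plain empirical mean, which is an assumption: the source only states that the learned quality is computed from the selection count and the total observed quality and that the paper's estimate is designed to be non-decreasing in the number of updates, a property the plain mean does not guarantee. The `Stats` type and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Stats:
    n_selected: int = 0         # number of times randomly selected
    total_quality: float = 0.0  # sum of observed service qualities
    est_quality: float = 0.0    # learned expected service quality


def update_information_of_winner(s: Stats, observed_quality: float) -> Stats:
    # Count the random selection.
    s.n_selected += 1
    # Accumulate the quality observed at the end of the time slot.
    s.total_quality += observed_quality
    # Assumed estimator: empirical mean of the observed qualities.
    s.est_quality = s.total_quality / s.n_selected
    return s
```

Keeping only a count and a running total makes each update constant time, regardless of how many rounds a user has won.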

4. Theoretic Analysis

To prove the ex post monotonicity of our allocation algorithm, we first introduce some notation. Assume user i overstates the bidding price. Let $B = (b_i, b_{-i})$ denote the bid vector of all users, where $b_i$ is user i’s bid and $b_{-i}$ denotes others’ bids. Let $B' = (b_i', b_{-i})$ be the alternative bid vector, where $b_i' > b_i$, but the others’ bidding prices remain the same as in B. $\hat{q}_i^t(B)$ and $\hat{q}_i^t(B')$ are defined as the learned expected service quality of user i at time slot t with the bid vector B and the bid vector $B'$, respectively. Let $\pi_i^t(B)$ and $\pi_i^t(B')$ represent the probability that user i is chosen at time t with the bid vector B and the bid vector $B'$, respectively.

Lemma 1.

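At the end of any time slot t, the learned expected service quality of user i with bid vector B is not lower than that with the alternative bid vector $B'$, in which user i overstates his or her bid; that is, $\hat{q}_i^t(B) \ge \hat{q}_i^t(B')$.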

Proof.

From the allocation algorithm, we can see, at any time t, users’ expected service qualities may be updated when they are selected only in case and . Now, we use mathematical induction to prove this lemma.

At time , the case is the only possible case of being chosen since all users are new in the system. Therefore, the probability of being chosen is the same with different bid vectors, that is . Therefore, , and the lemma is true at the end of time slot .

As shown in the algorithm, users’ service qualities remain the same with their last appearances in the system if they quit the system temporarily. Furthermore, users’ service qualities can be updated only when they are in the system. Therefore, we mainly describe the time slots when user i joins the system. Assume the lemma is true at end of time . Now, we need to show that the lemma is also true at the end of time , where user i is in the system at time .

: It is easy to see with different bid vectors if he or she is new in the system at . Therefore, the lemma is true.

: User i can be selected if user i is designated and stays in the active set. Now, we analyze user i’s probability of satisfying these two conditions. (1) As the random designated user is irrelevant with respect to his or her bidding price, user i’s probability of being designated is not related to the bid vector. (2) The selection of the ranking index bound is independent of the users’ bidding prices. Therefore, the ranking index bound is the same with different bid vectors. Since , the ranking index of user i with bid vector B is not larger than that with bid vector . Therefore, user i’s probability of staying in the active set with bid vector B is not lower than that with bid vector . To sum up, . Since the service quality increases with the updated number and , we can get .

Therefore, . To sum up, at any end of time t. ☐

Lemma 2.

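At the end of any time slot t, the learned expected service quality of any other user j (j ≠ i) with bid vector B is not higher than that with the alternative bid vector $B'$, in which user i overstates his or her bid; that is, $\hat{q}_j^t(B) \le \hat{q}_j^t(B')$.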

Proof.

As shown in the proof of Lemma 1, we also adopt mathematical induction to prove this lemma.

From the proof of Lemma 1, it is easy to see . Assume the lemma is true at the end time . Now, we need to show when user j is in the system at time .

: From the proof of Lemma 1, we can see, .

: User j can be selected if user j is the designated user and remains in the active set. As shown in the proof of Lemma 1, user j’s probability of being randomly designated with bid vector B is equal to that with bid vector . Now, we compute user j’s probability of being in the active set. Since users join the system dynamically, user j may meet with user i at time . If user j and user i come across at time , the ranking index of user j with bid vector B is not smaller than that with bid vector due to . If user j does not meet with user i at time , the ranking index of user j with B equals that with . Therefore, user j’s probability of staying in the active set with B is not higher than that with . From the above analysis, we can see . Since the service quality increases with the updated number and , we can derive at the end of time .

Therefore, . To sum up, the lemma is true at any end of time t. ☐

Lemma 3.

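At any time slot t, user i’s probability of being selected with bid vector B is not lower than that with the alternative bid vector $B'$, in which user i overstates his or her bid; that is, $\pi_i^t(B) \ge \pi_i^t(B')$, where $\pi_i^t(\cdot)$ denotes user i’s probability of being selected at time slot t.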

Proof.

Mathematical induction is utilized to prove this lemma. It is easy to see that the lemma is true at time , that is .

Assume the lemma is true at the end time . Now, we need to show . As the description of Algorithm 1, there are three cases , and that user i can be selected. For the first two cases and , the lemma is true, as we have proven in the Lemma 1.

Assume user i is in location at time . For case , user i can be selected if the following three conditions are satisfied simultaneously.

User g () is the designated one, but user g is not in active set . As shown in the case of Lemma 2, user g’s probability of being in active set with B is not lower than that with .

User i is in active set . As shown in the case of Lemma 1, user i’s probability of being in with B is not lower than that with .

User i has the highest estimated price-quality ratio among active users in . Since is known from Lemma 1 and , we can derive . For any other competitor g (), we can derive due to , which is known from Lemma 2. Therefore, the probability of having the highest estimated price-quality ratio with bid vector B is not lower than that with .

From the above analysis, is true in the case .

Together with the case and the case proven before, we can derive . To sum up, the lemma is true for any time t. ☐

Theorem 1.

The allocation algorithm in TOAM is ex post monotone.

Proof.

Following directly from Lemma 3 and the definition of the ex post monotonicity of the allocation rule in Definition 5, we can derive that our algorithm in TOAM is ex post monotone. ☐

Corollary 1.

Our allocation algorithm produces a truthful-in-expectation mechanism via applying the transformation procedure with probability of perturbation μ.

Theorem 2.

Individual rationality in TOAM is guaranteed.

Proof.

From the transformation procedure presented in Section 2.3, winners’ payments are not lower than their costs. Thus, at any time slot t, the winner’s utility (payment minus cost) is nonnegative. If the user is not selected, his or her utility is zero. Therefore, individual rationality is ensured since the user’s utility is always nonnegative. ☐

Theorem 3.

TOAM achieves computational complexity at each round.

Proof.

The allocation algorithm is the most complex step in our incentive. As shown in Algorithm 1, computation complexity is , where is the computation complexity of ranking with the maximum number of users who are competing for one job. ☐

5. Performance Evaluation

5.1. Simulation Setup

We evaluate our proposed scheme with a simulator written in C++. Our evaluation is based on real trajectory sets from the Dartmouth College mobility traces [21] and synthetic MIT Campus traces, which are generated by a time-variant community model (TVCM) [22]. Considering the integrity of records and the movement regularities of users, for the former dataset we choose the data from 21 September 2003 to 20 October 2003 with 566 access points (APs) and select the top 100 active users. For the latter, we generate 30 days of traces for 100 users with 100 virtual APs by using the same parameter settings as in [22] to mimic the real MIT mobile social networks [23]. Each AP is regarded as a location. Users can participate in a sensing job when they arrive at any AP. The platform selects a winner, if one is available, to collect sensory data for every AP at every sensing time slot.

Each user’s cost and expected service quality are set as two random parameters that follow a [0, 1] uniform distribution respectively. Considering the dishonest behavior of overstating bidding prices, users can raise their prices by a random percentage premium from 0.01% to 99.99%. Every user’s quality of sensing for one sensing job follows a uniform distribution with the expectation of his or her service quality. The coefficient that transforms service quality to monetary reward α is set to two. As the ranges of service and cost are the same, we choose two to balance the influence of the service quality and the bidding price. Moreover, we set the parameter for sampling bidding prices in TOAM. Evaluation results are averaged over 100 runs.
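The user generation described above could be sketched as follows. The source does not give the support of the per-job quality distribution, so the sketch assumes a uniform interval centred on the expected quality and clipped to stay inside [0, 1]; the overstated bid is assumed to be the cost multiplied by one plus the premium. `make_user` and `sample_quality` are hypothetical helper names.

```python
import random


def make_user(rng: random.Random) -> dict:
    cost = rng.uniform(0.0, 1.0)              # private cost in [0, 1]
    expected_quality = rng.uniform(0.0, 1.0)  # expected service quality in [0, 1]
    premium = rng.uniform(0.0001, 0.9999)     # 0.01% to 99.99% overstatement
    return {
        "cost": cost,
        "expected_quality": expected_quality,
        "bid": cost * (1.0 + premium),        # dishonest, overstated bid
    }


def sample_quality(expected_quality: float, rng: random.Random) -> float:
    # Assumed support: the widest interval inside [0, 1] that is
    # symmetric around the expectation, so the mean is preserved.
    half_width = min(expected_quality, 1.0 - expected_quality)
    return rng.uniform(expected_quality - half_width,
                       expected_quality + half_width)
```

A truthful user would simply bid `cost`; the premium models the overstating behavior that the random and greedy schemes cannot prevent.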

5.2. Comparing Algorithms

We compare TOAM with random and greedy schemes. In the random scheme, the platform randomly chooses a winner if possible to perform a sensing job for every location at every time slot. In the greedy scheme, the platform randomly selects a winner if he or she is new in the system. Otherwise, the platform selects a winner with the highest estimated price-quality ratio among arriving users. In both schemes, the platform updates the learned expected service quality of the winner i following . In both the random and greedy schemes, the winners get paid as much as they ask.
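For comparison, the two baseline schemes can be sketched as below. The sketch reads "randomly selects a winner if he or she is new" as a random choice among the new arriving users, takes the price-quality ratio to be estimated quality divided by bid, and assumes positive bids; these readings, the dictionary keys and the function names are assumptions made for illustration.

```python
import random


def random_winner(arrivals, rng: random.Random):
    # Random scheme: any arriving user may win.
    return rng.choice(arrivals) if arrivals else None


def greedy_winner(arrivals, rng: random.Random):
    if not arrivals:
        return None
    # New users (no wins yet) are tried first, chosen at random.
    new_users = [u for u in arrivals if u["wins"] == 0]
    if new_users:
        return rng.choice(new_users)
    # Otherwise pick the best estimated quality per unit price.
    return max(arrivals, key=lambda u: u["est_quality"] / u["bid"])


def pay_as_bid(winner) -> float:
    # Both baselines pay the winner exactly the submitted bid.
    return winner["bid"]
```

Because both baselines pay exactly what is asked, a user who overstates the bid is paid more whenever he or she wins, which is the behavior TOAM is designed to remove.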

5.3. Performance Comparison

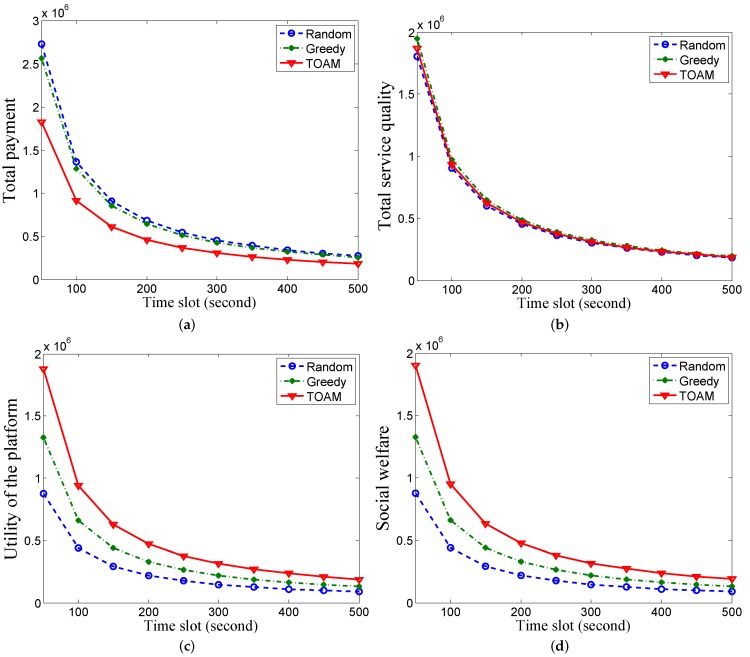

We evaluate the performance of different schemes in four metrics—average total payments, quality level of sensing service, profit of platform and social welfare under two datasets, respectively.

We vary the time slot from 50 s to 500 s with an increment of 50 s in the two datasets. Figure 3 shows the results under the Dartmouth trace dataset. In Figure 3a, the average payment of the platform in TOAM is on average 33.04% and 28.70% lower than that in random and greedy, respectively. This is because TOAM ensures that users provide truthful prices (their lowest bidding prices), while users overstate prices to pursue higher payments in random and greedy. The payment decreases as the slot length increases because the number of sensing jobs is reduced. Since the highest payment to a user is bounded, the payment of the platform decreases with fewer jobs. In Figure 3b, the average quality of sensing in TOAM is on average 3.36% greater than that in random and 4.04% lower than that in greedy. This is because some users with high costs and high qualities may be removed when a bidding bound is selected with some probability to construct the active user set.

Figure 3. Performance comparisons under the Dartmouth dataset with 100 users. (a) Comparison of average payment; (b) Comparison of average service quality; (c) Comparison of the average utility of the platform; (d) Comparison of average social welfare.

In Figure 3c, the utility of the platform in TOAM is 114.10% and 42.33% greater than that in random and greedy, respectively. This is because TOAM stimulates users to report their truthful costs while achieving service qualities similar to those of the other two schemes. In Figure 3d, the social welfare of the sensing system under TOAM is 117.98% and 43.89% greater than that in random and greedy, respectively. As users are overpaid by the platform as a reward for submitting truthful prices, the increase is a little greater than that in Figure 3c.
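The relation between the quantities in Figure 3c and Figure 3d can be made explicit under the usual definitions, which are assumed here rather than quoted from this section: the platform's utility is the monetary value of the collected quality minus its payments, and social welfare is the sum of the platform's and the users' utilities. Writing $q_j$, $p_j$ and $c_j$ for the realized quality, the payment and the true cost of the winner of job $j$ (notation introduced only for this illustration), and α for the coefficient from the simulation setup:

$$
U_{\text{platform}} = \sum_j \left(\alpha q_j - p_j\right), \qquad
U_{\text{users}} = \sum_j \left(p_j - c_j\right), \qquad
W = U_{\text{platform}} + U_{\text{users}} = \sum_j \left(\alpha q_j - c_j\right).
$$

Under these assumptions the payments cancel in $W$, so social welfare exceeds the platform's utility by exactly the surplus $\sum_j (p_j - c_j)$ that winners receive above their true costs.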

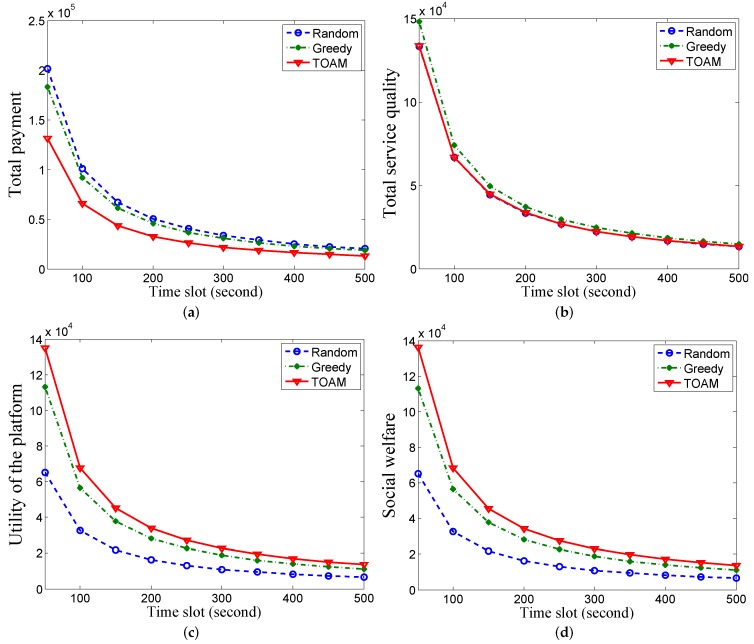

Figure 4 shows the results under synthetic MIT traces. Though the density of users in one AP is greater than that in Dartmouth, the results are similar to Figure 3 under Dartmouth traces. As these figures show similar trends, we omit other details.

Figure 4. Performance comparisons under the MIT dataset with 100 users. (a) Comparison of average payment; (b) Comparison of average service quality; (c) Comparison of the average utility of the platform; (d) Comparison of average social welfare.

6. Related Work

First, we review incentive mechanisms for MCS systems that consider one or two of the following factors: service quality, truthfulness and dynamically-arriving users. Then, we review truthful single-parameter mechanisms.

6.1. Incentive Mechanisms for the Mobile Crowd Sensing System

In order to encourage users to submit timely, high-quality event reports, a differentiated monetary incentive for a city management system is proposed in [24]. To estimate the quality of sensing data, the authors in [25] extend the expectation maximization (EM) algorithm by combining maximum likelihood estimation and Bayesian inference. The quality of sensing data is defined as the probability that a participant submits sensing data in a valid interval. In [20], the authors present an auction-based incentive for quality-aware and fine-grained MCS in order to minimize the expected expenditure of the platform, where mobile users submit their declared qualities for subtasks and bidding prices before selection. The above works consider the quality of sensing data, but users are static, and truthfulness is not guaranteed.

An online framework is proposed in [26] for an MCS system with stochastic arrivals of sensing requests and the dynamic participation of users. Based on stochastic Lyapunov optimization techniques combined with the idea of weight perturbation, the platform makes online decisions, including the admission of sensing requests and the purchase of sensing time from users. In [27], the authors design a recruitment framework for MCS campaigns in opportunistic networks. In this work, users are selected optimally in order to generate the required space-time paths across the network. In [28], an online task assignment algorithm follows the mobility model of users in MCS systems so as to minimize the average makespan of assigned tasks. While the dynamic participation of users is considered in these works, users’ service qualities and dishonest behaviors are ignored.

In [29], the authors design a truthful incentive based on a combinatorial auction for a participatory sensing system, where the platform announces a set of tasks, and users take subsets of these tasks according to their preferences. The system selects an optimal set of users to complete tasks in order to maximize its utility. In [30], the authors introduce a reverse auction framework to design a truthful incentive considering the dimension of location information. In these works, truthfulness is ensured, but users’ service qualities and dynamic participation are ignored.

In [8], the authors design an incentive mechanism that simultaneously characterizes both the information quality and the timeliness of a specific real-time-sensed quantity. Information quality is defined as the probability of the presence or absence of the sensing locations. Therefore, information quality can be sampled from a normal distribution with a variable learned from historical experience. In [9], the authors aim at increasing the quality of data directly and introduce gamification to location-based services. The quality indicator is calculated as the probability that the sensory data are assigned to the wrong category at one specific location. In order to increase the quality of the spatial coverage of locations, the system shows points to users to encourage them to move there. Considering users’ differential capabilities and uncontrollable mobility, the work in [10] designs an incentive to select a minimum subset of participants that satisfies the quality-of-information requirements of multiple tasks under a limited budget. This optimization problem is converted to a nonlinear knapsack problem. The system dynamically selects the users with the maximum marginal profit under the limited budget. Users’ sensing qualities for specific locations are associated with the probabilities of arriving there based on historical records. In [31], the authors utilize users’ daily activities, which are known a priori, to design a recruitment scheme for participatory sensing, where the similarities of users’ behaviors are utilized to predict the quality of data. Therefore, the allocation problem becomes a collaborative filtering problem. In these works, users’ service qualities are unknown, and users participate dynamically. However, users’ dishonest behaviors are neglected; that is to say, truthfulness is not guaranteed. Besides, these works rely on historical records to start their incentives. Therefore, they cannot be applied to a new MCS system.

The authors in [5] treat users’ participation levels as users’ service qualities and formalize a truthful incentive based on an auction model. In [6], the authors propose truthful incentive mechanisms based on both single-minded and multi-minded combinatorial auctions that take information about users’ qualities into account. However, users’ qualities are known a priori when winners are selected. In [7], a truthful incentive mechanism is designed based on a quality-driven auction, with an indoor localization system as an example. A probabilistic model is proposed to evaluate the reliability of the sensing data as the users’ service qualities. In the above works, truthfulness is guaranteed. However, users are static, and users’ service qualities are assumed to be known when the sensing platform selects users. In [32], the authors propose a privacy-preserving reputation system for participatory sensing applications, where users exchange information in a lawful manner, and misbehaving can cause them to lose their anonymity. In contrast, we use the payment to design a truthful mechanism, based on auction theory, that guarantees users’ honest behavior in bidding prices. Moreover, users’ service qualities are known as prior knowledge in [32], while they are uncertain in our work when the platform selects the winners.

The works in [33,34] are the pioneering works on designing online truthful incentive mechanisms based on online auctions. The work in [33] models the opportunistic appearance of users in crowd sensing areas. In the system, the platform decides whether to select users to undertake tasks when they arrive at the system. The authors in [34] design an online truthful incentive based on an online auction model under a budget constraint, taking users’ dynamic participation into account. The platform selects a subset of users before a specified deadline in order to maximize the value of service, which is assumed to be a non-negative monotone submodular function. In [35], a task is also assumed to be allocated to one user and can be completed in a single slot. The authors design a near-optimal truthful incentive mechanism for the online task allocation scenario, given uncertain arrivals of tasks, dynamic users and users’ strategic behaviors. In [36], a long-term user participation incentive based on a Lyapunov-based VCG auction is provided for a time-dependent and location-aware participatory sensing system. All of the above works consider dynamic users and the truthfulness of the mechanisms, but they ignore users’ differential service qualities.

6.2. Truthful Single-Parameter Mechanisms

Our work also relates to single-parameter mechanisms. Myerson [18] and Archer et al. [37] state that, for a mechanism with single-parameter users to be truthful, the allocation rule must be monotone in the reported bids. In [12], a general procedure is proposed to transform an ex post monotone allocation rule into a randomized mechanism that achieves truthfulness in expectation and individual rationality. Moreover, an ex post monotone allocation rule is proposed that takes the stochastic value of users into account. Since users are static in this allocation rule, it cannot be applied in our situation. In [38], the authors design an ex post truthful incentive by applying the transformation presented in [12] to a crowdsourcing application, where a series of binary labeling tasks needs to be completed. While users’ service qualities are unknown, users are static in this work. In [19], the authors design an ex post monotone allocation rule and transform it via [12] to achieve a truthful-in-expectation mechanism. In this work, the data on shared routers have dynamic prioritization, and demand models are stochastic. While the remaining capacity of routers is dynamic, this characteristic of capacity is different from users’ dynamic participation. Unlike these previous works, in this paper, we design a novel monotone allocation rule that considers dynamically-arriving users and transform it via [12] to achieve a truthful-in-expectation incentive in MCS systems.
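To make the monotonicity condition concrete, the sketch below evaluates the standard single-parameter payment for a seller in a reverse auction, $b \cdot A(b) + \int_b^{\bar{b}} A(u)\,du$, on a toy allocation rule. It is only an illustration of the characterization in [18,37]: the threshold rule, the bid cap of 1.0, the numerical integration and all function names are assumptions introduced here, and this is not TOAM's allocation rule nor the implicit payment computation of [12].

```python
def threshold_rule(bid, threshold=0.6):
    """Toy monotone allocation: the user wins iff the bid is below the threshold."""
    return 1.0 if bid < threshold else 0.0


def myerson_payment(bid, allocation, bid_cap=1.0, steps=10_000):
    """Approximate b * A(b) + integral of A(u) for u from b to bid_cap.

    The integral uses the midpoint rule; the allocation is assumed to be
    zero for bids above bid_cap.
    """
    if bid >= bid_cap:
        return bid * allocation(bid)
    width = (bid_cap - bid) / steps
    integral = sum(allocation(bid + (k + 0.5) * width) for k in range(steps)) * width
    return bid * allocation(bid) + integral


def expected_utility(true_cost, bid, allocation):
    """Expected payment minus expected cost for a user who reports `bid`."""
    return myerson_payment(bid, allocation) - true_cost * allocation(bid)
```

With this toy rule, every winning bid is paid approximately the threshold of 0.6 regardless of the bid itself, so a user with true cost 0.3 gains nothing by overstating, and a user with true cost 0.8 would lose money by understating to win.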

7. Conclusions

In this paper, for homogenous sensing tasks in a new MCS system, we propose TOAM, a truthful incentive mechanism based on online auction theory that considers both uncertain service qualities and dynamically-arriving users. We theoretically analyze three crucial properties of TOAM: truthfulness, individual rationality and computational efficiency. In the future, we will focus on a more complex online MCS system where users can compete for heterogeneous tasks and make proper schedules to undertake a bundle of tasks during a certain time.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (No. 61472404, No. 61472402, No. 61502457 and No. 61501125).

Author Contributions

The work presented in this paper is a collaborative development by all of the authors. Xiao Chen, Min Liu and Xiangnan He contributed to the idea of the incentive mechanism and designed the algorithms. Yaqin Zhou and Zhongcheng Li were responsible for some parts of the theoretical analysis and the paper check. Shuang Chen carried out simulations and analyzed the experimental results. All of the authors were involved in writing the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Sensorly. [(accessed on 1 August 2016)]. Available online: http://www.sensorly.com/

- 2. Mobile Millennium. [(accessed on 2 August 2016)]. Available online: http://traffic.berkeley.edu/

- 3. WeatherLah. [(accessed on 2 August 2016)]. Available online: http://www.weatherlah.com/

- 4. Mathur S., Jin T., Kasturirangan N., Chandrasekaran J., Xue W., Gruteser M., Trappe W. Parknet: Drive-by sensing of road-side parking statistics; Proceedings of the 8th International Conference on Mobile Systems, Applications, and Services; San Francisco, CA, USA. 15–18 June 2010.

- 5. Koutsopoulos I. Optimal incentive-driven design of participatory sensing systems; Proceedings of the 2013 IEEE International Conference on Computer Communications; Turin, Italy. 14–19 April 2013.

- 6. Jin H., Su L., Chen D., Nahrstedt K., Xu J. Quality of information aware incentive mechanisms for mobile crowdsensing systems; Proceedings of the 16th ACM International Symposium on Mobile Ad Hoc Networking and Computing; Hangzhou, China. 22–25 June 2015.

- 7. Wen Y., Shi J., Zhang Q., Tian X., Huang Z., Yu H., Cheng Y., Shen X. Quality-driven auction-based incentive mechanism for mobile crowd sensing. IEEE Trans. Veh. Technol. 2015;64:4203–4214. doi: 10.1109/TVT.2014.2363842.

- 8. Tham C.K., Luo T. Quality of contributed service and market equilibrium for participatory sensing. IEEE Trans. Mobile Comput. 2013;14:133–140.

- 9. Kawajiri R., Shimosaka M., Kashima H. Steered crowdsensing: Incentive design towards quality-oriented place-centric crowdsensing; Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing; Seattle, WA, USA. 13–17 September 2014.

- 10. Song Z., Liu C.H., Wu J., Ma J., Wang W. QoI-aware multitask-oriented dynamic participant selection with budget constraints. IEEE Trans. Veh. Technol. 2014;63:4618–4632. doi: 10.1109/TVT.2014.2317701.

- 11. Klemperer P. What really matters in auction design. J. Econ. Perspect. 2002;16:169–189. doi: 10.1257/0895330027166.

- 12. Babaioff M., Kleinberg R.D., Slivkins A. Truthful mechanisms with implicit payment computation; Proceedings of the 11th ACM Conference on Electronic Commerce; Cambridge, MA, USA. 7–11 June 2010.

- 13. Paladi N., Gehrmann C., Michalas A. Providing end-user security guarantees in public infrastructure clouds. IEEE Trans. Cloud Comput. 2016 doi: 10.1109/TCC.2016.2525991.

- 14. Yun C., Li X.C., Li Z.J., Jiang S.X., Li Y.L., Ji J., Jiang X.F. AirCloud: A cloud-based air-quality monitoring system for everyone; Proceedings of the 12th ACM Conference on Embedded Network Sensor Systems; Memphis, TN, USA. 3–6 November 2014.

- 15. Li Q., Li Y., Gao J., Zhao B., Fan W., Han J. Resolving conflicts in heterogeneous data by truth discovery and source reliability estimation; Proceedings of the 2014 ACM SIGMOD International Conference on Management of Data; Snowbird, UT, USA. 22–27 June 2014.

- 16. Su L., Li Q., Hu S., Wang S. Generalized decision aggregation in distributed sensing systems; Proceedings of the 2014 IEEE Real-Time Systems Symposium; Rome, Italy. 2–5 December 2014.

- 17. Wang S., Wang D., Su L., Kaplan L. Towards cyber-physical systems in social spaces: The data reliability challenge; Proceedings of the Real-Time Systems Symposium; Rome, Italy. 2–5 December 2014.

- 18. Myerson R.B. Optimal auction design. Math. Oper. Res. 1981;6:58–73. doi: 10.1287/moor.6.1.58.

- 19. Shnayder V., Parkes D.C., Kawadia V., Hoon J. Truthful prioritization for dynamic bandwidth sharing; Proceedings of the 15th International Symposium on Mobile Ad Hoc Networking and Computing; Philadelphia, PA, USA. 11–14 August 2014.

- 20. Wang J., Tang J., Yang D., Wang E., Xue G. Quality-aware and fine-grained incentive mechanisms for mobile crowdsensing; Proceedings of the 2016 IEEE 36th International Conference on Distributed Computing Systems; Nara, Japan. 27–30 June 2016.

- 21. Kotz D., Henderson T., Abyzov I., Yeo J. CRAWDAD Data Set Dartmouth/Campus/Movement. [(accessed on 8 March 2005)]. Available online: http://crawdad.org/dartmouth/campus/

- 22. Hsu W.J., Spyropoulos T., Psounis K., Helmy A. Modeling time-variant user mobility in wireless mobile networks; Proceedings of the 2007 IEEE International Conference on Computer Communications; Anchorage, AK, USA. 6–12 May 2007.

- 23. Balazinska M., Castro P. Characterizing mobility and network usage in a corporate wireless local-area network; Proceedings of the 2nd International Conference on Mobile Systems, Applications, and Services; San Francisco, CA, USA. 5–8 May 2003.

- 24. Mukherjee T., Chander D., Mondal A., Dasgupta K., Kumar A., Venkat A. CityZen: A cost-effective city management system with incentive-driven resident engagement; Proceedings of the 2014 IEEE 15th International Conference on Mobile Data Management; Brisbane, Australia. 15–18 July 2014.

- 25. Peng D., Wu F., Chen G. Pay as how well you do: A quality based incentive mechanism for crowdsensing; Proceedings of the 16th ACM International Symposium on Mobile Ad Hoc Networking and Computing; Hangzhou, China. 22–25 June 2015.

- 26. Han Y., Zhu Y. Profit-maximizing stochastic control for mobile crowd sensing platforms; Proceedings of the 2014 IEEE 11th International Conference on Mobile Ad Hoc and Sensor Systems; Philadelphia, PA, USA. 27–30 October 2014.

- 27. Karaliopoulos M., Telelis O., Koutsopoulos I. User recruitment for mobile crowdsensing over opportunistic networks; Proceedings of the 2015 IEEE International Conference on Computer Communications; Hong Kong, China. 26 April–1 May 2015.

- 28. Xiao M., Wu J., Huang L., Wang Y., Liu C. Multi-task assignment for crowdsensing in mobile social networks; Proceedings of the 2015 IEEE International Conference on Computer Communications; Hong Kong, China. 26 April–1 May 2015.

- 29. Yang D., Xue G., Fang X., Tang J. Crowdsourcing to smartphones: Incentive mechanism design for mobile phone sensing; Proceedings of the 18th Annual International Conference on Mobile Computing and Networking; Istanbul, Turkey. 22–26 August 2012.

- 30. Feng Z., Zhu Y., Zhang Q., Ni L.M., Vasilakos A.V. TRAC: Truthful auction for location-aware collaborative sensing in mobile crowdsourcing; Proceedings of the 2014 IEEE International Conference on Computer Communication; Toronto, ON, Canada. 27 April–2 May 2014.

- 31. Zeng Y., Li D. A self-adaptive behavior-aware recruitment scheme for participatory sensing. Sensors. 2015;15:23361–23375. doi: 10.3390/s150923361.

- 32. Michalas A., Komninos N. The lord of the sense: A privacy preserving reputation system for participatory sensing applications; Proceedings of the 2014 IEEE Symposium on Computers and Communications; Madeira, Portugal. 23–26 June 2014.

- 33. Zhang X., Yang Z., Zhou Z., Cai H., Chen L., Li X. Free market of crowdsourcing: Incentive mechanism design for mobile sensing. IEEE Trans. Parallel Distrib. Syst. 2014;25:3190–3200. doi: 10.1109/TPDS.2013.2297112.

- 34. Zhao D., Li X.Y., Ma H. How to crowdsource tasks truthfully without sacrificing utility: Online incentive mechanisms with budget constraint; Proceedings of the 2014 IEEE International Conference on Computer Communication; Toronto, ON, Canada. 27 April–2 May 2014.

- 35. Feng Z., Zhu Y., Zhang Q., Zhu H., Yu J., Cao J., Ni L.M. Towards truthful mechanisms for mobile crowdsourcing with dynamic smartphones; Proceedings of the 2014 IEEE 34th International Conference on Distributed Computing Systems; Madrid, Spain. 30 June–3 July 2014.

- 36. Gao L., Hou F., Huang J. Providing long-term participation incentive in participatory sensing; Proceedings of the 2015 IEEE International Conference on Computer Communication; Hong Kong, China. 26 April–1 May 2015.

- 37. Archer A., Tardos E. Truthful mechanism for one-parameter agents; Proceedings of the 2001 IEEE Symposium on Foundations of Computer Science; Las Vegas, NV, USA. 14–17 October 2001.

- 38. Jain S., Gujar S., Bhat S., Zoeter O., Narahari Y. An incentive compatible multi-armed-bandit crowdsourcing mechanism with quality assurance. Arx. Prepr. 2014.