Abstract

Background/aims

Participant understanding is a key element of informed consent for enrollment in research. However, participants often do not understand the nature, risks, benefits, or design of the studies in which they take part. Research on medical practices, which studies standard interventions rather than new treatments, has the potential to be especially confusing to participants because it is embedded within usual clinical care. Our objective in this randomized study was to compare the ability of a range of multimedia informational aids to improve participant understanding in the context of research on medical practices.

Methods

We administered a Web-based survey to members of a proprietary online panel sample selected to match national U.S. demographics. Respondents were randomized to one of five arms: four content-equivalent informational aids (animated videos, slideshows with voiceover, comics, and text), and one no-intervention control. We measured knowledge of research on medical practices using a summary knowledge score from 10 questions based on the content of the informational aids. We used ANOVA and pairwise t-tests to compare knowledge scores between arms.

Results

There were 1500 completed surveys (300 in each arm). Mean knowledge scores were highest for the slideshows with voiceover (65.7%), followed by the animated videos (62.7%), comics (60.7%), text (57.2%), and control (50.3%). Differences between arms were statistically significant except between the slideshows with voiceover and animated videos and between the animated videos and comics. Informational aids that included an audio component (animated videos and slideshows with voiceover) had higher knowledge scores than those without an audio component (64.2% versus 59.0%, p<.0001). There was no difference between informational aids with a character-driven story component (animated videos and comics) and those without.

Conclusions

Our results show that simple multimedia aids that use a dual-channel approach, such as voiceover with visual reinforcement, can improve participant knowledge more effectively than text alone. However, the relatively low knowledge scores suggest that targeted informational aids may be needed to teach some particularly challenging concepts. Nonetheless, our results demonstrate the potential to improve informed consent for research on medical practices by using multimedia aids that include simplified language and visual metaphors.

Keywords: Research on medical practices, comparative effectiveness research, pragmatic clinical trials, multimedia, video, informed consent, research ethics

Introduction

Clinical researchers rely on the informed consent process to demonstrate respect for the autonomy of research participants. Central to this process is the assumption that research participants understand the nature, risks, benefits, and design of the study at the time they agree to participate.1–4 Typical efforts to achieve informed consent focus on the provision of information to prospective research participants, but evidence that participants actually comprehend the disclosed information is often absent,5–6 nor is it clear what degree of comprehension is needed to establish that a participant’s consent is truly “informed.” A growing body of evidence reveals that many participants do not understand the studies they join;7–11 for example, one review found that study participants understood the concept of randomization only 50% of the time.12 Not only does this evidence demonstrate that informed consent could be significantly improved, but misunderstanding of a study’s goals and processes may also result in lower participation rates.13–14

The growth of research on medical practices embedded within learning health care systems, which compares commonly used interventions rather than new interventions, further complicates the informed consent process.15 Prior work in this area has revealed widespread misconceptions and confusions about this kind of research—for example, patients’ beliefs that doctors always know which of several accepted medications is best or that research always includes a placebo control, as well as confusion about the goals of research versus clinical care.16–17 Introducing prospective participants to the concept of research on medical practices may therefore be especially challenging, as it contradicts common assumptions about medical expertise and how research studies work.

There have been a number of efforts to improve informed consent in clinical research settings by using multimedia informational aids. These multimedia aids sometimes include the use of an audio component and/or a character-driven story or narrative, among other enhanced features. However, there is no clear standard for how much of an improvement in understanding is needed to justify the cost of developing a multimedia aid, and reviews of the literature have shown these efforts to have mixed results.18–19 In some studies, multimedia aids have improved participant understanding,20–21 while others have shown no significant improvement in knowledge despite participants’ reports that they found them worthwhile.22 None of these studies have addressed understanding of research on medical practices specifically.

Our earlier work has suggested that patients perceive character-driven animated videos with an audio component to be helpful in learning about these concepts.16,23–24 Here, we present results from a randomized study comparing four content-equivalent informational aids about research on medical practices, including our original animated videos, and a control arm. We hypothesized that: (1) informational aids would improve participant understanding more than the no-intervention control, (2) audio aids would improve understanding more than non-audio aids, and (3) aids based on a character-driven story would improve understanding more than aids without a character-driven story. Our findings have implications for how the characteristics of different informational aids help prospective participants learn about research and can be applied to improve the process of informed consent for research on medical practices.

Methods

Study design

We conducted a self-administered, Web-based survey using an experimental between-group design to compare the effects of four informational aids on respondents’ understanding of core aspects of research on medical practices, including variation in medical practice and the meaning of randomization. Respondents were randomly assigned to one of four informational aid arms or a control group, which allowed us to control for potential confounders and enabled us to draw causal inferences about the effects of the informational aids on understanding.

Study sample

Survey Sampling International (SSI) made the survey available to members of its online research panel, consisting of individuals who had previously signed up to participate in survey research. Our survey was open to English-reading U.S. adults. SSI recruited panel members by generic emailed messages several times per week. Respondents received a small incentive as part of the panel’s points-based reward program. Respondents were screened to meet quota minimums matching U.S. population characteristics by age, gender, region, ethnicity, race, education, and income according to the 2014 U.S. Census. Eligible respondents were randomly assigned to one of the five study arms. We used sequential enrollment until 300 respondents had completed each arm. We determined sample size based on power calculations assuming t-tests with power=.80 to detect a difference in proportion of knowledge scores of .07 with alpha=.05. Survey administration took place between October 28 and November 9, 2015.
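The sample-size reasoning above can be illustrated with the standard normal-approximation formula for comparing two independent means. This is only a sketch under stated assumptions: the difference (.07), alpha, and power come from the text, but the standard deviation of 0.30 is an assumed value chosen for illustration (the one used in the study is not reported), and the function name `n_per_arm` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_arm(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-arm n for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) * sd / delta) ** 2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_power = NormalDist().inv_cdf(power)          # quantile matching the target power
    return math.ceil(2 * ((z_alpha + z_power) * sd / delta) ** 2)


# delta, alpha, and power are taken from the text; sd = 0.30 is an ASSUMPTION.
required = n_per_arm(delta=0.07, sd=0.30)
print(required)
```

Under that assumed standard deviation, the approximation lands somewhat below the 300 completes per arm that were enrolled; a different assumed spread would shift the result.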

Survey development

We based the format of this survey on our prior survey of patients’ attitudes about research on medical practices.23–24 We followed the tailored design method for web-based surveys and adhered to basic principles of classic measurement, including multi-item operationalization, to guide question development and structure.25–26

We established face and content validity of the survey questions through expert review and cognitive interviews with prospective study participants. SSI panel members completed the survey in a mock-up of its online format while simultaneously explaining their answers via telephone to a study-team interviewer, who used a combination of the think-aloud and probing methods.27 We completed a total of 3 rounds of interviews with 15 interviews per round, iteratively refining survey questions and response categories as well as evaluating technical functionality.

Informational aids and development

We provided respondents in all arms, including the control, with a brief definition of research on medical practices in the introduction to the survey (Figure 1). Beyond this information, the informational aids were equivalent in content but different in delivery approach, including two with an audio component and two based on a character-driven story, as described below. The content of each of the four informational aids was split into two sections, each conveying information about core concepts in research on medical practices. The first section introduced the concept of variation in usual medical practices, using the example of different doctors prescribing different antihypertensive medications and describing the multiple factors that can influence a doctor’s choice to prescribe a certain medication. The second section described two approaches to research on medical practices: medical record review and randomization. It briefly described each research method and how the method can be used to compare commonly prescribed medications. The features of each informational aid are described below. The survey instrument and all informational aids are available at https://rompethics.iths.org/study-details.

Figure 1.

Definition of research on medical practices

Animated videos (audio, character-driven)

In a previous study,16,23 we developed whiteboard-animated videos with Booster Shot Media, a health communications multimedia production company. Whiteboard animation is a style of video that shows a time-lapse of the process of hand-drawing illustrations on a whiteboard background. These videos presented a character-driven story of several patient-doctor interactions. The two videos were 3:20 and 3:07 minutes long, and respondents were required to play the entirety of each video without fast-forwarding in order to advance in the survey. Further details on the development of these videos are described elsewhere.16,23

Slideshows with voiceover (audio, not character-driven)

We developed our slideshows with voiceover by beginning with the script from the animated videos. We removed the character-driven elements from the script but otherwise maintained the factual content. We developed slides to highlight the key points from the script using Microsoft PowerPoint, including stock photos from the PowerPoint clip-art gallery. The two slideshows were 1:11 and 2:13 minutes long, and respondents were required to play the entirety of each slideshow without fast-forwarding in order to advance in the survey.

Comics (no audio, character-driven)

We created the comics collaboratively with Booster Shot Media. These comics used the same hand-drawn style as the animated videos but were presented as still images with word balloons and text boxes, without any audio component. We maintained the character-driven story from the animated videos, making adjustments to the script to fit the comic strip format. The two comics comprised 8 and 7 rows, with 1 to 3 panels per row.

Text (no audio, not character-driven)

We presented a text-only version of the scripts from the slideshows with voiceover. The two sections were 171 and 314 words long.

Measures

Our primary outcome was respondent understanding of the information about research on medical practices provided by the informational aids. A series of knowledge questions followed each section of the informational aids. Each knowledge question was presented as a statement with response options True, False, or Don’t Know. We designed the knowledge questions to discriminate between basic recognition, recall, and inferential processing of information presented in all four informational aids.28 We refined this intent through cognitive interviews. Evaluation of the discriminatory capacity of the knowledge measure is presented in the Results section.

In addition to the knowledge questions, the survey asked about topics related to informed consent and risk in the context of research on medical practices, as well as standard demographic questions, for a total of 39 questions. Results from those questions are not reported here. The informational aids also each had a third section about informed consent, which was followed by knowledge questions specific to consent issues; these are not included in our knowledge score because they do not address our primary outcome, knowledge of research on medical practices.

Statistical analysis

We based summary knowledge scores on the sum of the number of correct responses divided by the total number of possible correct responses (10), reported as a percentage. We used data from the 300 completed surveys per study arm for analysis, evaluating within- and across-arm differences in demographics and attrition using ANOVA and cell chi-square. We report basic descriptive statistics. We used ANOVA (generalized linear models) and Tukey's t-tests for least square difference to compare knowledge scores across arms. We performed all statistical analysis using SAS© 9.4.
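The summary knowledge score described above can be sketched as follows. The answer key and the respondent shown are hypothetical and are not study data; treating "Don't Know" as incorrect is an assumption that follows from counting only correct responses.

```python
def knowledge_score(responses: list[str], answer_key: list[str]) -> float:
    """Percent of knowledge questions answered correctly.

    Any response that does not match the key, including "Don't Know",
    counts as incorrect (an assumption made for this sketch).
    """
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer_key must be the same length")
    correct = sum(r == k for r, k in zip(responses, answer_key))
    return 100.0 * correct / len(answer_key)


# Hypothetical 10-item answer key and one hypothetical respondent.
ANSWER_KEY = ["True", "False", "True", "True", "False",
              "False", "True", "False", "True", "False"]
respondent = ["True", "False", "Don't Know", "True", "True",
              "False", "True", "False", "Don't Know", "False"]

score = knowledge_score(respondent, ANSWER_KEY)
print(score)
```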

IRB review, informed consent, and privacy

The Stanford University, University of Washington, and University of Minnesota institutional review boards approved this study with a waiver of documentation of informed consent. SSI collected the survey data, and members of the research team only received aggregate data.

Results

Overall completion rate

Of the 2016 panel members who entered the survey portal, 1565 completed the survey and 1500 were included in final data, resulting in an overall completion rate of 74.4%. Final data excluded 65 respondents because their responses failed one or more of the following data quality parameters: (1) time to complete the survey (not counting time required for videos) was less than one-third of the median completion time; or (2) there was evidence of acquiescence bias, suggested by sequential multiple-choice questions answered at the same extreme where some variation was expected. We used data from a total of 1500 completed surveys, with 300 completes per arm, for analysis.
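The arithmetic behind these counts, together with the first data quality rule, can be sketched as below. The three counts come from the text; the completion times are hypothetical, the helper name `too_fast` is an assumption, and the acquiescence-bias rule is not sketched because its exact criteria are not given.

```python
from statistics import median

# Counts reported in the text.
entered, completed, analyzed = 2016, 1565, 1500

excluded = completed - analyzed             # respondents dropped for data quality
completion_rate = 100 * analyzed / entered  # percent of entrants in the final data


def too_fast(seconds: float, all_seconds: list[float]) -> bool:
    """Flag a completion time shorter than one-third of the median time."""
    return seconds < median(all_seconds) / 3


# Hypothetical completion times in seconds (not study data).
times = [600, 540, 720, 150, 660]
flags = [too_fast(t, times) for t in times]
print(excluded, round(completion_rate, 1), flags)
```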

Respondent characteristics

Despite use of random assignment, our sample did not achieve equivalence in distribution across arms for three characteristics: Hispanic/Latino ethnicity, education, and income (Table 1). Similar distributional differences in ethnicity were also present at entry to the survey, with no discernible or interpretable pattern. No statistically significant differences in ethnicity were present in a comparison of survey completers and non-completers (p=0.8362). Distributional differences in educational level were primarily due to a lower proportion of respondents with higher educational attainment in the animated video arm compared to the other four arms. Overall, the difference in distribution of education across survey completers and non-completers was not significant. The difference in distribution of income was significant and was also present at entry to survey. Due to non-equivalence across arms, to isolate the effect of multimedia format on knowledge, we controlled for ethnicity, education, and income in our between-arm analysis.

Table 1.

Respondent characteristics

| | Overall | Animated videos | Slideshows with voiceover | Comics | Text | Control |
|---|---|---|---|---|---|---|
| Mean age (std) | 43.2 (16.5) | 43.4 (16.5) | 44.1 (16.8) | 44.5 (16.7) | 42.3 (16.5) | 41.6 (15.9) |
| Gender (% male) | 48.6 | 50.7 | 48.3 | 42.0 | 50.0 | 52.0 |
| Hispanic or Latino (%)** | 16.5 | 21.3 | 9.7 | 11.0 | 15.0 | 25.3 |
| Race (%) | | | | | | |
| Asian | 5.0 | 2.7* | 4.3 | 8.3* | 6.3 | 3.3 |
| Black or African American | 12.0 | 14.7 | 10.3 | 13.0 | 10.3 | 11.7 |
| White/Caucasian | 71.0 | 70.0 | 77.0 | 68.3 | 67.3 | 72.3 |
| Other | 12.0 | 12.7 | 8.3* | 10.3 | 16.0 | 12.7 |
| Education* | | | | | | |
| Less than HS | 12.0 | 10.3 | 8.7* | 12.0 | 17.7* | 11.3 |
| High School | 30.0 | 34.7 | 27.0 | 33.7 | 25.3 | 29.3 |
| Some College or Vocational | 29.0 | 32.3 | 29.7 | 21.3* | 26.7 | 35.0 |
| College Graduate | 19.0 | 16.3 | 21.0 | 19.0 | 20.0 | 18.7 |
| Post-Graduate | 10.0 | 6.3 | 13.7 | 14.0 | 10.3 | 5.7 |
| Income* | | | | | | |
| Less than $20k | 17.0 | 18.3 | 10.7* | 17.7 | 22.0* | 16.3 |
| $20,000–$39,999 | 20.9* | 26.3* | 23.7* | 19.3 | 17.7 | 17.7 |
| $40,000–$59,999 | 16.9 | 18.7 | 18.7 | 14.7 | 16.0 | 16.3 |
| $60,000–$79,999 | 13.4 | 12.7 | 12.7 | 13.0 | 13.0 | 15.7 |
| $80,000–$99,999 | 7.9 | 9.3 | 8.3 | 6.7 | 7.7 | 7.7 |
| $100,000–$149,999 | 17.1* | 10.7* | 18.7 | 19.3 | 17.3 | 19.3 |
| $150,000 or More | 6.8* | 4.0* | 7.3 | 9.3 | 6.3 | 7.0 |

*p<.05; **p<.01. Chi-square, ANOVA with multiple paired t-tests

Knowledge measure

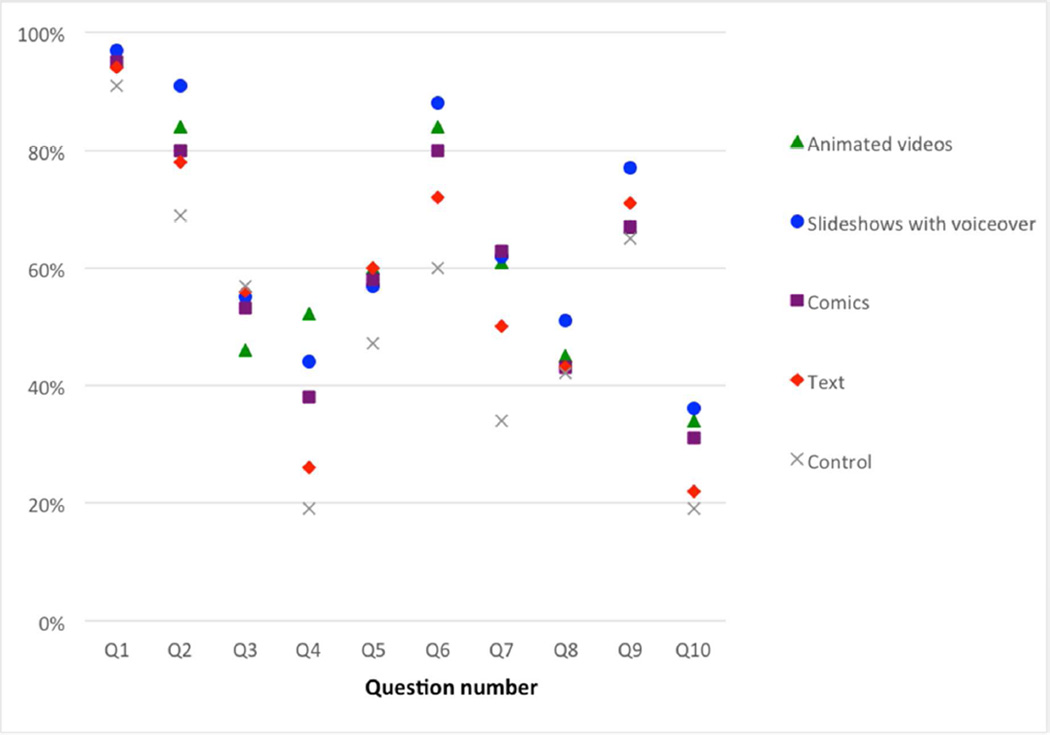

The overall mean percent correct on each question across arms ranged from a low of 28.5% (Q10) to a high of 94.3% (Q1) (Online Appendix A). There was also variation between arms for most questions: the within-question variation by arm was statistically significant (p≤.05) for all individual knowledge questions except Q8 (p=.20), providing strong support for within-arm discriminatory ability of knowledge questions (Figure 2). Further, respondents who were randomized to the slideshow with voiceover arm scored higher on 6 of the 10 knowledge questions than those in all other arms.

Figure 2.

Individual knowledge questions: percent correct by arm

Difference in knowledge scores across arms

The unadjusted mean knowledge scores were highest for respondents in the slideshow with voiceover arm (65.7 [SD=16.7]), followed by the animated video (62.7 [SD=18.8]), comic (60.7 [SD=18.5]), text (57.2 [SD=18.3]), and control (50.3 [SD=16.8]) arms.

Table 2 presents the comparison and tests for difference in mean knowledge scores between arms. The statistical test used for comparison is a Tukey's t-test, comparing mean squared differences. In light of distributional differences found for ethnicity, education, and income across arms, we controlled for these characteristics for the t-tests of significance. As indicated, the difference in knowledge between the control arm and each informational arm was statistically significant for all four informational aids (p<.0001). Differences in knowledge scores between arms were statistically significant between all arms except between the slideshows with voiceover and animated videos, and between the animated videos and comics.

Table 2.

Difference in adjusted mean knowledge scores between arms

| | Animated videos | Slideshows with voiceover | Comics | Text |
|---|---|---|---|---|
| Slideshows with voiceover | 1.6 (p=.1137) | -- | -- | -- |
| Comics | 1.6 (p=.1139) | 3.2** (p=.0015) | -- | -- |
| Text | 3.9** (p=.0001) | 5.5** (p<.0001) | 2.3* (p=.0215) | -- |
| Control | 8.8** (p<.0001) | 10.3** (p<.0001) | 7.2** (p<.0001) | 4.9** (p<.0001) |

Tukey's t-test standardized range (least square difference). *p≤.05; **p≤.0001; controlling for ethnicity (Hispanic/Latino), education, and income

Difference in knowledge across multimedia format of informational aids

Knowledge scores were significantly higher for the two informational aids with an audio component (animated videos and slideshows with voiceover) than in the two without (comics and text): 64.2% vs. 59.0% (p<.0001). There was no significant difference between the two informational aids with a character-driven story component (animated videos and comics) and the two without (slideshows with voiceover and text) (Table 3).

Table 3.

Comparison of adjusted mean knowledge scores between multimedia formats

| | Character-driven story | No character-driven story | Total |
|---|---|---|---|
| Audio | Animated videos | Slideshows with voiceover | Audio* 64.2 |
| No audio | Comics | Text | No audio* 59.0 |
| Total | Character-driven story 61.9 | No character-driven story 61.3 | |

Tukey's t-test standardized range (least square difference). *p<.0001; controlling for ethnicity (Hispanic/Latino), education, and income
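The two-by-two structure of Table 3 can be illustrated by averaging the unadjusted arm means reported earlier in the Results. This is a rough sketch only: it uses unadjusted means and a simple average (reasonable here because each arm has 300 respondents), so it will not exactly reproduce the covariate-adjusted values in the table. The dictionary keys and the helper name `marginal_mean` are hypothetical.

```python
# Unadjusted arm means (percent correct) as reported in the text.
ARM_MEANS = {
    ("audio", "character"): 62.7,        # animated videos
    ("audio", "no_character"): 65.7,     # slideshows with voiceover
    ("no_audio", "character"): 60.7,     # comics
    ("no_audio", "no_character"): 57.2,  # text
}


def marginal_mean(factor_index: int, level: str) -> float:
    """Average the arm means that share one level of one factor.

    factor_index 0 selects the audio factor, 1 the character-driven factor.
    A simple average is used because every arm has the same n.
    """
    values = [m for key, m in ARM_MEANS.items() if key[factor_index] == level]
    return sum(values) / len(values)


audio = marginal_mean(0, "audio")
no_audio = marginal_mean(0, "no_audio")
character = marginal_mean(1, "character")
no_character = marginal_mean(1, "no_character")
print(audio, no_audio, character, no_character)
```

The unadjusted audio versus no-audio gap is roughly five percentage points, while the character-driven versus non-character-driven gap is a fraction of a point, which is in line with the pattern in the adjusted values.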

Discussion

Multimedia format

Overall, respondents who viewed either the slideshows with voiceover or the animated videos performed best on the knowledge questions. Each of these aids contained both audio and visual components: the slideshows combined a descriptive voiceover with minimal images and text in a bulleted, summary format, while the animated videos used voiceover to tell the story of a series of moving cartoons. Our results accord with the cognitive theory of multimedia learning, which states that people learn best when provided with limited but cohesive information simultaneously through aural and visual channels29–31 and has been supported in the empirical literature.32–33 The slideshows with voiceover may also have benefited from being relatively short and simple, allowing for low cognitive load and easy information processing,30,34 and from containing some, but not too much, text.35 Moreover, these results align with the informal feedback we received throughout our cognitive interview process from interviewees who stated that they preferred getting information through multiple channels. However, while we found a statistically significant difference between aids with and without an audio component, our results do not address the value of investing in multimedia aids to gain a relatively small increase in understanding, which is a tradeoff that may differ depending on the specific study and the content of the multimedia aid. Nonetheless, to the extent that increased understanding is indicative of a more robust informed consent process, the ability of our multimedia aids to improve prospective participants’ understanding suggests that there is room to improve informed consent.

Of our four informational aids, respondents randomized to the text-only approach performed worst on the knowledge questions; this is an important finding given that the text was identical to the narration in the slideshows with voiceover. Notably, this arm most closely approximates the traditional approach to informed consent for research, which suggests there is room for improving the consent process using one or more of our multimedia approaches. In practice, of course, traditional written informed consent is intended to be accompanied by a discussion, and in fact discussions have been shown to be one of the most effective ways of improving participant understanding.36,18–19 Our study did not include discussion in any arm, but presumably a discussion could supplement, rather than be replaced by, any of the informational aids in our study.37 Indeed, our results suggest that moving toward simple multimedia approaches to informed consent can help participants understand complex concepts, presented in a consistent and standardized manner, and facilitate more informed discussions with members of the research team. Moreover, this can be done at relatively low cost; our slideshows with voiceover were filmed entirely in-house with simple recording software. However, this does not take into account the effort and resources that we invested to develop effective language and visual metaphors when initially developing the animated videos, which we later used to create the slideshows with voiceover.

Difficult concepts

Although some concepts seemed to be effectively taught by at least some of our informational aids, respondents performed poorly on other questions in all arms. Indeed, even in the highest-scoring arm, respondents answered on average only two-thirds of the questions correctly, which aligns with similarly low knowledge scores found in reviews of the literature on informed consent for research participation.18–19 This highlights the question of how much understanding is necessary for consent to be truly “informed.” While the Common Rule identifies required elements that must be disclosed during the informed consent process [45 CFR § 46.116], there is no standard for how well a participant must understand that information prior to consenting. Some have argued that disclosure alone, without comprehension, is insufficient for a truly “informed” consent,38–39 but alternative models do not specify what or how much participants must understand.

Our findings do not answer this question but do identify certain pitfalls to understanding that arose in the context of our study. First, we created our original animated videos for use in a separate study16,23 and therefore not all topics received equal attention, likely resulting in some topics being more effectively taught than others.

Second, some of our knowledge questions may have resulted in lower scores because they contradicted respondents’ basic assumptions about research. Prior qualitative studies have identified widespread misunderstanding about research on medical practices, particularly when participants compare it to the well-known archetype of a placebo-controlled clinical trial of new treatments.16–17 Our study suggests that at least some aspects of research on medical practices are difficult for people to understand without explicit and direct teaching. This is an important point for researchers who are interested in developing informed consent materials about topics that are unfamiliar to prospective participants, and it highlights the need for a clear approach to teaching key learning goals. Strategies could draw on those described in the educational psychology literature such as signaling important information, using visuals to highlight difficult concepts, and actively involving participants.30,34 Furthermore, participant understanding can be evaluated and the efficacy of multimedia aids strengthened with a robust needs assessment and user testing process.40

Character-driven story component

There was no significant difference between our two informational aids that were based on a character-driven story (animated videos and comics) and those that were not (slideshows with voiceover and text). For the linear transmission of information from “teacher” to “learner,” more didactic pedagogical techniques seem to perform better. However, this does not preclude the possibility that the narrative story approach that characterizes comics and animations may be effective in a different setting. Narrative story-based informational aids have been shown to be effective for targeted communications to specific sub-populations—for example, immigrants and refugees,41 low-literacy communities,42 and the mentally ill.43–44 Comics and animation may also be useful for clinical purposes that are outside the scope of our study, such as encouraging changes in health behaviors,45–48 reducing health disparities by using culturally targeted informational aids,49 or teaching information over time.46 Because the comics medium requires a collaboration with readers to construct meaning, it is essentially non-hierarchical and as such may not readily lend itself to top-down approaches to delivering information.

Moreover, our animated videos were the first of our informational aids to be created and were initially developed for another study;16,23 in order to maintain content equivalence, the language and structure of these videos were the baseline for our other informational aids. Therefore, the benefits of our investment in producing these videos are likely understated, as they included not only the character-driven story component, but also simplification of language and development of visuals and metaphors. Indeed, shortening consent forms and making them more comprehensible has consistently proven to improve participant understanding.19

Limitations

There were differential completion rates across arms. However, the intent of our study was not to achieve external validity, but rather to achieve internal validity. Our informational aids were experiential interventions that were designed and expected to include differential respondent burden. We evaluated nonresponse patterns and confirmed that the nonresponse conformed to this assumption of differential respondent burden. Therefore, we used only data from the 300 respondents per arm who completed the survey. We also evaluated other plausible approaches and subsequent assumptions about nonresponse, which confirmed the robustness of our statistical results.

Furthermore, our sample of SSI panel members, which consisted of individuals with internet access and an interest in participating in surveys, is not generalizable to the greater U.S. population. However, our randomized design allowed us to achieve internal validity and identify intervention-specific differences between groups.

An additional limitation is that our survey presented a hypothetical scenario rather than an actual consent process and, as noted in the Discussion, did not include an opportunity to discuss the study with a researcher. While the scores on our knowledge measure revealed significant differences in understanding between arms, these scores alone are insufficient to measure the adequacy and quality of informed consent. Further study is needed to understand how these informational aids perform in the context of an actual clinical trial.

Conclusions and future directions

This study shows that, of four content-equivalent approaches to providing information about research on medical practices, our text-only informational aid was least effective at educating respondents, despite being the closest approximation to the way that research consent is typically provided in practice. Pragmatic trials in which prospective participants are randomized between consent approaches in the setting of an actual trial are needed to build on our results. In the meantime, our results show that short slideshows or videos that combine voiceover with images and visual content reinforcement can be a more effective way of educating prospective study participants. The slideshow medium is relatively simple to produce, and both slideshows and videos are adaptable to a range of technologies, such as mobile phones and websites, that can improve accessibility and engagement for many prospective participants. However, even with multimedia informational aids, overcoming the knowledge deficit about research on medical practices is a challenging task and will require concerted efforts if researchers are to enable prospective participants to give truly “informed” consent.

Supplementary Material

Acknowledgments

Thank you to Gary Ashwal and Alex Thomas of Booster Shot Media for producing our animated videos and comics; Bryant Phan for research assistance; Steven Joffe for contributions to survey design; the Greenwall Foundation for funding this study; and the National Center for Advancing Translational Sciences (NCATS) for funding the initial development of our animated videos and comics (grants UL1 TR000423-07S1 to the University of Washington/Seattle Children’s Research Institute and UL1 TR001085 to Stanford University).
