Abstract

Complex patterns of neural activity appear during up-states in the neocortex and sharp waves in the hippocampus, including sequences that resemble those during prior behavioral experience. The mechanisms underlying this replay are not well understood. How can small synaptic footprints engraved by experience control large-scale network activity during memory retrieval and consolidation? We hypothesize that sparse and weak synaptic connectivity between Hebbian assemblies are boosted by pre-existing recurrent connectivity within them. To investigate this idea, we connect sequences of assemblies in randomly connected spiking neuronal networks with a balance of excitation and inhibition. Simulations and analytical calculations show that recurrent connections within assemblies allow for a fast amplification of signals that indeed reduces the required number of inter-assembly connections. Replay can be evoked by small sensory-like cues or emerge spontaneously by activity fluctuations. Global—potentially neuromodulatory—alterations of neuronal excitability can switch between network states that favor retrieval and consolidation.

Author summary

Synaptic plasticity is the basis for learning and memory, and many experiments indicate that memories are imprinted in synaptic connections. However, basic mechanisms of how such memories are retrieved and consolidated remain unclear. In particular, how can one-shot learning of a sequence of events achieve a sufficiently strong synaptic footprint to retrieve or replay this sequence? Using both numerical simulations of spiking neural networks and an analytic approach, we provide a biologically plausible model for understanding how minute synaptic changes in a recurrent network can nevertheless be retrieved by small cues or even manifest themselves as activity patterns that emerge spontaneously. We show how the retrieval of exceedingly small changes in the connections across assemblies is robustly facilitated by recurrent connectivity within assemblies. This interaction between recurrent amplification within an assembly and the feed-forward propagation of activity across the network establishes a basis for the retrieval of memories.

Introduction

The idea of sequential activation of mental concepts and neural populations has deep roots in the history of the cognitive sciences [1–3] as well as its share of criticism [4]. In one of the most influential works in neuroscience, Donald Hebb extended this concept by suggesting that neurons that fire simultaneously should be connected to each other, thus forming a cell assembly that represents an abstract mental concept [5]. He also suggested that such assemblies could be connected amongst each other, forming a network of associations in which one mental concept can ignite associated concepts by activating the corresponding assemblies. Hebb referred to the resulting sequential activation as well as the underlying circuitry as “phase sequence”. We will refer to such connectivity patterns as “assembly sequences”.

The notion of Hebbian assemblies has triggered a huge number of experimental studies (reviewed in [6]), but relatively few experiments have been dedicated to the idea of assembly sequences [7, 8]. Many theoretical studies focused on feedforward networks, also known as synfire chains [9–12]. Synfire chains are characterized by a convergent-divergent feedforward connectivity between groups of neurons, where pulse packets of synchronous firing can propagate through the network. Few works were also dedicated on synfire chains embedded in recurrent networks [13–15], however, without explicitly considering recurrent connectivity within groups.

In this study, we combine the concept of feedforward synfire chains with the notion of recurrently connected Hebbian assemblies to form an assembly sequence. Using numerical simulations of spiking neural networks, we form assemblies consisting of recurrently connected excitatory and inhibitory neurons. The networks are tuned to operate in a balanced regime where large fluctuations of the mean excitatory and inhibitory input currents cancel each other. In this case, distinct assemblies that are sparsely connected in a feedforward fashion can reliably propagate transient activity. This replay can be triggered by external cues for sparse connectivities, but also can be evoked by background activity fluctuations for larger connectivities. Modulating the population excitability can shift the network state between cued-replay and spontaneous-replay regimes. Such spontaneous events may be the basis of the reverberating activity observed in the neocortex [16–18] or in the hippocampus [19–21]. Finally, we show that assembly sequences can also be replayed in a reversed direction (i.e., reverse replay) as observed during replay of behavior sequences [22, 23].

Results

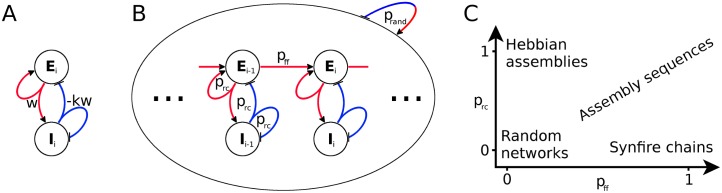

To test Hebb’s hypothesis on activity propagation within a recurrent network, we use a network model of excitatory and inhibitory conductance-based integrate-and-fire neurons. The network has a sparse random background connectivity prand = 0.01 [24]. We form a neural assembly (Fig 1A) by picking M excitatory (M = 500 if not stated otherwise) and M/4 inhibitory neurons and connecting them randomly with probability prc, resulting in a mutually coupled excitatory and inhibitory population. The new connections are created independently and in addition to the background connections. To embed an assembly sequence in the network, we first form 10 non-overlapping assemblies. The assemblies are then connected in a feedforward manner where an excitatory neuron from one group projects to an excitatory neuron in the subsequent group with probability pff (Fig 1B). Thus, by varying the feedforward and the recurrent connectivities, we can set the network structure anywhere in the spectrum between the limiting cases of synfire chains (pff > 0, prc = 0) and uncoupled Hebbian assemblies (pff = 0, prc > 0), as depicted in Fig 1C.

Fig 1. Network connectivity.

A: Schematic of an assembly i consisting of an excitatory (Ei) and an inhibitory (Ii) population. Red and blue lines indicate excitatory and inhibitory connections, respectively. The symbols w and −kw denote total synaptic couplings between populations. B: Sketch of network connectivity. The inhomogeneous network is randomly connected with connection probability prand. A feedforward structure consisting of 10 assemblies (only i − 1 and i shown) is embedded into the network. Each assembly is formed by recurrently connecting its neurons with probability prc. Subsequent assemblies are connected with feedforward probability pff between their excitatory neurons. C: Embedded structure as a function of connectivities.

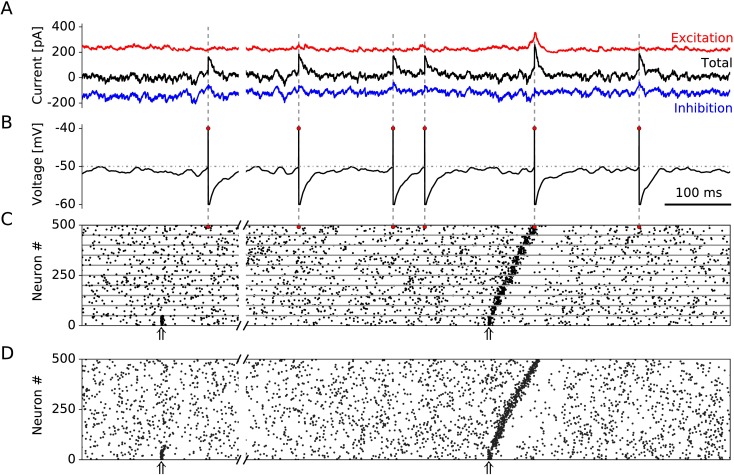

To ensure that the spontaneous activity of the network is close to an in-vivo condition, we use Hebbian plasticity of inhibitory connections [25], which has been shown to generate a balance of excitatory and inhibitory currents in individual neurons (Fig 2A). As a consequence, spikes are caused by current fluctuations (Fig 2B), and the network settles into a state of asynchronous irregular (AI) firing (Fig 2C).

Fig 2. Example of 1 second network activity.

The first 250 ms depict the dynamics of a network with random and recurrent connections only (prc = 0.1). The same network after embedding feedforward connectivity (pff = 0.04) is shown in the last 750 ms. A: Input currents experienced by an example neuron. The excitatory and inhibitory inputs are denoted by the red and blue traces, respectively. The sum of all currents (synaptic, injected, and leak currents) is shown in black. B: Membrane potential of the same neuron. Red dots denote the time of firing. C: Raster plot of spike times of 500 neurons (50 neurons per group are shown). The red dots correspond to the firing of the example neuron in A and B. The stimulation times of the first assembly are denoted with upward arrows. D: Raster plot of a continuous sequence. Each neuron is connected to its M neighbours (in the range ) with probability prc (left-hand side); and afterwards (right-hand side), a feedforward connectivity pff to the following M neurons (in the range ) is introduced.

To simulate a one-shot sequence learning paradigm, we initially embed assemblies that have recurrent connectivity prc only and are not connected via feedforward connections (Fig 2, left-hand side). A stimulation of the first assembly does not evoke a replay. Then, in a sham learning event, new feedforward connections are created between subsequent assemblies followed by a short phase (∼ 5 seconds) with inhibitory plasticity turned on in order to properly balance the network. If we then stimulate the first group in the embedded assembly sequence (Fig 2C, right-hand side), the network responds with a wave of activity that traverses the whole sequence, as hypothesised by Hebb [5]. We refer to such a propagation of activity wave as replay. As excitatory and inhibitory neurons are part of the assemblies, they both have elevated firing rates during group activation. Despite the high population transient firing rates (∼ 100 spikes/sec for excitatory, and ∼ 60 spikes/sec for inhibitory neurons when using a Gaussian smoothing window with width σ = 2 ms) single neurons are firing at most one spike during assembly activation. Because excitatory neurons in an assembly transiently increase their population firing rate from 5 to 100 spikes/sec, a replay can be inferred from the large change in activity, which resembles replay in hippocampal CA networks [19]. On the other hand, interneurons have higher background firing rates of ∼ 20 spikes/sec and smaller maximum firing rates of ∼ 60 spikes/sec during replay. As a result, interneurons have a much lower ratio of peak to background activity than excitatory neurons in our model, in line with the reported lower selectivity of interneurons [26].

We chose the particular wiring scheme of discrete assemblies partly due to the resemblance of the discrete windows of activity defined by the fast oscillations during hippocampal replay: ripples during sharp-wave ripples (SWRs) and gamma cycles during theta sequences. Additionally, our approach facilitates the model description and gives a leverage for an analytical treatment. Accordingly, in Fig 2A–2C, we modeled discrete assemblies of size M = 500, which have a distinct recurrent connectivity prc = 0.1 within each assembly, and a feedforward connectivity pff = 0.04 between two assemblies in the sequence. However, in biological networks, assemblies could potentially overlap, making a clear-cut distinction between feedforward and recurrent connectivities difficult. To study assembly sequences in a more continuously wired sequence, we use an extreme case where no assemblies are defined at all. All neurons are arranged in a linear sequence, and every neuron is connected to its M = 500 neighbouring excitatory cells (M/2 preceding and M/2 succeeding) with probability prc. Recurrent connections to and from inhibitory neurons are embedded analogously in a continuous manner. To imitate the connectivity pattern from the discrete model, every excitatory neuron is connected to the M following neurons without overlapping with the recurrent connections (i.e., the range from to ) with probability pff. After stimulating the first M neurons with a transient input, the whole sequence is replayed (Fig 2D). Compared to the discrete assembly sequence (Fig 2C) where the same connection probabilities were used, the replay is continuous and qualitatively similar. In what follows, however, we return to the discrete assemblies because this description facilitates a connection of simulations with an analytical treatment.

Sparse feedforward connectivity is sufficient for replay

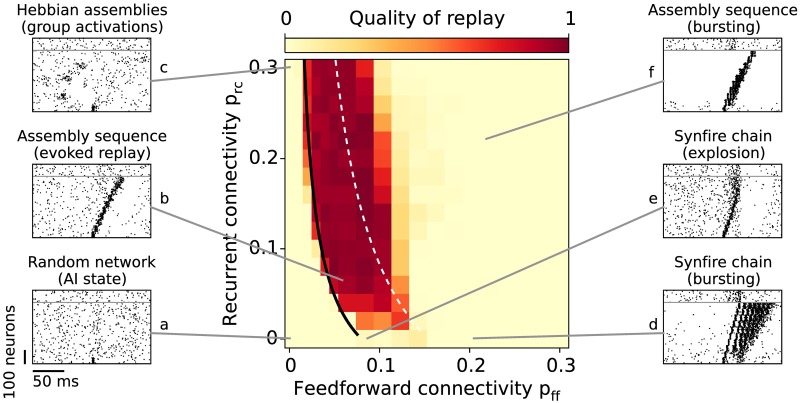

Whether an assembly sequence is replayed is largely determined by the connectivities within and between assemblies. Therefore, we first study how the quality of replay depends on the recurrent (prc) and the feedforward (pff) connectivities. The network dynamics can be roughly assigned to regimes where the connectivity is too weak, strong enough, or too strong for a successful replay. We use a quality measure of replay, which determines whether activity propagates through the sequence without evoking a “pathological” burst of activity (Fig 3). In such “pathological” cases the spatiotemporal structure of replay is often preserved while the background activity deviates from the AI state, or the whole network is involved in the events. To disregard such events, the quality measure punishes replays that (1) evoke bursting of neurons within assemblies during activation or (2) activate the whole network (for details see Materials and Methods).

Fig 3. Evoked replay.

Assembly-sequence activation as a function of the feedforward pff and the recurrent prc connectivities. The color code denotes the quality of replay, that is, the number of subsequent groups firing without bursting (see Materials and Methods). The black curve corresponds to the critical connectivity required for a replay where the slope c of the transfer function (See Materials and Methods and Eq 1) is matched manually to fit the simulation results for connectivities prc = 0.08 and pff = 0.04. The slope c is also estimated analytically (dashed white line). The raster plots (a-f) illustrate the dynamic regimes observed for different connectivity values; neurons above the gray line belong to the background neurons.

Naturally, for a random network (pff = 0, prc = 0, Fig 3a) the replay fails because the random connections are not sufficient to drive the succeeding groups. In the case of uncoupled Hebbian assemblies (e.g., pff = 0, prc = 0.30), groups of neurons get activated spontaneously (Fig 3c), which is reminiscent to the previously reported cluster activation [27] but on a faster time scale. Already for sparse connectivity (e.g., pff = prc = 0.06) the assembly-sequence replay is successful (Fig 3b). In the case of denser recurrence (prc ≈ 0.10), a pulse packet propagates for even lower feedforward connectivity (pff ≈ 0.03). The feedforward connectivity that is required for a successful propagation decreases with increasing recurrent connectivity because assemblies of excitatory and inhibitory neurons can increase small fluctuations of the input through “balanced amplification” [28, 29] as summarized in Materials and methods, section “Balancing the Network”.

For high feedforward (pff ≲ 0.10) but low recurrent (prc ≲ 0.10) connectivity, the replay has low quality. In this case, excitatory neurons receive small recurrent inhibitory input compared to the large feedforward excitation, because the recurrent connection probability is lower than the feedforward one. Due to the lack of sufficiently strong inhibitory feedback within the assembly (compared to the strong feedforward excitation), the propagating activity either leads to run-away excitation (Fig 3e), also called synfire explosion [30, 31], or to epileptiform bursting (Fig 3d). When both recurrent and feedforward connectivities are high, the inhibition is able to keep the propagating activity transient (Fig 3f). However, because of the strong input each neuron is firing multiple times within a small time window. The fact that neurons in each group (except the first) are firing multiple times during a replay alters the spatio-temporal structure of the sequence. While activity propagates from one group to another, neurons do not necessary spike in order due to the many emitted spikes. Another reason to assign low quality to such replays is the fact that the network dynamics is deviating from the AI background state because neurons that are part of the sequence tend to fire almost exclusively during replays but not outside replays.

To get an analytical understanding of the network, we use a linear approximation of the network dynamics to derive conditions under which replay is successful. The key determinant for replay is an amplification factor , which measures how large is the rate ri+1 in group i+1 in relation to the rate in the previous group i.

In the case where the amplification factor is smaller than one (ri+1 < ri), the activity propagating through the assembly sequence will decrease at each step and eventually vanish, while for amplification larger than one (ri+1 > ri) one would expect propagating activity that increases at each step in the sequence. An amplification factor κ(pff, prc) = 1 represents the critical value of connectivity for which the replay is marginally stable, and the magnitude of activations is similar across groups. In the Materials and Methods we show that a linear model can approximate the amplification factor by

| (1) |

where c = 0.25 nS-1 is a constant that fits the model to the data (see Materials and Methods). We can interpret κ as an “effective feedforward connectivity” because the recurrent connectivity (prc) effectively scales up the feedforward connectivity pff. We can match the analytical results for critical connectivities to the numerical simulation, and show a qualitative fit between the approaches (black line in Fig 3).

We note that the number of excitatory synapses that is needed for an association, M2(prc + pff), weakly depends on the position on the line κ = 1. By solving we find that the minimum number of new connections required for a replay is obtained for prc = 0 because lines for which prc + pff = const have slope of −1 in Fig 3, and the slope of the line defined by κ = 1 is more negative. For example, when prc = 0.0, we need 40 new synapses; for prc = 0.05, we need 50 new synapses; and for prc = 0.2, 111 synapses are required for a new association. However, as feedforward connections might be created/facilitated on demand in one-shot learning, it is advantageous to keep their number low at the cost of higher recurrent connectivity, which has more time to develop prior to the learning. We extend this arguments further in the Discussion.

In summary, the recurrent connections within an assembly play a crucial role in integrating and amplifying the input to the assembly. This facilitation of replay is predominantly due to the excitatory-to-excitatory (E-E) recurrent connections, and not due to the excitatory-to-inhibitory (E-I) connections, a connectivity also known as “shadow pools” [31]. We tested that embedding shadow pools and omitting the E-E connectivity within assemblies has no beneficial effect on the quality of replay.

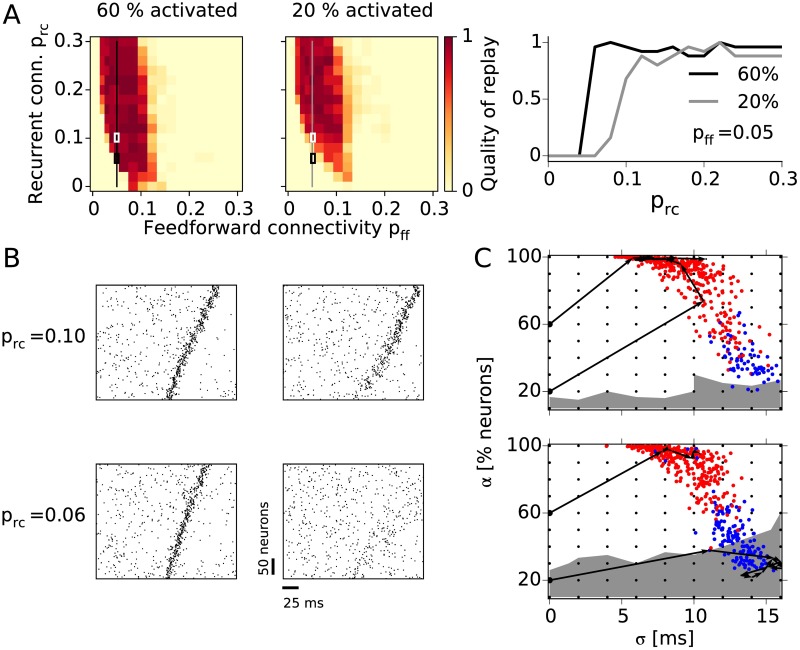

Recurrent connections are important for pattern completion

Neural systems have to deal with obscure or incomplete sensory cues. A widely adopted solution is pattern completion, that is, reconstruction of patterns from partial input. We examine how the network activity evolves in time for a partial or asynchronous activation of the first assembly.

To determine the capability of our network to complete patterns, we quantify the replay when only a fraction of the first group is stimulated by external input. If 60% of the neurons in the first group (strong cue) are synchronously activated (Fig 4A, left panel), the quality of replay is virtually the same as in the case of full stimulation (100% activated) in Fig 3. However, when only 20% of the neurons (weak cue) are simultaneously activated (Fig 4A, middle panel), we see a deterioration of replay mostly for low recurrent connectivities. The effect of the recurrent connections is illustrated in the right-most panel in Fig 4A where quality of replay is shown as a function of prc while the feedforward connectivity was kept constant (pff = 0.05).

Fig 4. Pattern completion.

A: Quality of replay after partial activation of the first group for cue size 60% (left panel) and 20% (middle) as a function of feedforward and recurrent connectivity. The right-most panel shows the quality replay after a cue activation (20% and 60%) as a function of the recurrent connectivity (prc) while the feedforward connectivity is constant (pff = 0.05). B: Examples of network activity during 60% (left) and 20% (right) cue activation. The top and bottom raster plots correspond to assembly sequences with higher (prc = 0.10, top) and lower (prc = 0.06, bottom) recurrent connectivity, highlighted in A with white and black rectangles, respectively. C: State-space portraits representing the pulse-packet propagation. The activity in each group is quantified by the fraction of firing excitatory neurons (α) and the standard deviation of their spike times (σ). The initial stimulations are denoted with small black dots while the colored dots denote the response of the first group to the stimulations; red dot if the whole sequence is activated, and blue otherwise. Stimulations in the region with white background result in replays, while stimulating in the gray region results in no replay. The black arrows illustrate the evolution of pulse packets during the replays in B. Top: prc = 0.10; bottom: prc = 0.06.

Small input cues lead to a weak activation of the corresponding assembly. In the case of stronger connectivity (e.g., prc) this weak activity can build up and result in a replay as shown in the example from Fig 4B. The top and bottom rows of raster plots correspond to two assembly sequences with different recurrent connectivities, as highlighted by the rectangles in Fig 4A, while left and right columns show the activity during strong and weak cues, respectively. In the case of pff = 0.05 and prc = 0.10 (Fig 4B, top-right), the weak cue triggers a wide pulse packet with large temporal jitter in the first groups, which gradually shapes into a synchronous pulse packet as it propagates through the network. On the other hand, for a smaller recurrent connectivity (prc = 0.06), the 20% partial activation triggers a rather weak response that does not result in replay (Fig 4B, bottom-right).

The quality of replay depends not only on the number of neurons that are activated but also on the temporal dispersion of the pulse packet. Here, we adopt a quantification method that represents the activity evolution in a state-space portrait [10]. Fig 4C shows the time course of the fraction α of cells that participate in the pulse packet and the temporal dispersion σ of the packet as the pulse propagates through the network. The state-space representation of two assembly sequences with equal feedforward (pff = 0.05) but different recurrent connectivity are shown in Fig 4C (top: prc = 0.10, bottom: prc = 0.06). For each assembly sequence we repeatedly stimulated the first group with varying cue size α and time dispersion σ, depicted by the black dots. Depending on the strength and dispersion of the initial stimulation, the dynamics of a network can enter one of two attractor points. For high α and low σ the pulse packet propagates, entering the so-called synfire attractor (white background). On the other hand, for low α and high σ the pulse packet dies out resulting in low asynchronous firing (gray background). The black-arrow traces in Fig 4C are example trajectories that describe the propagating pulse packets from Fig 4B in the (α − σ) space.

To summarize, increasing both the recurrent and feedforward connectivity facilitates the replay triggered by weak and dispersed inputs. Recurrent connectivity is particularly important for pattern completion.

Spontaneous replay

An interesting feature of assembly sequences is the potential emergence of spontaneous activations, that is, a replay when no specific input is given to the network. Random fluctuations in the network can be amplified by the feedforward structure and give rise to a spontaneous wave of propagation.

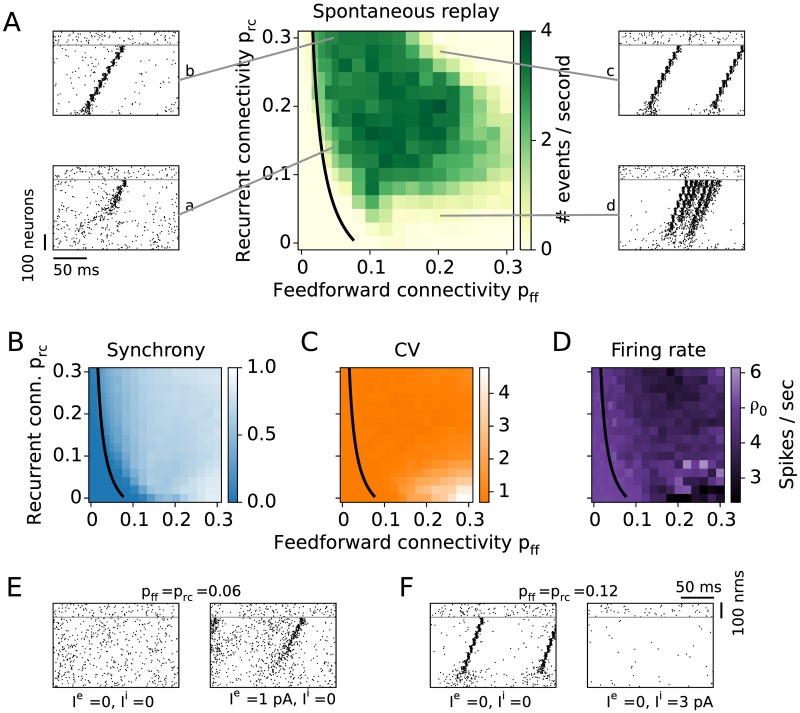

We find that spontaneous and evoked replay share various features such as sequential group activation on the background of AI network activity (Fig 5A, rasters a and b). As in the case of evoked replay, for exceedingly large connectivities the network dynamics can be dominated by epileptiform bursting activity (Fig 5A, rasters c and d).

Fig 5. Spontaneous network activity.

A: The rate of spontaneous sequence activation is measured in the unperturbed network. The black curve is the analytical result for the lower bound of successful propagation from Fig 3. Examples of spontaneous replays for different connectivities are shown in the raster plots a-d. Synchrony (B), coefficient of variation (C), and firing rate (D) are averaged over the neurons in the last group of the sequence. E: Spontaneous events modulated by an external input. For low enough connectivities no spontaneous events occur (left). A small additional constant current input to the whole excitatory population (Ie = 1 pA) generates spontaneous replays (right). F: A densely connected network shows replays (left). Once the inhibitory population receives an additional constant current input (Ii = 3 pA), the firing rate decreases and no spontaneous events occur (right).

To assess spontaneous replay, we quantify the number of replay events per time taking into account their quality, i.e., huge bursts of propagating activity are disregarded as replay. The rate of spontaneous activation increases as a function of both the feedforward (pff) and the recurrent (prc) connectivity (Fig 5A). For large connectivities (pff, prc > 0.20) the quality of the spontaneous events is again poor and mostly dominated by strong bursts (Fig 5A, raster c). The dynamics of networks with large feedforward and low recurrent connections is dominated by long-lasting bursts of activity consisting of multiple sequence replays within each burst (Fig 5A, raster d). The maximum rate of activations does not exceed 4 events per second because the inhibitory synaptic plasticity adjusts the inhibition such that the excitatory firing rate is close to 5 spikes/sec.

The starting position of spontaneous replays largely depends on the network connectivity. Sequences with low prc are seldom initiated in the first group(s), while for high prc spontaneous replays occur predominantly at the beginning of the embedded sequence. Spontaneous replays for sequences with low prc arise from noise fluctuations that are amplified mainly by the underlying feedforward connections. Fluctuations propagate through a few groups until they result in a full-blown replay. On the other hand, to explain the preference of starting position at the beginning of the sequence for high prc, we refer to the case of disconnected Hebbian assemblies (Fig 3A, panel c) that get activated by the noise fluctuations. In case of weak feedforward connectivity (e.g., pff < 0.02), these fluctuations do not always activate the following assemblies due to insufficient feedforward drive. On the other hand, for pff > 0.03 even a weak activation of an assembly will lead to a replay of the rest of the sequence. If replays were to start at random locations in the sequence, neurons in the later section of the sequence would participate in more replays than those earlier in the sequence, increasing the firing rate in these neurons. The inhibitory plasticity, which homeostatically regulates the rate, will hence increase the amount of inhibition in these later assemblies, with the effect of reducing the background activity. Because this in turn suppresses the fluctuations that trigger replays, spontaneous replays are less likely to be initiated in later assemblies.

To better characterize spontaneous dynamics, we refer to more extensive measures of the network dynamics. First, to account for deviations from the AI network state, we measure the synchrony of firing among neurons within the assemblies. To this end, we calculate the average pairwise correlation coefficient of spike trains of neurons within the same group. A low synchrony (value ∼0) means that neurons are uncorrelated, while a high synchrony (value ∼1) reveals that neurons fire preferentially together and seldom (or not at all) outside of an assembly activation. Because the synchrony builds up while activity propagates from one group to the next, a synchronization is most pronounced in the latter groups of the sequence. Therefore, we use correlations within the last group of the sequence as a measure of network synchrony (Fig 5B). The average synchrony is low (∼0) for low connectivities (pff, prc < 0.10) and increases as a function of both pff and prc. In the case of high prc, neurons participating in one assembly excite each other, and hence tend to fire together. On the other hand, for high pff, neurons within an assembly receive very similar input from the preceding group, so they fire together. This attachment of single neurons to group activity has two major consequences: first, it alters the AI state of the network, and second, it alters the stochastic behavior of the neurons, leading to more deterministic firing and bursting.

The network exhibits frequent epileptiform bursting in the case of high feedforward and low recurrent connectivities (raster plot examples in Fig 3, panel d, and Fig 5A, panel d). To assess this tendency of neurons to fire in bursts, we calculate the coefficient of variation (CV) for individual neurons’ spike trains. The average CV of neurons in the last group of the sequence exhibits Poisson-like irregular firing (CV value ∼ 1) for a large range of parameters (Fig 5C). However, for high pff (≥ 0.10) and low prc (≤ 0.10), the CV value exceeds 1, in line with irregular and bursting firing. In this parameter region, small fluctuations of activity in the first groups of the sequence are strongly amplified by the underlying feedforward connectivity, leading to ever increasing activity in the following groups (Fig 5A, panel d). Because of the variable shapes and sizes of these bursts, they are not always classified as spontaneous activations in Fig 5A. Highly bursty firing (CV > 3) and high synchrony (∼ 1) suggest that the network cannot be properly balanced.

To test whether the inhibitory plasticity can balance the network activity when assembly sequences are embedded, we measure the average firing rate in the last group of the sequence (Fig 5D). The firing rate deviates from the target rate of 5 spikes/sec mostly for high feedforward connectivity (pff ≳ 0.15). This inability of inhibition to keep the firing rate at the target value can be explained by the frequent replays that shape a stronger inhibitory input during the balancing of the network. Once the inhibition gets too strong, neurons can fire only when they receive excessive amount of excitation. Thus, in the case of high clustering, e.g., strong assembly connectivity, the inhibitory plasticity prevents the neurons from reaching high firing rates, but is unable to sustain an AI state of the network.

Control of spontaneous and cued replay by external input

Further, we investigate how spontaneous and cued replay are related. The black line in Fig 5A refers to the analytical approximation for connectivities that enable evoked replay. Compared to the connectivity region of successfully evoked replays in Fig 3, the region for spontaneous replays in Fig 5 is slightly shifted to the top and to the right. Therefore, in only a narrow area of the parameter space, sequences can be replayed by external input but do not get spontaneously activated. This finding suggests that to embed a sequence with high signal-to-noise ratio of propagation, the connectivities should be chosen appropriately, in line with previous reports [32]. In what follows we show that the size of this region can be controlled by external input to the network.

We demonstrate how a small amount of global input current to all excitatory or all inhibitory neurons can modulate the network and shift it between AI and spontaneous-replay regimes (Fig 5E and 5F). In the first example, the connectivities are relatively low (pff = prc = 0.06) such that replay can be evoked (Fig 3) but no spontaneous activations are present (Fig 5A and 5E, left). After injecting a small additional current of only 1 pA into the whole excitatory population, the network becomes more excitable, i.e., the firing rate rises from 5 to 12 spikes/sec and spontaneous replays do arise (Fig 5E, right).

On the other hand, in a network with high connectivities (pff = prc = 0.12), replay can be reliably evoked (Figs 3 and 4A) and also occurs spontaneously (Fig 5A). An additional input current of 3 pA to the inhibitory population decreases the firing rate of the excitatory population from 5 to 0.33 spikes/sec and shifts the network from a regime showing frequent spontaneous replays to a no-replay, AI regime (Fig 5F, left and right, respectively). Nevertheless, replays can still be evoked as in Fig 3. Hence, the spontaneous-replay regime and the average firing rate in the AI state can be controlled by global or unspecific external current.

In summary, the balanced AI network state and successfully evoked replay of assembly sequences can coexist for a range of connectivities. For higher connectivities, the underlying network structure amplifies random fluctuations, leading to spontaneous propagations of activity between assemblies. A dynamical control of the rate of spontaneous events is possible through external input, which modulates the network activity and excitability. In the brain, such a switching between regimes could be achieved via neuromodulators, in particular via the cholinergic or adrenergic systems [33, 34].

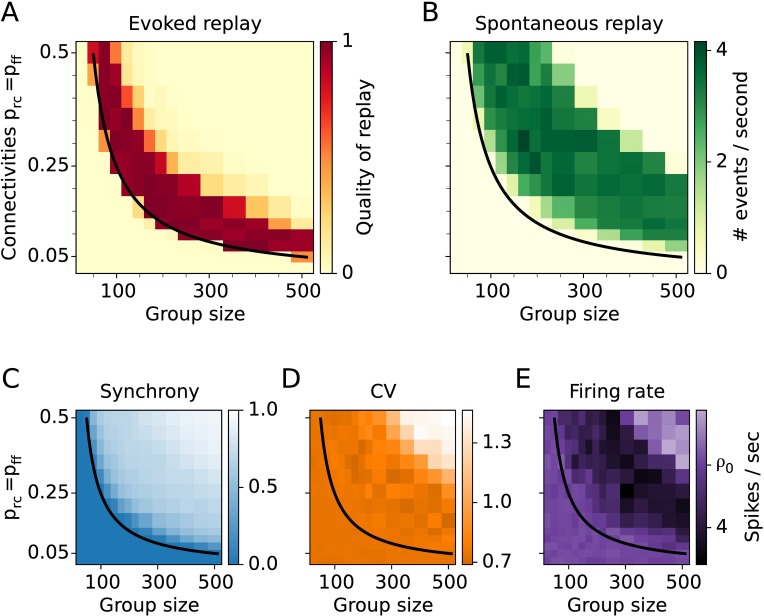

Smaller assemblies require higher connectivity

So far, we have shown basic properties of sequences at fixed assembly size M = 500. To determine the role of this group size in replay, we vary M and the connectivity while keeping the size of the network fixed. As we have already explored how recurrent and feedforward connections determine replay individually, we now consider the case where they are equal, i.e., pff = prc = p.

Assembly sequences can be successfully replayed after stimulation for various assembly sizes (Fig 6A). Smaller assemblies require denser connectivity (e.g., p = 0.25 for M = 100), while larger assemblies allow sparser connectivity (e.g., p = 0.05 for M = 500). Moreover, assemblies as small as 20 neurons are sufficient to organize a sequence given the condition of all-to-all connectivity within and between assemblies. The analytically derived critical value of effective connectivity κ = 1 is in agreement with the numerical simulations (black line in Fig 6A).

Fig 6. Assembly-sequence activation for various group sizes and connectivities.

A: Simulation results for the quality of replay. B: Rate of spontaneous replay. C: Synchrony. D: Coefficient of variation E: Firing rate. ρ0 = 5 spikes/sec is the target firing rate. In C, D, and E quantities are averaged over the neurons in the last group of the sequence. The black line is an analytical estimate for the evoked replay as in Figs 3 and 5.

To further characterize the network dynamics for varying group size, we measure the rate of spontaneous activations of assembly sequences in undisturbed networks driven solely by constant input. As indicated in Fig 6B, spontaneous replays occur for a limited set of parameters resembling a banana-shaped region in the (M, p) plane. The parameter region for spontaneous replays partly overlaps with that of evoked replay. Again, there is a narrow range of parameters to the right of the black line in Fig 6B for which sequences can be evoked by external input while not being replayed spontaneously. As shown above, the size of this region can be controlled by external input to the whole network (Fig 5E and 5F).

To further assess the spontaneous dynamics, we measure the firing synchrony of neurons within the last group. The synchrony grows as function of both connectivity and group size (Fig 6C). The fact that the synchrony approaches the value one for higher connectivity and group size indicates that the network dynamics gets dominated by spontaneous reactivations. The simulation results reveal that neurons always fire rather irregular with coefficient of variation (CV) between 0.7 and 1.4 (Fig 6D). Because the recurrent and the feedforward connectivities are equal (pff = prc = p), the inhibition is always strong enough and does not allow epileptiform bursting activity. This behavior is reflected in a rather low maximal value of the (CV<1.4) compared to the results from Fig 5, where the CV could exceed values of 4 for low prc. The measured firing rates in the last assembly are at the target firing rate of ρ0 = 5 spikes/sec for parameter values around and below the critical value κ = 1 (Fig 6E). However, for increasing connectivity p and increasing group size M, the firing rate deviates from the target, indicating that the inhibitory plasticity cannot keep the network fully balanced.

To conclude, the assembly size M plays an important role in the network activity. The critical values of connectivity and group size for successful propagation are inversely proportional. Thus, the analytics predicts that larger assemblies of several thousands neurons require only a fraction of a percent connectivity in order to propagate synchronous activity. However, for this to happen, the group size M must be much smaller than the network size NE. Here NE was fixed to 20,000 neurons for easier comparison of scenarios, but results are also valid for larger networks (see Materials and Methods). The good agreement between the mean-field theory and the numerical results suggests that the crucial parameter for assembly-sequence replay is the total input one neuron is receiving, e.g., the number of input synapses.

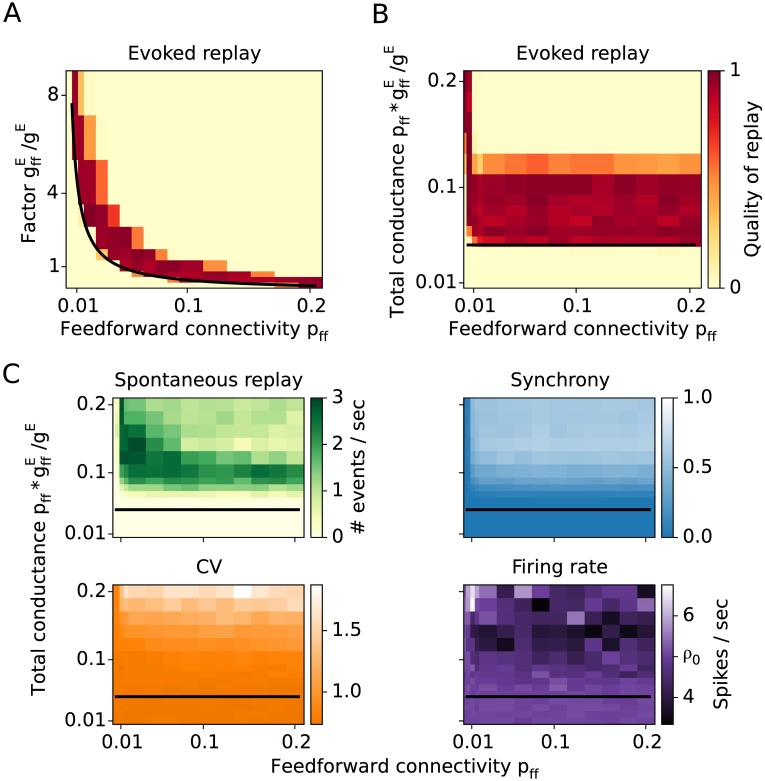

Stronger synapses are equivalent to more connections

Up to this point, all excitatory synaptic connections in our model had constant and equal strengths. By encoding an assembly sequence we implicitly altered the structural connectivity by creating new synaptic connections. This case of structural plasticity can also occur when silent synapses are turned into functionally active connections upon learning [35, 36]. However, learning new associations might also be possible through a change of synaptic strength of individual connections [37, 38]. If a sequence is to be learned through synaptic plasticity, then instead of increasing the connectivity between groups of neurons, the synaptic conductances could be increased as well. To test whether these two types of plasticity are equivalent in our approach, we embed assembly sequences with various feedforward connectivities pff and various feedforward conductances , while keeping the recurrent connectivity (prc = 0.06) and recurrent conductances (gE = 0.1 nS) constant.

Numerical results show that feedforward connectivity and feedforward conductance have identical roles in the replay of a sequence. That is, the sparser the connections, the stronger synapses are required for the propagation of activity. The analytical estimate (Fig 7A, black line corresponds to ) predicts that the product of pff and is the essential parameter for replay.

Fig 7. Feedforward conductance versus feedforward connectivity.

A: Quality of replay as a function of connectivity and synaptic strength. B: The replay as a function of connectivity and total feedforward conductance input shows that the propagation is independent of connectivity as long as the total feed-forward input is kept constant. C: Spontaneous network dynamics described by the rate of spontaneous replay, synchrony, CV, and firing rate.

That this analytical prediction is fulfilled in the numerical simulations becomes clearer when we show the replay quality as a function of the feedforward connectivity and the total feedforward input a neuron is receiving (Fig 7B). It is irrelevant whether the number of connections are changed or their strength, what matters is their product. This rule breaks only for sparse connectivities (pff < 0.01), i.e. when the mean number of feedforward connections between two groups is low (< 5). Therefore, the number of relevant connections cannot be reduced to very low numbers.

Consistent with earlier findings, the quality of replay is high above a certain strength of the total feedforward conductance (≳ 0.05 in Fig 5B) and for pff ≥ 0.01. However, for sufficiently large feedforward input (), the replay of sequences is severely impaired as the network is in a state of highly synchronous bursting activity (Fig 7B), which is similar to the results shown in Figs 5 and 6.

We also examined sequences that are formed by increasing existing background connections between the assemblies by a factor pff/prand, rather than by adding additional connections. Replays are possible also in this condition and they are indistinguishable from networks with increased feedforward connectivities.

The rule that the total input determines the network behavior also holds for spontaneous activity. Spontaneous replay rate, CV, synchrony, and firing rate all vary as a function of the total input (Fig 7C), and only weakly as a function of the connectivity or the conductance alone. Similar to the previous results in Figs 5 and 6, for it is possible to evoke a replay while preserving the AI state of the network. Increasing the total input beyond this value drives the network into a state of spontaneous replay with increased synchrony.

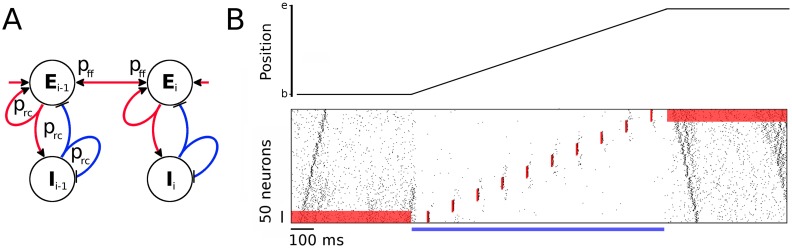

Forward and reverse replay in assembly sequences with symmetric connections

The assembly-sequence model discussed until now contains asymmetric connections, i.e., neurons from one group project extensively within the same and the subsequent group but not to the previous group. We showed that such feedforward assembly sequences are capable of propagating activity, which we call replay. Thus, the proposed model may give an insight on the replay of behavioral sequences that have been observed in the hippocampus [19]. However, further experiments revealed that sequences are also replayed in the inverse temporal order than during behavior, so-called reverse replay [22, 23]. The direction of this replay also depended on the context, i.e., when the animal was at the beginning of the path, forward replays prevailed; while after traversing the path, more reverse replays were detected (but see [39]). This suggests that the replay activity might be cued by the sensory input experienced at the current location of the animal.

As the feedforward structure adopted in the network model is largely asymmetric, the assembly sequence is incapable of reverse replay in its current form. To be able to activate a sequence in both directions, we modify the network and add symmetric connectivity between assemblies [40, 41]. The symmetric STDP window that has been reported recently in the hippocampal CA3 area in vivo [42] would allow for strong bidirectional connections. In such a model, an assembly of neurons does not project only to the subsequent assembly but also to the preceding, and both projections are random with probability pff (Fig 8A). While this connectivity pattern decreases the group clustering and makes the sequence more continuous, it does not lead to full merge of the assemblies because the inhibition remains local for each group.

Fig 8. Symmetric assembly sequence.

A: Schematic of an assembly sequence with symmetric connections between groups. B: Virtual rat position on a linear track (top) and the corresponding neuronal activity (bottom) as a function of time for 2 seconds. The rat rests at position “b” for half a second, then moves from “b” to “e” with constant speed for one second, where it rests for another 500 ms. While the rat is immobile at both ends of the track, a positive current input Ie = 2 pA is applied to the excitatory population of the first and last assembly as shown by the red background in the raster plot. Spontaneous replays start from the cued assemblies. During exploration, however, the network activity is decreased by a current Ie = −10 pA injected to the whole excitatory population, denoted with a blue horizontal bar. Strong sensory input during traversal activates the location-specific assemblies but does not result in any replay. The timing and location of the stimulations is denoted with red vertical bars in the raster plot. Recurrent and feedforward connectivities are prc = 0.15 and pff = 0.03, respectively.

Interpreting this network as a model for hippocampal activity during spatial navigation of a virtual rat on a linear track (Fig 8B, top), we test the idea that external input can switch the network between a spontaneous-replay state during rest and a non-replay, spatial-representation state during locomotion. During immobility at the beginning of the track, a context-dependent input cue is mimicked by a constant current Ie = 2 pA injected into the excitatory neurons of the first assembly (Fig 8B, red bar from 0 to 500 ms). The elevated firing rate of the first assembly results in a spontaneous forward replay, similar to the experimental findings during resting states at the beginning of a linear track [22, 23].

After the initial 500 ms resting period, an external global current of −10 pA is injected into the whole excitatory population to decrease network excitability and to mimic a state in which the rat explores the environment. In addition, to model place-specific sensory input that is locked to theta oscillations, we apply a strong and brief conductance input (as in Fig 2) every 100 ms to the assembly that represents the current location. In this situation, the assemblies fire at their corresponding locations only. There is, however, a weak activation of the neighboring assemblies that does not result in a replay. An extension of the model including lateral inhibition and short-term plasticity would possibly enable theta sequences that span in one direction only [43]. Such an extension is, however, beyond the scope of the current manuscript.

At the end of the track, we retract the global external current to return to the virtual resting state for the last 500 ms of the simulation, and the network switches back to higher mean firing rates. A context-dependent sensory cue to the last group (Ie = 2 pA current injected continuously) then leads to a spontaneous reverse replay, similar to experimental findings at the end of a linear track [22, 23].

In the absence of a context-dependent current injection during virtual resting state, spontaneous replays start at around the middle of the sequence (as in Fig 5) and propagate in forward or reverse direction. As noise fluctuations are gradually amplified while propagating between assemblies, it is rare to find a spontaneous event that is simultaneously replayed in both directions. In our simulations (Fig 8), we assumed that the starting position of replay is cued by the sensory input from the current location. However, it has been shown that replays during theta sequences are rather segmented and represent the environment in discrete “chunks” [44]. These segments are not uniformly distributed but tend to cover the space between physical landmarks, noteworthy positions in the environment. The finding of Gupta et al. [44] suggests that there might be other mechanisms controlling the starting position of replay other than the sensory input. Currently, it is an open question whether SWR replays represent the environment also in a segmented manner from a landmark to a landmark.

In summary, we show that given symmetric connectivity between assemblies, transient activity can propagate in both directions. Large negative external currents injected into all excitatory neurons can decrease network excitability and thus block the replay of sequences. On the other hand, spontaneous replay can be cued by a small increase in the firing rate of a particular assembly. Interestingly, once a replay is initiated, it does not change direction, in spite of the symmetric connectivity. An active assembly receives feedback inhibition from its inhibitory subpopulation, which prevents immediate further activations and hence a reversal of the direction of propagation.

Discussion

We revived Hebb’s idea on assembly sequences (or “phase sequences”) where activity in a recurrent neural network propagates through assemblies [5], a dynamics that could underlie the recall and consolidation of memories. An important question in this context is how learning of a series of events can achieve a strong enough synaptic footprint to replay this sequence later. Using both numerical simulations of recurrent spiking neural networks and an analytical approach, we provided a biologically plausible model for understanding how minute synaptic changes can nevertheless be uncovered by small cues or even manifest themselves as activity patterns that emerge spontaneously. We showed how the impact of small changes in the connections between assemblies is boosted by recurrent connectivity within assemblies. This interaction between recurrent amplification within an assembly and the feedforward propagation of activity establishes a possible basis for the retrieval of memories. Our theory thus provides a unifying framework that combines the fields of Hebbian assemblies and assembly sequences [5], synfire chains [9, 10], and fast amplification in balanced recurrent networks that are in an asynchronous-irregular state [25, 28].

Main conclusions from our work are that the effective coupling between assemblies is a function of both feedforward and recurrent connectivities, and that the network can express three main types of behavior: 1. When the coupling is weak enough, assembly sequences are virtually indistinguishable from the background random connections, and no replays take place. 2. For sufficiently strong coupling, a transient input to some assembly propagates through the sequence, resulting in a replay. 3. For even stronger coupling, noise fluctuations get amplified by the underlying structure, resulting in spontaneous replays. Each of these three regimes has a certain advantage in performing a particular task. Weak coupling is appropriate for imprinting new sequences if the network dynamics is driven by external inputs rather than controlled by the intrinsically generated activity. Intermediate coupling is suitable for recollection of saved memories; sequences remain concealed and are replayed only by specific input cues; otherwise, the network is in the asynchronous-irregular, spontaneous state. For strong coupling, spontaneous replays might be useful for offline recollection of stored sequences when there are no external input cues. Importantly, the network behaviour and the rate of spontaneous events depends not only on the coupling but can be controlled by modulating the network excitability through external input. Neuromodulator systems, for example the cholinergic and the adrenergic systems [33, 34] might therefore mediate the retrieval process.

Related models

Assembly sequences are tightly related to synfire chains, which were proposed [9] as a model for the propagation of synchronous activity between groups of neurons. Diesmann et al. [10] showed for the first time that synfire chains in a noisy network of spiking neurons can indeed support a temporal code. It has been shown, however, that the embedding of synfire chains in recurrent networks is fragile [13, 30], because on the one hand, synfire chains require a minimal connectivity to allow propagation, while on the other hand, a dense connectivity between groups of neurons can generate unstable network dynamics. Therefore, Aviel et al. [31] introduced “shadow pools” of inhibitory neurons that stabilize the network dynamics for high connectivity. The network fragility can also be mitigated by reducing the required feedforward connectivity: inputs from the previous assembly are boosted by recurrent connections within the assembly. This approach was followed by Kumar et al. [14], who examined synfire chains embedded in random networks with local connectivity, thus, implicitly adopting some recurrent connectivity within assemblies as proposed by the assembly-sequence hypothesis; nevertheless, their assemblies were fully connected in a feedforward manner. Recently, it was shown that replay of synfire chains can be facilitated by adding feedback connections to preceding groups [45]. However, this Hebbian amplification significantly increased the duration of the spike volleys and thus decreased the speed of replay. Our model circumvents this slowing effect by combining the recurrent excitation with local feedback inhibition, effectively replacing Hebbian amplification by a transient “balanced amplification” [28].

Other analytical studies have used the Fokker-Planck approach to describe the propagation of pulse packets in synfire chains [46, 47]. In particular, Monasson and Rosay [48] have used diffusion analysis to explore the interplay between different environments encoded in the network and their effects on the activity propagation during replay. To store sequences, further classes of models were proposed, e.g., “winner-takes-all” [49–51] and “communication through resonance” [52]. However, the activity propagation in these models has an order of magnitude slower time scales than the synfire chain or the assembly sequence, and thus, are not suitable for rapid transient replays.

The spontaneous replay in our network bears some resemblance with the population bursts that occur in a model with supralinear amplification of precisely synchronised inputs [53, 54]. Adding such nonlinearities to the conductances in our model might decrease even further the connectivity required for the assembly-sequence replay. Another model class, which relies on lognormal conductance distributions, has been proposed as a burst generator for sharp-wave ripples (SWRs) [55]. The model accounts for spontaneously generated stereotypical activity that propagates through neurons that are connected with strong synapses.

Other computational models have focused more on different aspects of the SWR events. Taxidis et al. [56], for example, have proposed a hippocampal model where a CA3 network rhythmically generates bursts of activity, which propagate to a predominantly inhibitory CA1 network that generates fast ripple oscillations. The ripple generation by inhibitory networks is studied in a greater detail in Malerba et al. [57]. Azizi et al. [58] have explored the properties of a network that stores the topology of several environments and have shown that spike-frequency adaptation is an important mechanism for the movement of the activity bump within and between environments. In another modeling study, Romani and Tsodyks [43] proposed that short-term synaptic depression is a potential mechanism for explaining the hippocampal activity both during mobility (theta-driven activity) and during immobility (fast replays).

Another class of models that aims to explain the origin of SWR events relies on the electrical coupling between axons of pyramidal cells in the CA3/CA1 regions [59–61]. In a numerical model [62] it has been shown that the axonal plexus could explain the occurrence of SWs, the fast ripple oscillation, and moreover, account for the forward and reverse replay of sequences. Nevertheless, anatomical data to show the existence of such connections is still scarce [63].

Relation between recurrent and feedforward connectivity

What is the most efficient set of connectivities in terms of numbers of synapses used? To create an assembly of M neurons and to connect it to another assembly of the same size, we need M2(prc + pff) excitatory-to-excitatory synapses. The constraint κ = 1 (Eq 7) then leads to a minimum total number of synapses at prc = 0. This result is somewhat surprising because it suggests that our proposed recurrent amplification provides a disadvantage.

However, another constraint might be even more important: to imprint an association in one-shot learning, as for example required for episodic memories, it might be an advantage to change as few synapses as possible so that one can retrieve the memory later via a replay. Therefore, pff should be low, in particular lower than the recurrent connectivity that is bound by the morphological connectivity that includes also weak or silent synapses. Minimizing pff under the constraint κ = 1 implies, however, maximizing prc. Such large connectivities might require longer time to develop. A large prc is compatible with one-shot learning only if assemblies (that are defined by increased prc among a group of neurons) can be set up prior to the (feedforward) association of assemblies. Thus, episodic memories could benefit from strong preexisting assemblies. For setting up such assemblies, long time periods might be available to create new synapses and to morphologically grow synapses. Thus, we predict that for any episodic memory to be stored in one-shot learning in hippocampal networks such as CA3, a sufficiently strong representation of the events to be associated does exist prior to successful one-shot learning. In this case, pff (i.e., connectivity in addition to prand) can be almost arbitrarily low. A natural lower limit is that the number of synapses per neuron Mpff is much larger than 1, say 10 as a rough estimate (in Fig 3 we have Mpff ∼ 30 for a rather low value of prc = pff, and 10 for prc = 0.30; even 5 or more very strong synapses are sufficient in Fig 7). This can be interpreted in two ways: (1) Every neuron should activate several neurons in the subsequent assembly, and (2) every neuron in an assembly to be activated should receive several synapses from neurons in the previous assembly.

For example in the modeled network, for pff = 0.02 and Mpff > 10 we obtain M > 500, which is in agreement with an estimated optimal size of assemblies in the hippocampus [64]. The total number of feedforward synapses required for imprinting an association is then M2 pff > 5,000, which is a relatively small number compared to the total number of background synapses for NE = 20,000 and prand = 0.01. Scaling up the network accordingly (see Materials and Methods) to the size of a rodent CA3 network, i.e., NE = 240,000 (a typical number for the rat hippocampus, e.g., [65, 66]), the number of new associative synapses is M2 pff > 17,000, while the total connections are more than 0.5 ⋅ 109.

To conclude, abundant recurrent connections within assemblies can decrease the feedforward connectivity required for a replay to almost arbitrary low values. Moreover, the ratio of memory synapses to background synapses decreases as the network is scaled to bigger size.

Mechanisms for assembly-sequence formation

For sequence replay, increasing the number of connections between groups has the same effect as scaling up the individual connection strengths. We conclude that structural and synaptic plasticity could play an equivalent role in the formation of assembly sequences. In the current study we have not considered plasticity mechanisms that could be mediating the formation of assembly sequences. Previous attempts of implementing a spike-timing-dependent plasticity (STDP) rule with an asymmetric temporal window [67–69] in recurrent networks led to structural instabilities [70–72]. However, it has been shown that under certain conditions the asymmetric STDP rule could encode sequences of connections [54], and moreover, maintain strong bidirectional synapses [73]. More sophisticated learning rules better matched the experimentally observed plasticity protocols [74–76], and these rules combined with various homeostatic mechanisms could form Hebbian assemblies that remained stable over long time periods [40, 41, 77]. Moreover, it has been shown that the triplet-based STDP rules [74, 75] lead to strong bidirectional connections [40, 41], a network motif that has been reported in multiple brain regions [24, 78–81]. Recent experimental work on the plasticity of the CA3-to-CA3 pyramidal cell synapses has revealed a symmetric STDP temporal curve [42]. Such a plasticity rule can be responsible for the encoding of stable assembly representations in the hippocampus.

Several plasticity rules have been successfully applied in learning sequences [7, 54, 73, 82–85]. However, these studies focused purely on sequence replay and did not take into account its interaction with a balanced, asynchronous irregular background state.

Relations to hippocampal replay of behavioral sequences

The present model may explain the fast replay of sequences associated with the sharp-wave ripple (SWR) events, which originate in the CA3 region of the hippocampus predominantly during rest and sleep [86]. SWRs are characterized by a massive neuronal depolarization reflected in the local field potential [87]. Moreover, during SWRs, pyramidal cells in the CA areas fire in sequences that reflect their firing during prior awake experience [19]. Cells can fire in the same or in the reverse sequential order, which we refer to as forward and reverse replay, respectively [22, 23]. Our model, however, can not account for the slower replays that occur at near behaviour time scales during REM sleep [88].

According to the two-stage model of memory trace formation [86], the hippocampus is encoding new episodic memories during active wakefulness (stage one). Later, these memories are gradually consolidated into neocortex through SWR-associated replays (stage two). It has been proposed that acetylcholine (ACh) modulates the flow of information between the hippocampus and the neocortex and thereby mediates switches between these memory-formation stages [89]. During active wakefulness, the concentration of ACh in hippocampus is high, leading to partial suppression of excitatory glutamatergic transmission [33] and promoting synaptic plasticity [90]. In this state, a single experience seems to be sufficient to encode representations of the immediate future in an environment [91]. On the other hand, the level of ACh decreases significantly during slow-wave sleep [92], releasing the synaptic suppression and resulting in strong excitatory feedback synapses, which suggests that this boost of recurrent and feedback connections leads to the occurrence of SWRs. In line with this hypothesis, the present model shows that increasing the synaptic strengths shifts the assembly-sequence dynamics from a no-replay regime to a spontaneous-replay regime. Also, we demonstrated that this regime supports both forward and reverse replay if assemblies are projecting symmetrically to each other and if recurrent connectivity exceeds severalfold the feedforward coupling.

Dragoi and Tonegawa [20, 93] showed that sequences can be replayed during SWRs also prior to the first exposure of the environment in which these sequences are represented. This finding challenges the standard framework according to which sequences are imprinted during exploration of the environment, i.e., the two-stage memory model [86]. An alternative model was presented by Sen Cheng [94] proposing that the recurrent CA3 synaptic weights are relatively constant during learning, and no plasticity in CA3 is required during the formation of new memories. According to the CRISP model [94], the storage of sequences is an intrinsic property of the CA3 network, and these sequences are formed offline prior to utilization due to the maturation of newly generated granule cells in the dentate gyrus. The model presented in this manuscript concerns the storage of sequences in a recurrent network and is not in contradiction with the idea of preexisting sequences.

Our model deploys a single uniform inhibitory population which is, likely, an oversimplification of cortical and subcortical networks that are rich in expressing various interneuron types [95, 96]. However, the roles of the different inhibitory neurons during various brain states, and in particular, during SWRs are not well known. Strong candidates for interneurons that might be balancing the run-away excitation during SWR replay are the basket cells due to their fast dynamics and strong synapses. Moreover, they are one of the most active inhibitory neurons during SWRs. OLM cells with their slower input on the distal dendrites are good candidates for priming which assemblies/sequence might be replayed prior to the event.

In summary, a prediction of our assembly-sequence model is that prior to being able to store and recall a memory trace that connects events, strong enough representations of events in recurrently connected assemblies are necessary because recalling a minute memory trace requires amplification within assemblies. Another prediction of this model is based on the fact that the network is in an asynchronous-irregular state during the time intervals between replays. Hence, by increasing the activity of the excitatory neurons or by disinhibiting the network, e.g., by decreasing the activity of the interneuron population specialized in keeping the balance, one could increase the rate of spontaneous replays. Such disinhibition might explain the counter-intuitive observation that SWRs can be evoked by the activation of interneurons [97, 98]. Our model thus links a diverse set of experimental results on the cellular, behavioral, and systems level of neuroscience on memory retrieval and consolidation [99].

Materials and methods

The network simulations as well as the data analyses were performed in Python (www.python.org). The neural network was implemented in Brian [100]. For managing the simulation environment and data processing, we used standard Python libraries such as NumPy, SciPy, Matplotlib, and SymPy.

Neuron model

Neurons are described by a conductance-based leaky integrate-and-fire model, where the subthreshold membrane potential Vi(t) of cell i obeys

| (2) |

The cells’ resting potential is Vrest = −60 mV, its capacitance is C = 200 pF, and the leak conductance is Gleak = 10 nS, resulting in a membrane time constant of 20 ms in the absence of synaptic stimulation. The variables and are the total synaptic conductances describing the time-dependent synaptic inputs to neuron i. The excitatory and inhibitory reversal potentials are VE = 0 mV and VI = −80 mV, respectively. Iext = Iconst + Ix is an externally applied current. To evoke activity in the network, a constant external current Iconst = 200 pA is injected into each neuron, which evokes a regular, intrinsically oscillating activity in the neuron, if considered in isolation. However, embedding such neurons in random recurrent networks can lead to irregular activity, as outlined below in the following two subsections. Only if explicitly stated (e.g., Figs 5 and 8), small additional current inputs Ix are applied to excitatory or inhibitory neurons, which we denote as Ie and Ii, respectively. As the membrane potential Vi reaches the threshold Vth = −50 mV, neuron i emits an action potential, and the membrane potential Vi is reset to the resting potential Vrest for a refractory period τrp = 2 ms.

The dynamics of the conductances and of a postsynaptic cell i are determined by the spiking of the excitatory and inhibitory presynaptic neurons. Each time a presynaptic cell j fires, the synaptic input conductance of the postsynaptic cell i is increased by for excitatory synapses and by for inhibitory synapses. The input conductances decay exponentially with time constants τE = 5 ms and τI = 10 ms. The dynamics of the total excitatory conductance is described by

| (3) |

Here the sum runs over the presynaptic projections j and over the sequence of spikes f from each projection. The time of the fth spike from neuron j is denoted by , and δ is the Dirac delta function. The inhibitory conductance is described analogously.

Amplitudes of recurrent excitatory conductances and excitatory conductances on inhibitory neurons are denoted with and , respectively. If not stated otherwise, all excitatory conductance amplitudes are fixed and equal (), which results in EPSPs with an amplitude of ≈ 0.1 mV at resting potential. The recurrent inhibitory synapses are also constant () while the inhibitory-to-excitatory conductances are variable (see below). Irrespectively of the synaptic type, the delay between a presynaptic spike and a postsynaptic response onset is always 2 ms.

Network model

The modeled network consists of NE = 20,000 excitatory and NI = 5,000 inhibitory neurons. Our results do not critically depend on the network size (see Section ‘Scaling the network size’ below). Initially, all neurons are randomly connected with a sparse probability prand = 0.01.

A cell assembly is defined as a group of recurrently connected excitatory and inhibitory neurons (Fig 1A). The assembly is formed by picking M excitatory and M/4 inhibitory neurons from the network; every pair of pre- and post-synaptic neurons within the assembly is randomly connected with probability prc. The new connections are created independently and in addition to the already existing ones. Thus, if by chance two neurons have a connection due to the background connectivity and are connected due to the participation in an assembly, then the synaptic weight between them is simply doubled. Unless stated otherwise, assemblies are hence formed by additional connections rather than stronger synapses.

In the random network, we embed 10 non-overlapping assemblies with size M = 500 if not stated otherwise. The groups of excitatory neurons are connected in a feedforward fashion, and a neuron from one group projects to a neuron of the subsequent group with probability pff (Fig 1B). Such a feedforward connectivity is reminiscent of a synfire chain. However, classical synfire chains do not have recurrent connections (prc = 0, pff > 0), while here, neurons within a group are recurrently connected even beyond the random background connectivity (prc > 0, pff > 0). We will refer to such a sequence as an “assembly sequence”. By varying the connectivity parameters prc and pff, the network structure can be manipulated to obtain different network types (Fig 1C). In the limiting case where feedforward connections are absent (prc > 0, pff = 0) the network contains only largely disconnected Hebbian assemblies. In contrast, in the absence of recurrent connections (prc = 0, pff > 0), the model is reduced to a synfire chain embedded in a recurrent network. Structures with both recurrent and feedforward connections correspond to Hebbian assembly sequences.

To keep the network structure as simple as possible and to be able to focus on mechanisms underlying replay, we use non-overlapping assemblies and we do not embed more than 10 groups. Nevertheless, additional simulations with overlapping assemblies and longer sequences indicate that our approach is in line with previous results on memory capacity [15, 64, 101]. Advancing the theory of memory capacity is, however, beyond the scope of this manuscript.

Balancing the network

A naive implementation of the heterogeneous network as described above leads, in general, to dynamics characterized by large population bursts of activity. To overcome this epileptiform activity and ensure that neurons fire asynchronously and irregularly (AI network state), the network should operate in a balanced regime. In the balanced state, large excitatory currents are compensated by large inhibitory currents, as shown in vivo [102, 103] and in vitro [104]. In this regime, fluctuations of the input lead to highly irregular firing [105, 106], a pattern observed in the cortex [9, 107] as well as in the hippocampus during non-REM sleep [108, 109].

Several mechanisms were proposed to balance numerically simulated neural networks. One method involves structurally modifying the network connectivity to ensure that neurons receive balanced excitatory and inhibitory inputs [110, 111]. It was shown that a short-term plasticity rule [112] in a fully connected network can also adjust the irregularity of neuronal firing [113].

Here, we balance the network using the inhibitory-plasticity rule [25]. All inhibitory-to-excitatory synapses are subject to a spike-timing-dependent plasticity (STDP) rule where near-coincident pre- and postsynaptic firing potentates the inhibitory synapse while presynaptic spikes alone cause depression. A similar STDP rule with a symmetric temporal window was recently reported in the layer 5 of the auditory cortex [114].

To implement the plasticity rule in a synapse, we first assign a synaptic trace variable xi to every neuron i such that xi is incremented with each spike of the neuron and decays with a time constant τSTDP = 20 ms:

The synaptic conductance from inhibitory neuron j to excitatory neuron i is initialized with value and is updated at the times of pre/post-synaptic events:

where 0 < η ≪ 1 is the learning-rate parameter, and the bias α = 2ρ0τSTDP is determined by the desired firing rate ρ0 of the excitatory postsynaptic neurons. In all simulations, ρ0 has been set to 5 spikes/sec, which is at the upper bound of the wide range of rates that were reported in the literature: e.g., 1–3 spikes/sec in [87]; 3–6 spikes/sec in [115]; 1–76 spikes/sec in [116]; 0.43–3.60 spikes/sec in [117]; 1–11 spikes/sec in [118].

Existence of background connections and an implementation of the described inhibitory STDP rule drives typically the network into a balanced AI state. The excitatory and the inhibitory input currents balance each other and keep the membrane potential just below threshold while random fluctuations drive the firing (Fig 2A and 2B). The specific conditions to be met for a successful balance are discussed in the Results section. Similar effects could be achieved also in the absence of random background connections when input with appropriate noise fluctuations is applied to the neurons. We find this scenario, however, less realistic as neurons would be largely disconnected.

In the AI network regime, any perturbation to the input of an assembly will lead to a transient perturbation in the firing rate of the neurons within it. In the case of strong recurrent connections within the assembly, a small excitatory perturbation will lead to a stronger firing of both the excitatory as well as the inhibitory neurons. This amplification of input fluctuations into larger activity fluctuations is, unlike the Hebbian amplification, fast and does not show slowing of the activation dynamics for large connectivities. This phenomenon of transient pattern completion is known as balanced amplification [28], where it is essential that each assembly has excitatory and inhibitory neurons and strong recurrent connectivity. Another advantage of the inhibitory subpopulations is the rapid negative feedback that can lead to enhanced memory capacity of the network [119].

Simulations and data analysis

Each network simulation consists of 3 main phases:

1. Balancing the network. Initially, the population activity is characterized by massive population bursts with varying sizes (avalanches). During a first phase, the network (random network with embedded phase sequence) is balanced for 50 seconds with decreasing learning rate (0.005 ≥ η ≥ 0.00001) for the plasticity on the inhibitory-to-excitatory synapses. During this learning, the inhibitory plasticity shapes the activity, finally leading to AI firing of the excitatory population. Individual excitatory neurons then fire roughly with the target firing rate of 5 spikes/sec, while inhibitory neurons have higher firing rates of around 20 spikes/sec, which is close to rates reported in the hippocampus [87, 117]. After 50 seconds simulation time, the network is typically balanced.

2. Reliability and quality of replay. In a second phase, the plasticity is switched off to be able to probe an unchanging network with external cue stimulations. All neurons from the first group/assembly are simultaneously stimulated by an external input so that all neurons fire once. The stimulation is mimicked by adding an excitatory conductance in Eq 3 (gmax = 3 nS) that is sufficient to evoke a spike in each neuron. For large enough connectivities (prc and pff), the generated pulse packet of activity propagates through the sequence of assemblies, resulting in a replay. For too small connectivities, the activity does not propagate. For excessively high connectivities, the transient response of one group results in a burst in the next group and even larger responses in the subsequent groups, finally leading to epileptiform population bursts of activity (Fig 3).

To quantify the propagation from group to group and to account for abnormal activity, we introduce a quality measure of replay. The activity of a group is measured by calculating the population firing rate of the underlying neurons smoothed with a Gaussian window of 2 ms width. We extract peaks of the smoothed firing rate that exceed a threshold of 30 spikes/sec. A group is considered to be activated at the time at which its population firing rate hits its maximum and is above the threshold rate. Activity propagation from one group to the next is considered to be successful if one group activates the next one within a delay between 2 and 20 ms. A typical delay is about 5 ms, but in the case of extremely small pff and large prc the time of propagation can take ∼ 15 ms. Additional rules are imposed to account for exceeding activity and punish replays that lead to run-away firing. First, if the activity of an assembly exceeds a threshold of 180 spikes/sec (value is chosen manually for best discrimination), the group is considered as bursting, and thus, the replay is considered as failed. Second, if the assembly activity displays 2 super-threshold peaks that succeed each other within 30 ms, the replay is unsuccessful. Third, a “dummy group” (of size M) from the background neurons is used as a proxy for detecting activations of the whole network. In case that the dummy group is activated during an otherwise successful replay, the replay is failed. Thus, for each stimulation the “quality of replay” has a value of 1 for successful and a value of 0 for unsuccessful replays. The quality of replay for each set of parameters (Fig 3) is an average from multiple (≳ 5) stimulations of 5 different realizations of each network.

Additionally, we test the ability of the assembly sequence to complete a pattern by stimulating only a fraction of the neurons in the first group (Fig 4). Analogously to the full stimulation, the quality of replay is measured.

3. Spontaneous activity. In the last phase of the simulations, no specific input is applied to the assemblies. As during the first phase of the simulation, the network is driven solely by the constant-current input Iconst = 200 pA applied to each neuron, and plasticity is switched off.