Abstract

Our acoustical environment abounds with repetitive sounds, some of which are related to pitch perception. It is still unknown how the auditory system, in processing these sounds, relates a physical stimulus and its percept. Since, in mammals, all auditory stimuli are conveyed into the nervous system through the auditory nerve (AN) fibers, a model should explain the perception of pitch as a function of this particular input. However, pitch perception is invariant to certain features of the physical stimulus. For example, a missing fundamental stimulus with resolved or unresolved harmonics, or a low and high-level amplitude stimulus with the same spectral content–these all give rise to the same percept of pitch. In contrast, the AN representations for these different stimuli are not invariant to these effects. In fact, due to saturation and non-linearity of both cochlear and inner hair cells responses, these differences are enhanced by the AN fibers. Thus there is a difficulty in explaining how pitch percept arises from the activity of the AN fibers. We introduce a novel approach for extracting pitch cues from the AN population activity for a given arbitrary stimulus. The method is based on a technique known as sparse coding (SC). It is the representation of pitch cues by a few spatiotemporal atoms (templates) from among a large set of possible ones (a dictionary). The amount of activity of each atom is represented by a non-zero coefficient, analogous to an active neuron. Such a technique has been successfully applied to other modalities, particularly vision. The model is composed of a cochlear model, an SC processing unit, and a harmonic sieve. We show that the model copes with different pitch phenomena: extracting resolved and non-resolved harmonics, missing fundamental pitches, stimuli with both high and low amplitudes, iterated rippled noises, and recorded musical instruments.

Author Summary

By means of a sound's pitch, we can easily discern between low and high musical notes, regardless of whether they originate from a guitar, piano or a vocalist. The relation between different sounds that yield the same percept is what makes pitch an interesting subject of research. Today, despite extensive research, the mechanism behind this physical to perceptual transformation is still unclear. The large dynamic range of the cochlea combined with its nonlinear nature makes the modeling and understanding of this process a challenging task. Given a large amount of physiological and psychological data, a general explanation consistent with many of these phenomena would be a major step in elucidating the nature of pitch perception. In this paper, we recast the problem in the general framework of sparse coding of sensory stimuli. This framework, initially developed for the visual modality, posits that the goal of the neural representation is to represent the flow of sensory information in a concise and parsimonious way. We show that applying this principle to the problem of pitch perception can explain many perceptual phenomena.

Introduction

The perception of pitch is an important feature of speech recognition and perception of musical melodies. It conveys information of prosody and speaker identity; it helps in grouping different tones into one auditory object; and it conveys information about melody and harmony. The sensation of a pitch, usually associated with the periodicity of a given physical stimulus, is usually perceived as having two dimensions: pitch class and pitch height. The pitch class, or the pitch chroma, is the set of all pitches that are related by whole octave numbers and is known in musical theory as "octave equivalence"; the pitch height is the continuum perception of sound from low to high. The percept of pitch is so inherent in us that usually even a slight repetition in time is needed to create it. When dealing with harmonic signals, pitch is usually related to the first harmonic, the fundamental frequency, of that signal. Even though most natural sounds are not strictly periodic, pitch is still clearly perceived and used by the brain in various hearing-related tasks. A unique property of pitch perception is that it is a many-to-many mapping: a similar pitch can be perceived by different acoustic stimuli, and a given acoustic stimulus can yield different percepts of pitch. This property is the reason that makes pitch an interesting property of the mind, but it is also the reason that makes it hard to explain. The question arises: How does a brain manage to perform this task?

For almost a century, pitch properties have been extensively researched both experimentally and theoretically. Generally, most of the existing models that have emerged from this research activity can be divided into two main categories: (1) temporal models and (2) spectral models [1]. Modern temporal models, which are currently regarded as prominent, are usually based on autocorrelation principles [2–4]. These models rely on the fact that periodic stimuli, with the same perceived pitch but with possibly different spectral harmonic content, have the same temporal periodic response. For example, a signal consisting of the first six consecutive harmonics will have the same temporal period as a signal that contains just three successive harmonics. Thus, both signals are likely to reveal the same perception of pitch. The predictions of the temporal models are consistent with a large number of psychoacoustic properties of pitch perception, including: (a) the missing fundamental case, also known as virtual pitch, which is the pitch of a harmonic series that does not include its fundamental frequency; (b) the pitch shift effect, which is the perception of a signal with shifted, equally spaced, harmonic components that yield ambiguous pitches [5]; and (c) the invariance to the stimuli amplitude levels, which is an inherent property of the autocorrelation process, in accordance with psychophysical measurements [6]. There is also neurophysiological evidence for reliably predicting pitches for different stimuli [7,8] based on calculations of the autocorrelation of a cat’s AN population response. On the other hand, it seems that temporal models perform too well compared to human psychophysics. Consider for example the case of resolved and unresolved stimuli. Low harmonics are known to be resolved, meaning they are transformed into distinct rate activity within AN fibers and with distinct peaks at certain CFs. On the other hand, higher harmonics, approximately the 5th to 10th and above [9], are unresolved in the sense that having these harmonics in the same stimulus they share the same spatial area along the cochlea. Temporal models cope well with both types of stimuli [10] because autocorrelation accounts for the interaction between the different harmonic components of the signal. However, previous measurements have shown that stimuli composed of resolved (low) harmonics are usually more salient than stimuli that are composed of unresolved (high) harmonics [11,12]. Another example of the excessive performance of these temporal models over human performance is the case of the transposed tones [13]. Transposed tones of low harmonic stimuli are designed to have, using modulation, the same auditory peripheral representation as their low harmonic counterparts. These experiments suggest that temporal information alone is not sufficient and that tonotopic organization must be considered [14]. Finally, temporal models require certain physiological structures in the auditory neural pathway to work. In particular, the autocorrelation functionality requires the existence of (at least) 40ms long tapped delay lines [15]. But at present there is currently no physiological evidence to support such mechanism.

A second major class of models is the spectral theory for pitch. These models are based on the tonotopic organization, or mapping, from stimulus frequencies to stimulated spatial locations along the cochlea; high frequencies resonate the basal parts of the cochlea while low frequencies resonate its apical parts. These vibrations are transduced into the auditory system through the innervation of the auditory nerve (AN) fibers. The spatial arrangement of these ANs along the cochlea means that each of these neurons is most responsive to a specific frequency, which is denoted as its characteristic frequency (CF). Spectral models exploit this mapping to extract the frequency components of the incoming stimuli. A prominent implementation of these models is the class of pattern-matching models [16–18]. The overall structure shared by these types of models is composed of two main phases: the first extracts the spectral components of the stimulus from the AN population activity, and the second matches the resulting spectral pattern with the model's existing templates. Each of these templates is indexed to match different pitches, and a percept of a particular pitch is the best probable match between a given stimulus and a certain template.

Similarly to the temporal models, the predictions of these models are also consistent with a large number of psychoacoustic properties of pitch [19–22]. However, there are also psychoacoustic phenomena that are difficult to explain within this framework. One main disadvantage of these models is their inability to infer pitches that are composed of high harmonic components. As mentioned above, the cochlea decomposes the stimulus’ frequencies into spatial locations, which are represented by the CFs, and not all harmonics transduce into auditory activities in the same way. As a result, spectral models cannot easily account for pitches of unresolved stimuli.

Common to these models is the use of the AN population response to extract features from a given stimulus. These features are then translated into a scalar that represents the pitch percept. The problem of feature extraction from input stimuli has been studied in more general settings [23,24], and it is instructive to consider this approach in a wider sense. The general task of all modalities is the need to process streams of incoming sensed data abundant with information, and to extract desired low dimensional properties from it. For example, in the visual system a low dimensional percept of an object’s orientation is extracted from the activities of photoreceptors in the retina at the time of the stimulus (high dimensional input). Likewise, in the case of the auditory system, the input signal is a continuous auditory stream. It is composed of the spiking activity of approximately 30,000 ANFs in a healthy human adult and lasts for the duration of the whole stimulus. Yet, the auditory system usually extracts relatively low-dimensional and slow changing features such as the pitch of that signal. Namely, the perception of the pitch height and the pitch class is represented by just two dimensions as opposed to about 30,000 dimensions for each ANF that changes over time.

Today there are well developed and closely related mathematical frameworks that specialize in feature extraction. Specifically, we refer to a family of algorithms known as sparse coding (SC). This mathematical technique has many applications displaying a wide variety of variants and flavors [25]. Additionally, it seems that the SC approach is in accordance with the known physiology of the central nervous system, that of the auditory system and of other physiological modalities [23].

In this paper we apply a SC algorithm to predict pitches for different signals. We apply it to the AN population responses taken from simulations of known cochlear models [26–29]. The SC algorithm concurrently uses both the spatial and temporal domain. In this sense, the proposed model is a hybrid of the “classic” temporal and the spectral pitch models mentioned above. We show that this type of model can predict a variety of psychoacoustic properties of pitch. Specifically, the model can infer the pitches of missing fundamental complex tones; it exhibits the psychoacoustic phenomena of pitch shifts; and it is invariant to stimuli levels. Our results suggest that the principle of sparse coding can explain relatively high perception functionality such as pitch.

Model Overview

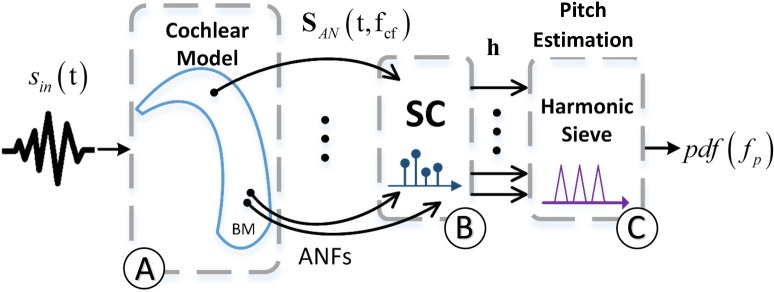

The proposed model consists of three main parts: (i) a cochlear model that translates auditory stimulus, sin(t), into the AN population activity, SAN(t,fCF) (Fig 1A); (ii) a sparse coding (SC) unit that represents the AN population response as a sparse (few non-zeros) set of coefficients, h. (Fig 1B); (iii) a readout unit that translates the active coefficients in h into a probability density function pdf(fp) of pitches. To compare the model with known psychoacoustic phenomena, we set the estimated stimulus’ pitch to be the maximum value of that pdf function, sp ∈ R1 (Fig 1C). This scalar represents the most plausible pitch for the particular given input signal, sin(t). Each part is briefly described in the following sections.

Fig 1. The model.

The model is composed of three main sections: (A) The cochlear model [26–29] transduces a one-dimensional input stimulus, sin(t), into a two-dimensional matrix that represents the AN population response, SAN(t,fCF). (B) The AN’s spatiotemporal response is introduced into the sparse coding (SC) block to produce the sparse coefficient vector, h. The vector h carries invariant information of the input stimulus that we refer to as pitch cues. The (sparse) information in h represents harmonics in sin(t). (C) Finally, the likelihood probability of the pitch given the vector h is extracted and denoted as pdf(fp).

The Cochlear Model

For low and medium sound levels, each location along the BM represents a specific frequency, the CF of this location. We denote these frequencies by fCF. The cochlear models that we use in this paper [26–29] transduce a stimulus, , into instantaneous rates of the ANs, SAN(t,fCF) ∈ RT×N. Accordingly, Ta is the total length of the stimulus in samples; T is a segment out of Ta for the cochlear response; and N is the number of the CF channels in the cochlear model. Throughout this paper, regardless of the cochlear model that we use, we always set T = 5ms; this 5ms interval is taken from the end of the total Ta = 15ms cochlear simulation.

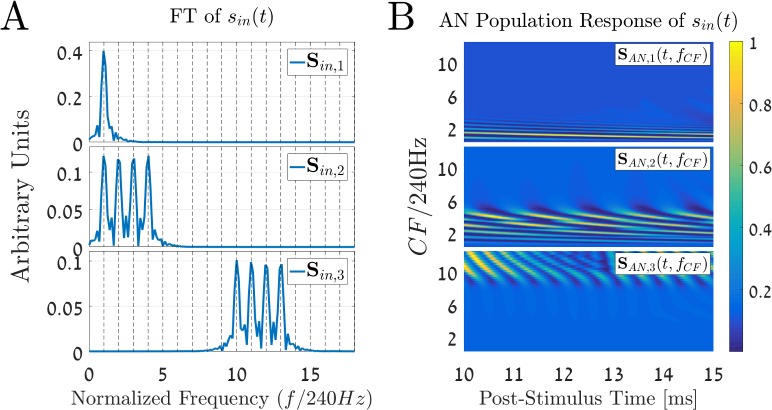

Fig 2B shows the cochlear model [26] responses SAN(t,fCF) for the following three stimuli

Fig 2. Different complex harmonic stimuli with the same pitch.

(A) The Fourier transform (FT) of three complex harmonics stimuli with a fundamental frequency of f0 = 240 Hz. The three signals have different spectral components: Sin,1(f) is composed of the first harmonic component of f0; Sin,2(f) consists of the first four successive harmonics of f0; and Sin,3(f) is formed from of the 10–13 harmonics. (B) The corresponding output of the cochlear model [26], i.e., the AN population responses for the three stimuli. The y-axis represents the normalized characteristic frequencies (CFs), which is CF divided by f0, on a linear scale, and the x-axis shows the post-stimulus time in milliseconds. The cochlear input is a 15ms long stimulus, and the resulting output is taken from the last 5ms. Note the different patterns of the AN activities that correspond to the three different cases: a stimulus with low frequencies excites the apical parts of the cochlea (lower part in the images), while a stimulus with higher frequencies excites the basal parts. Note also that the AN population responses define unique spatiotemporal patterns of activities for each of the stimuli. All the three stimuli have relatively low sound levels (30 dB SPL), which means that the cochlea response is linear.

| (1) |

The parameter f0 = 240 Hz is the fundamental frequency of the given harmonic series. The amplitude of each of the stimuli, g30dB, is given in Pascals and is equivalent to 30 dB SPL in this case. Fig 2A represents the Fourier transform (FT) of these three stimuli, Sin,r(f) = FT{sin,r(t)}, which denote the three different cases for r ∈ {1, 2, 3}, respectively. The first stimulus, Sin,1(f), consists of the first fundamental component, f0. The second stimulus, Sin,2(f), includes the first four successive harmonics of f0, and Sin,3(f) contains the 10th to 13th harmonics of f0. Note that although these stimuli sound differently, it is known [9,30,31] that these stimuli, especially the first and second ones, yield the same percept of pitch, namely f0.

The normalized AN population responses, SAN,r(t,fCF), of all the three stimuli, r ∈ {1, 2, 3}, are depicted in Fig 2B. The matrix SAN,r(t,fCF) is presented in the figure as a color-coded image, where the x-axis represents the post-stimulus time (10ms to 15ms), and the y-axis represents the normalized CFs relative to the stimuli’s fundamental frequency, i.e., fCF /f0. It is clear from Fig 2B that the AN population responses depend on the spectrum of the input signal. For instance, the SAN,1(t,fCF), that corresponds to the 240 Hz sine wave, shows local AN activities only in lower CFs (at the apical part of the cochlea). On the other hand, the SAN,3(t,fCF), which is composed of the 10–13 harmonics of f0, yields activity at higher CFs (towards the basal part of the cochlea).

It is noteworthy that each frequency component in the auditory stimulus reflects the ANs activity of specific location along the cochlea. Hence, each of these AN population responses has its own spatiotemporal typical pattern.

The Sparse Coding Phase

Next, we wish to exploit the unique aforementioned spatiotemporal structures of the AN population responses. Given the AN response to a specific stimulus, we wish to represent it as a weighted sum of a small number of response primitives using the following optimization:

| (2) |

Where the operator ∥∙∥2 specifies the Euclidian norm, ∥⋅∥1 is the ℓ1-norm. The vector vAN ∈ RT∙N is the vectorized AN response; the matrix D ∈ RT∙N×M is a collection of M primitives known as the dictionary; the vector h ∈ RM is the (sparse) coefficient vector; and λ is a scalar that controls the sparseness of the solution h. Note that the entries in h assign weights to different atoms in D, and thus we have h[k] ≥ 0 for all k ∈ [1,M]. There are various numerical techniques [25] to solve Eq 2 for h; here we chose to use LASSO, a linear regression with ℓ1-norm regularization [32,33].

The dictionary D can either be learned from examples [24,34–37], or chosen according to some prior knowledge (see for example [25], Ch. 12). In this paper, we opt for the latter option but will also explore the effect of a different dictionary later on. Thus, we chose those primitives, which within the SC paradigm are known as atoms, to be the AN population response to pure sine waves,

| (3) |

In this equation, d ∈ [1,gM] is the index of the atom, each one is created by its own sd(t) stimulus; the parameter fd ∈ [100 Hz, 20k Hz] is the frequency that this particular atom represents. When solving for Eq 2, we may need the dictionary to account for different phases of an incoming stimulus. One simple way to achieve that is to use groups g ≥ 1 of atoms with the same frequencies fd but with different phases ϕg = 2π/g ⋅ k, k ∈ [0,g−1]. This technique can be seen as a simplified variant of the group-lasso algorithms [38,39]. Solving for Eq 2, the solution of the sparse coefficient vector contains entries that belong to the same group (same frequency, different phases). These entries represent the same frequency and should not “compete”. Thus, the entries of each group are summed together, forming the final sparse vector h ∈ RM. In the following, we would assume that g = 1 for simplicity (i.e., each atom is a group that is created by a sine with zero phase). We would use the extended scheme (g > 1) when dealing with stimuli of random or unknown phase (see Iterated Rippled Noise and Musical Notes).

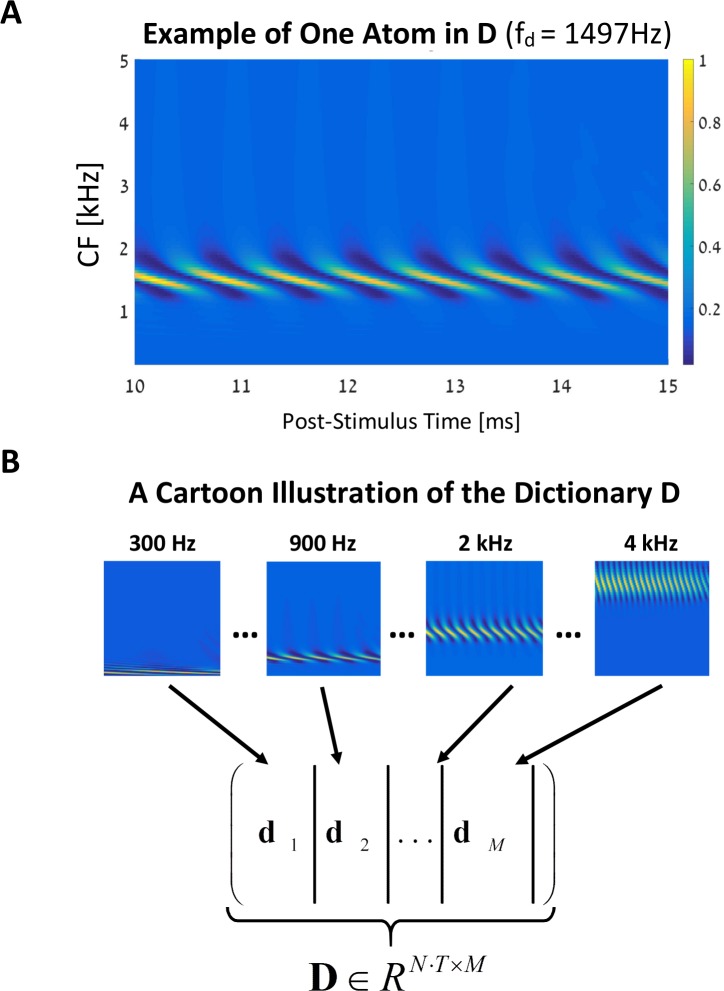

Fig 3A shows an example of an atom d ∈ RT⋅N, g = 1, that corresponds to a sinusoid stimulus of about fd = 1.5k Hz. Fig 3B shows a typical dictionary that is a concatenation of M such atoms, i.e., D = [d1,…,dM]. As mentioned, the scalar λ = 0.01 in Eq 2 determines the sparseness of the solution h. On the one hand, increasing λ assigns more weight to the ℓ1-norm (the second term in Eq 2) and so leads to a sparser solution for h (i.e., more components in h are set to zero). But this sparseness comes at the expense of the matching between vAN and Dh (the first term in Eq 2) and for a large enough λ the solution becomes trivial, h = 0. On the other hand, setting λ = 0.0 usually leads to a non-sparse solution, that is, most of the entries in h are nonzero. With regard to this observation, the solution of Eq 2 decreases to an ordinary least-square (LS) solution, without any sparseness considerations.

Fig 3. Composing the dictionary D.

(A) An example of one atom in D. It is the AN population response to a sine wave of 1.5k Hz tone generated by the cochlear model [26]. The atom was normalized to a peak value of 1, as for all other population responses. The y-axis of the two-dimensional matrix represents the CF along the BM, and the x-axis is the post-stimulus time in milliseconds. (B) Each of the atoms, dj, j ∈ [1, M], is vectorized into a column in D. These M columns are concatenated to form the dictionary matrix D. All the input signals used for the creation of the dictionary have the same level of 30 dB SPL (i.e., at the cochlear linear region). In this example, we used only one atom per group (g = 1).

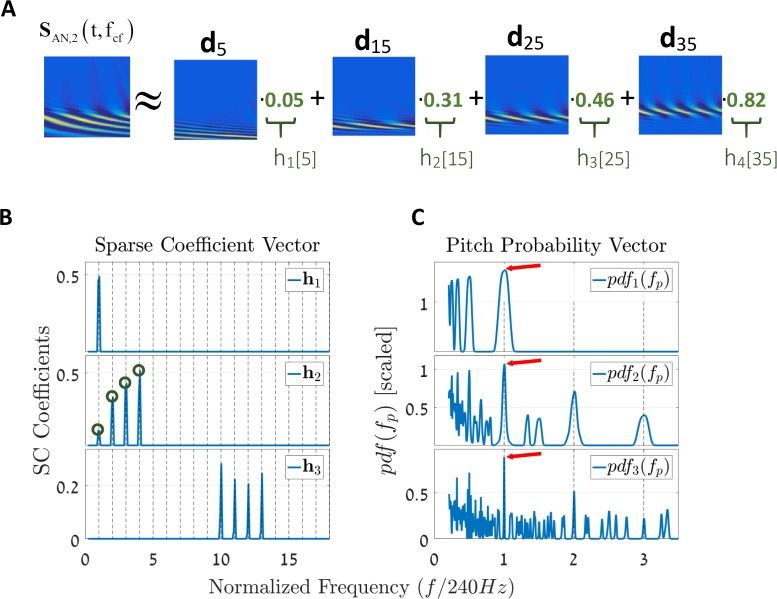

As a simplified example, consider the AN population response of the second stimulus case (Fig 2B). Solving for h, the AN population response is equivalent to a linear combination of just four atoms in D, that is vAN ≈ 0.05 ⋅ d5 + 0.31 ⋅ d15 + 0.46 ⋅ d25 + 0.82 ⋅ d35 (Fig 4B, green circles in h2). Solving for Eq 2 for the other three signals, we get the solutions of h1, h2, and h3, respectively (Fig 4B). Note that there is a clear similarity between the FT of the input signals (Fig 2A) and the derived coefficients of h1, h2, and h3. Explicitly, the spectral structures of the stimuli are reconstructed by the SC algorithm, yet one can see that the three expressions are not alike. For example, there is a difference between the magnitudes of the FT coefficients of sin,2(t) and the nonzero entries in h2, meaning that the SC decomposition is not in general an FT decomposition.

Fig 4. The sparse coefficient vector h and the final pitch probability vector.

(A) A simplified view of the SC methodology. The algorithm decomposed the two-dimensional signal SAN,2(t,fCF) into a linear combination of four atoms (columns) within D. This is a simplified view that shows the primary values in h2 (green indices) multiplied by the atoms. (B) The sparse coefficient solution vectors, hk, for the three cases (k ∈ [1,2,3]). The green circles in the figure of h2 correspond to the four terms in the simplified example of (A). All x-axes are normalized by the fundamental frequency f0 = 240 Hz for convenience. Observe that the solutions for hk resemble that of the FT for the respective stimuli (Fig 2A). (C) Using the pitch estimation unit (harmonic sieve), we can easily map the information in hk, for k ∈ [1,2,3], into a pitch probability vector, pdf(fp). Each of the y-axes of the pdfs functions is multiplied by a constant (x100) for visual clearance. The red arrows indicate the locations of the maximum peaks, all of which are shown to occur at the fundamental harmonic. In other words, it is most probable that all three stimuli represent the same pitch. Still, note that other options are also plausible, especially in rational ratios of f0.

Pitch Estimation

The output of the SC stage is a sparse vector h (Fig 4B) that represents the weights of each atom in the dictionary D. We wish to relate a single pitch percept for each such vector to facilitate a comparison with human psychophysics' tests. We do this by computing the likelihood for each possible pitch [16,19,40], by assuming a generative model of a harmonic series for each pitch. The resulting likelihood function is a normalized product of the vector h with a template for the specific pitch in question:

| (4) |

where is an interpolated version of the vector h (see Methods); each row of the matrix G (fp,σp) ∈ RP×P contains Gaussian functions centered around the harmonics of the pitch nfp as weights; and is the normalization factor of the pdf. The derived probability density functions pdfr(fp), for r ∈ {1, 2, 3}, are depicted in Fig 4C. The three pdfs are aligned, and the x-axis is normalized by the fundamental frequency fo for convenience. The red arrows show that the maximum peaks in each of the three cases are pointing at fo. Note that the maximum peak in the probability distribution is just one option among many. For example, in the first case, in which sin,1(t) is composed of just the fundamental spectral component, the pdf1(fp) indicates that other pitches are also possible; specifically, these other options occur at subharmonics of fo. Adding spectral components into the stimulus, i.e., in the second case of pdf2(fp), narrows the width of the peaks. Additionally, more peaks appear at harmonics that are not complete ratios of fo. In the third case of sin,3(t), which contains the 10th-13th harmonics, the probability function is denser. This thickening of the pdf indicates that the third stimulus is perceived as having less salience than the other two (for a detailed treatment of salience, see Resolved and Unresolved Harmonics). Additional peaks are formed (not shown, but see the same effect in Resolved and Unresolved Harmonics) in its probability function, pdf3(fp), around the ‘center-of-mass’ of the spectral components (i.e., the flocus around the 10th-13th harmonics[1]).

Finally, a standard paradigm in psychoacoustic experiments is to yield a scalar, i.e., specific pitch or a pitch difference, per a given stimulus. Moreover, it is a known practice in psychoacoustics to restrict the participants (or to modulate the results) to a one octave interval (see for example [41–43]). Thus, the collapsing of the resulting probability function, the pdf (fp), into a single scalar, the inferred pitch, is a straightforward and convenient procedure that enables us to compare the model at hand with known psychophysical results. In this paper we defined the estimated pitch for a given stimulus as

| (5) |

Additionally, in some of the displayed cases, we follow the convention by limiting the inferred pitch to an octave around the fundamental frequency.

Results

Below, we demonstrate the ability of the proposed model to match known psychoacoustic phenomena qualitatively. Using these phenomena, we illustrate how the various components of the model contribute to its performance.

Why Do We Need Sparse Representation?

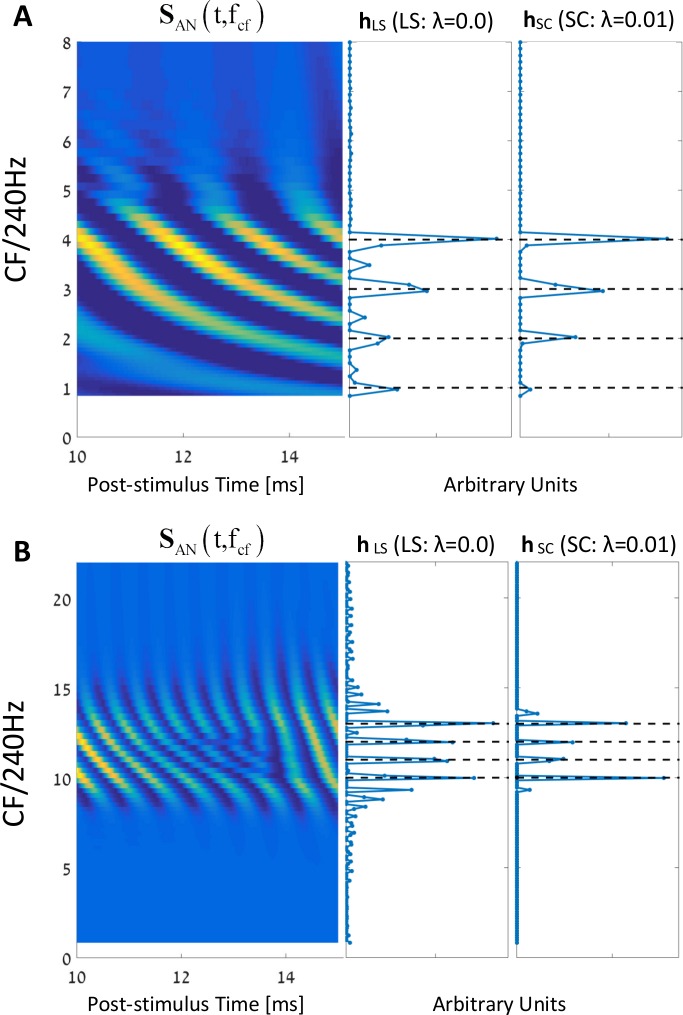

To demonstrate the advantage of using sparse coding algorithms, we compared the performance of the algorithm (Eq 2) for sparse (λ = 0.01) and non-sparse solutions (λ = 0, Least squares). The resulting vectors, hLS and hSC, for the two cases are shown in Fig 5 for the two aforementioned 30 dB SPL stimuli of sin,2(t) and sin,3(t). In the case of sin,2(t), there is little difference between the two solutions (Fig 5A). For the case of sin,3(t), which includes relatively higher (non-resolved) frequency components, the difference is more substantial (Fig 5B). Still, although the LS solutions (hLS) yield more nonzero coefficients than those of the sparse ones (hSC), the solutions can visibly be related to the harmonic structures of the two input stimuli (compare the resulting h vectors with the FTs in Fig 2B).

Fig 5. Comparing LS with SC.

(A) From left to right: the AN population response for a harmonic complex with the 1st – 4th harmonics. The y-axis is the CFs normalized by the fundamental frequency, in a linear scale (f0 = 240 Hz). The x-axis indicates the post-stimulus time (between 10ms to 15ms). Next, the h coefficient vectors for the LS case (λ = 0.0) and for the sparse case (λ = 0.01). (B) Same as in (A) but for a complex tone stimulus that contains the harmonics 10th–13th. Note that for the lower harmonic stimulus (A), the results between the two cases, i.e., hLS vs. hSC, are almost identical. On the other hand, for the stimuli with the higher harmonics (B), the difference is more substantial. Specifically, there are much more nonzero coefficients in hLS than in hSC that are unrelated to the original spectrum structure of the signal (compare with the FTs in Fig 2A).

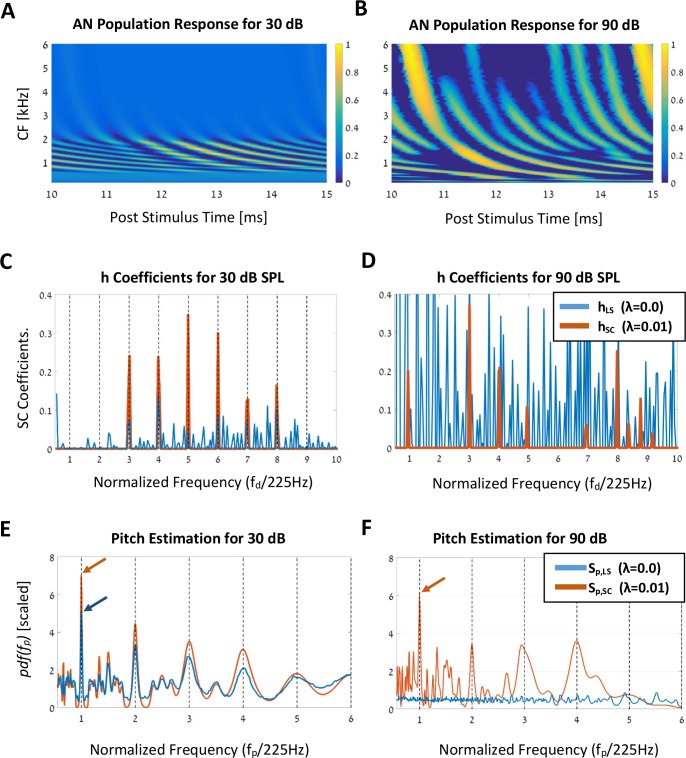

The benefits of the sparse representation are evident when we introduce stimuli with high volume levels. Fig 6 compares the processing of a missing fundamental harmonic series with six harmonic components (k ∈ [3,8]),

| (6) |

Fig 6. Stimulus level invariance.

(A) The AN population response for the missing-fundamental harmonic complex tone of Eq 6, with f0 = 225 Hz. The stimulus has an amplitude level of 30 dB SPL, and the AN population response is normalized to one, as usual. The x-axis shows post-stimulus time, and the y-axis denotes the (linear) mapping between locations along the cochlea and CFs. (B) The AN population response for the same spectral structure as in A (3–8 harmonics), but for a stimulus level of 90 dB SPL. For this relatively high stimulus level, the nonlinearity effects of the cochlea over the AN population response are apparent. (C–D) The solutions of the LS case (hLS) and the SC case (hSC) for the 30 dB (C) and 90 dB SPL (D) stimulus levels, respectively. (E–F) Probability functions of the LS (Sp,LS) and the SC (Sp,SC) cases, for the two amplitude levels, respectively. In the 30 dB SPL case (E), the same pitch is succesfully estimated for both the LS and the SC simulations (blue and red arrows indicate maximum peaks). However, for the 90 dB SPL case (F) only the SC solution proved to be robust and invariant to the stimulus level, as desired (red arrow indicates maximum peaks). In order to account for the cochlear nonlinearities due to the changing in the stimuli levels, all simulations of the AN fibers in this section were made using Carney's cochlea model (Zilany’s et al. [27–29]).

The fundamental frequency is set to f0 = 225 Hz, and the amplitude gL corresponds to either 30 dB (Fig 6A) or 90 dB SPL. In these simulations, to account for the nonlinearities of the cochlea in the case of the higher sound level, we used the more updated model of Zilany et al. [27–29]. Fig 6A shows that for the 30 dB SPL signal, the AN population response is limited to a small region around the CFs of the stimulus’ spectral components, as expected. On the other hand, for the 90 dB SPL case (Fig 6B), the AN population response is dramatically different as it spreads out along the whole cochlea. Moreover, since the response is heavily saturated, the spatiotemporal patterns of peaks and troughs for each of the AN fibers are biased relative to the case of the moderate sound levels. Despite this significant difference in the AN responses between different sound levels, psychoacoustic measurements indicate that the sensation of pitch is robust to that effect [6,44].

The output of the SC model, h, is shown in Fig 6C and 6D for the two stimuli levels, 30 and 90 dB-SPL, respectively. Each panel shows both the sparse (SC, λ = 0.01, red) and non-sparse (LS, λ = 0.0, blue) solutions. As can be expected, the number of nonzero components in the vector h is much smaller for the SC solution when compared with that obtained by the LS algorithm. This sparseness applies to both sound levels, but the difference between the two solutions is much more noticeable for higher sound level (compare the blue line in Fig 6C with that of Fig 6D). Specifically, the LS solutions for the two stimuli levels are fundamentally different, and there is no apparent preservation of the spectral components of the input stimulus. Thus, the LS solution is variant to sound level, as opposed to what we would have expected from a representation of a pitch in the auditory system [45]. In comparison, the SC solutions do manage to preserve their overall structure. While the two SC solutions are not identical, both have only a few non-zero terms that directly relate to the frequency components of the input stimulus. Consequently, it seems that the sparse requirement in Eq 2, at the expense of the accuracy of the ordinary LS solution, contributes to the invariant representation of the stimulus in the vector h, regardless of its sound level.

In order to understand the effect of these different representations on pitch perception, we compare the resulting probability density functions (the pdf(fp)) for the two stimulus levels and both LS and SC solutions (Fig 6E and 6F). For the low sound level (Fig 6E and 6F), the two solutions are alike, and there is no apparent benefit to using one over the other: both curves (blue and red) have peaks at the same frequencies, and the maximum probability point equals that of the stimulus' fundamental frequency, i.e., . The result is substantially different for the 90 dB SPL amplitude. In this case, the pdf that corresponds to the solution of the LS algorithm has lost all resemblance with the stimulus' frequency components—it is just a flat, noisy curve. In comparison, the pdf that corresponds to the SC solution still has a clear indication of the original stimulus properties. In particular, the pdf curve (red line in Fig 6F) peaks at the harmonics of the fundamental f0, with a maximum peak at .

Effect of Different Dictionaries

Unlike, for example, the Fourier transform, the SC transform enables the use of various dictionaries that can be set according to some desired specifications. A standard option is to train a dictionary according to desired optimal constraints [35], but this is certainly not a prerequisite. For instance, when mapping patches of images into their parsimonious representations, one can choose to set the atoms of the dictionary as the basis of the discrete cosine transform, which is a straightforward and efficient choice (see for example Ch.12 in [25]).

In the current study, we checked two families of dictionaries: the first (Dsine) contains atoms created by sine stimuli, and the second (Dstack) contains atoms created from harmonics tones stimuli (harmonic stack). Specifically, each of the atoms in Dsine was produced by stimuli of one tone with random (uniformly distributed) amplitudes, and the atoms of Dstack were created by complex tone stimuli (harmonics 1st-6th) of the same moderate amplitude level (see Methods)

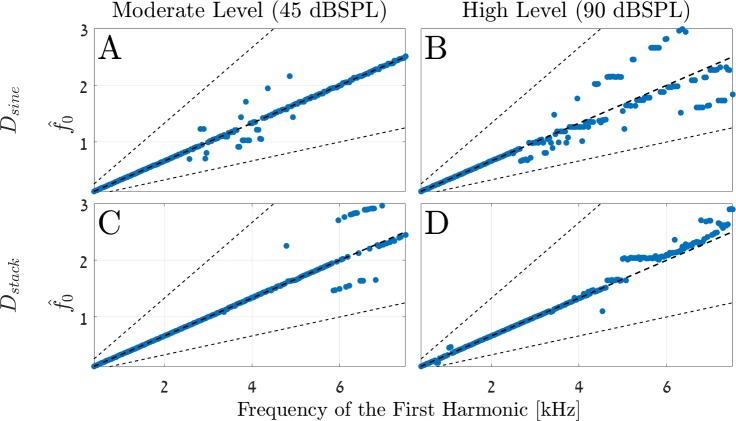

The two dictionaries were checked with both stimuli of 45dB SPL (Fig 7A–7C) and high amplitude levels of 90dB SPL (Fig 7B–7D). All simulations had the same spectral structure as given by Eq 6, i.e., all signals were complex tones with the 3–8 harmonics (the missing fundamental case). The results show the maximum peaks (blue dots) of the resulting pdfs that are taken from an interval of an octave around the fundamental frequency, as is the practice in psychoacoustic measurements (see for example [41,42]).

Fig 7. Comparing the performance of different dictionaries over moderate and high amplitude stimulus levels.

All simulations have the same spectral structure (Eq 6). This spectral structure is simulated for various fundamental frequencies, f0, and the figures show the estimated pitches for each such case (i.e., the maximum peak in each pdf). The estimations are taken from an interval of ± 0 .5 octaves around f0. Each row, i.e., figures A-B and figures C-D, show the estimation results of the SC model for the two dictionaries Dsine, and Dstack, respectively (see text). The column subplots refer to different stimuli levels: moderate (45dB SPL), and high (90dB SPL) amplitudes. The x-axis denotes the location of the first harmonic within the stimuli (i.e., the 3rd harmonic); the thick black dashed lines define the main octave (f0), and the thin black dashed lines define the lower and upper octaves, i.e., 0.5 f0 and 2f0, respectively. (A-B) At low frequencies, up to about 4k Hz of the lower harmonic in the complex stimulus, the estimations of the Dsine dictionary converge to the expected frequencies for both moderate and high stimuli. However, from 4k Hz and above, the pitch estimations for the high stimuli levels diverge from the main octave to other ratios of f0. (C-D) The pitch estimations of the Dstack dictionary converge to the main octave better for the low and high frequencies and for both amplitudes.

Comparing the four subplots of Fig 7, the dissimilarities between the results of the two dictionaries demonstrate quantitatively different results but qualitatively similar performances, at least in the lower frequency regions. For example, the two dictionaries yield relatively good estimations of the pitches up to about 4k Hz of the first stimulus’ harmonic (the third harmonic). For frequencies higher than 4k Hz, there is more variance around the expected fundamental frequency (thick black dashed middle line). Specifically, the 90dB SPL estimations for both Dsine and Dstack seem to be horizontally spread (Fig 7B–7D). From our experience, these deviations can be reduced by using dictionaries of higher resolution (i.e., with more cochlear channels and more atoms). However, due to the naïve structure of this model, there is a computational limit to the dictionary size that we can use. This restriction will hopefully be alleviated by a future model (see Discussion).

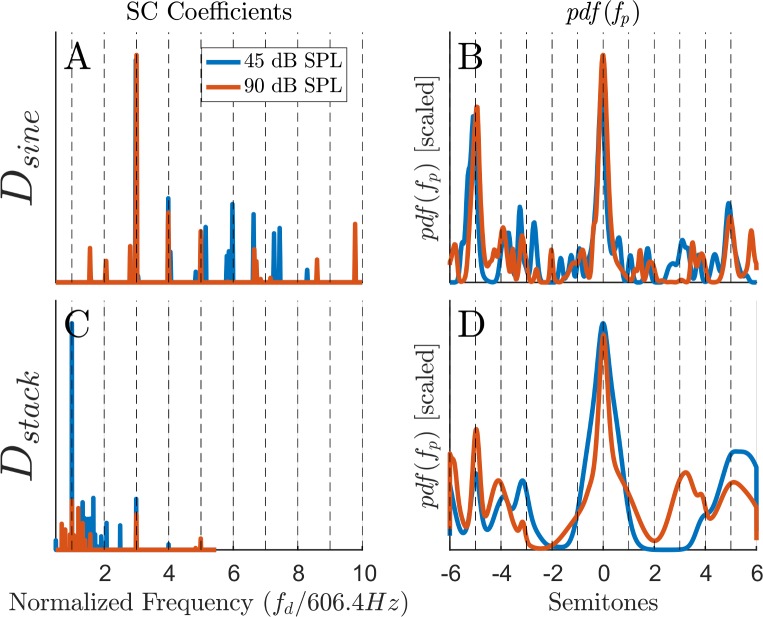

Fig 8 shows in more detail four selected examples of f0 = 606.4 Hz. The two h vectors in Fig 8A are taken from the moderate and high amplitudes levels of the Dsine dictionary case. The performance of the model for the 90dB level is a bit degraded compared to the 45dB level, as expected (see also Fig 6). Specifically, in the 90 dB SPL, the model estimates lower coefficients in h due to the nonlinearity of the cochlea. The resulting pdf, i.e., the probability for a particular pitch given these SC coefficients, is shown in Fig 8B. Fig 8C and 8D show the h vectors and pdfs for the second dictionary, the Dstack. Comparing Fig 8A with Fig 8C for the respected amplitude levels shows that the two SC vectors have different coefficients. This difference is due to the particular structure of the atoms in each of the two dictionaries above. Still, in spite of this structural differences, the h vectors have nonzero coefficients over indices that represent harmonics of f0. Consequently, the resulting pdfs have qualitatively similar results and the maximum peaks appear at the same location. Thus, the result is that the SC model predicts the same pitches for these two cases. Interestingly, the harmonic sieve is designed, in principle, to be optimal in the case of Dsine (and under certain assumptions, see [19,40]). Even so, it can still be used for the Dstack dictionary and yield good results. Note that it might be that for the Dstack dictionary there is a better (in the aforementioned optimal sense) representation for the harmonic sieve, but we did not pursue this path any further.

Fig 8. Detailed results for f0 = 606.4Hz.

The selected examples are taken from Fig 7 and show the SC coefficient vectors h and the pdfs for the two dictionaries and for the two amplitudes. (A, C) The SC coefficient vectors h for the Dsine and Dstack dictionaries, respectively. (B, D) The resulting pdfs, over one octave around f0 = 606.4Hz, for the Dsine and Dstack dictionaries, respectively. Note the difference between the SC coefficients of the two dictionaries, but the qualitative resemblance between the two pdfs.

In summary, this section emphasizes the notion that choosing a dictionary can improve or reduce the performance of the model in different aspects. Thus, one emerging interesting question from the above discussion is which dictionary can be acquired in a biologically compelling manner to match psychoacoustic and physiological measurements best? In this paper, however, we do not address this issue, but we have chosen to focus on demonstrating that parsimonious representation of an auditory information can explain relatively high cognitive tasks, i.e., the percept of a pitch. In what follows we assume a dictionary that is built of a single tone. Hopefully, the choosing of such a relatively simple dictionary, instead of a more intricate one, would prove to be clear and emphasize the qualitative abilities of such an approach.

Resolved and Unresolved Harmonics

The harmonics of a periodic signal are spatially distributed along the BM. Because of BM properties, low harmonics create separate peaks that are translated into distinct excitation patterns in the activities of the ANs. Since higher harmonics, on the other hand, do not yield such distinct peaks, these harmonics do not have distinct excitation patterns. Consequently, low harmonics are referred to as resolved and higher harmonics, approximately at the 5th–10th harmonics [14], as unresolved. For unresolved stimuli, the temporal aspects of AN response convey more information about the pitch than the spatial aspects of that response. Thus, using stimuli with resolved and unresolved harmonics is a controlled way to inspect the temporal processing of a pitch in the auditory system.

Broadly speaking, temporal models, such as the summary autocorrelation function (SACF) [4], disregard the resolvability of the stimulus. This is because such models account for the interactions between the harmonic components of the periodic signal whether they are resolved or not [44]. This indifference, however, stands in contrast to psychoacoustic observations [11,12,14,46].

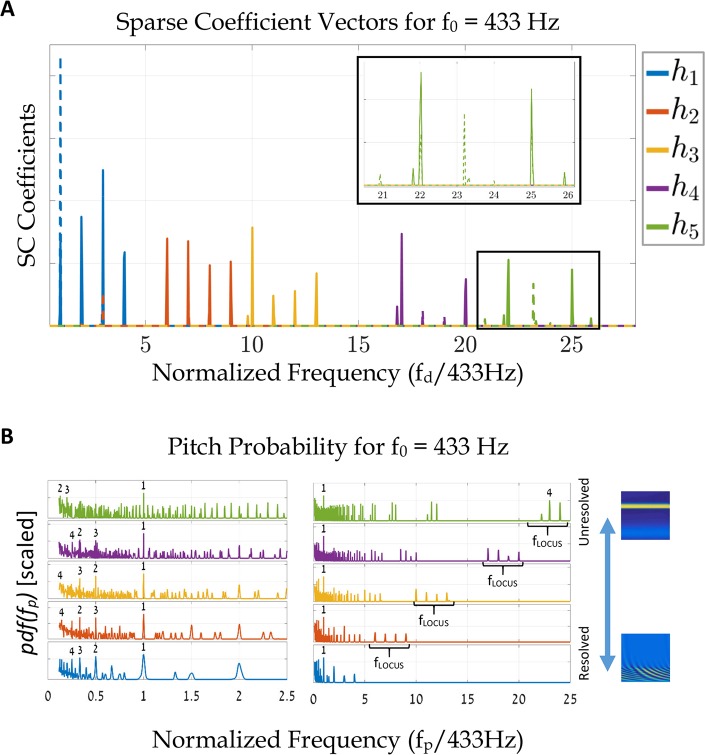

The current model combines temporal and spatial aspects of the AN response within its atoms. Thus, it is interesting to examine the model’s response to this class of stimuli. We compare five stimuli of complex tones (Fig 9),

| (7) |

Fig 9. Resolved vs. unresolved representation of harmonic cues.

(A) The solutions hk, k ∈ [1, 5], for the stimuli of Eq 7. We compare the SCs of the two dictionaries, Dsine (lines) and Dstack (dashed lines). Dsine consists of tone-atoms and Dstack consists of complex tones that contain six harmonics with decreasing amplitudes (1 to 1/6). All stimuli contain four harmonics of the same fundamental frequency, f0 = 433 Hz, but at different spectral locations (r ∈ {1, 6, 10, 17, 22}). The x-axis is normalized by f0 for convenience. The correlation between the SC solutions and the stimuli' spectral components (Eq 7) are apparent. Note that signals with low-frequency components (such as h1) have more prominent nonzero coefficients than those of the higher harmonics (e.g., h5). A closer look at h5 (the inset) shows that only two of the four harmonics are successfully reconstructed (the 23 and 24 tones of the 22–25 harmonics). (B) Pitch probabilities (pdfs) for the five complex tones for the Dsine (see text). The right figure shows all fp frequencies and the left one views fewer octaves around f0. The numbers above the curves state the four prominent peaks of the pdfs, from the highest (1) to the fourth lower peak. Observe that all five solutions peak at the first harmonic, that is, the model predicts the same 433 Hz pitch for all stimuli. Additionally, most of the other plausible pitches, i.e., other peaks, are usually located at harmonic ratios of f0, that is, they represent octave equivalence options. It is also instructive to note the fLOCUS frequencies in the right figure of (B). These peaks indicate the additional possibility of perceiving the pitches at the locus of the stimuli spectral energy and not of f0 [1]. All simulations were performed with Slaney's model and with a sound level of 45 dB SPL.

In Eq 7, each stimulus has the same spectral structure, that is, four consecutive harmonics, but the spectral locations of harmonics vary. Specifically, the spectral location of the first harmonic in each signal is set by r ∈ {1, 6, 10, 17, 22}. Additionally, the fundamental frequency is configured to f0 = 443 Hz, and the gains (g45 dB) of all stimuli are equivalent to 45 dB SPL.

Fig 9A shows the sparse coefficient vectors h for all five stimuli. Additionally, we compare the SCs of the two dictionaries, Dsine (lines) and Dstack (dashed lines). Dsine consists of tone-atoms and Dstack consists of complex tones that contain six harmonics with decreasing amplitudes (1 to 1/6). All the atoms are created with 60 dB SPL stimuli. As can be seen, there is a slight difference between the results, but overall both dictionaries yield the same SCs, pdfs, and pitch estimations. For that reason, we focused the rest of the analysis on the Dsine results.

In Eq 7, Low values of r represent stimuli with resolved harmonics while larger values (r > 5) represents stimuli with unresolved harmonics. Observe (Fig 9A) that for most of the stimuli, the four prominent spectral components of h are successfully reconstructed. Still, there is an apparent degradation (from resolved to unresolved stimuli) in the amplitude of the coefficient terms. For resolved stimuli, e.g., the 1st–4th harmonics stimulus (r = 1), the h vector holds prominent terms equivalent to the frequency components of the input signal. As r increases, the estimated terms in h decrease; for example, in the 22nd–25th harmonics stimulus (r = 22), the vector h only contains two out of the four equivalent frequency components of the input signal (Fig 9A, inset). This degradation is due to the reduced ability of the AN population to phase-lock with high frequency stimuli. Thus, the match between the atoms and the stimuli is less accurate, which results in smaller SC coefficients.

Fig 9B shows the corresponding pdfs of each of the five stimuli. The right panel shows the entire pdf while the left panel focuses on the vicinity of the fundamental f0 = 443 Hz. The numbers in the figure indicate the arrangement in a descending order of the peak heights, starting from the highest peak (peak number 1). For all stimuli, the maximum of each density function was obtained at the fundamental frequency. It is instructive to note that for each of the stimuli, other local maxima, indicating other pitch possibilities, are allocated at harmonic ratios of f0. For example, in the case of the lowest harmonic complex (r = 1), maximum peaks in the pdf are also available at the f0/4, f0/2, and 2f0. These other options represent the octave equivalence of the perceived pitch. Usually, humans perceive these options to be the same pitch, or to have the same pitch chroma. Hence, in psychoacoustic measurements, this mixing between octaves is generally not considered as an error [41–43] (specifically, see SI in [41]).

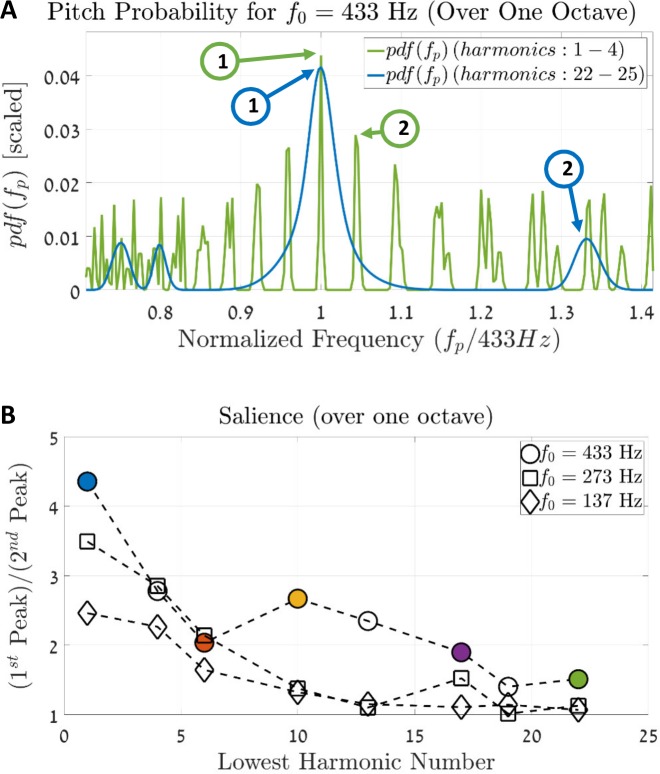

In Fig 10 we compare the pdfs of the two complex tones of Fig 9. The stimulus that contains the 1–4 harmonics is shown in blue while the stimulus that contains the 22–25 harmonics is in green. This comparison is done over one octave to avoid the other pitch equivalence solutions (which are approximately at the same height of the 1st peak). By inspection (Fig 10A), the ratio between the 1st and the 2nd peaks is higher for the resolved stimulus (blue line) compared to that of the unresolved stimulus (green line). We chose to denote this difference in the ratios as the salience of the stimuli. Fig 10B shows a comparison of additional stimuli with different fundamental frequencies; each one is a complex tone that contains four consecutive tones. The colors of the circles match the stimuli colors of Fig 9. Note that the salience of all stimuli decline with the increase of the location of the first harmonic, i.e., as the harmonics transcend from resolved to unresolved. Finally, stimuli with high harmonics also have additional peaks around the locus of the harmonic components (see the fLOCUS peaks in Fig 9B). These phenomena are consistent with known physiological data [9].

Fig 10. Salience of complex tones.

(A) A Comparison between the two probability functions of the complex tones from Fig 9: the blue line is the pdf of the complex harmonic tone with the 1–4 harmonics, and the green line is the pdf of the fifth stimulus, which comprises 22–25 harmonics. The x-axis is limited to one octave in order to compare the pitch's relative heights and without considering the octave equivalence of consecutive harmonics. The blue and green arrows show the 1st and the 2nd largest peaks of the two curves, respectively. Computing the ratio for each curve between the 1st and the 2nd peaks yields a measure of the pitch's salience; a larger ratio indicates a more prominent percept of tha pitch. (B) Calculating the ratio between the 1st and 2nd peaks for harmonic tones with four consecutive tones at different harmonic numbers. The x-axis indicates the location of the first harmonic in each stimulus, and the y-axis shows the ratio between the 1st and the 2nd peaks (as demonstrated in (A)). Colored circles indicate the relevant stimuli that are shown in Fig 9.

To conclude, despite the model’s seemingly spatially-based nature, it can derive the pitch of unresolved harmonics to some extent. Unlike purely temporal models, however, it penalizes these stimuli in relation to resolved ones. Note that this penalty is a consequence of the cochlear properties and not of the SC module. Specifically, this penalty was not introduced artificially into the model—it is an implicit property of the atoms and stems directly from the properties of the cochlear model.

Pitch Shift of Inharmonic Equally Spaced Tones

Perceived pitches are usually considered within the context of periodic signals. For example, the perceived pitch of a complex tone is its fundamental frequency, f0, whether it exists in the complex or not. Consider the following harmonic series

| (8) |

For Δf = 0, human subjects usually perceive the pitch of sin(t) as f0, the fundamental frequency of the harmonic signal [47]. This is true even for cases when f0 is not present in the signal, i.e., k0 > 1. For increased Δf > 0, the expression in Eq 8 is no longer harmonic. Nonetheless, previous psychoacoustic experiments have revealed that human subjects do manage to perceive pitches with these shifted stimuli, and the detected pitches are approximately shifted on a linear scale relative to the fundamental frequency [9,40,47–49]. These kind of stimuli are important because they demonstrate that pitch detection does not follow the stimulus' envelope, which does not change in this case (this is not true for stimuli with unresolved harmonics); nor does it follow the spacing between frequency components of a stimulus [1]. Thus, the phenomenon of pitch shift was used as a counter example for models that exploit the temporal envelope of a stimulus or other of its temporal features, such as zero crossing, peaks, etc. [18].

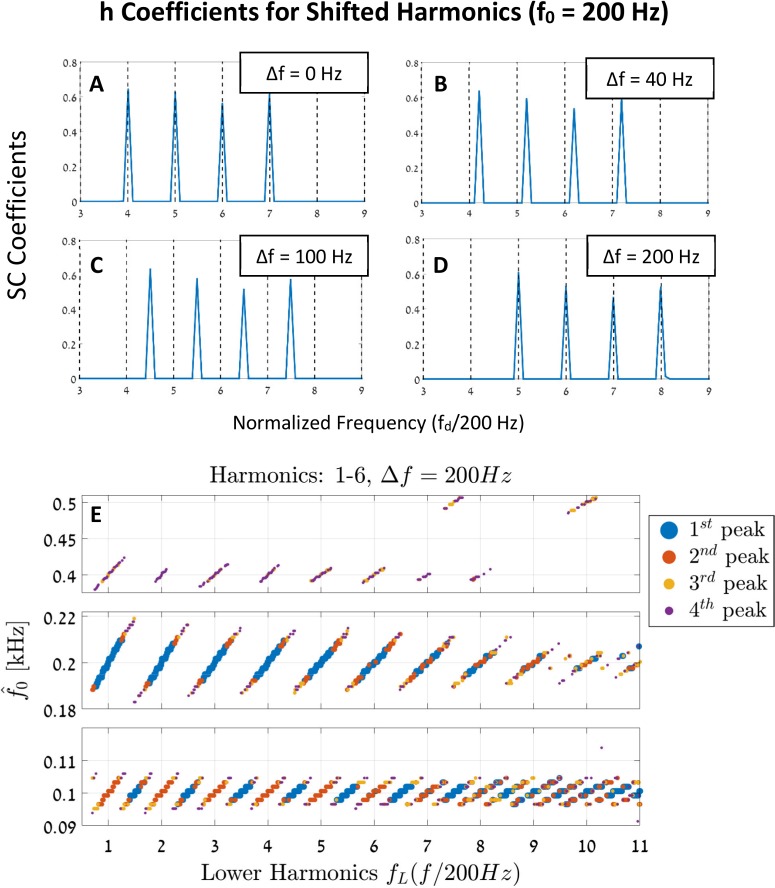

Fig 11A–11D shows the model's solutions of the sparse coefficient vectors, h, for different shifted signals (Eq 8). In these simulations, the input signal, sin(t), has four frequency components that are set to k ∈ [4,7], and the (missing) fundamental frequency is set to f0 = 200 Hz. In this example, setting Δf = 0 creates a stimulus sin(t) that is a complex harmonic series of f0 = 200 Hz. For a shift of Δf = 40 Hz, the stimulus is no longer a complex tone of f0 = 200 Hz, and a shift of Δf = 100 Hz changes the stimulus to be a complex tone of f0 = 100 Hz (with the [9, 11, 13, 15] harmonics). Finally, for a frequency shift of Δf = 200 Hz, the input signal is once again a complex tone of the fundamental f0 = 200 Hz, but this time with the [5, 6, 7, 8] harmonics. Following these observations, we would expect the model to exhibit this ambiguity by the frequency shift Δf, and to alternate its predictions between the frequencies of f0, 0.5f0, 2f0, etc.

Fig 11. Pitch shift of equally spaced harmonics.

(A-D) The vectors h for complex harmonic stimuli that contain the four harmonics of 4–7 (Eq 8). The x-axis denotes fd normalized by the fundamental frequency, f0 = 200Hz. The four figures show the stimulus in Eq 8 for the cases of Δf = 0 Hz, 40 Hz, 100 Hz, and 200 Hz, respectively. The zero shift case represents a regular complex harmonic signal. The 40 Hz shift is no longer a complex tone of 200 Hz. The third option (C) is a harmonic complex of 100 Hz (with the harmonics 9, 11, 13, and 15). Finally, the Δf = 200 Hz shift results again in a complex harmonics of f0 = 200 Hz but this time with the 5–8 harmonics. (E) The peaks of the probability functions, pdf(fp), for 500 uniformly shifted stimuli. Each stimulus is given by Eq 8, i.e., each signal includes the first four terms (1–4) of the fundamental f0 = 200Hz, plus an incremental frequency shift of Δf. The x-axis denotes the frequency of the lowest harmonic component of the input stimulus (f0 + Δf) normalized by f0 for visual clarity. The y-axis denotes the estimated pitch. To demonstrate the ambiguity of this process, we included the first four largest peaks of each of the resulted pdfs. We focused the view along the 100 Hz, 200 Hz, and 400 Hz in the y-axis; all other regions are mostly empty. Note the linear shifts in the pitch estimations and the changing of these slopes as a function of Δf [47].

We performed 500 simulations of sin(t) (Eq 8) with f0 = 200 Hz. Each signal contains the first six harmonics (i.e., k ∈ [1, 6]), and each is simulated with a different Δf. Fig 11E shows the estimated frequency as a function of the lower harmonic component fL in the input signal. For each of the stimuli, the corresponding pdfs are calculated, and the four highest peaks are indicated. We chose to include the four prominent peaks of each pdf to show the ambiguities of these signals. The estimated pitches are clustered in lines as a function of the lower frequency fL, consistent with the known “first effect of pitch shift” [47]. One can also see that as the shift Δf increases, the slopes of the estimated lines slightly decrease, in accordance with the known psychoacoustic phenomenon [47].

To conclude, the current model qualitatively reproduces the known psychoacoustic phenomenon of pitch shift, even though these aperiodic signals are not part of the model's dictionary. This implies that the current model: generalizes to complex new stimuli; it does not depend on the stimulus temporal envelope for cues; and it does not use the spacing between the stimulus harmonics to estimate pitch.

Transposed Tones

Transposed tones (TTs) were first introduced to explore the relative sensitivity of the auditory system for binaural timing stimuli [50]. Oxenham et al. [13] used these signals to check the relation between the tonotopic organization in the cochlea and the perception of pitch. The motivation of using these signals is to introduce low-frequency temporal structures into the basal part of the cochlea that usually processes high frequencies. TTs are produced by the modulation of half-wave rectified sine waves with carrier waves. Due to the limited synchronization and low-pass properties of the basilar membrane, the outer hair cells, and the ANs, the fine details of the carrier waves would be negligible. Thus, the results are half-wave rectified sine waves of low frequency (f0) in high CFs regions (> 4 kHz). In this manner, there is a separation between spatial locations and fine temporal structures along the cochlea.

Measurements carried out by Oxenham et al. [13] showed that subjects could not estimate the fundamental frequency of the TT stimuli. This inability means that the spatial arrangement of CFs along the cochlea is essential for the perception of pitch. Oxenham et al. have also shown that the summary autocorrelation function (SACF), a well-known temporal model, is indifferent to TTs and thus to the tonotopic organization in the cochlea. When applying TTs to the analysis of the SACF, the model does manage to extract the correct fundamental frequencies, in contrast to the aforementioned psychoacoustic evidence.

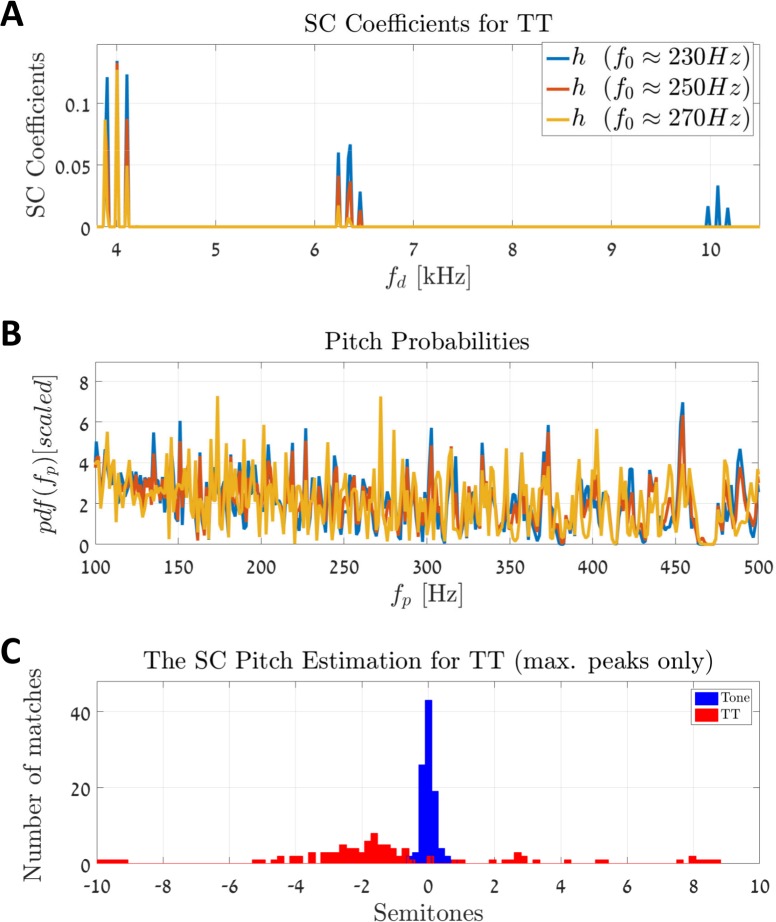

In the case of the proposed model, each region along the cochlea is characterized by its local spatiotemporal activities. These localized patterns are embedded in the atoms for each CF. Hence, we predicted that the SC model would not be able to separate between spatial and temporal processing. Fig 12 shows the processing of TT stimuli by the SC model. We first considered three TTs with the fundamentals f0 = 230 Hz, 250 Hz, and 270 Hz. The expression for each of these TTs is given by

| (9) |

Fig 12. Transposing low-frequency tones into high-frequency regions of the cochlea.

(A) An example of three sparse coefficient vectors, h, for the three frequencies f0 = 229 Hz, 249 Hz, and 269.7 Hz. The resulting h vectors have the same nonzero indices, i.e., these stimuli cannot be differentiated based on their sparse representations. (B) The pdfs of the three TTs are noisy and inconclusive, as expected. (C) Predictions of 100 epochs; only the 1st peak in the pdf is considered. There are two distinct types of stimuli: (i) pure tones (blue), and (ii) TTs (red). Both stimuli are simulated with incremental fundamental frequencies of f0 ∈ [100 Hz, 500 Hz]. Each stimulus is normalized relative to the fundamental f0. The model could estimate the f0 of the pure tones with a high degree of accuracy but could not predict those of the TTs at all (compare with [13]).

In this equation, is a low-pass filtered version of a rectified sine wave (see Methods), and each of these tones is modulated by the three carriers: fc,1 = 4 kHz, fc,2 = 6.35 kHz, and fc,3 = 10.08 kHz. Fig 12A shows the results of the sparse coefficient vectors (h) for the above three TT stimuli. It is apparent that the three sparse representations occupy the same indices, i.e., the same frequencies fd in h. Therefore, the SC model cannot distinguish between these stimuli based on their sparse representations. And indeed, the pdfs of these sparse vectors are inconclusive (Fig 12B).

Next, we simulated a batch of 100 epochs (Fig 12C). Each epoch contained the TT of Eq 9, and each had an incremented fundamental frequency taken from the interval f0 ∈ [100 Hz, 500 Hz] (red bars in Fig 12C). We repeated the simulation also for pure tones (blue bars in Fig 12C) and compared the two by normalizing the measurements with the respective f0. The results are consistent with the findings of Oxenham et al., that is, the SC model could not estimate the f0 for the TT stimuli successfully.

Iterated Rippled Noise

Delaying a signal of broadband white noise and adding it back to the original one creates a signal known as rippled noise. When this process of delaying and adding is repeated, a signal known as iterated rippled noise (IRN) is created. These signals contain temporal regularities in the time domain and spectral peaks at the reciprocal of the delayed time in the spectrum domain. Due to the nature of these signals, human listeners report perceiving two sensations: a tonal part that amounts to the pitch of the reciprocal of the delay (d ms) and an additional noisy sensation [51,52]. Repeating the iteration process results in a more prominent sensation of the tonal pitch [53].

Adding the delayed noisy signal back to the original one with a gain of one (delay-add) yields a signal with spectral peaks that are located at the reciprocal of the delay time d. But adding the delayed signal with a gain of minus one (delay-subtract) yields a signal with peaks in the power spectrum that are shifted by 1/2d, as if the delay-subtract signal is an odd-harmonic complex of half the frequency of the delay-add version. Delay-add stimulus raises a sensation of pitches of 1/d Hz, whereas delay-subtract is usually perceived to be more ambiguous and yields pitches that are slightly higher or lower than 1/d Hz [53].

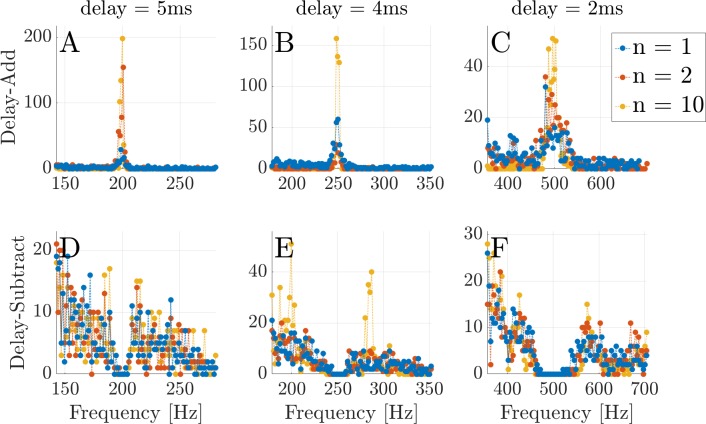

Simulations of delay-add and delay-subtract stimuli with the delays of d = 2, 4, and 5 ms, are shown in Fig 13. These signals are created as follows: for a white noise, x(t), the iterated signal sn(t) is created by

| (10) |

for i = 1,…,nitr, and nitr is the number of iterations (e.g., 1, 2, or 10). All simulations were done using Carney's model (Zilany et al. [27–29]) with stimuli levels of 70dB SPL; the dictionary contained 1000 sine-atom groups and each group has 10 time-shifts (phases) in it (g = 10, Eq 3).

Fig 13. Iterated rippled noise for different time delays and repetitions.

The figures show the results of 500 simulations for each case of IRN stimulus. Each subplot along the columns show the delays of d = 5, 4, and 2 ms that correspond to the fundamental frequencies of 200, 250, and 500 Hz, respectively. The subplots in the first row show the delay-add simulations, and the lower row shows the delay-subtract simulations. The results are derived from the first peaks of the resulting pdfs, and all estimations are taken from an interval of one octave around the appropriate fundamental frequency [42]. Simulations are done using Carney's model (Zilany et al. [27–29]) with stimuli of 70 dB SPL. The dictionary contained 1000 groups of sine-atoms with distinct CFs and 10 phases in each group (g = 10, Eq 3).The blue dots indicate rippled noise (one repetition), red points correspond to IRN with 2 repetitions, and yellow dots are for the 10 repetitions. (A-C) The delay-add simulations show distinct peaks around the 1/d frequencies. (D-F) The delay-subtract simulations show accumulation of the inferred pitches at frequencies equal to or greater than 1/d±10%, but the results for this case are noisy and inaccurate relative to psychoacoustic measurements.

In the first row (Fig 13A–13C), which contains the delay-add cases, a clear peak appears at the reciprocal of the delay, 1/d, as expected. As the number of repetitions increases, so does the prediction quality of the model, i.e., more estimations are concentrated around 1/d Hz. In Fig 13D–13F we show the delay-subtract cases for the same delays. In these simulations (Fig 13E and 13F), the inferred pitches are located around the reciprocal of the delays, as expected. But we would also expect the measurements to peak at approximately 1/d±10%, which does not happen.

Musical Notes

Music in the Western culture is based on a musical scale that relates periodic (or quasiperiodic) sounds to their fundamental frequencies. Thus, musical instruments that are based on this musical scale produce harmonic sounds based on these fundamental frequencies. As such, different instruments have different spectral coloring (i.e., timbre), but human listeners can perceive and compare the fundamental frequencies between the instruments [54]. This ability is due to the pitch perception property of clustering periodic (or quasiperiodic) sounds into classes, i.e., musical notes.

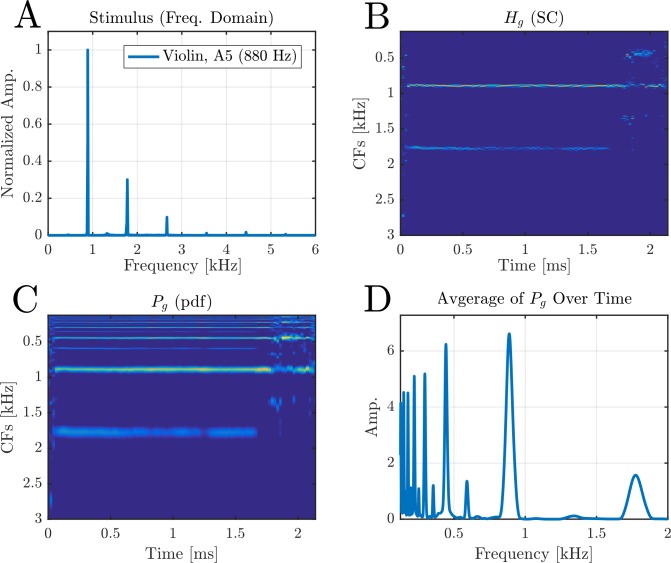

In this section, we checked the SC model with recorded musical notes [55]. For this, we used a dictionary with 1000 atoms, each of which had ten different phases (g = 10, Eq 3). Each recorded stimulus was divided into Tsteps = 100 time steps that were analyzed separately. Fig 14A shows the FT of a recorded violin note of A5 (880 Hz) played with a bow (arco). At each time step Tsteps, Eq 3 is solved separately to obtain . Next, the coefficients of each group are summed together to get the SC vector h ∈ RM. The matrix is the aggregation of all h ∈ RM over Tsteps = 100 steps (Fig 14B). Each of the columns of Hg (the SC vectors) are then processed by the harmonic sieve unit to produce the probability of that time step. The collection of all these pdf is given by the matrix (Fig 14C). Finally, to have one single probability for each stimulus, the matrix Pg is averaged over the time domain and normalized appropriately (Fig 14D). As in previous cases, the pitch of the signal is defined as the maximum point in this pdf.

Fig 14. Analyzing a recorded stimulus of a violin.

(A) The Fourier transform of the recorded signal. This is a note of A5 (880 Hz) played by a bow (arco). The 880 Hz and its harmonics are clearly seen. (B) Each time step Tsteps of the stimulus is processed separately. The results are collected to form the columns of the matricx Hg. (C) Each of the SC vectors (columns) of Hg are processed by the harmonic sieve separately to produce the pitch probability of that time step (Pg). (D) To compare between simulations, we average over the time steps to extract the most prominent pitch of the signal. The result is the usual pdf vector, and the estimated pitch is set to the maximum of this pdf.

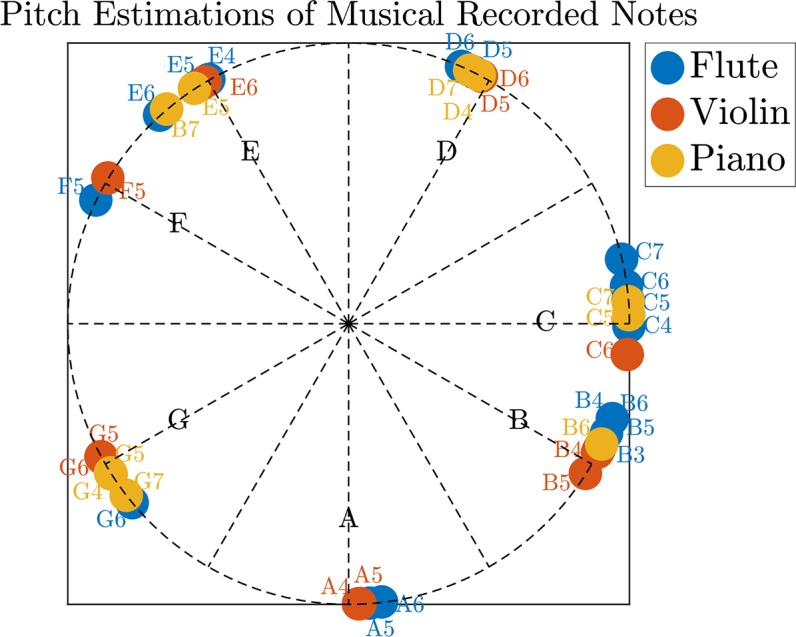

We repeated this procedure with recorded notes of a flute, a violin, and a piano (Fig 15). All results are shown on a chromatic scale. Each dot in Fig 15 is the estimated pitch of a recorded instrument; the colored text indicates the played note.

Fig 15. Results for musical notes on a chromatic scale.

We analyzed three musical instruments: a flute, a violin, and a piano for different notes. The results are shown on a chromatic musical scale (equal-tempered). The colored labels along the colored dots specify the notes played in specific recordings. All of the recordings were downloaded from [55]. Although not exact, the model does manage to assign most of the measurements to the right note (pitch).

Discussion

We showed that a model based on the sparse coding of the spatiotemporal pattern of auditory nerve responses is consistent with many pitch perception phenomena. The model represents input stimuli as sparse linear combinations of atoms, where each atom is derived from the AN population response to a pure tone.

Since the perception of pitch can be elicited by a variety of different stimuli [31], we tested the model on various such categories. We demonstrated that the sparse representation arising from a given stimulus at different sound levels could be linked to the spectral components of that stimulus, giving rise to a level-invariant representation of a pitch. Resolved and unresolved stimuli lead to a different pitch estimate in the model, with the difference stemming directly from cochlear properties. Inharmonic stimuli were used to show that the model can generalize to new stimuli while relying neither on the spacing between harmonics nor the temporal envelope of the stimulus. Next, we demonstrate that the use of the ANs spatiotemporal patterns as atoms force a tonotopic structure into the model. Consequently, it cannot estimate transposed tones (TTs), in accordance with known psychoacoustic measurements [13]. We showed that the model complies with IRN stimuli, and it is also able to process the recorded sounds of musical notes.

The focus of this paper was the application of sparse coding to the problem of pitch perception. The particular choice of the supporting elements used here (i.e., cochlear model, pitch estimation unit, and LASSO) are somewhat arbitrary. First, we chose standard, biologically inspired, cochlear models [26–29]. Second, we implemented the sparse coding (SC) algorithm by a known algorithm with available implementations, the LASSO [32]. Other plausible choices are presented in the literature, such as matching pursuit algorithms [25,56] (see [57] for different implementation in the auditory system). Third, the final pitch-estimation phase was implemented as an instance of the commonly used [58] harmonic sieve (via pattern-matching models). This construction enables an algorithm-level view [59] of the topic at hand.

The SC Model and Related Models for Pitch

The SC model presented here combines both spatial and temporal aspects of the AN population response. On the one hand, the SC model is based on atoms that have limited spatial support, namely, the nonzero section along the BM that is given by the equivalent rectangular bandwidth of the cochlea [60]. On the other hand, each atom also includes temporal information about the activities of the AN fibers at that spatial location.

The current model is structured in a similar manner to pattern-matching models [5,16,18]. The Fourier-like spectrum analyzer that extracts the resolved harmonic components of a given stimulus [18] is modified to include both the cochlea and the SC modules. Both models use templates to associate an estimated pitch with presented stimuli [58,61]. Despite these similarities, there is a fundamental difference between pattern-matching models and the current one. The atoms (templates) used in this study contain both spatial and temporal activities (Fig 3). Consequently, although the patterns of the AN population activities may be spectrally unresolved, there is still enough spatiotemporal information for identifying the different harmonics (Fig 9). Additionally, the SC does not rely on synthetic bases such as the sine and cosine of the Fourier transform (FT) but actual AN activities. Of course, these bases are also unresolved for high tones which means that the model exhibits less salience with unresolved stimuli, again, in line with psychoacoustic experiments [13]. Note that since the SC model implicitly inherits this property for high tones from the known attributes of the cochlear model, there is no need to add this feature into the model explicitly.

Historically, models of pitches were linked to harmonic analysis theories. Thus, it is instructive to note the mathematical connection between the SC model and the FT. Indeed, for the particular case of deprecating the dictionary matrix D into a square matrix, and setting λ = 0, the optimal solution for any periodic signal x of Eq 2 is given by the FT. In this case, the optimal solution is for h to be the coefficients of the FT and D its matrix [62]. In the SC case, applying λ ≠ 0 enables the use of biologically oriented, non-orthogonal, and redundant dictionaries [63]. In this sense, the current proposed model can be seen as an extension of classic pattern-matching models.

How does the SC model compare with temporal ones? Traditional temporal models, the most prominent of which are based on the summary autocorrelation function (SACF), also exploit temporal features from the AN population responses [2,3,10,64]. However, there are several open issues with these type of models. First, SACF models need to have long tapped delay lines for the correlation module, i.e., about 40ms and maybe more if noise is accounted for [15,65]. Currently, however, there is no physiological evidence to support such structures [9,31]. In contrast, the SC model exploits local spatiotemporal features without the need for long tapped delay lines. For that reason and to keep the model biologically plausible, we chose to use only short time segments of 5ms (see [66]). Different temporal interval durations were also tested. For shorter time intervals, e.g., 2ms, the SC model acts as a place-rate model; i.e., it managed to estimate only low resolved signals by their spatial activities along the BM. Longer time intervals also improved the model predictions for unresolved stimuli, up to a maximal estimation of about 10ms. Another issue associated with temporal models is that they treat resolved and unresolved frequencies in a similar manner in contrast to known psychoacoustic measurements [11,12,14,46]. This may imply that tonotopic organization is not necessary for auditory processing, but, again, physiological evidence suggests otherwise [13]. Tonotopic organization is preserved in the auditory system up to the auditory cortex for all mammals [67–70], and current evidence suggests that pitch processing is also sensitive to it [9,14].

Recently, Laudanski et al. [71] proposed a structural theory of pitch that considers both the spatial and the temporal aspects of the AN population response. Within this framework, the perception of pitch is derived from correlated activity in pairs of points in the spatiotemporal representation of AN activities. These two points are not necessarily located along the temporal activity of the same AN fiber (pure temporal processing), nor between different AN fibers at a particular time (pure spatial processing).

Both the structural theory and the proposed SC model are strongly related. In the SC model we incorporate the so-called cross-channel delays of the structural theory in the spatiotemporal patterns of the atoms. Specifically, cross-channel delays of a stimulus are compared to other cross-channel delays of the model that are embedded in the atoms. We think, however, that approaching the problem of pitch estimation from the SC aspect offers considerable benefits. First, the SC approach provides a mathematical framework that generalizes to other modalities whereas the structural theory approach offers a more specific pointwise approach [23]. Second, cross-channel delays of the structural theory can be simply acquired under the SC method by using predesigned atoms (as shown in this paper) or unsupervised training of atoms (see [36,72–74] to name just a few). These techniques were already tested, including within the auditory system [57], with great success. Thus, the SC framework can explain different possible options for such cross-channel correlations.

Other theories and models that exploit the spatial, the temporal, or both, include: the spatial cross-correlation theory of Loeb et al. [75]; de Cheveigne’s solution for the problem of tapped delay lines in temporal theories [65]; Carney’s model of phase-opponency [76], Shamma et al.’s lateral-inhibition and cross-correlation-matrix model [77–79], and the MASD of Cedolin et al. [80]. Note, however, that, these models consider only a small subset of the whole two-dimensional spatiotemporal structure created by the AN fibers. For example, Loeb et al. proposed comparing two locations along the BM that vibrate with the same phase, that is, a spatial comparison without the time domain. De Cheveigne proposed to compensate phase shifts between adjacent cochlear filters, i.e., extracting temporal lags and discarding the spatial information; and Cedolin et al. proposed a model that is based on spatial derivation between cochlear filters with a temporal summation, namely, accounting for the differences between two adjacent cochlear filters and averaging over the time domain. Additionally, these models account well for resolved stimuli but not for unresolved ones [71].

The Pitch Estimation Module

The use of the harmonic sieve can be considered from different perspectives. First, from the probabilistic point of view it can be seen as an implementation of a likelihood function: the probability of a particular pitch given a set of (parsimonious) coefficients h. This approach originates from the pattern-matching theory, and since the proposed model can be seen as an extension to the pattern-matching models, the same theoretical and experimental motivations also apply here. For example, following Goldstein et al. [16], we chose the templates of the harmonic sieve to be Gaussian functions [16,40,58,81]. It might be that for different dictionaries, e.g., dictionaries that contain harmonic stacks, there are better options, but we were not concern with optimizing this feature in this paper.

Second, from the physiological perspective, the harmonic sieve can be thought of as a simple feedforward neural network. In such instance, a set of Gaussian templates of one tone (one row in the matrix G) can be seen as a neuron with a modulated selectivity curve, i.e., a neuron that responds to a particular tone and its successive octaves. For examples of such implementations, see [61,82]. Finally, the harmonic sieve can be considered as a simple (i.e., linear) readout function that extracts the perceived pitch from the activities of the spatiotemporal receptive fields and introduces it in a manner that enables an easy comparison with psychoacoustic data.

Third, from the biological perspective, it had been shown [83] that harmonic templates of this sort can emerge naturally from basic processing in the auditory periphery. Specifically, Shamma et al. demonstrated that the fundamental features include: frequency analysis, fast changing delays at the CFs, phase-locking, and half-wave rectification. All of these properties part of the cochlear models that we used.

The Dictionary

In this paper, we checked two types of dictionary families: the first is created by sine stimuli while the second is created by stimuli of harmonic tones (i.e., harmonic stacks). These dictionary types were constructed and tested for various amplitude levels. Choosing a dictionary can influence the congruity of the SC model’s results with psychoacoustic measurements: the sensitivity of the model to resolved and unresolved stimuli, the response to low and high stimuli levels, etc. All these features emerge from the cochlear properties that are encapsulated within the atoms. Thus, an important question is which dictionary can be acquired in a biologically compelling manner and will best match psychoacoustic measurements? We intend to investigate this interesting question further in a future paper. However, in this paper we focused on the main premise—that parsimonious representation of auditory information can explain relatively high cognitive tasks, such as that of the percept of a pitch.

Accordingly, we chose to work, for the most part, with a simple dictionary of pure tones. Indeed, pure sinusoidal stimuli are rare in our natural acoustic surroundings [84] and there is no guarantee that the auditory system has access to such components at all. However, note that there is a subtle difference between plausible stimuli for a (hypothetical) training process and the outcome of this process, the atoms themselves. This is an important distinction because it implies that it is reasonable to assume that a learning process over natural-like stimuli, for example, vowels and consonants, can yield local spatiotemporal atoms and not necessarily stack-like atoms. Moreover, there is circumstantial physiological evidence to support similar spatiotemporal structures along different areas in the auditory system. For example, Norman-Haignere et al. [85] researched specific regions in the anterior auditory cortex that strongly react to resolved harmonic tones, and, to a lesser extent, to unresolved ones. Additionally, Carney et al. found, in the anteroventral cochlear nucleus [66], cells that have distinct spatiotemporal tuning patterns in response to pure tones.

Acquiring a dictionary directly from the AN population response is not the necessarily the only implementation nor is it the optimal one. A different approach that has been successfully applied and has many variants [24,34–37] is to perform an unsupervised training from a randomly selected stimulus set that represents sampled statistics of the input domain. These unsupervised learning algorithms create dictionaries that are oriented to specific kind of inputs, for example, natural sounds [73]. Subsequently, they usually generate dictionaries that lead to sparser and more accurate results. It is thus important to realize that the proposed SC technique is not limited to a particular set of atoms (see for example Ch. 12 in [25]).

Plausible Implementations by Artificial Neural Networks