Abstract

Background: Grounding health claims in an evidence base is essential for determining safety and effectiveness. However, it is not appropriate to evaluate all healthcare claims with the same methods. “Gold standard” randomized controlled trials may overlook important qualitative and observational data about use, benefits, side effects, and preferences, issues especially salient in research on complementary and integrative health (CIH) practices. This gap has prompted a move toward studying treatments in their naturalistic settings. In the 1990s, a program initiated under the National Institutes of Health was designed to reach out to CIH practices and assess the feasibility of conducting retrospective or prospective evaluations. The Claim Assessment Profile further develops this approach, within the framework of Samueli Institute's Scientific Evaluation and Review of Claims in Health Care (SEaRCH) method.

Methods/Design: The goals of a Claim Assessment Profile are to clarify the elements that constitute a practice, define key outcomes, and create an explanatory model of these impacts. The main objective is to determine the readiness and capacity of a practice to engage in evaluation of effectiveness. This approach is informed by a variety of rapid assessment and stakeholder-driven methods. Site visits, structured qualitative interviews, surveys, and observational data on implementation provide descriptive data about the practice. Logic modeling defines inputs, processes, and outcome variables; path modeling defines an analytic map to explore.

Discussion: The Claim Assessment Profile is a rapid assessment of the evaluability of a healthcare practice. The method was developed for use on CIH practices but has also been applied in resilience research and may be applied beyond the healthcare sector. Findings are meant to provide sufficient data to improve decision-making for stakeholders. This method provides an important first step for moving existing promising yet untested practices into comprehensive evaluation.

Keywords: complementary and integrative health, evaluation, qualitative methods, evaluability assessment, patient-centered research

Introduction

Many people assume that the care they receive is grounded firmly in evidence. Yet it was famously reported that only about 15% of medical practices are evidence-based.1 Since the 1980s, there has been a push toward evidence-based medicine and practice. More recently, an analysis of common medical treatments determined that the effectiveness of 46% of medical treatments is unknown.2 Certainly, tried and true treatments with years of success and minimal adverse events (such as aspirin for headache) may not warrant experimental testing, but many complementary and integrative health (CIH) practices and interventions, as well as newly “discovered” or innovative technologies, must be evidence-based.

However, a problem arises when results attained from the primary methods for studying causation (double-blind, randomized, controlled clinical trials [RCTs]) do not translate into the delivery setting or are impossible to conduct or resource. Further, evidence-based medicine that relies heavily on RCTs tends to exclude important qualitative and observational information about the use and benefits of some therapies, information that is often essential for patient-centered care.3 The Institute of Medicine roundtable on evidence-based medicine found that beyond basic efficacy and safety, the use of gold standard trials and large outcomes studies to establish effectiveness and variation is often impractical.4 It went on to suggest that “the speed and complexity with which new medical interventions and scientific knowledge are being developed often make RCTs difficult or even impossible to conduct.”1 Thus, new methods whereby data are collected from and applied in naturalistic healthcare contexts are needed. To fill these gaps in methodology, the Scientific Evaluation and Review of Claims in Health Care (SEaRCH)5 was developed as a systematic, stepwise, streamlined set of methods to evaluate CIH practice claims. It uses a sequenced, synergistic program of methods that includes field investigations for claims in practice; systematic reviews6 for current evidence; and expert panels7 for determining application, policy, or patient preference and the direction for further research. This sequence allows a progression through the incremental steps necessary for healthcare evaluation. A key component of SEaRCH is the Claim Assessment Profile (CAP), which studies treatments in their naturalistic setting and offers the first step toward evidence-based medicine for healthcare practices where only anecdotal evidence currently exists. The evolution of the CAP, its methods, and applications are described here.

Field Investigations for Healthcare Claims

Conducting research within a complex practice setting is challenging. In 1995, the National Institutes of Health's Office of Alternative Medicine created the Field Investigation and Practice Assessment (FIPA) program. Under FIPA, the Office of Alternative Medicine conducted dozens of site visits of CIH practices around the world. The major goals of this program were to (1) develop interest in research of complementary and alternative medicine practices that offered promising therapies for specific diseases, (2) assess the feasibility of conducting a practice outcomes evaluation, and (3) evaluate cases to see whether sufficient data existed to conduct retrospective and/or prospective outcomes studies. The Office of Alternative Medicine followed up on these site visits by contracting with the Centers for Disease Control and Prevention to conduct formal field studies of promising CIH clinical practices. The FIPA program was further developed in 2003 under the congressionally mandated Complementary and Alternative Medicine Research for Military Operations and Health Care Program and was renamed the Epidemiological Documentation Service. Run for several years by the Foundation for Alternative and Integrative Medicine (formerly named National Foundation of Alternative Medicine), the program was later transferred to Samueli Institute in 2008, where it was further developed, tested, and renamed SEaRCH.5

Generally, field investigations are performed when the use of descriptive studies would be valuable for understanding practice use and generating hypotheses before application of other analytic study designs, or to determine when the existing practice data are sufficient to warrant further study. These initial field investigation methods have been formalized and enhanced to deliver the CAP within the SEaRCH program.

Methods/Design

The CAP provides an in-depth, cross-sectional snapshot of the characteristics of a clinic, practice, program, or product to determine what the practice is, what it purports to do (the claim), and whether it would be feasible to collect and analyze data about the claim within the naturalistic setting of the practice. The CAP method requires expertise in program evaluation and qualitative research methods, with subject matter expertise in the claim being assessed. The CAP draws on several established methods, including rapid assessment processes developed over the last 20 years and program theory-driven evaluation science, which offer innovative and highly collaborative techniques for identifying causal links.8,9 The CAP is conducted in the spirit of “appreciative inquiry,” in that it is a strengths-based collaboration with clients to discover “the best of what is.”10

The CAP is not intended to be a comprehensive outcome evaluation; however, its methods are nonetheless systematic and rigorous. The approach is designed to (1) describe and clarify a claim, (2) gather information about the current practice, and (3) determine the practice's capacity to participate in further evaluation. This is done through a collaborative data collection approach with structured qualitative interviews, site visits, administrative/structural data, and other observational data gathered by the evaluation team. This method has been used previously to understand practice implementation and move claims along to the next level of study. By conducting field investigations via a CAP, clients are informed on how to articulate the structures and organization of their practice, how to frame research questions, and how to proceed with further research and evaluation. The outcome of a CAP is a descriptive report of the practice, its activities, its claims, and its readiness for future research.

Methodologic Summary of CAP

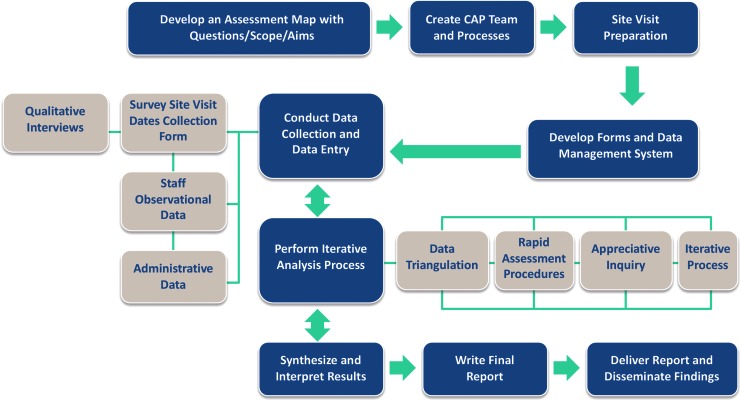

For implementation of a CAP, research evaluators meet with clients to discuss the scope of the claim and decide whether this method is appropriate for the client. An evaluator works with the client to design a clear research question and approach for the assessment, including the purpose, background, and specific aims. Then a team is assembled, including members from the practice and evaluators, in a collaborative effort, which is the keystone of rapid assessment process, program theory-driven evaluation science, and appreciative inquiry.9,11,12 A site visit is planned and a tailored questionnaire is created for the client.

During the site visit, interviews are conducted and observational and survey data are collected. An iterative data collection and analysis method is used, during which evaluation team members work in close collaboration with the staff at the site and use data triangulation to support or refute emerging findings, drawing on the expertise of other team members and the site staff.8 The evaluation is team-based and appreciative in that it concentrates efforts in the study first and foremost on the positive elements of the program.11

Research questions, outcomes, and causal links are defined in collaboration with the client by using logic modeling based on program theory.9 This approach uncovers the outcomes that are most impactful to participants and provides data from which to glean the most appropriate patient-centered research design.
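As a rough illustration of what a logic model and its causal paths might look like once written down, the following minimal sketch stores inputs, processes, and outcomes together with proposed causal links and lists the chains that lead from a starting element to an outcome. The class name `LogicModel`, its methods, and the example elements are hypothetical and are not taken from any CAP report; in practice the model is built collaboratively with the client rather than in code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Hypothetical container for a logic model: inputs, processes, outcomes."""

    inputs: list[str]
    processes: list[str]
    outcomes: list[str]
    # Directed causal links (cause -> list of effects) proposed during the site visit.
    links: dict[str, list[str]] = field(default_factory=dict)

    def add_link(self, cause: str, effect: str) -> None:
        """Record a proposed causal link between two known elements."""
        known = set(self.inputs) | set(self.processes) | set(self.outcomes)
        if cause not in known or effect not in known:
            raise ValueError(f"unknown element in link: {cause!r} -> {effect!r}")
        self.links.setdefault(cause, []).append(effect)

    def paths_to_outcomes(self, start: str) -> list[list[str]]:
        """Return every chain of links from `start` that reaches an outcome."""
        found = []
        stack = [[start]]
        while stack:
            path = stack.pop()
            node = path[-1]
            if node in self.outcomes:
                found.append(path)
            for nxt in self.links.get(node, []):
                if nxt not in path:  # guard against cycles
                    stack.append(path + [nxt])
        return found


# Illustrative (invented) elements for a training program.
model = LogicModel(
    inputs=["trained staff"],
    processes=["group sessions"],
    outcomes=["self-reported coping"],
)
model.add_link("trained staff", "group sessions")
model.add_link("group sessions", "self-reported coping")
paths = model.paths_to_outcomes("trained staff")
print(paths)
```

Each returned path is one candidate causal chain that a later evaluation could test; the sketch only organizes what stakeholders propose and makes no claim about whether the links hold.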

A final report is produced. It describes the practice; what can be concluded about the research question formulated; what data exist that can support the claim; and what are the readiness, capacity, and willingness of the site staff for further evaluation.

Rapid evaluation methods such as those used in the CAP have been criticized on the grounds that their findings can be less valid, reliable, and credible than those obtained through more formal methods. However, McNall and Foster-Fishman13 countered these criticisms with an in-depth study of rapid evaluation methods in which they highlighted the importance of increasing the trustworthiness of findings through the use of a confirmatory and dependability audit trail process. That process was recommended by Guba and Lincoln (1989) as critical for ensuring that methods are logical and strategically employed and that findings accurately portray stakeholder perceptions.14

Comparison with similar approaches

Program evaluation

Program evaluation is a systematic, comprehensive assessment method for collecting, analyzing, and using information about programs, policies, and practices. Although program evaluation may share some methods with the CAP (such as logic modeling), it provides analytic insights on effectiveness and efficiency. Program evaluation is useful when a practice has been established long enough that there are data to support assessment of structure, process, and outcomes. A CAP might be the first step to investigate whether a program evaluation is feasible.

Case studies/best case series

The CAP provides a detailed description of the claim and may include a case study and best case series. However, best case series often are not feasible or useful for many claims, and they still require description of the practice and claim. The CAP aim is to define the research questions, impacts, and evaluability, whereas a case study or best case series has predetermined sets of outcomes against which the case is measured. Often the data for a best case series do not exist in a practice.15

Rapid evaluation and assessment methods

These methods can be a quick and relatively inexpensive way to provide an understanding of constraints and facilitating factors of a practice. Many types of rapid appraisal, assessment, and evaluation methods have emerged in response to the limited resources available for evaluation and in order to provide faster turn-around for findings. The five most common rapid assessment methods that informed the development of the CAP are rapid assessment process, rapid ethnographic assessment, real-time evaluation, rapid-feedback evaluation, and appreciative inquiry.16,17

Knowledge, attitudes, and practices

A knowledge, attitudes, and practices (KAP) survey is a rapid assessment method with predefined questions formatted in standardized questionnaires that provide access to quantitative and qualitative information. KAP surveys reveal misconceptions or misunderstandings that may be obstacles to the activities that a program would like to implement and potential barriers to behavior change in its participants. A KAP survey is essentially a formative evaluation based on survey data of the population being served and is often used in international program evaluation.18 While the KAP and CAP share methods (e.g., rapid timeline, surveys), the CAP focuses on evaluability assessment whereas the KAP focuses on formative issues, such as challenges to program delivery and impact from participant perspectives.

Common features for all of the rapid evaluation techniques used to create the CAP include the collection of quantitative data (in the form of data collected through surveys and the review of existing data sets) and qualitative data (in the form of formal and informal interviews with key informants/stakeholders and naturalistic observations). The common processes include participatory research that targets populations (clients, providers, practitioners, and patients) involved in framing of the study and data triangulation during analysis. The work is team-based and usually involves the collaboration of team members throughout the process, from planning and data collection to the interpretation of findings. The data collection and analysis are iterative and can involve analysis of data while information is still being gathered, as well as the use of preliminary findings to guide decisions about additional data collection.

In summary, the advantages of rapid methods are that they are low cost, quickly conducted, and flexible enough to explore new ideas. Disadvantages of rapid methods are that they relate to specific communities, localities, healthcare centers, or clinics, so the results are difficult to generalize.

Use of the CAP

The CAP is useful in a variety of settings and for a variety of purposes (three examples are described below), but when does it make sense to use it? The rapid timeline of the method suggests the suitable context: when there is a vital need for information on which action will be taken soon. The findings are instrumental: they are not meant to test hypotheses but to develop explanatory models that inform hypothesis generation. The CAP provides information about evaluation readiness and capacity of the practice to conduct research. The data are of sufficient quality to assist clients in decision-making about next steps in the evaluation cycle. A required condition for a successful CAP is a client who is open to learning and ready to take action on the basis of findings. The CAP provides information to the client on the possibilities, readiness, and resources for further evaluation of their health claim. Further, the assessment results can be submitted for expert review for such uses as setting research agendas, deciding on clinical appropriateness, determining patient relevance, or developing policies. The CAP is useful for clinicians, practitioners, patients, administrators, researchers, or product developers wherever there is a health claim that is anecdotal or based on unknown evidence. A clinician or CIH practitioner may be seeing positive results from their treatments and be motivated toward evaluation as a way of understanding their own treatment effects, as well as for improving their practice and making it more widely used, accepted, and understood by the general public.

A case example of a CAP study is the assessment of a large integrative cancer clinic in Canada.19 Samueli Institute was invited to work with Canada's first and foremost, MD-led, comprehensive cancer care center that uses an internationally recognized model of integrated care. Since 1997, it has provided care to more than 5500 British Columbians, with a primary focus on cancer. The center was interested in strengthening its evaluation efforts, and the Canadian government and a local nonprofit foundation were interested in knowing more about the clinic's practices and outcomes. Using the CAP method, evaluators helped to clarify, describe, and document the practices at the clinic in terms of care provided, delivery of services, and expected changes in specific outcomes for the practitioners and patients. Evaluation staff joined forces with center staff in logic modeling and causal path mapping exercises. Outcomes were defined and a path model was created, forming the basis for future rigorous scientific evaluation. Further, evaluators assessed the readiness and resources of the practice to engage in research through review of existing data collection capabilities. The CAP method clarified the claim and gathered information about the current practice in order to provide the public with an independent description of the practice as delivered, as well as determining the practice's readiness and ability to be involved in research. This work was instrumental in moving the center toward its next steps in outcomes evaluation, which was a prospective trial.

Another example is the application of the CAP to define research questions and expected impacts for a resilience education training program within a behavioral health department. The program staff were creating and delivering content to participants, but they did not have the research acumen needed to design an evaluation. Therefore, a CAP was conducted that described the program and provided a logic model that defined the structure, process, and outcomes of interest. The results from the CAP fed directly into a comprehensive mixed methods program evaluation.

A final example of the application of these techniques was conducted on a product that is a noninvasive, drug-free alternative therapy developed for individuals with fibromyalgia, delayed-onset muscle soreness, and phantom limb pain. The product consists of iron, nickel, and chromium fibers woven into a nylon fabric that can be custom made into socks, gloves, jackets, blankets, or limb covers. Various forms or sizes are applied to painful areas of the body. This product was being used in a solo physician practice with a chiropractic doctor, receptionist, and insurance/billing professional. The clinic had access to an ethics/independent review board committee for research but was not affiliated with a university-based collaborator. Samueli Institute implemented a CAP that defined the product, its application, and outcome claim in phantom limb pain. This led to a retrospective observational study followed by a randomized, placebo-controlled clinical trial.

Figure 1 offers a visual guide for the steps in the CAP.

FIG. 1. Basic steps in the Claim Assessment Profile (CAP).

Data analysis

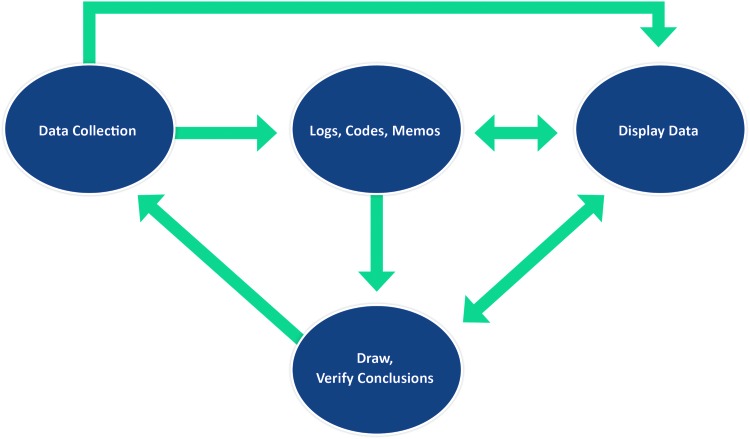

The types of data produced from the surveys and interviews implemented in the CAP dictate the data analysis approach. Descriptive data are analyzed by organizing the responses into topics to be reviewed and written up in a narrative report. This approach is based on Yin's work on case report research.20 The exploratory questions yield data that require an analytic coding technique to refine concepts for summarizing in a final report. This is based on the work of Miles and Huberman, whose analytical coding approach is illustrated in a graphical display.21 If the client is oriented toward operationalizing outcome variables and the underlying causal links, then time is spent during the site visit for logic modeling in order to find what the staff and participants feel is most impactful. See Figure 2 for the model of the iterative and interactive data analysis approach.

FIG. 2. Data analysis methods for iterative and interactive model.
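To make the organizing and triangulation steps of the analysis more concrete, the following minimal sketch tallies codes that analysts have already assigned to interview, survey, and observation segments, and flags the codes supported by more than one data source. The function names `summarize_codes` and `triangulated`, the two-source threshold, and the example codes are assumptions made for illustration; the coding itself remains an interpretive task for the evaluation team, following the approaches of Yin and of Miles and Huberman cited above.

```python
from collections import defaultdict


def summarize_codes(coded_segments):
    """Group analyst-assigned codes and record which data sources support each.

    `coded_segments` is an iterable of (source, code) pairs.
    """
    summary = defaultdict(lambda: {"count": 0, "sources": set()})
    for source, code in coded_segments:
        summary[code]["count"] += 1
        summary[code]["sources"].add(source)
    return dict(summary)


def triangulated(summary, min_sources=2):
    """Return, in alphabetical order, codes seen in at least `min_sources` sources."""
    return sorted(
        code for code, info in summary.items() if len(info["sources"]) >= min_sources
    )


# Invented example segments; real codes would emerge from the site-visit data.
segments = [
    ("interview", "staff workload"),
    ("survey", "staff workload"),
    ("observation", "intake process"),
    ("interview", "intake process"),
    ("interview", "data systems"),
]
summary = summarize_codes(segments)
supported = triangulated(summary)
print(supported)
```

A code that appears in only one source (here, "data systems") is not discarded; it is simply a candidate for additional data collection in the next iteration.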

Producing final deliverables

After data collection and analysis are completed, evaluators synthesize and interpret the findings in a tailored report that meets client goals and aims as outlined in a statement of work. The final deliverable for the client is a narrative summary description of the practice along with a logic model and causal path model. While delivering the CAP results to the client is the first and foremost aim, there are other possible avenues through which results can be disseminated if the client is interested, including through publication of a manuscript in peer-reviewed journals, presentations at conferences, reports for expert panels, press releases, and public forums. Further, the questions developed during the CAP process can be passed along to the next phase in the SEaRCH program, which involves conducting systematic reviews6 of current evidence outside the practice in support of the claim, which helps in determining generalizability. If the client has requested that an expert panel be convened to review and recommend next steps from the CAP findings, then the results are disseminated to expert panel members.

Limitations

A limitation of this methods paper is that we were not able to provide specific examples of data from previous CAP reports, because we do not have permission to publish data from client reports of programs that are beginning their evaluation processes.

Discussion

CIH practices vary considerably from site to site and practice to practice. The first step in evaluation of CIH is to accurately describe the practice. The CAP is a rapid assessment approach that describes the background, characteristics, and procedures of a clinic, practice, program, or product in order to define research questions and assess readiness and resources for engaging in research of the claim. The CAP is part of a suite of methods called SEaRCH, which was created to advance promising CIH practices into evaluation. This approach has been developed and tested primarily to aid in the rigorous and scientific evaluation of clinical practices that are already in use. However, the approach was designed with the flexibility for application beyond healthcare and wellness. There are numerous methods for evaluation of health practices, but many of the most rigorous scientific methods, such as RCTs or quasi-experimental studies, are expensive and difficult for clinical staff to conduct, because staff may not have the research background, capacity, experience, or resources to perform evaluation activities. The CAP process is the first step toward making research feasible for numerous health and wellness treatment modalities where clinical, anecdotal, or case-study evidence exists or the evidence is unknown.

Acknowledgments

This project was partially supported by award number W81XWH-08-1-0615-P00001 (U.S. Army Medical Research Acquisition Activity). The views expressed in this article are those of the authors and do not necessarily represent the official policy or position of the U.S. Army Medical Command or the Department of Defense, or those of the National Institutes of Health, Public Health Service, or the Department of Health and Human Services.

The authors would like to acknowledge Mr. Avi Walter for his assistance with the overall SEaRCH process developed at Samueli Institute and Ms. Cindy Crawford and Ms. Viviane Enslein for their assistance with manuscript preparation.

Contributions: L.H. and W.J. developed and designed the methodology of the CAP and the SEaRCH process. Both were involved in drafting of the manuscript and revising it for important intellectual content, have given final approval of the version to be published, and take responsibility for all portions of the content.

Author Disclosure Statement

No competing financial interests exist.

References

- 1. Institute of Medicine. The National Academies Collection: Reports funded by National Institutes of Health. Evidence-Based Medicine and the Changing Nature of Healthcare: 2007 IOM Annual Meeting Summary. Washington, DC: National Academies Press, National Academy of Sciences, 2008

- 2. Garrow JS. What to do about CAM: how much of orthodox medicine is evidence based? BMJ 2007;335(7627):951

- 3. Jonas W. The evidence house: how to build an inclusive base for complementary medicine. West J Med 2001;175:79–80

- 4. Olsen L, Aisner D, McGinnis JM. The learning healthcare system: workshop summary (IOM roundtable on evidence-based medicine). Washington, DC: National Academies Press, 2007

- 5. Jonas W, Crawford C, Hilton L, Elfenbaum P. Scientific Evaluation and Review of Claims in Healthcare (SEaRCH™): a streamlined, systematic, step-wise approach for determining “what works” in healthcare. J Altern Complement Med 2017. [Epub ahead of print]; DOI: 10.1089/acm.2016.0291

- 6. Crawford C, Boyd C, Jain S, et al. Rapid Evidence Assessment of the Literature (REAL): streamlining the systematic review process and creating utility for evidence-based health care. BMC Res Notes 2015;8:631

- 7. Coulter I, Elfenbaum P, Jain S, Jonas W. SEaRCH expert panel process: streamlining the link between evidence and practice. BMC Res Notes 2016;9:16

- 8. Beebe J. Rapid Assessment Process: An Introduction. Walnut Creek, CA: AltaMira, 2001

- 9. Donaldson SI. Program Theory-Driven Evaluation Science: Strategies and Applications. Mahwah, NJ: Erlbaum, 2007

- 10. Preskill H, Coghlan A. Appreciative Inquiry and Evaluation. New Directions for Program Evaluation. San Francisco: Jossey-Bass, 2003

- 11. Hammond S. The Thin Book of Appreciative Inquiry. 2nd ed. Plano, TX: Thin Books, 1987

- 12. Scrimshaw N, Gleason G. Rapid Assessment Procedures: Qualitative Methodologies for Planning and Evaluation of Health Related Programmes. Boston, MA: International Nutrition Foundation for Developing Countries (INFDC), 1992

- 13. McNall M, Foster-Fishman PG. Methods of rapid evaluation, assessment, and appraisal. Am J Eval 2007;28:151–168

- 14. Guba E, Lincoln Y. Fourth Generation Evaluation. Newbury Park, CA: Sage, 1989

- 15. Vanchieri C. Alternative therapies getting notice through best case series program. J Natl Cancer Inst 2000;92:1558–1560

- 16. Coulter I. Evidence summaries and synthesis: necessary but insufficient approach for determining clinical practice of integrated medicine? Integr Cancer Ther 2006;5:282–286

- 17. Wholey JS. Evaluation and Effective Public Management. Boston: Little Brown, 1983

- 18. USAID. KAP Survey Model (Knowledge, Attitudes, Practices). Online document at: https://www.spring-nutrition.org/publications/tool-summaries/kap-survey-model-knowledge-attitudes-and-practices Accessed November 10, 2016

- 19. Hilton L, Elfenbaum P, Jain S, et al. Evaluating integrative cancer clinics with the claim assessment profile: an example with the InspireHealth Clinic. Integr Cancer Ther 2016. [Epub ahead of print]; DOI: 10.1177/1534735416684017

- 20. Yin RK. Case Study Research: Design and Methods. Thousand Oaks, CA: Sage, 1984

- 21. Miles M, Huberman A. Qualitative Data Analysis: An Expanded Sourcebook. Thousand Oaks, CA: Sage, 1994