Abstract

Glaucomatous visual field progression has both personal and societal costs and therefore has a serious impact on quality of life. At the present time, intraocular pressure (IOP) is considered to be the most important modifiable risk factor for glaucoma onset and progression. Reduction of IOP has been repeatedly demonstrated to be an effective intervention across the spectrum of glaucoma, regardless of subtype or disease stage. In the setting of approval of IOP-lowering therapies, it is expected that effects on IOP will translate into benefits in long-term patient-reported outcomes. Nonetheless, the effect of these medications on IOP and their associated risks can be consistently and objectively measured. This helps to explain why regulatory approval of new therapies in glaucoma has historically used IOP as the outcome variable. Although all approved treatments for glaucoma involve IOP reduction, patients frequently continue to progress despite treatment. It would therefore be beneficial to develop treatments that preserve visual function through mechanisms other than lowering IOP. The United States Food and Drug Administration (FDA) has stated that they will accept a clinically meaningful definition of visual field progression using Glaucoma Change Probability criteria. Nonetheless, these criteria do not take into account the time (and hence, the speed) needed to reach significant change. In this paper we provide an analysis based on the existing literature to support the hypothesis that decreasing the rate of visual field progression by 30% in a trial lasting 12–18 months is clinically meaningful. We demonstrate that a 30% decrease in rate of visual field progression can be reliably projected to have a significant effect on health-related quality of life, as defined by validated instruments designed to measure that endpoint.

Keywords: Glaucoma, Intraocular pressure, Neuroprotection, Perimetry, Progression, Clinical trials

1. Introduction

Glaucoma is characterized by progressive, irreversible damage to the optic nerve resulting in serious vision loss and blindness. This gradual progression of vision loss has both personal and societal costs and therefore has a serious impact on quality of life (QOL). The purpose of this document is to provide the scientific basis and methodological approach for measuring visual field progression in prospective clinical trials and relating it to a clinically meaningful decline in visual function.

At the present time, intraocular pressure (IOP) is considered to be the most important modifiable risk factor for glaucoma onset and progression. Reduction of IOP has been repeatedly demonstrated to be an effective intervention across the spectrum of glaucoma, regardless of subtype or disease stage (AGIS, 2000; CNTGS, 1998b; Gordon et al., 2002; Heijl et al., 2002; Lichter et al., 2001; Miglior et al., 2005). It is therefore not surprising that numerous medications to reduce IOP have been approved by regulatory agencies worldwide. In the setting of approval of IOP-lowering therapies, it is expected that effects on IOP will translate into benefits in long-term patient-reported outcomes. Aside from a recent randomized clinical trial (RCT) in the United Kingdom (Garway-Heath et al., 2015) the direct link between a specific pharmaceutical product to lower IOP and the prevention or delay of visual field progression has not been established nor are these medications specifically approved for such a functional outcome. Nonetheless, the effect of these medications on IOP and their associated risks can be consistently and objectively measured. This helps to explain why regulatory approval of new therapies in glaucoma has historically used IOP as the outcome variable.

Although all approved treatments for glaucoma involve IOP reduction, patients frequently continue to progress despite treatment. Both RCTs and clinical practice demonstrate that a substantial number of patients progress despite significant IOP-lowering therapy, and the risk of blindness over long periods of time is considerable. It would therefore be tremendously beneficial to develop treatments that preserve visual function through mechanisms other than lowering IOP.

In this paper we provide an analysis based on the existing literature to support the hypothesis that decreasing the rate of visual field progression by 30% in a trial lasting 12–18 months is clinically meaningful. We use three independent and mutually supportive methods to support this hypothesis:

(1) We demonstrate that a 30% decrease in rate of visual field progression can be reliably projected to have a significant effect on health-related quality of life (HRQOL), as defined by validated instruments designed to measure that endpoint. This line of reasoning is based on population-based studies that assess visual fields and HRQOL.

(2) The United States Food and Drug Administration (FDA) has stated that they will accept a clinically meaningful definition of visual field progression with Glaucoma Change Probability (GCP) criteria: “Visual field changes may be acceptable as a clinically relevant primary endpoint provided a between-group difference in field progression is demonstrated. The progression of visual field loss will be suspected if five or more reproducible points, or visual field locations, have significant changes from baseline beyond the 5% probability levels for the GCP analysis” (Weinreb and Kaufman, 2009). Nonetheless, these criteria do not take into account the time (and hence, the speed) needed to reach significant change. We demonstrate that this event-based outcome corresponds to a rate (slope) of visual field progression equal to or faster than −0.5 dB/yr for at least five abnormal test locations at baseline. This line of reasoning is supported by data from clinical trials that assessed both event-based and trend-based outcomes.

(3) We demonstrate that a 30% decrease in progression rate with a trend-based analysis of visual field data is equivalent to that seen with a 2–3 mm Hg decrease in IOP. This line of reasoning is supported by data from non-regulatory clinical trials and other key studies that investigated the relationship between IOP and visual field change. That level of IOP decrease is considered clinically meaningful in patients with glaucoma who are progressing while on IOP-lowering therapy.

This paper presents the background for these arguments and reviews the critical literature related to the epidemiology of glaucoma, effects on QOL, measurement of progression, and detection of the effects of therapeutic intervention. Wherever possible, we use data derived from large-scale population-based and/or randomized studies to avoid bias. We use this reasoning to conclude that a therapy which results in a 30% decrease in visual field progression rate over 12–18 months in patients with glaucoma is clinically meaningful, and therefore would be valuable for treating glaucoma patients who are progressing despite IOP-lowering therapy.

2. Glaucoma – change in population demographics and socioeconomic burden

2.1. Glaucoma is a leading cause of blindness in the United States and worldwide

2.1.1. Background

The glaucomas are a group of chronic eye diseases that damage the optic nerve and for which there are currently no clinically-proven methods to reverse damage. Patients with glaucoma present to eye health professionals with varying degrees of severity. In its early stages, glaucoma damage is relatively asymptomatic for four reasons: (1) patients are frequently unaware of damage in the peripheral visual field; (2) the pace of progression is often slow (but continuous); (3) there is tremendous redundancy in the sensory system, including the ability of the visual cortex to fill-in loss of visual field; and (4) the binocular nature of vision means that one eye may compensate for early losses in the other. As progression occurs, the patient may unconsciously compensate for a steadily worsening visual field, contrast sensitivity, and even color vision.

However, at the same time that the disease is progressing, there is visual dysfunction from visual field damage and contrast sensitivity loss which is reflected in slow but relentless development of problems with everyday life (e.g., driving, reading, and risk of falls). Eventually, there is vision-related disability, loss of visual acuity (VA) and legal blindness from severely constricted visual fields, poor VA, or both.

Major risk factors for developing glaucoma include higher IOP, greater cup-to-disc ratio, decreased central corneal thickness (CCT), older age, pseudoexfoliation syndrome, genetic factors, and ocular perfusion pressure (AGIS, 2002; De Moraes et al., 2012b; Gordon et al., 2002; Leske et al., 2007; Lichter et al., 2001; Miglior et al., 2005; Musch et al., 2009). These and other risk factors also increase the chance of progressive disease severity.

2.1.2. Glaucoma is a common condition which increases in prevalence as the population ages

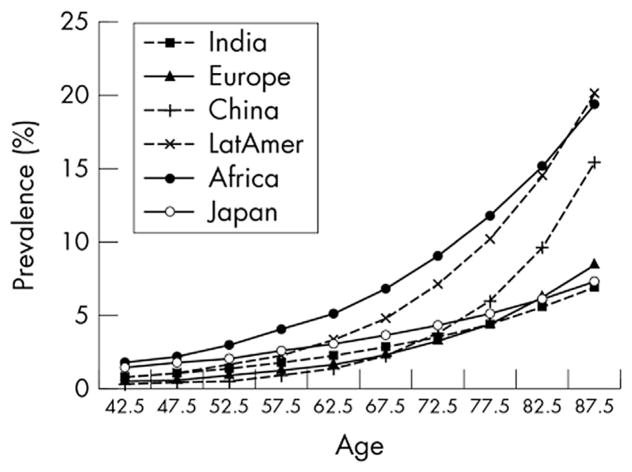

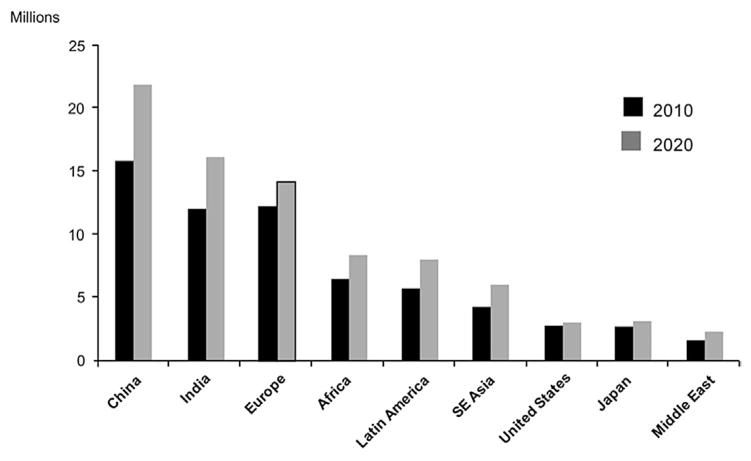

In the year 2000, glaucoma affected nearly 68 million persons worldwide and caused bilateral blindness in almost 7 million persons (Quigley, 1996). A 2006 review of worldwide glaucoma prevalence models estimated that by 2010 glaucoma would be the second leading overall cause of blindness in the world and the leading cause of irreversible blindness, affecting 60.5 million people with prevalence varying worldwide (Quigley and Broman, 2006) (Fig. 1). Open-angle glaucoma (OAG) was estimated to affect 2.22 million people in the United States in 2002 (Fig. 2) (Friedman et al., 2004). Over 8.4 million people were estimated to be bilaterally blind from primary glaucoma in 2010, rising to 11.1 million by 2020.

Fig. 1. Estimated prevalence of glaucoma in 2010 based on prevalence model data.

Source: Quigley HA, Broman AT. The number of people with glaucoma worldwide in 2010 and 2020. Br J Ophthalmol. Mar 2006;90(3):262–267.

Fig. 2. Estimated prevalence of glaucoma – 2010 and 2020.

Source: Adapted from Quigley HA, Broman AT. The number of people with glaucoma worldwide in 2010 and 2020. Br J Ophthalmol. Mar 2006;90(3):262–267.

Previous estimates based on blindness prevalence surveys (Resnikoff et al., 2004) suggested that 12% of world blindness (4.4 million people) was caused by glaucoma. Blindness estimates differ because of methodological issues with prevalence surveys frequently assigning the most “treatable” disease as the primary cause of blindness. As such, cataract is often assumed to be more treatable than glaucoma, which leads to an underestimation of glaucoma blindness. Moreover, these numbers probably underestimated the true glaucoma prevalence because they were based on population-based studies that defined OAG without regard to IOP level and required both disc and field tests showing abnormal results to define glaucoma, as opposed to other definitions that rely mostly on the presence of glaucomatous optic neuropathy (GON). Wolfs et al. evaluated this conservative definition for OAG (Wolfs et al., 2000), and determined that it was likely to specify those with definite disease. In addition, failure to test the visual field can miss up to one third of those with the disease (Tielsch et al., 1991). Disc examination alone is not adequately specific, and studies that use “expert” subjective assessment of disc and field may lack reproducibility (Tielsch et al., 1988; Varma et al., 1992).

Glaucoma is most commonly a disease of the elderly, and its prevalence is likely to continue to increase throughout the world as life expectancy rises. Life expectancy has increased considerably during the last 50 years (by 10 years in the United States (Peters et al., 2013b)) and is expected to increase further. As life expectancy increases, not only will glaucoma prevalence increase, but glaucoma patients will be exposed to the disease for a longer period of time, further increasing the lifetime risk of blindness from glaucoma.

At present, treatment of OAG is directed at IOP lowering, which continues to be the only proven and treatable risk factor for the disease. There are several modalities of treatment for lowering IOP, including medicinal therapy, laser surgery, and incisional surgery. However, lowering of IOP does not halt all cases of progression (AAO, 2010; De Moraes et al., 2012b; Drance et al., 2001; Gordon et al., 2002; Leske et al., 2007; Musch et al., 2009). In some individuals with progression, it is not practical to sufficiently lower the IOP. In other individuals, factors other than IOP alone, or in combination with IOP, may be damaging the optic nerve.

2.1.3. Population-based studies demonstrate high rates of blindness due to glaucoma

2.1.3.1. Study 1: Malmo, Sweden

Population-based studies have provided important information regarding the burdens of glaucoma as a main cause of blindness. Based upon the World Health Organization (WHO) criteria for low vision (0.05 [20/400] ≤ VA < 0.3 [20/60] and/or 10° ≤ central visual field <20°) and blindness (VA < 0.05 [20/400] and/or central visual field <10°), in a study performed in Malmo, Sweden, investigators defined the following four categories of low vision and blindness with glaucoma as the main cause: (1) unilateral low vision: patients with low vision in one eye; (2) bilateral low vision: patients with low vision in the best eye; (3) unilateral blindness: patients blind in one eye; (4) bilateral blindness: patients with both eyes blind, mainly caused by glaucoma in at least one eye (Peters et al., 2013b). The date of the glaucoma diagnosis was set to the date of the first reliable visual field showing a glaucomatous defect. The time for low vision or blindness was the first visit when the standard automated perimetry (SAP) result was centrally constricted to less than 20° or 10°, respectively, or when VA was permanently reduced to below 0.3 (20/60) or 0.05 (20/400), respectively.
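The WHO thresholds quoted above can be read as a simple per-eye decision rule. The following is a minimal sketch of that rule, not the study's actual procedure: the function name `classify_eye` and its parameters are hypothetical, VA is assumed to be given in decimal notation, and the "central visual field" value is assumed to be expressed in the same degrees as the quoted thresholds.

```python
def classify_eye(va_decimal: float, central_field_deg: float) -> str:
    """Classify one eye with the WHO thresholds quoted in the text.

    Hypothetical helper for illustration only.
    Blindness:  VA < 0.05 and/or central visual field < 10 degrees.
    Low vision: 0.05 <= VA < 0.3 and/or 10 <= central visual field < 20 degrees.
    """
    # Check the more severe category first so that it takes precedence.
    if va_decimal < 0.05 or central_field_deg < 10:
        return "blind"
    if va_decimal < 0.3 or central_field_deg < 20:
        return "low vision"
    return "neither"


# Example: good acuity but a field constricted to 15 degrees.
print(classify_eye(va_decimal=1.0, central_field_deg=15))
```

Checking blindness before low vision reflects the "and/or" wording: an eye that meets either blindness criterion is classified as blind even if its other measure falls in the low-vision range.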

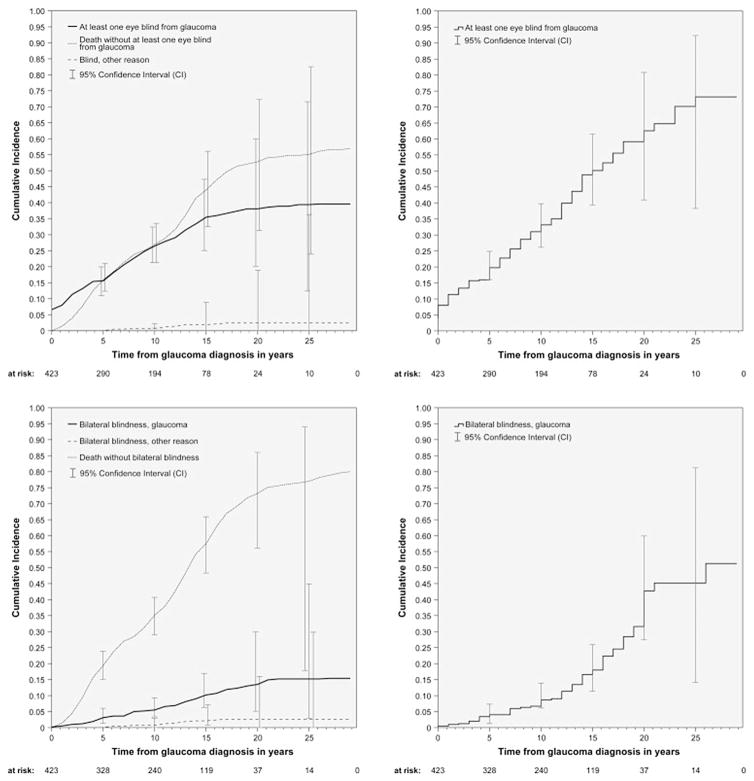

In this study of lifetime risk for blindness, a large proportion of patients (42.2%) were blind from glaucoma in at least one eye at the last hospital or Habilitation and Assistive Technology Service visit, and 16.4% were bilaterally blind from glaucoma. The cumulative risk for unilateral and bilateral blindness from glaucoma was considerable and many blind patients were blind for more than three years. Numbers of patients with low vision and blindness from glaucoma at the last visit are shown in Table 1.

Table 1.

Number of patients with low vision and blindness from glaucoma at last visit where (1) visual field data was available at diagnosis; (2) where visual field data was available from follow-up; and (3) all included patients (Peters et al., 2013b).

| | All patients (n = 592) n (%) | Follow-up Only group (n = 169) n (%) | Data at diagnosis group (n = 423) n (%) |
|---|---|---|---|
| Unilateral low vision | | | |
| OAG | 52 (8.8) | 13 (7.7) | 39 (9.2) |
| Bilateral low vision | | | |
| OAG + OAG | 7 (1.2) | 2 (1.2) | 5 (1.2) |
| OAG + other cause | 5 (0.9) | 1 (0.6) | 4 (0.9) |
| In total | 12 (2.0) | 3 (1.8) | 9 (2.1) |
| Unilateral blindness | | | |
| OAG | 153 (25.8) | 51 (30.2) | 102 (24.1) |
| Bilateral blindness | | | |
| OAG + OAG | 67 (11.3) | 22 (13.0) | 45 (10.6) |
| OAG + other cause | 30 (5.1) | 10 (5.9) | 20 (4.7) |
| In total | 97 (16.4) | 32 (18.9) | 65 (15.4) |

OAG = open-angle glaucoma.

The Data at Diagnosis group represents patients with visual field data available at the time of diagnosis. The Follow-up Only group represents patients diagnosed outside and later referred to the Skåne University Hospital, and for whom the first visual field data were available after the time of diagnosis.

Source: Peters D, Bengtsson B, Heijl A. Lifetime risk of blindness in open-angle glaucoma. Am J Ophthalmol. Oct 2013; 156(4): 724–730.

Other reasons for unilateral blindness were age-related macular degeneration (AMD) (26 patients), a combination of cataract and other disease (10 patients), and other causes (32 patients). Seventeen patients were bilaterally blind because of reasons other than glaucoma (16 from AMD, 1 patient from another cause). A combination of causes for blindness was found in one eye of seven blind patients. In patients who developed blindness attributable to glaucoma, the median time with bilateral blindness was two years (range, 1–13; mean 3.0 ± 3.1). Patients who became bilaterally blind from glaucoma did so at a median age of 86 years (range, 66–98; mean 85.7 ± 6.1). Thirteen patients (13.5% of blind patients and 2.2% of all patients) became blind before the age of 80 years (Peters et al., 2013a, b).

The median duration with diagnosed glaucoma was 12 years (range, 1–29; mean 11.2 ± 6.6), with 74.7% (316 of 423 patients) of patients having their glaucoma diagnosis for more than six years. The cumulative incidence for blindness in at least one eye and bilateral blindness from glaucoma was 26.5% and 5.5%, respectively, at 10 years and 38.1% and 13.5%, respectively, at 20 years after diagnosis (Fig. 3, top left and bottom left). The corresponding cumulative incidence for blindness caused by other reason was 0.7% and 0.7%, respectively, at 10 years and 2.4% and 2.6%, respectively, at 20 years. The Kaplan-Meier estimates for blindness in at least one eye caused by glaucoma were 33.1% at 10 years and 73.2% at 20 years (Fig. 3, top right) and 8.6% at 10 years and 42.7% at 20 years for bilateral blindness from glaucoma (Fig. 3, bottom right) (Peters et al., 2013a, b).

Fig. 3. Cumulative incidence rates for unilateral and bilateral blindness caused by glaucoma.

Source: Peters D, Bengtsson B, Heijl A. Lifetime risk of blindness in open-angle glaucoma. Am J Ophthalmol. Oct 2013;156(4):724–730.

2.1.3.2. Study 2: Olmsted County, Minnesota

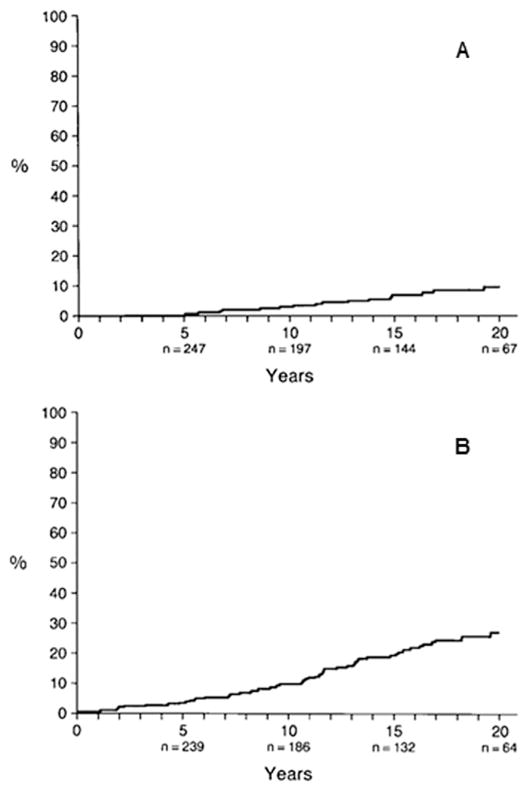

A study conducted in Olmsted County, Minnesota included 295 residents newly diagnosed with and treated for OAG between 1965 and 1980, with a mean follow-up of 15 years (standard deviation ± 8 years) (Hattenhauer et al., 1998). Legal blindness, defined as a best-corrected VA of 20/200 or worse, and/or visual field constricted to 20° or less in its widest diameter with the Goldmann III4e test object or its equivalent on SAP, secondary to glaucomatous loss, was measured. The mean age at diagnosis of OAG was 66 years (±14 years), and the median age was 68 years. Of the 295 patients, 29 (10%) were blind in at least one eye at diagnosis. Five of the 29 patients were bilaterally blind and 24 were unilaterally blind. The cumulative probability of blindness from classic glaucoma (defined as two or more of the following three characteristics: increased IOP, visual field defects, or glaucomatous optic nerves), treated ocular hypertension, and unsupported glaucoma (glaucoma without documented clinical features) at 20 years was estimated to be 9% (95% confidence interval (CI), 5%–14%) for both eyes (Table 2, Fig. 4A) and 27% (95% CI, 20%–33%) in one eye (Table 3, Fig. 4B).

Table 2.

Kaplan-Meier cumulative probability of glaucoma-related blindness at 20 years in both eyes.

| | Blindness by visual acuity and/or visual field (%) | Blindness by visual acuity alone (%) | Blindness by visual field alone (%) |
|---|---|---|---|
| All glaucoma<sup>a</sup> (n = 295) | 9 | 5 | 8 |
| Treated ocular hypertension (n = 191) | 4 | 2 | 4 |
| Classic glaucoma (n = 100) | 22 | 16 | 16 |

<sup>a</sup> Includes treated ocular hypertension, classic glaucoma, and unsupported glaucoma.

Source: Hattenhauer MG, Johnson DH, Ing HH, et al. The probability of blindness from open-angle glaucoma. Ophthalmology. Nov 1998; 105(11):2099–2104.

Fig. 4. Kaplan-Meier cumulative probability of glaucoma-related blindness in both eyes (A) and at least one eye (B).

Source: Hattenhauer MG, Johnson DH, Ing HH, et al. The probability of blindness from open-angle glaucoma. Ophthalmology. Nov 1998;105(11):2099–2104.

Table 3.

Kaplan-Meier cumulative probability of glaucoma-related blindness at 20 years in at least one eye.

| | Blindness by visual acuity and/or visual field (%) | Blindness by visual acuity alone (%) | Blindness by visual field alone (%) |
|---|---|---|---|
| All glaucoma<sup>a</sup> (n = 295) | 27 | 11 | 23 |
| Treated ocular hypertension (n = 191) | 14 | 5 | 11 |
| Classic glaucoma (n = 100) | 54 | 27 | 50 |

<sup>a</sup> Includes treated ocular hypertension, classic glaucoma, and unsupported glaucoma.

Source: Hattenhauer MG, Johnson DH, Ing HH, et al. The probability of blindness from open-angle glaucoma. Ophthalmology. Nov 1998; 105(11):2099–2104.

Analyzed solely by VA criteria, there was a 5% probability of bilateral blindness at 20 years (95% CI, 2%–9%). The probability of bilateral blindness based solely on visual field criteria was 8% at 20 years (95% CI, 4%–13%). Because some patients were diagnosed blind by both VA and visual field criteria, the sum of VA and visual field cumulative probabilities for blindness exceeded 9%. The majority of patients (270 of 295) in the study were diagnosed with primary OAG. The cumulative probability of blindness for this subgroup of patients with OAG was also evaluated. The probability of blindness in both eyes and in at least one eye was calculated to be 9% (95% CI, 4%–11%) and 26% (95% CI, 19%–33%) at 20 years, respectively. There were 21 patients diagnosed with bilateral glaucoma who were unilaterally blind at diagnosis. For the endpoint of blindness in both eyes, there was no significant difference between genders (P = 0.41), but there was a significant effect of older age (P = 0.02). Similarly, for blindness affecting at least one eye, there was no significant difference between genders (P = 0.44) but a significant effect of older age on the risk of becoming blind (P < 0.001).

Blindness occurred more often by visual field criteria than by VA criteria. It was not uncommon for patients to have double arcuate defects but still have 20/20 VA. Because of this, establishing the degree of functional visual impairment from a disease such as OAG can be difficult. Perhaps the most important factor in assessing visual compromise is that of functional visual constraints in the context of activities of daily living (Hattenhauer et al., 1998).

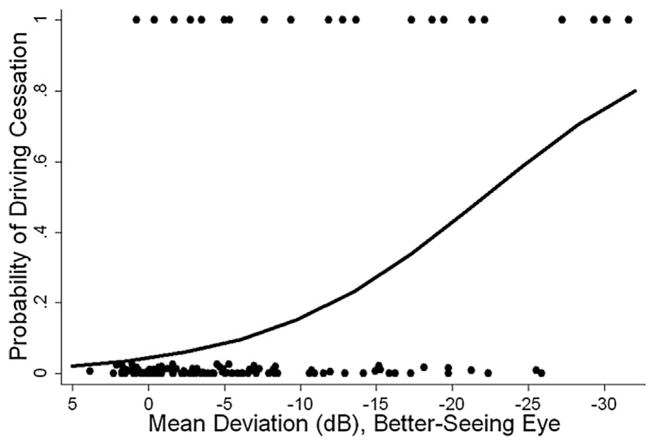

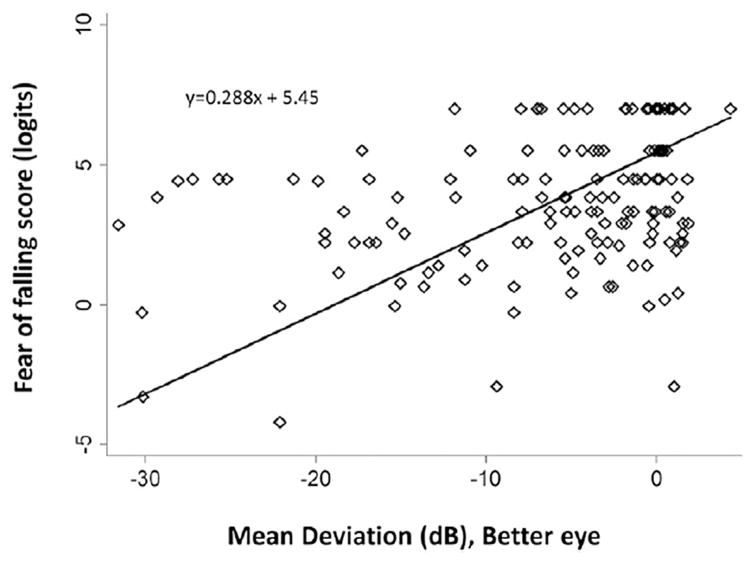

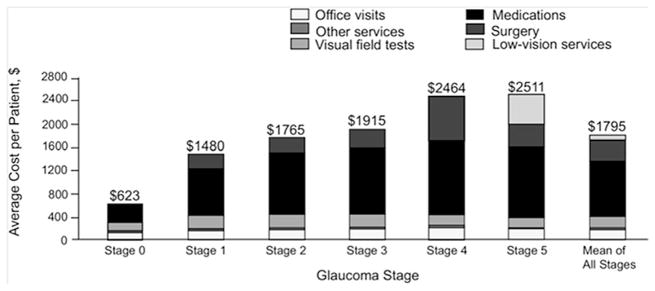

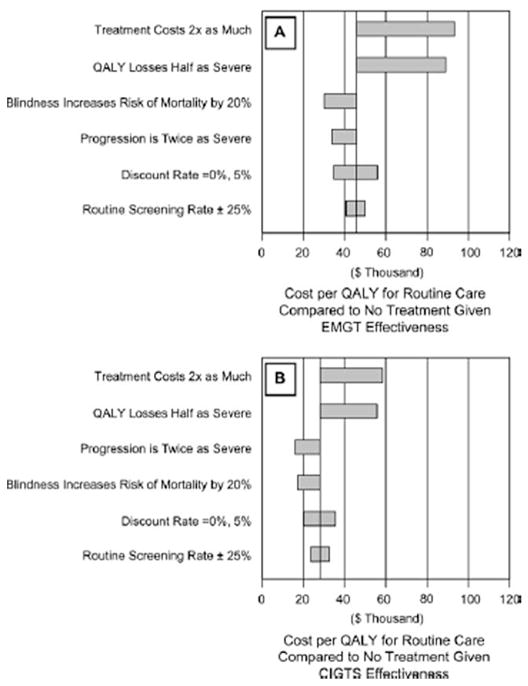

2.1.3.3. Study 3: United Kingdom

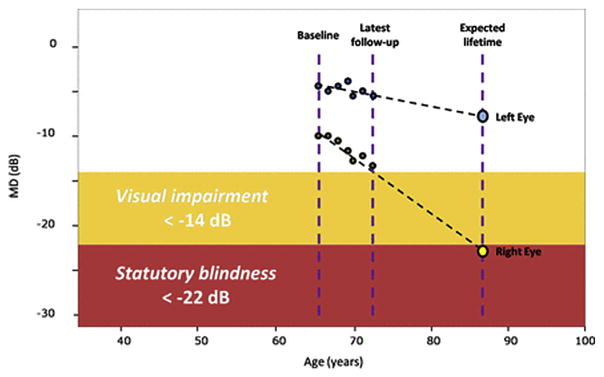

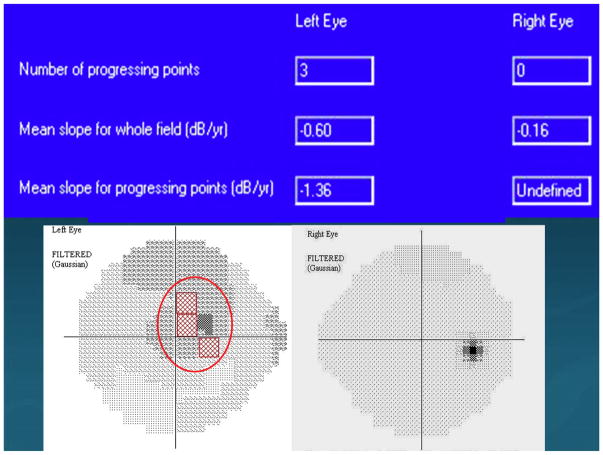

In the United Kingdom, Saunders et al. evaluated the proportion of patients in glaucoma clinics progressing at rates that would result in visual disability within their expected lifetime (Saunders et al., 2014). Based on criteria defined by the US Social Security Administration (SSA) with SAP, a mean deviation (MD) of −14 dB or worse in the better eye was deemed ‘visual impairment’ and an MD of −22 dB or worse in the better eye corresponded to the visual field definition of ‘statutory blindness’ (Fig. 5) (Social Security Administration; Saunders et al., 2014).

Fig. 5. Visual field series from the left and right eyes of a patient demonstrate a linear rate of loss in each eye (dB/year).

Source: Saunders LJ, Russell RA, Kirwan JF, McNaught AI, Crabb DP. Examining visual field loss in patients in glaucoma clinics during their predicted remaining lifetime. Invest Ophthalmol Vis Sci. Jan 2014;55(1):102–109.

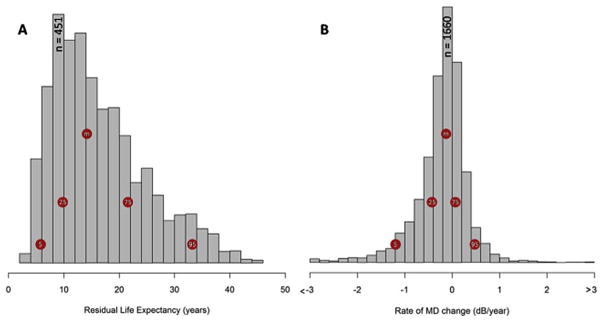

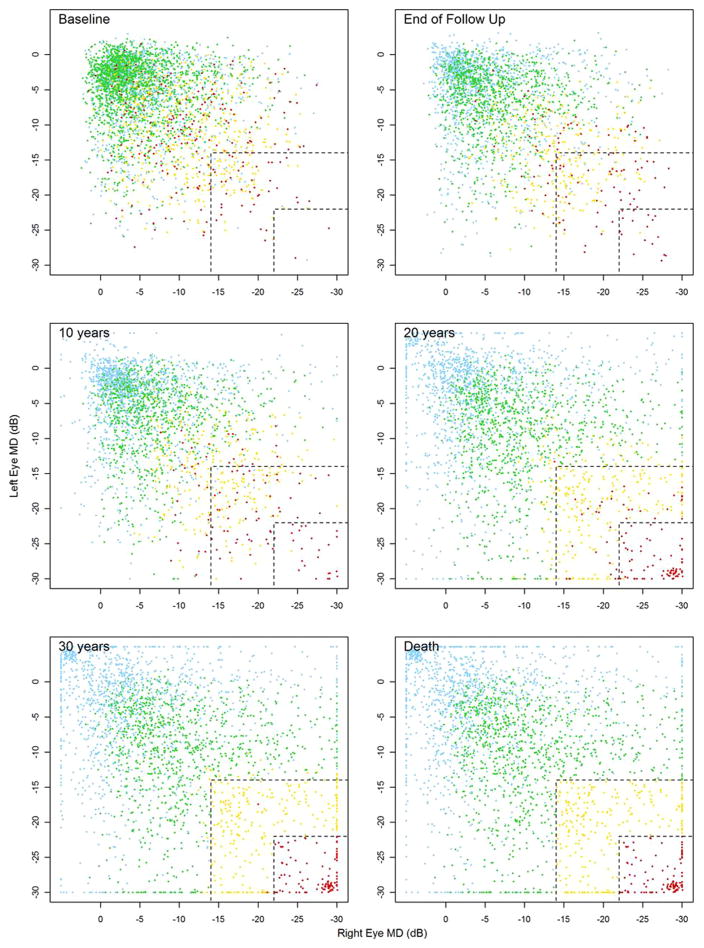

Fig. 6A and B demonstrate the distribution of patient eye follow-up times, patient residual life expectancy, and progression rates in all eyes, respectively. It is apparent from Fig. 6B that the majority (74%–95%) of eyes had a rate of MD change of approximately ±0.5 dB/yr. A smaller proportion of patient eyes progressed at a rate worse than −1.0 dB/yr (6.9%–8.2%) and 3.0% (2.7%–3.4%) of eyes progressed faster than −1.5 dB/yr. Of the 3359 patients with a visual field series from both eyes (Table 4), 5.2% progressed to statutory blindness (both eyes progressing to an MD worse than −22.0 dB) with a further 10.4% progressing to visual impairment (both eyes progressing to an MD worse than −14.0 dB) in their expected residual lifetime (Fig. 7).

Fig. 6. Distribution of patient eye follow-up times, patient residual life expectancies, and progression rates in all eyes.

Source: Saunders LJ, Russell RA, Kirwan JF, McNaught AI, Crabb DP. Examining visual field loss in patients in glaucoma clinics during their predicted remaining lifetime. Invest Ophthalmol Vis Sci. Jan 2014;55(1):102–109.

Table 4.

The proportion of patients likely to suffer VF impairment in the course of their lifetime.

| Visual impairment at death | % No impairment (95% CI<sup>a</sup>) | % Visual impairment (95% CI<sup>a</sup>) | % Statutory blindness (95% CI<sup>a</sup>) |
|---|---|---|---|
| Including patients with a visual field series for each eye only, n = 3359 | 84.4 (83.2–85.6) | 10.4 (9.4–11.4) | 5.2 (4.5–6.0) |
| All patients best-case scenario, n = 3790 | 84.9 (83.7–86.1) | 10.0 (9.0–11.0) | 5.1 (4.3–5.8) |
| All patients worst-case scenario, n = 3790 | 81.5 (80.2–82.8) | 11.5 (10.4–12.5) | 7.1 (6.2–7.9) |

<sup>a</sup> 95% CIs were calculated with the normal approximation of a binomial distribution.

Source: Saunders LJ, Russell RA, Kirwan JF, McNaught AI, Crabb DP. Examining visual field loss in patients in glaucoma clinics during their predicted remaining lifetime. Invest Ophthalmol Vis Sci. Jan 2014; 55(1): 102–109.

Fig. 7. A series of scatterplots showing MD in left (y-axis) and right (x-axis) eyes at baseline, at the end of follow-up, through extrapolating current rates of MD deterioration, after 10, 20, and 30 years of follow-up and at the end of expected lifetime. Both eyes in the plot had to fulfill the original inclusion criteria. The patients are colored according to their visual disability status at expected time of death. Blue represents a patient where at least one of the eyes has a positive slope over time, green represents progression but no significant impairment by the end of the patient’s lifetime, yellow represents degradation to visual impairment (−14 dB or worse in both eyes), and red corresponds to statutory blindness in both eyes (below −22 dB). It is worth noting that most of the red symbols are not found in the top left corner of the baseline plot where both eyes are at an early stage of glaucoma.

Source: Saunders LJ, Russell RA, Kirwan JF, McNaught AI, Crabb DP. Examining visual field loss in patients in glaucoma clinics during their predicted remaining lifetime. Invest Ophthalmol Vis Sci. Jan 2014;55(1):102–109.

When fewer than three VFs were available, the data were analyzed under either the “best-case scenario” (0 dB/yr progression) or the “worst-case scenario” (−1.5 dB/yr progression). The best-case scenario produced results similar to those obtained when only patients with a series for each eye were considered, but under the “worst-case scenario” the proportion of patients at risk of statutory blindness increased to 7.1%, with a further 11.5% at risk of visual impairment (Table 4). When only patients with series in both eyes were considered, 159 of the 175 patients (90.9%; 95% CI, 86.6%–95.1%) who reached statutory blindness had an MD worse than −6 dB in at least one eye at baseline. This MD level is equivalent to what is considered to be at least a “moderate defect” for one criterion of the Hodapp-Parrish-Anderson index (Hodapp et al., 1993). Patients who were predicted to progress to statutory blindness were approximately 70% more likely to have moderate damage (MD worse than −6 dB) in at least one eye at baseline than patients not predicted to progress to this stage (positive likelihood ratio, 1.7; 95% CI, 1.6–1.8). Put differently, 1.1% (CI, 0.6%–1.6%) of the patients who had early visual field defects, with an MD better than −6 dB in both eyes (44% of the study population), progressed to statutory blindness. Strikingly, almost 60% (52.0%–66.4%) of patients progressing to statutory blindness had an MD already worse than −14 dB in at least one eye at baseline.
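The confidence intervals reported for this study are stated to use the normal approximation of a binomial distribution. As a minimal sketch of that calculation (the function name `normal_approx_ci` is hypothetical, and a critical value of 1.96 is assumed for a 95% interval), the interval for the 159 of 175 patients discussed above can be computed as follows.

```python
import math


def normal_approx_ci(successes: int, n: int, z: float = 1.96):
    """Return (proportion, lower, upper) using the normal (Wald) approximation.

    Hypothetical helper for illustration; z = 1.96 is assumed for 95% coverage.
    """
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, p - half_width, p + half_width


# 159 of the 175 patients who reached statutory blindness had an MD worse
# than -6 dB in at least one eye at baseline (figures taken from the text).
p, lower, upper = normal_approx_ci(159, 175)
print(f"{100 * p:.1f}% (95% CI, {100 * lower:.1f}% to {100 * upper:.1f}%)")
```

Applied to 175 of 3359 patients, the same function gives an interval that rounds to the 4.5%–6.0% shown for statutory blindness in Table 4. The normal approximation is simple but can behave poorly for proportions near 0 or 1 or for small samples.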

The main conclusion of this study is therefore that late diagnosis of glaucoma is the most important risk factor for visual impairment and blindness (Saunders et al., 2014).

2.1.4. Glaucoma treatment decreases progression but has risks

Compelling evidence provided by the studies described above supports the notion that a method of assessing risk of progression from ocular hypertension to loss of functional vision for individual patients is needed. Weinreb et al. described the first attempt to reach that aim with the available data and several assumptions regarding disease progression to model the risk of blindness in patients with ocular hypertension (Weinreb et al., 2004). Overall, their calculations suggest that treatment may reduce the risk of progressing from untreated ocular hypertension to blindness by an estimated range of 1.2%–8.1% over 15 years (range of differences in risk of progression between untreated and treated patients according to different models).

How can these calculations affect our decision-making in managing the ocular hypertension patient? One approach to this problem is to determine the number of patients that need to be treated to prevent unilateral blindness in one patient (“number-needed-to-treat” [NNT]). The NNT is calculated with the following formula: 1/(difference in absolute risk between no treatment and treatment groups). Based on their estimates, between 12 and 83 patients with ocular hypertension will require treatment to prevent one patient from progressing to unilateral blindness over a 15-year period (Table 5). Similar models are warranted for patients with established disease at different severity levels.

Table 5.

Estimated risk of progression from ocular hypertension to unilateral blindness in treated patients and number-needed to treat to prevent blindness in one patient over 15 years.

| Data set used in calculation | OHTS (Kass et al., 2002) + St. Lucia (AGIS VF criteria) (Wilson et al., 2002) | OHTS (Kass et al., 2002) + Olmsted data (Hattenhauer et al., 1998) for glaucoma to unilateral blindness | Olmsted data (Hattenhauer et al., 1998) for ocular hypertension to unilateral blindness |
|---|---|---|---|
| Risk of progression, untreated | 1.5% | 2.6% | 10.5% |
| Risk of progression, treated<sup>a</sup> (0.23 × the risk of untreated) | 0.3% | 0.6% | 2.4% |
| Difference between risks | 1.2% | 2.0% | 8.1% |
| Number needed to treat (1/difference) | 83 | 50 | 12 |

AGIS = Advanced Glaucoma Intervention Study; EMGT = Early Manifest Glaucoma Trial; OHTS = Ocular Hypertension Treatment Study; VF = visual field.

<sup>a</sup> Treatment benefits found in OHTS (Gordon et al., 2002) and EMGT (Heijl et al., 2002).

Source: Weinreb RN, Friedman DS, Fechtner RD, et al. Risk assessment in the management of patients with ocular hypertension. Am J Ophthalmol. Sep 2004; 138(3): 458–467.
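The NNT arithmetic in Table 5 can be reproduced directly from the formula 1/(difference in absolute risk). The sketch below is illustrative only: the function name and the short scenario labels are hypothetical, and the risks are the rounded percentages printed in the table, which is why the results match the table's whole-number NNT values.

```python
def number_needed_to_treat(risk_untreated: float, risk_treated: float) -> float:
    """NNT = 1 / (absolute risk reduction); risks are given as fractions."""
    absolute_risk_reduction = risk_untreated - risk_treated
    if absolute_risk_reduction <= 0:
        raise ValueError("treatment must lower the risk for NNT to be defined")
    return 1.0 / absolute_risk_reduction


# (untreated risk, treated risk) over 15 years, as rounded in Table 5.
# The labels are shorthand for the three table columns.
scenarios = {
    "OHTS + St. Lucia": (0.015, 0.003),
    "OHTS + Olmsted (glaucoma to blindness)": (0.026, 0.006),
    "Olmsted (ocular hypertension to blindness)": (0.105, 0.024),
}

for label, (untreated, treated) in scenarios.items():
    nnt = number_needed_to_treat(untreated, treated)
    print(f"{label}: NNT ~ {round(nnt)}")
```

Because NNT is the reciprocal of a small difference, it is sensitive to rounding: using the unrounded treated risk (0.23 × 1.5%) in the first column would give a somewhat larger value than the 83 shown in the table.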

While treatment effectively slows progression, adverse effects of therapy can themselves have effects on HRQOL and visual function.

3. Measurement of visual field progression

3.1. Background

Visual field analysis (perimetry) is a critical part of detecting and following functional loss in patients with glaucoma because VA is usually not affected until late in the disease. Although historically manual methods of perimetry such as tangent screen and Goldmann visual field analysis were used, in the modern era most cooperative patients are followed with automated perimetry with Humphrey, Octopus, or similar devices.

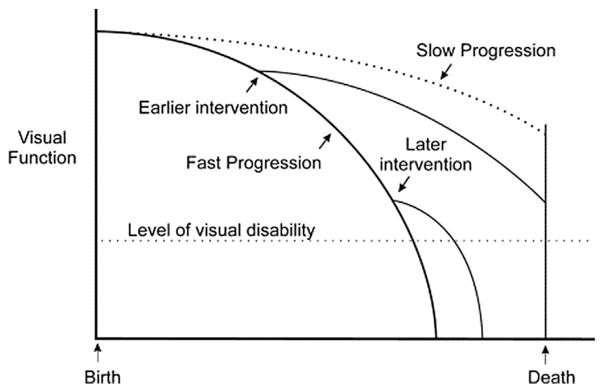

Because glaucoma is a progressive disease, it is critically important to accurately detect and measure visual field progression in individual patients. In recent years, attention has been focused on developing methods to detect early glaucoma damage and progression. However, knowing the rate of disease progression with trend-based analysis in an individual is fundamental to the long-term goal of preservation of vision in patients with glaucoma. The ability to differentiate fast progressors from slow progressors would direct appropriately aggressive treatment to those who are at highest risk for visual disability (Fig. 8).

Fig. 8. Effect of timing of intervention on rate of progression.

Source: Caprioli J. The importance of rates in glaucoma. Am J Ophthalmol. Feb 2008;145(2):191–192.

Tools to measure the rate of functional glaucomatous damage are critically important to patient care and have been a focus of research for more than 30 years. As described in the Introduction, one of our goals with this paper is to show that glaucoma visual field outcomes measured with rates of MD deterioration (dB/year) can be shown to be clinically meaningful even when the length of follow-up is as short as 12–18 months.

There are several issues that can make it difficult to measure visual field progression. As glaucoma becomes advanced, a diffuse (non-localized) component of visual field loss can manifest. In fact, diffuse loss can be present even in early disease as the only sign of visual field damage (Chauhan et al., 1997). Any index that is selectively sensitive to localized visual field defects may therefore underestimate the amount of damage present in advanced disease. The progression of glaucoma may not be linear, and its apparent course depends on the instrument used to assess it. The long-term behavior of a glaucoma progression index or similar indices in a large group of patients will help to determine the most appropriate model to use. A regression analysis of any visual field index requires a sufficiently large number of fields; analyses based on fewer than eight measurements, for example, may be less reliable and less predictive. The signal-to-noise ratio of visual field measurements can be improved by averaging repeated measurements, which reduces the noise and makes the signal more easily detectable (Casas-Llera et al., 2009). There are, of course, practical limits on how many visual field tests a patient can be asked to undergo.
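As an illustration of the trend-based analysis referred to above, the rate of MD change can be estimated as the ordinary least-squares slope of MD against time. The sketch below assumes a simple linear model, which, as noted, may not hold for every patient or instrument; the function name and the example series are hypothetical and are not taken from any of the cited studies.

```python
def md_slope(years, md_values):
    """Ordinary least-squares slope of MD (dB) against time (years), in dB/year."""
    n = len(years)
    if n != len(md_values) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_t = sum(years) / n
    mean_md = sum(md_values) / n
    # Sum of squared deviations of the test dates from their mean.
    sxx = sum((t - mean_t) ** 2 for t in years)
    if sxx == 0:
        raise ValueError("all tests share the same time stamp")
    # Sum of cross-products between test dates and MD values.
    sxy = sum((t - mean_t) * (m - mean_md) for t, m in zip(years, md_values))
    return sxy / sxx


# Hypothetical series: eight visual fields obtained every six months.
test_years = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
test_md = [-2.1, -2.6, -2.2, -3.0, -2.9, -3.6, -3.3, -3.9]
print(f"Estimated rate: {md_slope(test_years, test_md):.2f} dB/year")
```

With only a handful of fields the slope estimate is dominated by test-retest noise, which is why the number and spacing of examinations matter as much as the length of follow-up.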

The rate of glaucoma progression varies across individuals. Since some persons tend to have a low rate of progression, not all of those affected will become visually impaired during their lifetimes. If patients with a near-zero risk of visual impairment could be identified, a source of excess treatment and excess monitoring, with all of its side effects and costs, could be identified. The occurrence of future visual impairment (depicted as a certain amount of visual field loss) can be predicted from the current visual field loss of a patient together with his or her rate of progression (Chauhan et al., 2008; Holmin and Krakau, 1982).
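The prediction described above amounts to a linear extrapolation, which can be sketched as follows. This is a simplified illustration under the assumption of a constant rate of MD change; the function names, the −20 dB impairment threshold, and the example values are hypothetical choices made for the example, not definitions from the cited studies.

```python
def projected_md(current_md, rate_db_per_year, years_ahead):
    """MD (dB) expected after years_ahead, assuming a constant rate of change."""
    return current_md + rate_db_per_year * years_ahead


def years_to_threshold(current_md, rate_db_per_year, threshold_md):
    """Years until MD reaches threshold_md under linear decline.

    Returns 0.0 if the threshold has already been reached and None if the
    field is stable or improving (the threshold is never reached).
    """
    if current_md <= threshold_md:
        return 0.0
    if rate_db_per_year >= 0:
        return None
    return (threshold_md - current_md) / rate_db_per_year


# Hypothetical patient: MD of -6 dB, compared at a slow and a fast rate.
for rate in (-0.4, -1.0):
    years = years_to_threshold(-6.0, rate, threshold_md=-20.0)
    print(f"rate {rate} dB/year: threshold reached in {years:.1f} years")
```

Comparing the projected time to the threshold with the patient's remaining years of life is the step that requires the life expectancy estimate discussed next.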

This seemingly attractive concept has at least two major limitations. First, a reliable measurement of the rate of progression is required. Such a measurement, however, takes at least 5 years if typical clinic-based intervals for visual field measurements are used (Jansonius, 2010). Note that in clinical trials, considerably more visual fields are obtained at shorter intervals to assess progression rates in a shorter time. In the clinic, however, reliable information on visual field progression will not be available at the time of the initial decision-making, and may never become available in the aging patient. Hence, initial decision-making is necessarily based on general knowledge of rates of progression as found in observational clinical studies and trials.

Second, the remaining number of years of life has to be known. This number is usually approximated by the difference between the current age and the median life expectancy at birth, the latter being between 80 and 85 years of age in the western world. This approximation, however, is unsuitable for estimating life expectancy in the elderly, as the median age at death becomes higher with age. Moreover, it does not take into account variability in survival. As a consequence, many glaucoma patients are diagnosed, monitored, and treated at an age considerably beyond the age corresponding to their median life expectancy at birth. To make a proper estimate of the life expectancy of glaucoma patients, life expectancy should be adjusted for the age already reached, a concept known as residual life expectancy. More importantly, an estimate of the upper limit of life expectancy should be taken into account rather than the median residual life expectancy, in order to deal with variability in survival (Wesselink et al., 2011). It is therefore helpful to communicate with the patient’s primary health care provider regarding medical conditions, particularly comorbidities associated with the leading causes of mortality.

3.2. Measuring glaucomatous visual field damage

Glaucoma management aims to preserve the patient’s vision, and by extension, prevent vision-related disability. Tests of vision, such as perimetry, are therefore of considerable clinical importance. Visual field testing aims to locate damaged areas in a patient’s field of vision with automated algorithms that systematically measure the patient’s ability to identify different intensities of light stimuli and hence determine their threshold of contrast sensitivity in the measured “island of vision”.

The most commonly used measurement unit is the decibel (dB), which is 10 times the log of the reciprocal of the light stimulus contrast, as measured in lamberts (L) (i.e., dB = 10 * log10 [1/L]), where 0 dB is defined as the greatest contrast stimulus that the instrument can present, and so varies between manufacturers. The results at each test location can be summarized by global perimetric indices, such as the MD, visual field index (VFI), and pattern standard deviation (PSD) in the Humphrey perimeter (Carl Zeiss Meditec, Inc.). The visual field MD is calculated by averaging the age-corrected threshold sensitivities after adjusting for each test location’s variability and eccentricity. The weighting procedure allots greater weight to points with smaller inter-test variability, which are mainly the most central test locations. The VFI employs pointwise deviations from age-corrected sensitivities that fall outside normal limits (P < 5%), which are calculated as percentages (%) to produce a weighted average also based on eccentricity. The PSD is the standard deviation of the values in the pattern deviation (PD) plot. This plot is derived from the total deviation (TD) plot, which depicts age-corrected threshold sensitivities for all tested locations. The PD plot values are calculated after subtracting the value of the 85th percentile of highest sensitivity deviation from all the values in the TD plot. This procedure minimizes the effect of causes of diffuse sensitivity loss due to cataract and other types of media opacity. The Octopus perimeter (Haag-Streit GmbH) provides global indices that are analogous to the MD and the PSD, namely the mean sensitivity (MS) and loss variance (LV), respectively.
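The decibel conversion and the derivation of pattern deviation values described above can be sketched as follows. This is an illustration under stated assumptions and not the manufacturers' implementation: the function names are hypothetical, the stimulus is expressed relative to the instrument's maximum, the 85th percentile is taken by a simple nearest-rank rule, and the location-specific weighting used by commercial software is not reproduced.

```python
import math


def to_decibels(stimulus):
    """dB = 10 * log10(1 / L), with L relative to the brightest stimulus (L = 1 gives 0 dB)."""
    if stimulus <= 0:
        raise ValueError("stimulus must be positive")
    return 10.0 * math.log10(1.0 / stimulus)


def pattern_deviation(total_deviation):
    """Subtract the 85th-percentile total deviation value from every location.

    Removing this 'general height' term discounts diffuse loss (for example
    from cataract) so that localized defects stand out.
    """
    ordered = sorted(total_deviation)
    # Nearest-rank 85th percentile (an assumption; instruments may differ).
    rank = math.ceil(85 * len(ordered) / 100)
    general_height = ordered[rank - 1]
    return [td - general_height for td in total_deviation]


# Hypothetical total deviation values (dB) with a uniform 3 dB diffuse loss.
td_values = [-3.0, -4.0, -5.0, -13.0]
print(to_decibels(0.001))            # a stimulus 1000 times dimmer than maximum
print(pattern_deviation(td_values))  # diffuse component removed
```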

3.3. Effects of visual field severity and population aging on measurements of glaucoma progression

The interpretation of results from standard automated perimetry (SAP) is challenging because visual field measurements are variable, as revealed by psychophysical experiments with frequency-of-seeing procedures (Chauhan et al., 1993; Spry et al., 2001) and test-retest clinical studies (Heijl et al., 1989; Piltz and Starita, 1990). Variability of SAP measurements requires frequent monitoring and/or a long period of time to accurately detect true disease progression (Gardiner and Crabb, 2002; Spry et al., 2002).

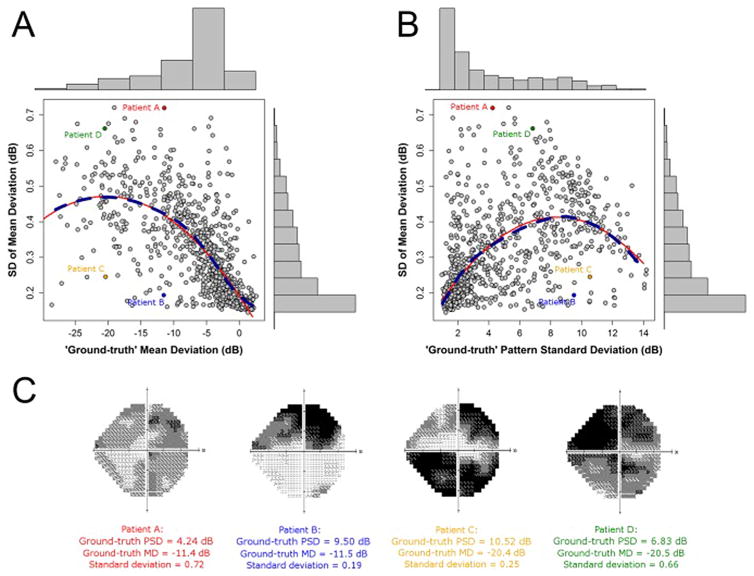

Studies that investigated the relationship between MD variability, the levels of MD and PSD, and the pattern of point-wise visual field damage showed that MD variability is a function of the absolute level of MD and PSD (Administration; Hodapp et al., 1993; Saunders et al., 2014). The dashed dark blue lines in Fig. 9 indicate the locally weighted polynomial regression, which gives an indication of how variability changes, on average, with the change in level of MD. The red lines, on the other hand, illustrate the results of fitting a second order model with a quadratic predictor (Russell et al., 2013).

Fig. 9. Variability in Mean Deviation.

Source: Russell RA, Garway-Heath DF, Crabb DP. New insights into measurement variability in glaucomatous visual fields from computer modelling. PloS ONE. 2013;8(12):e83595.

Fig. 9A suggests that variability tends to increase as the level of MD worsens, with some evidence that variability peaks around −20 dB. Fig. 9B suggests that variability tends to increase as the level of PSD increases, with some evidence that variability peaks around 8 dB of the PSD. For visual field loss associated with early glaucoma, where a significant amount of MD loss is defined as approximately −2 dB, variability is half that observed when MD is equal to −10 dB, which corresponds to the visual field loss associated with more severe glaucoma.

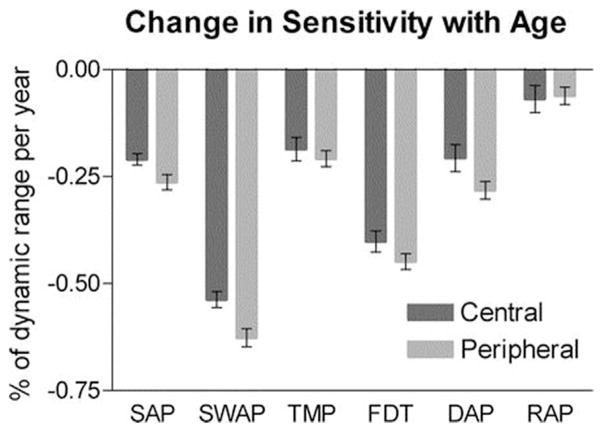

It has now been well-established that most visual functions decline with age both for the fovea and the periphery (Strasburger et al., 2011), and it is common practice to take this into account when assessing function in pathologic eyes, particularly in cases of glaucomatous loss. However, the relative rates of decline of different functions are unclear. For example, it has been suggested that processes mediated by magnocellular pathways, which have been reported as being responsible for the frequency-doubling illusion, are more affected by age than those mediated by parvocellular pathways (Steinman et al., 1994). For achromatic SAP, Heijl et al. estimated the rate of age-related loss as being 0.6–0.7 dB per decade and greater peripherally than centrally (Heijl et al., 1987). Wild et al. estimated loss as being 0.7 dB per decade for SAP and 1.96 dB per decade for blue-on-yellow short wavelength automated perimetry (SWAP) (Wild et al., 1998). Johnson et al. found that the reduction in sensitivity of short wavelength-sensitive cone pathways (such as those stimulated in SWAP) resulting from aging was greater than that for longer wavelength-sensitive cone pathways (Johnson et al., 1988). For frequency doubling technology (FDT) perimetry, Adams et al. estimated loss resulting from aging as being approximately linear at 0.6 dB per decade up to the age of 70 and greater after that age (Adams et al., 1999). Demirel et al. reported an age-related decline in detection acuity perimetry (DAP) of 0.04–0.05 logarithm of the minimum angle of resolution (logMAR) depending on eccentricity and of 0.03 logMAR for resolution acuity perimetry (RAP) (Demirel et al., 2012). Care must be taken when comparing these results directly because of the different scales on which results are measured with each test and the differing dynamic ranges of the instruments (Gardiner et al., 2006).

Vision function tests provide a tool to quantify progression as a function of age. Fig. 10 shows the slope of a linear regression of sensitivity against age averaged over all locations in the central and peripheral regions. The error bars represent the 95% CI for the mean. The aging effect is seen to be greatest for SWAP and FDT, least for RAP, and approximately equal for the other tests. Aging had a slightly greater effect peripherally than centrally, and this was statistically significant for SAP, SWAP, and FDT (Gardiner et al., 2006). Therefore, aging affects subject performance in visual field tests, namely achromatic SAP (more commonly used to define glaucoma and its severity), leading to worse threshold sensitivities resulting in greater variability, as described above.

Fig. 10. Slope of a linear regression of sensitivity against age averaged over all locations in the central and peripheral regions.

Source: Gardiner SK, Johnson CA, Spry PG. Normal age-related sensitivity loss for a variety of visual functions throughout the visual field. Optom Vis Sci. Jul 2006;83(7):438–443.

3.4. Effects of IOP lowering on visual field progression

With regard to the previously mentioned issues relating IOP and visual field deterioration, RCTs in glaucoma have provided invaluable information regarding the role of IOP, treatment modalities, and risk factors for disease onset and progression (Table 6 and Table 7). The discussion below will show how the findings of RCTs with regard to IOP treatment effects and rates of MD progression can be considered with respect to trend analysis.

Table 6.

Major glaucoma studies – objectives and results.

| Study | Aim | Result |

|---|---|---|

| Ocular Hypertension Treatment Study (Gordon et al., 2002; Kass et al., 2002) | Efficacy and safety of topical ocular medications in preventing or delaying the development of POAG in individuals with raised IOP (1636 patients) | With mean IOP-lowering of 22.5%, the probability of developing glaucomatous change (optic disc or field change) was 4.4% in the medication group and 9.5% in the observation group at 60 months. Baseline age, vertical cup disc ratio, visual field abnormalities, and IOP were good predictors of progression. Corneal thickness was a powerful predictor of progression |

| Glaucoma Laser Trial (GLT, 1995) | Efficacy and safety of argon laser trabeculoplasty or medicine as initial treatment in POAG (271 patients) | Eyes treated with laser trabeculoplasty had slightly reduced IOP (1.2 mm Hg) and improved visual field (0.6 dB) after median follow-up of 7 years |

| Collaborative Initial Glaucoma Treatment Study (Lichter et al., 2001) | Effects of randomizing patients to either initial medical or surgical treatment (607 patients) | Surgery lowered the IOP more than medical treatment (average during follow-up 14–15 mm Hg vs 17–18 mm Hg), but with no statistical difference in visual field progression over 5 years |

| Early Manifest Glaucoma Trial (Heijl et al., 2002; Leske et al., 2003) | Effects of treatment with a topical β blocker and laser trabeculoplasty versus observation in patients with newly detected POAG (255 patients) | Progression was less frequent in the treatment group (45% vs 62%) with median follow-up of 6 years. Other important predictors of glaucoma progression included lens exfoliation, bilateral glaucoma, IOP >21 mm Hg, more advanced visual field loss, disc hemorrhages, and age ≥ 68 years |

| Collaborative Normal Tension Glaucoma Study (CNTGS, 1998a) | Effect of pressure lowering (30%) on optic nerve damage and field loss in normal tension glaucoma (140 patients) | Only 12% of treated patients progressed (optic disc and visual field progression) compared with 35% in the untreated group |

| Advanced Glaucoma Intervention Study (AGIS, 2000) | Effect of treatment sequences of laser trabeculoplasty and trabeculectomy (surgery) in advanced glaucoma (776 eyes of 581 patients) | Outcome depended on race. In patients who had laser trabeculoplasty first, black patients were at a lower risk than white patients of failure. In patients who received surgery first, black patients were at a higher risk of first failure than white patients. Patients with lower IOP had less progression |

| United Kingdom Glaucoma Treatment Study (Garway-Heath et al., 2015) | Effect of treatment on vision preservation in patients given latanoprost (258 patients) compared with those given placebo (258 patients) | Visual field preservation was significantly longer in the latanoprost group than in the placebo group: adjusted hazard ratio (HR) 0.44 (95% CI 0.28–0.69) |

POAG = primary open-angle glaucoma. IOP = intraocular pressure.

Source: Modified from Weinreb RN, Khaw PT. Primary open-angle glaucoma. Lancet. May 22 2004; 363(9422):1711–1720.

Table 7.

Major progression studies and outcomes in treated and untreated patients.

| Author(s) (Study) | No. of patients and study designᵃ | Disease and criteriaᵃ | Definition of failure/end point | Mean study length | Progression of treated patients | Progression of untreated patients | Comments |

|---|---|---|---|---|---|---|---|

| Kass et al. (Kass et al., 2002) (OHTS) | 1636; prospective, randomized to no treatment or medication (20% reduction in IOP; ≤24) | Ocular hypertension: IOP 24–32 in one eye and 21–32 in the other eye; no detectable glaucomatous damage | Reproducible VF abnormalities or disc deterioration | 72 months (median) | At 60 months, 4.4% cumulative probability of developing OAG | At 60 months, 9.5% cumulative probability of developing OAG | |

| Hattenhauer et al. (Hattenhauer et al., 1998) and Oliver et al. (Oliver et al., 2002) (Olmsted) | 295; retrospective | 3 groups treated pts; (1) 114 OAG (2 of 3 criteria: IOP ≥21, ON or VF defects); (2) 177 ocular hypertension (treated IOP ≥21); (3) 4 undocumented OAG | Legal blindness | 15 years ± 8 years | 9% overall probability of bilateral blindness at 20 years (4% for ocular hypertension; 22% for OAG); 27% probability of unilateral blindness | No untreated patient | Various VF evaluation techniques; IOP variability while on therapy a risk factor for progression |

| Wilson et al. (Wilson et al., 2002) (St. Lucia) | 205; follow-up; untreated pts | OAG and suspects with either elevated IOP, abnormal optic discs and/or VFs | VF loss | 10 years | | 55% of 146 right eyes and 52% of 141 left eyes showed VF progression; probability of blindness in 10 years = 16% | All study participants were black |

| AGIS Investigators (AGIS, 2002) | 789 eyes of 591 pts; prospective randomized to 1 of 2 surgical sequences, ATT or TAT | Advanced POAG (some field loss and inadequately controlled with maximal medical therapy); one of 9 combinations of IOP, VF, and ON defect | Sustained reduction of VF or VA; these outcomes were specifically defined in the methods | 8 to 13 years; 10.8 years (median) | 30% of eyes had sustained VF loss (10-year probability: ATT = 0.39, TAT = 0.33); 30% had VA loss (10-year probability: ATT = 0.32, TAT = 0.41) | No untreated | At least one intervention in all eyes |

| Lichter et al. (Lichter et al., 2001) (CIGTS) | 607; prospective randomized to medical therapy or trabeculectomy | Newly diagnosed OAG and 1 of 3 combinations of qualifying criteria: IOP, VF, and disc findings | Primary outcome was VF loss; secondary outcomes were VA, IOP, cataract | 60 months | Medical therapy: substantial VF loss in 10.7%; VA loss in 3.9%. Surgical therapy: VF loss in 13.5%; VA loss in 7.2% | No untreated | |

| Heijl et al. (Heijl et al., 2002) (EMGT) | 255; prospective, randomized; laser trabeculoplasty plus betaxolol or no treatment | Early OAG; visual defects; median IOP = 20 | VF and ON outcomes | 6 years (median); 51–102 months | 58/129 (45%) progressed; progression delayed compared with controls | 78/126 (62%) progressed | |

| Garway-Heath et al. (UKGTS) | 516; prospective randomized to latanoprost or placebo. | Newly diagnosed OAG; disc, IOP, and VF | Repeatable VF loss | 12–24 months | 35/231 (15%) at 24 months | 59/230 (25%) at 24 months | Treatment differences were significant at 12 and 18 months |

AGIS = Advanced Glaucoma Intervention Study; ATT = argon laser trabeculoplasty (ALT)-trabeculectomy-trabeculectomy; CIGTS = Collaborative Initial Glaucoma Treatment Study; EMGT = Early Manifest Glaucoma Trial; IOP = intraocular pressure; OAG = open-angle glaucoma; OHTS = Ocular Hypertension Treatment Study; ON = optic nerve; POAG = primary open-angle glaucoma; pts = patients; TAT = trabeculectomy-ALT-trabeculectomy; VA = visual acuity; VF = visual field.

ᵃ Units of measure for IOP are mm Hg.

Source: Modified from Weinreb RN, Friedman DS, Fechtner RD, et al. Risk assessment in the management of patients with ocular hypertension. Am J Ophthalmol. Sep 2004; 138(3): 458–467.

3.5. Randomized clinical trials and glaucoma progression

3.5.1. Early Manifest Glaucoma Trial (EMGT)

The Early Manifest Glaucoma Trial (EMGT) randomized patients with newly diagnosed glaucoma to treatment and observation arms, confirming the important effect of baseline IOP levels and IOP-lowering on progression (Leske et al., 2007). In addition, lower CCT, presence of exfoliation syndrome, and older age were related to progression. The updated analyses of the trial also suggest a potential role for vascular factors on progression, given the positive associations with low ocular systolic perfusion pressure, low systolic blood pressure, and cardiovascular disease history.

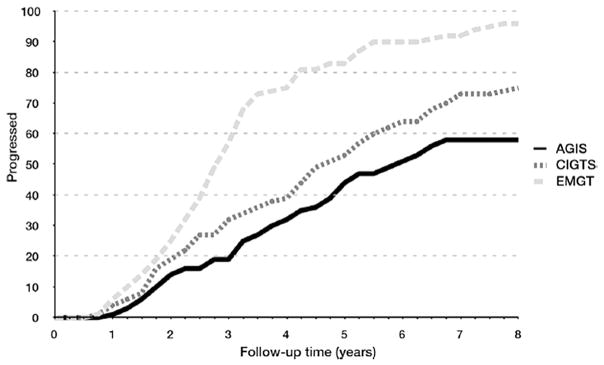

The effects of EMGT treatment were maintained over time and continued to suggest an approximate halving of risk (and an 18-month delay in progression) even after longer follow-up. Of note, 59% of treated versus 76% of untreated patients progressed based on SAP after a median of eight years of follow-up, despite an average IOP reduction of 25% in the treatment group (Leske et al., 2007). The hazard ratio (HR) for baseline IOP was 1.77, revealing that for each mm Hg higher baseline IOP the risk of progression increased by 77% on average. For follow-up IOP, each 1 mm Hg lower pressure decreased the risk by 10%.

These findings demonstrate the value of baseline IOP as a risk factor, as well as the effect of IOP-lowering treatment. When examining the role of follow-up IOP in patients with higher vs. lower baseline IOP, a similar magnitude of effects was observed. These results confirm the importance of IOP lowering in patient management, regardless of the IOP levels at baseline. In addition, progression was considerably and significantly faster in older than in younger patients (P = 0.002).

The frequency of disc hemorrhages at follow-up was also a significant risk factor for progression among all patients which, along with the blood pressure findings, suggests that vascular abnormalities may be an additional variable leading to continued progression independent of IOP reduction.

Given the high proportion of progressing patients despite therapy (almost 60%), there is an unmet need for additional modalities of glaucoma therapy, such as those targeting IOP-independent factors. When assessing the rate of visual field loss with the MD, the median and interquartile rates of visual function loss in the observation group of the EMGT were −0.40 (1.05) dB/year. Thus, inter-patient variability was large. Mean rates were considerably higher than medians: −1.08 dB/year. In the treated group, the median MD rate was reduced by approximately 50% (Heijl et al., 2002, 2009). This can be translated into an average decrease of median MD rate of progression from −0.40 to −0.20 dB/year in treated patients. The mean change in visual field loss from baseline to the end of follow-up, as expressed by the MD, was a worsening by 2.24 dB in the treatment group and 3.90 dB in the observation group. A separate analysis has shown that the amount of visual field deterioration needed to reach EMGT-defined visual field progression (3 locations) is associated with a worsening in MD by 2.26 dB (Heijl et al., 2002). For 5 locations, the MD worsening should be approximately 3.7 dB.

3.5.1.1. Effect of IOP lowering on progression

Since the average IOP reduction was 25% (from 20.6 to 15.45 mm Hg), a treatment effect of approximately 5.1 mm Hg was associated with a 50% reduction in rates of progression. Assuming a linear relationship, this translates to a treatment effect of approximately 3 mm Hg to decelerate the rate of MD progression by 30% (Heijl et al., 2009) (see also the 3 points in the Introduction). The EMGT investigators also showed that the median change in number of significant test locations (defined at P < 0.5% in the pattern deviation map) was 0.06 per month in the observation arm (Heijl et al., 2002). This translates into a median change of 5 significant test locations in approximately 6.9 years. More importantly, the study showed that visual function as measured by SAP affected vision-targeted QOL up to six years after study enrollment, as measured with HRQOL questionnaires throughout the trial (Hyman et al., 2005).
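The linear scaling used above can be made explicit with a short calculation. The sketch below is only as valid as the assumption of proportionality between IOP reduction and slowing of MD progression; the function name is hypothetical, and the IOP reduction is taken as the difference between the two pressures quoted above (20.6 and 15.45 mm Hg).

```python
def iop_reduction_for_target(baseline_iop, treated_iop,
                             observed_rate_reduction, target_rate_reduction):
    """IOP lowering (mm Hg) expected to achieve a target slowing of MD progression.

    Assumes the slowing of progression is proportional to the IOP reduction,
    so the observed effect is simply rescaled to the target.
    """
    observed_iop_drop = baseline_iop - treated_iop
    return observed_iop_drop * target_rate_reduction / observed_rate_reduction


# EMGT figures quoted in the text: 20.6 -> 15.45 mm Hg with a 50% slower MD rate.
needed = iop_reduction_for_target(20.6, 15.45, 0.50, 0.30)
print(f"IOP lowering for a 30% slower rate: about {needed:.1f} mm Hg")
```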

Not all test locations in the 24-2 visual field progress similarly. In fact, they progress at highly variable rates depending on multiple factors, including eccentricity (Fig. 10) and depth of the defect (e.g., floor effect; see also Section 3.1 below). Nonetheless, if the MD rate of progression is given as X dB/yr, a simplifying assumption is that on average each test location progresses at X dB/yr. Therefore, based upon the EMGT data described above, a clinically meaningful definition of visual field progression according to the FDA would translate into approximately 3.7 dB/6.9 years = 0.53 dB/yr in terms of pointwise rates of progression.
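The two steps of this conversion, from locations per month to years and from MD worsening to a yearly rate, can be reproduced as follows. The function names are hypothetical; the inputs are the EMGT values quoted above (0.06 significant locations per month, 5 locations, 3.7 dB), and the result inherits the simplifying assumption that every location progresses at the MD rate.

```python
def years_to_n_locations(n_locations, locations_per_month):
    """Years needed to accumulate n significant test locations at a constant monthly rate."""
    return n_locations / locations_per_month / 12.0


def rate_db_per_year(md_worsening_db, years):
    """Average rate of worsening (dB/year) over the given period."""
    return md_worsening_db / years


years = years_to_n_locations(5, 0.06)   # roughly 6.9 years
rate = rate_db_per_year(3.7, years)     # roughly 0.53 dB/year
print(f"{years:.1f} years, {rate:.2f} dB/year")
```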

3.5.2. Collaborative Initial Glaucoma Treatment Study (CIGTS)

The Collaborative Initial Glaucoma Treatment Study (CIGTS) compared visual field outcomes between patients randomized to filtering surgery (trabeculectomy) versus medical therapy (Musch et al., 2009).

Patients who underwent trabeculectomy maintained the same average visual field scores over the 5-year follow-up. The results showed that initial surgery resulted in a 0.36 unit worse visual field score than initial medical treatment (P = 0.03); however, when the influence of cataract extraction on visual field data was included in the model, the difference decreased to 0.28 units and was only marginally significant (P = 0.07) (Lichter et al., 2001). The rate of significant visual field loss, defined as a 3-unit increase in visual field score, at five years was similar in both treatment groups. Almost 11% of medically treated and 14% of surgically treated patients had significant visual field progression during their follow-up.

3.5.2.1. Translating IOP lowering into effect on progression

Given the use of a visual field score system that prevents the determination of rates of MD change, a comparison between IOP-treatment effects and changes in rate of progression cannot be objectively calculated.

Predictors of significant visual field loss in both groups were time in study, increasing age, diabetes, nonwhite race, and cataract development (Musch et al., 2009). Regarding HRQOL parameters, patients assigned to trabeculectomy suffered, on average, a 3-letter loss of vision on the Early Treatment Diabetic Retinopathy Study (ETDRS) chart after initial treatment, whereas medically treated eyes had stable VA during the first year. Vision decreased in both groups after the first year and average VA was similar in both treatment arms at the 4-year follow-up. Over the 5-year follow-up period, 3.9% of medically treated patients developed a 15-letter or greater loss in VA compared with 7.2% of patients who were surgically treated. Major predictors of 15 + letters of visual loss were initial surgical treatment, cataract development, nonwhite race, diabetes, and worse baseline vision.

The local eye symptoms subscale of the symptom and health problem checklist asked questions about eye irritation, foreign body sensation, eye pain, red eye, tearing, skin sensitivity, and ptosis. At the 12-month visit, patients in the trabeculectomy group reported significantly worse scores in all categories. At 5 years, scores were still higher in all categories in patients who underwent trabeculectomy but were only statistically significant for eye irritation and ptosis. The visual function subscale asked about blurred vision, difficulty with bright lights, visual problems with steps, and visual distortion. Surgically treated patients reported more difficulty with almost every symptom at every time point. The differences were found to be statistically significantly different with blurred vision, difficulty with bright lights, and visual problems with steps at the 12-month time point.

3.5.3. Ocular Hypertension Treatment Study (OHTS)

The Ocular Hypertension Treatment Study (OHTS) compared the effects of IOP-lowering therapy on the risk of OAG development among subjects with statistically high IOP despite normal visual field results and optic nerve head evaluation (Gordon et al., 2002).

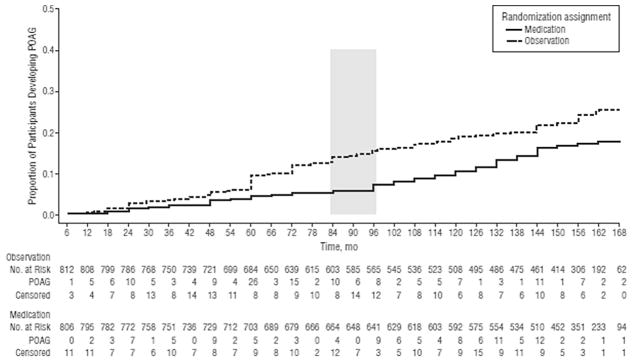

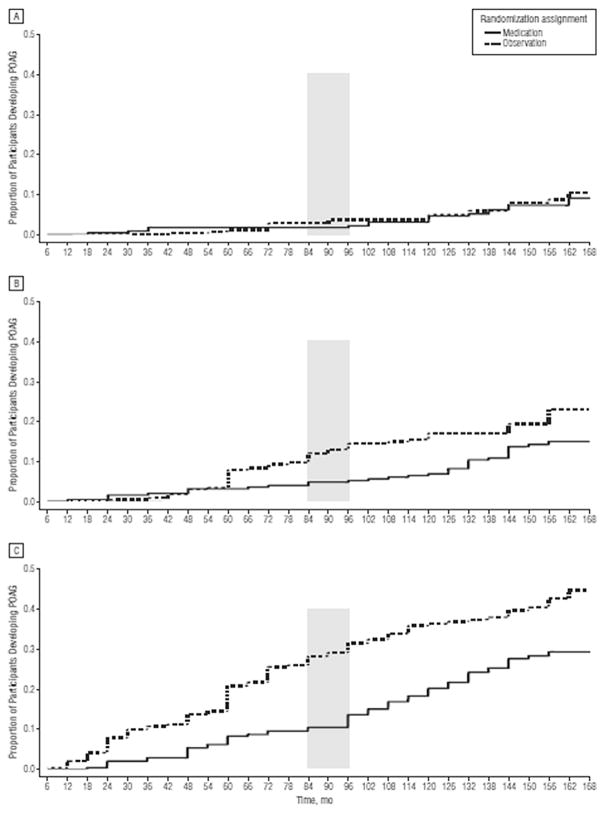

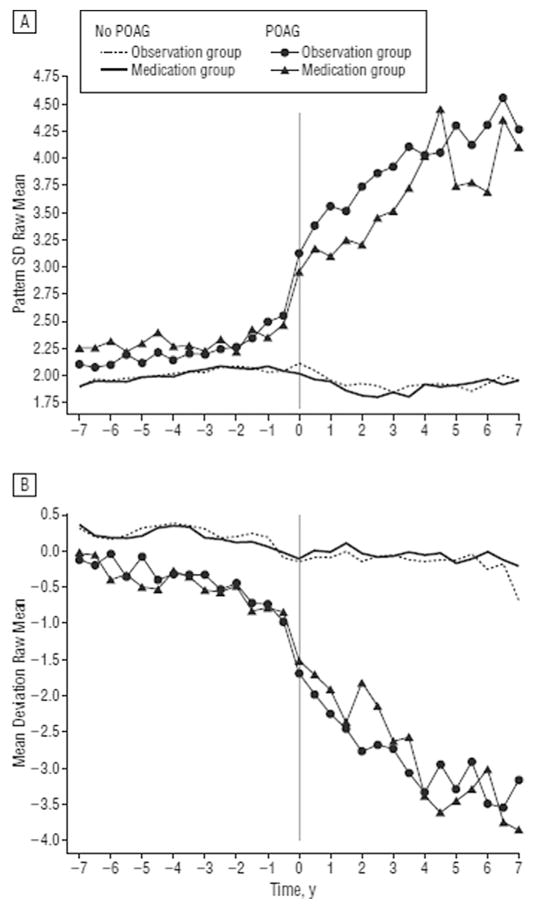

The cumulative proportion of participants in the original observation group who developed POAG at 13 years was 0.22 (95% CI, 0.19–0.25), versus 0.16 (95% CI, 0.13–0.19) in the original medication group (P = 0.009). Among participants at the highest third of baseline risk of developing OAG, the cumulative proportion who developed POAG was 0.40 (95% CI, 0.33–0.46) in the original observation group and 0.28 (95% CI, 0.22–0.34) in the original medication group (Figs. 11 and 12) (Kass et al., 2010). Other issues of risk calculation in the OHTS are discussed in detail in a separate publication (Gordon et al., 2007).

Fig. 11. Survival plot of the cumulative probability of developing primary open-angle glaucoma (POAG) in the Ocular Hypertension Treatment Study over the entire course of the study (February 1994 to March 2009) by randomized group. The number of participants at risk was those who had not developed POAG at the beginning of each 6-month period. Participants who did not develop POAG and withdrew before the end of the study were censored from their last completed visit. Participants who did not develop POAG and died were censored at their date of death. The shaded column indicates initiation of medication in the original observation group.

Source: Kass MA, Gordon MO, Gao F, et al. Delaying treatment of ocular hypertension: the Ocular Hypertension Treatment Study. Arch Ophthalmol. Mar 2010;128(3):276–287.

Fig. 12. Survival plot of the cumulative probability of developing primary open-angle glaucoma (POAG) in the Ocular Hypertension Treatment Study during the entire course of the study by randomized group for participants with the lowest tertile (<6.0%) (A), middle tertile (6.0%–13%) (B), and highest tertile (>13%) (C) of baseline predicted 5-year risk of POAG. Participants who did not develop POAG and withdrew before the end of the study were censored from the interval of their last completed visit. Participants who did not develop POAG and died were censored at their date of death. The shaded column indicates initiation of medication in the original observation group.

Source: Kass MA, Gordon MO, Gao F, et al. Delaying treatment of ocular hypertension: the Ocular Hypertension Treatment Study. Arch Ophthalmol. Mar 2010;128(3):276–287.

These findings suggest that individuals at high risk of developing OAG would benefit from more frequent examinations and early preventive treatment. When looking at the rates of visual field change, the average MD progression rate was −0.08 ± 0.20 dB/yr (±SD) during the trial. Eyes that converted to OAG (n = 359) had significantly worse MD progression rates (−0.26 ± 0.36 dB/yr) than non-POAG eyes (n = 2250; −0.05 ± 0.14 dB/yr; P < 0.001, Fig. 13). Eyes that reached POAG endpoints based on only visual field change (n = 74; −0.29 ± 0.31 dB/yr) or only optic disc change (n = 158; −0.12 ± 0.19 dB/yr) had significantly worse MD progression rates than non-POAG eyes (both P < 0.001). Eyes that reached POAG endpoints for both visual field and optic disc change (n = 127) deteriorated more rapidly (−0.42 ± 0.46 dB/yr) than eyes showing only VF change (P = 0.017) or only optic disc change (P < 0.001) (Demirel et al., 2012).
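The subgroup rates reported above can be checked for internal consistency, since the mean rate of a pooled group is the size-weighted average of its subgroup means. The sketch below uses only the sample sizes and mean rates quoted in the text; the function name is hypothetical, and the check says nothing about the standard deviations or P values.

```python
def pooled_mean(groups):
    """Size-weighted mean of subgroup means; groups is a list of (n, mean) pairs."""
    total_n = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total_n


# Eyes that converted to OAG, split by the type of endpoint reached (n, dB/yr).
converters = [(74, -0.29), (158, -0.12), (127, -0.42)]
print(f"All converters: {pooled_mean(converters):.2f} dB/yr")

# Converters and non-converters combined.
all_eyes = [(359, -0.26), (2250, -0.05)]
print(f"All eyes: {pooled_mean(all_eyes):.2f} dB/yr")
```

Both pooled values agree, after rounding, with the figures of −0.26 dB/yr for converting eyes and −0.08 dB/yr for the whole cohort.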

Fig. 13. Survival plot of the cumulative probability of developing primary open-angle glaucoma (POAG) in the Ocular Hypertension Treatment Study during the entire course of the study by randomized group for participants with the lowest tertile (<6.0%) (A), middle tertile (6.0%–13%) (B), and highest tertile (>13%) (C) of baseline predicted 5-year risk of POAG. Participants who did not develop POAG and withdrew before the end of the study were censored from the interval of their last completed visit. Participants who did not develop POAG and died were censored at their date of death. The shaded column indicates initiation of medication in the original observation group.

Source: Kass MA, Gordon MO, Gao F, et al. Delaying treatment of ocular hypertension: the Ocular Hypertension Treatment Study. Arch Ophthalmol. Mar 2010;128(3):276–287.

3.5.4. Advanced Glaucoma Intervention Study (AGIS)

The Advanced Glaucoma Intervention Study (AGIS) investigated the efficacy of two treatment modalities in patients with severe disease.

Besides the key findings described in numerous reports (AGIS, 2000; Nouri-Mahdavi et al., 2004a, 2004b), the AGIS investigators presented for the first time an analysis of rates of visual field change (trend-based analysis) in a cohort from an RCT. In one of their reports, a total of 161 eyes (of 161 patients) were evaluated. In 64 (40%) eyes, the visual field had worsened after 8 years according to their trend-based progression criteria, which were based on pointwise rates.

The following variables were found to be significantly different between the progressing and non-progressing groups on univariate analysis: sum of pointwise slopes of sensitivity change during the first 4 years (P < 0.001), age (P < 0.001), and disease severity measured with the AGIS scores (P = 0.001). Neither average IOP nor IOP fluctuation during the first 4 years of follow-up was significantly different between the progressing and non-progressing eyes. On multivariate analysis, the following two variables were associated with fast visual field progression: a more negative sum of slopes during the first 4 years (P < 0.001) and older age (P = 0.049) (Nouri-Mahdavi et al., 2004a, 2004b).

This finding illustrates how measured rates of visual field change in a given period can be predictive of future functional outcomes. Eyes progressing at faster rates are more likely to reach progression endpoints during further follow-up. Hence, assessment of patients previously progressing at faster rates (or at high risk of progression) may be helpful in designing studies investigating IOP-lowering and non-IOP-lowering therapies within relatively short periods of follow-up.
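To make the predictor discussed above concrete, the following sketch computes a sum of pointwise slopes: an ordinary least-squares slope of sensitivity against time at each test location, added across locations. It is a minimal illustration only. The data are hypothetical, the function names are invented for this example, and the specific AGIS progression criteria are not reproduced.

```python
def ols_slope(times, values):
    """Ordinary least-squares slope of values regressed on times."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    return sxy / sxx

def sum_of_pointwise_slopes(times, series_by_location):
    """Add the per-location slopes (dB/yr) across all test locations."""
    return sum(ols_slope(times, series) for series in series_by_location)

# Hypothetical example: 3 locations, 5 annual visits, sensitivities in dB.
years = [0, 1, 2, 3, 4]
sensitivities = [
    [30, 30, 30, 30, 30],        # stable location: 0 dB/yr
    [28, 27, 26, 25, 24],        # losing 1 dB/yr
    [25, 24.5, 24, 23.5, 23],    # losing 0.5 dB/yr
]

total = sum_of_pointwise_slopes(years, sensitivities)
print(total)  # -1.5
```

A more negative sum indicates faster overall loss of sensitivity, which is the direction associated with later progression in the analysis described above.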

3.5.4.1. Effect of IOP lowering on progression

Since the difference in MD rates of progression between treatment arms was not published, one cannot estimate the relationship between IOP-lowering effects and changes in rates of MD progression in the AGIS.

3.5.5. Low-pressure Glaucoma Treatment Study (LoGTS)

The Low-pressure Glaucoma Treatment Study (LoGTS) was a multicenter, double-masked, prospective RCT that aimed to investigate visual field outcomes in low-pressure glaucoma patients treated with either a topical beta-adrenergic antagonist (timolol maleate 0.5%) or alpha 2-adrenergic agonist (brimonidine tartrate 0.2%) (De Moraes et al., 2012b).

Of the 127 participants, 69 (54%) were randomized to timolol and 58 (46%) to brimonidine (P = 0.20). Forty-eight eyes (48/253; 19%) of 40 patients (40/127; 31%) met the predefined trend analysis pointwise linear regression (PLR) progression criteria (31 patients randomized to timolol; 9 patients randomized to brimonidine, P < 0.01).
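The counts above can be turned into crude per-arm proportions of progressing patients. The sketch below is simple arithmetic on the reported numbers, with illustrative variable names. It ignores follow-up time and covariates, so the crude ratio is not expected to match the adjusted hazard ratio from the trial's Cox model.

```python
# Patients meeting the PLR progression criteria, and patients randomized, per arm.
progressed = {"timolol": 31, "brimonidine": 9}
randomized = {"timolol": 69, "brimonidine": 58}

# Crude proportion of progressing patients in each arm.
risk = {arm: progressed[arm] / randomized[arm] for arm in randomized}

# Crude risk ratio, brimonidine relative to timolol.
crude_rr = risk["brimonidine"] / risk["timolol"]

for arm, value in risk.items():
    print(f"{arm}: {value:.1%} of patients progressed")
print(f"crude risk ratio: {crude_rr:.2f}")
```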

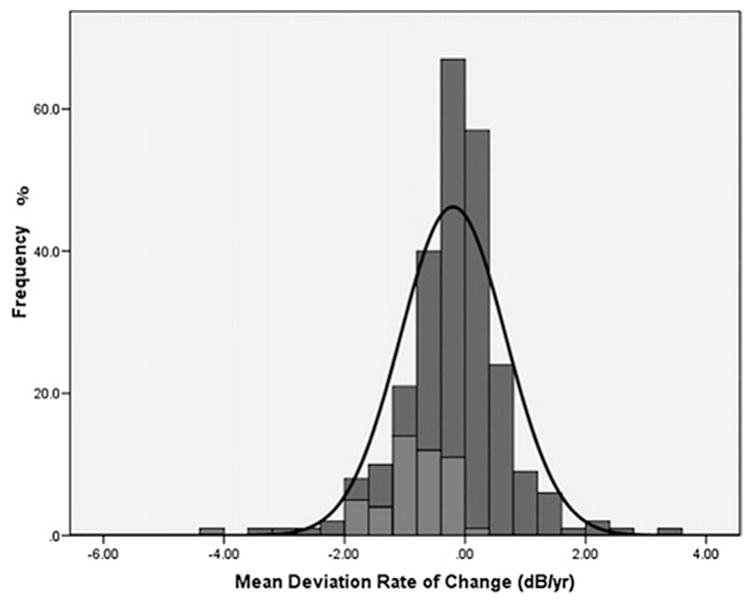

As expected, the rate of MD change (dB/yr) was significantly faster in eyes that met the progression criteria than in those that did not (−0.87 ± 0.7 versus −0.04 ± 0.8 dB/yr, P < 0.01, Fig. 14). In the multivariate analysis (Table 8), older age (HR = 1.41/decade older, 95% CI, 1.05 to 1.90, P = 0.022), use of medications for systemic hypertension (HR = 2.53, 95% CI, 1.32 to 4.87, P = 0.005), and lower mean ocular perfusion pressure (MOPP) during follow-up (HR = 1.21/mm Hg lower, 95% CI, 1.12 to 1.31, P < 0.001) were associated with increased risk of progression, whereas randomization to brimonidine was significantly associated with decreased risk of progression (HR = 0.26, 95% CI, 0.12 to 0.55, P < 0.001) (De Moraes et al., 2012b).

Fig. 14. Low-pressure Glaucoma Treatment Study: Comparison of mean deviation (MD) rates of change (dB/yr) between progressing (light gray) and non-progressing (dark gray) eyes based on the pointwise linear regression criteria. The black curves are Gaussian curves based on the estimates from study patients.

Source: De Moraes CG, Liebmann JM, Greenfield DS, Gardiner SK, Ritch R, Krupin T. Risk factors for visual field progression in the Low-Pressure Glaucoma Treatment Study. Am J Ophthalmol. Oct 2012;154(4):702–711.

Table 8.

Low-pressure Glaucoma Treatment Study: Cox Proportional Hazards Multivariate Model with a Backward Elimination Approach based on likelihood ratios.ᵃ

| Parameter | Hazard ratio | 95% Confidence interval | P value |
|---|---|---|---|
| Randomization (brimonidine) | 0.26 | 0.12 to 0.55 | <0.001 |
| Age (per decade older) | 1.41 | 1.05 to 1.90 | 0.022 |
| Use of systemic antihypertensives | 2.53 | 1.32 to 4.87 | 0.005 |
| Mean ocular perfusion pressure during follow-up (per mm Hg lower) | 1.21 | 1.12 to 1.31 | <0.001 |

ᵃ Variables were entered in the model if P < 0.05 and removed if P > 0.10 in the saturated multivariate model.

Source: adapted from De Moraes CG, Liebmann JM, Greenfield DS, Gardiner SK, Ritch R, Krupin T. Risk factors for visual field progression in the Low-Pressure Glaucoma Treatment Study. Am J Ophthalmol. Oct 2012;154(4):702–711.
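The per-unit hazard ratios in Table 8 can be rescaled to other increments. The sketch below assumes the usual log-linear form of a Cox model, in which a covariate difference of k units multiplies the hazard by HR^k. The 5 mm Hg and two-decade increments are illustrative choices rather than values reported by the study, and the function name is hypothetical.

```python
import math

def scale_hazard_ratio(hr_per_unit, units):
    """Hazard ratio for a difference of `units`, assuming a log-linear effect."""
    return math.exp(units * math.log(hr_per_unit))

# Mean ocular perfusion pressure 5 mm Hg lower (HR 1.21 per mm Hg lower).
hr_mopp_5 = scale_hazard_ratio(1.21, 5)

# Age two decades older (HR 1.41 per decade older).
hr_age_20 = scale_hazard_ratio(1.41, 2)

print(f"MOPP 5 mm Hg lower: HR = {hr_mopp_5:.2f}")  # 2.59
print(f"20 years older:     HR = {hr_age_20:.2f}")  # 1.99
```

Under this assumption, a seemingly modest per-unit hazard ratio compounds quickly over clinically plausible differences.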

3.5.5.1. Effect of IOP lowering on progression

Since there was no difference in IOP reduction between the two treatment arms, the IOP-treatment effect needed to achieve a minimum 30% decrease in rate of MD progression cannot be estimated from the LoGTS data. However, because the trial had three outcome measures (one event-based and two trend-based), its data can be used to assess whether trend-based and event-based analysis methods agree with each other. The (trend-based) MD rate of progression for eyes reaching the (event-based) Glaucoma Change Probability (GCP) progression endpoint (N = 43) was −0.87 dB/yr [SD = 0.6], and for those that did not (N = 210) it was −0.62 dB/yr [SD = 0.8]. This difference was statistically significant (P < 0.001) (De Moraes et al., 2012b).

The agreement between these two fundamentally different progression criteria provides additional support for the use of trend-based outcomes, expressed as rates of MD progression, as an alternative to the more conventional event-based outcomes in clinical trials.

3.5.6. United Kingdom Glaucoma Treatment Study (UKGTS)

Although numerous clinical trials demonstrated that IOP lowering (with different agents or procedures) significantly lowers the risk of glaucoma progression, no placebo-controlled trial had assessed whether a specific agent can effectively preserve visual function. Moreover, these trials were relatively long (typically 5 years). In the UKGTS, the investigators, for the first time, tested the hypothesis that a specific anti-glaucoma medication (latanoprost) can preserve visual function measured over a shorter period of time (2 years) when compared to placebo (Garway-Heath et al., 2015).

Patients with newly diagnosed open-angle glaucoma (258 in each group) were randomized to receive either latanoprost 0.005% or placebo eye drops, with a planned follow-up of 24 months. Visual field progression was defined as at least three visual field locations worse than baseline at the 5% level in two consecutive reliable visual fields, together with at least three visual field locations (not necessarily the same three locations) worse than baseline at the 5% level in the two subsequent consecutive reliable visual fields, using 24-2 SITA testing.
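The event-based criterion described above can be sketched as a simple check over a chronological series of reliable follow-up fields. This is only one reading of the definition: it assumes that a location counts within a pair of fields when it is flagged (worse than baseline at the 5% level) in both fields of that pair. The input format and function names are hypothetical, and neither the probability maps nor the reliability screening are modeled.

```python
def confirmed_locations(field_a, field_b):
    """Locations flagged in both fields of a consecutive pair."""
    return set(field_a) & set(field_b)

def meets_progression_criterion(flagged_by_visit, min_locations=3):
    """Check four consecutive fields: a confirming pair followed by a second pair.

    flagged_by_visit: list of sets of flagged location indices, one set per
    reliable follow-up field, in chronological order.
    """
    for i in range(len(flagged_by_visit) - 3):
        first_pair = confirmed_locations(flagged_by_visit[i], flagged_by_visit[i + 1])
        second_pair = confirmed_locations(flagged_by_visit[i + 2], flagged_by_visit[i + 3])
        # The second pair need not involve the same locations as the first.
        if len(first_pair) >= min_locations and len(second_pair) >= min_locations:
            return True
    return False

# Hypothetical series of flagged locations for two eyes.
progressing_eye = [{1, 2, 3}, {1, 2, 3, 9}, {2, 3, 4, 5}, {3, 4, 5}]
stable_eye = [{1, 2, 3}, {1, 2, 3}, {1}, set()]

print(meets_progression_criterion(progressing_eye))  # True
print(meets_progression_criterion(stable_eye))       # False
```

Requiring confirmation across four fields trades sensitivity for specificity, which is one reason event-based endpoints can take longer to reach than trend-based ones.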

At 24 months, mean IOP reduction was 3.8 mm Hg in the latanoprost group and 0.9 mm Hg in the placebo group. Visual field preservation was significantly longer in the latanoprost group than in the placebo group (HR: 0.44, 95% CI 0.28–0.69; P = 0.0003). Strikingly, statistically significant differences between treatment groups were already evident at 12 months (HR: 0.47; 95% CI 0.23–0.95; P = 0.035) and 18 months (HR: 0.43; 95% CI 0.26–0.71; P = 0.001). It is important to note, however, that effect sizes between a treatment group and placebo are expected to be larger than when two active agents are compared. Hence, no conclusions can be drawn about the minimum length of follow-up needed to detect significant differences had the investigators used another drug, laser, or incisional surgery instead of placebo.

3.5.6.1. Effect of IOP lowering on progression

A 3.8 mm Hg IOP reduction with latanoprost translated into a 56% risk reduction at 24 months, 57% at 18 months, and 53% at 12 months. The median change in visual field MD between baseline examination and the time at which progression was confirmed was −1.6 dB. No data are yet available on differences in rates of MD change or number of progressing visual field locations between treated and untreated patients.
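The risk reductions quoted above follow directly from the reported hazard ratios, using relative risk reduction = (1 − HR) × 100%. The sketch below reproduces that arithmetic; the function name is illustrative.

```python
def relative_risk_reduction_pct(hazard_ratio):
    """Relative risk reduction implied by a hazard ratio, in whole percent."""
    return round((1 - hazard_ratio) * 100)

# Hazard ratios for latanoprost versus placebo at each time point (months).
hazard_ratios = {12: 0.47, 18: 0.43, 24: 0.44}

reductions = {m: relative_risk_reduction_pct(hr) for m, hr in hazard_ratios.items()}
for months, pct in reductions.items():
    print(f"{months} months: {pct}% risk reduction")
```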

3.6. Large cohort studies and glaucoma progression

Besides the RCTs, other cohort studies also showed sustained visual field progression even in populations treated with different IOP-lowering modalities.

3.6.1. Canadian Glaucoma Study (CGS)

In the non-randomized Canadian Glaucoma Study (CGS), patients reaching an endpoint based on event-based analysis underwent a 20% or greater reduction in IOP (Chauhan et al., 2010). Trend analysis with rates of MD change was used to assess the effects of treatment and potential risk factors for progression in that sample.

Patients who reached 0, 1, or 2 event-based progression endpoints had a median of 18, 23, and 25 examinations, respectively. The median MD rate in progressing patients prior to the first endpoint was significantly worse compared with those with no progression (−0.35 and 0.05 dB/yr, respectively).

Increasing age was associated with a worse MD rate, but female gender and mean follow-up IOP were not. Interestingly, the investigators found that an abnormal anticardiolipin antibody level was associated with a significantly worse MD rate compared with a normal anticardiolipin antibody level (−0.57 and −0.03 dB/yr, respectively), once again suggesting a role for systemic risk factors (in addition to IOP) in rates of progression (Chauhan et al., 2010).

3.6.1.1. Effect of IOP lowering on progression

All CGS subjects entered the study with an IOP reduction from untreated values of at least 30%. Of note, after the first progression endpoint, the median IOP decreased from 18.0 to 14.8 mm Hg (20% in individual patients), resulting in a significant MD rate change from −0.36 to −0.11 dB/yr (70% slower). For those patients who reached a second progression endpoint, the IOP was lowered by 18% on average, and the median MD rate of change decreased from −1.07 to −0.83 dB/yr (18% slower).
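The "percent slower" figure after the first endpoint can be reproduced from the two median MD rates as (1 − rate after / rate before) × 100%. The sketch below applies that formula to the medians quoted above and compares the result with the 30% slowing proposed in this paper as clinically meaningful. The function and constant names are illustrative, and a change computed from group medians need not equal the median of within-patient changes.

```python
def percent_slowing(rate_before, rate_after):
    """Percent reduction in the rate of MD loss (both rates in dB/yr)."""
    return (1 - rate_after / rate_before) * 100

# Median MD rates before and after the first progression endpoint in the CGS.
slowing_first_endpoint = percent_slowing(-0.36, -0.11)

# Threshold proposed in this paper as a clinically meaningful slowing.
MEANINGFUL_SLOWING_PCT = 30
exceeds_threshold = slowing_first_endpoint >= MEANINGFUL_SLOWING_PCT

print(f"{slowing_first_endpoint:.1f}% slower")  # 69.4% slower
print(exceeds_threshold)                        # True
```

The result, about 69%, is consistent with the approximately 70% slowing reported in the text.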

3.6.2. New York Glaucoma Progression Study (NY-GAPS)

In the first report of the New York Glaucoma Progression Study (NY-GAPS), 205 patients with treated, established glaucoma were followed for an average of 6.5 years and underwent approximately 12 visual field tests (De Moraes et al., 2009). Patients were divided into 3 groups: initial superior defect (group A; n = 79; MD, −3.4 [1.9] dB), initial inferior defect (group B; n = 61; MD, −3.4 [1.8] dB), and both hemifields affected (group C; n = 65; MD, −4.2 [1.5] dB).

Group C progressed faster than did groups A and B (P < 0.02). Multivariate analysis showed significant effects of higher baseline IOP, thinner corneas, and initial damage to both hemifields on faster rates of progression with PLR. In another report comparing patients with high- and low-pressure glaucoma, patients with high-pressure glaucoma were significantly older (mean ± SD: 72.6 ± 9.4 years vs. 62.7 ± 12.8 years, P < 0.01), had higher mean IOPs (16.5 ± 3.2 mm Hg vs. 13.3 ± 2.0 mm Hg, P < 0.01) and greater CCT (544.0 ± 35.7 μm vs. 533.9 ± 35.9 μm; P = 0.01). During a similar period, high-pressure glaucoma patients progressed globally almost twice as rapidly as did those with statistically normal pressures (−0.64 ± 0.7 dB/yr vs. −0.35 ± 0.3 dB/yr, P < 0.01), a difference that became non-significant after adjustment for differences in age, mean IOP, and CCT.

In a multivariate model, variables significantly associated with progression were higher mean IOP (odds ratio [OR], 1.09, P = 0.03) and lower CCT (OR per microns thinner: 1.37, P = 0.03). Progression within the paracentral VF was more common in the low-pressure glaucoma group (75% vs. 57.3%, P = 0.04).

The most important factor associated with paracentral progression among eyes that reached a progression outcome was the diagnosis of low-pressure glaucoma. Baseline central visual loss (within the central four points on the 24-2 VF) occurred more frequently in low-pressure glaucoma patients (58.9% versus 31.8% of eyes, P < 0.01) (Ahrlich et al., 2010). In a larger cohort with 587 eyes of 587 patients, the univariate model of risk of progression based on PLR criteria revealed that an increased risk of fast visual field progression was associated with older age (OR, 1.19 per decade; P = 0.01), baseline diagnosis of exfoliation syndrome (OR, 1.79; P = 0.01), lower CCT (OR, 1.38 per 40 μm thinner; P < 0.01), a detected disc hemorrhage (OR, 2.31; P < 0.01), presence of beta-zone parapapillary atrophy (OR, 2.17; P < 0.01), and all IOP parameters (mean follow-up, peak, and fluctuation; P < 0.01). In the multivariable model, peak IOP (OR, 1.13; P < 0.01), lower CCT (OR, 1.45 per 40 μm thinner; P < 0.01), a detected disc hemorrhage (OR, 2.59; P < 0.01), and presence of beta-zone parapapillary atrophy (OR, 2.38; P < 0.01) were associated with visual field progression (De Moraes et al., 2012b).

3.6.2.1. Effect of IOP lowering on progression

The similarity of the results of this study to those of RCTs that used event-based progression endpoints (e.g., OHTS, EMGT) also supports the contention that trend-based analysis with MD rates of progression can be used to measure clinically significant treatment effects that are consistent with FDA statements.

Moreover, progressing eyes and stable eyes had a mean (SD) global rate of VF change of −1.0 (0.8) dB/yr and −0.20 (0.4) dB/yr, respectively (P < 0.01) and the difference in mean IOP values between progressing and non-progressing eyes was 2.6 mm Hg.

4. Nature of glaucomatous visual field progression

4.1. Progression usually occurs in already abnormal areas

In addition to knowing the rate of visual field progression in glaucoma, it is important to know the pattern of progression. This knowledge can shed light on where to look for progression and whether intervention may be more beneficial. Glaucomatous visual field progression occurs most often in already abnormal areas, and is reflected by deepening of the defect and extension to contiguous areas.

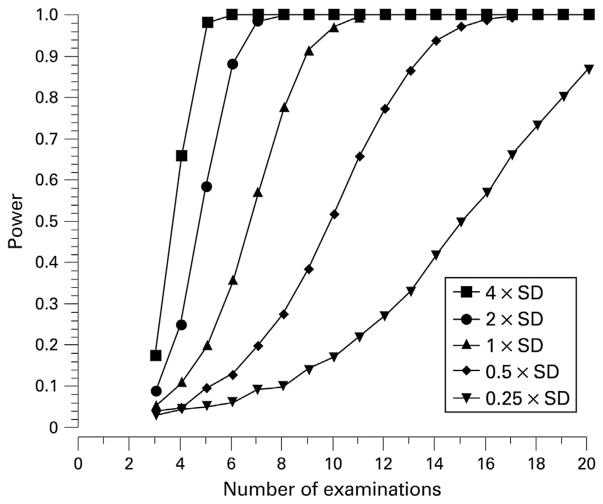

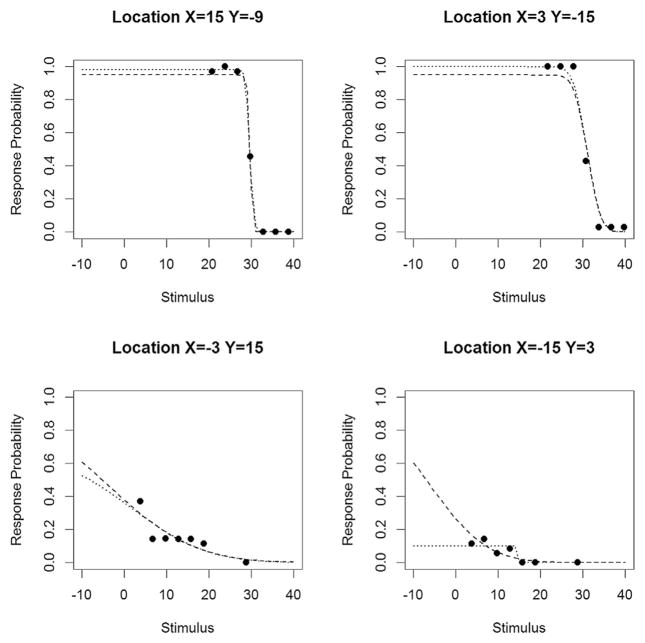

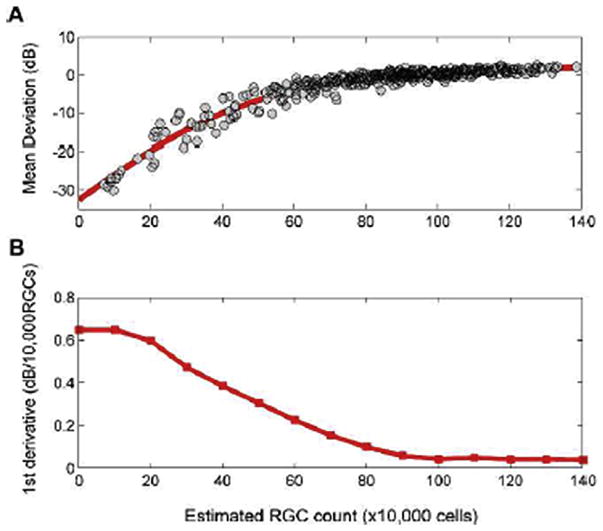

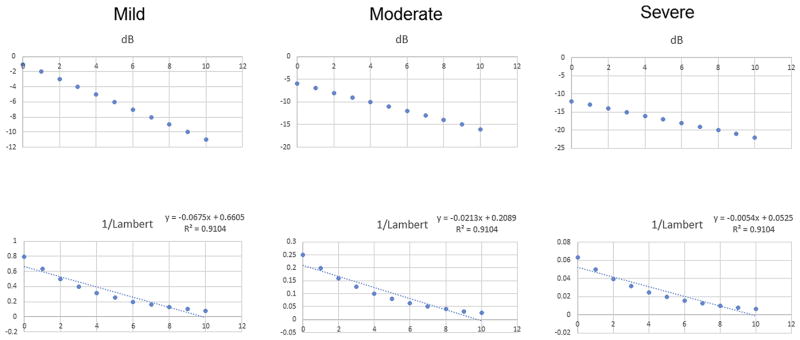

Mikelberg and Drance studied 42 eyes of 42 patients with glaucoma to determine the pattern of progression of their visual field defects (Mikelberg and Drance, 1984). In 33 eyes (79%) the scotomas became denser with progression. Enlargement occurred in 22 eyes (52%) and 21 eyes (50%) developed new scotomas. Increased density of the scotomas was the only manifestation of change in ten eyes (24%), three eyes (7%) showed enlargement only, and six eyes (14%) showed only new scotomas. Seventeen eyes (57%) with single hemifield involvement maintained a defective single hemifield throughout the follow-up period.