Abstract

Background

Handoff communication skills are an essential component of resident training. There are no previous systematic reviews of feedback and evaluation tools for physician handoffs.

Objective

We performed a systematic review of articles focused on inpatient handoff feedback or assessment tools.

Methods

The authors conducted a systematic review of English-language literature published from January 1, 2008, to May 13, 2015, on handoff feedback or assessment tools used in undergraduate or graduate medical education. All articles were reviewed by 2 independent abstractors. Included articles were assessed using a quality scoring system.

Results

A total of 26 articles with 32 tools met inclusion criteria, including 3 focused on feedback, 8 on assessment, and 15 on both feedback and assessment. All tools were used in an inpatient setting. Feedback and/or assessment improved the content or organization measures of handoff, while process and professionalism measures were less reliably improved. The Handoff Clinical Evaluation Exercise or a similar tool was used most frequently. Of included studies, 23% (6 of 26) were validity evidence studies, and 31% (8 of 26) of articles included a tool with behavioral anchors. A total of 35% (9 of 26) of studies used simulation or standardized patient encounters.

Conclusions

A number of feedback and assessment tools for physician handoffs have been studied across several specialties, but research on these tools remains limited. These tools may assist medical educators in assessing trainees' handoff skills.

Introduction

Handoffs, the “process of transferring primary authority and responsibility for providing clinical care to a patient from 1 departing caregiver to 1 oncoming caregiver,”1 have been demonstrated to be a significant causative factor in medical errors.2

Educators have noted that feedback3 and assessment4 are essential facilitators of learning.5 The Accreditation Council for Graduate Medical Education (ACGME) requires programs to monitor handoffs6 to ensure resident competence in this vital communication skill. To provide effective resident monitoring, programs will need handoff feedback and assessment tools.

Although we identified 3 systematic reviews focused on studies of handoff curricula,7–9 none focused on handoff feedback or assessment tools. Therefore, we conducted a systematic review of the published English-language literature to identify and assess published research on these tools.

Methods

Literature Search

An experienced medical librarian (E.M.J.) conducted a comprehensive literature search for English-language articles published on inpatient, shift-to-shift handoffs between January 1, 2008, and May 13, 2015, in Ovid MEDLINE, Ovid MEDLINE In-Process & Other Non-Indexed Citations, Journals@Ovid, CINAHL (EBSCOhost), and “ePub ahead of print” in PubMed. We chose relevant controlled vocabulary and keywords to capture the concepts of handoff, including its multiple synonyms (provided as online supplemental material).

All article titles were independently reviewed for inclusion by at least 2 trained reviewers (from the following group: J.D., C.E., M.M., L.A.R.). If either reviewer selected a reference, the full text was ordered for further review. Using this strategy, 1497 articles were obtained. The percent agreement on initial independent selection of articles for further review was 94%. Interrater reliability using Cohen's kappa was κ = 0.72 (P < .001).
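The gap between 94% raw agreement and κ = 0.72 reflects kappa's correction for chance agreement, which is high when both reviewers exclude most titles. The following is a minimal sketch of how percent agreement and Cohen's kappa can be computed from a table of paired include/exclude decisions. The function name and the 2 × 2 counts are hypothetical illustrations, not the review's data or software.

```python
from typing import List, Tuple


def agreement_stats(table: List[List[int]]) -> Tuple[float, float]:
    """Return (percent agreement, Cohen's kappa) for two raters.

    table[i][j] counts items that rater A put in category i and rater B
    put in category j (here, 0 = exclude and 1 = include).
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: share of items on the diagonal.
    p_observed = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: sum over categories of the product of the
    # two raters' marginal proportions.
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_chance = sum(row_totals[c] * col_totals[c] for c in range(k)) / n**2
    kappa = (p_observed - p_chance) / (1 - p_chance)
    return p_observed, kappa


# Hypothetical screening of 100 titles: both exclude 80, both include 10,
# and the reviewers disagree on the remaining 10.
p_o, kappa = agreement_stats([[80, 5], [5, 10]])
print(f"agreement = {p_o:.0%}, kappa = {kappa:.2f}")
```

In this made-up example, 90% raw agreement corresponds to κ ≈ 0.61, which shows why kappa is typically lower than percent agreement. The sketch does not compute the P value reported above.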

All full-text articles were reviewed by teams of 2 trained reviewers (from the following: J.D., C.R., C.E., M.M.). In cases where reviewers disagreed, articles were discussed by the team until consensus was reached. To identify other relevant articles, the reference sections of all included articles were checked by 2 independent research assistants (C.E. and M.M.).

Inclusion and Exclusion Criteria

At the outset, we developed a comprehensive systematic review protocol, including operational definitions, inclusion and exclusion criteria, and search strategy details. Feedback was defined as any formative process of providing information or constructive criticism that could help improve handoff performance. Assessment was defined as a summative process of assessing performance related to knowledge, content, attitudes, behaviors, or skills.

Articles were eligible for review if they included the inpatient, shift-to-shift handoffs of medical students, residents, fellows, or attending physicians; reported quantitative or qualitative research data; and focused on feedback or assessment tools aimed at the learner. Exclusion criteria included articles that focused on interhospital or intrahospital transfer, were anecdotal or had no data, or were letters to the editor, commentaries, editorials, or newsletter articles.

Abstraction Process

The team used an iterative process to develop and pilot test an abstraction form designed to confirm final eligibility for full review, assess article characteristics, and extract data relevant to the study. Each article was independently abstracted by 2 of 3 trained reviewers (J.D., C.E., M.M.). The 2 abstractors, along with an author independent of the abstraction process (L.A.R.), discussed and combined the 2 abstractions into a final version. All abstraction disagreements were minor and were resolved during discussions between the reviewers.

Quality Assessment

The team used the Medical Education Research Study Quality Instrument (MERSQI) developed by Reed et al10 to assess quality. It is an 18-point, 6-domain instrument designed specifically for medical education research. The 6 domains are study design, sampling, type of data, validity of assessment instruments' scores, data analysis, and outcomes evaluated. Since its introduction in 2007, multiple studies have shown evidence of its validity and reliability.10–12 Studies were quality scored on each item via team consensus to arrive at final MERSQI scores. As described in its original use,10 the total MERSQI score was calculated as the percentage of total achievable points. This percentage was then adjusted to a standard denominator of 18 to allow for comparison of MERSQI scores across studies.
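The rescaling described above reduces to a single proportion. The sketch below assumes that "total achievable points" means the maximum score over the items that could be scored for a given study. The function name and example numbers are illustrative and are not taken from the review.

```python
def adjusted_mersqi(points_earned: float, points_achievable: float,
                    scale: float = 18.0) -> float:
    """Rescale a MERSQI total to a common 18-point denominator.

    points_achievable is the maximum possible score over the items that
    could be scored for the study (assumed to be below 18 when an item
    was not applicable).
    """
    if not 0 < points_achievable <= scale:
        raise ValueError("points_achievable must be in (0, scale]")
    if not 0 <= points_earned <= points_achievable:
        raise ValueError("points_earned must be in [0, points_achievable]")
    # Percentage of achievable points, expressed on the 18-point scale.
    return points_earned / points_achievable * scale


# Hypothetical study: 10.5 of 15 applicable points -> 12.6 of 18.
print(round(adjusted_mersqi(10.5, 15.0), 2))
```

Rescaling to a fixed denominator lets studies with different numbers of applicable items be compared on the same 18-point scale.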

For the MERSQI response rate item, response rate was defined as the proportion of those eligible who completed the posttest or survey; for intervention studies, it was the proportion of those enrolled who completed the intervention assessment. For the outcomes domain, handoff demonstration measures were classified as skill acquisition if the handoff measure was done once during an intervention, and as behavioral demonstration if there were multiple measurements over time in an actual health care setting. If a study measured multiple levels of outcomes, it was given the score corresponding to the highest level of outcome it measured.
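The outcome rules in the preceding paragraph can be expressed as a small classification function. This is a hypothetical sketch. The class and function names are illustrative. The point values (1, 1.5, 2, and 3) are our reading of the published MERSQI outcome item and should be checked against Reed et al. The text does not say how repeated measures in simulation are classified, so treating them as skill acquisition is an assumption.

```python
from enum import IntEnum
from typing import Dict, Iterable


class Outcome(IntEnum):
    """MERSQI outcome levels, ordered from lowest to highest."""
    PERCEPTION = 1  # satisfaction, attitudes, perceptions, opinions
    SKILL = 2       # knowledge or skill acquisition
    BEHAVIOR = 3    # behavioral demonstration
    PATIENT = 4     # patient or health care outcome


# Assumed item points, per our reading of the published instrument.
OUTCOME_POINTS: Dict[Outcome, float] = {
    Outcome.PERCEPTION: 1.0,
    Outcome.SKILL: 1.5,
    Outcome.BEHAVIOR: 2.0,
    Outcome.PATIENT: 3.0,
}


def classify_handoff_measure(times_measured: int,
                             in_clinical_setting: bool) -> Outcome:
    """Level for a handoff demonstration measure under the review's rule.

    Repeated measurement in an actual health care setting counts as
    behavior. Everything else, including repeated simulated measures
    (an assumption), is treated as skill acquisition.
    """
    if times_measured > 1 and in_clinical_setting:
        return Outcome.BEHAVIOR
    return Outcome.SKILL


def outcome_points(levels: Iterable[Outcome]) -> float:
    """Score a study by the highest outcome level it measured."""
    return OUTCOME_POINTS[max(levels)]


# Hypothetical study: a satisfaction survey plus one simulated handoff.
print(outcome_points([Outcome.PERCEPTION, classify_handoff_measure(1, False)]))
```

Using an ordered enum makes the "highest level measured" rule a plain `max` over the levels a study reported.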

Types of Data Reported

We categorized data reported into 4 types: content, process, handoff organization, and professionalism. These were defined as (1) content, which describes items included in the handoff related to a patient's health-related history, treatment management or planning, or hospital course or updating these items; (2) process, which evaluates or assesses environmental or other components of a quality handoff (eg, limiting interruptions, quiet location); (3) handoff organization, which describes adherence to a predefined order of handoff items, patients to be handed off, or coherence and understandability of handoff presentation; and (4) professionalism, which describes provider conduct and appropriateness in the health care setting and relationships with colleagues.

Validity evidence was grouped according to the 5-category validity framework developed by Beckman et al13 and expanded by Cook and Lineberry14: content, internal structure, response process, relationships with other variables, and consequences.

Content included face validity, adapting items from an existing instrument, stakeholder review, literature search, or previous publication. Internal structure included all forms of reliability, factor analysis, or internal consistency. Pilot testing was included as part of response process, whether or not data from the pilot were reported. Relationships with other variables was shortened to “relational” and included correlation with any outside factor or tool. Consequences included any potential objective change or outcome after feedback or assessment was implemented (whether or not a change occurred and whether or not it was intended), as well as any impact on the evaluator or evaluee.13,14
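The coding scheme above can be written as a lookup table. This is a minimal sketch: the evidence labels are hypothetical shorthand for the descriptions in the text and do not come from the review's abstraction form.

```python
from typing import Dict, Set

# Hypothetical shorthand labels for the kinds of evidence described in the
# text, mapped to the validity category this review assigned them to.
VALIDITY_CATEGORY: Dict[str, str] = {
    "face validity": "content",
    "items adapted from existing instrument": "content",
    "stakeholder review": "content",
    "literature search": "content",
    "previous publication": "content",
    "reliability": "internal structure",
    "factor analysis": "internal structure",
    "internal consistency": "internal structure",
    "pilot testing": "response process",
    "correlation with outside factor or tool": "relational",
    "outcome measured after implementation": "consequences",
    "impact on evaluator or evaluee": "consequences",
}


def validity_categories(reported: Set[str]) -> Set[str]:
    """Categories covered by the evidence a study reports.

    Raises KeyError for a label outside the coding scheme, so unmapped
    evidence is flagged rather than silently dropped.
    """
    return {VALIDITY_CATEGORY[label] for label in reported}


print(sorted(validity_categories({"pilot testing", "reliability",
                                  "internal consistency"})))
```

A study that reports several forms of the same evidence, such as reliability and internal consistency, is counted once for that category.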

Results

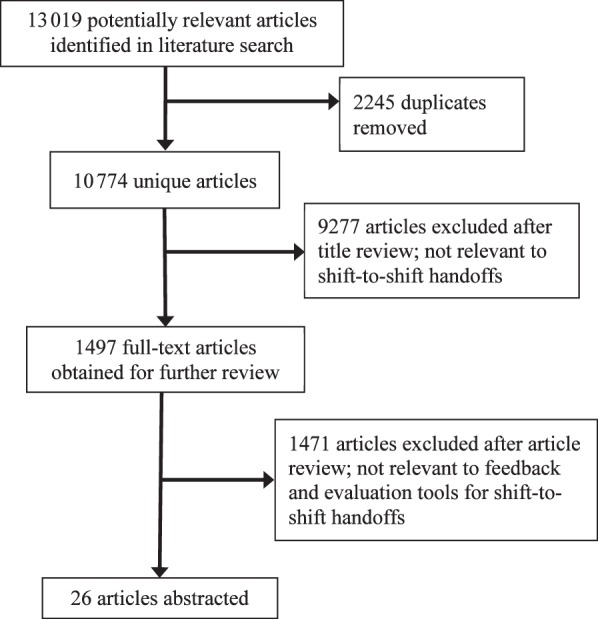

Our search strategy yielded 10 774 unique articles (13 019 including duplicates). After screening and full-text review, we identified 26 articles (32 tools) published between January 1, 2008, and May 13, 2015, that focused on inpatient handoff feedback or assessment tools (figure). Of these articles, 3 were relevant to feedback only,27,29,34 8 to assessment only,15,18,19,21,23,31,33,35 and 15 to feedback and assessment (tables 1 and 2).16,17,20,22,24–26,28,30,32,36–40 Copies of some tools are available from the authors on request.

Figure.

Study Selection Process for a Systematic Review of the Literature (2008–2015) on Feedback and Assessment Tools for Shift-to-Shift Handoffs

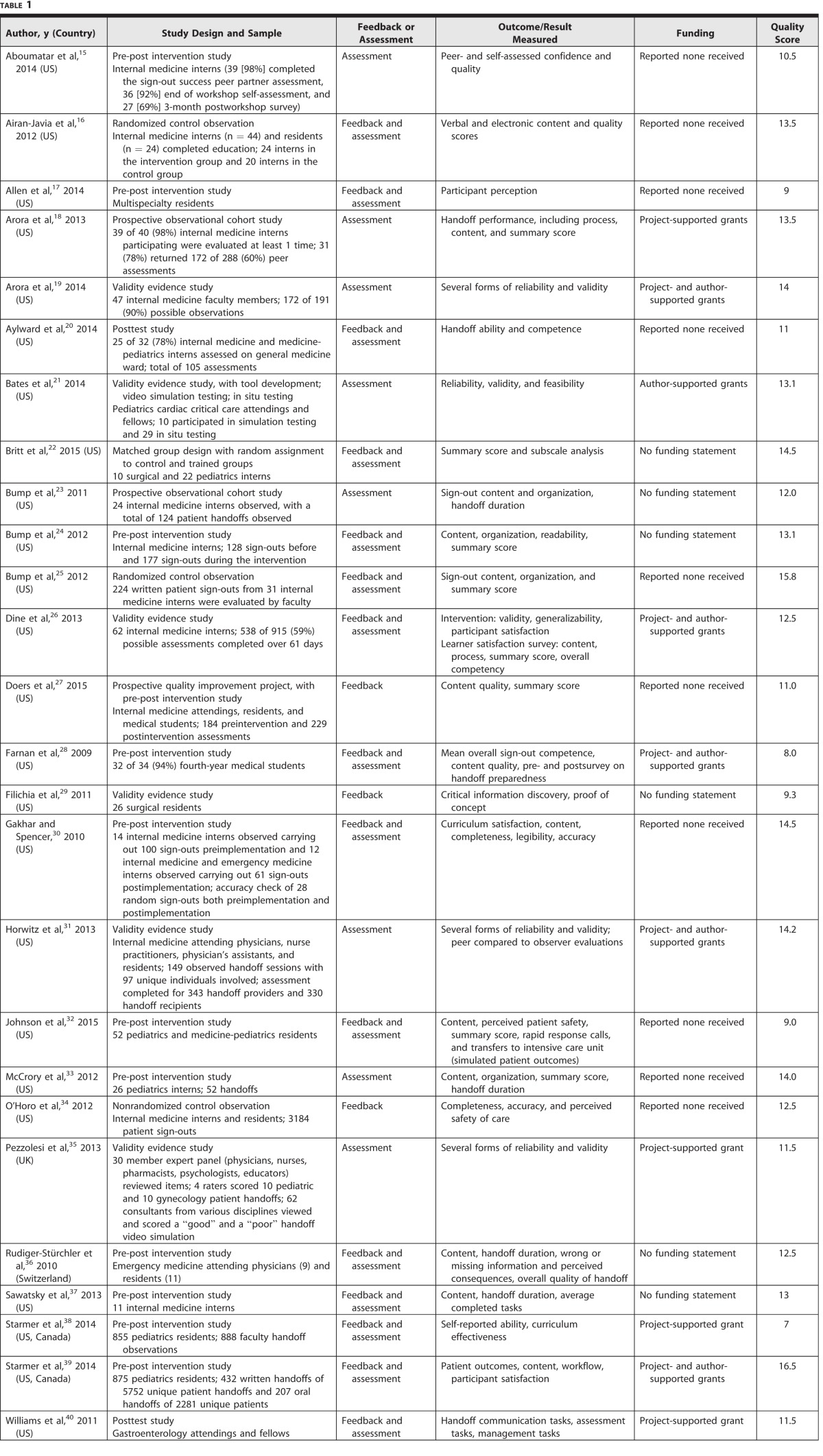

Table 1.

Brief Summary of Articles Included in a Systematic Review of Feedback and Assessment Tools for Shift-to-Shift Handoff (2008–2015)

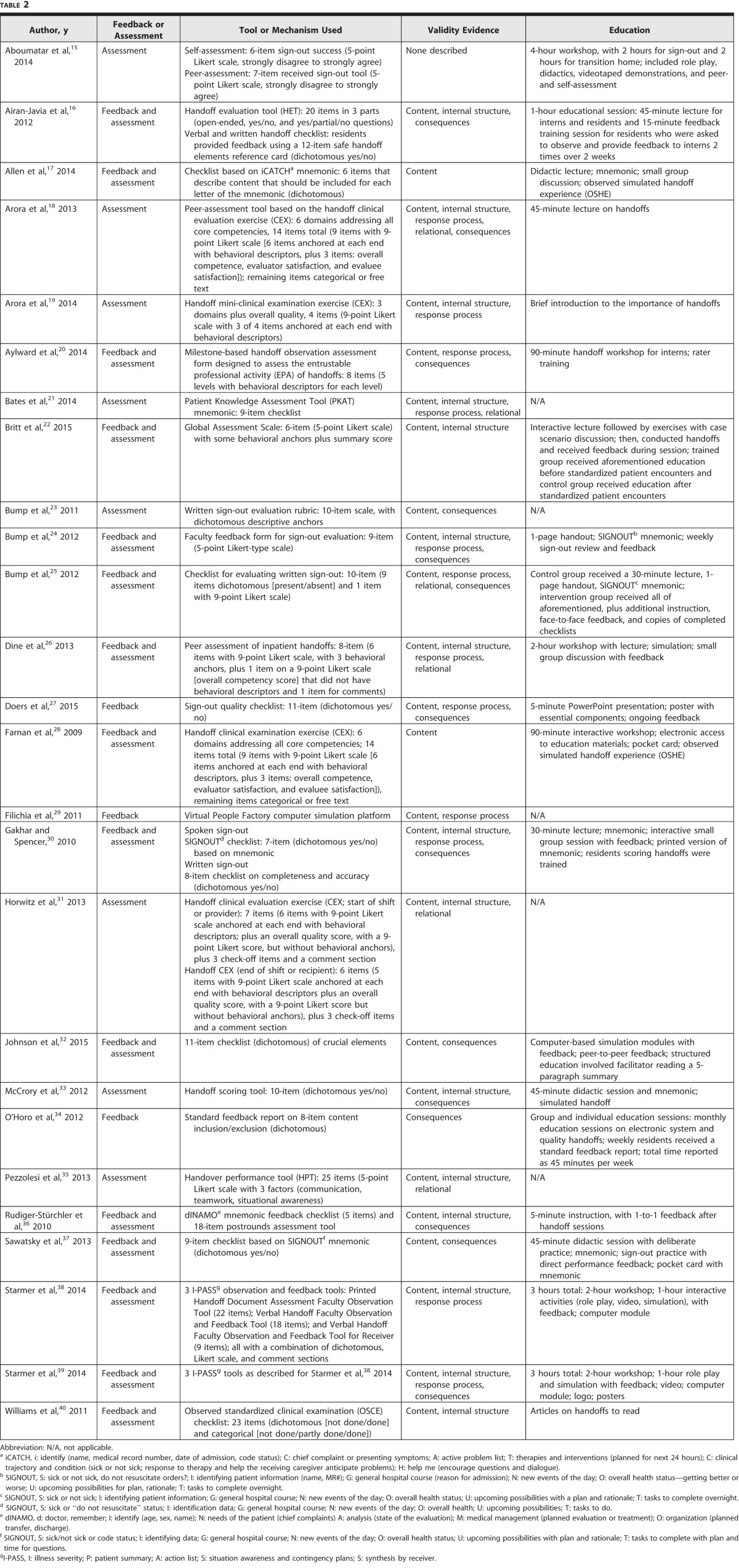

Table 2.

Description of Tools and Education Included in a Systematic Review of Feedback and Assessment Tools for Shift-to-Shift Handoff (2008–2015)

The mean quality score of the studies was 12.2 (SD = 2.4; range = 7–16.5; possible maximum = 18). The consistently lowest-scoring domains were study design (mean = 1.5, SD = 0.62), outcome (mean = 1.7, SD = 0.42), and sampling (mean = 1.7, SD = 0.54). Ten studies (38%) reported funding; however, the mean quality score was identical for funded and unfunded studies (12.2).

Most of the studies took place in the United States (22 of 26, 85%).15–34,37,40 Only 2 studies took place entirely outside the United States,35,36 and 2 more took place in both Canada and the United States.38,39 The articles used several different study designs. The most common was the pre-post intervention design (11 of 26, 42%).15,17,24,28,30,32,33,36–39 Other study designs included validity evidence only (6 of 26, 23%)19,21,26,29,31,35; randomized controlled trial (2 of 26, 7.7%)16,25; posttest study (2 of 26, 7.7%)20,40; observational study (2 of 26, 7.7%)18,23; and matched group design with random assignment to control and trained groups (1 of 26, 3.8%).22

The studies included the specialties of internal medicine (12 of 26, 46%)15,16,18,19,23–27,31,34,37; pediatrics (3 of 26, 12%)33,38,39; pediatric cardiac critical care (1 of 26, 3.8%)21; surgery (1 of 26, 3.8%)29; emergency medicine (1 of 26, 3.8%)36; and gastroenterology (1 of 26, 3.8%).40 Several of the studies used participants from more than 1 specialty (7 of 26, 27%).17,20,23,28,30,32,35 Interns and residents were the most frequently studied participants (21 of 26, 81%),15–18,20,22–27,29–34,36–39 but studies also included attending physicians (7 of 26, 27%),19,21,27,31,36,38,40 fellows (2 of 26, 7.7%),21,40 medical students (2 of 26, 7.7%),27,28 nurse practitioners (1 of 26, 3.8%),31 and physician assistants (1 of 26, 3.8%).31 One study focused on physicians but also included pharmacists, nurses, psychologists, and educators (1 of 26, 3.8%).35

Feedback

Feedback methods varied. Most often, feedback was provided 1-on-1 to learners (15 of 18, 83%).17,20,22,24,25,28–30,32,34,36–40 However, 17% (3 of 18) of the articles reported that feedback was provided in group sessions as part of an intervention or curriculum.16,26,27 All but 1 article with feedback26 showed statistically significant improvements in at least 1 component assessed.

The most common approach was to provide feedback to the learner once or during 1 session (11 of 18 studies, 61%).17,22,26,28–30,32,37–40 Some studies provided feedback to learners more than once (7 of 18 studies, 39%).16,20,24,25,27,34,36 Studies providing feedback over time showed varied results. These ranged from significant preintervention-to-postintervention increases in handoff providers' satisfaction with the quality of their own verbal handoffs20 and significant improvements in all measured content and organization items (2 of 3, 67%)29 to mixed results, with some elements improved (inclusion of advance directives and anticipatory guidance) and no improvement in organization or readability (1 of 3, 33%).24

Of the 18 studies, 3 (17%) provided feedback for several weeks or months.24,27,34 All reported some improvements over time, with 1 study documenting statistically significant improvement in overall quality score.27

Feedback provided to the learners usually included content of the handoff (17 of 18, 94%).16,17,20,24–30,32,34,36–40 All studies measuring content compared to a control or preintervention group showed an improvement.16,24,27,30,36,39 A few studies provided feedback on the process of the handoff (6 of 18, 33%).16,20,28,37–39

A variety of specific content items were measured after feedback. Code status was the item whose inclusion in handoffs most frequently showed statistically significant improvement after feedback.24,30,37,39 Other items often significantly improved after feedback were medications,16,30,39 anticipatory guidance,24,27,30,34,37,39 and diagnostic tests/results.27,36,39 Occasionally, some content items were omitted more frequently after feedback, such as major medical problems16 or asking whether the receiver had any questions.35

Assessment

Assessment approaches varied widely across studies. The Handoff Clinical Evaluation Exercise (CEX) or tools based on it were used most commonly.18,19,28,31 Articles with assessment tools used several types of outcome measures, including content-based (22 of 23, 96%)15–21,23–26,28,30–33,35–40; process-based (11 of 23, 48%)16,18–20,28,31,35,37–40; perception of professionalism (11 of 23, 48%)18–20,22,26,28,31,35,38–40; and organizational measures (17 of 23, 74%).15,16,18–26,28,31,33,35,38,39

Five articles included more than 1 assessment tool (table 2): 1 with self-perception and receiver-perception of handoff15; 1 with verbal and written assessment30; 1 with separate tools for the giver and receiver31; and 2 with 3 tools (1 each for printed, verbal giver, and verbal receiver).38,39 One study used a single tool in a global assessment of a trainee in roles of both sender and receiver.18

Feedback and Assessment

In 7 studies, the person providing feedback and/or assessment received training.16,25,28,30,37–39 Of the studies that contained both feedback and assessment, 4 had tools exclusively for feedback,16,36,38,39 although many studies used their assessment tools as a feedback guide.17,20,23–28,30,33

Seven studies assessed the accuracy of handoff content with 4 embedding this in the tool,20,25,38,39 2 by independent retrospective chart review,30,34 and 1 by querying senior faculty.36 In addition, 7 studies used tools that assessed whether or not the content of the handoff was updated.16,18,23–26,37

Several studies evaluated learners using audiotapes16 or videotapes.24,33,37 In 2 of the studies using videotape, learners were able to review the recordings for educational purposes.33,37 Two studies used real patient handoffs, 1 with audiotape16 and 1 with videotape,37 and 2 used simulated handoffs.22,33 All 4 demonstrated significant improvements, either in pre- to postintervention comparisons33,37 or in comparison with a control group.16,22 The observed simulated handoff experience was used in 2 studies,17,28 and an objective structured clinical examination was used in 1 study.40 Overall, 9 studies used some form of simulation, standardized patient encounter, or standardized resident encounter.17,22,26,28,32,33,38–40 Three studies used a combination of educational/simulation and workplace testing.37–39

Six articles focused solely on describing or offering validity evidence for a tool.19,21,26,29,31,35 Other studies, not specifically aimed at validation, also reported various types of validity evidence (table 2). Eight articles used behavioral anchors for at least some levels of tool items,18–20,22,23,26,28,31 with 2 using anchors for all levels.20,23

Discussion

Our systematic review of the literature yielded 26 articles and 32 tools relevant to feedback and assessment of inpatient handoff communication. The interventions and outcomes measured varied widely across the studies. As expected, most articles showed that using feedback and/or assessment improved the content or organization measures included in the respective tools. Process and professionalism measures were less reliably improved. Two studies measured perceived safety,32,34 and 1 study measured actual patient outcomes (medical errors and adverse events).39

Handoff communication errors have been linked to adverse patient outcomes, which has led to a national focus on improving handoff communication. However, the existing literature on handoff feedback and assessment tools has not demonstrated a clear link between use of these tools and improved patient outcomes. Although Starmer and colleagues39 demonstrated improved patient outcomes, their study implemented a bundle of interventions rather than a handoff feedback/assessment tool alone, so the contribution of the tool itself to those outcomes cannot be isolated.

The tools identified were diverse. One reason is that different specialties and institutions may require different types of handoffs with different relevant information. To address this, some handoff experts have proposed the concept of flexible standardization: a core set of universally accepted components that can be modified for a specific institution or specialty as needed.41–43 The same principle could apply to feedback and assessment tools. In addition, patient handoffs must balance consistent content with the flexibility needed for diverse patient scenarios, and feedback and assessment tools should address this tension.

The Handoff CEX or tools based on it are the most widely studied tools we identified; however, even these tools require further research to confirm their effectiveness. Given the recency of this body of literature (2009–2015) and the relatively small numbers of studies (26) and tools (32) identified, it is too early to definitively identify the best tools for particular disciplines and/or learner levels. We hope that with time and further study a rich body of feedback and assessment tools for handoffs will develop.

Overall, the items included in the assessment tools were mainly content based, followed by organizational measures. Professionalism and process-based measures were used less often in evaluating learners. If the goal of providing feedback and/or assessment is to improve handoff content, then checklist tools assessing presence/absence will suffice. However, we believe that factors other than content contribute to a quality handoff. While process, organization, and professionalism can be assessed using dichotomous (yes/no) or categorical (never, rarely, occasionally, usually, always) scoring, learners may benefit more from tools with descriptive behavioral anchors. We identified 8 tools with at least some behavioral anchors.18–20,22,23,26,28,31

Handoff is a skill that requires deliberate practice to master. In fact, it is 1 of the most important skills for incoming interns to learn before residency.44 Simulation, standardized patient encounters, and role play are well suited to teaching and assessing this important skill safely. Indeed, 9 of 26 (35%) studies in this review used some form of simulation or standardized encounter.17,22,26,28,32,33,38–40 One of these 9 studies (11%)28 used medical students, and 3 (33%)22,26,33 specifically mentioned including interns. In the future, the use of simulation or objective structured clinical examinations to assess graduating medical student and intern competency in handoffs may help ensure patient safety.

Regular feedback is recognized as important in the acquisition of clinical skills.3,45 However, only 39% (7 of 18) of feedback articles provided feedback more than once. One study34 introduced a new electronic handover system and showed that implementing the electronic system without feedback increased omissions of both allergies and code status. When feedback was implemented, allergy and code status omissions were reduced, and an improvement was seen in inclusion of patient location, patient identification information, and anticipatory guidance.34 Doers et al27 suggested that providing feedback to medical students, residents, and attending physicians once a month was an effective way to sustain improvements in handoff quality, and Dine et al26 showed that at least 10 peer assessments during a single rotation and 12 to 24 across multiple rotations were needed to adequately assess handoff skills. More research is needed to determine how much feedback is sufficient.

Handoffs require mastery of a complex set of diverse skills (eg, communication, teamwork, prioritization, organization). Aylward and colleagues20 identified handoffs as an example of an entrustable professional activity (EPA), an activity requiring multiple tasks and responsibilities that faculty can progressively entrust learners to perform independently.46 Handoffs, viewed as EPAs, require feedback over time; however, this will require adequate faculty development and time to provide the needed feedback and assessment. This creates an entirely new set of issues, as faculty may have different ideas about what constitutes an effective versus ineffective handoff. In addition, effective feedback requires specific skills that faculty may not possess. Finally, there are competing demands on faculty time. Each of these will need to be addressed by medical education leadership.

Who evaluates learners may play a role in the validity and reliability of the assessment. Of the 26 studies, 7 explicitly stated that the person providing assessment or feedback received training.16,25,28,30,37–39 Using standardized videos and the Handoff CEX tool, Arora et al19 found that internal medicine faculty could reliably discriminate different levels of performance in each domain. Peer assessments, while feasible, show evidence of leniency,18,31 and their impact on resident workload is unclear.18 These studies suggest that well-trained or experienced external observers are necessary to ensure adequate assessment of learners' handoff skills.

Funding is an important consideration in medical education studies, and it can affect study quality.10 However, in our review the mean quality score was 12.2 (possible maximum = 18) for both funded and unfunded research. Fewer than half of the studies reported receiving project or author funding (10 of 26, 38%), and only 1 of the funded studies measured patient outcomes. Showing benefit to patients is the ultimate goal; however, funding studies that measure this can be quite expensive. It will be important in the future to identify handoff measures that are proven to both improve the handoff itself and translate into improved patient safety.

This review is limited by the search strategies used. Some relevant studies may have been quality improvement studies, which may not be reported in the peer-reviewed literature.47 Although our comprehensive search strategy to identify relevant articles minimizes the risk of missing germane articles, it does not eliminate the possibility. Finally, the heterogeneity of the studies in both methodology and interventions limits the conclusions that can be drawn.

Conclusion

We identified 26 studies on handoff feedback and assessment containing 32 tools. These tools were exclusively hospital based but spanned many specialties. No single tool emerged as best for any particular specialty or use. Assessment and ongoing feedback are important components for improving physician handoffs. The tools we identified or their components can be used as templates for medical educators wishing to develop handoff feedback and assessment tools that incorporate institutional and specialty-specific needs.

Supplementary Material

References

- 1. Patterson ES, Wears RL. Patient handoffs: standardized and reliable measurement tools remain elusive. Jt Comm J Qual Patient Saf. 2010;36(2):52–61.

- 2. Arora V, Johnson J, Lovinger D, et al. Communication failures in patient sign-out and suggestions for improvement: a critical incident analysis. Qual Saf Health Care. 2005;14(6):401–407.

- 3. Ende J. Feedback in clinical medical education. JAMA. 1983;250(6):777–781.

- 4. Newble DI, Jaeger K. The effect of assessments and examinations on the learning of medical students. Med Educ. 1983;17(3):165–171.

- 5. Watling C. Resident teachers and feedback: time to raise the bar. J Grad Med Educ. 2014;6(4):781–782.

- 6. Accreditation Council for Graduate Medical Education. Common Program Requirements. http://www.acgme.org/What-We-Do/Accreditation/Common-Program-Requirements. Accessed October 26, 2016.

- 7. Buchanan IM, Besdine RW. A systematic review of curricular interventions teaching transitional care to physicians-in-training and physicians. Acad Med. 2011;86(6):628–639.

- 8. Gordon M, Findley R. Educational interventions to improve handover in health care: a systematic review. Med Educ. 2011;45(11):1081–1089.

- 9. Masterson MF, Gill RS, Turner SR, et al. A systematic review of educational resources for teaching patient handovers to residents. Can Med Educ J. 2013;4(1):e96–e110.

- 10. Reed DA, Cook DA, Beckman TJ, et al. Association between funding and quality of published medical education research. JAMA. 2007;298(9):1002–1009.

- 11. Yucha CB, Schneider BS, Smyer T, et al. Methodological quality and scientific impact of quantitative nursing education research over 18 months. Nurs Educ Perspect. 2011;32(6):362–368.

- 12. Reed DA, Beckman TJ, Wright SM. An assessment of the methodologic quality of medical education research studies published in The American Journal of Surgery. Am J Surg. 2009;198(3):442–444.

- 13. Beckman TJ, Cook DA, Mandrekar JN. What is the validity evidence for assessments of clinical teaching? J Gen Intern Med. 2005;20(12):1159–1164.

- 14. Cook DA, Lineberry M. Consequences validity evidence: evaluating the impact of educational assessments. Acad Med. 2016;91(6):785–795.

- 15. Aboumatar H, Allison RD, Feldman L, et al. Focus on transitions of care: description and evaluation of an educational intervention for internal medicine residents. Am J Med Qual. 2014;29(6):522–529.

- 16. Airan-Javia SL, Kogan JR, Smith M, et al. Effects of education on interns' verbal and electronic handoff documentation skills. J Grad Med Educ. 2012;4(2):209–214.

- 17. Allen S, Caton C, Cluver J, et al. Targeting improvements in patient safety at a large academic center: an institutional handoff curriculum for graduate medical education. Acad Med. 2014;89(10):1366–1369.

- 18. Arora VM, Greenstein EA, Woodruff JN, et al. Implementing peer evaluation of handoffs: associations with experience and workload. J Hosp Med. 2013;8(3):132–136.

- 19. Arora VM, Berhie S, Horwitz LI, et al. Using standardized videos to validate a measure of handoff quality: the handoff mini-clinical examination exercise. J Hosp Med. 2014;9(7):441–446.

- 20. Aylward M, Nixon J, Gladding S. An entrustable professional activity (EPA) for handoffs as a model for EPA assessment development. Acad Med. 2014;89(10):1335–1340.

- 21. Bates KE, Bird GL, Shea JA, et al. A tool to measure shared clinical understanding following handoffs to help evaluate handoff quality. J Hosp Med. 2014;9(3):142–147.

- 22. Britt RC, Ramirez DE, Anderson-Montoya BL, et al. Resident handoff training: initial evaluation of a novel method. J Healthc Qual. 2015;37(1):75–80.

- 23. Bump GM, Jovin F, Destefano L, et al. Resident sign-out and patient hand-offs: opportunities for improvement. Teach Learn Med. 2011;23(2):105–111.

- 24. Bump GM, Jacob J, Abisse SS, et al. Implementing faculty evaluation of written sign-out. Teach Learn Med. 2012;24(3):231–237.

- 25. Bump GM, Bost JE, Buranosky R, et al. Faculty member review and feedback using a sign-out checklist: improving intern written sign-out. Acad Med. 2012;87(8):1125–1131.

- 26. Dine JC, Wingate N, Rosen IM, et al. Using peers to assess handoffs: a pilot study. J Gen Intern Med. 2013;28(8):1008–1013.

- 27. Doers ME, Beniwal-Patel P, Kuester J, et al. Feedback to achieve improved sign-out technique. Am J Med Qual. 2015;30(4):353–358.

- 28. Farnan JM, Paro JA, Rodriguez RM, et al. Hand-off education and evaluation: piloting the observed simulated hand-off experience (OSHE). J Gen Intern Med. 2009;25(2):129–134.

- 29. Filichia L, Halan S, Blackwelder E, et al. Description of web-enhanced virtual character simulation system to standardize patient hand-offs. J Surg Res. 2011;166(2):176–181.

- 30. Gakhar B, Spencer AL. Using direct observation, formal evaluation, and an interactive curriculum to improve the sign-out practices of internal medicine interns. Acad Med. 2010;85(7):1182–1188.

- 31. Horwitz LI, Rand D, Staisiunas P, et al. Development of a handoff evaluation tool for shift-to-shift physician handoffs: the handoff CEX. J Hosp Med. 2013;8(4):191–200.

- 32. Johnson DP, Zimmerman K, Staples B, et al. Multicenter development, implementation, and patient safety impacts of a simulation-based module to teach handovers to pediatric residents. Hosp Pediatr. 2015;5(3):154–159.

- 33. McCrory MC, Aboumatar H, Custer JW, et al. “ABC-SBAR” training improves simulated critical patient hand-off by pediatric interns. Pediatr Emerg Care. 2012;28(6):538–543.

- 34. O'Horo JC, Omballi M, Tran TK, et al. Effect of audit and feedback on improving handovers: a nonrandomized comparative study. J Grad Med Educ. 2012;4(1):42–46.

- 35. Pezzolesi C, Manser T, Schifano F, et al. Human factors in clinical handover: development and testing of a ‘handover performance tool’ for doctors' shift handovers. Int J Qual Health Care. 2013;25(1):58–65.

- 36. Rudiger-Stürchler M, Keller DI, Bingisser R. Emergency physician intershift handover—can a dINAMO checklist speed it up and improve quality? Swiss Med Wkly. 2010;140:w13085.

- 37. Sawatsky AP, Mikhael JR, Punatar AD, et al. The effects of deliberate practice and feedback to teach standardized handoff communication on the knowledge, attitudes, and practices of first-year residents. Teach Learn Med. 2013;25(4):279–284.

- 38. Starmer AJ, O'Toole JK, Rosenbluth G, et al. Development, implementation, and dissemination of the I-PASS handoff curriculum: a multisite educational intervention to improve patient handoffs. Acad Med. 2014;89(6):876–884.

- 39. Starmer AJ, Spector ND, Srivastava R, et al. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803–1812.

- 40. Williams R, Miler R, Shah B, et al. Observing handoffs and telephone management in GI fellowship training. Am J Gastroenterol. 2011;106(8):1410–1414.

- 41. Turner P, Wong MC, Yee KC. A standard operating protocol (SOP) and minimum data set (MDS) for nursing and medical handover: considerations for flexible standardization in developing electronic tools. Stud Health Technol Inform. 2009;143:501–506.

- 42. Arora VM, Manjarrez E, Dressler DD, et al. Hospitalist handoffs: a systematic review and task force recommendations. J Hosp Med. 2009;4(7):433–440.

- 43. Boggan JC, Zhang T, DeRienzo C, et al. Standardizing and evaluating transitions of care in the era of duty hour reform: one institution's resident-led effort. J Grad Med Educ. 2013;5(4):652–657.

- 44. Pereira AG, Harrell HE, Weissman A, et al. Important skills for internship and the fourth-year medical school courses to acquire them: a national survey of internal medicine residents. Acad Med. 2016;91(6):821–826.

- 45. Anderson PA. Giving feedback on clinical skills: are we starving our young? J Grad Med Educ. 2012;4(2):154–158.

- 46. ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ. 2013;5(1):157–158.

- 47. Davidoff F, Batalden P. Toward stronger evidence on quality improvement. Draft publication guidelines: the beginning of a consensus project. Qual Saf Health Care. 2005;14(5):319–325.
