SUMMARY

The primate brain contains a hierarchy of visual areas, dubbed the ventral stream, which rapidly computes object representations that are both specific for object identity and robust against identity-preserving transformations such as depth rotations [1, 2]. Current computational models of object recognition, including recent deep learning networks, generate these properties through a hierarchy of alternating selectivity-increasing filtering and tolerance-increasing pooling operations, similar to the operations of simple and complex cells [3, 4, 5, 6]. Here we prove that a class of hierarchical architectures and a broad set of biologically plausible learning rules generate approximate invariance to identity-preserving transformations at the top level of the processing hierarchy. However, all past models tested failed to reproduce the most salient property of an intermediate representation of a three-level face-processing hierarchy in the brain: mirror-symmetric tuning to head orientation [7]. We demonstrate that one specific biologically plausible Hebb-type learning rule generates mirror-symmetric tuning to bilaterally symmetric stimuli such as faces at intermediate levels of the architecture, and we show why it does so. Thus the tuning properties of individual cells inside the visual stream appear to result from group properties of the stimuli they encode and to reflect the learning rules that sculpted the information-processing system within which they reside.

RESULTS

The ventral stream rapidly computes image representations that are simultaneously tolerant of identity-preserving transformations and discriminative enough to support robust recognition. The ventral stream of the macaque brain contains discrete patches of cortex that support the processing of images of faces [8, 9, 10, 11]. The face patches are arranged along an occipito-temporal axis, from the middle lateral (ML) and middle fundus (MF) patches, through the antero-lateral (AL) patch, to the antero-medial (AM) patch (Figure 1A) [13]. Along this axis, response latencies increase systematically, suggesting sequential feed-forward processing [7]. Selectivity for spatial position, size, and head orientation decreases from ML/MF to AM [7], mirroring the general trend of the ventral stream [14, 1]. In accord with these properties, many hierarchical models of object recognition [15, 14, 16] and face recognition [4, 17, 18] feature a progression from view-specific early processing stages to view-invariant later processing stages. They do so by successive pooling over view-tuned units.

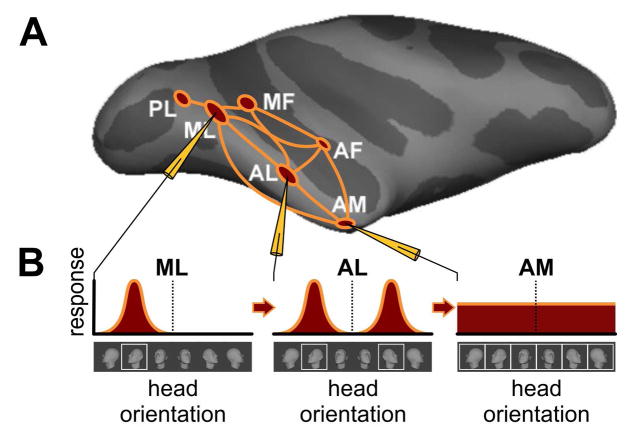

Figure 1. Schematic of the macaque face-patch system [12, 13, 7].

(A) Side view of computer-inflated macaque cortex with six areas of face-selective cortex (red) in the temporal lobe together with connectivity graph (orange). Face areas are named based on their anatomical location: PL, posterior lateral; ML, middle lateral; MF, middle fundus; AL, anterior lateral; AF, anterior fundus; AM, anterior medial (3), and have been found to be directly connected to each other to form a face-processing network [12]. Recordings from three face areas, ML, AL, and AM, during presentations of faces at different head orientations revealed qualitatively different tuning properties, schematized in B. (B) Prototypical ML neurons are tuned to head orientation, e.g., as shown, a left profile. A prototypical neuron in AL, when tuned to one profile view, is tuned to the mirror-symmetric profile view as well. A typical neuron in AM is only weakly tuned to head orientation. Because of this increasing invariance to in-depth rotation, the increasing invariance to size and position (not shown), and the increasing average response latencies from ML to AL to AM, it is thought that the main AL properties, including mirror symmetry, have to be understood as transformations of ML representations, and the main AM properties as transformations of AL representations [7].

Neurons in the intermediate face area AL, but not in preceding areas ML/MF, exhibit mirror-symmetric head orientation tuning [7]: an AL neuron tuned to one profile view of the head typically responds similarly to the opposite profile, but not to the front view (Figure 1B). This phenomenon is not predicted by classical and current hierarchical view-based models of the ventral stream, thus calling into question the idea that such models replicate the operations of the ventral stream.

Model Assumptions

Invariant information can be decoded from inferotemporal cortex, and from the face areas within it, roughly 100ms after stimulus presentation [19, 20]. This, it has been argued, is too short a timescale for feedback to play a large role [21, 19, 22]. Thus, while the actual face-processing system might operate in other modes as well, its fundamental properties of shape selectivity and invariance need to be explained as properties of feed-forward processing. We therefore consider here a feed-forward face-processing model.

The population of neurons in ML/MF is highly face selective [9]. Thus, as in earlier computational models of face perception [23, 24, 18], we assume the existence of a functional gate that routes only images of face-like objects to the input of the face system.

We make the standard assumption that a neuron’s basic operation is a pooled dot product between inputs x and synaptic weight vectors $\{g_i w_k\}$, which, in our setting, correspond to rotations in depth, $g_i \in G$, of template $w_k$. This yields a complex-like cell computing

$$\mu_k(x) = \sum_i \eta\left(\langle x, g_i w_k \rangle\right), \qquad k = 1, \ldots, K \qquad (1)$$

where $\eta: \mathbb{R} \to \mathbb{R}$ is a nonlinear function, e.g., squaring [25] (Supplemental Mathematical Appendix Section 1.1). We call $\vec{\mu}(x) = (\mu_1(x), \ldots, \mu_K(x)) \in \mathbb{R}^K$ the signature of image x.
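As a concrete check of Eq. (1), the sketch below evaluates the signature for a toy example in plain Python. It assumes η(z) = z² (the squaring example given for η) and represents each template k by a hypothetical list of stored views g_i w_k, written as short vectors rather than V1-like image encodings. The names `signature` and `template_views` are illustrative only.

```python
def dot(a, b):
    """Inner product <a, b> of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def signature(x, template_views, eta=lambda z: z * z):
    """Eq. (1): mu_k(x) = sum_i eta(<x, g_i w_k>), one entry per template k.

    template_views[k] is the list of stored views g_i w_k of template k.
    """
    return [sum(eta(dot(x, view)) for view in views) for views in template_views]


# Toy example: K = 2 templates, the first with two stored views, the second with one.
x = [1, 0, 2]
template_views = [
    [[1, 1, 0], [0, 1, 1]],  # views of template 1
    [[2, 0, 0]],             # views of template 2
]
print(signature(x, template_views))  # [5, 4], i.e. (1**2 + 2**2, 2**2)
```

Each output entry is a sum over one template's views only, which is the "pooling over the same template individual" described for the model's final layer.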

Approximate View Invariance

The model described so far encodes a given image of a face x by its similarity to a set of stored images of familiar faces rotated in depth (called templates), acquired during the algorithm’s unsupervised training. The key observation for understanding why the computation in Eq. (1) gives a view-tolerant representation is that the similarity function of two faces has a sharp peak when they are at the same orientation. That is, 〈x, giwk〉 is maximal when x and giwk depict faces at the same orientation, even when they depict different identities. Since Eq. (1) sums over all template orientations, it is always dominated by the contributions from the templates at orientations matching x. In other words, it is approximately unchanged by rotation (we prove this in Supplemental Mathematical Appendix Section 1).
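The invariance argument can be checked exactly in the idealized case where the transformations form a group. The sketch below is only an illustration under that assumption: it substitutes cyclic shifts of a short vector (a genuine group) for rotations in depth (which only approximately form a group on 2D images), stores the full orbit of each template, and pools with η(z) = z². Shifting x merely permutes the inner products 〈x, giwk〉 within each orbit, so the pooled sum cannot change. All helper names and vectors are hypothetical.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def shift(v, s):
    """Cyclic shift of v by s positions (the stand-in group action g_s)."""
    n = len(v)
    s %= n
    return v[n - s:] + v[:n - s]


def orbit(w):
    """All transformed versions g_i w of a template under the cyclic group."""
    return [shift(w, s) for s in range(len(w))]


def signature(x, templates, eta=lambda z: z * z):
    """Eq. (1) with each template's stored views given by its full orbit."""
    return [sum(eta(dot(x, gw)) for gw in orbit(w)) for w in templates]


templates = [[1, 0, 0, 0], [1, 1, 0, 0]]
x = [3, 1, 0, 2]
for s in range(4):
    print(s, signature(shift(x, s), templates))  # [14, 46] for every shift s
```

Selectivity is not lost in this toy case: a different input such as [1, 1, 1, 1] receives a different signature, [4, 16]. For real depth rotations the orbit is only approximately closed, which is why the text claims approximate rather than exact invariance.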

Since this model is based on stored associations of frames (see Figure 2), it can be interpreted as taking advantage of temporal continuity to learn the simple-to-complex wiring from its view-specific to its view-tolerant layer: it associates temporally adjacent frames from the video of visual experience, as in, e.g., [26]. This yields a view-tolerant signature because, in natural video, adjacent frames almost always depict the same object [27, 28, 29, 30, 26]; short videos containing a face almost always contain multiple views of the same face. There is considerable evidence from physiology and psychophysics that the brain employs a learning rule that takes advantage of this temporal continuity [31, 32, 33, 34].

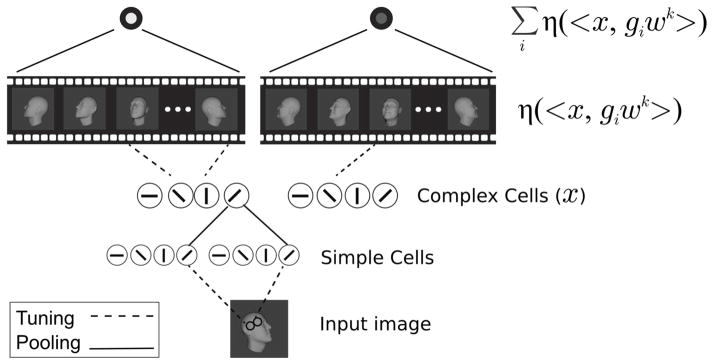

Figure 2. Illustration of the model.

Inputs are first encoded in a V1-like model. Its first layer (simple cells) corresponds to the S1 layer of the HMAX model; its second layer (complex cells) corresponds to the C1 layer of HMAX [14]. In the view-based model, the V1-like encoding is then projected onto stored frames giwk at orientation i, from videos of transforming faces k = 1,…,K. Finally, the last layer is computed as μk = Σiη(〈x, giwk〉). That is, the kth element of the output is computed by summing over the responses of all cells tuned to views of the kth template face. In the PCA-model, the V1-like encoding is instead projected onto templates describing the ith PC of the kth template face’s transformation video. The pooling in the final layer is then over all the PCs derived from the same identity; that is, it is computed as $\mu_k = \sum_i \eta(\langle x, w_i^k \rangle)$, where $w_i^k$ denotes the ith PC of the kth template face’s video. In both the view-based and PCA models, units in the output layer pool over all the units in the previous layer corresponding to projections onto the same template individual’s views (view-based model) or PCs (PCA-model).

Thus, our assumption here is that in order to get invariance to non-affine transformations (like rotation in depth), it is necessary to have a learning rule that takes advantage of the temporal coherence of object identity. More formally, this procedure achieves tolerance to rotation in depth because the set of rotations in depth approximates the group structure of affine transformations in the plane (see Supplemental Mathematical Appendix Section 1). For the latter case, there are theorems guaranteeing invariance without loss of selectivity [35, 2].

Biologically Plausible Learning

The algorithm described so far can provide an invariant representation but is potentially biologically implausible: it requires storing discrete views observed during development. We therefore propose a more biologically plausible mechanism: instead of storing separate frames, cortical neurons update their synaptic weights according to a Hebb-like rule. Over time, they become tuned to basis functions encoding different combinations of the set of views. Different Hebb-like rules lead to different sets of basis functions, such as Independent Components (IC) or Principal Components (PC) [36]. Since each neuron becomes tuned to one of these basis functions instead of one of the views, a set of basis functions replaces the giwk in the pooling equation. The question then is whether invariance is still present.

The surprising answer is that supervised backpropagation learning and most unsupervised learning rules will learn approximate invariance to viewpoint for an appropriate training set (see Supplemental Mathematical Appendix Section 2 for proof). This is the case for unsupervised Hebb-like plasticity rules such as Oja’s rule, Földiák’s trace rule, and ICA, all of which provide bases over which the pooling equation provides invariance. One such Hebbian learning scheme is Oja’s rule [37, 38]. It can be derived as the first-order expansion of a normalized Hebb rule. The assumption of this normalization is plausible because homeostatic plasticity mechanisms are widespread in cortex [39].

For learning rate α, input x, weight vector w, and output y = 〈x,w〉, Oja’s rule is

$$\Delta w = \alpha\, y\, (x - y\, w) \qquad (2)$$

The weights of a neuron updated according to this rule will converge to the top principal component (PC) of the neuron’s past inputs, that is, to the eigenvector with the largest eigenvalue of the input covariance C [37]. Thus the synaptic weights correspond to a solution of the eigenvector-eigenvalue equation Cw = λw. Plausible modifications of the rule, involving added noise or inhibitory connections with similar neurons, yield additional eigenvectors [40, 38]. This generalized Oja rule works as an online algorithm that computes the principal components of an incoming stream of images.
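A minimal sketch of Eq. (2) as an online update, in plain Python. The input distribution is a hypothetical choice made so the answer is known in advance: zero-mean 2D inputs with covariance diag(9, 1), whose top eigenvector is (1, 0). The learning rate α = 0.002 and the number of samples are illustrative assumptions, not values from the paper.

```python
import math
import random


def oja_step(w, x, alpha):
    """One application of Eq. (2): w <- w + alpha * y * (x - y * w), with y = <x, w>."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + alpha * y * (xi - y * wi) for wi, xi in zip(w, x)]


def run_oja(samples, w0, alpha=0.002):
    """Apply Oja's rule to a stream of inputs, one sample at a time."""
    w = list(w0)
    for x in samples:
        w = oja_step(w, x, alpha)
    return w


rng = random.Random(0)
# Hypothetical inputs: independent Gaussians with variances 9 and 1.
samples = [[3.0 * rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(20000)]
w = run_oja(samples, w0=[0.3, 0.8])
print(w, math.hypot(*w))  # w close to (+/-1, 0), norm close to 1
```

No explicit normalization step is needed: the −αy²w term keeps ‖w‖ near 1, which is the sense in which Oja’s rule approximates a normalized Hebb rule.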

The outcome of learning is dictated by the underlying covariance of the inputs. Thus, in order for familiar faces to be stored so that the neural response modeled by Eq. (1) tolerates rotations in depth of novel faces, we propose that Oja-type plasticity leads to representations for which the synaptic templates are given by principal components (PCs) of an image sequence depicting the depth rotation of face k. Consider an immature functional unit exposed, while in a plastic state, to all depth rotations of a face. Learning will converge to the eigenvectors corresponding to the top r eigenvalues and thus to the subspace spanned by them. Supplemental Mathematical Appendix Section 2, Eq. 8, shows that for each template face k, the signature obtained by pooling over all of its PCs is invariant. This is analogous to Eq. (1) with giwk replaced by the ith PC. Supplemental Mathematical Appendix Section 2 also shows that other learning rules whose solutions are not PCs but a different set of basis functions, for instance independent components, generate invariance as well. Figure 3B verifies, through a simulation of the task of matching unfamiliar faces despite depth rotations, that the signatures obtained by pooling over PCs are view-tolerant.
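Why pooling over a complete set of PCs is invariant can be illustrated in an idealized setting where cyclic shifts of a short vector stand in for depth rotations. The sketch assumes η(z) = z² and, instead of computing PCs, uses Gram-Schmidt to get an orthonormal basis for the span of a template’s orbit. For the uncentered second-moment matrix of the orbit, the PCs with nonzero eigenvalue span exactly this subspace, and Σi 〈x, vi〉² equals the squared norm of the projection of x onto the subspace for any orthonormal basis of it. Because the subspace is closed under the shifts, shifting x leaves the pooled value unchanged even though individual projections change. With only the top r < rank PCs, invariance would hold only approximately. The function names and vectors are hypothetical.

```python
import math


def shift(v, s):
    """Cyclic shift of v by s positions (stand-in for a rotation in depth)."""
    n = len(v)
    s %= n
    return v[n - s:] + v[:n - s]


def orthonormal_basis(vectors, tol=1e-10):
    """Gram-Schmidt: orthonormal basis of span(vectors), dropping dependent ones."""
    basis = []
    for v in vectors:
        r = [float(c) for c in v]
        for b in basis:
            c = sum(ri * bi for ri, bi in zip(r, b))
            r = [ri - c * bi for ri, bi in zip(r, b)]
        norm = math.sqrt(sum(ri * ri for ri in r))
        if norm > tol:
            basis.append([ri / norm for ri in r])
    return basis


def pooled_energy(x, basis):
    """sum_i <x, v_i>**2 over every basis vector v_i of the subspace."""
    return sum(sum(xi * bi for xi, bi in zip(x, b)) ** 2 for b in basis)


w = [1, 0, 1, 0]  # hypothetical template; its orbit spans a 2D subspace of R^4
basis = orthonormal_basis([shift(w, s) for s in range(len(w))])
x = [3, 1, 0, 0]
print(len(basis))  # 2
# Per-basis-vector projections swap between 4.5 and 0.5 as x shifts; their sum does not.
print([round(pooled_energy(shift(x, s), basis), 9) for s in range(4)])  # [5.0, 5.0, 5.0, 5.0]
```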

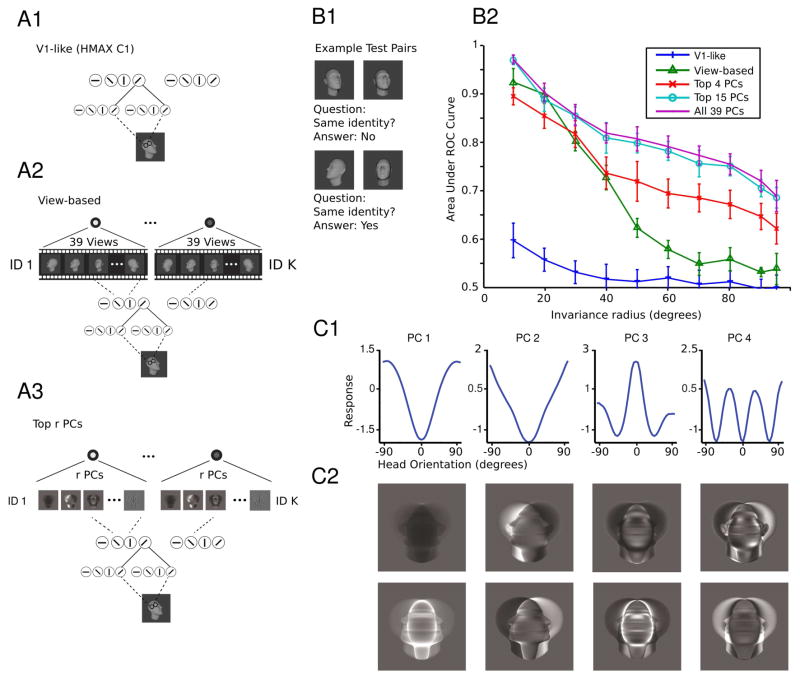

Figure 3. Model performance on the task of same-different face pair matching.

(A1–3) The structure of the models tested in B. (A1) The V1-like model encodes an input image in the C1 layer of HMAX, which models complex cells in V1 [14]. (A2) The view-based model encodes an input image as $\mu_k(x) = \sum_i \eta(\langle x, g_i w_k \rangle)$, where x is the V1-like encoding. (A3) The Top r PCs model encodes an input image as $\mu_k(x) = \sum_{i=1}^{r} \eta(\langle x, w_i^k \rangle)$, where x is the V1-like encoding and $w_i^k$ is the ith PC of the kth template face’s transformation video. (B1–2) The test of depth-rotation invariance required discriminating unfamiliar faces: the template faces did not appear in the test set, so this is a test of depth-rotation invariance from a single example view. (B1) In each trial, two face images appear, possibly at different orientations, and the task is to indicate whether they depict the same face or different faces. To classify an image pair (a,b) as depicting the same or a different individual, the cosine similarity of the two representations was compared to a threshold. The threshold was varied systematically in order to compute the area under the ROC curve (AUC). (B2) In each test, 600 pairs of face images were sampled from the set of faces with orientations in the current testing interval: 300 pairs depicted the same individual and 300 pairs depicted different individuals. Testing intervals were ordered by inclusion and were always symmetric about 0° (the frontal view); i.e., they were [−x, x] for x = 5°,…, 95°. The radius of the testing interval x, dubbed the invariance radius, is the abscissa. AUC declines as the range of testing orientations is widened. As long as enough PCs are used, the proposed model performs on par with the view-based model, and it even exceeds the view-based model’s performance if the complete set of PCs is used. Both models outperform the baseline HMAX C1 representation. The error bars were computed over repetitions of the experiment with different template and test sets; see Supplemental Methods. (C1–2) Mirror-symmetric orientation tuning of the raw-pixel-based model: 〈xθ, wi〉² is shown as a function of the orientation of xθ. Here each curve represents a different PC. Below are shown the PCs visualized as images; they are either symmetric (even) or antisymmetric (odd) about the vertical midline. See also Figure S1.
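The same-different evaluation in (B1) can be sketched as follows. Sweeping the threshold on cosine similarity and integrating the resulting ROC curve gives the same AUC as the rank (Mann-Whitney) form used here, which counts how often a same-identity pair scores higher than a different-identity pair, with ties counting one half. This is an illustrative reimplementation based on that equivalence, not the authors’ evaluation code; the function names and toy vectors are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two representations (e.g. signatures)."""
    dot = sum(ai * bi for ai, bi in zip(a, b))
    return dot / (math.sqrt(sum(ai * ai for ai in a)) * math.sqrt(sum(bi * bi for bi in b)))


def auc(same_scores, diff_scores):
    """Area under the ROC curve in its rank form (ties count 1/2)."""
    wins = 0.0
    for s in same_scores:
        for d in diff_scores:
            if s > d:
                wins += 1.0
            elif s == d:
                wins += 0.5
    return wins / (len(same_scores) * len(diff_scores))


def same_different_auc(pairs, is_same):
    """Score each (a, b) pair by cosine similarity and summarize with the AUC."""
    scores = [cosine(a, b) for a, b in pairs]
    same = [s for s, y in zip(scores, is_same) if y]
    diff = [s for s, y in zip(scores, is_same) if not y]
    return auc(same, diff)


# Toy representations: two "same" pairs followed by two "different" pairs.
pairs = [([1, 0], [2, 0]), ([1, 1], [1, 2]), ([1, 0], [0, 1]), ([1, 0], [1, 3])]
print(same_different_auc(pairs, [True, True, False, False]))  # 1.0
```

An AUC of 1.0 means every same pair is more similar than every different pair; 0.5 is chance.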

Mirror Symmetry

Consider the case where, for each of the templates wk, the developing organism has been exposed to a sequence of images showing a single face rotating from a left profile to a right profile. Faces are approximately bilaterally symmetric. Thus, for each face view giwk, its reflection over the vertical midline g−iwk will also be in the training set. It turns out that this property, together with the assumption of Oja plasticity (but not other kinds of plasticity), is sufficient to explain mirror-symmetric tuning curves. The argument is as follows.

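Consider a face x and a set of its rotations in 3D,

$$\mathcal{O}_x = \{ r_{\theta_{-N}} x, \ldots, r_{\theta_0} x, \ldots, r_{\theta_N} x \},$$

where $r_{\theta_i}$ is a rotation matrix in 3D of angle $\theta_i$ with respect to, e.g., the z axis, and the angles are symmetric about the frontal view, $\theta_{-n} = -\theta_n$ with $\theta_0 = 0$.

Projecting onto 2D with the projection operator P, we have

$$P(\mathcal{O}_x) = \{ P(r_{\theta_{-N}} x), \ldots, P(r_{\theta_0} x), \ldots, P(r_{\theta_N} x) \}.$$

Note now that, due to the bilateral symmetry, $P(r_{\theta_{-n}} x) = R\,P(r_{\theta_n} x)$ for $n = 0, \ldots, N$, where R is the reflection operator around the z axis. Thus the above set can be written as

$$P(\mathcal{O}_x) = \{ x_0, \ldots, x_N, R x_0, \ldots, R x_N \},$$

where $x_n = P(r_{\theta_n} x)$, $n = 0, \ldots, N$. Thus the set consists of a collection of orbits, with respect to the group $G = \{e, R\}$, of the templates $\{x_0, \ldots, x_N\}$.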

This property of the training set is needed in order to show that the signature μ(x) computed by pooling over the solutions to any equivariant learning rule, e.g., Hebb, Oja, Foldiak, ICA, or supervised backpropagation learning, is approximately invariant to depth-rotation (Supplemental Mathematical Appendix Sections 1–2).

The same property of the training set, in the specific case of the Oja learning rule, is used to prove that the solutions for the weights (i.e., the PCs) are either even or odd (Supplemental Mathematical Appendix Section 3). This in turn implies that at the penultimate stage of the signature computation, the stage where η(〈x,w〉) is computed, the projections 〈x,w〉 have orientation tuning curves that are either even or odd functions of the view angle.
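The even/odd property can be checked numerically. The sketch below assumes 4-pixel "images", takes R to be left-right reversal, builds a hypothetical reflection-closed training set {x_n} ∪ {R x_n}, and computes the eigenvectors of its uncentered second-moment matrix by power iteration with deflation, standing in for what Oja-type learning converges to. Because this matrix commutes with R, each eigenvector with a non-repeated eigenvalue satisfies Rv = v (even) or Rv = −v (odd). The data and helper names are illustrative assumptions.

```python
import math


def reflect(v):
    """R: mirror an image vector about its vertical midline (here: reverse it)."""
    return v[::-1]


def second_moment(samples):
    d = len(samples[0])
    return [[sum(s[i] * s[j] for s in samples) for j in range(d)] for i in range(d)]


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def eigenvectors(C, iters=3000):
    """All eigenpairs of a symmetric PSD matrix by power iteration plus deflation."""
    C = [row[:] for row in C]
    d = len(C)
    pairs = []
    for _ in range(d):
        v = normalize([1.0 + i * i for i in range(d)])  # generic start vector
        for _ in range(iters):
            v = normalize([sum(c * vi for c, vi in zip(row, v)) for row in C])
        lam = sum(vi * sum(c * vj for c, vj in zip(row, v)) for vi, row in zip(v, C))
        pairs.append((lam, v))
        # Deflate: remove the found component so the next one becomes dominant.
        C = [[C[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return pairs


def parity(v, tol=1e-6):
    rv = reflect(v)
    if max(abs(a - b) for a, b in zip(rv, v)) < tol:
        return "even"
    if max(abs(a + b) for a, b in zip(rv, v)) < tol:
        return "odd"
    return "neither"


views = [[4, 1, 0, 0], [0, 2, 1, 0]]                # hypothetical views x_n
training_set = views + [reflect(x) for x in views]  # closed under R
for lam, v in eigenvectors(second_moment(training_set)):
    print(round(lam, 3), parity(v))
# 18.0 even / 17.062 odd / 8.0 even / 0.938 odd (one pair per line)
```

Even and odd solutions alternate here only because of the particular toy data; what the argument needs is that no eigenvector mixes the two parities.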

Finally, to get mirror-symmetric tuning curves like those in AL, we need one final assumption: the nonlinearity before pooling at the level of the “simple” cells in AL must be an even nonlinearity such as η(z) = z². This is the same assumption as in the energy model of [25]. This assumption is needed in order to predict mirror-symmetric tuning curves for the neurons corresponding to odd solutions to the Oja equation. The neurons corresponding to even solutions have mirror-symmetric tuning curves regardless of whether η is even or odd.
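The role of the even nonlinearity can be seen in a small worked example. Assume hypothetical view vectors xθ for θ ∈ {−2, …, 2} (arbitrary units) that satisfy the bilateral-symmetry relation x−θ = R xθ, with R taken to be left-right reversal, plus one odd and one even weight vector. Since R is its own transpose, 〈R x, w〉 = 〈x, R w〉, so an odd unit’s linear response flips sign between mirror views, while its squared response, like an even unit’s response, is identical at ±θ.

```python
def reflect(v):
    """R: left-right reversal of an image vector."""
    return v[::-1]


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


# Hypothetical views for theta = 0, 1, 2; the frontal view is itself symmetric.
right = {0: [1, 2, 2, 1], 1: [3, 1, 0, 2], 2: [4, 0, 1, 0]}
views = {**right, **{-t: reflect(x) for t, x in right.items() if t > 0}}
angles = sorted(views)  # [-2, -1, 0, 1, 2]

w_odd = [1, 0, 0, -1]   # R w_odd = -w_odd
w_even = [0, 1, 1, 0]   # R w_even = w_even


def tuning(w, eta):
    """Orientation tuning curve eta(<x_theta, w>) over the ordered angles."""
    return [eta(dot(views[t], w)) for t in angles]


print(tuning(w_odd, lambda z: z))      # [-4, -1, 0, 1, 4]  odd in theta
print(tuning(w_odd, lambda z: z * z))  # [16, 1, 0, 1, 16]  mirror-symmetric
print(tuning(w_even, lambda z: z))     # [1, 1, 4, 1, 1]    symmetric already
```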

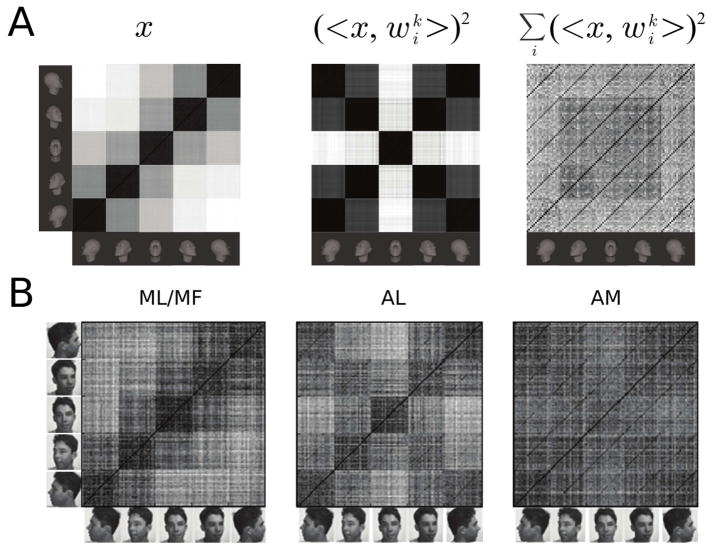

An orientation tuning curve is obtained by varying the orientation θ of the test image. Figure 3C shows example orientation tuning curves for the model based on a raw pixel representation. It plots 〈xθ, wi〉² as a function of the test face’s orientation for five example units tuned to features with different corresponding eigenvalues. All of these tuning curves are symmetric about 0°, i.e., the frontal face orientation. Figure 4A shows how the three model layers represent face view and identity, and Figure 4B shows the same for populations of neurons recorded in ML/MF, AL, and AM. The model is the same one as in Figure 3B/C.

Figure 4. Population representations of face view and identity.

(A) Model population similarity matrices corresponding to the simulation of Figure 3B were obtained by computing Pearson’s linear correlation coefficient between each test sample pair. (Left) The similarity matrix for the V1-like representation, the C1 layer of HMAX [14]. (Middle) The similarity matrix for the penultimate layer of the PCA-model of Figure 3B. It models AL. (Right) The similarity matrix for the final layer of the PCA-model of Figure 3B. It models AM. (B) Compare the results in (A) to the corresponding neuronal similarity matrices from [7]. See also Figure S1.
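The population similarity matrices in (A) can be outlined as follows: each test image is represented by the vector of responses of all units in a layer, and entry (a, b) is Pearson’s correlation between the response vectors for images a and b. The response vectors below are hypothetical placeholders, not data from the simulation.

```python
import math


def pearson(a, b):
    """Pearson's linear correlation coefficient between two response vectors."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [ai - ma for ai in a]
    db = [bi - mb for bi in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den


def similarity_matrix(responses):
    """responses[a] is the population response (one value per unit) to image a."""
    return [[pearson(ra, rb) for rb in responses] for ra in responses]


# Hypothetical population responses (3 units) to three test images.
responses = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.5], [3.0, 2.0, 1.0]]
for row in similarity_matrix(responses):
    print([round(r, 3) for r in row])
```

Ordering the test images by view and then by identity (or the reverse) is what makes the view-specific (ML-like), mirror-symmetric (AL-like), and identity-specific (AM-like) block structure visible in such matrices.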

These results imply that if neurons in AL learn according to any of a broad class of Hebb-like rules, then the pooled signature will be approximately invariant to viewpoint. Different AM cells would come to represent components of a view-invariant signature, one per neuron. Additionally, if the learning rule is of the Oja type and the output nonlinearity is, at least roughly, squaring, then the model predicts that mirror-symmetric tuning emerges on the way to view invariance, as a necessary consequence of the intrinsic bilateral symmetry of faces. In contrast to the Oja/PCA case, we show through a simulation analogous to Figure 3 that ICA does not generally yield mirror-symmetric tuning curves (Supplemental Figure S1).

DISCUSSION

Neurons in the face network’s penultimate processing stage (AL) are tuned symmetrically to head orientation [7]. In the model proposed here, AL’s mirror-symmetric tuning is explained as a necessary step along the way to computing a view-tolerant representation in the final face patch, AM. The argument begins with a theoretical characterization of how an idealized temporal association learning scheme could learn view-tolerant face representations like those in AM. It then considers which of the various biologically plausible learning rules satisfy the requirements coming from the theory while also predicting a mirror-symmetric representation in the computation’s penultimate stage. It turns out that, among the rules considered, Oja-like plasticity is the only biologically plausible one that fits.

This argument suggests that Oja-like plasticity may indeed be driving learning in AL. We now discuss potential implications of this hypothesis. While Oja’s learning rule was originally motivated on computational grounds [37], it is now believed to be a widespread mechanism in cortex because it describes the interaction of LTP, LTD, and synaptic scaling (a form of homeostatic plasticity) [39]. Biophysical mechanisms underlying homeostatic plasticity include activity-dependent scaling of AMPA receptor recycling rates [39]. Homeostatic plasticity has been observed in mouse visual cortex in vivo under binocular or monocular deprivation paradigms [41, 42] and is believed to be particularly important in developmental critical periods [43]. Such synaptic scaling has not yet been described in the face patch network; however, the present work can be seen as lending support to the hypothesis that it may act there. Just as monocular deprivation paradigms are used to study homeostatic plasticity in visual cortex, analogous “face deprivation” paradigms may reveal homeostatic plasticity in the developing face patch network.

To the best of our knowledge, this is the first account that explains why cells in the face network’s penultimate processing stage, AL, are tuned symmetrically to head orientation. This shows that feed-forward processing hierarchies can capture the main progression of face representations observed in the macaque brain. The mirror symmetry result is especially significant because it shows how a time-contiguous learning rule operating within this architecture can give rise to a counter-intuitive property that is not intrinsic to the temporal sequence of the stream of visual images impinging on the eyes. Rather, it arises from an interaction between an intrinsic property of the geometry of an object, here bilateral symmetry, and the computational strategy of the information-processing hierarchy, namely view invariance.

Our model is designed to account only for the feed-forward processing in the face patch network (≤ 80ms from image onset). The representations computed in the first feedforward sweep are likely used to provide information about a few basic questions such as the identity or pose of a face. Feedback processing is likely more important at longer timescales. What computations might be implemented by the feedback processing occurring on longer timescales? One hypothesis addressed by the recent work of [44] combines a feedforward network like ours---also showing mirror-symmetric tuning of cell populations---with a probabilistic generative model. Thus our feedforward model may serve as a building block for future object-recognition models addressing brain areas such as prefrontal cortex, hippocampus and superior colliculus, integrating feed-forward processing with subsequent computational steps that involve eye-movements and their planning, together with task dependency and interactions with memory.

Supplementary Material

Acknowledgments

This material is based upon work supported by the Center for Brains, Minds, and Machines (CBMM), funded by NSF STC award CCF-1231216. This research was also sponsored by grants from the National Science Foundation (NSF-0640097, NSF-0827427), the National Eye Institute (R01 EY021594-01A1), and AFOSR-THRL (FA8650-05-C-7262). Additional support was provided by the Eugene McDermott Foundation. W.A.F. is a New York Stem Cell Foundation-Robertson Investigator.

Footnotes

AUTHOR CONTRIBUTIONS

J.Z.L., Q.L., F.A., W.A.F., and T.P. designed the experiments and analyses and wrote the paper. J.Z.L., Q.L., and F.A. conducted the experiments and performed the analyses.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. DiCarlo JJ, Zoccolan D, Rust NC. How does the brain solve visual object recognition? Neuron. 2012;73:415–434. doi: 10.1016/j.neuron.2012.01.010.

- 2. Anselmi F, Leibo JZ, Rosasco L, Mutch J, Tacchetti A, Poggio T. Unsupervised learning of invariant representations. Theor Comput Sci. 2016;633:112–121.

- 3. Serre T, Oliva A, Poggio T. A feedforward architecture accounts for rapid categorization. Proc Natl Acad Sci USA. 2007;104:6424–6429. doi: 10.1073/pnas.0700622104.

- 4. Bart E, Ullman S. Class-based feature matching across unrestricted transformations. IEEE Trans Pattern Anal Mach Intell. 2008;30:1618–1631. doi: 10.1109/TPAMI.2007.70818.

- 5. Rolls ET. Invariant visual object and face recognition: neural and computational bases, and a model, VisNet. Front Comput Neurosci. 2012;6:35. doi: 10.3389/fncom.2012.00035.

- 6. Krizhevsky A, Sutskever I, Hinton G. ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst (NIPS). 2012.

- 7. Freiwald WA, Tsao DY. Functional Compartmentalization and Viewpoint Generalization Within the Macaque Face-Processing System. Science. 2010;330:845–851. doi: 10.1126/science.1194908.

- 8. Tsao DY, Freiwald WA, Knutsen TA, Mandeville JB, Tootell R. Faces and objects in macaque cerebral cortex. Nat Neurosci. 2003;6:989–995. doi: 10.1038/nn1111.

- 9. Tsao DY, Freiwald WA, Tootell R, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- 10. Ku SP, Tolias AS, Logothetis NK, Goense J. fMRI of the Face-Processing Network in the Ventral Temporal Lobe of Awake and Anesthetized Macaques. Neuron. 2011;70:352–362. doi: 10.1016/j.neuron.2011.02.048.

- 11. Afraz A, Boyden ES, DiCarlo JJ. Optogenetic and pharmacological suppression of spatial clusters of face neurons reveal their causal role in face gender discrimination. Proc Natl Acad Sci USA. 2015;112:6730–6735. doi: 10.1073/pnas.1423328112.

- 12. Moeller S, Freiwald WA, Tsao DY. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science. 2008;320:1355–1359. doi: 10.1126/science.1157436.

- 13. Tsao DY, Moeller S, Freiwald WA. Comparing face patch systems in macaques and humans. Proc Natl Acad Sci USA. 2008;105:19514–19519. doi: 10.1073/pnas.0809662105.

- 14. Riesenhuber M, Poggio T. Hierarchical models of object recognition in cortex. Nat Neurosci. 1999;2:1019–1025. doi: 10.1038/14819.

- 15. Poggio T, Edelman S. A network that learns to recognize three-dimensional objects. Nature. 1990;343:263–266. doi: 10.1038/343263a0.

- 16. Bart E, Byvatov E, Ullman S. View-invariant recognition using corresponding object fragments. Proc Euro Conf Comp Vision (ECCV). 2004.

- 17. Leibo JZ, Liao Q, Anselmi F, Poggio T. The Invariance Hypothesis Implies Domain-Specific Regions in Visual Cortex. PLoS Comput Biol. 2015;11:e1004390. doi: 10.1371/journal.pcbi.1004390.

- 18. Farzmahdi A, Rajaei K, Ghodrati M, Ebrahimpour R, Khaligh-Razavi SM. A specialized face-processing model inspired by the organization of monkey face patches explains several face-specific phenomena observed in humans. Sci Rep. 2016;6:25025. doi: 10.1038/srep25025.

- 19. Hung CP, Kreiman G, Poggio T, DiCarlo JJ. Fast Readout of Object Identity from Macaque Inferior Temporal Cortex. Science. 2005;310:863–866. doi: 10.1126/science.1117593.

- 20. Meyers EM, Borzello M, Freiwald WA, Tsao DY. Intelligent Information Loss: The Coding of Facial Identity, Head Pose, and Non-Face Information in the Macaque Face Patch System. J Neurosci. 2015;35:7069–7081. doi: 10.1523/JNEUROSCI.3086-14.2015.

- 21. Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381:520–522. doi: 10.1038/381520a0.

- 22. Isik L, Meyers EM, Leibo JZ, Poggio T. The dynamics of invariant object recognition in the human visual system. J Neurophysiol. 2014;111:91–102. doi: 10.1152/jn.00394.2013.

- 23. Bruce V, Young A. Understanding face recognition. Brit J Psychol. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- 24. Tan C, Poggio T. Neural Tuning Size in a Model of Primate Visual Processing Accounts for Three Key Markers of Holistic Face Processing. PLoS ONE. 2016;11:e0150980. doi: 10.1371/journal.pone.0150980.

- 25. Adelson EH, Bergen JR. Spatiotemporal energy models for the perception of motion. J Opt Soc Am A. 1985;2:284–299. doi: 10.1364/josaa.2.000284.

- 26. Isik L, Leibo JZ, Poggio T. Learning and disrupting invariance in visual recognition with a temporal association rule. Front Comput Neurosci. 2012;6:37. doi: 10.3389/fncom.2012.00037.

- 27. Hinton GE, Becker S. An unsupervised learning procedure that discovers surfaces in random-dot stereograms. Proc Int Jt Conf Neural Netw; Washington DC. 1990.

- 28. Földiák P. Learning invariance from transformation sequences. Neural Comput. 1991;3:194–200. doi: 10.1162/neco.1991.3.2.194.

- 29. Wiskott L, Sejnowski TJ. Slow feature analysis: Unsupervised learning of invariances. Neural Comput. 2002;14:715–770. doi: 10.1162/089976602317318938.

- 30. Berkes P, Turner RE, Sahani M. A Structured Model of Video Reproduces Primary Visual Cortical Organisation. PLoS Comput Biol. 2009;5:e1000495. doi: 10.1371/journal.pcbi.1000495.

- 31. Miyashita Y. Neuronal correlate of visual associative long-term memory in the primate temporal cortex. Nature. 1988;335:817–820. doi: 10.1038/335817a0.

- 32. Wallis G, Bülthoff HH. Effects of temporal association on recognition memory. Proc Natl Acad Sci USA. 2001;98:4800–4804. doi: 10.1073/pnas.071028598.

- 33. Cox DD, Meier P, Oertelt N, DiCarlo JJ. ‘Breaking’ position-invariant object recognition. Nat Neurosci. 2005;8:1145–1147. doi: 10.1038/nn1519.

- 34. Li N, DiCarlo JJ. Unsupervised Natural Visual Experience Rapidly Reshapes Size-Invariant Object Representation in Inferior Temporal Cortex. Neuron. 2010;67:1062–1075. doi: 10.1016/j.neuron.2010.08.029.

- 35. Anselmi F, Rosasco L, Poggio T. On invariance and selectivity in representation learning. arXiv preprint arXiv:1503.05938. 2015.

- 36. Hassoun MH. Fundamentals of Artificial Neural Networks. Cambridge, MA: MIT Press; 1995.

- 37. Oja E. Simplified neuron model as a principal component analyzer. J Math Biol. 1982;15:267–273. doi: 10.1007/BF00275687.

- 38. Oja E. Principal components, minor components, and linear neural networks. Neural Netw. 1992;5:927–935.

- 39. Abbott LF, Nelson SB. Synaptic plasticity: taming the beast. Nat Neurosci. 2000;3:1178–1183. doi: 10.1038/81453.

- 40. Sanger TD. Optimal unsupervised learning in a single-layer linear feedforward neural network. Neural Netw. 1989;2:459–473.

- 41. Keck T, Keller GB, Jacobsen RI, Eysel UT, Bonhoeffer T, Hübener M. Synaptic scaling and homeostatic plasticity in the mouse visual cortex in vivo. Neuron. 2013;80:327–334. doi: 10.1016/j.neuron.2013.08.018.

- 42. Hengen KB, Lambo ME, Van Hooser SD, Katz DB, Turrigiano GG. Firing rate homeostasis in visual cortex of freely behaving rodents. Neuron. 2013;80:335–342. doi: 10.1016/j.neuron.2013.08.038.

- 43. Turrigiano GG, Nelson SB. Homeostatic plasticity in the developing nervous system. Nat Rev Neurosci. 2004;5:97–107. doi: 10.1038/nrn1327.

- 44. Yildirim I, Kulkarni TD, Freiwald WA, Tenenbaum JB. Efficient and robust analysis-by-synthesis in vision: A computational framework, behavioral tests, and modeling neuronal representations. Proc Ann Conf Cog Sci Soc. 2015.
