Summary

Longitudinal monitoring of biomarkers is often helpful for predicting disease or a poor clinical outcome. In this paper, we consider the prediction of both large and small-for-gestational-age births using longitudinal ultrasound measurements, and attempt to identify subgroups of women for whom prediction is more (or less) accurate, should they exist. We propose a tree-based approach to identifying such subgroups, and a pruning algorithm which explicitly incorporates a desired type-I error rate, allowing us to control the risk of false discovery of subgroups. The proposed methods are applied to data from the Scandinavian Fetal Growth Study, and are evaluated via simulations.

Keywords: Fetal Growth, Personalized Medicine, Prediction, Recursive Partitioning, Shared Random Effects Models

1. Introduction

In obstetrics, it is common to monitor fetal development by collecting repeated ultrasound measurements, which may be useful for predicting a variety of poor pregnancy outcomes (Albert, 2012). For example, estimated fetal weight (EFW), a derived summary of multiple anthropometric ultrasound measurements (Hadlock et al., 1991), is often measured repeatedly in high risk pregnancies. The use of longitudinal ultrasound measurements to predict a binary outcome at birth has been considered by a number of authors, including Albert (2012), Zhang et al. (2012), and Liu and Albert (2014). Much of this research has been on how to best use these longitudinal measurements to predict a given outcome (i.e. selecting the best modeling framework) and, more generally, on whether or not the longitudinal measurements are actually useful in predicting that outcome. In this context, the longitudinal biomarker measurements are considered to be “useful” when they improve prediction of the binary outcome over standard covariates for the entire population; however, it is also possible that good predictive accuracy is only seen in a subgroup of the population. If such subgroups exist, it is desirable that they be identified in order to guide the design of follow-up schemes in future studies.

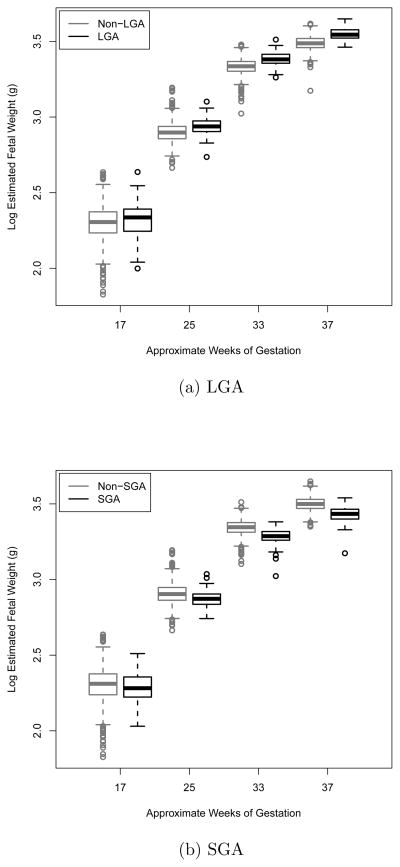

This work was motivated by the Eunice Kennedy Shriver National Institute of Child Health and Human Development (NICHD) study of successive small-for-gestational-age births in Scandinavia, a prospective study designed to investigate the etiology and consequences of intrauterine growth retardation (Bakketeig et al., 1993). The study population consisted of multiparous women of Caucasian origin who spoke one of the Scandinavian languages and had a singleton pregnancy, and each woman was scheduled to receive an ultrasound at approximately 17, 25, 33 and 37 weeks of gestation. Zhang et al. (2012) showed that longitudinal measurements taken at approximately 17 to 25 weeks of gestation were not predictive of large-for-gestational-age (LGA) birth, while measurements taken closer to full term (37 weeks) were highly predictive of LGA. This is illustrated in Figure 1(a), where we can see that separation in the distribution of EFW between LGA and non-LGA at approximately 17 weeks of gestation is minimal, but gets progressively larger until, at approximately 37 weeks of gestation, the groups are quite distinct. Looking at Figure 1(b), a similar trend can be seen for small-for-gestational-age (SGA) birth. While important clinically, these results suggest that early monitoring of a pregnancy for LGA and SGA may not be useful. Given these results, two natural clinical questions are: (1) are there subgroups of women for whom early monitoring for LGA or SGA may be effective, and (2) are there some women for whom prediction is more or less accurate if all available measurements are used? The focus of this paper will be on the development of statistical methods which can be used to identify such subgroups.

Fig. 1.

Log estimated fetal weight at nominal observation times

One common way to identify subgroups is to use tree-based methods (Negassa et al., 2005; Hothorn et al., 2006; Su et al., 2008, 2009; Foster et al., 2011; Lipkovich et al., 2011; Loh et al., 2015). Recently, a number of authors have also proposed Bayesian tree-based methods, such as Bayesian additive regression trees (BART) (Chipman et al., 2010; Bleich et al., 2014). Tree-based methods work by partitioning the data into subgroups defined by the covariates, within which a simple model is fit to predict the outcome (Hastie et al., 2009). Tree-based methods make only mild model assumptions, and are capable of handling large numbers of covariates with complicated interactions. Moreover, their simple structure makes them easy to interpret. Though much consideration has been given to the use of tree-based approaches for identifying treatment-by-covariate interactions, we are unaware of any previous work on methods designed to identify subgroups of enhanced predictive accuracy, tree-based or otherwise. The latter will be the focus of this paper. Specifically, we propose a tree-based approach to identifying subgroups, within which longitudinal biomarker measurements may be especially useful in predicting a subsequent binary outcome.

A well-known problem in subgroup analysis is that of false positive findings (Yusuf et al., 1991; Peto et al., 1995; Assmann et al., 2000; Brookes et al., 2001; Berger et al., 2014). Tree-based methods recursively choose the “best” partition of the remaining data until some pre-defined “stopping” conditions are met, often leading to very large initial trees. These initial trees are then “pruned,” i.e. weak partitions are collapsed using some pruning function, reducing the initial tree to a less complex subtree. This pruning can help to remove spurious partitions, but often will still result in overfitting, i.e. partitioning of the data, even when no true subgroups exist. We consider this type of false positive finding to be a type-I error. In the case of our example, this means partitioning the data when the truth is that the longitudinal biomarker measurements are equally useful in predicting the binary outcome across the population. In this paper, we propose a parametric bootstrap-based procedure to explicitly incorporate the desired type-I error rate into our pruning algorithm. Though not considered in this paper, an alternative approach is to take a Bayesian perspective and control for multiplicity by assigning prior probabilities to possible subgroup effects (Berger et al., 2014).

The remainder of this paper is organized as follows. In Section 2, we introduce methods for prediction of a binary outcome using longitudinal measurements, and propose a tree-based approach to identifying subgroups in which this prediction is especially good (or bad). In Section 3, the proposed methods are applied to data from the Scandinavian Fetal Growth Study (Bakketeig et al., 1993) and in Section 4 we present simulation studies to examine the performance of the proposed methodology. A discussion is given in Section 5.

2. General Methods and Extension to Subgroup Identification

Let Di, i = 1, . . . , N be the binary outcome indicator of interest for subject i, where Di = 1 if the subject experienced the outcome and Di = 0 otherwise, and let Yi = (Yi1, . . . , Yini)T be the vector of longitudinal biomarker measurements for subject i, taken at times ti1, . . . , tini. Additionally, let Z be an N × p baseline covariate matrix with columns Z1, . . . , Zp and rows z1, . . . , zN.

2.1. The Shared Random Effects Model

To predict the binary outcome using the longitudinal biomarker, we employ the shared random effects (SRE) model (Albert, 2012). This method is designed to jointly model the longitudinal biomarker process and the binary outcome, conditional on some random effects. These random effects are shared by both models, which introduces a correlation between the biomarker and binary outcome. Because our ultimate interest is in predicting LGA and SGA births using longitudinal fetal growth measurements, we consider a specific version of the SRE model which has been shown to be appropriate in this case. Specifically, we assume that the longitudinal measurements follow a linear mixed model:

$$Y_i = X_i\beta + W_i b_i + \varepsilon_i \qquad (1)$$

where β are fixed effect parameters, bi are random effects, and errors εij follow independent normal distributions with mean 0 and variance $\sigma_\varepsilon^2$. We use the general notation Xi and Wi to represent the fixed and random effect design matrices, respectively, for subject i. In this paper, we will consider the special case where

$$X_i = W_i = \begin{pmatrix} 1 & t_{i1} & t_{i1}^2 \\ \vdots & \vdots & \vdots \\ 1 & t_{in_i} & t_{in_i}^2 \end{pmatrix};$$

however, we have kept these matrices distinct in (1) because in practice, these two matrices need not be the same. We limited ourselves to quadratic time effects, as this model performed very well for the Scandinavian Fetal Growth data, but if desired, higher order terms could easily be added. It is worth noting that the variance of the estimated fetal weight changes with time. Though not obvious from the statement of the model, we have found that the random effects in (1) are able to effectively capture this heteroskedasticity, giving estimated variances which are very similar to the observed variances at each time point. In addition, though we have not included main effects for zi in (1), such terms could certainly be added. We chose to exclude these terms because our interest is in the prediction of Di using Yi, and adding these baseline covariates to (1) did not have a noticeable impact on this prediction. Intuitively, we think this happens because, if such terms are left out, they are essentially absorbed by the random intercept, and are thus still “accounted for” in (1).

We introduce the association between Di and the longitudinal marker through the shared random effects, bi. That is, we link the binary outcome Di to the longitudinal marker:

$$P(D_i = 1 \mid b_i, z_i) = \Phi\{\eta_0 + \eta_1^T z_i + \alpha\, c_i(t^*)\} \qquad (2)$$

where Φ is the standard normal cumulative distribution function (i.e. a probit link is used), and t* is a time point near the time at which the binary outcome is generally observed (e.g. 39 weeks, a time point near the time of birth). Thus, the longitudinal measurements are related to the probability of experiencing the outcome Di = 1 through an individual’s predicted measurement at time t*. For simplicity, we use the same t* value for all subjects. Note that the strength of the association between the binary outcome and the longitudinal marker measurements is controlled by α in (2), i.e. if α = 0, P(Di = 1|Yi, zi) = P(Di = 1|zi), meaning Di and Yi are independent given zi. To simplify parameter estimation, the random effects are assumed to follow a multivariate normal distribution with mean 0 and variance V.

As discussed by Albert (2012), when the random effects are assumed to follow a normal distribution, parameter estimates can be obtained using a two-stage pseudo-likelihood approach. In stage 1, we fit the linear mixed model (1) using standard software, and obtain the posterior mean of the random effects for subject i, b̂i, i.e. the empirical Bayes estimator. In stage 2, the conditional likelihood of D given Y and Z is maximized given the parameter estimates from stage 1. This conditional likelihood can be obtained following arguments similar to Albert (2012). In particular, note that

$$E[\Phi(a + bX)] = \Phi\left\{\frac{a + b\mu}{\sqrt{1 + b^2\sigma^2}}\right\}$$

when X ~ N(μ, σ2). Thus, P(Di = 1|Yi, zi) can be expressed as follows:

$$P(D_i = 1 \mid Y_i, z_i) = \Phi\left\{\frac{\eta_0 + \eta_1^T z_i + \alpha\, E(c_i(t^*) \mid Y_i, z_i)}{\sqrt{1 + \alpha^2 \operatorname{var}[E(c_i(t^*) \mid Y_i, z_i) - c_i(t^*)]}}\right\}$$

In the next section, covariate-by-ci(t*) interaction terms will also be included in Model (2) in order to construct a regression tree; however, the conditional likelihood-based estimation procedure described above still holds in principle. More details will be given below.
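The stage 2 likelihood rests on the standard identity that, for X ~ N(μ, σ²), E[Φ(a + bX)] = Φ{(a + bμ)/√(1 + b²σ²)}. The following minimal sketch checks this identity numerically. The parameter values are purely illustrative assumptions (only the intercept −1.3 echoes the simulation section), and the function names are hypothetical.

```python
import math


def std_normal_cdf(x):
    """Standard normal CDF, Phi(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def std_normal_pdf(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def expected_probit_closed_form(a, b, mu, sigma):
    """E[Phi(a + b X)] for X ~ N(mu, sigma^2), via the closed-form identity."""
    return std_normal_cdf((a + b * mu) / math.sqrt(1.0 + (b * sigma) ** 2))


def expected_probit_numeric(a, b, mu, sigma, n_grid=20001, half_width=10.0):
    """Same expectation by trapezoidal integration over mu +/- half_width * sigma."""
    lo = mu - half_width * sigma
    step = 2.0 * half_width * sigma / (n_grid - 1)
    total = 0.0
    for k in range(n_grid):
        x = lo + k * step
        weight = 0.5 if k in (0, n_grid - 1) else 1.0
        density = std_normal_pdf((x - mu) / sigma) / sigma
        total += weight * std_normal_cdf(a + b * x) * density
    return total * step


# Illustrative (assumed) values: a plays the role of eta_0 + eta_1' z_i,
# b the role of alpha, and (mu, sigma) the conditional mean and standard
# deviation of c_i(t*) given the observed measurements.
closed = expected_probit_closed_form(a=-1.3, b=0.8, mu=0.2, sigma=0.5)
numeric = expected_probit_numeric(a=-1.3, b=0.8, mu=0.2, sigma=0.5)
```

The two values should agree to many decimal places, which is why the conditional probability can be evaluated without numerical integration over the random effects.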

2.2. Subgroup Identification

To identify subgroups, we follow the general CART framework. That is, we begin by splitting the data into two subgroups, or nodes, {i : zik > ωk} and {i : zik ≤ ωk}, by finding the covariate zk, k ∈ [1, 2, . . . , p] and corresponding cutpoint ωk which maximize the splitting criterion of interest. Note that for each k ∈ [1, 2, . . . , p], ωk could potentially be any one of the unique values of covariate zk observed in the data. This process is then repeated within each of these “child” nodes. We continue in this fashion until the data can no longer be subdivided, based on some pre-defined rules which govern the size of the tree. For instance, one may wish to limit the depth of the tree (i.e. how many times the above process is repeated), or set a minimum number of subjects that must be present in order to further subdivide a particular node in the tree. This procedure generally results in a large initial tree. Once this initial tree is obtained, a pruning algorithm is used to collapse “weak” splits, thereby reducing the large initial tree to the optimal subtree (given a specific pruning function). Details of our specific splitting criteria and pruning algorithm are given below. For the remainder of the paper, ξ will denote indices of subjects in the child node within which additional splits are currently being evaluated. When we are choosing the first split, ξ includes all subjects, but for subsequent splits, ξ will only contain a subgroup of the subjects.
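The exhaustive search over covariates and cutpoints described above can be sketched as follows. This is only an illustration under simplifying assumptions: the function names and the minimum node size argument are hypothetical, and the toy criterion (a squared difference in mean outcome) is a stand-in for the splitting criteria defined later, not one of them.

```python
def best_split(rows, covariate_names, criterion, min_node_size=20):
    """Return (score, covariate, cutpoint) maximizing `criterion`, or None.

    Every observed value of every covariate is tried as a cutpoint; the
    right node holds rows with value > cutpoint, the left node the rest.
    """
    best = None
    for name in covariate_names:
        for cut in sorted({row[name] for row in rows}):
            right = [row for row in rows if row[name] > cut]
            left = [row for row in rows if row[name] <= cut]
            # Skip splits that would create a node that is too small.
            if len(left) < min_node_size or len(right) < min_node_size:
                continue
            score = criterion(left, right)
            if best is None or score > best[0]:
                best = (score, name, cut)
    return best


def squared_mean_difference(left, right):
    """Toy criterion (an assumption for illustration only)."""
    def mean_y(node):
        return sum(r["y"] for r in node) / len(node)
    return (mean_y(right) - mean_y(left)) ** 2


# Hypothetical data: the outcome changes at age 30, BMI is irrelevant.
rows = [
    {"age": age, "bmi": 20 + age % 3, "y": 1 if age > 30 else 0}
    for age in range(20, 40)
]
best = best_split(rows, ["age", "bmi"], squared_mean_difference, min_node_size=5)
```

Applying `best_split` recursively within each child node, until the stopping rules are met, yields the large initial tree that is later pruned.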

2.2.1. Choosing Splits

Identifying subgroups of the population within which prediction based on joint models (1) and (2) is especially accurate amounts to identifying subgroups within which ci(t*) is especially useful in classifying Di. In other words, we would like to identify covariate-by-c(t*) interactions. To achieve this, we will choose splits using a statistic which is analogous to that of Su et al. (2009). Let ψk be the one-dimensional partition of ξ defined by covariate zk and cutpoint ωk. Our splitting criterion, sP (ψk) (or more generally sP (ψ)), will be the squared statistic which would be used to test H0 : α2 = 0 in the model

| (3) |

i.e. sP (ψk) = [α̂2/SE(α̂2)]2, where SE(α̂2) is a standard error estimate for α̂2. Note that, in (3), the level of enhancement in the region zk > ωk is controlled by the magnitude of α2, i.e. if α2 = 0, this region is not enhanced. Thus, when evaluating splits, we will choose the zk/ωk pair which maximizes sP (ψk), as this suggests the highest degree of enhancement. Under Model (3), and following arguments similar to those used in Section 2.1 for Model (2), we have

| (4) |

We obtain sP(ψ) by maximizing the conditional log-likelihood of D given Z, Y:

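$$\ell(\eta_0, \eta_1, \alpha_0, \alpha_1, \alpha_2) = \sum_{i \in \xi} \left[ D_i \log \Psi^{(4)}_i + (1 - D_i) \log\{1 - \Psi^{(4)}_i\} \right] \qquad (5)$$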

with respect to η0, η1, α0, α1 and α2, where Ψ(4) denotes the conditional probability defined by equation (4). In practice, when maximizing (5), bi is replaced by the empirical Bayes estimator, b̂i, i.e. E(ci(t*)|Yi, zi) is replaced by ĉi(t*), and we replace var[E(ci(t*)|Yi, zi) − ci(t*)] with an estimate of var[ĉi(t*)−ci(t*)] (see Albert (2012) for additional details). As noted by Albert (2012), equation (4) allows for the estimation of η0, η1, α0, α1 and α2 accounting for the calibration error in using a plug-in estimator for bi. Occasionally, when analyzing real data, the Hessian matrix for η0, η1, α0, α1 and α2 can be “nearly singular,” causing numerical problems. To combat this, we include a very small ridge penalty on η0, η1, α0, α1 and α2 (specifically, 10⁻⁶) in (5) when analyzing real data. This does not noticeably impact the parameter estimates, but dramatically improves numerical stability.

It is worth noting that, in order to identify the optimal split in practice, all possible cutpoints for each of the p covariates must be considered every time we partition the data, which means sP(ψk) is generally computed a very large number of times when constructing a tree. Thus, constructing a tree using sP (ψ) can be computationally expensive, particularly when some or all of the covariates are continuous. For this reason, we also consider a splitting criterion based on a linear model, which is more computationally efficient than sP (ψ). In particular, we consider the square of the statistic that would be used to test H0 : γ3 = 0 in the model

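$$c_i(t^*) = \gamma_0 + \theta^T z_i + \gamma_1 D_i + \gamma_2 I(z_{ik} > \omega_k) + \gamma_3 D_i I(z_{ik} > \omega_k) + \varepsilon_i \qquad (6)$$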

This criterion will be referred to as sL(ψ), and can be obtained using standard software. In practice, ci(t*) is replaced by ĉi(t*) to obtain sL(ψ). It should be noted that when fitting model (6), we assume ĉi(t*), i = 1, …, n are iid normal random variables. Though ĉi(t*), i = 1, …, n are not independent in the finite sample because of the plug-in estimator β̂, they are asymptotically independent following the results of Jiang (1998), and thus we feel that our assumptions of independence and normality are reasonable.
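As a rough sketch of how sL(ψ) might be computed for one candidate split, the code below fits an ordinary least squares regression of ĉi(t*) on an intercept, the baseline covariates, Di, the split indicator and the Di-by-indicator product, and returns the squared t-statistic for the product term. The exact form of the linear model is an assumption here (a linear analog of the interaction model with γ3 as the interaction coefficient), the simulated data are hypothetical, and `s_linear` is not a function from any package.

```python
import numpy as np


def s_linear(c_hat, d, z, split_indicator):
    """Squared t-statistic for the D-by-split interaction in an OLS fit.

    Regresses c_hat on [1, z, D, I, D * I]; the last coefficient plays
    the role of gamma_3 under the assumed form of the linear model.
    """
    n = len(c_hat)
    design = np.column_stack(
        [np.ones(n), z, d, split_indicator, d * split_indicator]
    )
    coef, _, _, _ = np.linalg.lstsq(design, c_hat, rcond=None)
    resid = c_hat - design @ coef
    dof = n - design.shape[1]
    sigma2 = resid @ resid / dof
    cov = sigma2 * np.linalg.inv(design.T @ design)
    t_stat = coef[-1] / np.sqrt(cov[-1, -1])
    return t_stat ** 2


# Hypothetical data with a strong interaction in the region z_1 > 0.
rng = np.random.default_rng(0)
n = 400
z = rng.normal(size=(n, 2))
d = rng.integers(0, 2, size=n).astype(float)
indicator = (z[:, 0] > 0).astype(float)
c_hat = 0.2 * d + 1.5 * d * indicator + rng.normal(scale=0.5, size=n)

score = s_linear(c_hat, d, z, indicator)
```

Because each evaluation is a single least squares fit, this criterion is far cheaper to compute than one that requires maximizing a probit likelihood for every candidate cutpoint.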

Following the brief arguments outlined below, (6) can be viewed as an approximation to (3). Note that , so (3) can be approximately rewritten as

Furthermore, note that if (6) is assumed to hold, so that , and in addition, we assume that P(Di = 1|zi) follows a logistic model:

| (7) |

then we have

Thus, the linear model (6) is compatible with the probit model (3).

2.2.2. Pruning and Controlling Type-I Error

To select the “optimal” subtree, we employ the interaction-complexity measure proposed by Su et al. (2009):

$$G_\lambda(T) = \sum_{h \in T - \tilde{T}} G(h) - \lambda\,|T - \tilde{T}| \qquad (8)$$

where T is some tree, T − T̃ is the set of internal nodes of T, |T − T̃| is the number of internal nodes of T, i.e. its complexity, G(h) is the splitting criterion value (sP or sL) corresponding to node h, and λ ≥ 0 is a complexity parameter. Choosing λ = 0 returns the initial tree (i.e. no pruning), and increasing λ produces a nested sequence of subtrees, eventually leading to a “null” tree, containing no splits (if true, this means that predictive accuracy is the same for all subjects).
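To make the role of λ concrete, the sketch below computes the interaction-complexity of a small tree and the increasing sequence of λ values at which branches collapse. The weakest-link rule used here (collapse the branch with the smallest average split statistic per internal node) is an assumption borrowed from CART-style pruning, and the nested-dictionary tree representation and the G values are hypothetical.

```python
import copy


def internal_nodes(tree):
    """List the internal (split) nodes of a tree; terminal nodes are None."""
    if tree is None:
        return []
    return [tree] + internal_nodes(tree["left"]) + internal_nodes(tree["right"])


def interaction_complexity(tree, lam):
    """Sum of split statistics minus lam times the number of internal nodes."""
    nodes = internal_nodes(tree)
    return sum(node["G"] for node in nodes) - lam * len(nodes)


def branch_strength(node):
    """Average split statistic per internal node of the branch rooted at node."""
    nodes = internal_nodes(node)
    return sum(n["G"] for n in nodes) / len(nodes)


def weakest_link(tree, path=()):
    """Return (strength, path) for the branch with the smallest strength."""
    if tree is None:
        return None
    best = (branch_strength(tree), path)
    for side in ("left", "right"):
        cand = weakest_link(tree[side], path + (side,))
        if cand is not None and cand[0] < best[0]:
            best = cand
    return best


def prune_sequence(tree):
    """Lambda thresholds at which successive weakest branches collapse."""
    tree = copy.deepcopy(tree)
    lambdas = []
    while tree is not None:
        strength, path = weakest_link(tree)
        lambdas.append(strength)
        if not path:
            tree = None  # the root collapses: the null tree is reached
        else:
            parent = tree
            for side in path[:-1]:
                parent = parent[side]
            parent[path[-1]] = None
    return lambdas


# Hypothetical three-split tree: a root with two child splits.
toy_tree = {
    "G": 12.0,
    "left": {"G": 2.0, "left": None, "right": None},
    "right": {"G": 6.0, "left": None, "right": None},
}
lambda_sequence = prune_sequence(toy_tree)
null_lambda = lambda_sequence[-1]  # smallest lambda returning the null tree
```

In this toy example the weak left split is removed first, then the right split, and the root split is the last to go, so the final element of the sequence is the smallest λ for which no splits remain.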

As previously mentioned, in order to control the risk of false positives, we explicitly incorporate the desired type-I error rate into the selection of λ. To do this, we employ a parametric bootstrap based on the joint models (1) and (2). Model (2) can be viewed as a “null” model, as it assumes a fixed α for the entire population. Thus, we can use the resulting estimated conditional probabilities to perform a parametric bootstrap. Suppose we have fit the joint models (1) and (2), giving estimates of P(Di = 1|Yi, zi) for each subject, as well as random effect estimates, b̂1, …, b̂N. In addition, suppose we have obtained a tree, T0, using the above algorithm with one of the proposed splitting criteria. We now select λ as follows:

1. Using the estimated probabilities, generate new binary outcome indicators, $D_1^{(1)}, \ldots, D_N^{(1)}$, where $D_i^{(1)} \sim \text{Bernoulli}\{\hat{P}(D_i = 1 \mid Y_i, z_i)\}$.

2. Using the data with D replaced by $D^{(1)}$, obtain a tree, T(1).

3. Using (8), obtain the sequence of λ values which correspond to each subtree, and select the smallest value of λ which returns the null tree, say λ(1).

4. Repeat steps 1–3 M − 1 times, giving M “null” complexity parameter values, λ(1), …, λ(M).

5. Choose the “final” λ value, λδ, to be the 100(1 − δ)th percentile of λ(1), …, λ(M), where δ is the desired type-I error rate. The “final” tree, Tδ, is then found by using (8) to obtain the subtree of T0 which corresponds to λδ.

Because the bootstrap data is generated under a null model, using λδ to select the final tree should lead to the desired type-I error. Note that, though we use (8), we select the tuning parameter value which corresponds to a desired type-I error rate, rather than that which minimizes (8) (or a cross-validated estimate of (8)). For the remainder of this paper, λδP and λδL will denote the λδ values for trees constructed using sP(ψ) and sL(ψ) respectively.
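The bootstrap selection of λδ can be sketched generically as below. The tree-growing and pruning steps are abstracted into a user-supplied callable, `null_lambda`, which is a hypothetical interface; the toy statistic used in the example is only a stand-in for "grow a tree and return the smallest λ giving the null tree", and the constant probabilities are illustrative assumptions.

```python
import math
import random


def bootstrap_lambda(probabilities, null_lambda, n_boot=1000, delta=0.05, seed=0):
    """Return the 100(1 - delta)th percentile of bootstrap null lambdas."""
    rng = random.Random(seed)
    null_values = []
    for _ in range(n_boot):
        # Draw bootstrap outcomes from the fitted null-model probabilities.
        d_star = [1 if rng.random() < p else 0 for p in probabilities]
        # Grow a tree on the bootstrap outcomes and record the smallest
        # lambda that prunes it back to the null tree (delegated to callable).
        null_values.append(null_lambda(d_star))
    null_values.sort()
    # Smallest order statistic with at least 100(1 - delta)% of the
    # bootstrap values at or below it.
    index = max(0, math.ceil((1.0 - delta) * n_boot) - 1)
    return null_values[index]


def toy_null_lambda(d_star):
    """Stand-in statistic: squared z-test comparing two fixed halves."""
    half = len(d_star) // 2
    rest = len(d_star) - half
    p1 = sum(d_star[:half]) / half
    p2 = sum(d_star[half:]) / rest
    pooled = sum(d_star) / len(d_star)
    if pooled in (0.0, 1.0):
        return 0.0
    se2 = pooled * (1.0 - pooled) * (1.0 / half + 1.0 / rest)
    return (p1 - p2) ** 2 / se2


lambda_delta = bootstrap_lambda(
    [0.2] * 200, toy_null_lambda, n_boot=1000, delta=0.05, seed=1
)
```

Any split in the observed tree whose branch survives pruning at `lambda_delta` is then more extreme than what the null model produces in roughly 100(1 − δ)% of bootstrap replicates.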

2.2.3. Evaluation of Identified Subgroups

The selection of a non-null tree suggests that one or more subgroups exist; however, once a tree is selected, one will generally wish to assess the degree of enhancement within each of the identified subgroups, which in our case means assessing the strength of the relationship between ci(t*) and Di within each subgroup. To do this for a given subgroup, say A, we simply obtain the within-A test statistic for α, i.e. α̂A/SE(α̂A), by re-fitting (2) using only subjects in A. We then compare α̂A/SE(α̂A) to the complete-data statistic, α̂/SE(α̂). Note that, because trees may vary widely in complexity, A could be one-dimensional or multi-dimensional. It is desirable that we remain consistent with the form of the model used to choose splits, so when choosing splits with sL(ψ), we assess enhancement of identified subgroups using the linear analog of (2). That is, the enhancement of A is assessed using γ̂1A/SE(γ̂1A) based on ci(t*) = γ0 + θTzi + γ1Di + εi. These statistics may be positive or negative, and any value which is larger than the complete data value in magnitude suggests potential enhancement. Rather than suggesting a ‘hard’ rule for determining enhancement, we recommend that the practitioner compute these statistics and use them as a guide to describe the characteristics of the identified subgroups. If preferred, one could instead directly compare the within-A estimate and the complete data estimates (i.e. without rescaling by the standard errors).

2.2.4. Evaluating Early Prediction

Recall that one of our goals is to identify subjects for whom accurate prediction can be achieved using only early measurements, i.e. those taken at or before some pre-specified time t̃. To address this issue, we will consider the use of reduced-data random effect estimates to compute our splitting criteria.

When considering all biomarker measurements, splits are chosen using the best linear unbiased prediction (BLUP) of bi, which for subject i is

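$$\hat{b}_i = \hat{V} W_i^T \left( W_i \hat{V} W_i^T + \hat{\sigma}_\varepsilon^2 I_{n_i} \right)^{-1} \left( Y_i - X_i \hat{\beta} \right) \qquad (9)$$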

where V̂ is the estimated random effect variance, σ̂ε is the estimated error standard deviation, β̂ is the fixed effect parameter estimate, and Ini is the ni × ni identity matrix. Thus, to assess early prediction, we simply compute (9) using only those observations which were taken at or before time t̃. That is, we select splits using the reduced data BLUP of bi:

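$$\hat{b}_i^{\Omega_i(\tilde{t})} = \hat{V} \left(W_i^{\Omega_i(\tilde{t})}\right)^T \left\{ W_i^{\Omega_i(\tilde{t})} \hat{V} \left(W_i^{\Omega_i(\tilde{t})}\right)^T + \hat{\sigma}_\varepsilon^2 I_{n_i}^{\Omega_i(\tilde{t})} \right\}^{-1} \left( Y_i^{\Omega_i(\tilde{t})} - X_i^{\Omega_i(\tilde{t})} \hat{\beta} \right) \qquad (10)$$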

where the superscript Ωi(t̃) indicates that only the rows which correspond to observations taken at or before time t̃ are used. It should be noted that V̂, σ̂ε and β̂ in (10) are exactly the same as in (9), i.e. they are calculated using data from all time points. The proposed methods are designed to use data from all time points in order to determine if prediction based on a subgroup of biomarker measurements is more accurate in certain subgroups, so it is sensible to use all data to obtain these estimates, as the true underlying values of these parameters are fixed with respect to the number of biomarker observations.
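The complete-data and reduced-data BLUPs differ only in which observations enter the calculation, which the following sketch tries to make explicit for the quadratic design used in this paper. All numerical values (fixed effects, random effect covariance, error standard deviation, measurements) are hypothetical, and `blup` is an illustrative helper rather than a function from an existing package.

```python
import numpy as np


def blup(y, times, beta_hat, v_hat, sigma_eps_hat, t_max=None):
    """BLUP of b_i with X_i = W_i = [1, t, t^2].

    If t_max is given, only observations taken at or before t_max are
    used, while beta_hat, v_hat and sigma_eps_hat are left unchanged.
    """
    y = np.asarray(y, dtype=float)
    times = np.asarray(times, dtype=float)
    if t_max is not None:
        keep = times <= t_max
        y, times = y[keep], times[keep]
    w = np.column_stack([np.ones_like(times), times, times ** 2])
    marginal_cov = w @ v_hat @ w.T + sigma_eps_hat ** 2 * np.eye(len(times))
    resid = y - w @ beta_hat
    return v_hat @ w.T @ np.linalg.solve(marginal_cov, resid)


# Hypothetical estimates, assumed to come from a fit to all time points.
beta_hat = np.array([5.0, 1.0, -0.05])
v_hat = np.diag([1.0, 0.25, 0.04])
sigma_eps_hat = 0.5

times = [1.0, 2.0, 3.0, 4.0]
y = [6.72, 7.9, 8.4, 9.1]  # hypothetical measurements for one subject

b_full = blup(y, times, beta_hat, v_hat, sigma_eps_hat)
b_early = blup(y, times, beta_hat, v_hat, sigma_eps_hat, t_max=1.0)
```

With a single early observation the reduced-data BLUP simply shrinks that observation's residual toward zero and distributes it across the three random effects in proportion to the corresponding column of the random effect covariance.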

3. Application to Fetal Growth Data

We are interested in identifying subgroups of women for whom prediction of LGA or SGA based on some or all of the available ultrasound measurements is more (or less) accurate, should they exist. This is an important problem, as early prediction could have implications for intervention. A SGA birth might indicate growth restriction, while a LGA birth could be associated with an increased likelihood of being overweight in adulthood. If such a subgroup is identified, it could also have implications for the number and timing of ultrasound measurements in pregnancy. For instance, should everybody receive multiple ultrasounds throughout pregnancy, or should some women only receive them earlier or later in pregnancy? In addition, the identification of subgroups may suggest that risk stratification should be done separately within these subgroups.

To this end, the proposed methods were applied to data from the NICHD study of successive small-for-gestational-age births in Scandinavia. The study included 1945 multiparous women of Caucasian origin who spoke one of the Scandinavian languages, had a singleton pregnancy, and were registered by the study center before 20 weeks of gestation (Zhang et al., 2012). About 30% of these women were a random reference sample from a Nordic population of Caucasians, and the rest were considered to be at high risk for a SGA birth, meaning they had previously delivered a low-birthweight (< 2750 g) infant, experienced a stillborn pregnancy or neonatal death, experienced two or more spontaneous miscarriages, had a history of phlebitis, had initial systolic blood pressure above 140 mmHg, had a previous preterm birth, had a prepregnancy weight at first clinic visit of less than 50 kg, currently smoked or smoked at conception, or currently used alcohol (Zhang et al., 2012). Each woman was scheduled to receive an ultrasound at approximately 17, 25, 33 and 37 weeks of gestation. In order to compare subgroup identification when predicting with only early measurements versus using complete longitudinal data, we limit ourselves to the observations with complete baseline covariate data, resulting in an analysis sample of 1699 subjects.

We considered estimated fetal weight on the log scale, which was measured at approximately 17, 25, 33 and 37 weeks of gestation. Using this biomarker, we separately considered the prediction of LGA and SGA births. The baseline covariates considered as candidates to potentially define subgroups in this analysis were the mother’s age (continuous), smoking behavior (average number of cigarettes smoked per day - essentially continuous), body mass index (BMI) (continuous) and history of previous SGA birth (binary – yes or no). To obtain estimates of the fixed effect parameters, and the error and random effect variances, (1) was fit using the ‘complete’ data, i.e. all available observations on all 1699 subjects. For both LGA and SGA, we constructed trees using the complete data BLUPs, as well as those based only on measurements taken at or before 35, 27 and 19 weeks of gestation. That is, we considered t̃ = 41 weeks (the maximum observed gestational age), 35 weeks, 27 weeks and 19 weeks. This was done twice for each outcome: once using sL(ψ) and once using sP(ψ). Thus, in total, 16 trees were constructed. To help ensure numerical stability, each initial tree was required to have at least 20 observations in each node, at least five cases and controls in each node, and was grown to a maximum depth of five. We also include a small ridge penalty (10⁻⁶) when maximizing (5), as noted in Section 2.2.1. All initial trees were pruned using (8) by selecting the complexity parameter value corresponding to a type-I error level of 0.05 based on 1000 bootstrap data sets, i.e. λ0.05P for sP(ψ) and λ0.05L for sL(ψ).

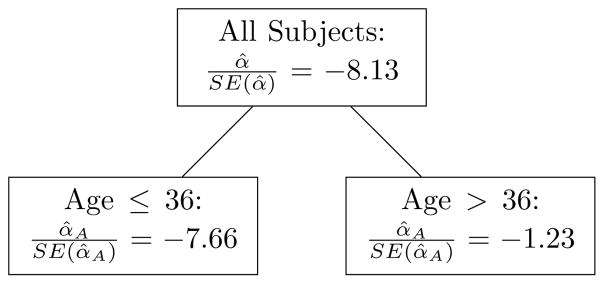

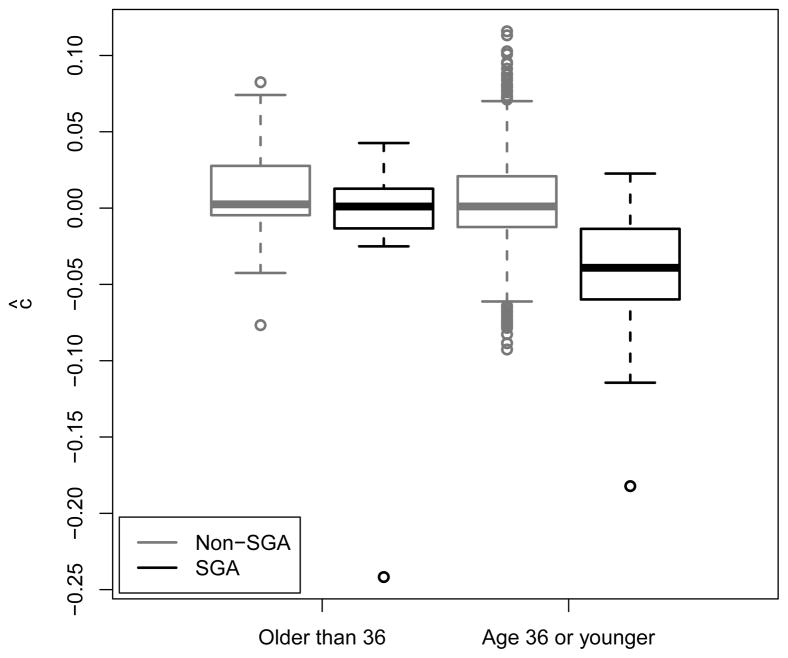

Among the 16 trees we considered, only the SGA tree constructed using the complete data BLUPs and probit splitting criterion (sP(ψ)) was found to be non-null at the 0.05 level. In fact, the largest complexity parameter value which corresponds to this tree is larger than all but five of the 1000 bootstrap λP values, meaning that this tree is actually non-null at the 0.005 level. Thus, this tree would still have been identified with a Bonferroni correction within each outcome (i.e. set the type-I error level at 0.05/8 = 0.00625). As shown in Figure 2, the identified tree suggests that prediction of SGA birth based on measurements taken at or before 41 weeks of gestation is quite good for women who are 36 years of age or younger (n = 1629), but is relatively poor for women over the age of 36 (n = 70). This potential interaction is illustrated in Figure 3, where we can see considerable separation between SGA and non-SGA births in the younger group, but only minimal separation in the older group.
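The arithmetic behind this comparison can be sketched as a bootstrap p-value computation. The null λ values below are hypothetical placeholders chosen only so that exactly five of 1000 are at least as large as the observed value, and the factor of eight reflects the eight trees (four values of t̃ by two splitting criteria) built for each outcome.

```python
def bootstrap_p_value(observed_lambda, null_lambdas):
    """Proportion of bootstrap null lambdas at least as large as the observed one."""
    exceed = sum(1 for lam in null_lambdas if lam >= observed_lambda)
    return exceed / len(null_lambdas)


# Hypothetical null values; the observed value exceeds all but five of them.
null_lambdas = [float(k) for k in range(1000)]
observed_lambda = 994.5

p_value = bootstrap_p_value(observed_lambda, null_lambdas)

# Bonferroni-adjusted level within one outcome: 4 time windows x 2 criteria.
bonferroni_level = 0.05 / 8
still_significant = p_value <= bonferroni_level
```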

Fig. 2.

SGA tree for t̃= 41 weeks, identified using sP (ψ).

Fig. 3.

SGA interaction box plot for t̃= 41

4. Simulations

To assess the performance of the proposed methods, a simulation study was performed. For six of our scenarios, 1000 data sets of size 1000 were generated from the joint models:

where ci(4.5) = bi0 + 4.5bi1 + 4.5²bi2, A = {z : z1 = 1, z2 = 1}, ε’s were iid N(0, 0.5), Z’s were iid Bernoulli(0.5), observation times were t = 1, 2, 3, and 4, bi ~ N(0, V), with

and the correlation among the random effects was 0.4. The choice of t* was motivated by our interest in fetal growth, with 4.5 being analogous to a gestational age of approximately 39 weeks. The probit model intercept of −1.3 was chosen to give a prevalence of approximately 20%.

Scenario 1 is a null case in which, for all subjects, the longitudinal measurements are useless for predicting the binary outcome. Scenario 2 is also null, i.e. no true subgroup exists, but in this case, prediction is very good for all subjects. In Scenario 3, prediction is moderately good for subjects with zi1 = 1 and zi2 = 1, but for all others, Yi is useless for predicting Di. In Scenario 4, prediction is at least moderately good for all subjects, and slightly enhanced for those with zi1 = 1 and zi2 = 1. In Scenario 5, prediction is again at least moderately good for all subjects, but in this case, subjects with zi1 = 1 and zi2 = 1 are very strongly enhanced. Scenarios 1–5 were chosen to assess the performance of the proposed methods when all longitudinal measurements are used (i.e. t̃= 4); however, Scenario 6 is an “early prediction” case, with α0 = 0.1 and α1 = 2 being selected so that prediction using only observations taken at time t̃ = 1 was poor overall, but good for subjects with zi1 = 1 and zi2 = 1. Thus, in Scenario 6, only the first biomarker measurement will be used to select splits.

We also considered a scenario based on the Scandinavian Fetal Growth data, which we refer to as Scenario 7. To obtain all parameter values for this scenario, the Scandinavian data were modeled using binary versions of the three continuous baseline covariates to allow for computational efficiency in the simulations. In particular, mother’s age was defined to be 1 if it was greater than 36 and 0 otherwise (the subgroup identified in our real data analysis - about 5% of values are 1s), and mother’s BMI and average cigarettes smoked per day were defined to be 1 for values larger than the median and 0 otherwise. History of SGA birth was not changed, as it was already binary (approximately 25% 1s). Once the parameter estimates were obtained, we generated 1000 data sets of size 1700 (the number of subjects in the Scandinavian data set) from the models:

where ε’s were iid N(0, 0.023). Covariates z1, z2, z3 and z4 were analogous to the binary age, binary BMI, history of SGA birth, and binary cigarettes smoked covariates mentioned above, and were thus generated as iid Bernoulli random variables with “success” probabilities of 0.05, 0.5, 0.25 and 0.5, respectively. Observation times were t = 1, 2, 3, and 4, and bi was multivariate normal with covariance and correlation matrices (respectively)

For each simulated data set, we constructed initial trees using sP (ψ) and sL(ψ). To help ensure numerical stability, these trees were required to have at least 20 observations in each node, at least five cases and controls in each node, and were grown to a maximum depth of five. These initial trees were pruned using (8) based on a desired type-I error rate of 0.05 obtained from 1000 bootstrap samples. In order to evaluate the ability of our procedure to identify “truly” enhanced individuals, we consider an “automated” version of the enhancement classification step. In particular, if a non-null tree was selected, terminal nodes for which α̂A > α̂ were classified as “enhanced.” The final identified subgroup consisted of all terminal nodes which were classified as enhanced, and whenever a subgroup was identified, we computed the sensitivity, specificity, positive predictive value, negative predictive value and the number of subjects it contained.
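For a single simulated data set, the accuracy summaries described above can be computed as in the sketch below. The indicator vectors are hypothetical, and ratios with an empty denominator are returned as `None`, which is one possible convention for cases where a quantity is undefined (for example, sensitivity when no subject is truly enhanced).

```python
def subgroup_metrics(called_enhanced, truly_enhanced):
    """Sensitivity, specificity, PPV, NPV and size of the identified subgroup."""
    pairs = list(zip(called_enhanced, truly_enhanced))
    tp = sum(1 for c, t in pairs if c and t)
    fp = sum(1 for c, t in pairs if c and not t)
    fn = sum(1 for c, t in pairs if not c and t)
    tn = sum(1 for c, t in pairs if not c and not t)

    def ratio(num, den):
        # Undefined ratios (empty denominator) are reported as None.
        return num / den if den > 0 else None

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "subgroup_size": tp + fp,
    }


# Hypothetical indicators for eight subjects.
truly_enhanced = [1, 1, 1, 0, 0, 0, 0, 0]
called_enhanced = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = subgroup_metrics(called_enhanced, truly_enhanced)
```

Averaging these quantities over the simulated data sets in which they are defined gives summaries of the kind reported in the simulation results.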

Looking at Table 1, we can see that, for non-null cases, i.e. Scenarios 3–5, trees constructed using sP(ψ) have higher power to detect a tree than those constructed using sL(ψ). As might be expected, when a clearly enhanced subgroup exists, as in Scenarios 3 and 5, both criteria have very good power, and in Scenario 4, where everyone has at least moderately good prediction, and the true subgroup is only mildly enhanced, both methods appear to lose power. This is especially true for sL(ψ), which only identified a non-null tree 35% of the time in Scenario 4. In the null scenarios (1 and 2), both criteria led to approximately the correct type-I error rate, suggesting that, in this case, the parametric bootstrap may be incorporated into the pruning process to effectively control type-I error.

Table 1. Simulation results

| Splitting Criterion | Sens. | Spec. | PPV | NPV | Subgroup Size (Est.) | Subgroup Size (True) | Prop. Tree non-null |
|---|---|---|---|---|---|---|---|
| Scenario 1 | | | | | | | |
| sP (ψ)¹ | - | 0.97 | - | 1.00 | 33.10 | 0 | 0.062 |
| sL(ψ)¹ | - | 0.97 | - | 1.00 | 30.67 | 0 | 0.060 |
| Scenario 2 | | | | | | | |
| sP (ψ)¹ | - | 0.98 | - | 1.00 | 22.69 | 0 | 0.046 |
| sL(ψ)² | - | 0.98 | - | 1.00 | 20.38 | 0 | 0.043 |
| Scenario 3 | | | | | | | |
| sP (ψ)¹ | 0.97 | 0.95 | 0.92 | 0.99 | 278.58 | 250.76 | 0.972 |
| sL(ψ)³ | 0.93 | 0.94 | 0.91 | 0.98 | 276.98 | 250.75 | 0.941 |
| Scenario 4 | | | | | | | |
| sP (ψ)³ | 0.68 | 0.89 | 0.77 | 0.92 | 252.55 | 250.75 | 0.692 |
| sL(ψ)⁴ | 0.34 | 0.91 | 0.63 | 0.83 | 152.16 | 250.73 | 0.354 |
| Scenario 5 | | | | | | | |
| sP (ψ)⁵ | 1.00 | 0.96 | 0.93 | 1.00 | 283.22 | 250.77 | 1.00 |
| sL(ψ)³ | 0.89 | 0.91 | 0.85 | 0.97 | 288.41 | 250.78 | 0.891 |
| Scenario 6⁶ | | | | | | | |
| sP (ψ)⁴ | 0.87 | 0.92 | 0.86 | 0.97 | 279.69 | 250.8 | 0.877 |
| sL(ψ)¹ | 0.72 | 0.90 | 0.80 | 0.93 | 253.71 | 250.76 | 0.729 |
| Scenario 7 | | | | | | | |
| sP (ψ)⁷ | 0.83 | 1.00 | 1.00 | 0.99 | 72.83 | 85.10 | 0.833 |
| sL(ψ)⁷ | 0.53 | 0.99 | 0.95 | 0.98 | 63.79 | 85.10 | 0.538 |

¹⁻⁵, ⁷ Due to numerical issues, results are based on 996, 994, 995, 992, 976 and 974 data sets, respectively.

⁶ Splits were chosen using only the first longitudinal measurement, i.e. t̃ = 1.

Sens., Spec., PPV and NPV denote the average (of the non-null values among the 1000 simulated data sets) sensitivity, specificity, positive predictive value and negative predictive value, respectively. To calculate these, subjects in terminal nodes classified as “enhanced” were considered to be “called enhanced” and those in the true subgroups (i.e. those with z1 = z2 = 1 for Scenarios 3–6; those with for Scenario 7) were considered to be “truly enhanced.”

Looking now at sensitivity, specificity, and positive and negative predictive values, we can see that, in Scenarios 3–5, sP (ψ) trees tend to outperform those based on sL(ψ). In Scenarios 3 and 5, both methods do an excellent job of identifying truly enhanced individuals, while also avoiding falsely classifying non-enhanced individuals as “enhanced.” In Scenario 4, both methods have good specificity and negative predictive values, but seem to have more difficulty identifying truly enhanced subjects, especially when splits are chosen using sL(ψ). Trees based on sP (ψ) still perform relatively well in this scenario.

Looking at the results for Scenario 6, we can see that the proposed methods were very successful at identifying those subjects for whom accurate prediction based on only the first longitudinal marker measurement was possible, particularly when splits were chosen using sP (ψ). Again we see relatively good sensitivity, specificity, and positive and negative predictive values for both splitting criteria, suggesting that both methods can effectively identify the truly enhanced individuals, without falsely classifying too many non-enhanced individuals as “enhanced.”

In the “fetal growth” scenario (Scenario 7), trees constructed using sP (ψ) again had good power and sensitivity, and excellent specificity, positive and negative predictive values. Trees constructed using sL(ψ) also had excellent specificity, positive and negative predictive values, but were null nearly half of the time, and thus lacked sensitivity. This may be due to the fact that the true subgroup was quite small, consisting of only about 5% of the population. Overall, the proposed methods are very promising for identifying the correct subgroup. In practice, we recommend constructing trees using sP (ψ) unless the computational burden is unreasonable, as this approach can be much more powerful, particularly when only modest subgroups exist. When very strong subgroups exist, either method should work well.

5. Discussion

We considered a tree-based approach to identifying subgroups for which longitudinal biomarker measurements are useful in predicting a subsequent binary outcome, and proposed the use of the parametric bootstrap to explicitly incorporate the desired type-I error rate into our pruning algorithm. A simulation study was undertaken, and the proposed methods were found to effectively identify truly enhanced individuals in a number of different scenarios, while also effectively controlling the type-I error.
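The general idea of calibrating a pruning decision to a desired type-I error rate can be sketched as follows. This is a generic illustration only and is not the pruning criterion (8): the tree statistic, the null data-generating model, and the function names `bootstrap_threshold`, `keep_tree` and `toy_null_stat` are all hypothetical stand-ins. The sketch draws a statistic repeatedly under a fitted null model, takes the empirical (1 − α) quantile as a threshold, and retains a non-null tree only when the observed statistic exceeds it.

```python
import math
import random


def bootstrap_threshold(simulate_null_stat, n_boot=1000, alpha=0.05, seed=0):
    """Empirical (1 - alpha) quantile of a statistic under a null model.

    simulate_null_stat: callable taking a random.Random instance and
        returning one draw of the statistic computed on data generated
        under the null (hypothetical placeholder for "generate data from
        the fitted null model, grow a tree, record its statistic").
    """
    rng = random.Random(seed)
    null_stats = sorted(simulate_null_stat(rng) for _ in range(n_boot))
    # Index of the smallest order statistic with at least a (1 - alpha)
    # fraction of the bootstrap draws at or below it.
    k = min(n_boot - 1, math.ceil((1 - alpha) * n_boot) - 1)
    return null_stats[k]


def keep_tree(observed_stat, threshold):
    """Retain the non-null tree only if it beats the null threshold."""
    return observed_stat > threshold


def toy_null_stat(rng, n_splits=10):
    # Toy stand-in: the maximum of several squared standard normal draws,
    # mimicking a "best split" statistic when no subgroup exists.
    return max(rng.gauss(0.0, 1.0) ** 2 for _ in range(n_splits))


threshold = bootstrap_threshold(toy_null_stat, n_boot=1000, alpha=0.05)
```

Because the threshold is taken from the null distribution of the whole search (here, the maximum over candidate splits), the chance of retaining a tree when no subgroup exists is approximately α, provided the null model is adequate.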

In this paper, our goal was to identify subgroups within which prediction based on a specific, pre-defined model is especially good (or bad). That is, we used the subgroup analysis in the hope of improving our understanding of the predictive accuracy of the chosen model. Alternatively, one could first implement a subgroup identification procedure in order to help determine which prediction model should be used for a particular application. It may be interesting to consider this further.

In the context of prediction, Hothorn et al. (2006) considered the use of model-based splitting criteria, and proposed a conditional inference framework, which allowed them to overcome the issues of overfitting and variable selection bias brought on by recursive partitioning procedures. An area of future research could be to consider a similar conditional framework in the context of subgroup identification. In addition to helping with overfitting and variable selection bias, this could help us to reduce the computational burden of our procedure, as the framework of Hothorn et al. (2006) does not require the evaluation of all possible splits for nominal covariates.

We proposed a splitting criterion, sP (ψ), based on our posited model, as well as sL(ψ), a criterion based on a linear approximation. We favor sP (ψ), as it is consistent with the proposed modeling framework; however, we recognize the substantial computational burden of this approach. For example, using the Biowulf Linux cluster at the NIH (http://biowulf.nih.gov), implementing our entire procedure for a single data set from simulation Scenario 7 took approximately 15 hours using sP (ψ), whereas with sL(ψ) the entire procedure took approximately 7 minutes on average. Thus, though it suffers from some loss of power in a few scenarios, sL(ψ) is an attractive alternative to sP (ψ).

The proposed methods were motivated by and applied to data from the NICHD study of successive small-for-gestational-age births in Scandinavia. Though further validation is needed, the results of this analysis suggest that, for women over the age of 36, prediction (using joint models (1) and (2)) of SGA birth from estimated fetal weights taken at or before 41 weeks of gestation may be considerably less accurate than that for women who are 36 years of age or younger.

Acknowledgments

This research is supported by the Intramural Research Program of the National Institutes of Health (NIH), NICHD. For all simulations, we utilized the high performance computational capabilities of the Biowulf Linux cluster at NIH, Bethesda, MD (http://biowulf.nih.gov). We thank Professor Xiaogang Su for sharing his recursive partitioning code with us. We would also like to thank the Associate Editor and two anonymous reviewers for their helpful comments, which we believe led to a greatly improved version of this manuscript.

References

- Albert PS. A linear mixed model for predicting a binary event from longitudinal data under random effects misspecification. Statistics in Medicine. 2012;31(2):143–154. doi: 10.1002/sim.4405.

- Assmann SF, Pocock SJ, Enos LE, Kasten LE. Subgroup analysis and other (mis)uses of baseline data in clinical trials. The Lancet. 2000;355(9209):1064–1069. doi: 10.1016/S0140-6736(00)02039-0.

- Bakketeig LS, Jacobsen G, Hoffman HJ, Lindmark G, Bergsjø P, Molne K, Rdsten J. Pre-pregnancy risk factors of small-for-gestational age births among parous women in Scandinavia. Acta Obstetricia et Gynecologica Scandinavica. 1993;72(4):273–279. doi: 10.3109/00016349309068037.

- Berger JO, Wang X, Shen L. A Bayesian approach to subgroup identification. Journal of Biopharmaceutical Statistics. 2014;24(1):110–129. doi: 10.1080/10543406.2013.856026.

- Bleich J, Kapelner A, George EI, Jensen ST. Variable selection for BART: An application to gene regulation. The Annals of Applied Statistics. 2014;8(3):1750–1781.

- Brookes ST, Whitley E, Peters TJ, Mulheran PA, Egger M, Davey Smith G. Subgroup analyses in randomised controlled trials: quantifying the risks of false-positives and false-negatives. Health Technology Assessment (Winchester, England). 2001;5(33):1–56. doi: 10.3310/hta5330.

- Chipman HA, George EI, McCulloch RE. BART: Bayesian additive regression trees. The Annals of Applied Statistics. 2010;4(1):266–298.

- Foster JC, Taylor JM, Ruberg SJ. Subgroup identification from randomized clinical trial data. Statistics in Medicine. 2011;30(24):2867–2880. doi: 10.1002/sim.4322.

- Hadlock F, Harrist R, Martinez-Poyer J. In utero analysis of fetal growth: a sonographic weight standard. Radiology. 1991;181(1):129–133. doi: 10.1148/radiology.181.1.1887021.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer; 2009.

- Hothorn T, Hornik K, Zeileis A. Unbiased recursive partitioning: A conditional inference framework. Journal of Computational and Graphical Statistics. 2006;15(3):651–674.

- Jiang J. Asymptotic properties of the empirical BLUP and BLUE in mixed linear models. Statistica Sinica. 1998;8:861–885.

- Lipkovich I, Dmitrienko A, Denne J, Enas G. Subgroup identification based on differential effect search: a recursive partitioning method for establishing response to treatment in patient subpopulations. Statistics in Medicine. 2011;30(21):2601–2621. doi: 10.1002/sim.4289.

- Liu D, Albert PS. Combination of longitudinal biomarkers in predicting binary events. Biostatistics. 2014;15(4):706–718. doi: 10.1093/biostatistics/kxu020.

- Loh WY, He X, Man M. A regression tree approach to identifying subgroups with differential treatment effects. Statistics in Medicine. 2015;34(11):1818–1833. doi: 10.1002/sim.6454.

- Negassa A, Ciampi A, Abrahamowicz M, Shapiro S, Boivin JF. Tree-structured subgroup analysis for censored survival data: Validation of computationally inexpensive model selection criteria. Statistics and Computing. 2005;15(3):231–239.

- Peto R, Collins R, Gray RN. Large-scale randomized evidence: Large, simple trials and overviews of trials. Journal of Clinical Epidemiology. 1995;48(1):23–40. doi: 10.1016/0895-4356(94)00150-o.

- Su X, Tsai C-L, Wang H, Nickerson DM, Li B. Subgroup analysis via recursive partitioning. Journal of Machine Learning Research. 2008;10:141–158.

- Su X, Zhou T, Yan X, Fan J, Yang S. Interaction trees with censored survival data. The International Journal of Biostatistics. 2009;4(1). doi: 10.2202/1557-4679.1071.

- Yusuf S, Wittes J, Probstfield J, Tyroler HA. Analysis and interpretation of treatment effects in subgroups of patients in randomized clinical trials. Journal of the American Medical Association. 1991;266(1):93–98.

- Zhang B, Tsiatis AA, Laber EB, Davidian M. A robust method for estimating optimal treatment regimes. Biometrics. 2012;68(4):1010–1018. doi: 10.1111/j.1541-0420.2012.01763.x.

- Zhang J, Kim S, Grewal J, Albert PS. Predicting large fetuses at birth: do multiple ultrasound examinations and longitudinal statistical modelling improve prediction? Paediatric and Perinatal Epidemiology. 2012;26(3):199–207. doi: 10.1111/j.1365-3016.2012.01261.x.