Abstract

Objectives

The purpose of this study was twofold: 1) to determine the effect of an acute simulated unilateral hearing loss on children’s spatial release from masking in two-talker speech and speech-shaped noise, and 2) to develop a procedure to be used in future studies that will assess spatial release from masking in children who have permanent unilateral hearing loss. There were three main predictions. First, spatial release from masking was expected to be larger in two-talker speech than in speech-shaped noise. Second, simulated unilateral hearing loss was expected to worsen performance in all listening conditions, but particularly in the spatially separated two-talker speech masker. Third, spatial release from masking was expected to be smaller for children than for adults in the two-talker masker.

Design

Participants were 12 children (8.7 to 10.9 yrs) and 11 adults (18.5 to 30.4 yrs) with normal bilateral hearing. Thresholds for 50%-correct recognition of Bamford-Kowal-Bench sentences were measured adaptively in continuous two-talker speech or speech-shaped noise. Target sentences were always presented from a loudspeaker at 0° azimuth. The masker stimulus was either co-located with the target or spatially separated to +90° or −90° azimuth. Spatial release from masking was quantified as the difference between thresholds obtained when the target and masker were co-located and thresholds obtained when the masker was presented from +90° or −90°. Testing was completed both with and without a moderate simulated unilateral hearing loss, created with a foam earplug and supra-aural earmuff. A repeated-measures design was used to compare performance between children and adults, and performance in the no-plug and simulated-unilateral-hearing-loss conditions.

Results

All listeners benefited from spatial separation of target and masker stimuli in the azimuthal plane in the no-plug listening conditions; this benefit was larger in two-talker speech than in speech-shaped noise. In the simulated-unilateral-hearing-loss conditions, a positive spatial release from masking was observed only when the masker was presented ipsilateral to the simulated unilateral hearing loss. In the speech-shaped noise masker, spatial release from masking in the no-plug condition was similar to that obtained when the masker was presented ipsilateral to the simulated unilateral hearing loss. In contrast, in the two-talker speech masker, spatial release from masking in the no-plug condition was much larger than that obtained when the masker was presented ipsilateral to the simulated unilateral hearing loss. When either masker was presented contralateral to the simulated unilateral hearing loss, spatial release from masking was negative. This pattern of results was observed for both children and adults, although children performed more poorly overall.

Conclusions

Children and adults with normal bilateral hearing experience greater spatial release from masking for a two-talker speech than a speech-shaped noise masker. Testing in a two-talker speech masker revealed listening difficulties in the presence of disrupted binaural input that were not observed in a speech-shaped noise masker. This procedure offers promise for the assessment of spatial release from masking in children with permanent unilateral hearing loss.

INTRODUCTION

Pediatric permanent unilateral hearing loss (UHL) represents a growing concern in the audiology community. This concern is due to the wide variability in developmental outcomes observed among children with permanent UHL and the lack of consensus regarding the audiologic management for this population. Children with permanent UHL often experience developmental difficulties, even in cases of mild UHL. This could be due, in part, to reduced access to binaural cues, which are important for speech perception in complex listening environments (Quigley & Thomure 1969; Bess et al. 1986; Brookhouser et al. 1991; Lieu et al. 2010, 2012, 2013). Specifically, binaural cues facilitate spatial release from masking (SRM) when a target talker (e.g., the teacher) is spatially separated from background talkers (e.g., classmates). For adults with normal hearing, SRM is more pronounced when the masker is acoustically and perceptually complex (e.g., 1 or 2 competing talkers) relative to when the masker is noise or babble composed of many talkers (e.g., Arbogast et al. 2002; Freyman et al. 2001). Adults with permanent or simulated UHL achieve significantly less SRM than their counterparts with normal bilateral hearing, and this deficit is exacerbated in the presence of relatively complex maskers (e.g., two-talker speech; Marrone et al. 2008; Rothpletz et al. 2012). While previous studies demonstrate that children with permanent UHL experience degraded speech understanding in relatively steady maskers (e.g., Bess et al. 1986; Ruscetta et al. 2005; Lieu et al. 2013), their ability to achieve SRM in the presence of complex maskers has not been systematically investigated. This is a critical gap in the literature considering recent evidence that the real-world performance of children with permanent bilateral hearing loss is better predicted by speech recognition in a two-talker than a steady noise masker (Hillock-Dunn et al. 2015).
The purpose of this study was twofold: 1) to determine the effect of an acute simulated UHL on SRM in two-talker speech and speech-shaped noise for children, and 2) to develop a procedure to be used in future studies that will assess SRM in children who have permanent UHL.

Population-based prevalence estimates of permanent UHL among children ages 6 to 19 years range from 3% to 6%, depending on how UHL is defined (Bess et al. 1998; Niskar et al. 1998; Fortnum et al. 2001; Ross et al. 2010). Conventional wisdom has been that, with one normal-hearing ear, children with UHL will acquire speech and language normally and achieve age-appropriate developmental milestones. It is now recognized that children with UHL are at an increased risk for academic, cognitive, social-emotional, speech, and language problems relative to their peers with normal hearing in both ears (Bess & Tharpe 1984; Borg et al. 2002; Lieu et al. 2010; Ead et al. 2013). For instance, an estimated 36% to 54% of school-age children with UHL require educational assistance and/or receive speech-language therapy, and at least one-third of them experience behavioral problems in the classroom (Bess & Tharpe 1984; Oyler et al. 1988; Dancer et al. 1995; Sedey et al. 2002; Lieu et al. 2012). However, there are considerable individual differences in developmental outcomes among children with UHL. Despite increased early identification, variability in outcomes contributes to the lack of consensus regarding audiologic management for this population and results in a costly, failure-based model of intervention (Knightly et al. 2007; Porter & Bess 2011; Fitzpatrick et al. 2014). The field of audiology currently lacks assessment tools that predict which children with UHL are most at risk for functional communication deficits. A potential explanation for this void is that the majority of conventional clinical tools fail to capture the difficulties faced by children with UHL in the complex listening conditions they encounter in their everyday lives.

One of the reasons children with UHL often experience poorer outcomes than their peers with normal bilateral hearing is their lack of access to binaural cues. The head shadow effect, binaural squelch, and binaural summation are the three binaural effects traditionally associated with the benefit of listening with two ears relative to one in multi-source environments (Bronkhorst & Plomp 1988). In natural listening environments, listeners often turn to face a target of interest, such that it originates from 0° azimuth in listener-centric coordinates. Under these conditions, the target stimulus reaching the two ears is functionally identical. In contrast, when a masker is spatially separated from the target in azimuth, it will arrive at the listener’s ears at different times and with different intensities. Listeners are able to use these interaural time differences (predominantly below 1500 Hz) and interaural intensity differences (predominantly above 1500 Hz) to their advantage. The signal-to-noise ratio (SNR) will be better at the ear farther from the masker source due to the high-frequency acoustic shadow the head casts over that ear. By virtue of this head shadow effect, the listener can attend to the ear with the better SNR, improving speech recognition performance by 3 to 8 dB (Bronkhorst & Plomp 1988). Listeners also use information from the ear with the less favorable SNR. Access to interaural time differences associated with the target and masker stimuli at the two ears improves listeners’ performance by 3 to 7 dB; this effect is known as binaural squelch (e.g., Levitt & Rabiner 1967; Bronkhorst & Plomp 1988; Hawley et al. 2004). Even when the target and masker both originate from the front of a listener and there are no interaural time or level differences, listeners benefit from having access to two neural representations of the target and masker stimuli. This benefit is known as binaural summation, and it typically improves speech recognition performance in noise by 1 to 3 dB (Bronkhorst & Plomp 1988; Davis et al. 1990; Gallun et al. 2005).
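To give a sense of scale for the interaural time differences described above, the sketch below uses Woodworth's spherical-head approximation, ITD = (r/c)(θ + sin θ). This formula, the head radius of 8.75 cm, and the speed of sound of 343 m/s are assumptions introduced for illustration; none of them comes from this study. Under these assumptions a masker at ±90° reaches the near ear roughly 0.66 ms before the far ear, while a source at 0° produces no ITD.

```python
import math

def woodworth_itd(azimuth_deg, head_radius_m=0.0875, speed_of_sound_m_s=343.0):
    """Approximate interaural time difference (s) for a distant source.

    Woodworth spherical-head approximation, valid for -90 <= azimuth <= +90 degrees
    (0 = straight ahead, positive = right). Head radius and speed of sound are
    assumed typical values, not measurements from this study.
    """
    if not -90 <= azimuth_deg <= 90:
        raise ValueError("azimuth must lie between -90 and +90 degrees")
    theta = math.radians(abs(azimuth_deg))
    itd = (head_radius_m / speed_of_sound_m_s) * (theta + math.sin(theta))
    # Sign convention: positive ITD means the sound reaches the right ear first.
    return math.copysign(itd, azimuth_deg)

for az in (0, 90, -90):
    print(f"masker at {az:+d} deg: ITD = {woodworth_itd(az) * 1e3:+.3f} ms")
```

The spherical-head model ignores the pinnae and frequency dependence, so it is only a rough guide to the cues available at ±90°.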

The binaural benefit associated with spatially separating the target and masker on the azimuth is referred to as SRM. Under complex listening conditions, this improvement is thought to rely largely on auditory stream segregation, in which interaural difference cues are used to perceptually differentiate the target and masker streams (Licklider 1948; Bronkhorst & Plomp 1988; Bregman 1990; Zurek 1993; Freyman et al. 1999). SRM is often expressed as the difference in speech recognition performance between a condition in which target and masker stimuli are co-located in the front of the listener and a condition in which the target and masker stimuli are perceived to originate from different locations on the azimuthal plane. By assessing SRM, we can estimate the extent to which a listener uses binaural cues for hearing in complex listening environments.

In adults, SRM increases as target-masker similarity or stimulus uncertainty increases, presumably due to the increasing role of informational masking when the target and masker are co-located (Brungart 2001b; Freyman et al. 2001; Arbogast et al. 2002; Durlach et al. 2003; Culling et al. 2004; Hawley et al. 2004). Speech recognition in steady maskers such as speech-shaped noise is traditionally associated with energetic masking (e.g., Fletcher 1940; also see Stone et al. 2014). Energetic masking is the consequence of overlapping excitation patterns on the basilar membrane, reducing the fidelity with which target and masker stimuli are represented in the auditory periphery. Speech recognition in competing speech involves both energetic and informational masking. In contrast to energetic masking, informational masking reflects a reduced ability to segregate and selectively attend to a particular auditory object despite adequate peripheral encoding (Brungart 2001b). For a given angular separation, the SRM observed for informational maskers is typically much larger than that observed for energetic maskers. This is thought to reflect the greater segregation challenge in informational maskers. On average, adults with normal hearing bilaterally achieve 6–7 dB SRM in the presence of noise maskers, but around 18 dB SRM in the presence of one or two competing talkers (Carhart et al. 1969; Kidd et al. 1998; Bronkhorst 2000; Arbogast et al. 2002; Hawley et al. 2004).

Permanent or simulated UHL reduces SRM in adults. Rothpletz et al. (2012) demonstrated that SRM is essentially eliminated for adults with mild to profound permanent UHL for a speech-on-speech recognition task involving target and masker sentences that were digitally processed to substantially reduce energetic masking. SRM is also eliminated for a speech-on-speech recognition task when adults with normal hearing listen with an earplug and earmuff to simulate a mild UHL (average attenuation = 38.1 dB; Marrone et al. 2008). Limited access to the binaural difference cues supporting SRM may not fully explain the deficit associated with UHL in adults, however. When the target and masker are co-located in front of the listener, a condition in which both interaural time and level differences are functionally eliminated, adults with mild to profound UHL perform up to 4.5 dB worse than adults with normal hearing under conditions associated with substantial informational masking (Rothpletz et al. 2012). These results indicate that UHL degrades speech perception for adults in the presence of informational masking, whether or not interaural difference cues are available. Moreover, this decrease in performance for adults with UHL is observed even for mild hearing losses.

Similar to adults, children with normal hearing achieve greater SRM in the presence of informational relative to energetic masking. In general, SRM in young children with normal hearing ranges from 3 to 11 dB, depending on the stimuli and test conditions used (Litovsky 2005; Lovett et al. 2012). While some studies indicate that SRM continues to develop through childhood for complex maskers (Yuen & Yuan 2014), other data indicate that SRM is mature by 3 years of age (Litovsky 2005). On a four-alternative forced-choice spondee identification task, Johnstone and Litovsky (2006) found that 5- to 7-year-olds achieved significantly greater SRM in the presence of unaltered speech and time-reversed speech (3.4 dB and 6.7 dB, respectively) relative to modulated noise (0.5 dB). The overall finding that children achieved greater SRM for speech-based relative to noise maskers was interpreted as being due to greater informational masking. Although it is unclear why the SRM for time-reversed speech was greater than that observed for unaltered speech, the authors posited that the novelty of the time-reversed speech may have resulted in relatively greater informational masking, and therefore greater SRM.

There are relatively few data pertinent to SRM in school-age children with permanent sensorineural UHL, but available data indicate reduced benefit of target/masker spatial separation and poorer speech recognition overall for children with UHL (e.g., Bess et al. 1986; Bovo et al. 1988; Hartvig et al. 1989; Kenworthy et al. 1990; Updike 1994; Lieu et al. 2010; Noh & Park 2012; Lieu et al. 2013; Reeder et al. 2015). For example, Reeder et al. (2015) reported that 7- to 16-year-olds with moderately severe to profound sensorineural UHL performed worse than age-matched peers with normal hearing on a range of tasks. These tasks included monosyllabic word recognition in quiet and four-talker babble, sentence recognition in spatially diffuse restaurant noise, and spondee recognition in quiet, single-talker speech, and multitalker babble presented from 0°, +90°, or −90° azimuth. While the deficits associated with UHL tended to be largest when the target and masker originated from different locations in space, poorer performance relative to children with normal hearing was also observed in quiet and when the masker was co-located with the target. Poorer performance in children with UHL, even in the absence of a binaural difference cue, is consistent with the results of Bess et al. (1986); that study tested 6- to 13-year-olds with either normal hearing or moderate to severe sensorineural UHL and found a detrimental effect of UHL when the target stimulus was presented in quiet to the ear with normal hearing sensitivity. Data from Bess et al. (1986) also support the idea that the ability to benefit from SRM is related to a child’s listening challenges in daily life. Specifically, individual children with UHL who benefitted least from the head shadow effect also tended to experience more difficulty in school than those who were better able to use the head shadow effect (Bess et al. 1986). These studies suggest that children with UHL, who have compromised access to binaural cues, experience marked difficulties in complex listening environments characterized by multiple, co-located or spatially separate competing sound sources.

While previous studies of spatial hearing in children demonstrate a detrimental effect of UHL, none of those studies has explicitly considered the effect of informational versus energetic masking. We know that informational masking is associated with an especially pronounced SRM in children with normal hearing (Johnstone & Litovsky 2006; Misurelli & Litovsky 2015), and initial data are consistent with the idea that UHL in children is particularly detrimental in speech-based maskers (Reeder et al. 2015). Further motivation for considering children’s performance in the presence of informational masking is based on recent data from Hillock-Dunn et al. (2015). That study evaluated masked speech recognition in children with bilateral sensorineural hearing loss, with the target and masker both coming from 0° azimuth. Parental reports of their children’s everyday communication difficulties were strongly correlated with speech recognition in the two-talker speech masker, but not the speech-shaped noise masker. These data suggest that children’s susceptibility to informational masking has the potential to provide valuable new information about the communication abilities of children with hearing loss outside the confines of the audiology booth.

The present study was designed to better understand the effects of UHL in children, particularly with respect to SRM in low and high informational masking contexts. The approach was to examine the effects of an acute conductive UHL, produced with an earplug and earmuff, on SRM in two-talker speech and speech-shaped noise for children and adults with normal bilateral hearing. While there are important differences between conductive and sensorineural hearing loss (reviewed by Gelfand 2009), and between acute and permanent hearing loss (e.g., Kumpik et al. 2010), the goal was to better understand how children and adults with normal hearing sensitivity use the cues available to them under these conditions. Listeners with normal bilateral hearing completed an open-set sentence recognition task in the presence of speech-shaped noise or two-talker speech. The target was presented from the front of the listener, and the masker was either co-located with the target or spatially separated to one side. Each listener served as his or her own control by completing the SRM task in the context of normal bilateral hearing (no plug) and a simulated UHL.

There were three main predictions. First, SRM was expected to be larger in two-talker speech than in speech-shaped noise, as observed previously. Considering that SRM is dependent on the quality of binaural cues, it was predicted that the differential effect of masker might only be observed in the no-plug conditions. Second, the simulated UHL was expected to worsen performance in all listening conditions, but particularly in the spatially separated two-talker speech masker. Third, SRM was expected to be smaller for children than for adults in the two-talker masker. This expectation was based on the observations that (1) development of SRM may extend into childhood for complex maskers (Yuen & Yuan 2014, but see Litovsky 2005) and (2) the binaural masking level difference (MLD) becomes adult-like later in development for noise stimuli thought to introduce informational masking (Grose et al. 1997).

MATERIALS AND METHODS

Participants

Participants were 12 children (ages 8.7 to 10.9 yrs) and 11 adults (18.5 to 30.4 yrs). Criteria for inclusion were: (1) air-conduction hearing thresholds less than or equal to 20 dB HL for octave frequencies from 250 Hz to 8000 Hz, bilaterally (ANSI 2010); (2) native speaker of American English; and (3) no known history of chronic ear disease. This research was approved by the Institutional Review Board of the University of North Carolina at Chapel Hill.

Stimuli and conditions

Target stimuli were Revised Bamford-Kowal-Bench (BKB) sentences (Bench et al. 1979) spoken by an adult female native speaker of American English. The BKB corpus includes 21 lists of 16 sentences, each with 3–4 keywords, for a total of 50 keywords per list. These stimuli have previously been used in our lab to examine masked speech perception for children as young as 5 years of age (e.g., Hall et al. 2012). Recordings were made in a sound-treated room, digitized at a resolution of 32 bits and a sampling rate of 44.1 kHz, and saved to disk as wav files. These files were root-mean-square (RMS) normalized and downsampled to 24.4 kHz before presentation.
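RMS normalization, as applied to the sentence files above, rescales each waveform so that all files share the same RMS amplitude. The sketch below assumes samples are floats in a plain Python list; the target RMS of 0.05 is an arbitrary placeholder, and the downsampling step (which would normally use a polyphase resampler) is not shown.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a waveform."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def rms_normalize(samples, target_rms=0.05):
    """Scale a waveform so its RMS equals target_rms (an assumed, arbitrary value)."""
    current = rms(samples)
    if current == 0:
        raise ValueError("cannot normalize a silent waveform")
    gain = target_rms / current
    return [x * gain for x in samples]

quiet = [0.01, -0.02, 0.03]
loud = [0.5, -0.4, 0.6]
print(rms(rms_normalize(quiet)), rms(rms_normalize(loud)))  # equal after scaling
```

Because every file is scaled to the same RMS, level differences between sentences no longer depend on how loudly each was recorded; presentation level is then set separately by the SNR.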

Each sentence was recorded a minimum of two times. Three adults with normal hearing listened to the sentence corpus to verify the sound quality of the recordings. The adults included two audiology graduate students and one Ph.D.-level research audiologist, all of whom were native speakers of American English. Sentences were presented diotically at a comfortable loudness level through headphones (Sennheiser; HD25). Listeners were instructed to mark any words or sentences with undesirable sound quality characteristics (e.g., distortion, peak clipping, irregular speaking rate, and excessively rising or falling intonation). Based on this feedback, a subset of sentences was re-recorded and edited. Two of the adults conducted a final listening check of the full sentence corpus.

The masker was either two-talker speech or speech-shaped noise, and it was presented continuously over the course of a threshold estimation track. Following Calandruccio et al. (2014), the two-talker masker was composed of recordings of two female talkers, each reading different passages from the children’s story Jack and the Beanstalk (Walker 1999). The female talkers were recorded separately. Each of the individual masker streams was manually edited to remove silent pauses of 300 ms or greater. The rationale for this editing was to reduce opportunities for dip listening. Each masker stream was RMS-normalized before summing. The result was a 1.4-min masker sample, which ended with both talkers saying a complete word. The speech-shaped noise masker had the same long-term magnitude spectrum as the two-talker speech masker. At the outset of each track, masker playback started at the beginning of the associated audio file. Due to the nature of the adaptive tracking procedure, each track ended at different time points in the masker.
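The pauses in the masker streams were removed by hand. As a rough automated analogue, the sketch below flags 10-ms frames whose RMS falls below a silence threshold and drops any run of silent frames lasting 300 ms or more, while keeping shorter gaps. The frame length, the threshold of 1e-3, and the function name are hypothetical choices, not the procedure used in the study.

```python
import math

def remove_long_pauses(samples, fs, min_pause_s=0.3, frame_s=0.01, silence_rms=1e-3):
    """Drop silent stretches lasting at least min_pause_s seconds.

    A frame counts as silent if its RMS falls below silence_rms (an assumed
    threshold). Shorter silent runs are kept so natural gaps within speech survive.
    """
    frame_len = max(1, int(round(frame_s * fs)))
    frames = [samples[i:i + frame_len] for i in range(0, len(samples), frame_len)]
    silent = [math.sqrt(sum(x * x for x in f) / len(f)) < silence_rms for f in frames]
    min_pause_samples = int(round(min_pause_s * fs))

    kept = []
    i = 0
    while i < len(frames):
        if not silent[i]:
            kept.extend(frames[i])
            i += 1
            continue
        # Find the end of this run of consecutive silent frames.
        j = i
        while j < len(frames) and silent[j]:
            j += 1
        run = [x for f in frames[i:j] for x in f]
        if len(run) < min_pause_samples:
            kept.extend(run)  # short gap: keep it
        i = j  # long gap: skip it entirely
    return kept
```

Each edited stream would then be RMS-normalized before the two talkers are summed, as described above.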

A custom MATLAB script was used to control selection and presentation of stimuli. Target and masker stimuli were processed through separate channels of a real-time processor (Tucker-Davis Technologies; RZ6), amplified (Applied Research and Technology; SLA-4), and presented through a pair of loudspeakers (JBL; Professional Control 1). Target sentences were always presented from a loudspeaker in front of the listener (0° azimuth). The masker stimulus was either co-located with the target (0° azimuth) or spatially separated to the right (+90° azimuth) or left (−90° azimuth) of the listener.

Procedure

General procedure

Speech reception thresholds (SRTs) were measured to assess performance on an open-set sentence recognition task. Participants were seated in the center of a 7 × 7 ft, single-walled sound-treated booth, approximately 3 ft from each of two loudspeakers. Experimental stimuli were calibrated with the microphone suspended 2 ft above the chair in which participants were seated, at the level of the center of the loudspeaker cone; this was also the approximate position of participants’ ears when seated. Chair height was not adjusted for individual listeners. For conditions in which the target and masker were spatially separated, the loudspeaker presenting the target depended on the desired masker position, and the participant’s chair was rotated so that the participant always directly faced the loudspeaker presenting the target. The side of the simulated UHL and the order of testing in each listening mode (no plug or simulated UHL) were counterbalanced across participants within each age group.
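One simple way to counterbalance two factors within a group is to cycle participants through the four combinations of simulated-UHL side and listening-mode order. The rule below is hypothetical; the text does not describe how assignments were made. Applied separately to 12 children and 11 adults, it yields 6 right-side simulations in each group, which matches the counts reported in the Unilateral hearing loss simulation section.

```python
from itertools import cycle, product

def counterbalance(participant_ids):
    """Assign simulated-UHL side and listening-mode order by cycling through
    the four side x order combinations (a hypothetical scheme)."""
    cells = cycle(product(("right", "left"), ("no-plug first", "simUHL first")))
    # zip stops when the participant list runs out, so the infinite cycle is safe.
    return dict(zip(participant_ids, cells))

children = counterbalance([f"C{n:02d}" for n in range(1, 13)])
adults = counterbalance([f"A{n:02d}" for n in range(1, 12)])
for name, group in (("children", children), ("adults", adults)):
    n_right = sum(side == "right" for side, _ in group.values())
    print(f"{name}: {n_right} of {len(group)} with right simulated UHL")
```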

In the simulated-UHL listening condition, participants completed the speech recognition task with each masker (two-talker speech and speech-shaped noise) at 0° azimuth, +90° azimuth, and −90° azimuth. The SRTs in the three simulated UHL conditions will be referred to as SRT[simUHL/co-loc] (target and masker co-located), SRT[simUHL/msk-ipsi] (masker ipsilateral to the simulated UHL), and SRT[simUHL/msk-contra] (masker contralateral to the simulated UHL). In the no-plug listening condition, participants completed the task with the masker at 0° azimuth and +90° azimuth. The SRTs in these two no-plug conditions will be referred to as SRT[no-plug/co-loc] and SRT[no-plug/msk-side], respectively.
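Because the side of the simulated UHL varied across listeners, the same masker azimuth maps onto different condition labels for different participants. The helper below (a hypothetical name) converts a listening mode, masker azimuth (+90° = right, −90° = left, as defined in the Stimuli and conditions section), and simulated-UHL side into the SRT labels used above.

```python
def condition_label(mode, masker_azimuth_deg, uhl_side=None):
    """Map a listening mode and masker azimuth (0, +90 = right, -90 = left)
    onto the SRT labels used in the text."""
    if mode not in ("no-plug", "simUHL"):
        raise ValueError(f"unknown listening mode: {mode!r}")
    if masker_azimuth_deg == 0:
        return f"SRT[{mode}/co-loc]"
    if masker_azimuth_deg not in (90, -90):
        raise ValueError("masker azimuth must be 0, +90, or -90 degrees")
    if mode == "no-plug":
        # Only +90 was tested without the plug; -90 is accepted for symmetry.
        return "SRT[no-plug/msk-side]"
    if uhl_side not in ("right", "left"):
        raise ValueError("uhl_side must be 'right' or 'left' in simUHL mode")
    masker_side = "right" if masker_azimuth_deg > 0 else "left"
    return "SRT[simUHL/msk-ipsi]" if masker_side == uhl_side else "SRT[simUHL/msk-contra]"

print(condition_label("simUHL", +90, uhl_side="left"))
```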

During testing, participants wore an FM transmitter (Sennheiser; ew 100 G3) with a lapel microphone. The microphone was attached to the participant’s shirt, positioned within 6 inches of his/her mouth. The participant’s verbal responses were presented to an examiner seated outside the booth via an FM receiver coupled to high-quality headphones (Sennheiser; HD25). This approach optimized the SNR for the observer, who also monitored the participant’s face through a window throughout testing.

Participants were informed that they would first hear continuous speech or noise from the front or side loudspeaker, and then a sentence spoken by a female from the front loudspeaker. They were instructed to ignore the continuous background sounds and verbally repeat each sentence produced by the female from the front loudspeaker. Participants were told to make their best guess of the sentence even if they only heard one word because scoring was conducted on a word-by-word basis. The examiner scored each keyword as correct or incorrect. Keywords were only marked “correct” if the entire word was correctly repeated, including pluralization and tense. The maximum response window for each trial was 5 sec after the end of the target sentence presentation. If the participant did not respond within this window, the tester marked all keywords as incorrect. Formal feedback was not given; however, encouragement was provided for children through social reinforcement (e.g., smiling and a head nod).
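In the study, an examiner scored each keyword live. The sketch below is a hypothetical automation of the same rule: a keyword counts only if the identical word form appears in the response, so a missing plural or tense marker makes it incorrect. It also reports whether all keywords were correct, which is the outcome that drives the adaptive track described under Threshold estimation.

```python
import re

def score_keywords(keywords, response):
    """Return (n_correct, all_correct) for one sentence.

    Exact whole-word matching after lowercasing, so 'dogs' does not credit 'dog'
    and 'walked' does not credit 'walk' (plural and tense must match).
    """
    remaining = re.findall(r"[a-z']+", response.lower())
    n_correct = 0
    for kw in keywords:
        kw = kw.lower()
        if kw in remaining:
            remaining.remove(kw)  # each spoken word can credit only one keyword
            n_correct += 1
    return n_correct, n_correct == len(keywords)

print(score_keywords(["dog", "ran", "park"], "The dogs ran to the park"))
```

A non-response within the 5-sec window corresponds to an empty response string, which scores every keyword as incorrect.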

Unilateral hearing loss simulation

Unilateral hearing loss was simulated using a foam earplug [Howard Leight Max Small; Noise Reduction Rating (NRR) 30 dB] and a supra-aural earmuff (Howard Leight Thunder T3; NRR 30 dB), both placed by the examiner. For the UHL simulation, the earplug was deeply inserted into the participant’s ear canal, and the supra-aural earmuff was placed over the pinna to optimize attenuation. The supra-aural earmuff was modified to remove the ear cup contralateral to the simulated UHL. The headband of the supra-aural earmuff was adjusted for comfort and to ensure that the contralateral ear was not obstructed. A right unilateral hearing loss was simulated in 6 of 12 children and 6 of 11 adults. The average attenuation provided by the earplug and earmuff combination was measured behaviorally in the sound field at 0° azimuth. Given the amount of testing time required to complete the study, detection thresholds with and without the simulated UHL were only measured for a warble tone at 500 Hz, 1000 Hz, and 2000 Hz. The ear contralateral to the simulated UHL was masked with a 50 dB HL noise band centered on the test frequency, delivered via insert earphone (Etymotic, ER-3A); this insert earphone was not worn during speech recognition testing. Thresholds were assessed with and without the earplug + earmuff combination, and the difference was taken to estimate the amount of attenuation provided by the UHL simulation. This procedure was completed before speech recognition testing for one child and after speech recognition testing for 10 children. Average attenuation values were not obtained for one child due to participant fatigue. Attenuation values were obtained for only two adult participants at the time of the main experiment; additional values were subsequently obtained in seven newly recruited normal-hearing adults (ages 20.1 to 35.1 years). On average, the simulated UHL condition resulted in a moderate flat conductive hearing loss. Additional details appear in the results section.
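The attenuation estimate described above is a per-frequency difference between plugged and unplugged sound-field thresholds, summarized as a group mean and SD. The sketch below shows that arithmetic using made-up thresholds for two hypothetical listeners; the numbers are illustrative only and are not study data.

```python
from statistics import mean, stdev

def attenuation_db(unplugged, plugged):
    """Per-frequency attenuation (dB) = plugged threshold minus unplugged threshold."""
    return {freq: plugged[freq] - unplugged[freq] for freq in unplugged}

def summarize(per_listener):
    """Group mean and sample SD of attenuation at each test frequency."""
    freqs = list(per_listener[0])
    return {freq: (mean([a[freq] for a in per_listener]),
                   stdev([a[freq] for a in per_listener]))
            for freq in freqs}

# Illustrative (made-up) sound-field warble-tone thresholds, dB SPL.
listener_a = attenuation_db({500: 10, 1000: 8, 2000: 6}, {500: 55, 1000: 50, 2000: 46})
listener_b = attenuation_db({500: 12, 1000: 10, 2000: 8}, {500: 56, 1000: 53, 2000: 47})
for freq, (m, sd) in summarize([listener_a, listener_b]).items():
    print(f"{freq} Hz: mean attenuation {m:.1f} dB (SD = {sd:.1f})")
```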

Threshold estimation

A 1-up, 1-down tracking procedure (Levitt 1971) was used to estimate SRTs corresponding to the average SNR required for 50% correct sentence identification. The overall level of the target plus masker was fixed at 60 dB SPL. This level was chosen based on the range of conversational speech levels in noisy environments (Olsen 1998) and the average attenuation achieved through the UHL simulation; using a higher overall target-plus-masker level would have resulted in greater audibility in the simulated UHL condition. Each run was initiated at an SNR of 10 dB. The SNR was increased by increasing the target level and decreasing the masker level if one or more keywords were missed. The SNR was reduced by decreasing the target level and increasing the masker level if all keywords were correctly identified. An initial step size of 4 dB was reduced to 2 dB after the first two reversals. Runs were terminated after eight reversals. The SRT was estimated by computing the average SNR at the final six reversals.
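The tracking rule above can be sketched as follows. `split_levels` keeps the combined target-plus-masker level at 60 dB SPL for any SNR, assuming the target and masker powers add (uncorrelated signals). `run_track` implements the 1-up, 1-down rule with the stated start SNR, step sizes, and reversal counts; it records a reversal at the SNR of the trial on which the direction changed, which is one common convention and an assumption here. The `all_correct` callback stands in for one scored sentence; the deterministic listener in the usage example is purely illustrative and converges faster than real listeners.

```python
import math

def split_levels(snr_db, overall_db_spl=60.0):
    """Return (target, masker) levels in dB SPL whose power sum equals overall_db_spl."""
    ratio = 10 ** (snr_db / 10)  # target power / masker power
    masker = overall_db_spl - 10 * math.log10(1 + ratio)
    return masker + snr_db, masker

def run_track(all_correct, start_snr=10.0, initial_step=4.0, final_step=2.0,
              n_step_change=2, n_reversals=8, n_average=6):
    """1-up, 1-down track; all_correct(snr) -> True if every keyword was repeated."""
    snr, step = start_snr, initial_step
    reversals, last_direction, trials = [], None, 0
    while len(reversals) < n_reversals:
        # All keywords correct -> lower the SNR (harder); any miss -> raise it.
        direction = -1 if all_correct(snr) else +1
        trials += 1
        if last_direction is not None and direction != last_direction:
            reversals.append(snr)
            if len(reversals) == n_step_change:
                step = final_step  # 4-dB steps become 2-dB steps
        last_direction = direction
        snr += direction * step
    srt = sum(reversals[-n_average:]) / n_average
    return srt, reversals, trials

# Hypothetical listener who repeats every keyword whenever SNR >= 0 dB.
srt, reversals, trials = run_track(lambda snr: snr >= 0)
target, masker = split_levels(srt)
print(f"SRT = {srt:.1f} dB SNR after {trials} sentences; "
      f"target {target:.1f} dB SPL, masker {masker:.1f} dB SPL")
```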

The first target sentence presented to a participant was selected randomly from the entire set of BKB sentences. Thereafter, sentences were presented in sequential order, ensuring that no sentences were repeated. Each run required 16–20 sentences. A minimum of two SRTs was estimated for each condition. Data collection in each condition continued until two estimates within 3 dB of each other were obtained. This criterion was typically met with two estimates. Adults required more than two runs at a rate of 15% in speech-shaped noise and 13% in two-talker speech. Children required more than two runs at a rate of 10% in speech-shaped noise and 12% in two-talker speech. The mean of the two estimates within 3 dB of each other represented the final SRT used for the subsequent analyses. Testing for children required two visits to the laboratory: one to complete the simulated-UHL conditions, and one to complete the no-plug conditions. In the presence of a simulated UHL, total testing time was 1.5 to 2 hrs. It took less than 1 hr to complete testing in the no-plug conditions. Adults completed all testing in one visit, typically lasting 2 to 2.5 hrs. All listeners were given breaks throughout testing.
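The stopping rule can be expressed as a check run after each new estimate. The text does not say how ties or multiple qualifying pairs were handled, so this sketch assumes that "within 3 dB" is inclusive and that each new run is compared against all earlier runs, returning the mean of the first qualifying pair; `final_srt` is a hypothetical name.

```python
def final_srt(run_estimates, tolerance_db=3.0):
    """Mean of the first pair of run estimates within tolerance_db of each other,
    checking each new run against every earlier run; None if no pair qualifies yet."""
    for j in range(1, len(run_estimates)):
        for i in range(j):
            if abs(run_estimates[j] - run_estimates[i]) <= tolerance_db:
                return (run_estimates[i] + run_estimates[j]) / 2
    return None

print(final_srt([-6.0, -1.5]))        # 4.5 dB apart: another run is needed
print(final_srt([-6.0, -1.5, -4.0]))  # third run agrees with the first
```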

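Results were evaluated using repeated-measures ANOVA. Subsequent simple main effects testing used Bonferroni adjustment for multiple comparisons. A significance criterion of α = 0.05 was adopted. The SRM was quantified as the difference between thresholds obtained when the target and masker were co-located and thresholds obtained when the masker was presented from ±90° relative to the midline, as follows:

$$\mathrm{SRM}_{\text{no-plug}} = \mathrm{SRT}[\text{no-plug/co-loc}] - \mathrm{SRT}[\text{no-plug/msk-side}]$$
$$\mathrm{SRM}_{\text{simUHL/ipsi}} = \mathrm{SRT}[\text{simUHL/co-loc}] - \mathrm{SRT}[\text{simUHL/msk-ipsi}]$$
$$\mathrm{SRM}_{\text{simUHL/contra}} = \mathrm{SRT}[\text{simUHL/co-loc}] - \mathrm{SRT}[\text{simUHL/msk-contra}]$$

Because lower SRTs indicate better performance, positive SRM values indicate a benefit of spatial separation and negative values indicate a cost.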

RESULTS

For children, the average amount of attenuation provided by the earplug and earmuff at 500 Hz, 1000 Hz, and 2000 Hz was 44.8 (SD = 5.20), 42.3 (SD = 6.3), and 39.5 (SD = 4.6) dB, respectively. For adults, attenuation at these frequencies was 44.2 (SD = 10.4), 47.9 (SD = 5.9), and 40.2 (SD = 5.1) dB. For both groups, the simulated UHL resulted in a moderate conductive UHL.

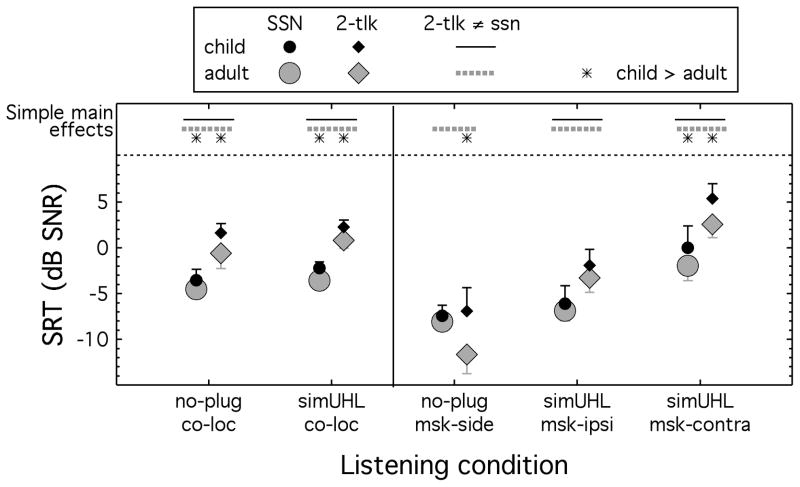

Figure 1 shows results of speech recognition testing, with SRTs for children and adults with and without simulated UHL in each target-masker configuration plotted separately. The SRTs obtained in the speech-shaped noise masker are represented by circles, and those obtained in the two-talker speech masker are represented by diamonds. Shading designates age group, with black symbols representing data for children and gray symbols representing data for adults. Error bars represent one standard deviation. Higher thresholds indicate poorer performance. Stars and lines at the top of each panel reflect results of simple main effects testing, described below. Mean SRTs by listener group and condition, as well as the associated standard deviations, are also reported in Table 1.

Figure 1.

Group average SRTs (in dB SNR) required to reach 50% correct sentence recognition are shown for children and adults in all listening conditions, indicated on the abscissa. Results in the left panel reflect those obtained in co-located target-masker conditions, while those in the right panel reflect those obtained in spatially separated target-masker conditions. Symbol shape indicates masker condition. Circles indicate SRTs obtained in speech-shaped noise, while diamonds indicate SRTs obtained in two-talker speech. Symbol shading and size designate age group. Small black symbols represent data for children, and large grey symbols represent data for adults. Error bars represent one standard deviation of the mean. Results of simple main effects testing appear at the top of each panel. Stars indicate significant differences between SRTs of children and adults within a condition. Lines indicate significant effects of masker type within data of either children (solid black lines) or adults (dashed grey lines).

Table 1.

Mean speech recognition thresholds (SRTs, dB SNR) with and without simulated UHL for children and adults in each masker. The target and masker were either co-located (co-loc), or the masker was presented from the side (msk-side); in the simulated UHL condition, the masker was presented either ipsilateral (msk-ipsi) or contralateral (msk-contra) to the simulated loss. Standard deviations appear below each mean, in parentheses.

| Group | No plug: two-talker, co-loc | No plug: two-talker, msk-side | No plug: speech-shaped noise, co-loc | No plug: speech-shaped noise, msk-side | Simulated UHL: two-talker, co-loc | Simulated UHL: two-talker, msk-ipsi | Simulated UHL: two-talker, msk-contra | Simulated UHL: speech-shaped noise, co-loc | Simulated UHL: speech-shaped noise, msk-ipsi | Simulated UHL: speech-shaped noise, msk-contra |
|---|---|---|---|---|---|---|---|---|---|---|
| Child (n = 12) | 1.61* (1.03) | −6.90* (2.55) | −3.56* (1.21) | −7.43 (1.18) | 2.28* (0.77) | −1.92 (1.73) | 5.39* (1.60) | −2.22* (0.68) | −6.11 (1.96) | 0.01* (2.39) |
| Adult (n = 11) | −0.61* (1.67) | −11.67* (2.08) | −4.52* (0.51) | −8.08 (0.96) | 0.83* (0.85) | −3.27 (1.61) | 2.56* (1.42) | −3.59* (0.83) | −6.86 (1.10) | −1.97* (1.62) |

*Mean difference between children and adults is significant (p < .05) with Bonferroni adjustment for multiple comparisons.

Overall, there was a trend for thresholds to be higher for children than adults, higher in the two-talker masker than the speech-shaped noise masker, and higher in the simulated-UHL than the no-plug listening condition. However, the magnitude of these trends differed across listening conditions, maskers, and age groups. For example, the mean child/adult difference in the no-plug/msk-side condition was 4.8 dB in the two-talker masker, but only 0.6 dB in the speech-shaped noise masker. Average SRTs were more than 3 dB higher in the two-talker masker than the speech-shaped noise masker for both groups in all conditions except no-plug/msk-side (see Table 1). In that condition, the two-talker SRT was only 0.5 dB higher for children, and for adults the average SRT was 3.6 dB lower in the two-talker masker than the speech-shaped noise masker (−11.7 dB versus −8.1 dB, middle of Figure 1). For both children and adults, the simulated UHL elevated thresholds in the speech-shaped noise by approximately 1 dB in the co-located condition (left panel, Figure 1), 1.3 dB in the msk-ipsi condition, and 6.5 dB in the msk-contra condition (right panel, Figure 1). Larger effects of simulated UHL were seen for the spatially separated target and two-talker masker, where the average effect of simulated UHL was 5.0 dB (child) and 8.4 dB (adult) in the msk-ipsi condition, and 12.3 dB (child) and 14.2 dB (adult) in the msk-contra condition.
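The effects of simulated UHL in the separated conditions are differences between Table 1 means: the simulated-UHL SRT with the masker ipsilateral or contralateral to the plugged ear, minus the no-plug/msk-side SRT for the same group and masker. A sketch recomputing them from the tabled group means (not per-listener data); the dictionary and function names are hypothetical.

```python
# Mean SRTs (dB SNR) from Table 1 for the spatially separated conditions.
# "noplug_side": masker at +/-90 deg without the plug; "uhl_ipsi"/"uhl_contra":
# masker ipsilateral/contralateral to the simulated UHL.
SEPARATED_SRT = {
    ("child", "two-talker"): {"noplug_side": -6.90, "uhl_ipsi": -1.92, "uhl_contra": 5.39},
    ("adult", "two-talker"): {"noplug_side": -11.67, "uhl_ipsi": -3.27, "uhl_contra": 2.56},
    ("child", "speech-shaped noise"): {"noplug_side": -7.43, "uhl_ipsi": -6.11, "uhl_contra": 0.01},
    ("adult", "speech-shaped noise"): {"noplug_side": -8.08, "uhl_ipsi": -6.86, "uhl_contra": -1.97},
}


def uhl_effect(group, masker, condition):
    """Threshold elevation (dB) caused by the simulated UHL in a separated condition,
    relative to the no-plug/msk-side SRT for the same group and masker."""
    srts = SEPARATED_SRT[(group, masker)]
    return srts[condition] - srts["noplug_side"]


for group, masker in SEPARATED_SRT:
    ipsi = uhl_effect(group, masker, "uhl_ipsi")
    contra = uhl_effect(group, masker, "uhl_contra")
    print(f"{group:5s} {masker:19s} msk-ipsi {ipsi:5.1f} dB  msk-contra {contra:5.1f} dB")
```

For the two-talker masker this reproduces the 5.0/8.4 dB (msk-ipsi) and 12.3/14.2 dB (msk-contra) elevations quoted above.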

A repeated-measures analysis of variance was conducted to evaluate the trends observed in Figure 1. There were two levels of masker (two-talker speech, speech-shaped noise), five levels of listening condition (no-plug/co-loc, no-plug/msk-side, simUHL/co-loc, simUHL/msk-ipsi, simUHL/msk-contra), and two levels of the between-subjects factor of age group (child, adult). Results from this analysis are shown in Table 2. All three main effects reached significance, as did two of the two-way interactions. Of particular importance, the three-way interaction (Masker x Listening Condition x Age Group) was statistically significant. This reflects the fact that the child-adult difference was consistent across listening conditions for the speech-shaped noise, but not the two-talker speech masker (middle panel, Figure 1). Because of this statistically significant three-way interaction, all lower-order effects should be interpreted with caution.

Table 2.

Results of an rmANOVA evaluating the effects of age group (child, adult), masker (two-talker speech, speech-shaped noise), and listening condition (no-plug/co-loc, no-plug/msk-side, simUHL/co-loc, simUHL/msk-ipsi, simUHL/msk-contra) on SRTs.

| Source | F | df | p | partial η² |
|---|---|---|---|---|
| Age Group | 43.04 | 1, 21 | <.001** | .672 |
| Masker | 581.81 | 1, 21 | <.001** | .965 |
| Listening Condition | 224.22 | 4, 84 | <.001** | .914 |
| Masker x Age Group | 26.03 | 1, 21 | <.001** | .553 |
| Listening Condition x Age Group | 1.82 | 4, 84 | .134 | .080 |
| Masker x Listening Condition | 69.54 | 4, 84 | <.001** | .768 |
| Masker x Listening Condition x Age Group | 6.00 | 4, 84 | <.001** | .222 |

*p < .05; **p < .001
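The partial η² values can be checked against the F ratios with the standard identity η²ₚ = F·df_effect / (F·df_effect + df_error), which follows from η²ₚ = SS_effect / (SS_effect + SS_error) and the definition of F. A minimal sketch; the function name is hypothetical, and the rows are transcribed from Table 2.

```python
def partial_eta_squared(f_ratio, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom.

    Since F = (SS_effect / df_effect) / (SS_error / df_error), the ratio
    SS_effect / (SS_effect + SS_error) equals F*df_effect / (F*df_effect + df_error).
    """
    numerator = f_ratio * df_effect
    return numerator / (numerator + df_error)


# Rows of Table 2: (source, F, df_effect, df_error)
TABLE_2 = [
    ("Age Group", 43.04, 1, 21),
    ("Masker", 581.81, 1, 21),
    ("Listening Condition", 224.22, 4, 84),
    ("Masker x Age Group", 26.03, 1, 21),
    ("Listening Condition x Age Group", 1.82, 4, 84),
    ("Masker x Listening Condition", 69.54, 4, 84),
    ("Masker x Listening Condition x Age Group", 6.00, 4, 84),
]
for source, f, df1, df2 in TABLE_2:
    print(f"{source:42s} {partial_eta_squared(f, df1, df2):.3f}")
```

Each printed value matches the tabled partial η² to three decimal places; the same function applies to the rows of Table 3.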

Simple main effects testing revealed that SRTs were lower for adults than children in all cases except the no-plug/msk-side condition in speech-shaped noise (p = 0.169) and the two simUHL/msk-ipsi conditions (p = 0.066 and p = 0.276 for the speech-shaped noise and two-talker maskers, respectively). SRTs were lower for the speech-shaped noise than the two-talker masker in all cases except the no-plug/msk-side condition for adults (p = 0.350). SRTs were significantly lower for the no-plug/co-loc condition than for the simUHL/co-loc condition in all cases except the child data in the two-talker masker (p = 0.208). Significant differences resulting from simple main effects testing are represented at the top of each panel of Figure 1. Within a given listening condition, a significant effect of masker is indicated by a solid line for children and a dashed line for adults. Stars indicate that children's thresholds are significantly higher than adults' for a given masker and listening condition.

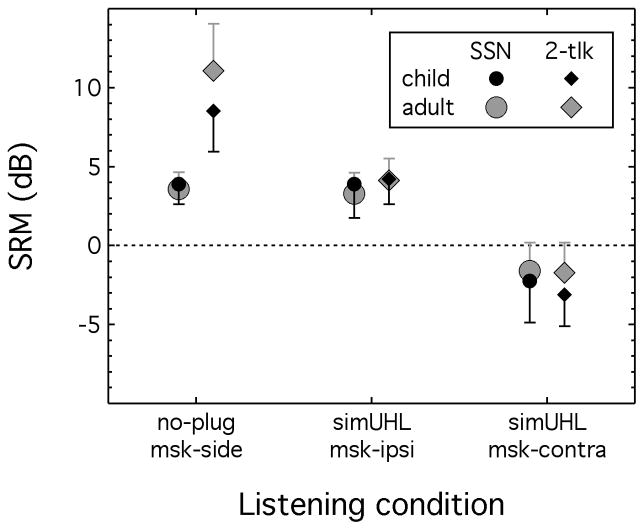

Figure 2 shows the average SRM achieved by children and adults with and without simulated UHL in two-talker speech and speech-shaped noise. As with Figure 1, circles represent data obtained in speech-shaped noise, and diamonds represent data obtained in two-talker speech. Symbol shading indicates results obtained from either children (black) or adults (grey). Error bars represent one standard deviation. Positive SRM values indicate better speech recognition performance when the target and masker were spatially separated relative to when they were co-located in azimuth.

Figure 2.

Group average SRM (in dB) for children and adults with and without simulated UHL in two-talker speech and speech-shaped noise. SRM was calculated as the difference between thresholds obtained when the target and masker were co-located and thresholds obtained when the masker was presented from +90° or −90° on the azimuth. Circles represent SRM achieved in speech-shaped noise, and diamonds represent SRM achieved in two-talker speech. Symbol shading and size indicate results obtained from either children (small, black) or adults (large, grey). Error bars represent one standard deviation of the mean.

All listeners benefited from spatial separation of target and masker stimuli in the azimuthal plane in the no-plug listening conditions (left third of Figure 2); this benefit was approximately 6 dB larger in the two-talker speech than in the speech-shaped noise masker. In the simulated-UHL listening conditions, a positive SRM was observed only when the masker was presented ipsilateral to the ear with the simulated UHL. For the speech-shaped noise, SRM was similar in the no-plug/msk-side and simUHL/msk-ipsi conditions, with overall means of 3.7 dB (SD = 1.17) and 3.6 dB (SD = 1.79), respectively. That is, access to the ear on the side of the masker did not improve SRM. In contrast, for the two-talker speech, SRM in the no-plug/msk-side condition was larger than in the simUHL/msk-ipsi condition, with overall means of 9.7 dB (SD = 3.00) and 4.2 dB (SD = 1.47), respectively. When the masker was presented contralateral to the simulated UHL, the SRM was −2.5 dB (SD = 2.04); that is, when the masker was presented on the side of the ear with better hearing sensitivity, performance was degraded relative to the co-located baseline.
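The overall SRM means can be recovered from the Table 1 group means: SRM is the co-located SRT minus the separated SRT, with each separated condition paired with the co-located baseline of its own listening mode, and the two groups are pooled by weighting each group mean by its size. A sketch with hypothetical names; it reproduces means only, since standard deviations cannot be recovered from tabled group means.

```python
# Mean SRTs (dB SNR) from Table 1, in the order
# (no-plug co-loc, no-plug msk-side, simUHL co-loc, simUHL msk-ipsi, simUHL msk-contra).
SRT = {
    ("child", "two-talker"): (1.61, -6.90, 2.28, -1.92, 5.39),
    ("adult", "two-talker"): (-0.61, -11.67, 0.83, -3.27, 2.56),
    ("child", "speech-shaped noise"): (-3.56, -7.43, -2.22, -6.11, 0.01),
    ("adult", "speech-shaped noise"): (-4.52, -8.08, -3.59, -6.86, -1.97),
}
N = {"child": 12, "adult": 11}
CONDITIONS = ("no-plug/msk-side", "simUHL/msk-ipsi", "simUHL/msk-contra")


def srm(group, masker):
    """SRM (dB) per separated condition: co-located SRT minus separated SRT, using
    the co-located baseline from the same listening mode. Positive = benefit."""
    np_co, np_side, uhl_co, uhl_ipsi, uhl_contra = SRT[(group, masker)]
    return dict(zip(CONDITIONS, (np_co - np_side, uhl_co - uhl_ipsi, uhl_co - uhl_contra)))


def pooled_srm(masker, condition):
    """Mean SRM across all listeners: group means weighted by group size."""
    total = sum(N[g] * srm(g, masker)[condition] for g in N)
    return total / sum(N.values())


for masker in ("two-talker", "speech-shaped noise"):
    for condition in CONDITIONS:
        print(f"{masker:19s} {condition:18s} {pooled_srm(masker, condition):5.1f} dB")
```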

A repeated-measures analysis of variance was conducted to evaluate the trends in the SRM results shown in Figure 2. There were two levels of masker (two-talker speech, speech-shaped noise), three levels of listening condition (no-plug/msk-side, simUHL/msk-ipsi, simUHL/msk-contra), and two levels of the between-subjects factor of age group (child, adult). Results from this analysis are shown in Table 3. There was a significant main effect of masker and a significant main effect of listening condition, but no main effect of age group. There was a significant interaction between masker and listening condition, and between masker and age group; the other interactions failed to reach significance. Simple main effects testing indicated that SRM was larger for the two-talker than the speech-shaped noise masker in the no-plug/msk-side condition (9.7 dB versus 3.7 dB, respectively), but not in the simUHL/msk-ipsi or the simUHL/msk-contra conditions (p = 0.181 and p = 0.255, respectively). The SRM within a masker type differed across the three listening conditions in all cases except the no-plug/msk-side and simUHL/msk-ipsi conditions for the speech-shaped noise masker (p = 1.00). The SRM was significantly different between groups in the two-talker speech (p = .015) but not the speech-shaped noise masker (p = .835).

Table 3.

Results of an rmANOVA evaluating the effects of age group (child, adult), masker (two-talker speech, speech-shaped noise), and listening condition (no-plug/msk-side, simUHL/msk-ipsi, simUHL/msk-contra) on spatial release from masking.

| Source | F | df | p | partial η² |
|---|---|---|---|---|
| Age Group | 2.03 | 1, 21 | .169 | .088 |
| Masker | 57.99 | 1, 21 | <.001** | .734 |
| Listening Condition | 187.87 | 2, 42 | <.001** | .899 |
| Masker x Age Group | 6.63 | 1, 21 | .018* | .240 |
| Listening Condition x Age Group | 1.51 | 2, 42 | .233 | .067 |
| Masker x Listening Condition | 55.42 | 2, 42 | <.001** | .725 |
| Masker x Listening Condition x Age Group | 1.84 | 2, 42 | .171 | .081 |

*p < .05; **p < .001

One question of interest is whether the magnitude of attenuation provided by the simulated UHL was associated with the amount of SRM children experienced. Based on the results of Reeder et al. (2015), this association was predicted for attenuation at 500 Hz, but not at the other frequencies. Without correcting for multiple comparisons, the two-tailed bivariate correlation between children's SRM and attenuation values at 500 Hz was statistically significant in the two-talker masker for the simUHL/msk-contra listening condition (r = −.609, p = .047), but not in the other masker and listening conditions (p ≥ .283). Correlations between SRM and attenuation values at 1000 and 2000 Hz were not significant. These results are consistent with the idea that attenuation at low frequencies is more detrimental to SRM than attenuation at higher frequencies.
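A two-tailed test of a bivariate (Pearson) correlation converts r to a t statistic with n − 2 degrees of freedom; the p value then comes from the t distribution (for example, `scipy.stats.pearsonr` returns both r and the two-tailed p). A dependency-free sketch of the first two steps; the function names are hypothetical, and the attenuation and SRM values in the usage lines are made up, not study data.

```python
import math


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length sequences with n >= 3")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0; undefined when |r| = 1."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# Hypothetical 500-Hz attenuation (dB) and SRM (dB) values, for illustration only.
attenuation = [38.0, 41.0, 44.0, 46.0, 49.0, 52.0]
srm_values = [-1.0, -2.5, -2.0, -3.5, -4.0, -3.0]
r = pearson_r(attenuation, srm_values)
print(round(r, 3), round(r_to_t(r, len(srm_values)), 3))
```

A negative r here, as in the simUHL/msk-contra result, means that listeners with more low-frequency attenuation tended to show less SRM.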

DISCUSSION

The goals of this study were to: 1) determine the effect of an acute simulated UHL on children’s SRM in two-talker speech and speech-shaped noise, and 2) develop a method to be used in future studies that will assess SRM in children who have permanent UHL. The main findings are: 1) SRM is particularly robust in the presence of informational masking for both children and adults with normal hearing, 2) disruption of binaural cues via an acute simulated UHL has different consequences for SRM in the two maskers, and 3) this procedure offers promise for the assessment of SRM in children with permanent UHL. Although it was not the main focus of this study, performance in the co-located conditions will be discussed first because they serve as the baseline condition for quantifying SRM.

Performance in co-located conditions (baseline)

When the target and masker were co-located, performance tended to be better in the no-plug than the simulated-UHL listening condition. This difference was statistically significant for adults in both maskers and for children in the speech-shaped noise masker. There was no statistically significant effect of a simulated UHL on children’s performance in the co-located two-talker masker. The present results are broadly consistent with previous data comparing performance between no-plug and simulated-UHL conditions for co-located target and masker stimuli. Van Deun et al. (2010) assessed performance with and without a unilateral earplug and earmuff for 8-year-olds and adults on the Leuven Intelligibility Number Test in the presence of speech-weighted noise. Comparing performance with and without the simulated UHL in the co-located target and masker condition, children and adults performed similarly and demonstrated statistically significant binaural summation (average 1.3 dB and 1.4 dB, respectively). For comparison, values of summation in speech-shaped noise in the present dataset were 1.3 dB for children and 0.9 dB for adults. Mean values of summation in the two-talker masker were 0.7 dB for children and 1.4 dB for adults.
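The summation values above are differences between co-located means in Table 1: the simulated-UHL co-located SRT minus the no-plug co-located SRT, so positive values mean that listening with two ears gave lower thresholds. A sketch with hypothetical names, using tabled group means rather than individual data.

```python
# Co-located mean SRTs (dB SNR) from Table 1: (no plug, simulated UHL).
COLOCATED_SRT = {
    ("child", "speech-shaped noise"): (-3.56, -2.22),
    ("adult", "speech-shaped noise"): (-4.52, -3.59),
    ("child", "two-talker"): (1.61, 2.28),
    ("adult", "two-talker"): (-0.61, 0.83),
}


def summation(group, masker):
    """Binaural summation estimate (dB): how much higher the co-located SRT is
    with the simulated UHL than without it. Positive = two ears did better."""
    no_plug, sim_uhl = COLOCATED_SRT[(group, masker)]
    return sim_uhl - no_plug


for group, masker in COLOCATED_SRT:
    print(f"{group:5s} {masker:19s} {summation(group, masker):4.1f} dB")
```

Rounded to one decimal, these give 1.3 and 0.9 dB in speech-shaped noise and 0.7 and 1.4 dB in two-talker speech for children and adults, respectively.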

It is not clear how to interpret the statistically non-significant summation for children in the two-talker masker. It is possible that summation does not differ across age groups or maskers, and that the failure to find a statistically significant effect for children in the two-talker masker is a chance finding; the summation effects observed in the present dataset were small. However, there is some precedent in the literature for less robust summation in a two-talker masker with a simulated UHL. For example, Marrone et al. (2008) did not observe a statistically significant effect of binaural summation for adults on a closed-set speech recognition task in the presence of same-sex two-talker speech. In that study, adults with normal hearing listened with and without a simulated UHL using a unilateral earplug and earmuff (average attenuation for speech = 38.1 dB). There was a statistically non-significant 0.5-dB difference between SRTs obtained with and without the simulated UHL in the co-located target-masker condition (Marrone et al. 2008).

Summation effects have not consistently been demonstrated in studies of listeners with permanent UHL. We cannot demonstrate summation in listeners with permanent UHL by comparing speech recognition scores when listening with two ears with normal hearing relative to one ear with normal hearing. Rather, summation effects are estimated by comparing performance of listeners with permanent UHL to that of listeners with normal bilateral hearing. Recall that Reeder et al. (2015) examined performance of children with permanent UHL and their peers with normal hearing on a four-alternative forced-choice spondee identification task in the presence of competing speech maskers; data figures suggest only a 0.3-dB summation effect. However, Rothpletz et al. (2012) observed a 4.5-dB summation effect when comparing performance between adults with and without permanent UHL on a speech-on-speech recognition task designed to produce minimal energetic masking. They suggested that adults with normal bilateral hearing have better selective auditory attentional capabilities than adults with permanent UHL (Rothpletz et al. 2012).

Effects of spatial separation

Effects of a simulated UHL on performance in spatially separated maskers are discussed in terms of SRM. Recall that SRM was calculated for three conditions: in the absence of a simulated UHL (SRM[no-plug/msk-side]), and for maskers positioned ipsilateral or contralateral to the simulated UHL (SRM[simUHL/msk-ipsi] and SRM[simUHL/msk-contra]). As illustrated in Figure 2, SRM tended to decrease with the introduction of a simulated UHL for both children and adults, and for both speech-shaped noise and two-talker speech maskers. In the two-talker speech conditions, SRM decreased by 5.6 dB when the masker was ipsilateral to the simulated UHL, and by 12.2 dB when it was contralateral. For the speech-shaped noise conditions, SRM decreased by 5.7 dB when the masker was presented contralateral to the simulated UHL; the effect of simulated UHL was not statistically significant when the masker was ipsilateral to the simulated UHL. In both maskers, children and adults obtained a negative SRM (i.e., worse performance than in the co-located baseline) when the masker was presented contralateral to the simulated UHL.

The magnitude of SRM observed in a relatively steady noise masker for children and adults in the present study is broadly consistent with that found in previous studies. For instance, Van Deun et al. (2010) measured performance on a number recognition task in the presence of speech-weighted noise. Similar to the present study, Van Deun et al. (2010) used an earplug and earmuff to simulate UHL in adults and 8-year-olds with normal hearing. In that study, the SRM[no-plug/msk-side] was 3.9 dB (children) and 4.0 dB (adults); the SRM[simUHL/msk-ipsi] was 4.2 dB (children) and 4.6 dB (adults). In the present study, the SRM[no-plug/msk-side] was 3.9 dB (children) and 3.6 dB (adults), and the SRM[simUHL/msk-ipsi] was 3.9 dB (children) and 3.3 dB (adults). Considering the difference in task difficulty (number recognition versus open-set sentence recognition) and differences in methodology between the two studies, our results are in line with those reported by Van Deun et al. (2010).

The values of SRM observed for adults in the two-talker masker can also be compared with those obtained in other studies using maskers associated with informational masking. Rothpletz et al. (2012) measured SRM for adults using the Coordinate Response Measure (CRM; Bolia et al. 2000) paradigm in a single-talker speech masker. The CRM paradigm is a closed-set speech recognition task. In the single-talker masker, the CRM is sensitive to informational masking (e.g., Brungart 2001a). To further minimize energetic and maximize informational masking, Rothpletz et al. (2012) digitally processed the target and masker speech to minimize spectral overlap between the target and masker stimuli. Similar to the present study, target speech was always presented from 0° azimuth, and the masker was presented from 0°, +90°, or −90° azimuth. They found that adults with permanent UHL achieved 3.9 dB SRM when the single-talker masker was presented ipsilateral to the ear with UHL, and −2.5 dB SRM when it was presented contralateral to the ear with UHL. Adults with simulated UHL in the present study achieved 4.1 dB SRM when the two-talker masker was presented ipsilateral to the ear with simulated UHL (SRM[simUHL/msk-ipsi]) and −1.7 dB SRM when it was presented contralateral to the ear with simulated UHL (SRM[simUHL/msk-contra]).

As discussed in the Introduction, there are few studies of masked speech recognition or SRM in children with permanent or simulated UHL using maskers associated with informational masking. The most relevant comparison is with the results of Reeder et al. (2015). As a reminder, Reeder et al. (2015) assessed performance of 7- to 16-year-olds with moderately severe to profound sensorineural UHL on a range of speech recognition tasks. One of their tasks assessed masked spondee recognition in single-talker male and female maskers, as well as in multitalker babble, presented from 0°, +90°, or −90° azimuth. To maximize stimulus uncertainty, thereby increasing informational masking, masker presentation was pseudorandomized for each spondee presentation. Although SRM was not calculated on the spondee recognition task, data figures are consistent with an SRM of 9 dB for children with normal hearing. This is similar to the 8.5-dB SRM[no-plug/msk-side] observed for children in the two-talker masker in the present study. For children with permanent UHL, SRM in the Reeder et al. (2015) study was 7 dB when the masker was ipsilateral to the UHL and 0 dB when the masker was contralateral to the UHL. In contrast, children with simulated UHL in the present study achieved an SRM of 4.2 dB when the masker was ipsilateral to the simulated UHL (SRM[simUHL/msk-ipsi]) and −3.1 dB when the masker was contralateral to the simulated UHL (SRM[simUHL/msk-contra]). One challenge in comparing the present results with those of Reeder et al. (2015) is that values of SRM based on those published data represent average performance across three maskers (single male talker, single female talker, and multitalker babble), whereas the present study used a two-talker masker. Another consideration is that the masker was unpredictable from interval to interval in the study of Reeder et al. (2015); the resulting stimulus uncertainty could have increased informational masking. It is therefore difficult to compare the relative influence of informational masking on the results obtained across the two studies.

Effect of masker on spatial release from masking

Based on previous data, it was predicted that the benefit of spatially separating the target and masker would be larger for the two-talker speech relative to speech-shaped noise masker, due to greater informational masking with the two-talker masker. It is often argued that spatial separation of target and masker stimuli facilitates auditory stream segregation. When tested without the earplug, both children and adults achieved more SRM in the two-talker masker than the speech-shaped noise masker. In the no-plug condition, SRM for the two maskers differed by 4.6 dB for children and 7.5 dB for adults. These results are consistent with data from previous studies showing that listeners achieve greater SRM on a speech recognition task in the presence of competing speech than competing noise (e.g., Freyman et al. 1999, 2001, 2004; Arbogast et al. 2002, 2005; Johnstone & Litovsky 2006). For instance, Freyman et al. (1999) measured adults’ speech recognition for syntactically correct, nonsense sentences in the presence of speech-spectrum noise or competing single-talker speech. The target and competing speech were both produced by female talkers. Target speech was always presented from the front of the listener (0° azimuth), and the competing noise or speech was presented from +60° azimuth. The SRM was 8 dB for the speech-spectrum noise compared with 14 dB for the single-talker speech masker (Freyman et al. 1999).

In contrast to the no-plug data, masker type did not affect SRM in the simulated-UHL conditions for children or adults. For both children and adults, in both two-talker speech and speech-shaped noise, SRM was approximately 4 dB when the masker was presented ipsilateral to the simulated UHL and approximately −2 dB when the masker was presented contralateral to the simulated UHL. Comparing SRM in the simulated-UHL and no-plug conditions suggests that the availability of binaural cues has very different consequences for the two maskers. In the speech-shaped noise, introducing a simulated UHL ipsilateral to the masker has no effect on SRM. This observation is consistent with the idea that the improved SNR in the ear contralateral to the masker (head shadow) is responsible for SRM with a speech-shaped noise masker. In contrast, introducing a simulated UHL ipsilateral to the two-talker speech masker reduces SRM from 8.5 dB to 4.2 dB in children, and from 11.1 dB to 4.1 dB in adults. These results suggest that the benefit of access to binaural cues cannot be attributed entirely to the head shadow effect when the masker is two-talker speech. The benefit of access to cues available in the ear ipsilateral to the masker is sometimes described as squelch. The different mix of binaural cues contributing to performance in the two maskers could be related to the relative contributions of informational masking in the baseline condition, where the target and masker are co-located; two-talker speech is thought to introduce substantially more informational masking than speech-shaped noise. The present results are therefore consistent with the idea that squelch plays an important role in speech recognition in an informational masker, but little to no role in an energetic masker.

Child-adult differences in SRM for the two-talker masker

The final prediction was that adults would benefit more than children from binaural cues when the target and two-talker masker were spatially separated. We expected this age effect to be most evident in the no-plug conditions, since listeners would have full access to the binaural cues that support SRM. Results are consistent with the idea that children obtain a smaller SRM than adults when the target is spatially separated from the two-talker speech masker. While there was no three-way interaction in the analysis of SRM, the largest mean difference between SRM for children and adults (2.6 dB) was observed for the two-talker masker in the no-plug/msk-side condition; in contrast, the adult-minus-child difference ranged from −0.6 to 1.4 dB in the other five test conditions.
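The child-adult SRM differences can be recomputed from the Table 1 means. The sketch below forms SRM for each group (co-located minus separated SRT, within the same listening mode) and takes adult minus child for all six masker-by-condition cells; names are hypothetical, and the results are differences of group means, not a statistical test.

```python
# Mean SRTs (dB SNR) from Table 1, in the order
# (no-plug co-loc, no-plug msk-side, simUHL co-loc, simUHL msk-ipsi, simUHL msk-contra).
SRT = {
    ("child", "two-talker"): (1.61, -6.90, 2.28, -1.92, 5.39),
    ("child", "speech-shaped noise"): (-3.56, -7.43, -2.22, -6.11, 0.01),
    ("adult", "two-talker"): (-0.61, -11.67, 0.83, -3.27, 2.56),
    ("adult", "speech-shaped noise"): (-4.52, -8.08, -3.59, -6.86, -1.97),
}
CONDITIONS = ("no-plug/msk-side", "simUHL/msk-ipsi", "simUHL/msk-contra")


def srm_triplet(srts):
    """SRM (dB) for (no-plug/msk-side, simUHL/msk-ipsi, simUHL/msk-contra)."""
    np_co, np_side, uhl_co, uhl_ipsi, uhl_contra = srts
    return (np_co - np_side, uhl_co - uhl_ipsi, uhl_co - uhl_contra)


def adult_minus_child():
    """Adult SRM minus child SRM for each (masker, condition) cell."""
    out = {}
    for masker in ("two-talker", "speech-shaped noise"):
        child = srm_triplet(SRT[("child", masker)])
        adult = srm_triplet(SRT[("adult", masker)])
        for cond, c, a in zip(CONDITIONS, child, adult):
            out[(masker, cond)] = a - c
    return out


for (masker, cond), d in sorted(adult_minus_child().items(), key=lambda kv: -kv[1]):
    print(f"{masker:19s} {cond:18s} {d:+.2f} dB")
```

The largest value is the two-talker no-plug/msk-side cell (about 2.6 dB); the remaining five cells span roughly −0.6 to 1.4 dB.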

There is no consensus on the developmental trajectory of SRM in children. Studies from Litovsky and colleagues have concluded that children as young as 3 years of age show adult-like SRM on masked speech recognition tasks in the presence of competing speech or noise (e.g., Litovsky 2005; Garadat & Litovsky 2007). However, other studies suggest that development of SRM is not complete until adolescence (e.g., Cameron et al. 2006). For instance, Yuen and Yuan (2014) measured SRM on the Mandarin Pediatric Lexical Tone and Disyllabic Word Picture Identification Task in Noise (MAPPID-N) in adults and 4.5- to 9-year-olds. The MAPPID-N is a closed-set forced-choice speech recognition task that uses speech-shaped noise as the masker. Children achieved approximately 3 dB less SRM than adults on the disyllabic word subtest and approximately 4 dB less SRM than adults on the lexical tone subtest. Importantly, regression analyses on the child data suggest that children's SRM significantly improved with age on both subtests, and that age accounted for 32–34% of the variance in children's SRM.

The psychoacoustic literature provides additional support for the idea that SRM is immature for school-age children when tested using an informational masker. Specifically, that literature indicates that children may not process binaural cues as efficiently as adults under some conditions. One way to measure the binaural auditory system’s sensitivity to interaural time and level differences is the masking level difference (MLD; Hirsh 1948; Grose et al. 1997). The MLD is estimated by measuring thresholds in two conditions: with the target and masker presented diotically and with those stimuli presented dichotically. The difference in thresholds for these two conditions is the MLD. For narrowband maskers, the MLD is smaller for school-age children than adults (Grose et al. 1997). This child-adult difference is thought to reflect children’s inability to use the interaural difference cues to capitalize on stimulus cues present in the masker envelope minima (Hall et al. 2004). Immaturity in the ability to use binaural cues in a narrowband masker may reflect the same limitations as observed in the two-talker masker of the present dataset. For instance, children’s immature ability to use binaural cues to capitalize on target speech cues in the fluctuating two-talker masker could have contributed to the observed child-adult difference in SRM[no-plug/msk-side] for that masker in the present data.

Extent of simulated UHL

Average degree of simulated UHL

There is precedent for a correlation between SRM and low-frequency hearing thresholds for children with UHL. Reeder et al. (2015) reported that children with lower (better) thresholds in their normal-hearing ear at 500 Hz had lower (better) adaptive SRTs when competing single-talker male or female speech was spatially separated to the side of their normal-hearing ear (r = 0.71, p < 0.05); this association was not observed with multi-talker babble. Reeder et al. (2015) suggested that even a minimal difference in hearing sensitivity is important for binaural processing. Without correcting for multiple comparisons, the two-tailed bivariate correlation between children's SRM in the two-talker masker and attenuation values at 500 Hz in the present dataset was statistically significant for the simUHL/msk-contra listening condition (r = −.609, p = .047); this was the only statistically significant correlation. This result suggests that, as the degree of simulated UHL at 500 Hz increased, children benefited less from spatial separation when the two-talker masker was presented on the side of their normal-hearing ear. A parallel analysis was not performed on adult data because speech data and attenuation values were collected from different individuals in all but two cases. An association between low-frequency simulated UHL and SRM is also broadly consistent with the results of Noble et al. (1994), who reported that, as the degree of low-frequency conductive hearing loss increased, localization performance in the horizontal plane decreased. Similar results for SRM would be predicted to the extent that SRM relies on the same binaural cues as localization.

One limitation of the present protocol is that attenuation was only measured at 500, 1000, and 2000 Hz. While attenuation values for adults and children were largely comparable across this range, we cannot rule out the possibility that attenuation differed between groups above 2000 Hz or below 500 Hz.

Acute versus chronic UHL

A goal of the present study was to examine the effects of an acute simulated UHL on SRM, as a preliminary step towards developing methods of assessing SRM in children with long-standing UHL. There is evidence to suggest that the auditory system adapts to disrupted binaural input over time (Kumpik et al. 2010). For instance, Kumpik et al. (2010) investigated the effect of training on free-field localization of flat-spectrum or random-filtered noise stimuli in adults with normal bilateral hearing who had an earplug placed in one ear. Relative to the no-plug condition, placement of a unilateral earplug significantly reduced localization performance from ≥85% correct to ≤50% correct. Adults who received localization training with the unilateral earplug for 7–8 days showed significant improvement in free-field localization abilities for flat-spectrum noise. These results suggest that the auditory system can adapt to disrupted binaural input by reweighting localization cues (Kumpik et al. 2010). However, Kumpik et al. (2010) did not see evidence of adaptation within a single test session. Further evidence that adaptation to disrupted binaural input does not occur within a single test session comes from Slattery and Middlebrooks (1994). In that study, adults with normal bilateral hearing completed a localization task in the presence of a unilateral earplug and earmuff. Performance was assessed immediately after placing the earplug and after 24 hrs of experience wearing the earplug; the earmuff was placed over the earplug only during testing. Performance on the localization task did not differ between the two time points. It is likely that results from the present study would be different had the listeners acclimatized to listening with the simulated UHL over a period longer than 24 hrs.

Conductive versus sensorineural hearing loss

There are important differences between the effects of conductive and sensorineural hearing loss that should be considered when interpreting our data. In the present study, we simulated a conductive UHL by occluding one ear with an earplug and earmuff. Conductive hearing loss attenuates air-conducted sound, whereas sensorineural hearing loss both attenuates and distorts it (Plomp 1978, 1986; Dreschler & Plomp 1980, 1985; Glasberg & Moore 1989). In adults with moderate hearing loss, symmetrical conductive hearing loss is more detrimental to sound source localization in the horizontal plane than symmetrical sensorineural hearing loss (Noble, Byrne, & Lepage 1994). This performance difference has been attributed to disruption of low-frequency interaural time cues in listeners with conductive hearing loss: the reduced effectiveness of air-conducted sound and the increased reliance on bone conduction lead to a loss or reduction of cochlear isolation (Noble et al. 1994), and consequently to a reduction in binaural difference cues. This is relevant to the current study because SRM and localization are thought to rely on some of the same binaural cues. The distortion of air-conducted sound in sensorineural hearing loss, and the disruption of bone-conducted interaural cues in conductive hearing loss, may affect SRM in ways that are not captured by the simulated conductive UHL evaluated here. This possibility will be addressed in future studies of children with permanent conductive and sensorineural UHL.

Conclusions

Overall, our findings confirm that children and adults with normal bilateral hearing experience greater SRM when masking is primarily informational (two-talker speech) than when it is primarily energetic (speech-shaped noise). Because the effect of masker type on SRM was essentially eliminated in the presence of a simulated UHL, these results suggest that the detriment of listening with disrupted binaural input is more evident in competing two-talker speech than in speech-shaped noise, for both children and adults. This study was a first step toward applying this test method to children with permanent UHL. We expect that listeners with permanent UHL will experience some of the same deficits that listeners with simulated UHL demonstrated on the present task. However, the degree and type of hearing loss, as well as the adoption of compensatory listening strategies, could affect the results obtained in listeners with permanent UHL. The present data suggest that even mild to moderate degrees of conductive hearing loss may eliminate SRM and potentially result in functional communication difficulties. The finding that binaural squelch plays an important role in SRM for competing two-talker speech, but not for speech-shaped noise, could also have implications for audiologic rehabilitation and preferential classroom seating. Specifically, some children may benefit from more aggressive audiologic treatment options when listening in the presence of competing speech. Given the association between speech recognition performance in a two-talker masker and children’s real-world listening difficulties (Hillock-Dunn et al. 2015), assessment of SRM in a two-talker masker may provide important insight into the difficulties children with UHL face in everyday environments such as classrooms.
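The arithmetic behind this conclusion can be made concrete with a short Python sketch. Following the definition given in the Abstract, SRM is computed within each listening condition as the co-located threshold minus the threshold with the masker at +90° or −90°, and the masker-type effect is taken as SRM in two-talker speech minus SRM in speech-shaped noise. The function names and every threshold value below are hypothetical, chosen only to illustrate the sign conventions and the qualitative pattern described above; they are not data from the present study.

```python
"""Illustrative SRM arithmetic. All SRT values are hypothetical placeholders,
not data from the present study."""


def srm(colocated_srt_db: float, separated_srt_db: float) -> float:
    """Spatial release from masking (dB): co-located SRT minus separated SRT.

    Positive values mean that moving the masker away from the target lowered
    (improved) the threshold; negative values mean separation raised it.
    """
    return colocated_srt_db - separated_srt_db


# Hypothetical SRTs in dB SNR: (co-located, masker at +/-90 degrees).
# Each listening condition has its own co-located baseline, so SRM is
# computed within a condition (no plug vs. simulated UHL).
HYPOTHETICAL_SRTS = {
    ("two-talker", "no plug"): (0.0, -8.0),
    ("two-talker", "masker ipsilateral to plug"): (1.0, -1.0),
    ("two-talker", "masker contralateral to plug"): (1.0, 4.0),
    ("speech-shaped noise", "no plug"): (-5.0, -8.0),
    ("speech-shaped noise", "masker ipsilateral to plug"): (-4.0, -6.5),
    ("speech-shaped noise", "masker contralateral to plug"): (-4.0, -1.0),
}


def srm_by_condition(srts):
    """Map each (masker, listening condition) key to its SRM in dB."""
    return {key: srm(coloc, sep) for key, (coloc, sep) in srts.items()}


def masker_type_effect(srm_table, condition):
    """SRM in two-talker speech minus SRM in speech-shaped noise for one condition."""
    return (srm_table[("two-talker", condition)]
            - srm_table[("speech-shaped noise", condition)])


if __name__ == "__main__":
    table = srm_by_condition(HYPOTHETICAL_SRTS)
    for (masker, condition), value in table.items():
        print(f"{masker:>20} | {condition:<30} | SRM = {value:+.1f} dB")
    for condition in ("no plug", "masker ipsilateral to plug"):
        effect = masker_type_effect(table, condition)
        print(f"Masker-type effect ({condition}): {effect:+.1f} dB")
```

With these made-up values, SRM is larger in two-talker speech than in speech-shaped noise without the plug (a 5 dB masker-type effect), nearly identical across maskers when the masker is ipsilateral to the plug (a −0.5 dB effect), and negative for both maskers when the masker is contralateral to the plug. Computing SRM within each listening condition keeps the plug's overall attenuation from being counted as a spatial effect.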

Acknowledgments

Source of Funding: This work was supported by the National Institute on Deafness and Other Communication Disorders (NIDCD, R01 DC011038; NIDCD, R01 DC000397).

We are grateful to the members of the Human Auditory Development Laboratory for their assistance with data collection. Portions of this article were presented at the 43rd Annual Scientific and Technology Meeting of the American Auditory Society, Scottsdale, AZ, March 2016, and at the Auditory Development: From Cochlea to Cognition conference, Seattle, WA, August 2015.

Footnotes

There is no precedent in the literature to expect a difference between SRTs for a masker at +90° or −90° azimuth in normal-hearing listeners who are listening without simulated hearing loss. However, findings in the literature regarding the effect of UHL laterality on patient outcomes (e.g., speech recognition in noise) are inconsistent. In this study, laterality of the simulated UHL did not have a statistically significant effect on performance.

Conflicts of Interest

No conflicts of interest were declared.

References

- American National Standards Institute. ANSI S3.6-2010, American National Standard Specification for Audiometers. New York, NY: American National Standards Institute; 2010.

- Arbogast TL, Mason CR, Kidd G Jr. The effect of spatial separation on informational and energetic masking of speech. The Journal of the Acoustical Society of America. 2002;112(5):2086–2098. doi: 10.1121/1.1510141.

- Arbogast TL, Mason CR, Kidd G Jr. The effect of spatial separation on informational masking of speech in normal-hearing and hearing-impaired listeners. The Journal of the Acoustical Society of America. 2005;117(4 Pt 1):2169–2180. doi: 10.1121/1.1861598.

- Bench J, Kowal A, Bamford J. The BKB (Bamford-Kowal-Bench) Sentence Lists for Partially-Hearing Children. British Journal of Audiology. 1979;13(3):108–112. doi: 10.3109/03005367909078884.

- Bess FH, Dodd-Murphy J, Parker RA. Children with minimal sensorineural hearing loss: prevalence, educational performance, and functional status. Ear and Hearing. 1998;19(5):339–354. doi: 10.1097/00003446-199810000-00001.

- Bess FH, Tharpe AM. Unilateral Hearing Impairment in Children. Pediatrics. 1984;74(2):206–216.

- Bess FH, Tharpe AM, Gibler AM. Auditory Performance of Children with Unilateral Sensorineural Hearing Loss. Ear and Hearing. 1986;7(1):20–26. doi: 10.1097/00003446-198602000-00005.

- Bolia RS, Nelson WT, Ericson MA, et al. A speech corpus for multitalker communications research. The Journal of the Acoustical Society of America. 2000;107(2):1065–1066. doi: 10.1121/1.428288.

- Borg E, Risberg A, McAllister B, et al. Language development in hearing-impaired children. Establishment of a reference material for a “Language test for hearing-impaired children,” LATHIC. International Journal of Pediatric Otorhinolaryngology. 2002;65:15–26. doi: 10.1016/s0165-5876(02)00120-9.

- Bovo R, Martini A, Agnoletto M, et al. Auditory and Academic Performance of Children with Unilateral Hearing Loss. Scandinavian Audiology Supplementum. 1988;30:71–74.

- Bregman A. Auditory scene analysis: The perceptual organization of sound. Cambridge, MA: MIT Press; 1990.

- Bronkhorst AW. The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions. Acustica. 2000;86:117–128.

- Bronkhorst AW, Plomp R. The effect of head-induced interaural time and level differences on speech intelligibility in noise. The Journal of the Acoustical Society of America. 1988;83(4):1508–1516. doi: 10.1121/1.395906.

- Brookhouser PE, Worthington DW, Kelly WJ. Unilateral Hearing Loss in Children. Laryngoscope. 1991;101(12):1264–1272. doi: 10.1002/lary.5541011202.

- Brungart DS. Evaluation of speech intelligibility with the coordinate response measure. The Journal of the Acoustical Society of America. 2001a;109(5):2276–2279. doi: 10.1121/1.1357812.

- Brungart DS. Informational and energetic masking effects in the perception of two simultaneous talkers. The Journal of the Acoustical Society of America. 2001b;109:1101–1109. doi: 10.1121/1.1345696.

- Brungart DS, Simpson BD, Ericson MA, et al. Informational and energetic masking effects in the perception of multiple simultaneous talkers. The Journal of the Acoustical Society of America. 2001;110(5 Pt 1):2527–2538. doi: 10.1121/1.1408946.

- Calandruccio L, Gomez B, Buss E, et al. Development and Preliminary Evaluation of a Pediatric Spanish-English Speech Perception Task. American Journal of Audiology. 2014;23:158–172. doi: 10.1044/2014_AJA-13-0055.

- Cameron S, Dillon H, Newall P. The Listening in Spatialized Noise test: Normative data for children. International Journal of Audiology. 2006;45:99–108. doi: 10.1080/14992020500377931.

- Carhart R, Tillman TW, Greetis ES. Perceptual Masking in Multiple Sound Backgrounds. The Journal of the Acoustical Society of America. 1969;45(3):694–703. doi: 10.1121/1.1911445.

- Culling JF, Hawley ML, Litovsky RY. The role of head-induced interaural time and level differences in the speech reception threshold for multiple interfering sound sources. The Journal of the Acoustical Society of America. 2004;116(2):1057–1065. doi: 10.1121/1.1772396.

- Dancer J, Burl NT, Waters S. Effects of unilateral hearing loss on teacher responses to the SIFTER: Screening Instrument for Targeting Educational Risk. American Annals of the Deaf. 1995;140:291–294. doi: 10.1353/aad.2012.0592.

- Davis A, Haggard M, Bell I. Magnitude of diotic summation in speech-in-noise tasks: performance region and appropriate baseline. British Journal of Audiology. 1990;24(1):11–16. doi: 10.3109/03005369009077838.

- Dreschler WA, Plomp R. Relation between psychophysical data and speech perception for hearing-impaired subjects. I. The Journal of the Acoustical Society of America. 1980;68(6):1608–1615. doi: 10.1121/1.385215.

- Dreschler WA, Plomp R. Relations between psychophysical data and speech perception for hearing-impaired subjects. II. The Journal of the Acoustical Society of America. 1985;78(4):1261–1270. doi: 10.1121/1.392895.

- Durlach NI, Mason CR, Shinn-Cunningham BG, Arbogast TL, Colburn HS, Kidd G Jr. Informational masking: Counteracting the effects of stimulus uncertainty by decreasing target-masker similarity. The Journal of the Acoustical Society of America. 2003;114(1):368–379. doi: 10.1121/1.1577562.

- Ead B, Hale S, DeAlwis D, et al. Pilot study of cognition in children with unilateral hearing loss. International Journal of Pediatric Otorhinolaryngology. 2013;77(11):1856–1860. doi: 10.1016/j.ijporl.2013.08.028.

- Fitzpatrick EM, Whittingham J, Durieux-Smith A. Mild Bilateral and Unilateral Hearing Loss in Childhood: A 20-Year View of Hearing Characteristics, and Audiologic Practices Before and After Newborn Hearing Screening. Ear and Hearing. 2014;35(1):10–18. doi: 10.1097/AUD.0b013e31829e1ed9.

- Fletcher H. Auditory patterns. Reviews of Modern Physics. 1940;12(1):47–65.

- Fortnum HM, Summerfield AQ, Marshall DH, et al. Prevalence of permanent childhood hearing impairment in the United Kingdom and implications for neonatal hearing screening: questionnaire based ascertainment study. British Medical Journal. 2001;323(7312):536–540. doi: 10.1136/bmj.323.7312.536.

- Freyman RL, Balakrishnan U, Helfer KS. Spatial release from informational masking in speech recognition. The Journal of the Acoustical Society of America. 2001;109(5):2112–2122. doi: 10.1121/1.1354984.

- Freyman RL, Balakrishnan U, Helfer KS. Effect of number of masking talkers and auditory priming on informational masking in speech recognition. The Journal of the Acoustical Society of America. 2004;115(5):2246–2256. doi: 10.1121/1.1689343.

- Freyman RL, Helfer KS, McCall DD, et al. The role of perceived spatial separation in the unmasking of speech. The Journal of the Acoustical Society of America. 1999;106(6):3578–3588. doi: 10.1121/1.428211.

- Gallun FJ, Mason CR, Kidd G Jr. Binaural release from informational masking in a speech identification task. The Journal of the Acoustical Society of America. 2005;118:1614–1625. doi: 10.1121/1.1984876.

- Garadat SN, Litovsky RY. Speech intelligibility in free field: Spatial unmasking in preschool children. The Journal of the Acoustical Society of America. 2007;121(2):1047–1055. doi: 10.1121/1.2409863.

- Gelfand S. Auditory System and Related Disorders. In: Essentials of Audiology. 3rd ed. New York, NY: Thieme Medical Publishers, Inc; 2009. pp. 157–204.

- Glasberg BR, Moore BC. Psychoacoustic abilities of subjects with unilateral and bilateral cochlear hearing impairments and their relationship to the ability to understand speech. Scandinavian Audiology Supplementum. 1989;32:1–25.

- Grose JH, Hall JW, Dev MB. MLD in Children: Effects of Signal and Masker Bandwidths. Journal of Speech, Language, and Hearing Research. 1997;40:955–959. doi: 10.1044/jslhr.4004.955.

- Hall JW, Buss E, Grose JH, et al. Effects of Age and Hearing Impairment on the Ability to Benefit from Temporal and Spectral Modulation. Ear and Hearing. 2012;33(3):340–348. doi: 10.1097/AUD.0b013e31823fa4c3.

- Hall JW, Buss E, Grose JH, et al. Developmental Effects in the Masking-Level Difference. Journal of Speech, Language, and Hearing Research. 2004;47:13–20. doi: 10.1044/1092-4388(2004/002).

- Hartvig Jensen J, Angaard Johansen P, Borre S. Unilateral sensorineural hearing loss in children and auditory performance with respect to right/left ear differences. British Journal of Audiology. 1989;23:207–213. doi: 10.3109/03005368909076501.

- Hawley ML, Litovsky RY, Culling JF. The benefit of binaural hearing in a cocktail party: Effect of location and type of interferer. The Journal of the Acoustical Society of America. 2004;115(2):833–843. doi: 10.1121/1.1639908.