Abstract

Objectives

At a minimum, unilateral hearing loss (UHL) impairs sound localization ability and understanding speech in noisy environments, particularly if the loss is severe to profound. Accompanying the numerous negative consequences of UHL is considerable unexplained individual variability in the magnitude of its effects. Identification of co-variables that affect outcome and contribute to variability among individuals with UHL could augment counseling, treatment options, and rehabilitation. Cochlear implantation as a treatment for UHL is on the rise, yet little is known about factors that could impact performance or whether there is a group at risk for poor cochlear implant outcomes when hearing is near-normal in one ear. The overall goal of our research is to investigate the range and source of variability in speech recognition in noise and localization among individuals with severe to profound UHL and thereby help determine factors relevant to decisions regarding cochlear implantation in this population.

Design

The present study evaluated adults with severe to profound UHL and adults with bilateral normal hearing. Measures included adaptive sentence understanding in diffuse restaurant noise, localization, roving-source speech recognition (words from 1 of 15 speakers in a 140° arc) and an adaptive speech-reception threshold psychoacoustic task with varied noise types and noise-source locations. There were three age-gender-matched groups: UHL (severe to profound hearing loss in one ear and normal hearing in the contralateral ear), normal hearing listening bilaterally, and normal hearing listening unilaterally.

Results

Although the normal-hearing-bilateral group scored significantly better and had less performance variability than UHLs on all measures, some UHL participants scored within the range of the normal-hearing-bilateral group on all measures. The normal-hearing participants listening unilaterally had better monosyllabic word understanding than UHLs for words presented on the blocked/deaf side but not the open/hearing side. In contrast, UHLs localized better than the normal-hearing unilateral listeners for stimuli on the open/hearing side but not the blocked/deaf side. This suggests that UHLs had learned strategies for improved localization on the side of the intact ear. The UHL and unilateral normal-hearing participant groups were not significantly different for speech-in-noise measures. UHL participants with childhood rather than recent hearing loss onset localized significantly better; however, these two groups did not differ for speech recognition in noise. Age at onset in UHL adults appears to affect localization ability differently than understanding speech in noise. Hearing thresholds were significantly correlated with speech recognition for UHL participants but not for the other two groups.

Conclusions

Auditory abilities of UHLs varied widely and could be explained only in part by hearing threshold levels. Age at onset and length of hearing loss influenced performance on some, but not all, measures. Results support the need for a revised and diverse set of clinical measures, including sound localization and understanding speech in varied environments, and for careful consideration of functional abilities as individuals with severe to profound UHL are considered as potential cochlear implant candidates.

INTRODUCTION

Individuals with unilateral hearing loss (UHL), particularly if the loss is severe to profound, report that listening in noise and localizing sound are difficult tasks; however, the extent of the difficulty has not been fully appreciated by others. Numerous observations challenge the customary notion that communication and localization problems have a minimal impact on daily life for those with UHL. Responses from patients with UHL to questions of perceived handicap in real-life situations suggested that two dominant factors influence communication and localization: hearing asymmetry between ears and auditory sensitivity (Gatehouse et al. 2004). A subsequent examination of questionnaire responses (Noble et al. 2004) reported that patients with asymmetric hearing were significantly more disabled than those with symmetric hearing loss for speech recognition, spatial hearing, and quality of sound. A more recent study evaluated the effects of hearing mode [normal hearing (NH), a cochlear implant (CI), or a hearing aid (HA)] in the better ear of individuals who also had severe to profound hearing loss in the contralateral ear (Dwyer et al. 2014). Using the subscale analysis described by Gatehouse and Akeroyd (2006), results were not significantly different between the three groups (NH, CI, HA) on six of the 10 subscales (i.e., speech in noise, multiple-stream processing and switching, localization, distance and movement, segregation of sounds, listening effort) of the Speech, Spatial and Qualities of Hearing Scale (SSQ; Gatehouse and Noble 2004). In other words, listening was perceived as equally challenging among these unilateral listeners regardless of whether the individual’s only hearing ear had NH, a CI or an HA.

Problems experienced by individuals with UHL are related to the disadvantages of monaural compared to binaural hearing. Binaural hearing offers several advantages to improve listening in noise, including the head-shadow effect (i.e., the head acts as a buffer to improve the signal-to-noise ratio at the ear distant from the noise) and the squelch effect (i.e., adding the ear closest to the noise source improves speech perception). Individuals with bilateral NH show a significant binaural advantage for speech understanding in noise (Abel et al. 1982; Bronkhorst et al. 1988; Feuerstein 1992; Hawley et al. 1999). Understanding soft speech is enhanced with bilateral input due to binaural-summation effects, which improve signal detection (Hirsh 1948). Although few studies have addressed listening at soft presentation levels, UHL presumably affects listening at increased distances or when speech intensities are lowered (Bess and Tharpe 1986; Noh et al. 2012). Bilateral input also adds redundancy and thus improves information processing. Without a binaural system, as in the case of UHL, binaural-summation effects are lost.

Impaired sound localization is commonly reported for individuals with UHL (Douglas et al. 2007; Dwyer et al. 2014; Humes et al. 1980; Olsen et al. 2012; Rothpletz et al. 2012; Slattery and Middlebrooks 1994). NH listeners primarily use interaural time differences (ITDs) and interaural level differences (ILDs) to compare signals arriving at the two ears and to determine the sound-source location in the horizontal plane. For vertical and front/back localization, additional spectral shape cues are generated from the pinnae and head position to assist the listener (Agterberg et al. 2014; Algazi et al. 2001; Middlebrooks 1992; Wightman et al. 1997). UHL reduces the ability to compare signals between ears and therefore diminishes localization accuracy.
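To give a sense of the scale of the interaural time cue described above, the sketch below uses the classic spherical-head (Woodworth) approximation, ITD ≈ (a/c)(θ + sin θ). This model is not part of the present study, and the head radius (8.75 cm) and speed of sound (343 m/s) are assumed textbook values. The point it illustrates is that the cue exists only as a difference between the two ears, so it is unavailable to a listener with UHL.

```python
import math

# Assumed textbook values, not measured in this study.
HEAD_RADIUS_M = 0.0875      # approximate adult head radius (meters)
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 degrees C


def woodworth_itd(azimuth_deg: float,
                  radius_m: float = HEAD_RADIUS_M,
                  c_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Approximate ITD in seconds for a distant source in the horizontal
    plane (spherical-head model); 0 degrees is straight ahead, and the sign
    of the result follows the sign of the azimuth."""
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be within [-90, 90] degrees")
    theta = math.radians(azimuth_deg)
    return (radius_m / c_m_s) * (theta + math.sin(theta))


for az in (0, 10, 30, 70, 90):
    print(f"{az:>3} deg -> {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

Under these assumptions the ITD grows from zero straight ahead to about 656 microseconds for a source at 90 degrees.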

Accompanying the numerous negative consequences of UHL is considerable unexplained individual variability in the magnitude of its effects. For example, on localization tasks, some individuals with UHL showed fair accuracy in their ability to localize whereas others did not (Firszt et al. 2015; Rothpletz et al. 2012; Slattery and Middlebrooks 1994; Van Wanrooij et al. 2004). Among five UHL patients studied by Slattery and Middlebrooks (1994), two patients could not localize and performed similarly to NH controls who had one ear plugged, while three patients with long-term unilateral deafness had significantly better localization. In a study of localization training for adults with unilateral severe to profound hearing loss, localization scores varied considerably both prior to and following training (Firszt et al. 2015). Likewise, Rothpletz et al. (2012) reported varied performance on a localization task among adults with UHL. Identification of co-variables that influence outcomes and contribute to variability among individuals with UHL is needed and could augment counseling, treatment options and rehabilitation. This is particularly important as treatment options that address the deficits of UHL advance. While devices that route sound to the contralateral ear are available (e.g., osseointegrated and contralateral routing of signal hearing devices), they do not restore hearing to the poor ear and therefore cannot provide access to binaural processing advantages. Cochlear implantation as a treatment for UHL is on the rise (Arndt et al. 2011; Firszt et al. 2012; Kitterick et al. 2016; Vermeire et al. 2009; Zeitler et al. 2015), yet little is known about factors that could impact performance or whether there is a group at risk for poor CI outcomes when hearing is normal or near-normal in one ear. Finally, more objective evaluations of monaural abilities are needed to inform future studies of outcomes provided by sensory devices.

The overall goal of our research is to investigate the range and source of variability in speech recognition in noise and localization among individuals with severe to profound UHL and thereby help determine factors relevant to decisions regarding cochlear implantation in this population. In the present study, speech recognition and localization were assessed and quantified in adults with UHL and compared to those of listeners with NH bilaterally. Measures addressed areas known to be challenging for individuals with UHL and included aspects designed to replicate real-life listening situations. Speech recognition was tested using stimuli presented from roved locations, at average and soft intensity levels. Speech-reception thresholds were measured with noise sources that varied by type and location. Sound localization was assessed in the horizontal plane. In addition, results were compared with those of NH individuals listening with one ear. That is, the study included unilateral listeners who had not adapted to UHL, to explore whether individuals with chronic UHL learned to use cues provided by a single hearing ear. Finally, it is unclear whether speech recognition in noise and localization abilities develop differently in adults with early-onset versus later-onset hearing loss. Therefore, we compared results between two groups of adults with UHL who differed in age at onset of severe to profound hearing loss (SPHL).

MATERIALS AND METHODS

This study was reviewed and approved by the Human Research Protection Office at Washington University School of Medicine (WUSM).

Participants

Adults with SPHL in one ear and normal hearing in the other ear (i.e., unilateral hearing loss) were recruited from the outpatient audiology and otology clinics at WUSM. In general, upon enrollment of a UHL participant, two additional age-gender-matched participants with normal hearing (NH) bilaterally were recruited through the University’s participant volunteer program. One NH match was tested listening unilaterally (NH-unilateral) with the same ear (right or left) as the UHL participant, and the other NH match was tested listening bilaterally (NH-bilateral). This resulted in three similarly sized groups matched for age and gender. One NH-bilateral participant’s data were excluded from all analyses due to extreme outlier (poorer) results on multiple measures. Descriptive information for the three participant groups is provided in Table 1. There were 26 UHL adults with a mean age of 49.1 years, including 15 females and 11 males; 13 had right-ear and 13 had left-ear deafness. The mean pure-tone average (PTA) across all frequencies (.25–8 kHz) was 16.2 dB HL for the intact ear and 110.4 dB HL for the deaf ear. The mean age at onset of SPHL was 27.3 years and ranged from birth to 61 years. Eight of the participants had SPHL onset at a very young age (by age 3 years) and nine had recent SPHL onset (within 3 years of testing). The NH-unilateral group included 25 participants, 15 females and 10 males; 12 listened with the right ear and 13 listened with the left ear during testing. The mean age for the NH-unilateral participants was 48.8 years and the mean PTA across all frequencies for the tested ear was 11.9 dB HL. The NH-bilateral group consisted of 23 adults, 15 females and 8 males, with a mean age of 49.7 years and a mean PTA across all frequencies and both ears of 12.5 dB HL. There was no significant difference in hearing for the NH ears of the three groups.

Table 1.

Participant group descriptive statistics

| Group | Age (years) | Hearing (dB HL), avg .25–8 kHz, NH ear(s) | Hearing (dB HL), avg .25–8 kHz, SPHL ear | Age at onset of SPHL (years) | Length of deafness (years) |
|---|---|---|---|---|---|
| UHL (n = 26) | 49.1 (12.9), 25–71 | 16.2 (7.9), 4.4–30.0 | 110.4 (10.6), 78.3–121.3+ | 27.3 (22.7), 0–61 | 21.9 (21.8), <1–72 |
| NH-unilateral (n = 25) | 48.8 (13.7), 22–71 | 11.9 (4.5), 3.8–23.1 | na | na | na |
| NH-bilateral (n = 23) | 49.7 (11.6), 22–67 | 12.5 (5.9), 2.5–25.6 | na | na | na |

Note: SPHL = severe to profound hearing loss; UHL = unilateral hearing loss; NH = normal hearing; na = not applicable. For each participant group, means, standard deviations (in parentheses) and ranges are indicated.

Test Measures

All testing occurred in double-walled sound booths with the participant comfortably seated. Test stimuli and presentation equipment were calibrated for accuracy and consistency. One ear of the NH-unilateral participants was blocked with a plug and muff. Specifications for the E-A-R plug used were 41, 43, 42, 38, 48, and 47 dB of attenuation at .25, .5, 1, 2, 4, and 8 kHz, respectively. For a subset of participants, adding a Howard Leight earmuff to the E-A-R plug resulted in average attenuation of 46, 51, 49, 51, 41, 42, 50, 52, and 54 dB at .25, .5, .75, 1, 2, 3, 4, 6, and 8 kHz, respectively, or an average attenuation of 48 dB across frequencies.
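The 48-dB figure for the plug-plus-muff combination can be checked as a simple unweighted mean of the nine per-frequency values listed above. That the reported average is unweighted is an assumption; the averaging method is not stated.

```python
# Plug-plus-muff attenuation (dB) by test frequency (kHz), as listed above.
attenuation_db = {
    0.25: 46, 0.5: 51, 0.75: 49, 1: 51, 2: 41,
    3: 42, 4: 50, 6: 52, 8: 54,
}

# Unweighted arithmetic mean across the nine frequencies.
mean_attenuation = sum(attenuation_db.values()) / len(attenuation_db)
print(f"Mean attenuation: {mean_attenuation:.1f} dB")  # 436 / 9 = 48.4 dB
```

Rounded to the nearest decibel, this gives the 48 dB reported in the text.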

Speech understanding in noise was evaluated with the Hearing In Noise Test (HINT; Nilsson et al. 1994) in the R-Space (Compton-Conley et al. 2004; Revit et al. 2002). The R-Space is a sound system that consists of eight loudspeakers equally spaced in a 360-degree array, with each loudspeaker 24 inches from the center of the participant’s head. Restaurant noise was presented from each loudspeaker at 60 dB SPL to create a diffuse noisy environment that replicated a challenging real-life listening situation. HINT sentences were presented from the front loudspeaker (0 degrees azimuth) beginning at +6 dB signal-to-noise ratio (SNR). The SNR was made easier or more difficult based on participant responses, using a 4-dB step size for the first four presentations followed by a 2-dB step size for the remaining 16 presentations. The SNRs for these 16 presentations, plus the SNR that would have been used for a 21st presentation (17 values in all), were averaged to estimate the SNR required for 50% accuracy (SNR-50). Two 20-sentence lists were administered and averaged for a final score.
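The adaptive track and SNR-50 scoring described above can be sketched as follows. The starting SNR, step sizes, and averaging window follow the text; the response rule (a correct sentence lowers the next SNR, an incorrect one raises it) and the function name `hint_snr50` are assumptions for illustration, not the HINT software's actual implementation.

```python
from statistics import mean


def hint_snr50(responses, start_snr_db=6.0, n_large_steps=4,
               large_step_db=4.0, small_step_db=2.0):
    """Estimate SNR-50 (dB) for one 20-sentence list (hypothetical helper).

    responses: 20 booleans, True if the sentence was repeated correctly.
    Assumed rule: correct -> next SNR lower (harder); incorrect -> higher.
    """
    if len(responses) != 20:
        raise ValueError("expected 20 sentence responses")
    snr = start_snr_db
    presented = []
    for i, correct in enumerate(responses):
        presented.append(snr)
        # 4-dB steps after the first four sentences, 2-dB steps thereafter.
        step = large_step_db if i < n_large_steps else small_step_db
        snr += -step if correct else step
    # Sentences 5-20 (16 values) plus the SNR a 21st sentence would receive.
    return mean(presented[n_large_steps:] + [snr])
```

For a listener who repeats every sentence correctly, the track descends 4 dB per sentence to −10 dB at sentence 5 and then 2 dB per sentence, giving an SNR-50 of −26.0 dB; a track that alternates correct and incorrect responses stays near the starting SNR. The final score would average two such lists.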

Localization and word recognition were measured using a Roving Consonant Vowel-Nucleus Consonant (CNC) measure. CNC words from American English lists created at the University of Melbourne (Skinner et al. 2006) were presented randomly from loudspeakers along a 140-degree arc at 60 dB SPL (intensity roved ±3 dB). This test used 15 loudspeakers (numbered 1–15) arranged 10 degrees apart on a horizontal plane. The participant was seated approximately three feet in front of the center loudspeaker (#8) and was unaware that five loudspeakers were inactive (#2, 4, 8, 12, 14). Each test administration included 100 presentations, with 10 words presented randomly from each of the 10 active loudspeakers with the carrier “Ready” (average stimulus duration of 400 ms). The participants were instructed to face the center loudspeaker between presentations but were allowed to turn their heads during each carrier-word presentation. After each presentation, the participant repeated the word and indicated the source loudspeaker number. For localization, a root-mean-square (RMS) error score was calculated to summarize the magnitude of target-response differences irrespective of error direction: each target-response difference was squared, the squared differences were summed and divided by the number of trials, and the square root of that quotient was taken. The task was administered twice and the two RMS error scores were averaged. For word recognition, the percentages of words correctly identified in the two administrations were averaged. Localization and word-recognition scores were also calculated by side of presentation. As a result, in addition to the total scores, the UHL and NH-unilateral participants had ipsilateral-side and contralateral-side scores (relative to the NH ear of UHL participants or the open ear of NH-unilateral participants). NH-bilateral participants had right-side and left-side scores.
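The RMS error score and its split by side of presentation can be sketched as below. The loudspeaker layout (15 loudspeakers 10 degrees apart, #8 at center, #2, 4, 8, 12 and 14 inactive) follows the text; the sign convention (negative azimuth = listener's left), the `open_ear` parameter, and the function names are assumptions for illustration.

```python
import math

# Loudspeakers 1-15 sit 10 degrees apart; #8 is straight ahead (0 degrees).
# Assumed sign convention for this sketch: negative azimuth = listener's left.
ACTIVE_SPEAKERS = (1, 3, 5, 6, 7, 9, 10, 11, 13, 15)  # #2, 4, 8, 12, 14 inactive


def speaker_azimuth(n: int) -> int:
    """Azimuth in degrees of loudspeaker n (1-15)."""
    if not 1 <= n <= 15:
        raise ValueError("loudspeaker number must be 1-15")
    return (n - 8) * 10


def rms_error_deg(targets, responses) -> float:
    """RMS localization error in degrees over paired trials.

    targets: active loudspeakers that actually played.
    responses: loudspeakers the listener reported (any of 1-15,
    including inactive ones).
    """
    targets, responses = list(targets), list(responses)
    if not targets or len(targets) != len(responses):
        raise ValueError("need equal-length, non-empty trial lists")
    if any(t not in ACTIVE_SPEAKERS for t in targets):
        raise ValueError("targets must be active loudspeakers")
    squared = [(speaker_azimuth(r) - speaker_azimuth(t)) ** 2
               for t, r in zip(targets, responses)]
    return math.sqrt(sum(squared) / len(squared))


def rms_by_side(targets, responses, open_ear: str = "left") -> dict:
    """Score ipsilateral and contralateral targets separately, relative to
    the open (or normal-hearing) ear. The center loudspeaker (#8) is
    inactive, so every target falls on one side or the other."""
    sign = -1 if open_ear == "left" else 1
    sides = {"ipsilateral": [], "contralateral": []}
    for t, r in zip(targets, responses):
        key = "ipsilateral" if speaker_azimuth(t) * sign > 0 else "contralateral"
        sides[key].append((t, r))
    return {side: rms_error_deg([t for t, _ in pairs], [r for _, r in pairs])
            for side, pairs in sides.items() if pairs}
```

For example, with the left ear open, `rms_by_side([1, 15], [3, 15])` scores a 20-degree error on the ipsilateral side and no error on the contralateral side.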

An Adaptive Speech-Reception Threshold (SRT) psychoacoustic task, modified from the task described by Litovsky and Johnstone (2005, 2006), was also administered. Testing was completed with three different loudspeaker configurations. Spondees were spoken by a male talker and always presented from a front-facing loudspeaker at 0 degrees azimuth, 1.5 meters from the participant. Competing noise was presented from one of three loudspeakers, depending on the configuration: the front loudspeaker, a loudspeaker 90 degrees to the right, or a loudspeaker 90 degrees to the left. In addition to a quiet condition, there were two types of single-talker noise (a female talker and a male talker, each presenting Harvard IEEE sentences) and one type of multi-talker babble (MTB). When present, noise was 60 dB SPL. For each loudspeaker configuration (noise front, noise right and noise left), spondees were initially presented at 60 dB SPL. A four-alternative forced-choice task without feedback determined subsequent presentation levels, using an adaptive paradigm based on participant responses that continued through four reversals. The four spondee choices were displayed in the four quadrants of a computer screen, and participants made a selection by touching one of the four boxed words. Noise conditions varied pseudorandomly and were tracked independently, resulting in an SRT (average of the last three reversals) for each noise condition and each loudspeaker configuration (e.g., MTB noise front, MTB noise left, MTB noise right, female-talker noise front, female-talker noise left, etc.), for a total of nine SRTs.
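The text specifies the stopping rule (four reversals) and the threshold estimate (mean of the last three reversals) but not the step sizes or up/down rule. The sketch below therefore starts from a recorded sequence of presentation levels and implements only the reversal averaging; the function names and the treatment of repeated levels are assumptions.

```python
from statistics import mean


def find_reversals(levels):
    """Return the levels (dB SPL) at which an adaptive track changes direction.

    levels: presentation levels in the order they were used. Repeated
    levels (no change) are skipped when determining direction.
    """
    reversals = []
    prev_direction = 0
    for current, nxt in zip(levels, levels[1:]):
        if nxt == current:
            continue
        direction = 1 if nxt > current else -1
        if prev_direction and direction != prev_direction:
            reversals.append(current)  # the turning-point level
        prev_direction = direction
    return reversals


def srt_from_track(levels, n_reversals=4, n_averaged=3):
    """SRT = mean of the last `n_averaged` of the first `n_reversals` reversals."""
    reversals = find_reversals(levels)[:n_reversals]
    if len(reversals) < n_reversals:
        raise ValueError(f"track has only {len(reversals)} reversals")
    return mean(reversals[-n_averaged:])
```

Because noise conditions were interleaved pseudorandomly and tracked independently, one such track, and one SRT, would be kept per noise type and loudspeaker configuration.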

Data Analysis

Analysis of data for outliers and distribution resulted in one NH-bilateral participant’s data being eliminated. For data sets that were not normally distributed, non-parametric statistics were used. An ANOVA or the Kruskal-Wallis H test was used to identify main effects. For significant findings, post-hoc comparisons were conducted with Bonferroni-corrected t-tests or Mann-Whitney U tests, and significance was set at p ≤ 0.05. Pearson or Spearman correlation coefficients were used to analyze correlations between variables. Because of multiple comparisons, a more stringent significance level (p ≤ 0.005) was used for correlations.
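For readers less familiar with the Kruskal-Wallis H test, the pure-Python sketch below computes H from pooled ranks, with average ranks for ties and the usual tie correction; in practice a library routine such as `scipy.stats.kruskal`, which also applies a tie correction, would normally be used. The p-value helper relies on the chi-square approximation with k − 1 degrees of freedom, which for three groups (2 df) has the closed form p = exp(−H/2). All function names here are hypothetical.

```python
import math
from itertools import chain


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Tie-corrected Kruskal-Wallis H statistic for two or more groups."""
    data = list(chain.from_iterable(groups))
    n = len(data)
    ranks = average_ranks(data)
    weighted_sum, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted_sum += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * weighted_sum - 3 * (n + 1)
    tie_counts = {}
    for v in data:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in tie_counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are tied")
    return h / correction


def p_value_three_groups(h):
    """Chi-square (2 df) approximation: P(X > h) = exp(-h / 2)."""
    return math.exp(-h / 2)
```

For example, H = 19.4 with three groups (the R-Space result reported in the Results) gives p ≈ 6 × 10⁻⁵ under this approximation, in line with the reported p < 0.001.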

RESULTS

Speech Recognition and Localization

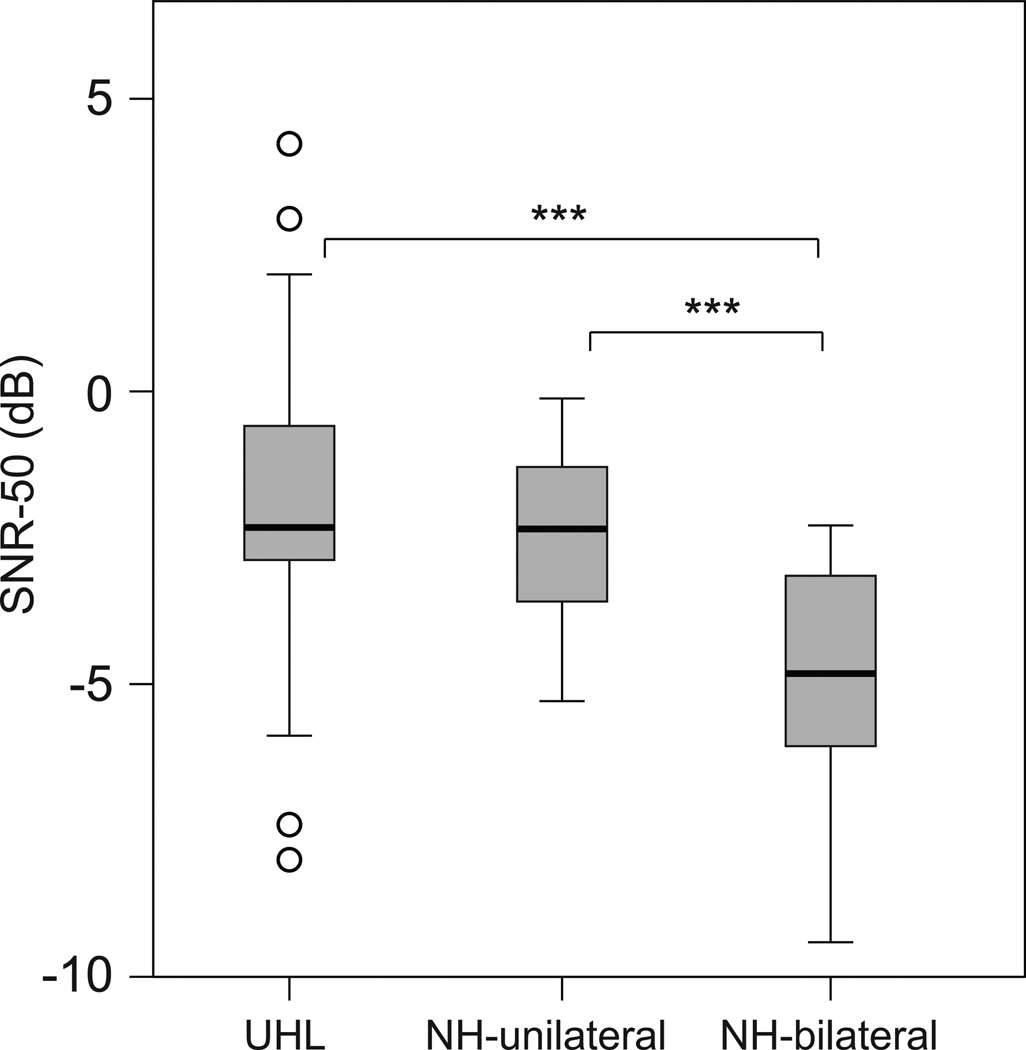

Box plots in Figure 1 show each group’s median and range of responses for sentence recognition in restaurant noise as measured in the R-Space. There was a significant group difference, H(2) = 19.4, p < 0.001. Post-hoc comparisons found no significant difference between the UHL and NH-unilateral groups (p > 0.05); however, the NH-bilateral group understood sentences in noise at significantly lower SNRs than the two unilateral listening groups (NH-bilateral vs. UHL, U = 107.5, p < 0.001; NH-bilateral vs. NH-unilateral, U = 104.5, p < 0.001).

Figure 1.

Box plots indicate the range of performance in the R-Space for each participant group. The box depicts the interquartile range transected by the median; tails represent the 10th to 90th percentiles, and outliers are indicated with open circles. Brackets and asterisks denote significant differences, ***p< 0.001. UHL indicates unilateral hearing loss; NH, normal hearing; SNR-50, signal-to-noise ratio required for 50 percent accuracy.

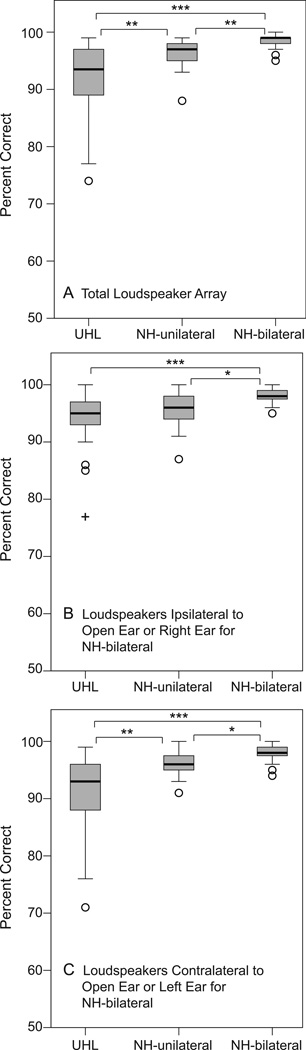

CNC word-recognition results are shown in Figure 2. Panel A has box plots for results from the full array of loudspeakers. Panel B shows results from the loudspeakers located ipsilateral to the NH ear of UHL participants, the open ear of NH-unilateral participants, and the right ear of NH-bilateral participants. Panel C shows results from loudspeakers located on the contralateral side (i.e., deaf ear of UHL, blocked ear of NH-unilateral, left ear of NH-bilateral participants). There was a significant group effect whether including results from the full loudspeaker array or either half of the array, H = 16.8 – 29.0, ps < 0.001. Bonferroni-corrected post hoc Mann-Whitney comparisons indicated higher word recognition for the NH-bilateral group than for either of the two unilateral listener groups in all three loudspeaker array analyses, U = 53 – 173, ps < 0.05. The NH-unilateral group scores were higher than those of the UHL group, and the differences were significant in the full array analysis (U = 166.5, p < 0.01) and the contralateral side analysis (U = 179.5, p < 0.01) but not in the ipsilateral side analysis (p > 0.05). No group had significantly different word recognition for the ipsilateral and contralateral sides (comparing box plots from panels B and C for each group; ps > 0.05).

Figure 2.

Box plots indicate the range of word-recognition performance for each participant group. The box depicts the interquartile range transected by the median; tails represent the 10th to 90th percentiles, and outliers are indicated with open circles and the extreme outlier with a plus symbol. Brackets and asterisks denote significant differences, *p< 0.05, **p< 0.01, ***p< 0.001. UHL indicates unilateral hearing loss; NH, normal hearing. Panel A shows results from the total loudspeaker array; Panel B, from loudspeakers ipsilateral to the open ear (or right ear of NH-bilateral participants); Panel C, from loudspeakers contralateral to the open ear (or left ear of NH-bilateral participants).

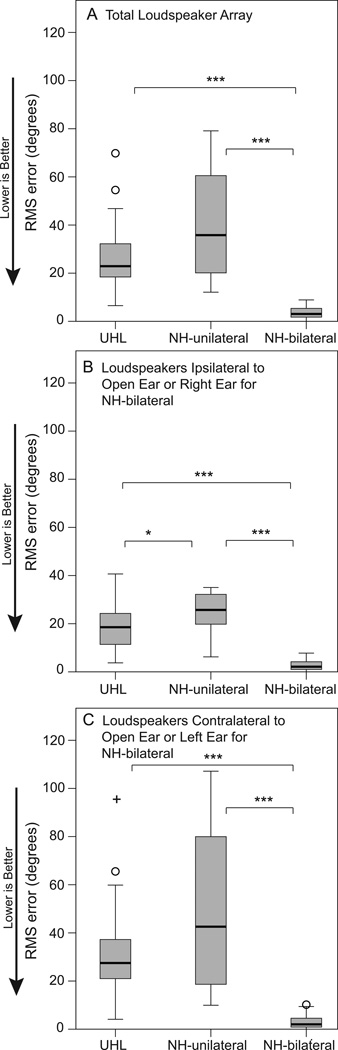

Figure 3 provides box plots of localization RMS error results following the same format as Figure 2. Results from the total array are displayed in panel A, with individual side results in panels B (ipsilateral to the NH or open ear for unilateral listeners and the right ear for NH-bilateral listeners) and C (contralateral or left ear side presentations). Consistent with word-recognition results, there was a significant group effect for all three analyses, H = 45.6 – 48.8, ps < 0.001. As expected, the NH-bilateral group localized significantly better than either unilateral listening group whether including results from all loudspeakers or either half of the array, U = 0 – 16, ps < 0.001. There was no difference in results for NH-bilateral participants based on the side of presentation (comparing the rightmost box plots of panels B and C; p > 0.05). Both the UHL and NH-unilateral groups localized significantly better for stimuli from the ipsilateral compared to the contralateral side (comparing box plots from panels B and C; UHL z = −4.2, p < 0.001; NH-unilateral z = −3.6, p < 0.001). When the stimuli were from the ipsilateral side (panel B), the UHL participants localized significantly better than the NH-unilateral participants, U = 182, p < 0.05. For both the total (panel A) and contralateral side (panel C) scores, there was a wider distribution across the second and third quartiles (shown by the gray boxes) for NH-unilateral than UHL participants; however, the group differences were not statistically significant (p > 0.05).

Figure 3.

Box plots indicate the range of localization performance for each participant group. The box depicts the interquartile range transected by the median; tails represent the 10th to 90th percentiles, and outliers are indicated with open circles or a plus symbol. Brackets and asterisks denote significant differences, *p< 0.05, ***p< 0.001. UHL indicates unilateral hearing loss; NH, normal hearing; RMS, root mean square. Panel A shows results from the total loudspeaker array; Panel B, from loudspeakers ipsilateral to the open ear (or right ear of NH-bilateral participants); Panel C, from loudspeakers contralateral to the open ear (or left ear of NH-bilateral participants).

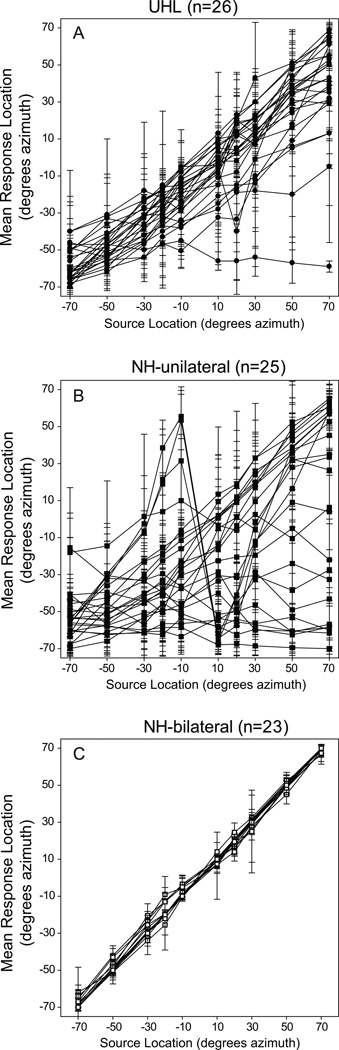

Individual localization results are shown in Figure 4. The mean and standard deviation for each loudspeaker and each individual are plotted for UHL participants in panel A, NH-unilateral participants in panel B and NH-bilateral participants in panel C. All UHL and NH-unilateral data are plotted with the −70° azimuth loudspeaker on the side of the NH/open ear (left side of x-axis and bottom of y-axis) and the 70° azimuth loudspeaker on the deaf/blocked side (right side of x-axis and top of y-axis). Results for participants who correctly identified the source of all (or most) presented stimuli would fall along a diagonal line from the bottom left-hand to the top right-hand corner, as seen for the NH-bilateral participants in panel C. As with the RMS error results shown in Figure 3, there is greater variability in responses for the unilateral listeners than for the NH-bilateral participants (particularly for stimuli presented toward the deaf/blocked ear), with the greatest variability among the NH-unilateral participants. Mean responses of unilateral listeners that did not loosely follow the diagonal were usually displaced toward the NH or open side (below the diagonal), with the exception of three NH-unilateral participants who, on average, perceived stimuli presented between −10 and −30 degrees azimuth as coming from loudspeakers on their blocked side. One UHL participant and several NH-unilateral participants consistently perceived stimuli as originating from the three to four loudspeakers at the far end of the array toward their NH/open ears.

Figure 4.

Each participant’s mean location response (y-axis) for each source location (x-axis) is plotted for the unilateral hearing loss (UHL) participants in Panel A, the normal hearing (NH) unilateral participants in Panel B, and the NH-bilateral participants in Panel C. Error bars denote plus or minus one standard deviation.

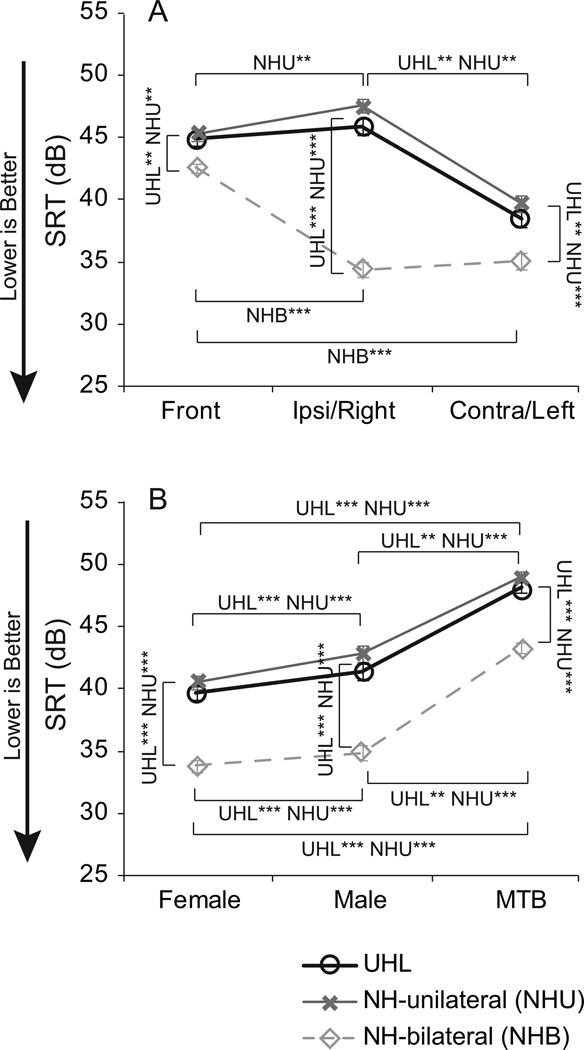

The Adaptive SRT psychoacoustic task resulted in one SRT in quiet and nine SRTs in noise. Although there was a significant main group effect for the Adaptive SRT in quiet, F(2) = 3.22, p < 0.05, follow-up pairwise comparisons were not significant (not shown; ps > 0.05). The means and standard deviations in quiet for the UHL, NH-unilateral and NH-bilateral participants were 17.5 dB (SD 6.3 dB), 16.9 dB (SD 5.1 dB) and 13.7 dB (SD 5.1 dB), respectively. Noise scores for the Adaptive SRT are shown in Figure 5. Panels A and B display the same data averaged across different parameters. Panel A plots the means for each group and each noise-source location averaged across noise types. Panel B plots the means for each group and each noise type averaged across noise-source locations. Results for the two unilateral listening groups are similar to each other in both panels, but the pattern relative to the NH-bilateral group differs. As seen in Figure 5A, there was a significant group effect for all three noise-source locations when collapsed across noise types [front F(2,71) = 9.1, p < 0.001; ipsilateral/right F(2,71) = 121.9, p < 0.001; contralateral/left F(2,71) = 135.9, p < 0.001]. Post-hoc comparisons indicated that the NH-bilateral participants’ (light gray diamonds) mean SRTs were significantly better than those of the unilateral listening groups (UHL black circles, NH-unilateral medium gray Xs) (ps < 0.01), whereas mean SRTs for the two unilateral listening groups did not differ significantly (p > 0.05). As expected, the greatest difference between the unilateral and bilateral listeners occurred when noise was toward the ipsilateral (open or better hearing) ear of the unilateral listeners. Although the difference between the NH-bilateral and unilateral listeners was significant for noise from the front and noise toward the contralateral (plugged or deaf) ear, these differences were much smaller.

Figure 5.

Mean Adaptive Speech-Reception Threshold (SRT) results for each participant group are plotted by the noise-source location, collapsed across noise type, along the x-axis in Panel A and by the noise type, collapsed across noise-source locations, along the x-axis in Panel B. Unilateral hearing loss (UHL) means are plotted in black, normal hearing listening unilaterally (NHU) means are plotted in medium gray, and normal hearing listening bilaterally (NHB) means are plotted in light gray. Noise-source locations (Panel A) were from the front, from the open side for unilateral listeners (Ipsi) or the right side for NHB, and from the contralateral side for unilateral listeners (Contra) or the left side for NHB. Noise types (Panel B) were a single female talker, a single male talker and multi-talker babble (MTB). Error bars denote plus or minus one standard error. Brackets and asterisks denote significant differences, **p< 0.01, ***p< 0.001. To clarify significance levels for specific group differences, group abbreviations are noted.

Figure 5B shows that as with the noise-source locations, there was a significant group effect for all three noise types when collapsed across locations [female F(2,71) = 42.7, p < 0.001; male F(2,71) = 41.4, p < 0.001; MTB F(2,71) = 60.0, p < 0.001]. Post-hoc comparisons indicated the NH-bilateral performance was significantly better than that of the two unilateral listening groups (ps < 0.001); and the two unilateral listening groups did not differ from each other (p > 0.05). For all three groups, there was a significant difference in mean SRTs based on noise type (female vs. male for NH-bilateral p < 0.05, for all other comparisons p < 0.001). The pattern was consistent for all three groups; male-talker noise was slightly more difficult than female-talker noise, and MTB was considerably more difficult than male-talker noise.

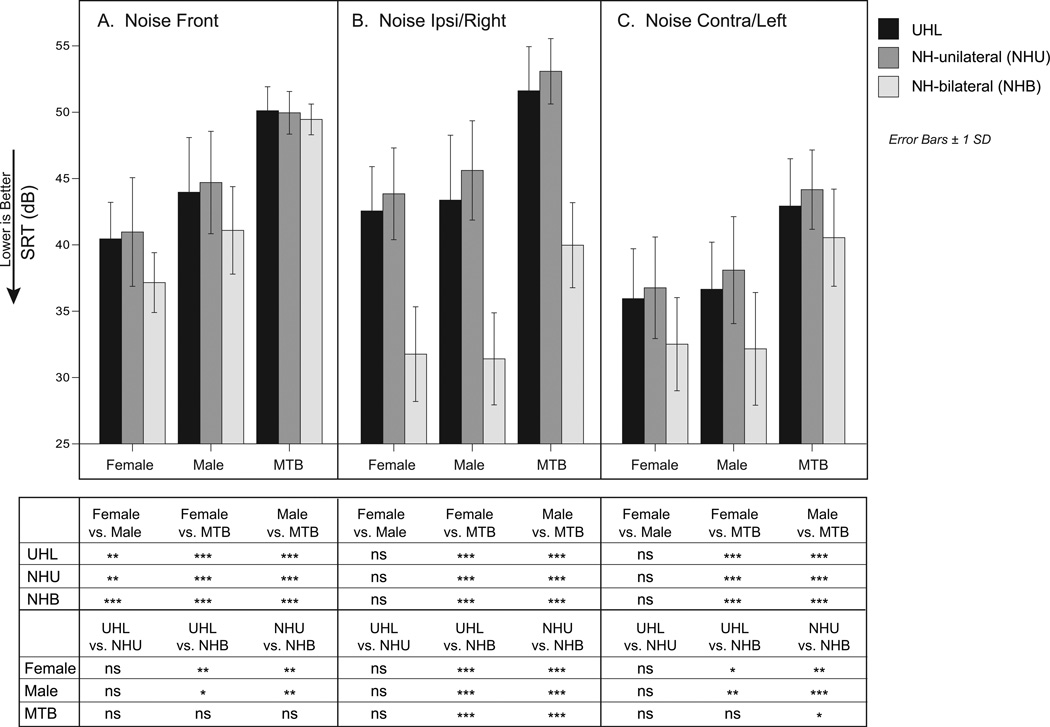

Some trends and interactions are seen more clearly when the Adaptive SRT results from Figure 5 are not collapsed across noise type or noise-source location. The bar graph in Figure 6 shows the group-mean SRTs (y-axis) for each noise-source location (Panels A, B and C) and noise type (x-axis), plotted for each hearing group (UHL in black, NH-unilateral in medium gray and NH-bilateral in light gray). Three one-way ANOVAs across hearing groups and noise types were used (one for each noise-source location), with Bonferroni-adjusted post-hoc comparisons. The interaction between noise type and hearing group varied with noise-source location. As in Figure 5, the two unilateral listening groups showed similar results regardless of noise-source location and noise type. When speech and noise were co-located at the front (Panel A), all three groups performed progressively worse as the noise type changed from female talker to male talker to MTB. With single-talker noise, mean SRTs were 3–4 dB better for the NH-bilateral group than for the unilateral groups; with MTB, however, the three groups did not differ. When noise was presented from the side (Panels B and C), performance with single-talker noise was better than with MTB for all three groups, but there was no significant difference between the female- and male-talker noise types for any group. When noise was directed toward the ipsilateral (open/better) ear (Panel B), the NH-bilateral mean SRTs were 11–14 dB better than the unilateral groups' mean SRTs. Even when noise was directed toward the contralateral (plugged/deaf) ear (Panel C), the NH-bilateral mean SRTs were 2–6 dB better than those of the unilateral listeners, consistent with the idea that the NH-bilateral listeners experienced binaural squelch.
The significant differences indicated in the table below the bar graph highlight two hearing-group trends: (1) the two unilateral listening groups did not differ significantly from each other in any condition, and (2) both unilateral listening groups were significantly poorer than the NH-bilateral group in all conditions except MTB from the front.

Figure 6.

Group Adaptive Speech-Reception Threshold (SRT) results for each noise type and noise-source location. Results for unilateral hearing loss (UHL) are in black, normal hearing listening unilaterally (NHU) are in medium gray, and normal hearing listening bilaterally (NHB) are in light gray. Noise type is shown along the x-axis for noise front (Panel A), noise ipsi/right (Panel B) and noise contra/left (Panel C). Error bars denote plus or minus one standard error. Significant differences are indicated in the table below the graph: ns = not significant, *p < 0.05, **p < 0.01, ***p < 0.001.

Age at Onset of SPHL

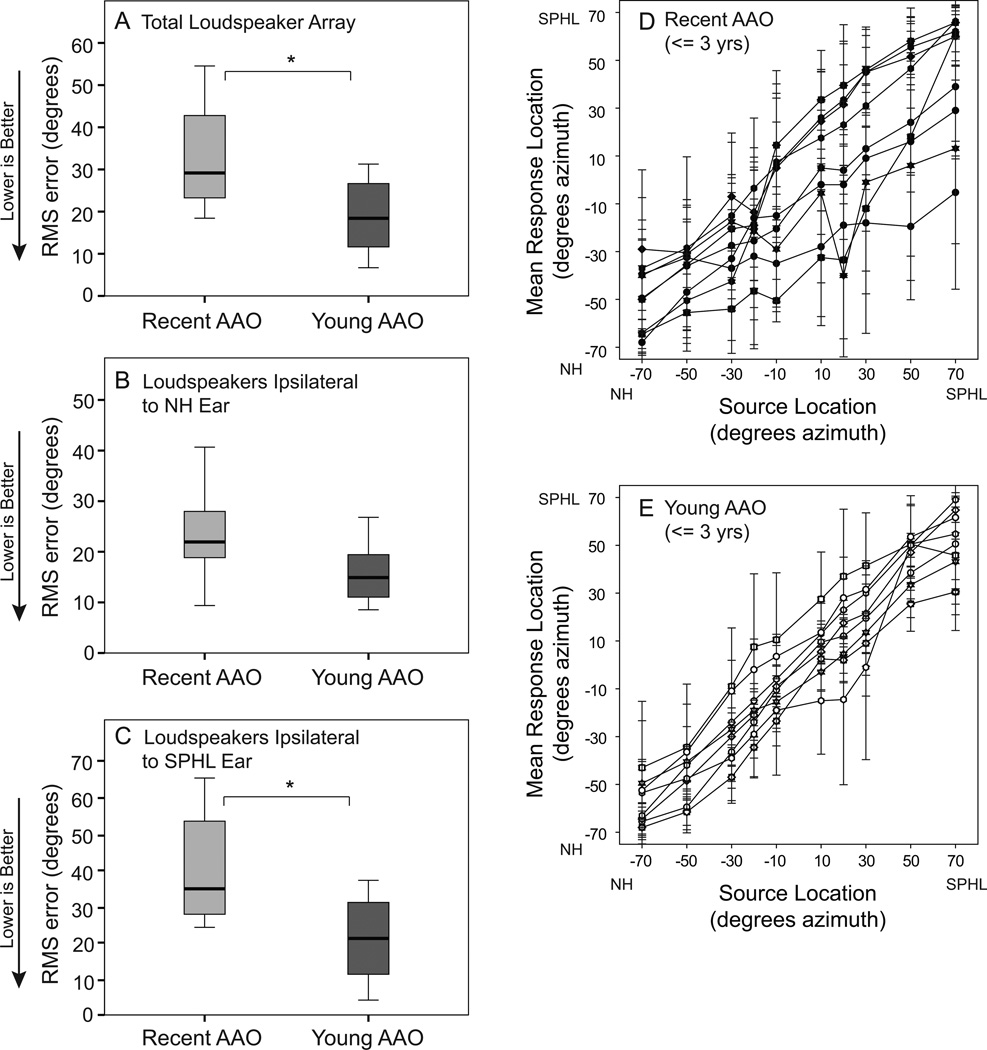

The UHL participants differed in their age at onset of SPHL. Within this group, nine had onset of SPHL within three years of testing (Recent age at onset, or Recent AAO) and eight had onset of SPHL by three years of age (Young AAO). Descriptive information about these two subgroups is provided in Table 2. The subgroups did not differ significantly in hearing, age, CNC word recognition in quiet, Adaptive SRT results, or speech recognition in noise measured in the R-Space (all ps > 0.05); they did, however, differ significantly in localization. These results are shown in Figure 7. Panels A–C are box plots of RMS error scores for the total localization array (Panel A), the NH-ear side of the array (Panel B) and the SPHL side of the array (Panel C). The Young AAO group localized more accurately than the Recent AAO group across the total array (U = 12.0, z = −2.31, p < 0.05) and when sound was presented on the SPHL side (U = 13.0, z = −2.21, p < 0.05). The groups did not differ significantly when sound was presented on the side of the NH ear (p > 0.05). Individual localization results by source loudspeaker are plotted in Figure 7, Panel D (Recent AAO) and Panel E (Young AAO). These plots further demonstrate the difference between the two groups across the loudspeaker array. The Young AAO participants had a similar range of mean responses for stimuli from either end of the array (i.e., ±50 and ±70 degrees), whereas the Recent AAO participants' mean responses varied more for stimuli originating toward the SPHL ear (+50 to +70 degrees) and were more comparable to those of the Young AAO participants for stimuli originating toward the NH ear (−50 to −70 degrees). Although these two groups differed substantially in length of SPHL, length of SPHL for the UHL group as a whole did not correlate with outcomes.
Childhood exposure to monaural listening appears to be more influential than length of SPHL on the group differences in localization ability.
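The z values reported for the two significant Mann-Whitney comparisons can be checked against the standard large-sample normal approximation to U. The sketch below is illustrative only: it assumes no tie correction and no continuity correction, and the function names are hypothetical rather than taken from the authors' analysis software.

```python
import math


def mann_whitney_z(u: float, n1: int, n2: int) -> float:
    """Normal approximation to the Mann-Whitney U statistic.

    Assumes no tie correction and no continuity correction.
    """
    mean_u = n1 * n2 / 2                                 # expected U under H0
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)       # SD of U under H0
    return (u - mean_u) / sd_u


def two_tailed_p(z: float) -> float:
    """Two-tailed p value from the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Recent AAO (n = 9) versus Young AAO (n = 8); U values as reported in the text.
for label, u in [("total array", 12.0), ("SPHL side", 13.0)]:
    z = mann_whitney_z(u, n1=9, n2=8)
    print(f"{label}: z = {z:.2f}, p = {two_tailed_p(z):.3f}")
```

With n1 = 9 and n2 = 8, U has a null mean of 36 and a standard deviation of √108 ≈ 10.39, so U = 12.0 and U = 13.0 give z ≈ −2.31 and z ≈ −2.21, both with two-tailed p below .05. Exact small-sample p values may differ slightly from this approximation.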

Table 2.

Recent AAO and Young AAO group demographic statistics

| Group | Age (years) | Hearing (dB), avg .25–8 kHz, NH ear | Hearing (dB), avg .25–8 kHz, SPHL ear | Age at onset of SPHL (years) | Length of deafness (years) |
|---|---|---|---|---|---|
| Recent AAO (n = 9) | 47.1 (11.3), 30–62 | 15.0 (9.1), 4.4–29.4 | 116.1 (3.3), 111.3–121.3+ | 45.7 (11.3), 29–61 | 1.6 (1.1), <1–3 |
| Young AAO (n = 8) | 43.8 (16.9), 25–72 | 15.1 (6.3), 5.0–23.1 | 107.3 (10.1), 90.0–120.1+ | 0.8 (1.4), 0–3 | 43.0 (16.7), 25–72 |

Note: Recent AAO = onset of SPHL within 3 years of testing; Young AAO = onset of SPHL by 3 years of age; SPHL = severe to profound hearing loss; means, standard deviations (in parentheses) and ranges are indicated.

Figure 7.

Localization results for participant subgroups defined by age at onset of severe to profound hearing loss (SPHL). In Panels A, B and C, box plots indicate the range of performance for participants with recent age at onset of SPHL (Recent AAO; within three years of testing), shown in gray on the left, and for participants with young age at onset of SPHL (Young AAO; by age three years), shown in black on the right. The box depicts the interquartile range transected by the median; tails represent the 10th to 90th percentiles. Root mean square (RMS) error in degrees is indicated along the y-axis. Brackets and asterisks denote significant differences, *p < 0.05. Panel A shows results for the total loudspeaker array; Panel B, for loudspeakers on the side of the normal hearing ear; Panel C, for loudspeakers on the side of the ear with SPHL. Each participant's mean location response (y-axis) for each source location (x-axis) is plotted for the Recent AAO participants in Panel D and the Young AAO participants in Panel E. Error bars denote plus or minus one standard deviation.

Hearing Thresholds

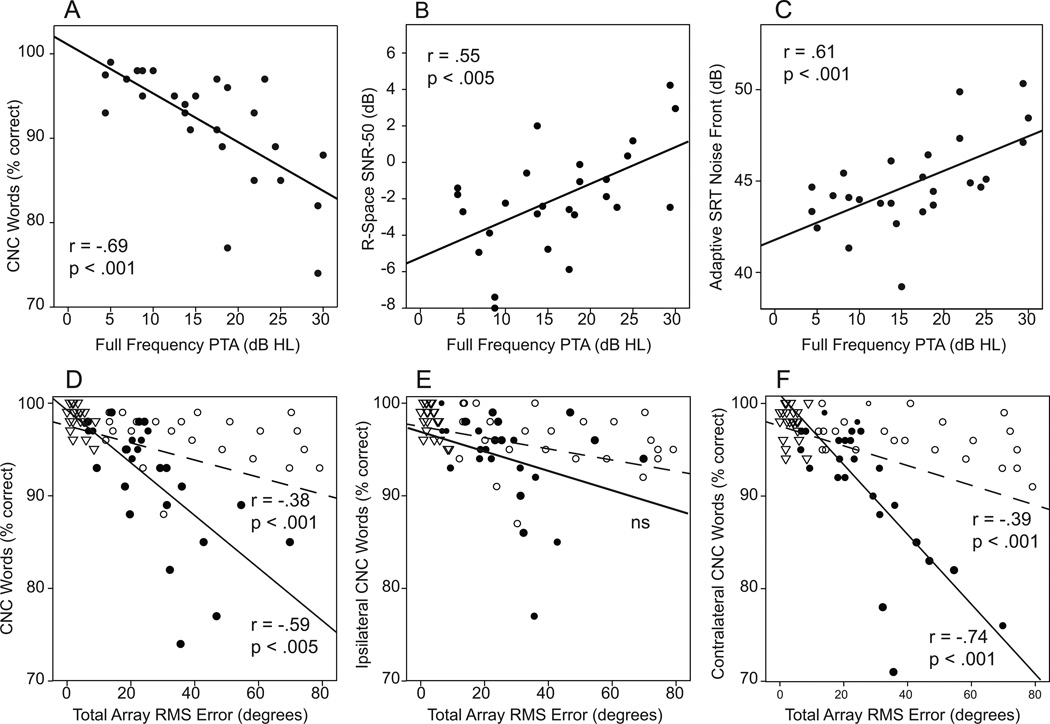

Hearing thresholds in the better ear, particularly high-frequency thresholds, have been shown to correlate with outcome measures in UHL adults (Agterberg et al. 2014; Firszt et al. 2015). In the current study, correlational analyses compared two measures of better-ear hearing [full-frequency PTA (FFPTA; .25–8 kHz) and high-frequency PTA (HFPTA; 6 and 8 kHz)] with speech recognition. With either FFPTA or HFPTA, there was a significant correlation with speech-recognition measures for the UHL participants but not for the two groups of NH listeners. Correlations were similar for both PTA calculations and all three speech-recognition measures (rs = .55–.69; all ps < 0.005). Scatter plots in the top row of Figure 8 show the correlation between FFPTA and CNC word recognition (Panel A), R-Space SNR-50 (Panel B) and the Adaptive SRT with noise from the front (Panel C) for the UHL group. Better FFPTAs were associated with better performance on each of these measures. Because hearing in the better ear of UHL adults, particularly high-frequency hearing, has been correlated with localization ability, we also compared FFPTA and HFPTA with RMS error scores for each participant group. No correlation reached our p < 0.005 criterion for any group (data not shown); however, there was a trend for poorer HFPTA to be associated with larger RMS error scores in UHLs (r = .47, p = 0.015) and for poorer FFPTA to be associated with larger RMS error scores in NH-bilateral listeners (r = .56, p = 0.006). Hearing thresholds in the low frequencies have also been shown to correlate with localization ability (Middlebrooks et al. 1991; Yost 2000). Among the UHL participants, the mean FFPTA (.25–8 kHz) in the poor ear was 110 dB (range 78.3 to >120 dB) and did not correlate with localization error scores; likewise, the UHL participants' low-frequency hearing in the poor ear (average of .25 and .5 kHz) did not correlate with RMS error scores (ps > 0.05).
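The two better-ear hearing measures differ only in which audiometric frequencies are averaged. The sketch below illustrates that difference on a hypothetical audiogram. HF_FREQS follows the text (6 and 8 kHz), but the exact frequency set behind FFPTA (".25–8 kHz") is not listed here, so FF_FREQS is an assumption, as are the function name and the threshold values.

```python
# Frequencies (Hz) averaged for each pure-tone average.
# FF_FREQS is an ASSUMED set spanning .25-8 kHz; HF_FREQS follows the text.
FF_FREQS = (250, 500, 1000, 2000, 3000, 4000, 6000, 8000)
HF_FREQS = (6000, 8000)


def pta(audiogram: dict, freqs: tuple) -> float:
    """Mean threshold (dB HL) across the requested frequencies."""
    missing = [f for f in freqs if f not in audiogram]
    if missing:
        raise ValueError(f"no threshold at {missing} Hz")
    return sum(audiogram[f] for f in freqs) / len(freqs)


# Hypothetical better-ear audiogram: frequency (Hz) -> threshold (dB HL).
better_ear = {250: 10, 500: 10, 1000: 5, 2000: 10, 3000: 15,
              4000: 15, 6000: 25, 8000: 30}

print("FFPTA:", pta(better_ear, FF_FREQS))  # FFPTA: 15.0
print("HFPTA:", pta(better_ear, HF_FREQS))  # HFPTA: 27.5
```

In this made-up example a mild high-frequency slope raises HFPTA well above FFPTA, which is why the two averages can rank listeners differently even when both are within normal limits.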

Figure 8.

Scatter plots depict correlations of speech-recognition measures with better-ear hearing levels (top row) and with localization ability (bottom row). The top row plots show the relation between better-ear hearing (full-frequency pure tone average) and CNC word recognition (Panel A), R-Space SNR-50 (Panel B) and Adaptive SRT with noise from the front (Panel C) for unilateral hearing loss (UHL) participants. The bottom row plots show the relation between localization ability (RMS error with the total array) and CNC word recognition when words were presented across the total array (Panel D), from the loudspeakers ipsilateral to the open/right ear (Panel E) and from the loudspeakers contralateral to the open/right ear, i.e., on the left side (Panel F). Participant groups are indicated by filled circles for UHL, open circles for NH-unilateral, and open triangles for NH-bilateral. Regression lines for the UHL group are solid and for the entire group of participants are dashed. Correlations for each are indicated near the regression lines.

The second row of Figure 8 shows the relation between CNC word-recognition and localization RMS-error scores for all participants when tested on the Roving CNC task. The r- and p-values indicated on each plot are partial correlations controlling for FFPTA. In each plot, subgroup results are indicated as filled circles for UHL, open circles for NH-unilateral, and open triangles for NH-bilateral. The solid regression lines are for the UHL subgroup and the dashed regression lines for the entire group of participants. The leftmost plot (Panel D) shows that the NH-bilateral participants scored well on both measures; the NH-unilateral group generally scored well on CNC word recognition but showed a spread of localization scores; and the UHL group showed a spread of performance on both measures. There was a moderate correlation between word recognition and sound localization for the UHL participants (r = −.59, p < 0.005). For the UHL participants, CNC word recognition and sound localization were not significantly correlated when words were presented from the NH side (Panel E). In contrast, there was a strong correlation between CNC word recognition and sound localization when words were presented from the SPHL side (Panel F, r = −.74, p < 0.001). Participants who had poorer recognition of words presented from the side of their deaf ear also had poorer sound localization ability, even when accounting for any contribution of hearing level (FFPTA). After controlling for FFPTA, RMS error was not correlated with scores on the Adaptive SRT or R-Space measures.
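Controlling for FFPTA means removing the linear contribution of better-ear hearing from both word recognition and RMS error before correlating them. A minimal sketch of the standard first-order partial correlation formula follows; the function name is illustrative, and the input correlations are hypothetical placeholders, not values from this study.

```python
import math


def partial_r(r_xy: float, r_xz: float, r_yz: float) -> float:
    """First-order partial correlation between x and y, controlling for z."""
    denom = math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
    if denom == 0:
        raise ValueError("z is perfectly correlated with x or y")
    return (r_xy - r_xz * r_yz) / denom


# Hypothetical inputs: x = CNC word recognition, y = RMS error, z = FFPTA.
r_words_rms = -0.70  # better word scores go with smaller localization error
r_words_pta = -0.60  # higher (poorer) PTA goes with poorer word scores
r_rms_pta = 0.40     # higher (poorer) PTA goes with larger RMS error

print(round(partial_r(r_words_rms, r_words_pta, r_rms_pta), 2))  # -0.63
```

In this illustration the association weakens from −.70 to about −.63 once hearing level is partialled out but remains substantial, which is the kind of pattern the text describes for words presented on the SPHL side. Significance tests for a first-order partial correlation use n − 3 degrees of freedom.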

Finally, we compared test results based on ear of stimulation for the unilateral listening groups and there were no significant ear differences on any measure (ps > 0.05). Likewise, there was not a significant difference for the NH-bilateral participants when speech was presented from the right or left side on the Roving CNC task (localization and word recognition) or Adaptive SRT (ps > 0.05).

DISCUSSION

This study investigated and quantified speech recognition in adults with either unilateral or bilateral hearing using varied and roving source-locations and varied noise types. Localization abilities were examined using intensity-roved stimuli. Three age-gender-matched adult groups were assessed: NH who listened bilaterally (NH-bilateral), NH who listened unilaterally with one ear blocked (NH-unilateral), and UHL (one ear with SPHL, one ear with NH) who listened with the intact ear. The inclusion of NH adults listening with one ear, as well as the inclusion of UHLs with early-onset versus later-onset hearing loss, allowed us to explore the effects of experience with unilateral listening on speech recognition and localization outcomes.

In the current study, the unilateral listeners (UHL and NH-unilateral) were at a significant disadvantage compared with the binaural listeners for understanding speech in noise (Figure 1). Speech recognition in noise is affected by several variables, including the speech stimulus (e.g., talker clarity, sentence length), the noise stimulus (e.g., type, signal-to-noise ratio), the source location of the noise (e.g., front, back), the listening environment (e.g., room acoustics, distance from talker) and listener characteristics (e.g., age, hearing loss) (Boothroyd 2006; Donaldson et al. 2003; Hawkins et al. 1984; Hawley et al. 2004; Mullennix et al. 1989; Sommers et al. 2011; Uchanski et al. 1996). There were significant and meaningful differences between unilateral and bilateral listeners in the ability to understand speech in the presence of varied noise types. When sentence stimuli came from the front and restaurant noise surrounded the listener, NH-bilateral listeners achieved significantly lower (better) SNR-50 scores than either unilateral listening group. A change in SNR can be expressed as a change in percent speech understanding. For example, a 1 dB improvement in SNR in NH listeners has been shown to equate to a 10.6% improvement in speech intelligibility (Soli et al. 2008). Litovsky et al. (2006) also reported, in unilateral cochlear implant recipients, that a 3 dB lower SNR was equivalent to a 28-percentage-point improvement in speech recognition (or 9.3 percentage points per dB). In the current study, the mean differences between the NH-bilateral group and the unilateral groups were 2.36 dB (NH-unilateral) and 2.91 dB (UHL), differences that would equate to approximately 22–31 percentage points poorer speech intelligibility in noise for the unilateral listeners than for the bilateral listeners. In everyday noisy environments, differences of this magnitude could be critical for successful conversation.
As noted in previous studies, variability in performance was large among individuals with UHL. While 12 UHL participants had SNR-50 scores on the R-Space task within the range of the NH-bilateral group (UHL scores −8.0 to −2.4 dB, NH-bilateral scores −9.4 to −2.3 dB), 13 UHL participants had scores poorer than any of the NH-bilateral group (−1.9 to 4.2 dB).
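The 22–31 percentage-point estimate earlier in this paragraph comes from multiplying each unilateral group's mean SNR-50 deficit by the two cited slopes and taking the smallest and largest products. The sketch below reproduces that arithmetic. It assumes, as the estimate implicitly does, that the performance-intensity function is linear over a few decibels, which holds only near the steep middle of the psychometric function; the names are illustrative.

```python
# Slopes of the performance-intensity function cited in the text
# (percentage points of intelligibility per dB of SNR).
SLOPES_PP_PER_DB = {
    "Soli et al. 2008, NH listeners": 10.6,
    "Litovsky et al. 2006, unilateral CI": 28 / 3,  # 28 points per 3 dB
}

# Mean SNR-50 disadvantage of each unilateral group relative to NH-bilateral (dB).
DEFICITS_DB = {"NH-unilateral": 2.36, "UHL": 2.91}


def intelligibility_loss(deficit_db: float, slope_pp_per_db: float) -> float:
    """Linear approximation: percentage points lost for a given SNR deficit."""
    return deficit_db * slope_pp_per_db


estimates = [
    intelligibility_loss(d, s)
    for d in DEFICITS_DB.values()
    for s in SLOPES_PP_PER_DB.values()
]
print(f"{min(estimates):.0f}-{max(estimates):.0f} percentage points")  # 22-31 percentage points
```

The lower bound pairs the smaller deficit (NH-unilateral) with the shallower CI slope, and the upper bound pairs the larger deficit (UHL) with the steeper NH slope.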

Using a measure that combined roved word understanding in quiet with localization of the presented word's source (Roving CNC measure), the current results also indicated that unilateral listeners were at a disadvantage compared with binaural listeners for both word understanding (Figure 2) and localization (Figure 3). NH-unilateral participants had better monosyllabic word understanding than UHL participants in quiet when the location of the words was roved, particularly for words presented on the deaf/blocked side (Figure 2C). In contrast, UHL participants localized better than NH-unilateral participants, particularly for stimuli presented on the NH/open side (Figure 3B). This result suggested that UHL participants had adapted to single-ear listening or learned strategies to improve localization but not speech recognition. Even so, word recognition and localization ability were significantly correlated, primarily for words presented on the contralateral side (Figure 8). Although the UHL participants had learned strategies to improve localization, the poorest localizers were also those with the poorest roving-source word recognition. Presumably, the fact that the word-recognition and localization results were obtained with the same stimuli during the same task contributed to this finding. Because there was no significant group difference in hearing thresholds of the NH/open ear between the two unilateral groups, it is not entirely clear why NH-unilateral participants would recognize words better than UHLs when the words were presented on the contralateral side. Three UHL participants had slightly poorer FFPTAs (by 5 dB) and four UHL participants had poorer HFPTAs (by 5–15 dB) than the NH-unilateral group. These differences were not large enough to produce a statistically significant difference in PTAs between the two groups but may have influenced word-recognition results. Another possibility is insufficient ear blocking in the NH-unilateral participants.
The plug/muff combination provided an average of 48 dB of attenuation, ranging from 41 to 54 dB across frequency. The speech stimuli for the Roving CNC measure were presented at 57–63 dB SPL (60 dB SPL, intensity roved ±3 dB), which may have allowed a very low level of audibility in the blocked ear of the NH-unilateral listeners. If insufficient ear blocking did aid word recognition, any such benefit was not evident in the R-Space, Adaptive SRT, or localization tasks. For listening in noise, the noise in the R-Space and Adaptive SRT tasks may have masked any benefit from insufficient blocking. For localization, the monaural listening experience of the UHL participants may have offset any potential benefit of low-level audibility for the inexperienced NH-unilateral listeners.
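The concern about residual audibility in the blocked ear can be made concrete with simple level arithmetic. The sketch below subtracts the reported plug/muff attenuation from the roved presentation levels. It is a deliberately crude model (an assumption, not the authors' analysis): it ignores the speech spectrum, the frequency dependence of the attenuation, bone conduction, and head-related acoustics, so it indicates only the order of magnitude of sound that might have reached the blocked ear.

```python
# Roving CNC presentation levels (dB SPL): 60 dB SPL roved +/- 3 dB.
PRESENTATION_DB_SPL = (57, 60, 63)

# Plug/muff attenuation (dB): mean 48 dB, 41-54 dB depending on frequency.
ATTENUATION_DB = {"least": 41, "mean": 48, "most": 54}


def residual_level(presentation_db_spl: float, attenuation_db: float) -> float:
    """Level reaching the blocked ear under a simple subtraction model."""
    return presentation_db_spl - attenuation_db


loudest_leak = residual_level(max(PRESENTATION_DB_SPL), ATTENUATION_DB["least"])
typical_leak = residual_level(60, ATTENUATION_DB["mean"])
softest_leak = residual_level(min(PRESENTATION_DB_SPL), ATTENUATION_DB["most"])

print(loudest_leak, typical_leak, softest_leak)  # 22 12 3
```

Under these simplifications, roughly 3 to 22 dB SPL, and typically about 12 dB SPL, might have reached the blocked ear, which is consistent with the possibility of very low-level audibility raised above.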

Numerous studies have documented impaired localization ability in adults and children with UHL (Agterberg et al. 2014; Bess, Tharpe et al. 1986; Firszt et al. 2012; Firszt et al. 2015; Humes et al. 1980; Reeder et al. 2015; Rothpletz et al. 2012; Slattery and Middlebrooks 1994; Van Wanrooij and Van Opstal 2004). In the current study, localization was poorer for UHLs and NH-unilateral listeners than for those with bilateral NH; nevertheless, UHL RMS error scores ranged from 6.5 to 70 degrees, and several UHL individuals performed better than chance (59 degrees on this task). Slattery and Middlebrooks (1994) also showed slightly better performance on a localization measure for unilaterally deaf adults than for NH participants listening monaurally (with one ear plugged and muffed), although group means did not differ significantly in their small sample. Individually, localization ability varied remarkably among their five UHL participants despite some shared attributes. All were reported to have been diagnosed with SPHL before age 3, and onset was assumed to be congenital; four participants had complete unilateral deafness, and one had low-frequency thresholds of 40 dB HL through 1 kHz with severe to profound thresholds at 2 kHz and above. This participant and one with complete SNHL responded like NH participants listening with one ear (i.e., poor localization), whereas the remaining three UHLs could localize with fairly good accuracy on either the hearing or the impaired side. In another study (Rothpletz et al. 2012), two of 12 adults with UHL localized only slightly worse than NH individuals; however, they also had better hearing in the poor ear, with three-frequency PTAs in the mild to moderately impaired range.
In the current study, three UHL participants had poor-ear low-frequency thresholds of 30–50 dB at .25 and .5 kHz, which probably enhanced their localization performance; however, a wide range of localization ability remained even when low-frequency hearing was severe to profound, with several individuals scoring substantially better than chance but none within the range of the NH-bilateral participants. Interestingly, all UHL participants, even those with some low-frequency hearing, reported a sense of guessing during the localization task that was comparable to their frustrations in everyday listening environments.
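Localization accuracy throughout this section is expressed as RMS error. The method details are not repeated here, so the sketch below assumes the conventional definition: the square root of the mean squared difference between source and response azimuths across trials. The function name and the trials are hypothetical; negative azimuths point toward the NH ear, following the sign convention the text uses for Figure 7.

```python
import math
from typing import Sequence


def rms_error(sources_deg: Sequence[float], responses_deg: Sequence[float]) -> float:
    """Root-mean-square localization error in degrees across paired trials."""
    if len(sources_deg) != len(responses_deg) or not sources_deg:
        raise ValueError("need equal-length, non-empty source and response lists")
    squared = [(r - s) ** 2 for s, r in zip(sources_deg, responses_deg)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical trials: negative = toward the NH ear, positive = toward the SPHL ear.
sources = [-70, -30, 0, 30, 70]
responses = [-60, -30, 10, 0, 40]  # accurate on the NH side, pulled toward it on the SPHL side
print(rms_error(sources, responses))  # 20.0
```

Because errors are squared before averaging, a few large misses dominate the score. A listener who is accurate on the NH side but consistently displaced on the SPHL side can therefore still show a large total-array RMS error, which is why the text also reports each side of the array separately.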

For co-located versus spatially separated speech and noise, performance in the current study varied across groups and noise types depending on the noise-source location (Figures 5 and 6). Results for spatially separated speech and noise were consistent with those of May et al. (2014), who found that unaided UHL participants had greater error rates with multiple-talker than with single-talker distractors, with same-sex than with different-sex distractors, and with distractors toward the NH ear than toward the deaf ear. Rothpletz, Wightman and Kistler (2012, Experiment #2) showed that participants with UHL had poorer speech understanding than those with NH when there was no spatial separation between the target and masker presented from a loudspeaker in the sound field. In their study, speech and noise (a single-talker masker) were both presented from the front to limit binaural advantages; nevertheless, the results (i.e., the target-to-masker ratio corresponding to 51% correct) for NH participants were significantly better, by 4.6 dB, than those for the UHL listeners. The authors noted that their task was dominated by informational rather than energetic masking. Informational masking generally occurs when the target signal is similar to the masker; both are audible but difficult to separate. Energetic masking occurs when the target and masker contain energy in the same critical bands at the same time; one of the signals may be inaudible because of physical interactions between the signal and masker. In the current study, UHL participants scored significantly poorer (by approximately 3 dB) than NH-bilateral participants on the Adaptive SRT task when speech and noise were from the front and the masker was a single female or male talker; however, no differences between groups were observed when the noise was MTB (Figure 6, Panel A).
The MTB consisted of 20 talkers and thus produced primarily energetic masking, whereas the single-talker noise produced a combination of energetic and informational masking. Energetic masking has been described as having a greater impact on peripheral auditory processes, and informational masking a greater impact on cognitive-attentional processes (Brungart 2001; Freyman et al. 1999; Kidd et al. 1994). The mostly energetic masking of the MTB affected unilateral and bilateral listeners similarly; however, the single-talker noise, whose informational masking potentially required more cognitive-attentional processing, had greater negative consequences for the unilateral groups. This was evident for co-located stimuli with both same-sex talkers (male target, male masker) and different-sex talkers (male target, female masker). Perhaps listening with two ears helped the NH-bilateral listeners distinguish the target talker when the interferer was a single talker; however, this explanation would be somewhat surprising because the target speech and maskers were co-located, so binaural spatial cues were likely unavailable to facilitate separation. Another possibility is that bilateral listening made it easier to listen in the gaps of the fluctuating background noise, reducing the effects of informational masking.

In the current study, localization was significantly poorer for NH-unilateral listeners than for UHLs, and among UHLs differentiated by age at onset, those with later, more recent onset performed more poorly than those with earlier onset (Figure 7). Both results suggest that some individuals with UHL learn strategies to extract monaural cues, presumably spectral cues, to enhance their localization performance, and that experience, or the amount of time spent as a unilateral listener, has a positive effect. Reeder et al. (2015) found a correlation between age at test and localization ability in a group of 11 children with primarily congenital UHL that was not present in a matched NH group. This further supports the notion that experience and time assist in the development of localization abilities among unilateral listeners. Monaural localization skills seem to require time, although time does not guarantee skill acquisition. Whether or not deafness occurs during an early developmental period may influence the ability to learn monaural localization over time. In NH adults with an occluded ear, horizontal localization did not improve with multiple practice sets over a short period of three consecutive days (Abel et al. 2008). Animal studies, in which longer periods of monaural occlusion are possible, showed improvements in localization with experience, especially when ferrets were raised with a single ear (King et al. 2000). Adult ferrets also showed improved spatial localization after several months of monaural occlusion, but they did not regain the accuracy obtained before one ear was plugged (King et al. 2000). Even faster improvements were noted in these animals when training occurred during the early weeks of monaural plugging.

Several studies have investigated the characteristics of sound-localization plasticity (i.e., capacity for change) using monaural plugging combined with training in animals and humans. Kumpik, Kacelnik & King (2010) showed that humans with continuous unilateral ear plugging had progressive localization recovery over one week of daily training when a consistent flat-spectrum noise was used for training and testing. When random spectral variation was introduced into the training, localization did not improve. Behavioral training was examined in a study of ferrets trained to localize sounds with a broadband stimulus (Kacelnik et al. 2006); the frequency of training determined the degree of plasticity, with greater and faster improvements associated with more training. Adults with severe to profound UHL also showed improved localization with a localization-specific training protocol (Firszt et al. 2015). Participants with the poorest RMS error scores benefited the most and, likewise, those with the best pre-training abilities benefited the least. Furthermore, age at onset of SPHL and better-ear hearing thresholds contributed to localization performance. Overall, these results in humans and animals suggest that training might be considered in the rehabilitation of those who listen with a single ear.

The above-mentioned localization studies support the idea that adaptation or plasticity following unilateral hearing loss, both in development and in adulthood, can improve localization performance but not other aspects of binaural hearing, such as binaural unmasking (the auditory improvement gained when the signal and noise are spatially separated). In the current study, we found no relation among UHLs between RMS error scores and spatial release from masking as measured on the Adaptive SRT task (the difference in SRTs for co-located speech and noise versus speech from the front and noise toward either the SPHL or the NH ear). Rothpletz, Wightman and Kistler (2012) also failed to find a relation between localization ability and spatial release from informational masking in adults with UHL. Although some UHLs had localization scores that approximated those of NH individuals, the benefit they obtained from separating the noise from the target was minimal compared with that obtained by NH listeners. Other human studies also indicate that spatial release from masking cannot be predicted from the ability to localize sound (Drullman et al. 2000; Hawley et al. 1999). Likewise, ferrets with monaural occlusion showed poor binaural unmasking even after several months, and performance remained low after the plug was removed (Moore et al. 1999). Binaural measures showed less adaptation to unilateral impairment than was observed in studies of localization, perhaps because binaural unmasking depends on interaural phase detection, whereas localization can depend more heavily on monaural spectral cues (Keating et al. 2015; King et al. 2000).
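Spatial release from masking, as defined above, is the SRT for co-located speech and noise minus the SRT for a spatially separated condition. Because a lower SRT is better, a positive value means that moving the noise away from the target helped. The minimal sketch below uses hypothetical SRTs, not data from this study, and an illustrative function name; "ipsi" and "contra" refer to the open and plugged/deaf sides, as in Figure 6.

```python
def spatial_release_db(srt_colocated_db: float, srt_separated_db: float) -> float:
    """SRM in dB; positive means the separated condition gave a better (lower) SRT."""
    return srt_colocated_db - srt_separated_db


# Hypothetical SRTs (dB SNR) with speech always from the front.
listeners = {
    "bilateral": {"front": -2.0, "noise_ipsi": -9.0, "noise_contra": -9.0},
    "unilateral": {"front": 1.0, "noise_ipsi": 4.0, "noise_contra": -3.0},
}

for name, srts in listeners.items():
    for side in ("noise_ipsi", "noise_contra"):
        print(name, side, spatial_release_db(srts["front"], srts[side]))
```

In this made-up example the unilateral listener gains only when the noise moves toward the unusable ear (a head-shadow benefit) and loses when it moves toward the open ear, giving a negative release, whereas the bilateral listener benefits in both directions.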

Hearing levels in the better ear of individuals with UHL have also contributed to localization variability; those with better high-frequency hearing may use monaural cues to assist in localization (Agterberg et al. 2014; Firszt et al. 2015). In the current study, the relation between hearing levels in the better ear of UHLs and RMS error was not significant when all frequencies were included (FFPTA), although a nonsignificant trend (p = 0.015) was present when only high-frequency thresholds (6 and 8 kHz) were included. However, hearing levels were strongly associated with speech-recognition abilities: hearing correlated with performance on CNC words, the R-Space task and the Adaptive SRT task, but only among UHLs (Figure 8) and not for the other groups. This finding held for both full-frequency and high-frequency PTAs. It seems that small differences in hearing, even of a few decibels, affect those with UHL more than those with normal hearing bilaterally.

Implications for Cochlear Implant Candidacy

Within the CI field, some individuals with UHL are being considered for cochlear implantation in an effort to reduce communication deficits by restoring hearing function to the poor ear. Initial studies have shown improvement in localization (Arndt et al. 2011; Firszt et al. 2012; Hansen et al. 2013; Zeitler et al. 2015) and in speech recognition in noise, depending on the type and location of the noise (Bernstein et al. 2016; Grossman et al. 2016; Zeitler et al. 2015); however, considerable work remains to fully understand communication deficits and expected benefits from cochlear implantation. The range of performance for unaided/nonimplanted UHL participants in the current study (and in others) provides a framework to gauge potential benefits gained from cochlear implantation. Factors such as age at onset of hearing loss and length of SPHL may influence or determine CI outcomes. The current study suggests that UHL individuals with pre/perilingual onset of SPHL localize better than individuals with recent SPHL onset but exhibit the same range of (dis)abilities for speech recognition in noise. Performance by three cochlear implant recipients with pre/perilingual onset of asymmetric hearing loss demonstrated minimal bilateral benefit on objective measures from implantation of the deaf ear; however, they each continued to use their CIs and reported improved spatial hearing in everyday life (Firszt et al. 2012). Likewise, children with asymmetric hearing loss whose congenitally deaf ear was implanted showed minimal speech-recognition ability (Cadieux et al. 2013).

Recent animal studies suggest that unilateral deafness leads to a neuronal preference for the hearing ear and that this effect is subject to a sensitive period; that is, the preference is present only with early-onset unilateral deafness (Kral et al. 2012). In addition, a comparison of congenital monaural and binaural deafness in cats found that unilateral hearing prevented loss of cortical responsiveness but also substantially reorganized aural dominance and reduced binaural responses (Tillein et al. 2016). Several human studies have shown cortical reorganization in the presence of unilateral deafness (Burton et al. 2012; Hanss et al. 2009; Khosla et al. 2003; Ponton et al. 2001); however, the effects of this reorganization for congenital versus later-onset unilateral deafness have not necessarily been distinguished. Whether later implantation of a congenitally deaf ear in unilateral deafness is as viable as early implantation of that ear is unknown at this time. Bilaterally implanted children and adults with delayed second-ear implantation have reduced binaural benefits due to extended periods of unilateral hearing (Gordon et al. 2013; Litovsky and Gordon 2016; Litovsky and Misurelli 2016; Ramsden et al. 2005; Reeder et al. 2016; Reeder et al. 2014). Collectively, these studies suggest potential compromises to the binaural benefits of cochlear implantation for individuals with prolonged unilateral deafness.

Not all individuals with unilateral SPHL will necessarily have improved localization abilities as the result of cochlear implantation. Among 10 CI recipients with asymmetric hearing loss (Firszt et al. 2012), the best HA-alone localization score was 28 degrees error and the best bilateral (HA + CI) score was 21 degrees error. In a separate study, three CI recipients with unilateral SPHL (Firszt et al. 2012) had NH-ear-alone localization scores of 20, 36 and 60 degrees error; their bilateral (NH ear + CI) scores were 18, 19 and 25 degrees error, respectively. Using the same localization methods, five UHL participants from the current study had localization RMS error scores below 15 degrees; these five UHL participants localized better than any of the CI participants from the other two studies. Arndt et al. (2011) reported on 11 adults with unilateral SPHL who received a CI. Group statistical analysis indicated a significant improvement with cochlear implantation (after six months of CI experience); however, the range of pre-implant performance (approximately 10 to 64 degrees error) encompassed much of the post-implant performance (approximately 8 to 28 degrees error). Taken together, these studies suggest that a CI will not improve localization abilities for every adult with unilateral SPHL, at least for the test conditions and populations described.

Individuals who are struggling with unilateral hearing loss often have varied degrees of hearing in the poor ear as well as in the better ear. In the current study, three of the UHL participants with better localization ability also had thresholds at .25 and .5 kHz in the mild to moderate hearing impaired range in the poor ear. Low-frequency hearing is a known critical factor for localization and hearing in noise, yet the impact of varied hearing losses at different frequencies, particularly for asymmetric hearing loss, is not completely understood (Byrne et al. 1998; Noble et al. 1994). In addition, better-ear hearing, particularly high-frequency hearing, may be an important factor in determining CI candidacy for patients with unilateral SPHL. Not surprisingly, for the current study, individuals with the poorest hearing in the better ear had the most difficulty with speech recognition (Figure 8). Likewise, poorer high-frequency hearing showed a trend toward poorer localization, a finding that has been reported in other studies with asymmetric hearing (Agterberg et al. 2014; Firszt et al. 2015). Multiple variables in addition to the hearing levels in each ear impact the functional ability of an individual with UHL and their potential interest in a CI. The person’s frustration with home listening demands, challenges in completing work requirements, presence or absence of debilitating tinnitus, results of hearing-aid trials, and technology options available with newer CI speech processors, all factor into the decision to consider cochlear implantation. Candidacy evaluations should include a thorough case history with confirmation of age at onset of hearing loss, particularly if the loss was diagnosed early in life, and quality of life assessments to document the impact of the unilateral loss. 
Tests of localization, and of speech understanding with noise directed toward the better-hearing ear, may be the most sensitive to differences between unilateral and bilateral hearing. They may also be best able to capture pre- to post-treatment improvements.
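The relationship noted above between better-ear hearing and speech recognition is a correlation across participants. The sketch below illustrates that idea only: it computes Pearson's r between a per-participant better-ear threshold measure and a speech score. The following are all assumptions for illustration:

- the choice of Pearson's r;
- the function name `pearson_r`;
- every data value.

None of these represent the study's data or necessarily its statistical method.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations, and each variable's sum of squares.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a sample has zero variance")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical values: better-ear thresholds (dB HL) and word scores (% correct).
thresholds_db = [5, 10, 15, 20, 25]
word_scores = [92, 88, 85, 76, 70]
print(pearson_r(thresholds_db, word_scores))  # negative: higher thresholds, lower scores
```

A negative r means that poorer (higher) better-ear thresholds go with lower speech scores, the direction described for the UHL group. Testing whether such a correlation is statistically significant is a separate step not shown here.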

Summary

The main findings of the study were as follows:

Individuals with UHL localized sound better than NH listeners listening monaurally for sources on the side of the hearing ear, though not on the deaf side. This suggests that over time, UHL participants had developed strategies for making use of monaural directional information.

Individuals with UHL were no better than NH listeners listening monaurally for speech-recognition tasks in diffuse noise or when noise and speech were spatially separated, suggesting that monaural experience does not translate to improvements in this domain.

UHL listeners who lost hearing as children were better at sound localization than UHL listeners who had recently lost hearing as adults, but this was not the case for speech recognition. Again, this suggests that experience plays an important role in learning to use monaural localization cues but perhaps not in speech understanding.

UHL listeners were significantly disadvantaged in noise compared with NH listeners listening bilaterally, regardless of noise direction, including when the noise was directed toward the deaf ear.

For UHL listeners, hearing thresholds in the better ear were correlated with speech understanding in quiet and background noise but not with localization.

Results from UHL listeners support the need for a revised and diverse set of clinical measures to evaluate CI candidacy in individuals with unilateral SPHL.

Acknowledgments

The authors thank Brandi McKenzie and Sheli Lipson for assistance with participant recruitment and data collection. This research was supported by a grant (to JBF) from the National Institutes of Health NIDCD R01DC009010. JBF and LKH serve on the audiology advisory boards for Advanced Bionics and Cochlear Americas. JBF and RMR helped design the study, analyze and interpret data, and write the paper. LKH assisted with interpretation of results and writing the manuscript.

Footnotes

Portions of these data were presented at the 2013 Conference on Implantable Auditory Prostheses, Lake Tahoe, CA, July 15, 2013 and the Ruth Symposium in Audiology and Hearing Science, James Madison University, Harrisonburg, VA, October 9, 2015.

References

- Abel SM, Alberti PW, Haythornthwaite C, et al. Speech intelligibility in noise: effects of fluency and hearing protector type. J Acoust Soc Am. 1982;71:708–715. doi: 10.1121/1.387547.

- Abel SM, Lam K. Impact of unilateral hearing loss on sound localization. Appl Acoust. 2008;69:804–811.

- Agterberg MJH, Hol MKS, Van Wanrooij MM, et al. Single-sided deafness & directional hearing: contribution of spectral cues and high-frequency hearing loss in the hearing ear. Front Neurosci. 2014;8:188. doi: 10.3389/fnins.2014.00188.

- Algazi VR, Avendano C, Duda RO. Elevation localization and head-related transfer function analysis at low frequencies. J Acoust Soc Am. 2001;109:1110–1122. doi: 10.1121/1.1349185.

- Arndt S, Aschendorff A, Laszig R, et al. Comparison of pseudobinaural hearing to real binaural hearing rehabilitation after cochlear implantation in patients with unilateral deafness and tinnitus. Otol Neurotol. 2011;32:39–47. doi: 10.1097/MAO.0b013e3181fcf271.

- Bernstein JG, Goupell MJ, Schuchman GI, et al. Having two ears facilitates the perceptual separation of concurrent talkers for bilateral and single-sided deaf cochlear implantees. Ear Hear. 2016;37:289–302. doi: 10.1097/AUD.0000000000000284.

- Bess FH, Tharpe AM. An introduction to unilateral sensorineural hearing loss in children. Ear Hear. 1986;7:3–13. doi: 10.1097/00003446-198602000-00003.

- Bess FH, Tharpe AM, Gibler AM. Auditory performance of children with unilateral sensorineural hearing loss. Ear Hear. 1986;7:20–26. doi: 10.1097/00003446-198602000-00005.

- Boothroyd A. Characteristics of listening environments: Benefits of binaural hearing and implications for bilateral management. Int J Audiol. 2006;45 Suppl 1:12–19. doi: 10.1080/14992020600782576.

- Bronkhorst AW, Plomp R. The effect of head-induced interaural time and level differences on speech intelligibility in noise. J Acoust Soc Am. 1988;83:1508–1516. doi: 10.1121/1.395906.

- Brungart DS. Informational and energetic masking effects in the perception of two simultaneous talkers. J Acoust Soc Am. 2001;109:1101–1109. doi: 10.1121/1.1345696.

- Burton H, Firszt JB, Holden T, et al. Activation lateralization in human core, belt, and parabelt auditory fields with unilateral deafness compared to normal hearing. Brain Res. 2012;1454:33–47. doi: 10.1016/j.brainres.2012.02.066.

- Byrne D, Noble W. Optimizing Sound Localization with Hearing Aids. Trends Amplif. 1998;3:51–73. doi: 10.1177/108471389800300202.

- Cadieux JH, Firszt JB, Reeder RM. Cochlear implantation in nontraditional candidates: preliminary results in adolescents with asymmetric hearing loss. Otol Neurotol. 2013;34:408–415. doi: 10.1097/MAO.0b013e31827850b8.

- Compton-Conley CL, Neuman AC, Killion MC, et al. Performance of directional microphones for hearing aids: real-world versus simulation. J Am Acad Audiol. 2004;15:440–455. doi: 10.3766/jaaa.15.6.5.

- Donaldson GS, Allen SL. Effects of presentation level on phoneme and sentence recognition in quiet by cochlear implant listeners. Ear Hear. 2003;24:392–405. doi: 10.1097/01.AUD.0000090340.09847.39.

- Douglas SA, Yeung P, Daudia A, et al. Spatial hearing disability after acoustic neuroma removal. Laryngoscope. 2007;117:1648–1651. doi: 10.1097/MLG.0b013e3180caa162.

- Drullman R, Bronkhorst AW. Multichannel speech intelligibility and talker recognition using monaural, binaural, and three-dimensional auditory presentation. J Acoust Soc Am. 2000;107:2224–2235. doi: 10.1121/1.428503.

- Dwyer NY, Firszt JB, Reeder RM. Effects of unilateral input and mode of hearing in the better ear: Self-reported performance using the Speech, Spatial and Qualities of Hearing Scale. Ear Hear. 2014;35:126–136. doi: 10.1097/AUD.0b013e3182a3648b.

- Feuerstein JF. Monaural versus binaural hearing: ease of listening, word recognition, and attentional effort. Ear Hear. 1992;13:80–86.

- Firszt JB, Holden LK, Reeder RM, et al. Cochlear implantation in adults with asymmetric hearing loss. Ear Hear. 2012;33:521–533. doi: 10.1097/AUD.0b013e31824b9dfc.

- Firszt JB, Holden LK, Reeder RM, et al. Auditory abilities after cochlear implantation in adults with unilateral deafness: a pilot study. Otol Neurotol. 2012;33:1339–1346. doi: 10.1097/MAO.0b013e318268d52d.

- Firszt JB, Reeder RM, Dwyer NY, et al. Localization training results in individuals with unilateral severe to profound hearing loss. Hear Res. 2015;319:48–55. doi: 10.1016/j.heares.2014.11.005.

- Freyman RL, Helfer KS, McCall DD, et al. The role of perceived spatial separation in the unmasking of speech. J Acoust Soc Am. 1999;106:3578–3588. doi: 10.1121/1.428211.

- Gatehouse S, Akeroyd M. Two-eared listening in dynamic situations. Int J Audiol. 2006;45 Suppl 1:120–124. doi: 10.1080/14992020600783103.

- Gatehouse S, Noble W. The Speech, Spatial and Qualities of Hearing Scale (SSQ). Int J Audiol. 2004;43:85–99. doi: 10.1080/14992020400050014.

- Gordon KA, Wong DD, Papsin BC. Bilateral input protects the cortex from unilaterally-driven reorganization in children who are deaf. Brain. 2013. doi: 10.1093/brain/awt052.

- Grossmann W, Brill S, Moeltner A, et al. Cochlear implantation improves spatial release from masking and restores localization abilities in single-sided deaf patients. Otol Neurotol. 2016. Epub ahead of print. doi: 10.1097/MAO.0000000000001043.

- Hansen MR, Gantz BJ, Dunn C. Outcomes after cochlear implantation for patients with single-sided deafness, including those with recalcitrant Ménière’s disease. Otol Neurotol. 2013;34:1681–1687. doi: 10.1097/MAO.0000000000000102.

- Hanss J, Veuillet E, Adjout K, et al. The effect of long-term unilateral deafness on the activation pattern in the auditory cortices of French-native speakers: influence of deafness side. BMC Neurosci. 2009;10:23. doi: 10.1186/1471-2202-10-23.

- Hawkins DB, Yacullo WS. Signal-to-noise ratio advantage of binaural hearing aids and directional microphones under different levels of reverberation. J Speech Hear Disord. 1984;49:278–286. doi: 10.1044/jshd.4903.278.

- Hawley ML, Litovsky R, Culling JF. The benefit of binaural hearing in a cocktail party: effect of location and type of interferer. J Acoust Soc Am. 2004;115:833–843. doi: 10.1121/1.1639908.

- Hawley ML, Litovsky RY, Colburn HS. Speech intelligibility and localization in a multi-source environment. J Acoust Soc Am. 1999;105:3436–3448. doi: 10.1121/1.424670.

- Hirsh IJ. Binaural summation; a century of investigation. Psychol Bull. 1948;45:193–206. doi: 10.1037/h0059461.

- Humes LE, Allen SK, Bess FH. Horizontal sound localization skills of unilaterally hearing-impaired children. Audiology. 1980;19:508–518. doi: 10.3109/00206098009070082.

- Johnstone PM, Litovsky RY. Effect of masker type and age on speech intelligibility and spatial release from masking in children and adults. J Acoust Soc Am. 2006;120:2177–2189. doi: 10.1121/1.2225416.

- Kacelnik O, Nodal FR, Parsons CH, et al. Training-induced plasticity of auditory localization in adult mammals. PLoS Biol. 2006;4:627–638. doi: 10.1371/journal.pbio.0040071.

- Keating P, King AJ. Sound localization in a changing world. Curr Opin Neurobiol. 2015;35:35–43. doi: 10.1016/j.conb.2015.06.005.

- Khosla D, Ponton CW, Eggermont JJ, et al. Differential ear effects of profound unilateral deafness on the adult human central auditory system. J Assoc Res Otolaryngol. 2003;4:235–249. doi: 10.1007/s10162-002-3014-x.

- Kidd G Jr, Mason CR, Deliwala PS, et al. Reducing informational masking by sound segregation. J Acoust Soc Am. 1994;95:3475–3480. doi: 10.1121/1.410023.

- King AJ, Parsons CH, Moore DR. Plasticity in the neural coding of auditory space in the mammalian brain. Proc Natl Acad Sci U S A. 2000;97:11821–11828. doi: 10.1073/pnas.97.22.11821.

- Kitterick PT, Smith SN, Lucas L. Hearing Instruments for Unilateral Severe-to-Profound Sensorineural Hearing Loss in Adults: A Systematic Review and Meta-Analysis. Ear Hear. 2016. Publish ahead of print. doi: 10.1097/AUD.0000000000000313.

- Kral A, Hubka P, Heid S, et al. Single-sided deafness leads to unilateral aural preference within an early sensitive period. Brain. 2012. doi: 10.1093/brain/aws305.

- Kumpik DP, Kacelnik O, King AJ. Adaptive reweighting of auditory localization cues in response to chronic unilateral earplugging in humans. J Neurosci. 2010;30:4883–4894. doi: 10.1523/JNEUROSCI.5488-09.2010.

- Litovsky R, Parkinson A, Arcaroli J, et al. Simultaneous bilateral cochlear implantation in adults: a multicenter clinical study. Ear Hear. 2006;27:714–731. doi: 10.1097/01.aud.0000246816.50820.42.

- Litovsky RY. Speech intelligibility and spatial release from masking in young children. J Acoust Soc Am. 2005;117:3091–3099. doi: 10.1121/1.1873913.

- Litovsky RY, Gordon K. Bilateral cochlear implants in children: Effects of auditory experience and deprivation on auditory perception. Hear Res. 2016. doi: 10.1016/j.heares.2016.01.003.

- Litovsky RY, Misurelli SM. Does Bilateral Experience Lead to Improved Spatial Unmasking of Speech in Children Who Use Bilateral Cochlear Implants? Otol Neurotol. 2016;37:e35–e42. doi: 10.1097/MAO.0000000000000905.

- May BJ, Bowditch S, Liu Y, et al. Mitigation of informational masking in individuals with single-sided deafness by integrated bone conduction hearing aids. Ear Hear. 2014;35:41–48. doi: 10.1097/AUD.0b013e31829d14e8.

- Middlebrooks JC. Narrow-band sound localization related to external ear acoustics. J Acoust Soc Am. 1992;92:2607–2624. doi: 10.1121/1.404400.