Abstract

My Teaching Partner-Secondary (MTP-S) is a web-mediated coaching intervention, which an initial randomized trial, primarily in middle schools, found to improve teacher–student interactions and student achievement. Given the dearth of validated teacher development interventions showing consistent effects, we sought to both replicate and extend these findings with a modified version of the program in a predominantly high school population, and in a more urban, sociodemographically diverse school district. MTP-S produced substantial gains in student achievement across 86 secondary school classrooms involving 1,194 students. Gains were robust across subject areas and equivalent to moving the average student from the 50th to the 59th percentile in achievement scores. Results suggest that MTP-S can enhance student outcomes across diverse settings and implementation modalities.

Keywords: Achievement, professional development, teacher–student interactions

Given the critical need to improve student experiences in secondary grade classrooms to enhance student achievement, we know far too little about how to actually build teachers’ skills to enhance the effectiveness of their interactions with their students (Bill and Melinda Gates Foundation, 2012). Yoon and colleagues have reviewed more than 1,300 studies of the effect of teacher professional development on K–12 student outcomes, and found that only nine studies enhanced student outcomes under the What Works Clearinghouse evidence standards without reservations (Yoon, Duncan, Lee, Scarloss, & Shapley, 2007). Of the nine programs that had effects on student achievement, all involved elementary school teachers and none were at the middle or secondary school level. The Johns Hopkins Best Evidence Encyclopedia of published reports of teacher professional development efforts on secondary school student achievement identified only two programs that documented substantial impact on student achievement using rigorous designs (Center for Data-Driven Reform in Education, n.d.). Even the two programs documenting substantial impact were limited solely to specific curricular approaches to mathematics education. Although professional development with a content-based curricular focus is important, efforts to address the broader challenge of enhancing teachers’ fundamental ability to connect with and motivate students across content areas have been largely unsuccessful to date.

More recently, however, the What Works Clearinghouse (2012) identified one program that met its evidence standards, though with reservations, and that sought to improve secondary school teacher effectiveness across a broad range of content areas. This program, the My Teaching Partner-Secondary (MTP-S) teacher coaching program, was unique among the programs described in the What Works Clearinghouse in that it did not target instruction or knowledge in specific content areas, but rather focused on enhancing the motivational and instructional qualities of teachers’ ongoing, daily interactions with students in a manner that applied to teachers across a range of content areas. The conceptual basis of MTP-S is provided by the Teaching Through Interactions framework, a content-independent approach to describing domains and features of teacher–student interactions that influence student academic motivation, effort, and achievement (Hamre & Pianta, 2010). MTP-S uses the domains of the well-validated Classroom Assessment Scoring System-Secondary (CLASS-S; Allen et al., 2013; Mashburn, Meyer, Allen, & Pianta, 2014; Pianta, Hamre, & Allen, 2012; Pianta, Hamre, Hayes, Mintz, & LaParo, 2008a) to operationalize this conceptual framework by providing clear behavioral anchors for describing, assessing, and intervening to change critical aspects of classroom interactions. The CLASS-S domains focus upon the extent to which teacher–student interactions build a positive emotional climate and demonstrate sensitivity to student needs for autonomy, an active role in their learning, and a sense of the relevance of course content to their lives. Focus is also placed on bolstering the use of varied instructional modalities (e.g., group work, work in pairs, etc.) and engaging students in higher order thinking and opportunities to apply knowledge to problems. 
Overall, the MTP-S coaching intervention is designed to enhance the fit between teacher–student interactions and adolescents’ developmental, intellectual, and social needs in an approach that aligns closely with elements of high-quality teaching that have been identified as central to student achievement (National Research Council, 2002).

The replication and extension of the MTP-S coaching intervention in the present study integrates initial workshop-based training, an annotated video library, and two years of personalized coaching followed by a brief booster workshop (Allen, Pianta, Gregory, Mikami, & Lun, 2011). During the school year, teachers send their coaches video recordings of class sessions in which they are delivering a lesson. Trained coaches review recordings that teachers submit and select brief video segments that illustrate either positive teacher interactions or areas for growth in one of the dimensions in the CLASS-S. The video segments and coach comments are posted on a private, password-protected Web site and each teacher is asked to observe his/her behavior and subsequent student reactions, and to then respond to coach prompts that call attention to the connection between teacher behavior and student responses. This is followed by a 20- to 30-minute phone conference in which the coach and teacher plan ways to enhance interactions, using the CLASS-S system as a language and heuristic lens. In the initial implementation of the program this cycle repeated approximately once every three weeks for the duration of the school year (Allen et al., 2011). This intervention extends an approach to coaching that has been developed, evaluated, and found to be effective in two separate controlled trials of prekindergarten classroom interventions. The first evaluation, with over 200 pre-kindergarten teachers, showed that exposure to MTP led to significant improvements in the quality of teachers’ interactions with children, to increases in children’s literacy skills, and decreases in problem behavior (Pianta, Mashburn, Downer, Hamre, & Justice, 2008b). 
A more recent experimental evaluation of MTP with over 400 teachers in 15 preschool program sites reported that exposure to MTP coaching led to significant gains in teacher–child interaction quality and children’s self-regulation and expressive language skills (Downer et al., 2014).

An initial evaluation of the MTP-S program suggested it also has significant promise at the secondary grade level. In a randomized controlled design across several rural and small town school districts, MTP-S coaching yielded 9 percentile point gains in end-of-year standardized achievement tests in MTP-S classes relative to classes in a randomly assigned, business-as-usual control group (Allen et al., 2011). However, this initial study had several important limitations. The largest limitation was significant attrition (with 33% of teachers dropping out post-randomization), which was a primary reason for What Works Clearinghouse stating that the program met its evidence standards only “with reservation.” Other limitations to the generalizability of the study included implementation with a primarily rural population of schools, teachers, and students. The challenges in small rural school districts are not considered comparable to the challenges facing large urban school districts (Provasnik et al., 2007). In addition, the study assessed primarily middle school students, thus leaving open the question of its generalizability to a primarily high school population, where issues of student disengagement and school dropout become far more serious.

Equally important, the initial version of the program (a one-year, relatively intensive intervention, requiring rapid ramp-up) was not optimally suited to the structure and needs of most schools, in which teachers appropriately take time to form trusting relationships with coaches, and have relatively little time during their school week to devote to training activities. Perhaps reflecting this, effects on student achievement from the initial intervention study were seen only in the year following completion of the intervention, rather than during the intervention itself, suggesting a relatively gradual uptake process. Thus, although the program has demonstrated effectiveness (similar to results enhancing student outcomes in pre-kindergarten classrooms; Pianta et al., 2008b) within one approach to implementation, within a slightly flawed evaluation, and with primarily middle school students, the extent of its broad and robust applicability to enhancing secondary education remains unclear.

The need for replication of initially promising programs, especially of challenging interventions implemented in complex systems, has been long recognized (Cohen, 1994; Forman et al., 2013). The present study was not designed to be an exact replication of the original intervention, however. Rather, we adopt the framework suggested by Francis (2012) in which the replication process ideally should vary implementation methods between replications, so as to best assess the extent to which the core concept underlying the intervention yields robust effects. The current study thus sought to remedy the limitations of the prior MTP-S evaluation in the secondary grades while examining a somewhat modified version designed to be more “user-friendly” for schools.

Two significant modifications to the original MTP-S coaching program were made. First, the coaching program was decreased in intensity, such that the average teacher participated in five to six coaching cycles per year (as compared to ten to twelve cycles targeted in the original intervention). Second, the intervention was extended to take place over a two-year period instead of a one-year period. This allowed more time for teachers to form relationships with their coaches, and also allowed coaching to extend across two different classrooms of students for each teacher across the two successive years. This more distributed, lower-intensity approach to coaching was considered more suitable to the needs and demands of a large, stressed, urban school district serving a high proportion of students in poverty.

We thus viewed this randomized controlled trial as serving both replication and generalization functions (Francis, 2012). We sought to assess whether effects previously observed on student achievement would be seen in an entirely new implementation of the program, to rule out the possibility that prior findings were due to Type I error or to idiosyncrasies in the particular circumstances of implementation. Further, we sought to establish whether generalization of the MTP-S effects would be observed in a longer, less-intense implementation in a highly challenging urban school district with high concentrations of students in poverty, and among a group primarily composed of students in the high school grades. Given these changes, this study also addressed the extent to which the program could be effective during the second year of the intervention (as opposed to after the conclusion of the intervention). This latter point was considered potentially important for larger scale dissemination efforts, as program success or failure is often judged rapidly by school districts in making program continuation decisions. Many districts may not be willing to tolerate null findings at the conclusion of a new intervention to await more promising results from the end of the year following completion. Given the potential of the MTP-S approach to apply broadly across secondary school classrooms, we also assessed whether program effects differed across subject matters or at different grade levels.

METHOD

Recruitment Procedure

The research study was presented to teachers in the spring prior to the academic year in which the intervention commenced via presentations at faculty meetings held at the schools. To meet study inclusion criteria, teachers were required to work in a middle or high school, agree to randomization, and be responsible for teaching a focal course for which: (a) the participating teacher was the primary instructor; and (b) an end-of-course standardized exam was expected to be administered to assess student learning. Teachers provided written consent and study procedures were approved by a university institutional review board. Once teachers had consented and selected a focal course, parents of students in that course were invited to provide written consent and students were also asked to provide written assent to participate in the study. This evaluation was based upon teachers’ focal courses in the second year of the intervention. Sixty-four percent of invited students agreed to participate and also obtained parental informed consent.

Teachers were stratified within school, within grade level (high school vs. middle school), and within course content area (language arts/social studies/history vs. math/science), then assigned randomly to the MTP-S coaching condition or to a control group that received business-as-usual professional development (50% probability of being assigned to each condition). This stratification procedure was done so as to evenly distribute middle- versus high school–level courses and course content across the intervention and control conditions, and was successful, as seen in Table 1.
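As an illustration only, the stratified assignment described above can be sketched as follows. The source does not report the exact randomization mechanics, so the shuffle-and-alternate rule, the field names (`school`, `level`, `content`), and the demo records are all assumptions made for this sketch.

```python
import random
from collections import defaultdict


def stratified_assign(teachers, seed=None):
    """Assign teachers to 'MTP-S' or 'control' within each stratum.

    A stratum is (school, level, content area). Assumed rule: shuffle the
    teachers in a stratum, pick a random starting arm, then alternate arms,
    so each teacher has a 50% chance of either condition.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for t in teachers:
        strata[(t["school"], t["level"], t["content"])].append(t["id"])

    assignment = {}
    for key in sorted(strata):
        ids = strata[key]
        rng.shuffle(ids)
        arms = ["MTP-S", "control"]
        rng.shuffle(arms)  # random start so odd-sized strata favor neither arm
        for i, teacher_id in enumerate(ids):
            assignment[teacher_id] = arms[i % 2]
    return assignment


# Hypothetical roster: 2 schools x 2 levels x 2 content areas x 2 teachers.
demo_teachers = [
    {"id": f"{s}-{l}-{c}-{k}", "school": s, "level": l, "content": c}
    for s in ("A", "B")
    for l in ("middle", "high")
    for c in ("ela_social", "math_science")
    for k in range(2)
]
demo_assignment = stratified_assign(demo_teachers, seed=42)
```

With two teachers per stratum, this rule places exactly one in each condition, which is the kind of balance on school level and content area that Table 1 reports.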

Table 1.

Entry characteristics and achievement test scores by intervention versus control group

| | Intervention Mean (SD) | Control Mean (SD) | Significance of Group Differences p |
|---|---|---|---|
| Number of years teaching | 10.2 (6.5) | 8.6 (6.4) | .26 |
| Prior-year achievement test score | 474.6 (39.7) | 473.5 (46.0) | .91 |
| Average class size | 22.5 (4.0) | 21.7 (3.5) | .32 |
| | N (%) | N (%) | |
| Student gender | Male: 307 (46.6%) | Male: 263 (49.1%) | .39 |
| | Female: 352 (53.4%) | Female: 273 (50.9%) | |
| Student race/ethnicity | Asian: 8 (1.2%) | Asian: 21 (3.9%) | .77 |
| | African American: 391 (59.3%) | African American: 303 (56.5%) | |
| | Hispanic: 61 (9.3%) | Hispanic: 46 (8.6%) | |
| | White: 199 (30.2%) | White: 166 (31.0%) | |
| Students’ family < 200% of poverty line | Yes: 245 (37.2%) | Yes: 199 (37.2%) | .99 |
| | No: 414 (62.8%) | No: 336 (62.8%) | |
| Teacher gender | Male: 18 (40.9%) | Male: 12 (29.3%) | .26 |
| | Female: 26 (59.1%) | Female: 29 (70.7%) | |
| Teacher has master’s or higher degree? | Yes: 36 (81.8%) | Yes: 33 (78.6%) | .71 |
| | No: 8 (18.2%) | No: 9 (21.4%) | |
| Course content area | Math/Science: 23 (52.3%) | Math/Science: 24 (57.1%) | .65 |
| | English/Social Studies: 21 (47.7%) | English/Social Studies: 18 (42.9%) | |
| School level | Middle School: 4 (9.5%) | Middle School: 5 (11.4%) | .78 |
| | High School: 38 (90.5%) | High School: 39 (88.6%) | |

Note. Analyses used t tests and chi-square analyses as appropriate. Significance of group differences for student race/ethnicity was calculated for minority versus nonminority group membership status.

Participants and School Context

This study included 86 secondary school teachers (30 male and 56 female) from five schools who participated for two years in MTP-S. Teachers were randomly assigned to participate in either the intervention or business-as-usual in-service training. Participating teachers had an average of 9.4 years of teaching experience (SD = 6.5). Teacher racial/ethnic composition was: 56% White, 33% African American, 7% mixed ethnicity, 1% Asian, 1% Hispanic, and 2% other. Twenty percent of teachers had a terminal BA degree, and 80% had advanced education beyond the BA degree. In contrast to the prior evaluation of MTP-S, students in this study were primarily from high school (89%) as opposed to middle school (11%). All student and teacher demographic characteristics in the MTP-S and control groups are presented in Table 1 and indicate that randomization was effective in producing equivalent samples.

The schools hosting the intervention were part of an urban district within a large urban area comprising several districts. Seventy-one percent of students in the schools were from racial ethnic minority groups, with a majority identified as African American. Participating schools ranged in size from 1,120 to 1,900 students and had staffs of teachers ranging in size from 74 to 126. Median household income for the catchment area for the school was $35,000 to $49,999. The four-year graduation rate for the school was 80.5%, which was significantly lower than the comparable statewide average. The pass rate for state SOL tests (described further below) was 83% for English and 60% for math, also significantly below statewide averages. Given the size of the schools, teachers participating in the evaluation represented only a small fraction of the teachers in a school. Because participating teachers were in any of multiple content areas (i.e., they were not concentrated within a single domain), the amount of interaction between teachers in the study is believed to be quite modest.

MTP-S Coaching Intervention

At the outset of the study, both intervention and control group teachers participated in a three-hour workshop prior to the beginning of the school year that explained the evaluation protocol. During the workshop, all teachers were asked to select a “focal class” that they anticipated to be their most academically challenging class that also had standardized end-of-year achievement test assessments. Teachers were instructed in procedures to obtain student assent/parent consent and in the process of data collection.

Teachers in the MTP-S condition then continued for the remainder of the day in a workshop led by three master teachers from the research team who were trained in the CLASS-S (Pianta et al., 2008a) and who served as the primary teacher coaches responsible for implementing the intervention throughout the year. This part of the workshop outlined the principles of the MTP-S program, with a focus upon the theoretically specified dimensions of high-quality teacher–student interactions from the CLASS-S. Teachers and their coaches from the external intervention team discussed these dimensions and watched exemplar videos of teachers employing these principles. MTP-S teachers were also randomly assigned to one of the master teacher coaches who would work with them closely throughout the academic year.

The formal structure of the workshop training and the ongoing consultation mirrored that of the highly effective original MTP program (Downer et al., 2014). However, the content of the MTP-S was uniquely tailored to the needs of adolescent students, as reflected in the CLASS-S. For example, a specific focus on “regard for adolescent perspectives” is incorporated and takes a central role. This includes recognizing the critical role of peer interaction, of opportunities for providing students with a sense of agency, and of explicit explanation of the relevance of content to adolescents’ present and future lives in creating and sustaining adolescent motivation. Similarly, a specific focus on “teacher sensitivity” is tailored around recognition of adolescent needs for autonomy and for a sense of (guided) input to classroom procedures, as well as to recognition of adolescents’ needs for social support in the classroom. The structural elements of the classroom experience were also tailored to adolescent needs, including the use of group learning formats and extended self-directed learning opportunities.

The primary elements of the MTP-S intervention took place throughout the academic year across the two years of the intervention. Coaches and MTP-S teachers participated in a carefully elaborated and manualized set of ongoing coaching cycles that revolved around review of video recordings of a teacher’s classroom interactions, considered with reference to the CLASS-S dimensions (Pianta et al., 2008a). Each of these coaching cycles began when MTP-S teachers videotaped a typical session in their focal course and mailed the video to the project office. Coaches selected brief (e.g., 1 to 2 minutes) video segments from the class of a particular teacher that were relevant to a specified CLASS-S dimension and posted them on a private Web page for that teacher. That teacher then logged in and was asked to observe his or her own behavior and student reactions, consider the connection between his or her behavior and the subsequent student reactions as seen on the video recording, and respond to the coach’s questions about that connection. This was followed by a 20- to 30-minute phone conference between the teacher and the coach to discuss instructional strategies that would foster positive teacher–student relationships and teachers’ ability to sensitively engage all students.

The video segments chosen and the questions posed by the coach were intended to target and improve specific dimensions of teacher–student interaction as specified by the CLASS-S. The cycle (teacher videotapes, coach reviews, teacher reviews, both discuss together) was repeated approximately once every six weeks for the duration of the school year. At the start of the school year, the focus of these cycles began with relational dimensions. Then, as each year progressed, the cycles moved through dimensions focused on classroom organization and instructional support. MTP-S teachers were also directed by coaches in discussions to watch video exemplars of high-quality teaching (again, as defined by the CLASS-S) on the MTP-S Web site. This process was repeated across both years of the intervention.

Measures

Student Achievement

Student academic achievement was assessed using the Commonwealth of Virginia Standards of Learning (SOL) testing system (Commonwealth of Virginia, 2005; Hambleton et al., 2000). This system is the official accreditation testing program for the Commonwealth of Virginia, which it uses to report Adequate Yearly Progress as mandated by the No Child Left Behind Act of 2001. It was first used in 1998, with a seven-year period for schools to align their curricula and adjust to the testing requirement before the school accreditation process began (Commonwealth of Virginia, 2005). The SOL program has now been in place for more than a decade, making it one of the oldest such programs in the nation. Students take SOL tests (which consist of between 45 and 63 multiple-choice questions, depending upon the specific test used) at the end of the course in core subjects taught by their teacher, and each test is standardized on a 200- to 600-point scale.

External reviewers have found that the “reliability evidence for the SOL assessments is solid and typical of high quality assessments” (Hambleton et al., 2000, p. 8). Specifically, validity is supported by evidence of strong unidimensionality and observed correlations of .50 to .80 between SOL assessments and Stanford 9 achievement tests (Hambleton et al., 2000). Reliability is supported by findings of KR-20 coefficients of .87 and .91 (Hambleton et al., 2000).
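For readers unfamiliar with the KR-20 coefficient cited above, the following is a minimal sketch of how it is computed from dichotomously scored (0/1) items. The response matrix is invented for illustration and is not SOL data; the use of the population variance of total scores is an assumption, since conventions differ.

```python
from statistics import pvariance


def kr20(responses):
    """Kuder-Richardson Formula 20 for 0/1 item scores.

    responses: one list per student, each holding that student's 0/1 scores
    on the same k items. Uses the population variance of total scores
    (an assumed convention for this sketch).
    """
    n_students = len(responses)
    k = len(responses[0])
    totals = [sum(student) for student in responses]
    var_total = pvariance(totals)

    # Sum over items of p * (1 - p), where p is the proportion answering correctly.
    sum_pq = 0.0
    for item in range(k):
        p = sum(student[item] for student in responses) / n_students
        sum_pq += p * (1 - p)

    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Hypothetical 5-student, 4-item response matrix.
toy_responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
toy_kr20 = kr20(toy_responses)  # 0.8 for this toy matrix
```

Values closer to 1 indicate that the items rank students more consistently, which is the sense in which the reported coefficients of .87 and .91 support reliability.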

Student achievement test results were used from the standardized end-of-year assessment from teachers’ focal courses at the end of the two-year intervention. The end-of-year achievement test was directly linked to the instructional content of the classrooms under examination. Baseline achievement assessments were also obtained for each participating student using their performance on the standardized end-of-year test from the most comparable course in the same subject area they took in the prior year. Although these baseline assessments were not identical to outcome assessments (i.e., they were on the course material from the prior year), they were highly correlated with outcome assessments (r = .77; p < .001), and thus were considered appropriate to use as student-level covariates regarding baseline levels of achievement of each student. These baseline tests were assessed at the student level (i.e., they were not tracking the focal teacher’s prior year’s classes, but were simply used to obtain a baseline covariate for the students in the current class from the most comparable prior course taken by those students). Thus, intervention results were assessed by examining student achievement results at the end of Year 2 of the intervention, covarying the individual student’s prior level of achievement in a course with similar content area.

Student, Teacher, and Classroom Characteristics

School records were used to identify gender, race/ethnicity, and grade level of consented students. Records also indicated whether students came from low-income families (coded based on student eligibility for free and reduced-priced lunch, which is offered to families with incomes up to 185% of the federal poverty line). Teachers reported on their gender, race/ethnicity, years of experience teaching, and education level on a questionnaire completed during the introductory workshop. Class size was obtained from teachers’ enrollment rosters.

Sample Characteristics, Comparability, and Attrition Analyses

During initial spring recruitment, 97 teachers were selected to participate in the study. Of these, 86 completed both years of the intervention. Of the 11 teachers not available by the end of the intervention, virtually all attrition was a result of factors unrelated to program participation: three teachers had retired, three had moved out of the district, three were no longer teaching classes with end-of-year achievement tests, and two stopped participation prior to the beginning of the second year of the intervention (thus not identifying a target class for the evaluation). Formal attrition analyses indicated no differences in levels of attrition across treatment and control groups and no differences between teachers who did versus did not participate in the final evaluation in teacher years of experience, gender, education level, or racial/ethnic minority status, nor was there any significant differential attrition by treatment group on any of these variables.

Analyses were run using an intent-to-treat approach, albeit with some missing data, in which all teachers for whom outcome data were available are included in the analyses, including intervention teachers who may have participated only minimally in the core components of the intervention.

RESULTS

Preliminary Analyses

Preliminary analyses considered whether nesting of classrooms within schools might significantly affect results. Hierarchical linear modeling (Raudenbush & Bryk, 2002) using SAS PROC MIXED (Singer, 1998) to predict end-of-year achievement test scores was first conducted with three-level models to account for the nesting of students within teachers and teachers within schools. Models were run both with no additional predictors (unconditional models) and with baseline achievement test scores as predictors (to assess the variance in the relative achievement gains over time by students). Results indicated no significant or near-significant school effects in either set of analyses (all p values > .35). Analyses also examined whether intervention effects tested below differed significantly across schools, and no evidence of such differences was detected. Hence, the school level of analysis was not considered further in the results reported for intervention effects. Effects of student gender, class size, teacher education level, and years of teaching were all examined and found unrelated to outcomes and hence were not considered further.

Primary Analyses

Primary Intervention Effects

Primary analyses were conducted using hierarchical linear models in which the level-1 model (Equation 1) specified that student end-of-year achievement at the end of the intervention is a function of the prior-year achievement test score, family poverty status (0 = non–low income; 1 = low income), and student racial/ethnic minority group membership (0 = majority; 1 = minority).

| (1) |

In the level-2 model, course grade level was entered, along with the teachers’ study condition (MTP-S intervention = 1; control group = 0). The magnitude and direction of the coefficient (γ0c) indicate the estimated effect of the MTP-S intervention on end-of-year student achievement tests.

| (2) |

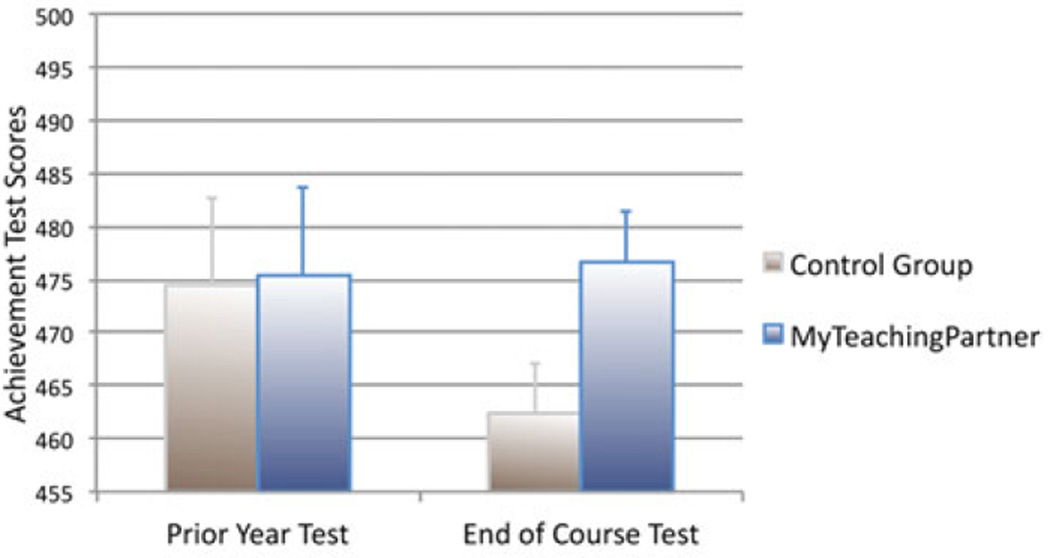
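The typeset equations are not preserved in this version of the text. As a hedged sketch only, the two-level model described in the surrounding paragraphs can be written in standard hierarchical linear model notation as below. Only the symbol γ0c appears in the source; all other symbols, the variable labels, and the treatment of the level-1 slopes as fixed are assumptions for illustration.

```latex
% Level 1 (student i in classroom j); slopes assumed fixed across classrooms
Y_{ij} = \beta_{0j}
       + \beta_{1}\,(\text{PriorAchievement})_{ij}
       + \beta_{2}\,(\text{Poverty})_{ij}
       + \beta_{3}\,(\text{Minority})_{ij}
       + r_{ij}

% Level 2 (classroom j); \gamma_{0g} is an assumed label for the grade-level coefficient
\beta_{0j} = \gamma_{00}
           + \gamma_{0g}\,(\text{GradeLevel})_{j}
           + \gamma_{0c}\,(\text{Condition})_{j}
           + u_{0j}
```

Under this reading, γ0c is the adjusted difference in mean end-of-year scores between MTP-S and control classrooms, corresponding to the "MTP-S intervention" row of Table 2.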

The results presented in Table 2 and Figure 1 indicate significant effects of having participated in the MTP-S intervention. More specifically, after accounting for individual student baseline scores on state standards tests in the same general content area as well as a range of demographic characteristics, students whose teachers received MTP-S coaching scored significantly higher on end-of-year state standards tests during the final year of the intervention. Hedges’ g for the simple comparison of outcome SOL scores between the MTP-S and the control group was .31. After inclusion of the covariates shown in Table 2, Hedges’ g was .48. This effect size regarding the benefits of MTP-S coaching for student achievement was equivalent to moving the typical student in the intervention group from the 50th to the 59th percentile relative to the control group.
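For reference, a minimal sketch of how Hedges' g is commonly computed for a simple two-group comparison is given below. The input values are hypothetical and are not the study's data, and the study's own estimates may have been derived differently (for example, from the multilevel model); the small-sample correction shown is the usual approximation.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Standardized mean difference with the usual small-sample correction."""
    # Pooled standard deviation across the two groups.
    pooled_var = ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    d = (mean_t - mean_c) / math.sqrt(pooled_var)
    # Approximate correction factor J = 1 - 3 / (4 * df - 1), with df = n_t + n_c - 2.
    correction = 1 - 3 / (4 * (n_t + n_c) - 9)
    return d * correction


# Hypothetical groups: a 10-point difference with SD = 10 in both groups.
example_g = hedges_g(mean_t=110, sd_t=10, n_t=20, mean_c=100, sd_c=10, n_c=20)
```

With large samples the correction factor is close to 1, so g and the uncorrected standardized difference are nearly identical.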

Table 2.

MTP-S intervention effects on student achievement (covarying prior-year achievement, demographic, and teacher factors)

| Achievement Test Results | B | SE |
|---|---|---|
| Intercept | 87.99*** | 18.9 |
| Prior-year achievement test score | .77*** | .019 |
| Student grade level (0 = middle school; 1 = high school) | 3.87 | 8.44 |
| Student family poverty status | −2.07 | 2.28 |
| Student racial/ethnic minority status (0 = majority; 1 = minority) | −0.33 | 2.40 |
| MTP-S intervention | 14.67** | 5.39 |

Note. ***p < .001. **p < .01.

Figure 1.

MTP-S effects on student achievement. Mean achievement test scores for MTP-S and control group students from the most comparable prior-year course (pretest) and the current year’s focal class (posttest), adjusted for baseline demographic factors using HLM models (Table 2). Error bars reflect standard errors from HLM models.

Tests of Potential Moderators of Intervention Effects

Multilevel models were also used to examine whether effects of the intervention differed depending upon the content area being taught (i.e., English/history/social studies vs. math/science). This was assessed by entering a centered interaction term for content area × intervention group into the equations above following entry of all other factors, including the main effect of course content area. Neither a main effect nor a moderating effect of content area was observed (p > .50), indicating that the efficacy of the intervention did not differ significantly across content areas.

Similar analyses examined potential moderating effects of teacher and classroom characteristics, including teacher age, education level, gender, and racial/ethnic minority status, as well as classroom size, grade level, and classroom composition (e.g., student gender, racial/ethnic minority status, and family poverty levels). Tests were performed by adding into the models both main effects of these variables and interaction terms created by multiplying centered variables × intervention group status. Again, no moderating effects were observed. This indicates that there were no significant differences in program effectiveness related to any of these teacher or classroom characteristics.
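The construction of the interaction terms used in these moderator tests can be sketched as follows. The source says centered variables were multiplied by intervention group status; whether the group indicator was itself centered is not stated, so this sketch centers only the moderator, and the data are hypothetical.

```python
from statistics import mean


def centered_interaction(moderator, condition):
    """Return moderator (mean-centered) x condition (0/1) for each classroom.

    Assumption for this sketch: only the moderator is centered; the
    intervention indicator is left as 0 = control, 1 = MTP-S.
    """
    moderator_mean = mean(moderator)
    return [(m - moderator_mean) * c for m, c in zip(moderator, condition)]


# Hypothetical classrooms: content area (0 = English/social studies,
# 1 = math/science) and condition (0 = control, 1 = MTP-S).
content_area = [0, 1, 1, 0]
condition = [1, 1, 0, 0]
interaction = centered_interaction(content_area, condition)
```

The resulting term is entered after the main effects; a coefficient near zero on it, as reported here, means the intervention effect does not differ detectably across levels of the moderator.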

DISCUSSION

These results provide further evidence of the efficacy and potential generalizability of MTP-S, an intervention approach to enhancing the quality of secondary school teaching to bring about meaningful gains in student achievement. Even aside from the well-recognized overarching value of demonstrating the simple replicability of novel interventions in complex social settings (Cohen, 1994; Forman et al., 2013), these findings begin to address the two primary challenges faced by any effort to develop an effective school-based intervention. The first, and most obvious, is to demonstrate a replicable capacity to enhance student outcomes when properly implemented. Even this straightforward hurdle has proven challenging to overcome, however. Indeed, other than the MTP-S intervention, very few broad, cross-content-area teacher professional development programs have proven to improve teachers’ capacity to enhance student achievement at the secondary level (Yoon et al., 2007). A second major challenge concerns the extent to which even a promising intervention approach actually can be effectively implemented across a wide range of school settings, including those under-resourced settings not optimally suited for intervention. The present results showing MTP-S effects on student achievement—based upon implementation in a large urban district—when combined with the results of the prior evaluation of the program in smaller and more rural districts (Allen et al., 2011) now suggest that the MTP-S program is capable of meeting both of these challenges.

This study explicitly adopted an approach of replication with planned variation (Francis, 2012) in order to test the MTP-S model under a variety of both setting and implementation characteristics. The particular variation tested in this study was a modification of the program to extend over two years with somewhat lower intensity than the original implementation. This modification was deemed to be more readily incorporated into practice in most school districts, in which teachers might not have time for a more intensive approach. A second minor benefit is that, although with both approaches positive results were seen after two years, there is likely to be a slight perceptual and sustainability advantage in being able to demonstrate positive results to school districts immediately upon conclusion of a longer but less intensive intervention.

The present study also addressed methodological and sampling limitations of prior research on MTP-S. Compared to the prior evaluation of the program (Allen et al., 2011), attrition was far less of a problem in this evaluation, with only slightly over 10% of teachers not participating in the evaluation. This improvement thus largely addresses one of the most serious identified threats to the internal validity of the prior evaluation (What Works Clearinghouse, 2012). In addition, the prior implementation applied primarily to a middle school sample. Given that the costs of academic difficulty (up to and including school failure and dropout) become increasingly great with age, demonstrating that this intervention can be effective with an older, potentially more problematic high school population represents a significant advance. Finally, the prior evaluation of the intervention was conducted with a sample that included a relatively smaller percentage of students who were members of racial/ethnic minority groups. The present study now demonstrates the efficacy of the program in classrooms in which Caucasian students are a minority of the student population. Together, the moves to a primarily high school population and to a population composed largely of students of color significantly extend our knowledge of the likely generalizability of the MTP-S intervention to a much broader range of student populations.

As with the prior implementation, MTP-S was again found to enhance student achievement across a wide spectrum of content areas of instruction. Notably, there was no significant difference in program impact for classrooms in which math/science versus English/social studies were being taught. The content-neutral approach of MTP-S is exceedingly rare in the literature on teacher professional development in secondary education, as even most successful intervention approaches are typically targeted at enhancing teaching of a certain content area. The present findings suggest that, unlike content-specific programs, the MTP-S approach tailored to adolescents’ overarching social and developmental needs has the potential to improve teaching across a broad range of content areas. These findings suggest the developmentally salient value of teacher–student interactions for fostering student learning and development—a result now spanning pre-K to high school—and show that the value of classroom experience for learning and development does not appear entirely due to teachers’ command of content but perhaps also to command of the teacher–student relationship (Pianta, in press).

One of the most noteworthy and unfortunate features of secondary education is that it is associated with steadily declining motivation and interest in school on the part of its adolescent participants (Eccles & Roeser, 2009). Although the decline in adolescent motivation over time is troubling, the results of this study suggest that, conversely, it may also to some extent present low-hanging fruit for the right type of intervention with teachers, particularly one that fosters teachers’ more effective and developmentally attuned responses to students’ behaviors and cues. Academic gains in adolescence are not simply a product of quality presentation of academic material to adolescents; they also require adolescent motivation to learn such material (Pianta & Allen, 2009). This study suggests that even modest efforts to more carefully attune the classroom to adolescents’ developmental needs and goals (e.g., by providing some degree of autonomy and sense of connection within the classroom [Hafen et al., 2012]) may yield large dividends in their engagement and learning (Allen & Allen, 2009; Gregory, Allen, Mikami, Hafen, & Pianta, 2014a). These results are also consistent with emerging findings that the MTP-S coaching program is effective in reducing disciplinary referral rates for groups, such as students who are members of racial/ethnic minority groups, who may be most likely to be alienated and disaffected from the classroom setting (Gregory, Allen, Mikami, Hafen, & Pianta, 2014b). None of this is to minimize the importance of content-based instruction or intervention approaches. These findings do, however, clearly make the point that focusing solely upon such curriculum-based approaches potentially overlooks an entire class of interventions and approaches that may be of particular relevance to enhancing secondary education (Battistich, Watson, Solomon, Lewis, & Schaps, 1999).

Even when conducted across a two-year period, the intervention appears to be highly cost-effective. The intervention required approximately 20 hours of teacher in-service training, spread across two years. The full cost for the teacher coaches and video equipment was $4,000 per teacher over this period, or roughly $2,000 per teacher per year. Such costs compare favorably to the $2,000–$7,000 or more that districts typically spend each year on teacher in-service training (Odden, Archibald, Fermanich, & Gallagher, 2002). These results also extend a line of findings, with both the MTP coaching approach and other similar approaches, suggesting that successful educational interventions can be disseminated in a cost-effective and efficacious manner via online, distance-learning approaches (Allen et al., 2011; Downer et al., 2014; Fishman et al., 2013; Pianta et al., 2008b). They also add to evidence from use of the MTP coaching approach at earlier grade levels that a focused teacher-coaching approach can produce reliable gains in student achievement (Downer et al., 2014; Hamre, Pianta, Mashburn, & Downer, 2012).

Several limitations to these findings should also be noted. Given that we have not yet observed effects of this implementation of the intervention in the period after the intervention was completed (as was done in the prior evaluation), we cannot yet know whether this implementation of MTP-S led to generalization of effects to new classrooms of students. However, the extension of the program across two years (as compared to a one-year, one-class intervention previously), with teachers being coached for different classes of students in the first and second years, would seem likely to increase the generalizability of effects to new classes of students following completion of the intervention. Similarly, although analyses indicated no evidence of any attrition effects or initial sample differences impairing study validity, unmeasured biases due to such effects cannot be definitively ruled out. Continued replication with other school systems with different structural and demographic characteristics is also still warranted. It should also be noted that although the prior-year achievement tests that were used as covariates were in fact strongly related to outcome assessments in this study, the prior-year tests were not identical to these outcome tests. Thus, although comparisons of intervention and control group scores at the same point in time are appropriate, comparisons of absolute scores across years would not be appropriate, given that different course material was being tested in different years. It would also have been useful to have data on progress of students in teachers’ classes prior to the onset of the intervention, which might have served as an effective covariate allowing for an even more focused and powerful test of the effects of the intervention.

Finally, to date, this intervention has been evaluated with teachers who volunteered to participate and were then randomly assigned. This creates a potential limit to dissemination unless or until replication demonstrates program effectiveness with teachers who are assigned to the intervention as part of their regular teaching contract. This limitation may be less restrictive than it first appears, however, as our experience has been that initial teacher participation tended to generate considerable interest in the intervention among nonparticipating teachers, thus suggesting a process by which the intervention might be disseminated organically within a school over time. Ultimately, the degree to which the program appears motivating and engaging not only to students, but also to the teachers who participate in it, may be one of the most useful factors supporting its further dissemination and implementation.

Acknowledgments

FUNDING

This study and its write-up were supported by grants from the William T. Grant Foundation and the Institute of Education Sciences (R305A100367).

Footnotes

Color versions of one or more of the figures in the article can be found online at www.tandfonline.com/uree.

CONFLICT OF INTEREST

Robert Pianta is co-owner of a company charged with disseminating the MyTeachingPartner Program. However, no dissemination of the program at the secondary level is ongoing at this time.

Contributor Information

Joseph P. Allen, University of Virginia, Charlottesville, Virginia, USA

Christopher A. Hafen, University of Virginia, Charlottesville, Virginia, USA

Anne C. Gregory, Rutgers University, Piscataway, New Jersey, USA

Amori Y. Mikami, University of British Columbia, Vancouver, British Columbia, Canada

Robert Pianta, University of Virginia, Charlottesville, Virginia, USA

REFERENCES

- Allen JP, Allen CW. Escaping the endless adolescence: How we can help our teenagers grow up before they grow old. New York, NY: Ballantine; 2009.

- Allen JP, Gregory A, Mikami AY, Lun J, Hamre BK, Pianta RC. Observations of effective teaching in secondary school classrooms: Predicting student achievement with the CLASS-S. School Psychology Review. 2013;42(1):76–98.

- Allen JP, Pianta RC, Gregory A, Mikami AY, Lun J. An interaction-based approach to enhancing secondary school instruction and student achievement. Science. 2011;333(6045):1034–1037. doi: 10.1126/science.1207998.

- Battistich V, Watson M, Solomon D, Lewis C, Schaps E. Beyond the three R’s: A broader agenda for school reform. The Elementary School Journal. 1999;99(5):415–432.

- Bill and Melinda Gates Foundation. Gathering feedback for teaching: Combining high-quality observations with student surveys and achievement gains (MET Project Policy and Practice Brief). Seattle, WA: Author; 2012.

- Center for Data-Driven Reform in Education. The Best Evidence Encyclopedia. (n.d.) Retrieved from http://www.bestevidence.org/index.cfm.

- Cohen J. The earth is round (p < .05). American Psychologist. 1994;49:997–1003.

- Commonwealth of Virginia. The Virginia Standards of Learning: Technical report. Richmond, VA: Author; 2005.

- Downer J, Pianta R, Burchinal M, Field S, Hamre B, Scott-Little C. Coaching and coursework focused on teacher-child interactions during language/literacy instruction: Effects on teacher outcomes and children’s classroom engagement. 2014. Manuscript submitted for publication.

- Eccles JS, Roeser RW. Schools, academic motivation, and stage-environment fit. In: Lerner RM, Steinberg L, editors. Handbook of adolescent psychology. 3rd ed. Vol. 3. Hoboken, NJ: John Wiley & Sons; 2009. pp. 404–434.

- Fishman B, Konstantopoulos S, Kubitskey BW, Vath R, Park G, Johnson H, Edelson DC. Comparing the impact of online and face-to-face professional development in the context of curriculum implementation. Journal of Teacher Education. 2013;64(5):426–438.

- Forman SG, Shapiro ES, Codding RS, Gonzales JE, Reddy LA, Rosenfield SA, Stoiber KC. Implementation science and school psychology. School Psychology Quarterly. 2013;28(2):77–100. doi: 10.1037/spq0000019.

- Francis G. The psychology of replication and replication in psychology. Perspectives on Psychological Science. 2012;7(6):585–594. doi: 10.1177/1745691612459520.

- Gregory A, Allen JP, Mikami A, Hafen CA, Pianta RC. Effects of a professional development program on behavioral engagement of students in middle and high school. Psychology in the Schools. 2014a;51:143–163. doi: 10.1002/pits.21741.

- Gregory A, Allen JP, Mikami A, Hafen CA, Pianta RC. The promise of a teacher professional development program in reducing the racial disparity in classroom discipline referrals. In: Losen DJ, editor. Closing the school discipline gap: Equitable remedies for excessive exclusion. New York, NY: Teachers College Press; 2014b. pp. 166–179.

- Hafen C, Allen JP, Mikami AY, Gregory A, Hamre BK, Pianta RC. The pivotal role of adolescent autonomy in secondary classrooms. Journal of Youth & Adolescence. 2012;41(3):245–255. doi: 10.1007/s10964-011-9739-2.

- Hambleton RK, Crocker L, Cruse K, Dodd B, Plake BS, Poggio J. Review of selected technical characteristics of the Virginia Standards of Learning (SOL) assessments. Richmond, VA: Commonwealth of Virginia Department of Education; 2000.

- Hamre BK, Pianta RC. Classroom environments and developmental processes: Conceptualization & measurement. In: Meece J, Eccles J, editors. Handbook of research on schools, schooling and human development. New York, NY: Routledge; 2010. pp. 25–41.

- Hamre BK, Pianta RC, Mashburn AJ, Downer JT. Promoting young children’s social competence through the preschool PATHS curriculum and MyTeachingPartner professional development resources. Early Education & Development. 2012;23(6):809–832. doi: 10.1080/10409289.2011.607360.

- Mashburn AJ, Meyer JP, Allen JP, Pianta RC. The effect of observation length and presentation order on the reliability and validity of teaching observations. Educational and Psychological Measurement. 2014;74:400–422.

- National Research Council. Achieving high educational standards for all. Washington, DC: National Academy Press; 2002.

- Odden AR, Archibald S, Fermanich M, Gallagher HA. A cost framework for professional development. Journal of Education Finance. 2002;28(1):51–74.

- Pianta RC. Classroom processes and teacher-student interaction: Integrations with a developmental psychopathology perspective. In: Cicchetti D, editor. Developmental psychopathology. New York, NY: Wiley; (in press)

- Pianta RC, Allen JP. Building capacity for positive youth development in secondary schools: Teachers and their interactions with students. In: Shinn M, Yoshikawa H, editors. Toward positive youth development: Transforming schools and community programs. New York, NY: Oxford University Press; 2009. pp. 21–40.

- Pianta RC, Hamre BK, Allen JP. Teacher-student relationships and engagement: Conceptualizing, measuring, and improving the capacity of classroom interactions. In: Christenson SL, editor. Handbook of student engagement. New York, NY: Guildford Press; 2012. pp. 365–386.

- Pianta RC, Hamre BK, Hayes N, Mintz S, LaParo KM. Classroom assessment scoring system-Secondary (CLASS-S). Charlottesville, VA: University of Virginia; 2008a.

- Pianta RC, Mashburn AJ, Downer JT, Hamre BK, Justice L. Effects of web-mediated professional development resources on teacher-child interactions in pre-kindergarten classrooms. Early Childhood Research Quarterly. 2008b;23(4):431–451. doi: 10.1016/j.ecresq.2008.02.001.

- Provasnik S, KewalRamani A, Coleman MM, Gilbertson L, Herring W, Xie Q. Status of education in rural America (NCES-2007-040). 2007. Retrieved from http://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2007040.

- Raudenbush SW, Bryk A. Hierarchical linear models: Applications and data analysis methods. Thousand Oaks, CA: Sage Publications; 2002.

- Singer JD. Using SAS PROC MIXED to fit multilevel models, hierarchical models, and individual growth models. Journal of Educational & Behavioral Statistics. 1998;23(4):323–355.

- What Works Clearinghouse. WWC quick review of the report “An interaction-based approach to enhancing secondary school instruction and student achievement”. 2012 doi: 10.1126/science.1207998. Retrieved from http://ies.ed.gov/ncee/wwc/pdf/quick_reviews/myteachingpartner_022212.pdf.

- Yoon KS, Duncan T, Lee SW, Scarloss B, Shapley KL. Reviewing the evidence on how professional development affects student achievement (Issues and Answers Report, REL 2007-No. 033). 2007. Retrieved from http://ies.ed.gov/ncee/edlabs/regions/southwest/pdf/REL_2007033.pdf.